- Slides: 36

Evaluation

Types of Evaluation

• Might evaluate several aspects
  – Assistance in formulating queries
  – Speed of retrieval
  – Resources required
  – Presentation of documents
  – Ability to find relevant documents
• Evaluation generally comparative
  – System A vs. B
  – System A vs. A´
• Most common evaluation: retrieval effectiveness

Allan, Ballesteros, Croft, and/or Turtle

The Concept of Relevance

• Relevance of a document D to a query Q is subjective
  – Different users will have different judgments
  – Same users may judge differently at different times
  – Degree of relevance of different documents may vary

The Concept of Relevance

• In evaluating IR systems it is assumed that:
  – A subset of the documents of the database (DB) are relevant
  – A document is either relevant or not

Relevance

• In a small collection, the relevance of each document can be checked
• With real collections, we never know the full set of relevant documents
• Any retrieval model includes an implicit definition of relevance
  – Satisfiability of a FOL expression
  – Distance
  – P(Relevance | query, document)
  – P(query | document)

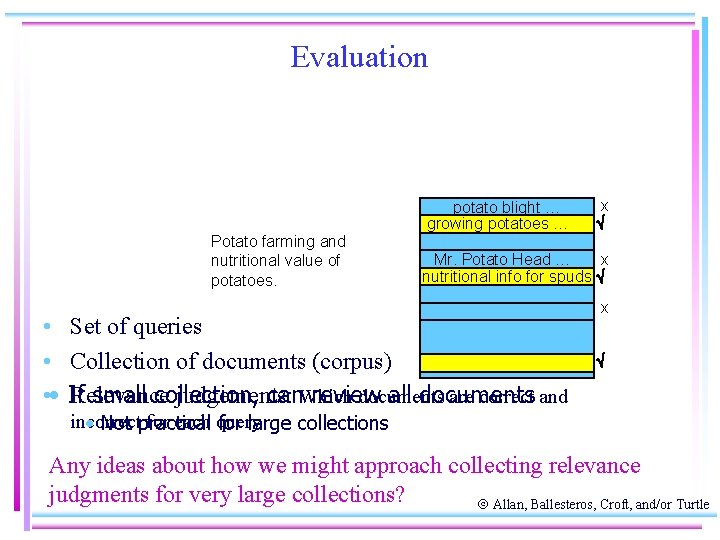

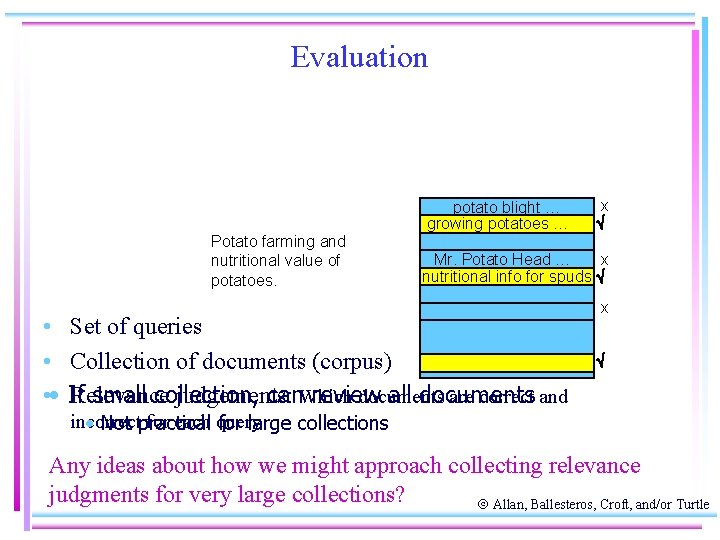

Evaluation

• Set of queries
• Collection of documents (corpus)
• Relevance judgements: which documents are correct and which are incorrect (x) for each query
• If small collection, can review all documents
• Not practical for large collections

Example query: "Potato farming and nutritional value of potatoes."
Example documents (x marks a document judged incorrect): potato blight …; growing potatoes …; x Mr. Potato Head …; x nutritional info for spuds

Any ideas about how we might approach collecting relevance judgments for very large collections?

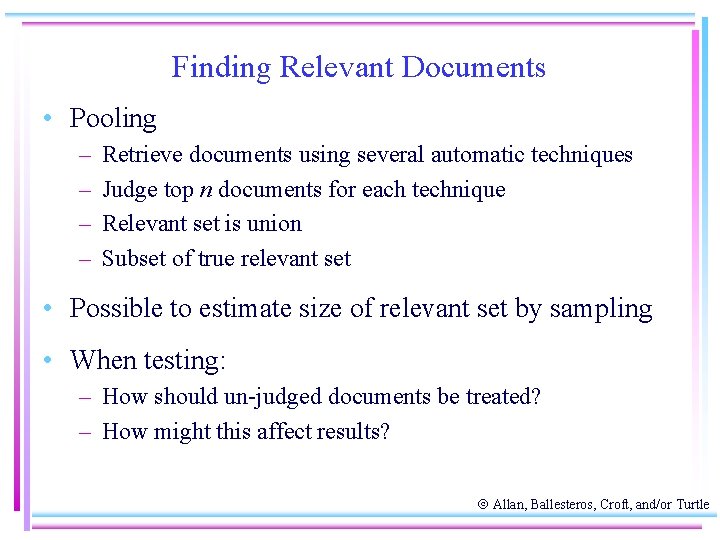

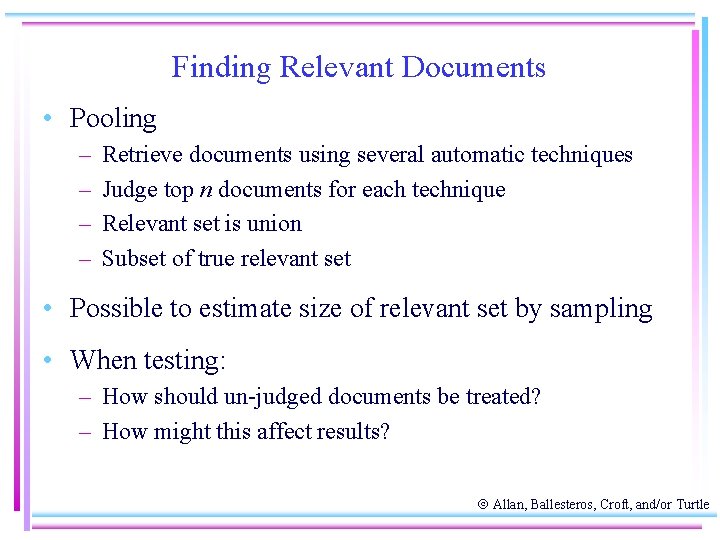

Finding Relevant Documents

• Pooling
  – Retrieve documents using several automatic techniques
  – Judge top n documents for each technique
  – Relevant set is the union of the documents judged relevant
  – This is a subset of the true relevant set
• Possible to estimate size of relevant set by sampling
• When testing:
  – How should un-judged documents be treated?
  – How might this affect results?
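
The pooling steps above can be sketched in a few lines. This is a minimal illustration, not a description of any particular evaluation campaign: the function name `build_pool`, the technique names, and the document IDs are all hypothetical, and the "true" relevant set is included only to show the subset relationship (in practice it is unknown).

```python
def build_pool(runs, n):
    """Union of the top-n documents from each technique's ranked list."""
    pool = set()
    for ranking in runs.values():
        pool.update(ranking[:n])
    return pool


# Hypothetical ranked lists from two automatic retrieval techniques.
runs = {
    "technique_a": ["d1", "d2", "d3", "d4"],
    "technique_b": ["d3", "d5", "d1", "d6"],
}
pool = build_pool(runs, n=2)

# Hypothetical true relevant set; a real collection would not know this.
true_relevant = {"d1", "d5", "d6"}

# Assessors only judge pooled documents, so the judged relevant set
# can only be a subset of the true relevant set.
judged_relevant = pool & true_relevant

# Documents some technique retrieved but nobody judged.
unjudged = set().union(*runs.values()) - pool
```

Here `d6` is relevant but never judged, which is exactly the situation the "how should un-judged documents be treated?" question is about: counting it as non-relevant would penalize `technique_b` for retrieving it.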

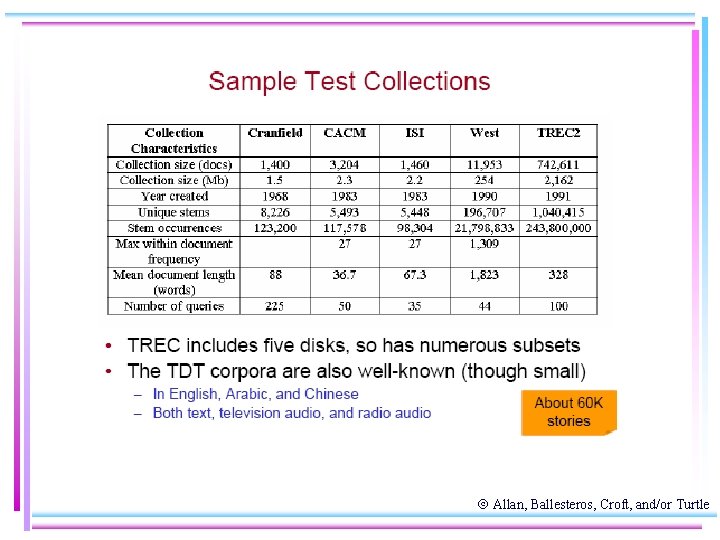

Test Collections

• To compare the performance of two techniques:
  – each technique used to evaluate same queries
  – results (set or ranked list) compared using metric
  – most common measures: precision and recall
• Usually use multiple measures to get different views of performance
• Usually test with multiple collections
  – performance is collection dependent

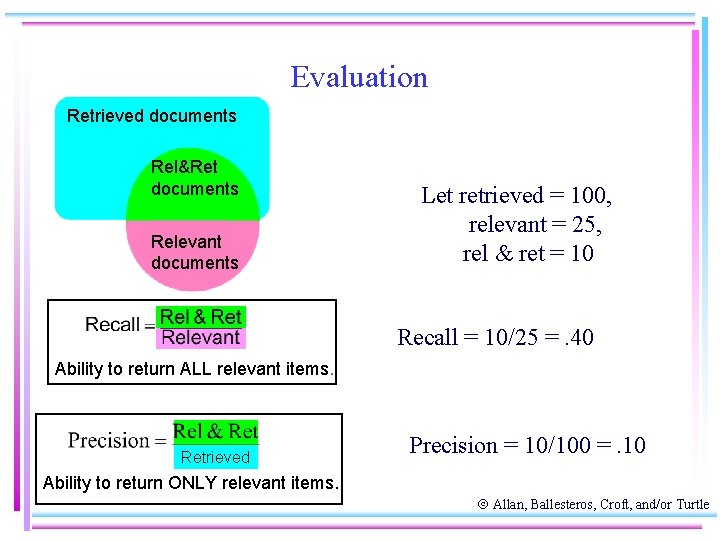

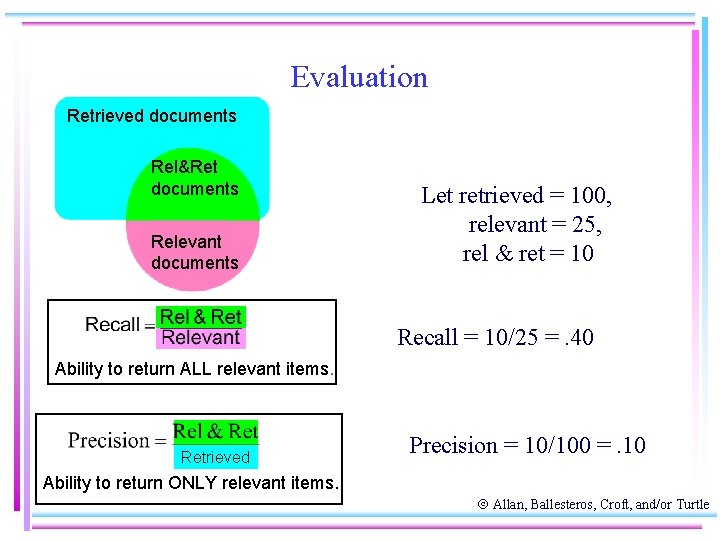

Evaluation

[Figure labels: Retrieved documents, Rel&Ret documents, Relevant documents]

Let retrieved = 100, relevant = 25, rel & ret = 10

• Recall = 10/25 = 0.40: ability to return ALL relevant items
• Precision = 10/100 = 0.10: ability to return ONLY relevant items
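
The same arithmetic can be reproduced with Python sets. This is a small sketch: the integer document IDs are made up so that the set sizes match the slide's numbers (100 retrieved, 25 relevant, 10 in both), and `precision_recall` is a hypothetical helper name.

```python
def precision_recall(retrieved, relevant):
    """Set-based precision and recall; returns 0.0 for an empty denominator."""
    hits = len(retrieved & relevant)
    precision = hits / len(retrieved) if retrieved else 0.0
    recall = hits / len(relevant) if relevant else 0.0
    return precision, recall


retrieved = set(range(100))       # 100 retrieved documents: IDs 0..99
relevant = set(range(90, 115))    # 25 relevant documents: IDs 90..114
# The overlap is IDs 90..99, i.e. 10 relevant-and-retrieved documents.
precision, recall = precision_recall(retrieved, relevant)
```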

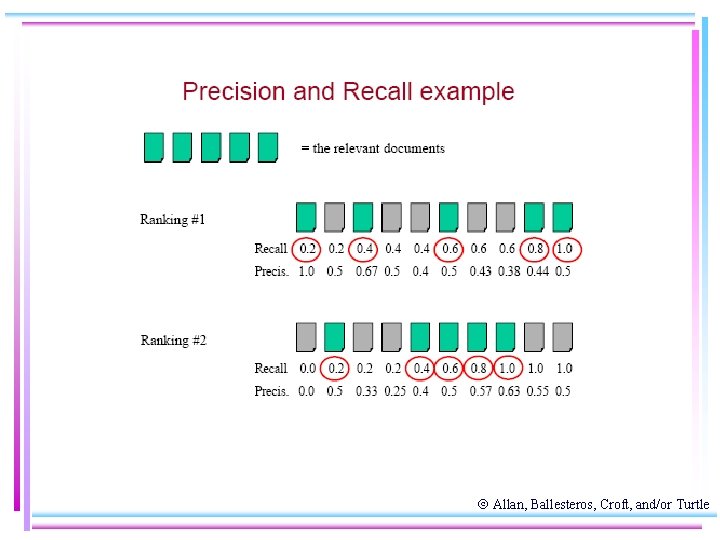

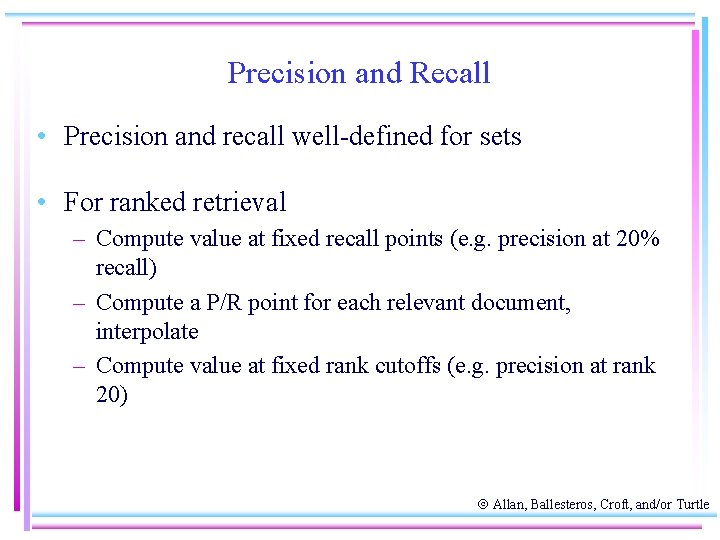

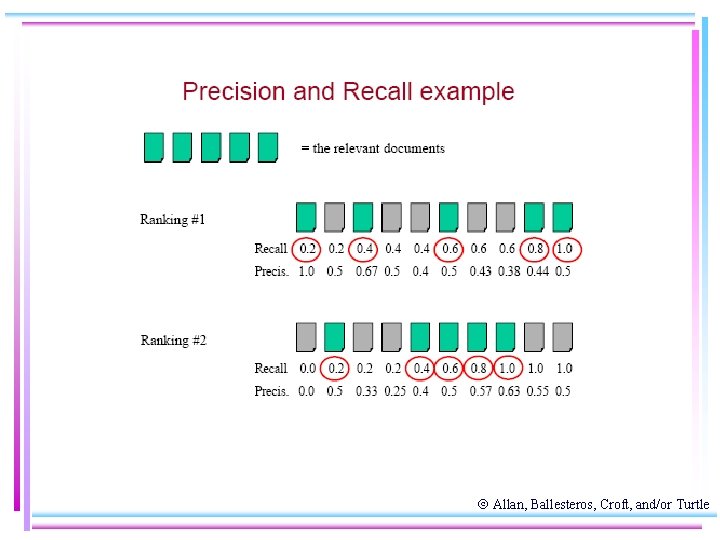

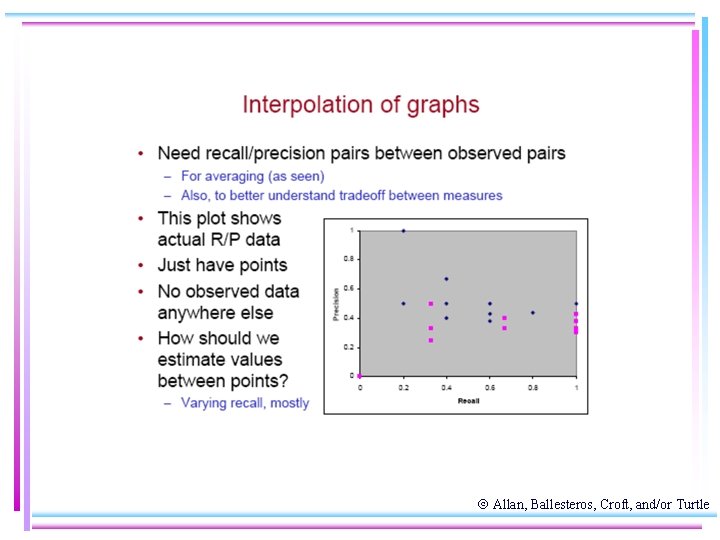

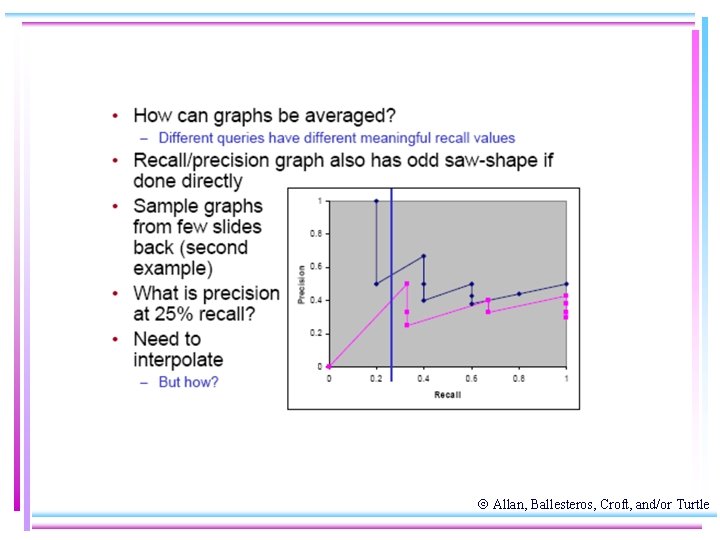

Precision and Recall

• Precision and recall well-defined for sets
• For ranked retrieval
  – Compute value at fixed recall points (e.g., precision at 20% recall)
  – Compute a P/R point for each relevant document, interpolate
  – Compute value at fixed rank cutoffs (e.g., precision at rank 20)
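
Two of the ranked-retrieval options above, sketched under simple assumptions: a ranking is represented as a list of booleans (`True` where the document at that rank is relevant), and the example ranking is invented for illustration. The function names are hypothetical.

```python
def precision_at_k(flags, k):
    """Precision at a fixed rank cutoff k (ranks beyond the list count as misses)."""
    return sum(flags[:k]) / k


def pr_points(flags, num_relevant):
    """One (recall, precision) point for each relevant document in the ranking."""
    points, hits = [], 0
    for rank, is_relevant in enumerate(flags, start=1):
        if is_relevant:
            hits += 1
            points.append((hits / num_relevant, hits / rank))
    return points


# Hypothetical ranking of 10 documents; relevant ones sit at ranks 1, 3, 6, 10.
flags = [True, False, True, False, False, True, False, False, False, True]

p_at_5 = precision_at_k(flags, 5)
points = pr_points(flags, num_relevant=4)
```

The list returned by `pr_points` is the raw material for interpolation: each entry records the recall reached and the precision at the moment a relevant document appears.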

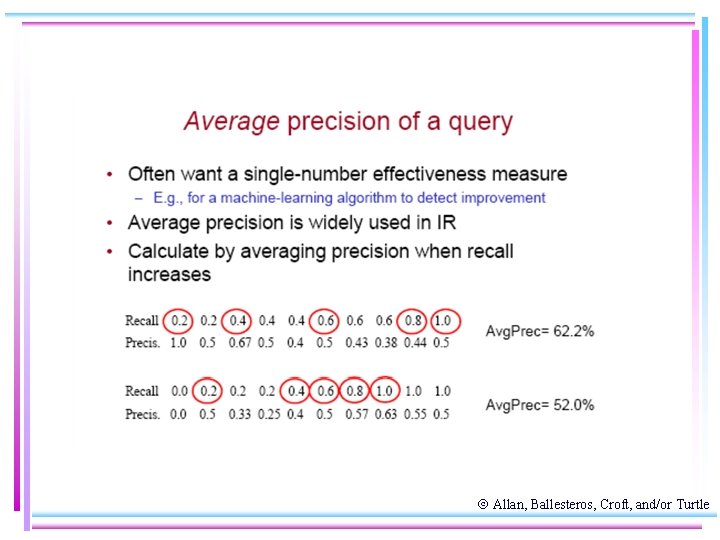

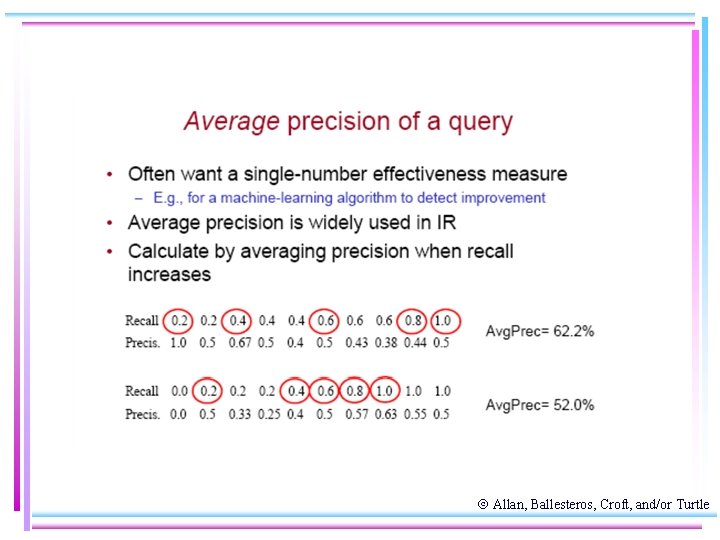

Average Precision for a Query

• Often want a single-number effectiveness measure
• Average precision is widely used in IR
• Calculate by averaging the precision values at the ranks where recall increases (i.e., at each relevant document retrieved)
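
A minimal sketch of that calculation follows. The optional `num_relevant` argument is an assumption added here: a common convention divides by the total number of relevant documents, so that relevant documents never retrieved contribute zero. The example uses relevant documents at ranks 4, 9, and 20, the same example these slides use for interpolation.

```python
def average_precision(flags, num_relevant=None):
    """Mean of the precision values taken at each rank where a relevant doc appears.

    If num_relevant is given, divide by it instead of by the number of
    relevant documents actually retrieved (an assumed convention).
    """
    hits, total = 0, 0.0
    for rank, is_relevant in enumerate(flags, start=1):
        if is_relevant:
            hits += 1
            total += hits / rank
    denominator = num_relevant if num_relevant is not None else hits
    return total / denominator if denominator else 0.0


# Ranking of 20 documents with relevant ones at ranks 4, 9, and 20.
flags = [rank in {4, 9, 20} for rank in range(1, 21)]
ap = average_precision(flags)
```

With these ranks the precision values are 1/4, 2/9, and 3/20, so `ap` comes out near 0.21.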

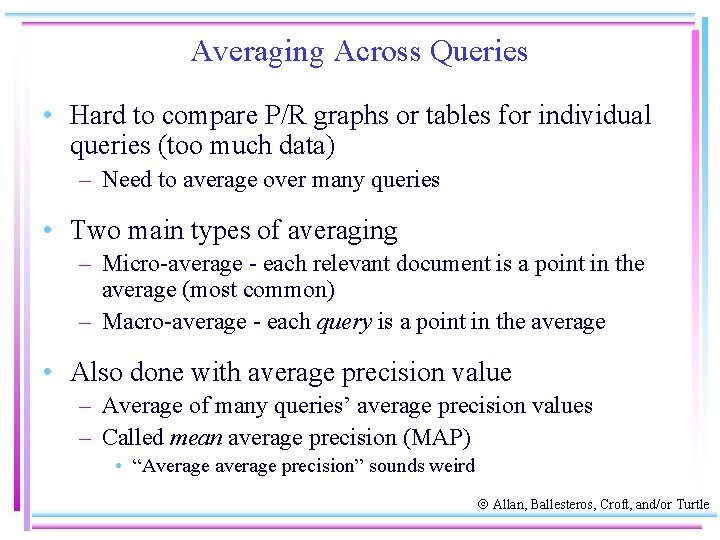

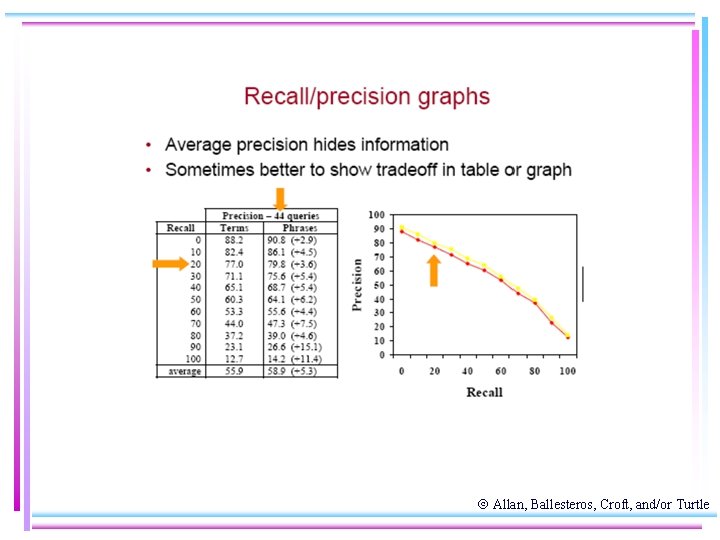

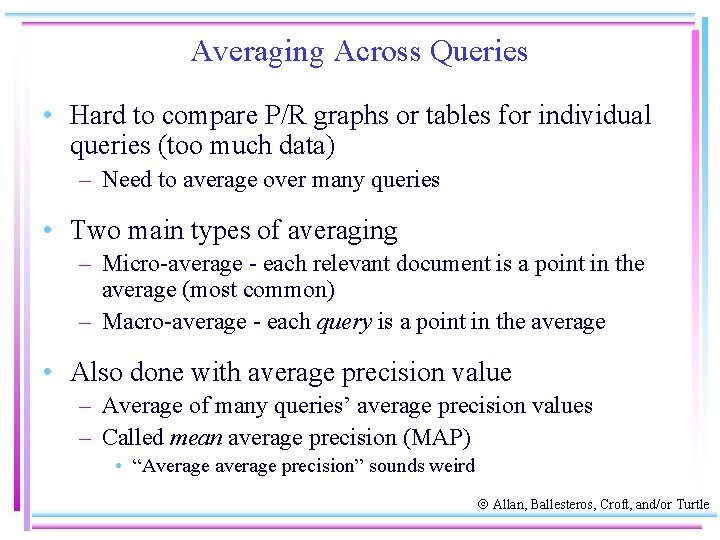

Averaging Across Queries

• Hard to compare P/R graphs or tables for individual queries (too much data)
  – Need to average over many queries
• Two main types of averaging
  – Micro-average: each relevant document is a point in the average (most common)
  – Macro-average: each query is a point in the average
• Also done with average precision value
  – Average of many queries’ average precision values
  – Called mean average precision (MAP)
    • “Average average precision” sounds weird
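
The difference between the two kinds of averaging shows up as soon as queries have different numbers of relevant documents. The sketch below is illustrative only: it assumes every relevant document appears somewhere in the ranking, the two queries are invented, and the function names are hypothetical.

```python
def precisions_at_relevant(flags):
    """Precision at each rank where a relevant document appears."""
    hits, values = 0, []
    for rank, is_relevant in enumerate(flags, start=1):
        if is_relevant:
            hits += 1
            values.append(hits / rank)
    return values


def macro_average(per_query_flags):
    """Each query is one point: the mean of per-query average precision (MAP)."""
    aps = []
    for flags in per_query_flags.values():
        values = precisions_at_relevant(flags)
        aps.append(sum(values) / len(values) if values else 0.0)
    return sum(aps) / len(aps)


def micro_average(per_query_flags):
    """Each relevant document is one point, pooled over all queries."""
    pooled = [
        value
        for flags in per_query_flags.values()
        for value in precisions_at_relevant(flags)
    ]
    return sum(pooled) / len(pooled) if pooled else 0.0


# Hypothetical queries: q1 has two relevant docs, q2 has one.
queries = {
    "q1": [True, False, True],
    "q2": [False, True, False, False],
}
map_score = macro_average(queries)
micro_score = micro_average(queries)
```

Because `q1` contributes two points to the micro-average but only one to the macro-average, the two scores differ (about 0.72 versus about 0.67 here).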

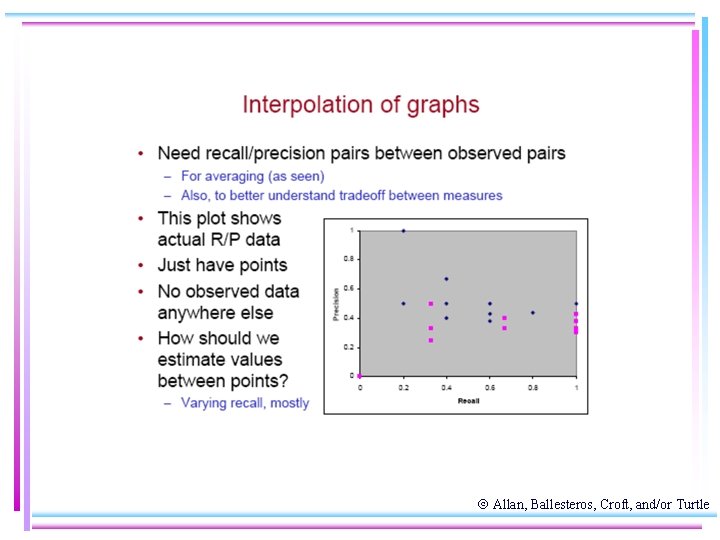

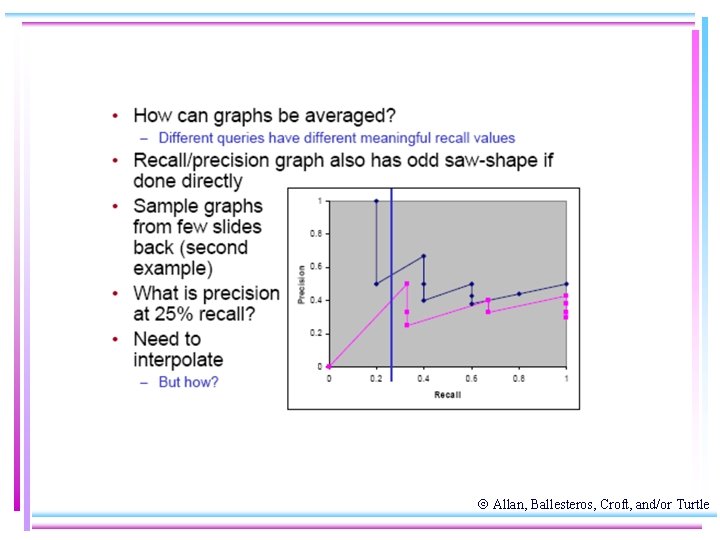

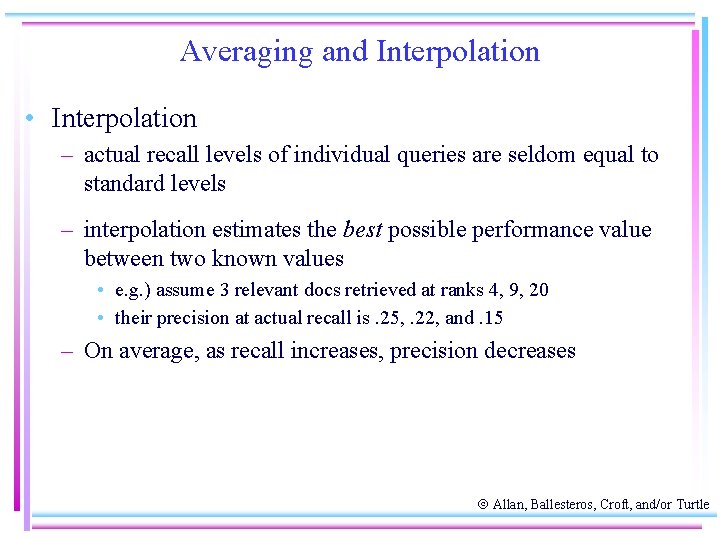

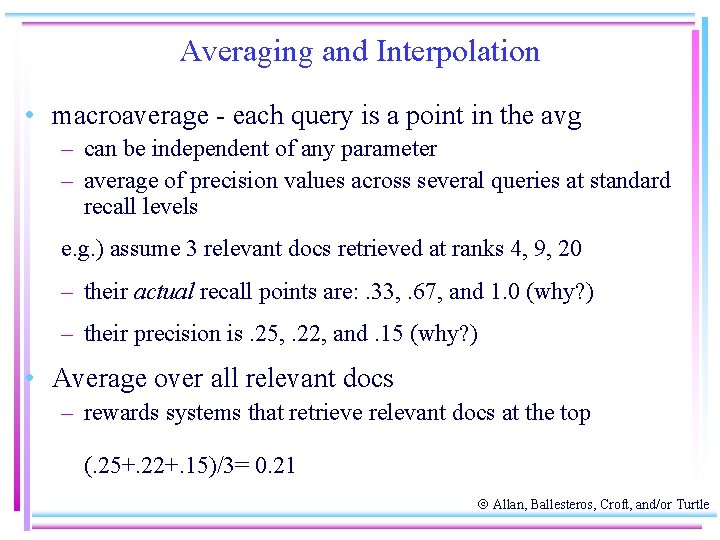

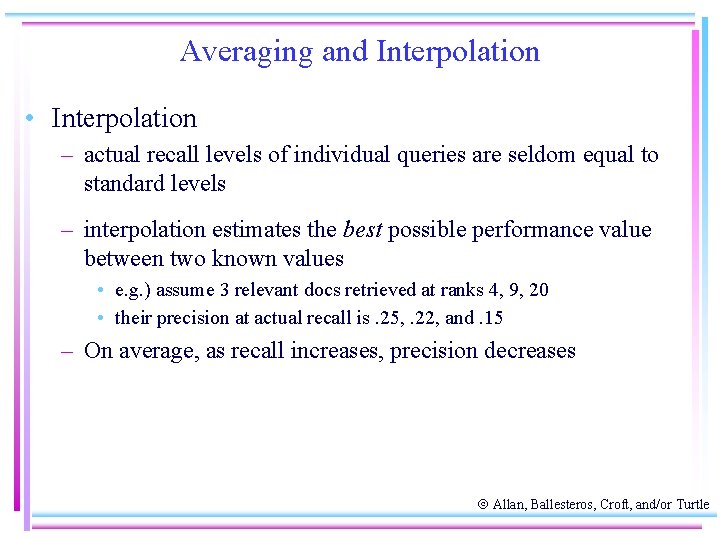

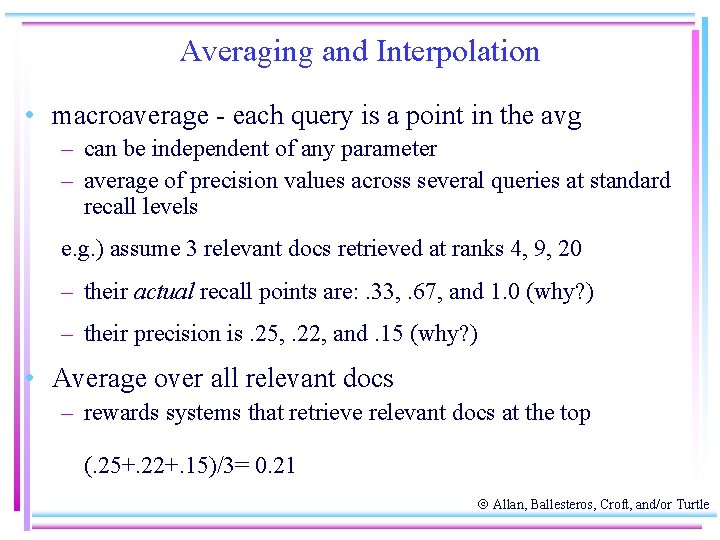

Averaging and Interpolation

• Interpolation
  – actual recall levels of individual queries are seldom equal to standard levels
  – interpolation estimates the best possible performance value between two known values
    • e.g., assume 3 relevant docs retrieved at ranks 4, 9, 20
    • their precision at actual recall is 0.25, 0.22, and 0.15
  – On average, as recall increases, precision decreases

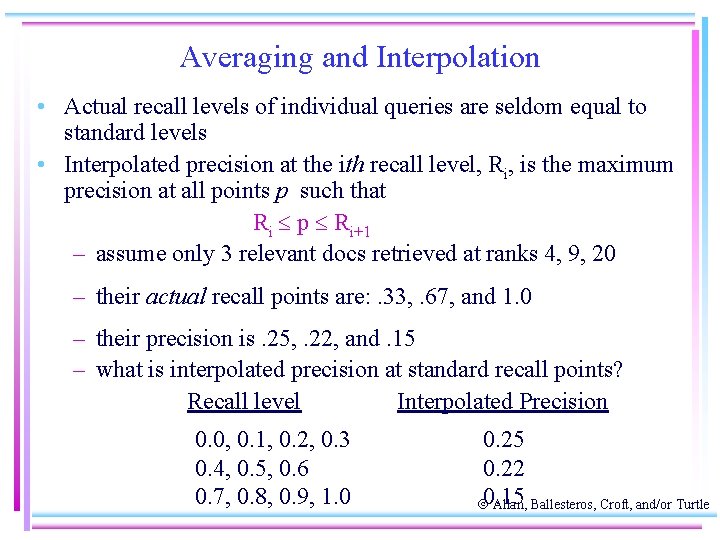

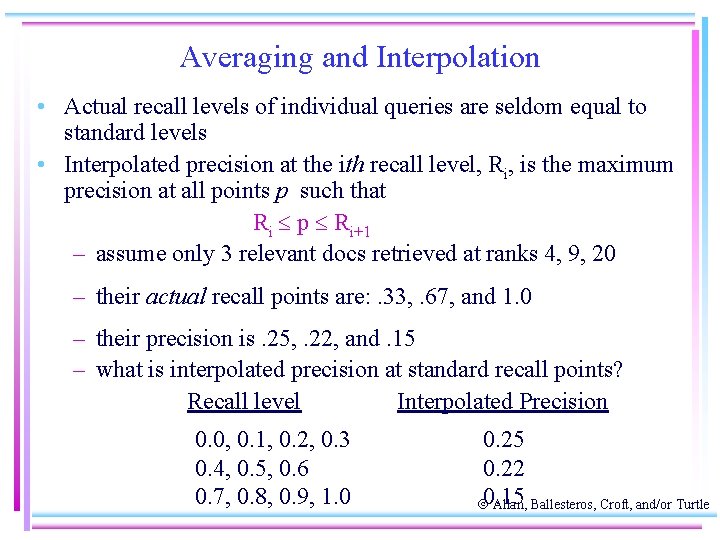

Averaging and Interpolation

• Actual recall levels of individual queries are seldom equal to standard levels
• Interpolated precision at the ith recall level, $R_i$, is the maximum precision at all points $p$ such that $R_i \le p \le R_{i+1}$
  – assume only 3 relevant docs retrieved at ranks 4, 9, 20
  – their actual recall points are: 0.33, 0.67, and 1.0
  – their precision is 0.25, 0.22, and 0.15
  – what is interpolated precision at standard recall points?

| Recall level | Interpolated precision |
|---|---|
| 0.0, 0.1, 0.2, 0.3 | 0.25 |
| 0.4, 0.5, 0.6 | 0.22 |
| 0.7, 0.8, 0.9, 1.0 | 0.15 |
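
The table can be reproduced with a short sketch. Note the assumption: the code uses the widely used rule "interpolated precision at a level is the maximum precision at any recall greater than or equal to that level", which yields the values in the table but is phrased slightly differently from the definition on the slide. `interpolated_precision` is a hypothetical name.

```python
def interpolated_precision(points, levels):
    """For each recall level, the max precision over points with recall >= level.

    points is a list of (recall, precision) pairs; levels with no such
    point get 0.0.
    """
    result = []
    for level in levels:
        candidates = [p for r, p in points if r >= level]
        result.append(max(candidates) if candidates else 0.0)
    return result


# Three relevant documents in total, retrieved at ranks 4, 9, and 20.
ranks = [4, 9, 20]
points = [((i + 1) / len(ranks), (i + 1) / rank) for i, rank in enumerate(ranks)]

levels = [i / 10 for i in range(11)]   # 0.0, 0.1, ..., 1.0
interpolated = interpolated_precision(points, levels)
```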

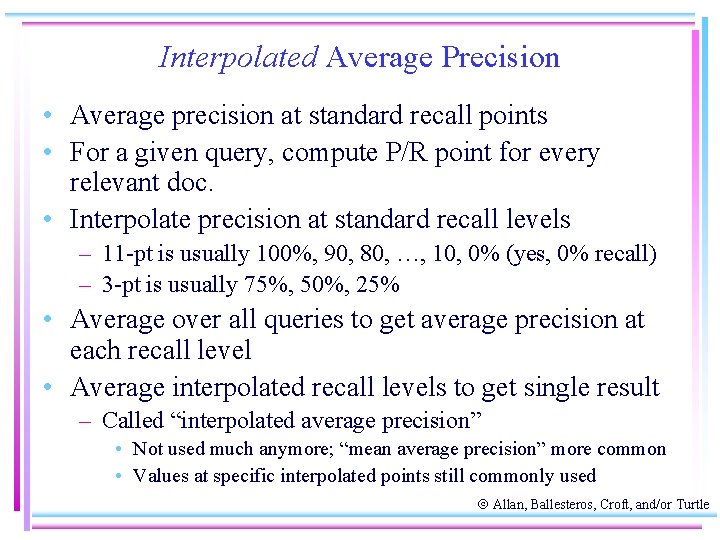

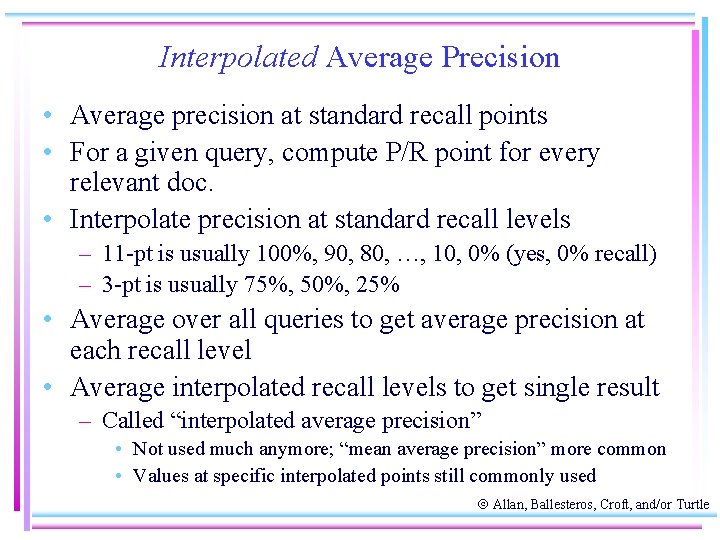

Interpolated Average Precision

• Average precision at standard recall points
• For a given query, compute P/R point for every relevant doc
• Interpolate precision at standard recall levels
  – 11-pt is usually 100%, 90%, 80%, …, 10%, 0% (yes, 0% recall)
  – 3-pt is usually 75%, 50%, 25%
• Average over all queries to get average precision at each recall level
• Average the interpolated precision values across recall levels to get a single result
  – Called “interpolated average precision”
• Not used much anymore; “mean average precision” more common
• Values at specific interpolated points still commonly used
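
Put together, the 11-point procedure might look like the sketch below. It assumes each query is already summarized as a list of (recall, precision) points, uses the "maximum precision at any recall at or above the level" interpolation rule (an assumption, as is every name in the code), and runs on two invented queries.

```python
LEVELS = [i / 10 for i in range(11)]   # 0%, 10%, ..., 100% recall


def interpolate(points):
    """Interpolated precision at each of the 11 standard recall levels."""
    return [
        max((p for r, p in points if r >= level), default=0.0)
        for level in LEVELS
    ]


def interpolated_average_precision(per_query_points):
    """Average over queries at each level, then average across the levels."""
    per_query = [interpolate(points) for points in per_query_points]
    per_level = [sum(column) / len(column) for column in zip(*per_query)]
    return per_level, sum(per_level) / len(per_level)


# Hypothetical queries, each given as (recall, precision) points.
query_1 = [(1 / 3, 1 / 4), (2 / 3, 2 / 9), (1.0, 3 / 20)]
query_2 = [(0.5, 1.0), (1.0, 0.5)]

per_level, single_value = interpolated_average_precision([query_1, query_2])
```

`per_level` is what a recall-precision table reports; `single_value` is the one-number summary.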

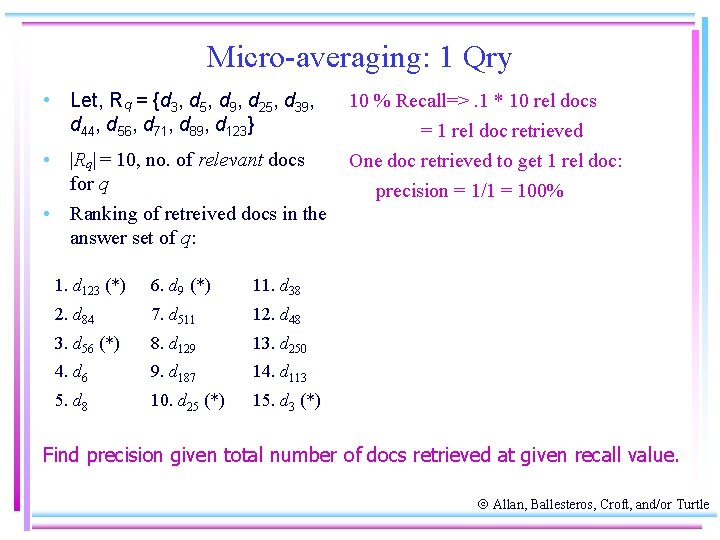

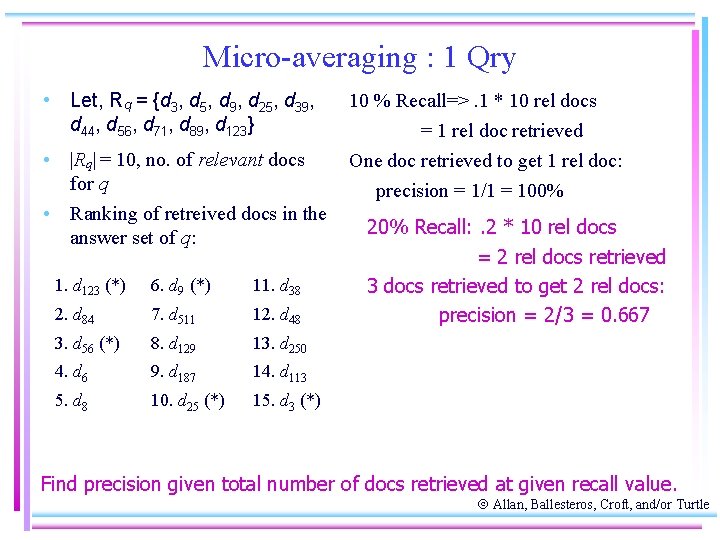

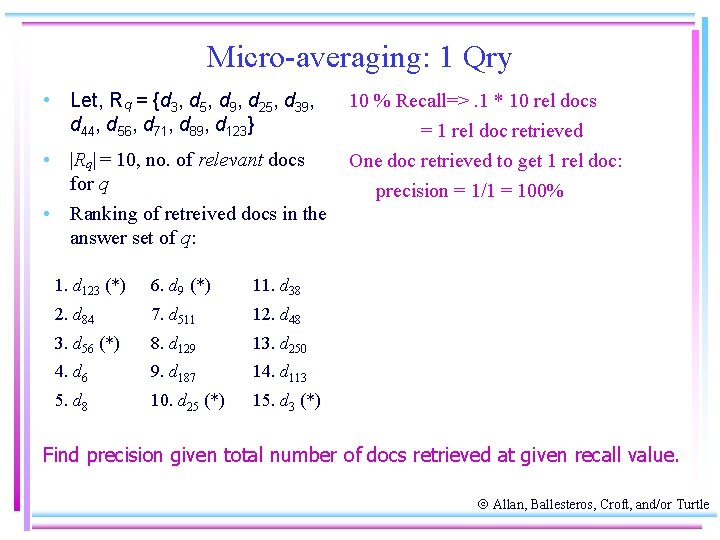

Micro-averaging: 1 Qry

• Let Rq = {d3, d5, d9, d25, d39, d44, d56, d71, d89, d123}
• |Rq| = 10, no. of relevant docs for q
• Ranking of retrieved docs in the answer set of q ((*) marks a relevant doc):

| Ranks 1-5 | Ranks 6-10 | Ranks 11-15 |
|---|---|---|
| 1. d123 (*) | 6. d9 (*) | 11. d38 |
| 2. d84 | 7. d511 | 12. d48 |
| 3. d56 (*) | 8. d129 | 13. d250 |
| 4. d6 | 9. d187 | 14. d113 |
| 5. d8 | 10. d25 (*) | 15. d3 (*) |

10% Recall: 0.1 * 10 rel docs = 1 rel doc retrieved
One doc retrieved to get 1 rel doc: precision = 1/1 = 100%

Find precision given total number of docs retrieved at given recall value.

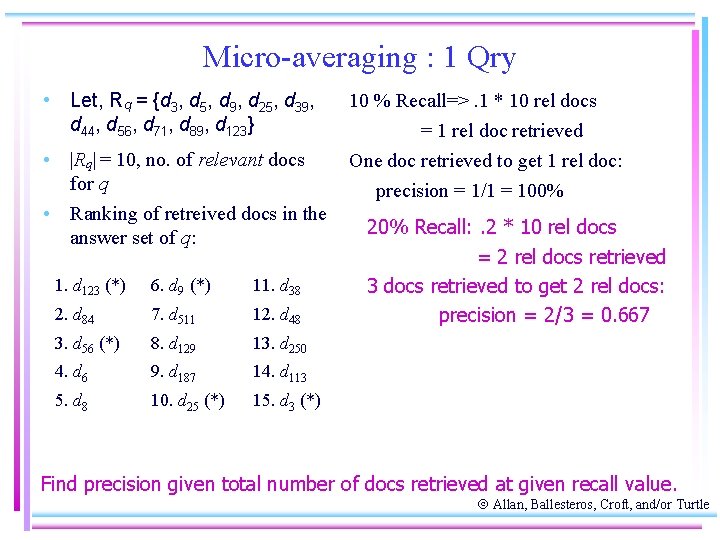

Micro-averaging: 1 Qry (continued)

20% Recall: 0.2 * 10 rel docs = 2 rel docs retrieved
3 docs retrieved to get 2 rel docs: precision = 2/3 = 0.667

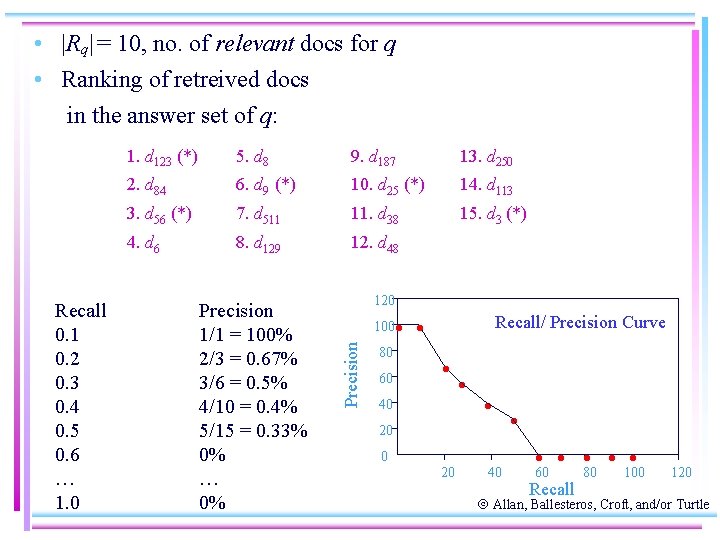

Micro-averaging: 1 Qry (continued)

30% Recall: 0.3 * 10 rel docs = 3 rel docs retrieved
6 docs retrieved to get 3 rel docs: precision = 3/6 = 0.5

What is precision at recall values from 40-100%?
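
The remaining recall levels can be checked mechanically. The sketch below uses the relevant set Rq and the 15-document ranking from this example; the function name is hypothetical, and recall levels that the ranking never reaches are assigned precision 0.

```python
relevant = {"d3", "d5", "d9", "d25", "d39", "d44", "d56", "d71", "d89", "d123"}
ranking = [
    "d123", "d84", "d56", "d6", "d8",
    "d9", "d511", "d129", "d187", "d25",
    "d38", "d48", "d250", "d113", "d3",
]


def precision_at_recall_levels(ranking, relevant):
    """Precision at the rank where each recall level k/|relevant| is first reached.

    Levels that are never reached within the ranking map to 0.0.
    """
    reached = {}   # number of relevant docs found -> precision at that rank
    hits = 0
    for rank, doc in enumerate(ranking, start=1):
        if doc in relevant:
            hits += 1
            reached[hits] = hits / rank
    total = len(relevant)
    return {k / total: reached.get(k, 0.0) for k in range(1, total + 1)}


table = precision_at_recall_levels(ranking, relevant)
values = list(table.values())   # precision at 10%, 20%, ..., 100% recall
```

Only five of the ten relevant documents appear in the top 15, so recall never exceeds 50% and the levels from 60% to 100% get precision 0.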

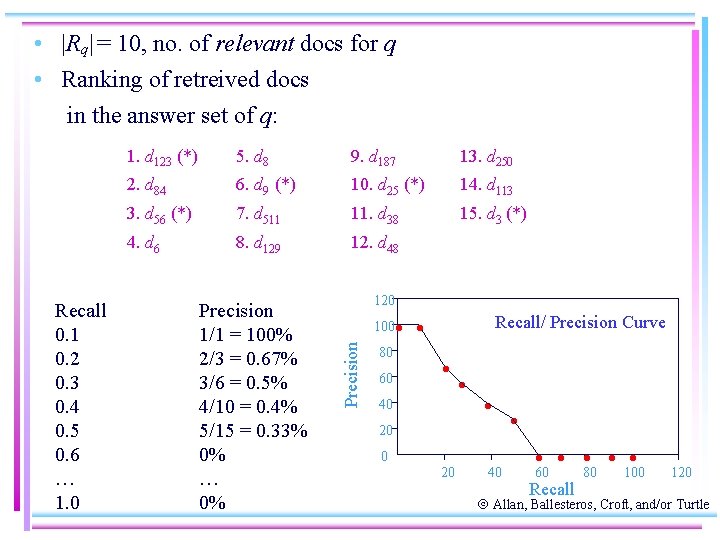

• |Rq| = 10, no. of relevant docs for q
• Ranking of retrieved docs in the answer set of q:

| Ranks 1-5 | Ranks 6-10 | Ranks 11-15 |
|---|---|---|
| 1. d123 (*) | 6. d9 (*) | 11. d38 |
| 2. d84 | 7. d511 | 12. d48 |
| 3. d56 (*) | 8. d129 | 13. d250 |
| 4. d6 | 9. d187 | 14. d113 |
| 5. d8 | 10. d25 (*) | 15. d3 (*) |

| Recall | Precision |
|---|---|
| 0.1 | 1/1 = 100% |
| 0.2 | 2/3 = 67% |
| 0.3 | 3/6 = 50% |
| 0.4 | 4/10 = 40% |
| 0.5 | 5/15 = 33% |
| 0.6 … 1.0 | 0% |

[Figure: Recall/Precision curve; x-axis Recall, y-axis Precision, both in percent]

Averaging and Interpolation

• Macro-average: each query is a point in the average
  – can be independent of any parameter
  – average of precision values across several queries at standard recall levels
• e.g., assume 3 relevant docs retrieved at ranks 4, 9, 20
  – their actual recall points are: 0.33, 0.67, and 1.0 (why?)
  – their precision is 0.25, 0.22, and 0.15 (why?)
• Average over all relevant docs
  – rewards systems that retrieve relevant docs at the top
  – (0.25 + 0.22 + 0.15)/3 = 0.21
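
The two "(why?)" prompts can be answered by direct arithmetic, assuming the query has exactly 3 relevant documents in total. When the k-th relevant document is found at rank n, recall is k/3 and precision is k/n:

```latex
\begin{align*}
\text{Recall:}\quad    & \tfrac{1}{3} \approx 0.33, \quad \tfrac{2}{3} \approx 0.67, \quad \tfrac{3}{3} = 1.0 \\
\text{Precision:}\quad & \tfrac{1}{4} = 0.25, \quad \tfrac{2}{9} \approx 0.22, \quad \tfrac{3}{20} = 0.15 \\
\text{Average:}\quad   & \frac{0.25 + 0.22 + 0.15}{3} \approx 0.21
\end{align*}
```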

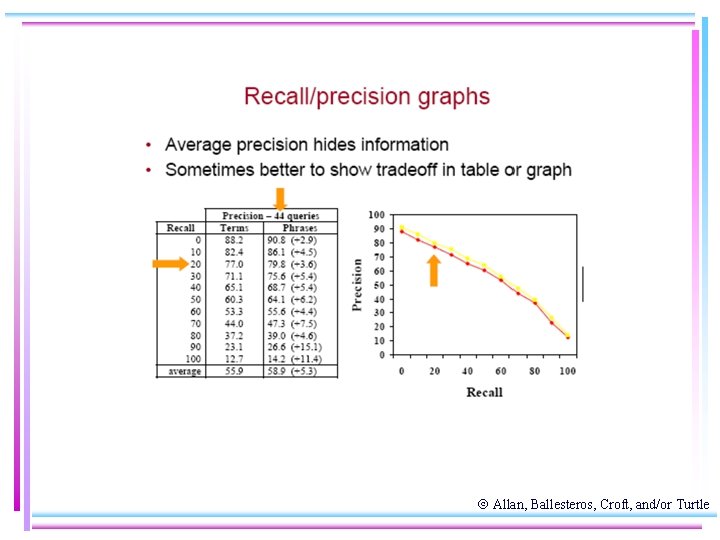

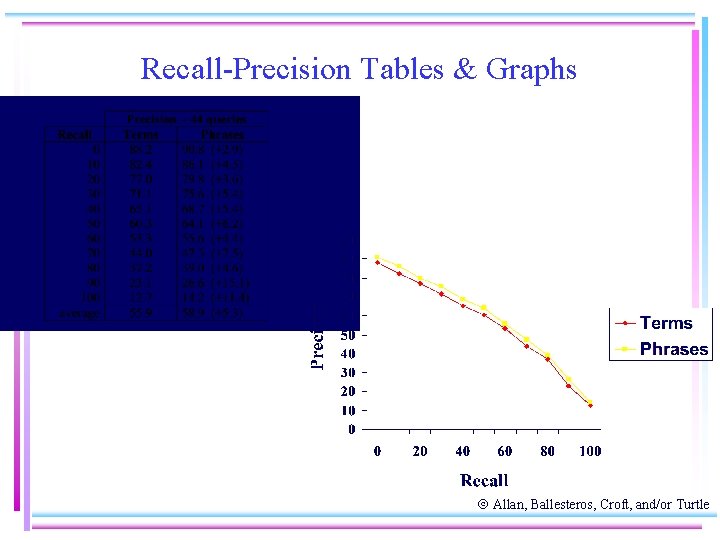

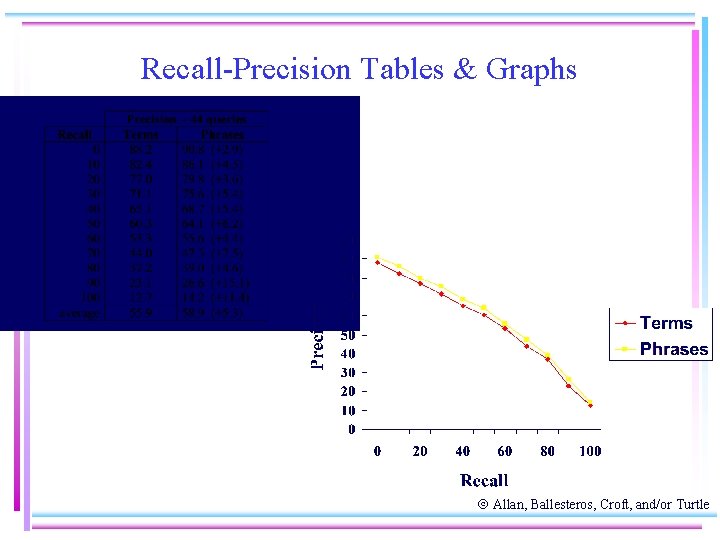

Recall-Precision Tables & Graphs

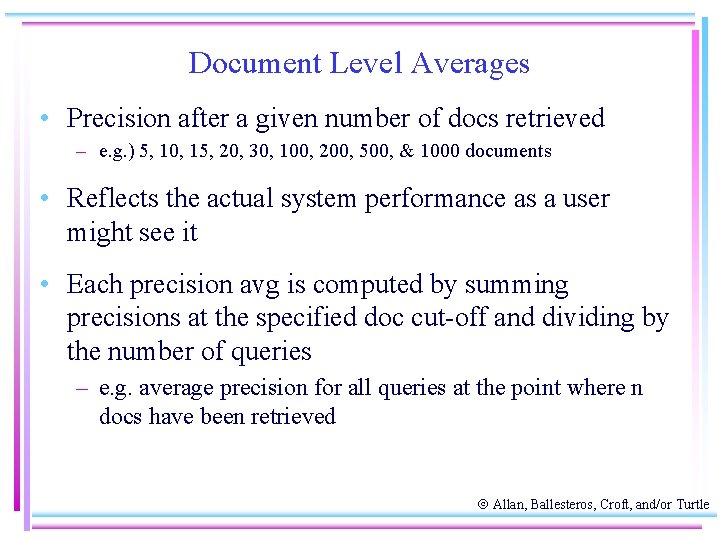

Document Level Averages

• Precision after a given number of docs retrieved
  – e.g., 5, 10, 15, 20, 30, 100, 200, 500, & 1000 documents
• Reflects the actual system performance as a user might see it
• Each precision avg is computed by summing precisions at the specified doc cut-off and dividing by the number of queries
  – e.g., average precision for all queries at the point where n docs have been retrieved
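
As a sketch of that computation, with rankings again represented as lists of relevance flags: both queries and the cutoffs 5 and 10 below are made up for illustration, and the function names are hypothetical.

```python
def precision_at_k(flags, k):
    """Fraction of the top k results that are relevant."""
    return sum(flags[:k]) / k


def document_level_averages(per_query_flags, cutoffs):
    """Mean precision over all queries at each document cutoff."""
    n_queries = len(per_query_flags)
    return {
        k: sum(precision_at_k(flags, k) for flags in per_query_flags) / n_queries
        for k in cutoffs
    }


# Two hypothetical queries, 10 retrieved documents each.
per_query_flags = [
    [True, False, True, False, False, True, False, False, False, True],
    [True, True, False, False, True, False, False, False, False, False],
]
averages = document_level_averages(per_query_flags, cutoffs=[5, 10])
```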

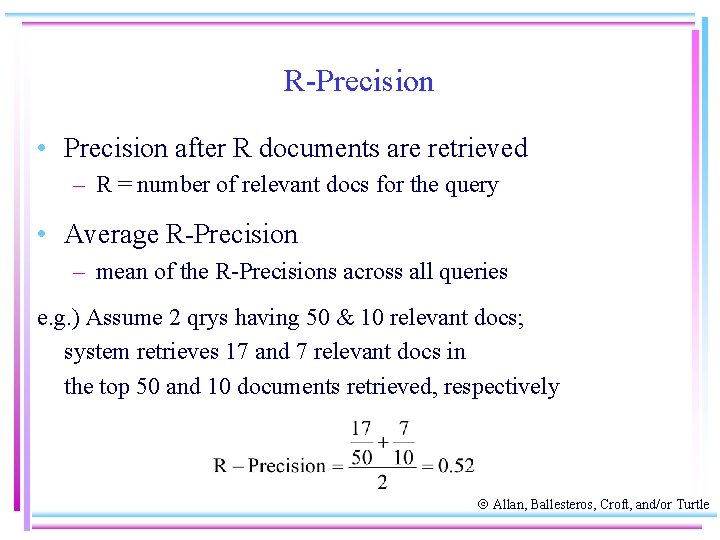

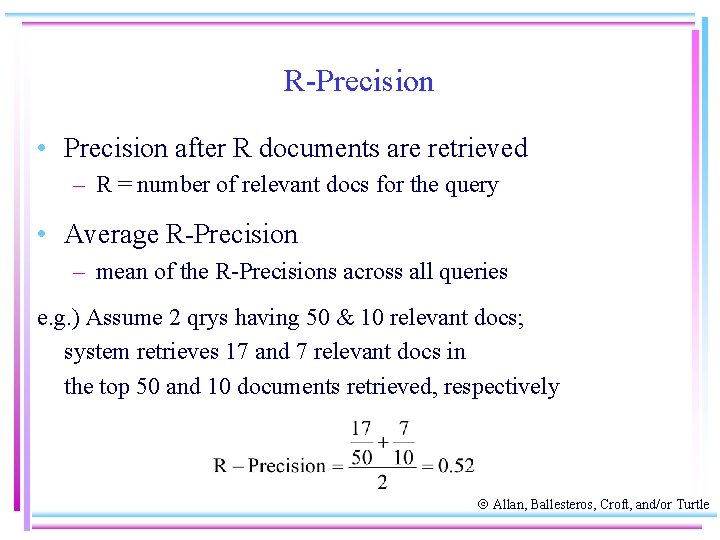

R-Precision

• Precision after R documents are retrieved
  – R = number of relevant docs for the query
• Average R-Precision
  – mean of the R-Precisions across all queries
• e.g., assume 2 queries having 50 & 10 relevant docs; the system retrieves 17 and 7 relevant docs in the top 50 and top 10 documents retrieved, respectively
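
The example can be worked through in code. The document IDs below are synthetic and constructed only so that the counts match the example (17 of 50 and 7 of 10 relevant documents in the top R); `r_precision` is a hypothetical helper name.

```python
def r_precision(ranking, relevant):
    """Precision over the top R results, where R is the number of relevant docs."""
    r = len(relevant)
    if r == 0:
        return 0.0
    return sum(1 for doc in ranking[:r] if doc in relevant) / r


# Query 1: 50 relevant docs, 17 of them in the top 50.
relevant_1 = {f"rel{i}" for i in range(50)}
ranking_1 = [f"rel{i}" for i in range(17)] + [f"non{i}" for i in range(33)]

# Query 2: 10 relevant docs, 7 of them in the top 10.
relevant_2 = {f"rel{i}" for i in range(10)}
ranking_2 = [f"rel{i}" for i in range(7)] + [f"non{i}" for i in range(3)]

rp_1 = r_precision(ranking_1, relevant_1)   # 17/50
rp_2 = r_precision(ranking_2, relevant_2)   # 7/10
average_rp = (rp_1 + rp_2) / 2
```

The R-Precisions are 0.34 and 0.70, so the average R-Precision for this example is 0.52.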

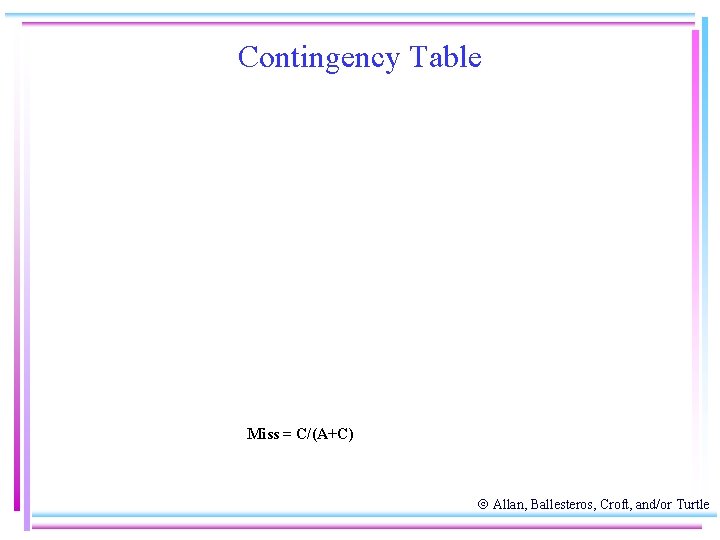

Evaluation

• Recall-Precision value pairs may co-vary in ways that are hard to understand
• Would like to find composite measures
  – A single number measure of effectiveness
    • primarily ad hoc and not theoretically justifiable
• Some attempt to invent measures that combine parts of the contingency table into a single number measure

Contingency Table

Miss = C/(A+C)
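
The table itself did not survive in the text, so the sketch below assumes the usual cell layout: A = relevant and retrieved, B = non-relevant and retrieved, C = relevant and not retrieved, D = non-relevant and not retrieved. That layout is consistent with Miss = C/(A+C) but is an assumption, as are the function name and the collection size of 1000 used in the example.

```python
def contingency_measures(a, b, c, d):
    """Measures derived from an assumed 2x2 contingency table.

    a: relevant and retrieved        b: non-relevant and retrieved
    c: relevant and not retrieved    d: non-relevant and not retrieved
    """
    return {
        "precision": a / (a + b),
        "recall": a / (a + c),
        "miss": c / (a + c),
        "fallout": b / (b + d),
    }


# 100 retrieved, 25 relevant, 10 in both, in a hypothetical 1000-doc collection.
measures = contingency_measures(a=10, b=90, c=15, d=885)
```

Under this layout, miss is simply 1 minus recall: 15 of the 25 relevant documents were not retrieved, giving 0.6.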

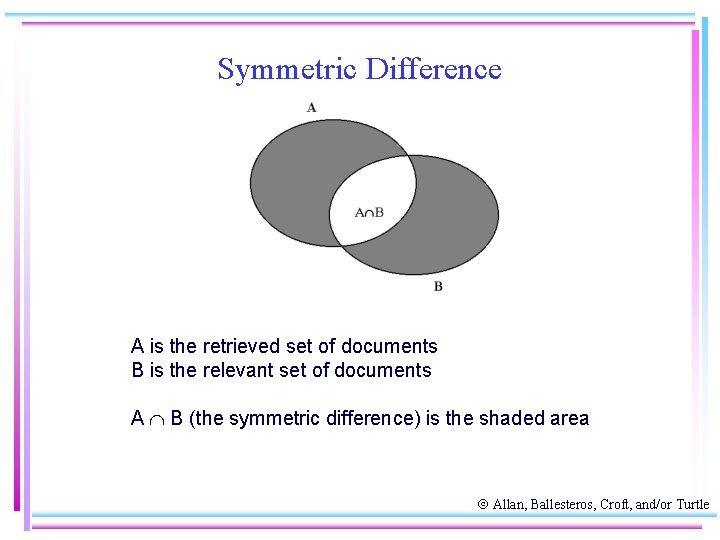

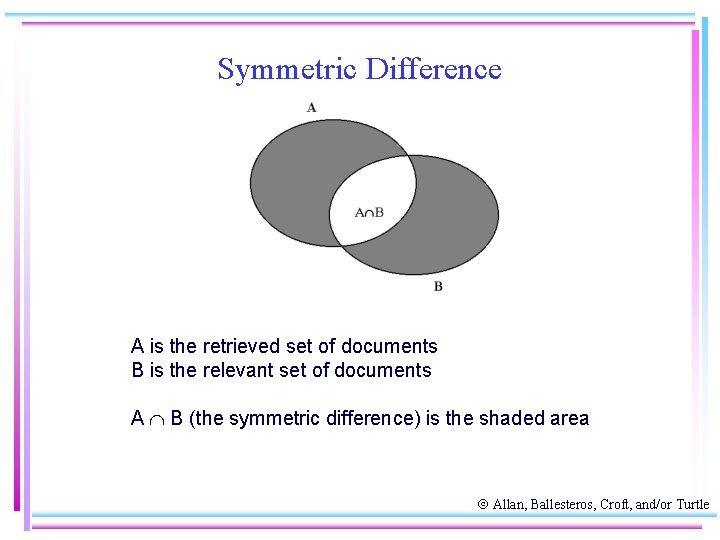

Symmetric Difference

• A is the retrieved set of documents
• B is the relevant set of documents
• $A \,\Delta\, B$ (the symmetric difference) is the shaded area

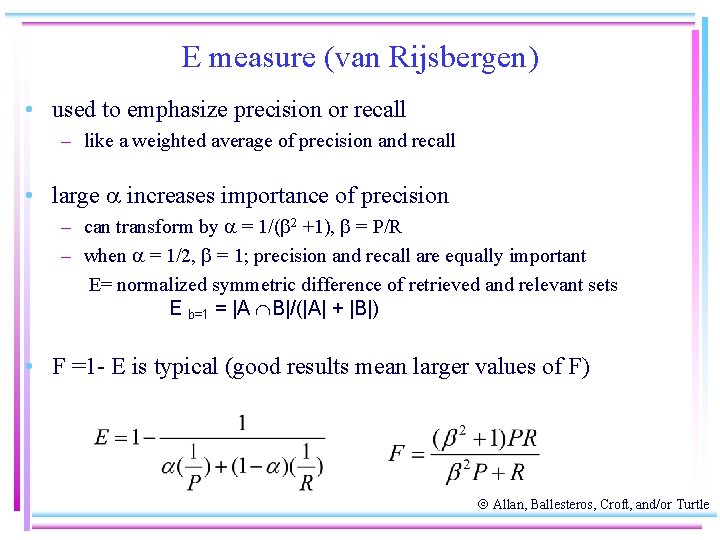

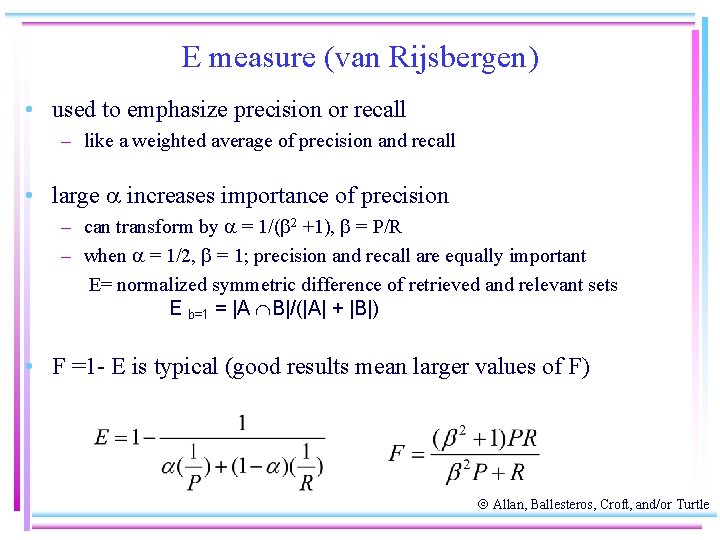

E measure (van Rijsbergen)

• used to emphasize precision or recall
  – like a weighted average of precision and recall
• large $\alpha$ increases importance of precision
  – can transform by $\alpha = 1/(\beta^2 + 1)$, $\beta = P/R$
  – when $\alpha = 1/2$, $\beta = 1$; precision and recall are equally important
• E = normalized symmetric difference of retrieved and relevant sets
  – $E_{\beta=1} = |A \,\Delta\, B| / (|A| + |B|)$
• F = 1 - E is typical (good results mean larger values of F)
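
A short numerical check of these relationships follows. The formula for E does not appear in the surviving slide text; the sketch assumes van Rijsbergen's usual definition, E = 1 - 1/(α/P + (1-α)/R), and the set sizes (100 retrieved, 25 relevant, 10 in both) are illustrative. Function names are hypothetical.

```python
def alpha_from_beta(beta):
    """Convert the beta parameter to the weight alpha = 1 / (beta^2 + 1)."""
    return 1.0 / (beta ** 2 + 1.0)


def e_measure(precision, recall, alpha=0.5):
    """Assumed definition: E = 1 - 1 / (alpha/P + (1 - alpha)/R)."""
    if precision == 0 or recall == 0:
        return 1.0
    return 1.0 - 1.0 / (alpha / precision + (1.0 - alpha) / recall)


retrieved = set(range(100))       # set A
relevant = set(range(90, 115))    # set B, overlapping A in 10 documents
hits = len(retrieved & relevant)
p = hits / len(retrieved)
r = hits / len(relevant)

# With beta = 1 (alpha = 1/2), E equals the normalized symmetric difference.
e_balanced = e_measure(p, r, alpha_from_beta(1.0))
e_from_sets = len(retrieved ^ relevant) / (len(retrieved) + len(relevant))
f_balanced = 1.0 - e_balanced
```

Both routes give E = 0.84 for this example, so F = 0.16.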

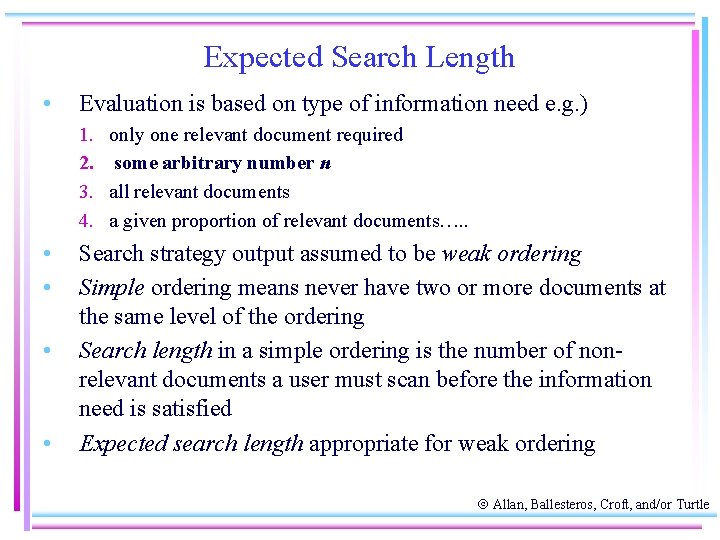

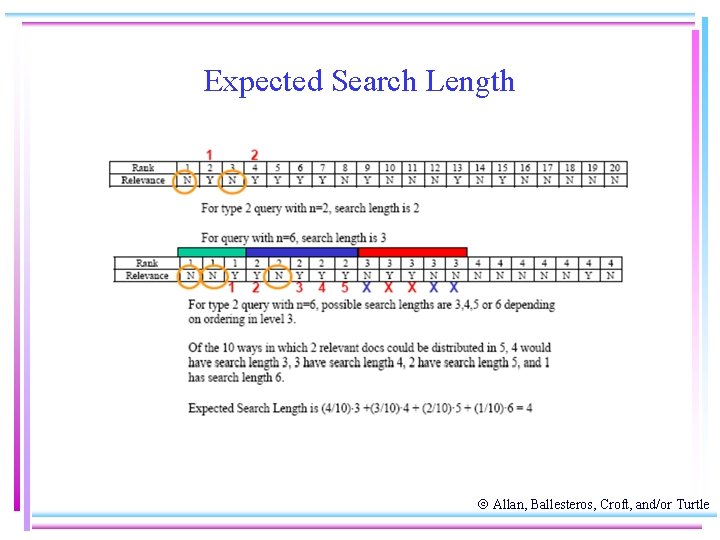

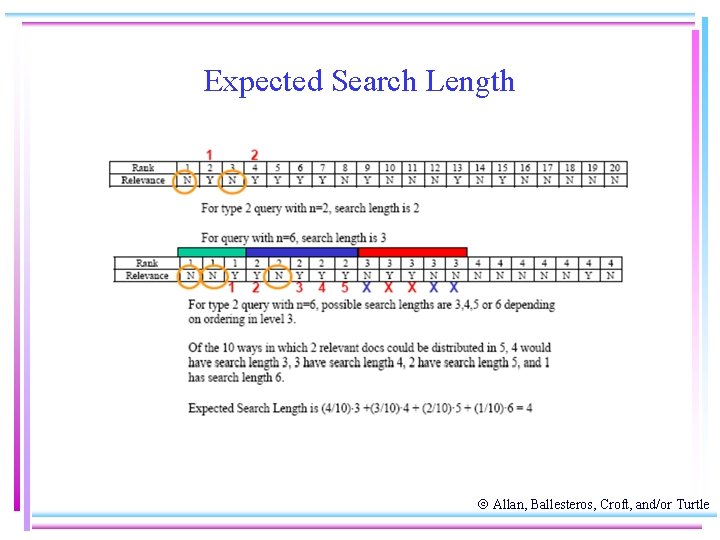

Expected Search Length

• Evaluation is based on type of information need, e.g.,
  1. only one relevant document required
  2. some arbitrary number n
  3. all relevant documents
  4. a given proportion of relevant documents
• Search strategy output assumed to be weak ordering
• Simple ordering means never have two or more documents at the same level of the ordering
• Search length in a simple ordering is the number of nonrelevant documents a user must scan before the information need is satisfied
• Expected search length appropriate for weak ordering
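
Both quantities can be sketched briefly. `search_length` follows the definition above for a simple ordering. `expected_search_length` handles a weak ordering using the standard closed form for the final level, j + i·s/(r + 1), where j is the number of nonrelevant documents in earlier levels, and the final level holds r relevant and i nonrelevant documents of which s relevant ones are still needed. That formula is not stated in the slide text and is included here as an assumption; all names and example data are hypothetical.

```python
def search_length(flags, needed):
    """Nonrelevant docs scanned before `needed` relevant docs are found.

    flags is a simple (strict) ordering of relevance flags; needed >= 1.
    Returns None if the ranking cannot satisfy the need.
    """
    found = nonrelevant = 0
    for is_relevant in flags:
        if is_relevant:
            found += 1
            if found == needed:
                return nonrelevant
        else:
            nonrelevant += 1
    return None


def expected_search_length(levels, needed):
    """Expected search length for a weak ordering.

    levels is a list of (num_relevant, num_nonrelevant) per level, best first.
    Documents within a level are assumed to be scanned in random order.
    """
    skipped_nonrelevant = 0
    still_needed = needed
    for num_rel, num_nonrel in levels:
        if num_rel >= still_needed:
            return skipped_nonrelevant + num_nonrel * still_needed / (num_rel + 1)
        still_needed -= num_rel
        skipped_nonrelevant += num_nonrel
    return None


simple = search_length([False, False, True, False, True], needed=2)
weak = expected_search_length([(0, 2), (2, 4)], needed=1)
```

When every level holds a single document, the weak-ordering function reduces to the simple search length, which is a useful sanity check.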

Expected Search Length

Other Single-Valued Measures

• Breakeven point
  – point at which precision = recall
• Swets model
  – use statistical decision theory to express recall, precision, and fallout in terms of conditional probabilities
• Utility measures
  – assign costs to each cell in the contingency table
  – sum (or average) costs for all queries
• Many others...