
Evaluation Chapter 10

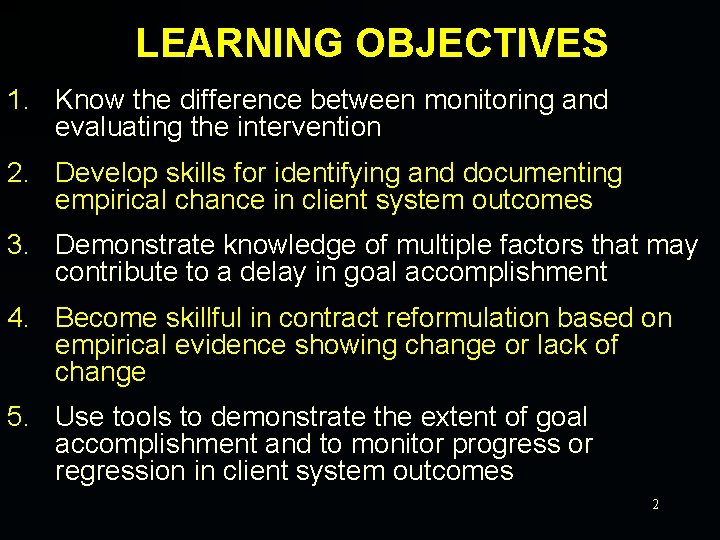

LEARNING OBJECTIVES

1. Know the difference between monitoring and evaluating the intervention.
2. Develop skills for identifying and documenting empirical change in client system outcomes.
3. Demonstrate knowledge of the multiple factors that may contribute to a delay in goal accomplishment.
4. Become skillful in contract reformulation based on empirical evidence showing change or lack of change.
5. Use tools to demonstrate the extent of goal accomplishment and to monitor progress or regression in client system outcomes.

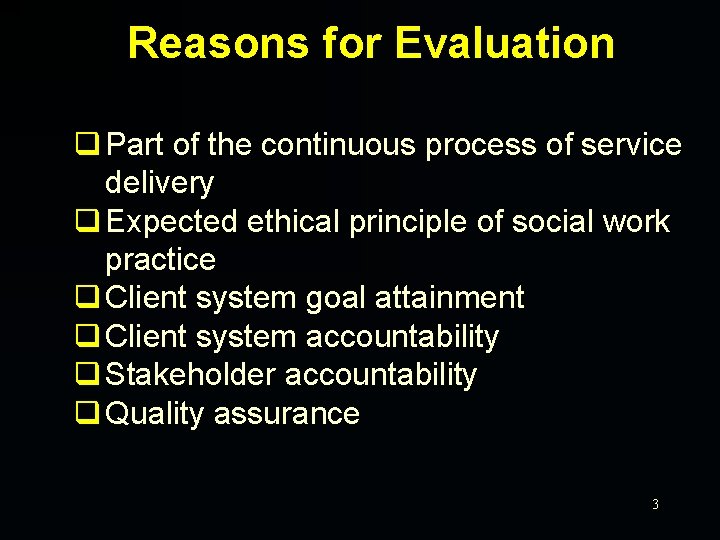

Reasons for Evaluation

- Part of the continuous process of service delivery
- Expected ethical principle of social work practice
- Client system goal attainment
- Client system accountability
- Stakeholder accountability
- Quality assurance

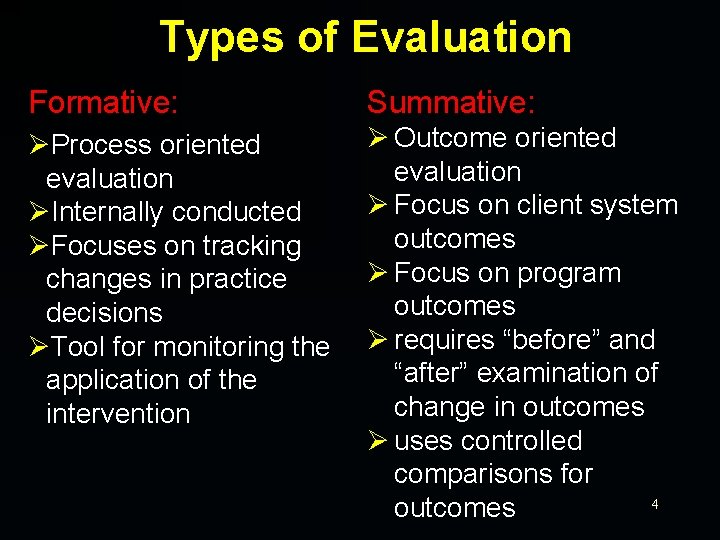

Types of Evaluation

Formative:
- Process-oriented evaluation
- Internally conducted
- Focuses on tracking changes in practice decisions
- Tool for monitoring the application of the intervention

Summative:
- Outcome-oriented evaluation
- Focuses on client system outcomes
- Focuses on program outcomes
- Requires a “before” and “after” examination of change in outcomes
- Uses controlled comparisons for outcomes

FORMATIVE VS. SUMMATIVE

| | Formative Evaluation | Summative Evaluation |
|---|---|---|
| Focus | Practice or program improvement | Practice or program accountability |
| General question | Is the practice or program being implemented as planned to achieve set goals? | Did this practice or program contribute to the planned impact and justify the resources utilized? |
| Evaluation questions | What are the strengths and weaknesses? What is working and not working? Why is it working or not working? How should it be improved? | What are the program results? Did the intended client or system benefit from the program? Was the program or intervention cost-effective? Is it worth continuing this program or practice? |

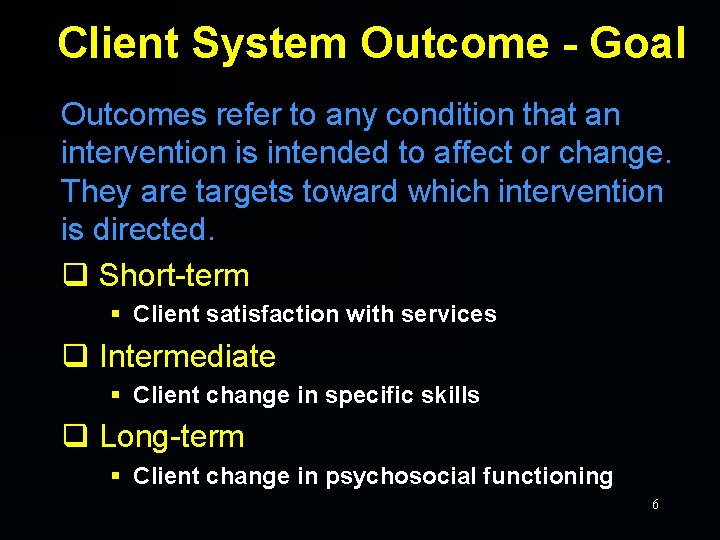

Client System Outcome - Goal

Outcomes refer to any condition that an intervention is intended to affect or change; they are the targets toward which the intervention is directed.

- Short-term: client satisfaction with services
- Intermediate: client change in specific skills
- Long-term: client change in psychosocial functioning

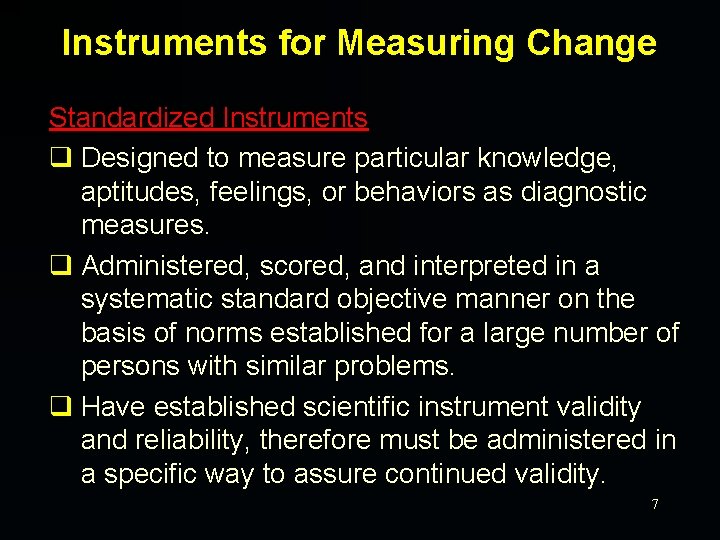

Instruments for Measuring Change: Standardized Instruments

- Designed to measure particular knowledge, aptitudes, feelings, or behaviors as diagnostic measures.
- Administered, scored, and interpreted in a systematic, standard, objective manner on the basis of norms established for a large number of persons with similar problems.
- Have established scientific validity and reliability; therefore, they must be administered in a specific way to preserve that validity.

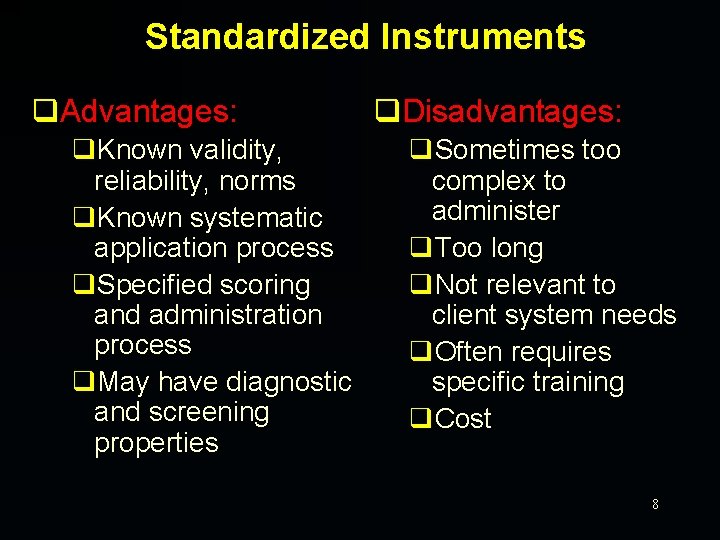

Standardized Instruments

Advantages:
- Known validity, reliability, and norms
- Known, systematic application process
- Specified scoring and administration process
- May have diagnostic and screening properties

Disadvantages:
- Sometimes too complex to administer
- Too long
- Not relevant to client system needs
- Often require specific training
- Cost

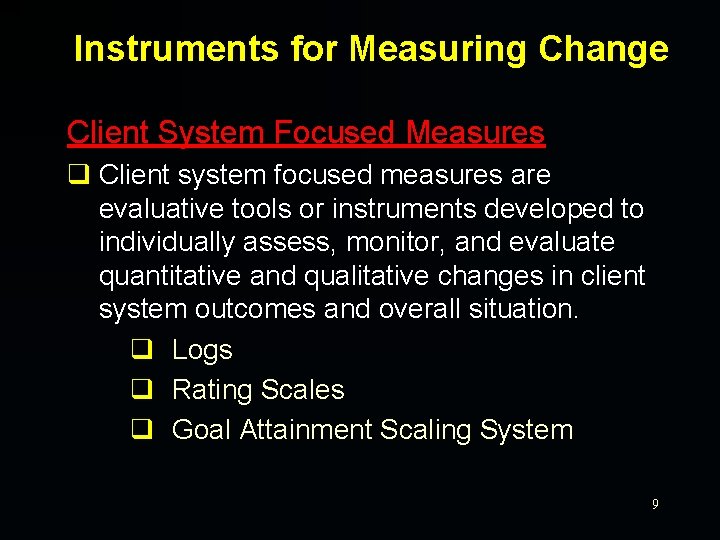

Instruments for Measuring Change: Client System Focused Measures

Client system focused measures are evaluative tools or instruments developed to individually assess, monitor, and evaluate quantitative and qualitative changes in client system outcomes and the overall situation. Examples include:
- Logs
- Rating scales
- Goal Attainment Scaling System

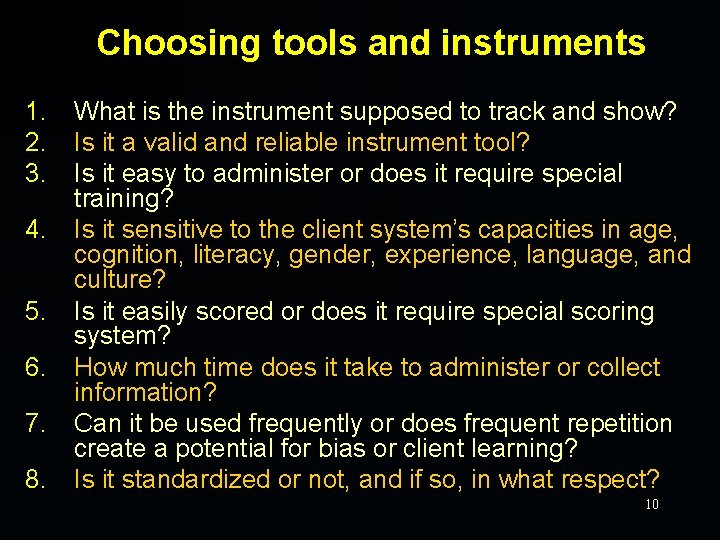

Choosing Tools and Instruments

1. What is the instrument supposed to track and show?
2. Is it a valid and reliable instrument?
3. Is it easy to administer, or does it require special training?
4. Is it sensitive to the client system’s capacities in age, cognition, literacy, gender, experience, language, and culture?
5. Is it easily scored, or does it require a special scoring system?
6. How much time does it take to administer or to collect information?
7. Can it be used frequently, or does frequent repetition create a potential for bias or client learning?
8. Is it standardized or not, and if so, in what respect?

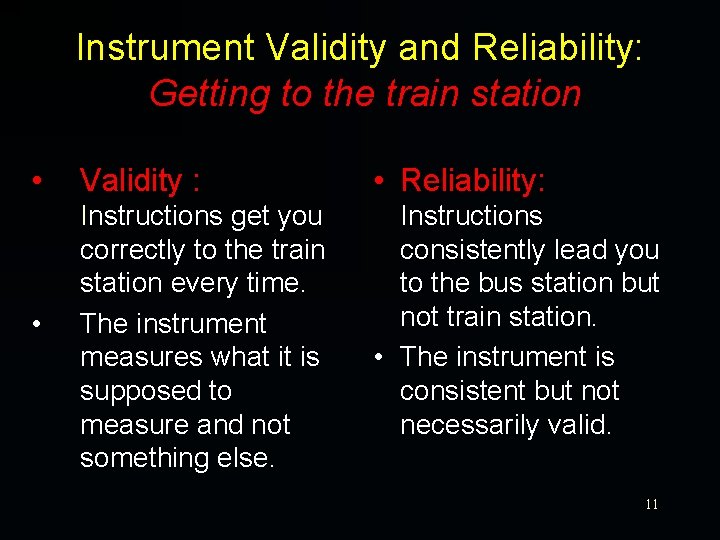

Instrument Validity and Reliability: Getting to the Train Station

- Validity: The instrument measures what it is supposed to measure and not something else. The instructions get you correctly to the train station every time.
- Reliability: The instrument is consistent but not necessarily valid. The instructions consistently lead you to the bus station, but not to the train station.

Types of Research Designs Considered for Practice and Program Evaluation

- Group-based research designs
- Single system designs
- Qualitative designs

Practice Aspects of Evaluation

- Reviewing contract accomplishments
- Reviewing specific goal accomplishments
- Reviewing the accomplishment of specific objectives and task activities

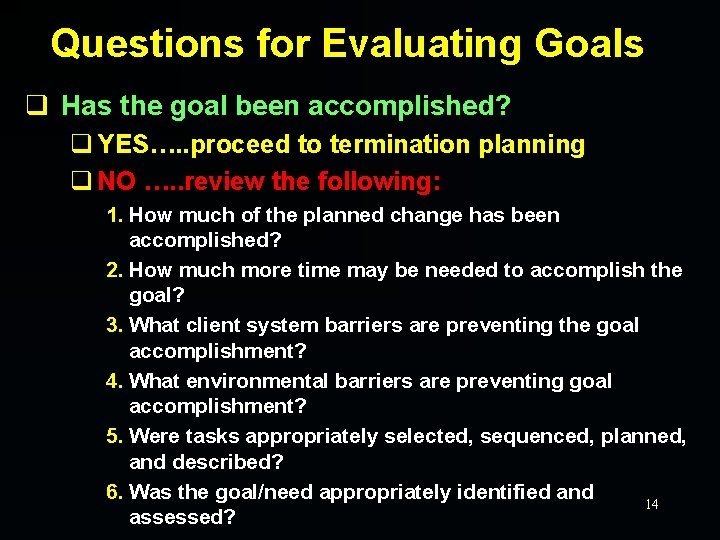

Questions for Evaluating Goals

Has the goal been accomplished?
- YES: proceed to termination planning.
- NO: review the following:
  1. How much of the planned change has been accomplished?
  2. How much more time may be needed to accomplish the goal?
  3. What client system barriers are preventing goal accomplishment?
  4. What environmental barriers are preventing goal accomplishment?
  5. Were tasks appropriately selected, sequenced, planned, and described?
  6. Was the goal or need appropriately identified and assessed?
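When a goal has a numeric baseline and target, the first review question ("How much of the planned change has been accomplished?") can be answered as a proportion. The following is a minimal sketch that assumes a single numeric outcome measure. The function names and the 0-to-1 scale are illustrative choices and do not come from the chapter.

```python
def proportion_of_planned_change(baseline: float, target: float, current: float) -> float:
    """Share of the planned change achieved so far (1.0 = goal reached).

    Works for goals that increase or decrease a measure, because both the
    planned and the achieved change are measured from the baseline.
    Values below 0 indicate regression; values above 1 indicate overshoot.
    """
    planned = target - baseline
    if planned == 0:
        raise ValueError("target must differ from baseline")
    return (current - baseline) / planned


def next_step(baseline: float, target: float, current: float) -> str:
    """Hypothetical decision helper mirroring the YES/NO branch above."""
    if proportion_of_planned_change(baseline, target, current) >= 1.0:
        return "proceed to termination planning"
    return "review progress, barriers, tasks, and assessment"


# Hypothetical goal: reduce weekly conflicts from 10 (baseline) to 2 (target)
print(proportion_of_planned_change(baseline=10, target=2, current=6))  # 0.5
print(next_step(baseline=10, target=2, current=6))
```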

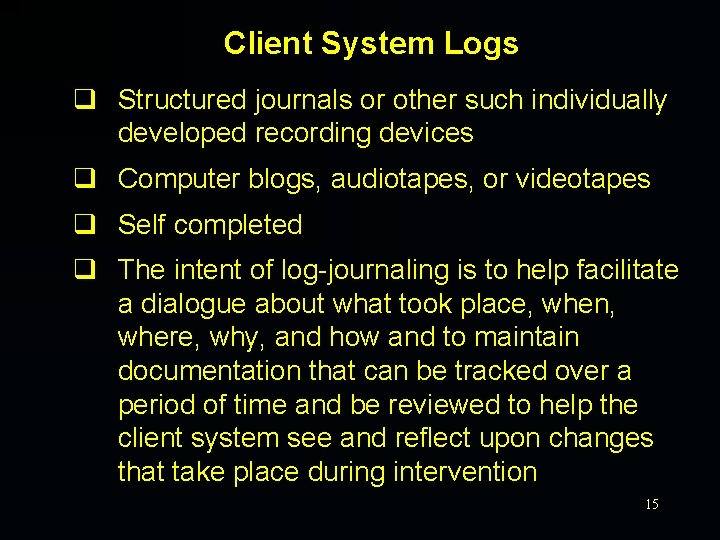

Client System Logs

- Structured journals or other individually developed recording devices
- Computer blogs, audiotapes, or videotapes
- Self-completed

Log-journaling is intended to facilitate a dialogue about what took place, when, where, why, and how. It also maintains documentation that can be tracked over time and reviewed to help the client system see and reflect on the changes that take place during the intervention.

Rating Scales

Rating scales are individually developed, empirically rank-ordered judgments that track a specified client system outcome. Typically, client systems use these scales to self-rate their feelings, behaviors, traits, problems, and changes in objectives. Common types include:
- Graphic rating scales
- Self-anchored scales
- Summated rating scales
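A summated rating scale combines several items rated on the same range into one total. Items worded in the opposite direction usually need reverse-scoring before they are summed. The sketch below assumes a 1 to 5 response range and uses hypothetical item positions. Both are illustrations rather than fixed rules.

```python
from typing import Iterable, Sequence


def summated_score(
    responses: Sequence[int],
    reverse_items: Iterable[int] = (),
    low: int = 1,
    high: int = 5,
) -> int:
    """Total score on a summated rating scale.

    responses[i] is the rating for item i; items whose indices appear in
    reverse_items are reverse-scored (low <-> high) before summing.
    """
    reverse = set(reverse_items)
    total = 0
    for index, rating in enumerate(responses):
        if not low <= rating <= high:
            raise ValueError(f"item {index} rating {rating} outside {low}-{high}")
        # Reverse-scoring maps low to high and high to low, keeping the midpoint fixed
        total += (low + high - rating) if index in reverse else rating
    return total


# Hypothetical 4-item scale where the item at index 2 is worded negatively
print(summated_score([4, 5, 2, 3], reverse_items=[2]))
```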

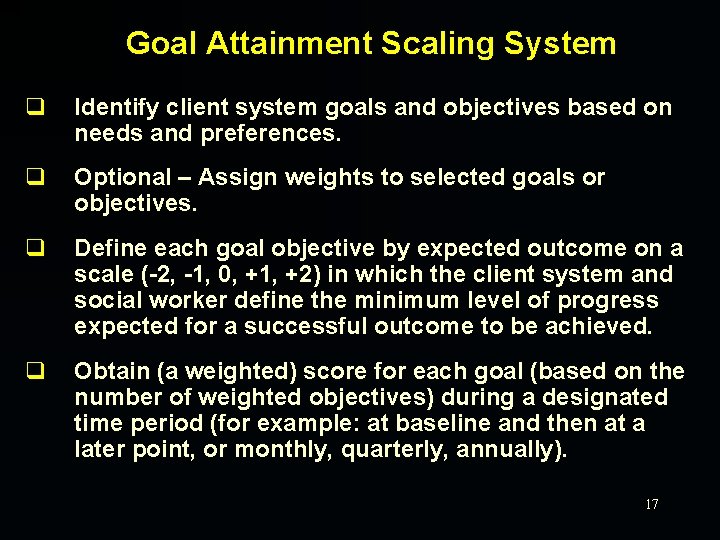

Goal Attainment Scaling System

- Identify client system goals and objectives based on needs and preferences.
- Optionally, assign weights to the selected goals or objectives.
- Define each goal or objective by its expected outcome on a scale (-2, -1, 0, +1, +2) on which the client system and social worker define the minimum level of progress expected for a successful outcome.
- Obtain a score (weighted, if weights were assigned) for each goal, based on its weighted objectives, during a designated time period (for example, at baseline and then at a later point, or monthly, quarterly, or annually).
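The scoring step can be sketched as follows. The sketch assumes, as in standard Goal Attainment Scaling, that 0 marks the expected outcome, and it gives each goal an optional positive weight. The first function is a plain weighted mean of attainment levels. The second is the widely used Kiresuk-Sherman summary T-score, which equals 50 when every goal sits at its expected level. In that formula, `rho` is an assumed correlation among goal scores, conventionally set to 0.3. The function names are hypothetical, and neither score is presented as the chapter's prescribed procedure.

```python
from math import sqrt
from typing import List, Optional, Sequence

VALID_LEVELS = (-2, -1, 0, 1, 2)


def _weights_for(levels: Sequence[int], weights: Optional[Sequence[float]]) -> List[float]:
    """Validate attainment levels; return weights (all 1.0 when unweighted)."""
    if not levels:
        raise ValueError("at least one goal is required")
    if any(level not in VALID_LEVELS for level in levels):
        raise ValueError("attainment levels must be -2, -1, 0, +1, or +2")
    if weights is None:
        return [1.0] * len(levels)
    if len(weights) != len(levels) or any(w <= 0 for w in weights):
        raise ValueError("supply one positive weight per goal")
    return list(weights)


def weighted_mean_level(levels: Sequence[int], weights: Optional[Sequence[float]] = None) -> float:
    """Weighted average attainment level; 0 means 'expected outcome' on average."""
    w = _weights_for(levels, weights)
    return sum(wi * xi for wi, xi in zip(w, levels)) / sum(w)


def gas_t_score(
    levels: Sequence[int], weights: Optional[Sequence[float]] = None, rho: float = 0.3
) -> float:
    """Kiresuk-Sherman summary T-score: 50 when every goal is at its expected level."""
    w = _weights_for(levels, weights)
    weighted_sum = sum(wi * xi for wi, xi in zip(w, levels))
    spread = sqrt((1 - rho) * sum(wi ** 2 for wi in w) + rho * sum(w) ** 2)
    return 50 + 10 * weighted_sum / spread


# Hypothetical review: three goals rated at a follow-up point
levels = [1, -1, 2]
weights = [2, 1, 1]
print(weighted_mean_level(levels, weights))
print(round(gas_t_score(levels, weights), 1))
```

Computing the score at baseline and again at each designated review point gives a simple series for tracking progress or regression.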

Single System Designs

- Case-level empirical methods of evaluation
- Assess change in practice objectives or client system outcomes through frequent, repeated measurement
- The evaluated subject can be a single individual, couple, group, family, organization, or community
- Case-level replications can be aggregated to determine program effectiveness

Purposes of Single System Designs

- To monitor, evaluate, and adapt the intervention to change over time
- To evaluate whether changes occurred in the targeted outcomes
- To determine whether the changes could have been produced by the intervention
- To determine the effectiveness of different interventions

Using Single System Designs

- Decide how the system’s change is going to be measured over time.
- Specify and define the target outcomes (e.g., behaviors) that are going to be tracked over time.
- Operationalize the target outcomes and specify benchmarks for tracking positive change.
- Choose a design for applying the intervention.
- Graph the measured results.
- Inspect the results periodically or at specific intervals.
- Apply an analytical strategy for evaluating changes in targeted outcomes.
- Assess whether statistical significance and practical or clinical significance have been achieved.
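The last few steps (inspect the results, apply an analytical strategy) can be illustrated with the two-standard-deviation band approach. This is one of several analytic strategies used with single system designs, not the only one. The sketch assumes a measure where lower is better, such as the number of problems. It computes the baseline (Phase A) mean and sample standard deviation, then checks whether intervention-phase points fall below the lower limit. The rule that two consecutive points outside the band signal change is an assumed default, exposed as the hypothetical `run_length` parameter. The approach addresses statistical change only and says nothing about practical or clinical significance.

```python
from statistics import mean, stdev
from typing import Sequence, Tuple


def two_sd_band(baseline: Sequence[float]) -> Tuple[float, float]:
    """Return (lower, upper) limits: baseline mean +/- 2 sample standard deviations."""
    if len(baseline) < 2:
        raise ValueError("need at least two baseline observations")
    m, s = mean(baseline), stdev(baseline)
    return m - 2 * s, m + 2 * s


def improvement_signal(
    baseline: Sequence[float],
    intervention: Sequence[float],
    run_length: int = 2,
) -> bool:
    """True if run_length consecutive intervention points fall below the band.

    Assumes lower scores mean improvement (e.g. fewer problems).
    """
    lower, _ = two_sd_band(baseline)
    run = 0
    for value in intervention:
        # Count consecutive points below the lower limit; reset on any miss
        run = run + 1 if value < lower else 0
        if run >= run_length:
            return True
    return False


# Hypothetical weekly problem counts
phase_a = [8, 9, 7, 8, 9]
phase_b = [7, 5, 4, 3, 3]
print(two_sd_band(phase_a))
print(improvement_signal(phase_a, phase_b))
```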

Understanding Single System Designs

- Phase A: Baseline; no intervention is applied
- Phase B: Application of the first intervention
- Phase B1: Intensified application of the first intervention
- Phase C: A second, different intervention is applied
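The phase labels can be attached directly to session-by-session data. The sketch below groups consecutive sessions by label, so an A-B-A-B sequence produces four separate segments instead of merging both A phases. It then reports each segment's mean, a typical first summary before graphing. The labels follow the list above, while the function name and scores are hypothetical.

```python
from itertools import groupby
from statistics import mean
from typing import List, Sequence, Tuple


def phase_means(labels: Sequence[str], scores: Sequence[float]) -> List[Tuple[str, int, float]]:
    """Return (phase label, number of sessions, mean score) for each consecutive phase."""
    if len(labels) != len(scores):
        raise ValueError("one phase label per session score is required")
    summary = []
    # groupby splits only where the label changes, preserving phase order
    for label, group in groupby(zip(labels, scores), key=lambda pair: pair[0]):
        values = [score for _, score in group]
        summary.append((label, len(values), mean(values)))
    return summary


# Hypothetical A-B-B1-C sequence of weekly problem counts
labels = ["A", "A", "A", "B", "B", "B1", "B1", "C", "C"]
scores = [9, 8, 9, 7, 7, 5, 6, 3, 2]
for phase, n, avg in phase_means(labels, scores):
    print(f"Phase {phase}: {n} sessions, mean {avg:.2f}")
```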

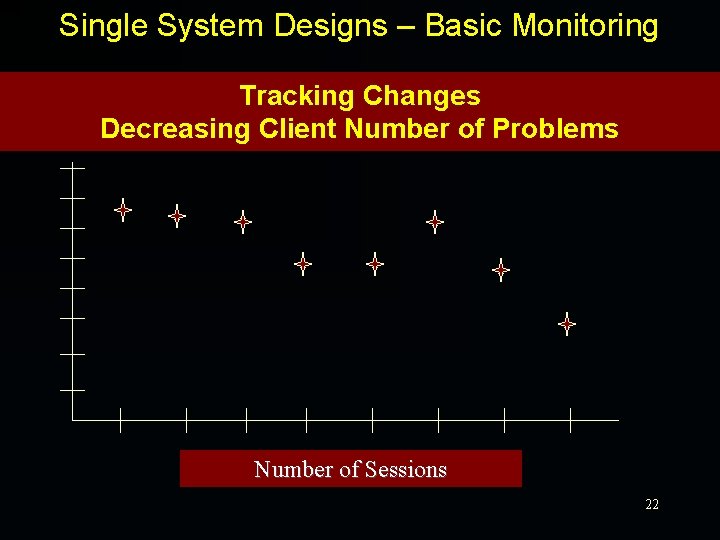

Single System Designs – Basic Monitoring: Tracking Changes (figure: decreasing number of client problems plotted against number of sessions)

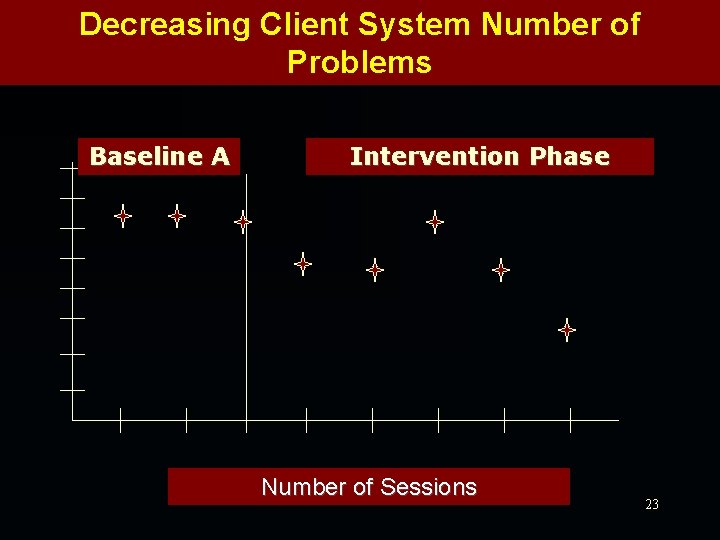

(Figure: decreasing number of client system problems across a baseline phase (A) and an intervention phase, plotted against number of sessions)

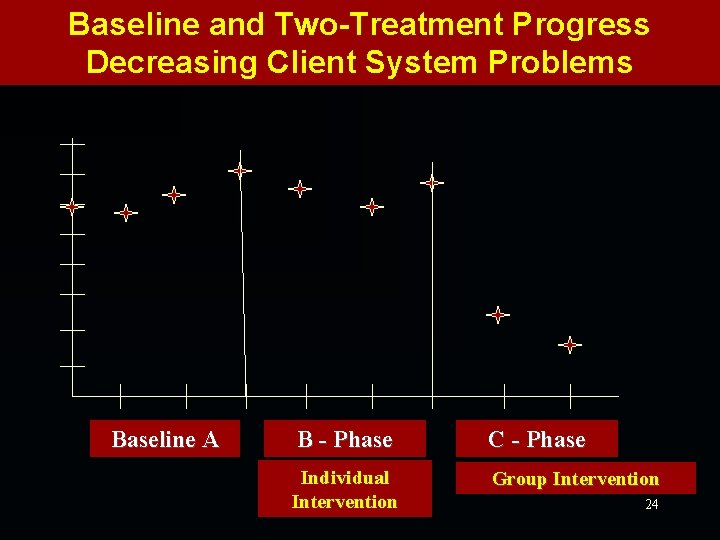

Baseline and Two-Treatment Progress (figure: decreasing client system problems across baseline phase A, a B phase with individual intervention, and a C phase with group intervention)

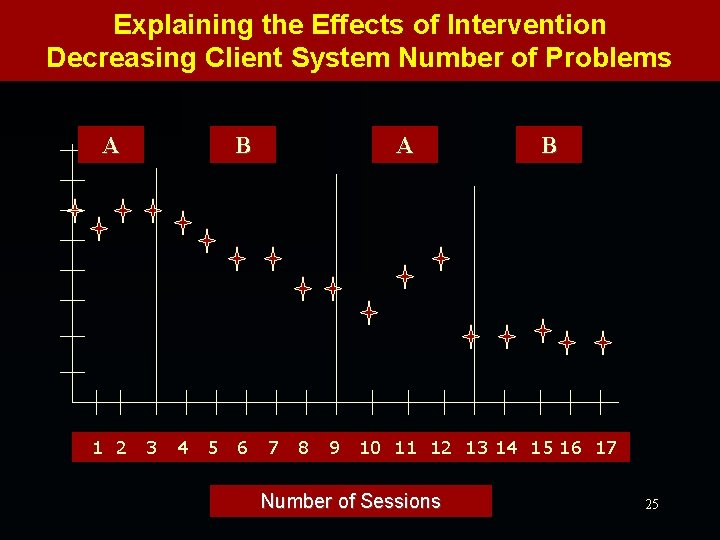

Explaining the Effects of Intervention (figure: decreasing number of client system problems across an A-B-A-B sequence: A in sessions 1–2, B in sessions 3–6, A in sessions 7–9, B in sessions 10–17)
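The logic of an A-B-A-B design can be made explicit. If problems drop each time the intervention is introduced and rise again when it is withdrawn, the pattern supports an intervention effect, though it does not prove one. The sketch below compares each phase's mean with the mean of the phase before it. Session groupings mirror the A-B-A-B layout (A: sessions 1–2, B: 3–6, A: 7–9, B: 10–17). The scores and function names are hypothetical, and a lower-is-better measure is assumed.

```python
from statistics import mean
from typing import List, Sequence


def reversal_pattern(phases: Sequence[Sequence[float]]) -> List[str]:
    """Direction of change in mean score at each phase transition."""
    directions = []
    for previous, current in zip(phases, phases[1:]):
        before, after = mean(previous), mean(current)
        if after < before:
            directions.append("down")
        elif after > before:
            directions.append("up")
        else:
            directions.append("flat")
    return directions


def supports_intervention_effect(phases: Sequence[Sequence[float]]) -> bool:
    """A-B-A-B check for a lower-is-better measure: down, up, down."""
    if len(phases) != 4:
        raise ValueError("expected four phases: A, B, A, B")
    return reversal_pattern(phases) == ["down", "up", "down"]


# Hypothetical problem counts grouped by phase
a1 = [9, 9]
b1 = [7, 6, 5, 5]
a2 = [7, 8, 8]
b2 = [5, 4, 4, 3, 3, 2, 2, 2]
print(reversal_pattern([a1, b1, a2, b2]))
print(supports_intervention_effect([a1, b1, a2, b2]))
```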

- An empirically based approach to evaluation in the General Method encourages the integration of ethical, evidentiary, and application concerns.
- It involves a systematic approach to improving and maintaining the quality of client system services.
- It includes steps that convert goals into measurable objectives.
- It uses multiple sources of evidence.
- It makes evaluation an explicit process, open to public and client system scrutiny.
- It can be used to evaluate effectiveness and efficiency in carrying out the intervention.
- It can be used for monitoring client system change as well as outcomes.
- It seeks ways to ensure and improve best practices for client systems.
