Evaluating the Performance of MPI Java in FutureGrid


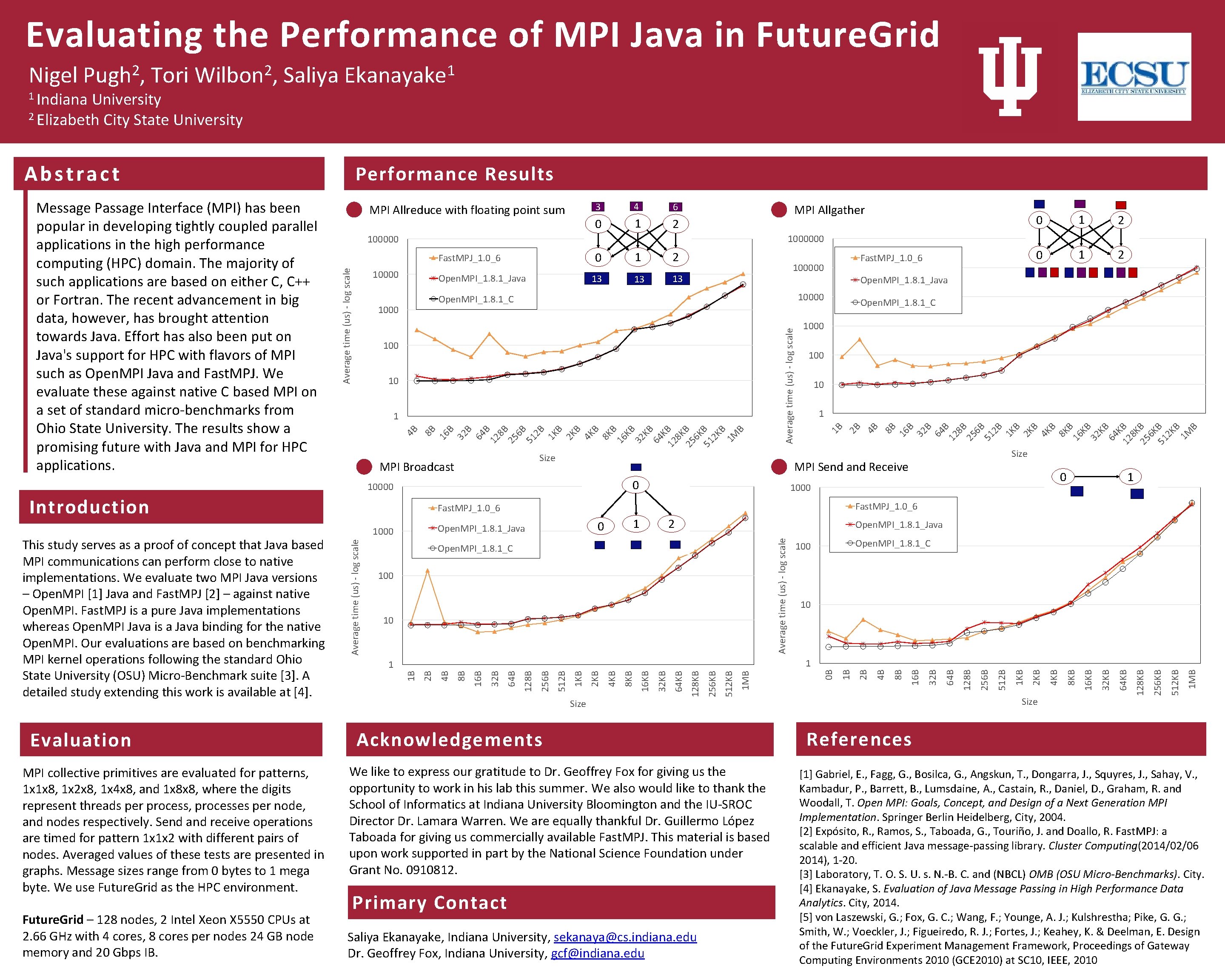

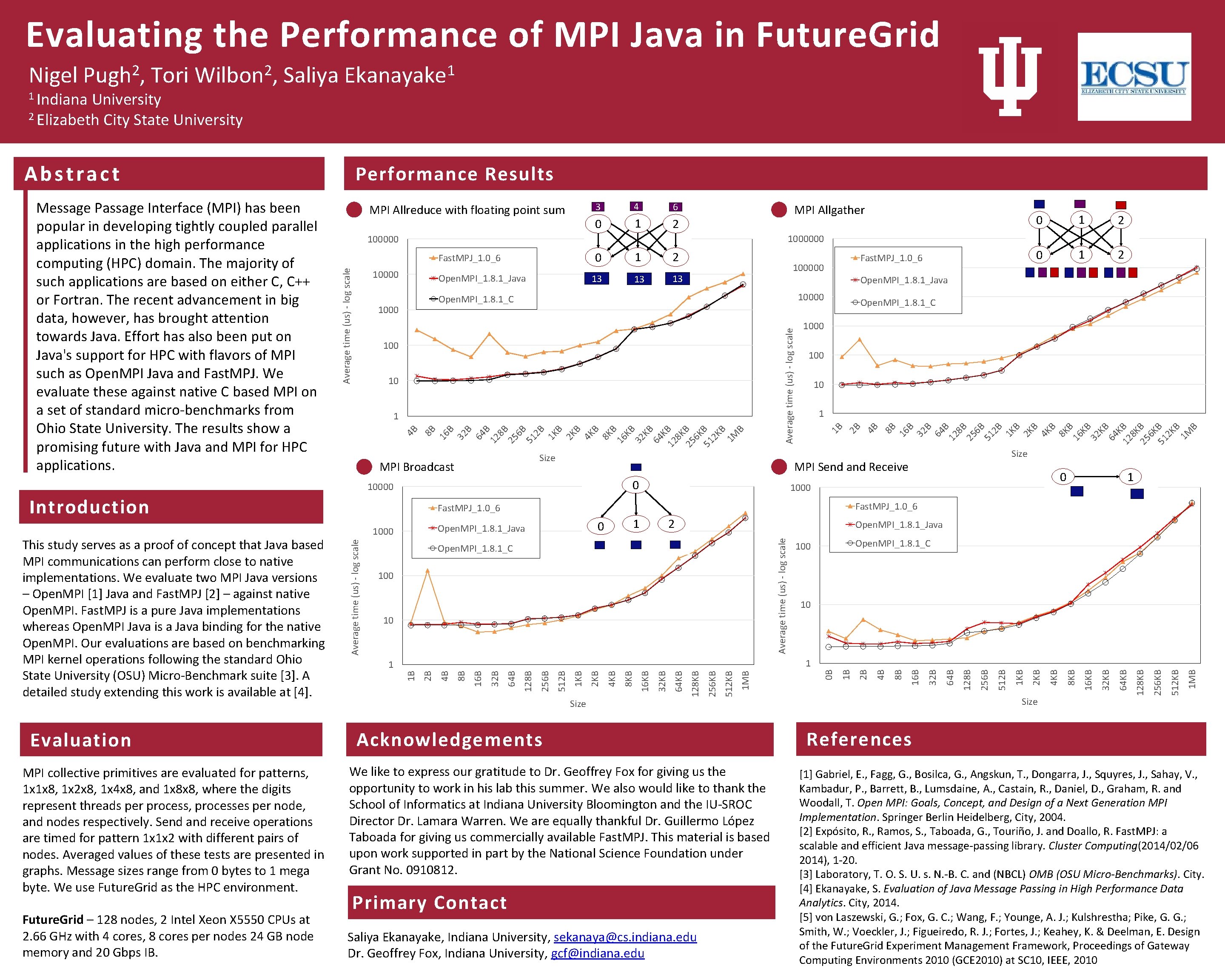

Nigel Pugh², Tori Wilbon², Saliya Ekanayake¹

¹Indiana University, ²Elizabeth City State University

Abstract

The Message Passing Interface (MPI) has been popular for developing tightly coupled parallel applications in the high performance computing (HPC) domain. Most such applications are written in C, C++, or Fortran. Recent advances in big data, however, have drawn attention to Java, and effort has also gone into supporting HPC in Java through MPI flavors such as OpenMPI Java and FastMPJ. We evaluate these against native C-based MPI using a set of standard micro-benchmarks from The Ohio State University. The results show a promising future for Java and MPI in HPC applications.

Introduction

This study serves as a proof of concept that Java-based MPI communication can perform close to native implementations. We evaluate two MPI Java implementations, OpenMPI Java [1] and FastMPJ [2], against native OpenMPI. FastMPJ is a pure Java implementation, whereas OpenMPI Java is a Java binding for the native OpenMPI library. Our evaluations benchmark MPI kernel operations following the standard Ohio State University (OSU) Micro-Benchmark suite [3]. A detailed study extending this work is available in [4].

Evaluation

MPI collective primitives are evaluated for the patterns 1 x 1 x 8, 1 x 2 x 8, 1 x 4 x 8, and 1 x 8 x 8, where the digits represent threads per process, processes per node, and nodes, respectively. Send and receive operations are timed for the pattern 1 x 1 x 2 with different pairs of nodes. The averaged values of these tests are presented in the graphs. Message sizes range from 0 bytes to 1 MB.

We use FutureGrid as the HPC environment: 128 nodes, each with 2 Intel Xeon X5550 CPUs at 2.66 GHz with 4 cores (8 cores per node), 24 GB of memory per node, and 20 Gbps InfiniBand (IB).

Performance Results

[Figure panels: MPI Allreduce with floating point sum, MPI Broadcast, MPI Allgather, and MPI Send and Receive. Each plots average time (us, log scale) against message size (0 B to 1 MB) for OpenMPI_1.8.1_C, OpenMPI_1.8.1_Java, and FastMPJ_1.0_6.]

Acknowledgements

We would like to express our gratitude to Dr. Geoffrey Fox for giving us the opportunity to work in his lab this summer. We would also like to thank the School of Informatics at Indiana University Bloomington and the IU-SROC Director, Dr. Lamara Warren. We are equally thankful to Dr. Guillermo López Taboada for providing us with the commercially available FastMPJ. This material is based upon work supported in part by the National Science Foundation under Grant No. 0910812.

Primary Contact

- Saliya Ekanayake, Indiana University, sekanaya@cs.indiana.edu
- Dr. Geoffrey Fox, Indiana University, gcf@indiana.edu

References

[1] Gabriel, E., Fagg, G., Bosilca, G., Angskun, T., Dongarra, J., Squyres, J., Sahay, V., Kambadur, P., Barrett, B., Lumsdaine, A., Castain, R., Daniel, D., Graham, R. and Woodall, T.
Open MPI: Goals, Concept, and Design of a Next Generation MPI Implementation. Springer Berlin Heidelberg, 2004.

[2] Expósito, R., Ramos, S., Taboada, G., Touriño, J. and Doallo, R. FastMPJ: a scalable and efficient Java message-passing library. Cluster Computing (2014), 1-20.

[3] The Ohio State University's Network-Based Computing Laboratory (NBCL). OMB (OSU Micro-Benchmarks).

[4] Ekanayake, S. Evaluation of Java Message Passing in High Performance Data Analytics. 2014.

[5] von Laszewski, G., Fox, G. C., Wang, F., Younge, A. J., Kulshrestha, Pike, G. G., Smith, W., Voeckler, J., Figueiredo, R. J., Fortes, J., Keahey, K. and Deelman, E. Design of the FutureGrid Experiment Management Framework. Proceedings of Gateway Computing Environments 2010 (GCE 2010) at SC10, IEEE, 2010.
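The measurement procedure described under Evaluation (an averaged time per operation at each message size from 0 bytes to 1 MB, over several process layouts) can be sketched as a small Java timing loop. This is a minimal illustration under stated assumptions, not the authors' harness: the class and method names are hypothetical, the warm-up and iteration counts (10 and 100) are arbitrary, and the timed operation is a local stand-in that sums a buffer of doubles rather than a real MPI call. In an actual run the stand-in would be replaced by the collective or send/receive under test, issued through OpenMPI Java or FastMPJ.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

/**
 * Hypothetical sketch of an OSU-style average-latency loop (not the authors'
 * benchmark code). The timed operation is a LOCAL STAND-IN that sums a
 * double buffer; a real run would time an MPI call instead.
 */
public class OsuStyleBenchmark {

    /** Message sizes as on the poster: 0 bytes, then 1, 2, 4, ... up to maxBytes. */
    static List<Integer> messageSizes(int maxBytes) {
        List<Integer> sizes = new ArrayList<>();
        sizes.add(0);
        for (int s = 1; s <= maxBytes; s *= 2) {
            sizes.add(s);
        }
        return sizes;
    }

    /** MPI ranks in a T x P x N pattern: processes per node times nodes. */
    static int ranks(int processesPerNode, int nodes) {
        return processesPerNode * nodes;
    }

    /**
     * Runs op `skip` times as untimed warm-up, then `iterations` times timed,
     * and returns the mean time per call in microseconds.
     */
    static double averageMicros(Runnable op, int skip, int iterations) {
        for (int i = 0; i < skip; i++) {
            op.run();
        }
        long start = System.nanoTime();
        for (int i = 0; i < iterations; i++) {
            op.run();
        }
        long elapsedNanos = System.nanoTime() - start;
        return elapsedNanos / 1000.0 / iterations;
    }

    // Accumulates stand-in results so the summing work is not optimized away.
    static double sink = 0.0;

    public static void main(String[] args) {
        for (int bytes : messageSizes(1 << 20)) {
            // A floating point sum works on 8-byte doubles; sizes under 8 bytes give an empty buffer.
            double[] buffer = new double[bytes / Double.BYTES];
            Arrays.fill(buffer, 1.0);
            // Local stand-in for the operation under test (e.g. an Allreduce with floating point sum).
            Runnable op = () -> {
                double sum = 0.0;
                for (double v : buffer) {
                    sum += v;
                }
                sink += sum;
            };
            double avg = averageMicros(op, 10, 100);
            System.out.printf("%8d B  %10.3f us%n", bytes, avg);
        }
    }
}
```

Discarding a few warm-up iterations before timing is similar in spirit to the warm-up iterations the OSU suite skips; it keeps one-time costs such as JIT compilation out of the average, which matters more for Java than for C. As a usage check, `ranks(8, 8)` gives the 64 ranks of the 1 x 8 x 8 pattern, which on FutureGrid places one rank on each of the 8 cores of every node.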