- Slides: 57

Evaluating Student Motivation in Field Testing: Concepts, Implementation, Analysis, and Interpretation

Background Information

- SB 288 Legislation Impact on High School Testing
- Eligibility Rule for End of Course Examinations
- State Board of Education and NDE Leadership Guidelines
- Nevada Test Advisory Committee Recommendations
- Collaborative Approach with Nevada Department of Education, WestEd, and Measured Progress

EVALUATING STUDENT MOTIVATION IN FIELD TESTING: CONCEPTS, IMPLEMENTATION, ANALYSIS, AND INTERPRETATION

Chad W. Buckendahl

Overview

- Impact of student motivation on assessment development and validation
- Strategies for evaluating motivation
  - Embedded, unscored items
  - Stand-alone field tests
- Cross-disciplinary research opportunity

Defining malingering

- Medicine: fabricating or exaggerating mental or physical disorders for secondary gain motives (e.g., financial, avoiding work, obtaining drugs)
- Education: demonstrating suboptimal effort or performance for secondary gain (e.g., financial, attention)
- Distinct from apathy

Clinical instruments

- Test of Memory Malingering (TOMM; Tombaugh, 1996)
  - 50-item test that distinguishes between malingering and memory impairment
- Victoria Symptom Validity Test (VSVT; Slick, Hopp, Strauss, & Thompson, 1997)
  - 48-item test to evaluate possible exaggeration or feigning of cognitive impairments

Generalizing to EOCs

- New high school assessments
- Anticipated low student motivation
- Consequences for some, but not all students
- Additional strategy for evaluating credibility of field test data

Methodology

- Embedding easier, unscored items within the content domain
  - Prioritization of assessment real estate
- Evaluating data with and without students identified as “unmotivated”
- Using results to inform standard setting, form construction, future field tests

Summary

- Commend NV Department of Education for supporting applied research
- Implications for practice
  - Adjunct approach for empirically evaluating motivation
  - Stability of item level data
  - Informing development and validation activities

Evaluating Student Motivation in Field Testing: Design and Development of the Field Test

Joanne L. Jensen, WestEd

Paper presented at the National Conference on Student Assessment, June 23, 2015, San Diego

Assessment Program in Transition: Similarities and Differences

Similarities…

- Active collaboration with NDE staff
- Active collaboration with Nevada educators
- Stakes for students

Assessment Program in Transition: Similarities and Differences

Differences…

- Proficiency examinations were based on a select number of content standards reflecting a breadth but not a depth of content
- Writing prompt versus writing task based on a reading passage
- Calculator use versus no calculator
- Reporting structured by claims rather than content domains
- New item types (multiple select, two-part items, gridded response)
- Rigor of the assessment

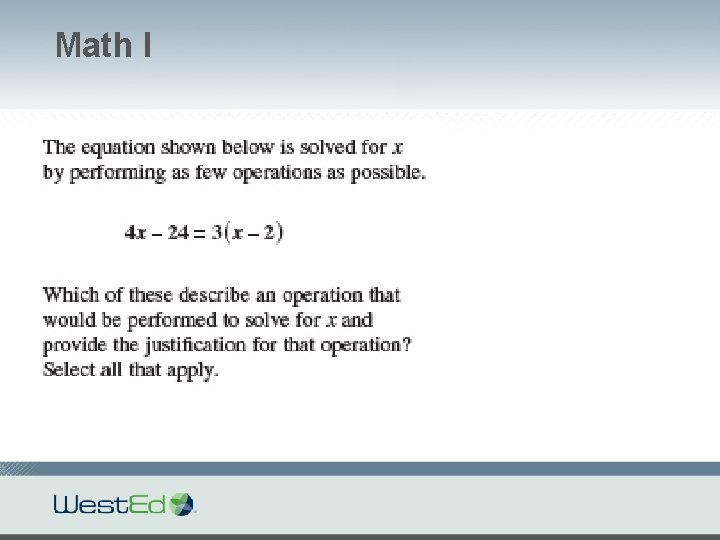

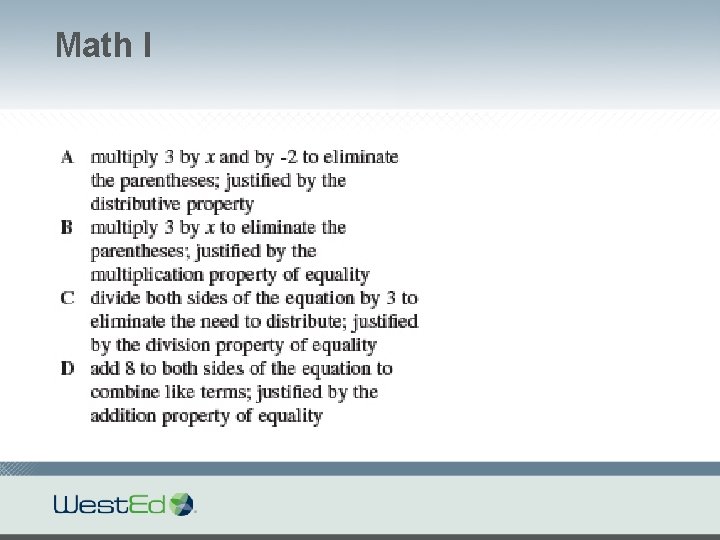

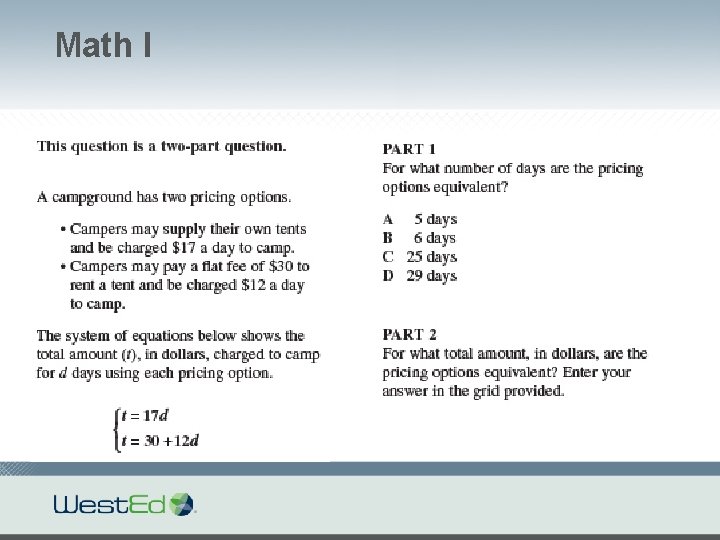

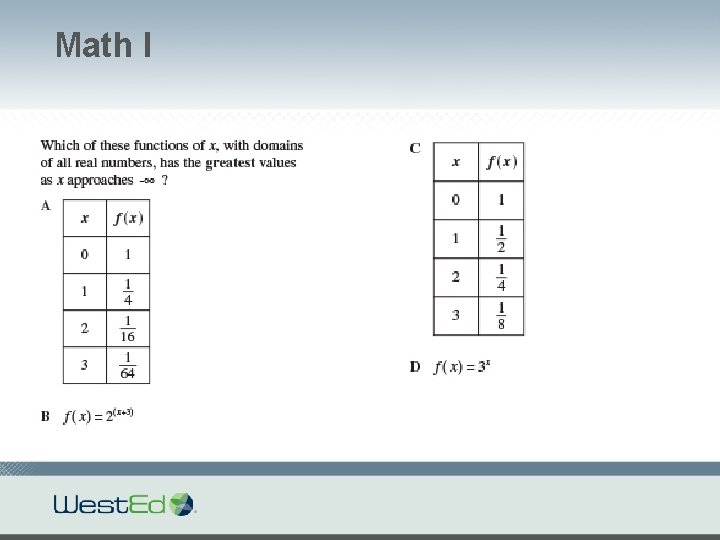

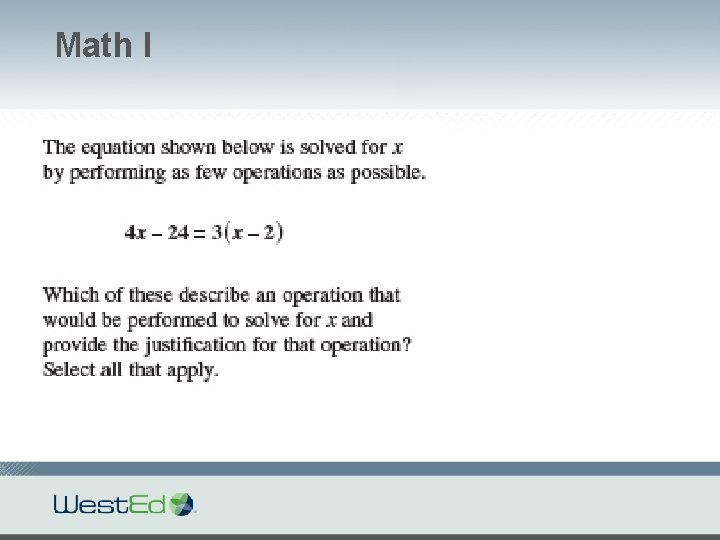

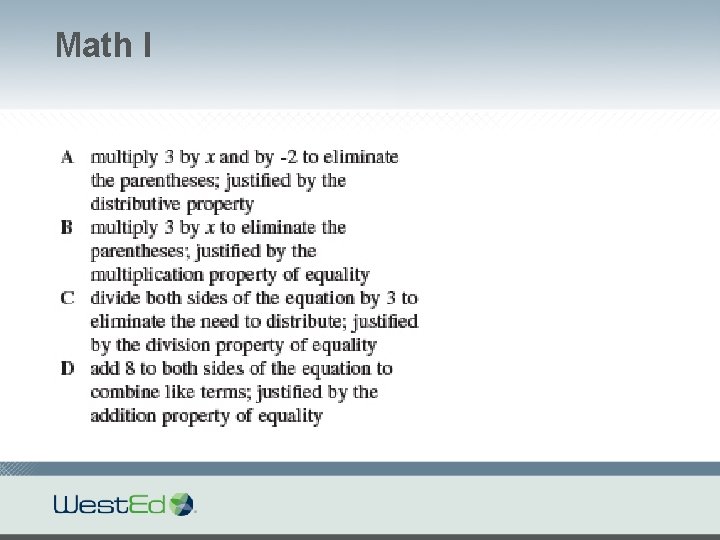

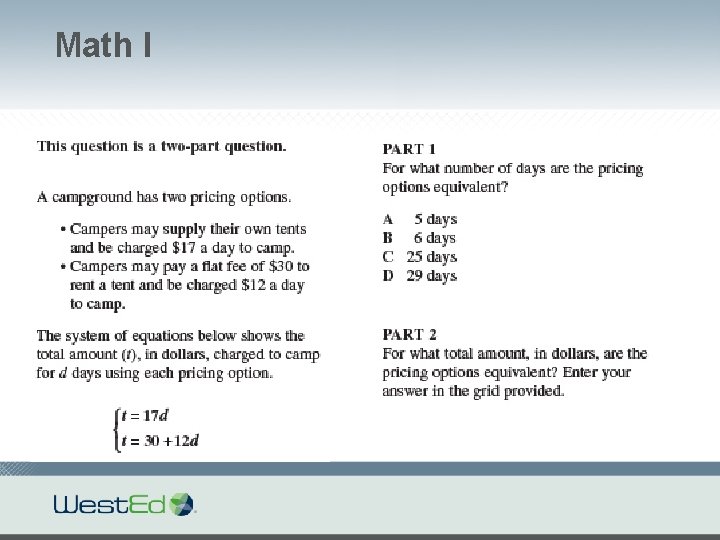

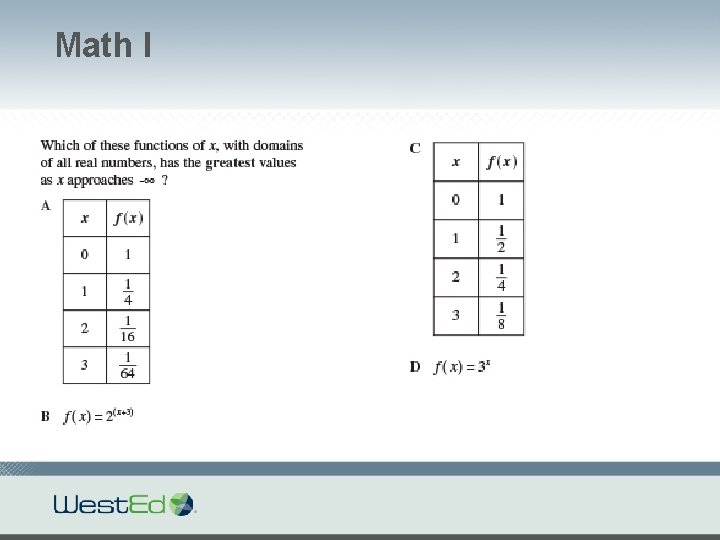

Math I

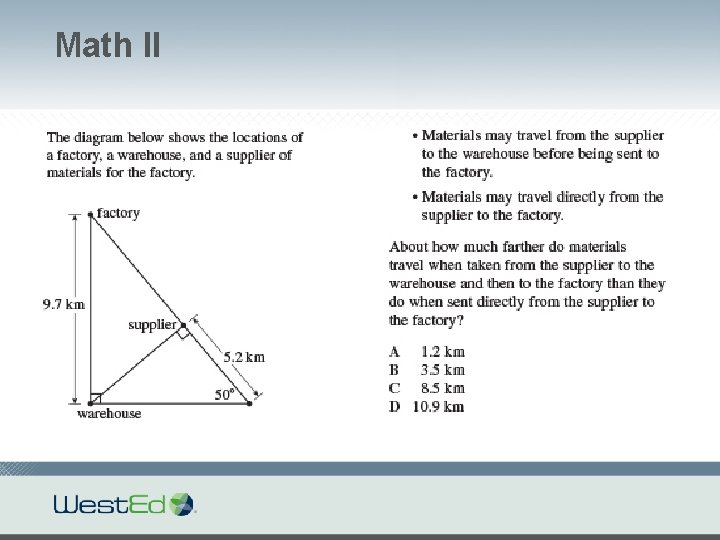

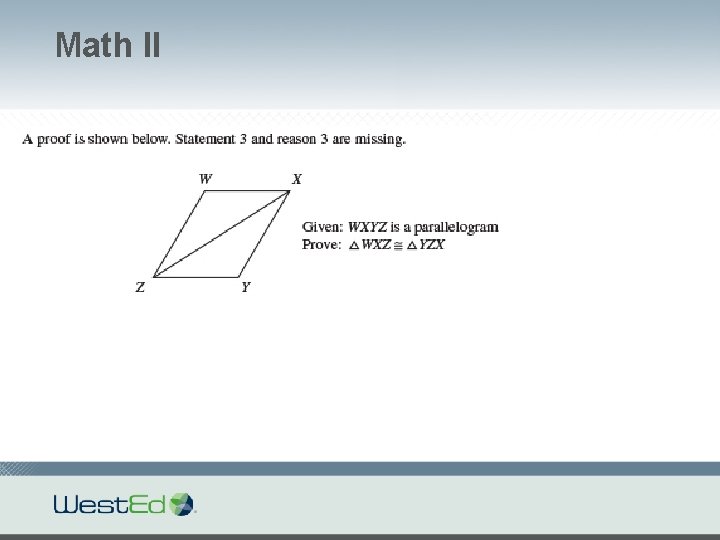

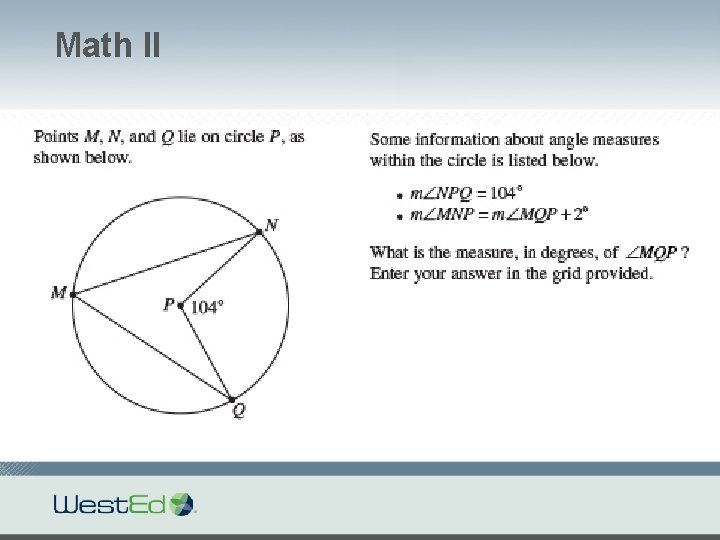

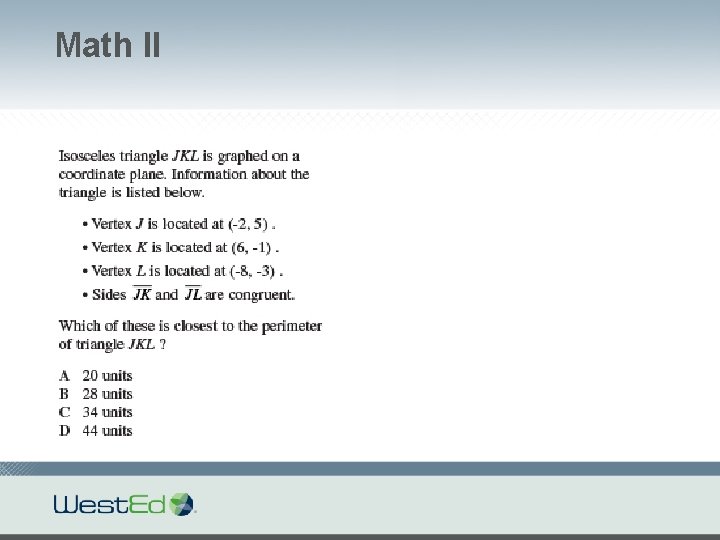

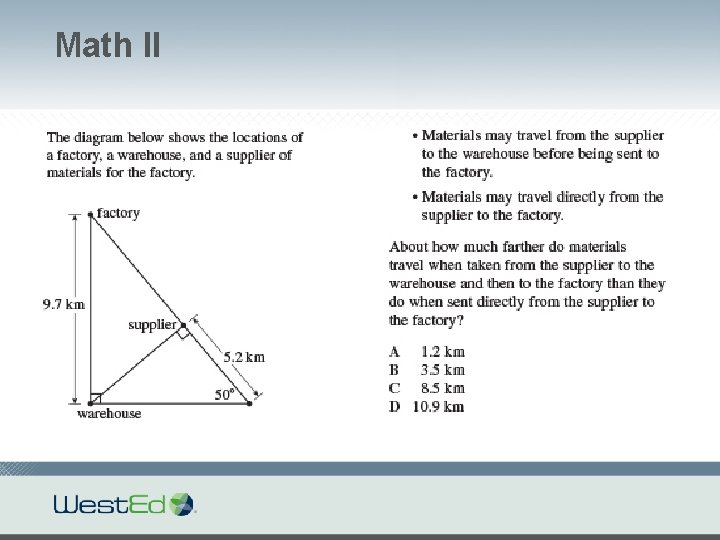

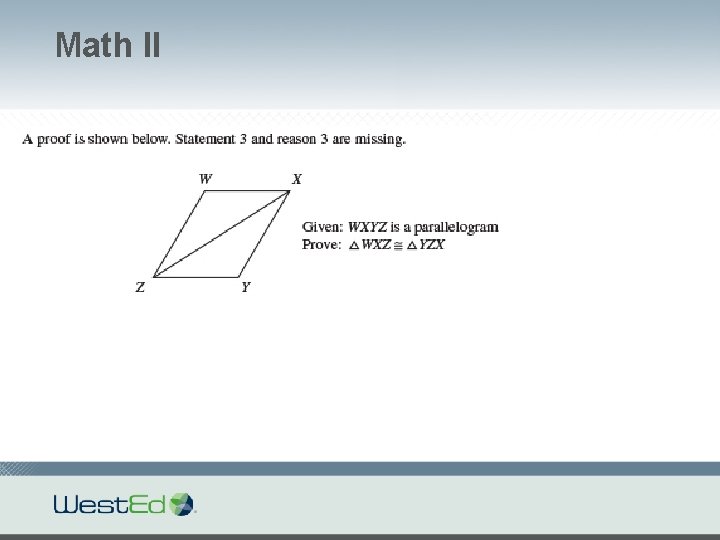

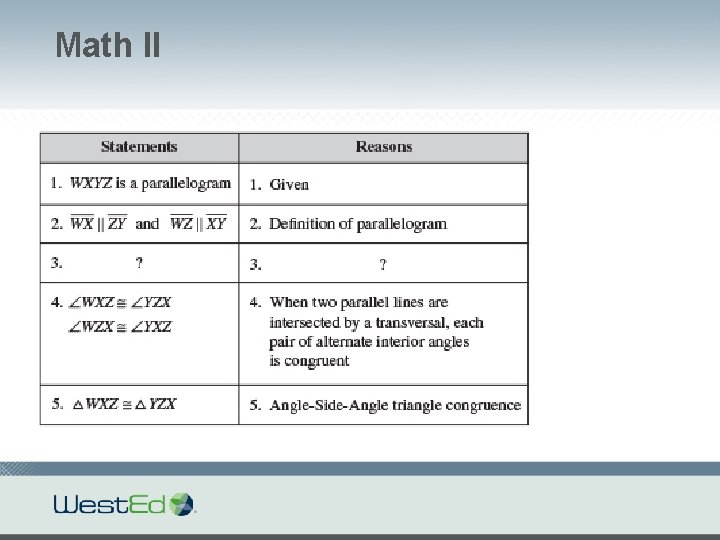

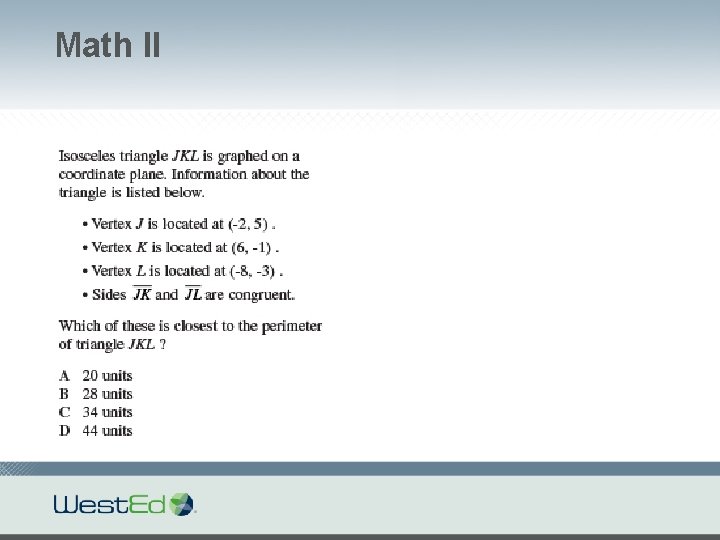

Math II

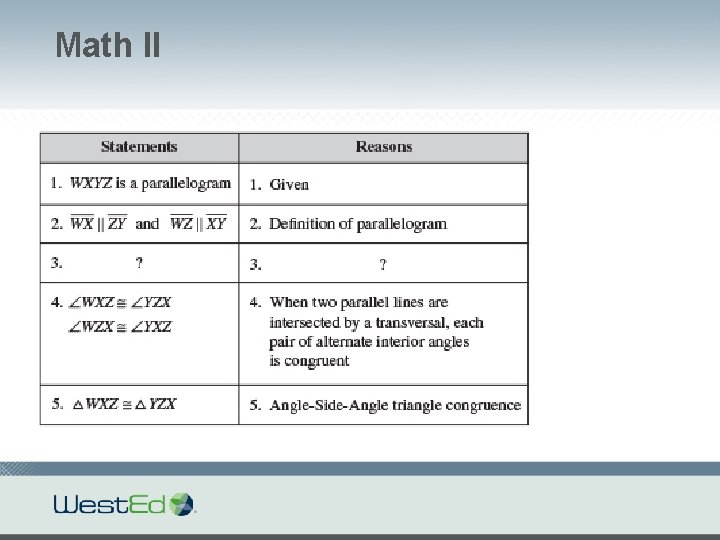

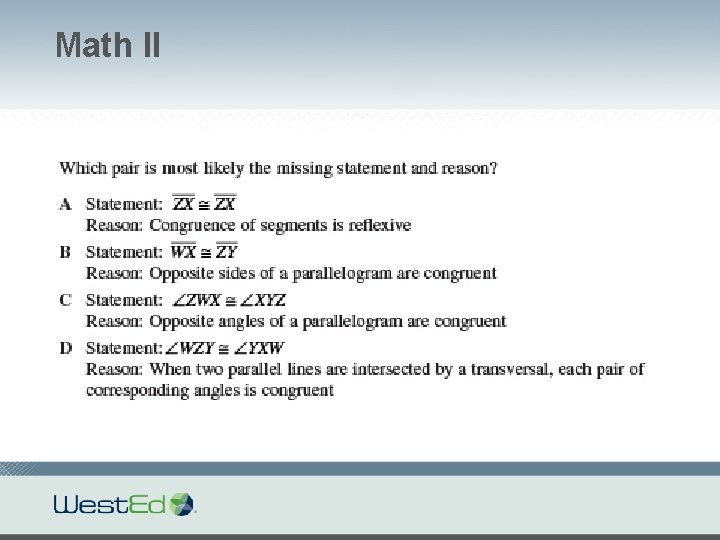

Math II

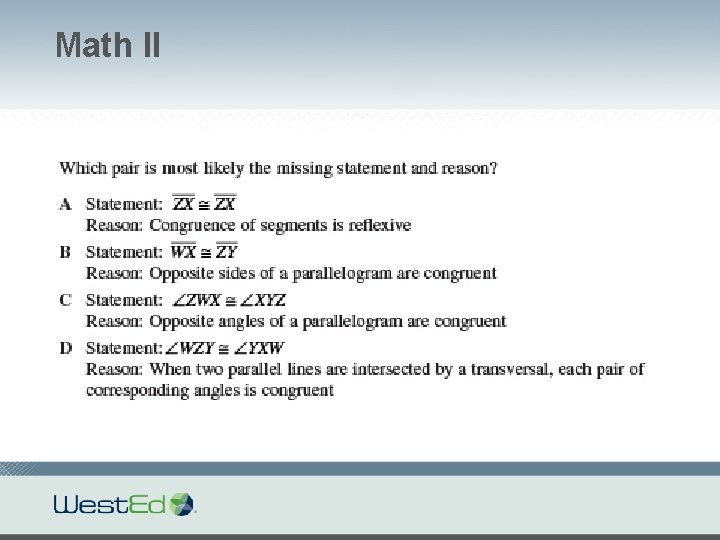

Math II

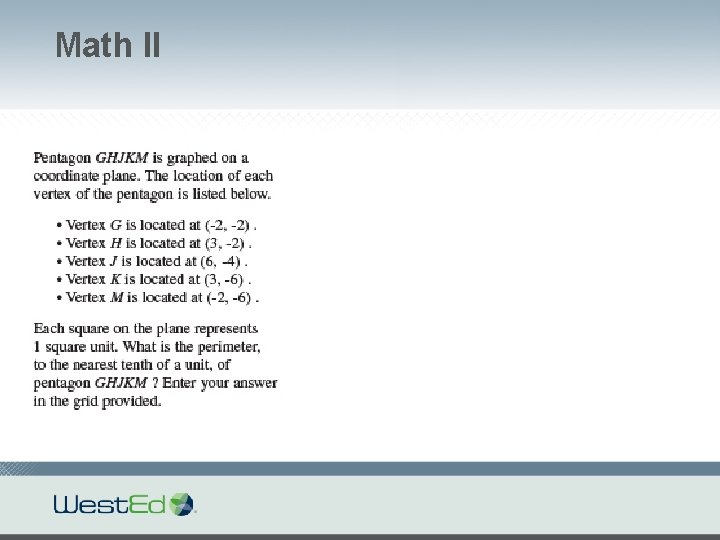

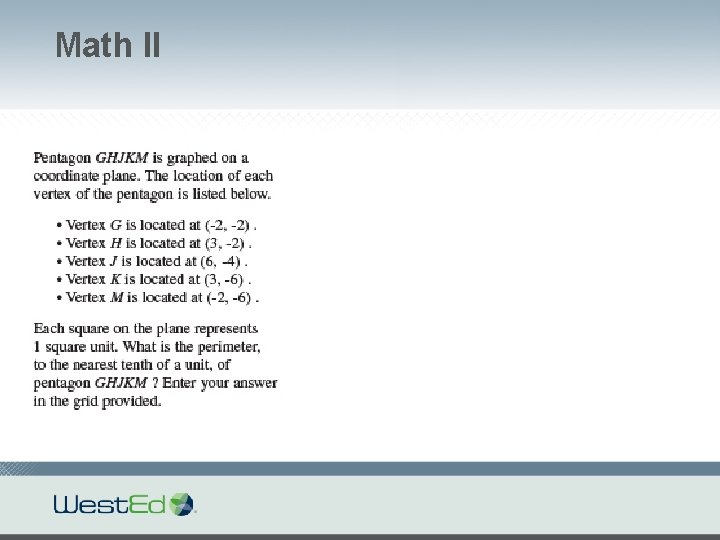

Math II

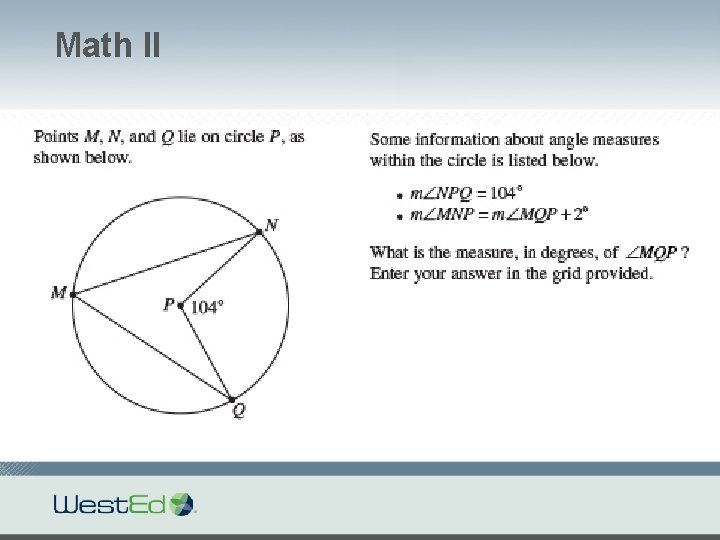

Math II

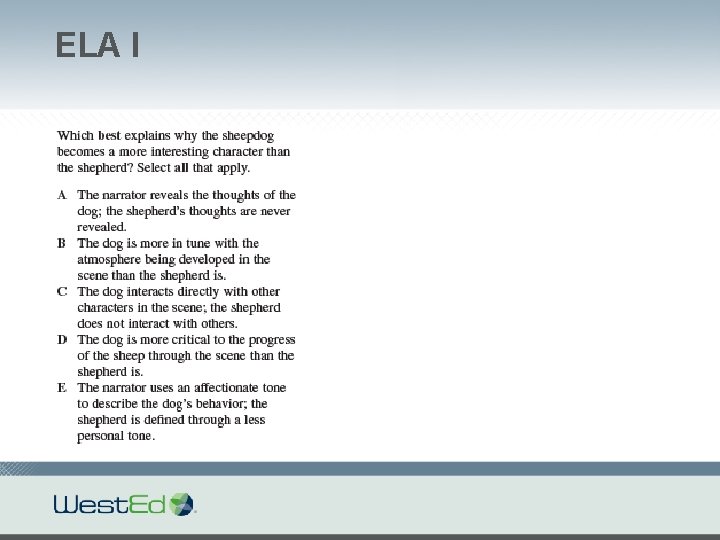

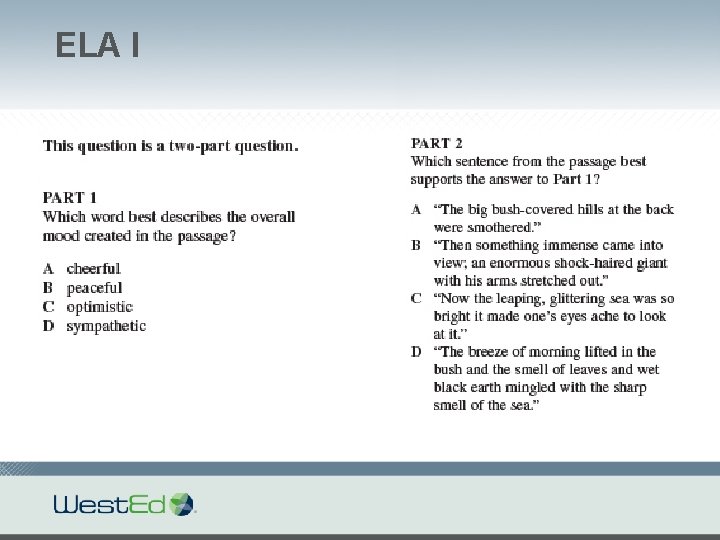

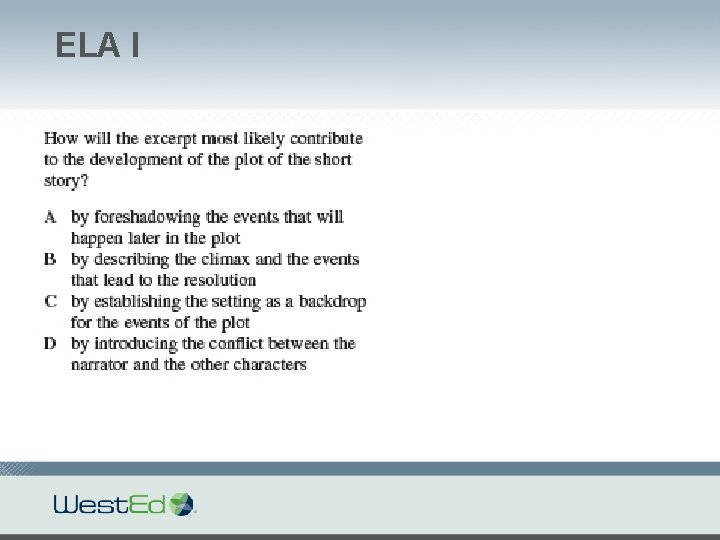

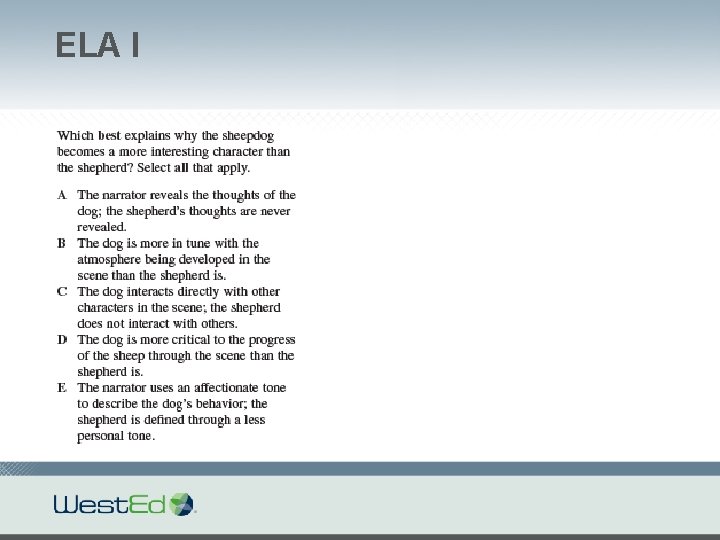

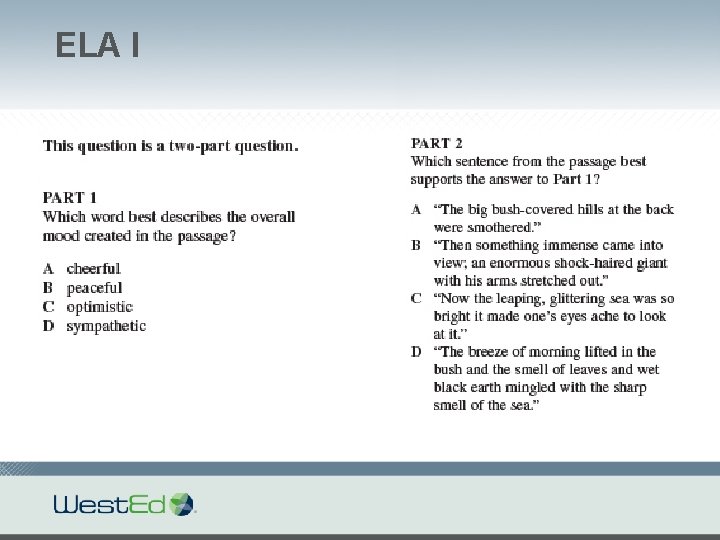

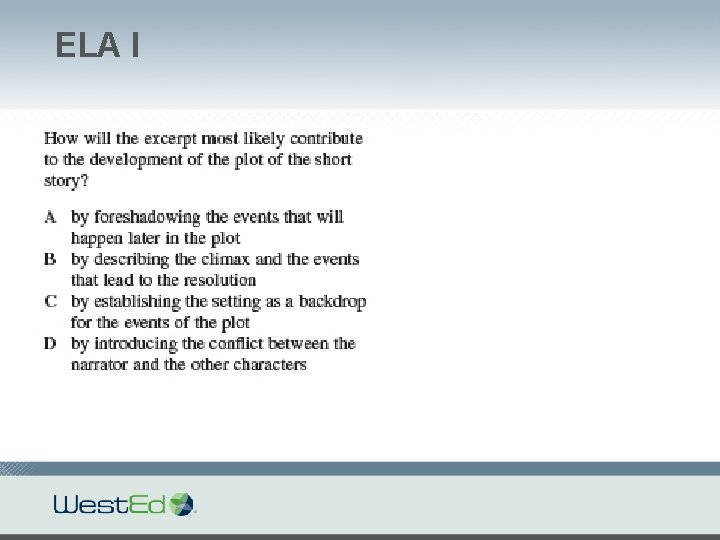

ELA I

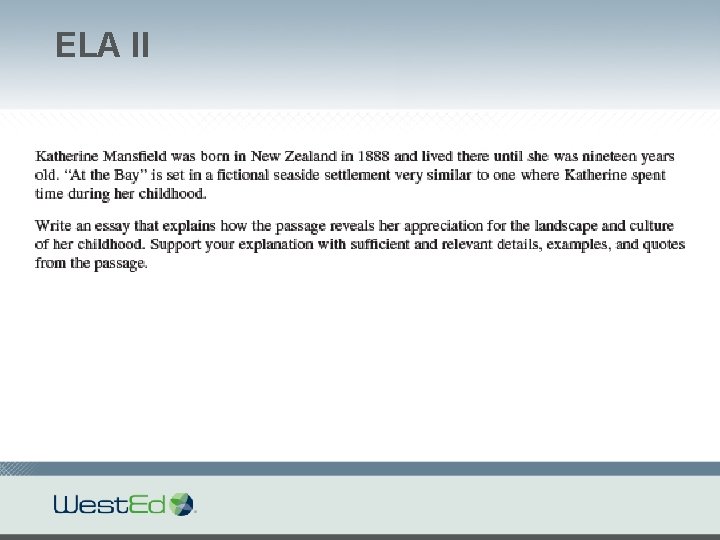

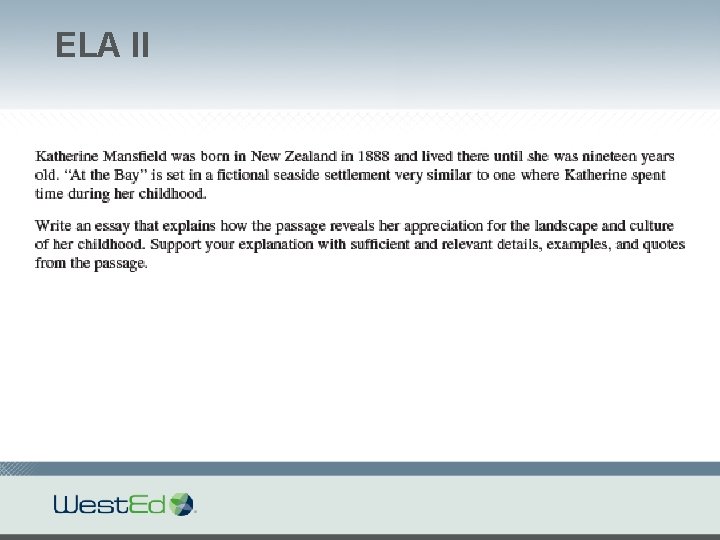

ELA II

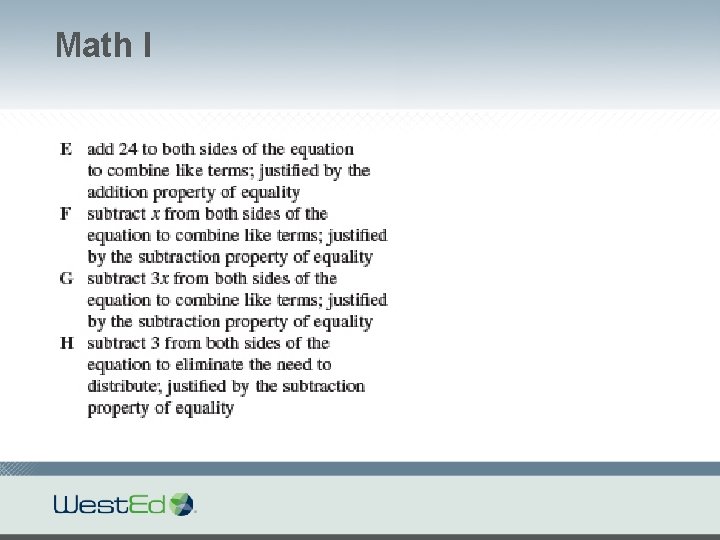

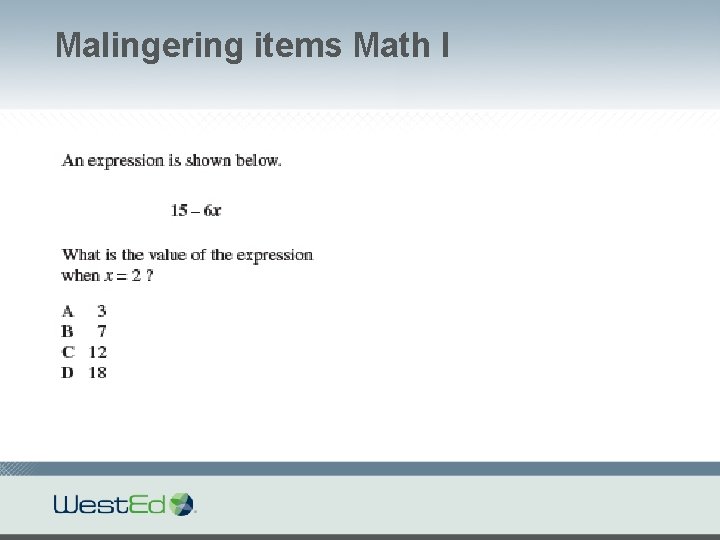

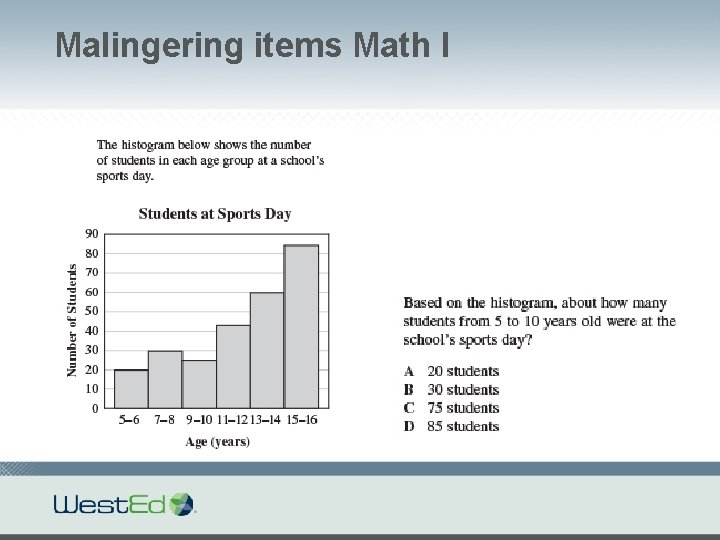

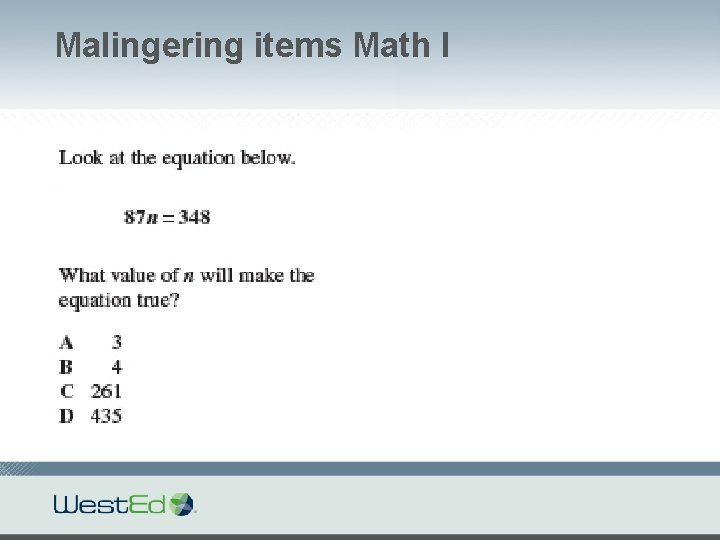

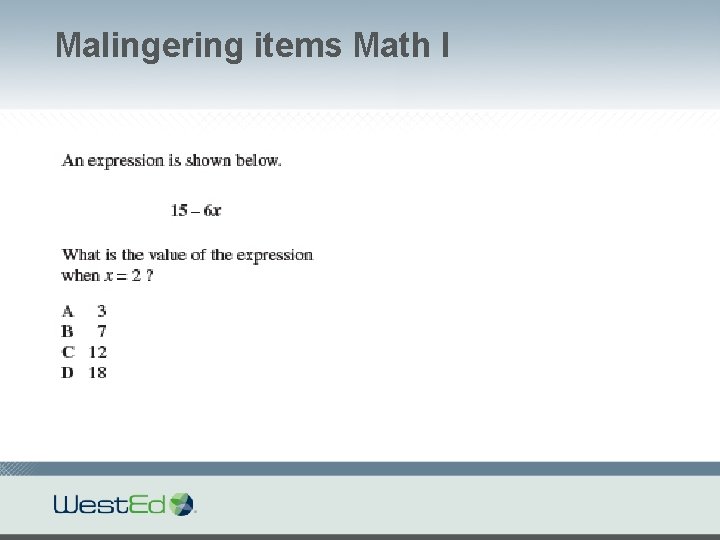

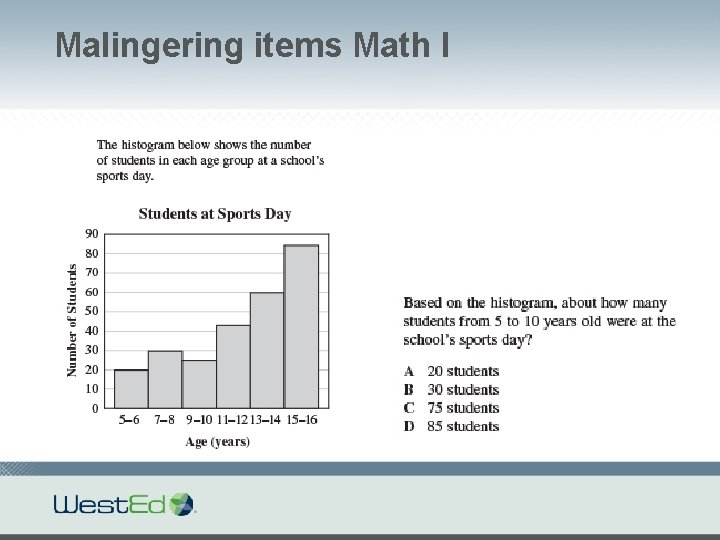

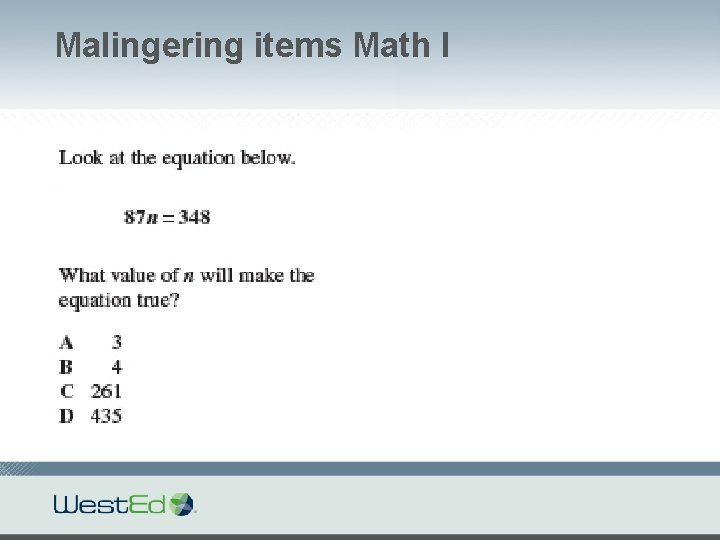

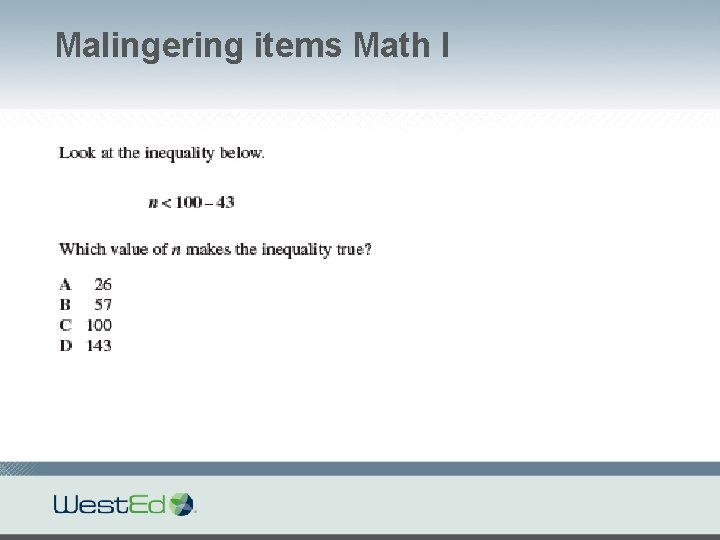

Malingering items Math I

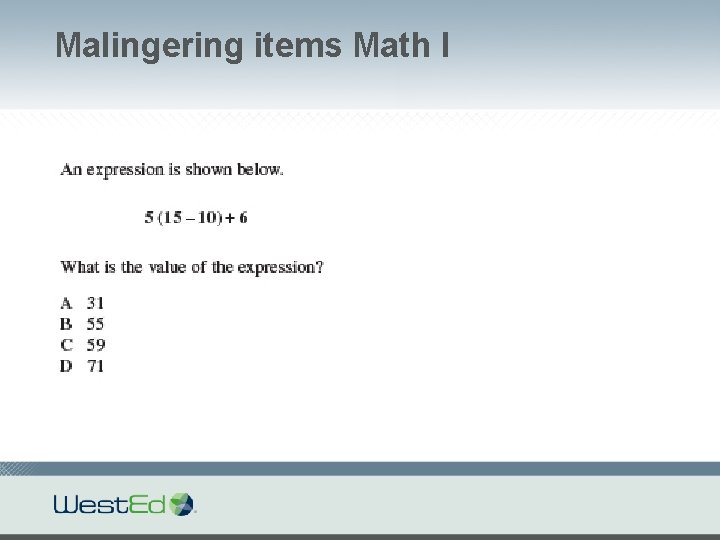

Malingering items Math I

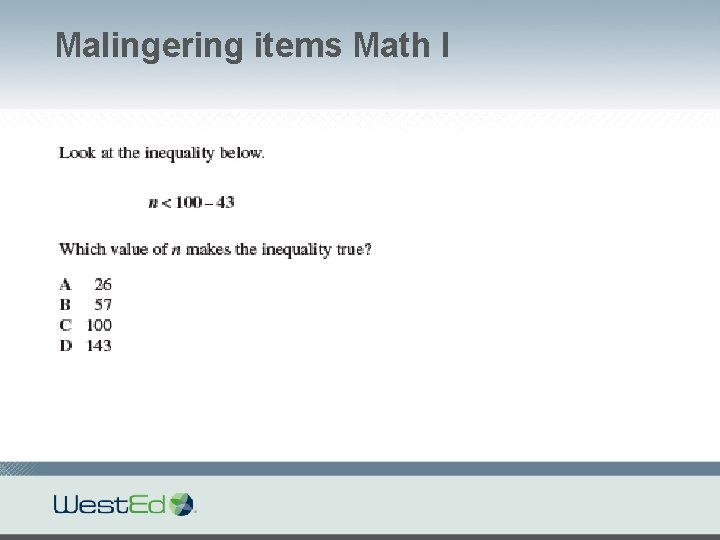

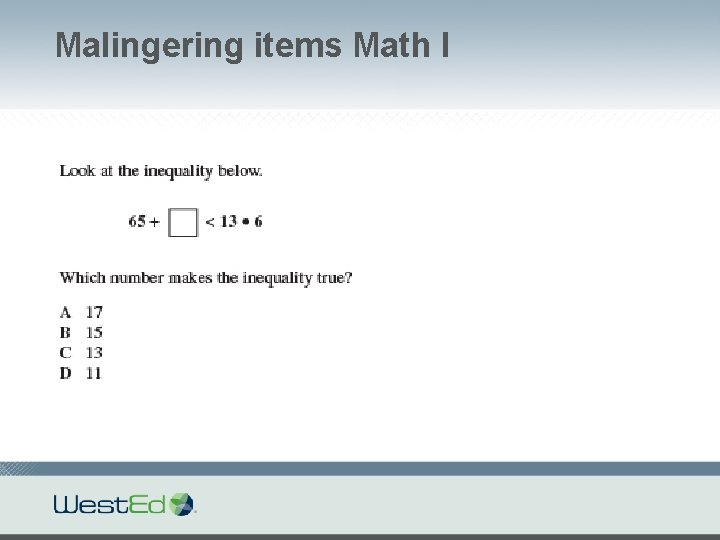

Malingering items Math I

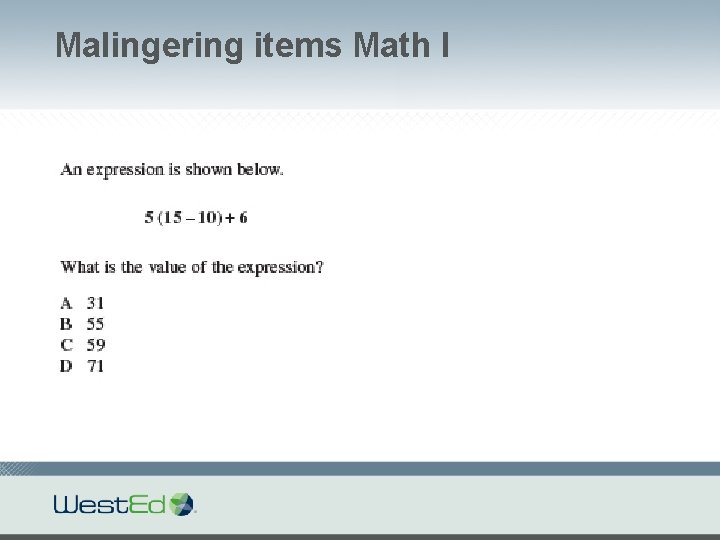

Malingering items Math I

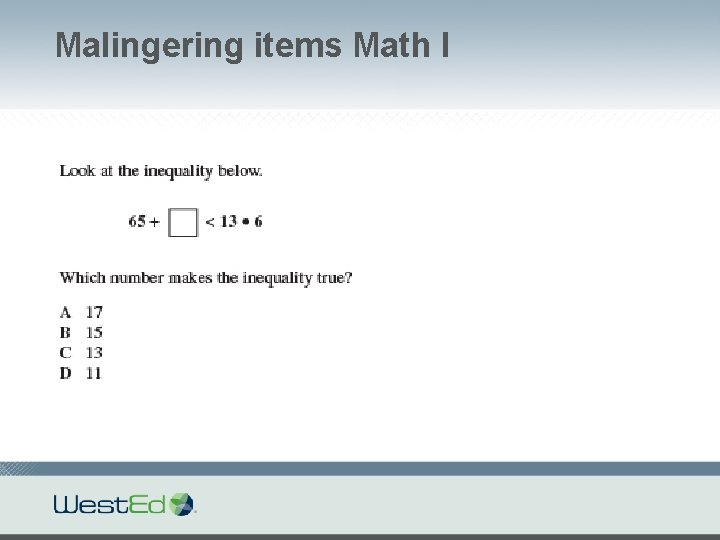

Malingering items Math I

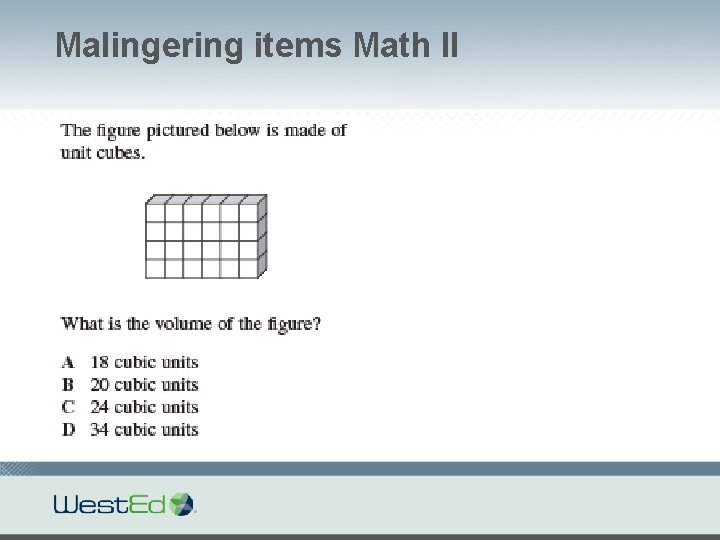

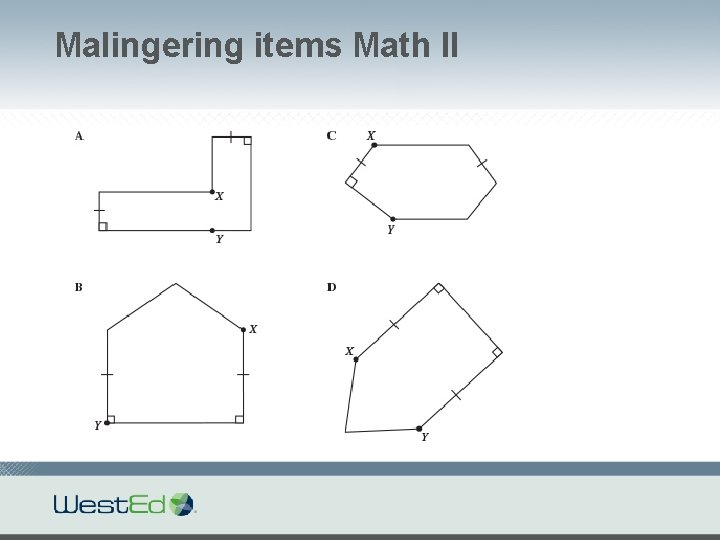

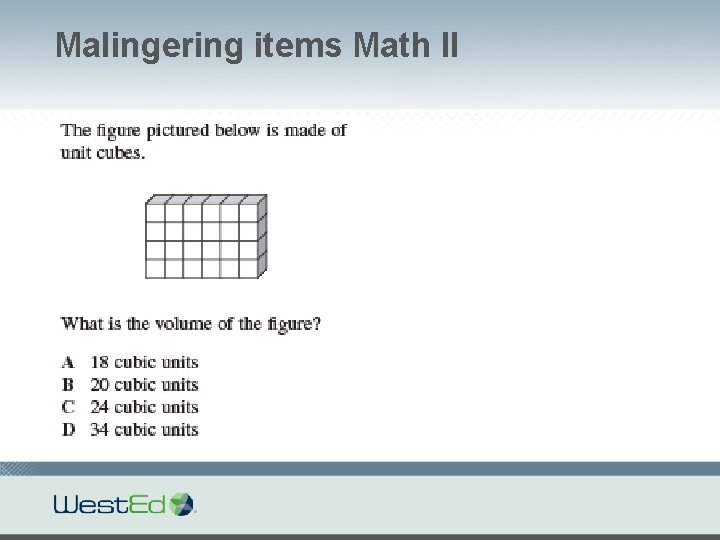

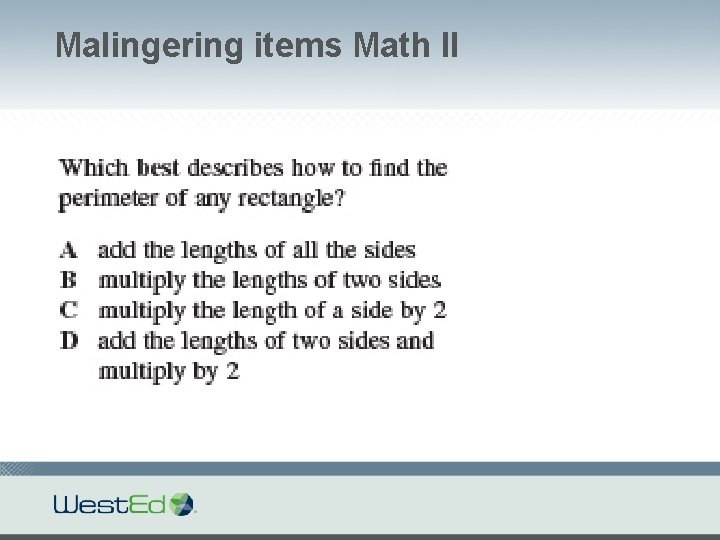

Malingering items Math II

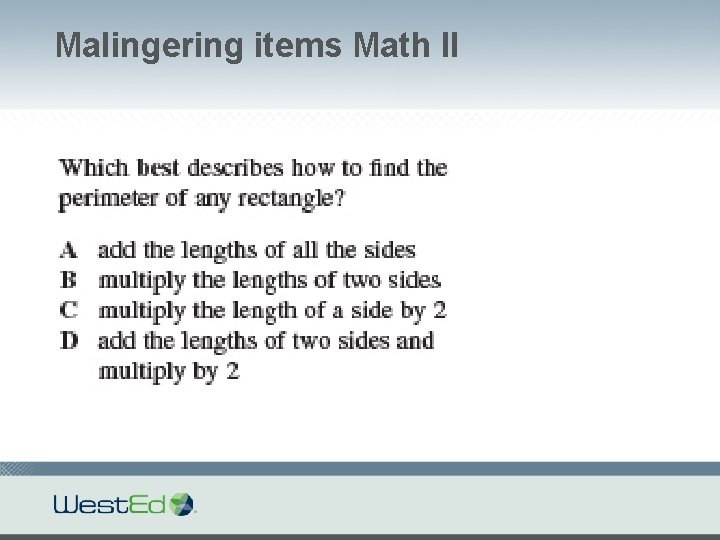

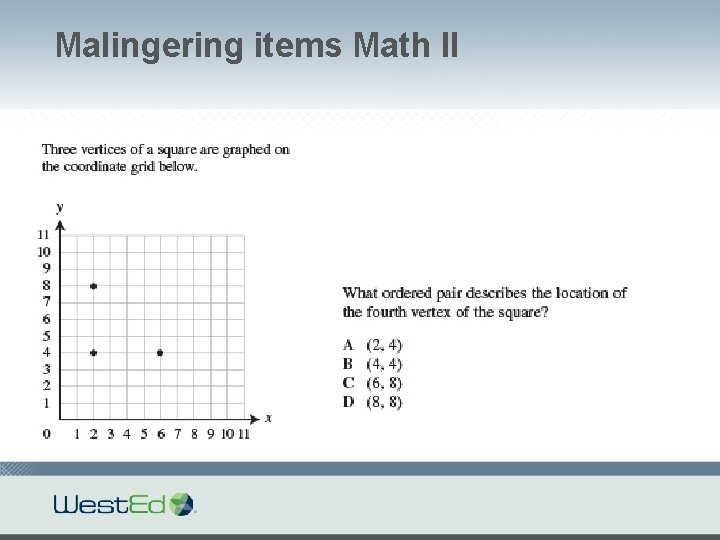

Malingering items Math II

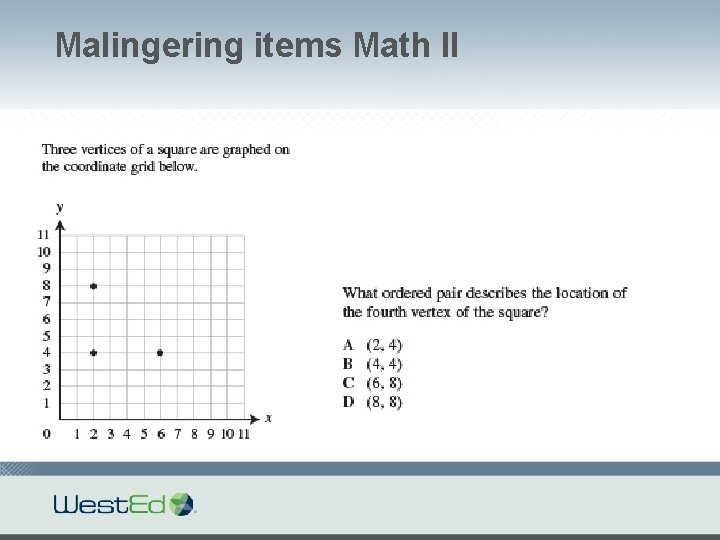

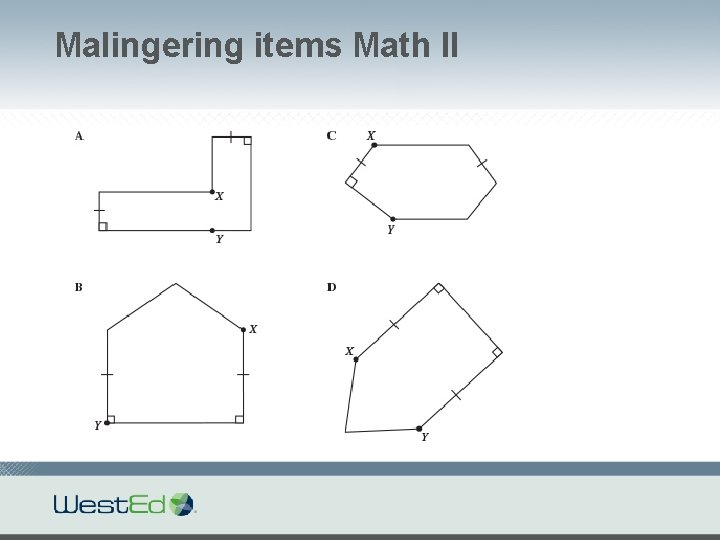

Malingering items Math II

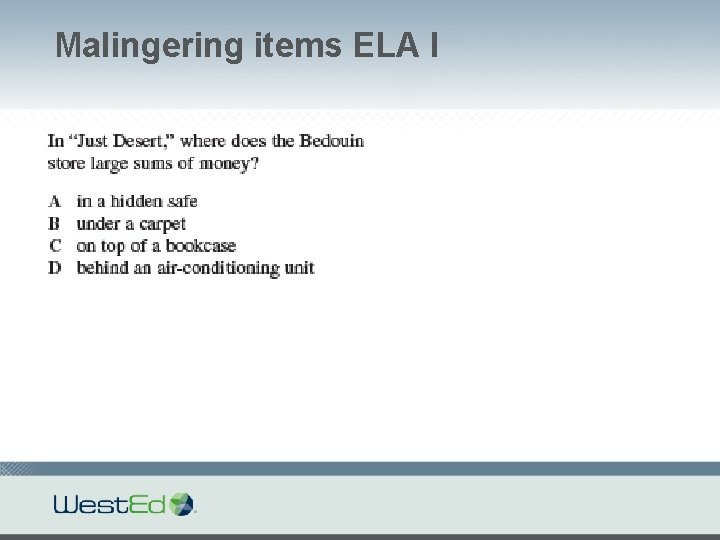

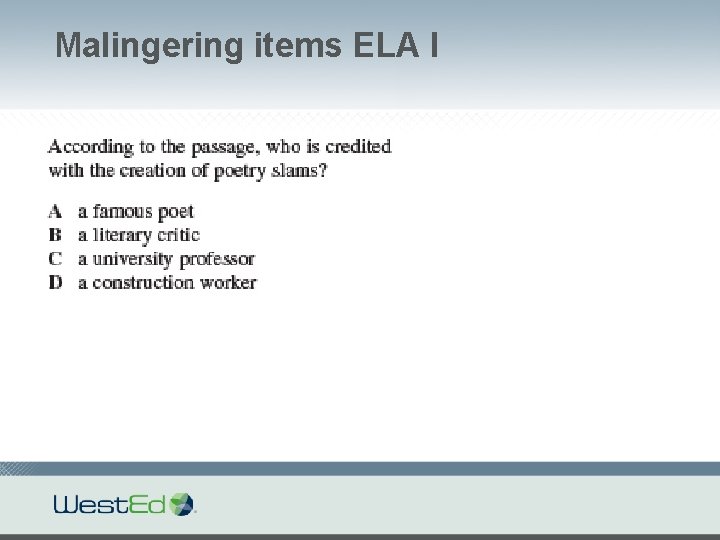

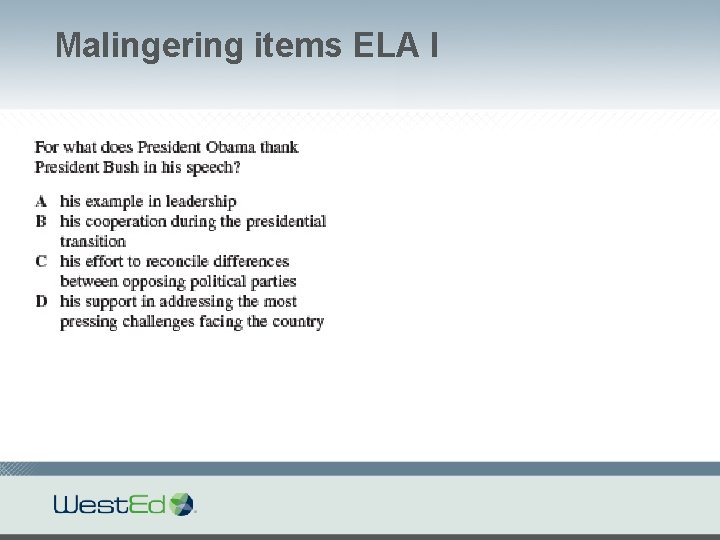

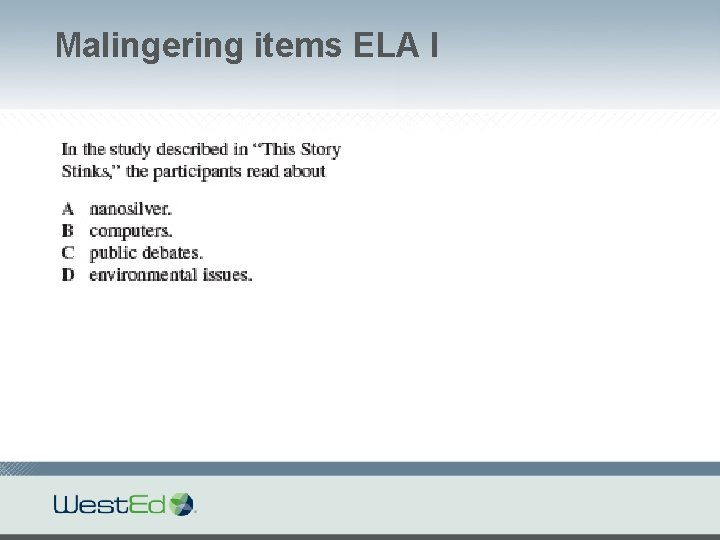

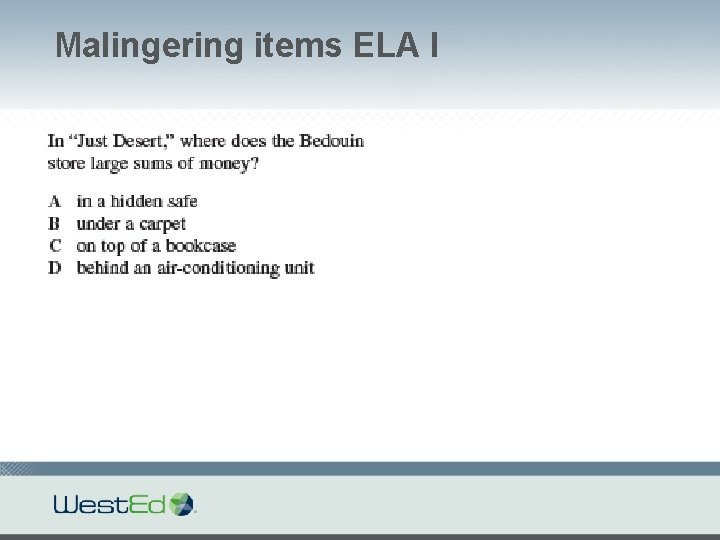

Malingering items ELA I

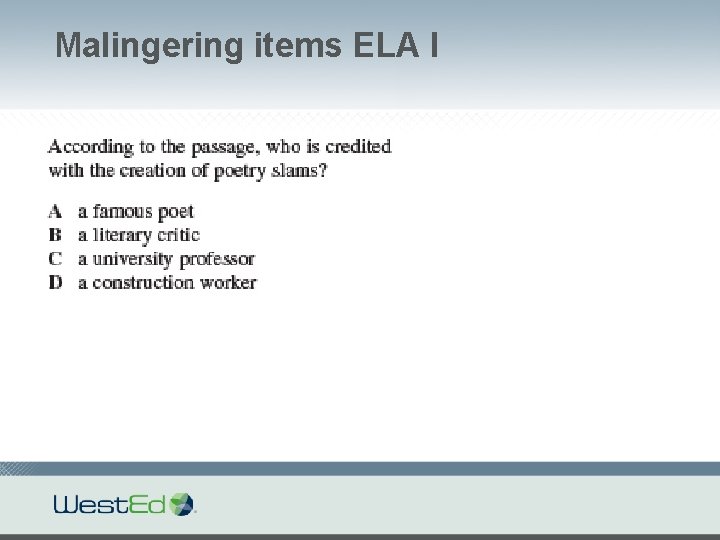

Malingering items ELA I

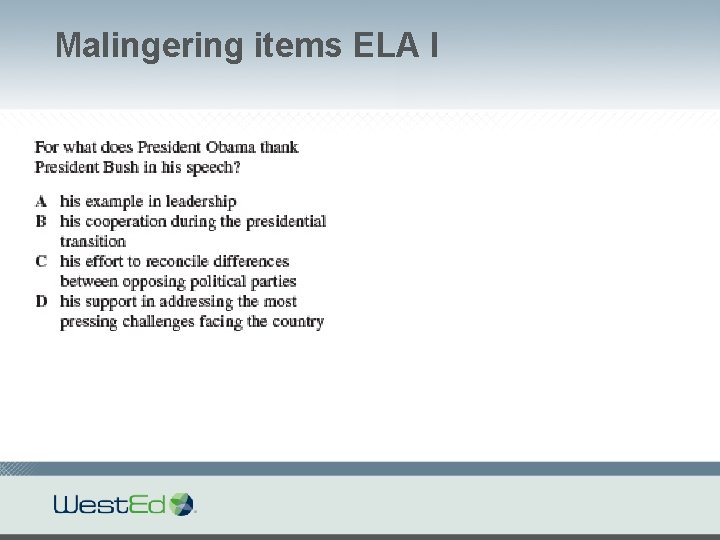

Malingering items ELA I

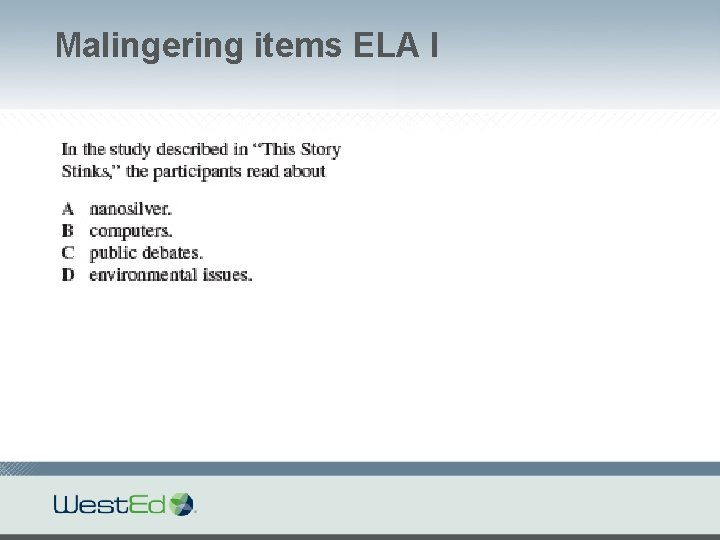

Malingering items ELA I

Feedback from the field…

- Students report that English Language Arts did not seem to be more difficult than the previous HSPEs.
- The mathematics assessments were too long (60 items) and too hard.
- Need more practice items.

Malingering Items: Some preliminary ideas around identification, evaluation, and use

Jennifer L. Dunn

Measured Progress © 2015

How should malingering items be used?

The context:

- In stand-alone field tests, students often have very little incentive to make a significant effort in responding to items
- The potential lack of valid responses may compromise evaluations of the items

The initial idea:

- Identify students with malingering behaviors
- Remove these students from the data used to evaluate the items

Goal

Use malingering items to identify malingering students and evaluate the impact of removing these students on item evaluations.

- Step 1: Identify malingering students
- Step 2: Establish an understanding of malingering behaviors
- Step 3: Compare the influence of these students on the item evaluations

Identifying malingering students

Identify students who are performing differently on the malingering items than expected.

Assumption:

- Students should be getting the malingering items correct.
- Students who get malingering items wrong are demonstrating malingering behavior.

Key difference:

- Other cognitive assessments: test takers are trying to be different.
- Educational assessment: test takers are not trying.

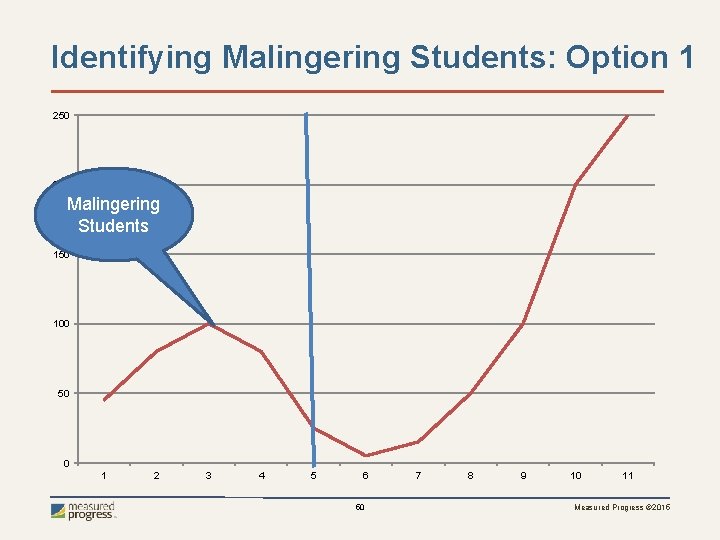

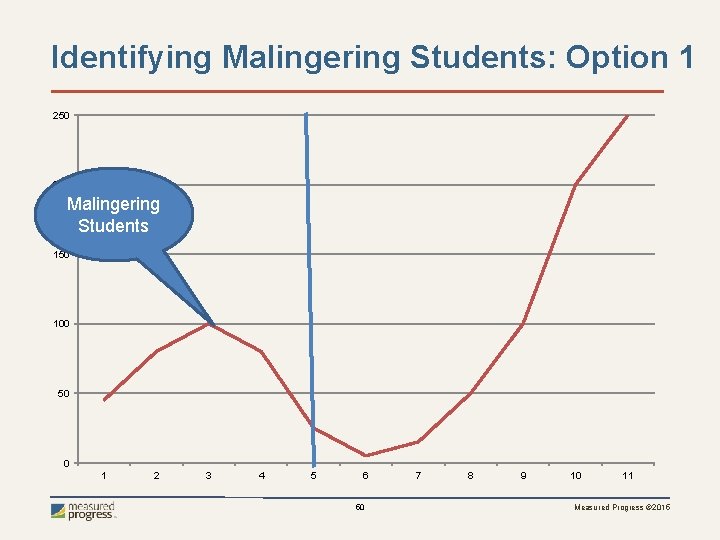

Identifying malingering students

Option 1:

- Calculate total scores for all students using only the malingering items
- Examine the malingering total score distribution
- If bimodal: the lower mode represents malingering students
- If not bimodal: determine a reasonable cut point and carefully evaluate the response patterns of those students

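Identifying Malingering Students: Option 1

[Figure: distribution of total scores on the malingering items. Axis ticks run from 1 to 11 on one axis and from 0 to 250 on the other; one region of the distribution is labeled "Malingering Students".]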
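As a rough illustration of Option 1, the sketch below scores each student on the malingering items only, tabulates the score distribution, and flags students at or below a cut point. The response matrix, the item positions, the cut value, and all function names are hypothetical and are not taken from the field test; judging whether the distribution is bimodal is left to inspection.

```python
from collections import Counter

def malingering_totals(responses, malingering_idx):
    """Total score per student using only the malingering items (1 = correct, 0 = incorrect)."""
    return [sum(row[j] for j in malingering_idx) for row in responses]

def score_distribution(totals, max_score):
    """Number of students at each possible total score, from 0 to max_score."""
    counts = Counter(totals)
    return [counts.get(score, 0) for score in range(max_score + 1)]

def flag_at_or_below(totals, cut):
    """Row indices of students whose malingering total is at or below the cut."""
    return [i for i, total in enumerate(totals) if total <= cut]

# Hypothetical scored data: 6 students by 5 items.
# Positions 1 and 3 are assumed to hold the malingering items.
responses = [
    [1, 1, 0, 1, 1],
    [0, 0, 0, 0, 1],
    [1, 1, 1, 1, 0],
    [0, 0, 1, 0, 0],
    [0, 1, 0, 1, 0],
    [1, 0, 1, 1, 1],
]
malingering_idx = [1, 3]

totals = malingering_totals(responses, malingering_idx)
distribution = score_distribution(totals, len(malingering_idx))
flagged = flag_at_or_below(totals, cut=0)  # cut=0 is an arbitrary illustrative choice
```

With operational data the cut would be chosen after examining the distribution, and the flagged students' response patterns would still need review before removal.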

Identifying malingering students

Option 2:

- Identify low scoring students (using all items on the test)
- Any student who scores at or below the total score that corresponds to random guessing
- Calculate a total score using the malingering items
- Remove any student who got a high score on the malingering items from the population of low scoring students
- Remaining students are the malingerers
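Option 2 can be sketched in the same spirit. The example assumes every item is multiple choice with a known number of options, so that the chance-level score is the sum of 1/k over items; the data, the `high_cut` threshold, and the function names are hypothetical. Item types such as gridded response would need a different chance-level calculation.

```python
def chance_score(options_per_item):
    """Expected total score under random guessing, assuming multiple-choice items."""
    return sum(1.0 / k for k in options_per_item)

def identify_option2(responses, malingering_idx, options_per_item, high_cut):
    """Low scorers (total at or below chance) who did NOT score high on the malingering items."""
    chance = chance_score(options_per_item)
    flagged = []
    for i, row in enumerate(responses):
        total = sum(row)                                   # all items on the test
        mal_total = sum(row[j] for j in malingering_idx)   # malingering items only
        if total <= chance and mal_total < high_cut:
            flagged.append(i)
    return flagged

# Hypothetical data: 4 students by 8 four-option items (chance-level score = 2.0).
# Positions 0 and 4 are assumed to hold the malingering items.
responses = [
    [1, 1, 1, 0, 1, 1, 0, 1],  # total 6: not a low scorer
    [0, 0, 1, 0, 0, 0, 0, 0],  # total 1, malingering total 0: flagged
    [1, 0, 0, 0, 1, 0, 0, 0],  # total 2, malingering total 2: low scorer, but removed from the flagged pool
    [0, 1, 0, 0, 0, 0, 1, 0],  # total 2, malingering total 0: flagged
]
options_per_item = [4] * 8
malingering_idx = [0, 4]

flagged = identify_option2(responses, malingering_idx, options_per_item, high_cut=2)
```

The third student shows the purpose of the last filtering step: a low total score combined with correct answers on the easy items looks more like low achievement than low effort.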

Verifying the identification of malingering students

Use multiple pieces of evidence

- Larger than typical declines in performance
- Inconsistent performance across content areas

Compare the total score distribution of a randomly selected group of items to the total score distribution of the malingering items

- The distribution of the randomly selected items should not be bimodal
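A minimal sketch of the distribution comparison follows. It draws a random set of non-malingering items of the same size as the malingering set and tabulates both score distributions. Excluding the malingering items from the draw, the peak-counting heuristic, the data, and all names are assumptions made for illustration; with real data a formal test of modality or a smoothed histogram would be more defensible than counting raw peaks.

```python
import random
from collections import Counter

def totals_for(responses, item_idx):
    """Total score per student over the given item positions (1 = correct, 0 = incorrect)."""
    return [sum(row[j] for j in item_idx) for row in responses]

def random_subset_totals(responses, k, exclude=(), seed=0):
    """Totals on k randomly chosen items outside `exclude`; the seed only makes the sketch repeatable."""
    candidates = [j for j in range(len(responses[0])) if j not in exclude]
    chosen = random.Random(seed).sample(candidates, k)
    return totals_for(responses, chosen)

def frequencies(totals, max_score):
    """Number of students at each possible total score, from 0 to max_score."""
    counts = Counter(totals)
    return [counts.get(score, 0) for score in range(max_score + 1)]

def count_peaks(freqs):
    """Count strict local maxima in a frequency list (a crude check for more than one mode)."""
    peaks = 0
    for i, f in enumerate(freqs):
        left = freqs[i - 1] if i > 0 else -1
        right = freqs[i + 1] if i < len(freqs) - 1 else -1
        if f > left and f > right:
            peaks += 1
    return peaks

# Hypothetical scored data: 6 students by 5 items; positions 1 and 3 are the malingering items.
responses = [
    [1, 1, 0, 1, 1],
    [0, 0, 0, 0, 1],
    [1, 1, 1, 1, 0],
    [0, 0, 1, 0, 0],
    [0, 1, 0, 1, 0],
    [1, 0, 1, 1, 1],
]
malingering_idx = [1, 3]
k = len(malingering_idx)

mal_freqs = frequencies(totals_for(responses, malingering_idx), k)
rand_freqs = frequencies(random_subset_totals(responses, k, exclude=malingering_idx, seed=1), k)
mal_peaks = count_peaks(mal_freqs)
rand_peaks = count_peaks(rand_freqs)
```

Repeating the random draw many times would give a reference range for how often an ordinary item set looks bimodal by chance.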

Understanding malingering students

What are the characteristics of malingering students?

Are malingering students simply random guessers?

- Identify students who score below the chance level on the assessment. Compare the selected students and response patterns

Do malingering behaviors change as the test progresses?

- Do the response patterns of the malingering students vary by test position?
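One simple way to look at the test-position question is to split the form into consecutive segments and compute the proportion correct among the flagged students in each segment. The sketch below assumes items are stored in administration order; the data and the function name are hypothetical.

```python
def proportion_correct_by_segment(responses, n_segments):
    """Proportion of correct responses in each consecutive block of item positions."""
    n_items = len(responses[0])
    # Integer boundaries that split the item positions into n_segments blocks.
    bounds = [s * n_items // n_segments for s in range(n_segments + 1)]
    proportions = []
    for start, stop in zip(bounds[:-1], bounds[1:]):
        correct = sum(sum(row[start:stop]) for row in responses)
        attempts = len(responses) * (stop - start)
        proportions.append(correct / attempts)
    return proportions

# Hypothetical scored responses for two flagged students on a 6-item form,
# with columns in the order the items were administered.
flagged_responses = [
    [1, 1, 0, 1, 0, 0],
    [1, 0, 1, 0, 0, 0],
]

by_position = proportion_correct_by_segment(flagged_responses, n_segments=3)
```

A decline across segments, as in this made-up example, would be consistent with effort dropping off as the test progresses, although item difficulty by position would need to be ruled out first.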

Understanding malingering items

Evaluate the malingering items

- Do malingering items differ from the other items on the test?
- Are they easier?
- Are they statistically identifiable?
- Does test position impact the effectiveness of the malingering items?
- Are the item characteristics of the malingering items consistent across test positions?

How many malingering items are necessary?
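The "Are they easier?" question can be checked descriptively by comparing classical difficulty (proportion correct) for the malingering items against the remaining items. This is a sketch under the assumption of dichotomously scored items; the data, item positions, and names are hypothetical.

```python
def p_values(responses):
    """Classical item difficulty: proportion of students answering each item correctly."""
    n_students = len(responses)
    n_items = len(responses[0])
    return [sum(row[j] for row in responses) / n_students for j in range(n_items)]

def mean_difficulty(p, item_idx):
    """Average p-value over the given item positions."""
    return sum(p[j] for j in item_idx) / len(item_idx)

# Hypothetical scored data: 4 students by 4 items; position 0 is the malingering item.
responses = [
    [1, 1, 0, 1],
    [1, 0, 0, 1],
    [1, 1, 1, 0],
    [0, 0, 0, 0],
]
malingering_idx = [0]
other_idx = [j for j in range(len(responses[0])) if j not in malingering_idx]

p = p_values(responses)
malingering_mean = mean_difficulty(p, malingering_idx)
other_mean = mean_difficulty(p, other_idx)
easier = malingering_mean > other_mean  # a higher p-value means an easier item
```

Running the same comparison separately for each test position would address whether the malingering items behave consistently wherever they are placed.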

Assessing the impact on item evaluation

Evaluate the item statistics with and without the malingering students

- Item statistics
  - P-value, item-total correlations, IRT
  - Distractor statistics
  - Model fit
- Test statistics
  - Reliability, test information

How many malingering students does it take to make a difference?
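The with-and-without comparison can be sketched for two of the classical statistics listed above: the p-value and a corrected item-total (item-rest) correlation. IRT calibration, distractor analysis, and reliability are omitted here. The data, the flagged index, and the function names are hypothetical.

```python
from math import sqrt

def p_values(responses):
    """Proportion of students answering each item correctly."""
    n = len(responses)
    return [sum(row[j] for row in responses) / n for j in range(len(responses[0]))]

def pearson(x, y):
    """Pearson correlation; returns None when either variable has no variance."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        return None
    return sxy / sqrt(sxx * syy)

def item_rest_correlations(responses):
    """Correlation of each item with the total score on the remaining items."""
    result = []
    for j in range(len(responses[0])):
        item = [row[j] for row in responses]
        rest = [sum(row) - row[j] for row in responses]
        result.append(pearson(item, rest))
    return result

def without_flagged(responses, flagged):
    """Response matrix with the flagged students' rows removed."""
    flagged_set = set(flagged)
    return [row for i, row in enumerate(responses) if i not in flagged_set]

# Hypothetical scored data: 4 students by 3 items; student 3 is assumed to be flagged.
responses = [
    [1, 1, 1],
    [1, 1, 0],
    [1, 0, 0],
    [0, 0, 0],
]
kept = without_flagged(responses, flagged=[3])

p_all, p_kept = p_values(responses), p_values(kept)
r_all, r_kept = item_rest_correlations(responses), item_rest_correlations(kept)
p_shift = [after - before for before, after in zip(p_all, p_kept)]
```

In this toy case removing one student raises every p-value and leaves the first item with no variance, so its correlation is undefined. That is the kind of sensitivity the closing question on the slide is asking about.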

Additional Questions

Are there other uses for malingering items?

- Generating student scores
- Linking
- Standard setting

How can we verify that the malingering items are functioning as intended?

Is including these items worthwhile?

Thank You!

Questions? Comments? Suggestions?