Evaluating Organizational Change: How and Why? Dr Kate Mackenzie Davey

- Slides: 29

Evaluating Organizational Change: How and Why? Dr Kate Mackenzie Davey, Organizational Psychology, Birkbeck, University of London, k.mackenzie-davey@bbk.ac.uk

Aims
- Examine the arguments for evaluating organizational change
- Consider the limitations of evaluation
- Consider different methods for evaluation
- Consider difficulties of evaluation in practice
- Consider costs and benefits in practice

Arguments for evaluating organizational change
- Sound professional practice
- Basis for organizational learning
- Central to the development of evidence-based practice
- Widespread cynicism about fads and fashions
- To influence social or governmental policy

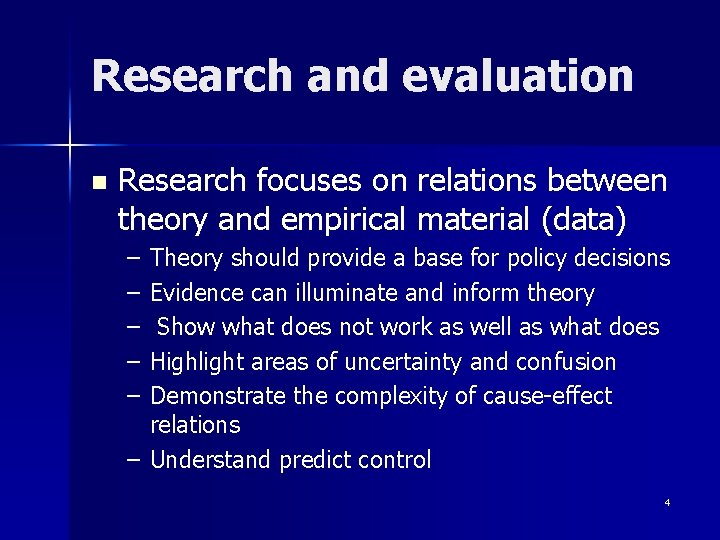

Research and evaluation
- Research focuses on relations between theory and empirical material (data)
  - Theory should provide a base for policy decisions
  - Evidence can illuminate and inform theory
  - Show what does not work as well as what does
  - Highlight areas of uncertainty and confusion
  - Demonstrate the complexity of cause-effect relations
  - Understand, predict, control

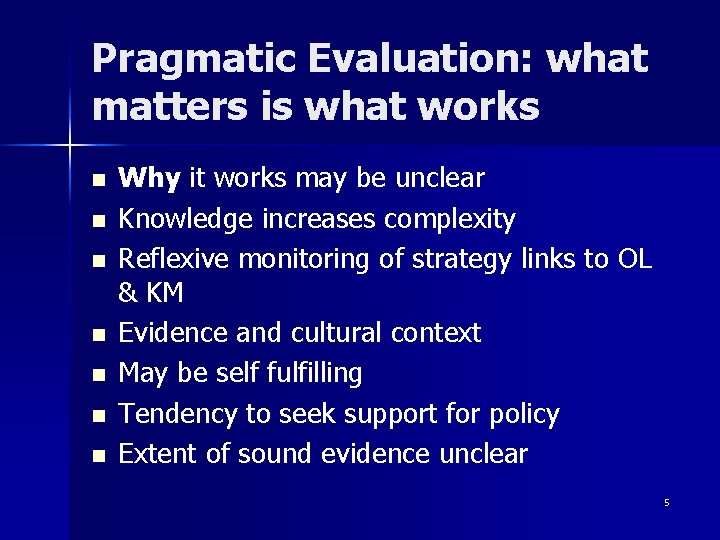

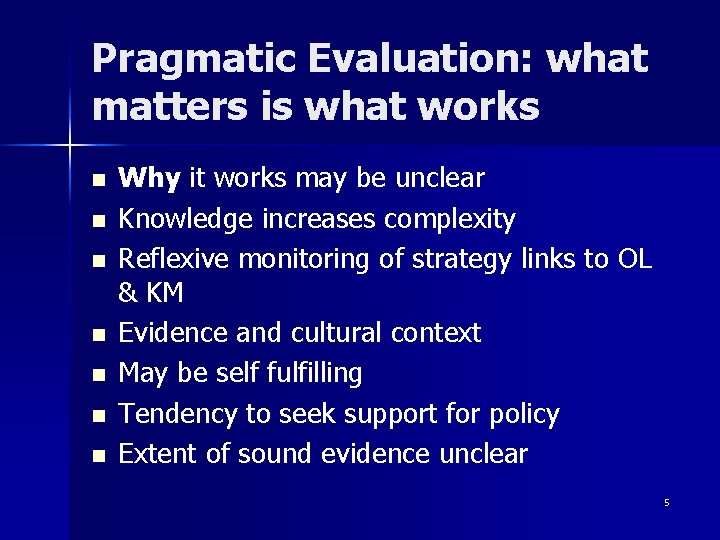

Pragmatic Evaluation: what matters is what works
- Why it works may be unclear
- Knowledge increases complexity
- Reflexive monitoring of strategy links to OL & KM
- Evidence and cultural context
- May be self-fulfilling
- Tendency to seek support for policy
- Extent of sound evidence unclear

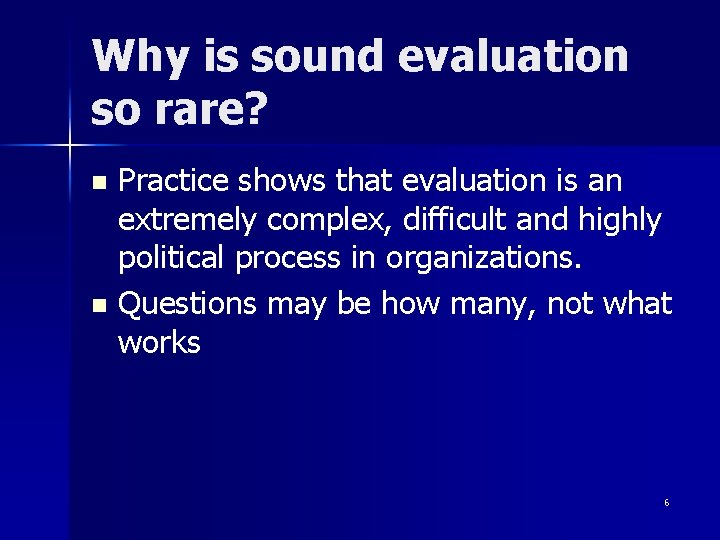

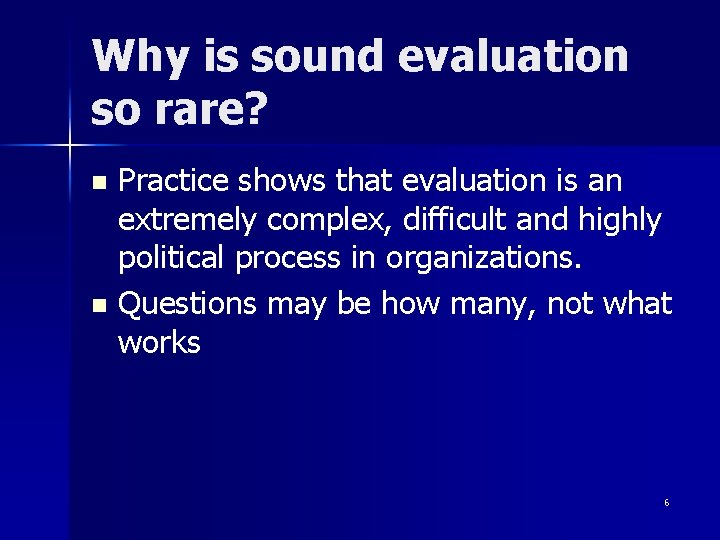

Why is sound evaluation so rare?
- Practice shows that evaluation is an extremely complex, difficult and highly political process in organizations.
- Questions may be how many, not what works

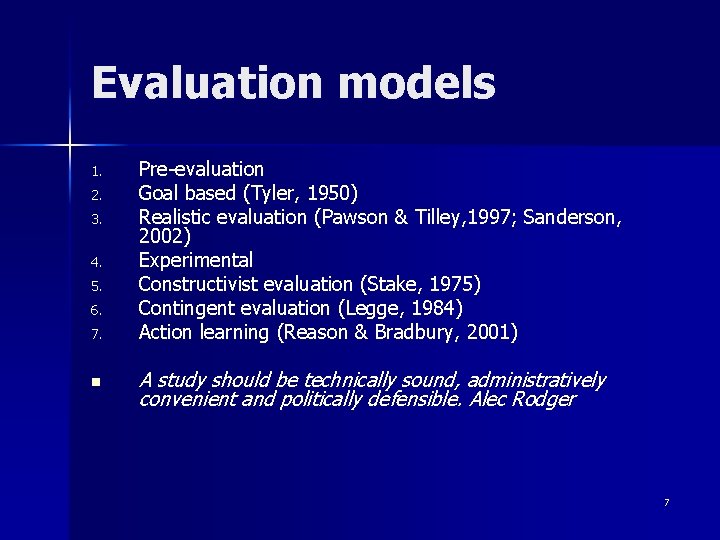

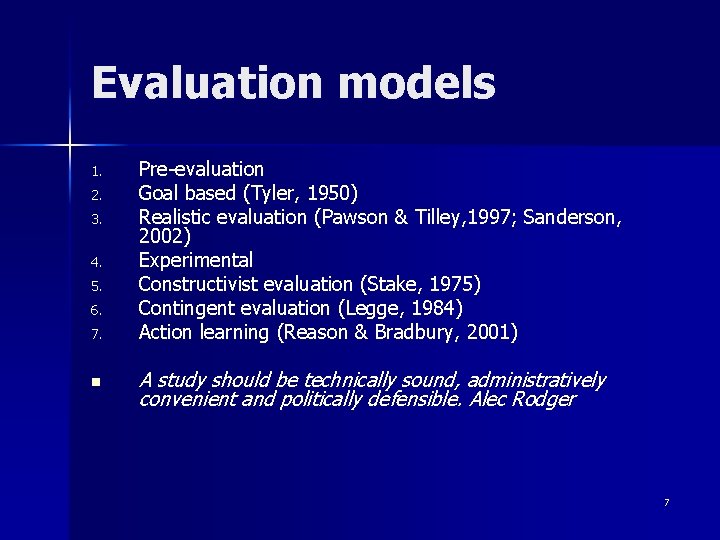

Evaluation models

1. Pre-evaluation
2. Goal based (Tyler, 1950)
3. Realistic evaluation (Pawson & Tilley, 1997; Sanderson, 2002)
4. Experimental
5. Constructivist evaluation (Stake, 1975)
6. Contingent evaluation (Legge, 1984)
7. Action learning (Reason & Bradbury, 2001)

- A study should be technically sound, administratively convenient and politically defensible. (Alec Rodger)

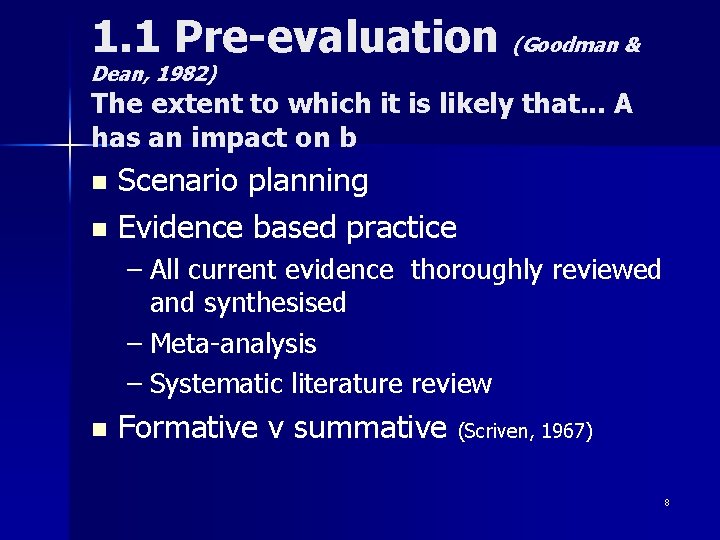

1.1 Pre-evaluation (Goodman & Dean, 1982)
- The extent to which it is likely that... A has an impact on B
- Scenario planning
- Evidence-based practice
  - All current evidence thoroughly reviewed and synthesised
  - Meta-analysis
  - Systematic literature review
- Formative v summative (Scriven, 1967)

1.2 Pre-evaluation issues
- Based on theory and past evidence: not clear it will generalise to the specific case
- Formative: influences planning
- Argument: to understand a system you must intervene (Lewin)

2.1 Goal based evaluation (Tyler, 1950)
- Objectives used to aid planned change
- Can help clarify models
- Goals from benchmarking, theory or pre-evaluation exercises
- Predict changes
- Measure pre and post intervention
- Identify the interventions
- Were objectives achieved?
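
The goal based steps above (set objectives, measure pre and post intervention, ask whether objectives were achieved) can be sketched in a few lines. This is only an illustrative sketch: the `Objective` class, the `evaluate_goals` function and the example figures are hypothetical and do not come from Tyler (1950) or from the slides.

```python
from dataclasses import dataclass


@dataclass
class Objective:
    """One goal set before the change (hypothetical structure)."""
    name: str
    baseline: float               # pre-intervention measure
    target: float                 # level the objective aims for
    higher_is_better: bool = True


def evaluate_goals(objectives, post_measures):
    """Compare post-intervention measures with each objective's target."""
    report = {}
    for obj in objectives:
        post = post_measures[obj.name]
        if obj.higher_is_better:
            achieved = post >= obj.target
        else:
            achieved = post <= obj.target
        report[obj.name] = {"change": post - obj.baseline, "achieved": achieved}
    return report


# Illustrative figures only, invented for this sketch.
objectives = [
    Objective("absence_days", baseline=9.0, target=7.0, higher_is_better=False),
    Objective("satisfaction", baseline=3.1, target=3.5),
]
report = evaluate_goals(objectives, {"absence_days": 6.5, "satisfaction": 3.3})
for name, result in report.items():
    print(name, result)
```

The sketch records only whether each pre-set target was met, so it reports nothing about effects that no objective anticipated.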

2.2 Difficulties with goal based evaluation
- Who sets the goals?
- How do you identify the intervention?
  - Tendency to managerialism (unitarist)
  - Failure to accommodate value pluralism
  - Over-commitment to scientific paradigm
  - What is measured gets done
  - No recognition of unanticipated effects
  - Focus on single outcome, not process

3.1 Realistic evaluation: Conceptual clarity (Pawson & Tilley, 1997)
- Evidence needs to be based on clear ideas about concepts
- Measures may be derived from theory
- Examine definitions used elsewhere
- Consider specific examples
- Ensure all aspects are covered

3.2 Realistic evaluation: Towards a theory. What are you looking for?
- Make assumptions and ideas explicit
- What is your theory of cause and effect?
  - What are you expecting to change (outcome)?
  - How are you hoping to achieve this change (mechanism)?
  - What aspects of the context could be important?

3.3 Realistic evaluation: Context-mechanism-outcome
- Context: What environmental aspects may affect the outcome?
  - What else may influence the outcomes?
  - What other effects may there be?

3.4 Realistic evaluation: Context-mechanism-outcome
- Mechanism: What will you do to bring about this outcome?
  - How will you intervene (if at all)?
  - What will you observe?
  - How would you expect groups to differ?
  - What mechanisms do you expect to operate?

3.5 Realistic evaluation: Context-mechanism-outcome
- Outcome: What effect or outcome do you aim for?
  - What evidence could show it worked?
  - How could you measure it?
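
One way to make assumptions explicit, as the context-mechanism-outcome questions ask, is to write the working theory down as a simple record and check which parts are still empty. The sketch below is a hypothetical illustration: the `CMOConfiguration` class, its `missing_parts` method and the example entries are assumptions made for this sketch, not part of Pawson & Tilley's (1997) method.

```python
from dataclasses import dataclass, field


@dataclass
class CMOConfiguration:
    """An evaluator's working theory, split into context, mechanism, outcome."""
    context: list = field(default_factory=list)    # what else may affect the outcome
    mechanism: list = field(default_factory=list)  # how the change is meant to work
    outcome: list = field(default_factory=list)    # what should change, and the evidence for it

    def missing_parts(self):
        """Return the parts of the theory that have not been made explicit yet."""
        return [
            part
            for part in ("context", "mechanism", "outcome")
            if not getattr(self, part)
        ]


# Illustrative entries only, invented for this sketch.
theory = CMOConfiguration(
    mechanism=["Team coaching sessions every fortnight"],
    outcome=["Fewer handover errors, counted from incident reports"],
)
print(theory.missing_parts())  # context has not been described yet
```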

4.1 Experimental evaluation: Explain, predict and control by identifying causal relationships
- Theory of causality makes predictions about variables, e.g. training increases productivity
- Two randomly assigned matched groups: experimental and control
- One group experiences intervention, one does not
- Measure outcome variable pre-test and post-test (longitudinal)
- Analyse for statistically significant differences between the two groups
- Outcome linked back to modify theory
- The gold standard
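
The experimental design above can be sketched with the Python standard library. The sketch makes several assumptions that are not in the slides: it uses simple random assignment rather than matching, it compares pre-to-post gain scores, and it uses a two-sided permutation test as the significance test (the slides do not name one). The function names and the data are hypothetical.

```python
import random
from statistics import mean


def assign_groups(participants, seed=0):
    """Randomly split participants into experimental and control groups."""
    rng = random.Random(seed)
    shuffled = list(participants)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return shuffled[:half], shuffled[half:]


def gain_scores(pre, post):
    """Post-test minus pre-test for each participant."""
    return [after - before for before, after in zip(pre, post)]


def permutation_p_value(gains_exp, gains_ctrl, n_permutations=10000, seed=0):
    """Two-sided permutation test on the difference in mean gain."""
    rng = random.Random(seed)
    observed = mean(gains_exp) - mean(gains_ctrl)
    pooled = list(gains_exp) + list(gains_ctrl)
    n_exp = len(gains_exp)
    extreme = 0
    for _ in range(n_permutations):
        # Re-label participants at random and recompute the difference.
        rng.shuffle(pooled)
        diff = mean(pooled[:n_exp]) - mean(pooled[n_exp:])
        if abs(diff) >= abs(observed):
            extreme += 1
    # Add one to both counts so the estimated p-value is never exactly zero.
    return observed, (extreme + 1) / (n_permutations + 1)


# Illustrative productivity scores, invented for this sketch.
exp_gains = gain_scores(pre=[50, 52, 48, 51, 49, 50], post=[55, 58, 55, 56, 55, 57])
ctrl_gains = gain_scores(pre=[50, 51, 49, 50, 52, 48], post=[50, 52, 49, 51, 52, 49])
difference, p_value = permutation_p_value(exp_gains, ctrl_gains)
print(difference, p_value)
```

A small p-value says only that the difference between the groups is unlikely under random re-labelling. It does not explain why the intervention worked or what else changed.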

4.2 Difficulties with experimental evaluation in organizations
- Difficult to achieve in organizations
- Unitarist view
- Leaves out unforeseen effects
- Problems with continuous change processes
- Summative not formative
- Generally at best quasi-experimental

5.1 Constructivist or stakeholder evaluation
- Responsive evaluation (Stake, 1975) or Fourth generation evaluation (Guba & Lincoln, 1989)
- Constructivist, interpretivist, hermeneutic methodology
  - Based on stakeholder claims, concerns, issues
  - Stakeholders: agents, beneficiaries, victims

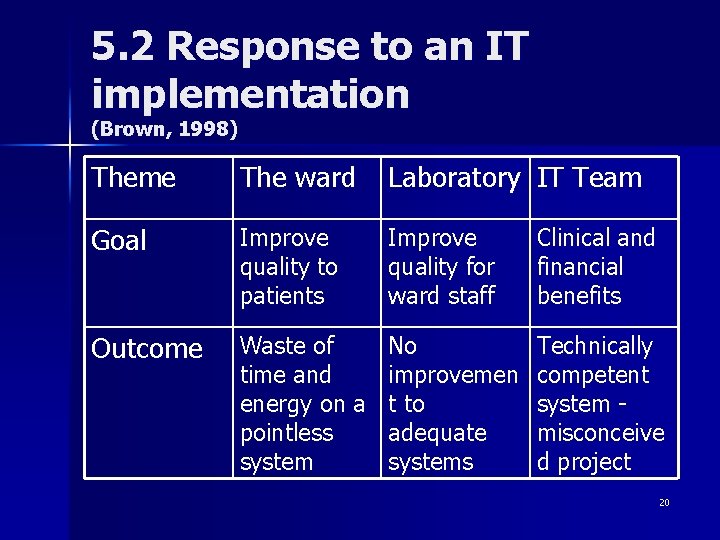

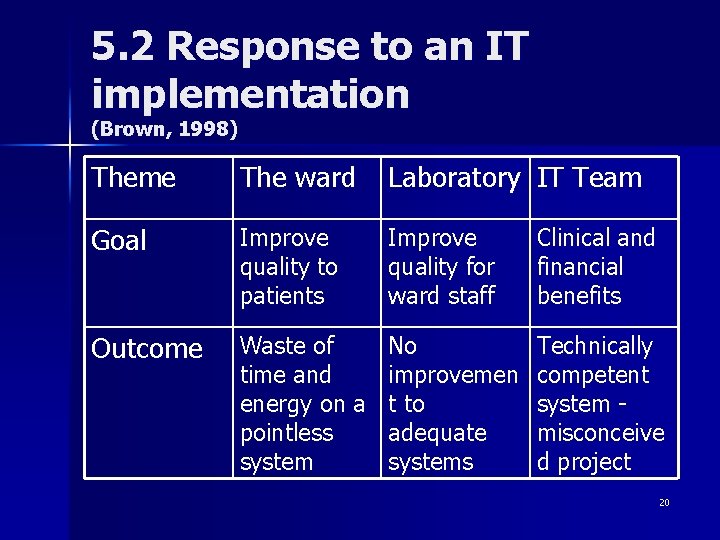

5.2 Response to an IT implementation (Brown, 1998)

| Theme | The ward | Laboratory | IT Team |
| --- | --- | --- | --- |
| Goal | Improve quality to patients | Improve quality for ward staff | Clinical and financial benefits |
| Outcome | Waste of time and energy on a pointless system | No improvement to adequate systems | Technically competent system, misconceived project |

5.3 Constructivist evaluation issues
- No one right answer
- Demonstrates complexity of issues
- Highlights conflicts of interests
- Interesting for academics
- Difficult for practitioners to resolve

6 A contingent approach to evaluation (Legge, 1984)
- Do you want the proposed change programme to be evaluated? (Stakeholders)
- What functions do you wish its evaluation to serve? (Stakeholders)
- What are the alternative approaches to evaluation? (Researcher)
- Which of the alternatives best matches the requirements? (Discussion)

7 Action research
- Identify good practice (Reason & Bradbury, 2001)
- Action research
  - Responds to practical issues in organizations
  - Engages in collaborative relationships
  - Draws on diverse evidence
  - Value orientation: humanist
  - Emergent, developmental

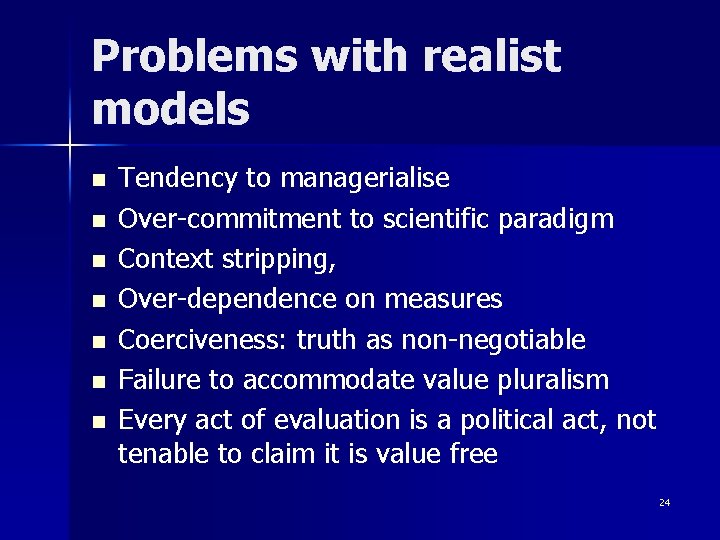

Problems with realist models
- Tendency to managerialise
- Over-commitment to scientific paradigm
- Context stripping, over-dependence on measures
- Coerciveness: truth as non-negotiable
- Failure to accommodate value pluralism
- Every act of evaluation is a political act; it is not tenable to claim it is value free

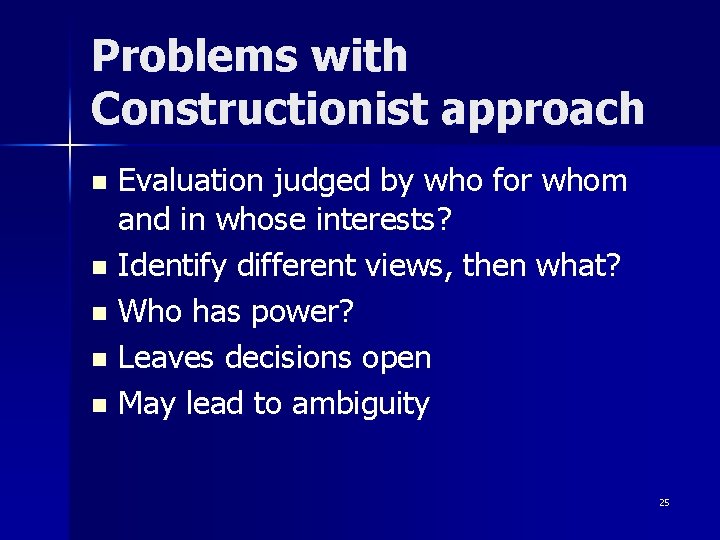

Problems with Constructionist approach
- Evaluation judged by who, for whom and in whose interests?
- Identify different views, then what?
- Who has power?
- Leaves decisions open
- May lead to ambiguity

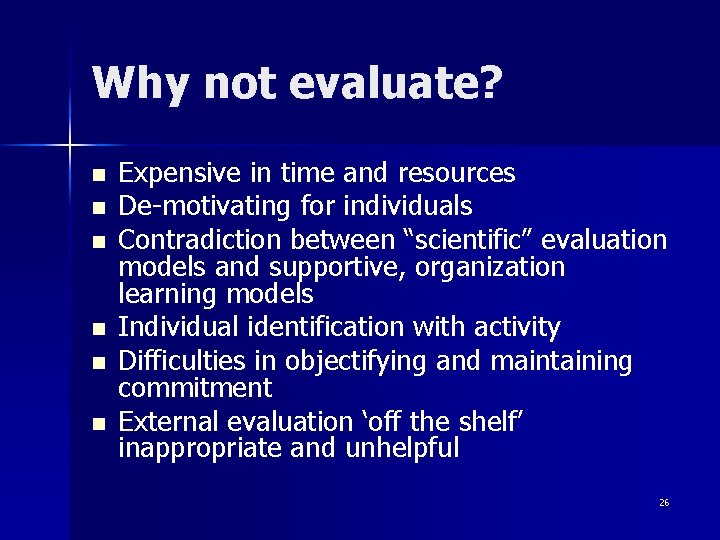

Why not evaluate?
- Expensive in time and resources
- De-motivating for individuals
- Contradiction between “scientific” evaluation models and supportive, organization learning models
- Individual identification with activity
- Difficulties in objectifying and maintaining commitment
- External evaluation ‘off the shelf’ inappropriate and unhelpful

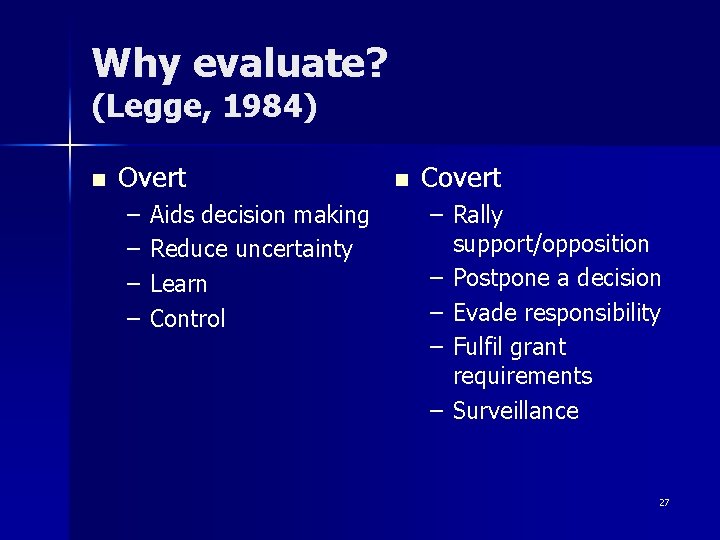

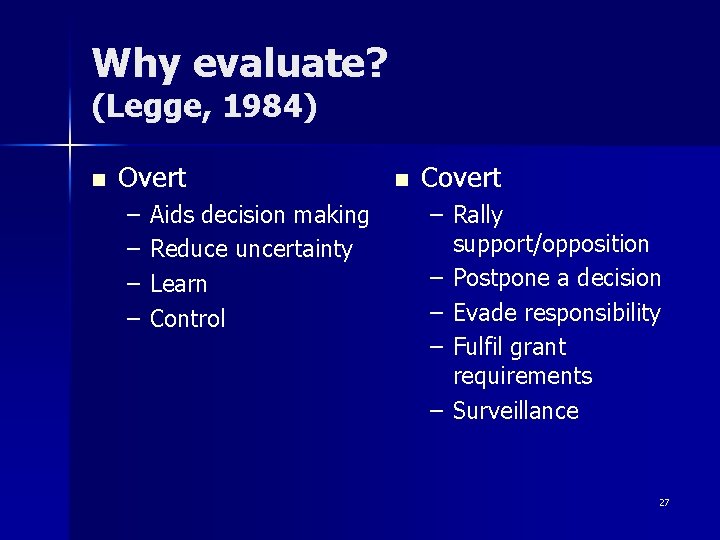

Why evaluate? (Legge, 1984)
- Overt
  - Aids decision making
  - Reduce uncertainty
  - Learn
  - Control
- Covert
  - Rally support/opposition
  - Postpone a decision
  - Evade responsibility
  - Fulfil grant requirements
  - Surveillance

Conclusion
- Evaluation is very expensive, demanding and complex
- Evaluation is a political process: need for clarity about why you do it
- Good evaluation always carries the risk of exposing failure
- Therefore evaluation is an emotional process
- Evaluation needs to be acceptable to the organization

Conclusion 2
- Plan and decide which model of evaluation is appropriate
- Identify who will carry out the evaluation and for what purpose
- Do not overload the evaluation process: judgment or development?
- Evaluation can give credibility and enhance learning
- Informal evaluation will take place whether you plan it or not