Evaluating models for a needle in a haystack

- Slides: 28

Evaluating models for a needle in a haystack: Applications in predictive maintenance

Danielle Dean (Microsoft), Data Science Lead, @danielleodean
Shaheen Gauher (Microsoft), Data Scientist, @Shaheen_Gauher

Outline
§ Predictive Maintenance Use Cases
§ Data Science Process
§ Modeling & Evaluation
- Random Guess, Weighted Guess (by distribution, by threshold), Majority Class
- Cost factor

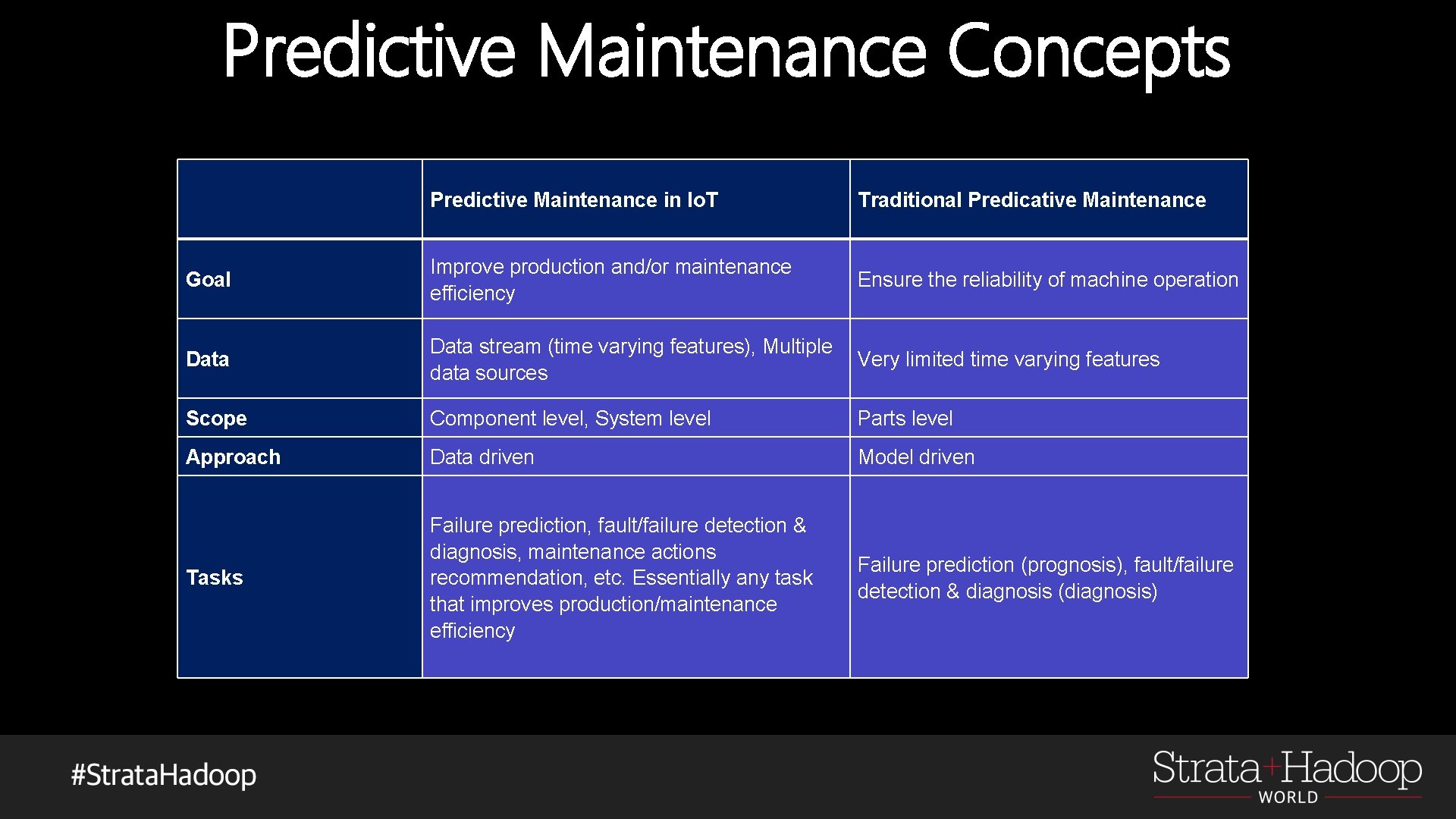

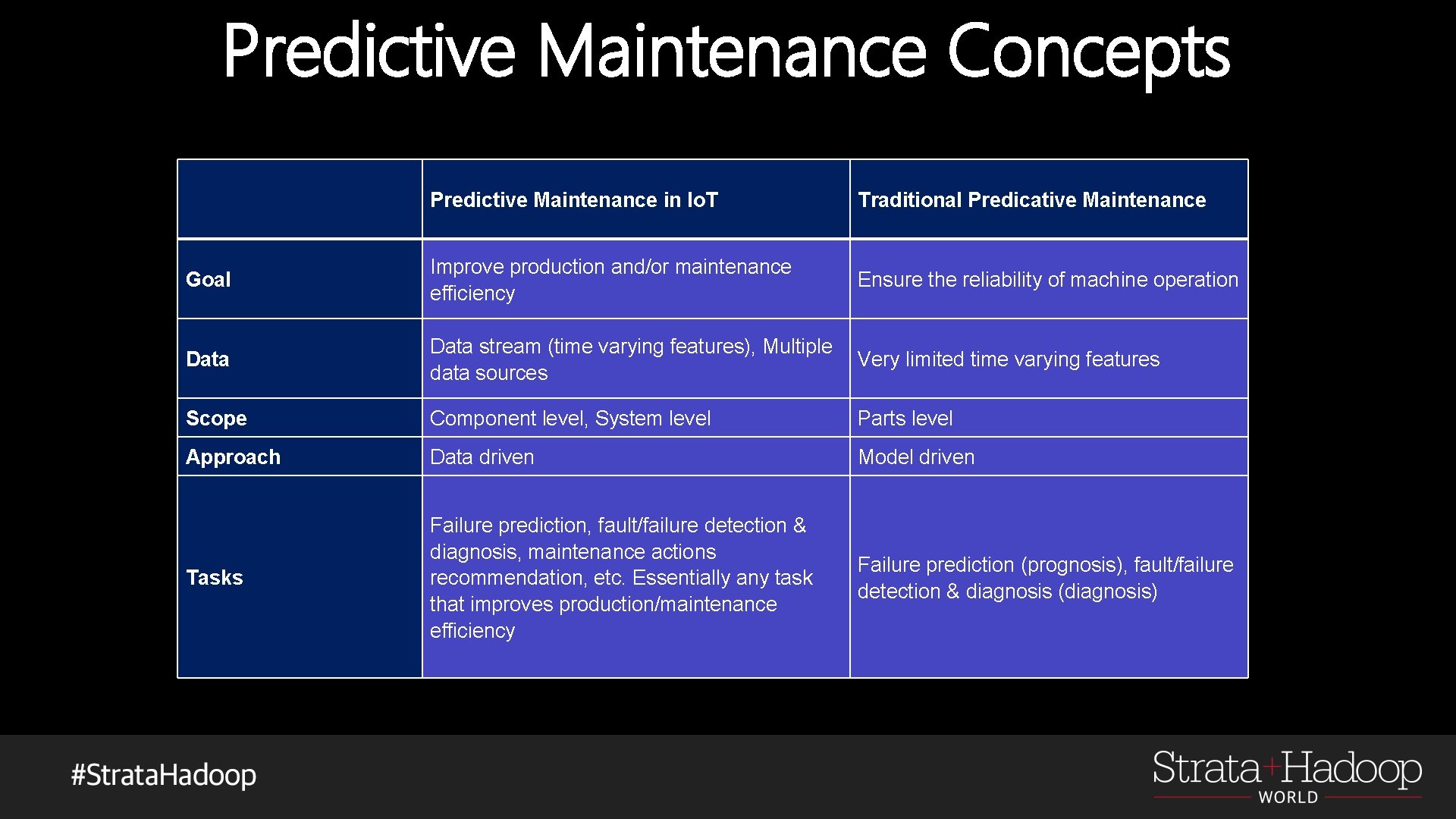

Predictive Maintenance Concepts

| | Predictive Maintenance in IoT | Traditional Predictive Maintenance |
|---|---|---|
| Goal | Improve production and/or maintenance efficiency | Ensure the reliability of machine operation |
| Data | Data stream (time-varying features), multiple data sources | Very limited time-varying features |
| Scope | Component level, system level | Parts level |
| Approach | Data driven | Model driven |
| Tasks | Failure prediction, fault/failure detection & diagnosis, maintenance actions recommendation, etc. Essentially any task that improves production/maintenance efficiency | Failure prediction (prognosis), fault/failure detection & diagnosis (diagnosis) |

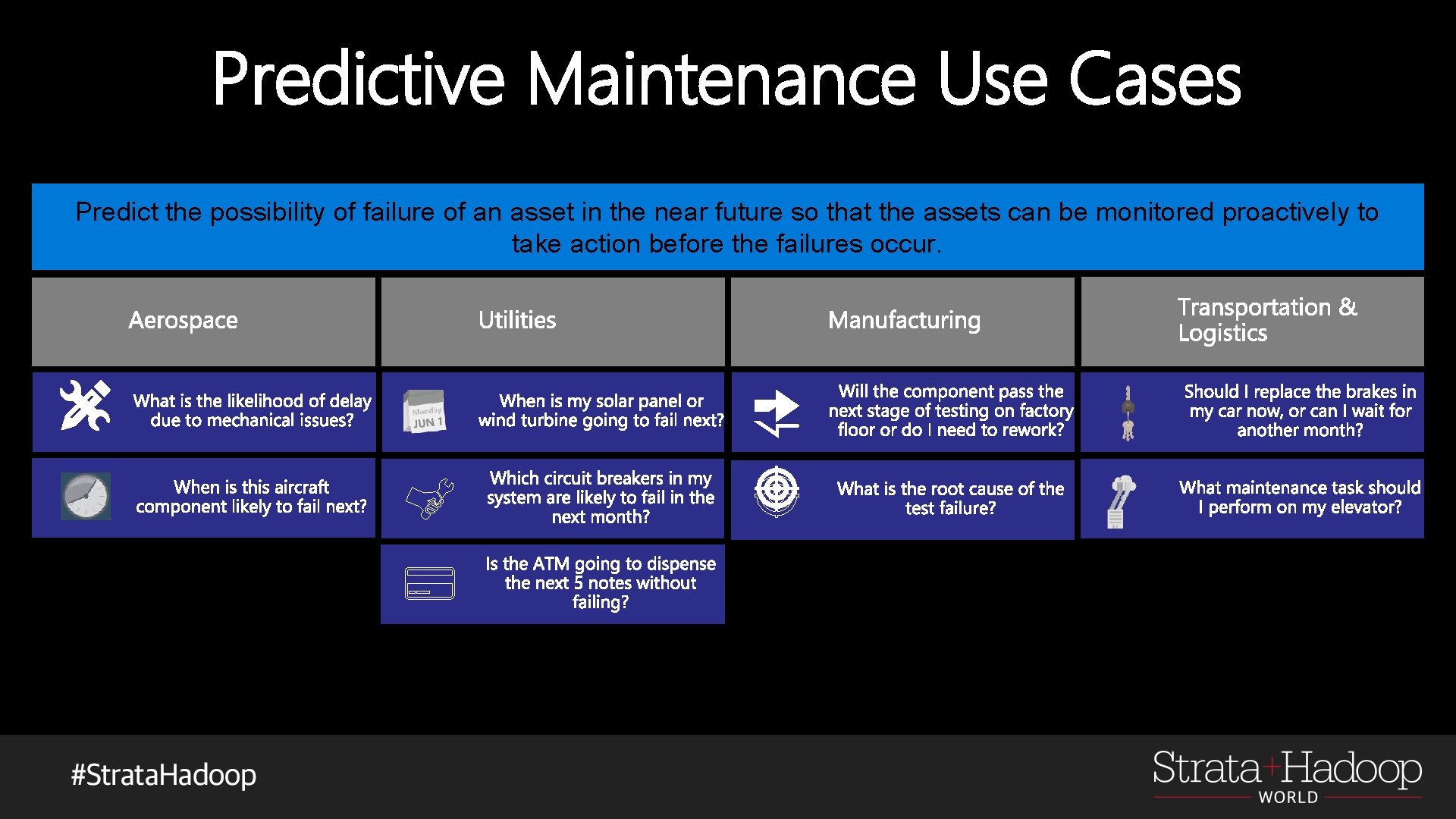

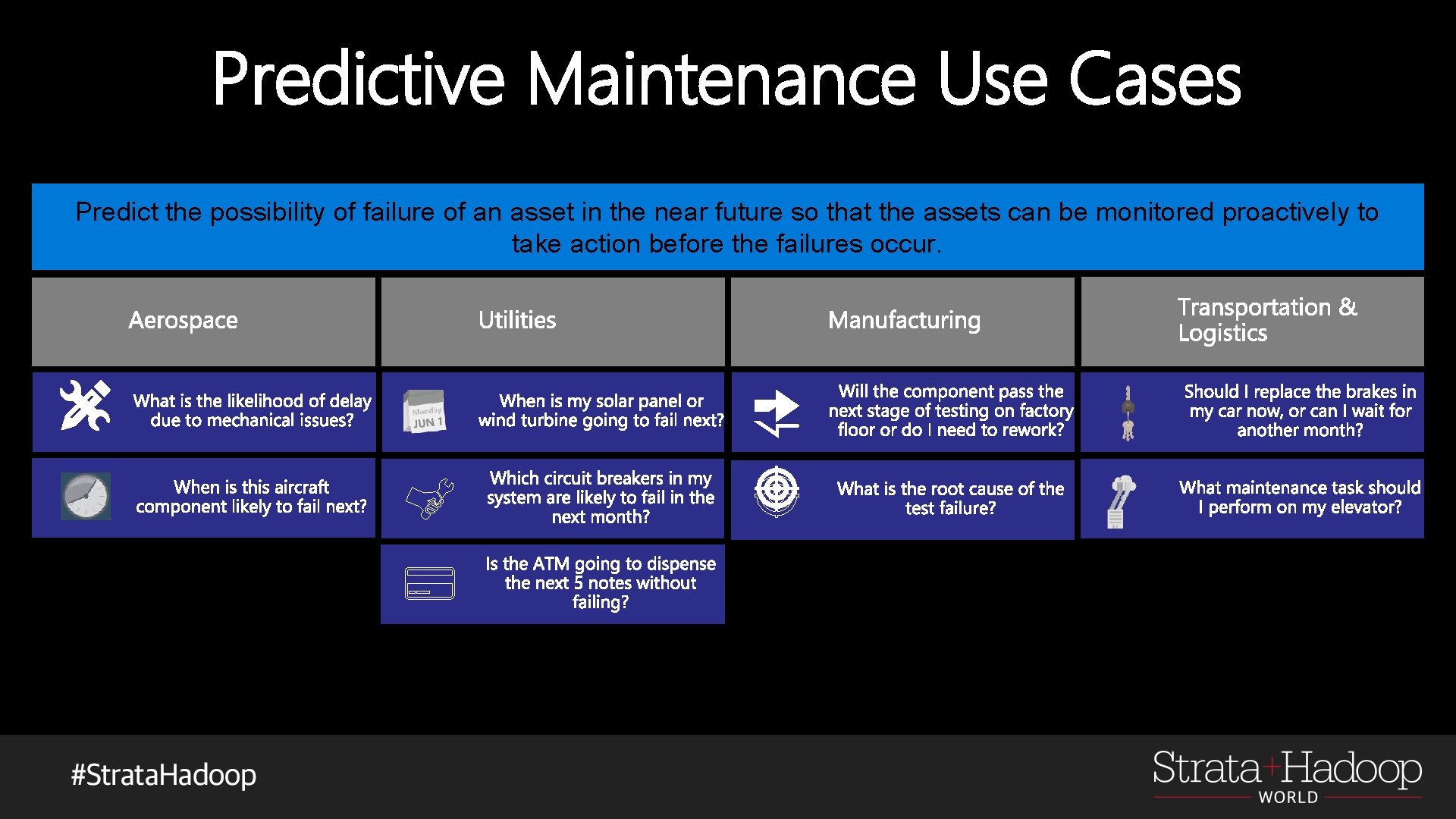

Predictive Maintenance Use Cases
Predict the possibility of failure of an asset in the near future, so that assets can be monitored proactively and action taken before failures occur.

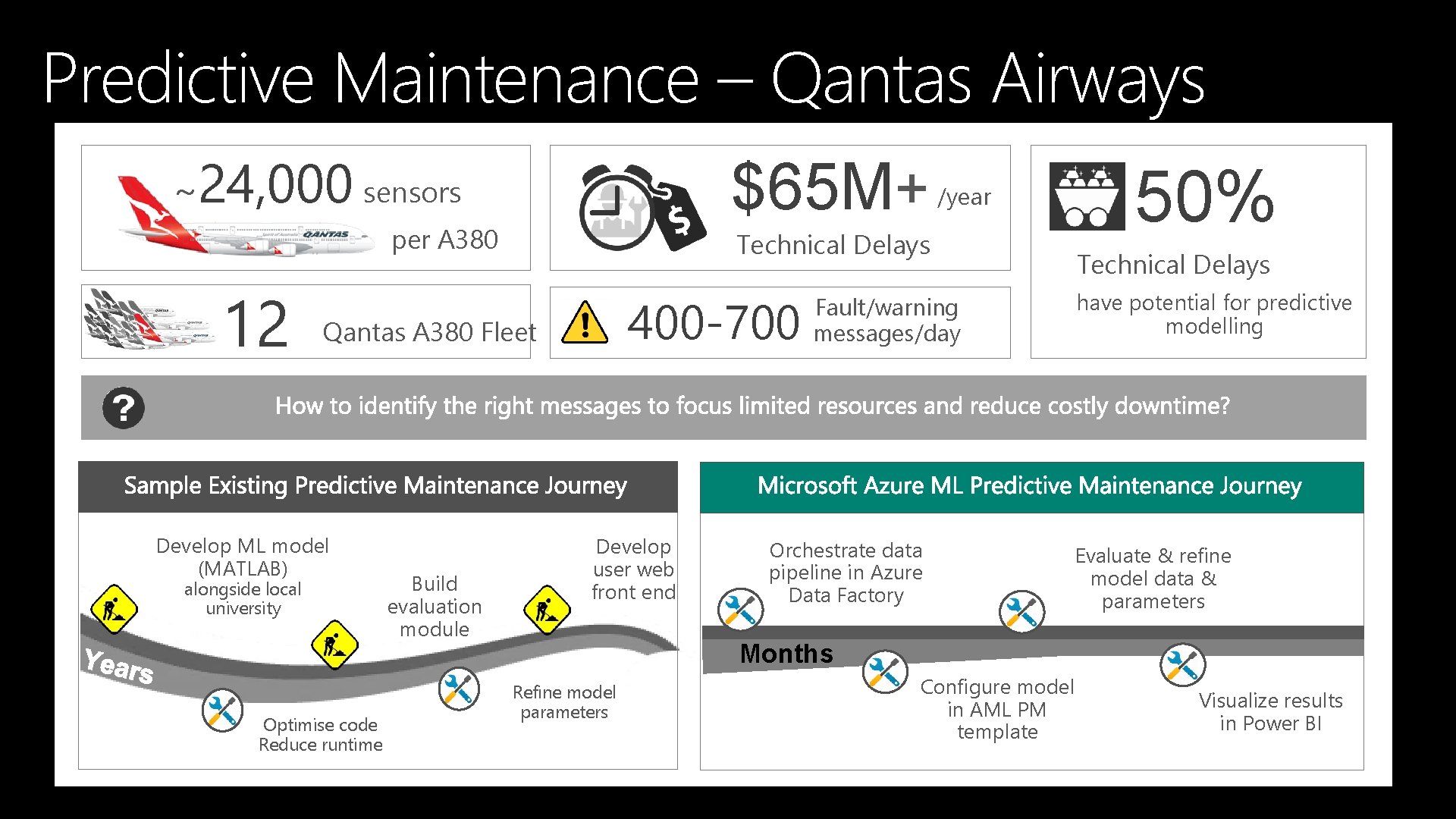

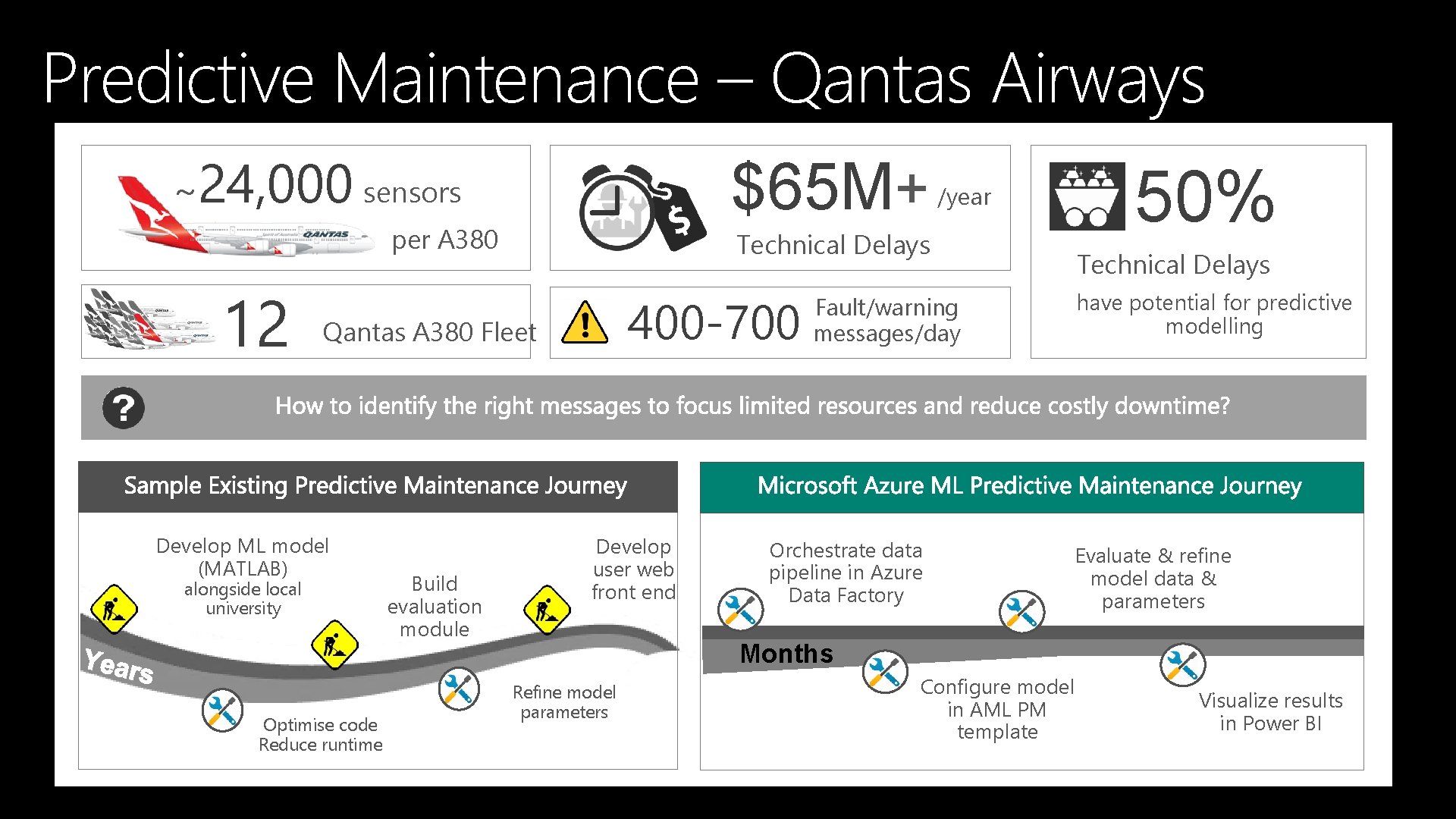

Predictive Maintenance – Qantas Airways
§ ~24,000 sensors per A380
§ 12 aircraft in the Qantas A380 fleet
§ $65M+ in technical delays /year
§ 400-700 fault/warning messages/day
§ 50% of technical delays have potential for predictive modelling

Project activities (timeline in months):
- Develop ML model (MATLAB) alongside local university
- Optimise code, reduce runtime
- Build evaluation module
- Develop user web front end
- Orchestrate data pipeline in Azure Data Factory
- Evaluate & refine model data & parameters
- Refine model parameters
- Configure model in AML PM template
- Visualize results in Power BI

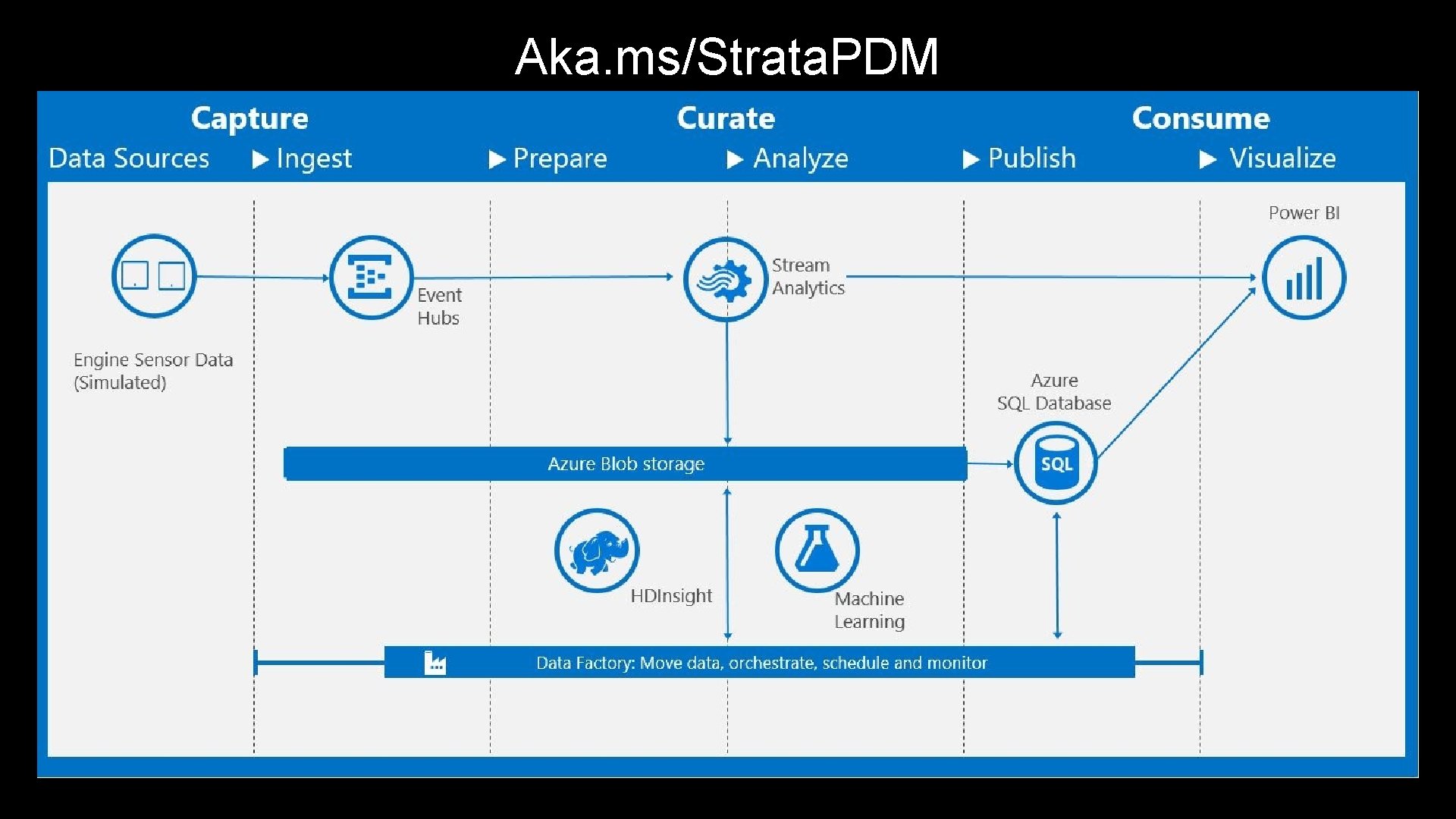

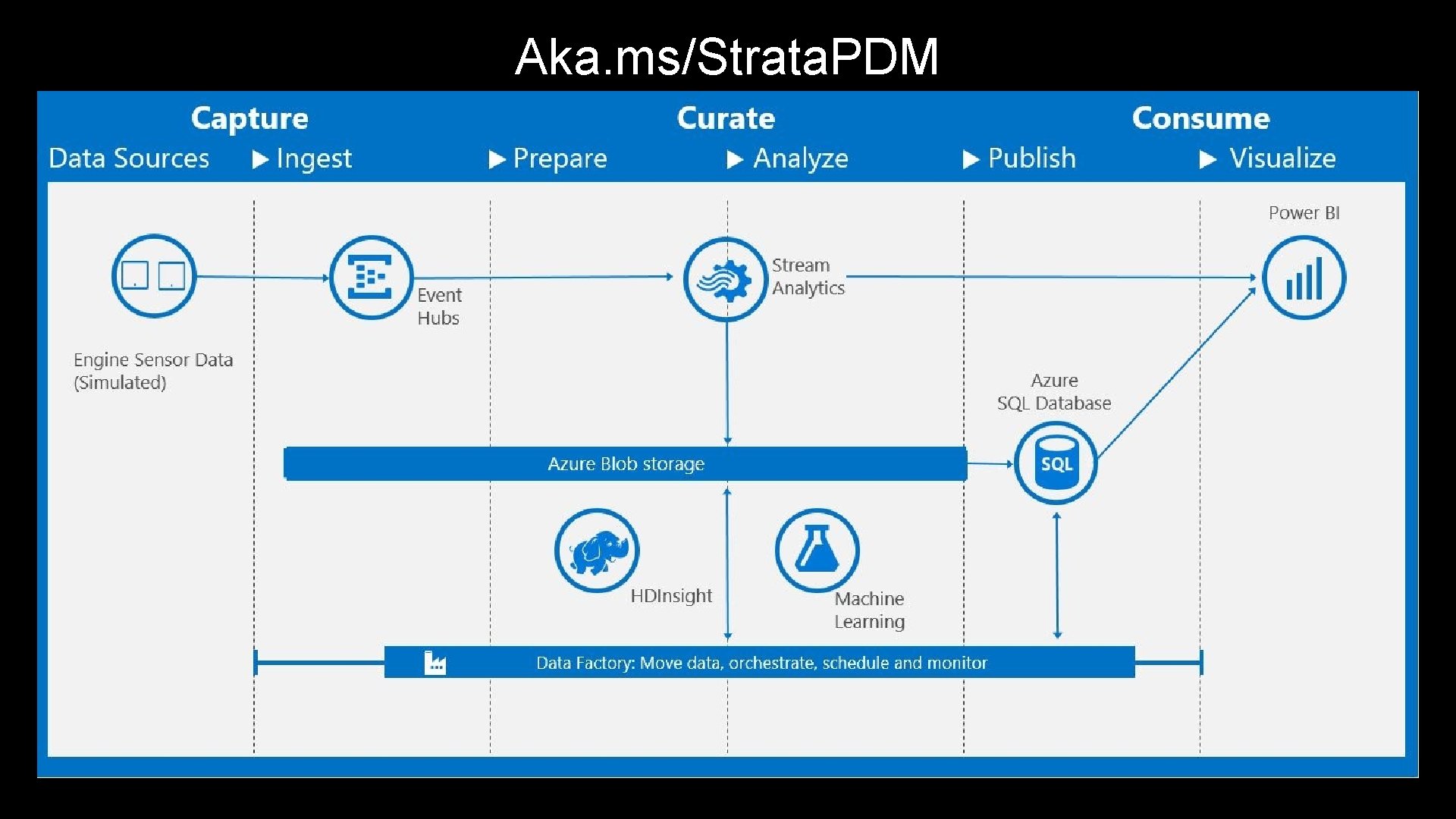

aka.ms/StrataPDM

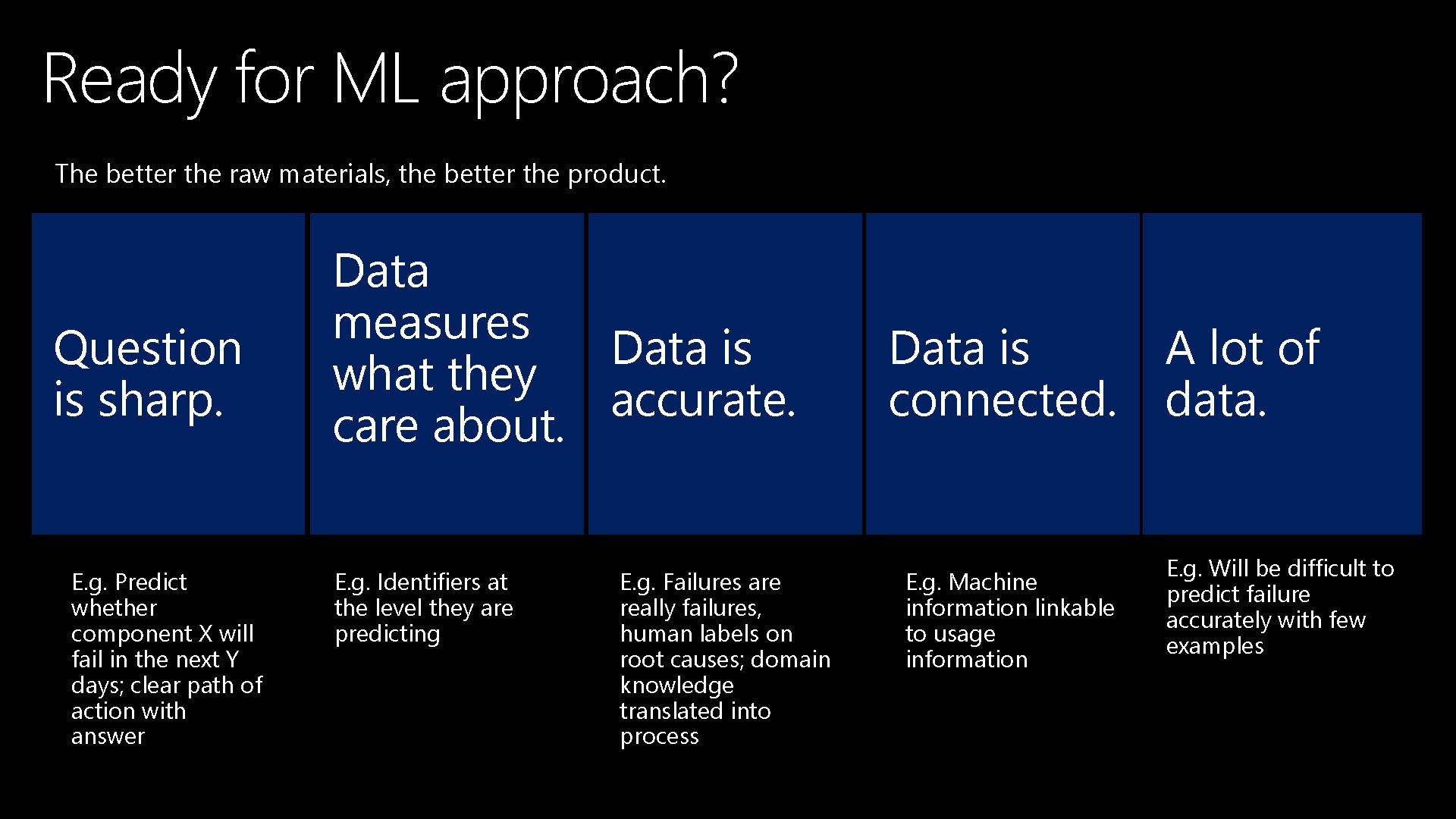

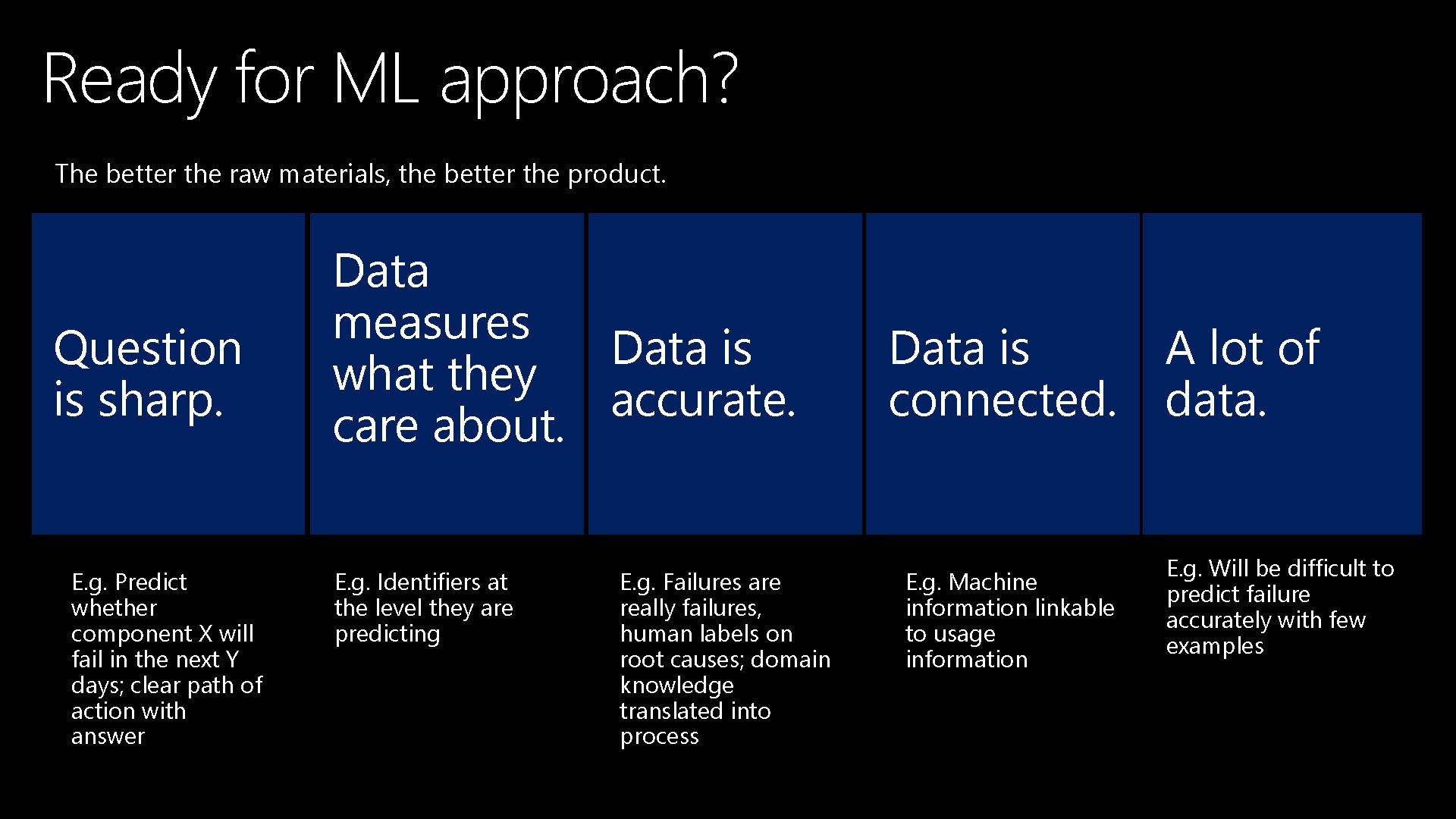

Ready for an ML approach? The better the raw materials, the better the product.
§ Question is sharp. E.g. predict whether component X will fail in the next Y days; clear path of action with the answer.
§ Data measures what they care about. E.g. identifiers at the level they are predicting.
§ Data is accurate. E.g. failures are really failures, human labels on root causes; domain knowledge translated into process.
§ Data is connected. E.g. machine information linkable to usage information.
§ A lot of data. E.g. it will be difficult to predict failure accurately with few examples.

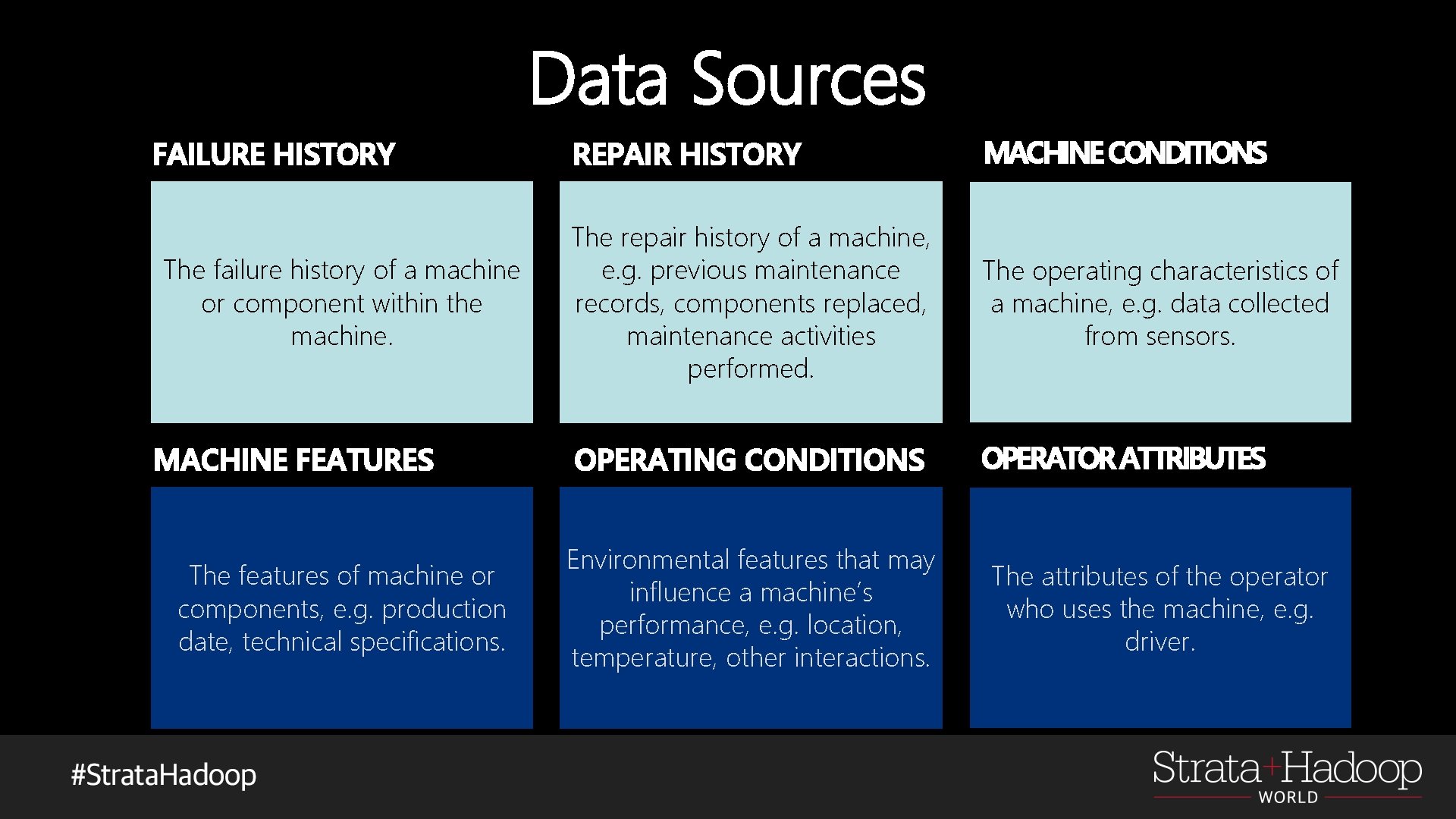

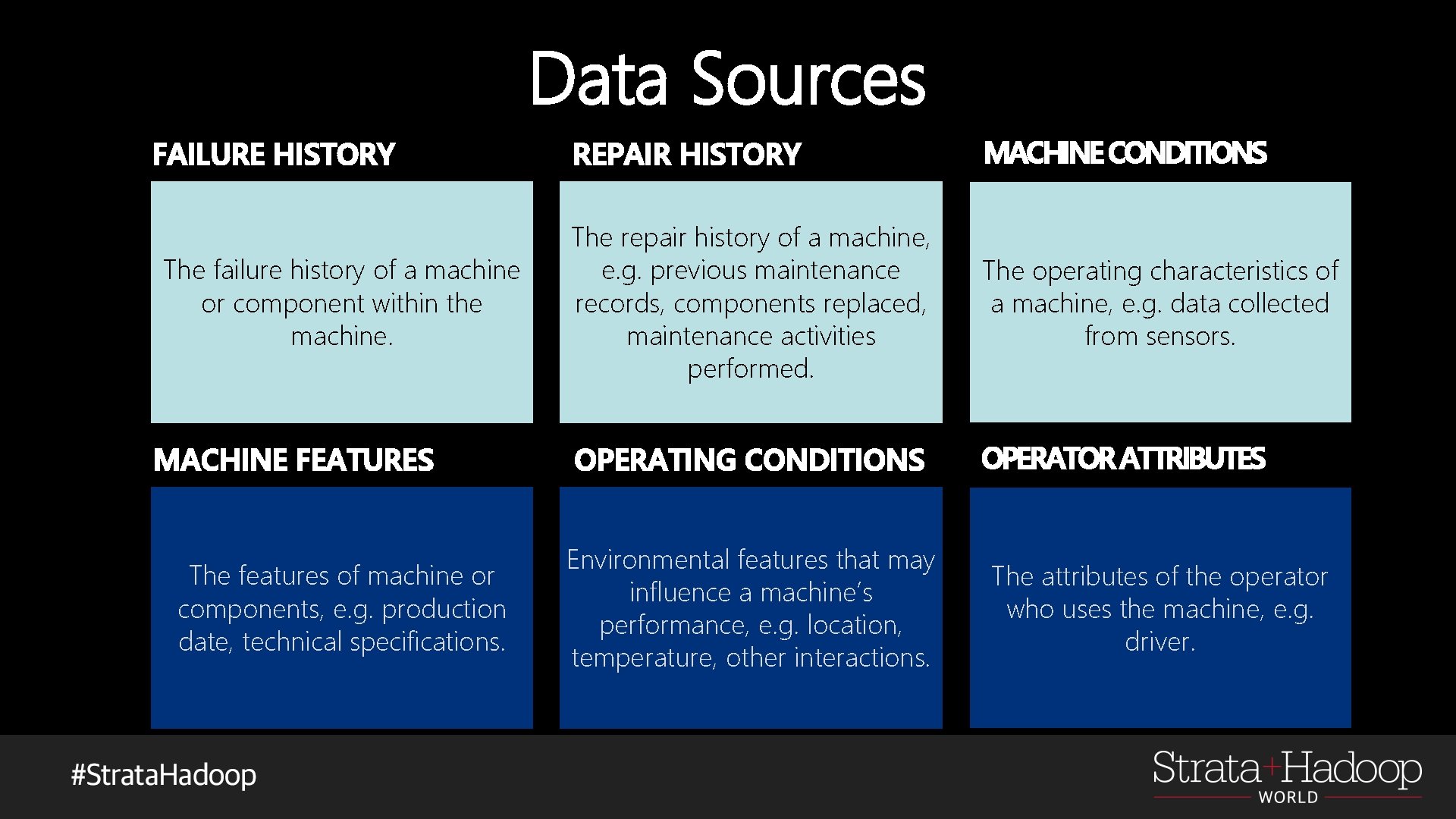

Data Sources
§ FAILURE HISTORY: the failure history of a machine or component within the machine.
§ MACHINE FEATURES: the features of a machine or its components, e.g. production date, technical specifications.
§ REPAIR HISTORY: the repair history of a machine, e.g. previous maintenance records, components replaced, maintenance activities performed.
§ MACHINE CONDITIONS: the operating characteristics of a machine, e.g. data collected from sensors.
§ OPERATING CONDITIONS: environmental features that may influence a machine's performance, e.g. location, temperature, other interactions.
§ OPERATOR ATTRIBUTES: the attributes of the operator who uses the machine, e.g. driver.

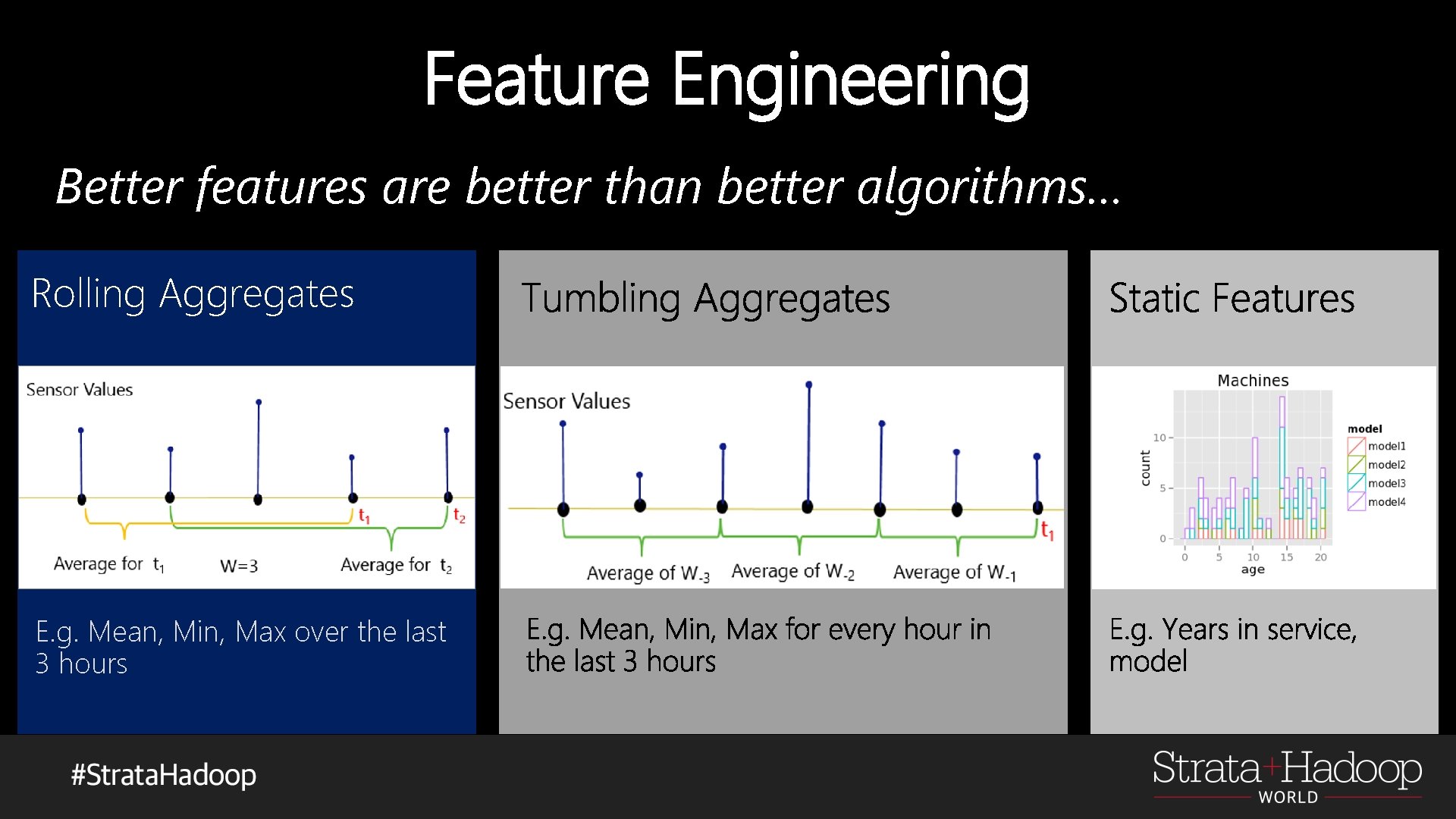

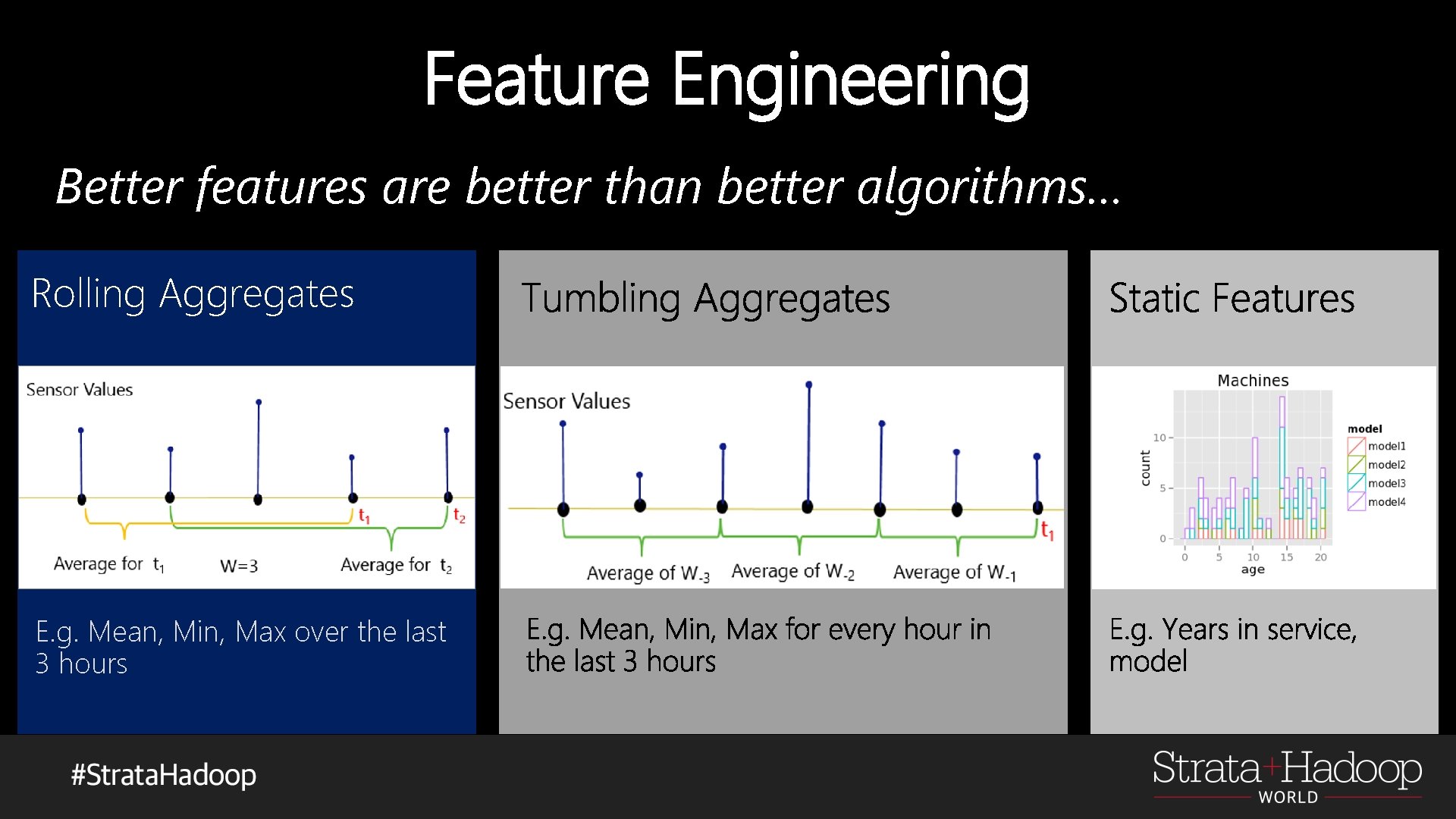

Feature Engineering
Better features are better than better algorithms…
§ Rolling aggregates, e.g. mean, min, max over the last 3 hours
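A minimal sketch of 3-hour rolling aggregates in plain Python, assuming sensor readings arrive as time-sorted `(timestamp, value)` pairs. The helper name `rolling_aggregates` is hypothetical, and in practice a dataframe library's rolling-window functions would usually do this job.

```python
from collections import deque
from datetime import datetime, timedelta


def rolling_aggregates(readings, window=timedelta(hours=3)):
    """For time-sorted (timestamp, value) readings, return the mean, min and
    max of all readings in the trailing window (t - window, t] at each step."""
    buf = deque()
    features = []
    for ts, value in readings:
        buf.append((ts, value))
        # Drop readings that are older than the trailing window.
        while buf[0][0] <= ts - window:
            buf.popleft()
        values = [v for _, v in buf]
        features.append({
            "timestamp": ts,
            "mean": sum(values) / len(values),
            "min": min(values),
            "max": max(values),
        })
    return features


# Example: hourly readings; the 3-hour window at hour 3 covers hours 1 to 3,
# so the last row has mean 30.0, min 20.0 and max 40.0.
base = datetime(2017, 1, 1)
readings = [(base + timedelta(hours=h), v) for h, v in enumerate([10.0, 20.0, 30.0, 40.0])]
print(rolling_aggregates(readings)[-1])
```

The deque keeps only the readings inside the window, so each new reading costs one append plus however many evictions it triggers.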

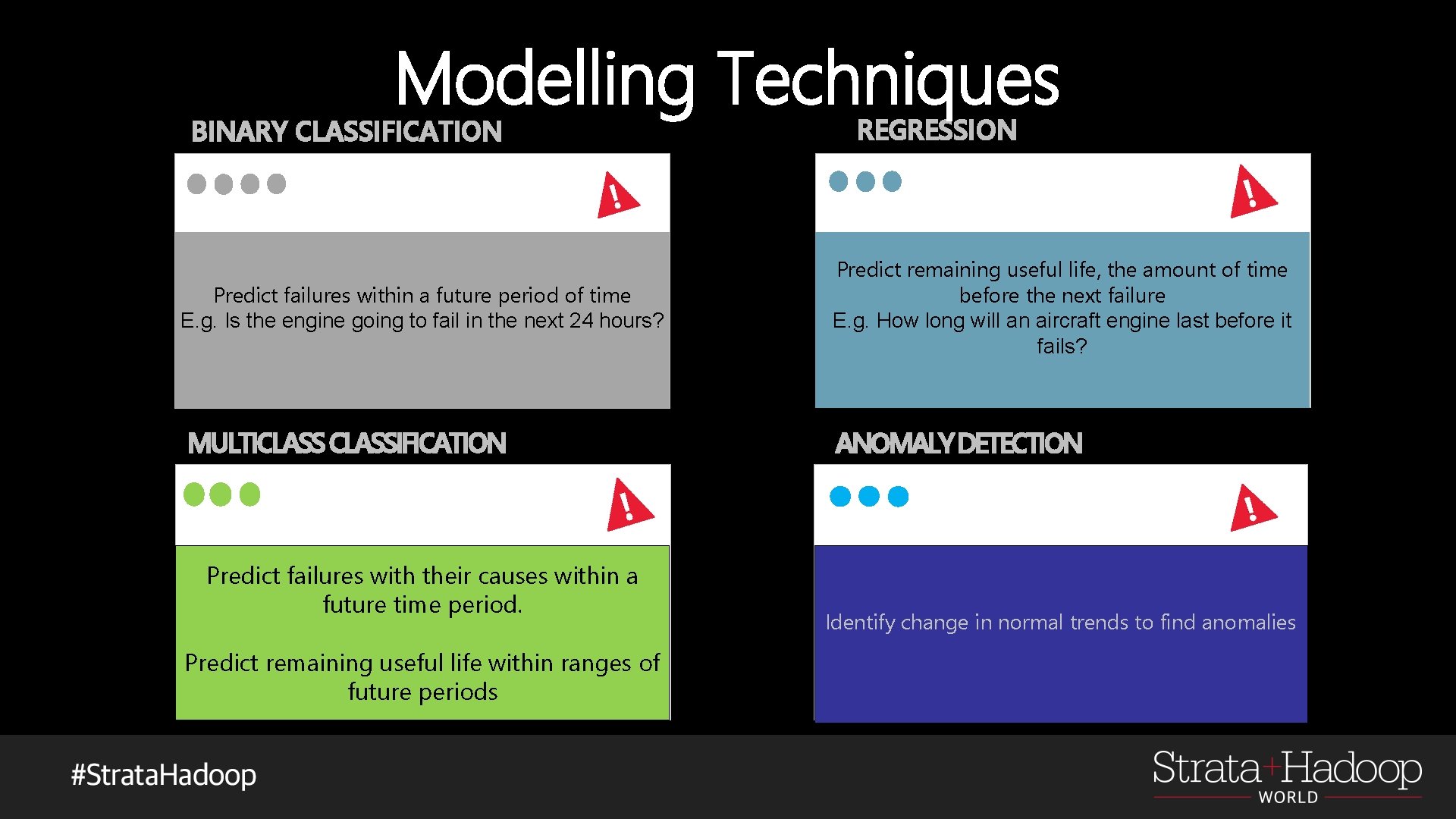

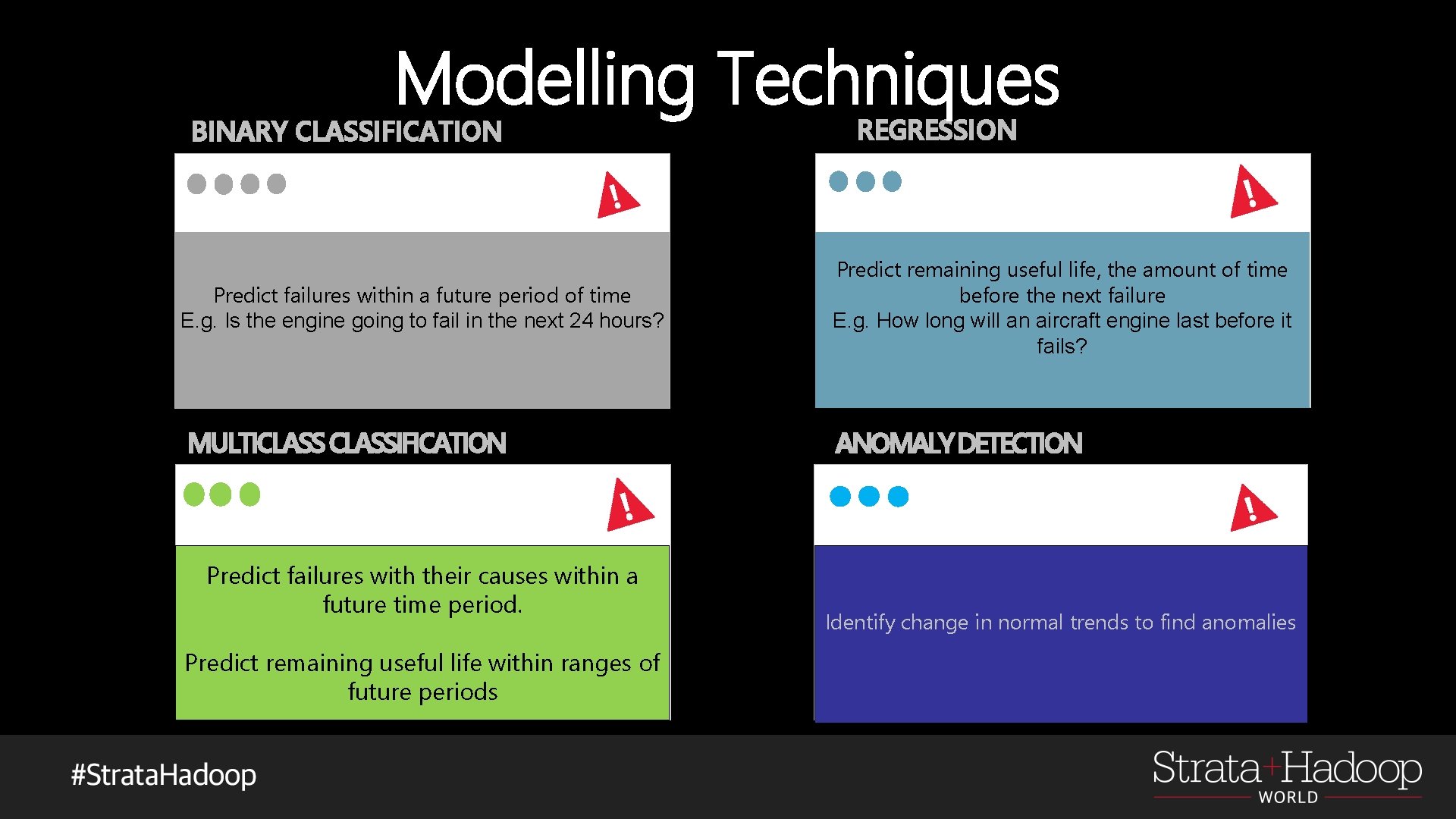

Modelling Techniques
§ BINARY CLASSIFICATION: predict failures within a future period of time. E.g. is the engine going to fail in the next 24 hours?
§ MULTI-CLASS CLASSIFICATION: predict failures with their causes within a future time period, or predict remaining useful life within ranges of future periods.
§ REGRESSION: predict remaining useful life, the amount of time before the next failure. E.g. how long will an aircraft engine last before it fails?
§ ANOMALY DETECTION: identify changes in normal trends to find anomalies.
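A sketch of how the binary and regression targets might be derived from failure history, assuming time-stamped observations and a list of failure times. The helper `make_labels` is hypothetical, and the 24-hour default horizon simply mirrors the example question.

```python
from datetime import datetime, timedelta


def make_labels(timestamps, failure_times, horizon=timedelta(hours=24)):
    """Return (timestamp, remaining_useful_life, label) for each observation.

    remaining_useful_life is the time until the next recorded failure at or
    after the observation (None if there is none); label is 1 when that
    failure falls within `horizon`, else 0."""
    failures = sorted(failure_times)
    rows = []
    for ts in timestamps:
        upcoming = [f for f in failures if f >= ts]
        rul = upcoming[0] - ts if upcoming else None   # regression target
        label = 1 if rul is not None and rul <= horizon else 0   # binary target
        rows.append((ts, rul, label))
    return rows
```

With a single failure at hour 36, observations at hours 0, 12, 30 and 40 get labels 0, 1, 1, 0: the last one has no later failure on record, so its remaining useful life is unknown rather than zero.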

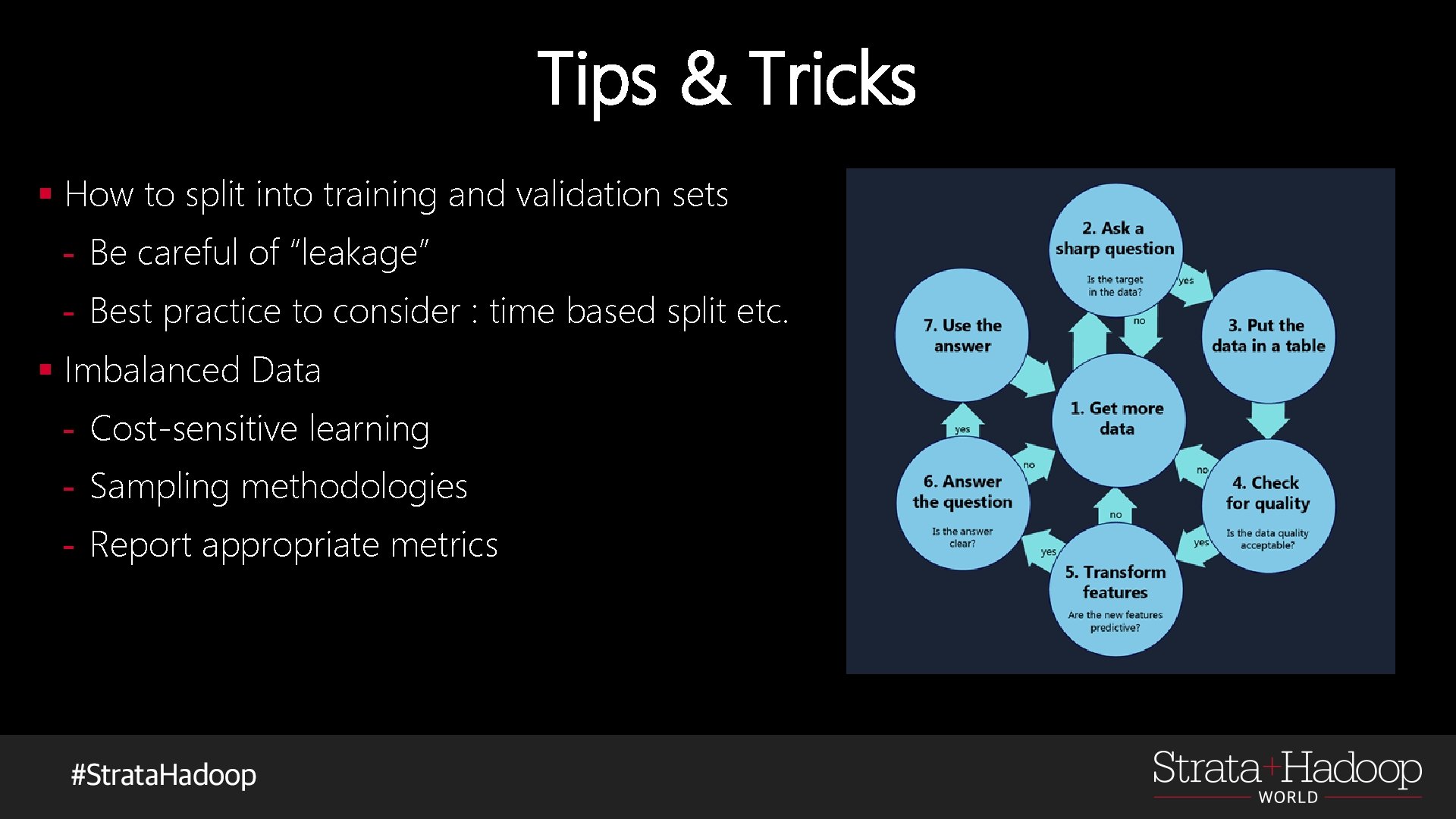

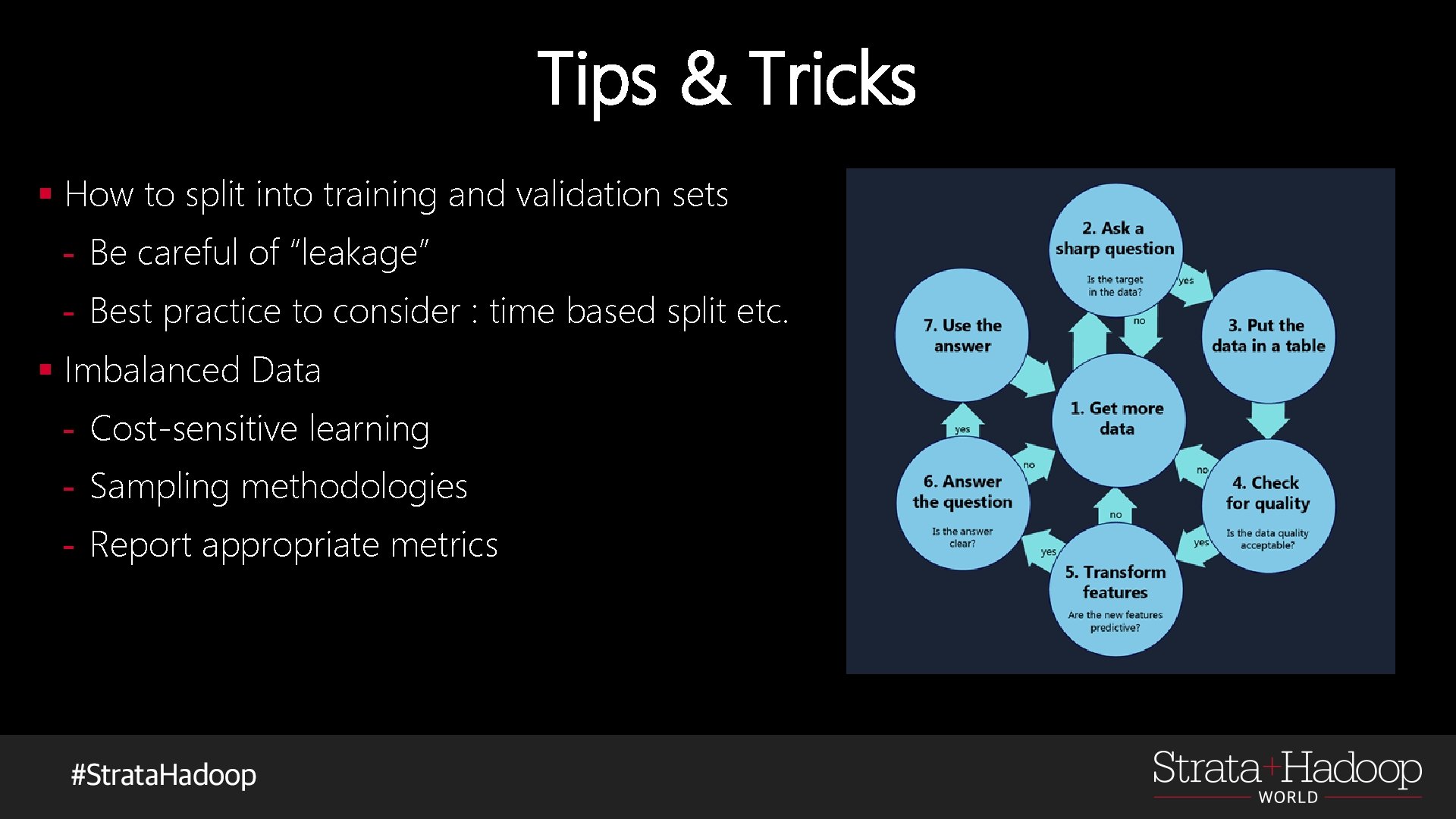

Tips & Tricks
§ How to split into training and validation sets
- Be careful of “leakage”
- Best practice to consider: time-based split, etc.
§ Imbalanced data
- Cost-sensitive learning
- Sampling methodologies
- Report appropriate metrics
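A minimal sketch of a time-based split, assuming records are `(timestamp, features, label)` tuples. The optional `gap` parameter is an addition of this sketch, not something on the slide. It drops training rows close to the cutoff, because their look-ahead labels (e.g. "fails in the next 24 hours") can otherwise peek into the validation period.

```python
from datetime import datetime, timedelta


def time_based_split(records, cutoff, gap=timedelta(0)):
    """Train on records strictly before `cutoff - gap`, validate on records at
    or after `cutoff`, so no future observation leaks into training."""
    train = [r for r in records if r[0] < cutoff - gap]
    valid = [r for r in records if r[0] >= cutoff]
    return train, valid
```

Setting `gap` to the labeling horizon (e.g. one day for a 24-hour label) is one way to keep training labels from depending on events in the validation window.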

How do you know if the model is good? 99% accuracy? Is 0.2 recall good?

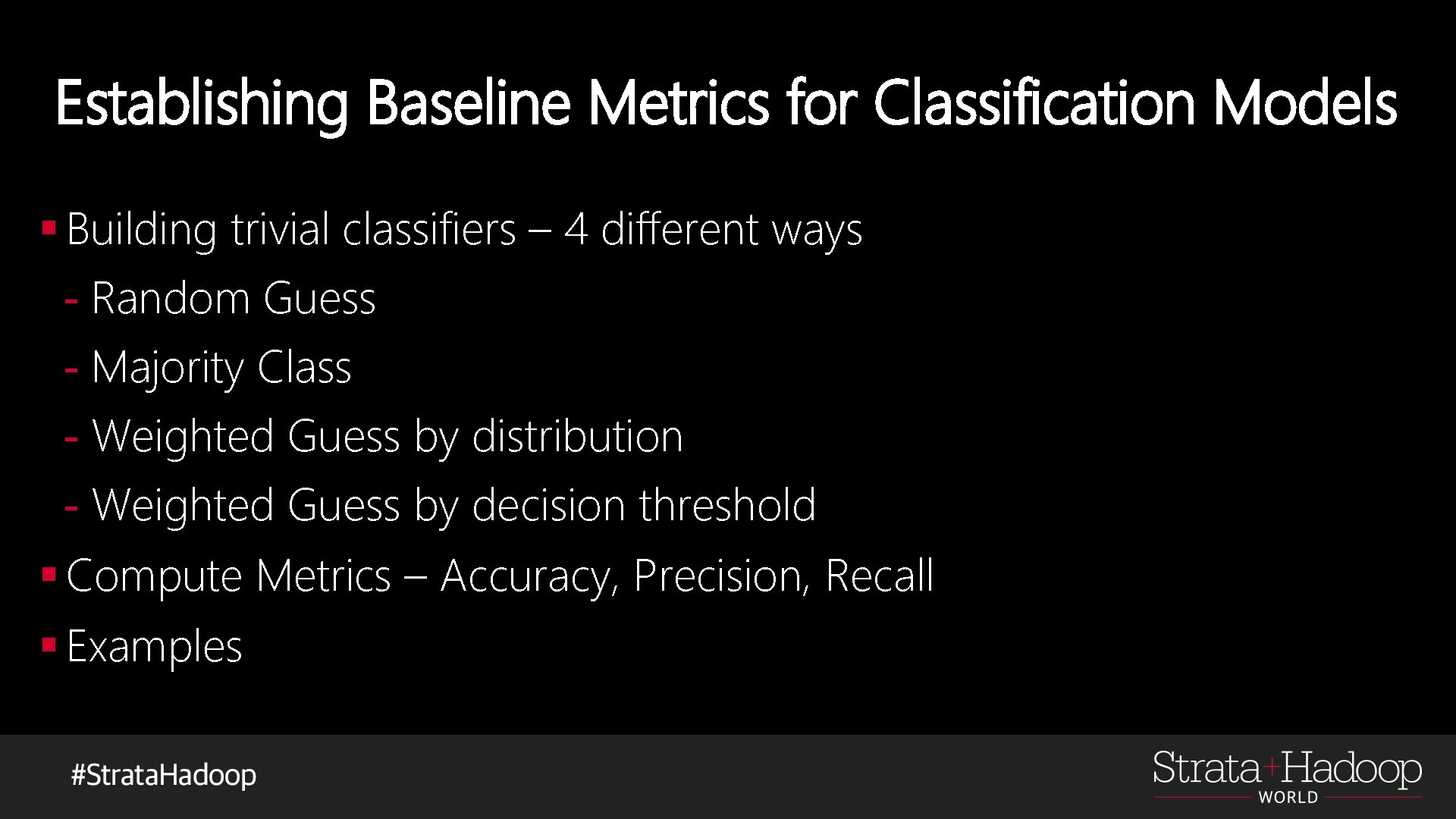

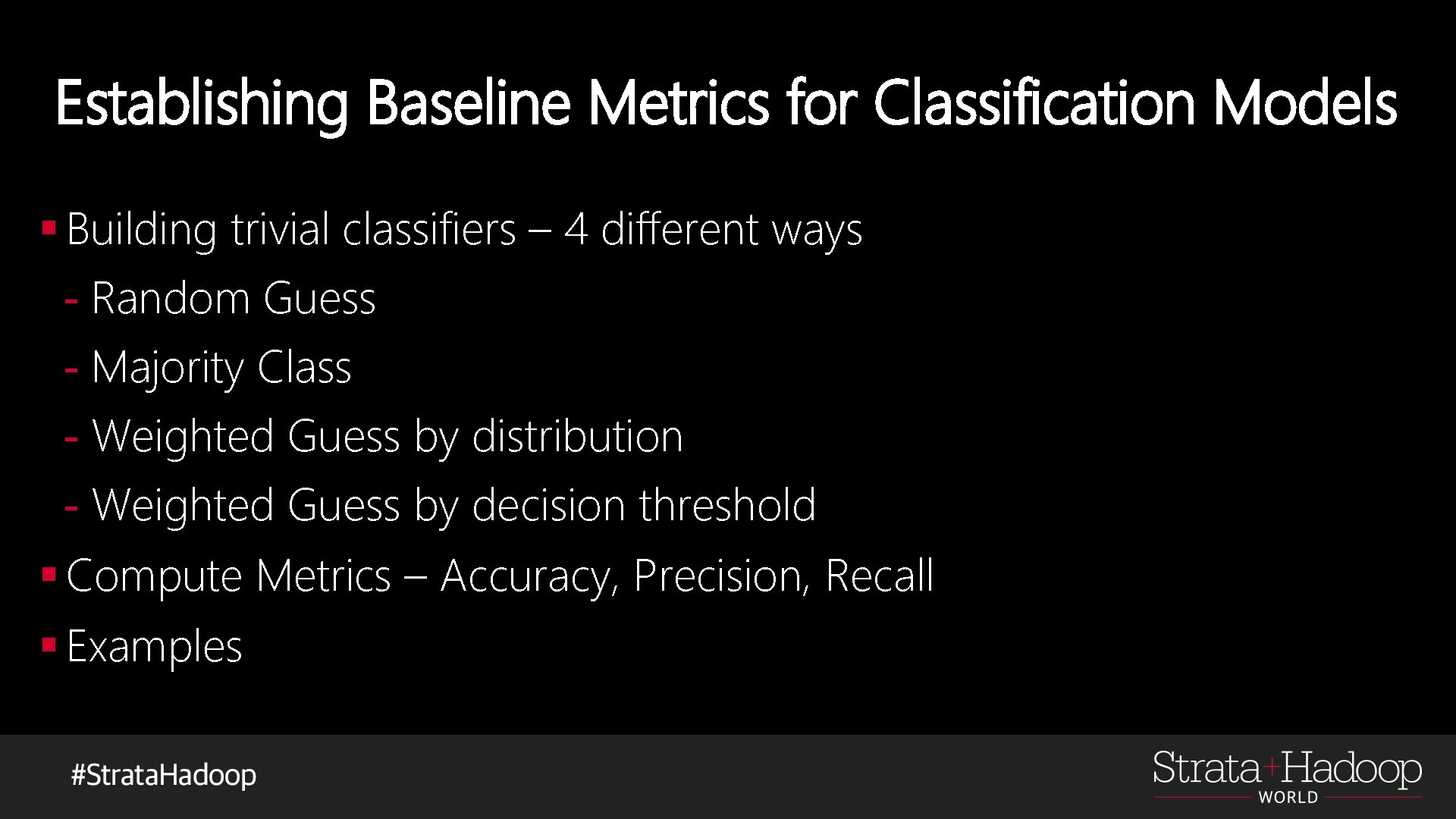

Establishing Baseline Metrics for Classification Models
§ Building trivial classifiers – 4 different ways
- Random Guess
- Majority Class
- Weighted Guess by distribution
- Weighted Guess by decision threshold
§ Compute metrics – Accuracy, Precision, Recall
§ Examples

Model Evaluation

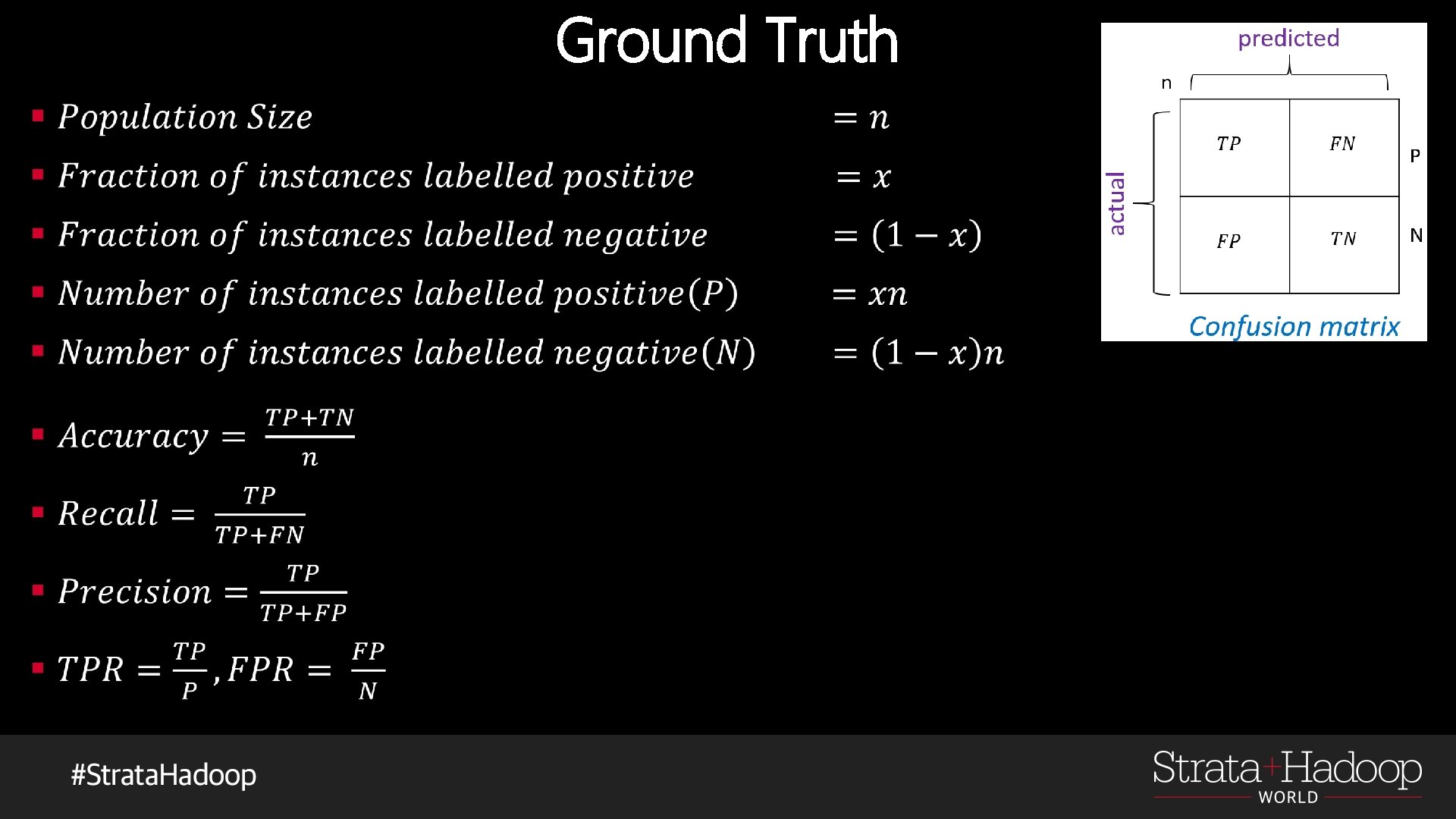

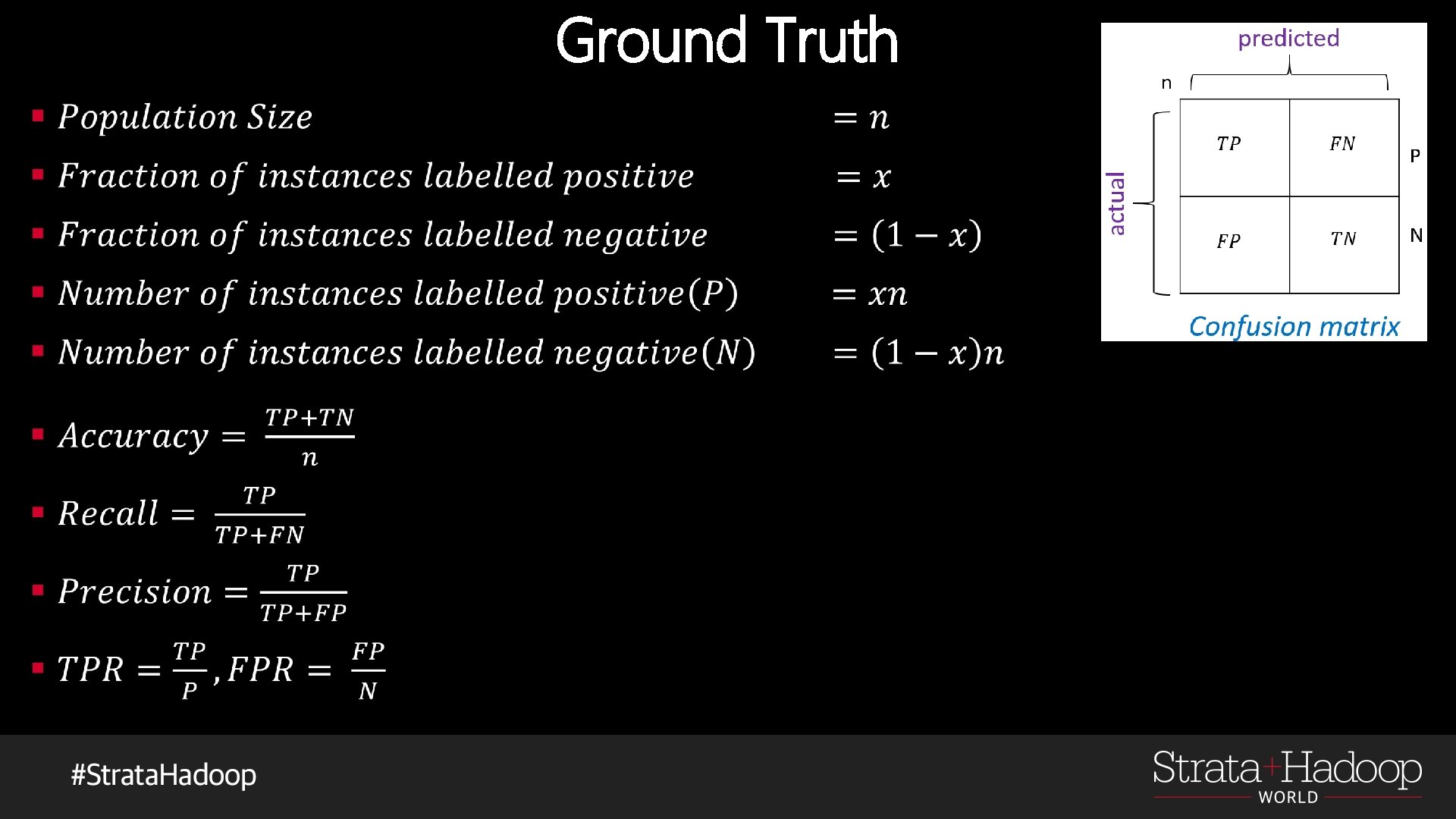

Ground Truth

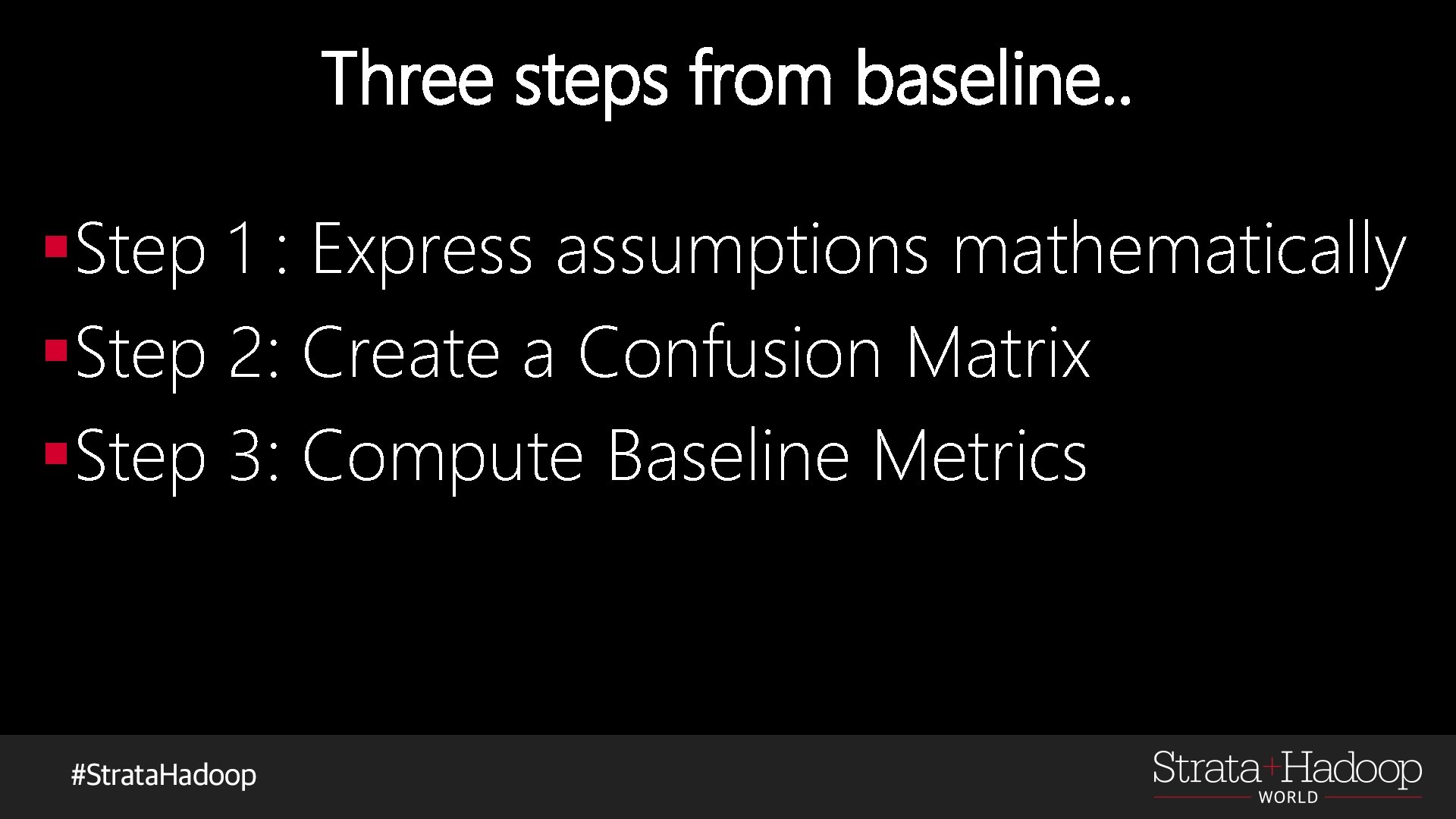

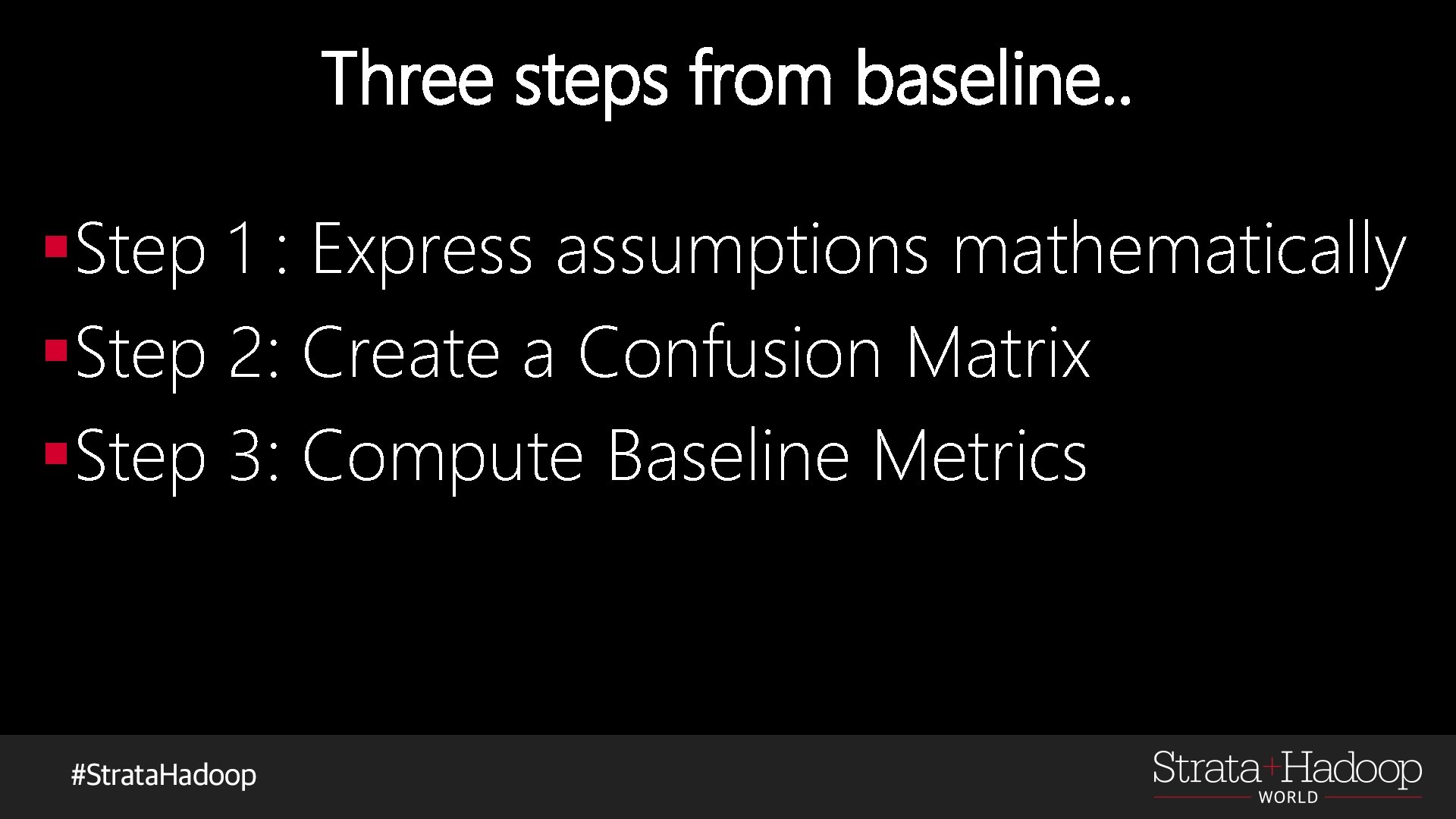

Three steps to a baseline
§ Step 1: Express assumptions mathematically
§ Step 2: Create a confusion matrix
§ Step 3: Compute baseline metrics
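The three steps can be sketched in Python, assuming each trivial classifier is described by two rates: the fraction of actual positives it predicts as positive (`tpr`) and the fraction of actual negatives it predicts as negative (`tnr`). The function names are hypothetical, and the sketch computes expected counts rather than counts from an actual random draw.

```python
def expected_confusion_matrix(P, N, tpr, tnr):
    """Step 2: expected confusion matrix of a trivial classifier that predicts
    a fraction `tpr` of the P actual positives as positive and a fraction
    `tnr` of the N actual negatives as negative (the Step 1 assumptions)."""
    tp = tpr * P
    fn = P - tp
    tn = tnr * N
    fp = N - tn
    return tp, fp, fn, tn


def baseline_metrics(tp, fp, fn, tn):
    """Step 3: accuracy, precision and recall from a confusion matrix.
    Precision (or recall) is returned as None when its denominator is zero."""
    n = tp + fp + fn + tn
    accuracy = (tp + tn) / n
    precision = tp / (tp + fp) if (tp + fp) > 0 else None
    recall = tp / (tp + fn) if (tp + fn) > 0 else None
    return accuracy, precision, recall
```

For example, with 10 failures among 1,000 observations, `baseline_metrics(*expected_confusion_matrix(10, 990, 0.0, 1.0))` (majority class) gives accuracy 0.99, undefined precision and recall 0, while `tpr = tnr = 0.5` (random guess) gives accuracy 0.5, precision 0.01 and recall 0.5.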

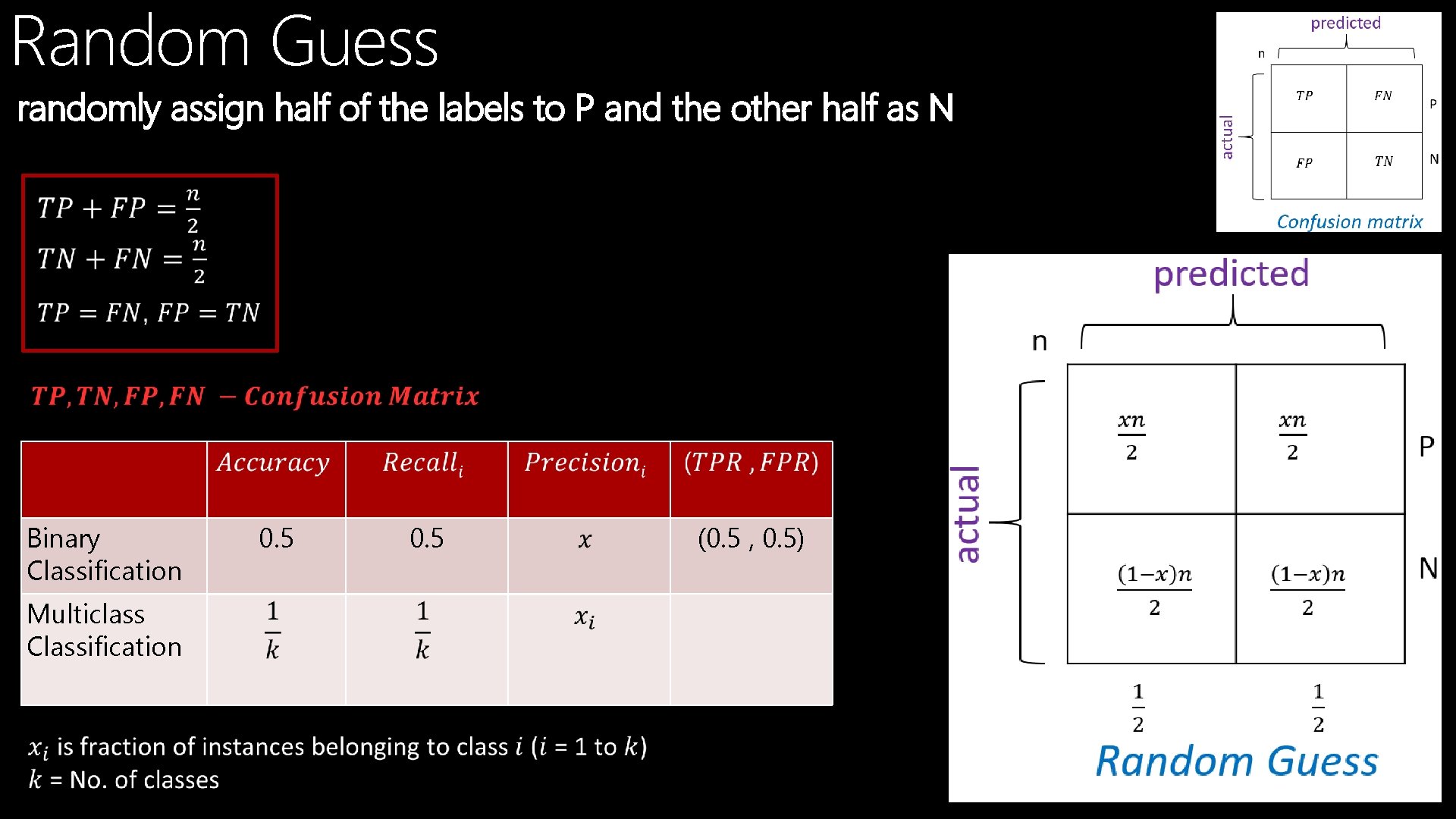

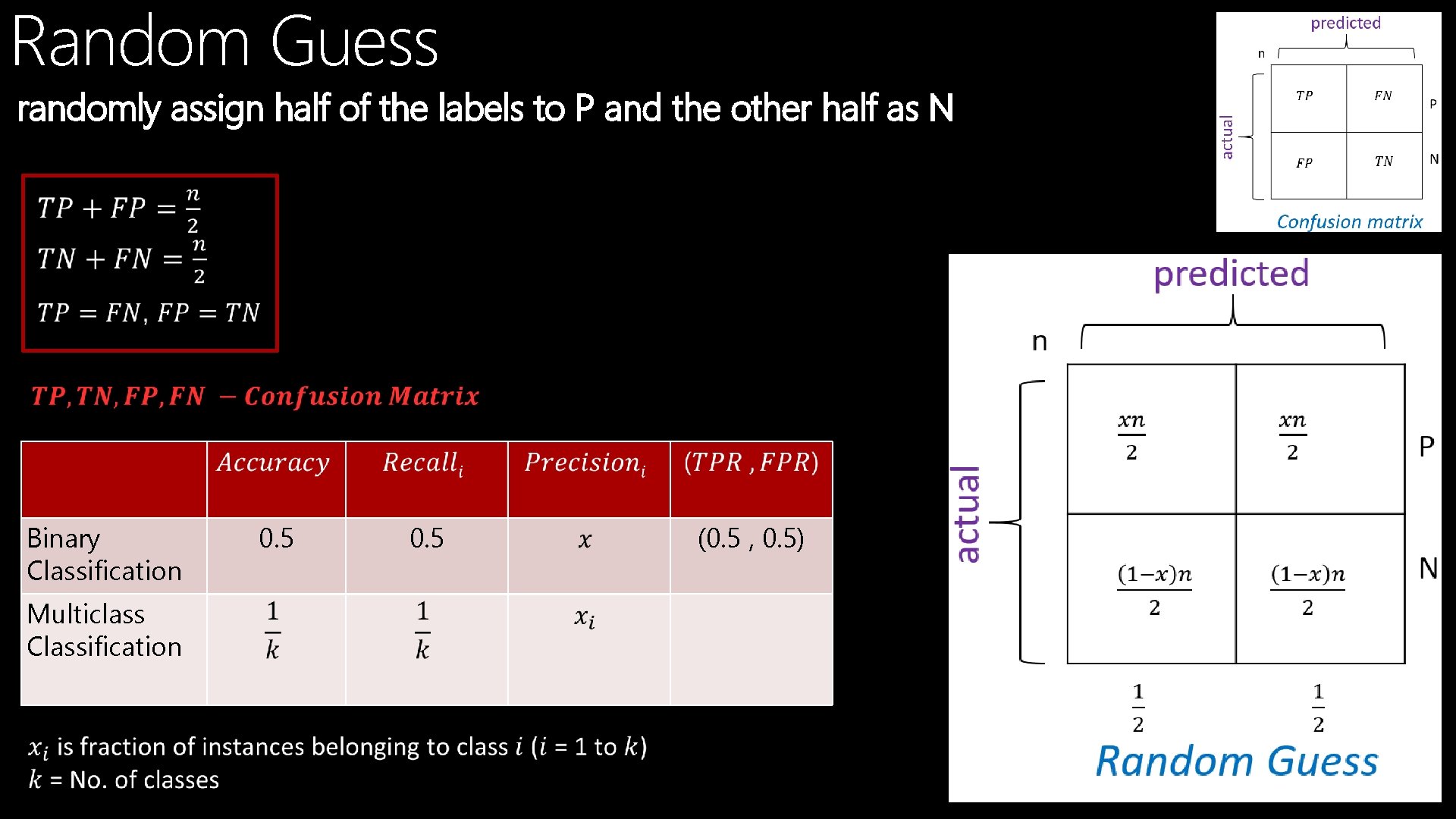

Random Guess: randomly assign half of the labels as positive and the other half as negative.
Binary classification: 0.5
Multiclass classification: (0.5, 0.5)
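For the binary case, the expected confusion-matrix counts and metrics of the random guess follow directly. Here P and N denote the numbers of actual positives and negatives and n = P + N; this notation is assumed for the derivation.

$$
\begin{aligned}
TP &= \tfrac{1}{2}P, \quad FN = \tfrac{1}{2}P, \quad FP = \tfrac{1}{2}N, \quad TN = \tfrac{1}{2}N \\
\text{Accuracy} &= \frac{TP + TN}{n} = \frac{\tfrac{1}{2}(P + N)}{n} = 0.5 \\
\text{Precision} &= \frac{TP}{TP + FP} = \frac{\tfrac{1}{2}P}{\tfrac{1}{2}(P + N)} = \frac{P}{n} \\
\text{Recall} &= \frac{TP}{TP + FN} = \frac{\tfrac{1}{2}P}{P} = 0.5
\end{aligned}
$$

So the random guess's precision is just the share of positives in the data, which is tiny when failures are rare.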

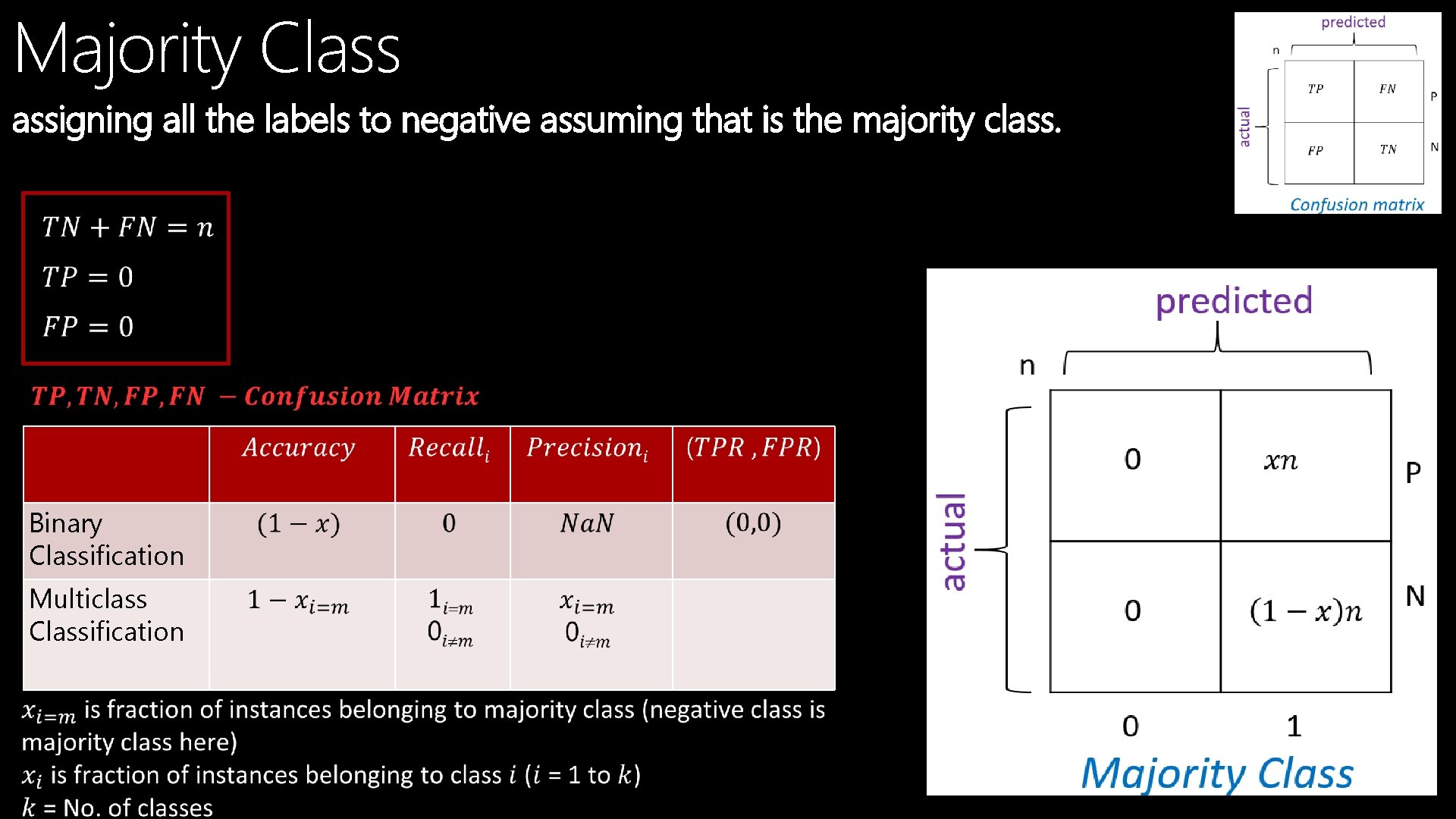

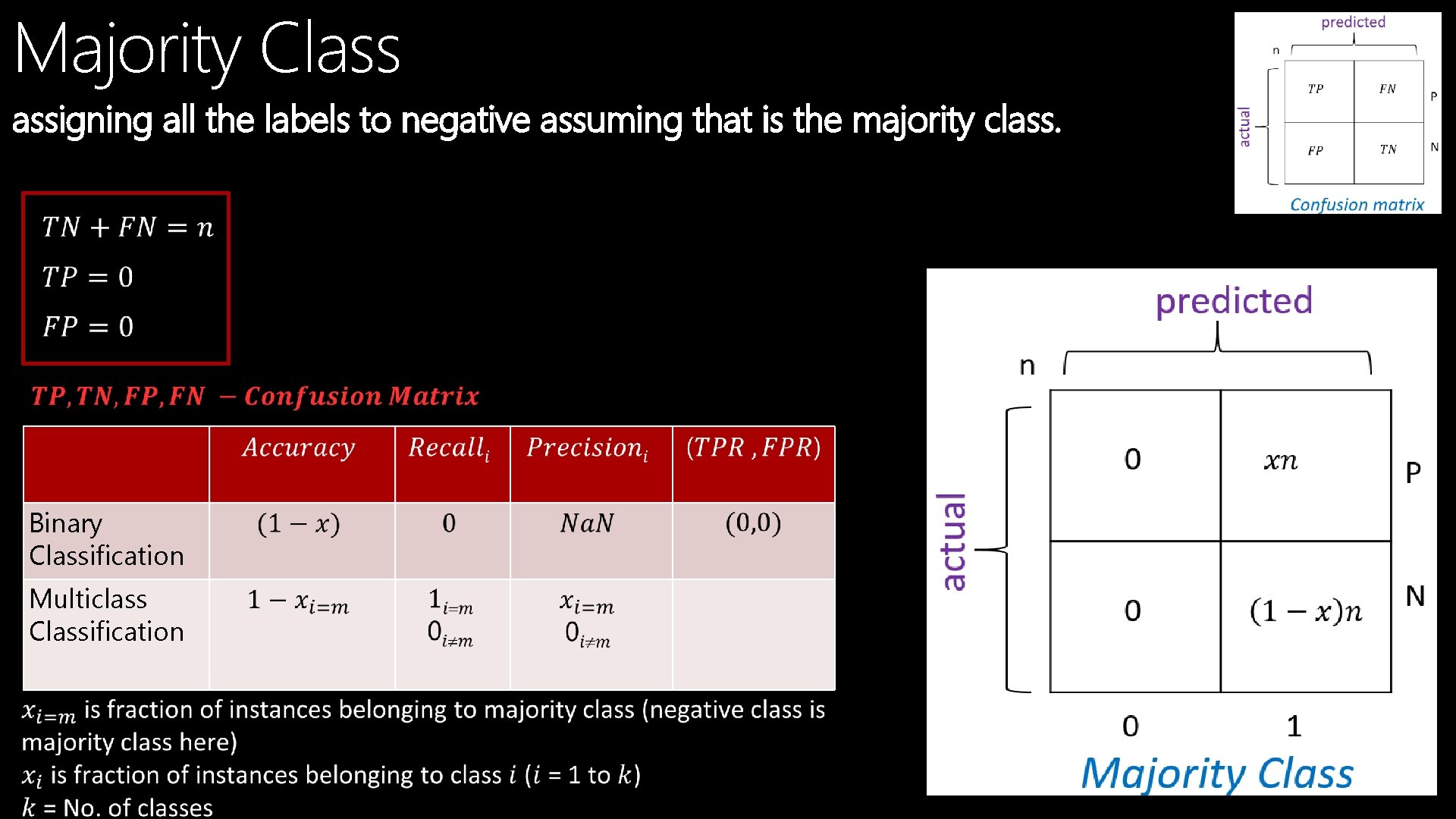

Majority Class: assign all labels as negative, assuming negative is the majority class.
Binary Classification / Multiclass Classification
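With P actual positives, N actual negatives and n = P + N (notation assumed here), the binary majority-class baseline predicts nothing as positive:

$$
\begin{aligned}
TP &= 0, \quad FP = 0, \quad FN = P, \quad TN = N \\
\text{Accuracy} &= \frac{TP + TN}{n} = \frac{N}{n} \\
\text{Recall} &= \frac{TP}{TP + FN} = \frac{0}{P} = 0 \\
\text{Precision} &= \frac{TP}{TP + FP} = \frac{0}{0} \quad \text{(undefined: nothing is predicted positive)}
\end{aligned}
$$

If 1% of observations are failures, then N/n = 0.99: a model that never predicts a failure already scores 99% accuracy while catching none of them.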

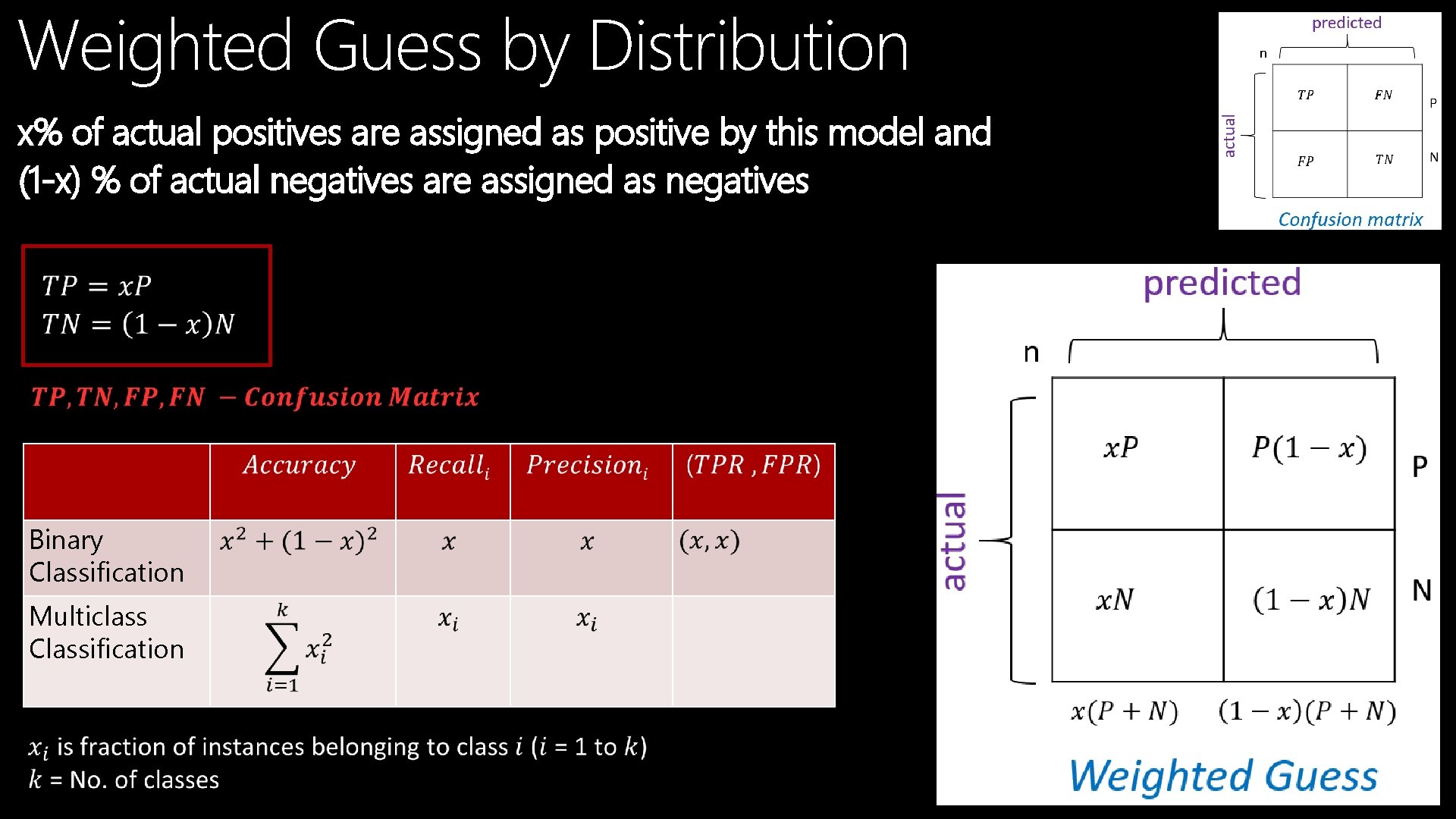

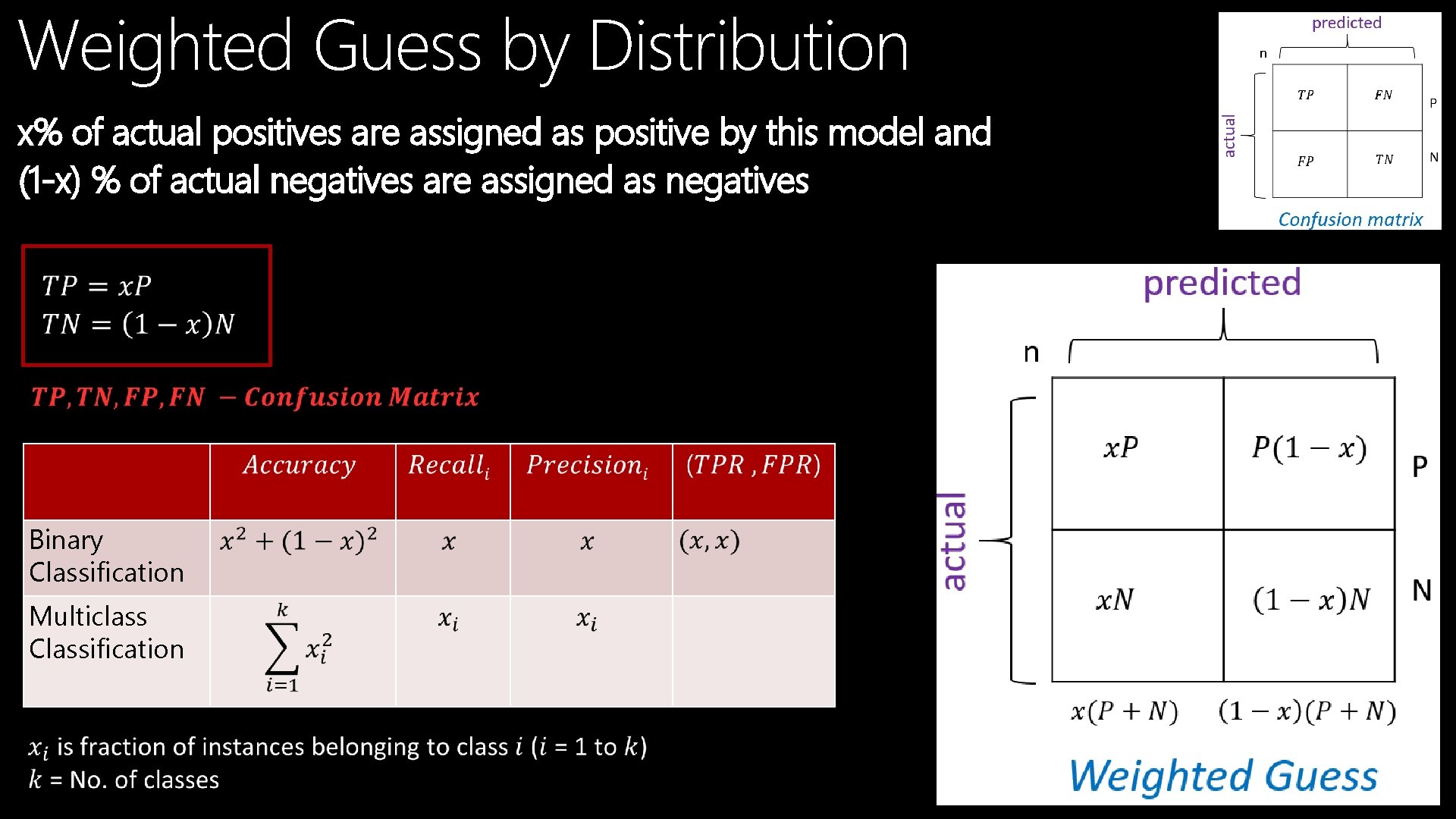

Weighted Guess by Distribution: a fraction x of actual positives are assigned as positive by this model, and a fraction (1 - x) of actual negatives are assigned as negative, where x is the proportion of positives in the data.
Binary Classification / Multiclass Classification
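A binary-case derivation, assuming x = P/n, where P and N are the numbers of actual positives and negatives and n = P + N (so N/n = 1 - x):

$$
\begin{aligned}
TP &= xP, \quad FN = (1 - x)P, \quad TN = (1 - x)N, \quad FP = xN \\
\text{Accuracy} &= \frac{xP + (1 - x)N}{n} = x^2 + (1 - x)^2 \\
\text{Precision} &= \frac{TP}{TP + FP} = \frac{xP}{xP + xN} = \frac{P}{n} = x \\
\text{Recall} &= \frac{TP}{TP + FN} = \frac{xP}{P} = x
\end{aligned}
$$

For example, with x = 0.01 the accuracy is 0.0001 + 0.9801 = 0.9802, yet precision and recall are both only 0.01.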

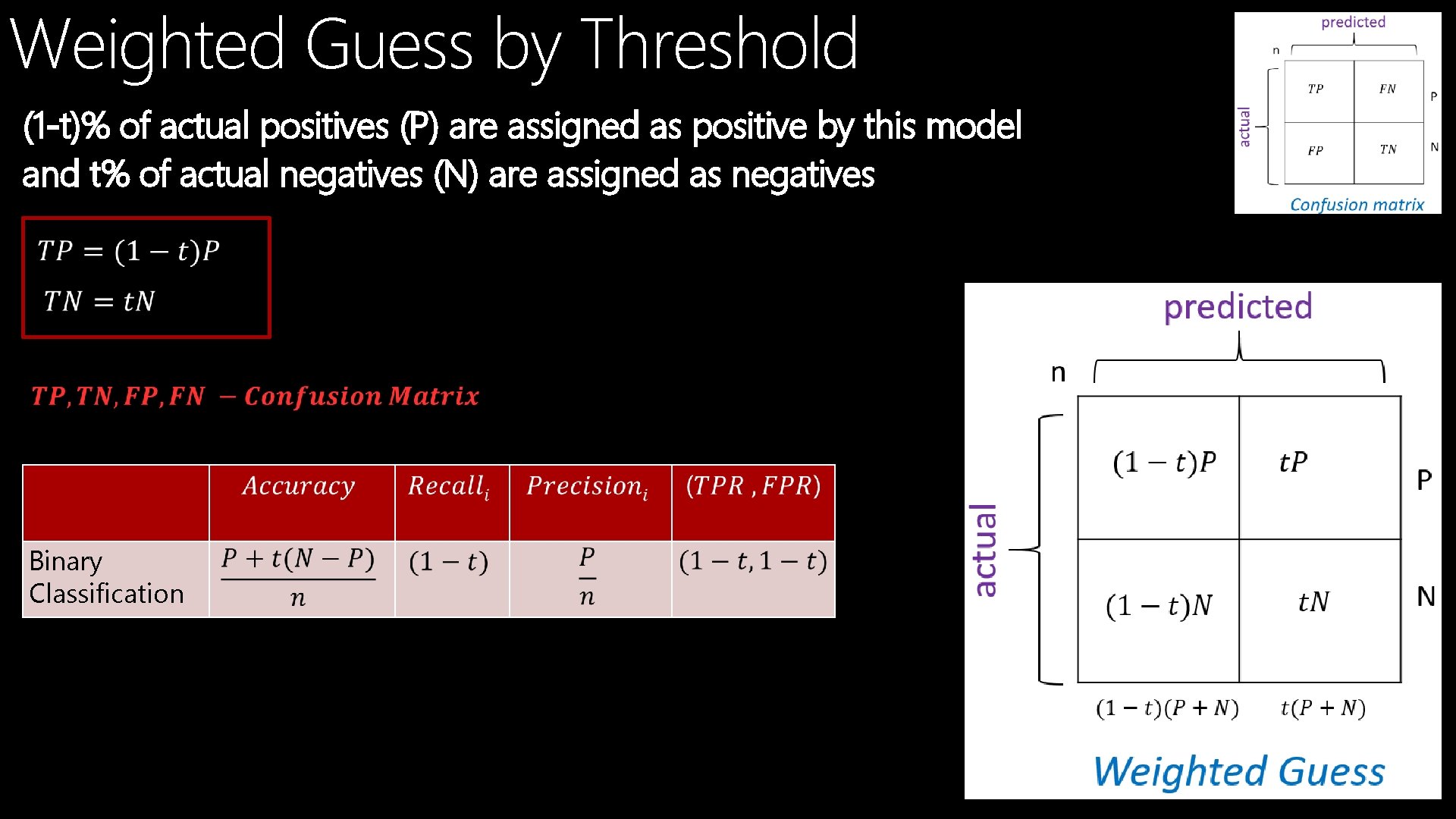

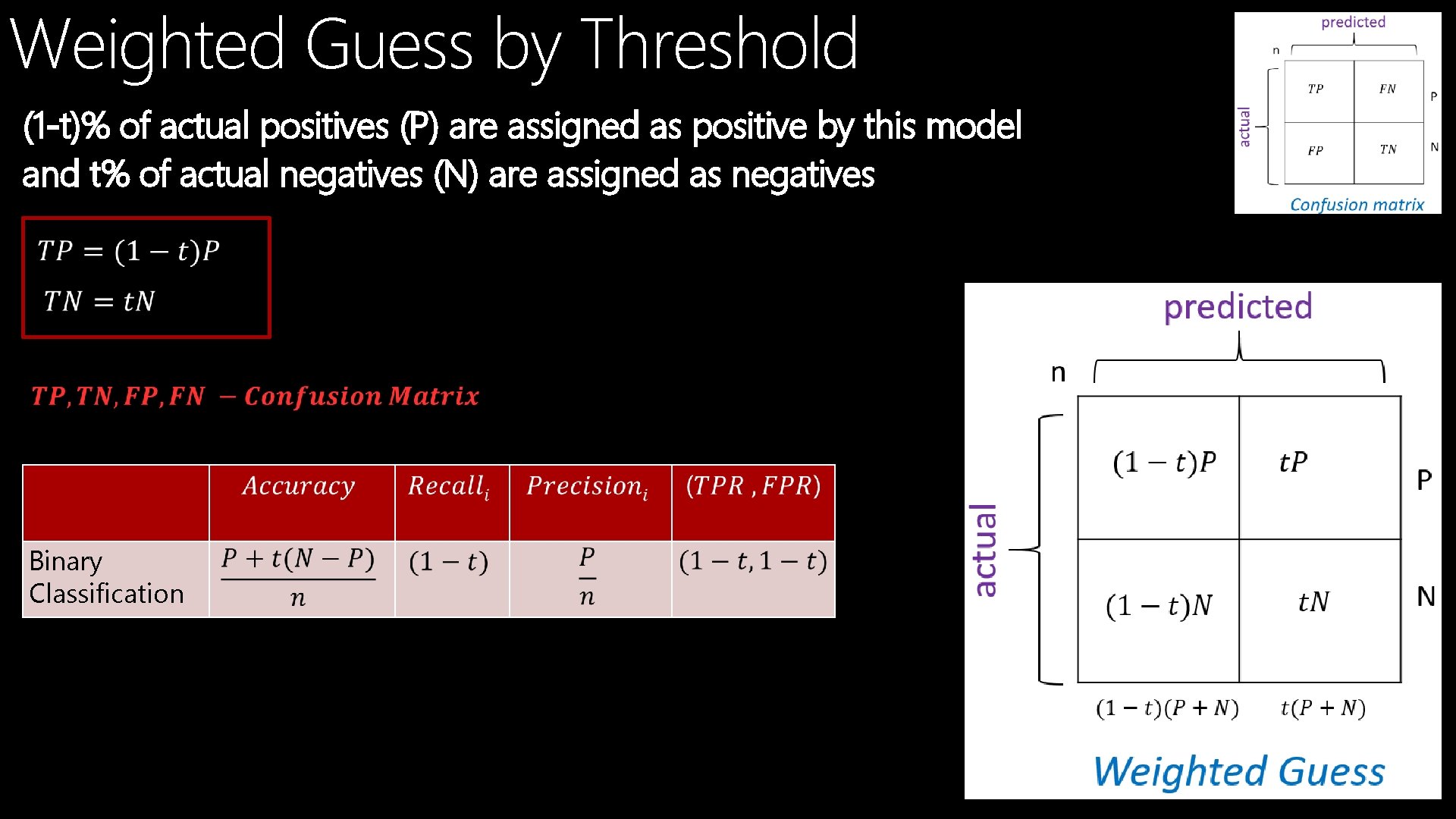

Weighted Guess by Threshold: a fraction (1 - t) of actual positives (P) are assigned as positive by this model, and a fraction t of actual negatives (N) are assigned as negative, where t is the decision threshold.
Binary Classification
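One way to read this baseline, offered here as an interpretation rather than the slide's own wording, is as a model that draws a uniform random score in [0, 1] and predicts positive when the score is at least t. Writing n = P + N, the expected counts and metrics are:

$$
\begin{aligned}
TP &= (1 - t)P, \quad FN = tP, \quad TN = tN, \quad FP = (1 - t)N \\
\text{Accuracy} &= \frac{(1 - t)P + tN}{n} \\
\text{Precision} &= \frac{(1 - t)P}{(1 - t)P + (1 - t)N} = \frac{P}{n} \quad (t < 1) \\
\text{Recall} &= \frac{TP}{TP + FN} = \frac{(1 - t)P}{P} = 1 - t
\end{aligned}
$$

Moving the threshold trades recall against accuracy, but precision stays at the positive rate P/n, so any real model should beat that figure.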

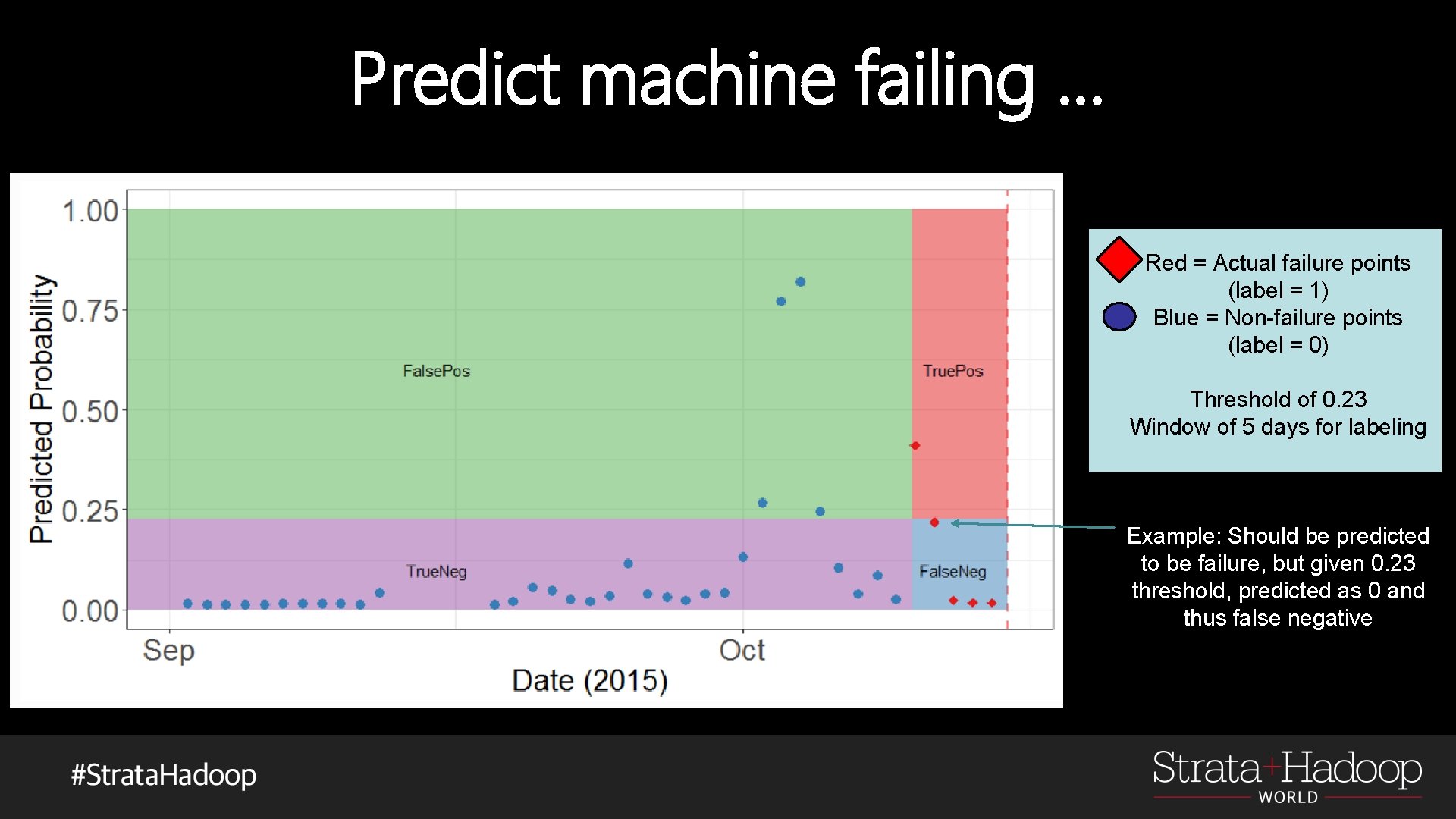

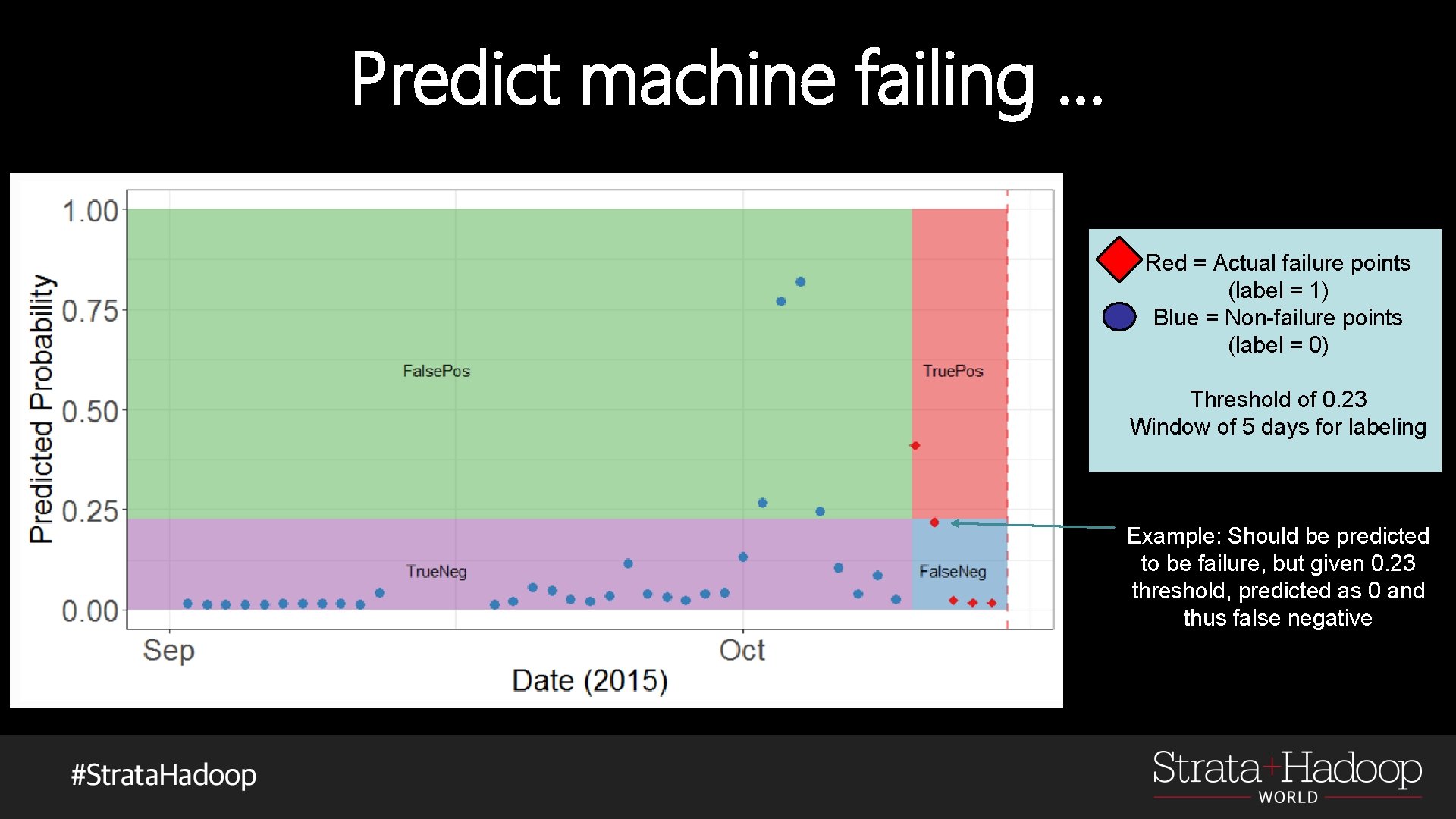

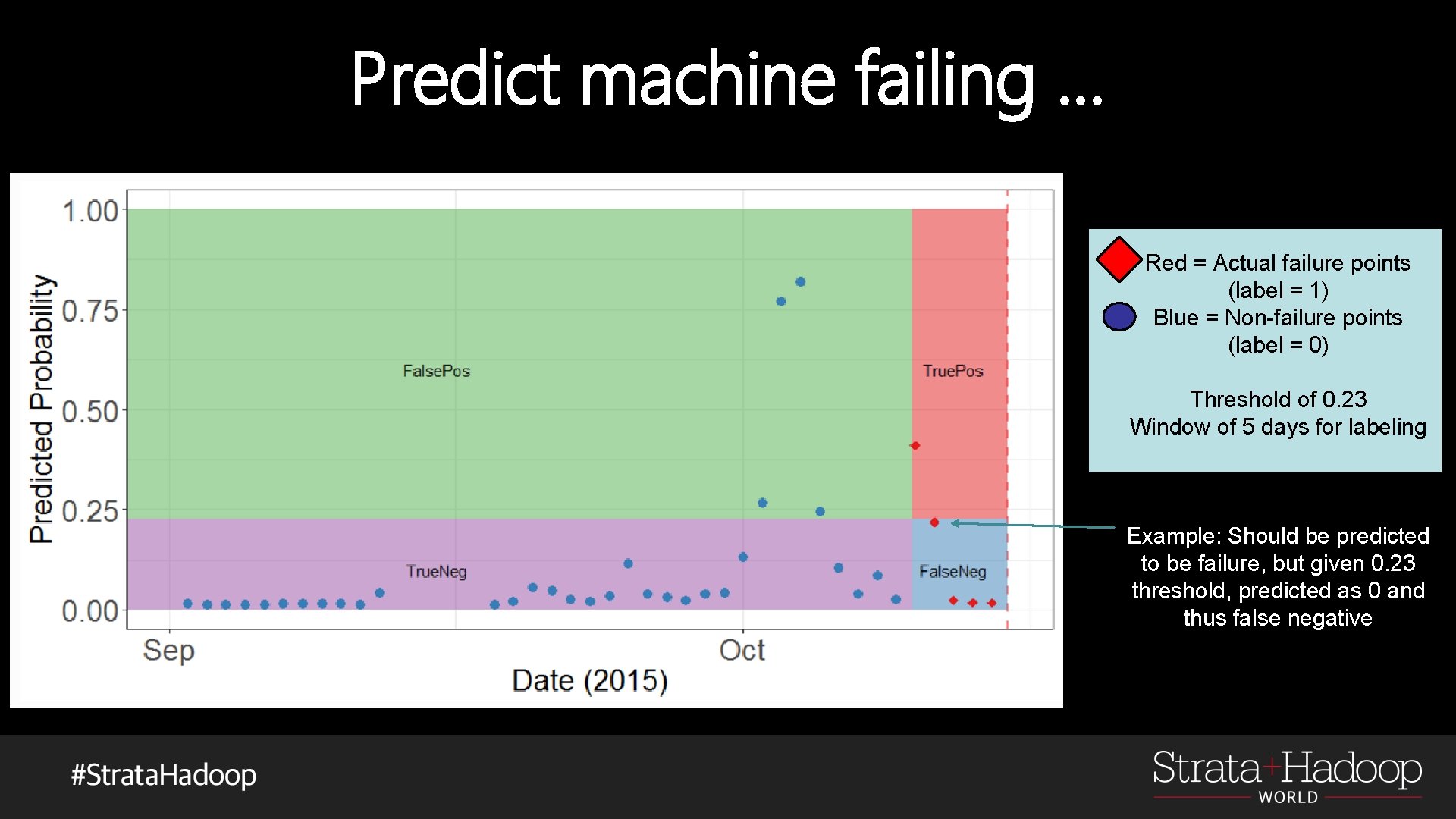

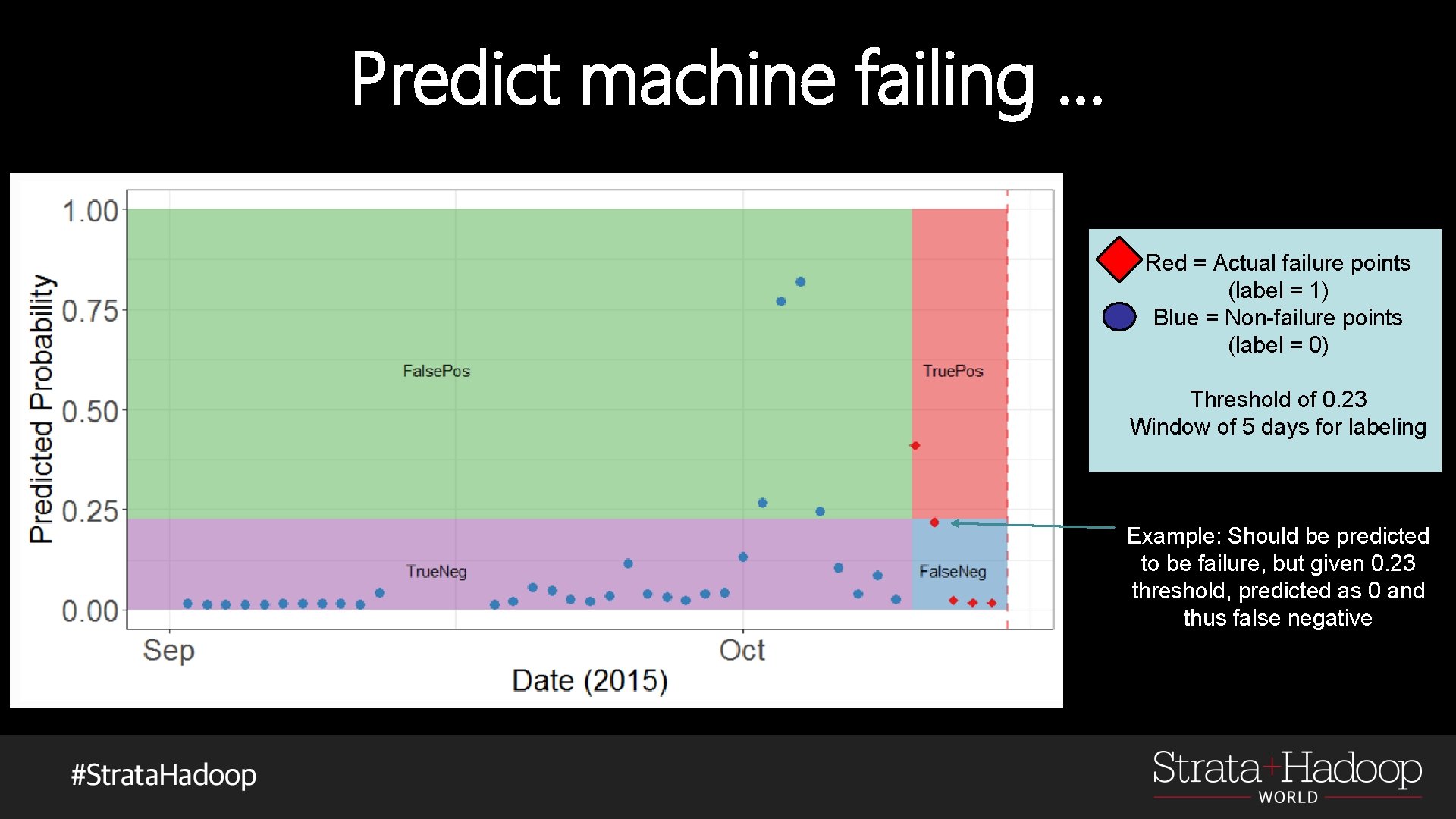

Predict machine failing …
Red = actual failure points (label = 1); Blue = non-failure points (label = 0). Threshold of 0.23; window of 5 days for labeling.
Example: a point that should be predicted as a failure is predicted as 0 because its score falls below the 0.23 threshold, and is thus a false negative.
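A sketch of the thresholding step in this example. It assumes scores in [0, 1] and the convention that a score at or above the threshold counts as a predicted failure; the slide does not say how ties are handled. The scores and labels used below are made up for illustration.

```python
def apply_threshold(scores, labels, threshold=0.23):
    """Turn model scores into 0/1 predictions (score >= threshold -> 1) and
    count the confusion-matrix cells against the true 0/1 labels."""
    preds = [1 if s >= threshold else 0 for s in scores]
    pairs = list(zip(preds, labels))
    tp = sum(1 for p, y in pairs if p == 1 and y == 1)
    fp = sum(1 for p, y in pairs if p == 1 and y == 0)
    fn = sum(1 for p, y in pairs if p == 0 and y == 1)
    tn = sum(1 for p, y in pairs if p == 0 and y == 0)
    return preds, (tp, fp, fn, tn)


# Made-up scores: the failure-labeled point scored 0.15 falls below 0.23
# and becomes a false negative.
preds, counts = apply_threshold([0.05, 0.10, 0.30, 0.15, 0.60], [0, 0, 1, 1, 1])
print(preds, counts)
```

Here the counts come out as (tp, fp, fn, tn) = (2, 0, 1, 2).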

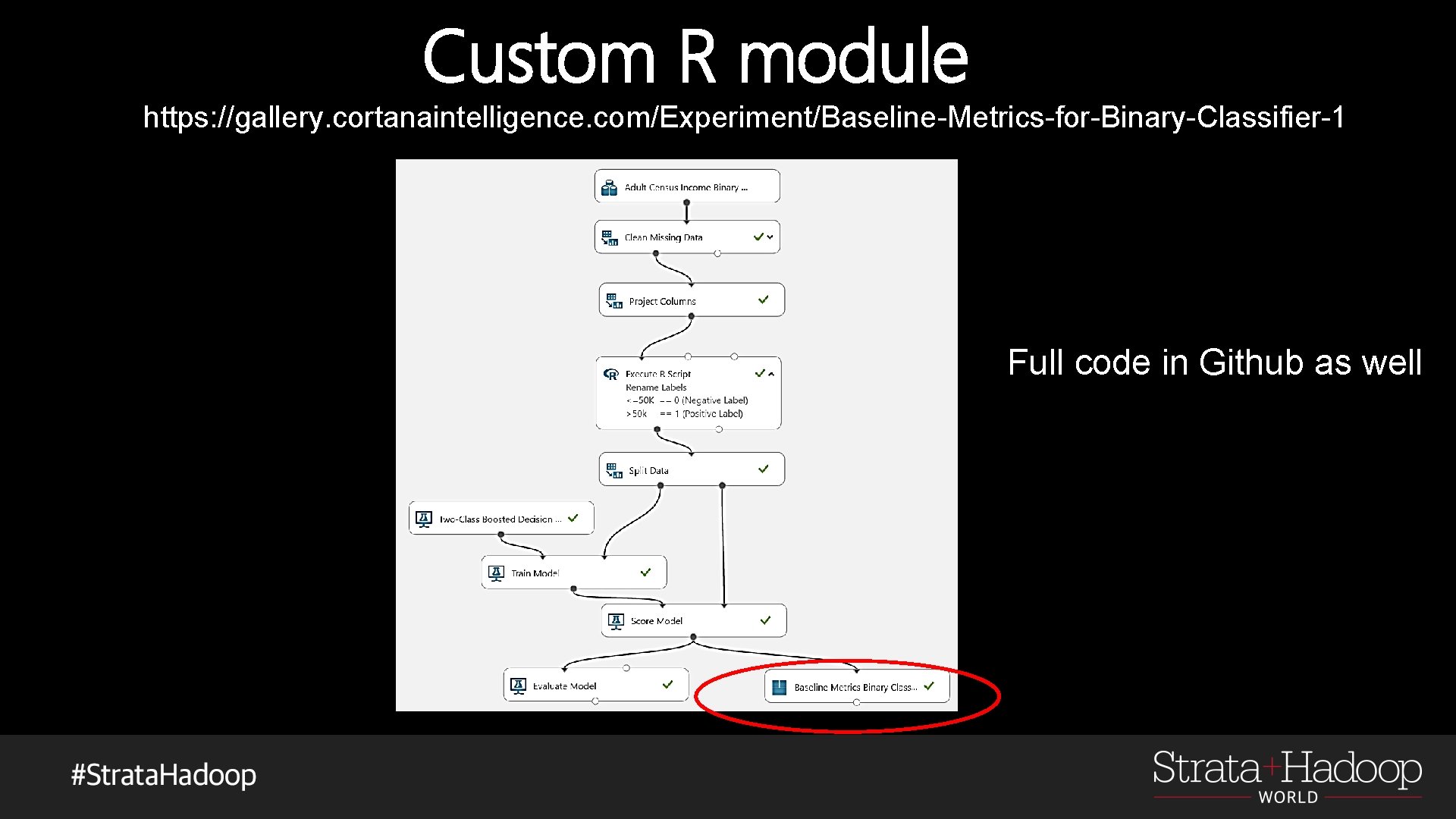


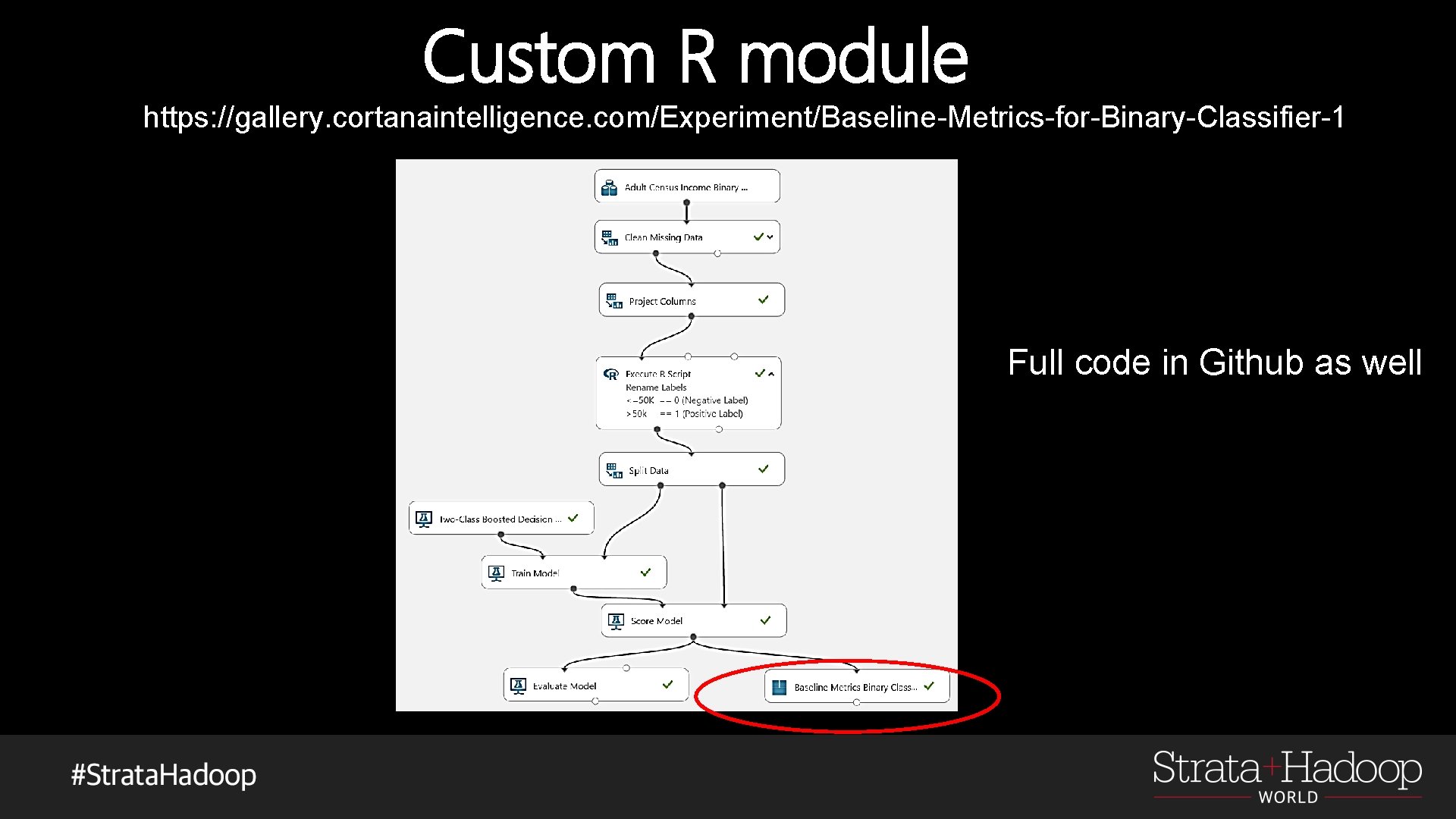

Custom R module: https://gallery.cortanaintelligence.com/Experiment/Baseline-Metrics-for-Binary-Classifier-1 (full code is also on GitHub)

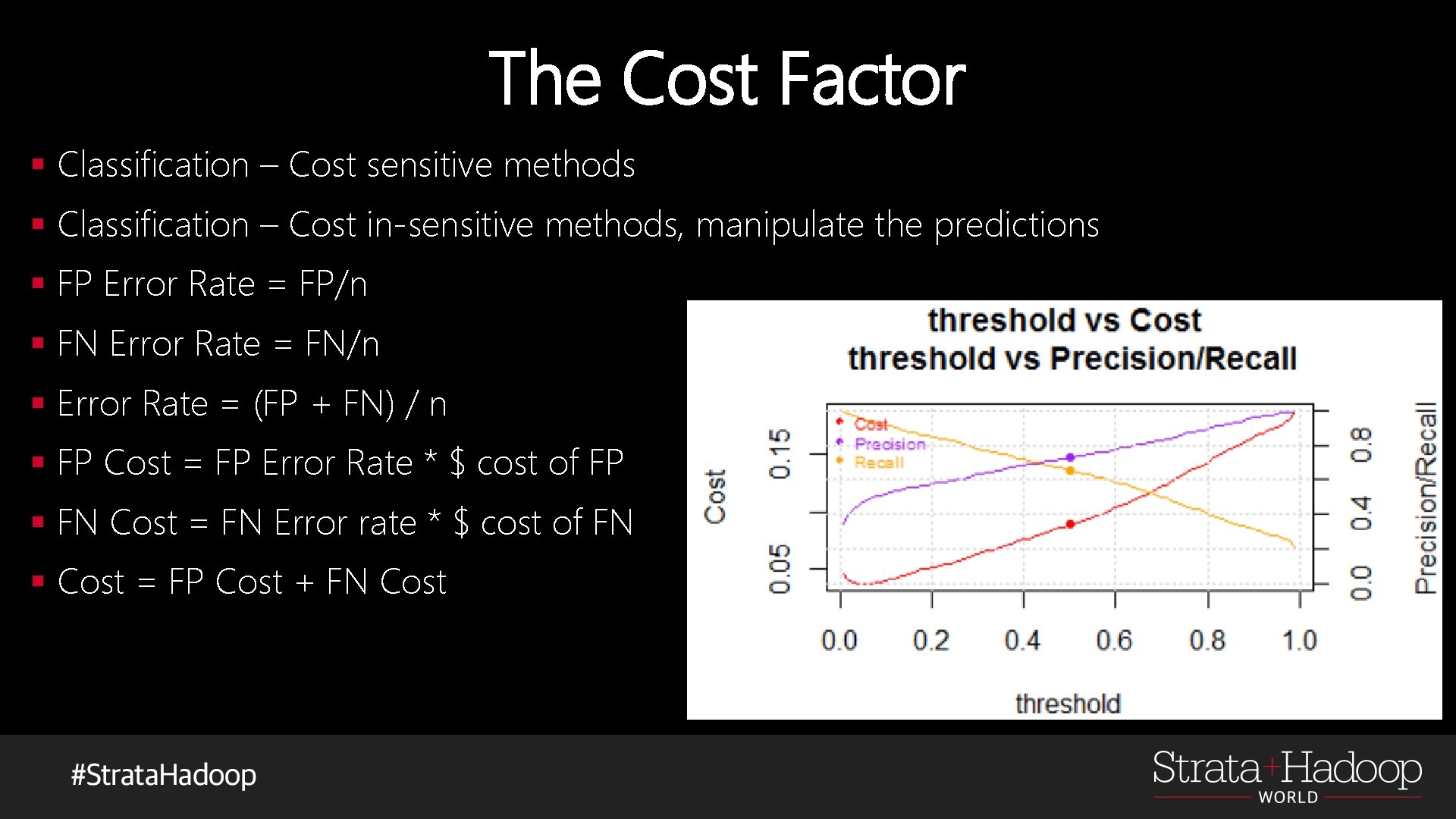

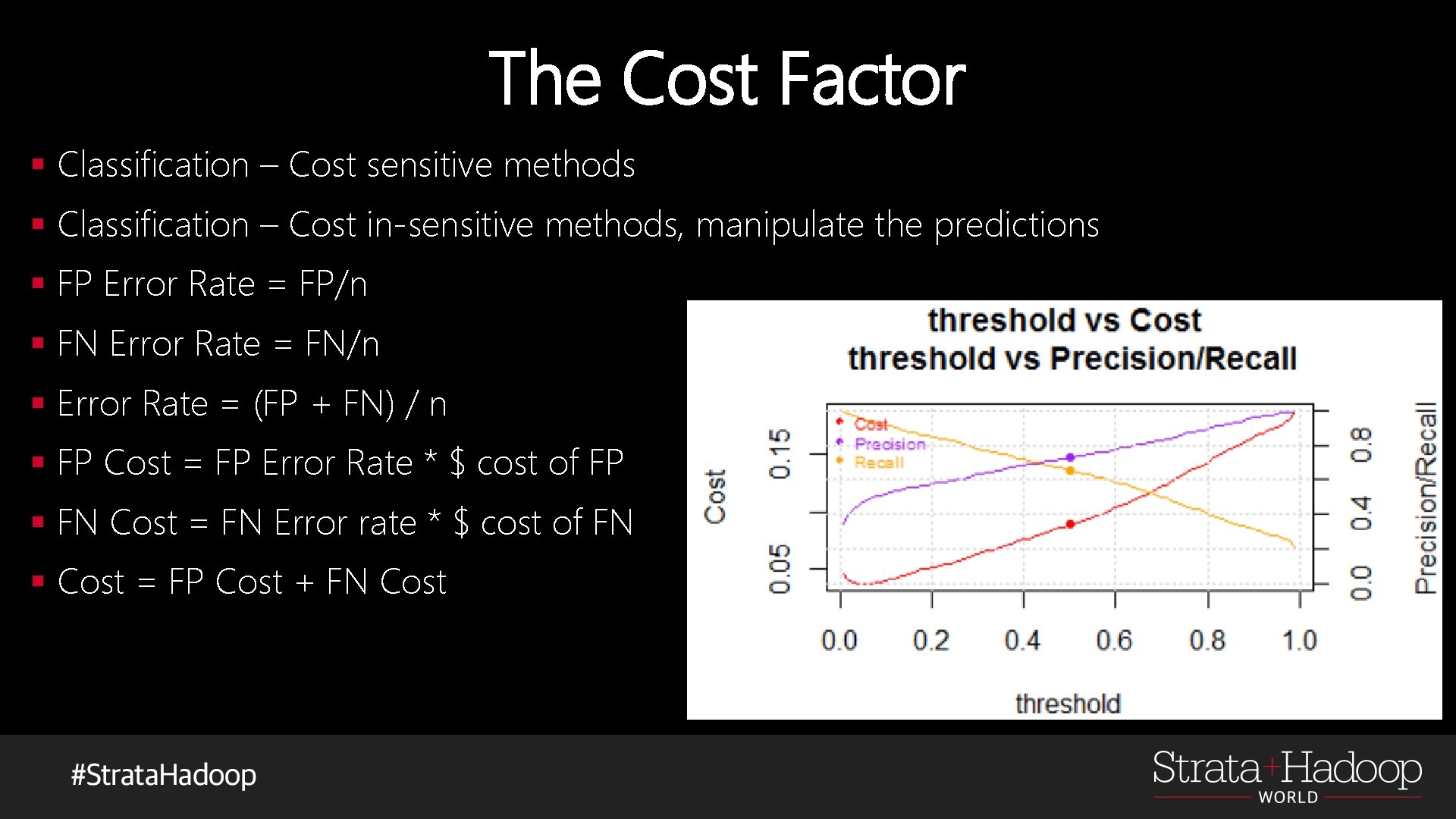

The Cost Factor
§ Classification – cost-sensitive methods
§ Classification – cost-insensitive methods, manipulate the predictions
§ FP Error Rate = FP/n
§ FN Error Rate = FN/n
§ Error Rate = (FP + FN)/n
§ FP Cost = FP Error Rate * $ cost of FP
§ FN Cost = FN Error Rate * $ cost of FN
§ Cost = FP Cost + FN Cost
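A sketch of the cost formulas above, combined with one cost-insensitive way to manipulate the predictions: keep the trained model and choose the decision threshold with the lowest cost. The function names, dollar costs and candidate thresholds are hypothetical, and predictions again use the assumed "score >= threshold means failure" rule.

```python
def expected_cost(fp, fn, n, cost_fp, cost_fn):
    """Cost = FP error rate * $ cost of FP + FN error rate * $ cost of FN."""
    fp_rate = fp / n
    fn_rate = fn / n
    return fp_rate * cost_fp + fn_rate * cost_fn


def cheapest_threshold(scores, labels, thresholds, cost_fp, cost_fn):
    """Return (threshold, cost) for the candidate threshold whose 0/1
    predictions minimise the expected cost on the given labeled data."""
    n = len(labels)
    best = None
    for t in thresholds:
        preds = [1 if s >= t else 0 for s in scores]
        fp = sum(1 for p, y in zip(preds, labels) if p == 1 and y == 0)
        fn = sum(1 for p, y in zip(preds, labels) if p == 0 and y == 1)
        cost = expected_cost(fp, fn, n, cost_fp, cost_fn)
        if best is None or cost < best[1]:
            best = (t, cost)
    return best
```

With toy scores `[0.05, 0.10, 0.30, 0.15, 0.60]`, labels `[0, 0, 1, 1, 1]`, a $100 false positive and a $1,000 false negative, a threshold of 0.1 costs 20 per prediction (one false alarm), versus 200 at 0.2 (one missed failure). When a missed failure is much more expensive than a false alarm, the cheapest operating point moves toward high recall.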

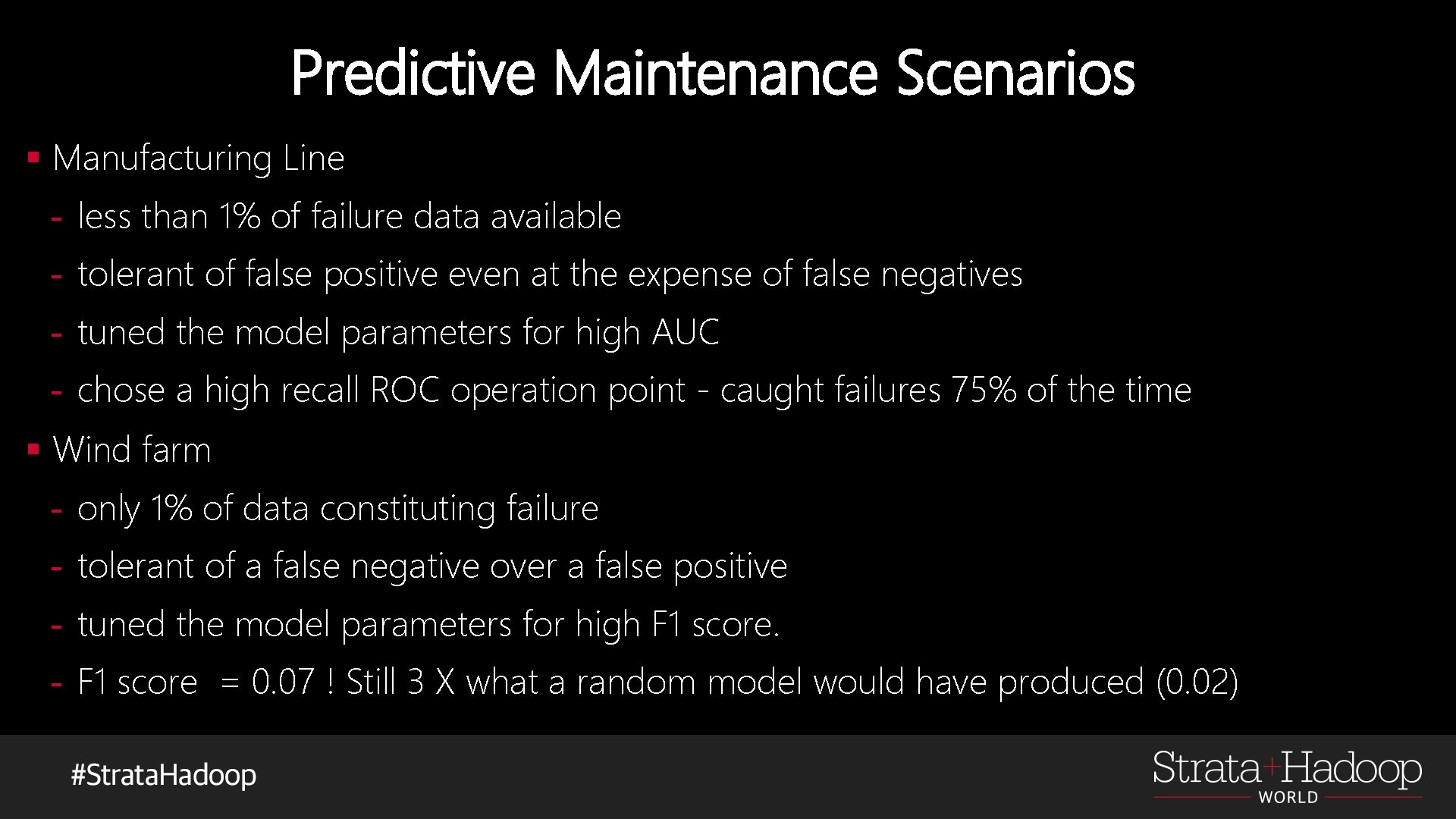

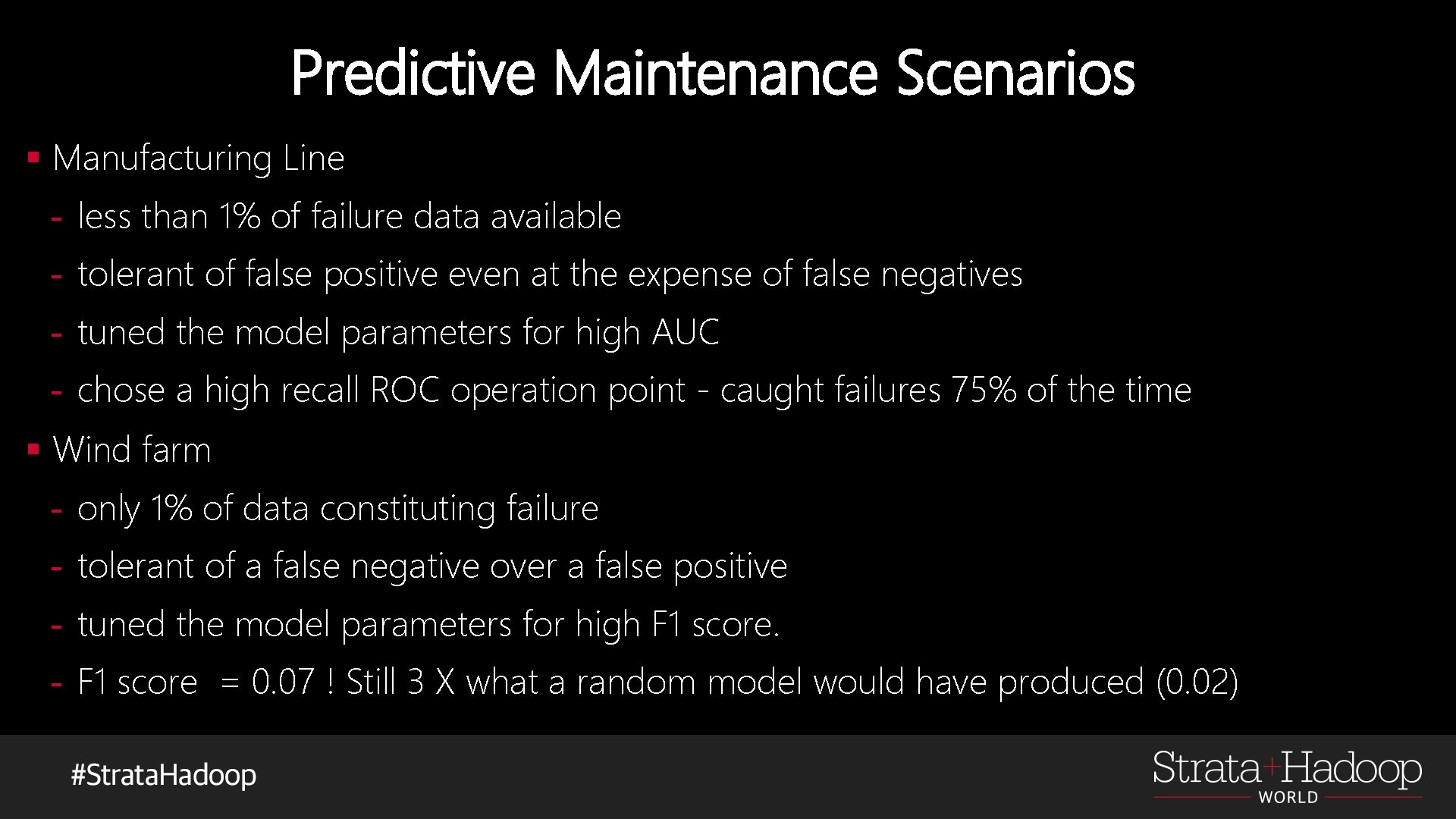

Predictive Maintenance Scenarios
§ Manufacturing line
- Less than 1% of the available data were failures
- Tolerant of false positives in order to avoid false negatives
- Tuned the model parameters for high AUC
- Chose a high-recall operating point on the ROC curve
- Caught failures 75% of the time
§ Wind farm
- Only 1% of the data constituted failures
- More tolerant of a false negative than a false positive
- Tuned the model parameters for a high F1 score
- F1 score = 0.07, still over 3x what a random model would have produced (0.02)
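The 0.02 figure for a random model can be checked by hand, assuming "random model" means the random-guess baseline (half of all points predicted positive) and a 1% failure rate. Precision is then the positive rate P/n and recall is 0.5:

$$
\begin{aligned}
\text{Precision} &= \frac{P}{n} = 0.01, \qquad \text{Recall} = 0.5 \\
F_1 &= \frac{2 \cdot \text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}} = \frac{2 \cdot 0.01 \cdot 0.5}{0.01 + 0.5} = \frac{0.01}{0.51} \approx 0.0196 \approx 0.02
\end{aligned}
$$

An F1 of 0.07 looks poor in isolation, but against this baseline it is about 3.5 times better.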


Think about the cost/benefit ratio, and remember that “99% accuracy” means nothing without context!

Learn and try yourself!
• Learn from the Cortana Intelligence Gallery Solution package material – deploy by hand to learn here
• Try the Cortana Intelligence Solution Template – Predictive Maintenance for Aerospace
• Try the Azure IoT pre-configured solution for Predictive Maintenance
• Read the Predictive Maintenance Playbook for more details on how to approach these problems
• Run the Modelling Guide R Notebook for a DS walk-through
• Baseline Metrics overview
• Performance Metrics overview

For more details on metrics (blogs and paper):
• http://blog.revolutionanalytics.com/2016/03/classification-models.html
• http://blog.revolutionanalytics.com/2016/03/com_class_eval_metrics_r.html
• https://github.com/shaheeng/ClassificationModelEvaluation/blob/master/Baseline%20Metrics_Shaheen_article.pdf