Evaluating Impact Flowchart

- Slides: 4

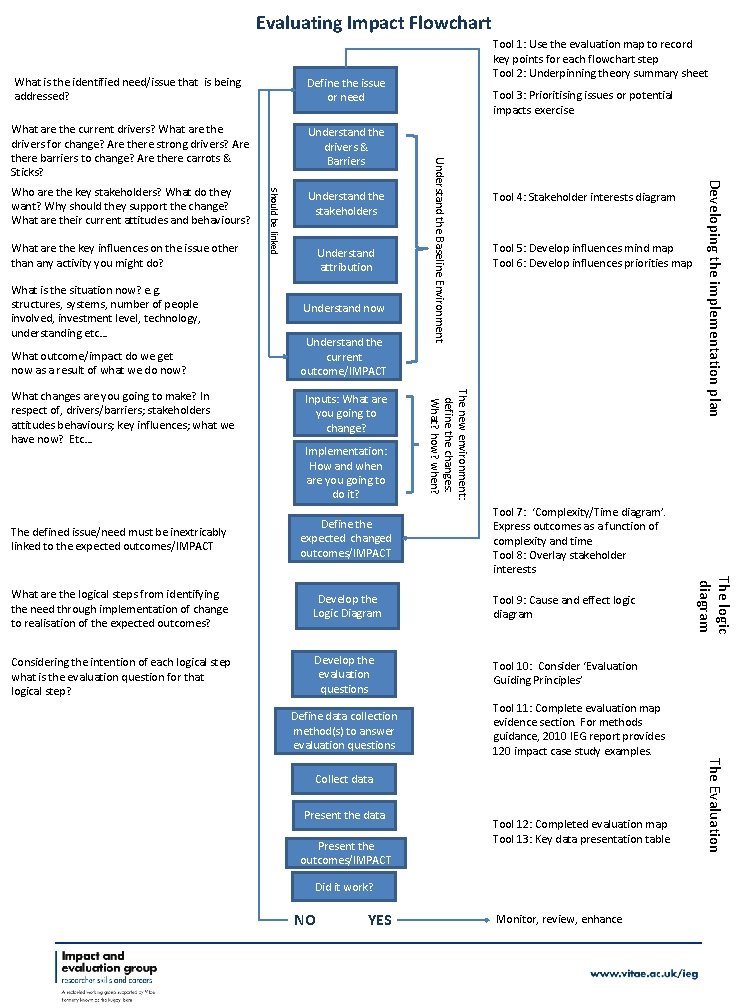

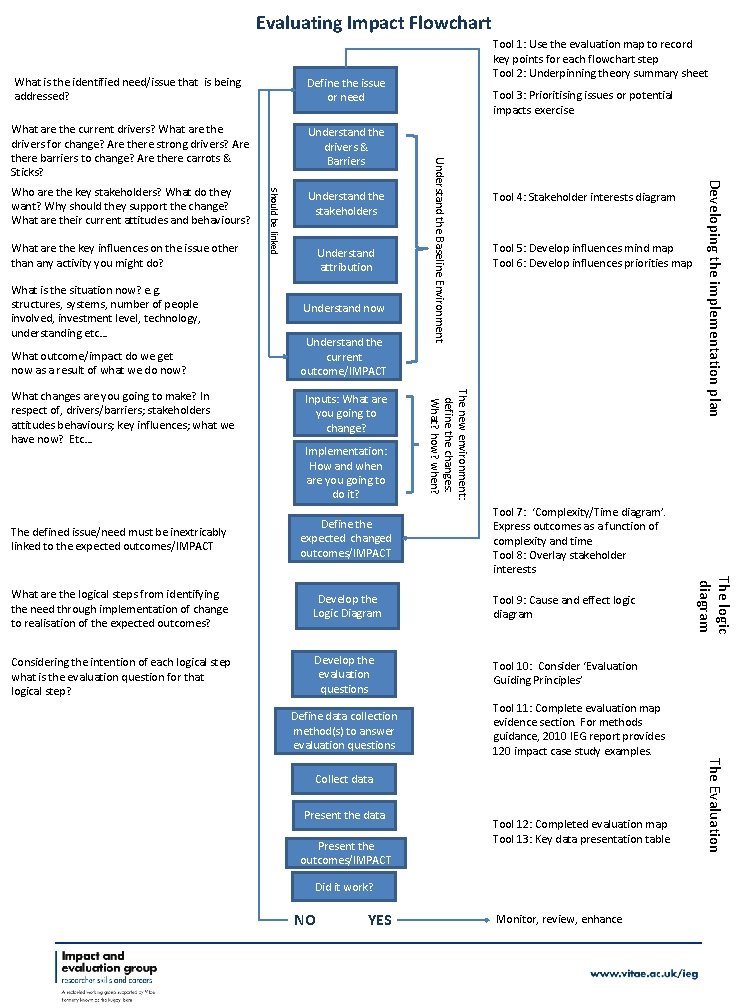

Evaluating Impact Flowchart

Define the issue or need
- What is the identified need/issue that is being addressed?
- Tool 1: Use the evaluation map to record key points for each flowchart step
- Tool 2: Underpinning theory summary sheet
- Tool 3: Prioritising issues or potential impacts exercise
- Should be linked: the defined issue/need must be inextricably linked to the expected outcomes/IMPACT

Understand the Baseline Environment
- Understand the drivers & barriers: What are the current drivers? What are the drivers for change? Are there strong drivers? Are there barriers to change? Are there carrots & sticks?
- Understand the stakeholders: Who are the key stakeholders? What do they want? Why should they support the change? What are their current attitudes and behaviours? (Tool 4: Stakeholder interests diagram)
- Understand attribution: What are the key influences on the issue other than any activity you might do? (Tool 5: Develop influences mind map; Tool 6: Develop influences priorities map)
- Understand now: What is the situation now? e.g. structures, systems, number of people involved, investment level, technology, understanding, etc.
- Understand the current outcome/IMPACT: What outcome/impact do we get now as a result of what we do now?

Define the expected changed outcomes/IMPACT
- Tool 7: 'Complexity/Time diagram'. Express outcomes as a function of complexity and time
- Tool 8: Overlay stakeholder interests

Developing the implementation plan
- Inputs: What are you going to change?
- Implementation: How and when are you going to do it?
- The new environment: define the changes: What? How? When? What changes are you going to make in respect of drivers/barriers; stakeholders' attitudes and behaviours; key influences; what we have now; etc.?

The logic diagram
- Develop the Logic Diagram: What are the logical steps from identifying the need through implementation of change to realisation of the expected outcomes? (Tool 9: Cause and effect logic diagram)
- Develop the evaluation questions: Considering the intention of each logical step, what is the evaluation question for that logical step? (Tool 10: Consider 'Evaluation Guiding Principles')

The Evaluation
- Define data collection method(s) to answer evaluation questions (Tool 11: Complete evaluation map evidence section. For methods guidance, the 2010 IEG report provides 120 impact case study examples.)
- Collect data
- Present the outcomes/IMPACT (Tool 12: Completed evaluation map; Tool 13: Key data presentation table)
- Did it work? NO / YES
- Monitor, review, enhance

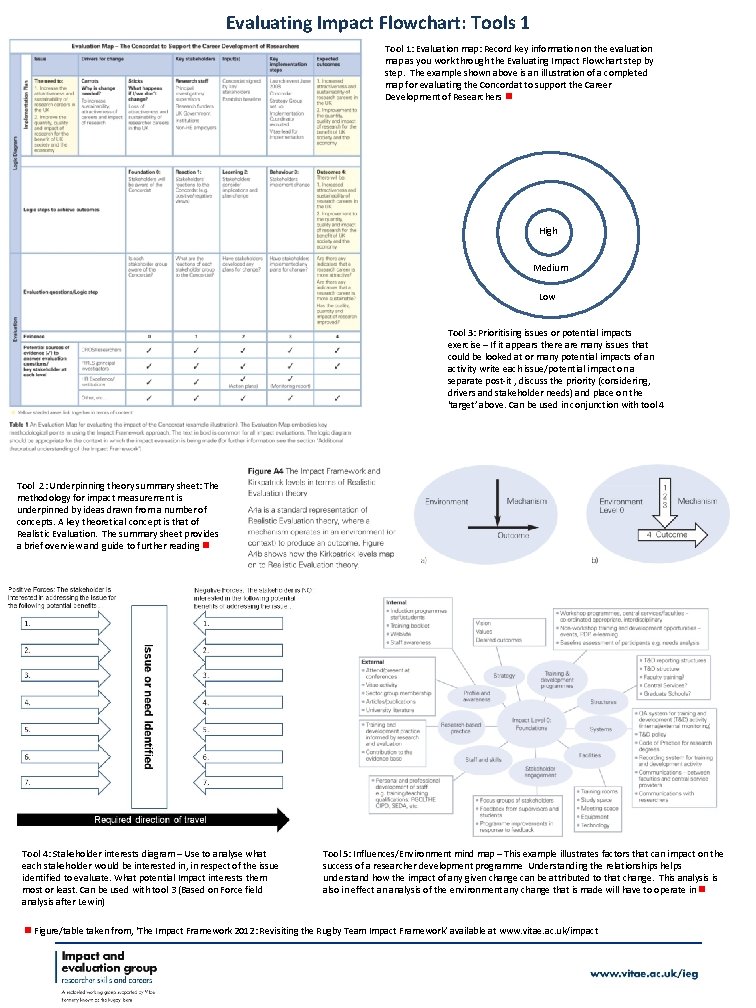

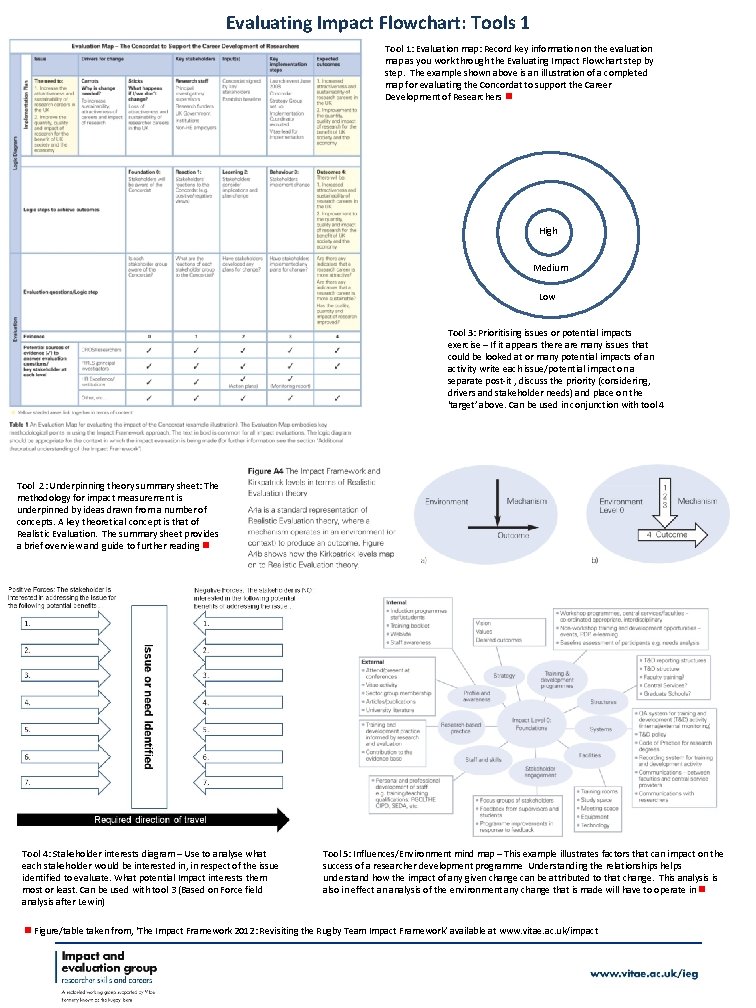

Evaluating Impact Flowchart: Tools 1

- Tool 1: Evaluation map: Record key information on the evaluation map as you work through the Evaluating Impact Flowchart step by step. The example shown above illustrates a completed map for evaluating the Concordat to Support the Career Development of Researchers.
- Tool 2: Underpinning theory summary sheet: The methodology for impact measurement is underpinned by ideas drawn from a number of concepts. A key theoretical concept is that of Realistic Evaluation. The summary sheet provides a brief overview and a guide to further reading.
- Tool 3: Prioritising issues or potential impacts exercise: If there appear to be many issues that could be looked at, or many potential impacts of an activity, write each issue/potential impact on a separate Post-it, discuss its priority (considering drivers and stakeholder needs) and place it on the 'target' above (High / Medium / Low). Can be used in conjunction with Tool 4.
- Tool 4: Stakeholder interests diagram: Use to analyse what each stakeholder would be interested in, in respect of the issue identified to evaluate: which potential impact interests them most or least? Can be used with Tool 3. (Based on force field analysis, after Lewin.)
- Tool 5: Influences/Environment mind map: This example illustrates factors that can impact on the success of a researcher development programme. Understanding the relationships helps in understanding how the impact of any given change can be attributed to that change. This analysis is also, in effect, an analysis of the environment in which any change that is made will have to operate.

Figure/table taken from 'The Impact Framework 2012: Revisiting the Rugby Team Impact Framework', available at www.vitae.ac.uk/impact

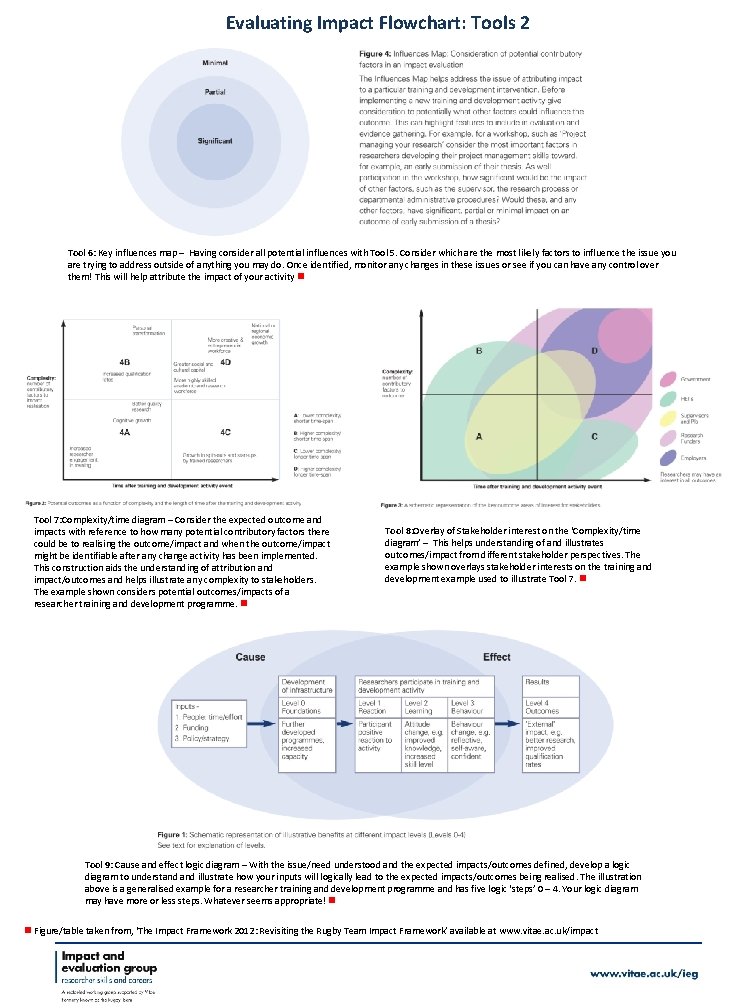

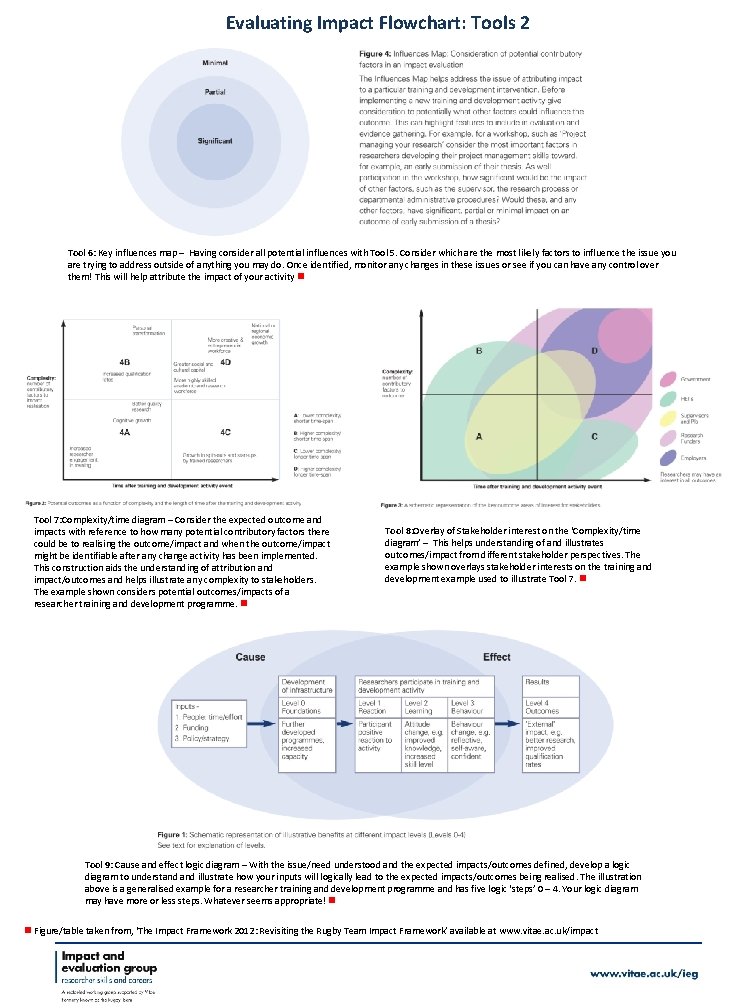

Evaluating Impact Flowchart: Tools 2

- Tool 6: Key influences map: Having considered all potential influences with Tool 5, consider which factors are most likely to influence the issue you are trying to address, outside of anything you may do. Once identified, monitor any changes in these factors, or see whether you can have any control over them. This will help attribute the impact of your activity.
- Tool 7: Complexity/time diagram: Consider the expected outcomes and impacts with reference to how many potential contributory factors there could be to realising each outcome/impact, and when the outcome/impact might be identifiable after any change activity has been implemented. This construction aids the understanding of attribution and of impact/outcomes, and helps illustrate any complexity to stakeholders. The example shown considers potential outcomes/impacts of a researcher training and development programme.
- Tool 8: Overlay of stakeholder interests on the 'Complexity/time diagram': This helps in understanding and illustrating outcomes/impact from different stakeholder perspectives. The example shown overlays stakeholder interests on the training and development example used to illustrate Tool 7.
- Tool 9: Cause and effect logic diagram: With the issue/need understood and the expected impacts/outcomes defined, develop a logic diagram to understand and illustrate how your inputs will logically lead to the expected impacts/outcomes being realised. The illustration above is a generalised example for a researcher training and development programme and has five logic 'steps', 0 to 4. Your logic diagram may have more or fewer steps, whatever seems appropriate.

Figure/table taken from 'The Impact Framework 2012: Revisiting the Rugby Team Impact Framework', available at www.vitae.ac.uk/impact
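For readers who keep their evaluation notes electronically, the five-step logic diagram described under Tool 9 can be held as a small ordered data structure with one evaluation question per step. The following is a minimal sketch only, not part of the Impact Framework itself. The level names (Foundation, Reaction, Learning, Behaviour, Outcomes) follow the worked example in the Tool 13 table, the questions are shortened paraphrases of that example, and `LogicStep`, `LOGIC_DIAGRAM`, `check_contiguous` and `steps_missing_evidence` are hypothetical names introduced purely for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LogicStep:
    """One step of a cause and effect logic diagram (hypothetical structure)."""
    level: int
    name: str
    evaluation_question: str


# Illustrative five-step diagram; questions paraphrase the Tool 13 example.
LOGIC_DIAGRAM = [
    LogicStep(0, "Foundation", "What is the session plan and the participants' starting level?"),
    LogicStep(1, "Reaction", "Was there a positive reaction to the provision?"),
    LogicStep(2, "Learning", "Did participants self-assess that they had learnt something?"),
    LogicStep(3, "Behaviour", "Has behaviour changed back in the research situation?"),
    LogicStep(4, "Outcomes", "Is there evidence linking the learning to the expected outcome?"),
]


def check_contiguous(steps):
    """Return True if the levels run 0, 1, 2, ... with no gaps or repeats."""
    return [s.level for s in steps] == list(range(len(steps)))


def steps_missing_evidence(steps, evidence):
    """Return the names of steps with no evidence recorded yet.

    `evidence` maps a level number to a list of evidence notes.
    """
    return [s.name for s in steps if not evidence.get(s.level)]


if __name__ == "__main__":
    collected = {0: ["needs analysis"], 1: ["happy sheets"]}
    print(check_contiguous(LOGIC_DIAGRAM))
    print(steps_missing_evidence(LOGIC_DIAGRAM, collected))
```

Because a logic diagram may have more or fewer steps, the sketch uses a plain list rather than a fixed set of fields; adding or removing a `LogicStep` is enough to adapt it.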

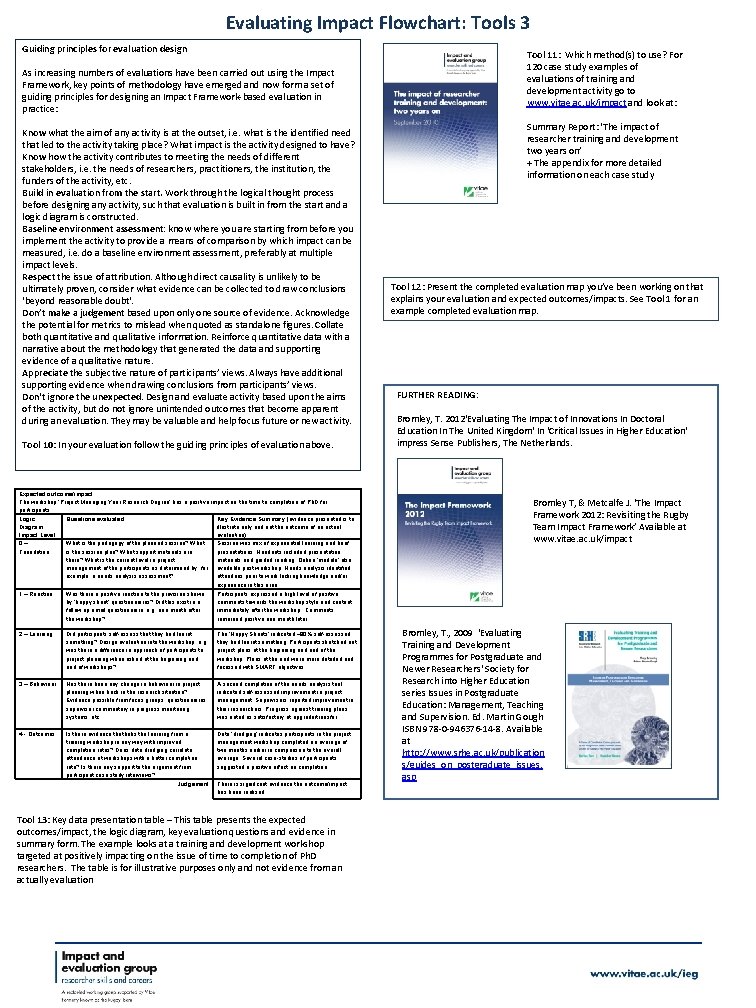

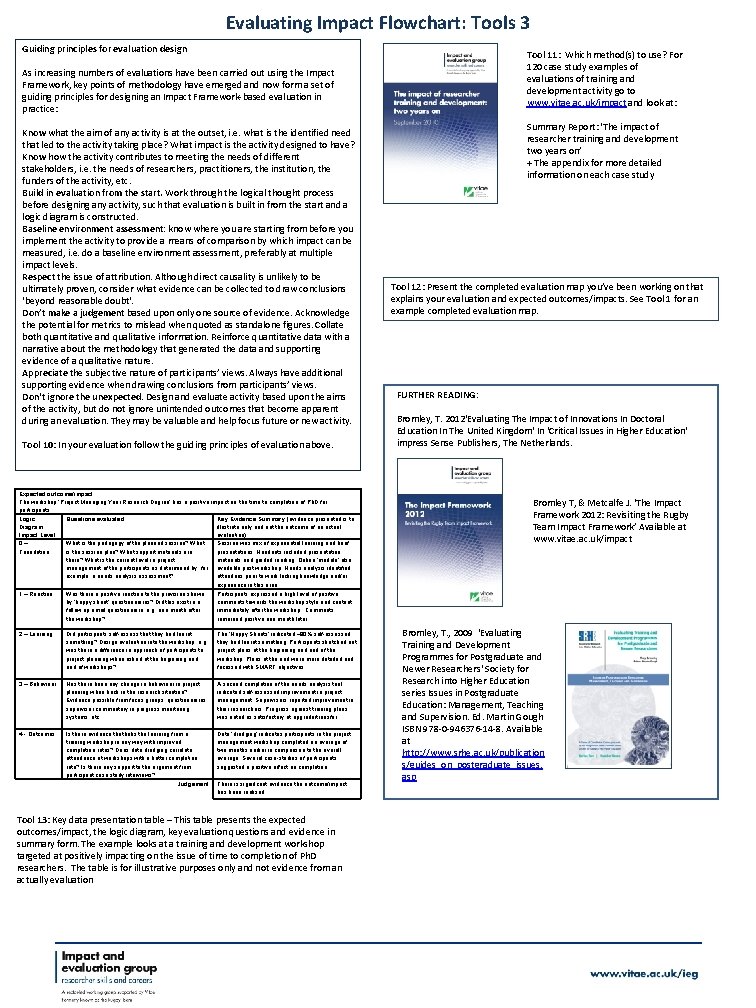

Evaluating Impact Flowchart: Tools 3

Guiding principles for evaluation design

As increasing numbers of evaluations have been carried out using the Impact Framework, key points of methodology have emerged and now form a set of guiding principles for designing an Impact Framework-based evaluation in practice:

- Know what the aim of any activity is at the outset, i.e. what is the identified need that led to the activity taking place? What impact is the activity designed to have?
- Know how the activity contributes to meeting the needs of different stakeholders, i.e. the needs of researchers, practitioners, the institution, the funders of the activity, etc.
- Build in evaluation from the start. Work through the logical thought process before designing any activity, such that evaluation is built in from the start and a logic diagram is constructed.
- Baseline environment assessment: know where you are starting from before you implement the activity, to provide a means of comparison by which impact can be measured, i.e. do a baseline environment assessment, preferably at multiple impact levels.
- Respect the issue of attribution. Although direct causality is unlikely to be ultimately proven, consider what evidence can be collected to draw conclusions 'beyond reasonable doubt'.
- Don't make a judgement based upon only one source of evidence. Acknowledge the potential for metrics to mislead when quoted as standalone figures.
- Collate both quantitative and qualitative information. Reinforce quantitative data with a narrative about the methodology that generated the data and with supporting evidence of a qualitative nature.
- Appreciate the subjective nature of participants' views. Always have additional supporting evidence when drawing conclusions from participants' views.
- Don't ignore the unexpected. Design and evaluate activity based upon the aims of the activity, but do not ignore unintended outcomes that become apparent during an evaluation. They may be valuable and help focus future or new activity.

Tool 10: In your evaluation, follow the guiding principles of evaluation above.

Tool 11: Which method(s) to use? For 120 case study examples of evaluations of training and development activity, go to www.vitae.ac.uk/impact and look at the Summary Report 'The impact of researcher training and development two years on', plus the appendix for more detailed information on each case study.

Tool 12: Present the completed evaluation map you've been working on that explains your evaluation and expected outcomes/impacts. See Tool 1 for an example of a completed evaluation map.

Tool 13: Key data presentation table: This table presents the expected outcomes/impact, the logic diagram, key evaluation questions and evidence in summary form. The example looks at a training and development workshop targeted at positively impacting on the issue of time to completion for PhD researchers. The table is for illustrative purposes only and is not evidence from an actual evaluation.

Expected outcome/impact: The workshop 'Project Managing Your Research Degree' has a positive impact on the time to completion of PhD for participants.

| Logic diagram impact level | Questions evaluated | Key evidence summary (evidence presented is to illustrate only and not the outcome of an actual evaluation) |
| --- | --- | --- |
| 0: Foundation | What is the pedagogy of the planned session? What is the session plan? What support materials are there? What is the current level in project management of the participants as determined by, for example, a needs analysis assessment? | Session was a mix of experiential learning and brief presentations. Handouts included presentation materials and guided reading. Online 'module' also available post workshop. Needs analysis identified attendees prior to work lacking knowledge and/or experience in this area. |
| 1: Reaction | Was there a positive reaction to the provision shown by 'happy sheet' questionnaires? Did this exist in a follow-up email questionnaire, e.g. one month after the workshop? | Participants expressed a high level of positive comments towards the workshop style and content immediately after the workshop. Comments remained positive one month later. |
| 2: Learning | Did participants self-assess that they had learnt something? Design evaluation into the workshop, e.g. was there a difference in approach of participants to project planning when asked at the beginning and end of workshops? | The 'happy sheets' indicated ~80% self-assessed they had learnt something. Participants sketched out project plans at the beginning and end of the workshop. Plans at the end were more detailed and focussed, with SMART objectives. |
| 3: Behaviour | Has there been any change in behaviour in project planning when back in the research situation? Evidence possible from focus groups, questionnaires, supervisor commentary in progress monitoring systems, etc. | A second completion of the needs analysis tool indicated self-assessed improvement in project management. Supervisors reported improvement in their researchers. Progress against training plans was noted as satisfactory at upgrade/transfer. |
| 4: Outcomes | Is there evidence that links the learning from a training workshop in any way with improved completion rates? Does data dredging correlate attendance at workshops with a better completion rate? Is there any support for the argument from participant case study interviews? | Data 'dredging' indicates participants in the project management workshop completed an average of two months earlier in comparison to the overall average. Several case studies of participants suggested a positive effect on completion. |
| Judgement | | There is significant evidence the outcome/impact has been realised. |

FURTHER READING:

- Bromley, T., 2012. 'Evaluating the Impact of Innovations in Doctoral Education in the United Kingdom'. In 'Critical Issues in Higher Education', in press, Sense Publishers, The Netherlands.
- Bromley, T. & Metcalfe, J. 'The Impact Framework 2012: Revisiting the Rugby Team Impact Framework'. Available at www.vitae.ac.uk/impact
- Bromley, T., 2009. 'Evaluating Training and Development Programmes for Postgraduate and Newer Researchers'. Society for Research into Higher Education series Issues in Postgraduate Education: Management, Teaching and Supervision. Ed. Martin Gough. ISBN 978-0-946376-14-8. Available at http://www.srhe.ac.uk/publications/guides_on_postgraduate_issues.asp