E-Science and LCG-2 PPAP Summary: Results from GridPP1/LCG1

- Slides: 27

E-Science and LCG-2 PPAP Summary
Results from GridPP1/LCG1
• Value of the UK contribution to LCG?
Aims of GridPP2/LCG2
• UK special contribution to LCG2?
• How much effort will be needed to continue activities during the LHC era?
14 September 2004 GridPP1 and AHM Tony Doyle - University of Glasgow

Outline
1. What has been achieved in GridPP1? [7’]
• GridPP I (09/01-08/04): Prototype, complete
2. What is being attempted in GridPP2? [6’]
• GridPP II (09/04-08/07): Production, short timescale
• What is the value of a UK LCG Phase-2 contribution?
3. Resources needed in medium-long term? [10’]
• (09/07-08/10): Exploitation, medium term
• Focus on resources needed in 2008
• (09/10-08/14): Exploitation, long term
26 October 2004 PPAP Tony Doyle - University of Glasgow

Executive Summary
• Introduction: the Grid is a reality
• Project Management: a project was/is needed (under control via Project Map)
• Resources: deployed according to planning
• CERN: Phase 1 .. Phase 2
• Middleware: prototype(s) made impact
• Applications: fully engaged (value added)
• Tier-1/A: Tier-1 production mode
• Tier-2: resources now being utilised
• Dissemination: UK flagship project
• Exploitation: preliminary planning
Ref: http://www.gridpp.ac.uk/

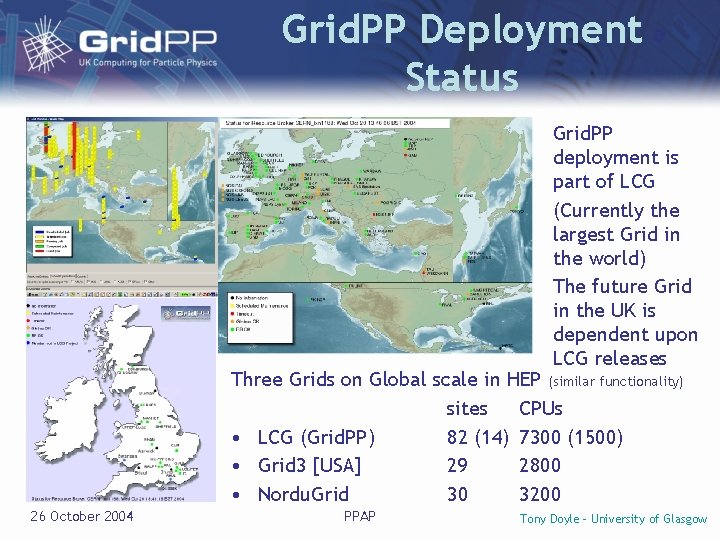

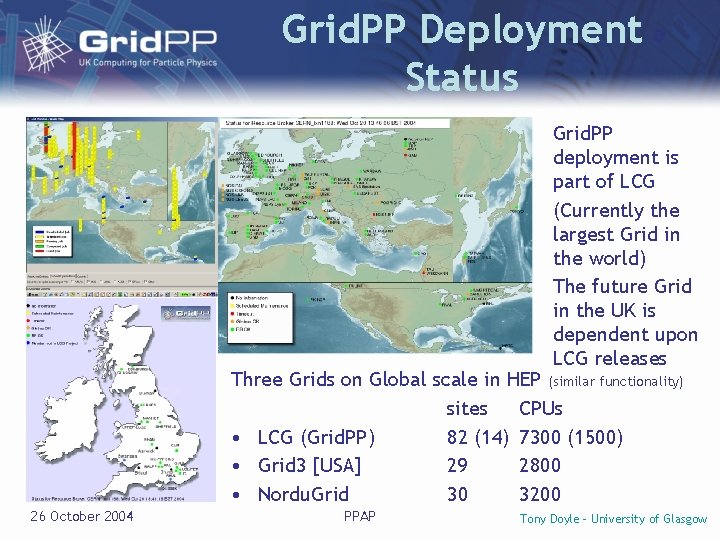

GridPP Deployment Status
GridPP deployment is part of LCG (currently the largest Grid in the world)
The future Grid in the UK is dependent upon LCG releases
Three Grids on Global scale in HEP (similar functionality):
• LCG (GridPP): 82 (14) sites, 7300 (1500) CPUs
• Grid3 [USA]: 29 sites, 2800 CPUs
• NorduGrid: 30 sites, 3200 CPUs
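As a rough cross-check of the site and CPU counts above, the GridPP share of LCG can be worked out directly. This is a minimal sketch using only the numbers quoted on the slide; the variable and function names are illustrative, not part of any GridPP tooling.

```python
# Figures as quoted on the slide: LCG totals, with the GridPP (UK) part
# given in parentheses in the original.
LCG_SITES, GRIDPP_SITES = 82, 14
LCG_CPUS, GRIDPP_CPUS = 7300, 1500


def share(part: int, whole: int) -> float:
    """Return part/whole expressed as a percentage."""
    return 100.0 * part / whole


site_share = share(GRIDPP_SITES, LCG_SITES)
cpu_share = share(GRIDPP_CPUS, LCG_CPUS)

print(f"GridPP share of LCG sites: {site_share:.1f}%")
print(f"GridPP share of LCG CPUs:  {cpu_share:.1f}%")
```

On these numbers GridPP provides about 17% of the sites and just over 20% of the CPUs, in line with the "UK ~20% of LCG" figure quoted for the data challenges.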

Deployment Status (26/10/04)
• Incremental releases: significant improvements in reliability, performance and scalability
– within the limits of the current architecture
– scalability is much better than expected a year ago
• Many more nodes and processors than anticipated
– installation problems of last year overcome
– many small sites have contributed to MC productions
• Full-scale testing as part of this year’s data challenges
• GridPP “The Grid becomes a reality”: widely reported
[Press coverage shown: Technology Sites, British Embassy (USA), British Embassy (Russia)]

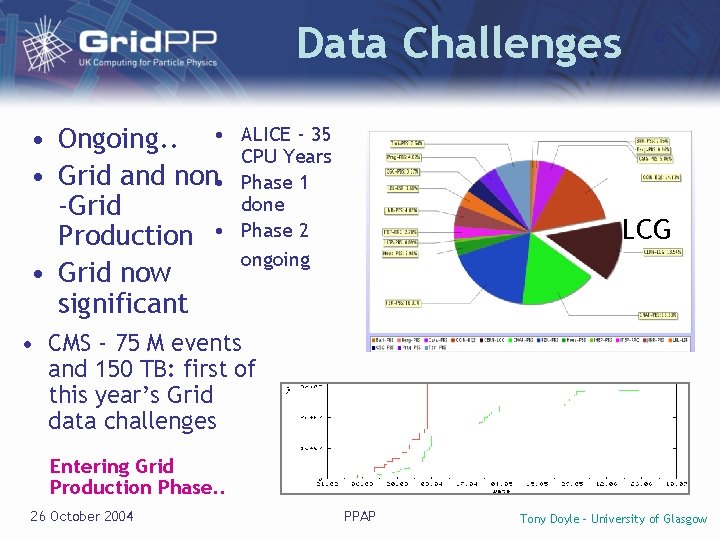

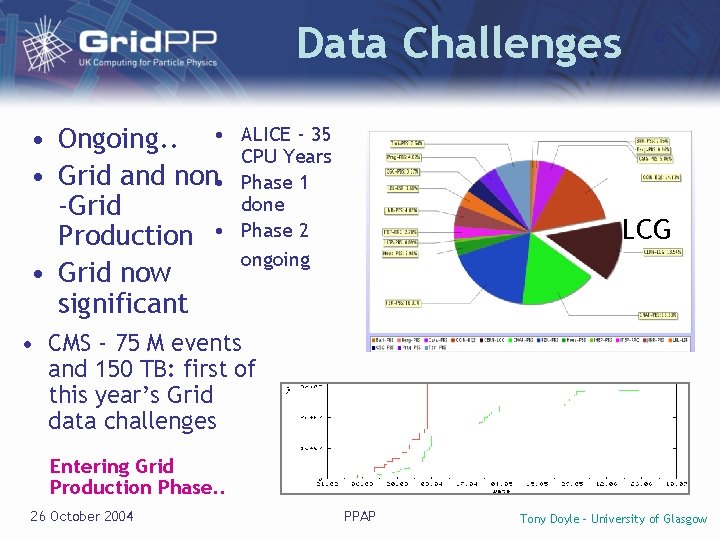

Data Challenges
• Ongoing..
• Grid and non-Grid Production
• Grid now significant
• ALICE: 35 CPU years; Phase 1 done, Phase 2 ongoing (LCG)
• CMS: 75 M events and 150 TB; first of this year’s Grid data challenges
Entering Grid Production Phase..

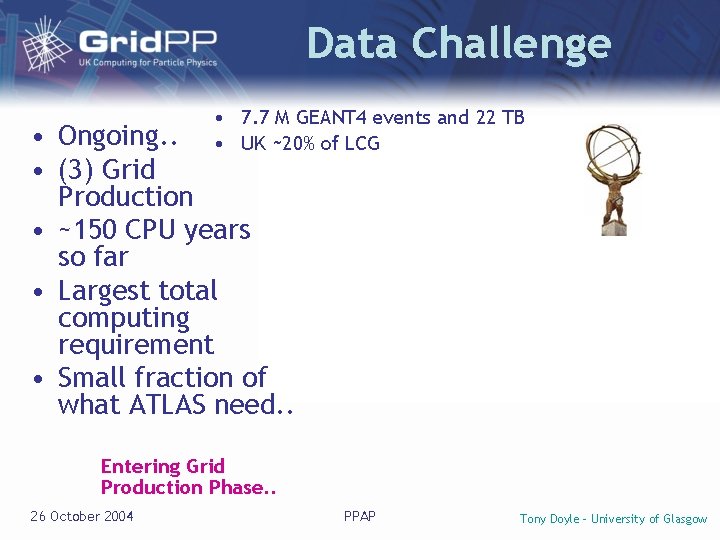

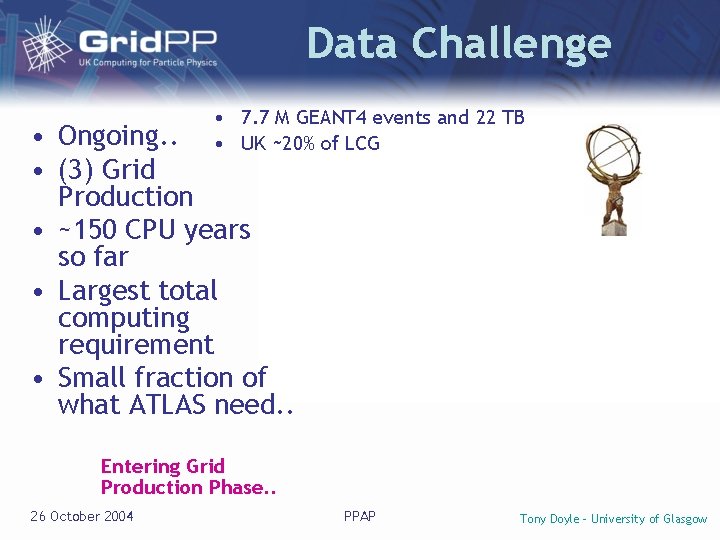

ATLAS Data Challenge
• 7.7 M GEANT4 events and 22 TB
• UK ~20% of LCG
• Ongoing..
• (3) Grid Production
• ~150 CPU years so far
• Largest total computing requirement
• Small fraction of what ATLAS need..
Entering Grid Production Phase..

LHCb Data Challenge
424 CPU years (4,000 kSI2k months), 186 M events
• UK’s input significant (>1/4 total)
• LCG(UK) resource:
– Tier-1: 7.7%
– Tier-2 sites: London 3.9%, South 2.3%, North 1.4%
• DIRAC:
– Imperial 2.0%, L'pool 3.1%, Oxford 0.1%, ScotGrid 5.1%
Entering Grid Production Phase..
[Plot: 186 M produced events, Phase 1 completed. Annotations: DIRAC alone at 1.8 × 10^6/day; LCG in action at 3-5 × 10^6/day; LCG paused; LCG restarted]
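The ">1/4 total" claim can be checked by adding up the UK percentages listed on this slide. The sketch below assumes the eight figures are shares of the same total and do not overlap; the dictionary names are illustrative.

```python
# UK contributions to the LHCb data challenge, in percent of the total,
# as listed on the slide.
lcg_uk = {"Tier-1": 7.7, "London": 3.9, "South": 2.3, "North": 1.4}
dirac_uk = {"Imperial": 2.0, "L'pool": 3.1, "Oxford": 0.1, "ScotGrid": 5.1}

lcg_total = sum(lcg_uk.values())
dirac_total = sum(dirac_uk.values())
uk_total = lcg_total + dirac_total

print(f"UK via LCG:   {lcg_total:.1f}%")
print(f"UK via DIRAC: {dirac_total:.1f}%")
print(f"UK total:     {uk_total:.1f}%")
```

Under that assumption the UK total comes to 25.6%, just above one quarter.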

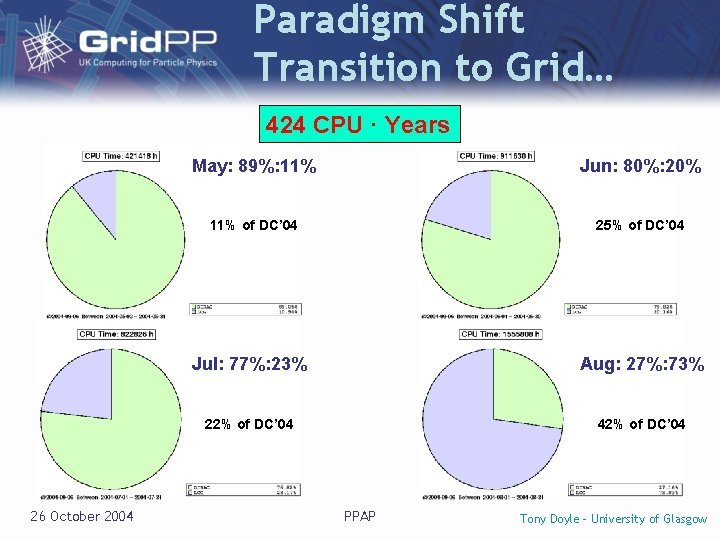

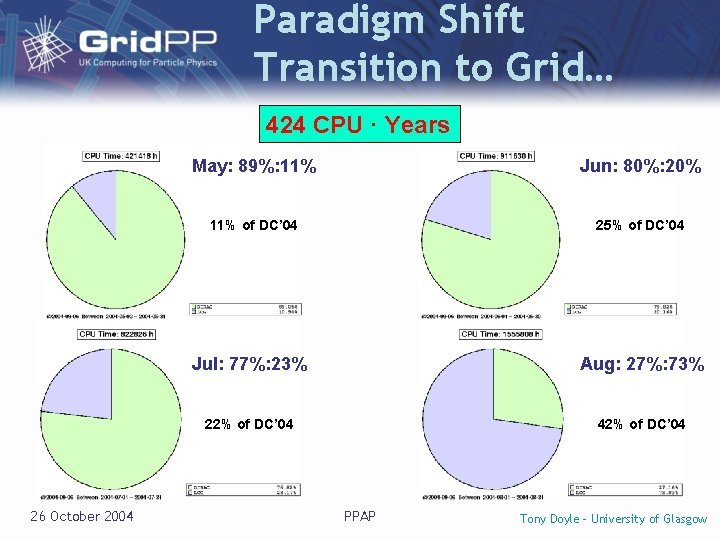

Paradigm Shift: Transition to Grid…
424 CPU · Years
• May: 89% : 11% (11% of DC'04)
• Jun: 80% : 20% (25% of DC'04)
• Jul: 77% : 23% (22% of DC'04)
• Aug: 27% : 73% (42% of DC'04)

What was GridPP1?
• A team that built a working prototype grid of significant scale
> 1,500 (7,300) CPUs
> 500 (6,500) TB of storage
> 1,000 (6,000) simultaneous jobs
• A complex project where 82% of the 190 tasks for the first three years were completed
A Success: “The achievement of something desired, planned, or attempted”

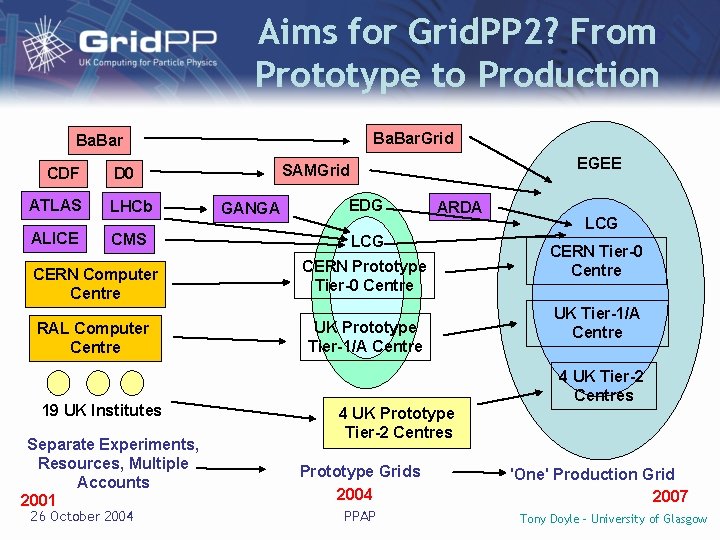

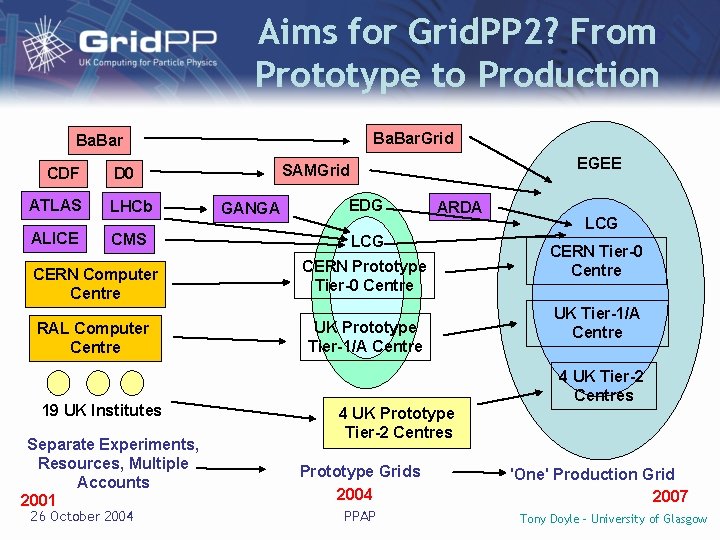

Aims for GridPP2? From Prototype to Production
• 2001: Separate Experiments, Resources, Multiple Accounts (CERN Computer Centre, RAL Computer Centre, 19 UK Institutes)
• 2004: Prototype Grids (CERN Prototype Tier-0 Centre, UK Prototype Tier-1/A Centre, 4 UK Prototype Tier-2 Centres)
• 2007: 'One' Production Grid (CERN Tier-0 Centre, UK Tier-1/A Centre, 4 UK Tier-2 Centres)
[Diagram labels: BaBarGrid, BaBar, CDF, D0, ATLAS, LHCb, ALICE, CMS, SAMGrid, EDG, GANGA, ARDA, EGEE, LCG]

Planning: GridPP2 ProjectMap
Need to recognise future requirements in each area…

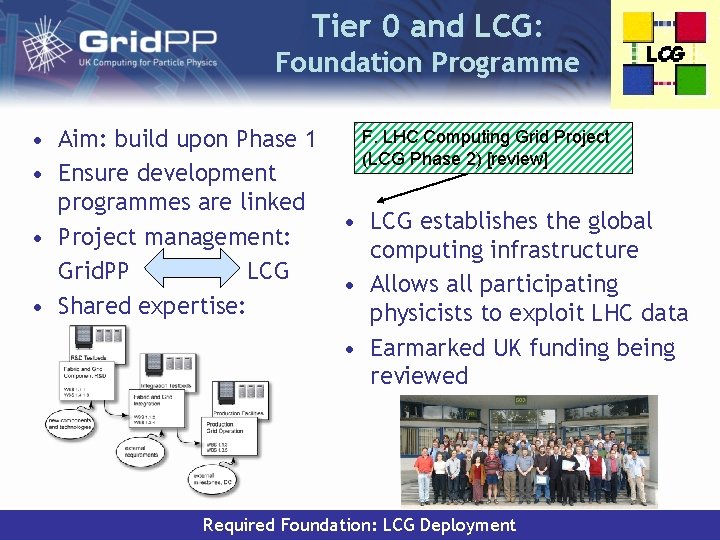

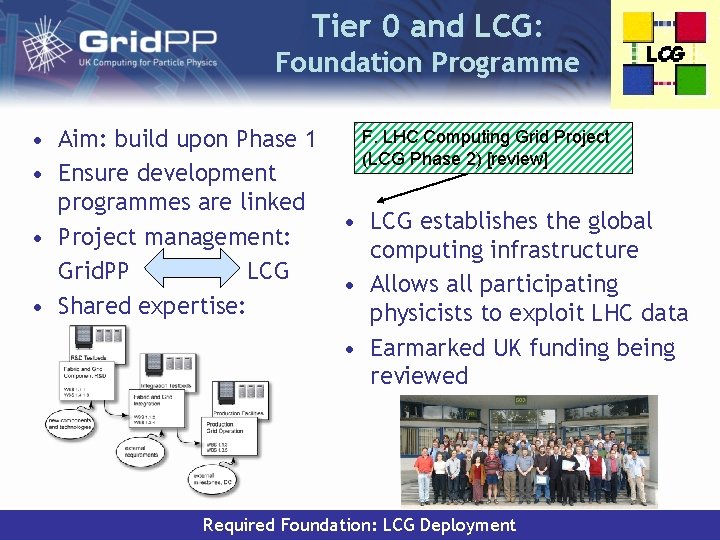

Tier 0 and LCG: Foundation Programme
• Aim: build upon Phase 1
• Ensure development programmes are linked
• Project management: GridPP, LCG
• Shared expertise:
F. LHC Computing Grid Project (LCG Phase 2) [review]
• LCG establishes the global computing infrastructure
• Allows all participating physicists to exploit LHC data
• Earmarked UK funding being reviewed
Required Foundation: LCG Deployment

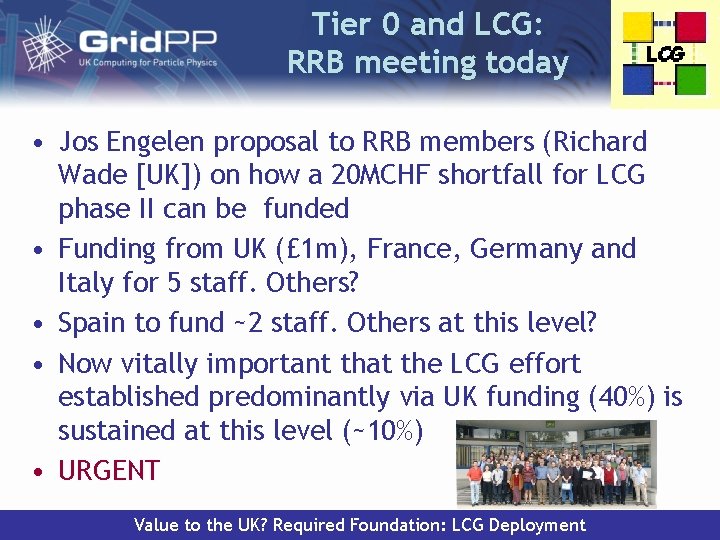

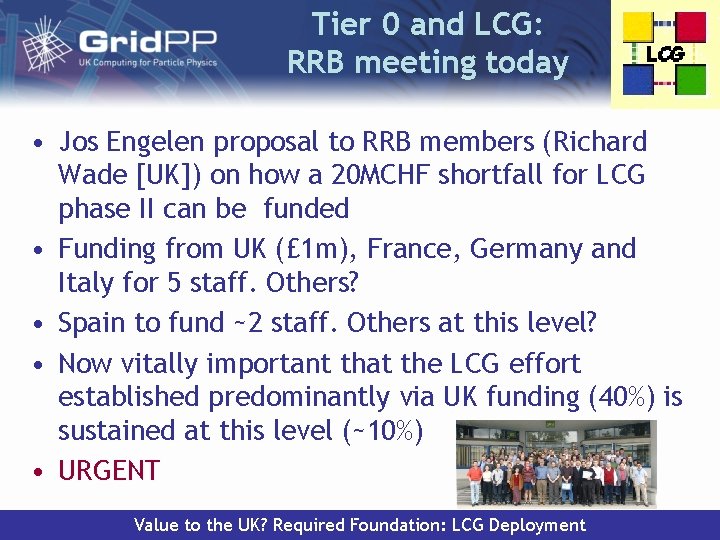

Tier 0 and LCG: RRB meeting today
• Jos Engelen proposal to RRB members (Richard Wade [UK]) on how a 20 MCHF shortfall for LCG Phase II can be funded
• Funding from UK (£1m), France, Germany and Italy for 5 staff. Others?
• Spain to fund ~2 staff. Others at this level?
• Now vitally important that the LCG effort established predominantly via UK funding (40%) is sustained at this level (~10%)
• URGENT
Value to the UK? Required Foundation: LCG Deployment

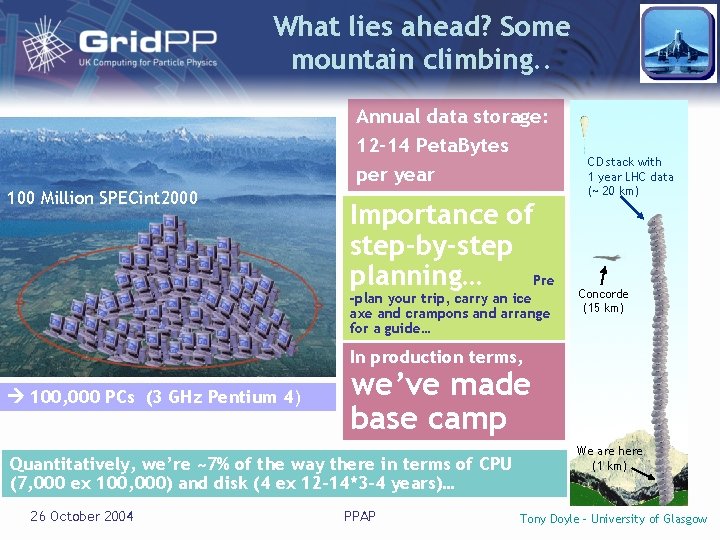

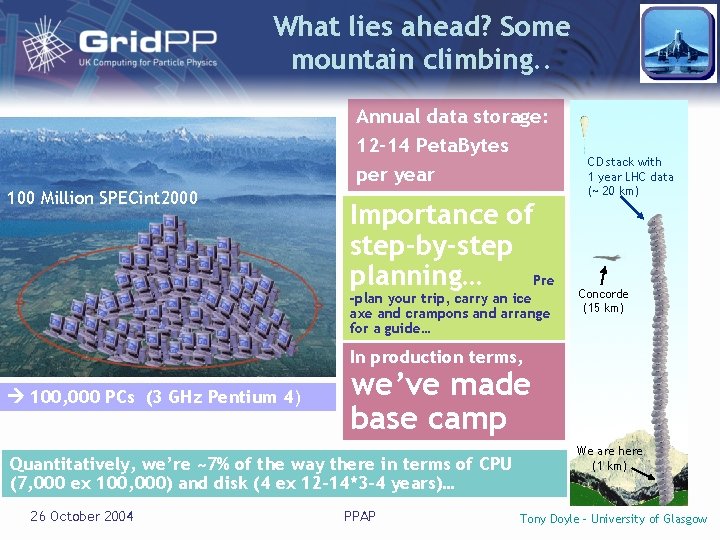

What lies ahead? Some mountain climbing..
• Annual data storage: 12-14 PetaBytes per year
• 100 Million SPECint2000: 100,000 PCs (3 GHz Pentium 4)
• Scale: CD stack with 1 year LHC data (~20 km); Concorde (15 km); We are here (1 km)
Importance of step-by-step planning… Pre-plan your trip, carry an ice axe and crampons and arrange for a guide…
In production terms, we’ve made base camp.
Quantitatively, we’re ~7% of the way there in terms of CPU (7,000 ex 100,000) and disk (4 ex 12-14*3-4 years)…
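The "~7% of the way there" estimate can be reproduced from the numbers on this slide. The sketch below is illustrative only: it assumes the disk figure "4" is in PB and that the disk target is the annual volume (12-14 PB) multiplied by 3-4 years, which is how the slide's shorthand reads. All names are hypothetical.

```python
# CPU: installed processors versus the 100,000-PC target on the slide.
cpus_now, cpus_needed = 7_000, 100_000
cpu_fraction = cpus_now / cpus_needed

# Disk: ASSUMPTION - the slide's "4" is taken to mean 4 PB available now,
# and the target is (12-14 PB per year) x (3-4 years of data).
disk_now_pb = 4
annual_pb_low, annual_pb_high = 12, 14
years_low, years_high = 3, 4

disk_target_low = annual_pb_low * years_low      # smallest target, in PB
disk_target_high = annual_pb_high * years_high   # largest target, in PB

disk_fraction_high = disk_now_pb / disk_target_low   # against smallest target
disk_fraction_low = disk_now_pb / disk_target_high   # against largest target

print(f"CPU progress:  {cpu_fraction:.0%}")
print(f"Disk progress: {disk_fraction_low:.0%} to {disk_fraction_high:.0%}")
```

With these assumptions the CPU fraction is 7% and the disk fraction falls between roughly 7% and 11%, so the slide's single "~7%" corresponds to the most demanding disk target.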

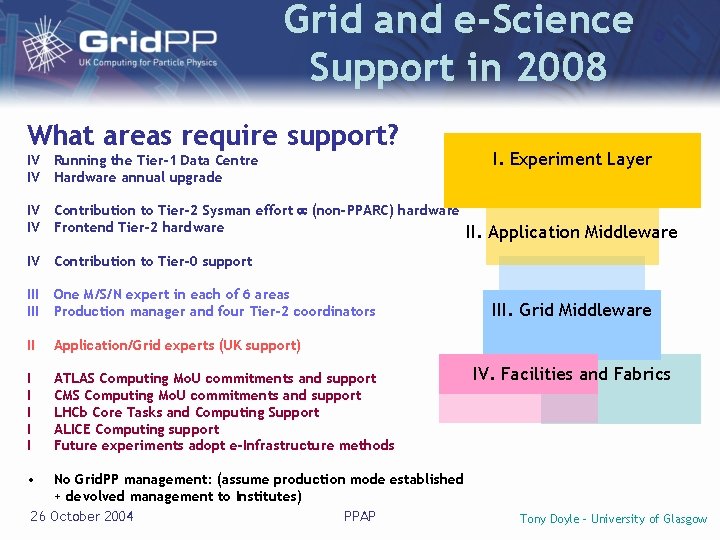

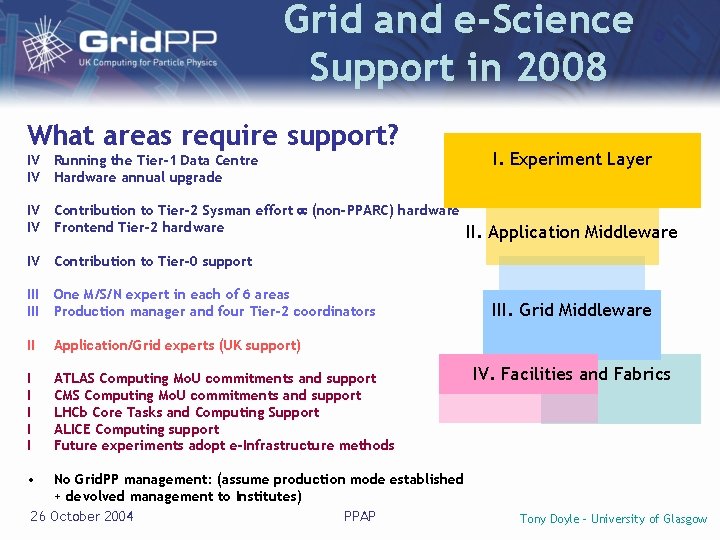

Grid and e-Science Support in 2008
What areas require support?
IV. Facilities and Fabrics
• Running the Tier-1 Data Centre
• Hardware annual upgrade
• Contribution to Tier-2 Sysman effort, (non-PPARC) hardware
• Frontend Tier-2 hardware
• Contribution to Tier-0 support
III. Grid Middleware
• One M/S/N expert in each of 6 areas
• Production manager and four Tier-2 coordinators
II. Application Middleware
• Application/Grid experts (UK support)
I. Experiment Layer
• ATLAS Computing MoU commitments and support
• CMS Computing MoU commitments and support
• LHCb Core Tasks and Computing Support
• ALICE Computing support
• Future experiments adopt e-Infrastructure methods
• No GridPP management (assume production mode established + devolved management to Institutes)

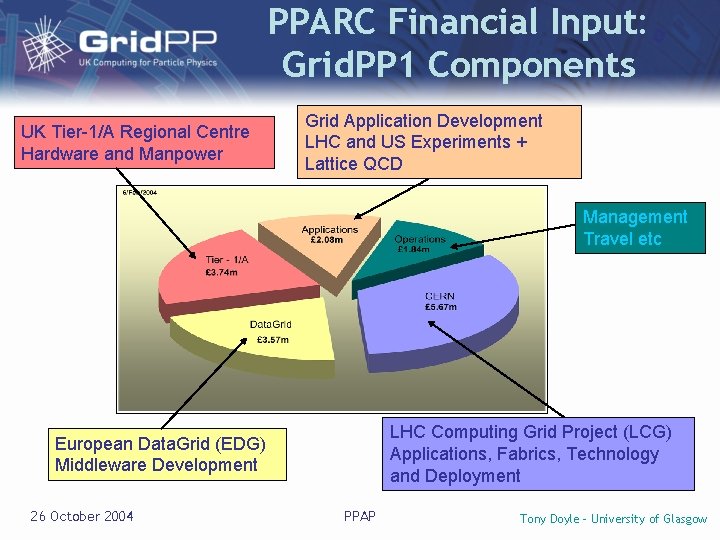

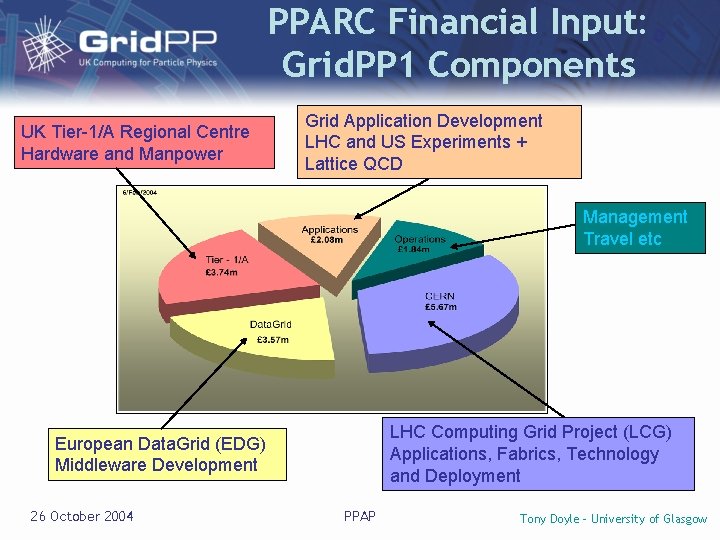

PPARC Financial Input: GridPP1 Components
• UK Tier-1/A Regional Centre: Hardware and Manpower
• Grid Application Development: LHC and US Experiments + Lattice QCD
• Management, Travel etc.
• LHC Computing Grid Project (LCG): Applications, Fabrics, Technology and Deployment
• European DataGrid (EDG): Middleware Development

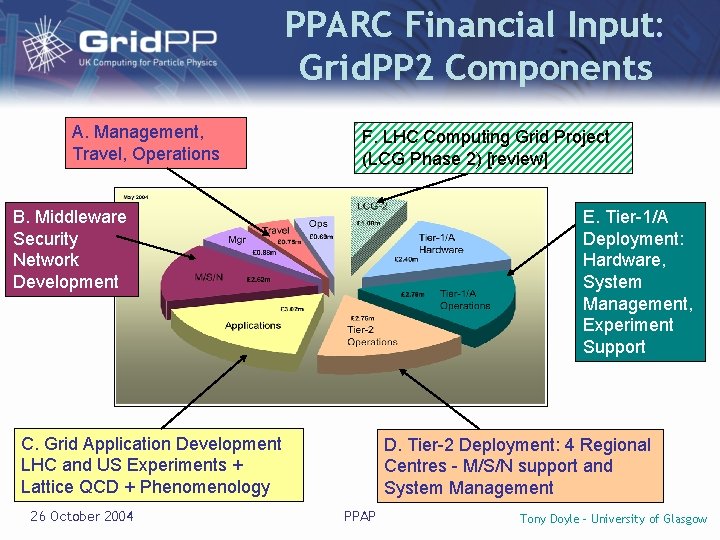

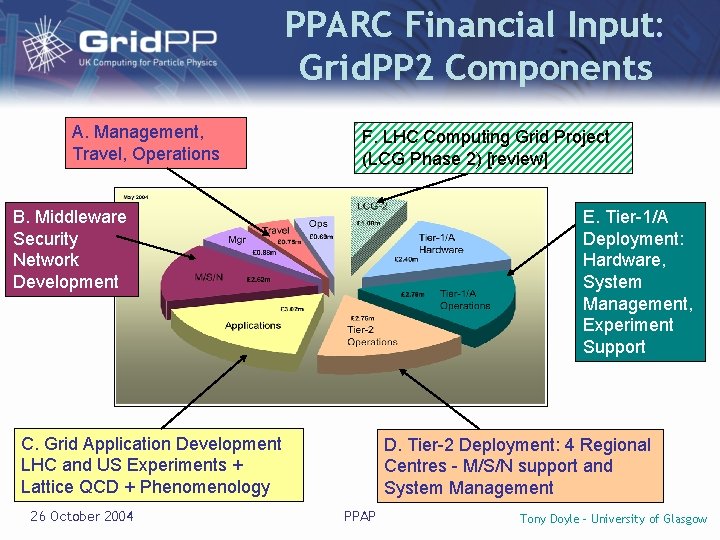

PPARC Financial Input: GridPP2 Components
A. Management, Travel, Operations
B. Middleware Security Network Development
C. Grid Application Development: LHC and US Experiments + Lattice QCD + Phenomenology
D. Tier-2 Deployment: 4 Regional Centres - M/S/N support and System Management
E. Tier-1/A Deployment: Hardware, System Management, Experiment Support
F. LHC Computing Grid Project (LCG Phase 2) [review]

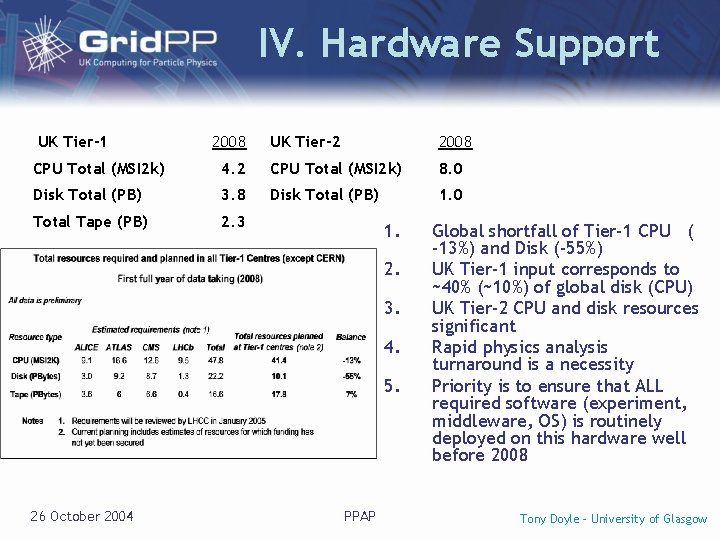

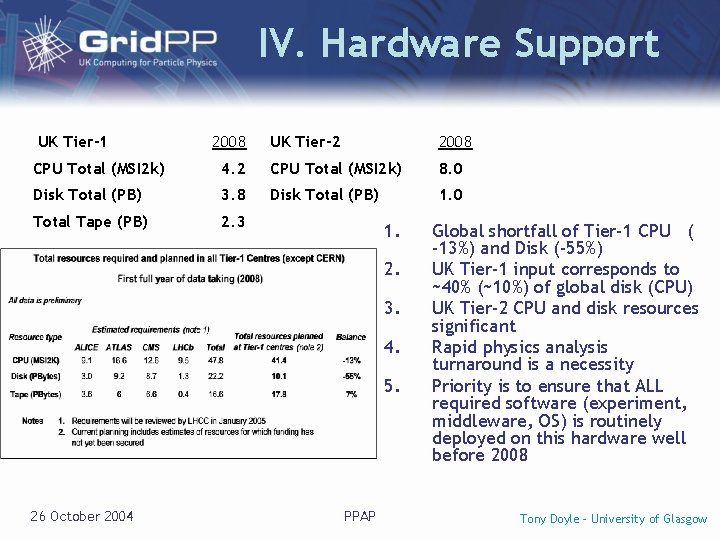

IV. Hardware Support

| 2008 | UK Tier-1 | UK Tier-2 |
|---|---|---|
| CPU Total (MSI2k) | 4.2 | 8.0 |
| Disk Total (PB) | 3.8 | 1.0 |
| Total Tape (PB) | 2.3 | |

1. Global shortfall of Tier-1 CPU (-13%) and Disk (-55%)
2. UK Tier-1 input corresponds to ~40% (~10%) of global disk (CPU)
3. UK Tier-2 CPU and disk resources significant
4. Rapid physics analysis turnaround is a necessity
5. Priority is to ensure that ALL required software (experiment, middleware, OS) is routinely deployed on this hardware well before 2008

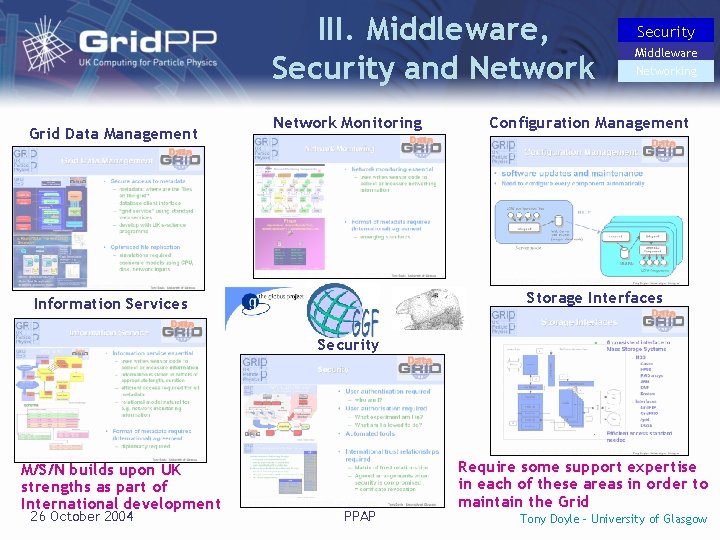

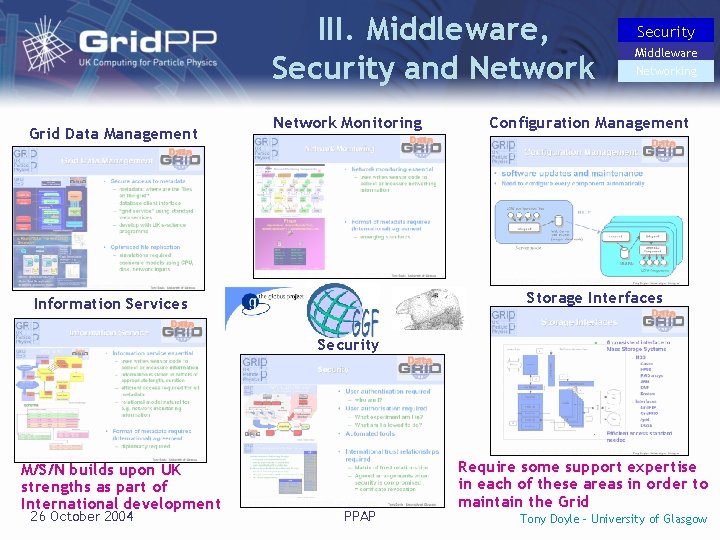

III. Middleware, Security and Network
M/S/N builds upon UK strengths as part of International development
Areas (Security, Middleware, Networking):
• Grid Data Management
• Network Monitoring
• Configuration Management
• Storage Interfaces
• Information Services
• Security
Require some support expertise in each of these areas in order to maintain the Grid

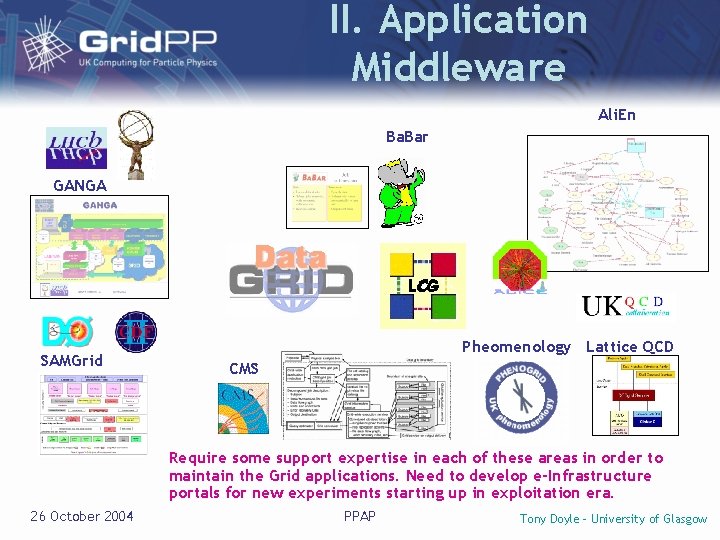

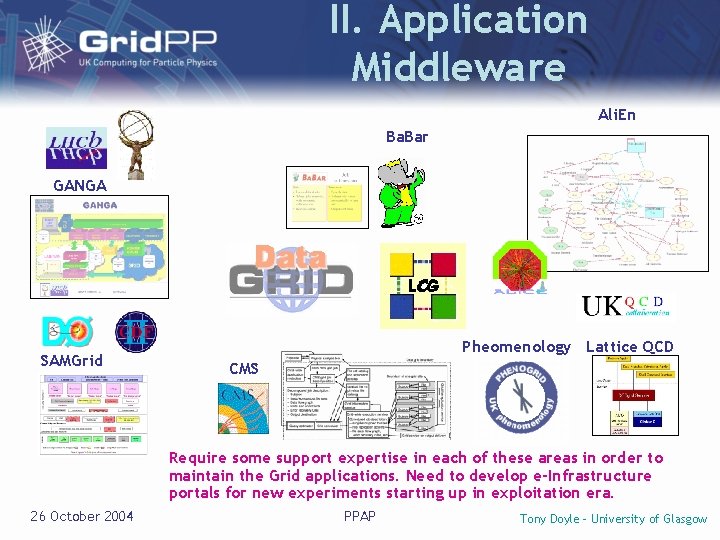

II. Application Middleware
AliEn, BaBar, GANGA, SAMGrid, Phenomenology, Lattice QCD, CMS
Require some support expertise in each of these areas in order to maintain the Grid applications.
Need to develop e-Infrastructure portals for new experiments starting up in exploitation era.

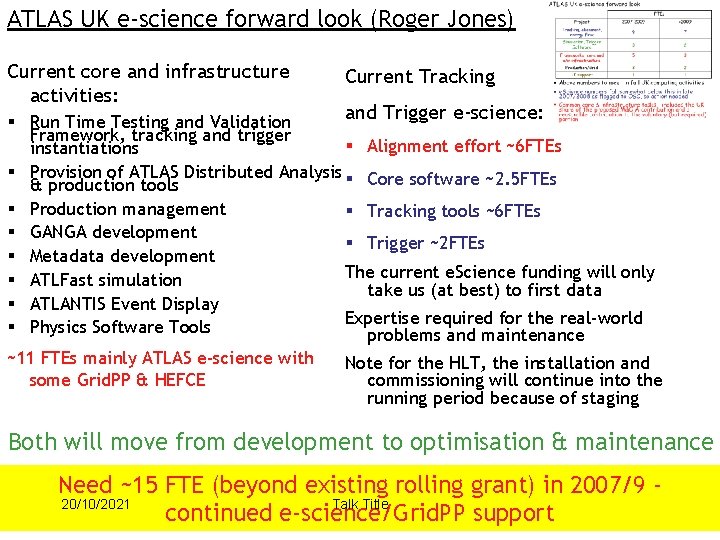

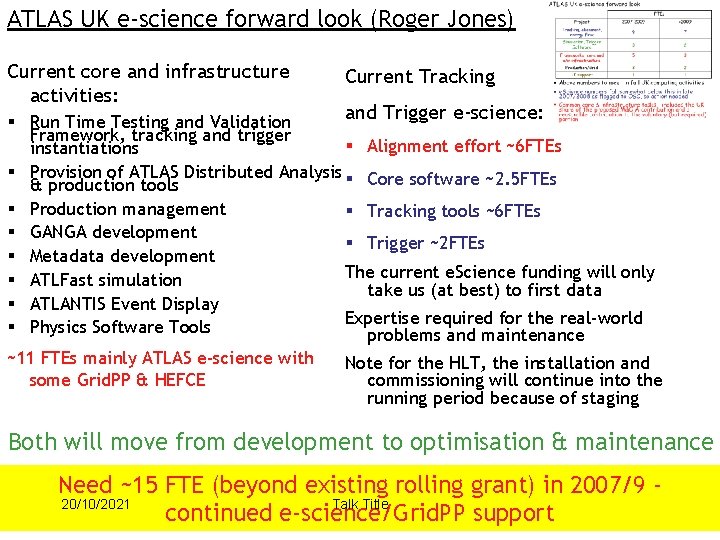

ATLAS UK e-science forward look (Roger Jones)
Current core and infrastructure activities (~11 FTEs, mainly ATLAS e-science with some GridPP & HEFCE):
§ Run Time Testing and Validation Framework, tracking and trigger instantiations
§ Provision of ATLAS Distributed Analysis & production tools
§ Production management
§ GANGA development
§ Metadata development
§ ATLFast simulation
§ ATLANTIS Event Display
§ Physics Software Tools
Current Tracking and Trigger e-science:
§ Alignment effort ~6 FTEs
§ Core software ~2.5 FTEs
§ Tracking tools ~6 FTEs
§ Trigger ~2 FTEs
Note: for the HLT, the installation and commissioning will continue into the running period because of staging.
The current eScience funding will only take us (at best) to first data. Expertise required for the real-world problems and maintenance.
Both will move from development to optimisation & maintenance.
Need ~15 FTE (beyond existing rolling grant) in 2007/9 - continued e-science/GridPP support

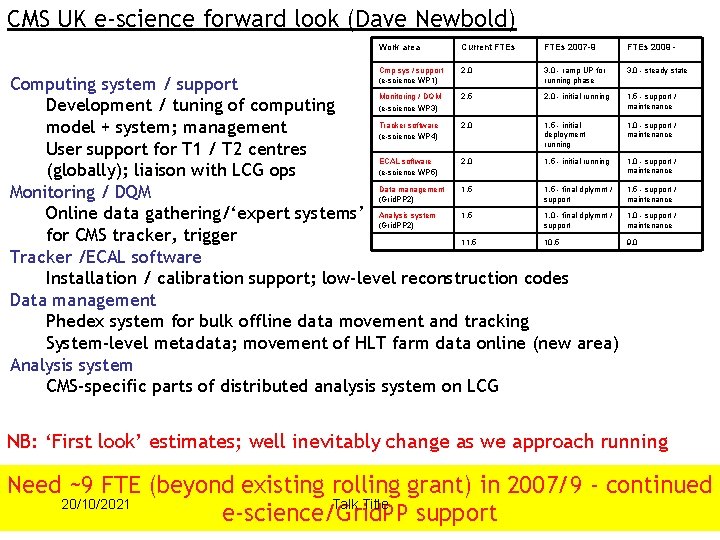

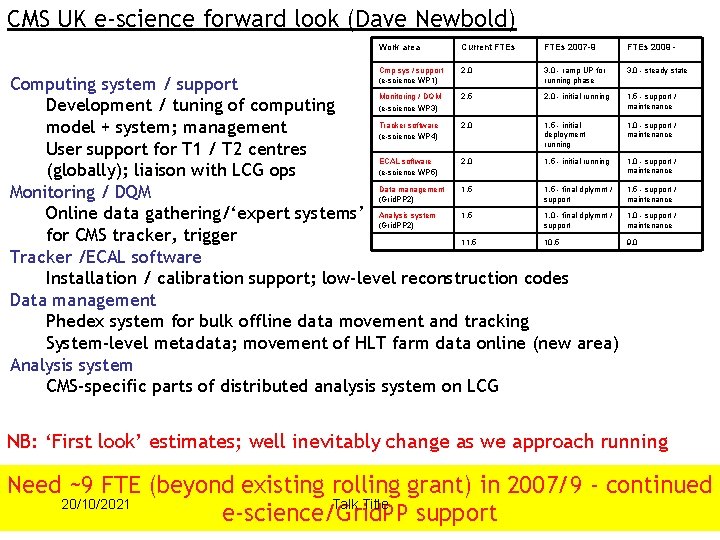

CMS UK e-science forward look (Dave Newbold)

| Work area | Current FTEs | 2007-9 FTEs | 2009- |
|---|---|---|---|
| Cmp sys / support (e-science WP1) | 2.0 | 3.0 - ramp UP for running phase | 3.0 - steady state |
| Monitoring / DQM (e-science WP3) | 2.5 | 2.0 - initial running | |
| Tracker software (e-science WP4) | 2.0 | 1.5 - initial deployment / running | |
| ECAL software (e-science WP5) | 2.0 | 1.5 - initial running | |
| Data management (GridPP2) | 1.5 | 1.5 - final dplymnt / support | 1.5 - support / maintenance |
| Analysis system (GridPP2) | 1.5 | 1.0 - final dplymnt / support | 1.0 - support / maintenance |
| Total | 11.5 | 10.5 | 9.0 |

Work areas:
• Computing system / support: development / tuning of computing model + system; management; user support for T1 / T2 centres (globally); liaison with LCG ops
• Monitoring / DQM: online data gathering / ‘expert systems’ for CMS tracker, trigger
• Tracker / ECAL software: installation / calibration support; low-level reconstruction codes
• Data management: Phedex system for bulk offline data movement and tracking; system-level metadata; movement of HLT farm data online (new area)
• Analysis system: CMS-specific parts of distributed analysis system on LCG
NB: ‘First look’ estimates; will inevitably change as we approach running
Need ~9 FTE (beyond existing rolling grant) in 2007/9 - continued e-science/GridPP support

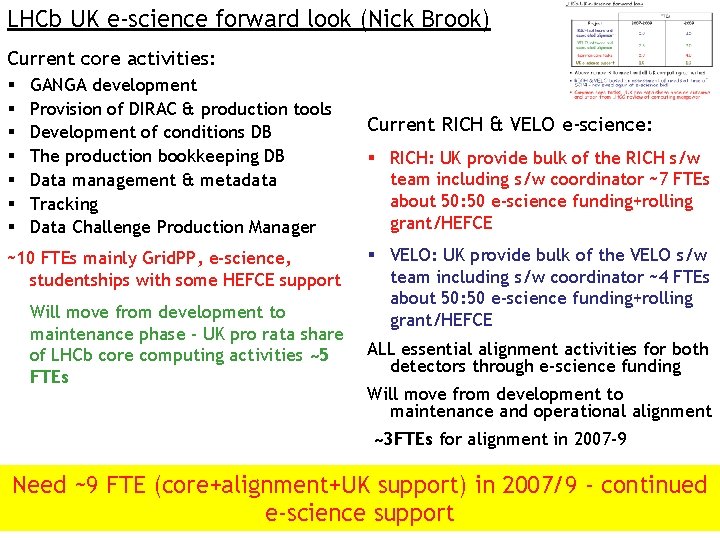

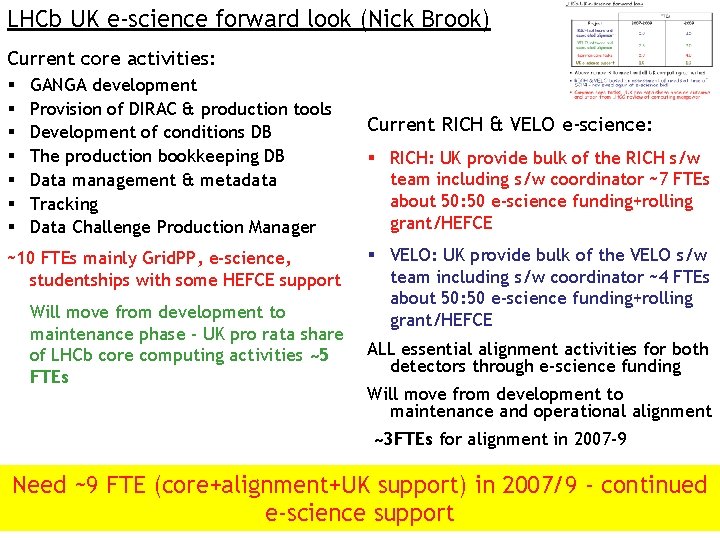

LHCb UK e-science forward look (Nick Brook)
Current core activities (~10 FTEs, mainly GridPP, e-science, studentships with some HEFCE support):
§ GANGA development
§ Provision of DIRAC & production tools
§ Development of conditions DB
§ The production bookkeeping DB
§ Data management & metadata
§ Tracking
§ Data Challenge Production Manager
Will move from development to maintenance phase - UK pro rata share of LHCb core computing activities ~5 FTEs
Current RICH & VELO e-science:
§ RICH: UK provide bulk of the RICH s/w team including s/w coordinator; ~7 FTEs, about 50:50 e-science funding + rolling grant/HEFCE
§ VELO: UK provide bulk of the VELO s/w team including s/w coordinator; ~4 FTEs, about 50:50 e-science funding + rolling grant/HEFCE
ALL essential alignment activities for both detectors through e-science funding
Will move from development to maintenance and operational alignment: ~3 FTEs for alignment in 2007-9
Need ~9 FTE (core+alignment+UK support) in 2007/9 - continued e-science support
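The three experiment forward looks each end with a headline FTE request for 2007/9. A minimal sketch of the combined figure, using only those headline numbers (ALICE gives none in this talk, and the names below are illustrative):

```python
# Headline 2007/9 e-science FTE requests quoted on the ATLAS, CMS and
# LHCb forward-look slides (all approximate "~" figures in the source).
fte_requests = {"ATLAS": 15, "CMS": 9, "LHCb": 9}

total_fte = sum(fte_requests.values())

for experiment, fte in fte_requests.items():
    print(f"{experiment:5s} ~{fte:2d} FTE ({fte / total_fte:.0%} of total)")
print(f"Total ~{total_fte} FTE")
```

This simply sums the quoted requests to ~33 FTE; it makes no attempt to map FTEs onto the funding figures given elsewhere in the talk.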

Grid and e-Science funding requirements
• Priorities in context of a financial snapshot in 2008
• Grid (£5.6m p.a.) and e-Science (£2.7m p.a.)
• Assumes no GridPP project management
• Savings?
– EGEE Phase 2 (2006-08) may contribute
– UK e-Science context is
1. NGS (National Grid Service)
2. OMII (Open Middleware Infrastructure Institute)
3. DCC (Digital Curation Centre)
• To be compared with Road Map: Not a Bid - Preliminary Input
• Timeline?

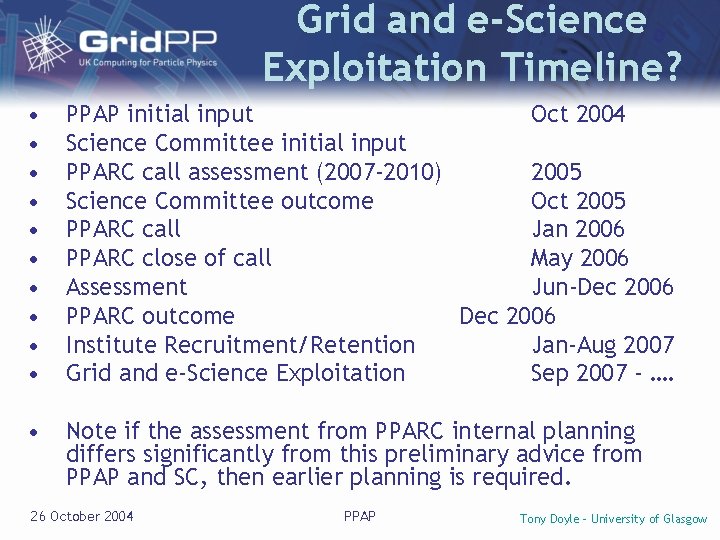

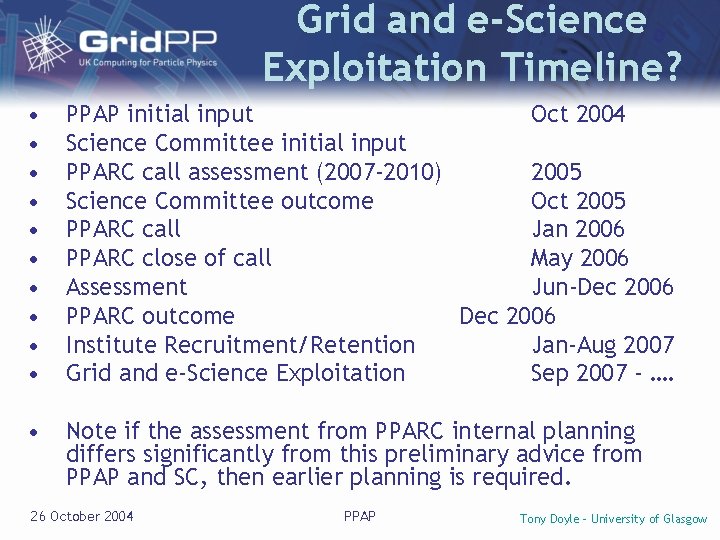

Grid and e-Science Exploitation Timeline?
• PPAP initial input: Oct 2004
• Science Committee initial input
• PPARC call assessment (2007-2010): 2005
• Science Committee outcome: Oct 2005
• PPARC call: Jan 2006
• PPARC close of call: May 2006
• Assessment: Jun-Dec 2006
• PPARC outcome: Dec 2006
• Institute Recruitment/Retention: Jan-Aug 2007
• Grid and e-Science Exploitation: Sep 2007 - ….
• Note: if the assessment from PPARC internal planning differs significantly from this preliminary advice from PPAP and SC, then earlier planning is required.

Summary
1. What has been achieved in GridPP1?
• Widely recognised as successful at many levels
2. What is being attempted in GridPP2?
• Prototype to Production – typically most difficult phase
• UK should invest further in LCG Phase-2
3. Resources needed for Grid and e-Science in medium-long term?
• Current Road Map: ~£6m p.a.
• Resources needed in 2008 estimated at £8.3m
• Timeline for decision-making outlined..
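The £8.3m estimate is the sum of the Grid and e-Science figures from the funding-requirements slide, and can be set against the Road Map figure. A minimal sketch, working in thousands of pounds to avoid floating-point rounding; it assumes the "~£6m p.a." on this slide refers to the current Road Map, and the names are illustrative.

```python
# Annual figures for 2008 in thousands of pounds (GBP k), from the talk.
grid_k = 5_600        # Grid: GBP 5.6m p.a.
escience_k = 2_700    # e-Science: GBP 2.7m p.a.

# ASSUMPTION: the "~GBP 6m p.a." quoted in the summary is the Road Map level.
road_map_k = 6_000

needed_k = grid_k + escience_k
gap_k = needed_k - road_map_k

print(f"Resources needed in 2008: GBP {needed_k / 1000:.1f}m")
print(f"Road Map:                 GBP {road_map_k / 1000:.1f}m")
print(f"Difference:               GBP {gap_k / 1000:.1f}m")
```

On that reading, the estimated requirement exceeds the Road Map level by about £2.3m per year.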