ERROR CONTROL CODING

- Basic concepts
- Classes of codes: block codes, linear codes, cyclic codes, convolutional codes

Basic Concepts

Example: binary repetition codes. The (3, 1) code maps 0 ==> 000 and 1 ==> 111.

Received: 011. What was transmitted?

- Scenario A: 111 with one error in the 1st location.
- Scenario B: 000 with two errors in the 2nd and 3rd locations.

Decoding: with $p$ the bit error probability of the channel, $P(A) = (1 - p)^2 p$ and $P(B) = (1 - p) p^2$, so $P(A) > P(B)$ for $p < 0.5$.

Decoding decision: 011 ==> 111.
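The comparison of the two scenarios can be sketched in a few lines of Python. This is only an illustration: the function names `likelihood` and `decode_repetition` are made up here, and the channel is assumed to be a binary symmetric channel with independent bit errors of probability `p`.

```python
def likelihood(received: str, codeword: str, p: float) -> float:
    """Probability of receiving `received` when `codeword` was sent over a BSC."""
    errors = sum(r != c for r, c in zip(received, codeword))
    return (p ** errors) * ((1 - p) ** (len(received) - errors))


def decode_repetition(received: str, p: float = 0.01) -> str:
    """Pick the (3, 1) repetition codeword with the larger likelihood."""
    candidates = ["000", "111"]
    return max(candidates, key=lambda c: likelihood(received, c, p))


p = 0.01
print(likelihood("011", "111", p))  # P(A) = (1 - p)^2 * p
print(likelihood("011", "000", p))  # P(B) = (1 - p) * p^2
print(decode_repetition("011", p))  # 111
```

For any `p` below 0.5 the scenario with fewer errors wins, which is why the decision is 111.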

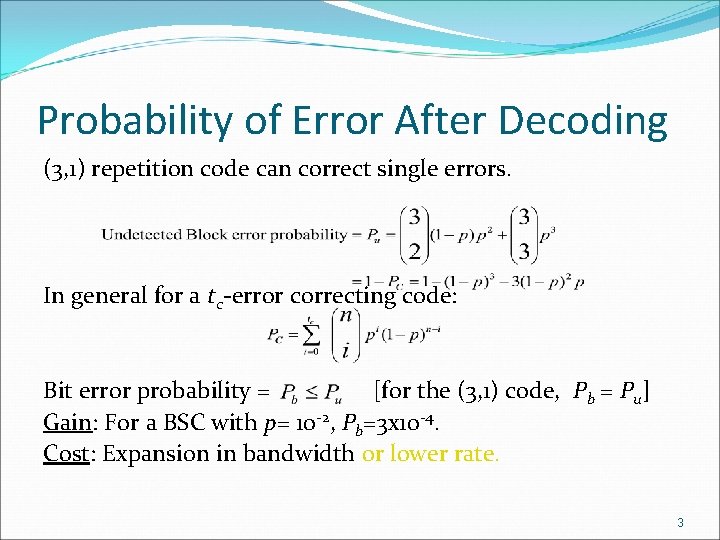

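Probability of Error After Decoding

The (3, 1) repetition code can correct single errors. In general, a $t_c$-error-correcting code of length $n$ decodes a word incorrectly only when more than $t_c$ errors occur, so the probability of an uncorrected word error satisfies

$$P_u \le \sum_{j=t_c+1}^{n} \binom{n}{j} p^j (1-p)^{n-j}.$$

For the (3, 1) code, the bit error probability is $P_b = P_u$.

- Gain: for a BSC with $p = 10^{-2}$, $P_b \approx 3 \times 10^{-4}$.
- Cost: expansion in bandwidth or a lower rate.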
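The quoted gain can be checked numerically. The sketch below assumes independent bit errors on a BSC and sums the probability of every pattern with more than $t_c$ errors in $n$ bits; `word_error_bound` is an illustrative name, not something taken from the slides.

```python
from math import comb


def word_error_bound(n: int, t_c: int, p: float) -> float:
    """Probability that more than t_c of the n transmitted bits are in error."""
    return sum(comb(n, j) * p ** j * (1 - p) ** (n - j) for j in range(t_c + 1, n + 1))


# (3, 1) repetition code, t_c = 1, BSC with p = 0.01
print(word_error_bound(3, 1, 1e-2))  # about 2.98e-4, i.e. roughly 3 x 10^-4
```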

Hamming Distance

Def.: The Hamming distance between two codewords $c_i$ and $c_j$, denoted by $d_H(c_i, c_j)$, is the number of components in which they differ. Equivalently, $d_H(c_1, c_2) = w_H(c_1 + c_2)$, the Hamming weight of their modulo-2 sum.

$d_H(011, 000) = 2$ and $d_H(011, 111) = 1$; therefore 011 is closer to 111.

On a BSC with $p < 0.5$, maximum likelihood decoding reduces to minimum distance decoding, and it is the optimum decision rule if the a priori probabilities are equal ($P(0) = P(1)$).
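A minimal sketch of these definitions, with words represented as strings of `0` and `1` (a representation chosen here for readability; all function names are illustrative):

```python
def xor_words(a: str, b: str) -> str:
    """Component-wise modulo-2 sum of two equal-length binary words."""
    return "".join("1" if x != y else "0" for x, y in zip(a, b))


def hamming_weight(word: str) -> int:
    """Number of nonzero components."""
    return word.count("1")


def hamming_distance(a: str, b: str) -> int:
    """d_H(a, b) computed as the weight of a + b (mod 2)."""
    return hamming_weight(xor_words(a, b))


def min_distance_decode(received: str, codewords: list[str]) -> str:
    """Return the codeword closest to the received word."""
    return min(codewords, key=lambda c: hamming_distance(received, c))


print(hamming_distance("011", "000"))              # 2
print(hamming_distance("011", "111"))              # 1
print(min_distance_decode("011", ["000", "111"]))  # 111
```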

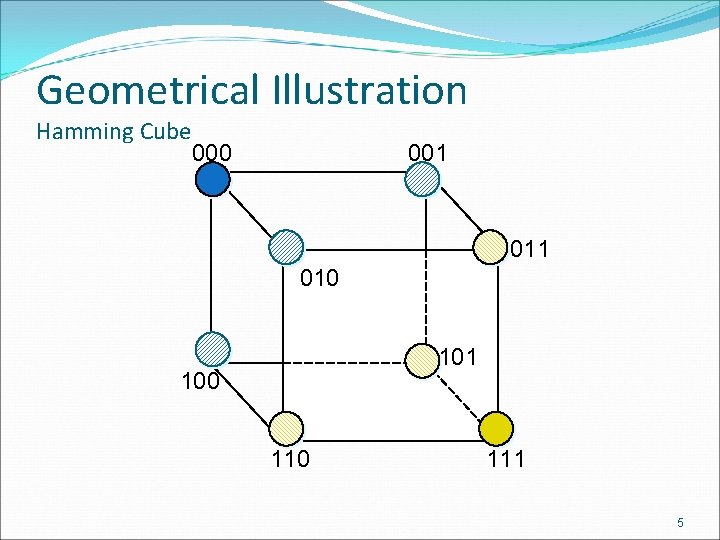

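Geometrical Illustration

Hamming cube: the eight 3-bit words (000, 001, 010, 011, 100, 101, 110, 111) are the vertices of a cube, and words at Hamming distance 1 share an edge. The codewords 000 and 111 sit at opposite corners.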

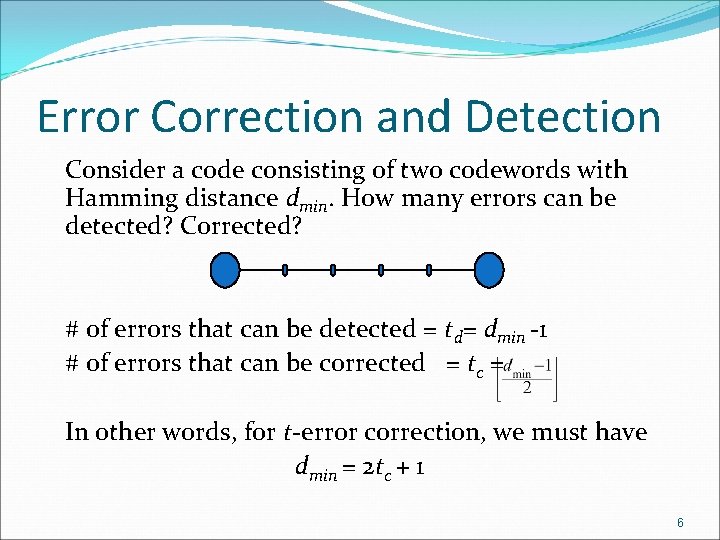

Error Correction and Detection

Consider a code consisting of two codewords with Hamming distance $d_{min}$. How many errors can be detected? Corrected?

- Number of errors that can be detected: $t_d = d_{min} - 1$
- Number of errors that can be corrected: $t_c = \lfloor (d_{min} - 1)/2 \rfloor$

In other words, for $t_c$-error correction, we must have $d_{min} \ge 2 t_c + 1$.
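The two capabilities follow directly from $d_{min}$: detection works for up to $d_{min} - 1$ errors, and correction for up to half of that, rounded down. A small sketch with illustrative function names:

```python
def detectable_errors(d_min: int) -> int:
    """Largest number of errors guaranteed to be detected."""
    return d_min - 1


def correctable_errors(d_min: int) -> int:
    """Largest number of errors guaranteed to be corrected."""
    return (d_min - 1) // 2


# (3, 1) repetition code: the two codewords 000 and 111 are at distance 3
print(detectable_errors(3))   # 2
print(correctable_errors(3))  # 1
```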

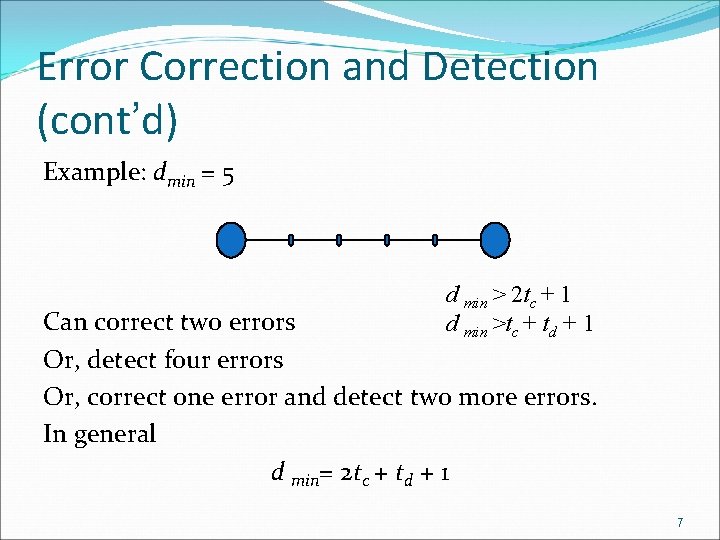

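Error Correction and Detection (cont’d)

Example: $d_{min} = 5$. Correction alone requires $d_{min} \ge 2 t_c + 1$; correcting $t_c$ errors while detecting up to $t_d \ge t_c$ errors in total requires $d_{min} \ge t_c + t_d + 1$. The code can therefore:

- correct two errors, or
- detect four errors, or
- correct one error and detect two more errors.

In general, $d_{min} \ge 2 t_c + t_d + 1$, where $t_d$ here counts the errors detected beyond the $t_c$ that are corrected.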
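The three options for $d_{min} = 5$ can be enumerated mechanically. The sketch assumes the relation $d_{min} \ge 2 t_c + t_d + 1$ with $t_d$ counted as the errors detected beyond those corrected; the function name is illustrative.

```python
def correction_detection_tradeoffs(d_min: int) -> list[tuple[int, int]]:
    """List (t_c, extra errors detected) pairs that use the full minimum distance."""
    options = []
    for t_c in range((d_min - 1) // 2 + 1):
        extra_detected = d_min - 1 - 2 * t_c
        options.append((t_c, extra_detected))
    return options


print(correction_detection_tradeoffs(5))  # [(0, 4), (1, 2), (2, 0)]
```

Each pair reads as "correct `t_c` errors and detect that many additional errors", matching the three cases listed for $d_{min} = 5$.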

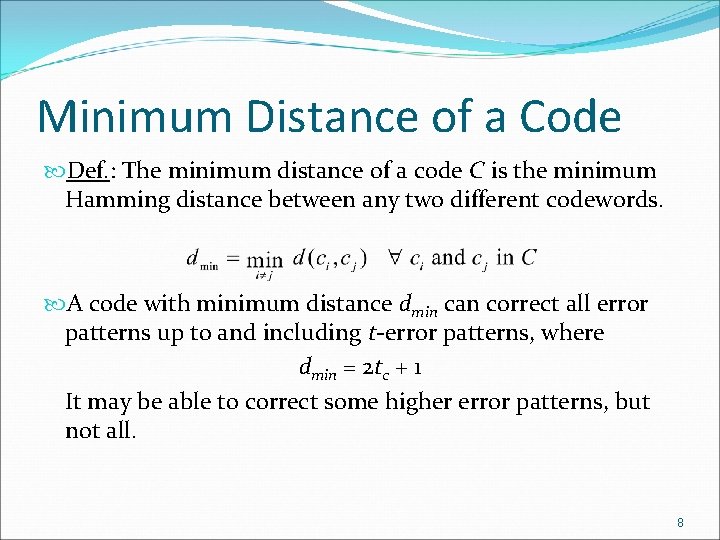

Minimum Distance of a Code

Def.: The minimum distance of a code C is the minimum Hamming distance between any two different codewords.

A code with minimum distance $d_{min}$ can correct all error patterns of weight up to and including $t_c$, where $d_{min} \ge 2 t_c + 1$. It may be able to correct some higher-weight error patterns, but not all.

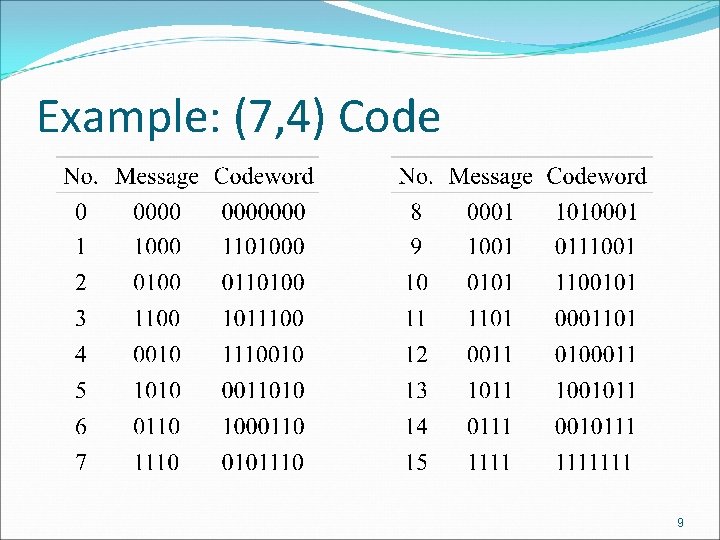

Example: (7, 4) Code

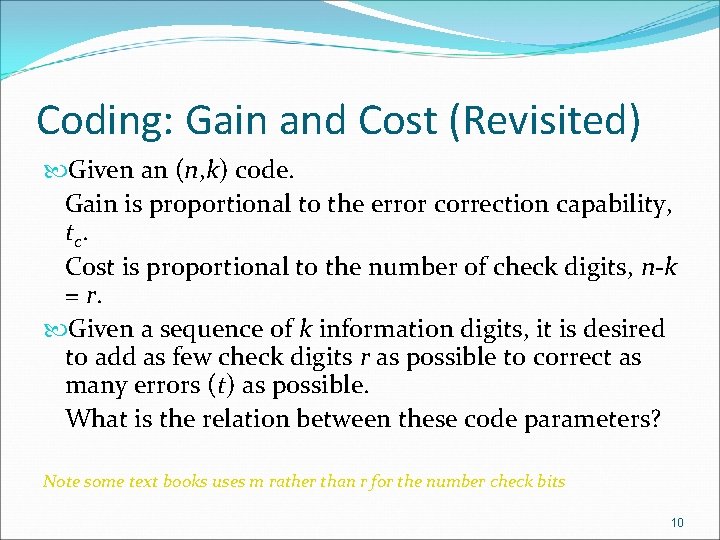
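As a rough check of the minimum distance of this example, the sketch below compares every pair of codewords. The 16 codewords are read from the top row of the standard array in the decoding slide of this deck; treat the listing as an assumption if the figure shows a different code. `minimum_distance` is an illustrative helper name.

```python
from itertools import combinations

# Assumed codeword list, taken from the first row of the standard array
CODEWORDS = [
    "0000000", "1101000", "0110100", "1011100",
    "1110010", "0011010", "1000110", "0101110",
    "1010001", "0111001", "1100101", "0001101",
    "0100011", "1001011", "0010111", "1111111",
]


def hamming_distance(a: str, b: str) -> int:
    """Number of positions in which two equal-length words differ."""
    return sum(x != y for x, y in zip(a, b))


def minimum_distance(codewords: list[str]) -> int:
    """Smallest Hamming distance over all pairs of distinct codewords."""
    return min(hamming_distance(a, b) for a, b in combinations(codewords, 2))


d_min = minimum_distance(CODEWORDS)
print(d_min)             # 3
print((d_min - 1) // 2)  # 1, so single errors can be corrected
```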

Coding: Gain and Cost (Revisited)

Given an (n, k) code:

- Gain is proportional to the error correction capability, $t_c$.
- Cost is proportional to the number of check digits, $r = n - k$.

Given a sequence of k information digits, it is desired to add as few check digits r as possible to correct as many errors $t_c$ as possible. What is the relation between these code parameters?

Note: some textbooks use m rather than r for the number of check bits.

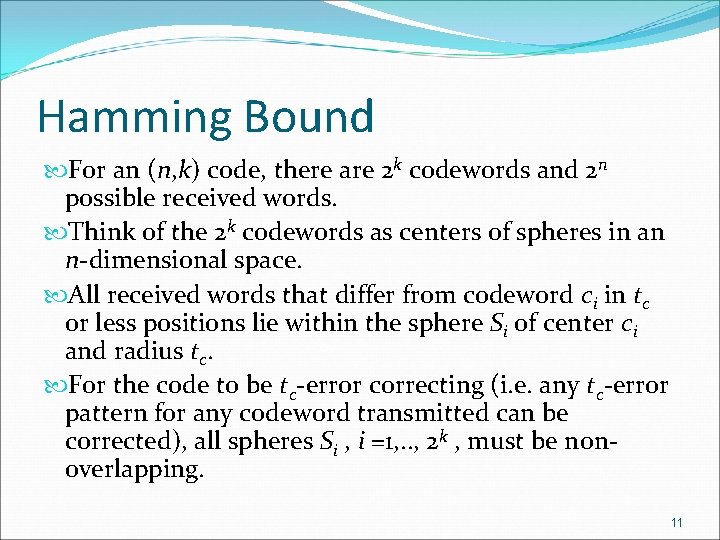

Hamming Bound

For an (n, k) code, there are $2^k$ codewords and $2^n$ possible received words. Think of the $2^k$ codewords as centers of spheres in an n-dimensional space. All received words that differ from codeword $c_i$ in $t_c$ or fewer positions lie within the sphere $S_i$ of center $c_i$ and radius $t_c$.

For the code to be $t_c$-error correcting (i.e., any $t_c$-error pattern for any transmitted codeword can be corrected), all spheres $S_i$, $i = 1, \ldots, 2^k$, must be nonoverlapping.
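The sphere picture can be made concrete with the (3, 1) repetition code, whose two codewords 000 and 111 each get a sphere of radius 1. The sketch below enumerates both spheres by brute force; `sphere` is an illustrative helper name.

```python
from itertools import product


def sphere(center: str, radius: int) -> set[str]:
    """All binary words within Hamming distance `radius` of `center`."""
    n = len(center)
    return {
        "".join(word)
        for word in product("01", repeat=n)
        if sum(a != b for a, b in zip(word, center)) <= radius
    }


s0 = sphere("000", 1)
s1 = sphere("111", 1)
print(sorted(s0))  # ['000', '001', '010', '100']
print(sorted(s1))  # ['011', '101', '110', '111']
print(s0 & s1)     # set(): the spheres do not overlap
```

Here the two spheres also cover all eight 3-bit words, so every received word decodes to exactly one codeword.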

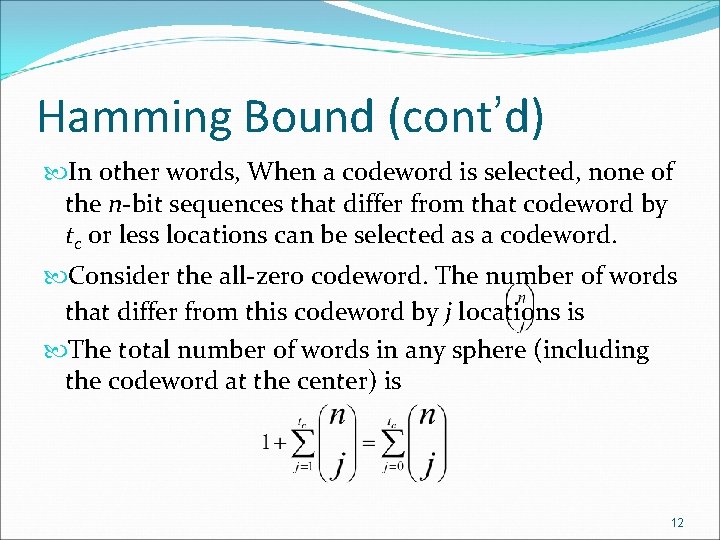

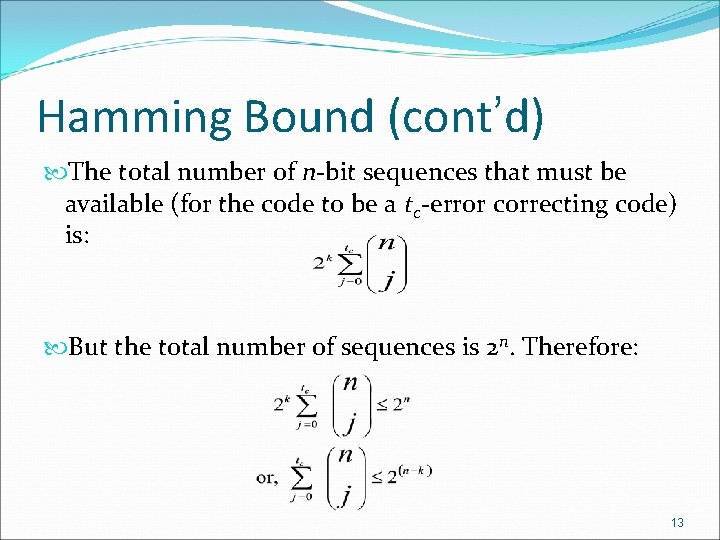

Hamming Bound (cont’d)

In other words, when a codeword is selected, none of the n-bit sequences that differ from that codeword in $t_c$ or fewer locations can be selected as a codeword.

Consider the all-zero codeword. The number of words that differ from this codeword in j locations is $\binom{n}{j}$. The total number of words in any sphere (including the codeword at the center) is $\sum_{j=0}^{t_c} \binom{n}{j}$.
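The sphere size around the all-zero word is a sum of binomial coefficients, one term per error weight from 0 to $t_c$. The sketch below compares that sum with a brute-force enumeration; both function names are illustrative.

```python
from itertools import product
from math import comb


def sphere_size_formula(n: int, t_c: int) -> int:
    """Number of n-bit words of weight at most t_c, via binomial coefficients."""
    return sum(comb(n, j) for j in range(t_c + 1))


def sphere_size_bruteforce(n: int, t_c: int) -> int:
    """Same count obtained by enumerating all 2^n words (small n only)."""
    return sum(1 for word in product((0, 1), repeat=n) if sum(word) <= t_c)


print(sphere_size_formula(7, 1))     # 8
print(sphere_size_bruteforce(7, 1))  # 8
```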

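Hamming Bound (cont’d)

The total number of n-bit sequences that must be available (for the code to be a $t_c$-error-correcting code) is $2^k \sum_{j=0}^{t_c} \binom{n}{j}$. But the total number of sequences is $2^n$. Therefore:

$$2^k \sum_{j=0}^{t_c} \binom{n}{j} \le 2^n, \quad \text{i.e.,} \quad 2^{n-k} \ge \sum_{j=0}^{t_c} \binom{n}{j}.$$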

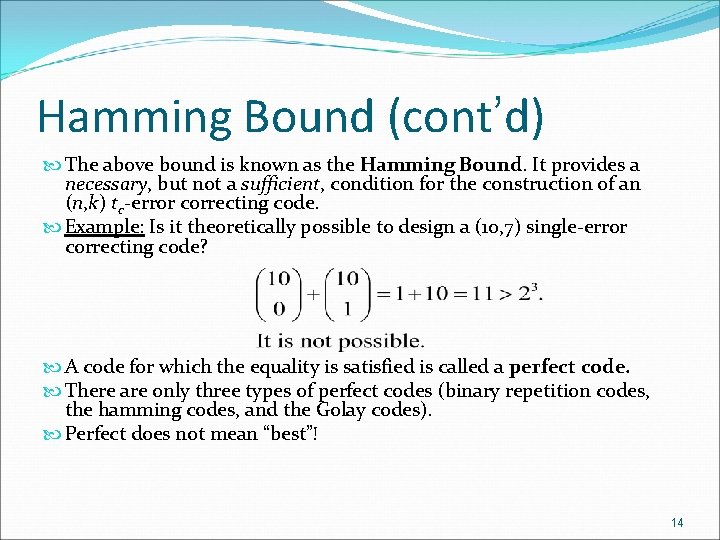

Hamming Bound (cont’d)

The above bound is known as the Hamming bound. It provides a necessary, but not a sufficient, condition for the construction of an (n, k) $t_c$-error-correcting code.

Example: Is it theoretically possible to design a (10, 7) single-error-correcting code? No: the bound requires $2^{n-k} \ge 1 + n$, but here $2^3 = 8 < 11$.

A code for which the equality is satisfied is called a perfect code. There are only three types of perfect codes (odd-length binary repetition codes, the Hamming codes, and the Golay codes). Perfect does not mean “best”!
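The bound is easy to evaluate for specific parameters. The sketch below assumes the usual form $2^{n-k} \ge \sum_{j=0}^{t_c} \binom{n}{j}$; the function names are illustrative.

```python
from math import comb


def sphere_size(n: int, t_c: int) -> int:
    """Number of n-bit words within distance t_c of a codeword."""
    return sum(comb(n, j) for j in range(t_c + 1))


def satisfies_hamming_bound(n: int, k: int, t_c: int) -> bool:
    """Necessary (not sufficient) condition for an (n, k) t_c-error-correcting code."""
    return 2 ** (n - k) >= sphere_size(n, t_c)


def meets_bound_with_equality(n: int, k: int, t_c: int) -> bool:
    """True when the parameters are those of a perfect code."""
    return 2 ** (n - k) == sphere_size(n, t_c)


print(satisfies_hamming_bound(10, 7, 1))    # False: 8 < 11
print(meets_bound_with_equality(7, 4, 1))   # True: 8 == 8
print(meets_bound_with_equality(3, 1, 1))   # True: 4 == 4
print(meets_bound_with_equality(23, 12, 3)) # True: 2048 == 2048
```

The last three parameter sets are those of the (7, 4) Hamming code, the (3, 1) repetition code, and the (23, 12) Golay code. Passing the check does not by itself prove that a code exists, since the bound is only a necessary condition.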

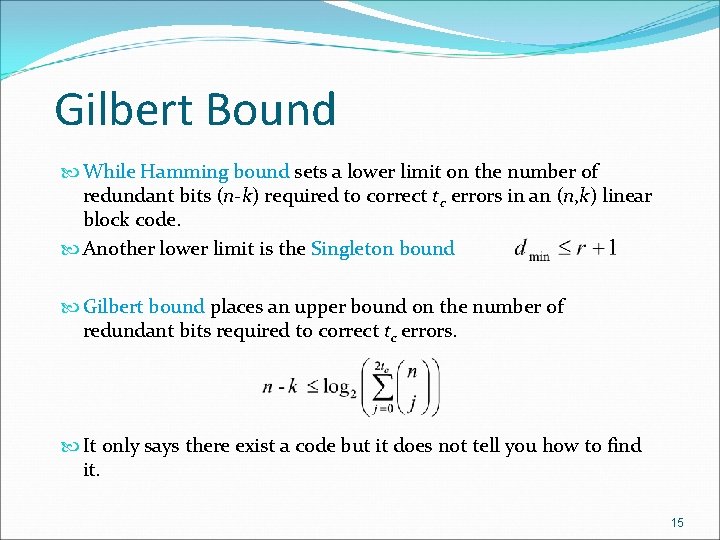

Gilbert Bound

The Hamming bound sets a lower limit on the number of redundant bits $n - k$ required to correct $t_c$ errors in an (n, k) linear block code. Another lower limit is the Singleton bound.

The Gilbert bound places an upper bound on the number of redundant bits required to correct $t_c$ errors. It only says that such a code exists; it does not tell you how to find it.

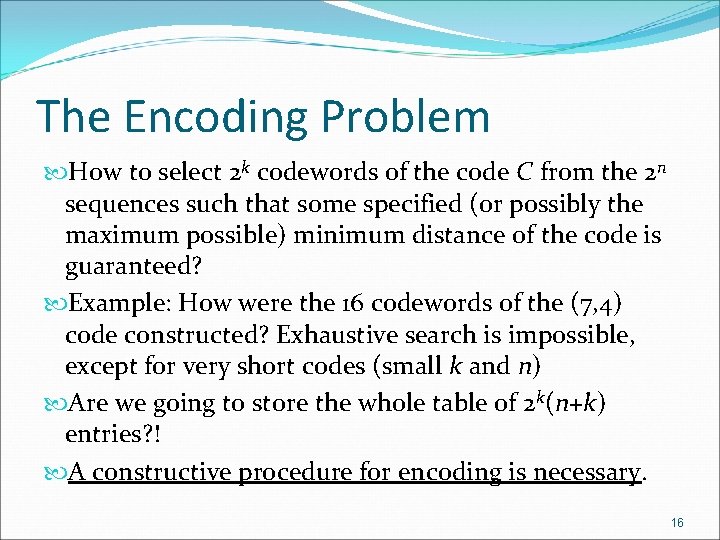

The Encoding Problem

How do we select the $2^k$ codewords of the code C from the $2^n$ sequences such that some specified (or possibly the maximum possible) minimum distance of the code is guaranteed?

Example: How were the 16 codewords of the (7, 4) code constructed?

- Exhaustive search is impossible, except for very short codes (small k and n).
- Are we going to store the whole table of $2^k (n + k)$ entries?!
- A constructive procedure for encoding is necessary.
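One common constructive procedure is to store only k generator rows and form each codeword as a modulo-2 combination of them. The slides do not state how the (7, 4) codewords were built, so the generator rows below are an assumption: they are four codewords that appear in the top row of the standard array in the decoding slide, chosen because they are shifts of one another. The names `G` and `encode` are illustrative.

```python
from itertools import product

# Assumed generator rows (not given in the slides)
G = [
    (1, 1, 0, 1, 0, 0, 0),
    (0, 1, 1, 0, 1, 0, 0),
    (0, 0, 1, 1, 0, 1, 0),
    (0, 0, 0, 1, 1, 0, 1),
]


def encode(message, generator=G):
    """Modulo-2 sum of the generator rows selected by the message bits."""
    codeword = [0] * len(generator[0])
    for bit, row in zip(message, generator):
        if bit:
            codeword = [c ^ r for c, r in zip(codeword, row)]
    return tuple(codeword)


codebook = {m: encode(m) for m in product((0, 1), repeat=4)}
print(len(set(codebook.values())))  # 16 distinct codewords
print(encode((1, 1, 0, 0)))         # (1, 0, 1, 1, 1, 0, 0)
```

Only 4 rows of 7 bits are stored instead of the full table, and the minimum distance can be checked from the smallest nonzero codeword weight.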

The Decoding Problem

Standard array for the (7, 4) code. Each item below is one row (coset): the first row lists the 16 codewords, and the first word of every other row is its coset leader, a single-bit error pattern.

- 0000000 1101000 0110100 1011100 1110010 0011010 1000110 0101110 1010001 0111001 1100101 0001101 0100011 1001011 0010111 1111111
- 0000001 1101001 0110101 1011101 1110011 0011011 1000111 0101111 1010000 0111000 1100100 0001100 0100010 1001010 0010110 1111110
- 0000010 1101010 0110110 1011110 1110000 0011000 1000100 0101100 1010011 0111011 1100111 0001111 0100001 1001001 0010101 1111101
- 0000100 1101100 0110000 1011000 1110110 0011110 1000010 0101010 1010101 0111101 1100001 0001001 0100111 1001111 0010011 1111011
- 0001000 1100000 0111100 1010100 1111010 0010010 1001110 0100110 1011001 0110001 1101101 0000101 0101011 1000011 0011111 1110111
- 0010000 1111000 0100100 1001100 1100010 0001010 1010110 0111110 1000001 0101001 1110101 0011101 0110011 1011011 0000111 1101111
- 0100000 1001000 0010100 1111100 1010010 0111010 1100110 0001110 1110001 0011001 1000101 0101101 0000011 1101011 0110111 1011111
- 1000000 0101000 1110100 0011100 0110010 1011010 0000110 1101110 0010001 1111001 0100101 1001101 1100011 0001011 1010111 0111111

Exhaustive decoding is impossible for all but very short codes! Well-constructed decoding methods are required.

Two possible types of decoders:

1) Complete: always chooses the minimum-distance codeword.
2) Bounded-distance: chooses the minimum-distance codeword only up to a certain $t_c$; error detection is utilized otherwise.
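Table-lookup decoding with a standard array can be sketched as follows: find the row that contains the received word and add that row's coset leader to it. The generator rows are an assumption (the slides do not name them); they are four codewords from the top row of the array whose modulo-2 combinations give 16 codewords. All function names are illustrative.

```python
from itertools import product

# Assumed generator rows for the (7, 4) code (not stated in the slides)
GENERATOR_ROWS = ["1101000", "0110100", "0011010", "0001101"]


def xor(a: str, b: str) -> str:
    """Component-wise modulo-2 sum of two binary strings."""
    return "".join("1" if x != y else "0" for x, y in zip(a, b))


def all_codewords(rows: list[str]) -> list[str]:
    """Every modulo-2 combination of the generator rows."""
    words = []
    for coeffs in product((0, 1), repeat=len(rows)):
        word = "0" * len(rows[0])
        for use, row in zip(coeffs, rows):
            if use:
                word = xor(word, row)
        words.append(word)
    return words


def build_standard_array(codewords: list[str]) -> dict[str, list[str]]:
    """Map each coset leader (zero word or a single-bit error) to its row."""
    n = len(codewords[0])
    leaders = ["0" * n] + ["0" * i + "1" + "0" * (n - i - 1) for i in range(n)]
    return {leader: [xor(leader, c) for c in codewords] for leader in leaders}


def decode(received: str, array: dict[str, list[str]]) -> str:
    """Locate the received word in the array and remove its coset leader."""
    for leader, coset in array.items():
        if received in coset:
            return xor(received, leader)
    raise ValueError("received word is not in the array")


codewords = all_codewords(GENERATOR_ROWS)
array = build_standard_array(codewords)
print(decode("1101001", array))  # 1101000
```

The search over all rows is exactly the exhaustive lookup that becomes impractical as n grows, since the array holds $2^n$ words.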
