Error Control Code Goal Goal of this lecture

- Slides: 23

Error Control Code

Goal • Goal of this lecture – Review. CDMA, OFDM, MIMO – Introduction to error control codes

Review • Still remember the analogy of TDMA, FDMA, and CDMA? • The cell phone to base station model. Also, in wireless LAN, it’s PC to access point model. • What does the chip sequence achieve in CDMA?

Error Control Code • Widely used in many areas, like communications, DVD, data storage… • In communications, because of noise, you can never be sure that a received bit is right • In physical layer, what you do is, given k data bits, add n-k redundant bits and make it into a n-bit codeword. You send the codeword to the receiver. If some bits in the codeword is wrong, the receiver should be able to do some calculation and find out – There is something wrong – Or, these things are wrong (for binary codes, this is enough) – Or, these things should be corrected as this for non-binary codes – (this is called Block Code)

Error Control Codes • You want a code to – Use as few redundant bits as possible – Can detect or correct as many error bits as possible

Error Control Code • Repetition code is the simplest, but requires a lot of redundant bits, and the error correction power is questionable for the amount of extra bits used • Checksum does not require a lot of redundant bits, but can only tell you “something is wrong” and cannot tell you what is wrong

(7, 4) Hamming Code • The best example for introductory purpose and is also used in many applications • (7, 4) Hamming code. Given 4 information bits, (i 0, i 1, i 2, i 3), code it into 7 bits C=(c 0, c 1, c 2, c 3, c 4, c 5, c 6). The first four bits are just copies of the information bits, e. g. , c 0=i 0. Then produce three parity checking bits c 4, c 5, and c 6 as (additions are in the binary field) – c 4=i 0+i 1+i 2 – c 5=i 1+i 2+i 3 – c 6=i 0+i 1+i 3 • For example, (1, 0, 1, 1) coded to (1, 0, 1, 1, 0, 0, 0). • Is capable of correcting one bit error

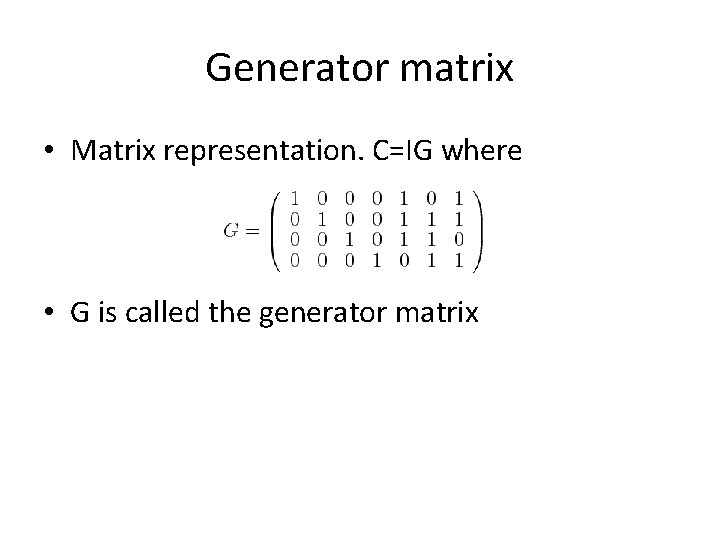

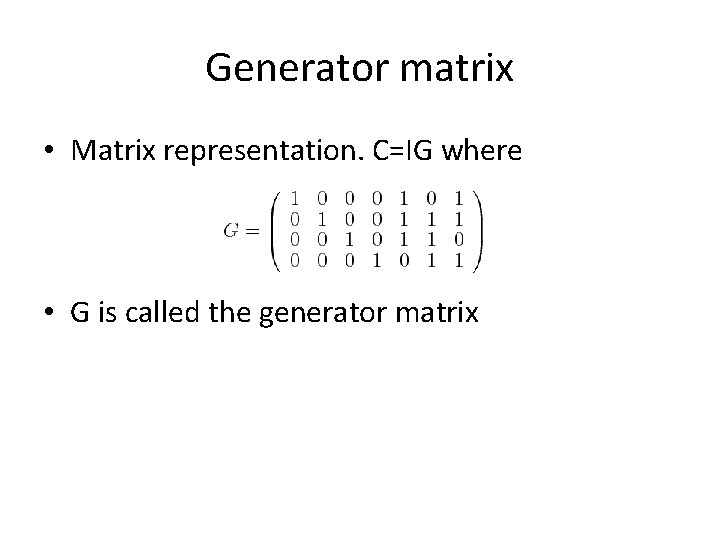

Generator matrix • Matrix representation. C=IG where • G is called the generator matrix

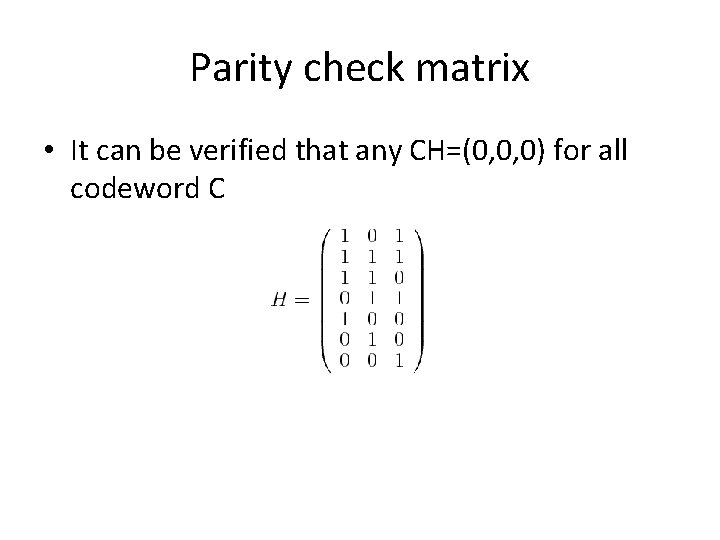

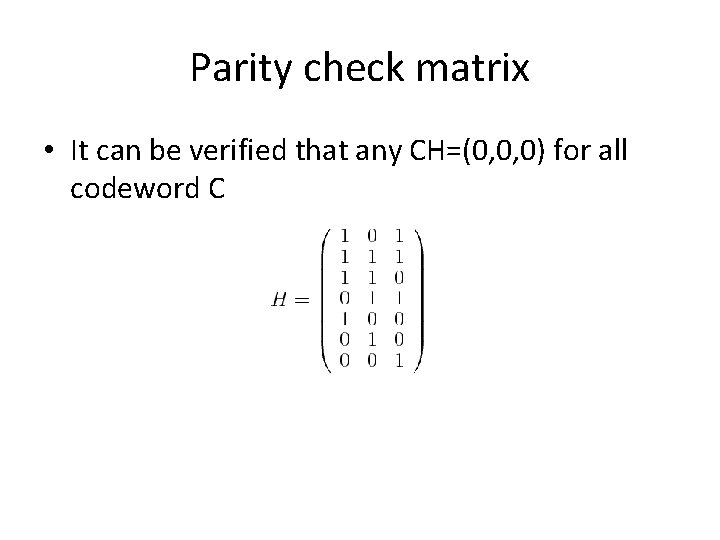

Parity check matrix • It can be verified that any CH=(0, 0, 0) for all codeword C

Error Correction • What you receive is R=C+E. You multiply R with H: S=RH=(C+E)H=CH+EH=EH. S is called the syndrome. If there is only one `1’ in E, S will be one of the rows of H. Because each row is unique, you know which bit in E is `1’. • The decoding scheme is: – Compute the syndrome – If S=(0, 0, 0), do nothing. If S!=(0, 0, 0), output one error bit.

How G is chosen • How G is chosen such that it can correct one error? • Any combinations of the row vectors of G has weight at least 3 (having at least three `1’s) – and codeword has weight at least 3. • The sum of any two codeword is still a codeword, so the distance (number of bits that differ) is also at least 3. • So if one bit is wrong, won’t confuse it with other codewords

The existence of H • We didn’t compare a received vector with all codewords. We used H. • The existence of H is no coincidence (need some basic linear algebra!) Let Omega be the space of all 7 -bit vectors. The codeword space is a subspace of Omega spanned by the row vectors of G. There must be a subspace orthogonal to the codeword space spanned by 3 vectors which is the column vectors of H.

Linear Block Code • Hamming Code is a Linear Block Code means that the codeword is generated by multiplying the message vector with the generator matrix. • Minimum weight as large as possible. If minimum weight is 2 t+1, capable of detecting 2 t error bits and correcting t error bits.

Cyclic Codes • Hamming code is useful but there exist codes that offers same (if not larger) error control capabilities while can be implemented much simpler. • Cyclic code is a linear code that any cyclic shift of a codeword is still a codeword. • Makes encoding/decoding much simpler, no need of matrix multiplication.

Cyclic code • Polynomial representation of cyclic codes. C(x) = C_{n -1}x^{n-1} + C_{n-2}x^{n-2} + … + C_{1}x^{1} + C_{0}x^{0}. • Division of polynomials. Try divide x^3+3 x^2+3 x+1 by x+1. • Verify that x. C(x) mod x^n-1 is the cyclic shift of C(x). • A (n, k) cyclic code can be generated with a polynomial g(x) which has degree n-k and is a factor of x^n-1. Call it the generator polynomial.

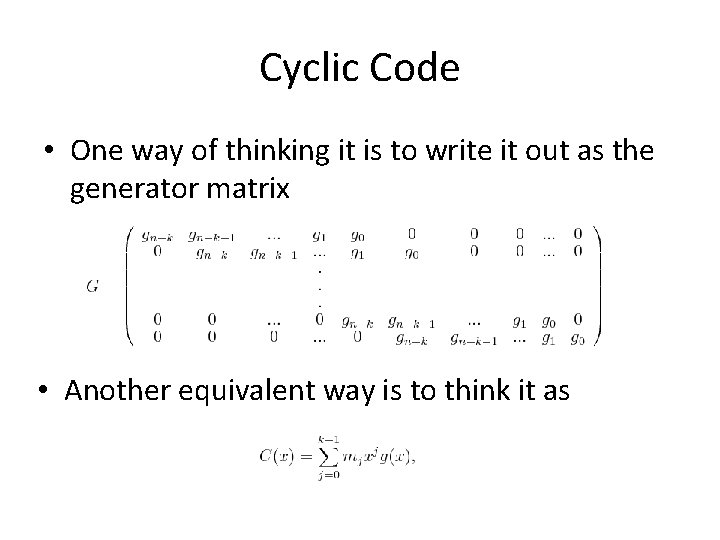

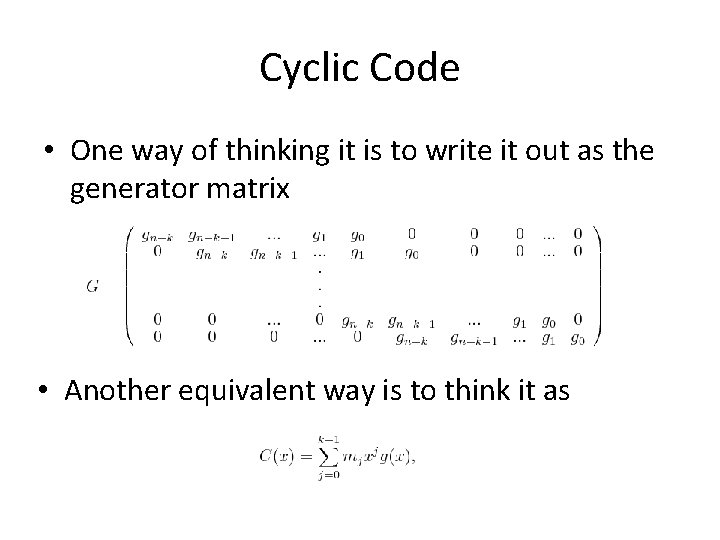

Cyclic Code • One way of thinking it is to write it out as the generator matrix • Another equivalent way is to think it as

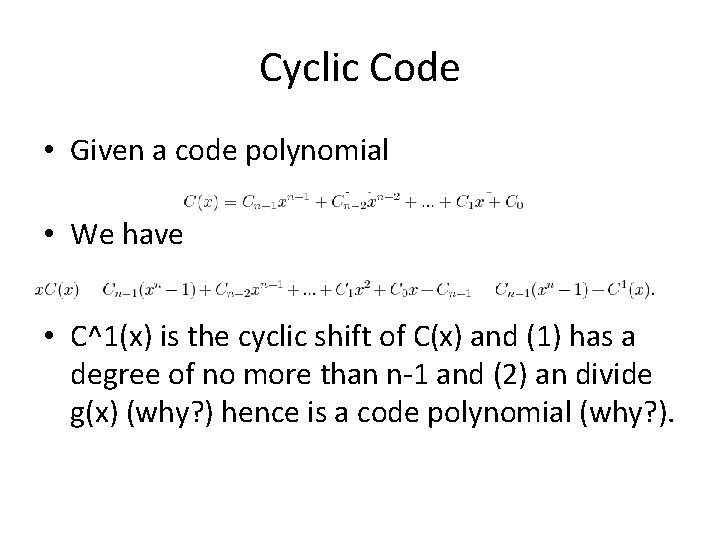

Cyclic Code • Given a code polynomial • We have • C^1(x) is the cyclic shift of C(x) and (1) has a degree of no more than n-1 and (2) an divide g(x) (why? ) hence is a code polynomial (why? ).

Cyclic Code • So, to generate a cyclic code is to find a polynomial that (1) has degree n-k and (2) is a factor of x^n-1. • So, each row of the generator matrix is just a shifted version of the first row. Unlike Hamming Code.

Generating Systematic Cyclic Code • A systematic code means that the first k bits are the data bits and the rest n-k bits are parity checking bits. • To generate it, you let where • The claim is that C(x) must divide g(x) hence is a code polynomial. 33 mod 7 = 6. Hence 336=28 can be divided by 7.

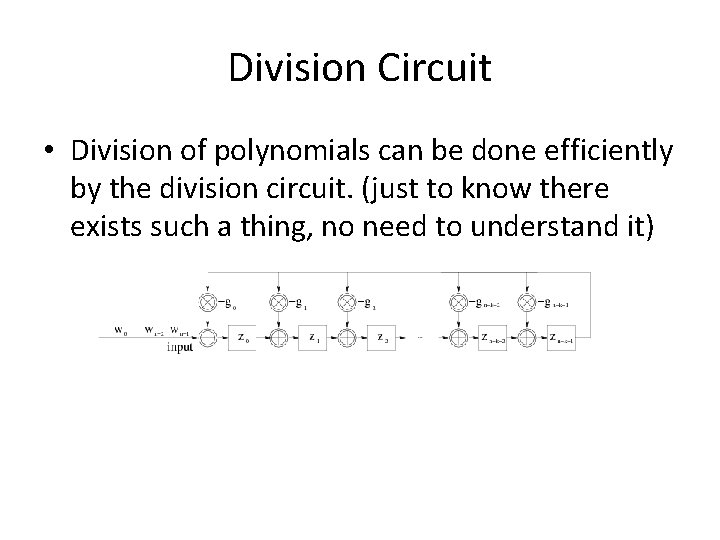

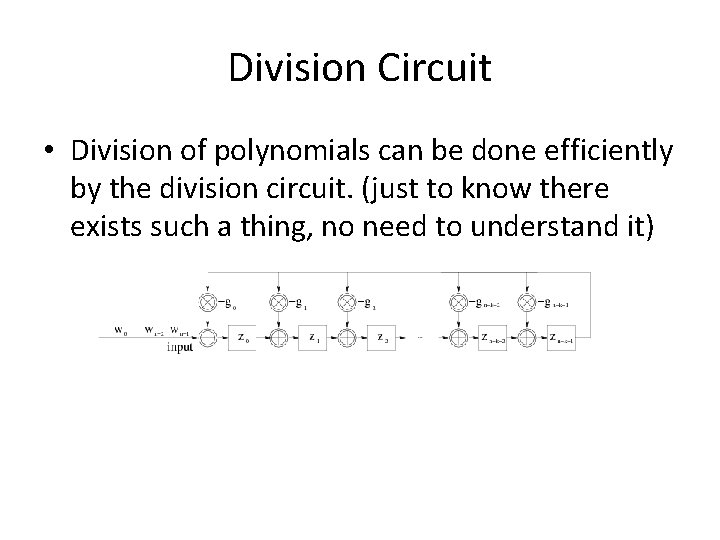

Division Circuit • Division of polynomials can be done efficiently by the division circuit. (just to know there exists such a thing, no need to understand it)

Remaining Questions for Those Really Interested • Decoding. Divide the received polynomial by g(x). If there is no error you should get a 0 (why? ). Make sure that the error polynomial you have in mind does not divide g(x). • How to make sure to choose a good g(x) to make the minimum degree larger? Turns out to learn this you have to study more – it’s the BCH code.

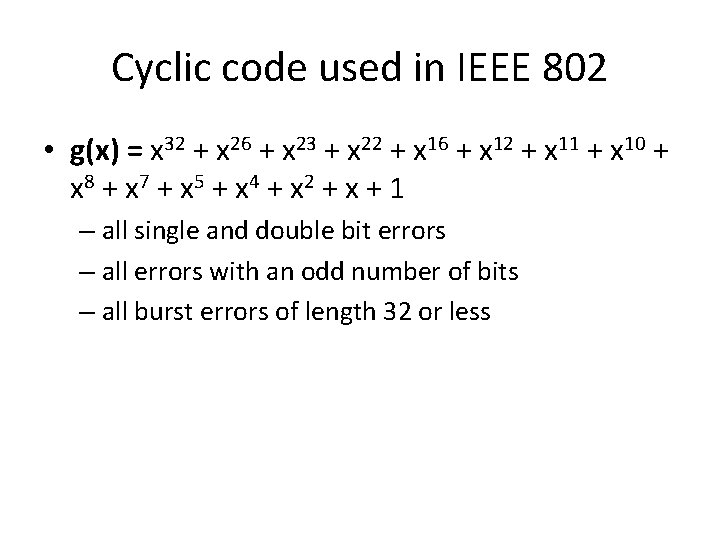

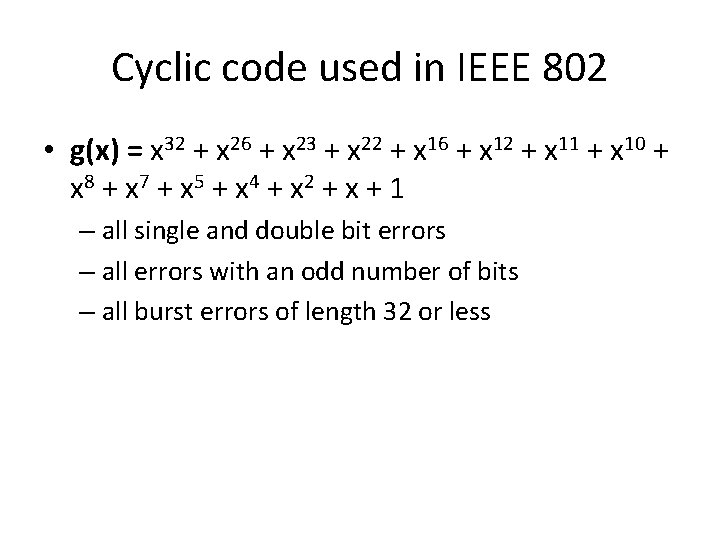

Cyclic code used in IEEE 802 • g(x) = x 32 + x 26 + x 23 + x 22 + x 16 + x 12 + x 11 + x 10 + x 8 + x 7 + x 5 + x 4 + x 2 + x + 1 – all single and double bit errors – all errors with an odd number of bits – all burst errors of length 32 or less

Other codes • RS code. Block code. Used in CD, DVD, HDTV transmission. • LDPC code. Also block code. Reinvented after first proposed 40 some years ago. Proposed to be used in 802. 11 n. Achieve close-to-Shannon bound • Trellis code. Not block code. More closely coupled with modulation. • Turbo code. Achieve close-to-Shannon bound.