Error Control Code

- Slides: 21

Error Control Code

Error Control Code

- Widely used in many areas, such as communications, DVDs, and data storage.
- In communications, because of noise, you can never be sure that a received bit is correct.
- In the physical layer, given $k$ data bits, we add $n-k$ redundant bits to form an $n$-bit codeword and send the codeword to the receiver. If some bits in the codeword are wrong, the receiver should be able to do some calculation and find out one of the following:
  - Something is wrong.
  - Or, which bits are wrong (for binary codes this is enough, because a wrong bit is corrected by flipping it).
  - Or, for non-binary codes, which symbols are wrong and what their correct values are.
- A code of this kind is called a block code.

Error Control Codes

- You want a code to
  - use as few redundant bits as possible, and
  - detect or correct as many error bits as possible.

Error Control Code

- The repetition code is the simplest, but it requires many redundant bits, and its error-correction power is weak for the number of extra bits used.
- A checksum does not require many redundant bits, but it can only tell you that something is wrong; it cannot tell you what is wrong.
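To make the trade-off concrete, here is a minimal sketch of both ideas. It assumes a 3-fold repetition code with majority voting and, as the simplest possible checksum, a single even-parity bit. The function names are illustrative and do not come from the slides.

```python
def repeat3_encode(bits):
    """Send every bit three times (2 redundant bits per data bit)."""
    return [b for b in bits for _ in range(3)]

def repeat3_decode(coded):
    """Majority vote over each group of three received bits."""
    return [1 if sum(coded[k:k + 3]) >= 2 else 0
            for k in range(0, len(coded), 3)]

def parity_encode(bits):
    """Append one even-parity bit (assumed here as a very simple checksum)."""
    return list(bits) + [sum(bits) % 2]

def parity_ok(coded):
    """True if the parity check passes; it cannot say which bit is wrong."""
    return sum(coded) % 2 == 0

data = [1, 0, 1, 1]

sent = repeat3_encode(data)          # 12 bits for 4 data bits
sent[4] ^= 1                         # one bit flipped by noise
print(repeat3_decode(sent) == data)  # True: the error is corrected

sent = parity_encode(data)           # 5 bits for 4 data bits
sent[2] ^= 1                         # one bit flipped by noise
print(parity_ok(sent))               # False: detected, but not located
```

The repetition code spends 8 extra bits to protect 4 data bits, while the parity bit spends 1 extra bit and can only raise an alarm.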

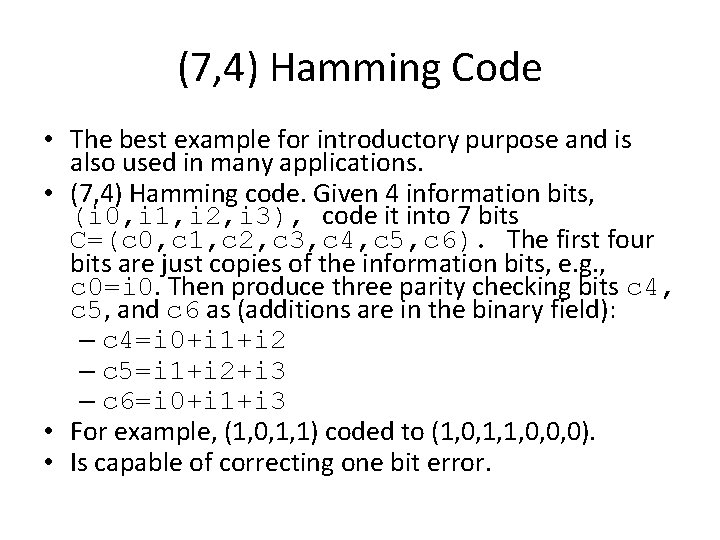

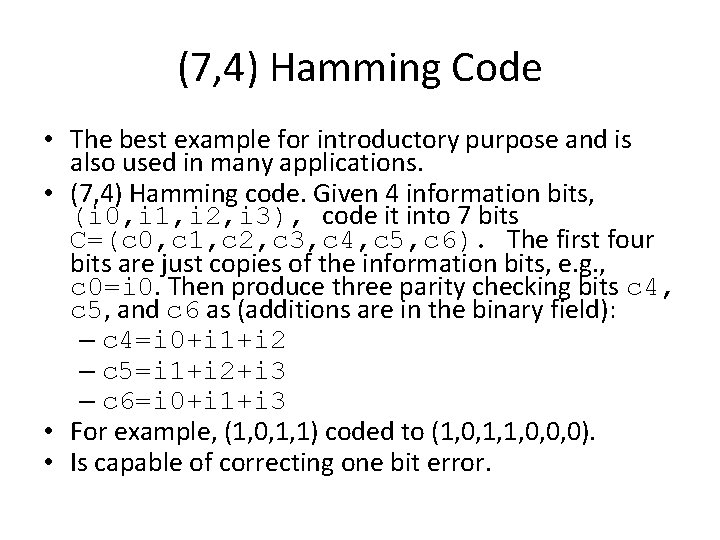

(7, 4) Hamming Code

- A good introductory example that is also used in many applications.
- Given 4 information bits $(i_0, i_1, i_2, i_3)$, encode them into 7 bits $C = (c_0, c_1, c_2, c_3, c_4, c_5, c_6)$. The first four bits are copies of the information bits, e.g., $c_0 = i_0$. The three parity-check bits $c_4$, $c_5$, and $c_6$ are computed as follows (additions are in the binary field, i.e., modulo 2):
  - $c_4 = i_0 + i_1 + i_2$
  - $c_5 = i_1 + i_2 + i_3$
  - $c_6 = i_0 + i_1 + i_3$
- For example, $(1, 0, 1, 1)$ is encoded as $(1, 0, 1, 1, 0, 0, 0)$.
- The code is capable of correcting one bit error per codeword.
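The encoding rule can be sketched in a few lines of Python. The function name `hamming74_encode` is made up for this illustration; the three parity equations are the ones listed on the slide.

```python
def hamming74_encode(info):
    """Encode 4 information bits into a 7-bit codeword (data bits first)."""
    i0, i1, i2, i3 = info
    c4 = (i0 + i1 + i2) % 2  # addition in the binary field is addition mod 2
    c5 = (i1 + i2 + i3) % 2
    c6 = (i0 + i1 + i3) % 2
    return (i0, i1, i2, i3, c4, c5, c6)

print(hamming74_encode((1, 0, 1, 1)))  # (1, 0, 1, 1, 0, 0, 0)
```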

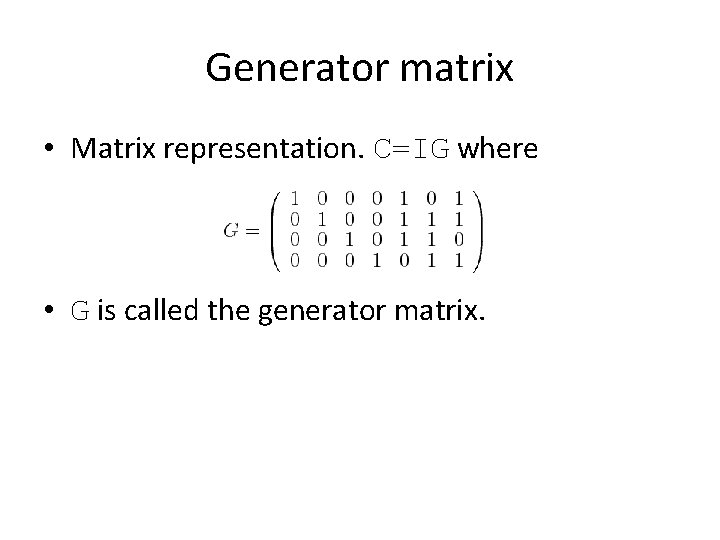

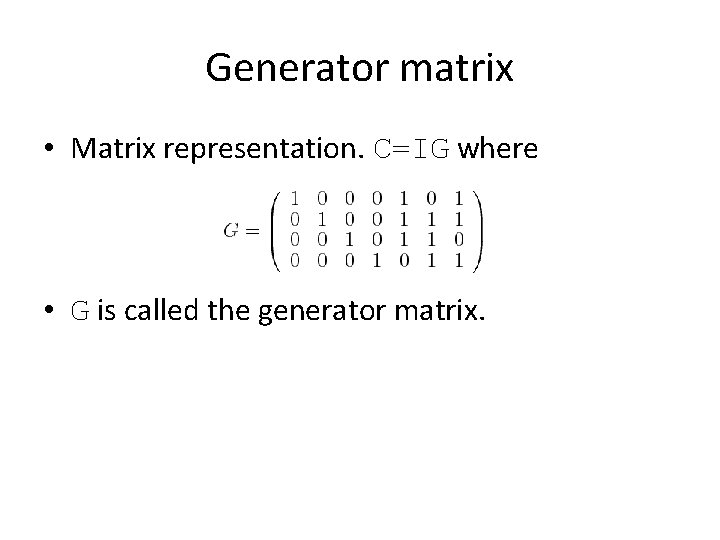

Generator matrix

- Matrix representation: $C = IG$, where $I = (i_0, i_1, i_2, i_3)$ is the row vector of information bits.
- $G$ is called the generator matrix.
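As a rough illustration, the matrix form can be written out in Python. The matrix `G` below is an assumption: it is derived here from the three parity equations of the (7, 4) Hamming code slide (an identity block for the copied data bits, followed by one column per parity bit) and has not been checked against the slide's figure. The helper name `encode_with_g` is hypothetical.

```python
# Assumed generator matrix, derived from c4 = i0+i1+i2, c5 = i1+i2+i3,
# c6 = i0+i1+i3. Row r lists the codeword bits that information bit r feeds.
G = [
    [1, 0, 0, 0, 1, 0, 1],  # i0 -> c0, c4, c6
    [0, 1, 0, 0, 1, 1, 1],  # i1 -> c1, c4, c5, c6
    [0, 0, 1, 0, 1, 1, 0],  # i2 -> c2, c4, c5
    [0, 0, 0, 1, 0, 1, 1],  # i3 -> c3, c5, c6
]

def encode_with_g(info, g=G):
    """Compute the row vector info times g over the binary field."""
    rows, cols = len(g), len(g[0])
    return tuple(sum(info[r] * g[r][c] for r in range(rows)) % 2
                 for c in range(cols))

print(encode_with_g((1, 0, 1, 1)))  # (1, 0, 1, 1, 0, 0, 0)
```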

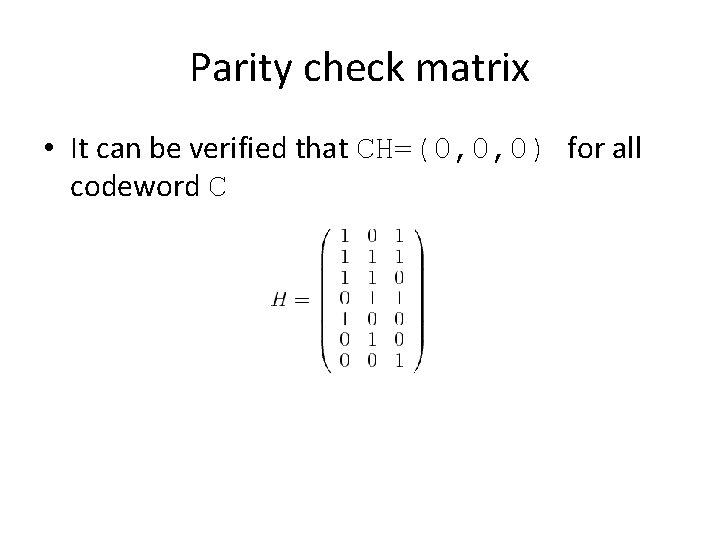

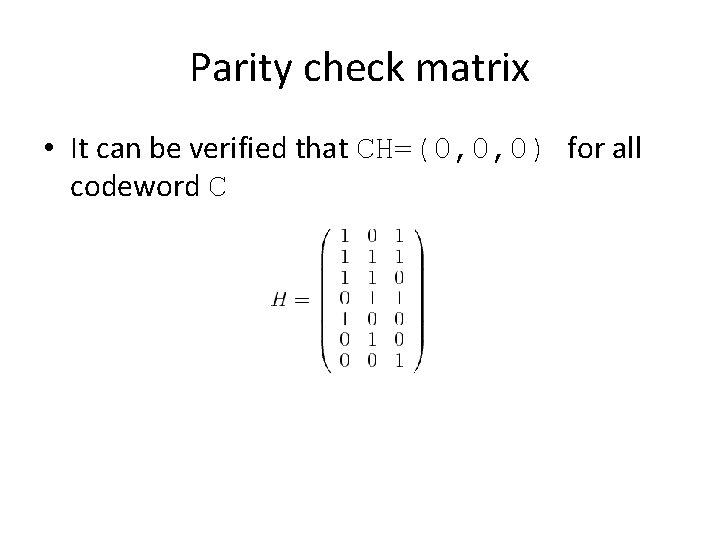

Parity check matrix

- It can be verified that $CH = (0, 0, 0)$ for every codeword $C$.
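The claim can be checked by brute force over all 16 codewords. The matrix `H` below is an assumption: it is a 7-by-3 matrix built from the parity equations of the (7, 4) Hamming code slide so that each column tests one equation, and it may be laid out differently from the slide's figure. The names `encode` and `times_h` are illustrative.

```python
from itertools import product

def encode(info):
    """Parity equations from the (7, 4) Hamming code slide."""
    i0, i1, i2, i3 = info
    return (i0, i1, i2, i3,
            (i0 + i1 + i2) % 2, (i1 + i2 + i3) % 2, (i0 + i1 + i3) % 2)

# Assumed parity check matrix: column 0 checks c0+c1+c2+c4 = 0,
# column 1 checks c1+c2+c3+c5 = 0, column 2 checks c0+c1+c3+c6 = 0.
H = [
    [1, 0, 1],
    [1, 1, 1],
    [1, 1, 0],
    [0, 1, 1],
    [1, 0, 0],
    [0, 1, 0],
    [0, 0, 1],
]

def times_h(v):
    """Row vector v (7 bits) times H, over the binary field."""
    return tuple(sum(v[r] * H[r][c] for r in range(7)) % 2 for c in range(3))

codewords = [encode(info) for info in product((0, 1), repeat=4)]
print(all(times_h(c) == (0, 0, 0) for c in codewords))  # True
```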

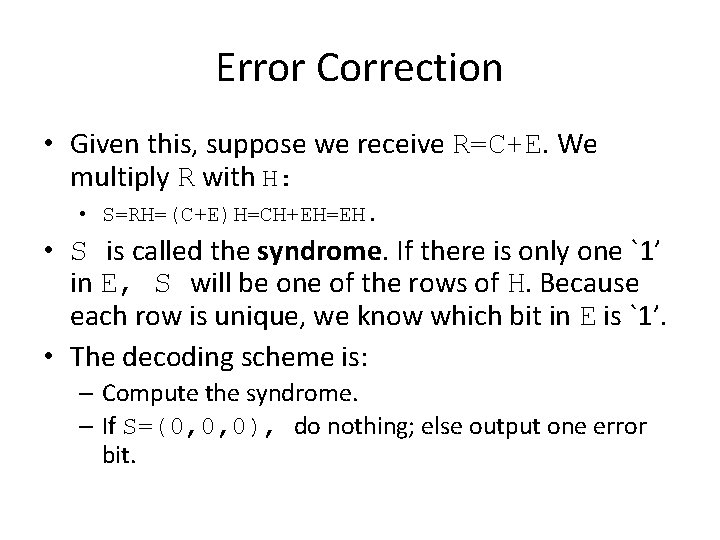

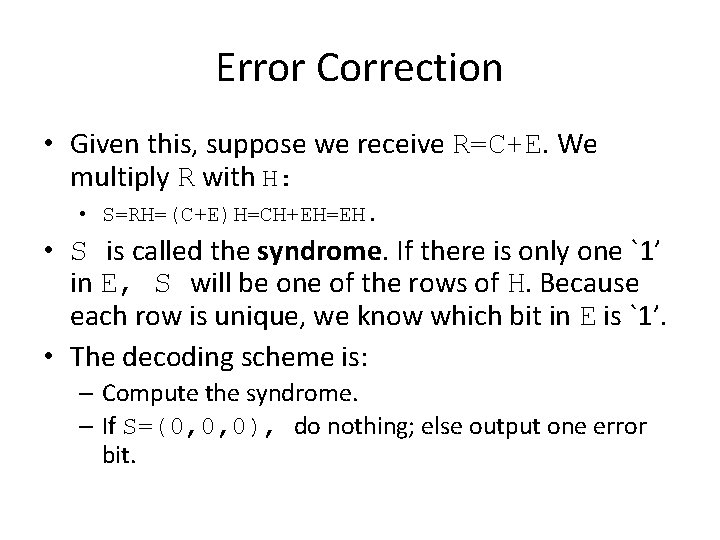

Error Correction

- Given $CH = (0, 0, 0)$, suppose we receive $R = C + E$, where $E$ is the error vector. We multiply $R$ by $H$:
- $S = RH = (C+E)H = CH + EH = EH$.
- $S$ is called the syndrome. If there is only one 1 in $E$, $S$ will be one of the rows of $H$. Because each row is unique and nonzero, we know which bit in $E$ is 1.
- The decoding scheme is:
  - Compute the syndrome.
  - If $S = (0, 0, 0)$, do nothing; otherwise, find the row of $H$ that equals $S$ and flip the corresponding bit of $R$.
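The decoding scheme can be sketched as follows. The matrix `H` is an assumption derived from the parity equations of the (7, 4) Hamming code slide, and the function names `syndrome` and `correct` are illustrative.

```python
# Assumed 7x3 parity check matrix (one row per codeword bit).
H = [
    [1, 0, 1],
    [1, 1, 1],
    [1, 1, 0],
    [0, 1, 1],
    [1, 0, 0],
    [0, 1, 0],
    [0, 0, 1],
]

def syndrome(received):
    """S = R H over the binary field."""
    return tuple(sum(received[r] * H[r][c] for r in range(7)) % 2
                 for c in range(3))

def correct(received):
    """Flip the single bit indicated by the syndrome, if any."""
    s = syndrome(received)
    if s == (0, 0, 0):
        return tuple(received)
    # The 7 rows of H are exactly the 7 nonzero 3-bit vectors,
    # so every nonzero syndrome matches one row.
    position = [tuple(row) for row in H].index(s)
    fixed = list(received)
    fixed[position] ^= 1
    return tuple(fixed)

codeword = (1, 0, 1, 1, 0, 0, 0)
for position in range(7):
    received = list(codeword)
    received[position] ^= 1                 # inject one bit error
    print(correct(received) == codeword)    # True for every position
```

The first four bits of the corrected vector are the decoded information bits.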

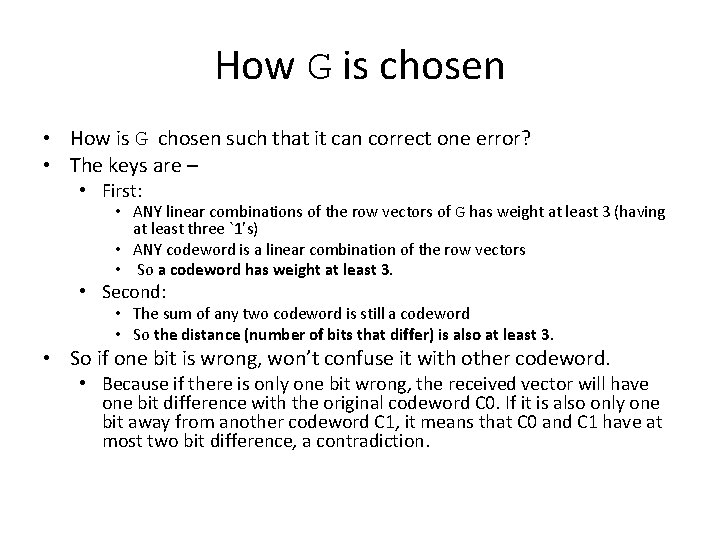

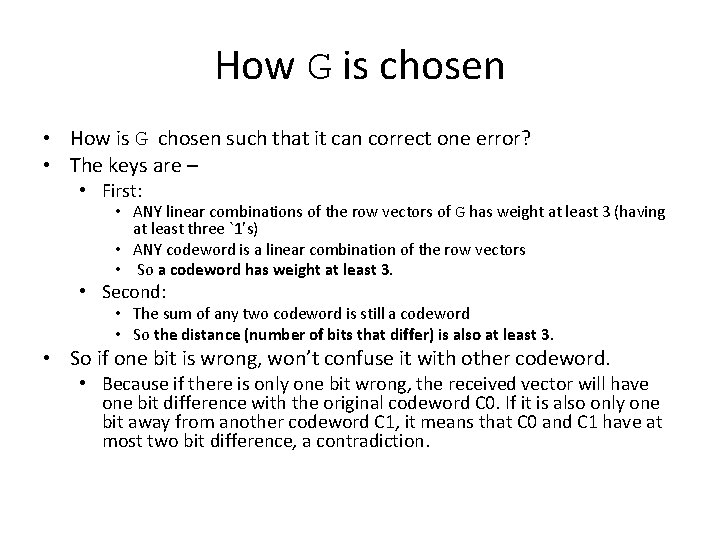

How G is chosen

- How is $G$ chosen so that the code can correct one error?
- The keys are:
- First:
  - Any nonzero linear combination of the row vectors of $G$ has weight at least 3 (it has at least three 1s).
  - Every codeword is a linear combination of the row vectors.
  - So every nonzero codeword has weight at least 3.
- Second:
  - The sum of any two codewords is still a codeword.
  - So the distance (number of bits that differ) between any two distinct codewords is also at least 3, because the distance between two codewords equals the weight of their sum.
  - So if one bit is wrong, the received vector will not be confused with another codeword.
  - This is because, if only one bit is wrong, the received vector differs from the original codeword $C_0$ in one bit. If it were also only one bit away from another codeword $C_1$, then $C_0$ and $C_1$ would differ in at most two bits, a contradiction.
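Both properties can be confirmed by enumerating the 16 codewords. This is only a sketch: `encode` restates the parity equations from the (7, 4) Hamming code slide, and the helper names are illustrative.

```python
from itertools import combinations, product

def encode(info):
    """Parity equations from the (7, 4) Hamming code slide."""
    i0, i1, i2, i3 = info
    return (i0, i1, i2, i3,
            (i0 + i1 + i2) % 2, (i1 + i2 + i3) % 2, (i0 + i1 + i3) % 2)

def weight(v):
    """Number of 1s in the vector."""
    return sum(v)

def distance(a, b):
    """Number of positions in which two vectors differ."""
    return sum(x != y for x, y in zip(a, b))

codewords = [encode(info) for info in product((0, 1), repeat=4)]

min_weight = min(weight(c) for c in codewords if any(c))
min_distance = min(distance(a, b) for a, b in combinations(codewords, 2))
print(min_weight, min_distance)  # 3 3

# Closure: the bitwise sum of any two codewords is again a codeword.
codeword_set = set(codewords)
print(all(tuple(x ^ y for x, y in zip(a, b)) in codeword_set
          for a, b in combinations(codewords, 2)))  # True
```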

Error Detection

- What if there are 2 error bits? Can the code correct them? Can the code detect them?
- What if there are 3 error bits? Can the code correct them? Can the code detect them?
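One way to explore these questions is to try every 2-bit and 3-bit error pattern on a single codeword. The sketch below assumes the syndrome decoder described on the Error Correction slide and a matrix `H` derived from the parity equations; the names `syndrome`, `correct`, and `flip` are illustrative.

```python
from itertools import combinations

# Assumed 7x3 parity check matrix (one row per codeword bit).
H = [[1, 0, 1], [1, 1, 1], [1, 1, 0], [0, 1, 1],
     [1, 0, 0], [0, 1, 0], [0, 0, 1]]

def syndrome(received):
    return tuple(sum(received[r] * H[r][c] for r in range(7)) % 2
                 for c in range(3))

def correct(received):
    """Single-error syndrome decoder: flip the bit whose row of H equals S."""
    s = syndrome(received)
    fixed = list(received)
    if s != (0, 0, 0):
        fixed[[tuple(row) for row in H].index(s)] ^= 1
    return tuple(fixed)

def flip(vector, positions):
    out = list(vector)
    for p in positions:
        out[p] ^= 1
    return tuple(out)

codeword = (1, 0, 1, 1, 0, 0, 0)

double = [flip(codeword, pair) for pair in combinations(range(7), 2)]
detected_2 = sum(syndrome(r) != (0, 0, 0) for r in double)
recovered_2 = sum(correct(r) == codeword for r in double)
print(detected_2, recovered_2, len(double))    # 21 0 21

triple = [flip(codeword, trio) for trio in combinations(range(7), 3)]
undetected_3 = sum(syndrome(r) == (0, 0, 0) for r in triple)
recovered_3 = sum(correct(r) == codeword for r in triple)
print(undetected_3, recovered_3, len(triple))  # 7 0 35
```

Every 2-bit error gives a nonzero syndrome, so it is noticed, but the decoder then flips a third bit and outputs a different codeword. Of the 35 possible 3-bit errors, 7 give a zero syndrome and pass unnoticed; these are the patterns in which the error vector is itself a codeword.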

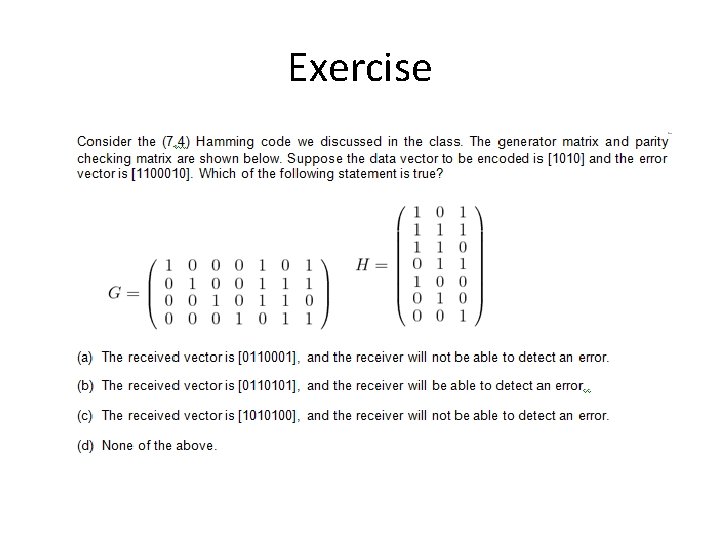

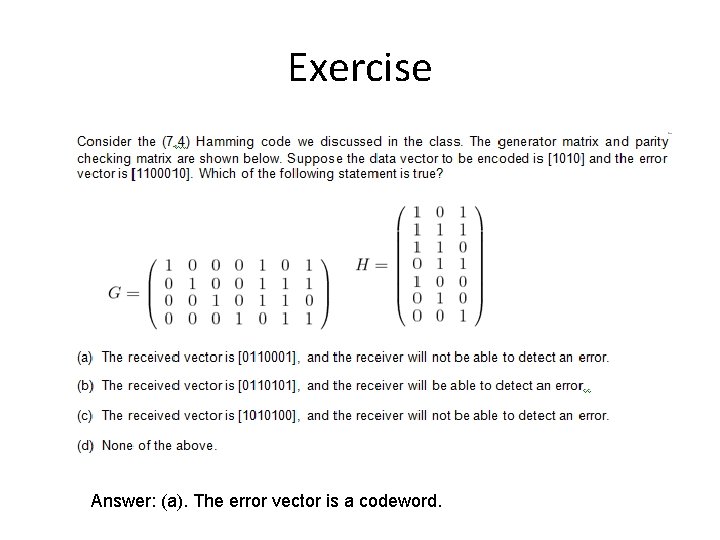

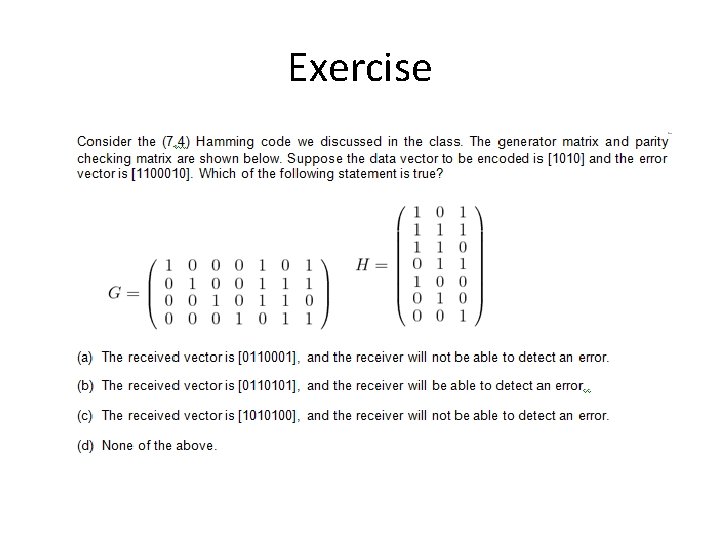

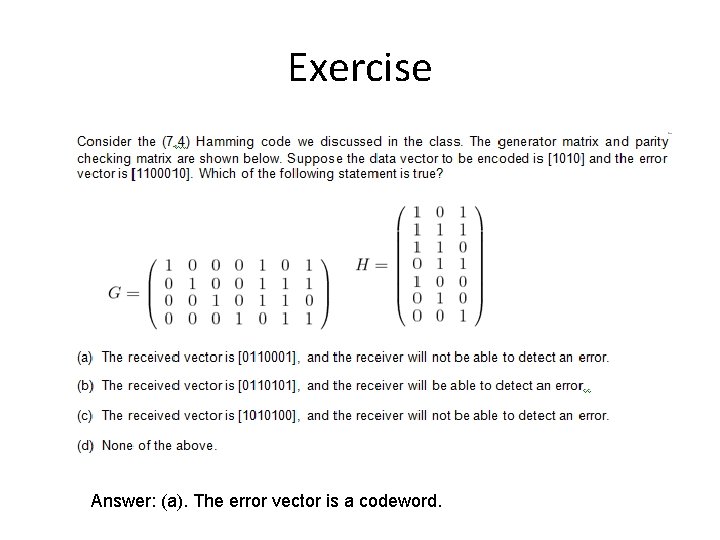

Exercise

Exercise Answer: (a). The error vector is a codeword.

The existence of H

- We did not compare a received vector with all codewords. We used $H$.
- The existence of $H$ is no coincidence (this needs some basic linear algebra). Let $\Omega$ be the space of all 7-bit vectors. The codeword space is a 4-dimensional subspace of $\Omega$ spanned by the row vectors of $G$. The vectors orthogonal to every codeword form a subspace of dimension $7 - 4 = 3$, so that subspace is spanned by 3 vectors.
- So we can take these 3 vectors and make them the column vectors of $H$. Then $vH = (0, 0, 0)$ exactly when $v$ is a codeword. Given that we have chosen a correct $G$ (one that can correct 1 error, i.e., every nonzero codeword has at least three 1s), any vector with only one or two 1 bits is not a codeword, so multiplying it by $H$ gives a nonzero vector.
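The orthogonality argument can be checked numerically. In this sketch both `G` and `H` are assumptions derived from the parity equations of the (7, 4) Hamming code slide; the helper names `dot` and `times_h` are illustrative.

```python
from itertools import combinations

# Assumed generator matrix (rows span the codeword space).
G = [
    [1, 0, 0, 0, 1, 0, 1],
    [0, 1, 0, 0, 1, 1, 1],
    [0, 0, 1, 0, 1, 1, 0],
    [0, 0, 0, 1, 0, 1, 1],
]
# Assumed parity check matrix (columns span the orthogonal subspace).
H = [[1, 0, 1], [1, 1, 1], [1, 1, 0], [0, 1, 1],
     [1, 0, 0], [0, 1, 0], [0, 0, 1]]

def dot(u, v):
    """Inner product over the binary field."""
    return sum(a * b for a, b in zip(u, v)) % 2

columns_of_h = list(zip(*H))

# Every row of G is orthogonal to every column of H, so G H = 0.
print(all(dot(g, h) == 0 for g in G for h in columns_of_h))  # True

def times_h(v):
    return tuple(dot(v, h) for h in columns_of_h)

# All vectors with exactly one or exactly two 1 bits.
light_vectors = [tuple(1 if k in positions else 0 for k in range(7))
                 for w in (1, 2)
                 for positions in combinations(range(7), w)]
print(all(times_h(v) != (0, 0, 0) for v in light_vectors))   # True
```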

Performance

- What is the overhead of the (7, 4) Hamming code? What price do we pay to correct one error bit in seven bits?
- Is it worth it?

Performance

- Probability distributions:
  - Bernoulli: toss a coin; the probability of heads is $p$ and of tails is $1-p$.
  - Geometric: keep tossing the coin; the probability that the first head appears on toss $i$ is $(1-p)^{i-1}p$, where $i > 0$.
  - Binomial: toss the coin $n$ times in total; the probability of seeing exactly $i$ heads is $\binom{n}{i}(1-p)^{n-i}p^i$, where $\binom{n}{i}$ means $n$ choose $i$.
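The geometric and binomial formulas translate directly into code. This is a small sketch with illustrative function names; it uses `math.comb` from the Python standard library for "n choose i".

```python
from math import comb

def geometric_pmf(i, p):
    """Probability that the first head appears on toss i (i >= 1)."""
    return (1 - p) ** (i - 1) * p

def binomial_pmf(i, n, p):
    """Probability of exactly i heads in n tosses."""
    return comb(n, i) * (1 - p) ** (n - i) * p ** i

print(geometric_pmf(1, 0.5))    # 0.5
print(binomial_pmf(1, 2, 0.5))  # 0.5

# Sanity check: the binomial probabilities over i = 0..n add up to 1
# (up to floating-point rounding).
print(abs(sum(binomial_pmf(i, 7, 0.1) for i in range(8)) - 1.0) < 1e-12)  # True
```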

Performance

- Suppose the raw Bit Error Ratio (BER) is $10^{-2}$. What is the BER after decoding the Hamming code?
  - Assume that if there is more than one bit error in a codeword, all decoded bits are wrong.

Performance

- Suppose the raw Bit Error Ratio (BER) is $p = 10^{-3}$. What is the BER after decoding the Hamming code?
  - Assume that if there is more than one bit error in a codeword, all decoded bits are wrong.
- Look at a codeword. The probability that it has $i$ error bits is $\binom{7}{i}(1-p)^{7-i}p^i$.
  - The probability that it has no error is about 0.99302. The probability that it has exactly one error is about 0.00696. In these cases there is no error after decoding, with a total probability of about 0.99998.
  - In the remaining fraction of about 0.00002 of the cases, assume all data bits are wrong.
  - The average error ratio is therefore about 0.00002.
- The (7, 4) Hamming code thus reduces the BER by almost two orders of magnitude.
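The calculation can be reproduced without rounding the intermediate values. The sketch below assumes independent bit errors and the pessimistic rule stated above (any codeword with two or more errors is counted as entirely wrong); the function names are illustrative.

```python
from math import comb

def prob_errors(i, p, n=7):
    """Probability that an n-bit codeword has exactly i bit errors."""
    return comb(n, i) * (1 - p) ** (n - i) * p ** i

def post_decoding_ber(p, n=7):
    """BER after decoding: probability of two or more errors in a codeword."""
    return 1.0 - prob_errors(0, p, n) - prob_errors(1, p, n)

for raw_ber in (1e-2, 1e-3):
    print(raw_ber, prob_errors(0, raw_ber), prob_errors(1, raw_ber),
          post_decoding_ber(raw_ber))
```

For a raw BER of $10^{-3}$ the result is roughly $2.1 \times 10^{-5}$, and for $10^{-2}$ it is roughly $2.0 \times 10^{-3}$.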

Interleaving

- Errors in communications do not occur independently.
  - Independence between two events A and B means that knowing A has happened tells us nothing more about whether B will happen.
  - Errors are not independent: if one bit is wrong, the probability that the next bit is wrong increases, just as knowing that it is raining today increases the probability that it will rain tomorrow.
- But we used the independence assumption in the performance analysis!

Interleaving

- In fact, it is more realistic to assume that errors occur in bursts.
- If errors occur in bursts, the (7, 4) Hamming code on its own will be useless because
  - it is unnecessary when there is no error, and
  - it cannot correct errors during an error burst, because every codeword hit by the burst will have more than one error bit.

Interleaving

- The solution is simple and neat.
- The sender will
  - encode the data into codewords,
  - apply a random permutation, known to both sides, to the encoded bits, and
  - transmit.
- The receiver will
  - apply the inverse of the permutation, and
  - decode the codewords.
- Errors that occur in the channel are thereby relocated to random positions!
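The effect on a burst can be sketched as follows. Several details are assumptions made for this illustration: the permutation is generated from a seed that sender and receiver are assumed to share, the block holds 20 codewords, and the burst flips 5 consecutive channel bits. Because a permutation only moves bits around, it is enough to track where the error positions end up after the receiver undoes the permutation.

```python
import random

def make_permutation(n, seed):
    """Pseudo-random permutation; both sides are assumed to know the seed."""
    rng = random.Random(seed)
    perm = list(range(n))
    rng.shuffle(perm)
    return perm

def interleave(bits, perm):
    """Channel position k carries input bit perm[k]."""
    return [bits[j] for j in perm]

def deinterleave(bits, perm):
    """Inverse of interleave: put channel bit k back at position perm[k]."""
    out = [0] * len(bits)
    for k, j in enumerate(perm):
        out[j] = bits[k]
    return out

def errors_per_codeword(error_bits, n=7):
    """Count the error bits that fall into each n-bit codeword."""
    return [sum(error_bits[k:k + n]) for k in range(0, len(error_bits), n)]

num_codewords = 20
total_bits = 7 * num_codewords
perm = make_permutation(total_bits, seed=2024)

# A burst that flips channel bits 30..34.
burst = [0] * total_bits
for k in range(30, 35):
    burst[k] = 1

# Without interleaving, all 5 errors land in the same codeword.
print(errors_per_codeword(burst))
# With interleaving, the receiver sees the errors at scattered positions.
print(errors_per_codeword(deinterleave(burst, perm)))
```

A random permutation makes it likely, but does not guarantee, that each codeword receives at most one of the burst errors.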

A Useful Website

- http://www.ka9q.net/code/fec/