Eraser: A Dynamic Data Race Detector for Multithreaded Programs



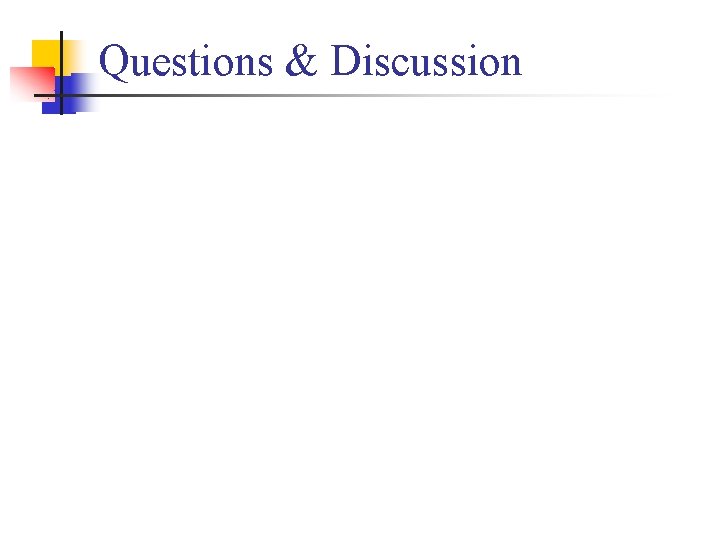

Eraser: A Dynamic Data Race Detector for Multithreaded Programs

Savage, Burrows, Nelson, Sobalvarro, Anderson. TOCS '97.

Presented by Pilsung Kang

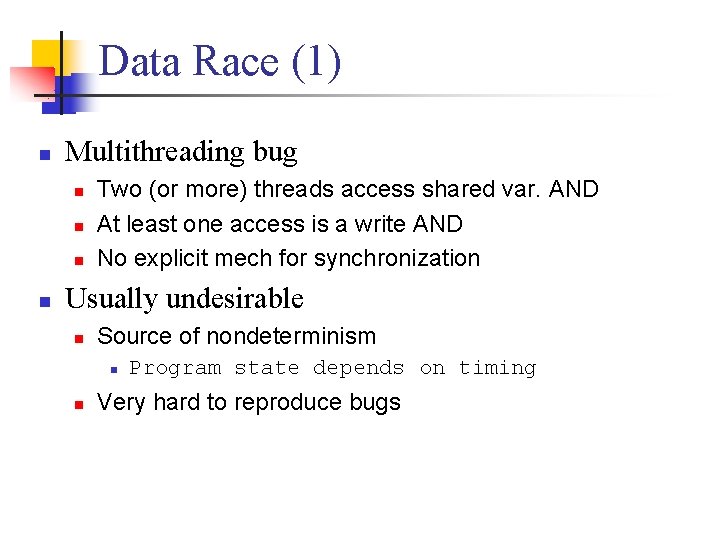

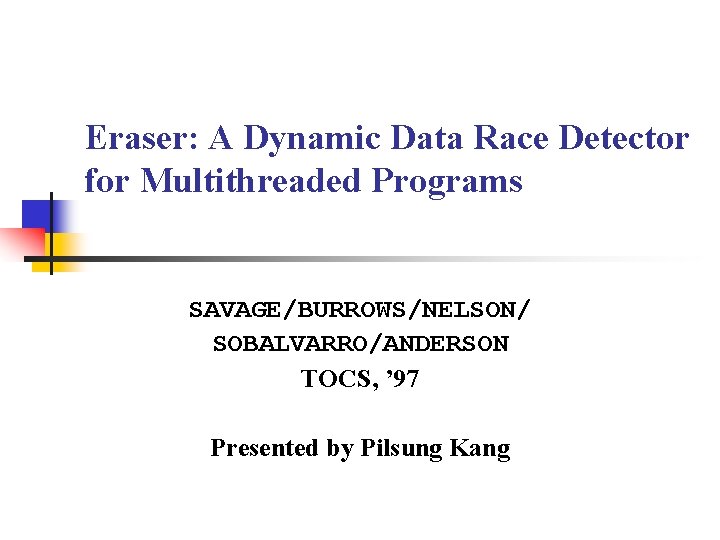

Key Ideas

- Multithreaded programming
  - Easy to make a synchronization mistake, which leads to a data race
  - Data races are hard to debug
- New approach to data race detection
  - Enforce a locking discipline
  - Every shared variable must be protected by some lock
- Claim
  - More efficient, simpler, more thorough

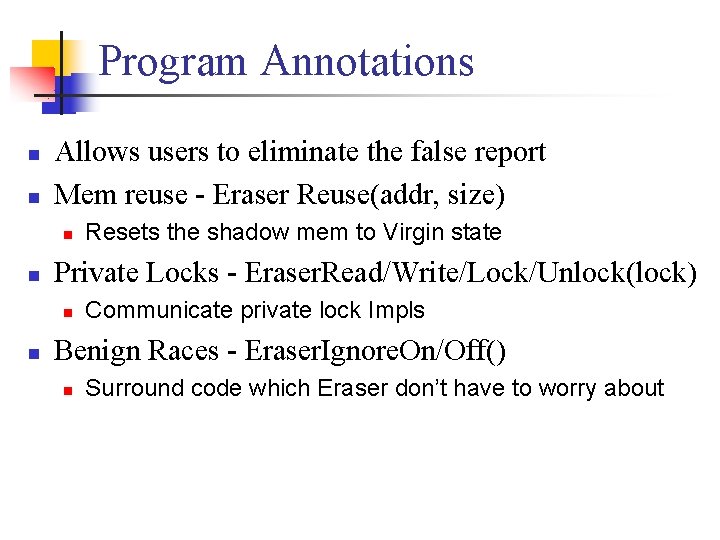

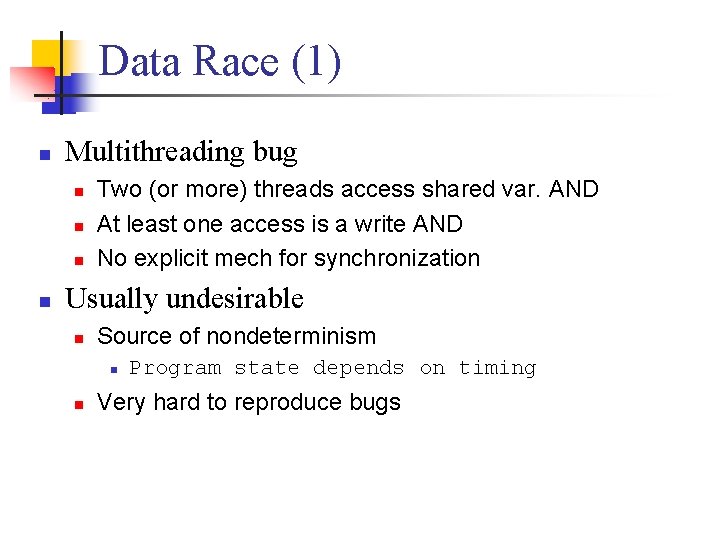

Data Race (1)

- Multithreading bug
  - Two (or more) threads access a shared variable, AND
  - At least one access is a write, AND
  - There is no explicit mechanism for synchronization
- Usually undesirable
  - Source of nondeterminism
  - Program state depends on timing
  - Bugs are very hard to reproduce

![Data Race (2)](https://slidetodoc.com/presentation_image/0e9c8e0ce6d52811978cae3e845de18d/image-4.jpg)

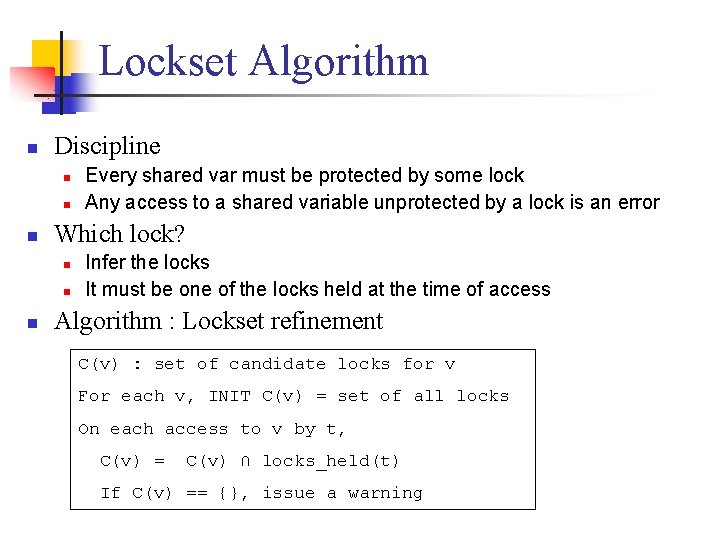

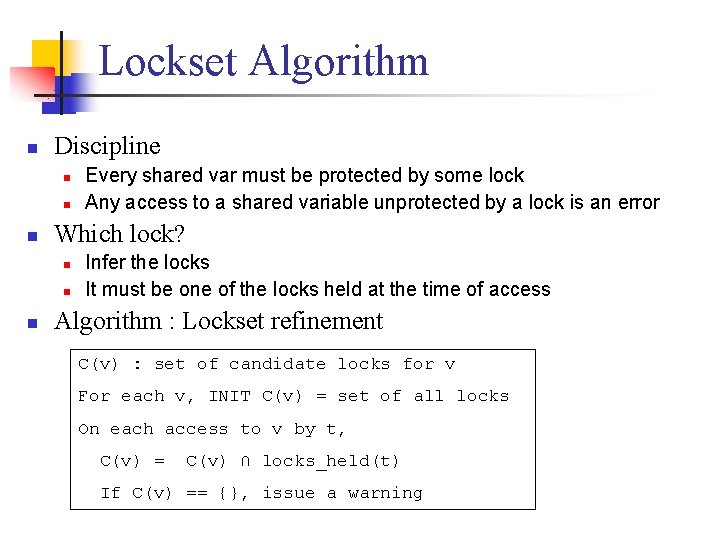

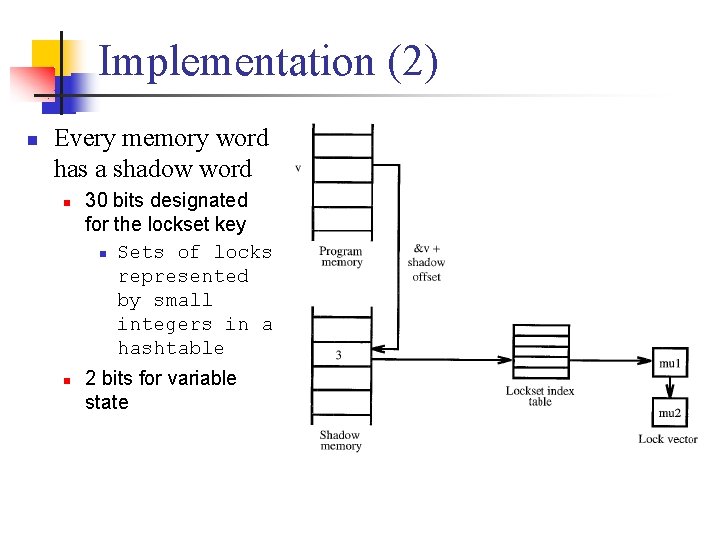

Data Race (2)

- Thread 1: `y += 1;` [y_1], then `lock(mu); v += 1; unlock(mu);` (marked A)
- Thread 2: `lock(mu); v += 1; unlock(mu);` (marked B), then `y += 1;` [y_2]
- Race condition check
  - Two threads (T1, T2) access y
  - Both accesses are writes
  - No protection on y

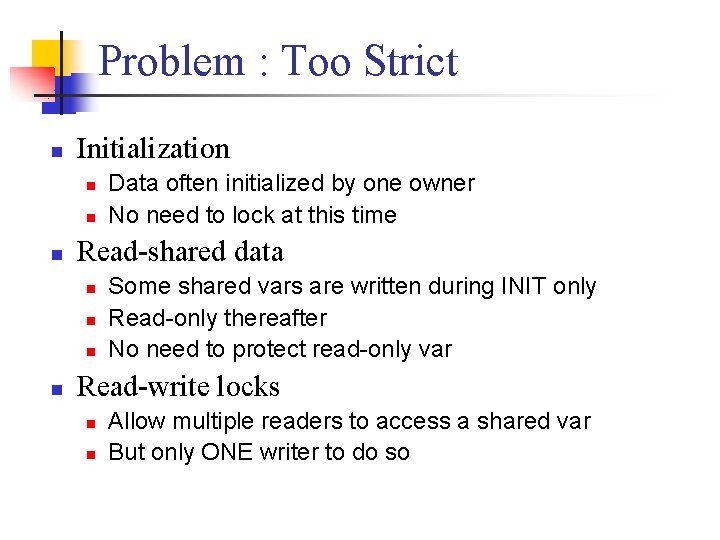

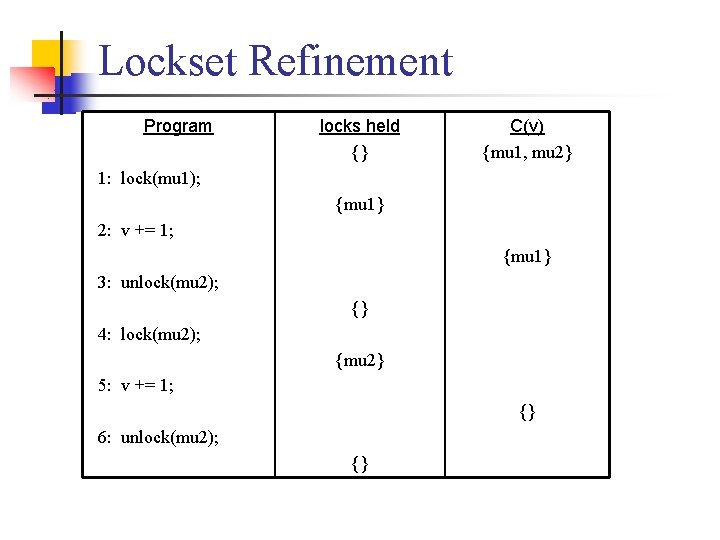

Lockset Algorithm

- Discipline
  - Every shared variable must be protected by some lock
  - Any access to a shared variable that is not protected by a lock is an error
- Which lock?
  - Infer the locks
  - It must be one of the locks held at the time of access
- Algorithm: lockset refinement
  - C(v): set of candidate locks for v
  - For each v, initialize C(v) to the set of all locks
  - On each access to v by thread t, C(v) = C(v) ∩ locks_held(t)
  - If C(v) = {}, issue a warning

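Lockset Refinement

| Program | locks_held | C(v) |
|---|---|---|
| (initially) | {} | {mu1, mu2} |
| `lock(mu1);` | {mu1} | {mu1, mu2} |
| `v += 1;` | {mu1} | {mu1} |
| `unlock(mu1);` | {} | {mu1} |
| `lock(mu2);` | {mu2} | {mu1} |
| `v += 1;` | {mu2} | {} |
| `unlock(mu2);` | {} | {} |

C(v) becomes empty at the second `v += 1;`, so that is where the warning is issued.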
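The refinement rule can be sketched in a few lines of Python. This is only an illustration of C(v) = C(v) ∩ locks_held(t), not Eraser's implementation; the class name `LocksetChecker`, its methods, and the thread id `"t1"` are hypothetical. The usage part replays a two-lock sequence like the one on the slide, assuming the first critical section releases `mu1` before `mu2` is taken.

```python
class LocksetChecker:
    """Basic lockset refinement. All names are illustrative assumptions."""

    def __init__(self, all_locks):
        self.all_locks = frozenset(all_locks)
        self.locks_held = {}   # thread id -> set of locks currently held
        self.candidates = {}   # variable name -> C(v)
        self.warnings = []     # variables whose C(v) became empty

    def lock(self, thread, mutex):
        self.locks_held.setdefault(thread, set()).add(mutex)

    def unlock(self, thread, mutex):
        self.locks_held.setdefault(thread, set()).discard(mutex)

    def access(self, thread, var):
        # C(v) starts as the set of all locks and only ever shrinks.
        held = self.locks_held.get(thread, set())
        refined = self.candidates.get(var, self.all_locks) & held
        self.candidates[var] = refined
        if not refined and var not in self.warnings:
            self.warnings.append(var)
        return refined


checker = LocksetChecker({"mu1", "mu2"})
checker.lock("t1", "mu1")
first = checker.access("t1", "v")    # C(v) refined while holding mu1
checker.unlock("t1", "mu1")
checker.lock("t1", "mu2")
second = checker.access("t1", "v")   # C(v) refined while holding mu2
checker.unlock("t1", "mu2")
```

After the first access `first` holds only `mu1`; after the second access `second` is empty and `"v"` is recorded in `checker.warnings`.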

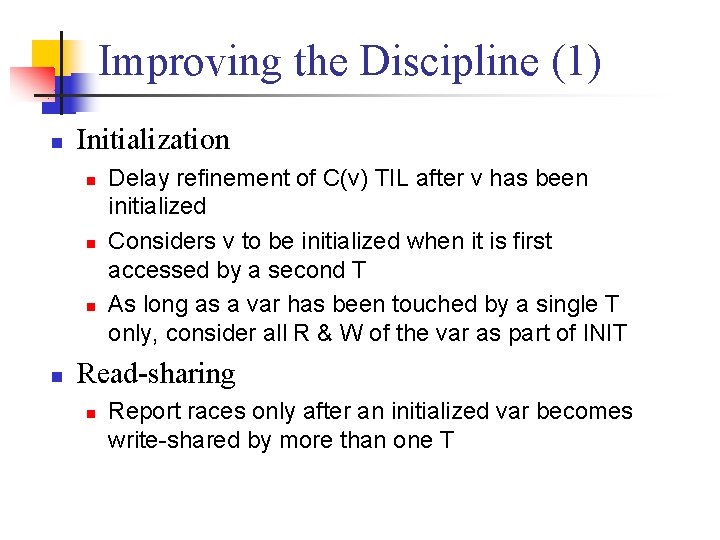

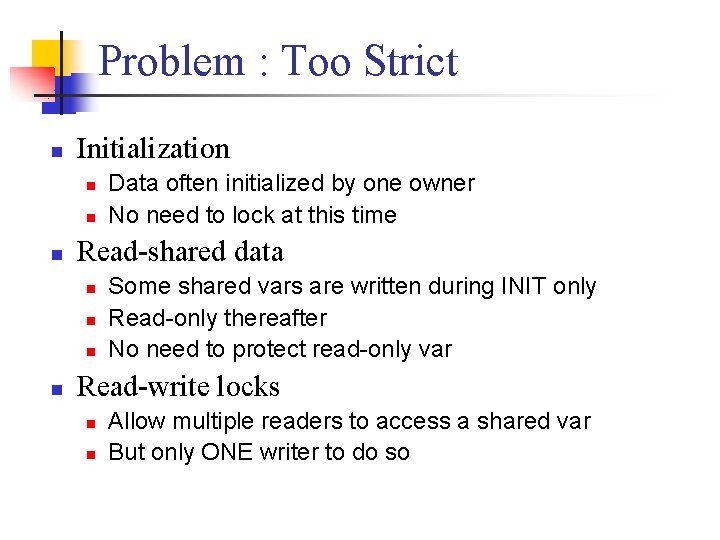

Problem: Too Strict

- Initialization
  - Data is often initialized by one owner
  - No need to lock at this time
- Read-shared data
  - Some shared variables are written during initialization only
  - Read-only thereafter
  - No need to protect a read-only variable
- Read-write locks
  - Allow multiple readers to access a shared variable
  - But only ONE writer to do so

Improving the Discipline (1)

- Initialization
  - Delay refinement of C(v) until after v has been initialized
  - Consider v initialized when it is first accessed by a second thread
  - As long as a variable has been touched by a single thread only, treat all reads and writes of it as part of initialization
- Read-sharing
  - Report races only after an initialized variable becomes write-shared by more than one thread

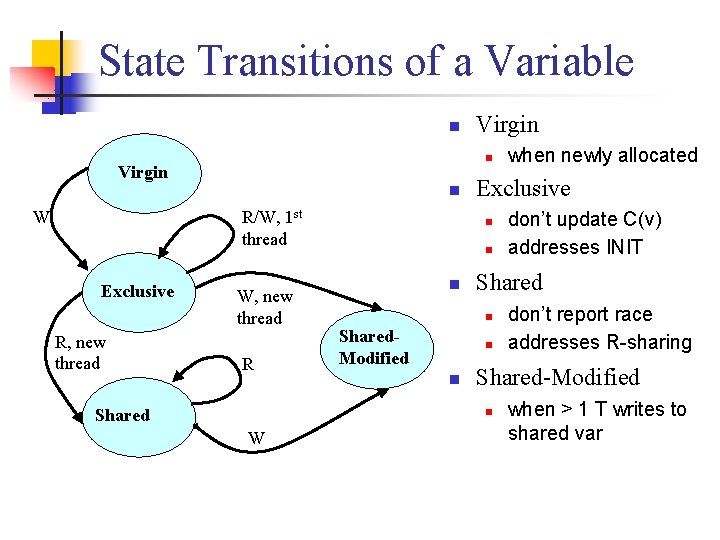

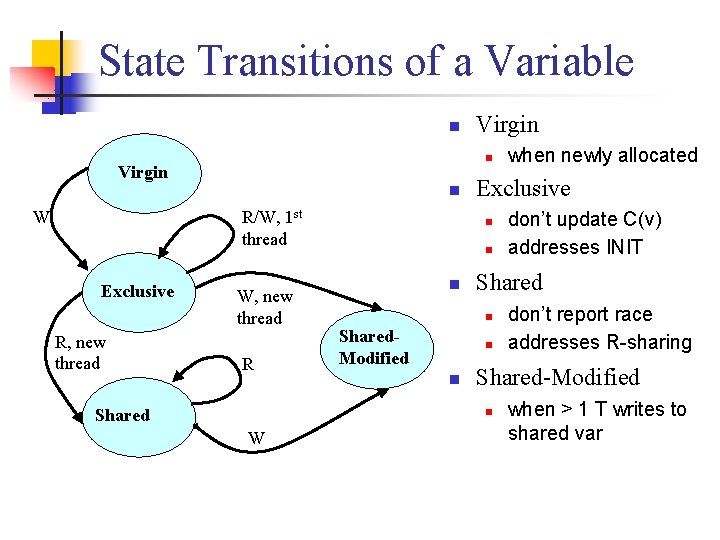

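State Transitions of a Variable

- States
  - Virgin: when newly allocated
  - Exclusive: don't update C(v); addresses initialization
  - Shared: don't report races; addresses read-sharing
  - Shared-Modified: when more than one thread writes to the shared variable
- Transitions
  - Virgin → Exclusive: W
  - Exclusive → Exclusive: R/W, 1st thread
  - Exclusive → Shared: R, new thread
  - Exclusive → Shared-Modified: W, new thread
  - Shared → Shared: R
  - Shared → Shared-Modified: W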
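The state diagram can be written as a small transition function. This is a sketch under stated assumptions: the names `State` and `next_state` are hypothetical, the variable's first writer is tracked as `owner`, and a read of a Virgin variable is assumed to leave it Virgin, since the slide only labels the write edge out of that state.

```python
from enum import Enum


class State(Enum):
    VIRGIN = 0
    EXCLUSIVE = 1
    SHARED = 2
    SHARED_MODIFIED = 3


def next_state(state, is_write, thread, owner):
    """Return (new_state, new_owner) for one access by `thread`."""
    if state is State.VIRGIN:
        # Assumption: only a write leaves Virgin; the writer becomes the owner.
        return (State.EXCLUSIVE, thread) if is_write else (state, owner)
    if state is State.EXCLUSIVE:
        if thread == owner:
            return state, owner          # R/W by the first thread
        # First access by a new thread ends the initialization phase.
        return (State.SHARED_MODIFIED if is_write else State.SHARED), owner
    if state is State.SHARED:
        return (State.SHARED_MODIFIED if is_write else State.SHARED), owner
    return State.SHARED_MODIFIED, owner  # no edges leave Shared-Modified


# Initialization by t1, then read-sharing by t2, then a write by t2.
s, o = next_state(State.VIRGIN, True, "t1", None)
s, o = next_state(s, False, "t1", o)
after_init = s
s, o = next_state(s, False, "t2", o)
after_read_share = s
s, o = next_state(s, True, "t2", o)
after_write_share = s
```

Keeping the owner separate from the state mirrors the idea that the Exclusive state only needs to remember which thread touched the variable first.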

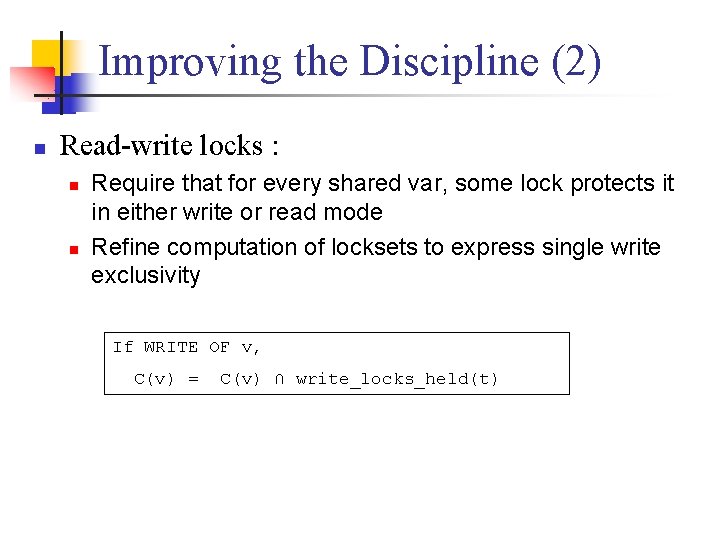

Improving the Discipline (2)

- Read-write locks
  - Require that for every shared variable, some lock protects it in either write or read mode
  - Refine the computation of locksets to express single-writer exclusivity
  - On a write of v, C(v) = C(v) ∩ write_locks_held(t)
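A minimal sketch of the refined rule, assuming that reads keep using the basic rule C(v) ∩ locks_held(t) from the lockset algorithm while writes use write_locks_held(t). The function name `refine` and the lock name `rw` are hypothetical.

```python
def refine(candidates, is_write, locks_held, write_locks_held):
    """Return the refined C(v) for one access.

    locks_held: every lock the thread holds, in read or write mode.
    write_locks_held: only the locks the thread holds in write mode.
    """
    held = write_locks_held if is_write else locks_held
    return frozenset(candidates) & frozenset(held)


c = frozenset({"rw"})
# A reader holding rw in read mode keeps rw as a candidate.
after_read = refine(c, False, locks_held={"rw"}, write_locks_held=set())
# A writer holding rw only in read mode empties C(v): potential race.
after_bad_write = refine(after_read, True, locks_held={"rw"}, write_locks_held=set())
# A writer holding rw in write mode keeps rw as a candidate.
after_good_write = refine(after_read, True, locks_held={"rw"}, write_locks_held={"rw"})
```

The point of the split is that a lock held in read mode does not exclude other readers, so it cannot protect a write.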

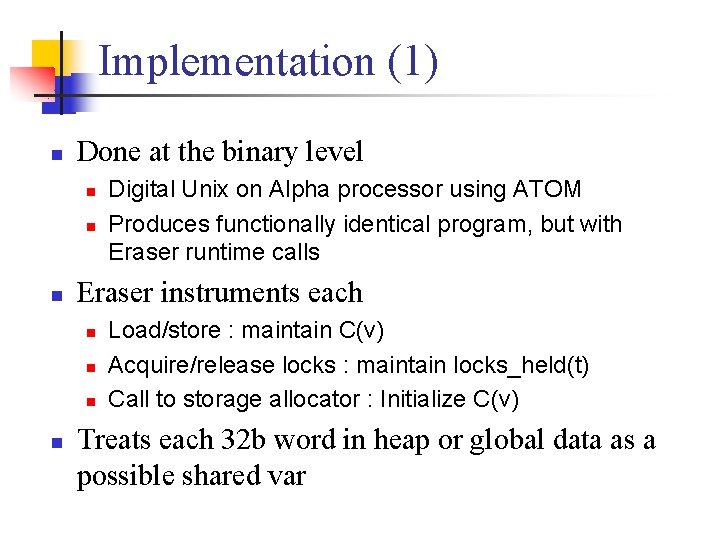

Implementation (1)

- Done at the binary level
  - Digital Unix on the Alpha processor, using ATOM
  - Produces a functionally identical program, but with Eraser runtime calls
- Eraser instruments each
  - Load/store: maintain C(v)
  - Lock acquire/release: maintain locks_held(t)
  - Call to the storage allocator: initialize C(v)
- Treats each 32-bit word in heap or global data as a possible shared variable

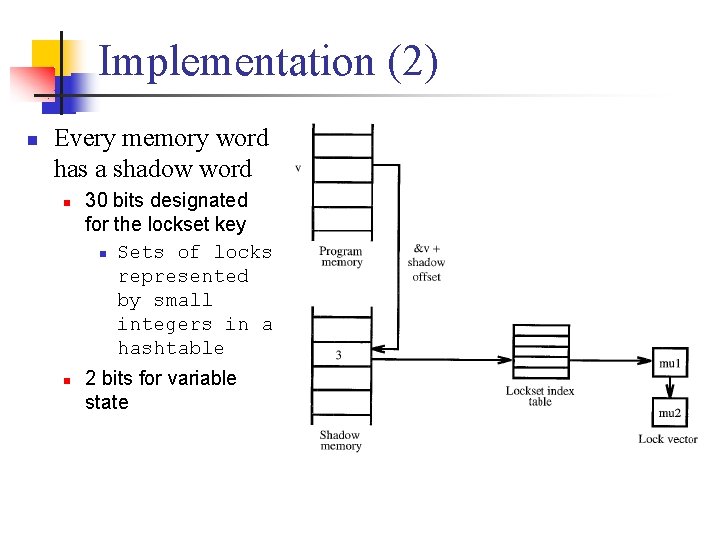

Implementation (2)

- Every memory word has a shadow word
  - 30 bits designated for the lockset key
    - Sets of locks are represented by small integers in a hash table
  - 2 bits for the variable state
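The shadow-word layout can be illustrated as follows. The slide gives only the 30/2 bit split, so placing the state in the low two bits is an assumption, and `LocksetTable`, `pack`, and `unpack` are hypothetical names rather than Eraser's own.

```python
STATE_BITS = 2
KEY_BITS = 30
STATE_MASK = (1 << STATE_BITS) - 1


class LocksetTable:
    """Maps each distinct set of locks to a small integer key."""

    def __init__(self):
        self._index = {}   # frozenset of locks -> key
        self._sets = []    # key -> frozenset of locks

    def key_for(self, locks):
        lockset = frozenset(locks)
        if lockset not in self._index:
            self._index[lockset] = len(self._sets)
            self._sets.append(lockset)
        return self._index[lockset]

    def locks_for(self, key):
        return self._sets[key]


def pack(key, state):
    """Pack a 30-bit lockset key and a 2-bit state into one 32-bit word."""
    if not (0 <= key < (1 << KEY_BITS) and 0 <= state <= STATE_MASK):
        raise ValueError("key or state out of range")
    return (key << STATE_BITS) | state


def unpack(word):
    """Return (key, state) from a shadow word."""
    return word >> STATE_BITS, word & STATE_MASK


table = LocksetTable()
key = table.key_for({"mu1", "mu2"})
word = pack(key, 3)  # 3 stands for one of the four variable states
```

Because equal sets share one key, comparing or storing a lockset per memory word costs a single integer rather than a full set.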

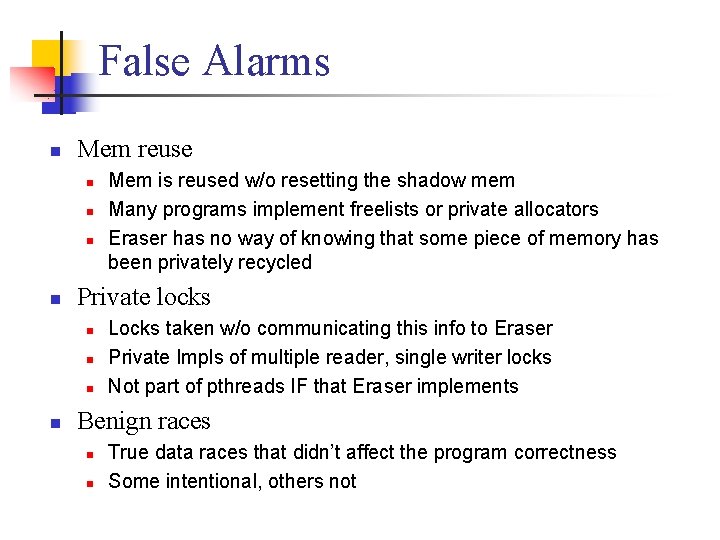

Performance

- Not optimized
- Applications slow down by 10x to 30x
  - Half of the slowdown is due to procedure call overhead
    - Could be eliminated with code inlining
  - Could use static analysis to reduce the overhead of the monitoring code
- Fast enough to debug most programs

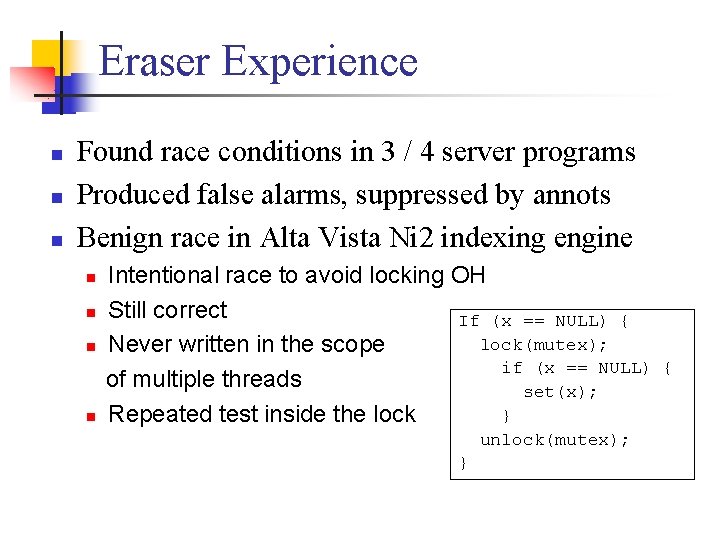

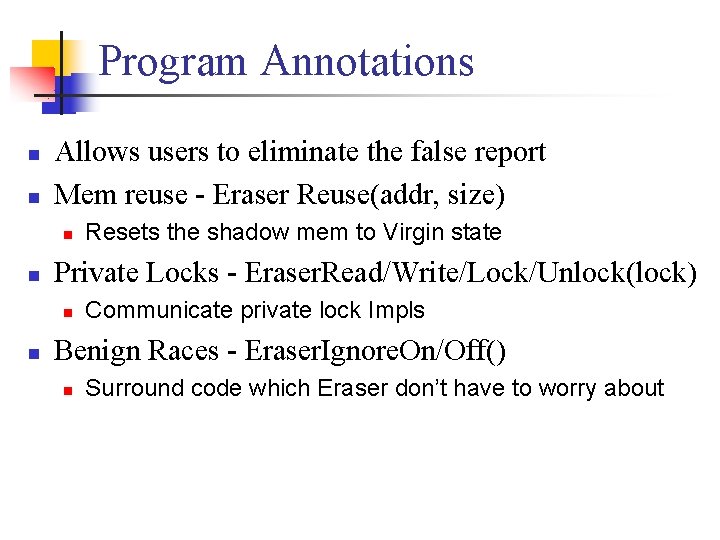

False Alarms

- Memory reuse
  - Memory is reused without resetting the shadow memory
  - Many programs implement free lists or private allocators
  - Eraser has no way of knowing that some piece of memory has been privately recycled
- Private locks
  - Locks are taken without communicating this information to Eraser
  - Private implementations of multiple-reader, single-writer locks
  - Not part of the pthreads interface that Eraser implements
- Benign races
  - True data races that did not affect program correctness
  - Some intentional, others not

Program Annotations

- Allow users to eliminate false reports
- Memory reuse: `EraserReuse(addr, size)`
  - Resets the shadow memory to the Virgin state
- Private locks: `EraserReadLock(lock)`, `EraserReadUnlock(lock)`, `EraserWriteLock(lock)`, `EraserWriteUnlock(lock)`
  - Communicate private lock implementations to Eraser
- Benign races: `EraserIgnoreOn()` / `EraserIgnoreOff()`
  - Surround code that Eraser does not need to check

Eraser Experience

- Found race conditions in 3 of 4 server programs
- Produced false alarms, which were suppressed by annotations
- Benign race in the AltaVista Ni2 indexing engine
  - Intentional race to avoid locking overhead
  - Still correct
  - Pattern: `if (x == NULL) { lock(mutex); if (x == NULL) set(x); unlock(mutex); }`
  - Never written in the scope of multiple threads
  - The test is repeated inside the lock

Review

- Data race: multithreading bug
  - Two (or more) threads access a shared variable, AND
  - At least one access is a write, AND
  - There is no explicit mechanism for synchronization
- Eraser detects data races dynamically
  - Enforces a simple locking discipline
  - Every shared variable access should be protected by a lock
- Practical for checking data races
- False alarms and annotations

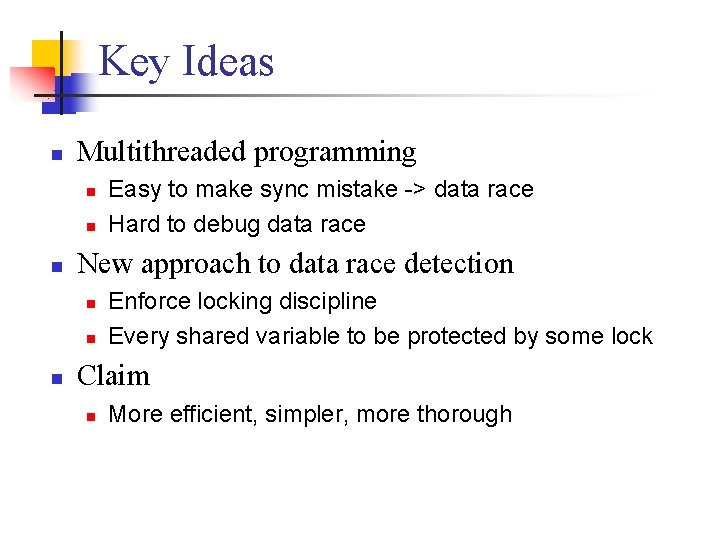

Paper Evaluation

- Practical approach to data race detection
  - Easier to implement than previous work
  - Checking the discipline finds errors with relatively few runs
  - First attempt at lockset-based detection
- Eraser is slow: 10x to 30x slowdown
  - Could have used static analysis to reduce overhead
- False positive issues
  - Perfectly valid, race-free code can violate the locking discipline
  - Annotation can't be perfect

Questions & Discussion