Environment: Cloudera QuickStart VM 5.4.2


Environment
• Cloudera QuickStart VM 5.4.2
• Guide for download: http://ailab.ssu.ac.kr/rb/?c=8/29&cat=2015_2_%EC%9C%A0%EB%B9%84%EC%BF%BC%ED%84%B0%EC%8A%A4+%EC%9D%91%EC%9A%A9%EC%8B%9C%EC%8A%A4%ED%85%9C&uid=660

Contents
• Using HDFS
  • How To Use
  • How To Upload File
  • How To View and Manipulate File
  • Exercise
• Running MapReduce Job: WordCount
  • Goal
  • Review of MapReduce
  • Code Review
  • Run WordCount Program
• Extra Exercise: Number of Connections per Hour
  • Meaningful Data from ‘Access Log’
  • Foundation of Regular Expressions
  • Run MapReduce Job
• Importing Data With Sqoop
  • Review MySQL
  • How To Use

Using HDFS With Exercise

Using HDFS
• How to Use HDFS
• How to Upload File
• How to View and Manipulate File

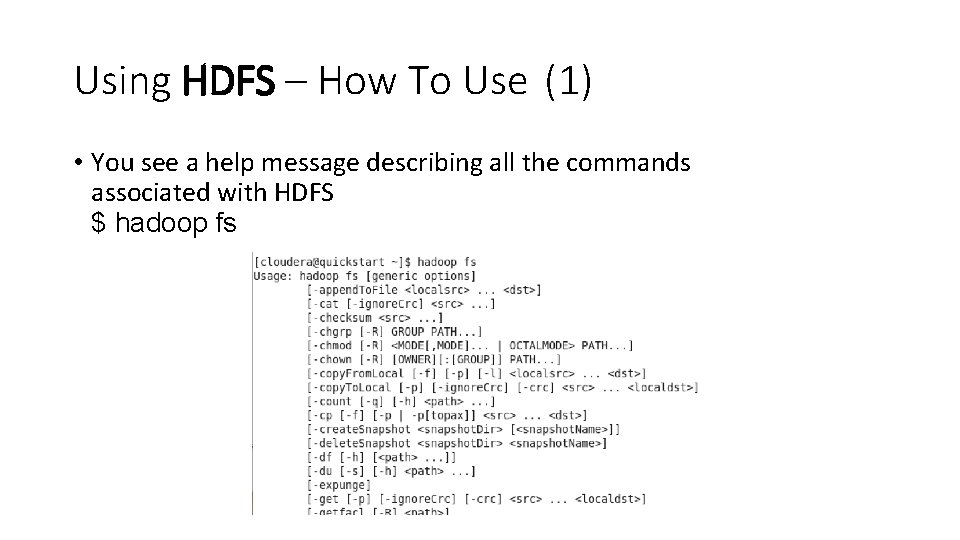

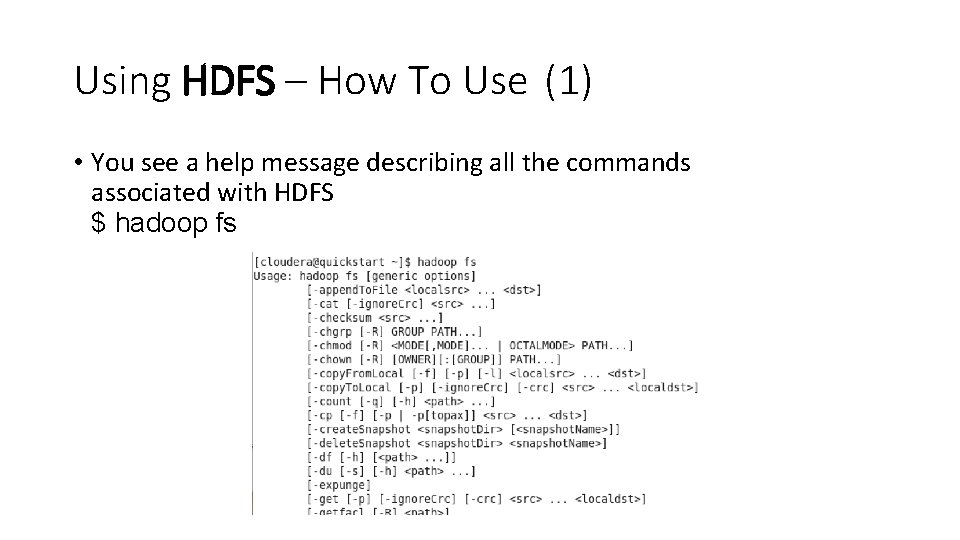

Using HDFS – How To Use (1)
• Running the command with no arguments prints a help message describing all the commands associated with HDFS:
    $ hadoop fs

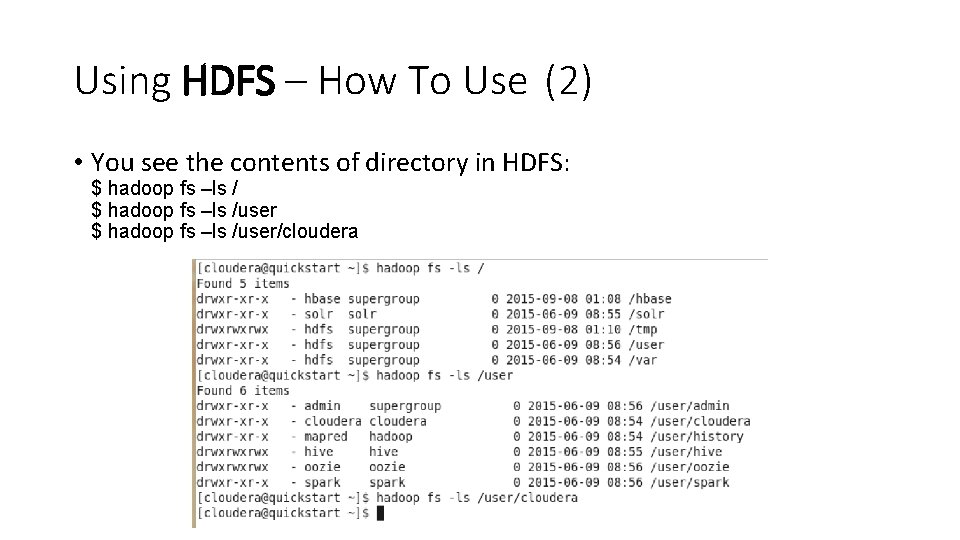

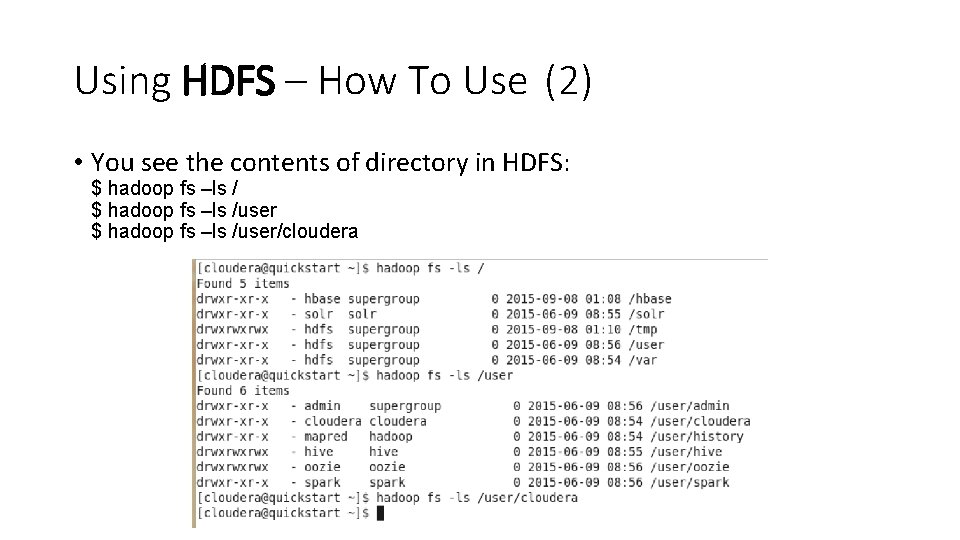

Using HDFS – How To Use (2)
• List the contents of a directory in HDFS:
    $ hadoop fs -ls /user/cloudera

Exercise How To Use

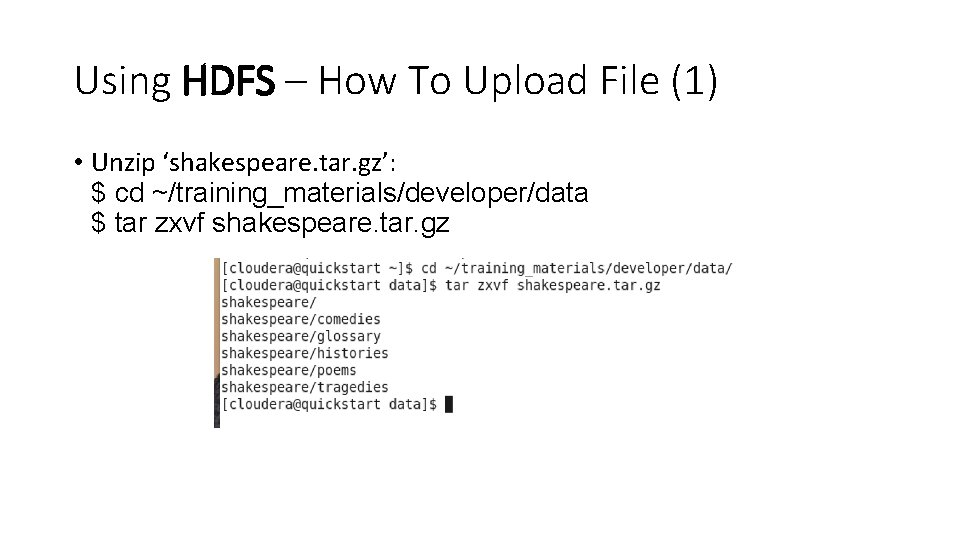

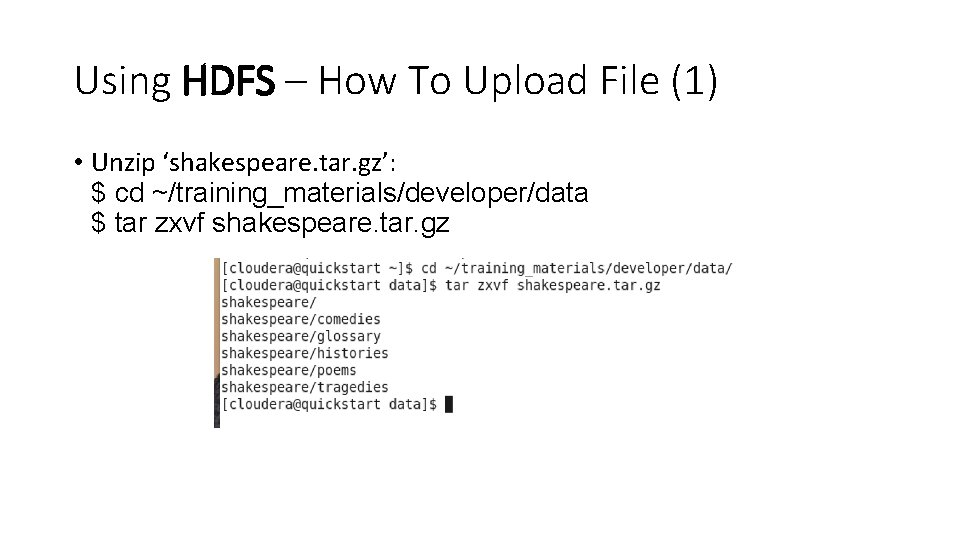

Using HDFS – How To Upload File (1)
• Unpack ‘shakespeare.tar.gz’:
    $ cd ~/training_materials/developer/data
    $ tar zxvf shakespeare.tar.gz

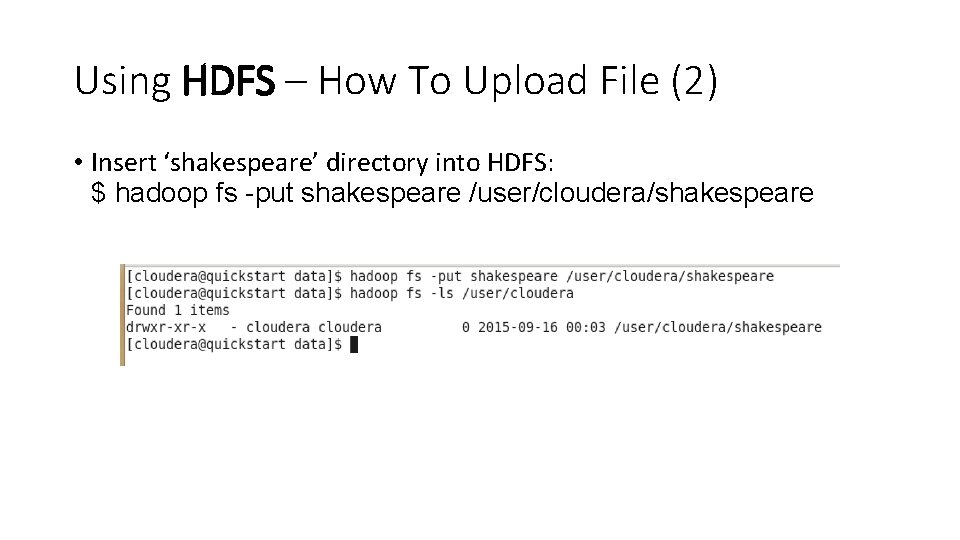

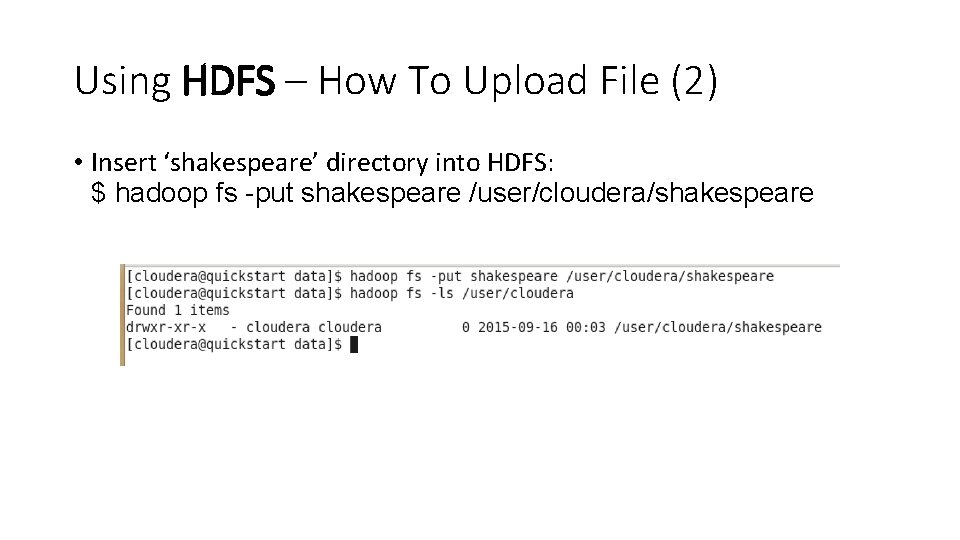

Using HDFS – How To Upload File (2)
• Copy the local ‘shakespeare’ directory into HDFS:
    $ hadoop fs -put shakespeare /user/cloudera/shakespeare

Exercise How To Upload

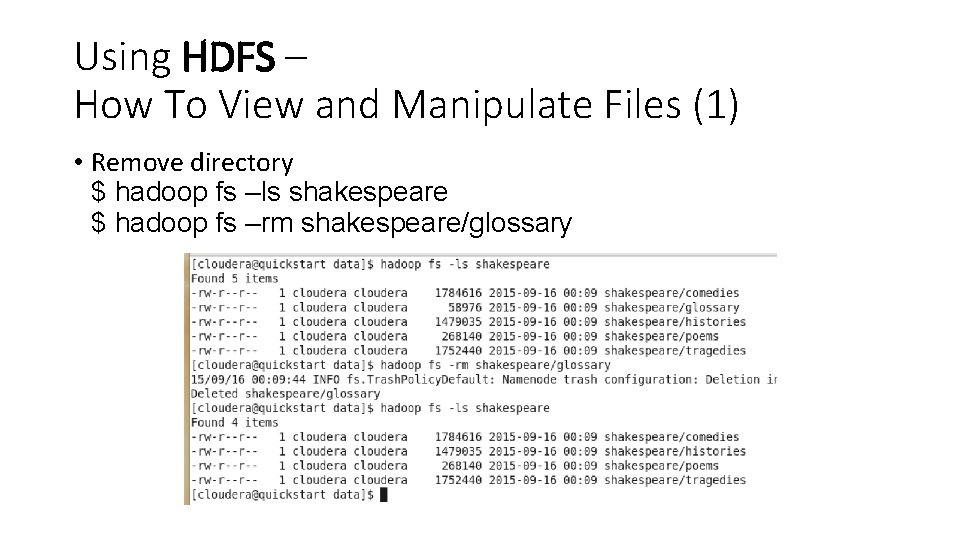

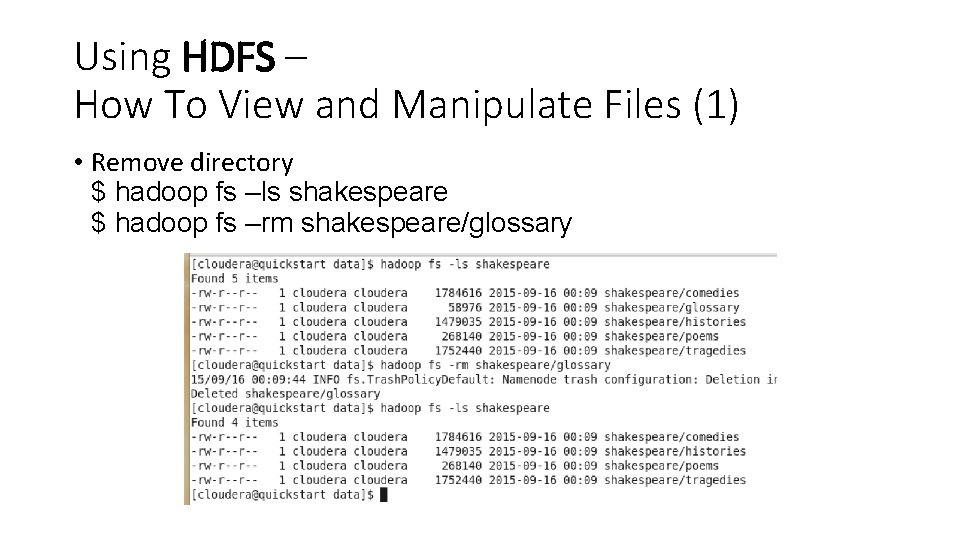

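Using HDFS – How To View and Manipulate Files (1)
• List the uploaded directory, then remove the ‘glossary’ file from it (-rm removes files; removing a directory requires -rm -r):
    $ hadoop fs -ls shakespeare
    $ hadoop fs -rm shakespeare/glossary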

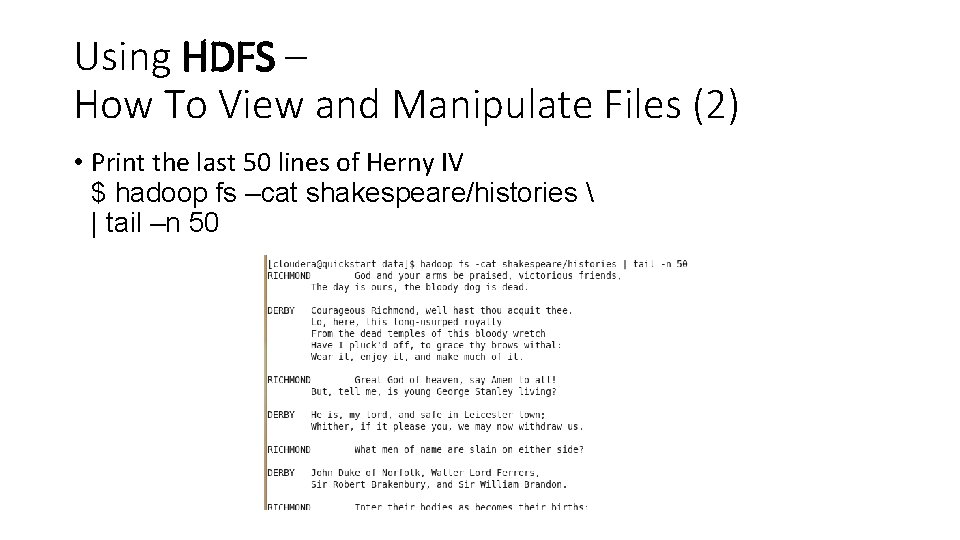

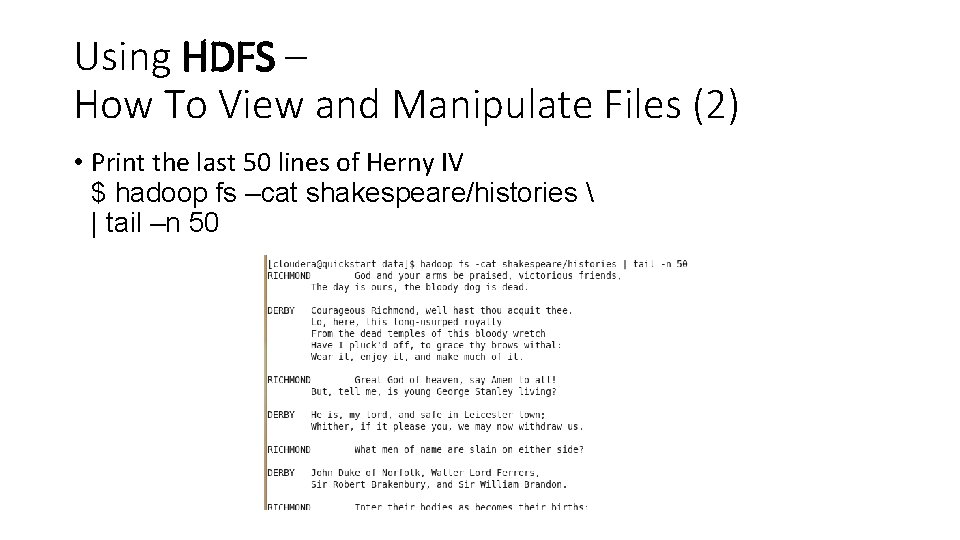

Using HDFS – How To View and Manipulate Files (2)
• Print the last 50 lines of Henry IV by piping the HDFS file through the local tail command:
    $ hadoop fs -cat shakespeare/histories | tail -n 50

Using HDFS – How To View and Manipulate Files (3)
• Download a file to the local file system and view it:
    $ hadoop fs -get shakespeare/poems ~/shakepoems.txt
    $ less ~/shakepoems.txt
• To see the other available commands:
    $ hadoop fs

Exercise How To View and Manipulate Files

Running a MapReduce Job With Exercise

Running a MapReduce Job
• Goal
• Review of MapReduce
• Code Review
• Run WordCount Program

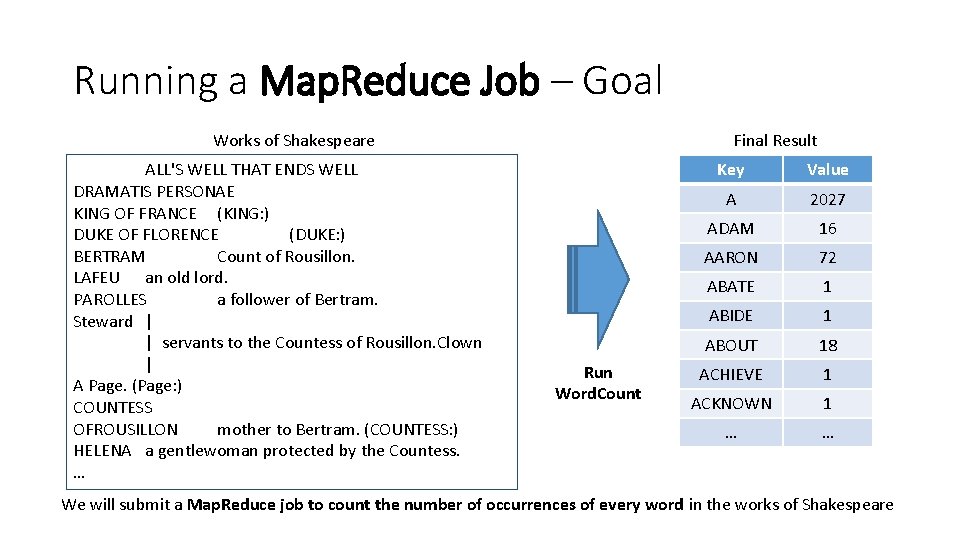

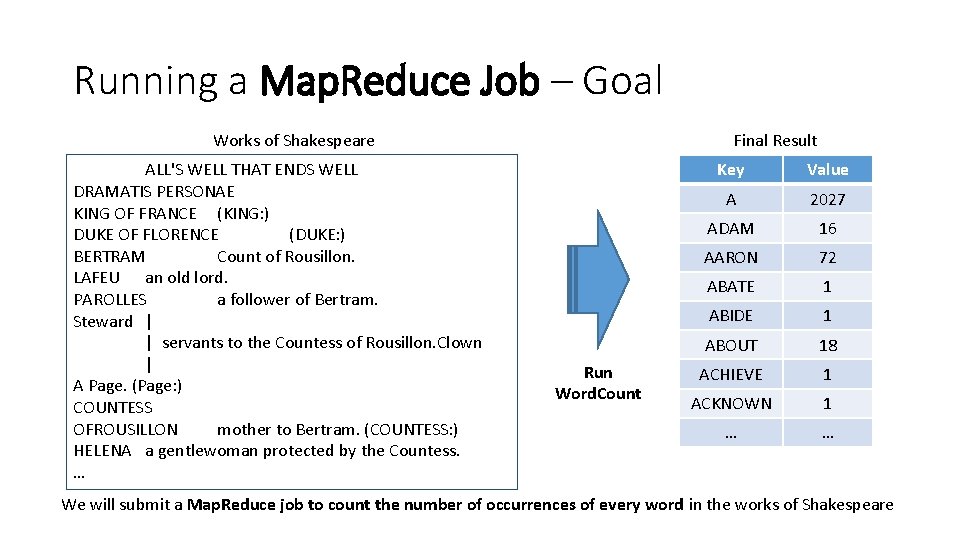

Running a MapReduce Job – Goal

We will submit a MapReduce job to count the number of occurrences of every word in the works of Shakespeare.

Input: works of Shakespeare

    ALL'S WELL THAT ENDS WELL
    DRAMATIS PERSONAE
    KING OF FRANCE (KING:)
    DUKE OF FLORENCE (DUKE:)
    BERTRAM Count of Rousillon.
    LAFEU an old lord.
    PAROLLES a follower of Bertram.
    Steward |
            | servants to the Countess of Rousillon.
    Clown   |
    A Page. (Page:)
    COUNTESS OF ROUSILLON mother to Bertram. (COUNTESS:)
    HELENA a gentlewoman protected by the Countess.
    …

Final result after running WordCount:

| Key | Value |
|---|---|
| A | 2027 |
| ADAM | 16 |
| AARON | 72 |
| ABATE | 1 |
| ABIDE | 1 |
| ABOUT | 18 |
| ACHIEVE | 1 |
| ACKNOWN | 1 |
| … | … |

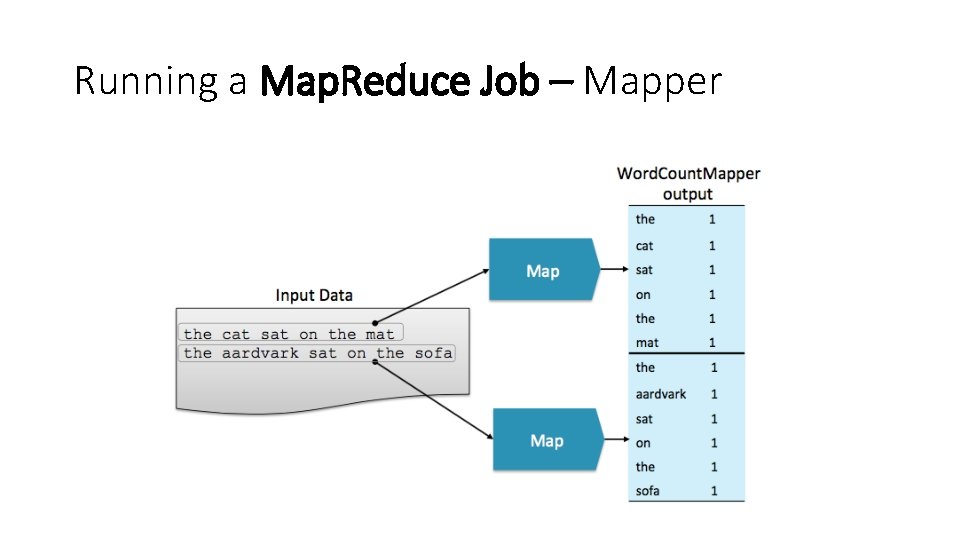

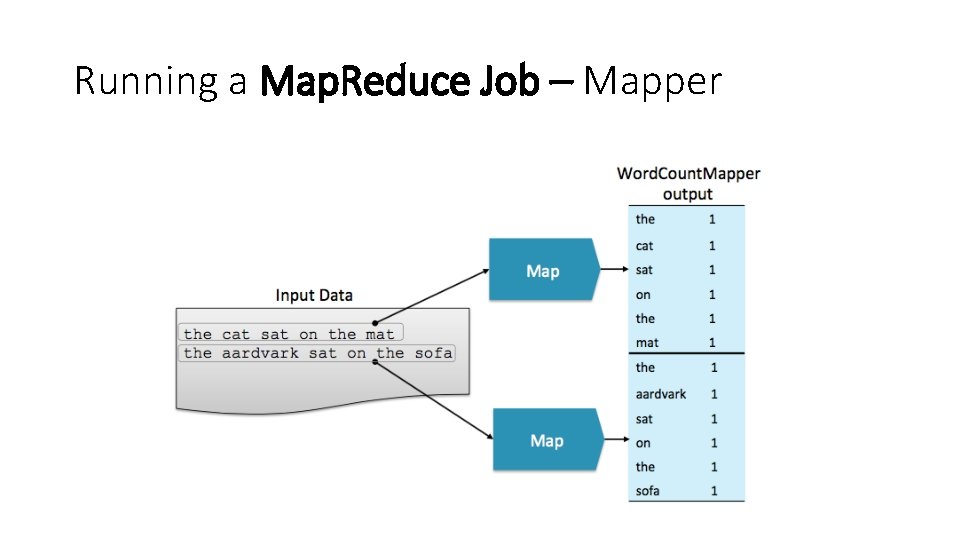

Running a MapReduce Job – Mapper

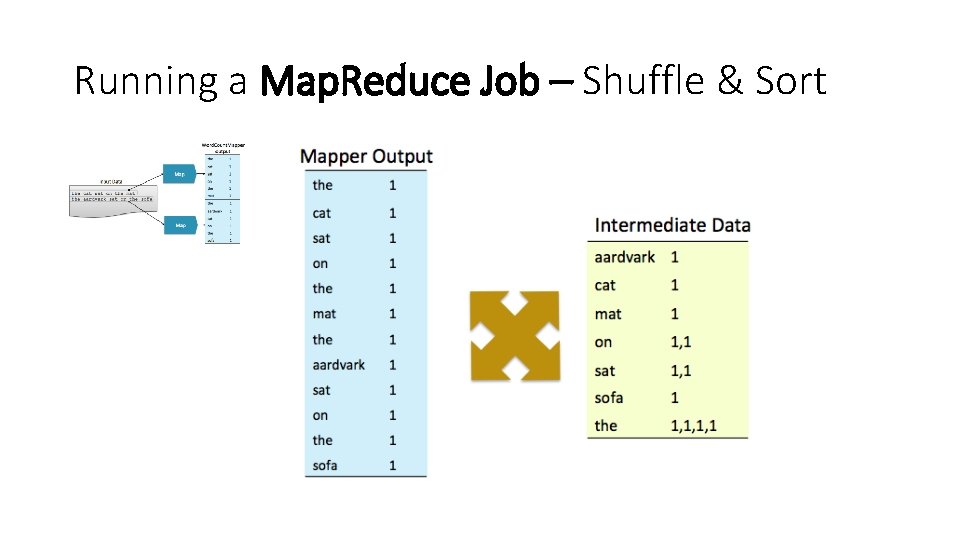

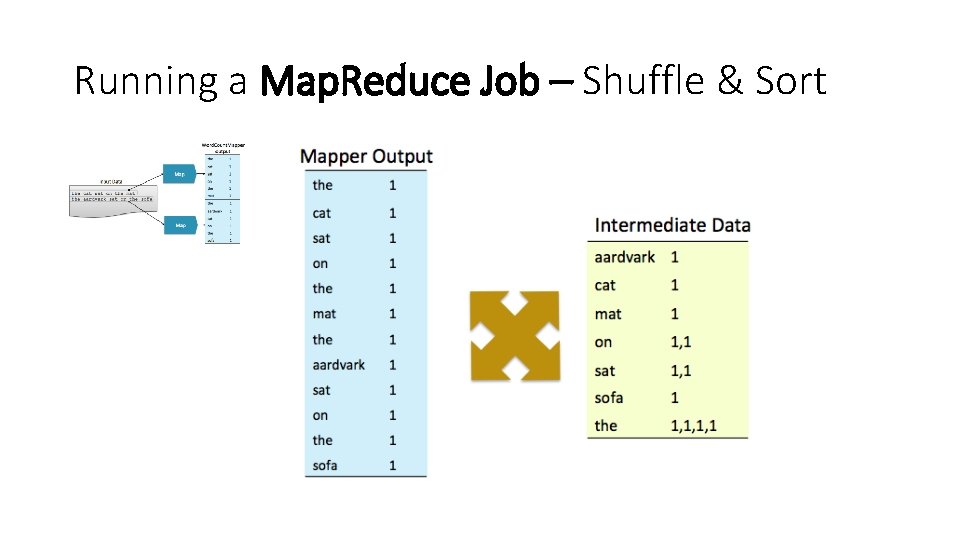

Running a MapReduce Job – Shuffle & Sort

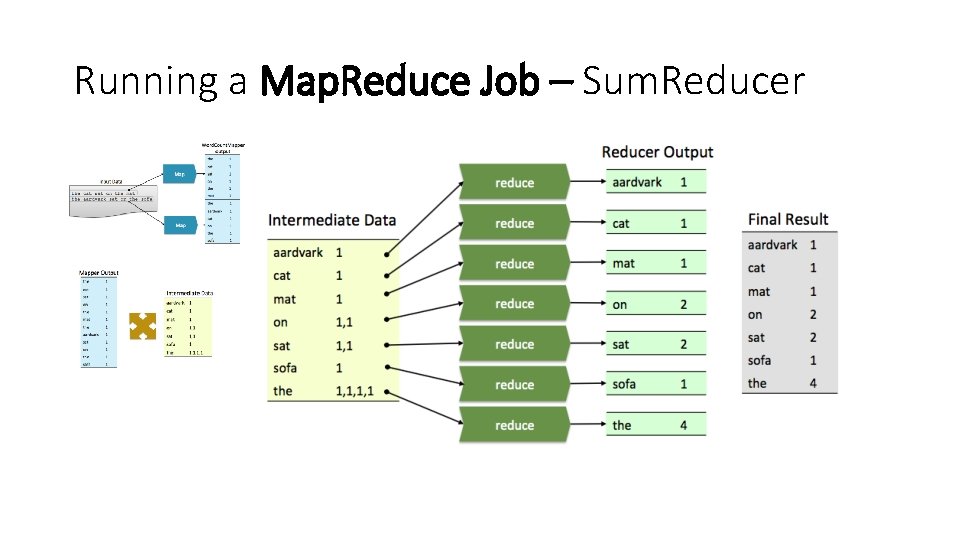

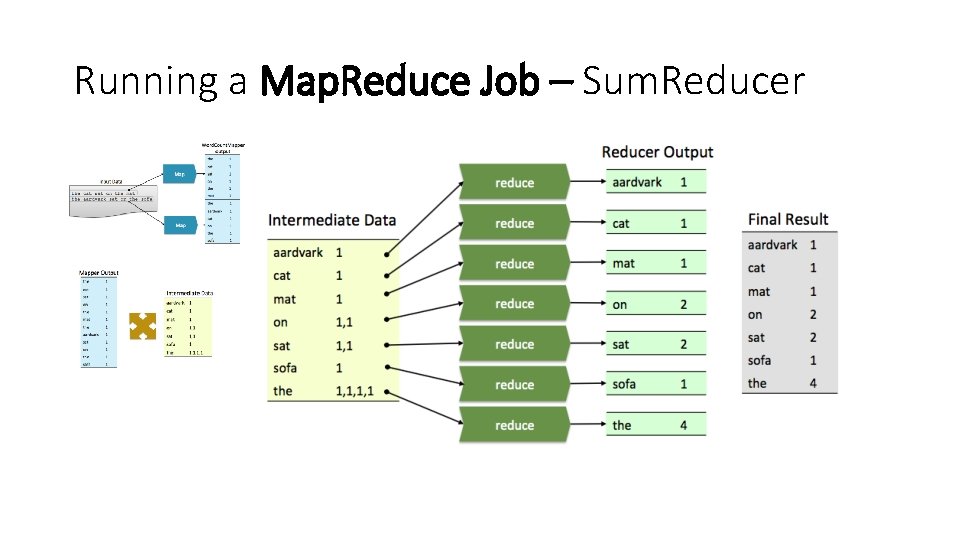

Running a MapReduce Job – SumReducer

Running a MapReduce Job – WordCount Code Review
• WordCount.java
  • A simple MapReduce driver class
• WordMapper.java
  • A Mapper class for the job
• SumReducer.java
  • A Reducer class for the job

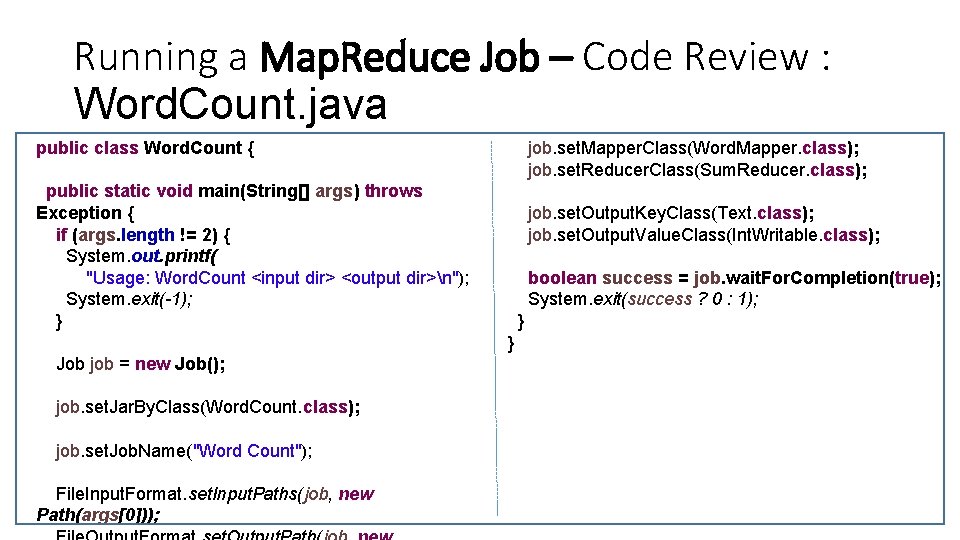

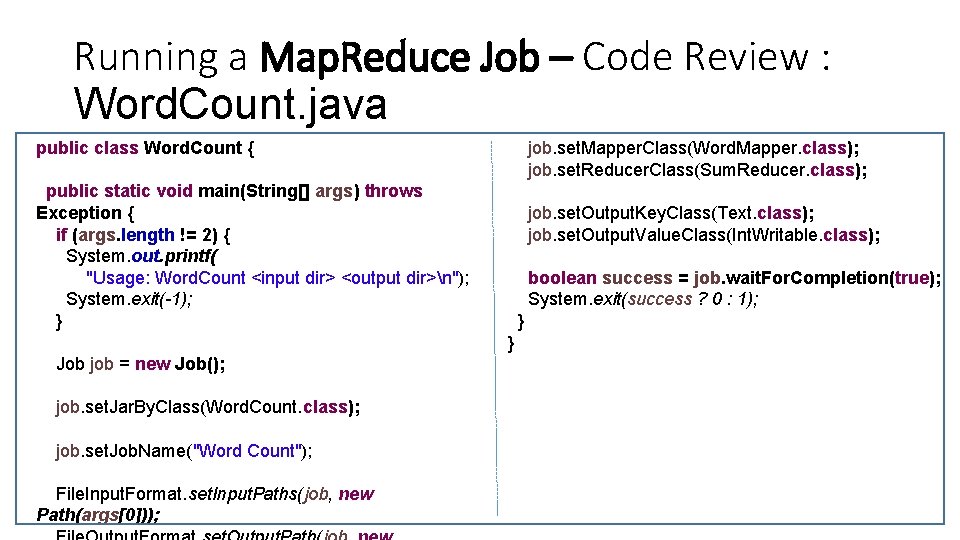

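Running a MapReduce Job – Code Review: WordCount.java

    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.io.IntWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Job;
    import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
    import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

    public class WordCount {
        public static void main(String[] args) throws Exception {
            // Expect exactly two arguments: input and output directories in HDFS.
            if (args.length != 2) {
                System.out.printf("Usage: WordCount <input dir> <output dir>\n");
                System.exit(-1);
            }

            Job job = new Job();
            job.setJarByClass(WordCount.class);
            job.setJobName("Word Count");

            FileInputFormat.setInputPaths(job, new Path(args[0]));
            // The output path is not visible on the slide; the job needs it to run.
            FileOutputFormat.setOutputPath(job, new Path(args[1]));

            job.setMapperClass(WordMapper.class);
            job.setReducerClass(SumReducer.class);

            job.setOutputKeyClass(Text.class);
            job.setOutputValueClass(IntWritable.class);

            // Block until the job finishes and report success through the exit code.
            boolean success = job.waitForCompletion(true);
            System.exit(success ? 0 : 1);
        }
    }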

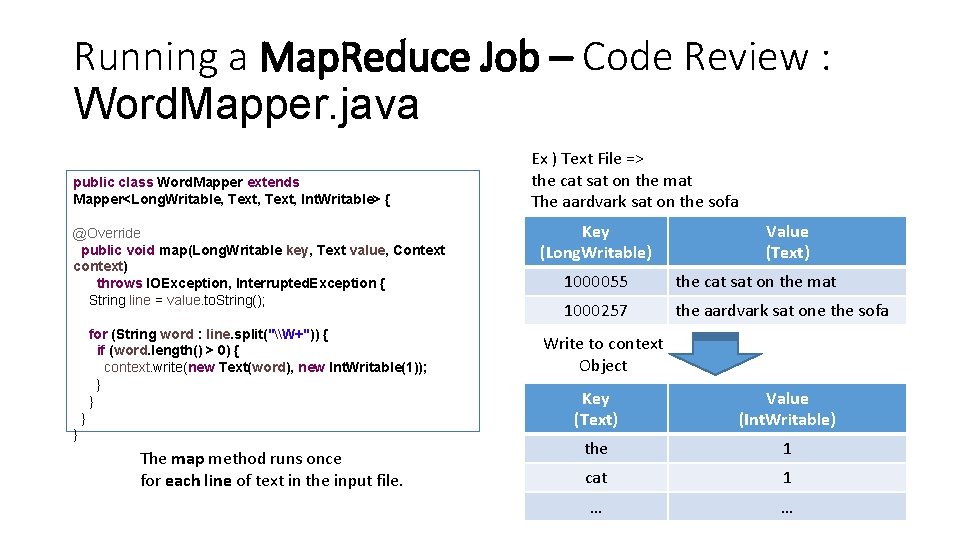

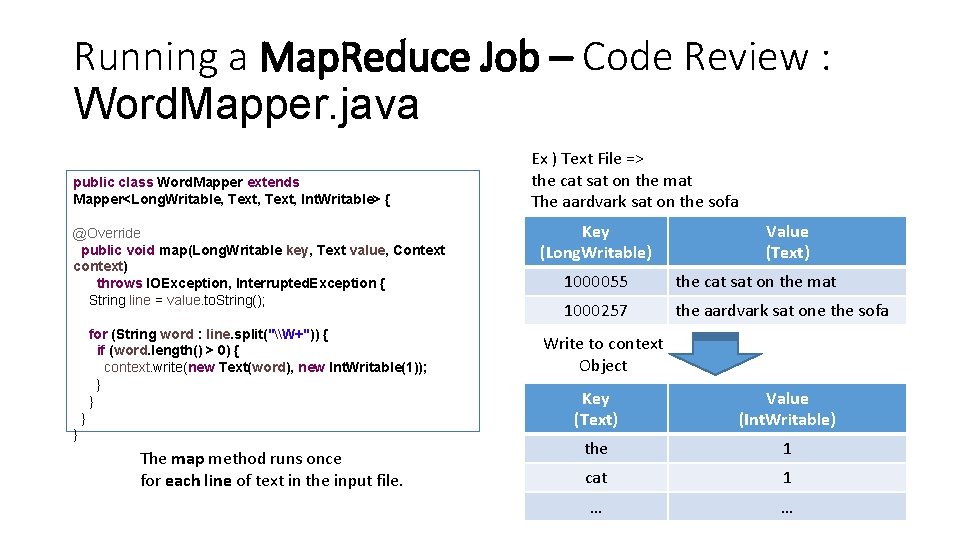

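Running a MapReduce Job – Code Review: WordMapper.java

    import java.io.IOException;
    import org.apache.hadoop.io.IntWritable;
    import org.apache.hadoop.io.LongWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Mapper;

    public class WordMapper extends Mapper<LongWritable, Text, Text, IntWritable> {
        @Override
        public void map(LongWritable key, Text value, Context context)
                throws IOException, InterruptedException {
            String line = value.toString();
            // Split on runs of non-word characters and emit (word, 1) for each word.
            for (String word : line.split("\\W+")) {
                if (word.length() > 0) {
                    context.write(new Text(word), new IntWritable(1));
                }
            }
        }
    }

The map method runs once for each line of text in the input file.

Example text file:

    the cat sat on the mat
    the aardvark sat on the sofa

Input to the map method (the key is the position of the line in the file):

| Key (LongWritable) | Value (Text) |
|---|---|
| 1000055 | the cat sat on the mat |
| 1000257 | the aardvark sat on the sofa |

Pairs written to the Context object:

| Key (Text) | Value (IntWritable) |
|---|---|
| the | 1 |
| cat | 1 |
| … | … |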
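The tokenization step inside the map method can be tried without a cluster. The following is a minimal plain-JDK sketch, not part of the course code: the class name TokenizeSketch and the method tokenize are illustrative, and no Hadoop classes are involved. It shows why the mapper checks word.length() > 0: when a line begins with a non-word character, split returns an empty first element.

```java
import java.util.ArrayList;
import java.util.List;

// Illustrative only: mirrors the split-and-filter logic of WordMapper.map()
// using plain JDK types instead of Text and IntWritable.
class TokenizeSketch {

    // Split a line on runs of non-word characters and drop empty tokens,
    // the same filter the mapper applies before writing to the context.
    static List<String> tokenize(String line) {
        List<String> words = new ArrayList<>();
        for (String word : line.split("\\W+")) {
            if (word.length() > 0) {
                words.add(word);
            }
        }
        return words;
    }

    public static void main(String[] args) {
        // The line starts with '(' so split() yields a leading empty string.
        String line = "(KING:) the cat sat";
        System.out.println(line.split("\\W+").length); // prints 5
        System.out.println(tokenize(line));            // prints [KING, the, cat, sat]
    }
}
```

In the real job each surviving token would be written as a (word, 1) pair rather than collected in a list.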

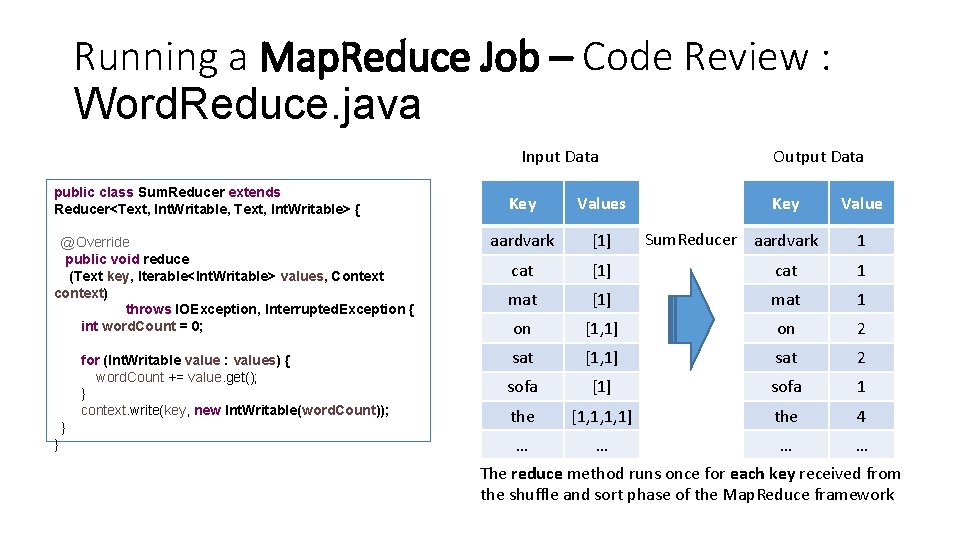

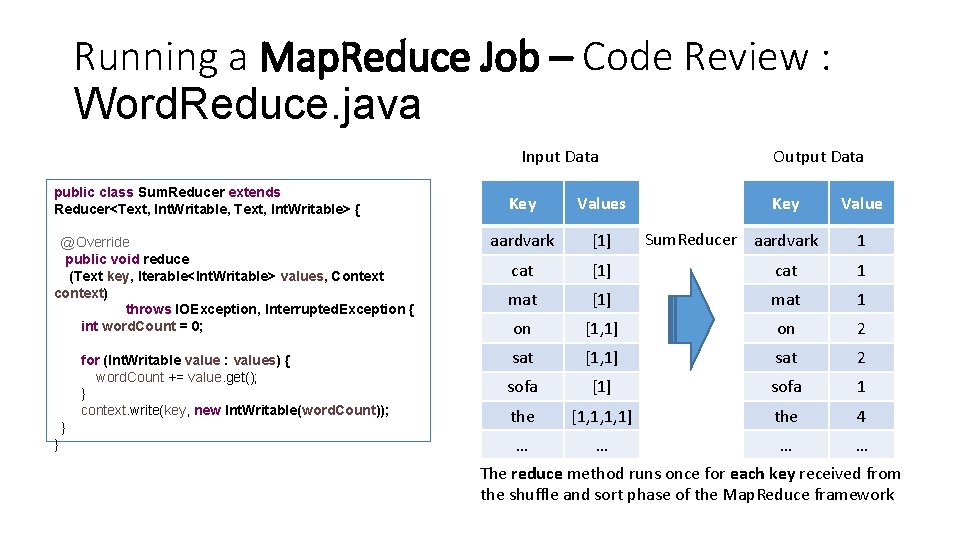

Running a MapReduce Job – Code Review: SumReducer.java

    import java.io.IOException;
    import org.apache.hadoop.io.IntWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Reducer;

    public class SumReducer extends Reducer<Text, IntWritable, Text, IntWritable> {
        @Override
        public void reduce(Text key, Iterable<IntWritable> values, Context context)
                throws IOException, InterruptedException {
            // Add up all the 1s emitted by the mappers for this key.
            int wordCount = 0;
            for (IntWritable value : values) {
                wordCount += value.get();
            }
            context.write(key, new IntWritable(wordCount));
        }
    }

The reduce method runs once for each key received from the shuffle and sort phase of the MapReduce framework.

Input and output data of SumReducer:

| Key | Input values | Output value |
|---|---|---|
| aardvark | [1] | 1 |
| cat | [1] | 1 |
| mat | [1] | 1 |
| on | [1, 1] | 2 |
| sat | [1, 1] | 2 |
| sofa | [1] | 1 |
| the | [1, 1, 1, 1] | 4 |
| … | … | … |
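The grouping that happens between the mapper and the reducer can be imitated in memory. The sketch below is an assumption-laden illustration rather than how Hadoop implements it: the class ShuffleReduceSketch and its methods are hypothetical names, a TreeMap stands in for the shuffle and sort phase, and everything runs in a single JVM on the two example lines.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Illustrative only: an in-memory imitation of map, shuffle and sort, and reduce.
class ShuffleReduceSketch {

    // Map phase plus shuffle and sort: emit a 1 for every word and group the
    // 1s by word. The TreeMap keeps the keys in sorted order.
    static Map<String, List<Integer>> mapAndShuffle(List<String> lines) {
        Map<String, List<Integer>> grouped = new TreeMap<>();
        for (String line : lines) {
            for (String word : line.split("\\W+")) {
                if (word.length() == 0) {
                    continue;
                }
                List<Integer> ones = grouped.get(word);
                if (ones == null) {
                    ones = new ArrayList<>();
                    grouped.put(word, ones);
                }
                ones.add(1);
            }
        }
        return grouped;
    }

    // Reduce phase for one key: sum the grouped values, as SumReducer does.
    static int reduce(List<Integer> values) {
        int wordCount = 0;
        for (int value : values) {
            wordCount += value;
        }
        return wordCount;
    }

    // Run the reduce step once per key and collect the results.
    static Map<String, Integer> wordCount(List<String> lines) {
        Map<String, Integer> result = new TreeMap<>();
        for (Map.Entry<String, List<Integer>> entry : mapAndShuffle(lines).entrySet()) {
            result.put(entry.getKey(), reduce(entry.getValue()));
        }
        return result;
    }

    public static void main(String[] args) {
        List<String> lines = Arrays.asList(
                "the cat sat on the mat",
                "the aardvark sat on the sofa");
        // prints {aardvark=1, cat=1, mat=1, on=2, sat=2, sofa=1, the=4}
        System.out.println(wordCount(lines));
    }
}
```

On a real cluster the grouped values for different keys may be handled by different reducer tasks, which this single-process sketch does not model.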

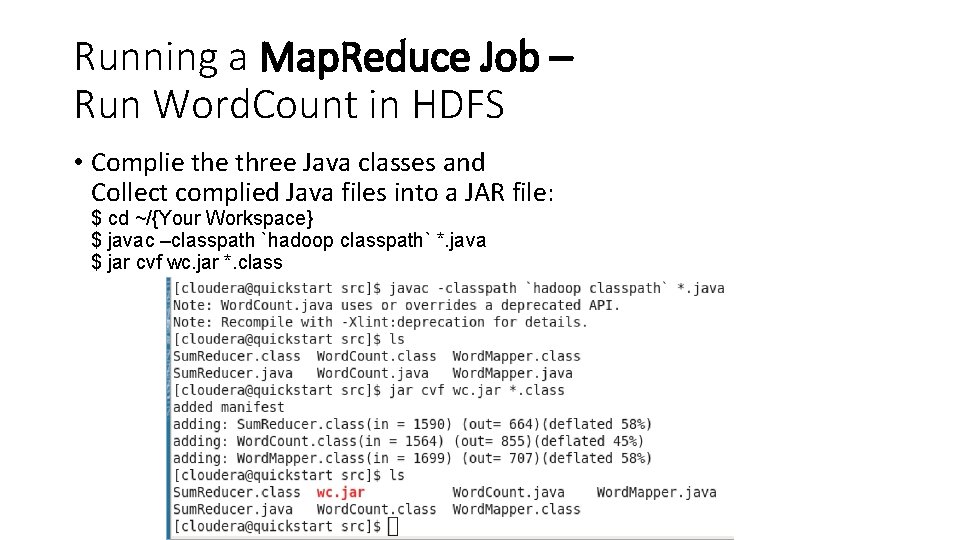

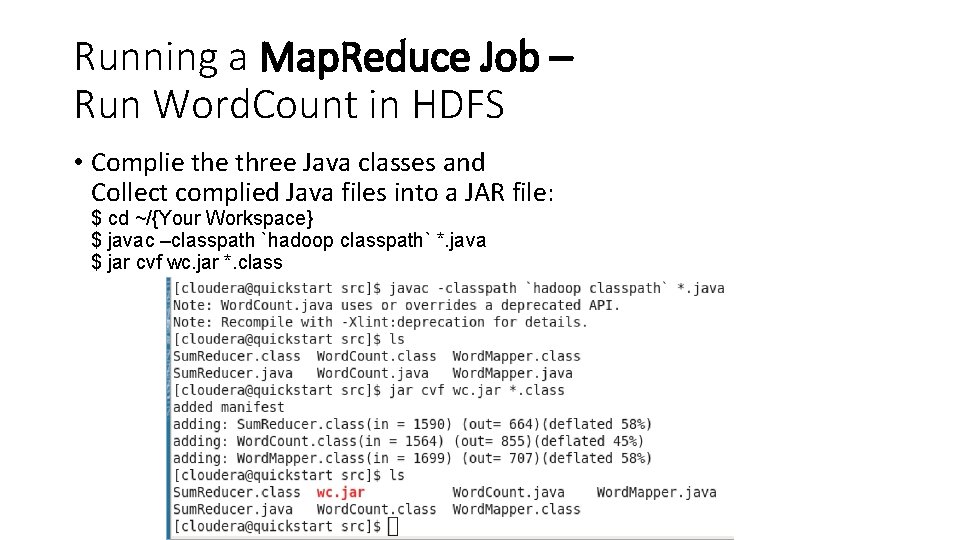

Running a MapReduce Job – Run WordCount in HDFS
• Compile the three Java classes and collect the compiled class files into a JAR file:
    $ cd ~/{Your Workspace}
    $ javac -classpath `hadoop classpath` *.java
    $ jar cvf wc.jar *.class

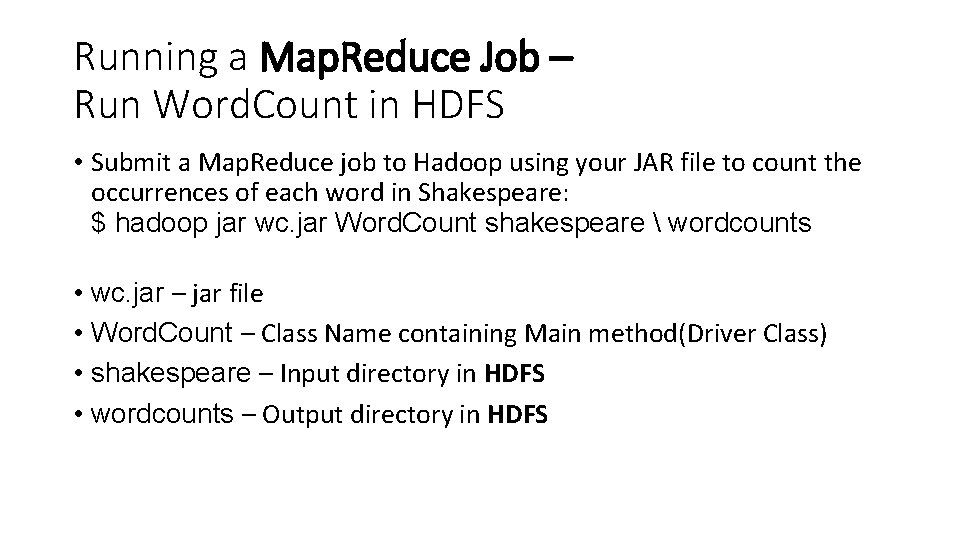

Running a MapReduce Job – Run WordCount in HDFS
• Submit a MapReduce job to Hadoop using your JAR file to count the occurrences of each word in Shakespeare:
    $ hadoop jar wc.jar WordCount shakespeare wordcounts
• wc.jar: the JAR file
• WordCount: the name of the class containing the main method (the driver class)
• shakespeare: the input directory in HDFS
• wordcounts: the output directory in HDFS

Exercise MapReduce Job: WordCount

Importing Data With Sqoop: Review MySQL and Exercise

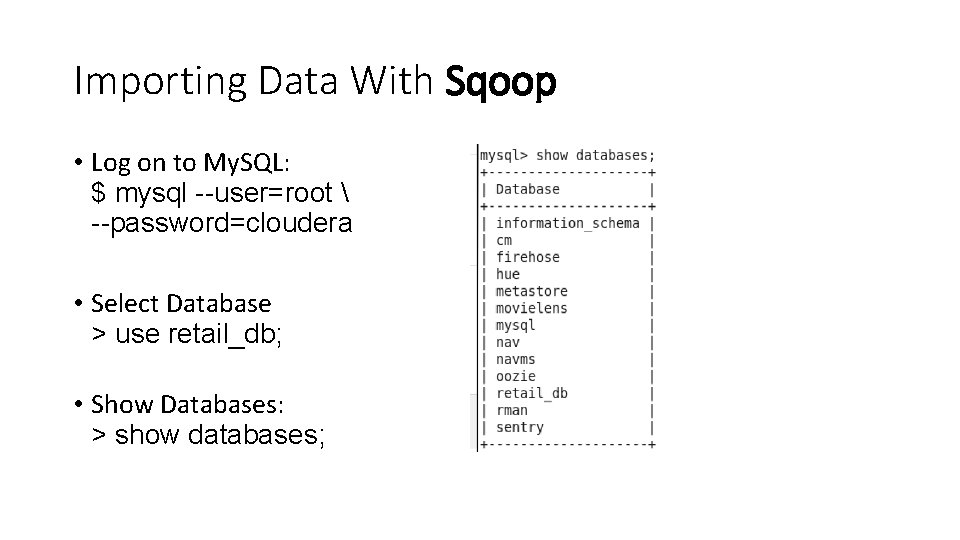

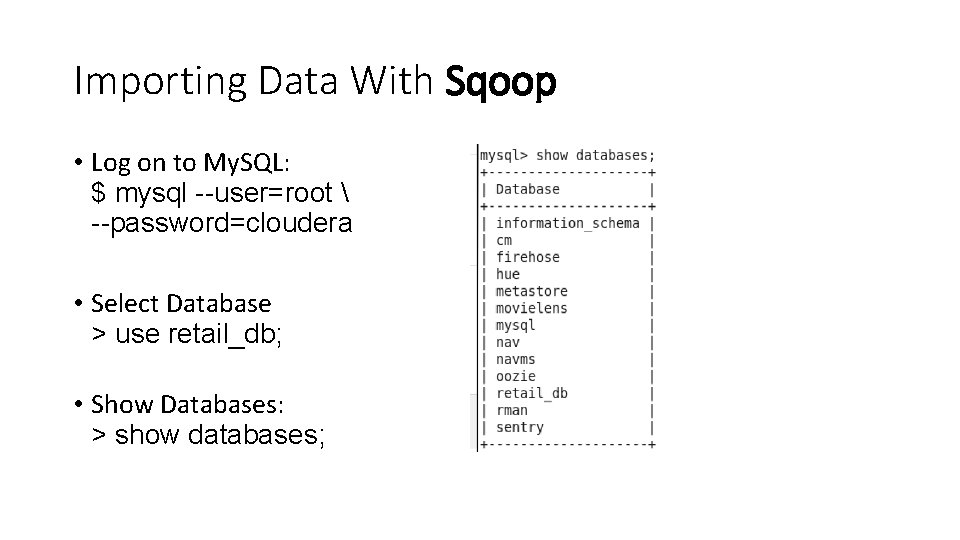

Importing Data With Sqoop
• Log on to MySQL:
    $ mysql --user=root --password=cloudera
• Select database:
    > use retail_db;
• Show databases:
    > show databases;

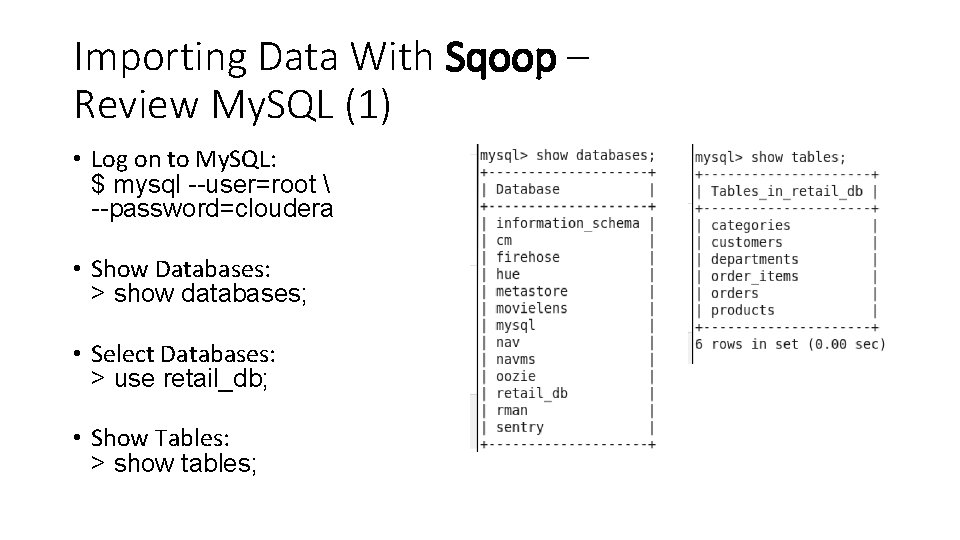

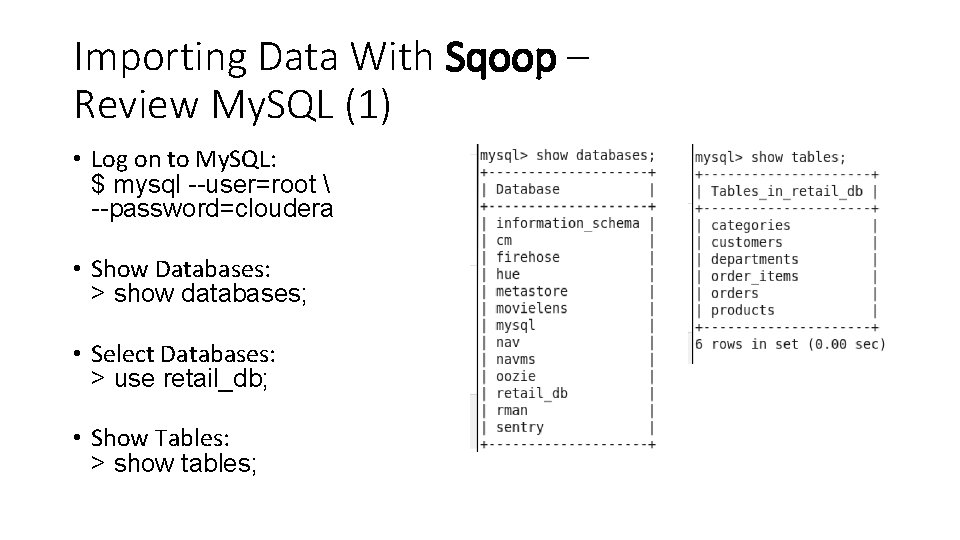

Importing Data With Sqoop – Review MySQL (1)
• Log on to MySQL:
    $ mysql --user=root --password=cloudera
• Show databases:
    > show databases;
• Select database:
    > use retail_db;
• Show tables:
    > show tables;

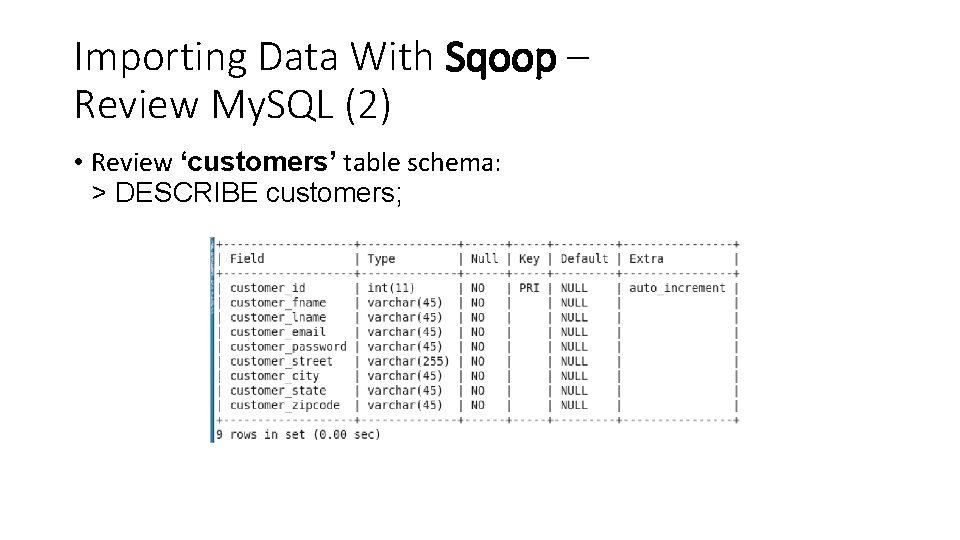

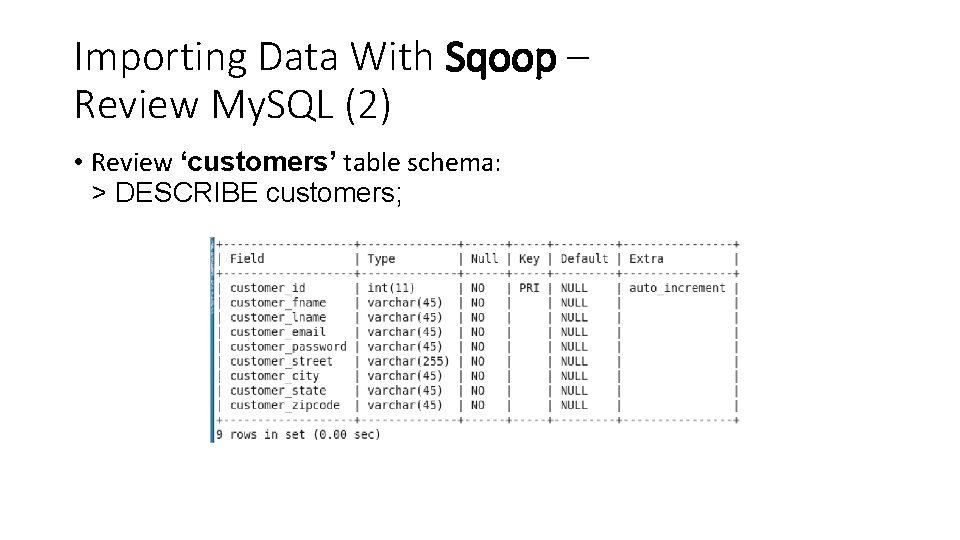

Importing Data With Sqoop – Review MySQL (2)
• Review the ‘customers’ table schema:
    > DESCRIBE customers;

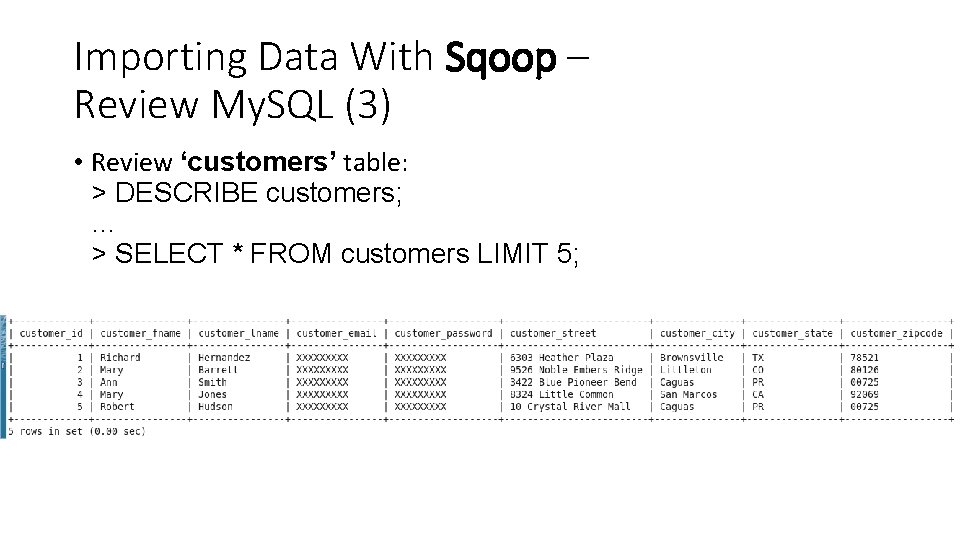

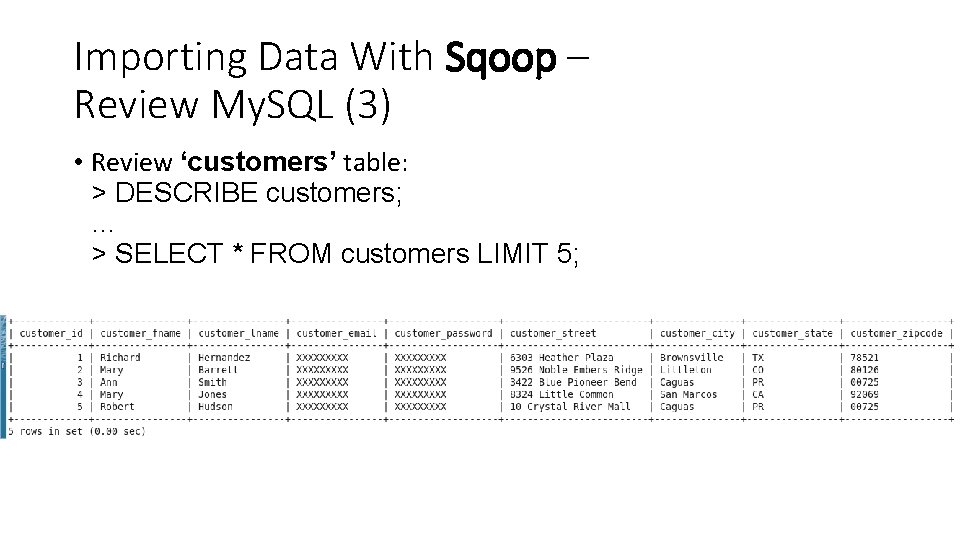

Importing Data With Sqoop – Review MySQL (3)
• Review the ‘customers’ table:
    > DESCRIBE customers;
    …
    > SELECT * FROM customers LIMIT 5;

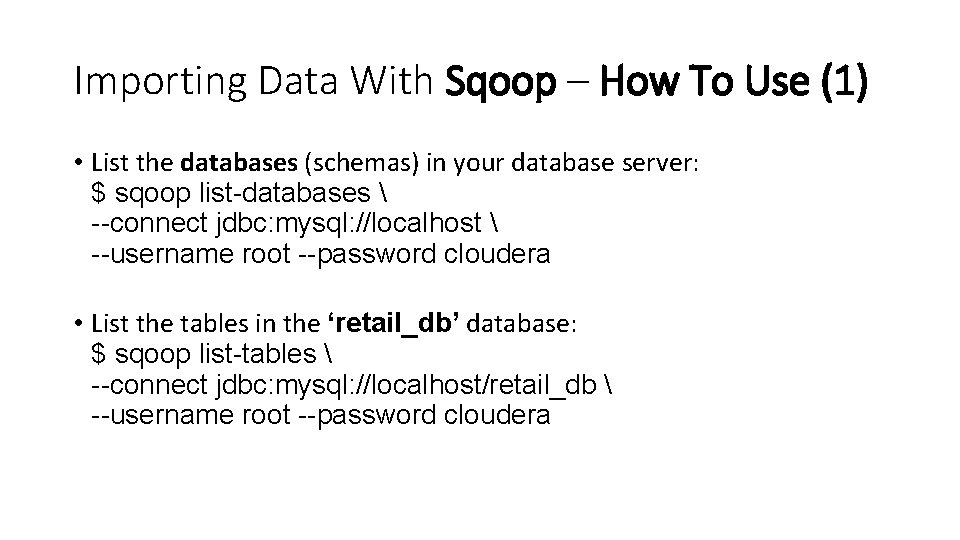

Importing Data With Sqoop – How To Use (1)
• List the databases (schemas) in your database server:
    $ sqoop list-databases --connect jdbc:mysql://localhost --username root --password cloudera
• List the tables in the ‘retail_db’ database:
    $ sqoop list-tables --connect jdbc:mysql://localhost/retail_db --username root --password cloudera

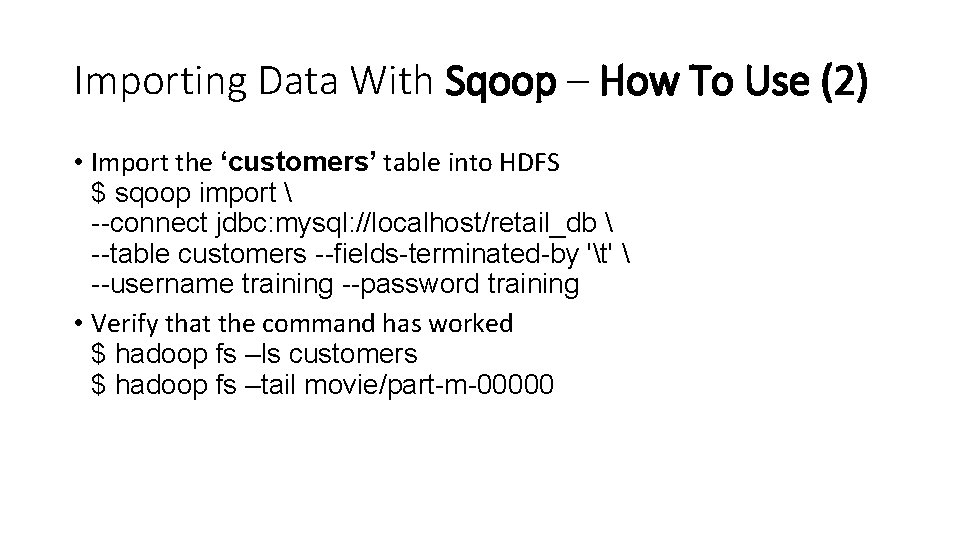

Importing Data With Sqoop – How To Use (2)
• Import the ‘customers’ table into HDFS as tab-delimited text, using the same MySQL account as in the previous commands:
    $ sqoop import --connect jdbc:mysql://localhost/retail_db \
        --table customers --fields-terminated-by '\t' \
        --username root --password cloudera
• Verify that the command has worked:
    $ hadoop fs -ls customers
    $ hadoop fs -tail customers/part-m-00000
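Because the import uses a tab as the field terminator, each line of the imported part file is one tab-separated record. A minimal sketch of splitting such a line in plain Java follows; the class name TabRecordSketch and the sample row are hypothetical and do not reflect the actual ‘customers’ columns.

```java
import java.util.Arrays;
import java.util.List;

// Illustrative only: splits one tab-delimited line into its fields.
class TabRecordSketch {

    // A limit of -1 tells split() to keep trailing empty fields, so a record
    // whose last column is empty still yields the full number of fields.
    static List<String> parse(String line) {
        return Arrays.asList(line.split("\t", -1));
    }

    public static void main(String[] args) {
        // Hypothetical record with an empty last column.
        String row = "1\tAlice\tExample\t";
        System.out.println(parse(row).size());      // prints 4
        System.out.println(row.split("\t").length); // prints 3, trailing field dropped
    }
}
```

Keeping the trailing fields matters when downstream code addresses columns by position.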