Ensemble Learning
Lecturer: Dr. Bo Yuan
E-mail: yuanb@sz.tsinghua.edu.cn

Real World Scenarios

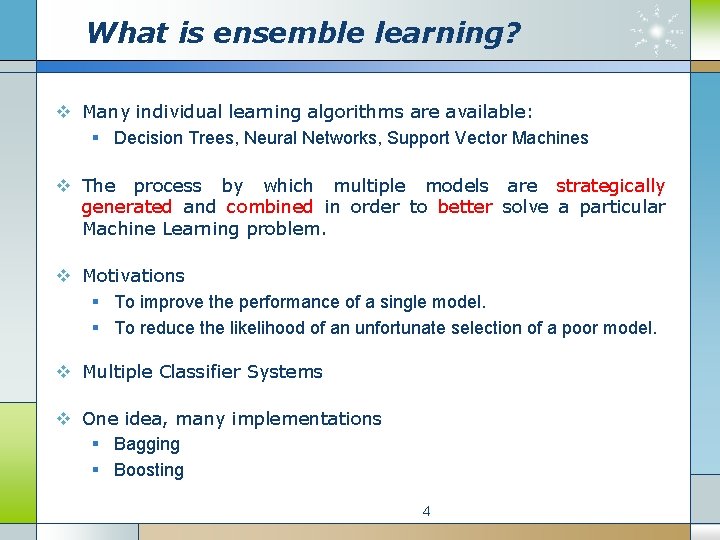

What is ensemble learning?

- Many individual learning algorithms are available:
  - Decision Trees, Neural Networks, Support Vector Machines
- Ensemble learning is the process by which multiple models are strategically generated and combined in order to better solve a particular machine learning problem.
- Motivations
  - To improve the performance of a single model.
  - To reduce the likelihood of an unfortunate selection of a poor model.
- Multiple Classifier Systems
- One idea, many implementations
  - Bagging
  - Boosting

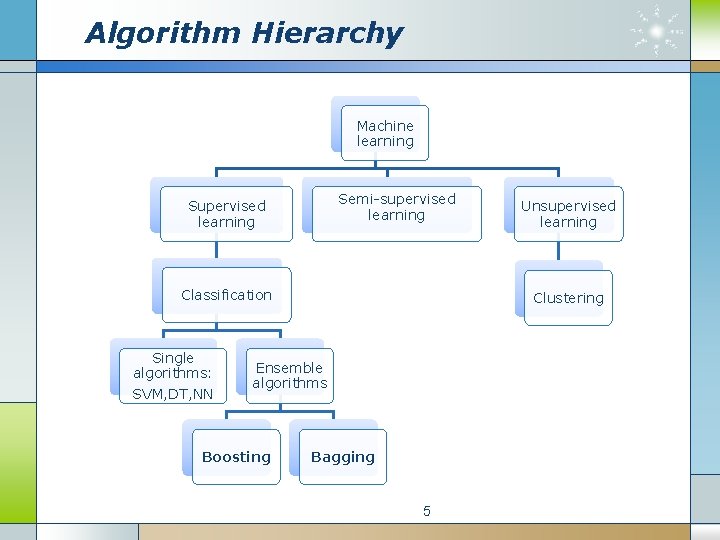

Algorithm Hierarchy

- Machine learning
  - Supervised learning
    - Classification
      - Single algorithms: SVM, DT, NN
      - Ensemble algorithms
        - Bagging
        - Boosting
  - Semi-supervised learning
  - Unsupervised learning
    - Clustering

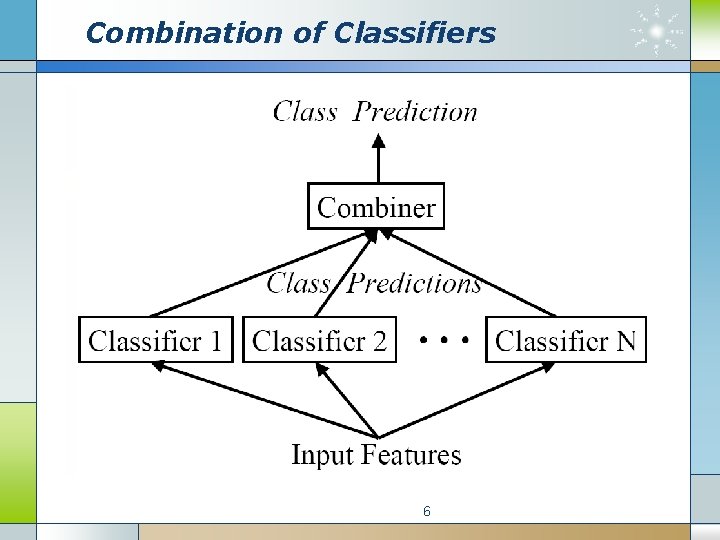

Combination of Classifiers

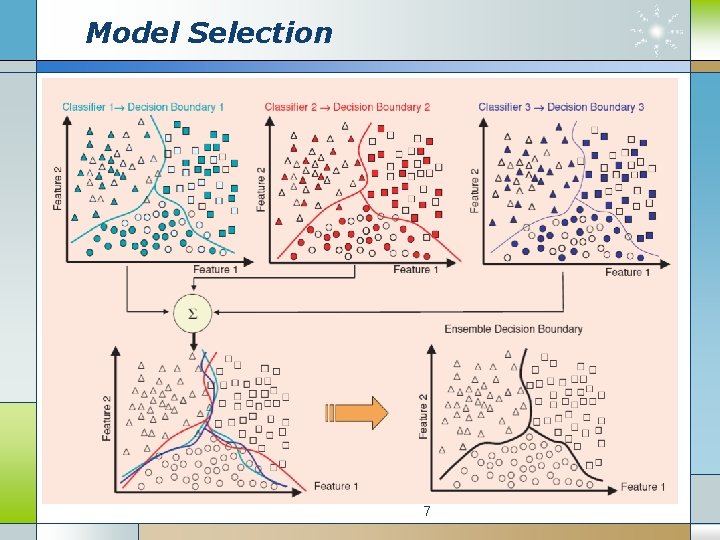

Model Selection

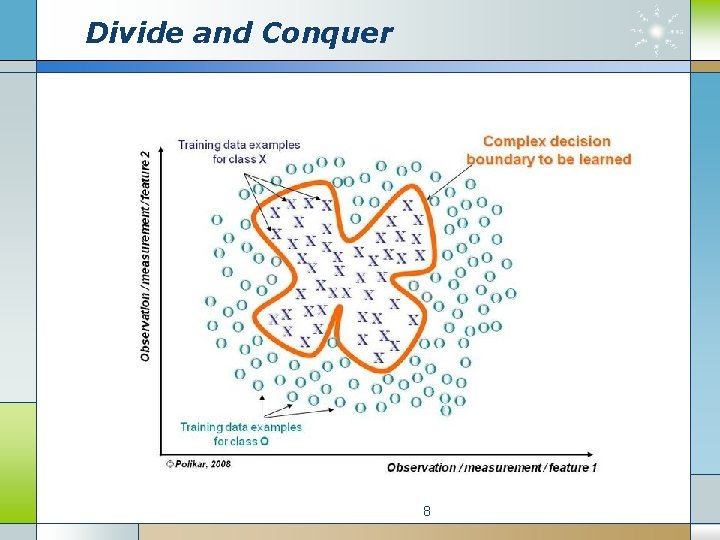

Divide and Conquer

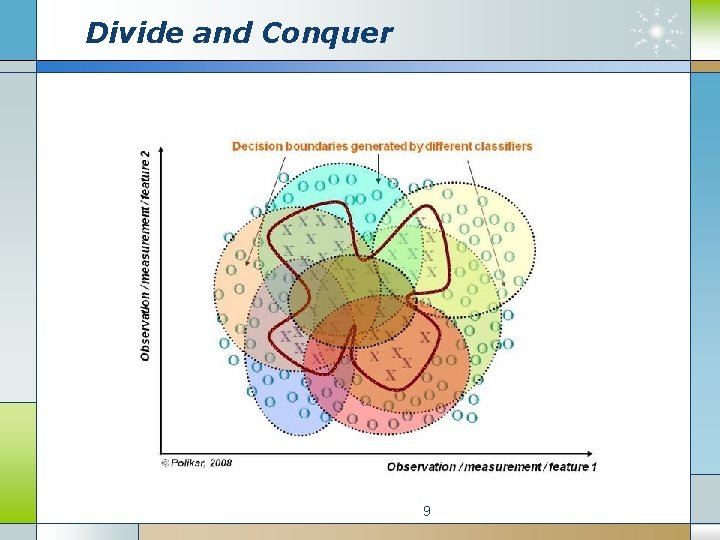

Divide and Conquer

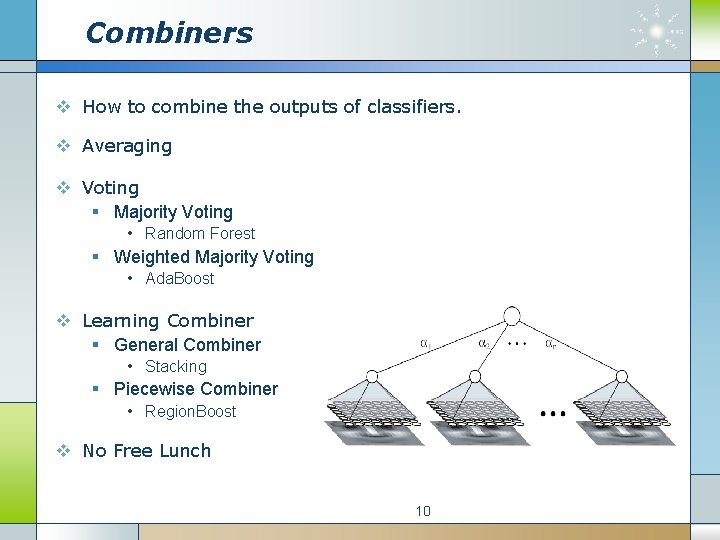

Combiners

- How to combine the outputs of classifiers.
- Averaging
- Voting
  - Majority Voting
    - Random Forest
  - Weighted Majority Voting
    - AdaBoost
- Learning Combiner
  - General Combiner
    - Stacking
  - Piecewise Combiner
    - RegionBoost
- No Free Lunch
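
The three fixed combiners listed above (averaging, majority voting, weighted majority voting) can be sketched in a few lines. This is only an illustration: the function names and the toy labels are assumptions made for this example, not part of the lecture.

```python
from collections import defaultdict


def weighted_majority_vote(predictions, weights):
    """Return the label whose supporting classifiers have the largest total weight."""
    totals = defaultdict(float)
    for label, weight in zip(predictions, weights):
        totals[label] += weight
    # max() returns the first label with the top score, so ties go to
    # the label that appeared earliest in `predictions`.
    return max(totals, key=totals.get)


def majority_vote(predictions):
    """Plain majority voting is weighted voting with equal weights."""
    return weighted_majority_vote(predictions, [1.0] * len(predictions))


def average(scores):
    """Averaging combiner for real-valued outputs such as class probabilities."""
    return sum(scores) / len(scores)


votes = ["spam", "ham", "spam"]                        # hypothetical classifier outputs
print(majority_vote(votes))                            # spam
print(weighted_majority_vote(votes, [0.2, 0.9, 0.3]))  # ham
```

With equal weights the two "spam" votes win; once the second classifier is trusted much more than the others, its single vote outweighs them.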

Diversity

- The key to the success of ensemble learning
  - Need to correct the errors made by other classifiers.
  - Does not work if all models are identical.
- Different Learning Algorithms
  - DT, SVM, NN, KNN …
- Different Training Processes
  - Different Parameters
  - Different Training Sets
  - Different Feature Sets
- Weak Learners
  - Easy to create different decision boundaries.
  - Stumps …

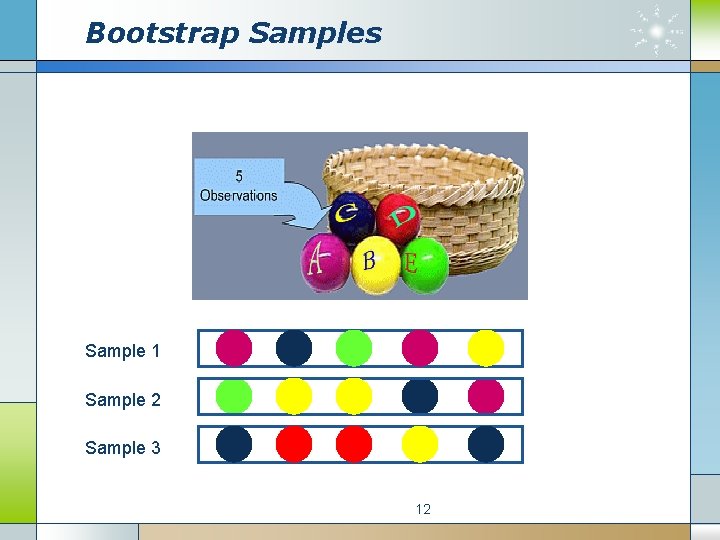

Bootstrap Samples (figure panels: Sample 1, Sample 2, Sample 3)
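
A bootstrap sample is drawn from the original data with replacement and has the same size as the original. The sketch below draws three such samples; the data set of 1000 integers and the fixed seed are assumptions chosen only to make the example repeatable.

```python
import random


def bootstrap_sample(data, rng):
    """Draw len(data) items from data uniformly at random, with replacement."""
    n = len(data)
    return [data[rng.randrange(n)] for _ in range(n)]


rng = random.Random(0)       # fixed seed only so the sketch is repeatable
data = list(range(1000))     # stand-in for the original training set
samples = [bootstrap_sample(data, rng) for _ in range(3)]
for i, sample in enumerate(samples, start=1):
    unique_fraction = len(set(sample)) / len(data)
    print(f"Sample {i}: {unique_fraction:.3f} of the original points appear at least once")
```

Because some points are drawn several times and others not at all, each sample typically covers somewhat under two thirds of the distinct original points.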

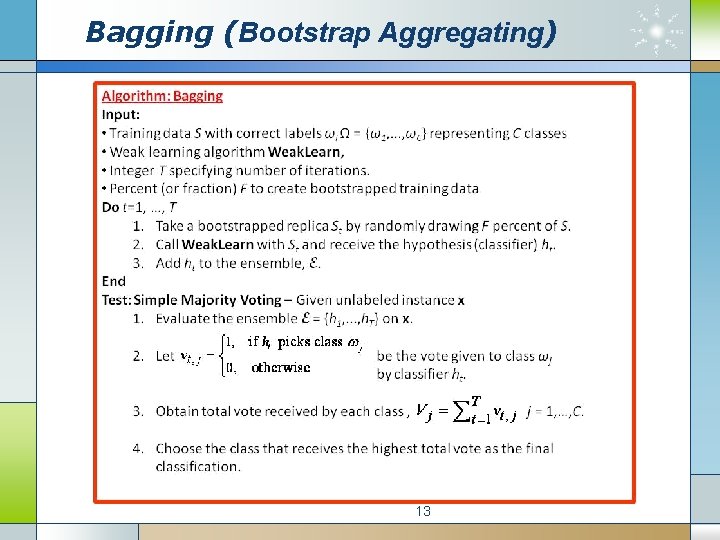

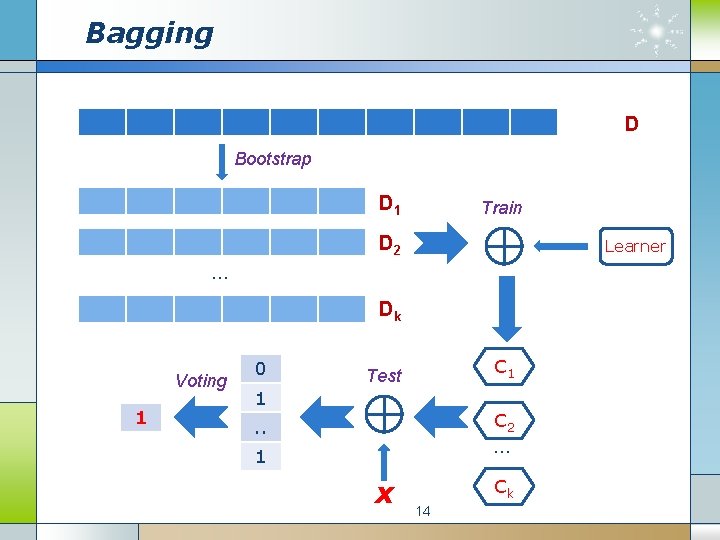

Bagging (Bootstrap Aggregating)

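Bagging (diagram): the training set D is bootstrapped into D1, D2, …, Dk; the learner is trained on each to give classifiers C1, C2, …, Ck; at test time an input x is passed to every classifier and their 1/0 outputs are combined by voting.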
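
The bagging pipeline can be sketched as follows. The base learner here is a one-dimensional decision stump, and the toy data, the seed, and all function names are assumptions made for illustration; any learner could take the stump's place.

```python
import random
from collections import Counter


def train_stump(points):
    """Fit a 1-D threshold classifier: predict `left` if x <= t, else `right`."""
    best = None
    for t in sorted({x for x, _ in points}):
        for left, right in ((0, 1), (1, 0)):
            errors = sum((left if x <= t else right) != y for x, y in points)
            if best is None or errors < best[0]:
                best = (errors, t, left, right)
    _, t, left, right = best
    return lambda x: left if x <= t else right


def bagging(data, k, rng, learner=train_stump):
    """Train k classifiers C1..Ck, each on its own bootstrap sample D1..Dk."""
    n = len(data)
    classifiers = []
    for _ in range(k):
        sample = [data[rng.randrange(n)] for _ in range(n)]  # with replacement
        classifiers.append(learner(sample))
    return classifiers


def predict(classifiers, x):
    """Combine the 0/1 outputs of all classifiers by majority voting."""
    votes = Counter(c(x) for c in classifiers)
    return votes.most_common(1)[0][0]


rng = random.Random(42)
# Toy data: label 1 for x > 5, with two deliberately flipped labels as noise.
data = [(x, int(x > 5)) for x in range(11)]
data[2] = (2, 1)
data[8] = (8, 0)
ensemble = bagging(data, k=25, rng=rng)
print([predict(ensemble, x) for x in (0, 4, 7, 10)])
```

An odd k avoids ties in the binary vote. Each stump sees a slightly different sample, so the individual thresholds differ and the vote smooths over them.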

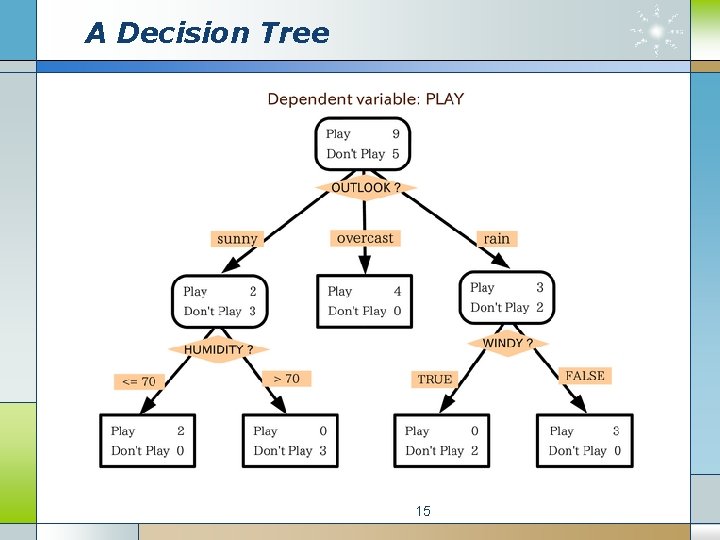

A Decision Tree

Tree vs. Forest

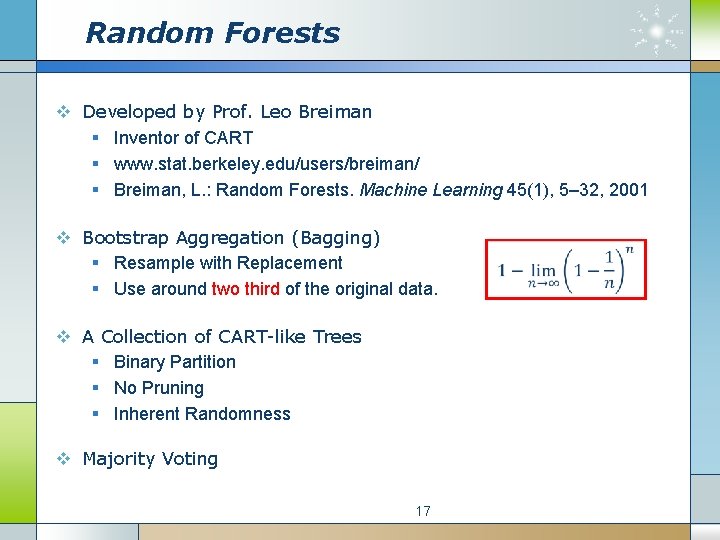

Random Forests

- Developed by Prof. Leo Breiman
  - Inventor of CART
  - www.stat.berkeley.edu/users/breiman/
  - Breiman, L.: Random Forests. Machine Learning 45(1), 5–32, 2001
- Bootstrap Aggregation (Bagging)
  - Resample with replacement.
  - Each sample uses around two thirds of the original data.
- A Collection of CART-like Trees
  - Binary Partition
  - No Pruning
  - Inherent Randomness
- Majority Voting

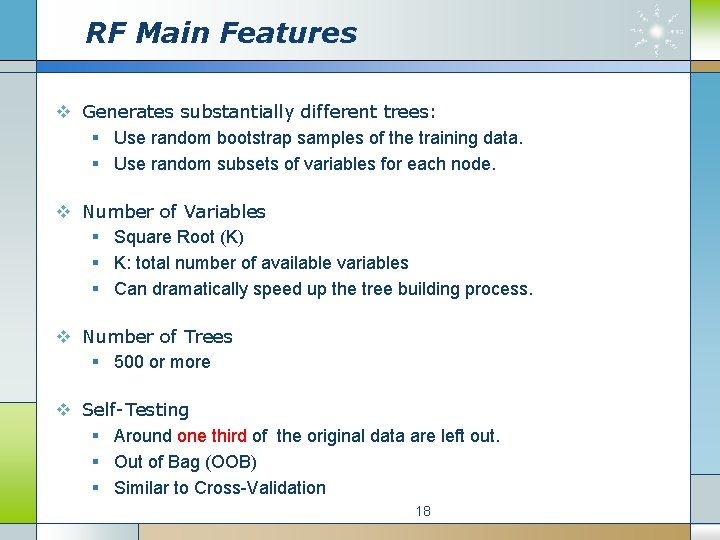

RF Main Features

- Generates substantially different trees:
  - Use random bootstrap samples of the training data.
  - Use random subsets of variables for each node.
- Number of Variables
  - The square root of K, where K is the total number of available variables.
  - Can dramatically speed up the tree building process.
- Number of Trees
  - 500 or more
- Self-Testing
  - Around one third of the original data are left out of each bootstrap sample.
  - These left-out points are called Out of Bag (OOB).
  - Similar to Cross-Validation
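
The "around one third" figure follows from sampling with replacement. As a short derivation, assuming a training set of n points and a bootstrap sample of n independent uniform draws (the symbol n is introduced here and is not in the slides), the probability that a given point is never drawn is:

```latex
P(\text{point is out of bag})
  = \left(1 - \frac{1}{n}\right)^{n},
\qquad
\lim_{n \to \infty} \left(1 - \frac{1}{n}\right)^{n} = e^{-1} \approx 0.368,
\qquad
P(\text{point is in bag}) \approx 1 - e^{-1} \approx 0.632 .
```

So each tree is trained on roughly 63% of the distinct points and can be tested on the remaining 37% or so. For the variable count, K = 100 available variables would mean about 10 candidate variables examined at each node.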

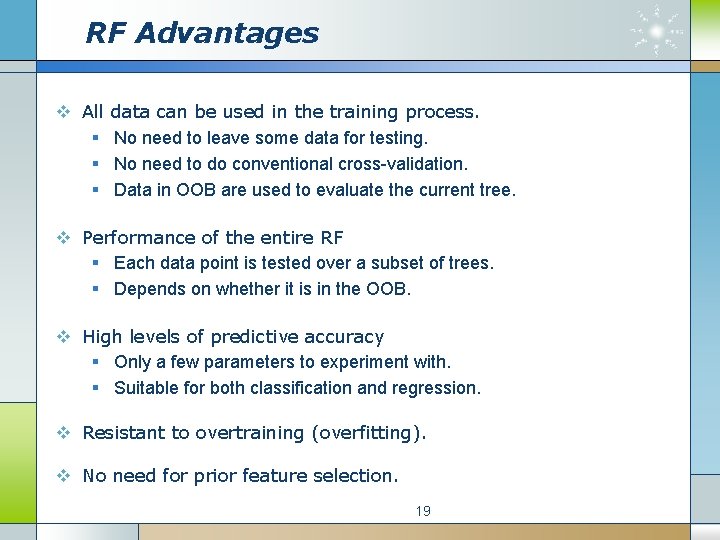

RF Advantages

- All data can be used in the training process.
  - No need to leave some data for testing.
  - No need to do conventional cross-validation.
  - Data in OOB are used to evaluate the current tree.
- Performance of the entire RF
  - Each data point is tested over a subset of trees.
  - The subset consists of the trees for which the point is in the OOB.
- High levels of predictive accuracy
  - Only a few parameters to experiment with.
  - Suitable for both classification and regression.
- Resistant to overtraining (overfitting).
- No need for prior feature selection.

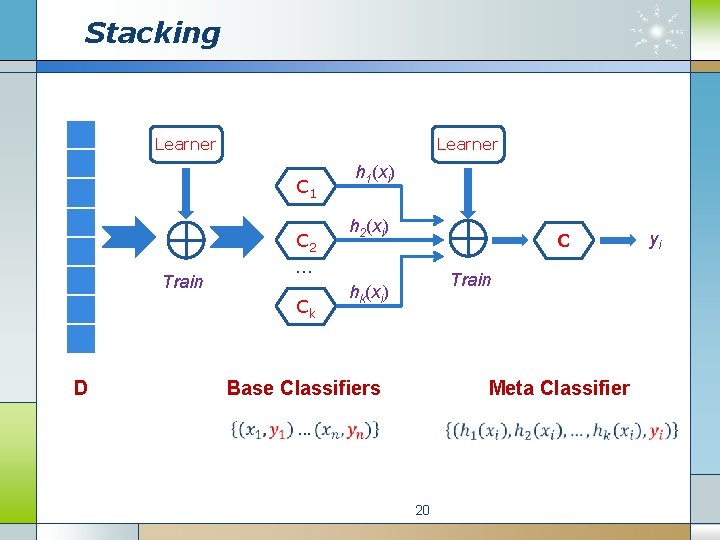

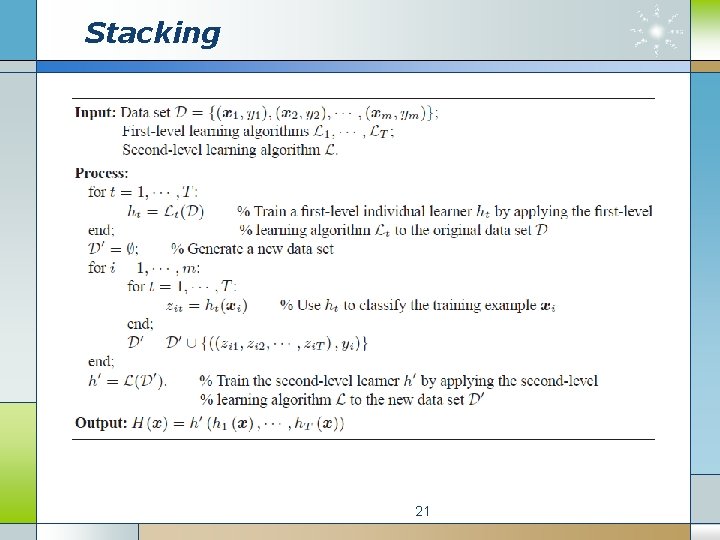

Stacking (diagram): the learner trains base classifiers C1, C2, …, Ck on D; their outputs h1(xi), h2(xi), …, hk(xi), together with the label yi, are used to train the meta classifier C.
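
A minimal stacking sketch, under stated assumptions: the base classifiers are single-feature decision stumps and the meta classifier is a lookup table from base outputs to the majority label. Both choices, and the AND-style toy data, are invented for this example. The same D is used to train both levels, as in the diagram; in practice the meta classifier is usually trained on held-out or cross-validated base outputs to limit overfitting.

```python
from collections import Counter, defaultdict


def train_stump(data, feature):
    """Base learner: best threshold on one feature, predicting 0 or 1."""
    best = None
    for t in sorted({x[feature] for x, _ in data}):
        for left, right in ((0, 1), (1, 0)):
            errors = sum((left if x[feature] <= t else right) != y for x, y in data)
            if best is None or errors < best[0]:
                best = (errors, t, left, right)
    _, t, left, right = best
    return lambda x: left if x[feature] <= t else right


def train_meta(meta_data):
    """Meta learner: majority label for each tuple of base outputs."""
    counts = defaultdict(Counter)
    overall = Counter()
    for outputs, y in meta_data:
        counts[outputs][y] += 1
        overall[y] += 1
    default = overall.most_common(1)[0][0]  # fallback for unseen tuples
    table = {o: c.most_common(1)[0][0] for o, c in counts.items()}
    return lambda outputs: table.get(outputs, default)


def train_stacking(data, n_features):
    """Train base classifiers, then train the meta classifier on their outputs."""
    bases = [train_stump(data, f) for f in range(n_features)]
    meta_data = [(tuple(h(x) for h in bases), y) for x, y in data]
    meta = train_meta(meta_data)
    return lambda x: meta(tuple(h(x) for h in bases))


# Toy data: the label is the logical AND of two binary features.
data = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
stacked = train_stacking(data, n_features=2)
print([stacked(x) for x, _ in data])  # [0, 0, 0, 1]
```

Each stump alone misclassifies one of the four points, while the meta classifier learns how to combine the two outputs and gets all four right.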

Stacking

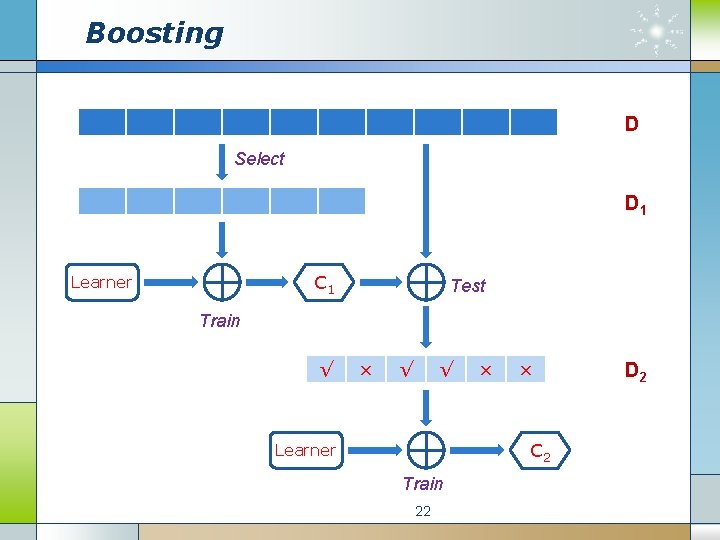

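Boosting (diagram): a subset D1 is selected from D and the learner is trained on it to give C1; C1 is then tested on the data (√ correct, × wrong), and a second set D2 containing both correctly and incorrectly classified examples is used to train C2.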

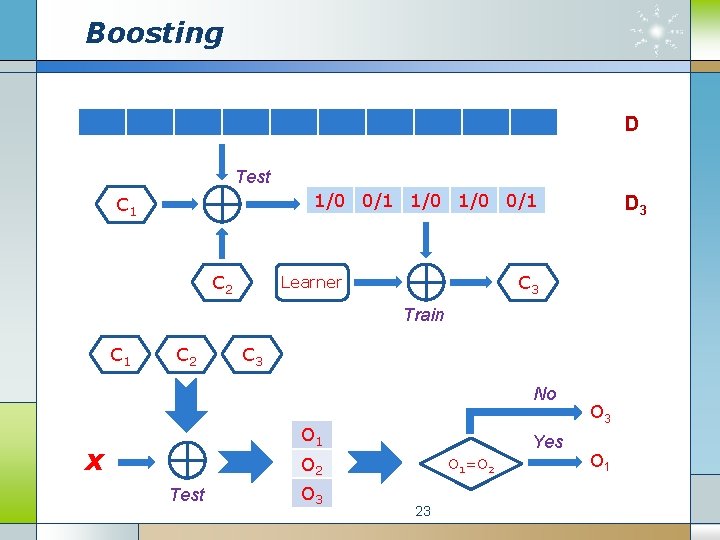

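Boosting (diagram, continued): D is tested with C1 and C2, and the examples on which they disagree (outputs 1/0 or 0/1) form D3, on which the learner trains C3. At test time an input x is passed to C1, C2, and C3 to give outputs O1, O2, and O3; if O1 = O2 the result is O1, otherwise it is O3.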

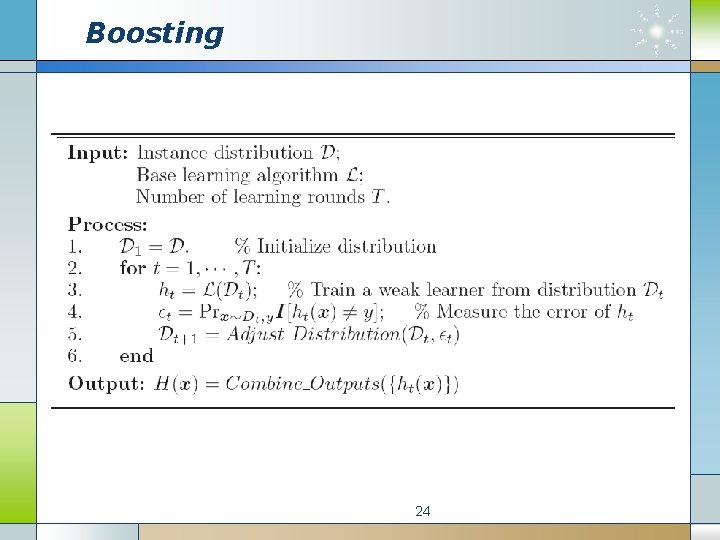

Boosting

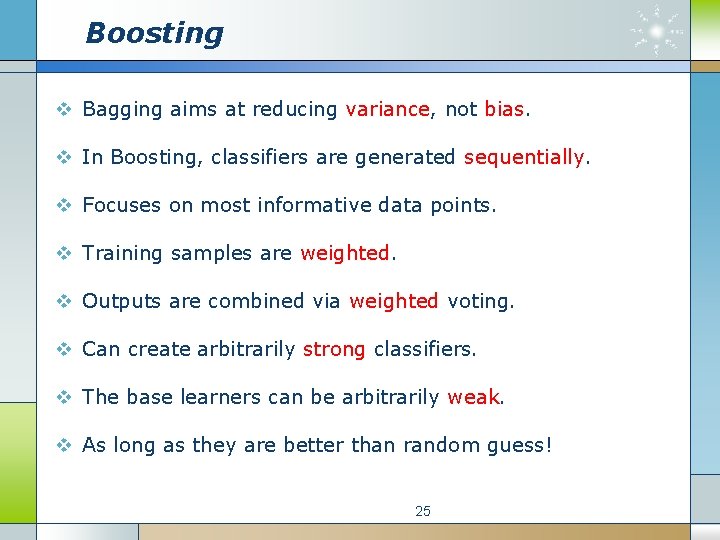

Boosting

- Bagging aims at reducing variance, not bias.
- In Boosting, classifiers are generated sequentially.
- Focuses on the most informative data points.
- Training samples are weighted.
- Outputs are combined via weighted voting.
- Can create arbitrarily strong classifiers.
- The base learners can be arbitrarily weak.
- As long as they are better than random guess!

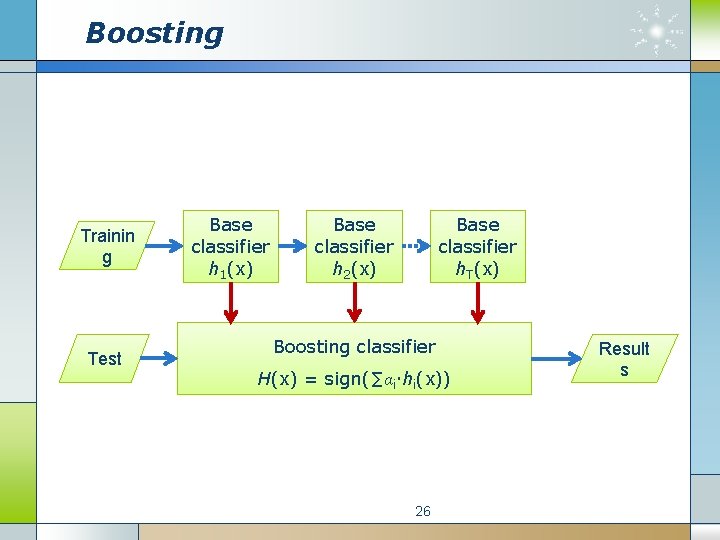

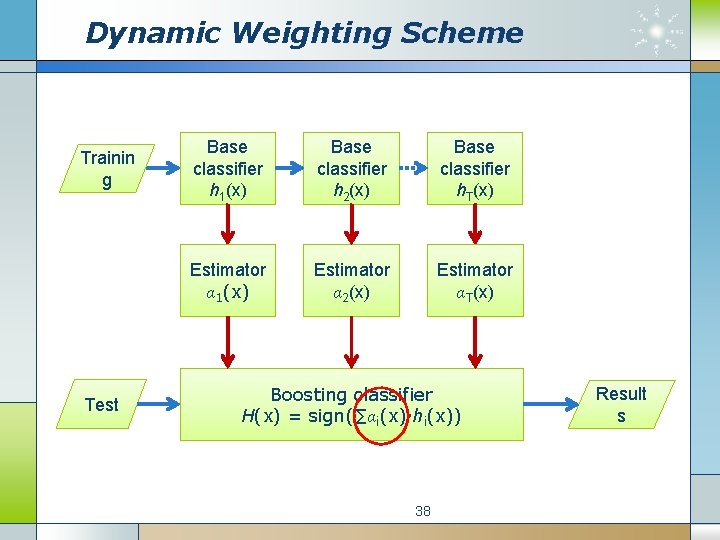

Boosting (diagram): Training → base classifiers $h_1(x), h_2(x), \ldots, h_T(x)$; Test → boosting classifier $H(x) = \operatorname{sign}\left(\sum_{i=1}^{T} \alpha_i h_i(x)\right)$ → Results
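
The combination rule H(x) = sign(∑ αi hi(x)) can be made concrete with a small AdaBoost sketch. The text of the slides gives only the final rule, so the weight formula α = ½ ln((1 − ε)/ε) and the exponential reweighting used below are the standard AdaBoost choices, stated here as an assumption. The stump learner, the function names, and the ten-point toy set are likewise illustrative.

```python
import math


def train_weighted_stump(xs, ys, w):
    """Return (weighted error, stump) for the best 1-D threshold classifier."""
    best = None
    values = sorted(set(xs))
    # Candidate thresholds: one below the minimum, then each data value.
    for t in [values[0] - 1.0] + values:
        for sign in (1, -1):
            err = sum(wi for x, y, wi in zip(xs, ys, w)
                      if (sign if x > t else -sign) != y)
            if best is None or err < best[0]:
                best = (err, t, sign)
    err, t, sign = best
    return err, (lambda x: sign if x > t else -sign)


def adaboost(xs, ys, rounds):
    """Sequentially train weighted stumps; labels must be +1 or -1."""
    n = len(xs)
    w = [1.0 / n] * n                      # start with uniform sample weights
    model = []
    for _ in range(rounds):
        err, h = train_weighted_stump(xs, ys, w)
        if err >= 0.5:
            break                          # no better than random guess
        err = max(err, 1e-10)              # guard against a perfect stump
        alpha = 0.5 * math.log((1 - err) / err)
        model.append((alpha, h))
        # Raise the weight of misclassified samples, lower the rest.
        w = [wi * math.exp(-alpha * y * h(x)) for x, y, wi in zip(xs, ys, w)]
        total = sum(w)
        w = [wi / total for wi in w]
    return model


def predict(model, x):
    """H(x) = sign(sum_i alpha_i * h_i(x)); a zero score is mapped to +1."""
    score = sum(alpha * h(x) for alpha, h in model)
    return 1 if score >= 0 else -1


xs = list(range(10))
ys = [1, 1, 1, -1, -1, -1, 1, 1, 1, -1]
model = adaboost(xs, ys, rounds=3)
print([predict(model, x) for x in xs])
```

No single threshold can separate this toy set, since the best stump still misclassifies three points, yet the weighted vote of three stumps classifies every training point correctly.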

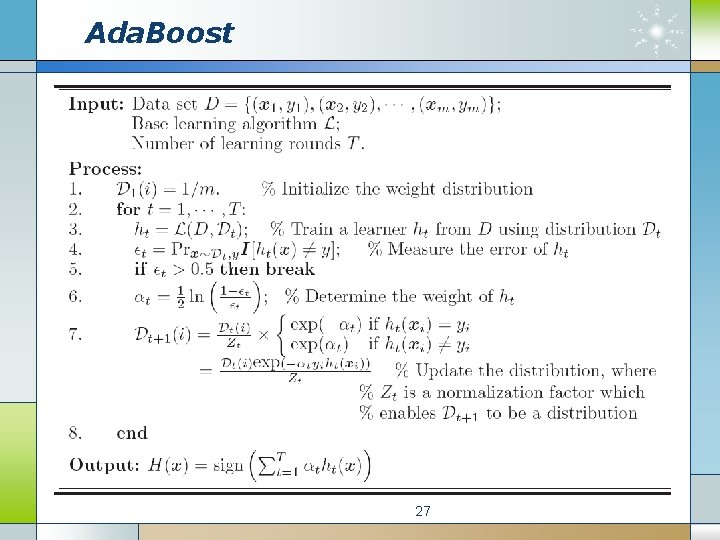

AdaBoost

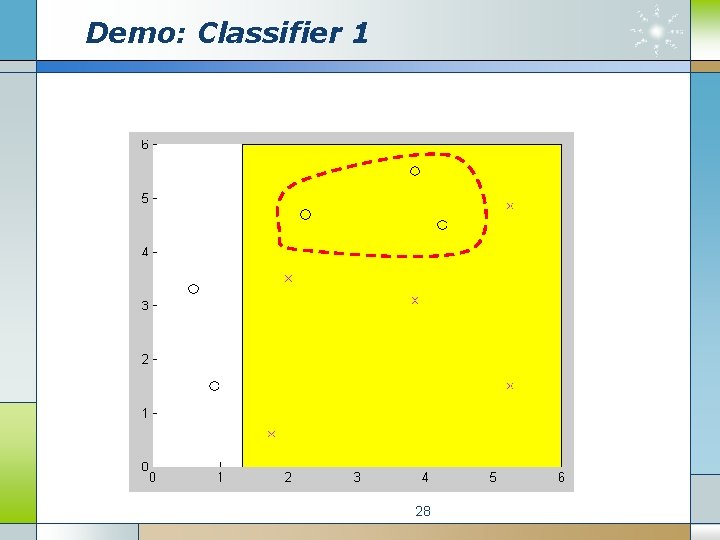

Demo: Classifier 1

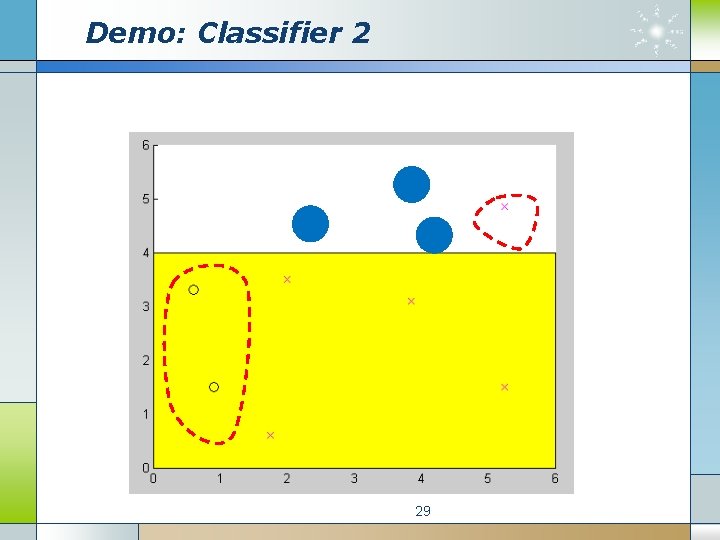

Demo: Classifier 2

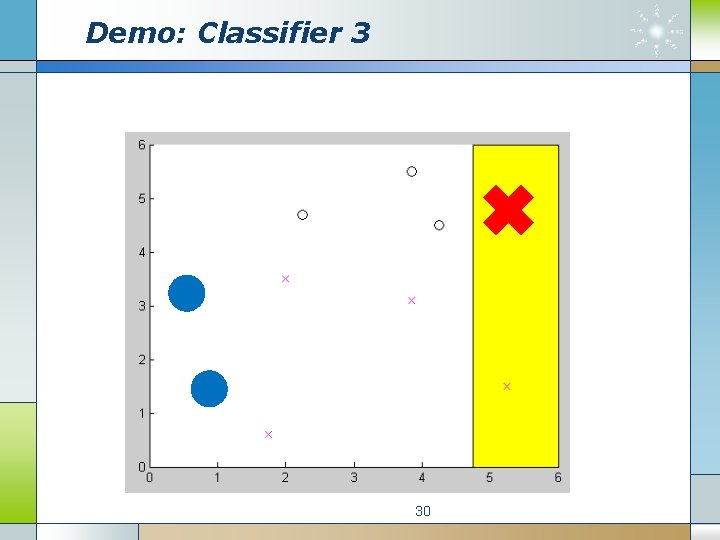

Demo: Classifier 3

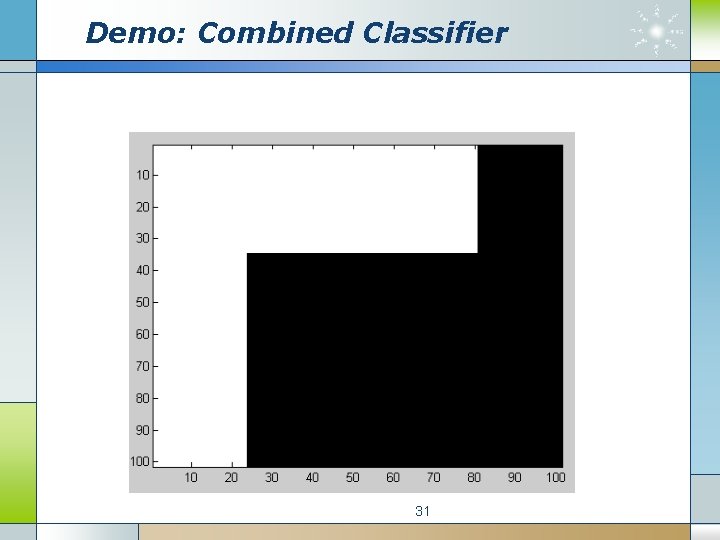

Demo: Combined Classifier

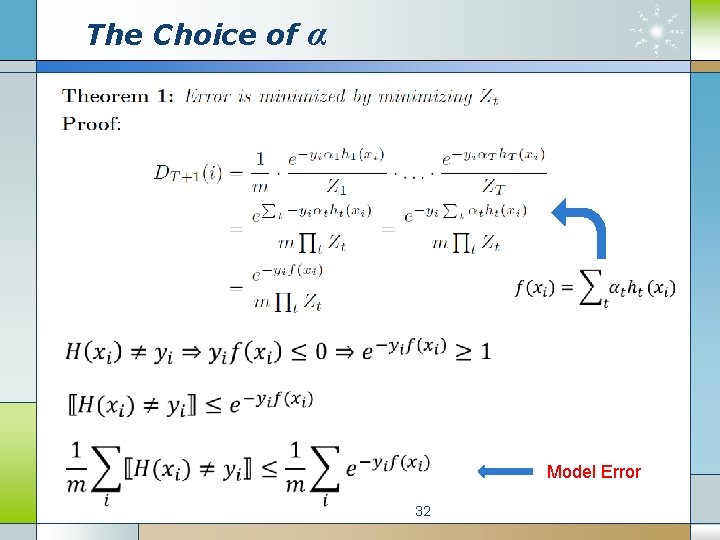

The Choice of α (Model Error)

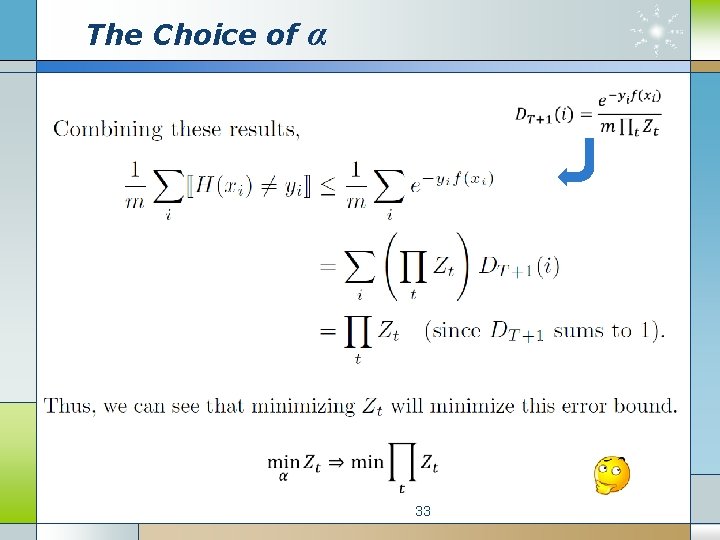

The Choice of α

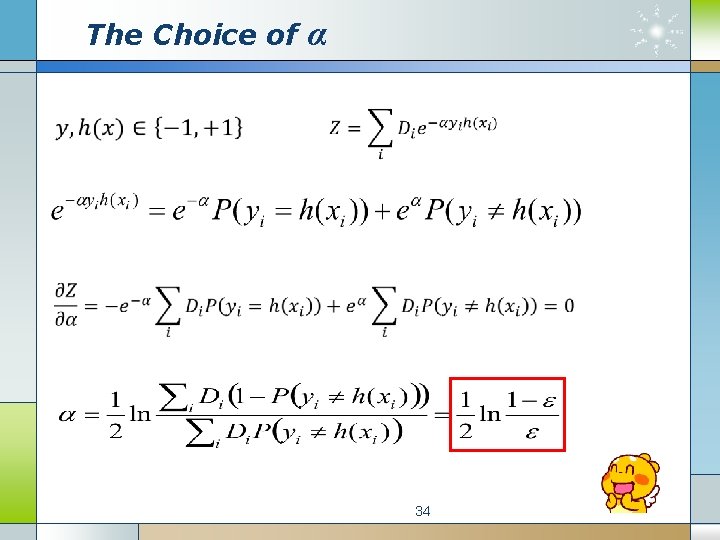

The Choice of α

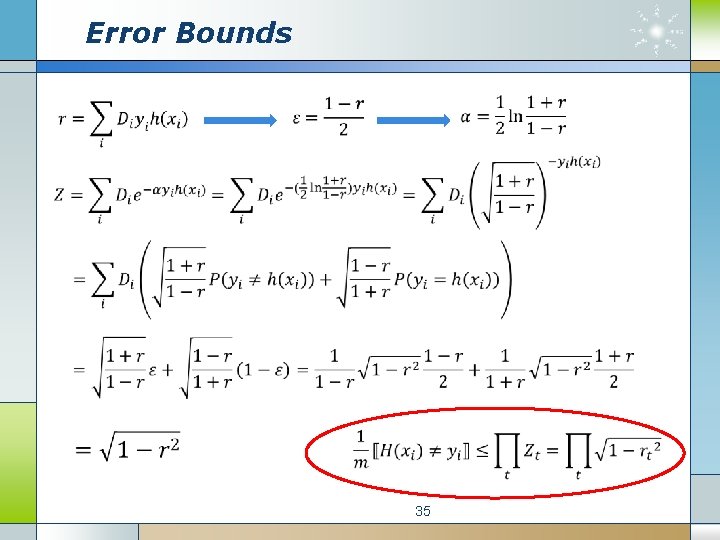

Error Bounds

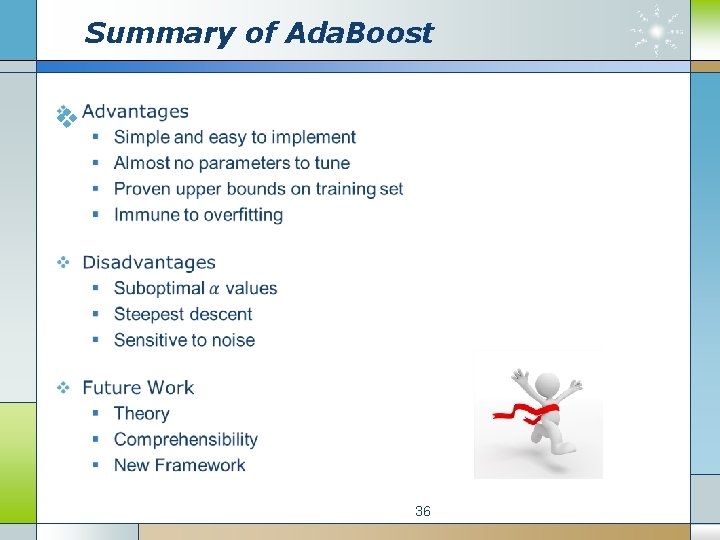

Summary of AdaBoost

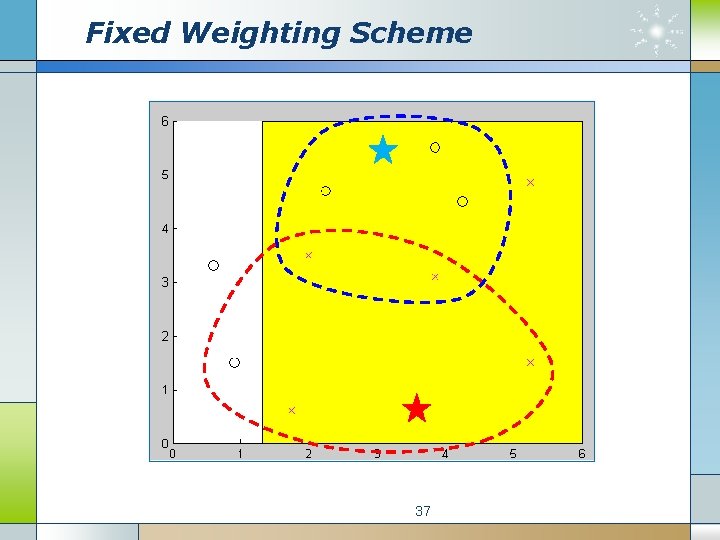

Fixed Weighting Scheme

Dynamic Weighting Scheme (diagram): Training → base classifiers $h_1(x), h_2(x), \ldots, h_T(x)$, each paired with an estimator $\alpha_1(x), \alpha_2(x), \ldots, \alpha_T(x)$; Test → boosting classifier $H(x) = \operatorname{sign}\left(\sum_{i=1}^{T} \alpha_i(x) h_i(x)\right)$ → Results

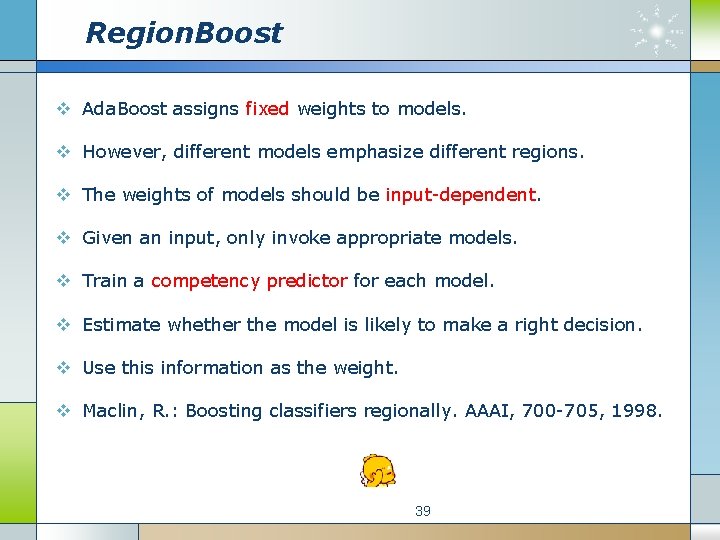

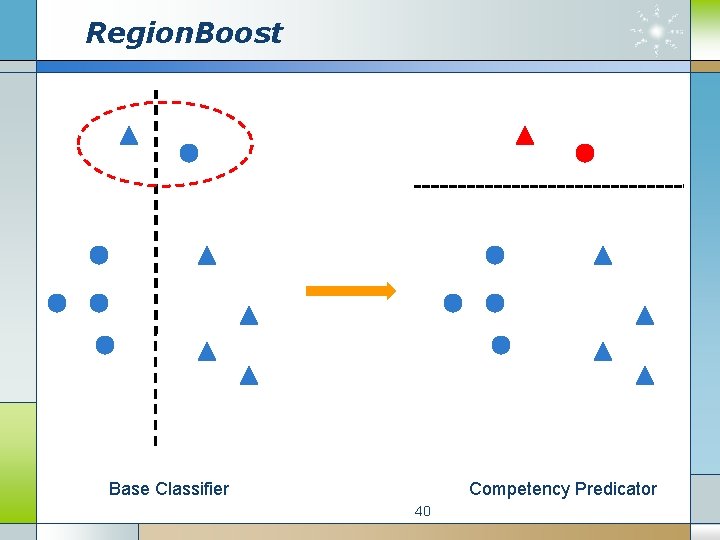

RegionBoost

- AdaBoost assigns fixed weights to models.
- However, different models emphasize different regions.
- The weights of models should be input-dependent.
- Given an input, only invoke appropriate models.
- Train a competency predictor for each model.
- Estimate whether the model is likely to make a right decision.
- Use this information as the weight.
- Maclin, R.: Boosting classifiers regionally. AAAI, 700–705, 1998.
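
The idea of input-dependent weights can be illustrated with a small sketch. It is not Maclin's algorithm: as a simplifying assumption, the competency of a model at x is taken to be the fraction of the k nearest training points that the model classified correctly, and the two hand-written base classifiers and the toy data are hypothetical.

```python
def knn_competency(x, train_xs, correct, k):
    """Fraction of the k nearest training points that a model got right."""
    nearest = sorted(range(len(train_xs)), key=lambda i: abs(train_xs[i] - x))[:k]
    return sum(correct[i] for i in nearest) / k


def region_predict(x, models, train_xs, train_ys, k=3):
    """H(x) = sign(sum_i alpha_i(x) * h_i(x)) with input-dependent alpha_i(x)."""
    score = 0.0
    for h in models:
        # Record where this model is right on the training data.
        correct = [h(xi) == yi for xi, yi in zip(train_xs, train_ys)]
        alpha = knn_competency(x, train_xs, correct, k)
        score += alpha * h(x)
    return 1 if score >= 0 else -1


def h_a(x):
    """Hypothetical base classifier that is reliable for small x."""
    return 1 if x <= 2 else -1


def h_b(x):
    """Hypothetical base classifier that is reliable for large x."""
    return 1 if x > 5 else -1


train_xs = list(range(10))
train_ys = [1, 1, 1, -1, -1, -1, 1, 1, 1, 1]
print([region_predict(x, [h_a, h_b], train_xs, train_ys) for x in train_xs])
```

On this toy set the two models disagree for x ≤ 2 and for x ≥ 6, and no pair of fixed weights resolves both regions correctly. Weighting each model by its local competency lets h_a decide on the left and h_b on the right.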

RegionBoost (figure panels: Base Classifier, Competency Predictor)

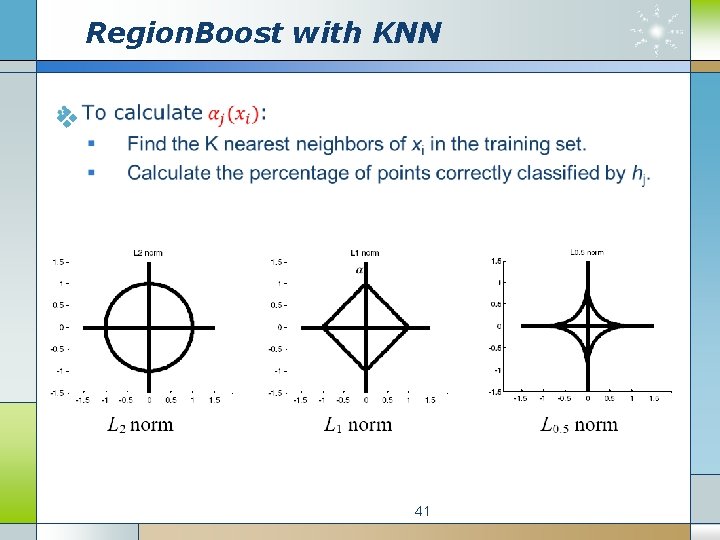

RegionBoost with KNN

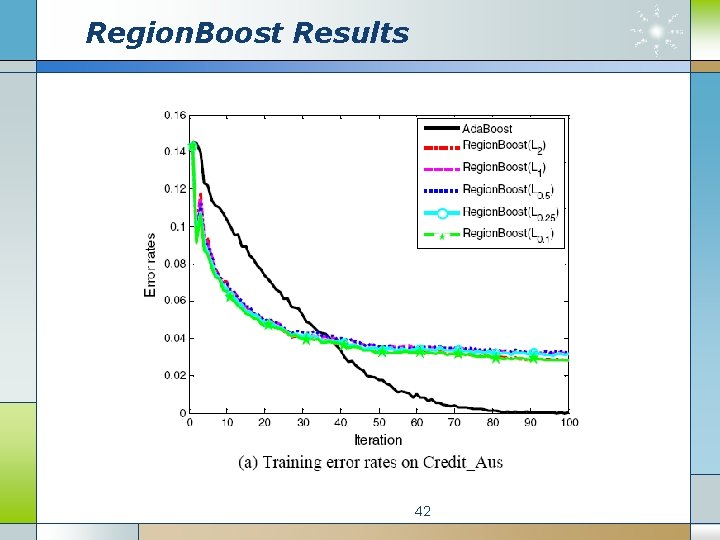

RegionBoost Results

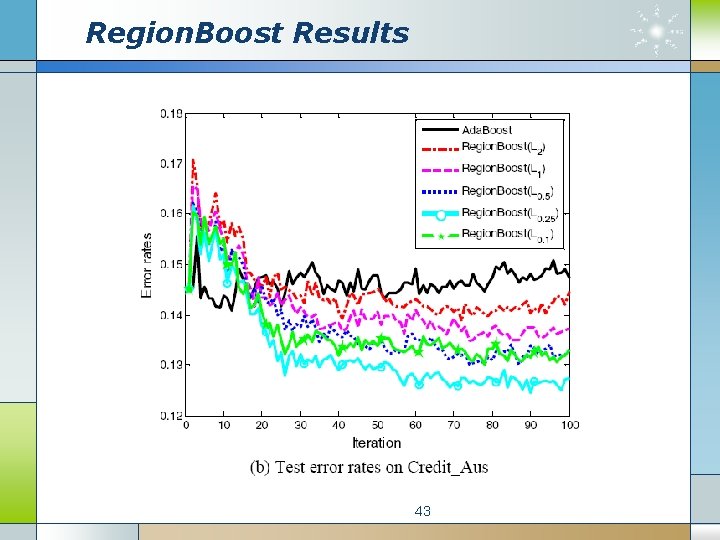

RegionBoost Results

Review

- What is ensemble learning?
- How can ensemble learning help us?
- Two major types of ensemble learning:
  - Parallel (Bagging)
  - Sequential (Boosting)
- Different ways to combine models:
  - Average
  - Majority Voting
  - Weighted Majority Voting
- Some representative algorithms:
  - Random Forests
  - AdaBoost
  - RegionBoost

Next Week’s Class Talk

- Volunteers are required for next week’s class talk.
- Topic: Applications of AdaBoost
- Suggested Reading
  - Robust Real-Time Face Detection
  - P. Viola and M. Jones
  - International Journal of Computer Vision 57(2), 137–154, 2004.
- Length: 20 minutes plus question time
