Ensemble Learning Introduction to Machine Learning and Data Mining, Carla Brodley

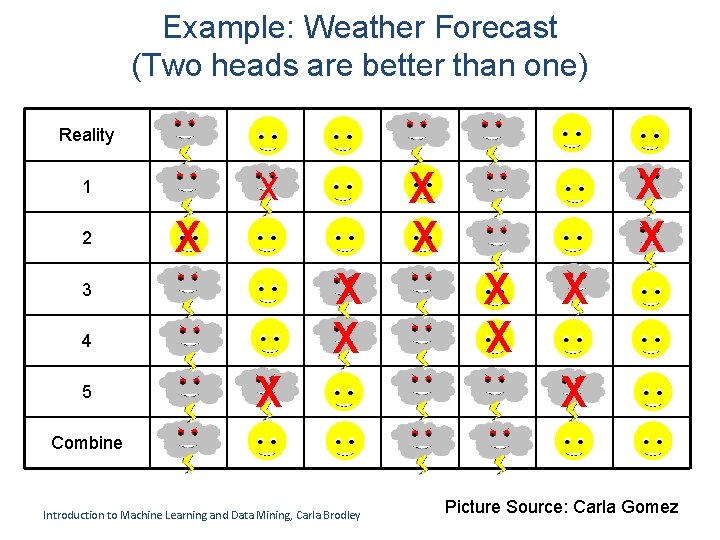

Example: Weather Forecast (Two heads are better than one)

[Figure: forecasts from five forecasters (1 to 5) compared against reality, with wrong forecasts marked X, and a final row that combines the five forecasts.]

Picture Source: Carla Gomez

Majority Vote Model

• Majority vote
  – Choose the class predicted by more than ½ the classifiers
  – If there is no agreement, return an error
  – When does this work?

Majority Vote Model

• Let p be the probability that a classifier makes an error
• Assume that classifier errors are independent
• The probability that exactly k of the n classifiers make an error is:

$$\binom{n}{k} p^k (1-p)^{n-k}$$

• Therefore the probability that a majority vote classifier is in error is:

$$\sum_{k > n/2}^{n} \binom{n}{k} p^k (1-p)^{n-k}$$
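
The two expressions above can be checked numerically. The sketch below is a minimal illustration, assuming a two-class problem so that the vote is wrong exactly when more than half of the classifiers err; the function names are hypothetical and not part of the slides.

```python
from math import comb


def prob_exactly_k_errors(n: int, k: int, p: float) -> float:
    """Binomial probability that exactly k of n independent classifiers err."""
    return comb(n, k) * p**k * (1 - p) ** (n - k)


def majority_vote_error(n: int, p: float) -> float:
    """Probability that more than half of the n classifiers err."""
    return sum(prob_exactly_k_errors(n, k, p) for k in range(n // 2 + 1, n + 1))


if __name__ == "__main__":
    # Error rate below 1/2: the ensemble error shrinks as n grows.
    for n in (1, 5, 21):
        print(n, majority_vote_error(n, 0.3))
    # Error rate above 1/2: the ensemble is worse than a single classifier.
    print(21, majority_vote_error(21, 0.6))
```

This also suggests an answer to "When does this work?": under the independence assumption, voting helps only when p is below ½.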

Value of Ensembles

• “No Free Lunch” Theorem
  – No single algorithm wins all the time!
• When combining multiple independent decisions, each of which is more accurate than random guessing, random errors cancel each other out and correct decisions are reinforced
• Human ensembles are demonstrably better
  – How many jelly beans are in the jar?
  – Who Wants to be a Millionaire: “Ask the audience”

What is Ensemble Learning?

• Ensemble: collection of base learners
  – Each learns the target function
  – Combine their outputs for a final prediction
  – Often called “meta-learning”
• How can you get different learners?
• How can you combine learners?

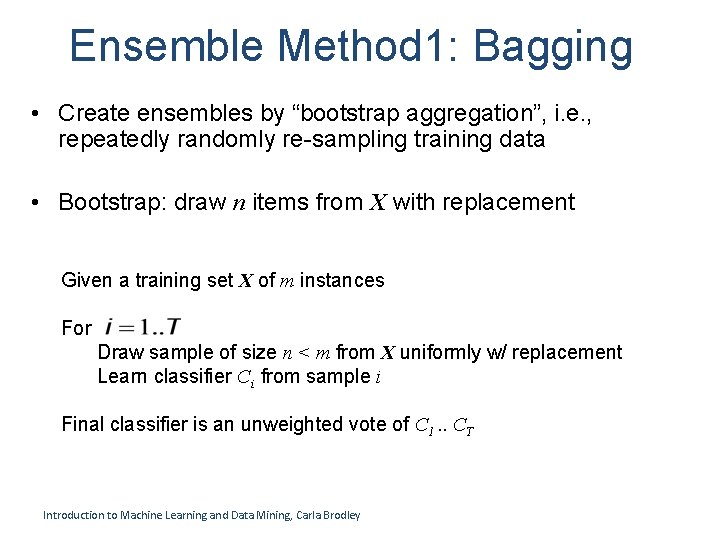

Ensemble Method 1: Bagging

• Create ensembles by “bootstrap aggregation”, i.e., repeatedly randomly re-sampling the training data
• Bootstrap: draw n items from X with replacement

Given a training set X of m instances
For i = 1 to T
  Draw a sample of size n < m from X uniformly with replacement
  Learn classifier Ci from sample i
Final classifier is an unweighted vote of C1..CT
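
The bagging loop above can be sketched in a few lines. This is an illustrative sketch only: `learn_stump` is a hypothetical base learner (a single-threshold rule on 1-D data with labels 0/1) chosen to keep the example self-contained, and the names `bagging` and `vote` are assumptions rather than anything defined in the slides.

```python
import random
from collections import Counter


def learn_stump(sample):
    """Hypothetical base learner: best single-threshold rule on 1-D data.

    sample is a list of (x, label) pairs with labels in {0, 1}.
    """
    best = None
    for threshold, _ in sample:
        for above in (0, 1):  # label predicted when x > threshold
            errors = sum((above if x > threshold else 1 - above) != y
                         for x, y in sample)
            if best is None or errors < best[0]:
                best = (errors, threshold, above)
    _, threshold, above = best
    return lambda x: above if x > threshold else 1 - above


def bagging(X, learn, T, n, rng):
    """Train T classifiers, each on n items drawn from X with replacement."""
    classifiers = []
    for _ in range(T):
        sample = [rng.choice(X) for _ in range(n)]
        classifiers.append(learn(sample))
    return classifiers


def vote(classifiers, x):
    """Unweighted vote: return the most frequently predicted class."""
    return Counter(c(x) for c in classifiers).most_common(1)[0][0]


if __name__ == "__main__":
    rng = random.Random(0)
    # Toy data: label is 1 when x >= 5.0, otherwise 0.
    X = [(x / 10, int(x >= 50)) for x in range(100)]
    ensemble = bagging(X, learn_stump, T=11, n=60, rng=rng)
    print(vote(ensemble, 2.0), vote(ensemble, 8.0))
```

Passing the learner as an argument mirrors the idea that bagging wraps any base algorithm; an odd T avoids ties in a two-class vote.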

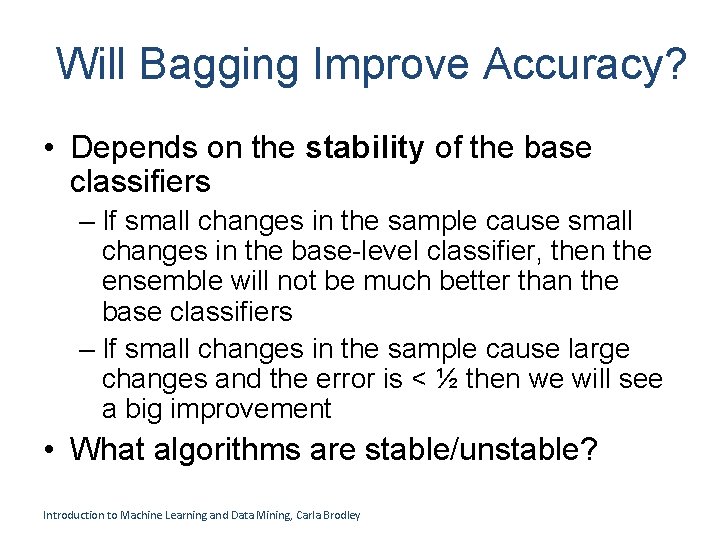

Will Bagging Improve Accuracy?

• Depends on the stability of the base classifiers
  – If small changes in the sample cause small changes in the base-level classifier, then the ensemble will not be much better than the base classifiers
  – If small changes in the sample cause large changes and the error is < ½, then we will see a big improvement
• What algorithms are stable/unstable?

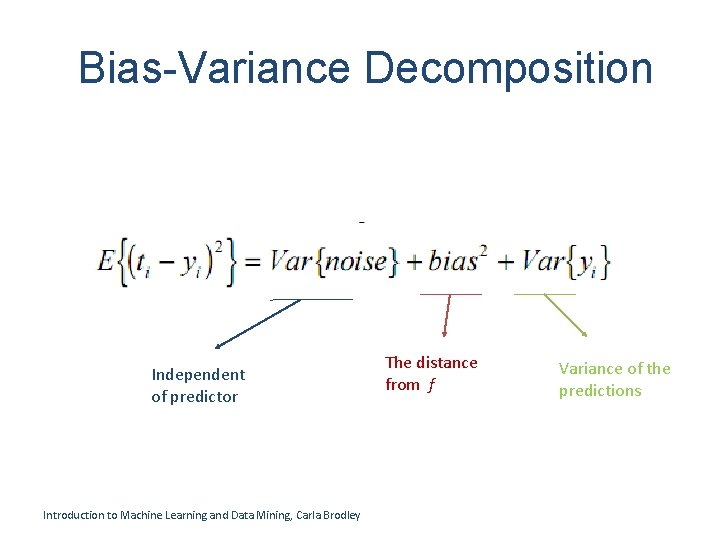

Bias-Variance Decomposition

The expected error splits into three terms:
• A term that is independent of the predictor
• Bias: the distance from f
• Variance: the variance of the predictions
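
For reference, the standard squared-error form of the decomposition is written out below. The notation is an assumption, since the slide's own equation is not available here: $y = f(x) + \varepsilon$ with noise variance $\sigma^2$, and $\hat{f}$ is the predictor learned from a random training set.

```latex
\mathbb{E}\big[(y - \hat{f}(x))^2\big]
  = \underbrace{\sigma^2}_{\text{independent of predictor}}
  + \underbrace{\big(\mathbb{E}[\hat{f}(x)] - f(x)\big)^2}_{\text{bias}^2\text{: distance from } f}
  + \underbrace{\mathbb{E}\big[(\hat{f}(x) - \mathbb{E}[\hat{f}(x)])^2\big]}_{\text{variance of the predictions}}
```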

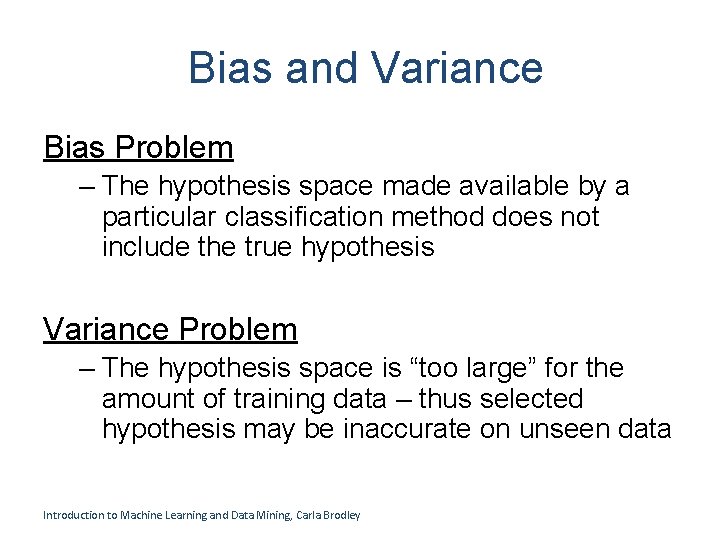

Bias and Variance

Bias Problem
  – The hypothesis space made available by a particular classification method does not include the true hypothesis

Variance Problem
  – The hypothesis space is “too large” for the amount of training data, so the selected hypothesis may be inaccurate on unseen data

Why Bagging Improves Accuracy

• Bagging decreases error by decreasing the variance in the results due to unstable learners: algorithms (like decision trees and neural networks) whose output can change dramatically when the training data is slightly changed

Why Bagging Improves Accuracy

• “Bagging goes a ways toward making a silk purse out of a sow’s ear, especially if the sow’s ear is twitchy.” – Leo Breiman (1928–2005)

Ensemble Method 2: Boosting

• Key idea: instead of sampling (as in bagging), reweight examples

Let m be the number of hypotheses to generate
Initialize all training instances to have the same weight
for i = 1 to m
  generate hypothesis hi
  increase the weights of the training instances that hi misclassifies
Final classifier is a weighted vote of all m hypotheses (where the weights are set based on training set accuracy)

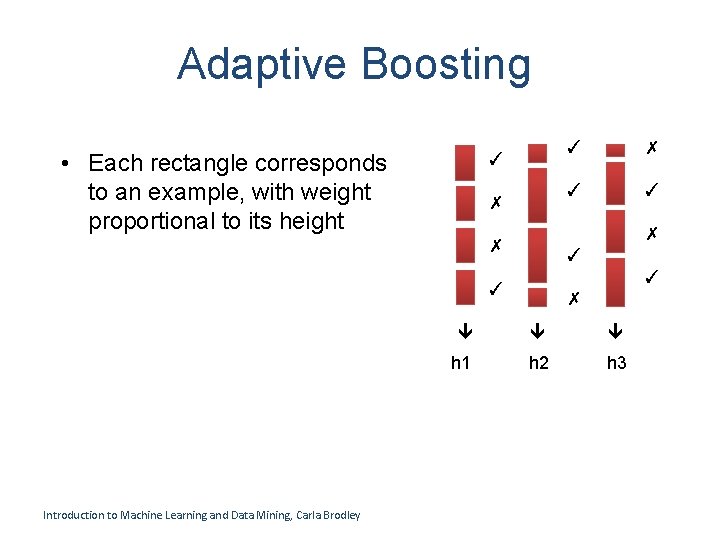

Adaptive Boosting

• Each rectangle corresponds to an example, with weight proportional to its height

[Figure: example weights under three successive hypotheses h1, h2, h3; ✓ marks a correctly classified example and ✗ a misclassified one.]

How do these algorithms handle instance weights?

• Linear discriminant functions
• Decision trees
• K-NN

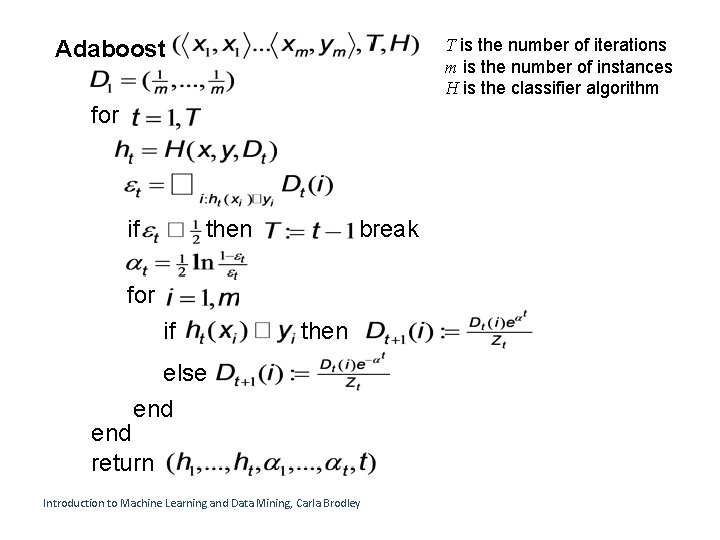

AdaBoost

T is the number of iterations
m is the number of instances
H is the classifier algorithm

[Algorithm listing: a loop over the iterations that breaks when a stopping condition holds, followed by a loop over the instances with an if/else weight update, and a final return.]
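
A minimal sketch of a standard AdaBoost loop with this shape follows. Several details are assumptions rather than the slide's own listing: labels are in {−1, +1}, the loop stops when the weighted error ε reaches ½, α = ½ ln((1 − ε)/ε) (which matches ε = 0.25 giving α = 0.5493), and weights are multiplied by e^(−α) for correct and e^(α) for incorrect instances before normalizing. `learn_weighted_stump` is a hypothetical stand-in for H.

```python
import math


def learn_weighted_stump(data, weights):
    """Hypothetical weak learner H: threshold rule minimising weighted error.

    data is a list of (x, y) pairs with y in {-1, +1}.
    """
    best = None
    for threshold, _ in data:
        for sign in (-1, 1):  # label predicted when x > threshold
            err = sum(w for (x, y), w in zip(data, weights)
                      if (sign if x > threshold else -sign) != y)
            if best is None or err < best[0]:
                best = (err, threshold, sign)
    _, threshold, sign = best
    return lambda x: sign if x > threshold else -sign


def adaboost(data, H, T):
    """Return a weighted-vote classifier built from at most T hypotheses."""
    m = len(data)
    weights = [1.0 / m] * m  # all instances start with the same weight
    ensemble = []            # list of (alpha, hypothesis) pairs
    for _ in range(T):
        h = H(data, weights)
        eps = sum(w for (x, y), w in zip(data, weights) if h(x) != y)
        if eps >= 0.5:       # assumed stopping rule: no better than chance
            break
        eps = max(eps, 1e-10)  # guard added here to avoid log of infinity
        alpha = 0.5 * math.log((1 - eps) / eps)
        ensemble.append((alpha, h))
        # Shrink weights of correct instances, grow weights of incorrect ones.
        weights = [w * math.exp(-alpha if h(x) == y else alpha)
                   for (x, y), w in zip(data, weights)]
        total = sum(weights)
        weights = [w / total for w in weights]
    return lambda x: 1 if sum(a * h(x) for a, h in ensemble) >= 0 else -1


if __name__ == "__main__":
    # No single threshold separates this pattern (+ + + - - - + + +).
    data = [(x, 1 if x < 3 or x >= 6 else -1) for x in range(9)]
    for T in (1, 3):
        clf = adaboost(data, learn_weighted_stump, T)
        print(T, sum(clf(x) != y for x, y in data), "training errors")
```

On this toy set a single threshold rule must misclassify three points, while three boosted rules fit the training data exactly.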

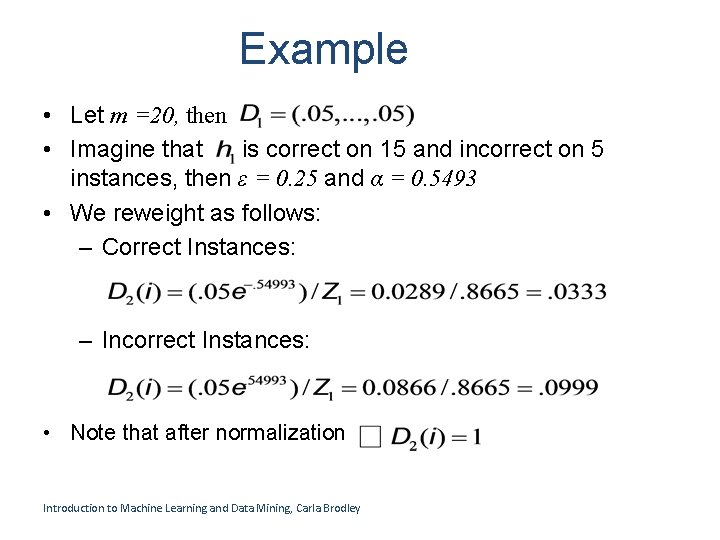

Example

• Let m = 20; each instance then starts with weight 1/m = 0.05
• Imagine that the hypothesis is correct on 15 and incorrect on 5 instances; then ε = 5/20 = 0.25 and α = ½ ln((1 − ε)/ε) = 0.5493
• We reweight as follows:
  – Correct instances: [expression not recoverable]
  – Incorrect instances: [expression not recoverable]
• Note that after normalization [remainder not recoverable]
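
The arithmetic of this example can be reproduced as follows. This is a sketch under an assumption: it uses the common AdaBoost update that multiplies weights by e^(−α) for correct and e^(α) for incorrect instances and then normalizes. The slide's own update expressions are not available, so the reweighted values below are illustrative; only ε and α are taken from the slide. The function name is hypothetical.

```python
import math


def reweight_once(m, n_incorrect):
    """One boosting round starting from uniform weights 1/m.

    Returns (eps, alpha, normalized weight of a correct instance,
    normalized weight of an incorrect instance).
    """
    w = 1.0 / m
    eps = n_incorrect * w                       # weighted error
    alpha = 0.5 * math.log((1 - eps) / eps)
    w_correct = w * math.exp(-alpha)            # assumed update rule
    w_incorrect = w * math.exp(alpha)           # assumed update rule
    z = (m - n_incorrect) * w_correct + n_incorrect * w_incorrect
    return eps, alpha, w_correct / z, w_incorrect / z


if __name__ == "__main__":
    eps, alpha, w_c, w_i = reweight_once(m=20, n_incorrect=5)
    print(eps, alpha, w_c, w_i)
    # Total weight now carried by the five misclassified instances.
    print(5 * w_i)
```

Under this assumed update, each correct instance ends at weight 1/30 and each incorrect instance at 1/10, so the five misclassified instances together carry half of the total weight.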

Boosting

• Originally developed by computational learning theorists to guarantee performance improvements on fitting training data for a weak learner that only needs to generate a hypothesis with a training accuracy greater than 0.5 (Schapire, 1990).
• Revised to be a practical algorithm, AdaBoost, for building ensembles that empirically improve generalization performance (Freund & Schapire, 1996).

Strong and Weak Learners

• “Strong learner” produces a classifier which can be arbitrarily accurate
• “Weak learner” produces a classifier more accurate than random guessing
• Original question: Can a set of weak learners create a single strong learner?

Summary of Boosting and Bagging

• Called “homogeneous ensembles”
• Both use a single learning algorithm but manipulate training data to learn multiple models
  – Data 1 ≠ Data 2 ≠ … ≠ Data T
  – Learner 1 = Learner 2 = … = Learner T
• Methods for changing training data:
  – Bagging: Resample training data
  – Boosting: Reweight training data
• In WEKA, these are called meta-learners: they take a learning algorithm as an argument (base learner) and create a new learning algorithm

Where do Learners come from?

• Bagging
• Boosting
• Partitioning the data (must have a large amount)
• Using different feature subsets, different algorithms, different parameters of the same algorithm

Ensemble Method 3: Random Forests

• For i = 1 to T,
  – Take a bootstrap sample (bag)
  – Grow a random decision tree T_i
    • At each node, choose the split feature from a random subset of n features (n < total number of features)
    • Grow a full tree (do not prune)
• Classify new objects by taking a majority vote of the T random trees
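
The procedure above can be sketched compactly. This is an illustrative sketch, not Breiman's implementation: it assumes numeric features, integer or string class labels, Gini impurity as the split criterion, and that "choose a feature" means picking the best split among a fresh random subset of `n_features` at each node. All function names are hypothetical.

```python
import random
from collections import Counter


def majority(labels):
    """Most common label in an iterable of labels."""
    return Counter(labels).most_common(1)[0][0]


def gini(labels):
    """Gini impurity of a list of labels."""
    total = len(labels)
    return 1.0 - sum((c / total) ** 2 for c in Counter(labels).values())


def grow_tree(rows, n_features, rng):
    """Grow an unpruned tree; rows are (feature_tuple, label) pairs."""
    labels = [y for _, y in rows]
    if len(set(labels)) == 1:
        return labels[0]  # pure leaf
    candidates = rng.sample(range(len(rows[0][0])), n_features)
    best = None
    for f in candidates:
        for threshold in {x[f] for x, _ in rows}:
            left = [r for r in rows if r[0][f] <= threshold]
            right = [r for r in rows if r[0][f] > threshold]
            if not left or not right:
                continue
            score = (len(left) * gini([y for _, y in left])
                     + len(right) * gini([y for _, y in right]))
            if best is None or score < best[0]:
                best = (score, f, threshold, left, right)
    if best is None:
        return majority(labels)  # sampled features cannot split this node
    _, f, threshold, left, right = best
    return (f, threshold,
            grow_tree(left, n_features, rng),
            grow_tree(right, n_features, rng))


def predict_tree(tree, x):
    """Follow splits until a leaf (a bare label) is reached."""
    while isinstance(tree, tuple):
        f, threshold, left, right = tree
        tree = left if x[f] <= threshold else right
    return tree


def random_forest(rows, T, n_features, rng):
    """Grow T trees, each on its own bootstrap sample of the rows."""
    forest = []
    for _ in range(T):
        bag = [rng.choice(rows) for _ in range(len(rows))]
        forest.append(grow_tree(bag, n_features, rng))
    return forest


def predict_forest(forest, x):
    """Majority vote of the trees."""
    return majority(predict_tree(t, x) for t in forest)


if __name__ == "__main__":
    rng = random.Random(0)
    # Toy data: features (i, i mod 3, -i); label is 1 when i >= 10.
    rows = [((i, i % 3, -i), int(i >= 10)) for i in range(20)]
    forest = random_forest(rows, T=25, n_features=2, rng=rng)
    print(predict_forest(forest, (2, 2, -2)), predict_forest(forest, (17, 2, -17)))
```

Trees are encoded as nested tuples with bare labels as leaves, which is why the sketch assumes labels are never tuples themselves.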

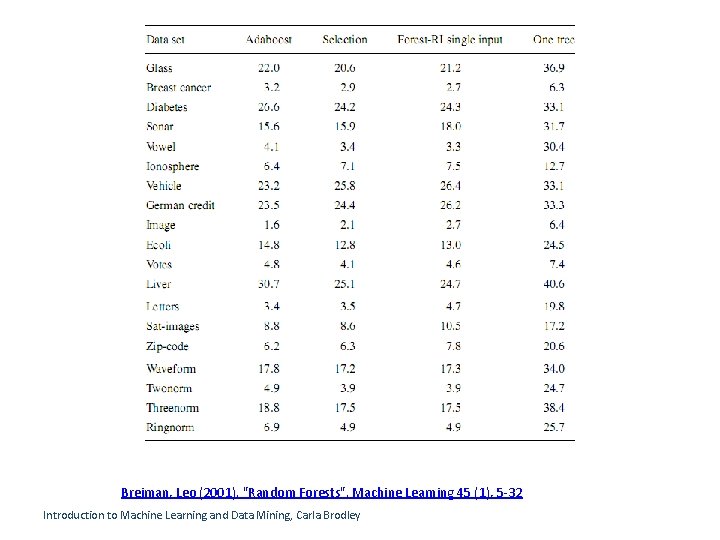

Breiman, Leo (2001). "Random Forests". Machine Learning 45(1), 5–32.

Methods for Combining Classifiers

• Unweighted vote (Bagging)
• If classifiers produce class probabilities rather than votes, we can combine probabilities
• Weighted vote (typically a function of the accuracy)
• Stacking: learning how to combine classifiers
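
The first three combination rules can be sketched directly; stacking is left out because it needs a second learning step. The function names and input shapes below are assumptions made for illustration, and averaging is only one possible way to combine probabilities.

```python
from collections import defaultdict


def weighted_vote(predictions, weights):
    """Return the label with the largest total weight.

    predictions holds one class label per classifier, weights one
    non-negative weight per classifier (for example, its accuracy).
    """
    totals = defaultdict(float)
    for label, w in zip(predictions, weights):
        totals[label] += w
    return max(totals, key=totals.get)


def unweighted_vote(predictions):
    """Plain vote: every classifier counts equally."""
    return weighted_vote(predictions, [1.0] * len(predictions))


def average_probabilities(distributions):
    """Average per-class probabilities and return the most probable label.

    distributions holds one {label: probability} dict per classifier.
    """
    totals = defaultdict(float)
    for dist in distributions:
        for label, p in dist.items():
            totals[label] += p / len(distributions)
    return max(totals, key=totals.get)


if __name__ == "__main__":
    print(unweighted_vote(["a", "b", "a"]))
    print(weighted_vote(["a", "b", "a"], [0.2, 0.9, 0.3]))
    print(average_probabilities([
        {"a": 0.9, "b": 0.1},
        {"a": 0.4, "b": 0.6},
        {"a": 0.4, "b": 0.6},
    ]))
```

The last call shows how the rules can disagree: two of the three classifiers lean toward "b", but the one confident classifier pulls the averaged probability toward "a".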

Netflix Prize

• Began October 2006
• Supervised learning task
  – Training data is a set of users and the ratings (1, 2, 3, 4, or 5 stars) those users have given to movies
  – Construct a classifier that, given a user and an unrated movie, correctly classifies that movie as either 1, 2, 3, 4, or 5 stars
• $1 million prize for a 10% improvement over Netflix’s current movie recommender

Ensemble methods are the best performers…

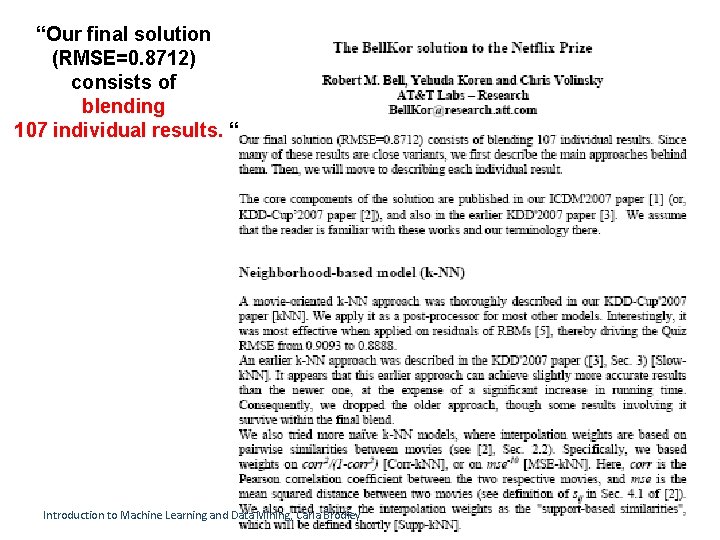

“Our final solution (RMSE=0.8712) consists of blending 107 individual results.”
