
Ensemble Learning: Bagging, Boosting, Stacking, and Other Topics

Professor Carolina Ruiz
Department of Computer Science, WPI
http://www.cs.wpi.edu/~ruiz

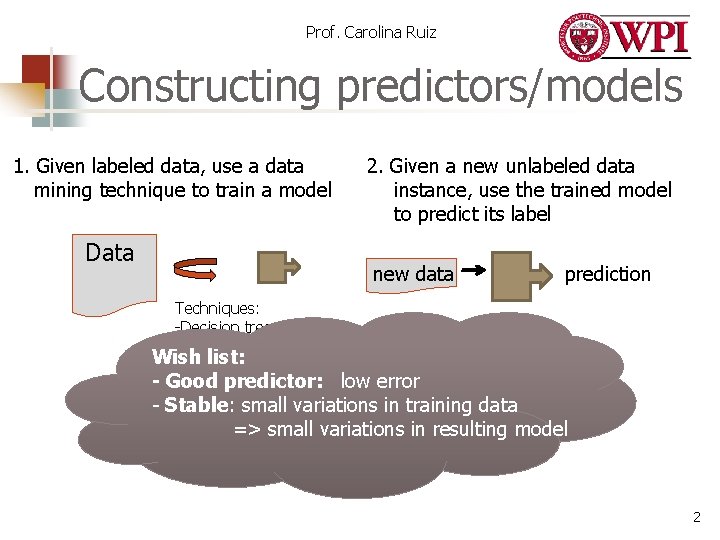

Prof. Carolina Ruiz

Constructing predictors/models

1. Given labeled data, use a data mining technique to train a model.
2. Given a new unlabeled data instance, use the trained model to predict its label.

Techniques:
- Decision trees
- Bayesian nets
- Neural nets
- …

Wish list:
- Good predictor: low error
- Stable: small variations in training data => small variations in the resulting model
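The two steps above can be sketched in Python as follows. A decision stump (a one-level decision tree) stands in for "a data mining technique". This is a minimal illustration under that assumption. `fit_stump` and `predict_stump` are hypothetical names, and the exhaustive threshold search was chosen for brevity, not efficiency.

```python
from typing import List, Tuple

# Hypothetical model type: (attribute index, threshold, label if <= threshold, label if > threshold).
Stump = Tuple[int, float, int, int]


def fit_stump(X: List[List[float]], y: List[int]) -> Stump:
    """Step 1: train a model on labeled data by trying every attribute/threshold/label combination."""
    labels = sorted(set(y))
    best, best_err = None, len(y) + 1
    for j in range(len(X[0])):
        for t in sorted({row[j] for row in X}):
            for left in labels:
                for right in labels:
                    # Count the training instances this candidate rule mislabels.
                    err = sum((left if row[j] <= t else right) != label
                              for row, label in zip(X, y))
                    if err < best_err:
                        best, best_err = (j, t, left, right), err
    return best


def predict_stump(stump: Stump, x: List[float]) -> int:
    """Step 2: predict the label of a new, unlabeled instance."""
    j, t, left, right = stump
    return left if x[j] <= t else right


X = [[1.0, 5.0], [2.0, 4.0], [3.0, 1.0], [4.0, 2.0]]
y = [0, 0, 1, 1]
model = fit_stump(X, y)
print(model, predict_stump(model, [3.5, 3.0]))  # (0, 2.0, 0, 1) 1
```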

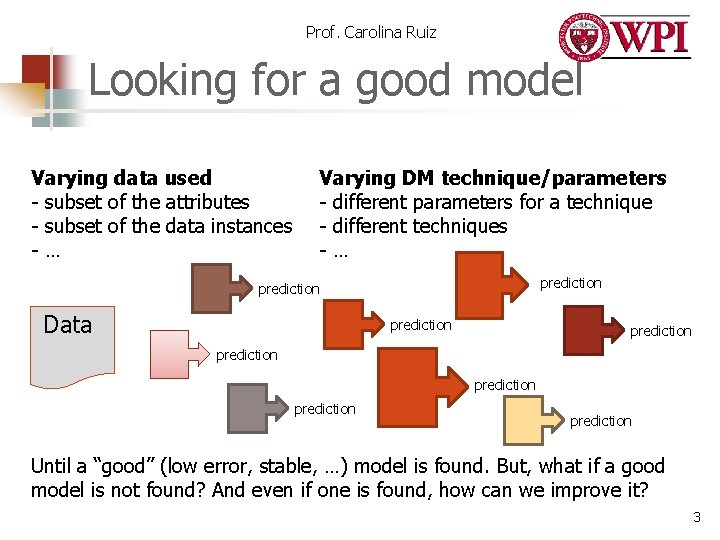

Looking for a good model

Varying the data used:
- subset of the attributes
- subset of the data instances
- …

Varying the DM technique/parameters:
- different parameters for a technique
- different techniques
- …

Repeat until a "good" (low error, stable, …) model is found.

But what if a good model is not found? And even if one is found, how can we improve it?
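The search on this slide can be sketched as a loop over candidate models that stops at the first one whose held-out error is acceptable. This minimal sketch rests on several illustrative assumptions:
- Only the attribute subset is varied.
- The technique is fixed to 1-nearest-neighbour.
- "Good" means a validation error at or below a chosen `target_error`.

The names `nn_predict` and `search` are also hypothetical.

```python
from itertools import combinations
from typing import List, Optional, Sequence, Tuple


def nn_predict(train_X: List[List[float]], train_y: List[int],
               x: Sequence[float], attrs: Tuple[int, ...]) -> int:
    """1-nearest-neighbour prediction that looks only at the attributes in `attrs`."""
    def dist(a: Sequence[float]) -> float:
        return sum((a[j] - x[j]) ** 2 for j in attrs)
    nearest = min(range(len(train_X)), key=lambda i: dist(train_X[i]))
    return train_y[nearest]


def search(train_X, train_y, val_X, val_y,
           target_error: float = 0.0) -> Tuple[Optional[Tuple[int, ...]], float]:
    """Try attribute subsets (smallest first) until a 'good' model is found; else return the best seen."""
    n_attrs = len(train_X[0])
    best_attrs, best_err = None, float("inf")
    for k in range(1, n_attrs + 1):
        for attrs in combinations(range(n_attrs), k):
            wrong = sum(nn_predict(train_X, train_y, x, attrs) != label
                        for x, label in zip(val_X, val_y))
            err = wrong / len(val_y)
            if err < best_err:
                best_attrs, best_err = attrs, err
            if err <= target_error:  # "good enough": stop searching
                return best_attrs, best_err
    return best_attrs, best_err


train_X = [[0.0, 9.0], [0.2, 1.0], [1.0, 8.0], [1.2, 0.0]]
train_y = [0, 0, 1, 1]
val_X, val_y = [[0.1, 0.5], [1.1, 9.5]], [0, 1]
print(search(train_X, train_y, val_X, val_y))  # ((0,), 0.0)
```

When no candidate reaches `target_error`, the loop falls through and returns the best model it saw. That is exactly the "what if a good model is not found?" situation that motivates ensembles.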

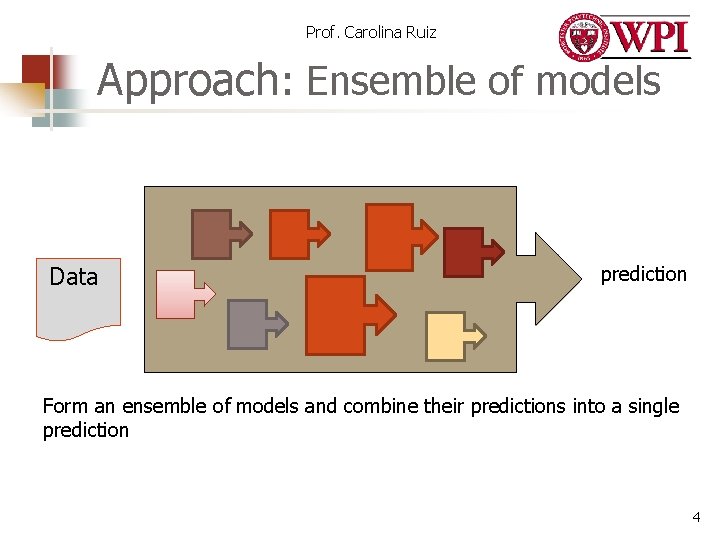

Approach: Ensemble of models

Form an ensemble of models and combine their predictions into a single prediction.
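Two common ways to combine individual predictions into one are sketched below:
- majority vote, for class labels;
- weighted average, for numeric outputs.

These are illustrative helpers with hypothetical names, not the only possible combiners.

```python
from collections import Counter
from typing import Hashable, Sequence


def majority_vote(predictions: Sequence[Hashable]) -> Hashable:
    """Most frequent label; ties go to the label that appears first in `predictions`."""
    return Counter(predictions).most_common(1)[0][0]


def weighted_average(predictions: Sequence[float], weights: Sequence[float]) -> float:
    """Weighted mean of numeric predictions (e.g., probabilities or regression outputs)."""
    return sum(w * p for w, p in zip(weights, predictions)) / sum(weights)


print(majority_vote(["spam", "ham", "spam"]))    # spam
print(weighted_average([1.0, 0.0], [3.0, 1.0]))  # 0.75
```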

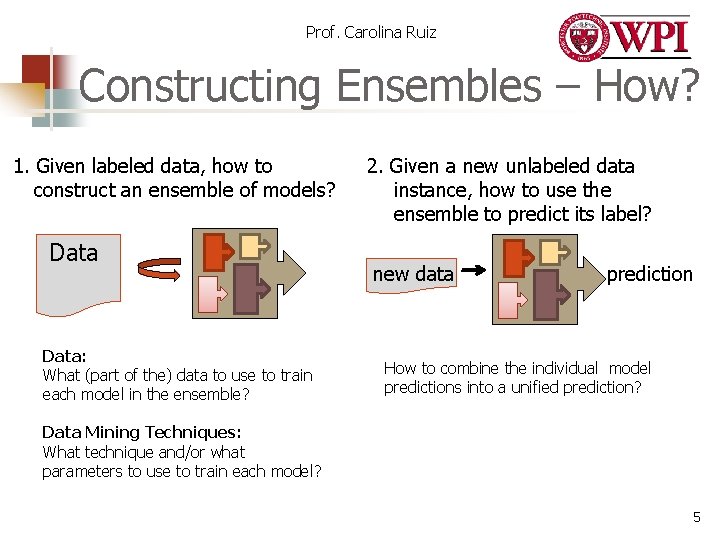

Constructing Ensembles: How?

1. Given labeled data, how do we construct an ensemble of models?
   - Data: What (part of the) data should be used to train each model in the ensemble?
   - Data mining techniques: What technique and/or what parameters should be used to train each model?
2. Given a new unlabeled data instance, how do we use the ensemble to predict its label?
   - How should the individual model predictions be combined into a unified prediction?

Several Approaches

- Bagging (Bootstrap Aggregating): Breiman, UC Berkeley
- Boosting: Schapire, AT&T Research (now at Princeton U.); Friedman, Stanford U.
- Stacking: Wolpert, NASA Ames Research Center
- Model Selection Meta-learning: Floyd, Ruiz, Alvarez, WPI and Boston College
- Mixture of Experts in Neural Nets: Alvarez, Ruiz, Kawato, Kogel, Boston College and WPI
- …

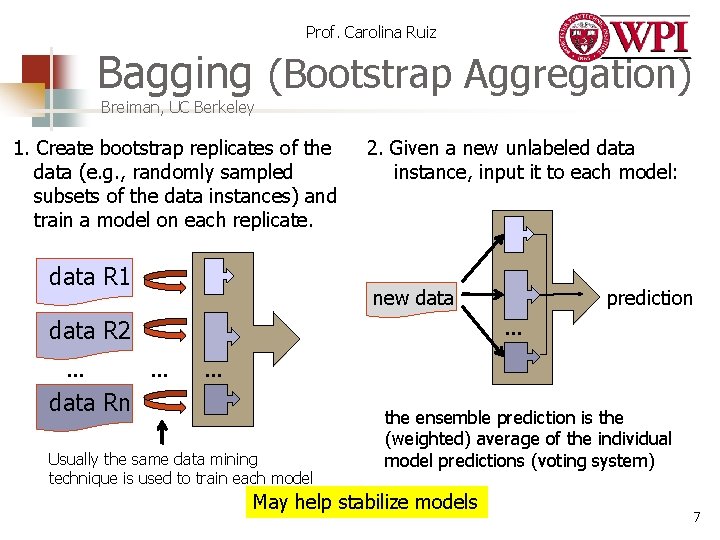

Bagging (Bootstrap Aggregation)
Breiman, UC Berkeley

1. Create bootstrap replicates of the data and train a model on each replicate.
   - Each replicate is a random sample of the data instances, drawn with replacement and usually the same size as the original dataset.
   - Usually the same data mining technique is used to train each model.
2. Given a new unlabeled data instance, input it to each model. The ensemble prediction is the (weighted) average of the individual model predictions (a voting system).

May help stabilize models.
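A bootstrap replicate can be drawn as sketched below: sample n instances from the n originals, uniformly at random and with replacement. Because of the replacement, some instances repeat and others are left out. On average a replicate contains about 1 - (1 - 1/n)^n ≈ 63.2% of the distinct original instances. The function name and seeding scheme are illustrative assumptions.

```python
import random
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def bootstrap_replicates(data: Sequence[T], k: int, seed: int = 0) -> List[List[T]]:
    """Return k replicates, each the same size as `data`, sampled with replacement."""
    rng = random.Random(seed)  # seeded for reproducibility
    return [rng.choices(data, k=len(data)) for _ in range(k)]


data = list(range(1000))
reps = bootstrap_replicates(data, k=5)
for rep in reps:
    # Size of the replicate and fraction of distinct originals it contains (about 0.63).
    print(len(rep), round(len(set(rep)) / len(data), 3))
```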

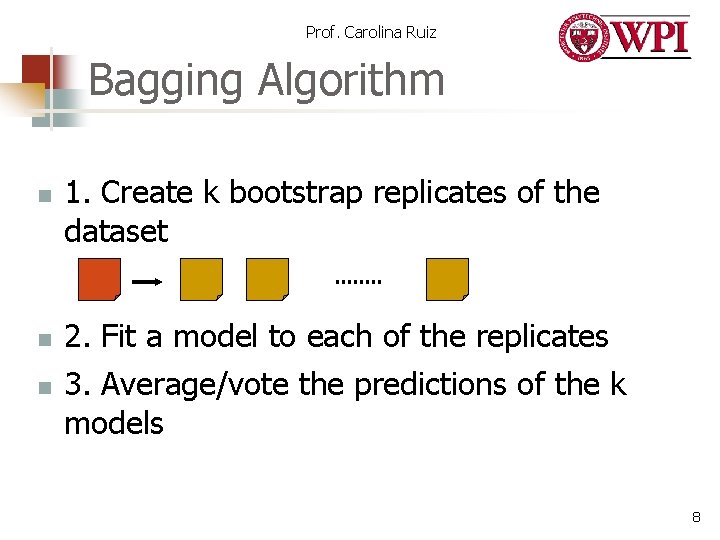

Bagging Algorithm

1. Create k bootstrap replicates of the dataset.
2. Fit a model to each of the replicates.
3. Average/vote the predictions of the k models.
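The three steps above can be sketched end to end in Python. Two choices are assumptions for illustration:
- The base technique is a decision stump whose two leaves predict the majority label on each side of the threshold.
- The combination step is an unweighted majority vote.

`fit_stump`, `bagging_fit` and `bagging_predict` are hypothetical names.

```python
import random
from collections import Counter
from typing import Callable, List, Sequence

Model = Callable[[Sequence[float]], int]


def fit_stump(X: List[Sequence[float]], y: List[int]) -> Model:
    """Base learner: the single-attribute threshold rule with the fewest training errors."""
    best = None
    for j in range(len(X[0])):
        for t in sorted({row[j] for row in X}):
            left = [lab for row, lab in zip(X, y) if row[j] <= t]
            right = [lab for row, lab in zip(X, y) if row[j] > t]
            l_lab = Counter(left).most_common(1)[0][0]
            r_lab = Counter(right).most_common(1)[0][0] if right else l_lab
            err = sum(lab != l_lab for lab in left) + sum(lab != r_lab for lab in right)
            if best is None or err < best[0]:
                best = (err, j, t, l_lab, r_lab)
    _, j, t, l_lab, r_lab = best
    return lambda x: l_lab if x[j] <= t else r_lab


def bagging_fit(X, y, k: int, fit=fit_stump, seed: int = 0) -> List[Model]:
    rng = random.Random(seed)
    n = len(X)
    models = []
    for _ in range(k):
        idx = [rng.randrange(n) for _ in range(n)]                     # 1. bootstrap replicate
        models.append(fit([X[i] for i in idx], [y[i] for i in idx]))  # 2. fit a model to it
    return models


def bagging_predict(models: List[Model], x: Sequence[float]) -> int:
    return Counter(m(x) for m in models).most_common(1)[0][0]          # 3. vote


X = [(float(i),) for i in range(20)]
y = [0] * 10 + [1] * 10
ensemble = bagging_fit(X, y, k=15)
print(bagging_predict(ensemble, (2.0,)), bagging_predict(ensemble, (17.0,)))  # 0 1
```

The k fits are independent of each other, which is why bagging parallelizes easily. Here they run sequentially for simplicity.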

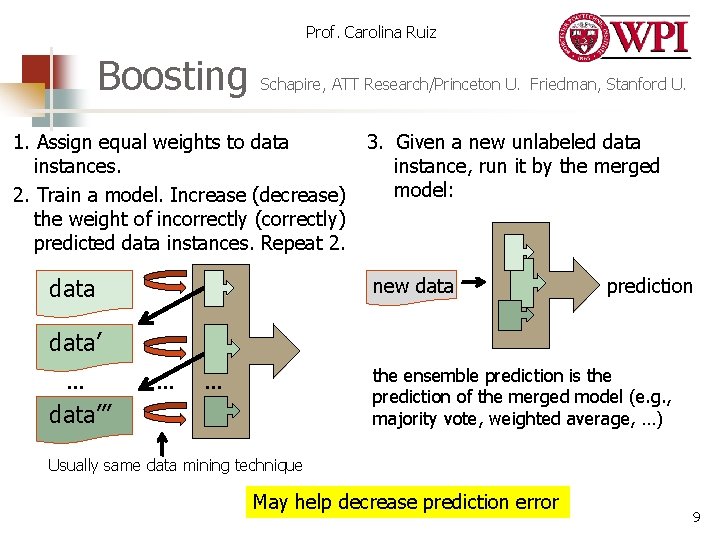

Boosting
Schapire, AT&T Research/Princeton U.; Friedman, Stanford U.

1. Assign equal weights to the data instances.
2. Train a model. Increase the weights of incorrectly predicted data instances and decrease the weights of correctly predicted ones.
   - Repeat step 2, so that each new model is trained on the reweighted data (data', data'', …).
   - Usually the same data mining technique is used for every model.
3. Given a new unlabeled data instance, run it through the merged model. The ensemble prediction is the prediction of the merged model (e.g., majority vote, weighted average, …).

May help decrease prediction error.
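AdaBoost (Freund and Schapire, cited in the references) is one concrete instance of this reweighting. It uses the update below, assuming:
- labels $y_i \in \{-1, +1\}$;
- instance weights $w_i$ that sum to 1;
- a model $h_t$ trained at round $t$.

The worked numbers are an illustrative case, not results from the slides.

```latex
\varepsilon_t = \sum_{i:\, h_t(x_i) \neq y_i} w_i, \qquad
\alpha_t = \tfrac{1}{2}\ln\frac{1-\varepsilon_t}{\varepsilon_t}, \qquad
w_i \leftarrow \frac{w_i \, e^{-\alpha_t y_i h_t(x_i)}}{Z_t}

% Z_t renormalizes the weights to sum to 1. Misclassified instances (y_i h_t(x_i) = -1)
% are multiplied by e^{\alpha_t}; correctly classified ones by e^{-\alpha_t}.
% Example: 10 instances with w_i = 0.1, of which 3 are misclassified:
\varepsilon_1 = 0.3, \quad \alpha_1 = \tfrac{1}{2}\ln\tfrac{7}{3} \approx 0.424, \quad
Z_1 = 3(0.1)\sqrt{7/3} + 7(0.1)\sqrt{3/7} = 0.2\sqrt{21} \approx 0.917

w_{\text{wrong}} = \frac{0.1\sqrt{7/3}}{0.2\sqrt{21}} = \frac{1}{6} \approx 0.167, \qquad
w_{\text{right}} = \frac{0.1\sqrt{3/7}}{0.2\sqrt{21}} = \frac{1}{14} \approx 0.071
```

After one round the three misclassified instances carry half of the total weight (3 × 1/6 = 0.5). This pushes the next model to get them right.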

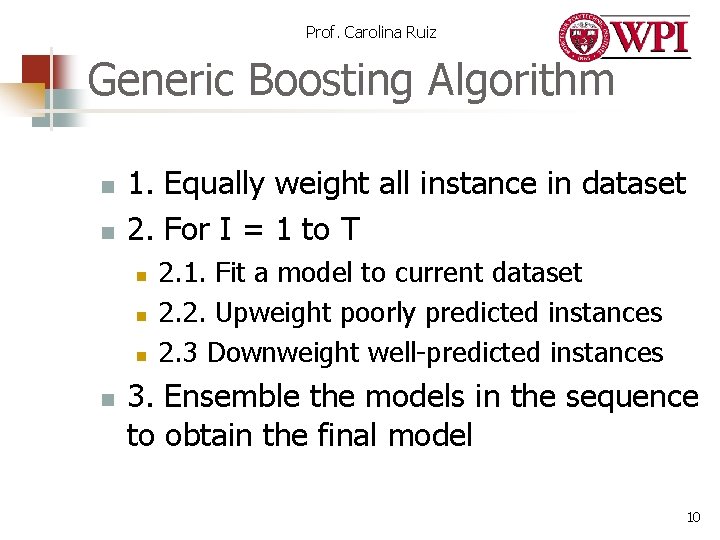

Generic Boosting Algorithm

1. Equally weight all instances in the dataset.
2. For t = 1 to T:
   2.1. Fit a model to the current (weighted) dataset.
   2.2. Upweight poorly predicted instances.
   2.3. Downweight well-predicted instances.
3. Ensemble the models in the sequence to obtain the final model.
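The generic loop above can be sketched with discrete AdaBoost, a specific boosting algorithm swapped in here to supply the concrete reweighting rule. The sketch uses labels in {-1, +1} and decision stumps as the fitted models. The helper names and the clipping constant are assumptions for illustration.

```python
import math
from typing import Callable, List, Sequence, Tuple

Model = Callable[[Sequence[float]], int]


def fit_weighted_stump(X, y, w) -> Model:
    """Weak learner: threshold rule on one attribute with the lowest *weighted* error."""
    best = None
    for j in range(len(X[0])):
        for t in sorted({row[j] for row in X}):
            for sign in (1, -1):
                err = sum(wi for row, yi, wi in zip(X, y, w)
                          if (sign if row[j] <= t else -sign) != yi)
                if best is None or err < best[0]:
                    best = (err, j, t, sign)
    _, j, t, sign = best
    return lambda x: sign if x[j] <= t else -sign


def adaboost(X, y, T: int) -> List[Tuple[float, Model]]:
    n = len(X)
    w = [1.0 / n] * n                                # 1. equal weights
    ensemble = []
    for _ in range(T):                               # 2. for t = 1..T
        h = fit_weighted_stump(X, y, w)              # 2.1 fit to the weighted data
        eps = sum(wi for xi, yi, wi in zip(X, y, w) if h(xi) != yi)
        eps = min(max(eps, 1e-10), 1 - 1e-10)        # guard against log(0) and division by 0
        alpha = 0.5 * math.log((1 - eps) / eps)
        # 2.2 / 2.3: y*h = -1 (wrong) -> times e^alpha; y*h = +1 (right) -> times e^-alpha
        w = [wi * math.exp(-alpha * yi * h(xi)) for xi, yi, wi in zip(X, y, w)]
        total = sum(w)
        w = [wi / total for wi in w]
        ensemble.append((alpha, h))
    return ensemble


def predict(ensemble, x) -> int:
    """3. Final model: sign of the alpha-weighted vote of all rounds."""
    score = sum(alpha * h(x) for alpha, h in ensemble)
    return 1 if score >= 0 else -1


X = [(float(i),) for i in range(9)]
y = [1, 1, 1, -1, -1, -1, 1, 1, 1]  # no single threshold separates this
for T in (1, 3):
    ens = adaboost(X, y, T)
    print(T, sum(predict(ens, x) != yi for x, yi in zip(X, y)))  # "1 3", then "3 0"
```

On this toy data a single stump misclassifies 3 of the 9 instances, while three boosted stumps fit the training data exactly.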

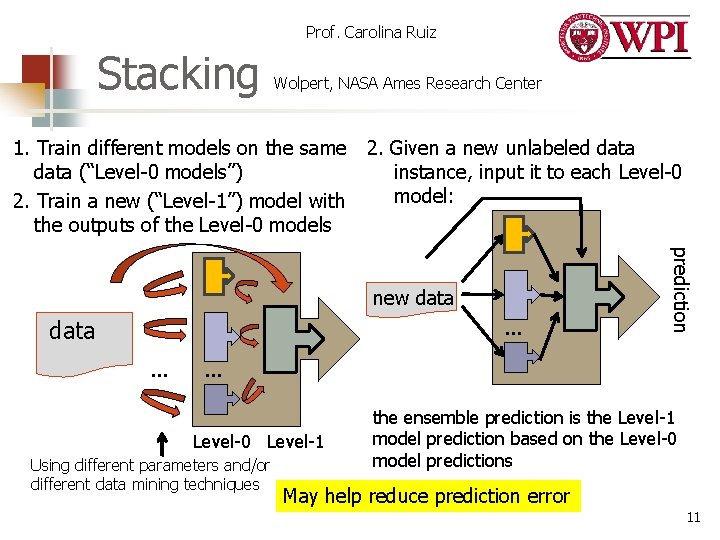

Stacking
Wolpert, NASA Ames Research Center

1. Train different models on the same data ("Level-0 models"), using different parameters and/or different data mining techniques.
2. Train a new ("Level-1") model on the outputs of the Level-0 models.
3. Given a new unlabeled data instance, input it to each Level-0 model. The ensemble prediction is the Level-1 model's prediction based on the Level-0 model predictions.

May help reduce prediction error.
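A minimal stacking sketch follows. All of the following are assumptions for illustration:
- The Level-0 models are 1-nearest-neighbour classifiers, each looking at a different attribute.
- The Level-1 model is a lookup table that maps each combination of Level-0 outputs to its majority label.
- Following common practice, the Level-1 training inputs are out-of-fold Level-0 predictions. This keeps the Level-1 model from learning on Level-0 outputs for data those models were trained on.

`make_1nn`, `fit_lookup` and `stacking_fit` are hypothetical names.

```python
from collections import Counter, defaultdict
from typing import Callable, List, Sequence

Model = Callable[[Sequence], int]


def make_1nn(attrs: List[int]):
    """Level-0 technique: 1-nearest neighbour restricted to the attributes in `attrs`."""
    def fit(X, y) -> Model:
        def predict(x):
            nearest = min(range(len(X)),
                          key=lambda k: sum((X[k][j] - x[j]) ** 2 for j in attrs))
            return y[nearest]
        return predict
    return fit


def fit_lookup(Z, y) -> Model:
    """Level-1 technique: majority label seen for each tuple of Level-0 outputs."""
    table = defaultdict(Counter)
    for z, label in zip(Z, y):
        table[tuple(z)][label] += 1
    default = Counter(y).most_common(1)[0][0]
    return lambda z: table[tuple(z)].most_common(1)[0][0] if tuple(z) in table else default


def stacking_fit(X, y, level0_fits, level1_fit, n_folds: int = 3) -> Model:
    n = len(X)
    Z = [[None] * len(level0_fits) for _ in range(n)]
    # 1-2. Level-1 training data: out-of-fold predictions of each Level-0 model.
    for f in range(n_folds):
        train = [i for i in range(n) if i % n_folds != f]
        for m, fit in enumerate(level0_fits):
            model = fit([X[i] for i in train], [y[i] for i in train])
            for i in range(f, n, n_folds):  # held-out instances of fold f
                Z[i][m] = model(X[i])
    level1 = level1_fit(Z, y)
    level0 = [fit(X, y) for fit in level0_fits]  # Level-0 models refit on all the data
    # 3. New instance -> Level-0 outputs -> Level-1 prediction.
    return lambda x: level1([m(x) for m in level0])


X = [(0.0, 5.0), (0.1, 1.0), (0.2, 3.0), (0.8, 4.0), (0.9, 2.0), (1.0, 6.0)]
y = [0, 0, 0, 1, 1, 1]
model = stacking_fit(X, y, [make_1nn([0]), make_1nn([1])], fit_lookup)
print(model((0.15, 6.0)), model((0.95, 1.0)))  # 0 1
```

In this toy run the Level-1 table learns to follow the first Level-0 model, the one using the informative attribute, and to ignore the second.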

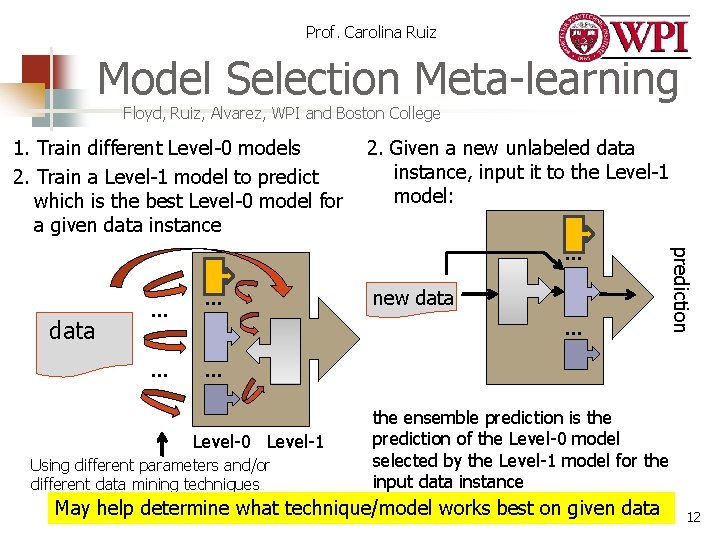

Model Selection Meta-learning
Floyd, Ruiz, Alvarez, WPI and Boston College

1. Train different Level-0 models, using different parameters and/or different data mining techniques.
2. Train a Level-1 model to predict which Level-0 model is best for a given data instance.
3. Given a new unlabeled data instance, input it to the Level-1 model. The ensemble prediction is the prediction of the Level-0 model selected by the Level-1 model for that instance.

May help determine which technique/model works best on the given data.
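A minimal sketch of the selection step, under these assumptions:
- The Level-0 models are already trained and passed in as functions.
- Each training instance is labeled with the index of the first Level-0 model that predicts it correctly (0 if none does).
- The Level-1 model is 1-nearest-neighbour.

The published method may use different targets and learners, and `fit_selector` and the toy rules are hypothetical. For brevity the targets come from in-sample Level-0 predictions; out-of-fold predictions, as in stacking, would usually be preferable.

```python
from typing import Callable, List, Sequence, Tuple

Model = Callable[[Sequence[float]], int]


def fit_selector(X: List[Sequence[float]], y: List[int],
                 level0: List[Model]) -> Tuple[Callable[[Sequence[float]], int], Model]:
    """Return (select, predict): select(x) gives the index of the Level-0 model to use."""
    # Level-1 target: which Level-0 model got each training instance right.
    targets = []
    for x, label in zip(X, y):
        correct = [m for m, model in enumerate(level0) if model(x) == label]
        targets.append(correct[0] if correct else 0)

    def select(x: Sequence[float]) -> int:
        # Level-1 model (assumed 1-NN): copy the target of the closest training instance.
        nearest = min(range(len(X)),
                      key=lambda k: sum((a - b) ** 2 for a, b in zip(X[k], x)))
        return targets[nearest]

    def predict(x: Sequence[float]) -> int:
        return level0[select(x)](x)  # prediction of the selected Level-0 model

    return select, predict


# Toy Level-0 "models" (hypothetical): each is right on a different region of the data.
def rule_a(x):
    return int(x[1] > 0.5)


def rule_b(x):
    return int(x[1] <= 0.5)


X = [(0.1, 0.2), (0.2, 0.9), (0.3, 0.7), (0.8, 0.1), (0.9, 0.8), (0.7, 0.3)]
y = [0, 1, 1, 1, 0, 1]
select, predict = fit_selector(X, y, [rule_a, rule_b])
print(select((0.15, 0.8)), select((0.85, 0.2)), predict((0.85, 0.2)))  # 0 1 1
```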

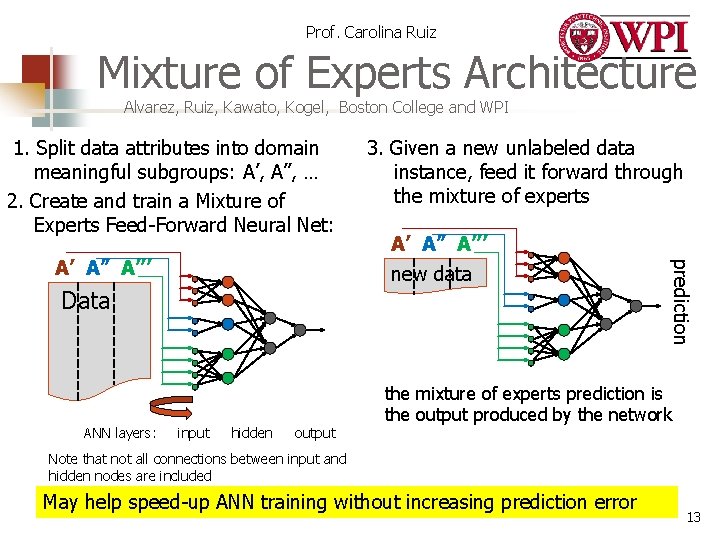

Mixture of Experts Architecture
Alvarez, Ruiz, Kawato, Kogel, Boston College and WPI

1. Split the data attributes into domain-meaningful subgroups: A', A'', A''', …
2. Create and train a Mixture of Experts feed-forward neural net (ANN layers: input, hidden, output) in which each attribute subgroup feeds its own group of hidden nodes. Note that not all connections between input and hidden nodes are included.
3. Given a new unlabeled data instance, feed it forward through the mixture of experts. The prediction is the output produced by the network.

May help speed up ANN training without increasing prediction error.
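The restricted connectivity described above can be sketched as a forward pass. Each attribute subgroup (A', A'', …) is wired only to its own block of hidden nodes, while every hidden node feeds the output. This illustrates the connection pattern only, under these assumptions:
- random weights;
- tanh hidden units;
- a linear output;
- no training loop.

The group sizes and function names are hypothetical.

```python
import math
import random
from typing import List, Sequence


def build_mask(groups: Sequence[Sequence[int]], hidden_per_group: int,
               n_inputs: int) -> List[List[bool]]:
    """mask[h][i] is True when hidden node h is connected to input attribute i."""
    return [[i in group for i in range(n_inputs)]
            for group in groups for _ in range(hidden_per_group)]


def hidden_layer(x: Sequence[float], W_in: List[List[float]],
                 mask: List[List[bool]]) -> List[float]:
    # Each hidden node sums only over the inputs of its own attribute subgroup.
    return [math.tanh(sum(w * xi for w, m, xi in zip(row, mrow, x) if m))
            for row, mrow in zip(W_in, mask)]


def forward(x, W_in, mask, W_out) -> List[float]:
    h = hidden_layer(x, W_in, mask)
    return [sum(w * hj for w, hj in zip(row, h)) for row in W_out]  # fully connected output


rng = random.Random(0)
groups = [[0, 1], [2, 3, 4]]  # A' = attributes 0-1, A'' = attributes 2-4 (assumed)
n_inputs, per_group, n_out = 5, 2, 1
mask = build_mask(groups, per_group, n_inputs)
n_hidden = len(mask)
W_in = [[rng.uniform(-1, 1) for _ in range(n_inputs)] for _ in range(n_hidden)]
W_out = [[rng.uniform(-1, 1) for _ in range(n_hidden)] for _ in range(n_out)]

x = [0.5, -0.2, 0.1, 0.9, -0.4]
print(forward(x, W_in, mask, W_out))
print(sum(map(sum, mask)), "of", n_hidden * n_inputs, "input-hidden connections used")  # 10 of 20
```

Weights at masked positions are allocated but never used. A real implementation would store only the active blocks, and those fewer connections are where a training speed-up could come from.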

Conclusions

Ensemble methods construct and/or combine a collection of predictors with the purpose of improving upon the properties of the individual predictors:
- stabilize models
- reduce prediction error
- aggregate individual predictors that make different errors
- increase resistance to noise
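A worked example makes the "different errors" point concrete, under a strong simplifying assumption. Take three classifiers whose errors are independent, each wrong with probability p = 0.3, and combine them by majority vote. The vote is wrong only when at least two of the three are wrong:

```latex
P(\text{vote wrong}) = \binom{3}{2} p^2 (1-p) + p^3
                     = 3(0.3)^2(0.7) + (0.3)^3
                     = 0.189 + 0.027 = 0.216 < 0.3
```

If all three made exactly the same errors, the vote would be wrong 30% of the time, no better than a single classifier.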

References

- J. F. Elder, G. Ridgeway. "Combining Estimators to Improve Performance." KDD-99 tutorial notes.
- L. Breiman. "Bagging Predictors." Machine Learning, 24(2), 123-140. 1996.
- R. E. Schapire. "The Strength of Weak Learnability." Machine Learning, 5(2), 197-227. 1990.
- Y. Freund, R. Schapire. "Experiments with a New Boosting Algorithm." Proc. of the 13th Intl. Conf. on Machine Learning, 148-156. 1996.
- J. Friedman, T. Hastie, R. Tibshirani. "Additive Logistic Regression: A Statistical View of Boosting." Annals of Statistics. 1998.
- D. H. Wolpert. "Stacked Generalization." Neural Networks, 5(2), 241-259. 1992.
- S. Floyd, C. Ruiz, S. A. Alvarez, J. Tseng, G. Whalen. "Model Selection Meta-Learning for the Prognosis of Pancreatic Cancer." Full paper, Proc. 3rd Intl. Conf. on Health Informatics (HEALTHINF 2010), pp. 29-37. 2010.
- S. A. Alvarez, C. Ruiz, T. Kawato, W. Kogel. "Faster Neural Networks for Combined Collaborative and Content-Based Recommendation." Journal of Computational Methods in Sciences and Engineering (JCMSE), IOS Press, Vol. 11, No. 4, pp. 161-172. 2011.

The End

Questions?

Bagging (Bootstrap Aggregation)

- Model creation: create bootstrap replicates of the dataset and fit a model to each one.
- Prediction: average/vote the predictions of the models.
- Advantages:
  - Stabilizes "unstable" methods.
  - Easy to implement; parallelizable.

Boosting

- Creating the model: construct a sequence of datasets and models such that each dataset in the sequence weights an instance heavily when the previous model misclassified it.
- Prediction: "merge" the models in the sequence.
- Advantages:
  - Improves classification accuracy.
