
- Slides: 56

EngiNet™ WARNING: All rights reserved. No part of this video lecture series may be reproduced in any form or by any electronic or mechanical means, including the use of information storage and retrieval systems, without written approval from the copyright owner. © 1999 The Research Foundation of the State University of New York

Binghamton University EngiNet™ State University of New York

CS 533 Information Retrieval Dr. Michal Cutler Lecture #21 April 19, 1999

Clustering using existing clusters
- Start with a set of clusters
- Compute centroids
- Compare every item to the centroids
- Move each item to its most similar cluster
- Update centroids
- Process ends when all items are in their best cluster
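
The reassignment loop above can be sketched as follows. This is a minimal illustration, assuming items are dense numeric vectors, cosine similarity is the similarity measure, and centroids are recomputed once per round; the names `cosine`, `centroid`, `reassign` and `max_rounds` are hypothetical and not from the lecture.

```python
import math

def cosine(u, v):
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0

def centroid(vectors):
    """Component-wise mean of a non-empty list of vectors."""
    n = len(vectors)
    return [sum(col) / n for col in zip(*vectors)]

def reassign(items, assignment, max_rounds=100):
    """Refine an initial clustering.

    items: list of vectors; assignment: one cluster id per item.
    Stops when no item changes cluster (or after max_rounds, an assumed safety cap).
    """
    assignment = list(assignment)
    for _ in range(max_rounds):
        # Compute one centroid per non-empty cluster.
        members = {}
        for vec, cid in zip(items, assignment):
            members.setdefault(cid, []).append(vec)
        centroids = {cid: centroid(vecs) for cid, vecs in members.items()}
        # Compare every item to the centroids and move it to the most similar one.
        moved = False
        for i, vec in enumerate(items):
            best = max(sorted(centroids), key=lambda cid: cosine(vec, centroids[cid]))
            if best != assignment[i]:
                assignment[i] = best
                moved = True
        if not moved:  # every item is already in its best cluster
            break
    return assignment

items = [[1, 0], [0.9, 0.1], [0, 1], [0.1, 0.9]]
print(reassign(items, [0, 1, 1, 1]))
```

In this toy run the second item starts in the wrong cluster and is moved in the first round; the second round finds nothing to move, so the loop ends.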

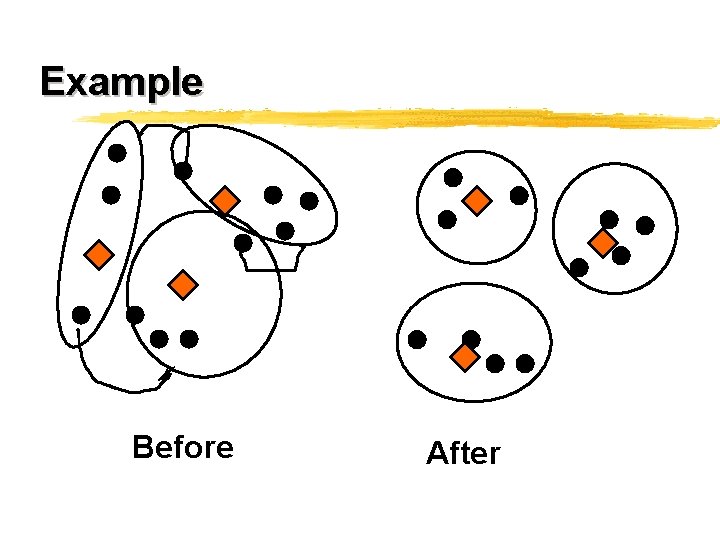

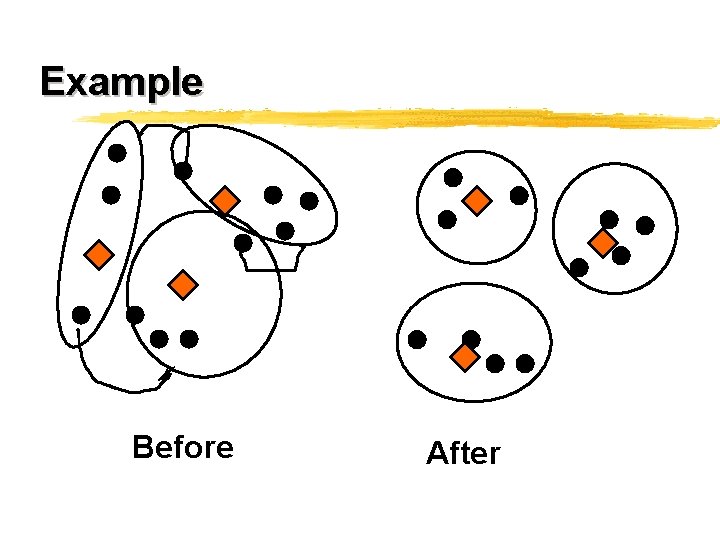

Example (figure): clusters before and after reassignment

Heuristic clustering methods
- Similarity matrix is not generated
- Clusters depend on the order of items
- Inferior clusters, but much faster
- Can be used for incremental clustering

One pass assignments
- Item 1 is placed in a cluster
- Each subsequent item is compared against all clusters (initially against item 1)
  - Placed into an existing cluster if similar enough
  - Otherwise placed into a new cluster

Heuristic clustering methods
- Uses similarity between an item and the centroids of existing clusters
- When an item is added to a cluster, the cluster's centroid is updated
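
A one-pass assignment with centroid updates might look like the sketch below. It assumes dense vectors, cosine similarity, and a fixed similarity `threshold` for "similar enough"; the function names and the threshold value used in the example are illustrative assumptions, not part of the lecture.

```python
import math

def cosine(u, v):
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0

def one_pass(items, threshold):
    """Single-pass clustering. Returns (assignment, centroids)."""
    sums = []        # per-cluster running sum of member vectors
    counts = []      # per-cluster member count
    assignment = []
    for vec in items:
        # Compare the item against the centroid of every existing cluster.
        best, best_sim = None, -1.0
        for cid in range(len(sums)):
            center = [s / counts[cid] for s in sums[cid]]
            sim = cosine(vec, center)
            if sim > best_sim:
                best, best_sim = cid, sim
        if best is not None and best_sim >= threshold:
            # Similar enough: join the cluster, which updates its centroid.
            sums[best] = [s + x for s, x in zip(sums[best], vec)]
            counts[best] += 1
        else:
            # Otherwise the item starts a new cluster.
            sums.append(list(vec))
            counts.append(1)
            best = len(sums) - 1
        assignment.append(best)
    centroids = [[s / n for s in total] for total, n in zip(sums, counts)]
    return assignment, centroids

items = [[1, 0], [0.9, 0.1], [0, 1], [0.1, 0.9]]
labels, centers = one_pass(items, threshold=0.8)
print(labels, centers)
```

Because each item is seen once and never revisited, presenting the same items in a different order can produce different clusters, which is the order dependence noted above.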

Cluster based retrieval
- Given a cluster hierarchy for a collection
- A cluster is selected for retrieval when a query is similar to its centroid
- The search can be done either top down or bottom up

Cluster Retrieval Issues
- A cluster is selected for retrieval when:
  - The similarity between query and centroid is above a threshold
    - Who decides on the threshold?
    - What should it be?
- Instead of a threshold, a user may limit the number retrieved to n

Cluster Retrieval Issues
- Should all the documents in a selected cluster be retrieved?
- If yes, how will they be ranked?
- Should each document in a selected cluster be compared to the query?

Cluster based retrieval
- Advantages
  - Relevant documents which do not contain query terms may be retrieved
  - Retrieval may be fast if only centroids are compared to the query

Cluster based retrieval
- Disadvantages
  - When a whole cluster is returned, precision may be low
  - Clusters may contain relevant documents even when their centroid is not similar to the query

Cluster based retrieval
- Disadvantages
  - When clusters are large, too many documents may be retrieved
  - Comparing each document in a selected cluster to the query is time consuming

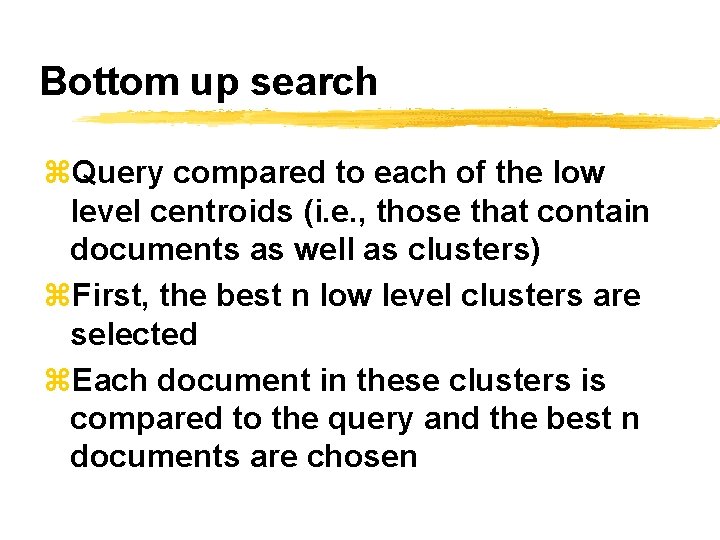

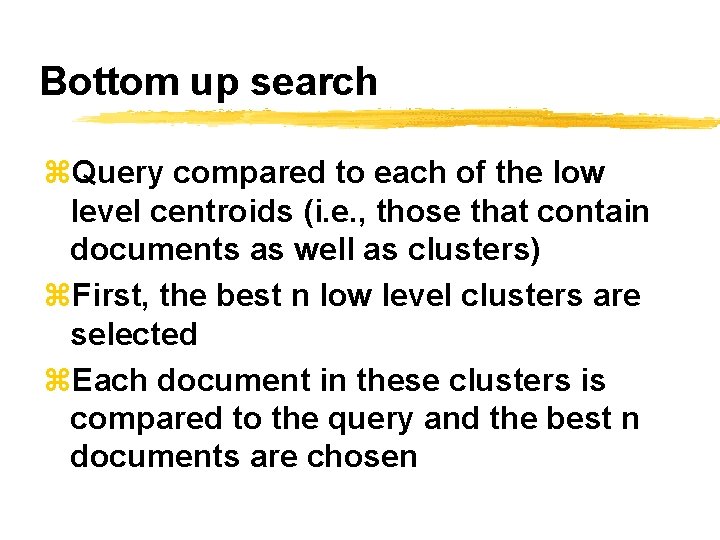

Bottom up search
- Query compared to each of the low level centroids (i.e., those that contain documents as well as clusters)
- First, the best n low level clusters are selected
- Each document in these clusters is compared to the query and the best n documents are chosen
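
The two-stage selection can be sketched as below. The data layout (a dict mapping a cluster id to its centroid and its documents), the `dot` similarity, and the toy vectors are assumptions made for illustration only.

```python
import heapq

def dot(u, v):
    """Inner product, used here as a stand-in similarity measure."""
    return sum(a * b for a, b in zip(u, v))

def bottom_up_search(query, clusters, sim, n):
    """clusters: dict cluster_id -> (centroid, {doc_id: doc_vector}).

    Returns up to n (doc_id, score) pairs, best first.
    """
    # Stage 1: compare the query to every low-level centroid, keep the best n clusters.
    best_clusters = heapq.nlargest(
        n, clusters, key=lambda cid: sim(query, clusters[cid][0]))
    # Stage 2: compare the query to each document in those clusters, keep the best n.
    scored = [(sim(query, vec), doc_id)
              for cid in best_clusters
              for doc_id, vec in clusters[cid][1].items()]
    return [(doc_id, score) for score, doc_id in heapq.nlargest(n, scored)]

clusters = {
    "c1": ([0.9, 0.1], {"A": [1, 0], "B": [0.8, 0.2]}),
    "c2": ([0.5, 0.5], {"C": [0.6, 0.4], "D": [0.4, 0.6]}),
    "c3": ([0.1, 0.9], {"E": [0.95, 0.05]}),
}
print(bottom_up_search([1, 0], clusters, dot, n=2))
```

In this toy data document E is close to the query but sits in a cluster whose centroid is not, so it is never examined. That is the kind of miss listed among the disadvantages of cluster based retrieval.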

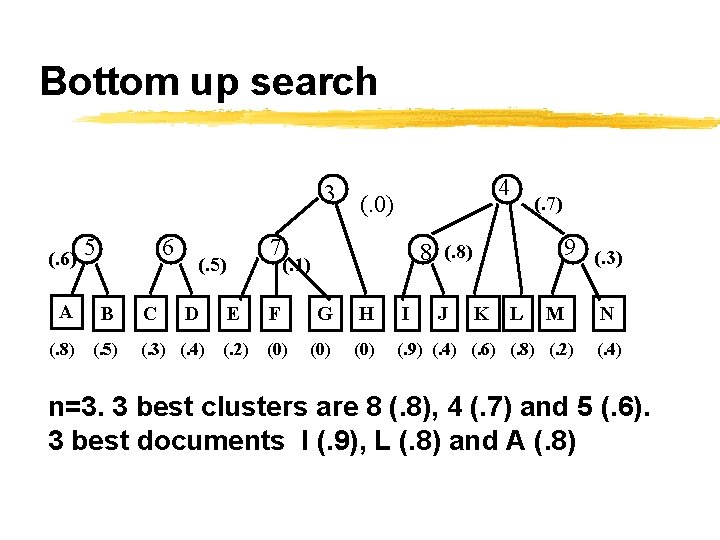

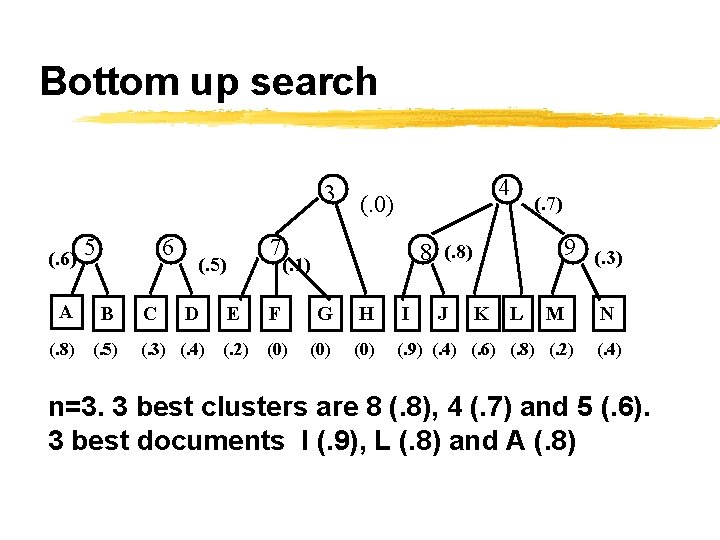

Bottom up search (example figure: cluster tree with query similarities in parentheses, n = 3)
- The 3 best clusters are 8 (.8), 4 (.7) and 5 (.6)
- The 3 best documents are I (.9), L (.8) and A (.8)

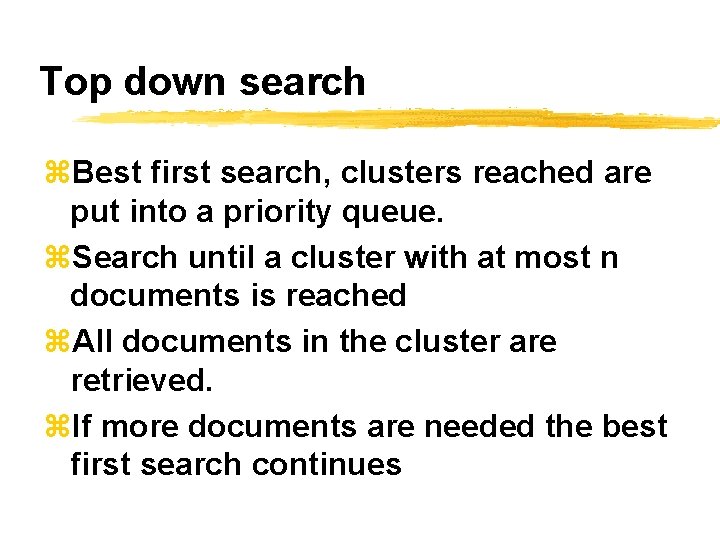

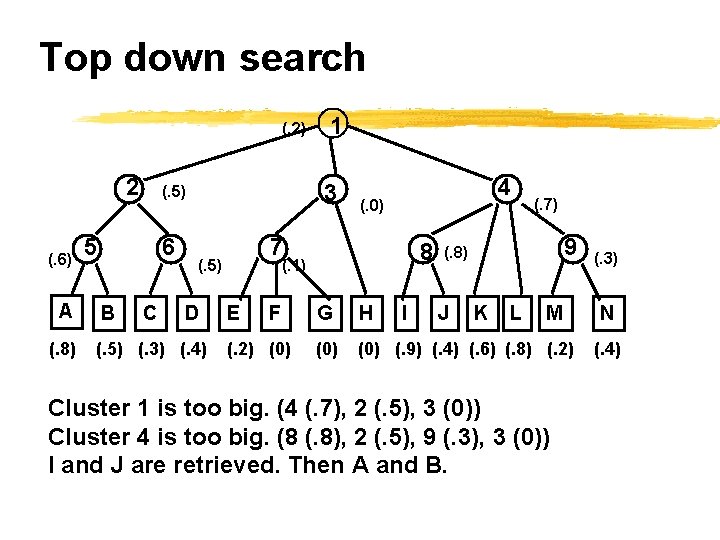

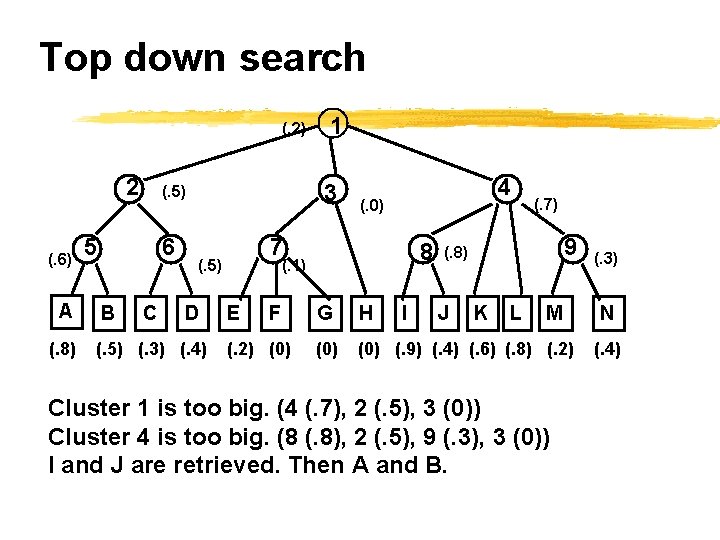

Top down search
- Best first search; clusters reached are put into a priority queue
- Search until a cluster with at most n documents is reached
- All documents in the cluster are retrieved
- If more documents are needed, the best first search continues
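
A best first search over a cluster hierarchy can be sketched with a priority queue as follows. The sketch assumes that query-to-centroid similarities are already computed and stored on each cluster, and that documents are attached only to leaf clusters; the `Cluster` class, the `wanted` parameter and the toy hierarchy are hypothetical (the scores echo the lecture example but are not a reconstruction of its figure).

```python
import heapq
from dataclasses import dataclass, field

@dataclass
class Cluster:
    name: str
    score: float                                   # similarity of query to centroid
    children: list = field(default_factory=list)   # sub-clusters
    docs: list = field(default_factory=list)       # documents (leaf clusters only)

    def all_docs(self):
        """All documents in this cluster and its sub-clusters."""
        found = list(self.docs)
        for child in self.children:
            found.extend(child.all_docs())
        return found

def top_down_search(root, n, wanted):
    """Return document ids; n = largest cluster returned whole, wanted = documents needed."""
    counter = 0  # tie-breaker so the heap never has to compare Cluster objects
    queue = [(-root.score, counter, root)]  # negated score turns the min-heap into best-first
    retrieved = []
    while queue and len(retrieved) < wanted:
        _, _, cluster = heapq.heappop(queue)
        docs = cluster.all_docs()
        if len(docs) <= n or not cluster.children:
            # Small enough (or cannot be split further): retrieve the whole cluster.
            retrieved.extend(docs)
        else:
            # Too big: put its sub-clusters into the priority queue.
            for child in cluster.children:
                counter += 1
                heapq.heappush(queue, (-child.score, counter, child))
    return retrieved

c8 = Cluster("8", 0.8, docs=["I", "J"])
c9 = Cluster("9", 0.3, docs=["K", "L", "M"])
c4 = Cluster("4", 0.7, children=[c8, c9])
c2 = Cluster("2", 0.5, docs=["A", "B"])
c3 = Cluster("3", 0.0, docs=["C", "D", "E"])
root = Cluster("1", 0.5, children=[c4, c2, c3])
print(top_down_search(root, n=2, wanted=4))
```

With `n=2` the root and cluster 4 are too big and get expanded, cluster 8 is returned whole, and the search continues to cluster 2 because more documents are still wanted.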

Top down search (example figure: cluster tree with query similarities in parentheses)
- Cluster 1 is too big. Queue: (4 (.7), 2 (.5), 3 (0))
- Cluster 4 is too big. Queue: (8 (.8), 2 (.5), 9 (.3), 3 (0))
- I and J are retrieved. Then A and B.

Thesaurus
- A general thesaurus
- Domain specific thesauri
- Usage in IR
- Building a thesaurus

A general thesaurus
- Contains synonyms and antonyms
- Different word senses
- Sometimes broader terms
- Many domain specific terms not included (C++, OS/2, RAM, etc.)

A general thesaurus
- Roget http://humanities.uchicago.edu/forms_unrest/ROGET.html
- Word based
- Provides related terms and a broader term

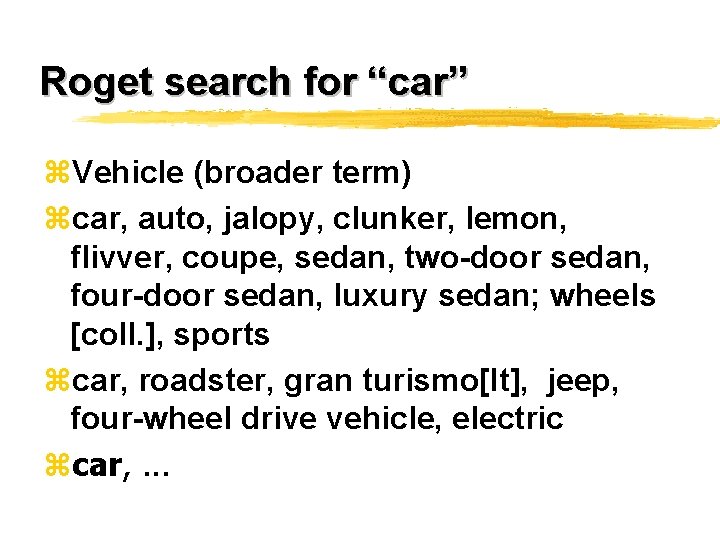

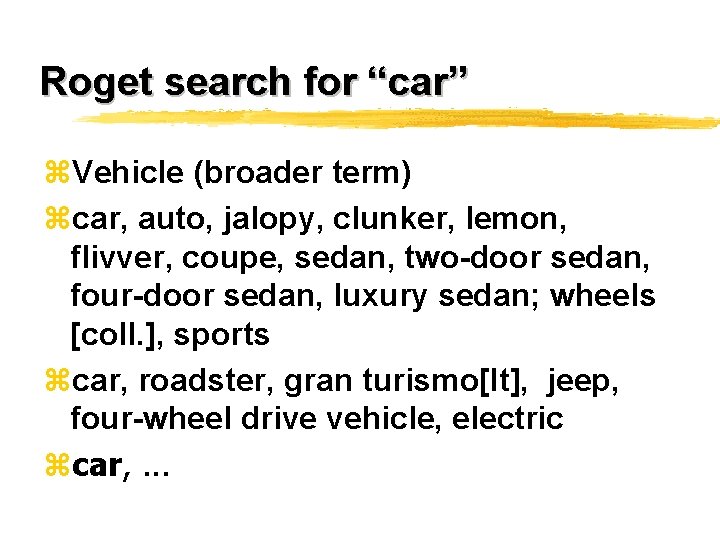

Roget search for “car”
- Vehicle (broader term)
- car, auto, jalopy, clunker, lemon, flivver, coupe, sedan, two-door sedan, four-door sedan, luxury sedan; wheels [coll.], sports car, roadster, gran turismo [It], jeep, four-wheel drive vehicle, electric car, ...

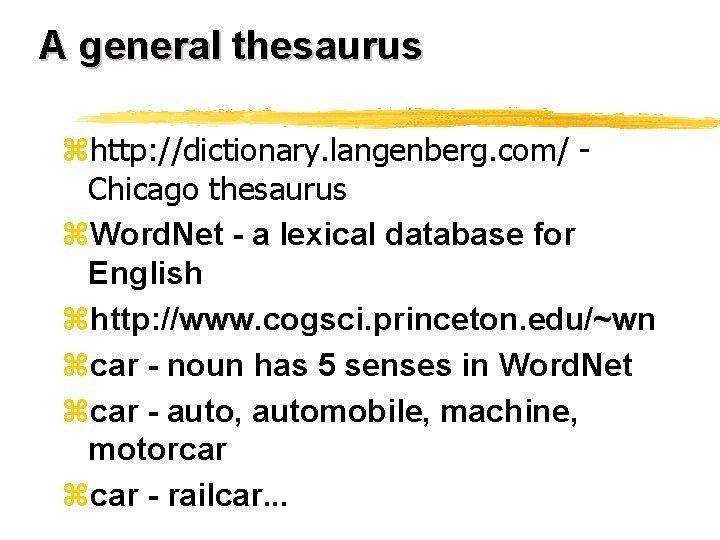

A general thesaurus
- http://dictionary.langenberg.com/ Chicago thesaurus
- WordNet - a lexical database for English
- http://www.cogsci.princeton.edu/~wn
- car - noun has 5 senses in WordNet
  - car - auto, automobile, machine, motorcar
  - car - railcar ...

WordNet Evaluation
- “Natural language processing is essential for dealing efficiently with the large quantities of text now available online: fact extraction and summarization, automated indexing and text categorization, and machine translation.”

WordNet Evaluation
- “Another essential function is helping the user with query formulation through synonym relationships between words and hierarchical and other relationships between concepts. WordNet supports both of these functions and thus deserves careful study by the digital library community.”

Domain specific thesauri
- Keywords are usually phrases
- Medical thesaurus
- Law based thesaurus, etc.

A thesaurus for IR
- Contains relations between concepts such as broader, narrower, related, etc.
- Usually concepts form hierarchies
- Thesaurus can be on-line or off-line
- Library of Congress, for example, provides a thesaurus to determine search subjects and keywords

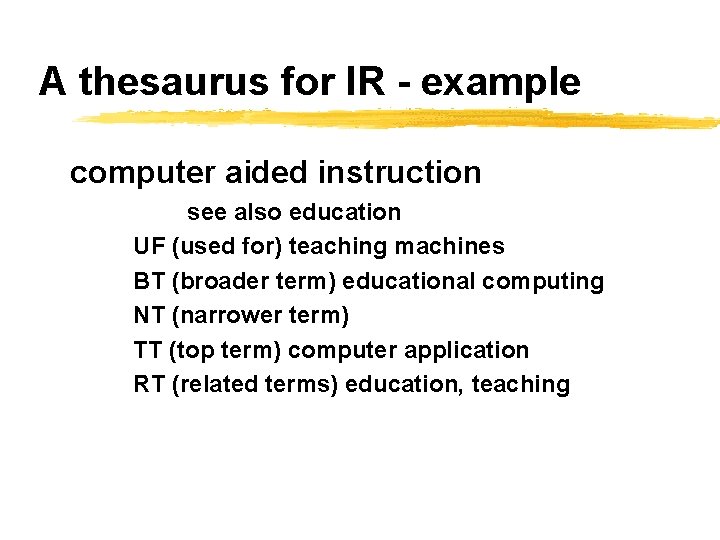

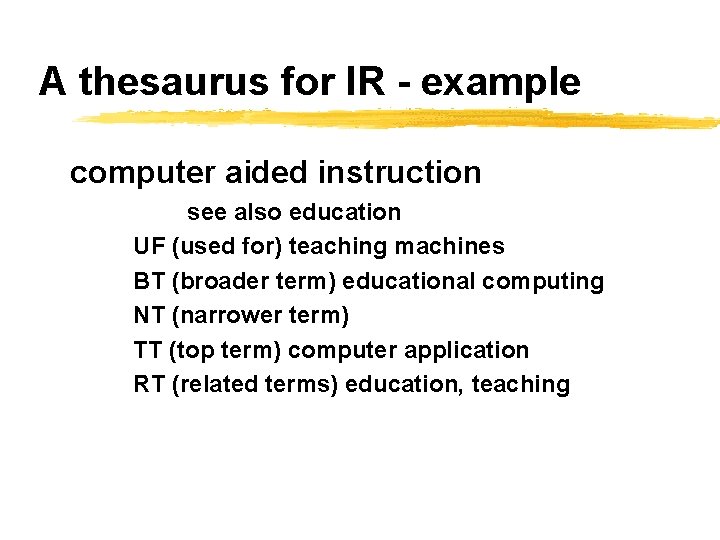

A thesaurus for IR - example
- computer aided instruction
  - see also: education
  - UF (used for): teaching machines
  - BT (broader term): educational computing
  - NT (narrower term):
  - TT (top term): computer application
  - RT (related terms): education, teaching

How it is used
- Manual indexing - term selection
- Automatic indexing - set of synonyms represented by one term
- Query formulation - helps users select query terms (important in controlled vocabulary)

How it is used
- Query expansion - suggests terms to user
- Broaden or narrow a query depending on retrieval results
- Automatic query expansion
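
Thesaurus-driven query expansion can be sketched as below. The dictionary layout and the `expand_query` helper are assumptions for illustration; the single entry reuses the "computer aided instruction" record from the example slide, with its empty NT field left empty.

```python
# One thesaurus record, keyed by the preferred term (layout is an assumption).
THESAURUS = {
    "computer aided instruction": {
        "UF": ["teaching machines"],        # used for
        "BT": ["educational computing"],    # broader term
        "NT": [],                           # narrower term (none listed)
        "TT": ["computer application"],     # top term
        "RT": ["education", "teaching"],    # related terms
    },
}

def expand_query(terms, thesaurus, relations=("UF", "RT")):
    """Return the query terms plus thesaurus terms reached through the given relations."""
    expanded = list(terms)
    for term in terms:
        entry = thesaurus.get(term, {})
        for rel in relations:
            for candidate in entry.get(rel, []):
                if candidate not in expanded:
                    expanded.append(candidate)
    return expanded

query = ["computer aided instruction"]
print(expand_query(query, THESAURUS))                     # synonyms and related terms
print(expand_query(query, THESAURUS, relations=("BT",)))  # broaden the query
```

Choosing which relations to follow is what lets the same routine broaden a query (BT), narrow it (NT), or only suggest candidates that the user then accepts or rejects.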

Building a thesaurus
- Manual
- Automatic
  - word based
  - phrase based

Manual generation
- Domain experts
- Information experts
- Keywords selected
- Hierarchy built
- Expensive, time consuming and subjective

Word based thesauri
- Salton
- Pedersen

Corpus-based word-based thesaurus (S)
- (Salton 1971)
- Main idea: when $t_i$ and $t_j$ often co-occur in the same documents, they are related
- The terms “trial”, “defendant”, “prosecution” and “judge” will tend to co-occur in the same documents

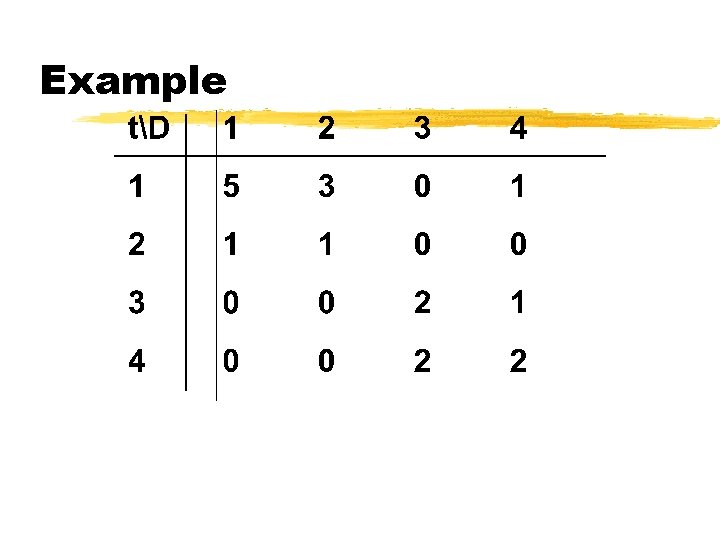

Corpus-based word-based thesaurus (S)
- Uses the term/document weight matrix W
- $w_{i,k}$ is the weight assigned to term i in document k
- Computes a matrix T
- The element in the ith row and jth column of T is the “relation” of term i to term j

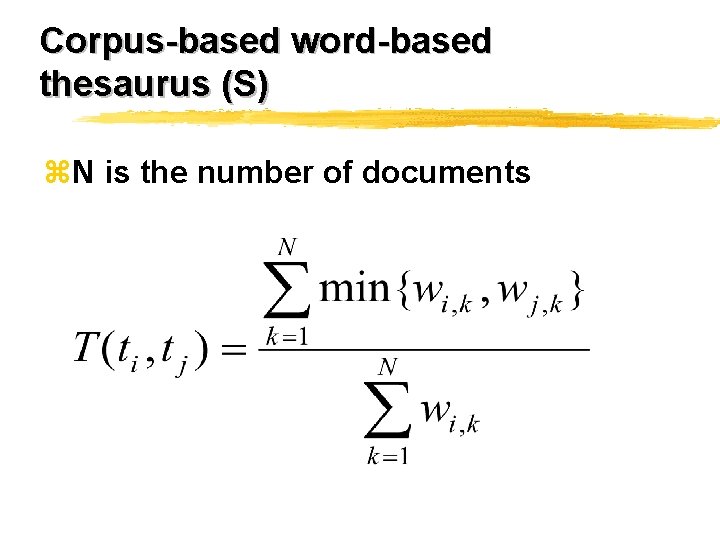

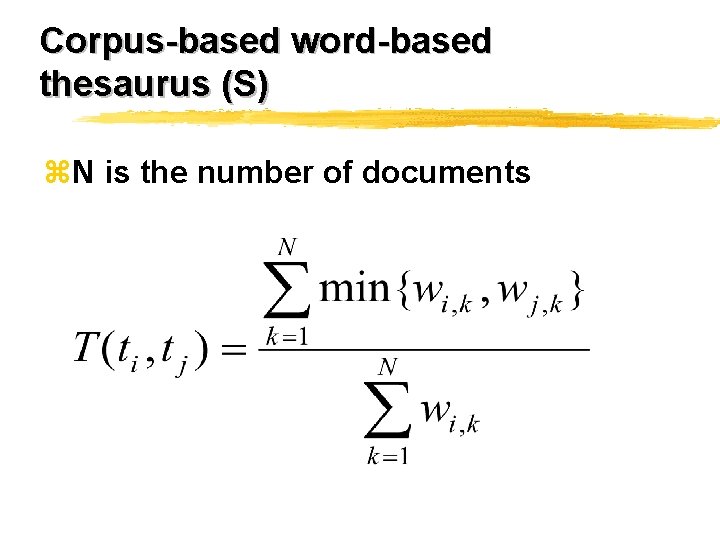

Corpus-based word-based thesaurus (S)
- N is the number of documents

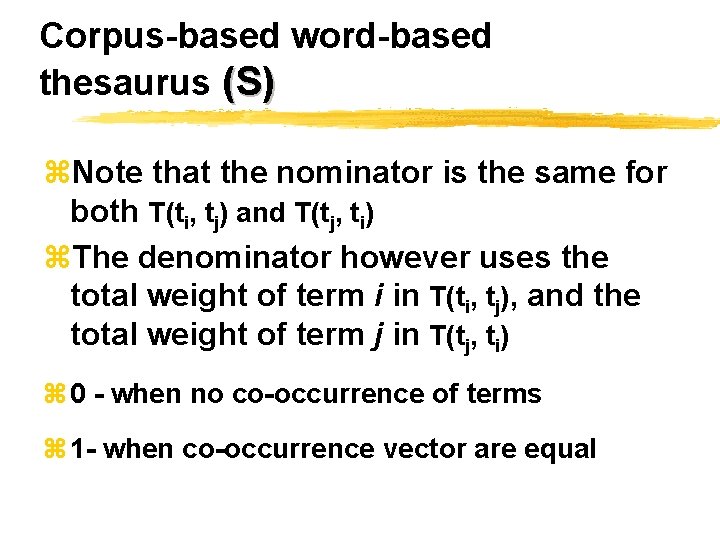

Corpus-based word-based thesaurus (S)
- Note that the numerator is the same for both $T(t_i, t_j)$ and $T(t_j, t_i)$
- The denominator, however, uses the total weight of term i in $T(t_i, t_j)$ and the total weight of term j in $T(t_j, t_i)$
- The value is 0 when the terms never co-occur
- The value is 1 when the co-occurrence vectors are equal

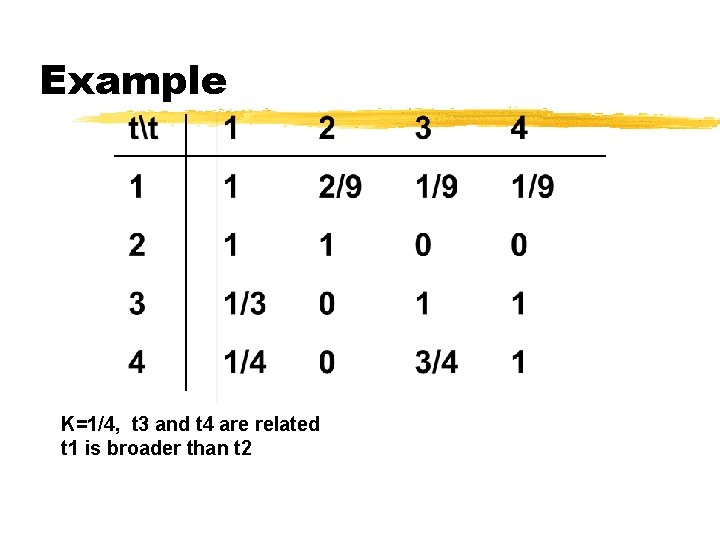

Corpus-based word-based thesaurus (S)
- The values in the matrix are used to distinguish between
  - broad,
  - narrow and
  - related terms

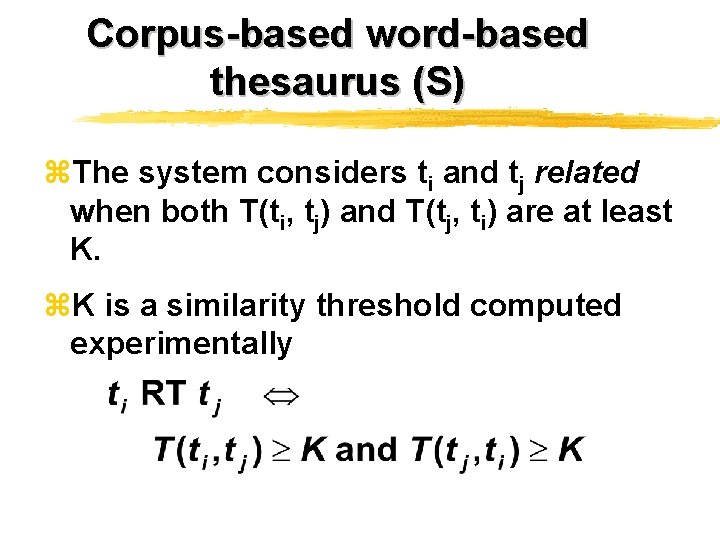

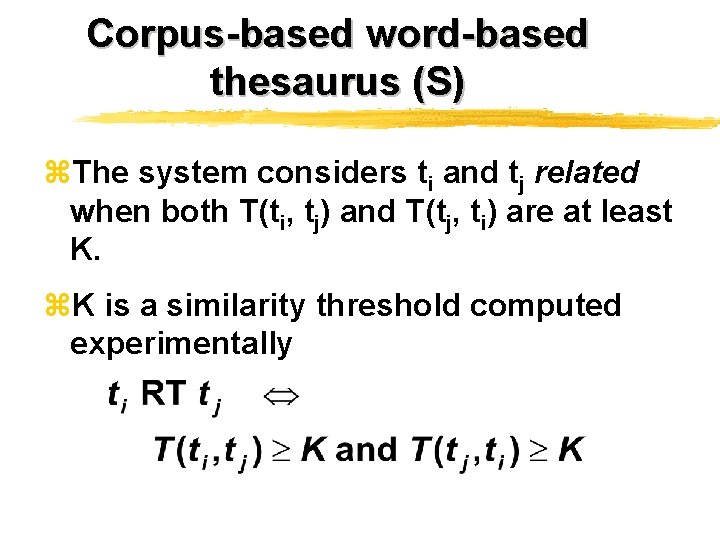

Corpus-based word-based thesaurus (S)
- The system considers $t_i$ and $t_j$ related when both $T(t_i, t_j)$ and $T(t_j, t_i)$ are at least K
- K is a similarity threshold determined experimentally

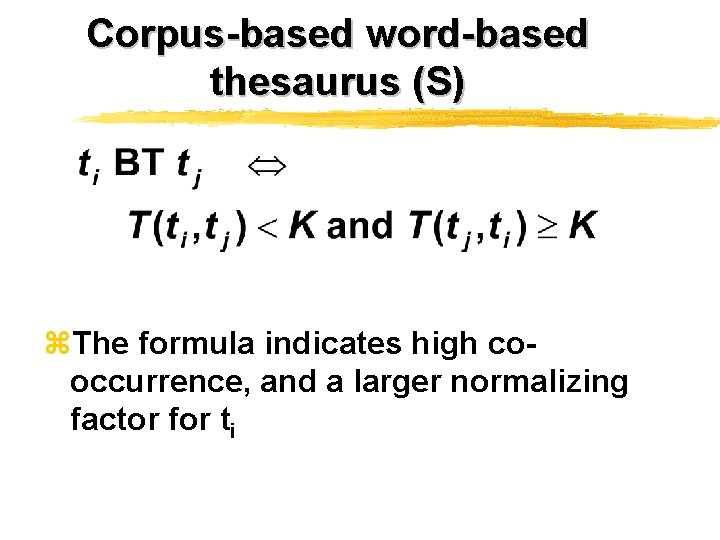

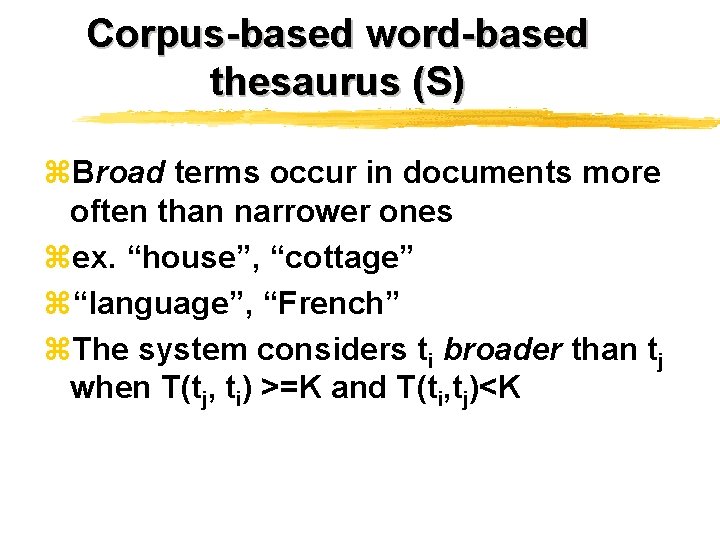

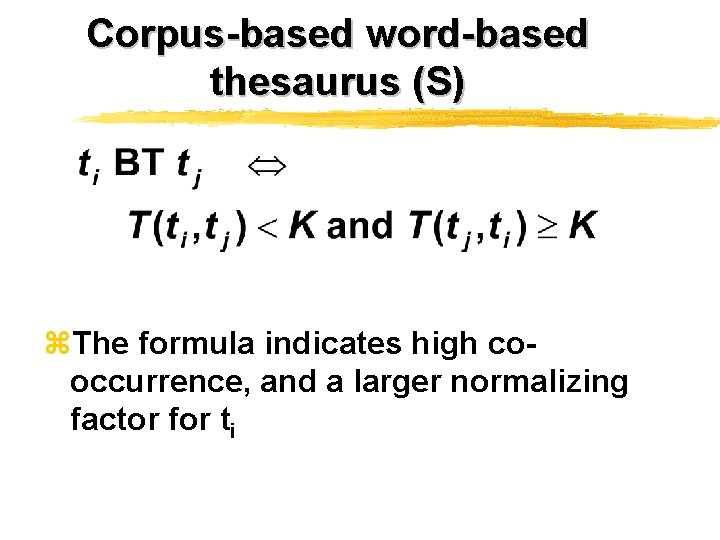

Corpus-based word-based thesaurus (S)
- Broad terms occur in documents more often than narrower ones
  - e.g. “house” vs. “cottage”, “language” vs. “French”
- The system considers $t_i$ broader than $t_j$ when $T(t_j, t_i) \ge K$ and $T(t_i, t_j) < K$
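
The related/broader tests can be sketched in code. The slide formula for T is not reproduced in this text, so the sketch assumes one form that satisfies the stated properties (shared numerator, denominator equal to the total weight of the first term, value 0 with no co-occurrence, value 1 for equal vectors): $T(t_i, t_j) = \sum_{k=1}^{N} \min(w_{i,k}, w_{j,k}) / \sum_{k=1}^{N} w_{i,k}$. This assumed form, the helper names and the toy weight matrix are illustrative only and may differ from the formula on the original slide.

```python
def relation_matrix(W):
    """W[i][k] = weight of term i in document k.

    ASSUMED form: T[i][j] = sum_k min(W[i][k], W[j][k]) / sum_k W[i][k].
    """
    totals = [sum(row) for row in W]
    m = len(W)
    T = [[0.0] * m for _ in range(m)]
    for i in range(m):
        for j in range(m):
            if totals[i] > 0:
                overlap = sum(min(a, b) for a, b in zip(W[i], W[j]))
                T[i][j] = overlap / totals[i]
    return T

def classify(T, i, j, K):
    """Apply the threshold rules to the term pair (i, j)."""
    if T[i][j] >= K and T[j][i] >= K:
        return "related"
    if T[j][i] >= K and T[i][j] < K:
        return "i broader than j"
    if T[i][j] >= K and T[j][i] < K:
        return "j broader than i"
    return "unrelated"

# Made-up binary weights: 4 terms (rows) over 6 documents (columns).
W = [
    [1, 1, 1, 1, 1, 1],   # term 0 occurs everywhere (a broad term)
    [1, 0, 0, 0, 0, 0],   # term 1 occurs only where term 0 does (narrower)
    [0, 1, 1, 0, 0, 0],   # terms 2 and 3 occur in exactly the same documents
    [0, 1, 1, 0, 0, 0],
]
T = relation_matrix(W)
print(classify(T, 0, 1, K=0.25), "|", classify(T, 2, 3, K=0.25))
```

Under this assumed formula the asymmetry comes entirely from the denominator: the broad term has the larger total weight, so its row entry falls below K while the narrow term's entry stays above it.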

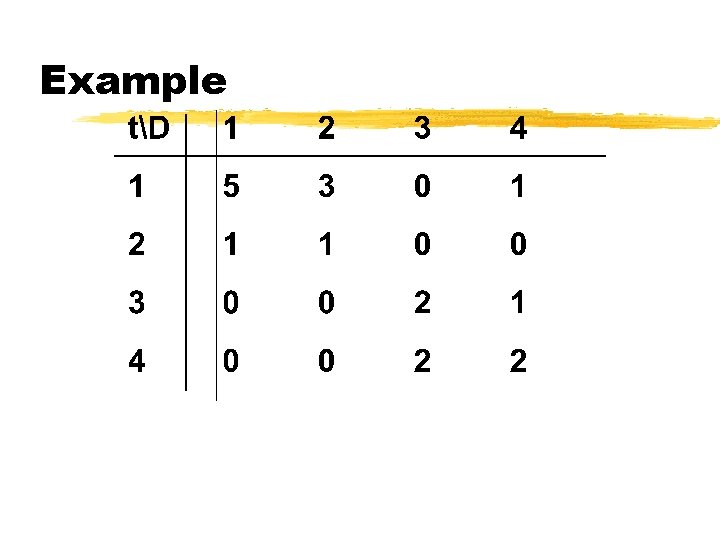

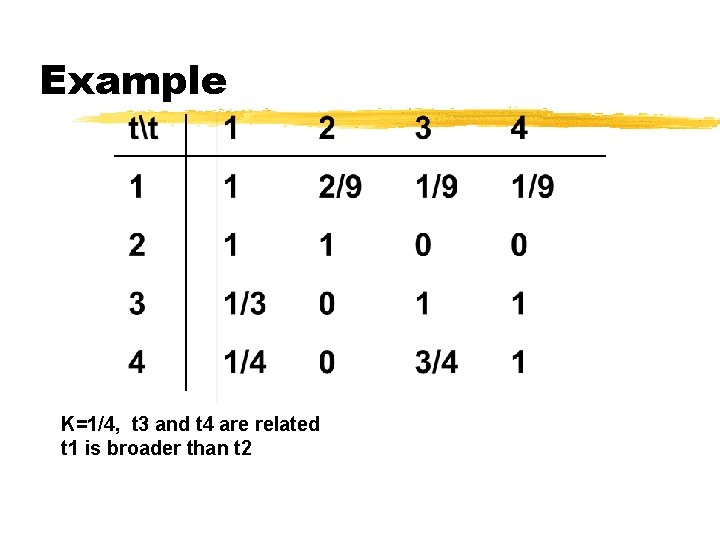

Example

Example
- K = 1/4
- $t_3$ and $t_4$ are related
- $t_1$ is broader than $t_2$

Corpus-based word-based thesaurus (S)
- The formula indicates high co-occurrence, and a larger normalizing factor for $t_i$

Drawback (S)
- Terms found related by this method may not be semantically related

Full text collections
- Co-occurrence may not be meaningful in large documents which cover many topics
- Use co-occurrence in a document “window” instead of the whole document

Full text collections
- The idea is that co-occurrences of terms should be in a small part of the text
- A “window” may be a few paragraphs or a “chunk” of q terms

Co-occurrence-based Thesaurus (P)
- (Schütze and Pedersen)
- Another idea: words with similar meanings co-occur with similar neighbors
- “litigation” and “lawsuit” share neighbors such as “court”, “judge”, “witness” and “proceedings”

Co-occurrence-based Thesaurus (P)
- Matrix C is computed
- $C_{i,j}$ = number of times words i and j co-occur in the collection in windows of size k
- Cosine similarity between columns i and j of C is used to compute the “semantic” similarity between terms i and j
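
The matrix C and the column cosine can be sketched as follows. The sketch assumes a single token stream, interprets "window of size k" as "within k positions of each other", and stores C sparsely as nested dictionaries; these choices, the function names and the toy token list are assumptions for illustration.

```python
import math
from collections import defaultdict

def cooccurrence(tokens, k):
    """C[a][b] = number of times words a and b occur within k positions of each other."""
    C = defaultdict(lambda: defaultdict(int))
    for i, a in enumerate(tokens):
        for b in tokens[i + 1 : i + 1 + k]:
            if a != b:
                C[a][b] += 1
                C[b][a] += 1   # C is symmetric, so rows and columns coincide
    return C

def cosine(u, v):
    """Cosine similarity of two sparse vectors stored as dicts."""
    dot = sum(w * v.get(t, 0) for t, w in u.items())
    norm = (math.sqrt(sum(w * w for w in u.values()))
            * math.sqrt(sum(w * w for w in v.values())))
    return dot / norm if norm else 0.0

tokens = ["lawsuit", "court", "judge", "pad1", "pad2",
          "litigation", "court", "judge", "pad3", "pad4",
          "banana", "fruit", "peel"]
C = cooccurrence(tokens, k=2)
print(cosine(C["lawsuit"], C["litigation"]), cosine(C["lawsuit"], C["banana"]))
```

In the toy stream "lawsuit" and "litigation" never fall in the same window, yet their vectors are similar because both have "court" and "judge" as neighbors. This is what separates the approach from direct document co-occurrence.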

Co-occurrence-based thesaurus (P)
- Similarity will be high when two terms frequently share neighbors
- It will be small when they seldom share neighbors
- For 400,000 terms, there are $16 \times 10^{10}$ pairs for which vector similarity must be computed
- (Cosine requires an inner product and normalization)

Co-occurrence thesaurus (P)
- PROBLEMS
  - matrix size and
  - computation time

Co-occurrence thesaurus (P)
- SOLUTION
  - Use only some of the documents, or
  - use singular value decomposition (SVD) on C and create a much smaller matrix C'
  - Singular value decomposition is also time consuming
  - Now compute semantic similarities

Drawbacks of word-based thesaurus
- Expansion with corpus-based “related” terms may make queries noisy or change their meaning, and may do more harm than good
- Example: adding the words “house” and “selling”, which are related to “agent”, to the query “intelligent agents”

Drawbacks
- Adding words with more than one meaning is also a problem
- Example: adding “bank” to “mortgage interest”

Results
- Word-based corpus-based thesaurus has not proved helpful for retrieval from small collections with fairly long queries
- Better results with large collections