Energy Efficient Prefetching and Caching, Athanasios E. Papathanasiou


Energy Efficient Prefetching and Caching. Athanasios E. Papathanasiou and Michael L. Scott. Proc. USENIX '04 Annual Technical Conf., June 2004.

Introduction
- Traditional prefetching and caching:
  - increase throughput
  - decrease latency
  - ignore energy efficiency

Introduction
- Problem with traditional algorithms: the cost of disk standby mode
  - Transition from standby to active:
    - Time: at least 3 seconds
    - Spin-up power: 1.5-2× that of active mode

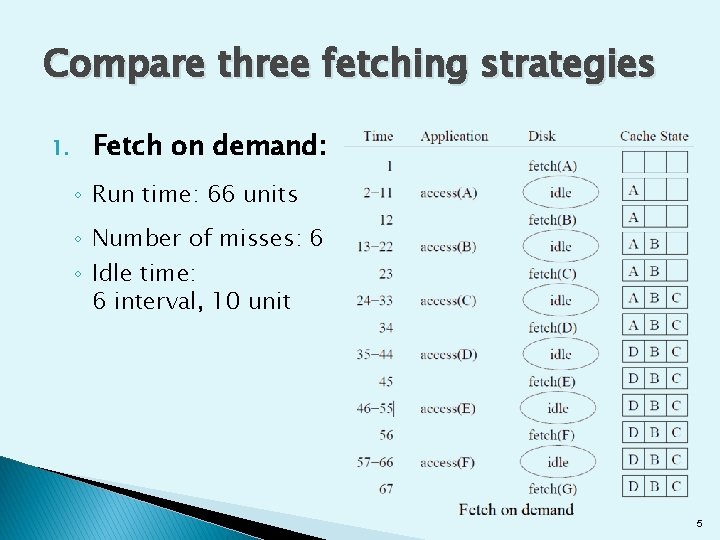

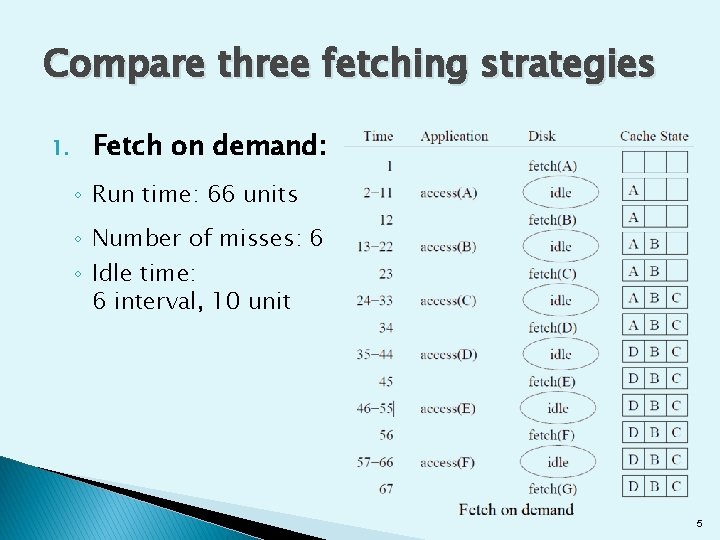

Comparing three fetching strategies
1. Fetch on demand
2. Traditional prefetching
3. Energy-conscious prefetching

Comparing three fetching strategies
1. Fetch on demand:
   - Run time: 66 units
   - Number of misses: 6
   - Idle time: 6 intervals of 10 units each

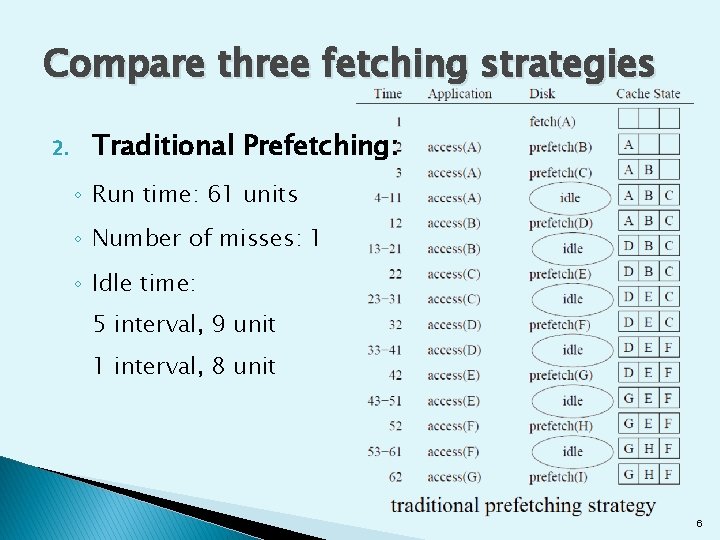

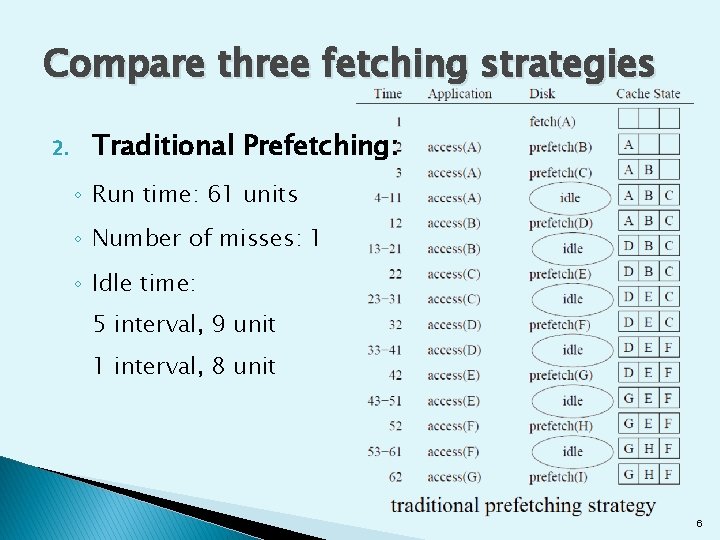

Comparing three fetching strategies
2. Traditional prefetching:
   - Run time: 61 units
   - Number of misses: 1
   - Idle time: 5 intervals of 9 units and 1 interval of 8 units

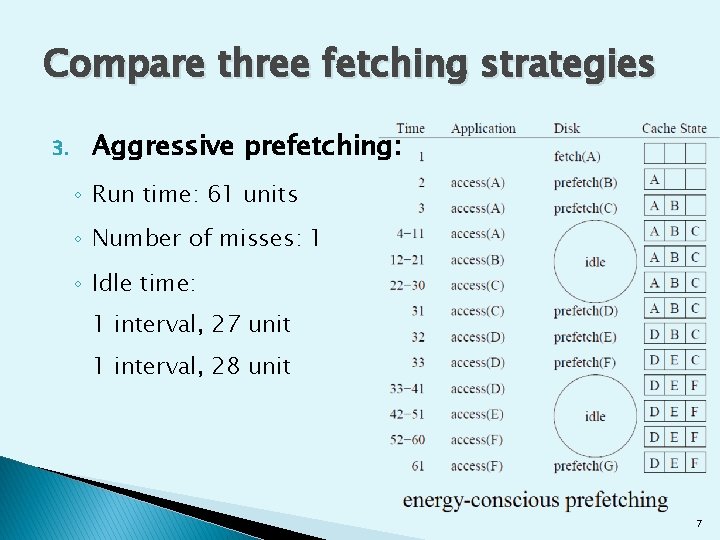

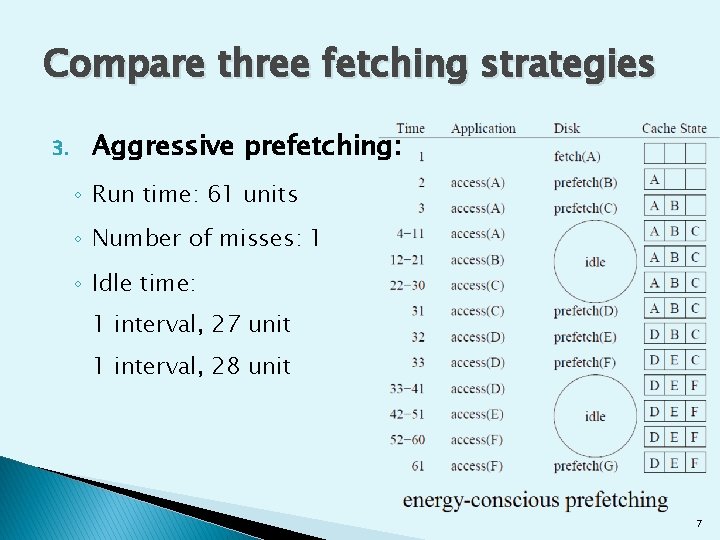

Comparing three fetching strategies
3. Energy-conscious (aggressive) prefetching:
   - Run time: 61 units
   - Number of misses: 1
   - Idle time: 1 interval of 27 units and 1 interval of 28 units

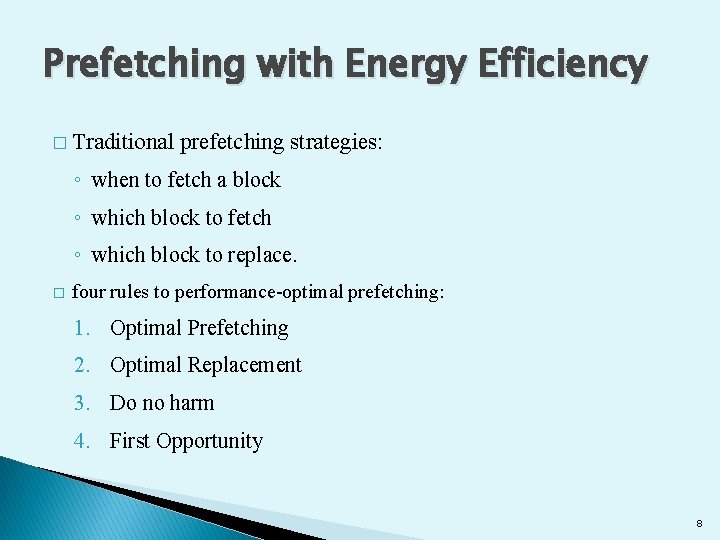

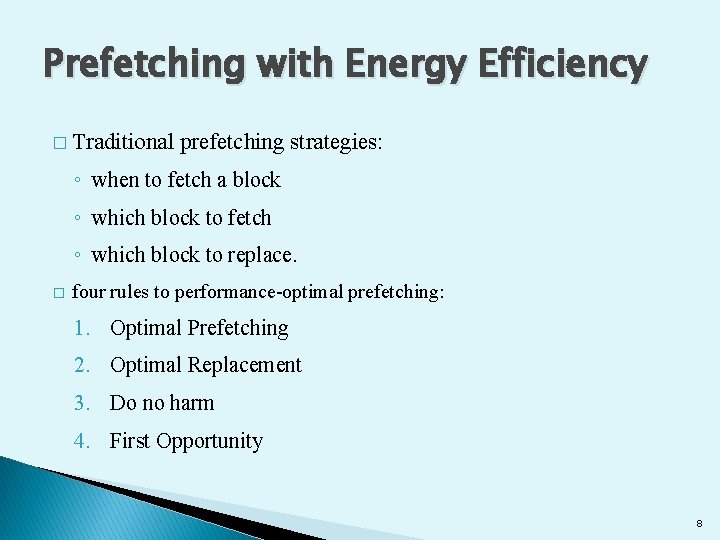
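The idle intervals listed for the three strategies can be compared directly. The short Python sketch below totals them and, assuming a hypothetical break-even time of 15 units (not a figure from the slides), counts how much idle time falls in intervals long enough to justify spinning the disk down.

```python
# Idle intervals as (count, length in time units), taken from the slides.
STRATEGIES = {
    "fetch on demand": [(6, 10)],
    "traditional prefetching": [(5, 9), (1, 8)],
    "aggressive prefetching": [(1, 27), (1, 28)],
}

def summarize(intervals, breakeven):
    """Return (total idle time, longest interval, idle time spent in
    intervals longer than `breakeven`)."""
    lengths = [length for count, length in intervals for _ in range(count)]
    # Only intervals longer than the break-even time can save energy in standby.
    usable = sum(length for length in lengths if length > breakeven)
    return sum(lengths), max(lengths), usable

BREAKEVEN = 15  # hypothetical break-even time, in the same time units

for name, intervals in STRATEGIES.items():
    total, longest, usable = summarize(intervals, BREAKEVEN)
    print(f"{name}: total={total} longest={longest} usable={usable}")
```

With these numbers, fetch on demand has the most total idle time (60 units), but only the aggressive strategy produces intervals longer than the assumed break-even time, so all 55 of its idle units could be spent in standby.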

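Prefetching with Energy Efficiency
- Traditional prefetching strategies decide:
  - when to fetch a block
  - which block to fetch
  - which block to replace
- Four rules for performance-optimal prefetching:
  1. Optimal Prefetching: every prefetch brings in the next block in the reference stream that is not in the cache.
  2. Optimal Replacement: every prefetch discards the block whose next reference is furthest in the future.
  3. Do no harm: never discard block A to prefetch block B when A will be referenced before B.
  4. First Opportunity: never perform a prefetch-and-replace operation that could have been performed earlier.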

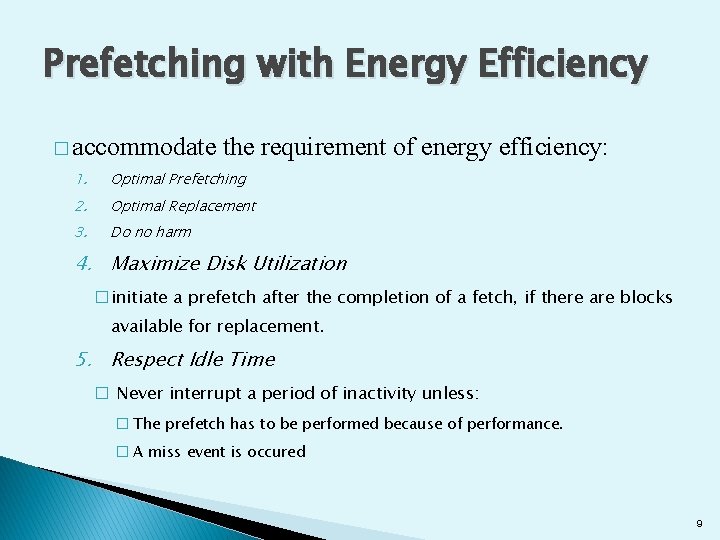

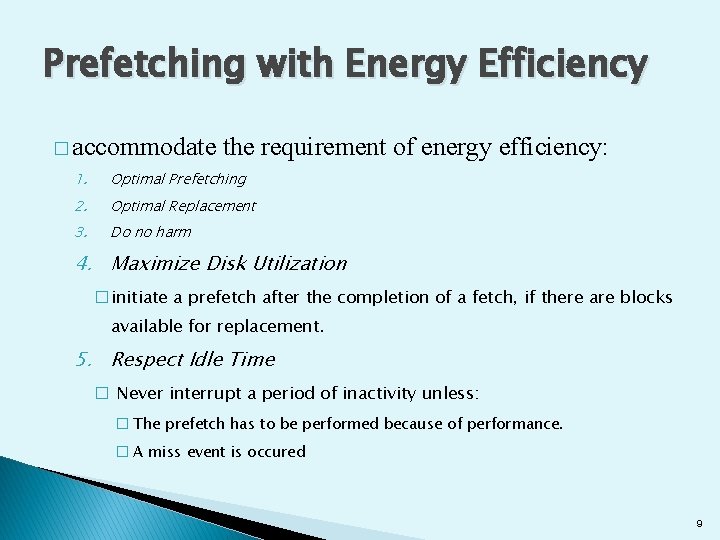
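The first three performance-optimal rules can be sketched for the idealized case where the future reference stream is fully known and the cache is full. This is an illustration under those assumptions, not the paper's implementation; the timing rule (First Opportunity) is not modeled.

```python
def next_missing_block(future, cache):
    """Optimal Prefetching: the next block in the reference stream
    that is not already cached (None if every block is cached)."""
    for block in future:
        if block not in cache:
            return block
    return None

def next_use(block, future):
    """Position of the next reference to `block`, or infinity if never used again."""
    try:
        return future.index(block)
    except ValueError:
        return float("inf")

def victim(future, cache):
    """Optimal Replacement: the cached block whose next reference is furthest away."""
    return max(cache, key=lambda b: next_use(b, future))

def should_prefetch(future, cache):
    """Return (block to fetch, block to evict), or None when the
    Do-no-harm rule forbids the swap or nothing needs fetching."""
    fetch = next_missing_block(future, cache)
    if fetch is None:
        return None
    evict = victim(future, cache)
    # Do no harm: never evict a block needed before the one being prefetched.
    if next_use(evict, future) < next_use(fetch, future):
        return None
    return fetch, evict

future = ["a", "b", "c", "a", "d"]     # known future reference stream
cache = {"a", "b", "x"}                # full cache
print(should_prefetch(future, cache))  # ('c', 'x')
```

Here `x` is never referenced again, so evicting it to bring in `c` satisfies all three rules.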

Prefetching with Energy Efficiency
- Rules adapted to the requirement of energy efficiency:
  1. Optimal Prefetching
  2. Optimal Replacement
  3. Do no harm
  4. Maximize Disk Utilization
     - Initiate a prefetch after the completion of a fetch, if there are blocks available for replacement.
  5. Respect Idle Time
     - Never interrupt a period of inactivity unless:
       - the prefetch must be performed to preserve performance, or
       - a miss occurs.
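Rules 4 and 5 together amount to a decision about whether to issue a prefetch right now. A minimal sketch, assuming the decision is re-evaluated whenever a fetch completes or the disk state changes; `DiskState` and its `deadline_near` flag are hypothetical names standing in for "the prefetch must be performed to preserve performance".

```python
from dataclasses import dataclass

@dataclass
class DiskState:
    active: bool             # the disk is spinning and serving requests
    replaceable_blocks: int  # cached blocks that may be evicted for a prefetch
    miss_pending: bool       # a demand miss is waiting for the disk
    deadline_near: bool      # hypothetical: waiting longer would stall the application

def should_issue_prefetch(s: DiskState) -> bool:
    if s.replaceable_blocks == 0:
        return False  # no room: nothing can be prefetched either way
    if s.active:
        # Rule 4 (maximize disk utilization): the disk is already spinning,
        # so keep it busy while there are blocks available for replacement.
        return True
    # Rule 5 (respect idle time): do not wake an idle disk unless a miss
    # forces it or the prefetch is needed to preserve performance.
    return s.miss_pending or s.deadline_near
```

The asymmetry is the point: an active disk is worked as hard as memory allows, while an idle disk is left alone unless there is no alternative.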

Prefetching with Energy Efficiency
- Problem: a miss while the disk is idle forces a spin-up.
- Solution: fetch much more data.
  - Prefetch enough blocks to reduce misses during an idle interval.
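How many blocks are "enough" follows from simple arithmetic: to keep the disk idle for a target period, the cache must hold everything the application will read in that period. The sketch below assumes a steady consumption rate; the function names and example figures are illustrative, not taken from the paper.

```python
import math

def prefetch_depth(consumption_rate, target_idle, block_size, free_memory):
    """Blocks needed so the application runs `target_idle` seconds without
    a miss, capped by the memory available for prefetching.

    consumption_rate: bytes per second the application reads (assumed steady)
    """
    needed = math.ceil(consumption_rate * target_idle / block_size)
    return min(needed, free_memory // block_size)

def idle_time_covered(depth, consumption_rate, block_size):
    """Seconds of application progress that `depth` prefetched blocks cover."""
    return depth * block_size / consumption_rate

# A 64 KiB/s reader with 4 KiB blocks, aiming for a 60 s idle period:
depth = prefetch_depth(64 * 1024, 60, 4096, 2 * 1024 * 1024)
print(depth, idle_time_covered(depth, 64 * 1024, 4096))  # 512 32.0
```

With only 2 MiB available, the cap wins and the disk can stay idle for 32 s rather than the desired 60 s, which is why the amount of memory reserved for prefetching matters.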

Design of Energy-Aware Prefetching
1. Deciding when to prefetch
2. Deciding what to prefetch
3. Deciding what to replace

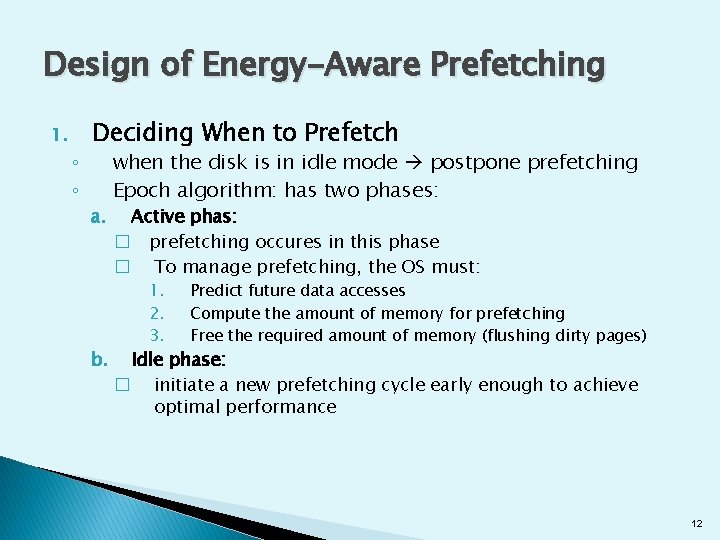

Design of Energy-Aware Prefetching
1. Deciding when to prefetch
   - When the disk is in idle mode, postpone prefetching.
   - Epoch algorithm, with two phases:
     a. Active phase:
        - Prefetching occurs in this phase.
        - To manage prefetching, the OS must:
          1. predict future data accesses,
          2. compute the amount of memory for prefetching,
          3. free the required amount of memory (flushing dirty pages).
     b. Idle phase:
        - Initiate a new prefetching cycle early enough to achieve optimal performance.

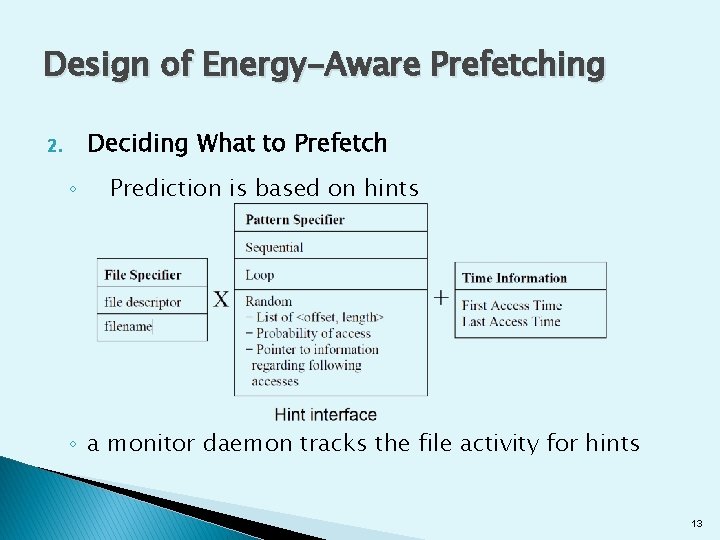

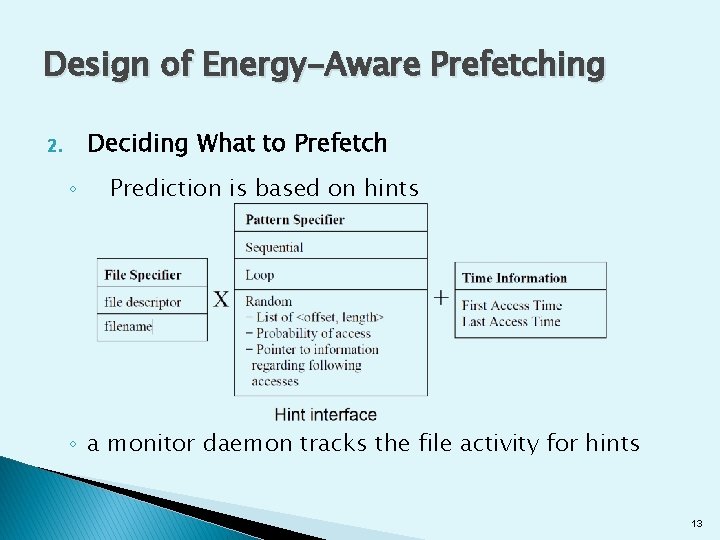
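The epoch algorithm alternates between its two phases. A minimal state-transition sketch, assuming the OS can estimate how long the prefetched data will last (`time_left`) and how long a new active phase takes to deliver data (`refill_time`); both are hypothetical parameters.

```python
from enum import Enum, auto

class Phase(Enum):
    ACTIVE = auto()
    IDLE = auto()

def next_phase(phase, prefetch_done, miss, time_left, refill_time):
    """One transition of a two-phase epoch loop (sketch).

    time_left:   predicted seconds until the prefetched data is consumed
    refill_time: hypothetical estimate of spin-up plus prefetch duration
    """
    if phase is Phase.ACTIVE:
        # Stay active until this cycle's work (predict accesses, size the
        # prefetch, free memory, issue the fetches) is finished.
        return Phase.IDLE if prefetch_done else Phase.ACTIVE
    # Idle phase: begin a new cycle on a miss, or early enough that the
    # data arrives before the application runs out.
    if miss or time_left <= refill_time:
        return Phase.ACTIVE
    return Phase.IDLE
```

Starting the next active phase `refill_time` seconds before the data runs out is what lets the disk sleep without the application ever stalling.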

Design of Energy-Aware Prefetching
2. Deciding what to prefetch
   - Prediction is based on hints.
   - A monitor daemon tracks file activity to generate hints.

Design of Energy-Aware Prefetching
3. Deciding what to replace
   - Parameters that determine the probability of a wrong eviction:
     1. the reserved amount of memory
     2. prefetching or future writes
   - Miss types:
     1. Eviction miss (suggests that the prefetching depth should decrease)
     2. Prefetch miss (suggests that the prefetching depth should increase)
     3. Compulsory miss
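The three miss types translate into a feedback loop on the prefetching depth. The sketch below assumes an eviction miss is a miss on a recently evicted page, a prefetch miss is a miss on a page that was predicted but not yet fetched, and a compulsory miss is a miss on a page that was never predicted; the additive step and bounds are hypothetical tuning values.

```python
from enum import Enum

class Miss(Enum):
    EVICTION = "eviction"
    PREFETCH = "prefetch"
    COMPULSORY = "compulsory"

def classify_miss(page, recently_evicted, predicted):
    if page in recently_evicted:
        return Miss.EVICTION    # page was cached but evicted before its use
    if page in predicted:
        return Miss.PREFETCH    # page was predicted but not fetched in time
    return Miss.COMPULSORY      # page was never predicted

def adjust_depth(depth, miss, step=64, lo=64, hi=65536):
    """Shrink depth on eviction misses, grow it on prefetch misses."""
    if miss is Miss.EVICTION:
        return max(lo, depth - step)
    if miss is Miss.PREFETCH:
        return min(hi, depth + step)
    return depth  # compulsory misses say nothing about the depth
```

An eviction miss means prefetching pushed out data that was still needed (too deep); a prefetch miss means the prefetched window ran out too early (too shallow).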

Implementation
- Epoch: the epoch prefetching algorithm is implemented in the Linux kernel, version 2.4.20.
1. Hinted files: disclosed by the monitor daemon or by the application.
   - The kernel automatically detects sequential accesses.
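Sequential-access detection can be illustrated with a simple heuristic: treat a file as sequential once several consecutive reads each start where the previous one ended. This is a hypothetical sketch of the idea, not the Linux 2.4.20 read-ahead code; the `threshold` parameter is an assumption.

```python
class SequentialDetector:
    """Flags a file as sequential after `threshold` consecutive reads
    that begin exactly where the previous read ended."""

    def __init__(self, threshold=3):
        self.threshold = threshold
        self.next_offset = {}  # file -> offset where the last read ended
        self.run = {}          # file -> length of the current sequential run

    def record_read(self, file, offset, length):
        if self.next_offset.get(file) == offset:
            self.run[file] = self.run.get(file, 0) + 1
        else:
            self.run[file] = 0  # a jump breaks the sequential run
        self.next_offset[file] = offset + length
        return self.run[file] >= self.threshold
```

Once a file is flagged, its upcoming blocks can be treated as hints in the same way as those disclosed by the monitor daemon or the application.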

Implementation
2. Prefetch thread
   - Problem: lack of coordination among requests generated by different applications.
   - Solution: a centralized entity responsible for generating prefetching requests for all running applications.
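A centralized prefetch thread can gather every application's requests into a single ordered batch, so the disk serves them in one burst instead of being woken separately by each application. In this sketch each request is a hypothetical `(expected_access_time, block)` pair, and blocks requested by more than one application are fetched once.

```python
import heapq

def merge_requests(per_app):
    """Merge per-application request lists into one batch ordered by
    expected access time, dropping duplicate blocks."""
    merged = heapq.merge(*(sorted(reqs) for reqs in per_app.values()))
    seen, batch = set(), []
    for t, block in merged:
        if block not in seen:
            seen.add(block)
            batch.append((t, block))
    return batch

requests = {
    "player": [(5, "a1"), (1, "a0")],
    "editor": [(2, "b0"), (5, "a1")],
}
print(merge_requests(requests))  # [(1, 'a0'), (2, 'b0'), (5, 'a1')]
```

Ordering by expected access time means that if memory runs out, the blocks dropped from the batch are those needed furthest in the future.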

Implementation
3. Prefetch cache
   - Each page in the prefetch cache has a timestamp indicating when it is expected to be accessed.
   - A page is moved to the standard LRU list when:
     1. the page is referenced, or
     2. its timestamp is exceeded.
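The two rules for leaving the prefetch cache map onto a small data structure. A sketch, assuming page identifiers are hashable and that expired pages join the most-recent end of the LRU list (the slides do not say where they are placed).

```python
from collections import OrderedDict

class PrefetchCache:
    def __init__(self):
        self.pages = {}           # prefetched page -> expected access time
        self.lru = OrderedDict()  # standard LRU list, least recent first

    def add(self, page, expected_time):
        self.pages[page] = expected_time

    def reference(self, page):
        # Rule 1: a referenced page leaves the prefetch cache for the LRU list.
        self.pages.pop(page, None)
        self.lru[page] = True
        self.lru.move_to_end(page)

    def expire(self, now):
        # Rule 2: pages whose expected access time has passed move to the LRU list.
        for page in [p for p, t in self.pages.items() if t < now]:
            del self.pages[page]
            self.lru[page] = True
```

Keeping prefetched pages out of the LRU list until they are used protects them from being evicted by ordinary LRU replacement before their predicted access.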

Implementation
4. Eviction cache
   - A data structure that keeps track of evicted pages.
   - It retains the metadata of recently evicted pages.
   - It can be used to estimate a suitable prefetching depth.
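An eviction cache needs only page identifiers and a bound on how many it remembers. In the sketch below, `evictions_since` is a hypothetical signal for the depth estimate: on an eviction miss, it counts how many pages were evicted after the missed one, a rough measure of how far prefetching overshot.

```python
from collections import OrderedDict

class EvictionCache:
    """Bounded record of recently evicted pages (metadata only, no data)."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.entries = OrderedDict()  # page -> time of eviction, oldest first

    def record_eviction(self, page, now):
        self.entries[page] = now
        self.entries.move_to_end(page)
        if len(self.entries) > self.capacity:
            self.entries.popitem(last=False)  # forget the oldest eviction

    def was_evicted(self, page):
        return page in self.entries

    def evictions_since(self, page):
        """Number of pages evicted after `page`, or None if not tracked."""
        if page not in self.entries:
            return None
        keys = list(self.entries)
        return len(keys) - 1 - keys.index(page)
```

Because only metadata is kept, the structure can track many more pages than the cache itself at little memory cost.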

Implementation
5. Handling write activity
   - Original Linux kernel: the update daemon runs every 5 seconds.
   - Current implementation: flushes all dirty buffers once per minute.
   - Write-behind of dirty buffers belonging to a file can be postponed until:
     1. the file is closed, or
     2. the process that opened the file exits.
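The write-back policy can be expressed as a predicate over each dirty buffer. A sketch, assuming a hypothetical per-buffer `deferrable` flag marks buffers whose write-behind may wait for file close or process exit; all other buffers are written by the once-per-minute flush.

```python
from dataclasses import dataclass

FLUSH_INTERVAL = 60.0  # seconds: flush dirty buffers once per minute (stock Linux: 5 s)

@dataclass(frozen=True)
class DirtyBuffer:
    file: str
    pid: int          # process that opened the file
    deferrable: bool  # hypothetical: write-behind may wait for close/exit

def due_for_writeback(buf, now, last_flush, open_files, live_pids):
    if buf.deferrable:
        # Postponed write-behind: wait until the file is closed or the
        # process that opened it exits.
        return buf.file not in open_files or buf.pid not in live_pids
    # Everything else waits for the periodic flush.
    return now - last_flush >= FLUSH_INTERVAL
```

Stretching the flush interval from 5 to 60 seconds, and deferring some writes entirely, removes most of the small periodic writes that would otherwise break up long idle periods.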

Implementation
6. Power management policy
   - The prefetch thread predicts the length of the idle phase.
   - If the predicted length exceeds the Standby break-even time, the disk is put into the Standby state.
   - The power management algorithm monitors prediction accuracy.
     - If it repeatedly mispredicts the length of the idle phase, the power management policy ignores the prediction.

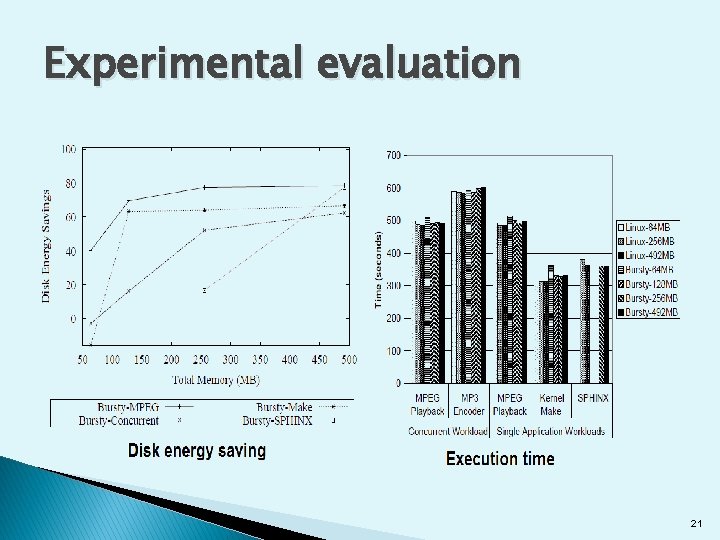

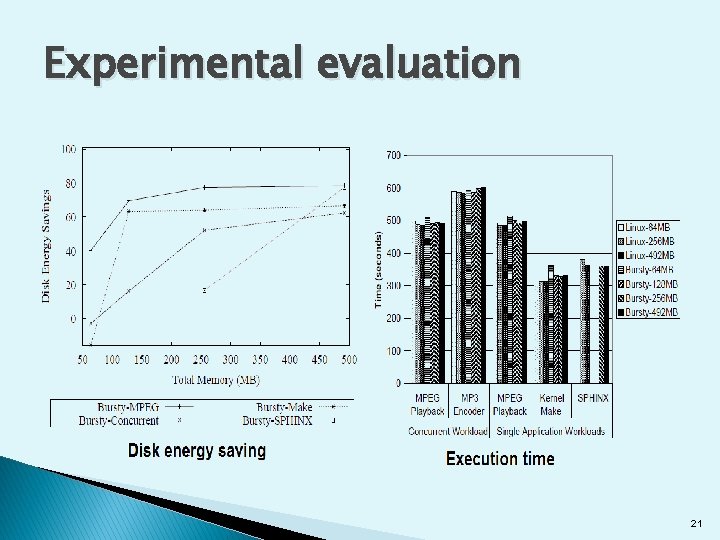
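The Standby break-even time and the misprediction safeguard can both be sketched. The break-even formula uses a simplified energy model (constant idle and standby power, a fixed transition energy and duration), and all parameter values, including `max_mispredictions`, are hypothetical rather than measurements from the paper.

```python
def breakeven_time(p_idle, p_standby, e_transition, t_transition):
    """Shortest idle period for which spinning down saves energy.

    Staying idle for T seconds costs p_idle * T. Spinning down and back
    up costs e_transition plus p_standby for the remaining T - t_transition
    seconds. Setting the two equal and solving for T gives the result.
    """
    return (e_transition - p_standby * t_transition) / (p_idle - p_standby)

class StandbyPolicy:
    def __init__(self, breakeven, max_mispredictions=3):
        self.breakeven = breakeven
        self.max_mispredictions = max_mispredictions
        self.consecutive_misses = 0

    def should_spin_down(self, predicted_idle):
        if self.consecutive_misses >= self.max_mispredictions:
            return False  # prediction not trusted; defer to a fallback policy (not shown)
        return predicted_idle > self.breakeven

    def record_outcome(self, predicted_idle, actual_idle):
        # A misprediction is one that led to the wrong spin-down decision.
        if (predicted_idle > self.breakeven) != (actual_idle > self.breakeven):
            self.consecutive_misses += 1
        else:
            self.consecutive_misses = 0

# Hypothetical disk: 2.0 W idle, 0.2 W standby, 9.8 J and 4 s per down/up cycle.
print(breakeven_time(2.0, 0.2, 9.8, 4.0))  # about 5.0 seconds
```

Continuing to record outcomes while predictions are ignored lets the policy resume trusting the prefetch thread once its predictions become accurate again.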

Experimental evaluation
