End to End Scientific Data Management Framework for Petascale Science

- Slides: 48

End to End Scientific Data Management Framework for Petascale Science

ESMF 9/23/2008

Scott Klasky, Jay Lofstead, Mladen Vouk (ORNL, Georgia Tech, NCSU)

ESMF/V3: klasky@ornl.gov, lofstead@cc.gatech.edu, vouk@ncsu.edu

Managed by UT-Battelle for the Department of Energy

Outline

- EFFIS (Klasky)
- ADIOS
  - ADIOS Overview (Klasky)
  - ADIOS Advanced Topics (Lofstead)
- Workflow (Vouk)
- Dashboard (Vouk)
- Conclusions (Klasky)

Supercomputers creating a hurricane of data

- Some simulations are starting to produce 100 TB/day on the 270 TF Cray XT at ORNL.
- The old approach of running now and looking at results later has problems.
  - Data will eventually be archived on tape.
- Many files from one run, shared among multiple users, create a data management headache.
- Data must be tracked across multiple systems.
- Extracting information from files needs to be easy.
  - Example: finding the min/max of 100 GB arrays needs to be almost instant.

Vision

- Problem: managing the data from a petascale simulation, debugging the simulation, and extracting the science involves:
  - Tracking the codes: simulation and analysis.
  - Tracking the input files/parameters.
  - Tracking the output files, from the simulation and then from the analysis programs.
  - Tracking the machines and environment the codes ran on.
  - Gluing everything together.
  - Visualizing and analyzing the results without requiring users to know all of the file names.
  - Fast I/O which can be easily tracked.

Vision (continued)

- Workflow automation to handle all of the mundane tasks.
- Analyzing the results without knowing all of the file locations/names.
- Moving data from the simulation side to remote locations without knowledge of filenames/locations.
- Monitoring results in real time.
- Requirements:
  - Technologies should be integrated and able to talk to one another easily.
  - The system should scale across I/O, workflow, analysis, visualization, and data management.

Outline

- EFFIS
- ADIOS
  - ADIOS Overview
  - BP format, and compatibility with HDF5/netCDF
- Workflow
- Dashboard
- Conclusions

ADIOS: Motivation

- "Those fine fort.* files!"
- Multiple HPC architectures
  - BlueGene, Cray, IB-based clusters
- Multiple parallel filesystems
  - Lustre, PVFS2, GPFS, Panasas, pNFS
- Many different APIs
  - MPI-IO, POSIX, HDF5, netCDF
  - GTC (fusion) has changed IO routines 8 times so far, based on performance when moving to different platforms.
- Different IO patterns
  - Restarts, analysis, diagnostics
  - Different combinations provide different levels of IO performance
- Compensate for inefficiencies in the current IO infrastructures to improve overall performance

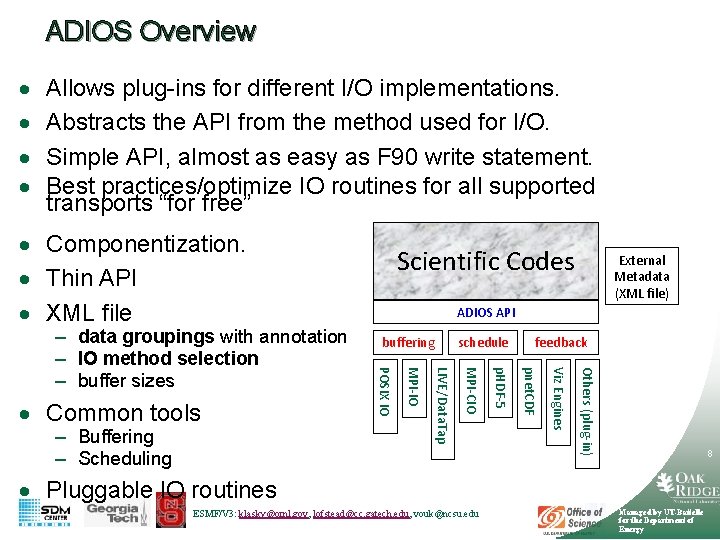

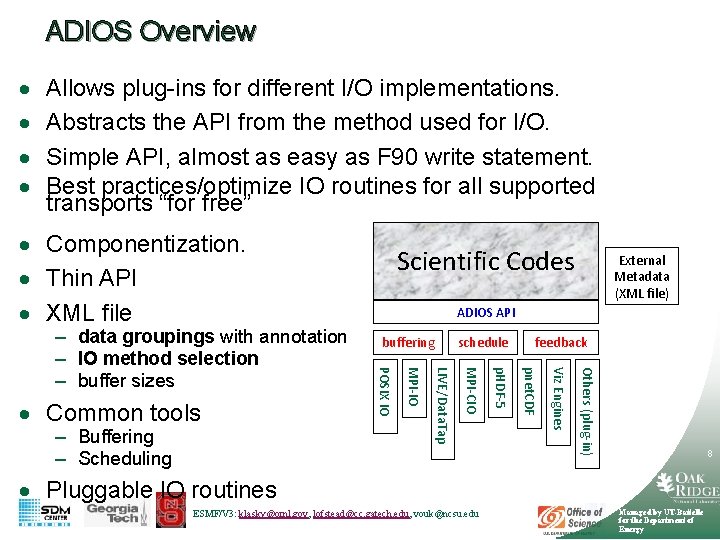

ADIOS Overview

- Allows plug-ins for different I/O implementations.
- Abstracts the API from the method used for I/O.
- Simple API, almost as easy as an F90 write statement.
- Best practices/optimized IO routines for all supported transports "for free".
- Componentization.
- Thin API.
- XML file
  - data groupings with annotation
  - IO method selection
  - buffer sizes
- Common tools
  - Buffering
  - Scheduling
- Pluggable IO routines

Diagram: Scientific Codes call the ADIOS API (buffering, schedule, feedback), configured by External Metadata (XML file); pluggable methods are POSIX IO, MPI-IO, MPI-CIO, LIVE/DataTap, pHDF-5, pnetCDF, Viz Engines, and Others (plug-in).

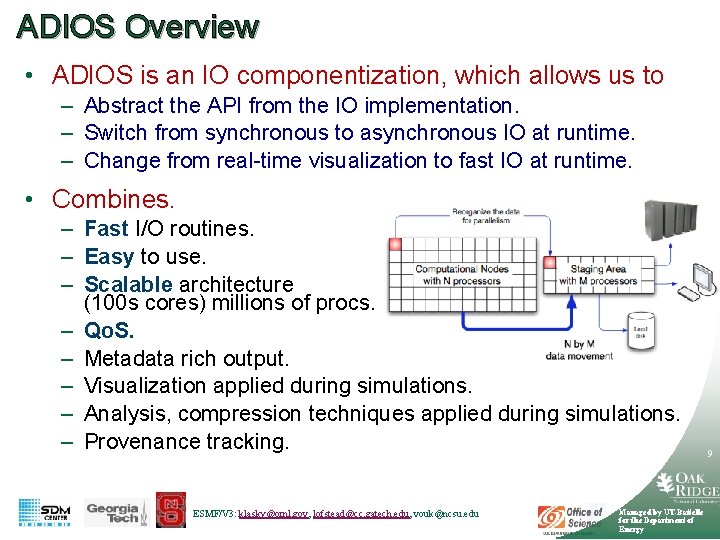

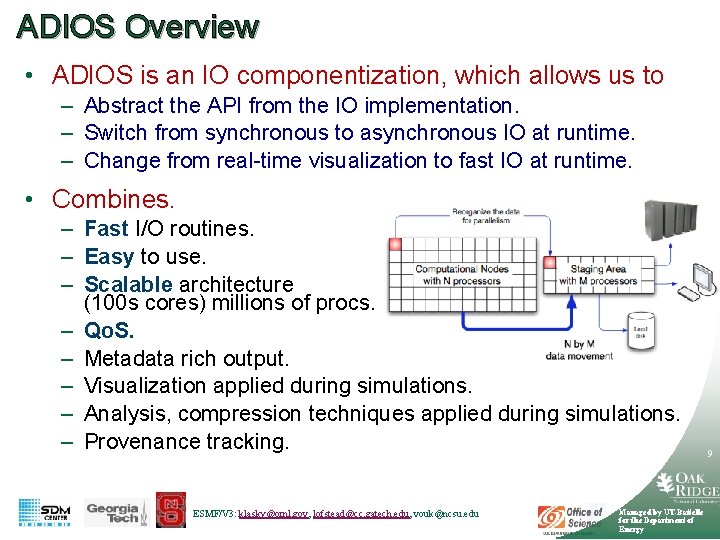

ADIOS Overview

- ADIOS is an IO componentization, which allows us to
  - Abstract the API from the IO implementation.
  - Switch from synchronous to asynchronous IO at runtime.
  - Change from real-time visualization to fast IO at runtime.
- Combines
  - Fast I/O routines.
  - Ease of use.
  - Scalable architecture (100s of cores to millions of procs).
  - QoS.
  - Metadata-rich output.
  - Visualization applied during simulations.
  - Analysis and compression techniques applied during simulations.
  - Provenance tracking.

ADIOS Philosophy (End User)

- Simple API very similar to standard Fortran or C POSIX IO calls.
  - As close to identical as possible for the C and Fortran APIs
  - open, read/write, close is the core
  - set_path, end_iteration, begin/end_computation, init/finalize are the auxiliaries
- No changes in the API for different transport methods.
- Metadata and configuration defined in an external XML file parsed once on startup.
  - Describe the various IO groupings, including attributes and hierarchical path structures for elements, as an adios-group
  - Define the transport method used for each adios-group and give parameters for communication/writing/reading
  - Change on a per-element basis what is written
  - Change on a per-adios-group basis how the IO is handled

Design Goals

- ADIOS Fortran and C based API almost as simple as standard POSIX IO
- External configuration to describe metadata and control IO settings
- Take advantage of existing IO techniques (no new native IO methods)

Fast, simple-to-write, efficient IO for multiple platforms without changing the source code.

Architecture

- Data groupings
  - Logical groups of related items written at the same time.
  - Not necessarily one group per writing event.
- IO methods
  - Choose what works best for each grouping
  - Vetted, improved, and/or written by experts for each:
    - POSIX (Wei-keng Liao, Northwestern)
    - MPI-IO (Steve Hodson, ORNL)
    - MPI-IO Collective (Wei-keng Liao, Northwestern)
    - NULL (Jay Lofstead, GT)
    - Ga Tech DataTap Asynchronous (Hasan Abbasi, GT)
    - phdf5
    - others (pnetcdf on the way)
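The idea of choosing an IO method per data grouping in external XML, while the application-facing call stays the same, can be sketched as follows. This is an illustration only, not ADIOS code: the class names, the `METHODS` registry, and `write_group` are hypothetical, and the "MPI" entry reuses an in-memory stand-in so the sketch runs without MPI.

```python
import xml.etree.ElementTree as ET

# Illustrative configuration; element and attribute names follow the slides' XML style.
CONFIG = """
<adios-config>
  <adios-group name="restart"/>
  <adios-group name="diagnostics"/>
  <method group="restart" method="MPI"/>
  <method group="diagnostics" method="NULL"/>
</adios-config>
"""

class NullMethod:
    """Discards everything; useful for timing a code without its IO."""
    def write(self, name, value, sink):
        pass

class InMemoryMethod:
    """Stand-in for a real transport: records writes in a dict, touches no file."""
    def write(self, name, value, sink):
        sink[name] = value

# Hypothetical registry mapping XML method names to implementations.
METHODS = {"NULL": NullMethod, "POSIX": InMemoryMethod, "MPI": InMemoryMethod}

def load_methods(xml_text):
    """Return {group name: method instance} as selected in the XML."""
    root = ET.fromstring(xml_text)
    return {m.get("group"): METHODS[m.get("method")]() for m in root.iter("method")}

def write_group(methods, group, variables):
    """Application-facing call: identical regardless of the configured method."""
    sink = {}
    for name, value in variables.items():
        methods[group].write(name, value, sink)
    return sink

methods = load_methods(CONFIG)
restart_out = write_group(methods, "restart", {"g_NX": 1000, "g_NY": 800})
diag_out = write_group(methods, "diagnostics", {"step": 7})
```

Switching the `diagnostics` group from `NULL` to another method would only require editing the XML string, which mirrors the "no source changes" goal stated above.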

Related Work

- Specialty APIs
  - HDF-5: complex API
  - Parallel netCDF: no structure
- File system aware middleware
  - MPI ADIO layer: file system connection, complex API
- Parallel file systems
  - Lustre: metadata server issues
  - PVFS2: client complexity
  - LWFS: client complexity
  - GPFS, pNFS, Panasas: may have other issues

Supported Features

- Platforms tested
  - Cray CNL (ORNL Jaguar)
  - Cray Catamount (SNL Red Storm)
  - Linux Infiniband/Gigabit (ORNL Ewok)
  - BlueGene/P now being tested/debugged.
  - Looking for future OS X support.
- Native IO methods
  - MPI-IO independent, MPI-IO collective, POSIX, NULL, Ga Tech DataTap asynchronous, Rutgers DART asynchronous, POSIX-NxM, phdf5, pnetcdf, kepler-db

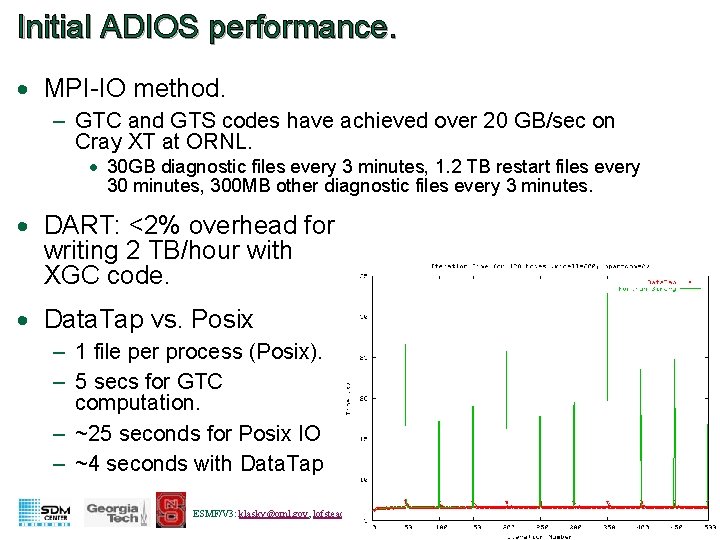

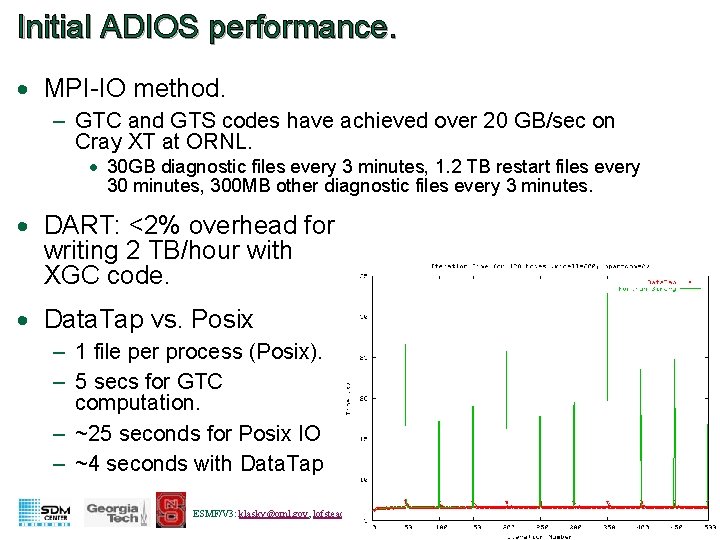

Initial ADIOS performance

- MPI-IO method
  - GTC and GTS codes have achieved over 20 GB/sec on the Cray XT at ORNL.
    - 30 GB diagnostic files every 3 minutes, 1.2 TB restart files every 30 minutes, 300 MB other diagnostic files every 3 minutes.
- DART: <2% overhead for writing 2 TB/hour with the XGC code.
- DataTap vs. POSIX
  - 1 file per process (POSIX).
  - 5 seconds for GTC computation.
  - ~25 seconds for POSIX IO.
  - ~4 seconds with DataTap.

Codes & Performance

- June 7, 2008: 24 hour GTC run on Jaguar at ORNL
  - 93% of the machine (28,672 cores)
  - MPI-OpenMP mixed model on quad-core nodes (7168 MPI procs)
  - Three interruptions in total (simple node failures), with two 10+ hour runs
  - Wrote 65 TB of data at >20 GB/sec (25 TB for post analysis)
  - IO overhead ~3% of wall clock time.
  - Mixed IO methods of synchronous MPI-IO and POSIX IO configured in the XML file
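A quick consistency check of these figures, as a sketch under stated assumptions: one MPI process per quad-core node (so 4 cores per process), 1 TB = 1000 GB, and all 65 TB written synchronously at exactly 20 GB/sec. None of these assumptions is stated on the slide beyond the quoted numbers.

```python
SECONDS_PER_DAY = 24 * 3600

cores = 7168 * 4                        # MPI processes x cores per quad-core node
data_gb = 65 * 1000                     # 65 TB, assuming 1 TB = 1000 GB
io_seconds = data_gb / 20               # time to write at exactly 20 GB/sec
io_fraction = io_seconds / SECONDS_PER_DAY

print(cores, io_seconds, round(100 * io_fraction, 1))
```

At exactly 20 GB/sec the write time comes to roughly 54 minutes, a little under 4% of a 24 hour run, so sustained rates somewhat above 20 GB/sec are in line with the quoted overhead of about 3%.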

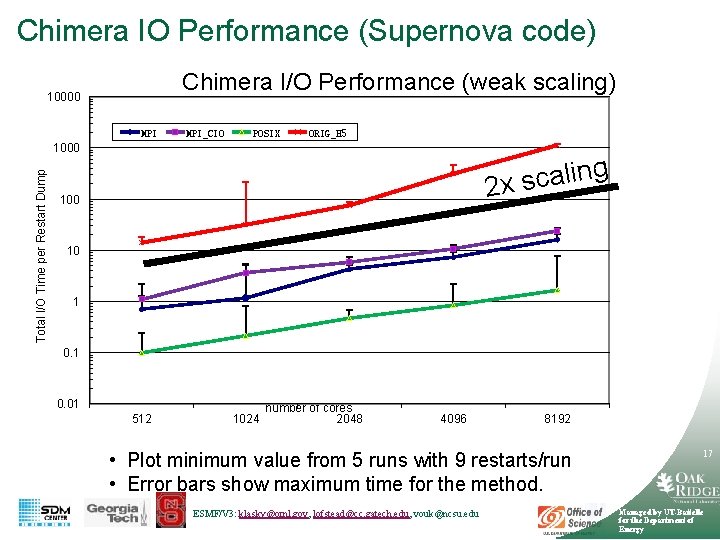

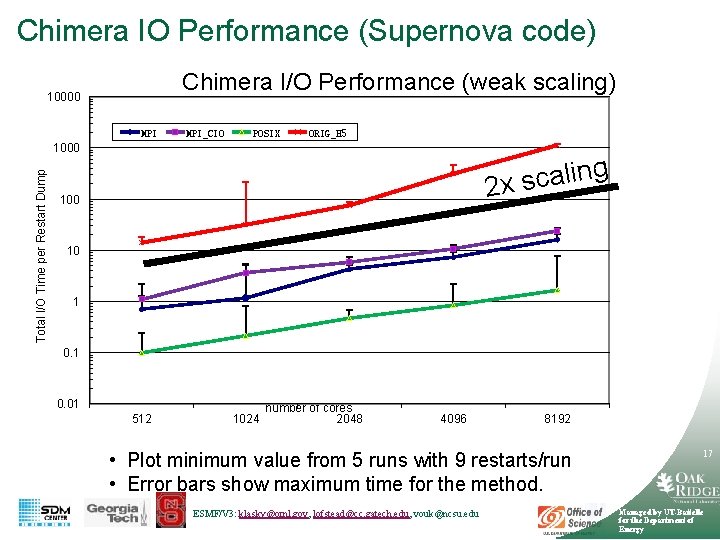

Chimera IO Performance (supernova code)

Figure: Chimera I/O performance (weak scaling). Total I/O time per restart dump (log scale) versus number of cores (512 to 8192) for the MPI_CIO, POSIX, and ORIG_H5 methods, with a 2x scaling reference line.

- Plotted values are the minimum from 5 runs with 9 restarts/run.
- Error bars show the maximum time for the method.

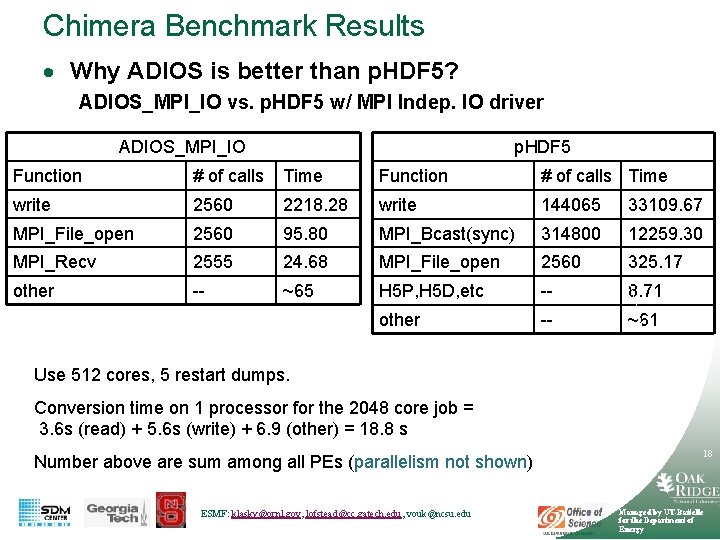

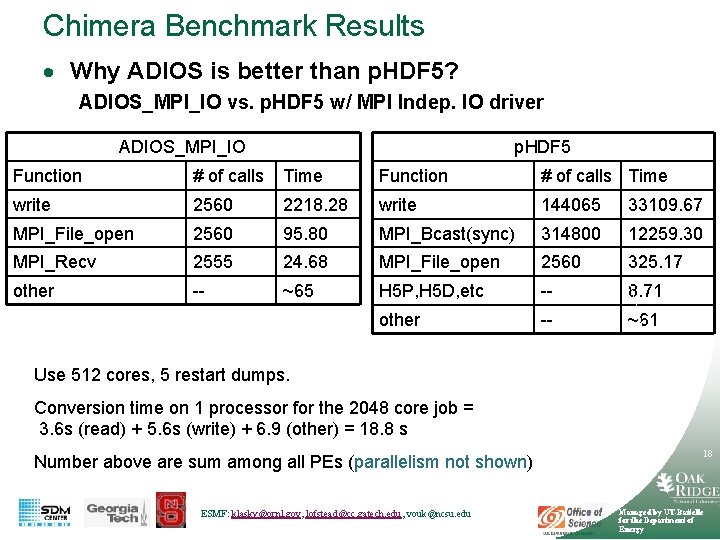

Chimera Benchmark Results

- Why is ADIOS better than pHDF5? ADIOS_MPI_IO vs. pHDF5 with the MPI independent IO driver.

ADIOS_MPI_IO:

| Function | # of calls | Time |
| --- | --- | --- |
| write | 2560 | 2218.28 |
| MPI_File_open | 2560 | 95.80 |
| MPI_Recv | 2555 | 24.68 |
| other | -- | ~65 |

pHDF5:

| Function | # of calls | Time |
| --- | --- | --- |
| write | 144065 | 33109.67 |
| MPI_Bcast(sync) | 314800 | 12259.30 |
| MPI_File_open | 2560 | 325.17 |
| H5P, H5D, etc. | -- | 8.71 |
| other | -- | ~61 |

Measured using 512 cores and 5 restart dumps. Numbers above are summed across all PEs (parallelism not shown).

Conversion time on 1 processor for the 2048 core job = 3.6 s (read) + 5.6 s (write) + 6.9 (other) = 18.8 s

ADIOS Advanced Topics

- J. Lofstead

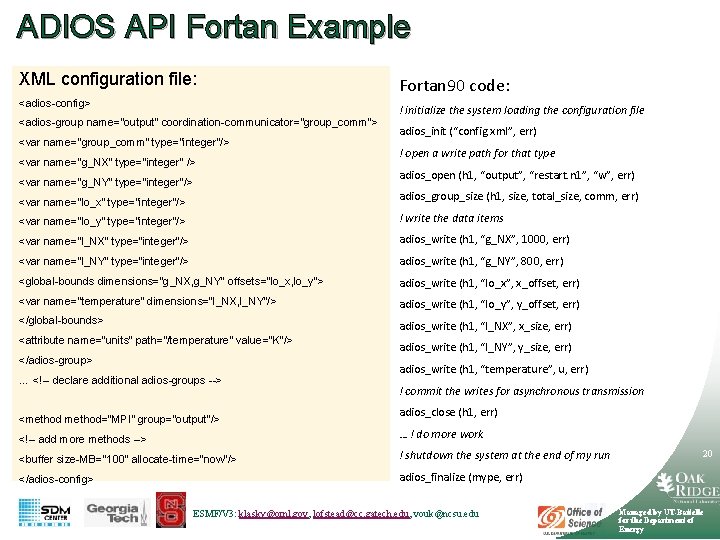

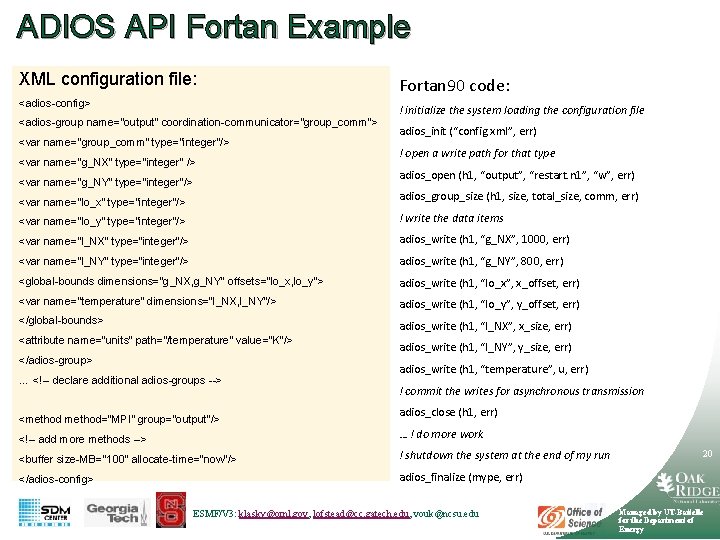

ADIOS API Fortran Example

XML configuration file:

    <adios-config>
      <adios-group name="output" coordination-communicator="group_comm">
        <var name="group_comm" type="integer"/>
        <var name="g_NX" type="integer"/>
        <var name="g_NY" type="integer"/>
        <var name="lo_x" type="integer"/>
        <var name="lo_y" type="integer"/>
        <var name="l_NX" type="integer"/>
        <var name="l_NY" type="integer"/>
        <global-bounds dimensions="g_NX, g_NY" offsets="lo_x, lo_y">
          <var name="temperature" dimensions="l_NX, l_NY"/>
        </global-bounds>
        <attribute name="units" path="/temperature" value="K"/>
      </adios-group>
      … <!-- declare additional adios-groups -->
      <method method="MPI" group="output"/>
      <!-- add more methods --> …
      <buffer size-MB="100" allocate-time="now"/>
    </adios-config>

Fortran 90 code:

    ! initialize the system loading the configuration file
    call adios_init ("config.xml", err)
    ! open a write path for that type
    call adios_open (h1, "output", "restart.n1", "w", err)
    call adios_group_size (h1, size, total_size, comm, err)
    ! write the data items
    call adios_write (h1, "g_NX", 1000, err)
    call adios_write (h1, "g_NY", 800, err)
    call adios_write (h1, "lo_x", x_offset, err)
    call adios_write (h1, "lo_y", y_offset, err)
    call adios_write (h1, "l_NX", x_size, err)
    call adios_write (h1, "l_NY", y_size, err)
    call adios_write (h1, "temperature", u, err)
    ! commit the writes for asynchronous transmission
    call adios_close (h1, err)
    … ! do more work
    ! shutdown the system at the end of my run
    call adios_finalize (mype, err)

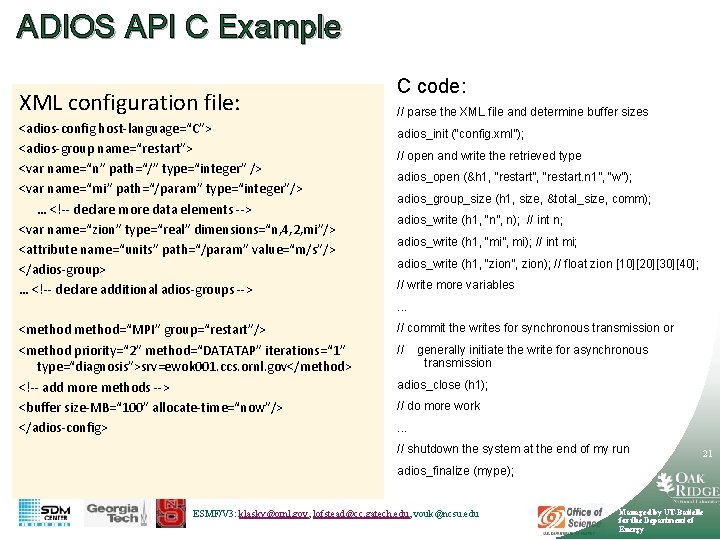

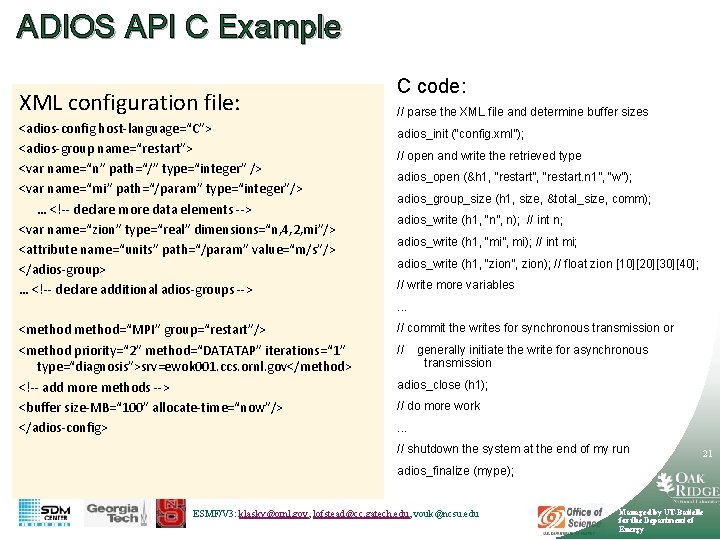

ADIOS API C Example

XML configuration file:

    <adios-config host-language="C">
      <adios-group name="restart">
        <var name="n" path="/" type="integer"/>
        <var name="mi" path="/param" type="integer"/>
        … <!-- declare more data elements -->
        <var name="zion" type="real" dimensions="n, 4, 2, mi"/>
        <attribute name="units" path="/param" value="m/s"/>
      </adios-group>
      … <!-- declare additional adios-groups -->
      <method method="MPI" group="restart"/>
      <method priority="2" method="DATATAP" iterations="1" type="diagnosis">srv=ewok001.ccs.ornl.gov</method>
      <!-- add more methods -->
      <buffer size-MB="100" allocate-time="now"/>
    </adios-config>

C code:

    // parse the XML file and determine buffer sizes
    adios_init ("config.xml");
    // open and write the retrieved type
    adios_open (&h1, "restart", "restart.n1", "w");
    adios_group_size (h1, size, &total_size, comm);
    adios_write (h1, "n", n);       // int n;
    adios_write (h1, "mi", mi);     // int mi;
    adios_write (h1, "zion", zion); // float zion [10][20][30][40];
    // write more variables
    ...
    // commit the writes for synchronous transmission or
    // generally initiate the write for asynchronous transmission
    adios_close (h1);
    // do more work
    ...
    // shutdown the system at the end of my run
    adios_finalize (mype);

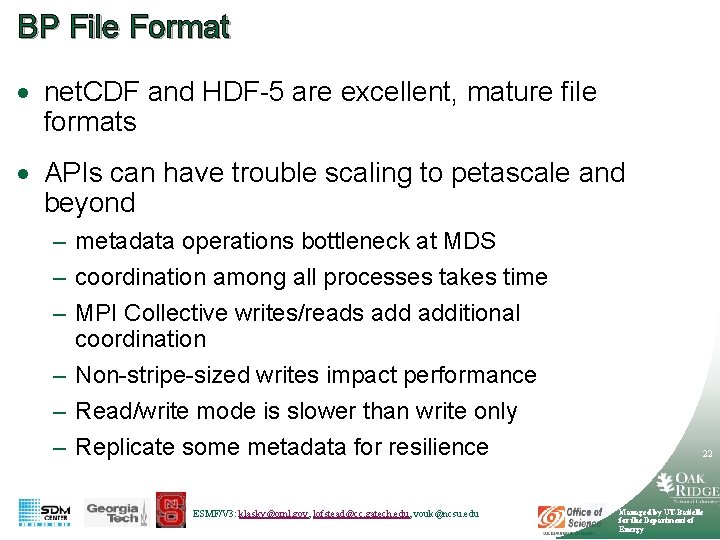

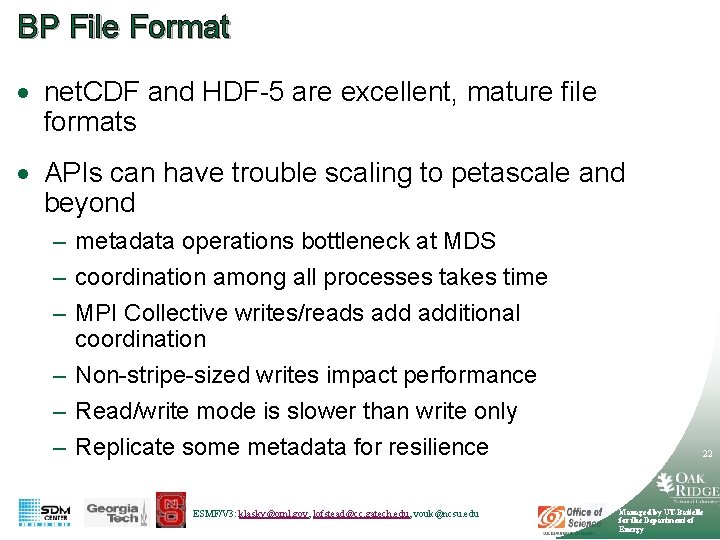

BP File Format

- netCDF and HDF-5 are excellent, mature file formats.
- Their APIs can have trouble scaling to petascale and beyond:
  - Metadata operations bottleneck at the MDS
  - Coordination among all processes takes time
  - MPI collective writes/reads add coordination
  - Non-stripe-sized writes impact performance
  - Read/write mode is slower than write only
  - Replicate some metadata for resilience

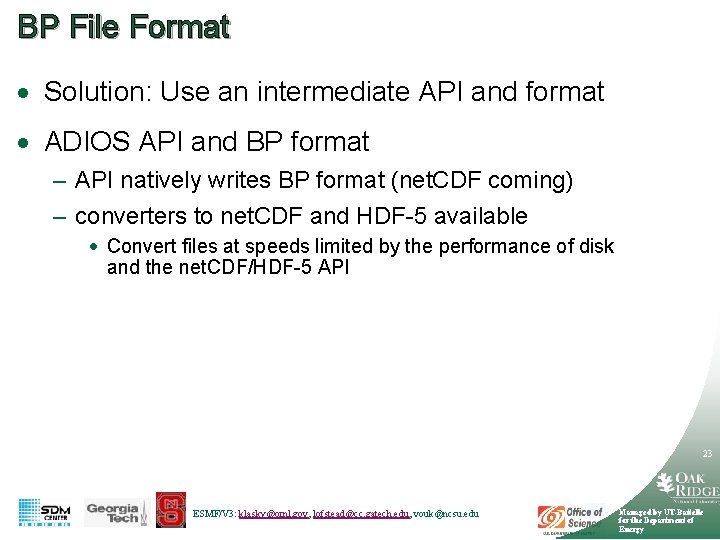

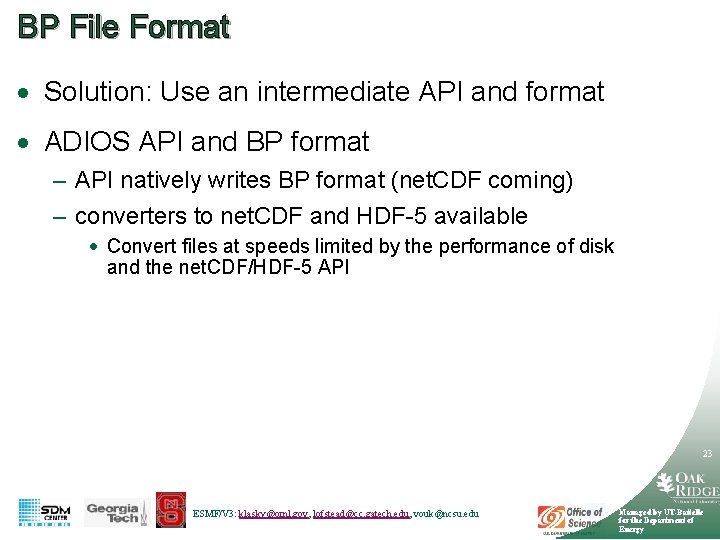

BP File Format

- Solution: use an intermediate API and format
- ADIOS API and BP format
  - API natively writes BP format (netCDF coming)
  - Converters to netCDF and HDF-5 available
    - Convert files at speeds limited by the performance of disk and the netCDF/HDF-5 API

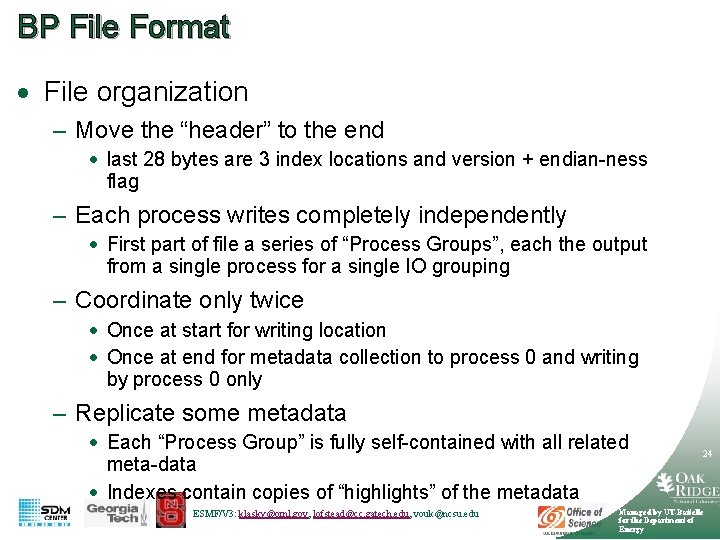

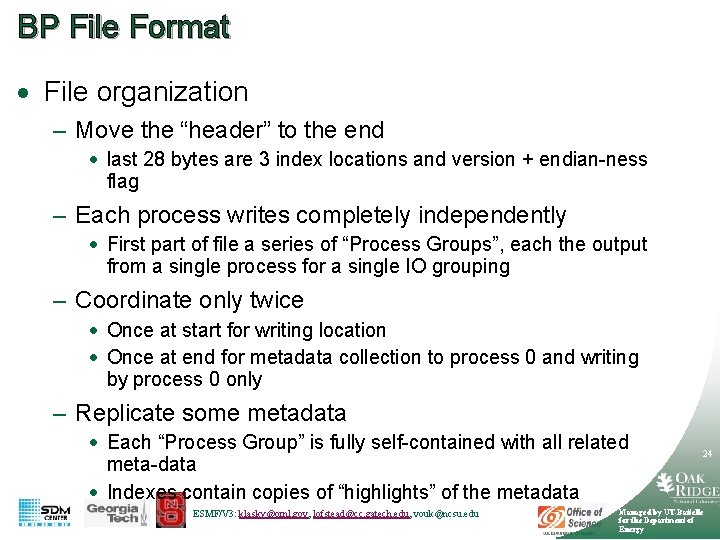

BP File Format

- File organization
  - Move the "header" to the end
    - The last 28 bytes are 3 index locations and a version + endianness flag
  - Each process writes completely independently
    - The first part of the file is a series of "Process Groups", each the output from a single process for a single IO grouping
  - Coordinate only twice
    - Once at the start for the writing location
    - Once at the end for metadata collection to process 0 and writing by process 0 only
  - Replicate some metadata
    - Each "Process Group" is fully self-contained with all related metadata
    - Indexes contain copies of "highlights" of the metadata
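A trailing 28-byte footer of this kind can be read with a single seek from the end of the file. The sketch below is not the actual BP layout: it assumes three unsigned 64-bit index offsets followed by one 32-bit word for the version and endianness flag (8 + 8 + 8 + 4 = 28 bytes), in that order and little-endian. The field order, field widths, and function names are assumptions made for illustration.

```python
import io
import struct

# Assumed layout, not confirmed by the slides: three 64-bit index offsets,
# then one 32-bit word carrying the version and endianness flag.
FOOTER = struct.Struct("<QQQI")  # "<" means little-endian with no padding

def write_footer(f, pg_index_off, vars_index_off, attrs_index_off, version_word):
    """Append the footer after the process groups and indexes."""
    f.write(FOOTER.pack(pg_index_off, vars_index_off, attrs_index_off, version_word))

def read_footer(f):
    """Locate the indexes by reading only the last FOOTER.size bytes."""
    f.seek(-FOOTER.size, io.SEEK_END)
    return FOOTER.unpack(f.read(FOOTER.size))

buf = io.BytesIO()
buf.write(b"P" * 100)   # stand-in for process group data
buf.write(b"I" * 30)    # stand-in for the three indexes
write_footer(buf, 100, 110, 120, 1)
footer = read_footer(buf)
```

Because the footer sits at a fixed distance from the end, a reader needs no prior knowledge of how many process groups were written before it can find the indexes.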

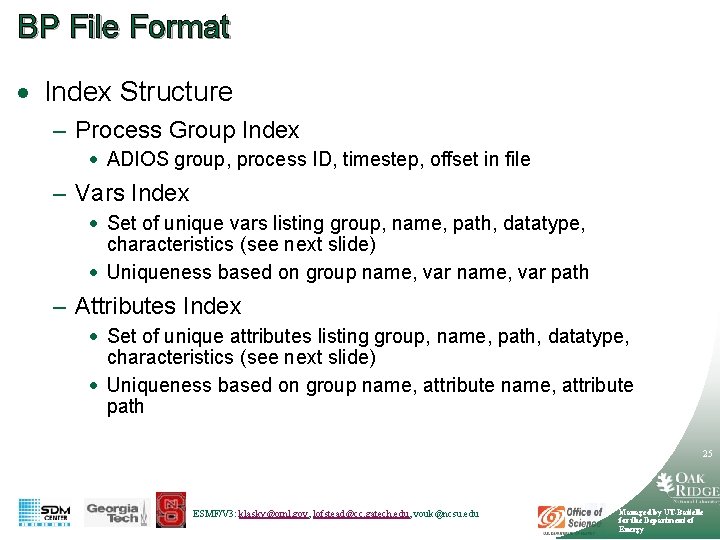

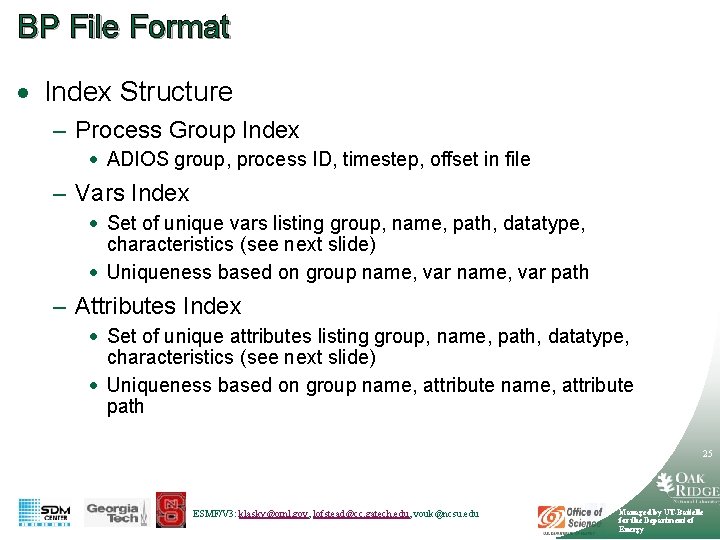

BP File Format

- Index structure
  - Process Group index
    - ADIOS group, process ID, timestep, offset in file
  - Vars index
    - Set of unique vars listing group, name, path, datatype, characteristics (see next slide)
    - Uniqueness based on group name, var path
  - Attributes index
    - Set of unique attributes listing group, name, path, datatype, characteristics (see next slide)
    - Uniqueness based on group name, attribute path

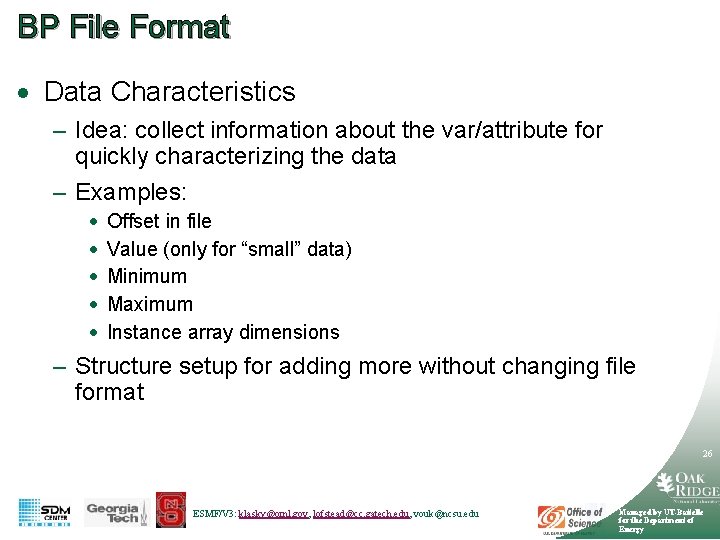

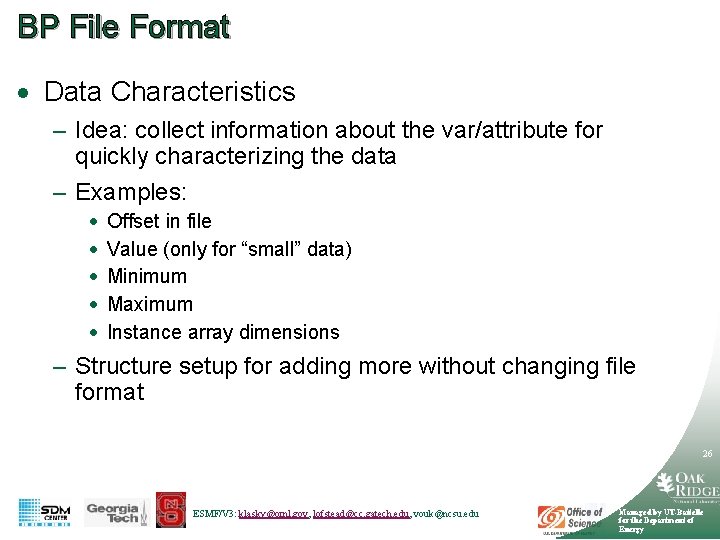

BP File Format

- Data characteristics
  - Idea: collect information about the var/attribute for quickly characterizing the data
  - Examples:
    - Offset in file
    - Value (only for "small" data)
    - Minimum
    - Maximum
    - Instance array dimensions
  - Structure set up for adding more without changing the file format
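Storing minimum and maximum as characteristics is what makes a near-instant min/max query on a large array possible: the answer comes from the index, not from the data. A minimal sketch, assuming one index record per process group that wrote the variable; the `VarIndexEntry` fields and the sample values are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class VarIndexEntry:
    """One index record per process group that wrote the variable (hypothetical fields)."""
    name: str
    offset: int      # where the payload starts in the file
    minimum: float
    maximum: float

def global_range(index, name):
    """Combine per-process-group min/max without reading any array data."""
    entries = [e for e in index if e.name == name]
    return min(e.minimum for e in entries), max(e.maximum for e in entries)

index = [
    VarIndexEntry("temperature", 0, 271.5, 300.2),
    VarIndexEntry("temperature", 4096, 268.0, 295.1),
    VarIndexEntry("temperature", 8192, 280.3, 310.7),
]
temperature_range = global_range(index, "temperature")
```

The cost of the query scales with the number of index entries rather than with the size of the array.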

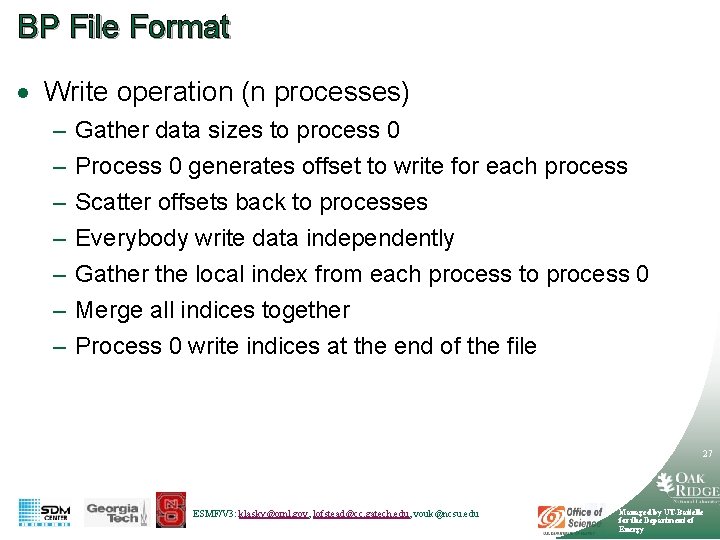

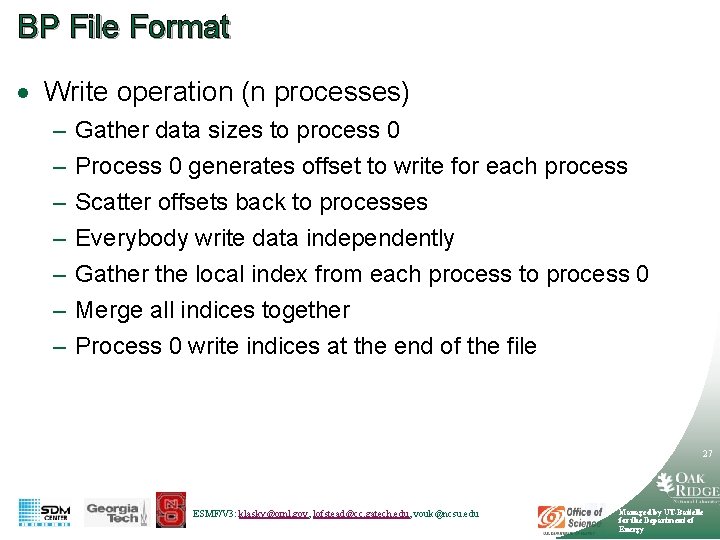

BP File Format

- Write operation (n processes)
  1. Gather data sizes to process 0
  2. Process 0 generates the offset to write at for each process
  3. Scatter offsets back to the processes
  4. Everybody writes data independently
  5. Gather the local index from each process to process 0
  6. Merge all indices together
  7. Process 0 writes the indices at the end of the file
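The offset computation in this write operation is an exclusive prefix sum over the gathered sizes. The sketch below simulates the whole sequence in a single Python process, with a list of byte strings standing in for the n processes; it is an illustration of the coordination pattern, not ADIOS code, and the function names and index fields are hypothetical.

```python
from itertools import accumulate

def plan_offsets(sizes):
    """Process 0 turns the gathered sizes into per-process start offsets."""
    return [total - size for total, size in zip(accumulate(sizes), sizes)]

def write_bp_like(payloads):
    """Simulate n processes writing one file; payloads[i] is process i's bytes."""
    sizes = [len(p) for p in payloads]             # gather sizes to process 0
    offsets = plan_offsets(sizes)                  # process 0 computes offsets
    data = bytearray(sum(sizes))
    local_indices = []
    for rank, (off, payload) in enumerate(zip(offsets, payloads)):
        data[off:off + len(payload)] = payload     # independent write, no overlap
        local_indices.append({"rank": rank, "offset": off, "size": len(payload)})
    # gather the local indices and merge them; they would follow the data in the file
    merged_index = sorted(local_indices, key=lambda e: e["offset"])
    return bytes(data), merged_index

file_bytes, index = write_bp_like([b"aaaa", b"bb", b"cccccc"])
```

In an MPI program the gather, scatter, and final gather would be collective calls, and they are the only two points at which the processes coordinate.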

BP File Format

- Compromises using BP format
  - Each "Process Group" can have different variables defined and written (also an advantage)

BP File Format

- Advantages using BP format
  - Each process writes independently
  - Limited coordination
  - File organization more natural for striping
  - Rich index contents
  - "Append" operations do not require moving data
    - Indices are read by process 0 on start and used as the base index
    - The first new Process Group overwrites the old indices
  - Index corruption does not destroy the entire file
  - Process Group corruption is isolated: the rest of the process groups remain accessible via the indices

Outline

- EFFIS
- ADIOS
  - ADIOS Overview
  - BP format, and compatibility with HDF5/netCDF
- Workflow
- Dashboard
- Conclusions

Scientific Workflow

Capture how a scientist works with data and analytical tools:

- data access, transformation, analysis, visualization
- possible worldview: dataflow-oriented (cf. signal processing)

Scientific workflows start where script-based data-management solutions leave off.

Why a workflow (wf) system? Scientific workflow benefits (vs. script-based approaches):

- wf automation
- wf & component reuse, sharing, adaptation, archiving
- wf design, documentation
- built-in (model) concurrency (task-, pipeline-parallelism)
- built-in provenance support
- distributed & parallel execution: Grid & cluster support
- wf fault-tolerance, reliability
- other …

Higher-level "language" vs. the assembly-language nature of scripts.

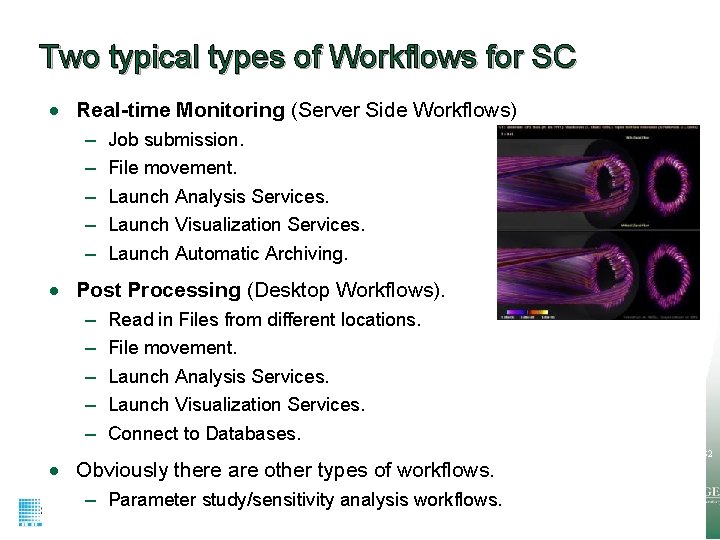

Two typical types of Workflows for SC

- Real-time monitoring (server-side workflows)
  - Job submission.
  - File movement.
  - Launch analysis services.
  - Launch visualization services.
  - Launch automatic archiving.
- Post processing (desktop workflows)
  - Read in files from different locations.
  - File movement.
  - Launch analysis services.
  - Launch visualization services.
  - Connect to databases.
- Obviously there are other types of workflows.
  - Parameter study/sensitivity analysis workflows.

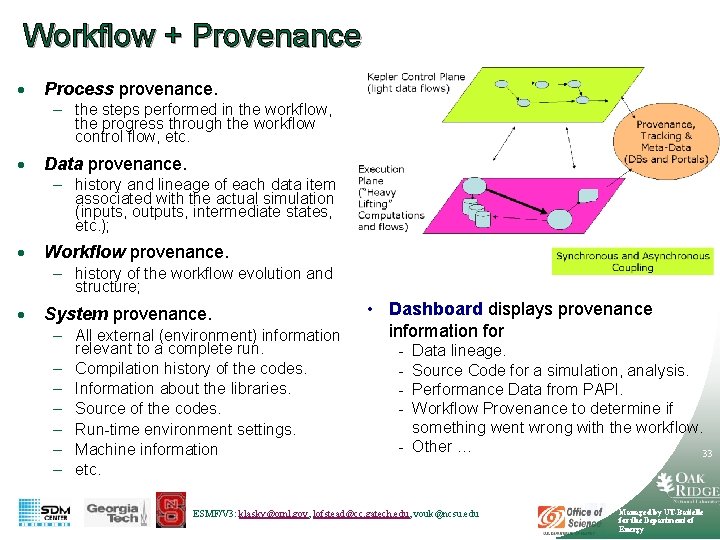

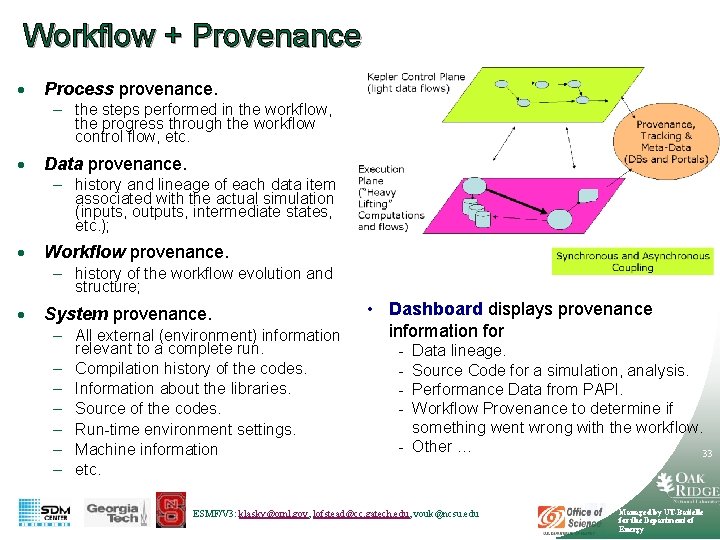

Workflow + Provenance

- Process provenance: the steps performed in the workflow, the progress through the workflow control flow, etc.
- Data provenance: history and lineage of each data item associated with the actual simulation (inputs, outputs, intermediate states, etc.).
- Workflow provenance: history of the workflow evolution and structure.
- System provenance: all external (environment) information relevant to a complete run.
  - Compilation history of the codes.
  - Information about the libraries.
  - Source of the codes.
  - Run-time environment settings.
  - Machine information, etc.
- The dashboard displays provenance information for:
  - Data lineage.
  - Source code for a simulation or analysis.
  - Performance data from PAPI.
  - Workflow provenance, to determine if something went wrong with the workflow.
  - Other …

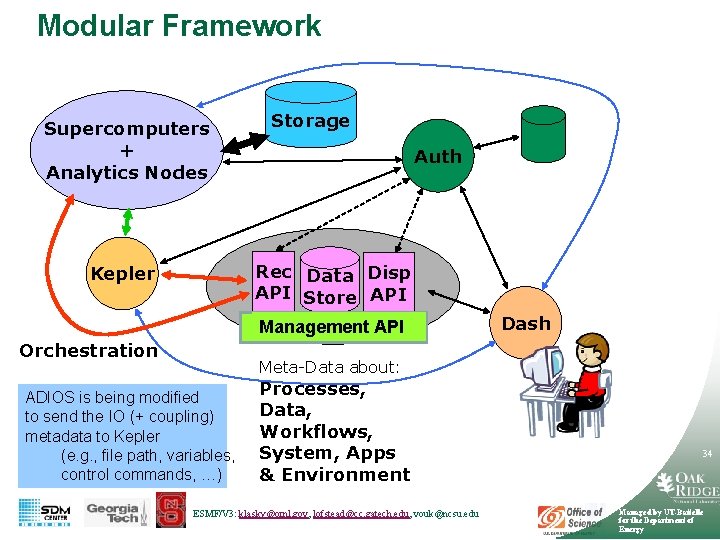

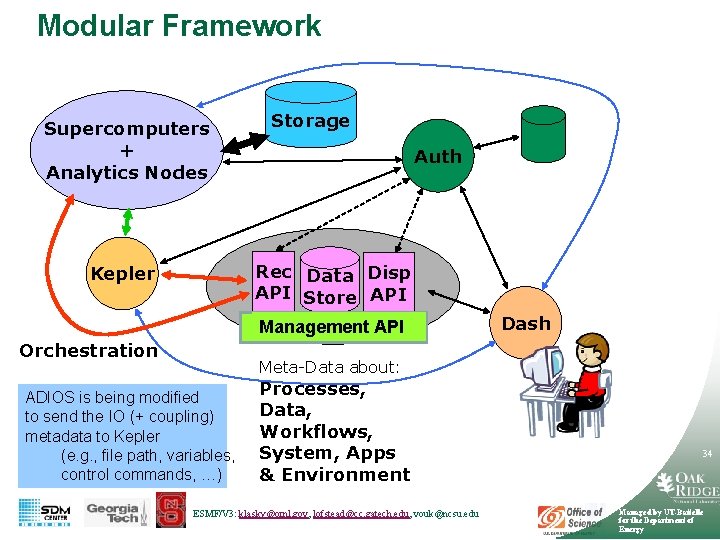

Modular Framework

Diagram labels: Supercomputers + Analytics Nodes, Storage, Auth, Rec API, Data Store, Disp API, Management API, Kepler, Orchestration, Dash.

- Meta-data about: processes, data, workflows, system, apps & environment.
- ADIOS is being modified to send the IO (+ coupling) metadata to Kepler (e.g., file path, variables, control commands, …).

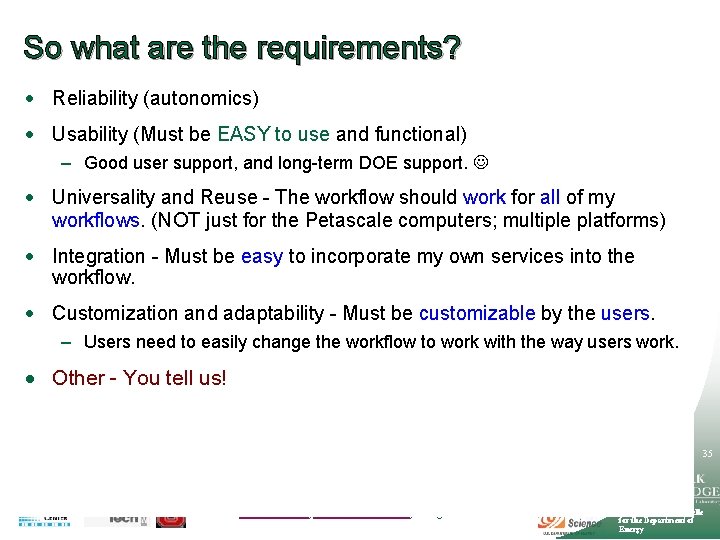

So what are the requirements?

- Reliability (autonomics).
- Usability: must be EASY to use and functional.
  - Good user support, and long-term DOE support.
- Universality and reuse: the workflow system should work for all of my workflows (NOT just for the petascale computers; multiple platforms).
- Integration: must be easy to incorporate my own services into the workflow.
- Customization and adaptability: must be customizable by the users.
  - Users need to be able to easily change the workflow to fit the way they work.
- Other: you tell us!

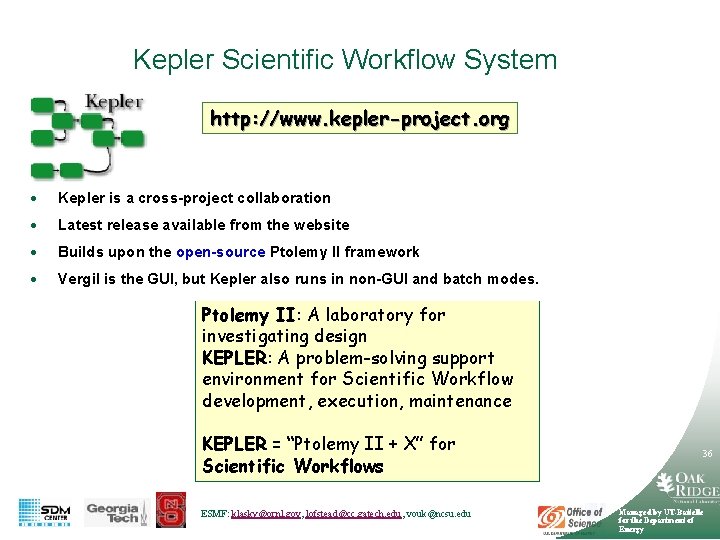

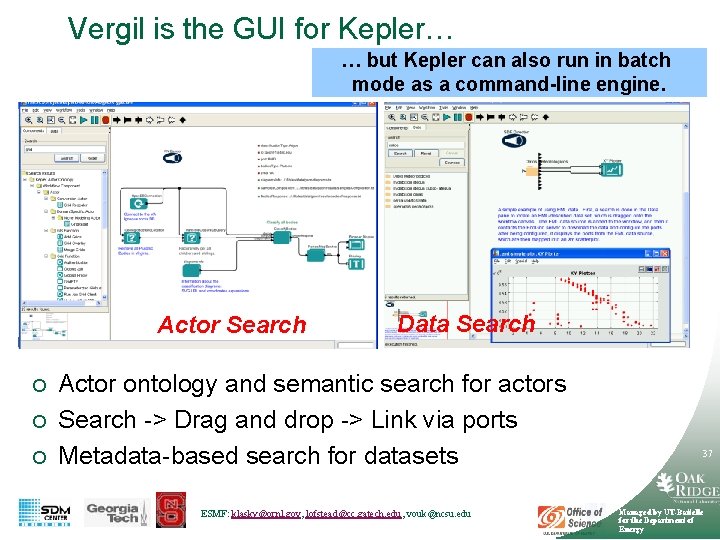

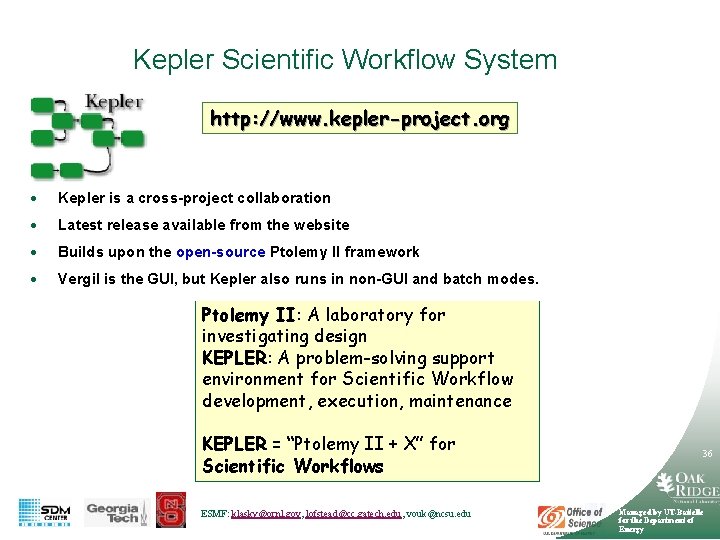

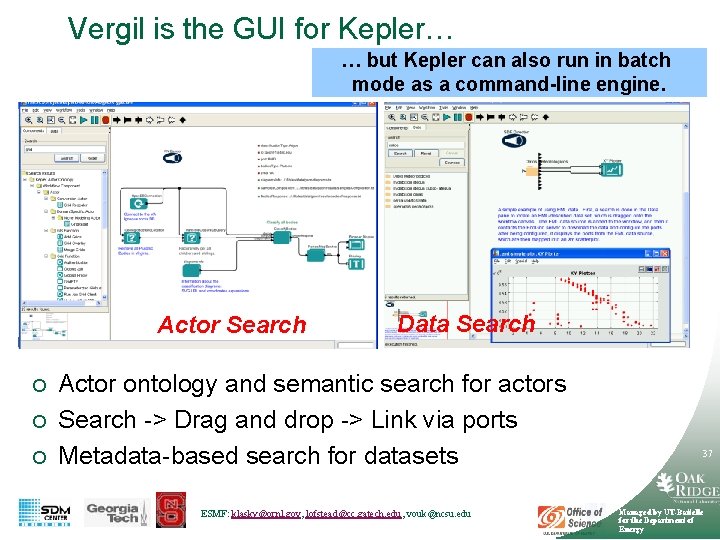

Kepler Scientific Workflow System

http://www.kepler-project.org

- Kepler is a cross-project collaboration.
- Latest release available from the website.
- Builds upon the open-source Ptolemy II framework.
- Vergil is the GUI, but Kepler also runs in non-GUI and batch modes.

Ptolemy II: a laboratory for investigating design.

KEPLER: a problem-solving support environment for scientific workflow development, execution, and maintenance.

KEPLER = "Ptolemy II + X" for scientific workflows.

Vergil is the GUI for Kepler… but Kepler can also run in batch mode as a command-line engine.

- Actor search: actor ontology and semantic search for actors
- Search -> drag and drop -> link via ports
- Data search: metadata-based search for datasets

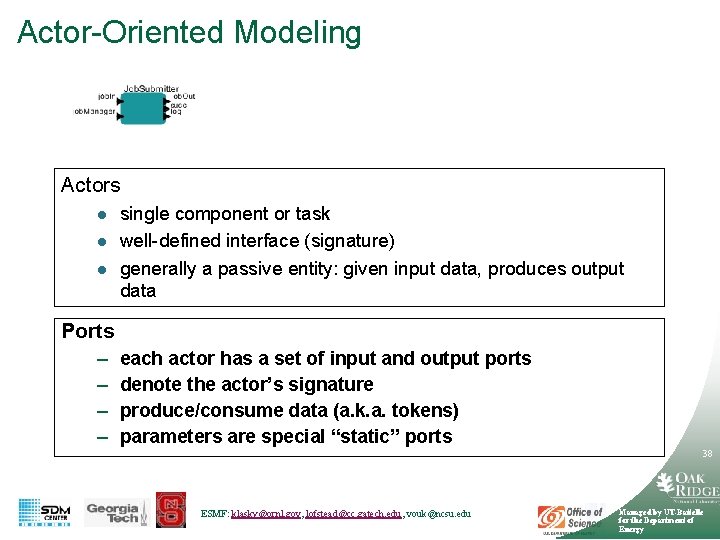

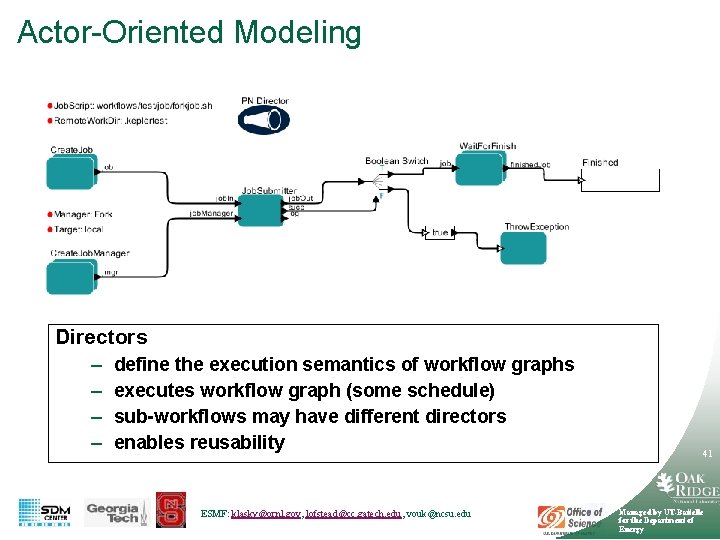

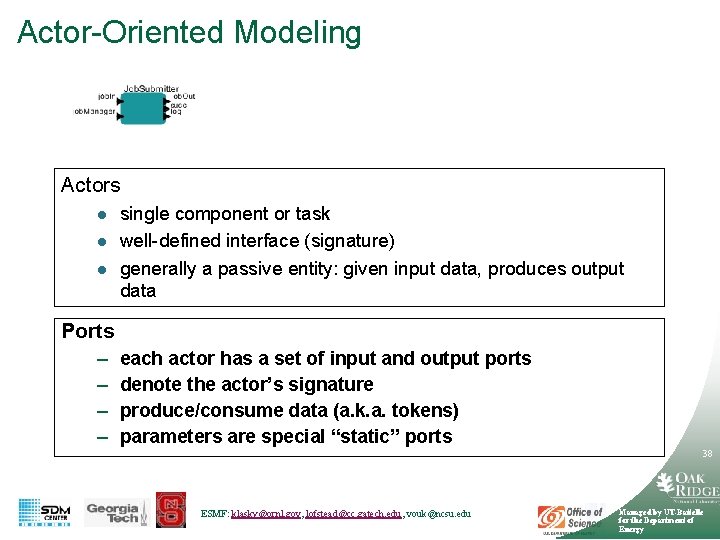

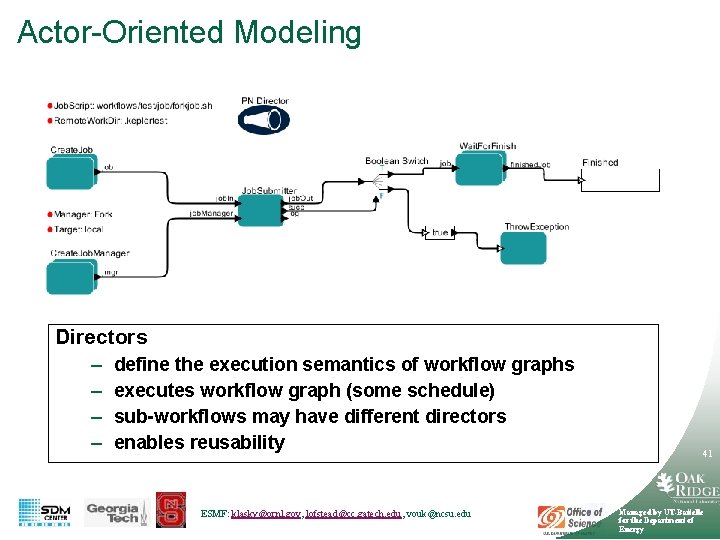

Actor-Oriented Modeling

- Actors
  - single component or task
  - well-defined interface (signature)
  - generally a passive entity: given input data, produces output data
- Ports
  - each actor has a set of input and output ports
  - denote the actor's signature
  - produce/consume data (a.k.a. tokens)
  - parameters are special "static" ports

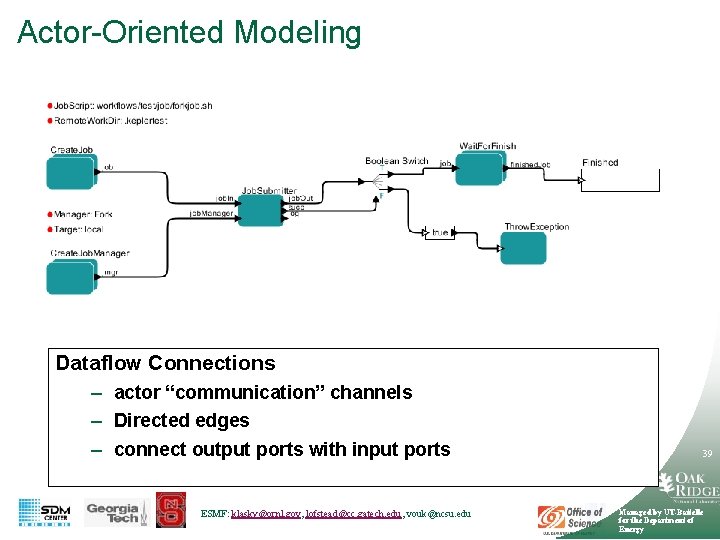

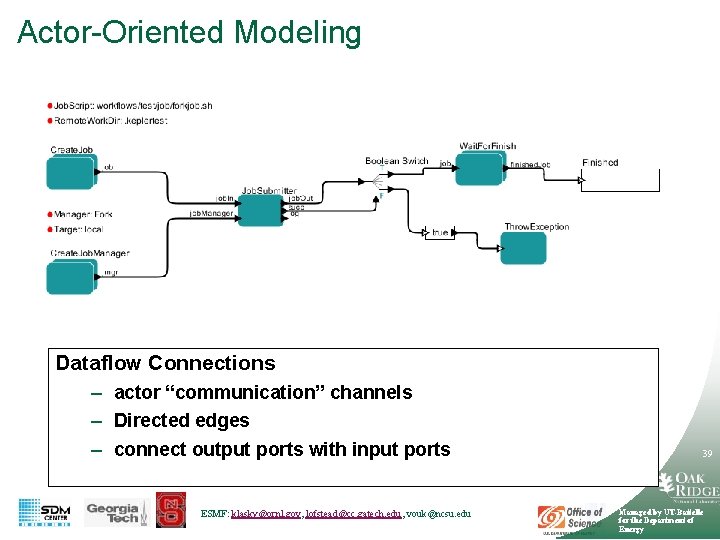

Actor-Oriented Modeling

- Dataflow connections
  - actor "communication" channels
  - directed edges
  - connect output ports with input ports

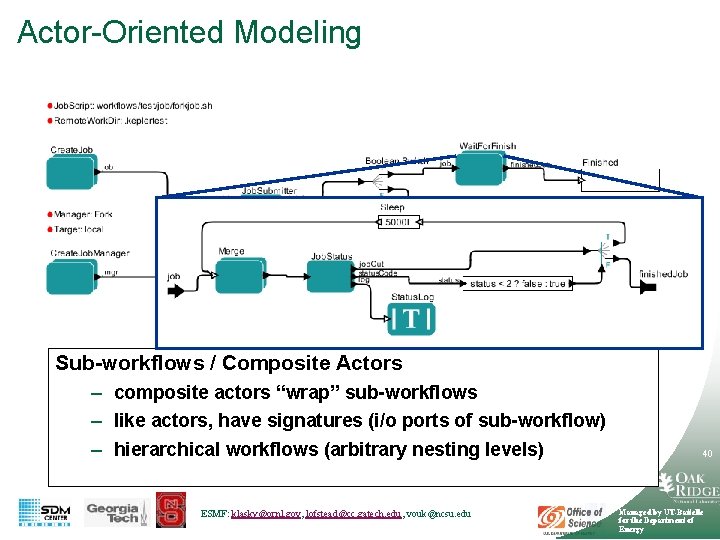

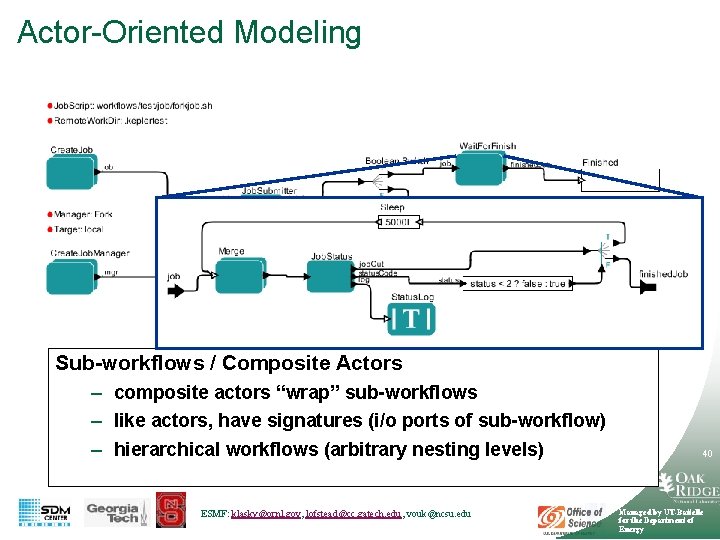

Actor-Oriented Modeling

- Sub-workflows / composite actors
  - composite actors "wrap" sub-workflows
  - like actors, have signatures (i/o ports of the sub-workflow)
  - hierarchical workflows (arbitrary nesting levels)

Actor-Oriented Modeling

- Directors
  - define the execution semantics of workflow graphs
  - execute the workflow graph (according to some schedule)
  - sub-workflows may have different directors
  - enable reusability
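The separation between actors (what runs), ports and connections (how tokens move), and a director (when things fire) can be sketched in a few lines. This is not Kepler or Ptolemy II code: the `Actor` and `SimpleDirector` classes are hypothetical, each actor has a single implicit output, and the director fires every actor at most once, which corresponds to the simplest DAG-style execution.

```python
from collections import deque

class Actor:
    """A task with named input ports and a single output (hypothetical design)."""
    def __init__(self, name, func, inputs=()):
        self.name, self.func = name, func
        self.inputs = {port: deque() for port in inputs}  # one token queue per port
        self.links = []  # (downstream actor, downstream port name)

    def connect(self, other, port):
        """Directed edge from this actor's output to an input port of `other`."""
        self.links.append((other, port))

    def ready(self):
        return all(self.inputs.values())  # every input queue holds a token

    def fire(self):
        args = {port: q.popleft() for port, q in self.inputs.items()}
        token = self.func(**args)
        for other, port in self.links:
            other.inputs[port].append(token)
        return token

class SimpleDirector:
    """Fires each actor at most once, as soon as all of its inputs are ready."""
    def run(self, actors):
        results, pending = {}, list(actors)
        while pending:
            runnable = [a for a in pending if a.ready()]
            if not runnable:
                break  # remaining actors can never fire
            for a in runnable:
                results[a.name] = a.fire()
                pending.remove(a)
        return results

read = Actor("read", lambda: [3.0, 1.0, 2.0])
low = Actor("min", lambda data: min(data), inputs=("data",))
high = Actor("max", lambda data: max(data), inputs=("data",))
span = Actor("span", lambda lo, hi: hi - lo, inputs=("lo", "hi"))
read.connect(low, "data")
read.connect(high, "data")
low.connect(span, "lo")
high.connect(span, "hi")
results = SimpleDirector().run([read, low, high, span])
```

Swapping `SimpleDirector` for a class with a different `run` policy would change the execution semantics without touching the actors, which is the reusability point made above.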

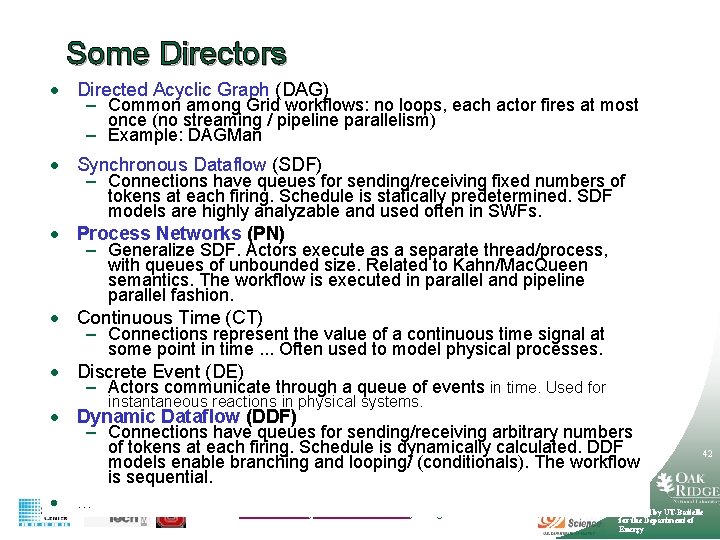

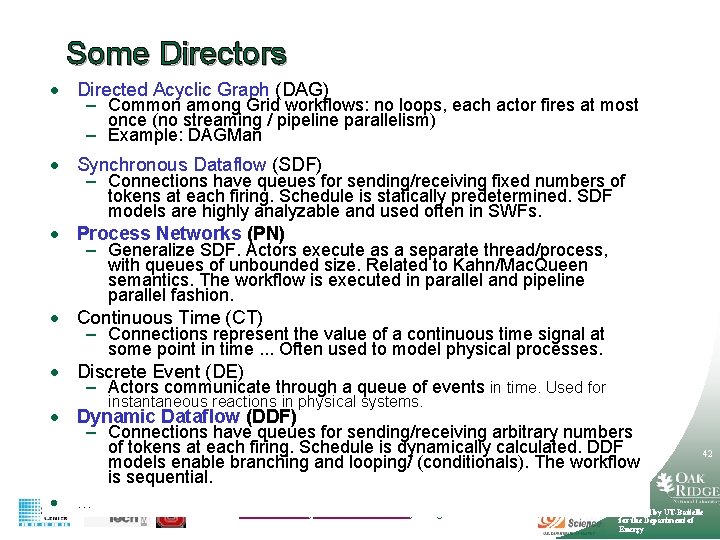

Some Directors

- Directed Acyclic Graph (DAG): common among Grid workflows; no loops, and each actor fires at most once (no streaming / pipeline parallelism). Example: DAGMan.
- Synchronous Dataflow (SDF): connections have queues for sending/receiving fixed numbers of tokens at each firing. The schedule is statically predetermined. SDF models are highly analyzable and are often used in SWFs.
- Process Networks (PN): generalize SDF. Each actor executes as a separate thread/process, with queues of unbounded size. Related to Kahn/MacQueen semantics. The workflow is executed in a parallel and pipeline-parallel fashion.
- Continuous Time (CT): connections represent the value of a continuous-time signal at some point in time. Often used to model physical processes.
- Discrete Event (DE): actors communicate through a queue of events in time. Used for instantaneous reactions in physical systems.
- Dynamic Dataflow (DDF): connections have queues for sending/receiving arbitrary numbers of tokens at each firing. The schedule is dynamically calculated. DDF models enable branching and looping (conditionals). The workflow is sequential.
- …
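The reason an SDF schedule can be fixed ahead of time is that the token rates on every connection are constant, so the number of firings per actor follows from balance equations: for each edge, firings of the producer times tokens produced must equal firings of the consumer times tokens consumed. The sketch below solves these for a small graph. It assumes a connected graph with consistent rates and does not build the firing order itself; the function name and edge tuple format are hypothetical.

```python
from fractions import Fraction
from functools import reduce
from math import gcd

def repetition_vector(actors, edges):
    """edges: (producer, tokens produced per firing, consumer, tokens consumed per firing)."""
    rate = {actors[0]: Fraction(1)}     # firings relative to the first actor
    changed = True
    while changed:                      # propagate rates along edges in both directions
        changed = False
        for src, produced, dst, consumed in edges:
            if src in rate and dst not in rate:
                rate[dst] = rate[src] * produced / consumed
                changed = True
            elif dst in rate and src not in rate:
                rate[src] = rate[dst] * consumed / produced
                changed = True
    # scale the fractions to the smallest whole numbers of firings
    scale = reduce(lambda a, b: a * b // gcd(a, b), (r.denominator for r in rate.values()))
    counts = {a: int(r * scale) for a, r in rate.items()}
    common = reduce(gcd, counts.values())
    return {a: n // common for a, n in counts.items()}

# A emits 2 tokens per firing, B consumes 3 and emits 1, C consumes 2.
firings = repetition_vector(["A", "B", "C"], [("A", 2, "B", 3), ("B", 1, "C", 2)])
```

With these firing counts every queue returns to its starting length after one pass of the schedule, so the pass can be repeated indefinitely with bounded queues.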

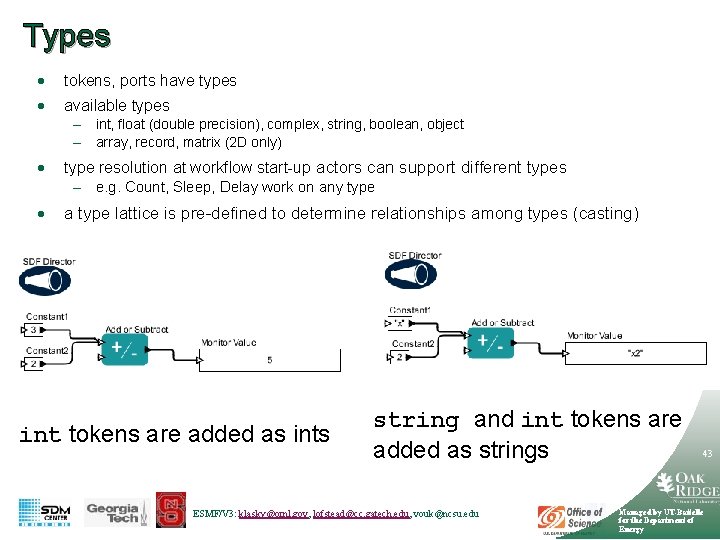

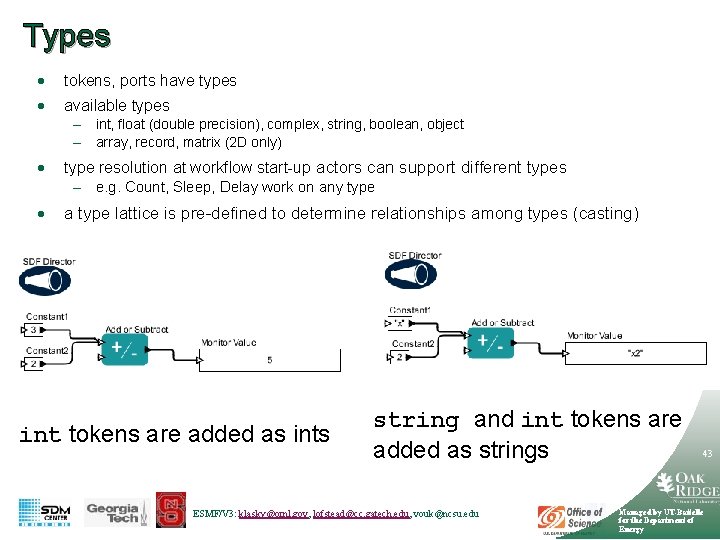

Types

- Tokens and ports have types.
- Available types:
  - int, float (double precision), complex, string, boolean, object
  - array, record, matrix (2D only)
- Type resolution happens at workflow start-up.
- Actors can support different types, e.g. Count, Sleep, and Delay work on any type.
- A type lattice is pre-defined to determine relationships among types (casting).
  - int tokens are added as ints
  - string and int tokens are added as strings
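The two addition examples follow from picking the least upper bound of the operand types in the lattice and casting both tokens to it. The sketch below uses a deliberately simplified chain (int, float, complex, string) rather than the actual pre-defined lattice, and the `PARENT` table and function names are hypothetical.

```python
# Simplified, hypothetical lattice: each type points to the next more general type.
PARENT = {"int": "float", "float": "complex", "complex": "string", "string": None}
CAST = {"int": int, "float": float, "complex": complex, "string": str}

def ancestors(type_name):
    """The type itself followed by every more general type."""
    chain = []
    while type_name is not None:
        chain.append(type_name)
        type_name = PARENT[type_name]
    return chain

def least_upper_bound(a, b):
    """Most specific type that both a and b can be cast to."""
    b_chain = ancestors(b)
    return next(t for t in ancestors(a) if t in b_chain)

def add_tokens(a, a_type, b, b_type):
    """Cast both tokens to their least upper bound, then add."""
    target = least_upper_bound(a_type, b_type)
    cast = CAST[target]
    return target, cast(a) + cast(b)

int_sum = add_tokens(2, "int", 3, "int")
mixed_sum = add_tokens("run", "string", 7, "int")
float_sum = add_tokens(1, "int", 2.5, "float")
```

Resolving the target type once at start-up, as described above, means this lookup does not have to be repeated for every token at run time.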

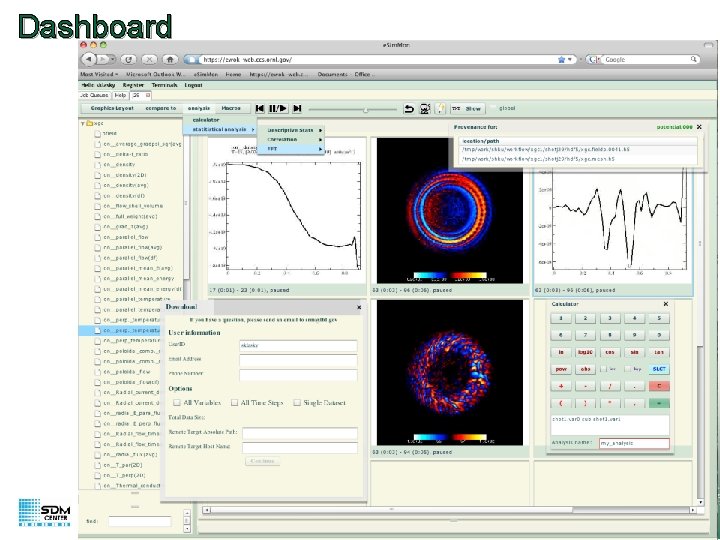

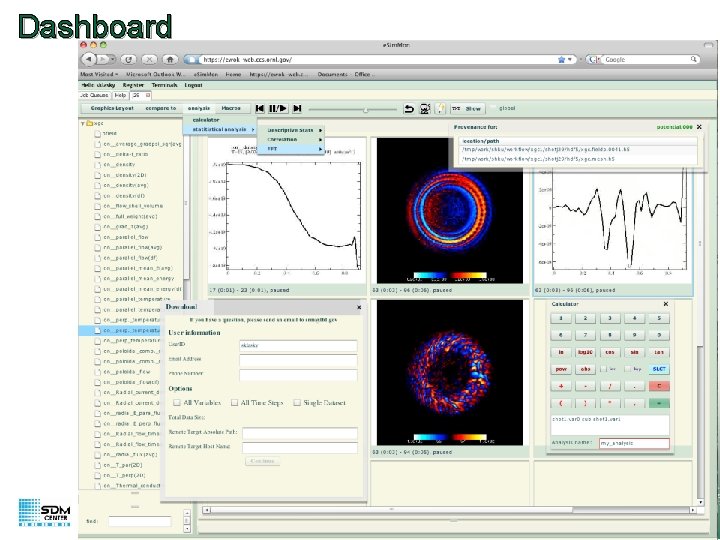

Dashboard

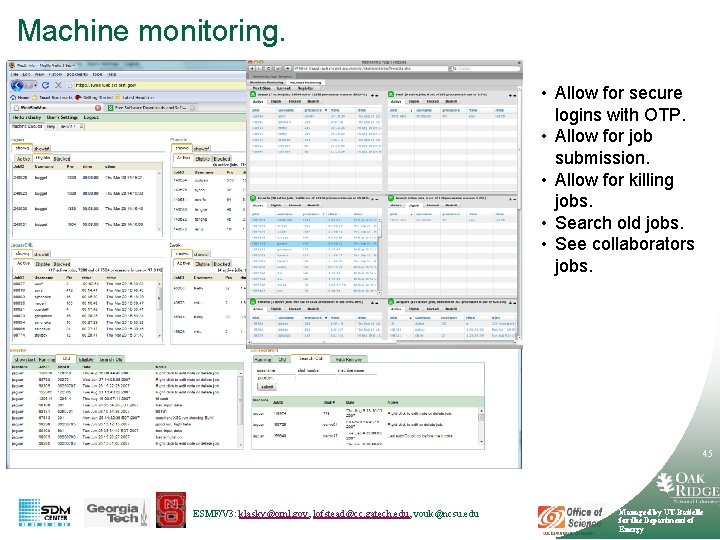

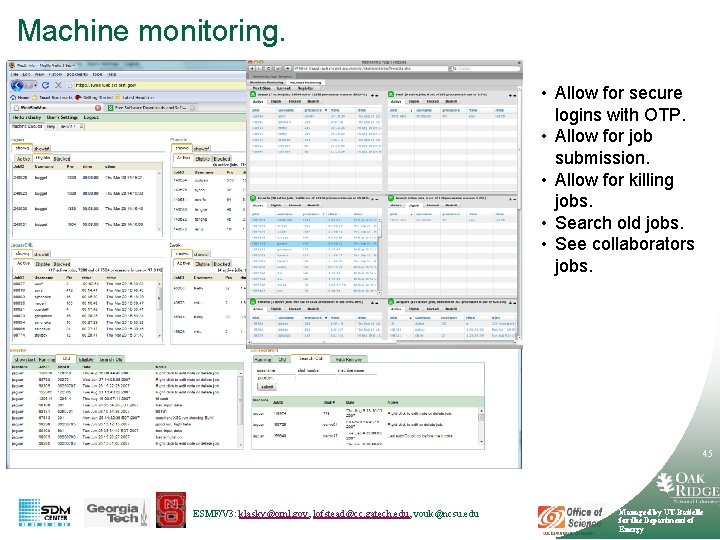

Machine monitoring

- Allow for secure logins with OTP.
- Allow for job submission.
- Allow for killing jobs.
- Search old jobs.
- See collaborators' jobs.

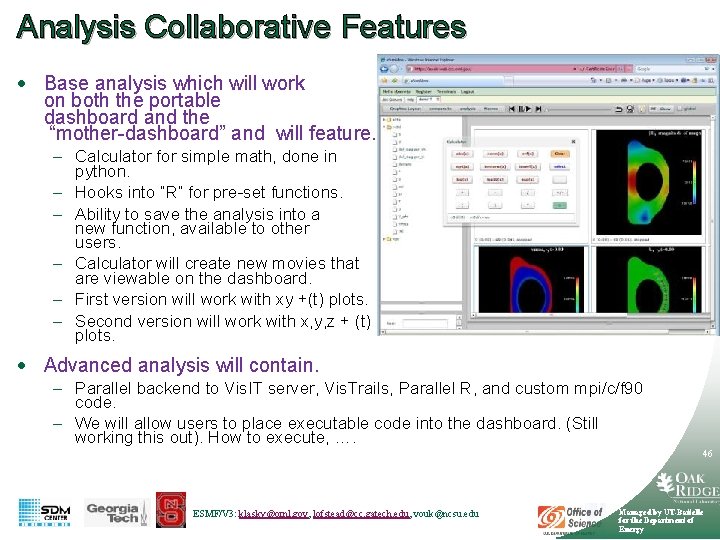

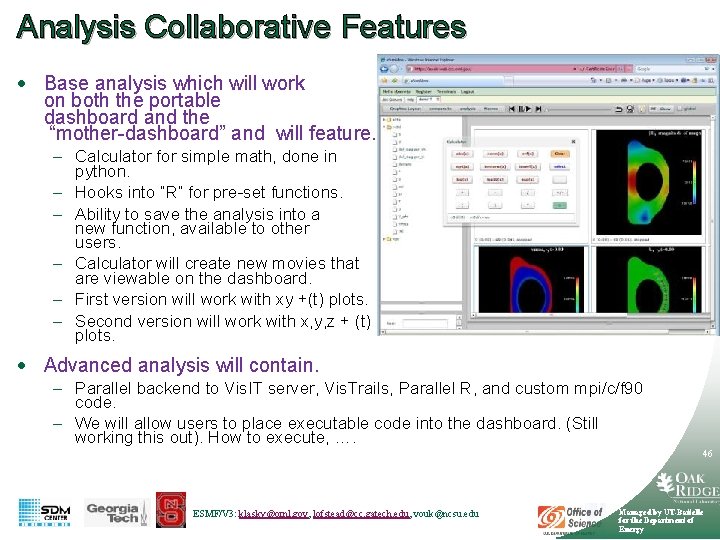

Analysis Collaborative Features

- Base analysis, which will work on both the portable dashboard and the "mother-dashboard", will feature:
  - Calculator for simple math, done in Python.
  - Hooks into "R" for pre-set functions.
  - Ability to save the analysis into a new function, available to other users.
  - Calculator will create new movies that are viewable on the dashboard.
  - First version will work with x, y + (t) plots.
  - Second version will work with x, y, z + (t) plots.
- Advanced analysis will contain:
  - Parallel backend to VisIt server, VisTrails, Parallel R, and custom MPI/C/F90 code.
  - We will allow users to place executable code into the dashboard. (Still working this out: how to execute, ….)

Conclusions

- ADIOS is an IO componentization.
  - ADIOS is being integrated into Kepler.
  - Achieved over 20 GB/sec for several codes on Jaguar.
  - Used daily by CPES researchers.
  - Can change IO implementations at runtime.
  - Metadata is contained in an XML file.
- Kepler is used daily for
  - Monitoring CPES simulations on Jaguar/Franklin/ewok.
  - Runs with 24 hour jobs on large numbers of processors.
- Dashboard uses enterprise (LAMP) technology.
  - Linux, Apache, MySQL, PHP

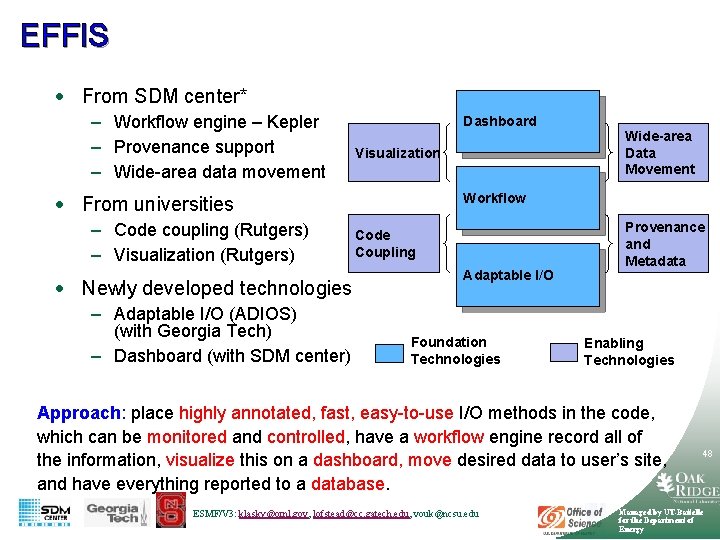

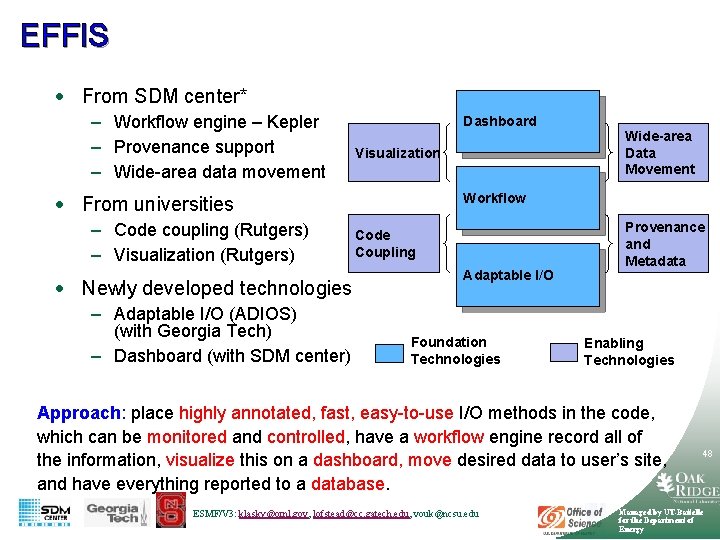

EFFIS

- From SDM center*
  - Workflow engine: Kepler
  - Provenance support
  - Wide-area data movement
- From universities
  - Code coupling (Rutgers)
  - Visualization (Rutgers)
- Newly developed technologies
  - Adaptable I/O (ADIOS) (with Georgia Tech)
  - Dashboard (with SDM center)

Diagram labels: Dashboard, Visualization, Workflow, Wide-area Data Movement, Code Coupling, Adaptable I/O, Provenance and Metadata, grouped into Foundation Technologies and Enabling Technologies.

Approach: place highly annotated, fast, easy-to-use I/O methods in the code, which can be monitored and controlled; have a workflow engine record all of the information; visualize this on a dashboard; move desired data to the user's site; and have everything reported to a database.