End to End Protocols



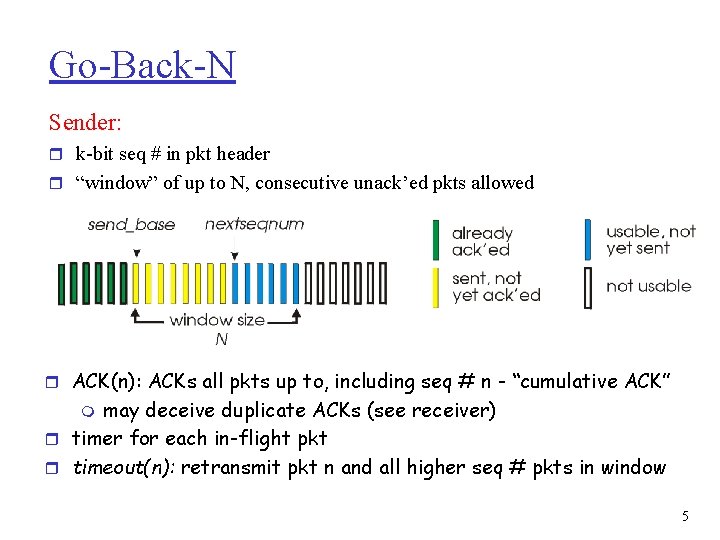

End to End Protocols

- We already saw:
  - basic protocols
  - Stop & wait (correct but low performance)
- Now:
  - Window-based protocols
    - Go Back N
    - Selective Repeat
  - TCP protocol
  - UDP protocol

Pipelined protocols

Pipelining: sender allows multiple, “in-flight”, yet-to-be-acknowledged pkts

- range of sequence numbers must be increased
- buffering at sender and/or receiver

Two generic forms of pipelined protocols: Go-Back-N, selective repeat

Go Back N (GBN)

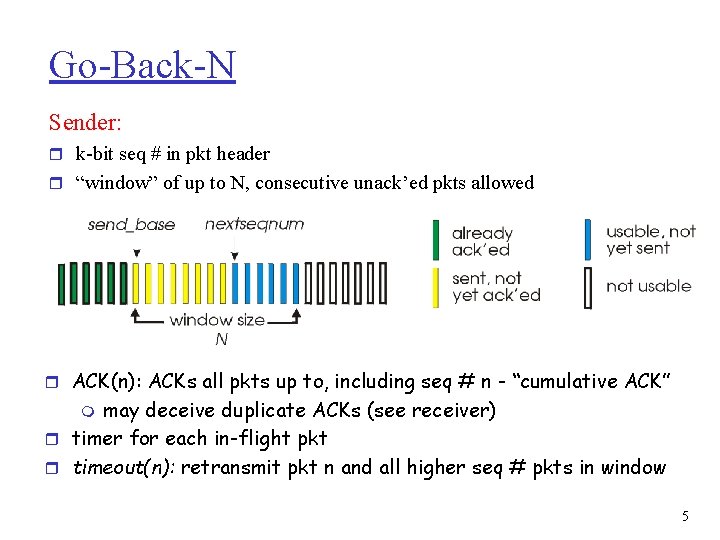

Go-Back-N

Sender:

- k-bit seq # in pkt header
- “window” of up to N consecutive unACKed pkts allowed
- ACK(n): ACKs all pkts up to and including seq # n (“cumulative ACK”)
  - may receive duplicate ACKs (see receiver)
- timer for each in-flight pkt
- timeout(n): retransmit pkt n and all higher seq # pkts in window

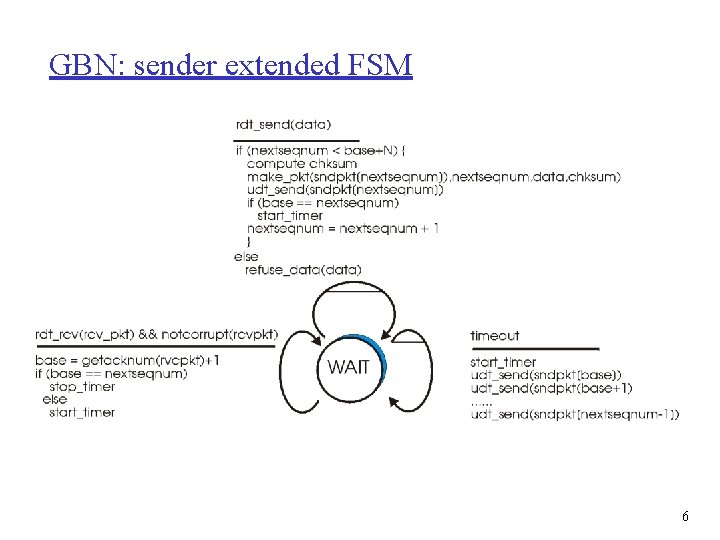

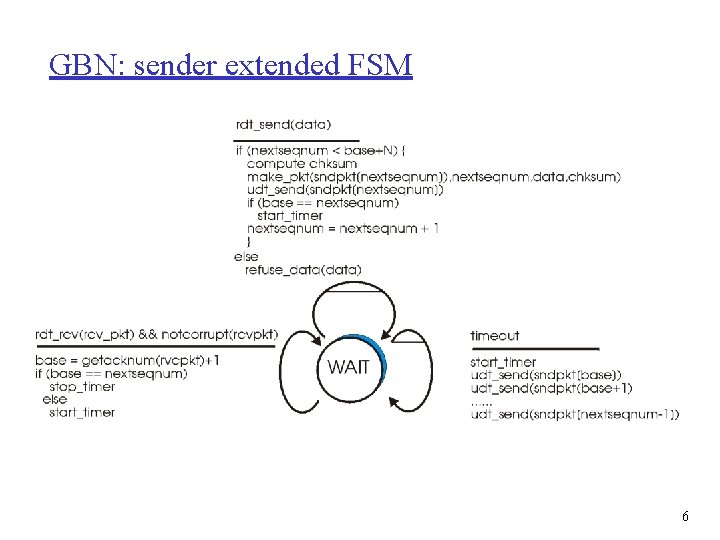

GBN: sender extended FSM

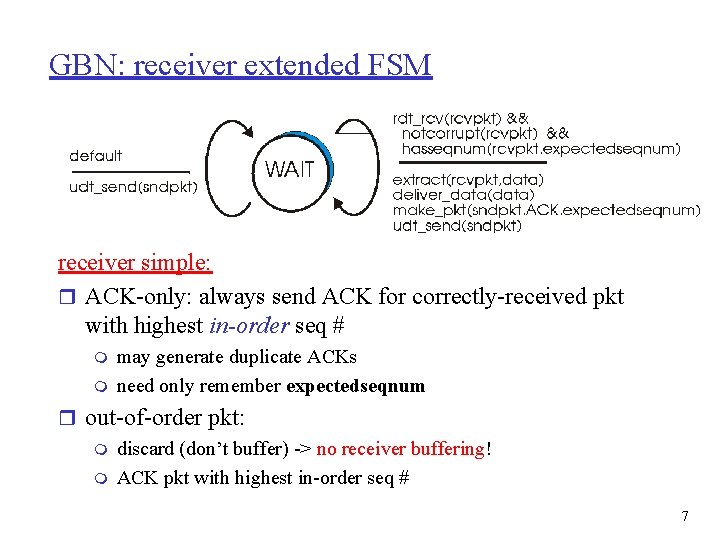

GBN: receiver extended FSM

receiver simple:

- ACK-only: always send ACK for correctly-received pkt with highest in-order seq #
  - may generate duplicate ACKs
  - need only remember expectedseqnum
- out-of-order pkt:
  - discard (don’t buffer) -> no receiver buffering!
  - ACK pkt with highest in-order seq #
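The receiver rules above can be sketched in a few lines of Python. This is a minimal illustration, not the FSM from the slide: the class name `GbnReceiver`, the `delivered` list, and the use of unbounded sequence numbers (no wraparound, no corruption check) are assumptions made for the example.

```python
class GbnReceiver:
    """Go-Back-N receiver sketch: remembers only expectedseqnum, never buffers."""

    def __init__(self):
        self.expectedseqnum = 0   # the only protocol state the receiver needs
        self.delivered = []       # data handed to the upper layer, in order

    def receive(self, seqnum, data):
        """Handle one uncorrupted packet.

        Returns the seq # that is ACKed (highest in-order seq # so far),
        or None if no packet has been received in order yet.
        """
        if seqnum == self.expectedseqnum:
            self.delivered.append(data)
            self.expectedseqnum += 1
        # Any other packet is discarded (not buffered); the ACK below is
        # then a duplicate of the previous one.
        last_in_order = self.expectedseqnum - 1
        return last_in_order if last_in_order >= 0 else None


receiver = GbnReceiver()
acks = [receiver.receive(0, "a"), receiver.receive(2, "c"), receiver.receive(1, "b")]
```

Here pkt 2 arrives before pkt 1, so it is dropped and ACK 0 is repeated; the sender has to retransmit pkt 2 later.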

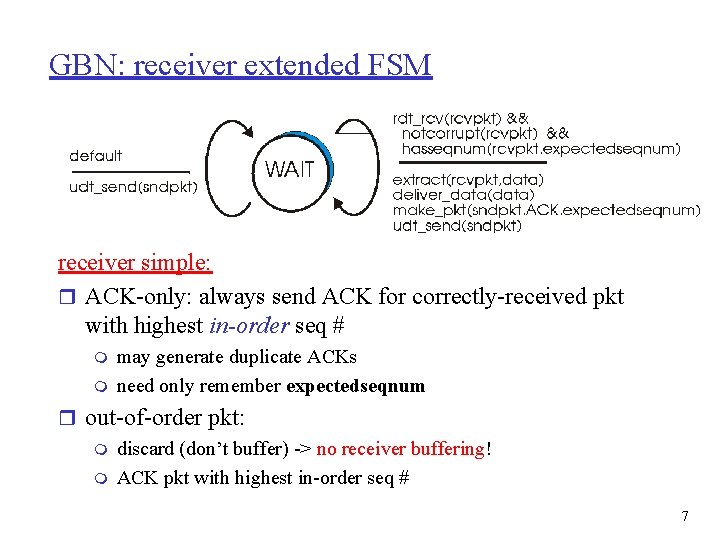

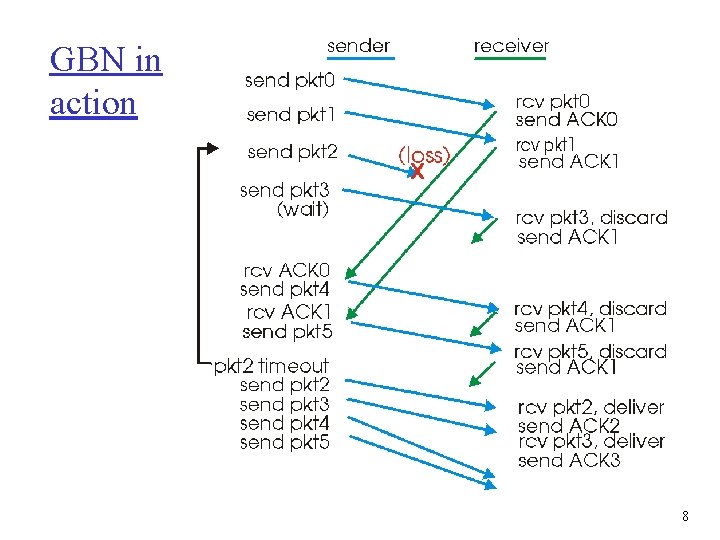

GBN in action

GBN - correctness

- Safety: the sequence numbers guarantee:
  - packets received in order
  - no gaps
  - no duplicates
- Safety follows from expectedseqnum:
  - next seg. received exactly once
- Liveness:
  - eventually a timeout occurs
  - the sender re-sends the window
  - eventually base is received correctly
- Receiver:
  - from that time on, ACKs at least base
  - eventually an ACK will get through
  - the sender will update to base (or more)

GBN - correctness

Clearing a FIFO channel (figure labels: Data k, then Data i<k impossible; Ack k, then Ack i<k impossible)

Claim: after receiving Data/ACK k, no Data/ACK i<k is received.

Sufficient to use N+1 seq. num.

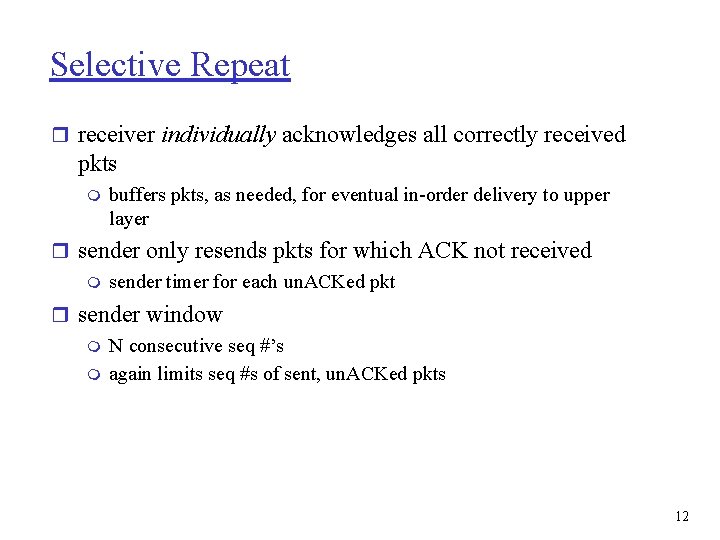

Selective Repeat

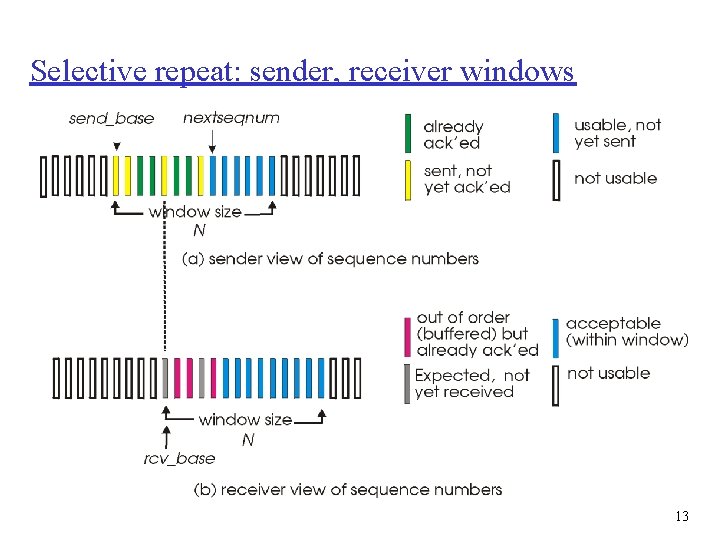

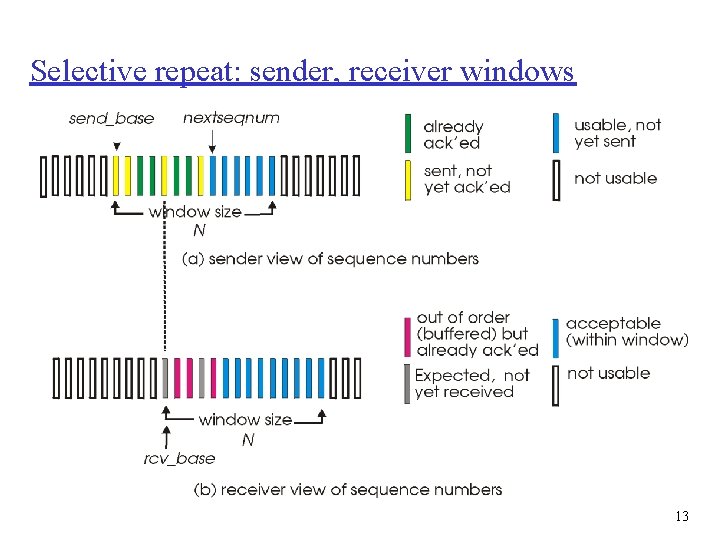

Selective Repeat

- receiver individually acknowledges all correctly received pkts
  - buffers pkts, as needed, for eventual in-order delivery to upper layer
- sender only resends pkts for which ACK not received
  - sender timer for each unACKed pkt
- sender window
  - N consecutive seq #’s
  - again limits seq #s of sent, unACKed pkts

Selective repeat: sender, receiver windows

![Selective repeat sender data from above receiver pkt n in rcvbase rcvbaseN1 r Selective repeat sender data from above : receiver pkt n in [rcvbase, rcvbase+N-1] r](https://slidetodoc.com/presentation_image_h/2949b42e4b226b6bd38aae4641920ec2/image-14.jpg)

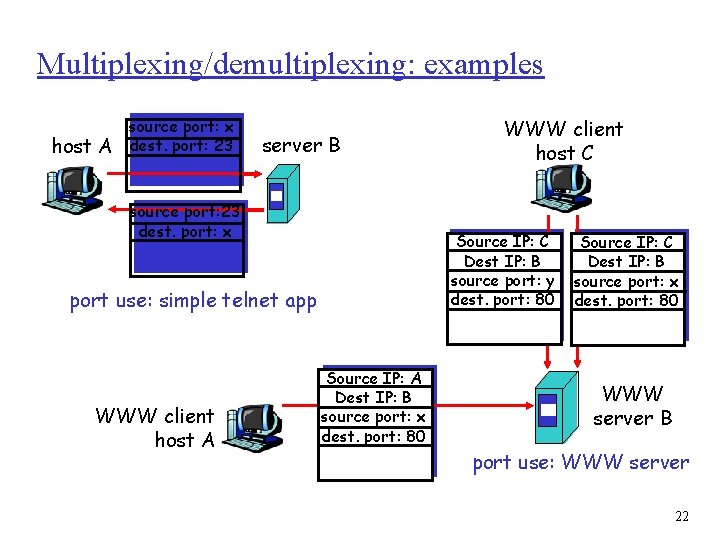

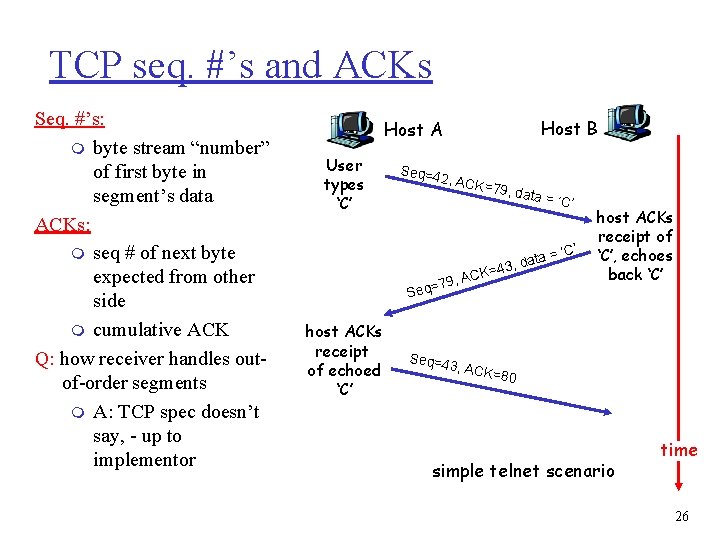

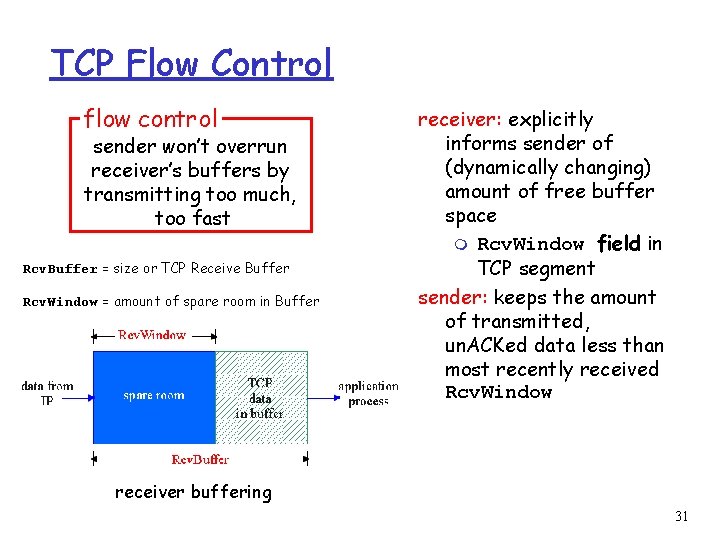

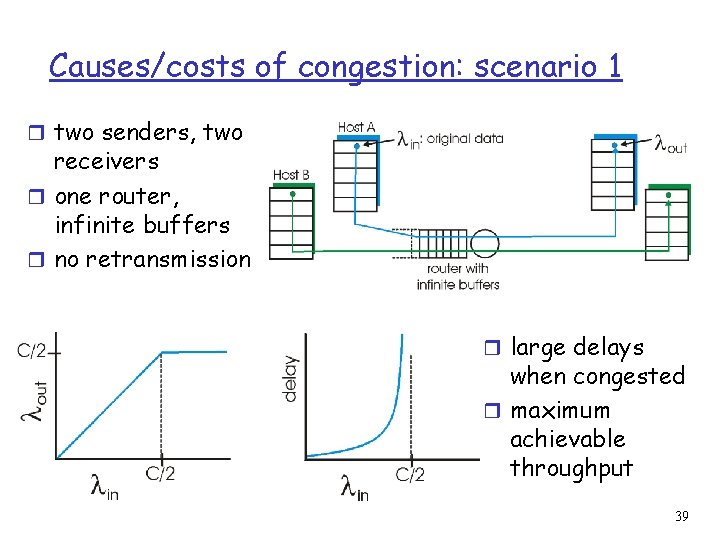

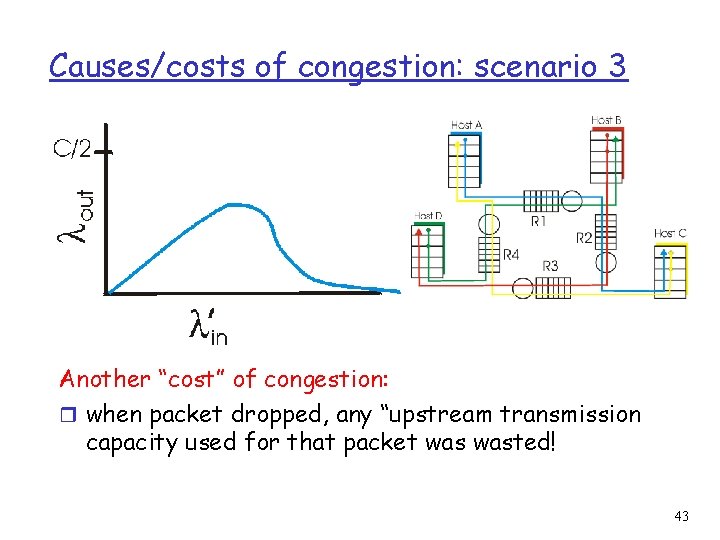

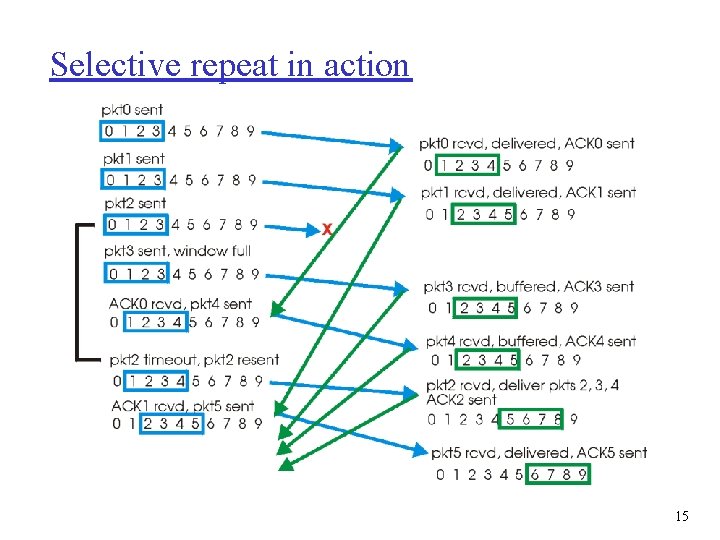

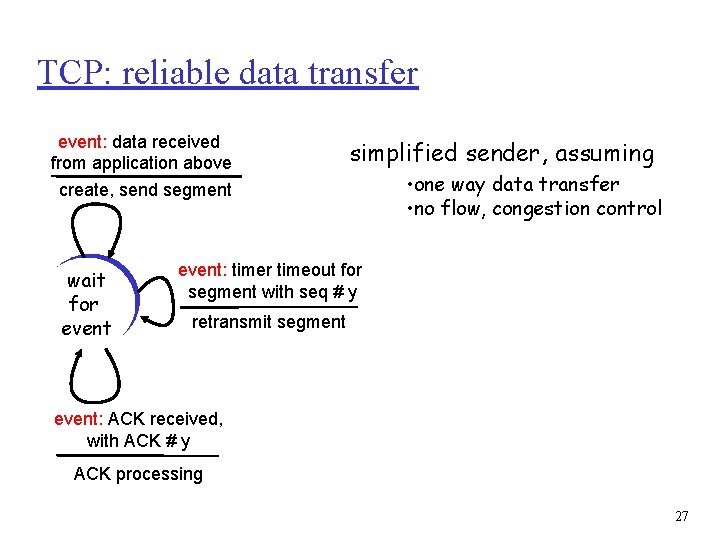

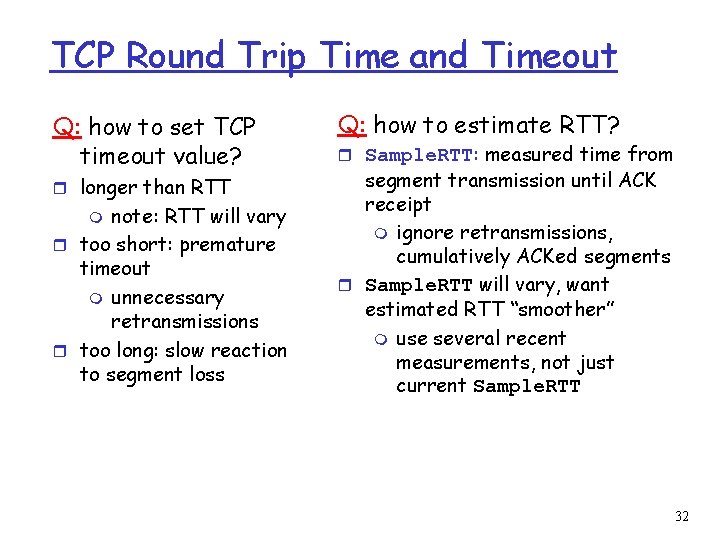

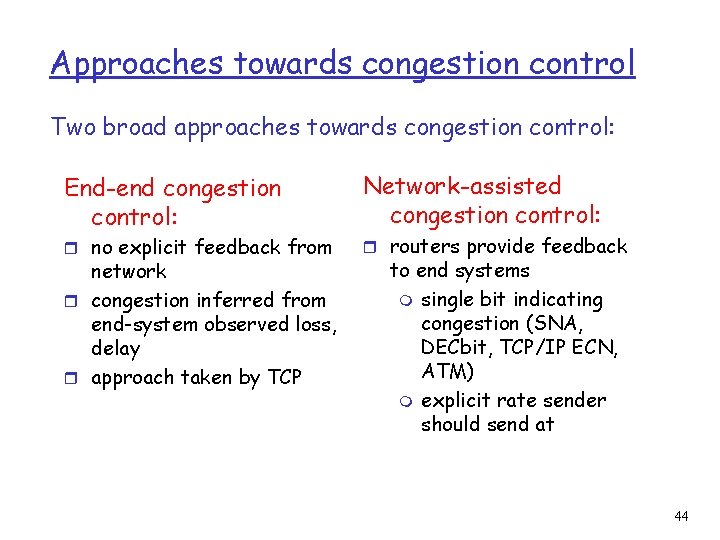

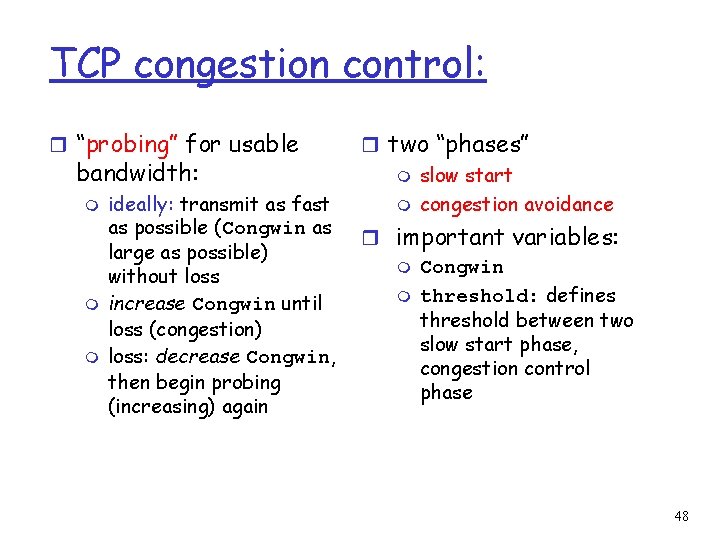

Selective repeat

sender:

- data from above:
  - if next available seq # in window, send pkt
- timeout(n):
  - resend pkt n, restart timer
- ACK(n) in [sendbase, sendbase+N-1]:
  - mark pkt n as received
  - if n smallest unACKed pkt, advance window base to next unACKed seq #

receiver:

- pkt n in [rcvbase, rcvbase+N-1]:
  - send ACK(n)
  - out-of-order: buffer
  - in-order: deliver (also deliver buffered, in-order pkts), advance window to next not-yet-received pkt
- pkt n in [rcvbase-N, rcvbase-1]:
  - ACK(n)
- otherwise:
  - ignore
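The receiver side of these rules can be sketched as follows. This is only an illustration under stated assumptions: the names `SrReceiver`, `buffer`, and `delivered` are made up for the example, sequence numbers are unbounded integers (no wraparound), and packets are assumed to arrive uncorrupted.

```python
class SrReceiver:
    """Selective-repeat receiver sketch with unbounded sequence numbers."""

    def __init__(self, window_size):
        self.N = window_size
        self.rcvbase = 0
        self.buffer = {}      # out-of-order packets waiting for the gap to fill
        self.delivered = []   # data passed to the upper layer, in order

    def receive(self, n, data):
        """Return the ACK number to send, or None when the packet is ignored."""
        if self.rcvbase <= n <= self.rcvbase + self.N - 1:
            self.buffer[n] = data
            # Deliver the in-order run starting at rcvbase and slide the window.
            while self.rcvbase in self.buffer:
                self.delivered.append(self.buffer.pop(self.rcvbase))
                self.rcvbase += 1
            return n
        if self.rcvbase - self.N <= n <= self.rcvbase - 1:
            return n          # already delivered: re-ACK so the sender can advance
        return None           # outside both ranges: ignore
```

Re-ACKing packets in [rcvbase-N, rcvbase-1] matters because the earlier ACK may have been lost; without it the sender's window could never move forward.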

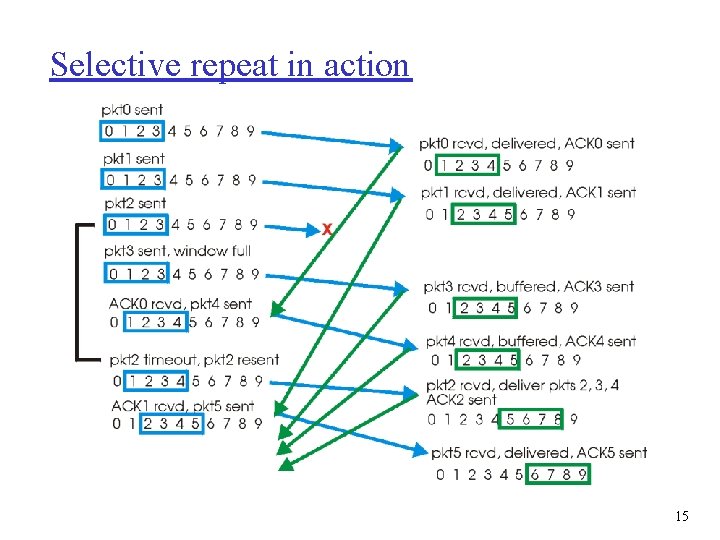

Selective repeat in action

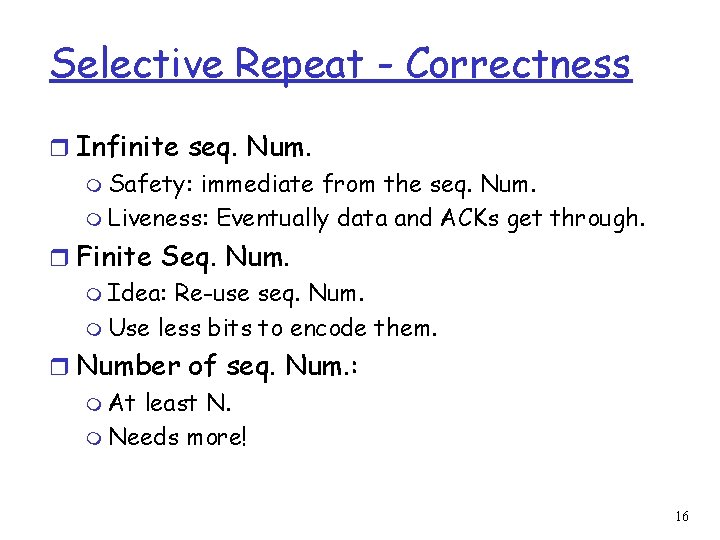

Selective Repeat - Correctness

- Infinite seq. num.
  - Safety: immediate from the seq. num.
  - Liveness: eventually data and ACKs get through.
- Finite seq. num.
  - Idea: re-use seq. num.
  - Use fewer bits to encode them.
- Number of seq. num.:
  - At least N.
  - Needs more!

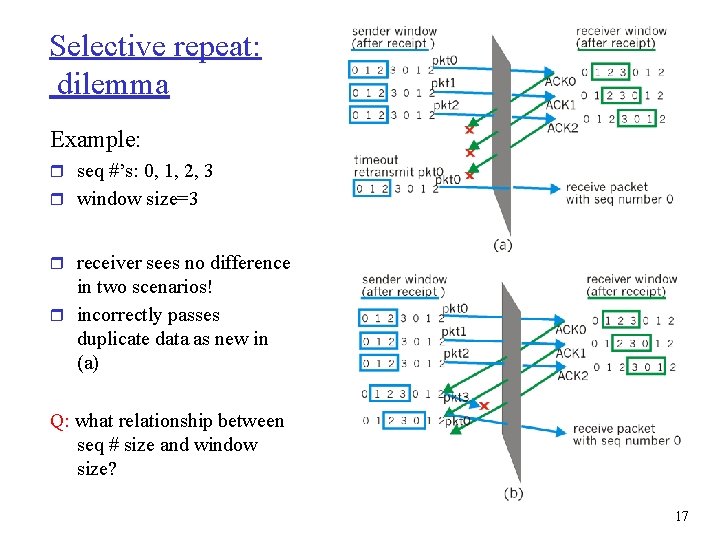

Selective repeat: dilemma

Example:

- seq #’s: 0, 1, 2, 3
- window size = 3
- receiver sees no difference in two scenarios!
- incorrectly passes duplicate data as new in (a)

Q: what relationship between seq # size and window size?
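One way to explore the question is to check whether the receiver's new window can contain a sequence number that the sender might still retransmit from the old window. The sketch below assumes the worst case from the example: the receiver has accepted a full window but every ACK was lost, so the sender resends the whole old window. The function name `windows_overlap` is hypothetical.

```python
def windows_overlap(seq_space, window_size):
    """True if a retransmitted old packet can be mistaken for a new one.

    Worst case: the receiver accepted pkts 0..N-1 (rcvbase is now N) while
    all ACKs were lost, so the sender may still resend seq #s 0..N-1.
    """
    rcvbase = window_size
    new_window = {(rcvbase + i) % seq_space for i in range(window_size)}
    old_window = {i % seq_space for i in range(window_size)}
    return bool(new_window & old_window)


print(windows_overlap(4, 3))  # the slide's example: seq #'s 0..3, window 3
print(windows_overlap(4, 2))  # same seq # space, smaller window
```

In this worst-case model the two windows stay disjoint exactly when the sequence number space is at least twice the window size, which is the usual answer given for selective repeat.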

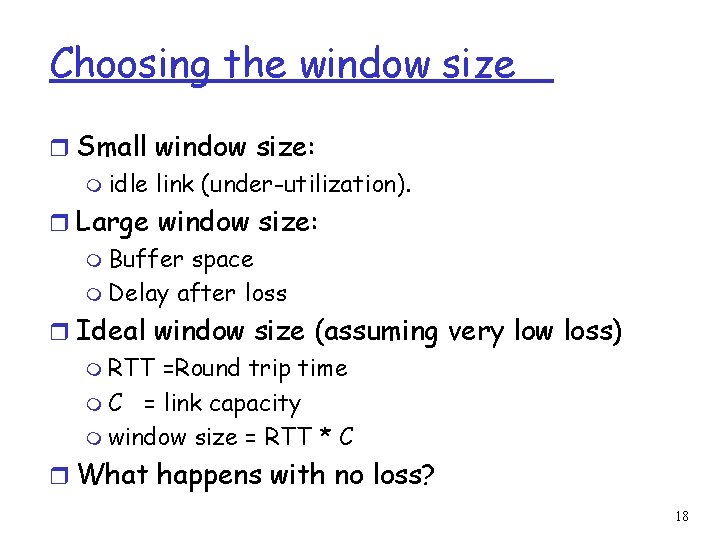

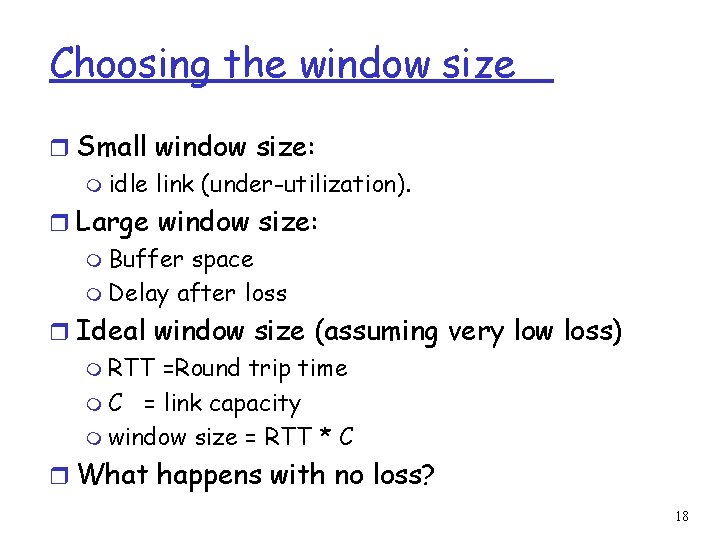

Choosing the window size

- Small window size:
  - idle link (under-utilization)
- Large window size:
  - buffer space
  - delay after loss
- Ideal window size (assuming very low loss):
  - RTT = round trip time
  - C = link capacity
  - window size = RTT * C
- What happens with no loss?
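As a quick worked example of window size = RTT * C, the sketch below converts the product into a packet count. The function name, the millisecond/bit units, and the sample numbers (100 ms RTT, 10 Mbit/s link, 8000-bit packets) are assumptions chosen for illustration.

```python
import math

def ideal_window_packets(rtt_ms, capacity_bps, packet_bits):
    """Packets needed in flight to keep the link busy for one RTT."""
    bdp_bits = rtt_ms * capacity_bps / 1000   # RTT * C, in bits
    return math.ceil(bdp_bits / packet_bits)

window = ideal_window_packets(rtt_ms=100, capacity_bps=10_000_000, packet_bits=8000)
print(window)  # 125
```

With these numbers a stop-and-wait sender (window of 1) would use roughly 1/125 of the link, which is the under-utilization the first bullet refers to.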

End to End Protocols: Multiplexing & Demultiplexing

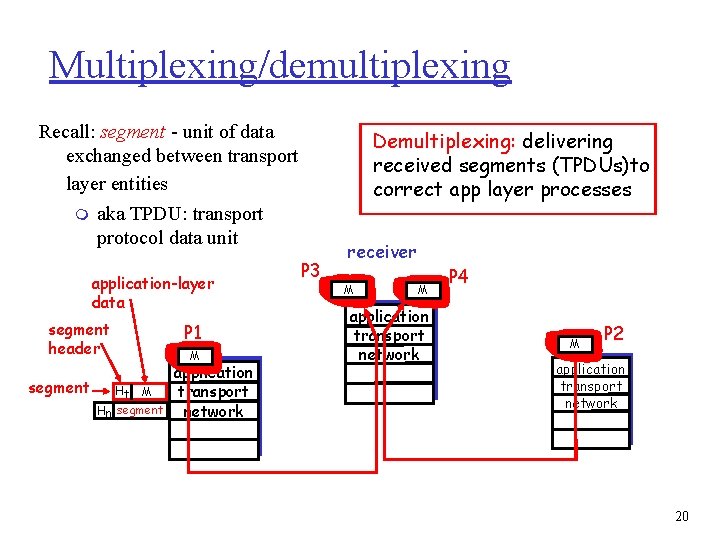

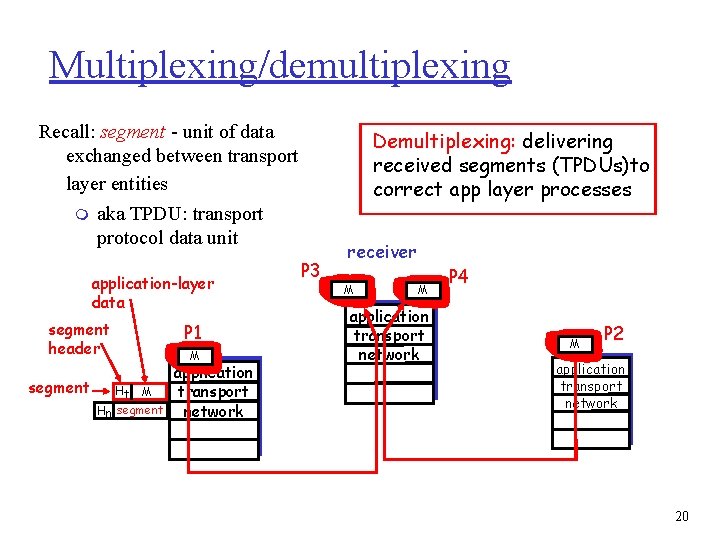

Multiplexing/demultiplexing

Recall: segment - unit of data exchanged between transport layer entities

- aka TPDU: transport protocol data unit

Demultiplexing: delivering received segments (TPDUs) to correct app layer processes

Figure labels: application-layer data (M), segment header (Ht), network header (Hn), processes P1 to P4, application/transport/network layers, receiver

Multiplexing/demultiplexing

Multiplexing: gathering data from multiple app processes, enveloping data with header (later used for demultiplexing)

multiplexing/demultiplexing:

- based on sender, receiver port numbers, IP addresses
  - source, dest port #s in each segment
  - recall: well-known port numbers for specific applications

TCP/UDP segment format (32 bits wide):

- source port #, dest port #
- other header fields
- application data (message)

Multiplexing/demultiplexing: examples

port use: simple telnet app

- host A to server B: source port: x, dest. port: 23
- server B to host A: source port: 23, dest. port: x

port use: WWW server

- WWW client host A to WWW server B: Source IP: A, Dest IP: B, source port: x, dest. port: 80
- WWW client host C to WWW server B: Source IP: C, Dest IP: B, source port: y, dest. port: 80
- WWW client host C to WWW server B: Source IP: C, Dest IP: B, source port: x, dest. port: 80
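The WWW server example can be mimicked with a lookup table keyed by the four values the slide lists. This is a toy sketch, not how any real operating system stores sockets: `demux_key`, `connections`, `deliver`, and the concrete port numbers standing in for x and y are all assumptions.

```python
# Hypothetical concrete values standing in for the slide's symbolic ports x and y.
X, Y = 1025, 1026

def demux_key(src_ip, src_port, dst_ip, dst_port):
    """Key used to pick the receiving process for an arriving segment."""
    return (src_ip, src_port, dst_ip, dst_port)

# Three connections to WWW server B, all with dest. port 80.
connections = {
    demux_key("A", X, "B", 80): "server process for client A",
    demux_key("C", Y, "B", 80): "server process for client C, port y",
    demux_key("C", X, "B", 80): "server process for client C, port x",
}

def deliver(src_ip, src_port, dst_ip, dst_port):
    """Return the process that should get the segment, or None if unknown."""
    return connections.get(demux_key(src_ip, src_port, dst_ip, dst_port))
```

Hosts A and C both use source port x, yet the segments stay distinguishable because the source IP address is part of the key.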

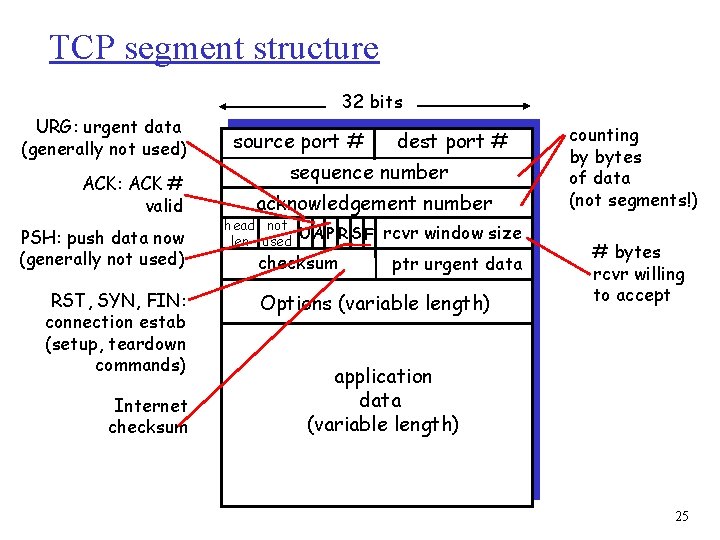

TCP Protocol

TCP: Overview

RFCs: 793, 1122, 1323, 2018, 2581

- point-to-point:
  - one sender, one receiver
- reliable, in-order byte stream:
  - no “message boundaries”
- pipelined:
  - TCP congestion and flow control set window size
- full duplex data:
  - bi-directional data flow in same connection
  - MSS: maximum segment size
- connection-oriented:
  - handshaking (exchange of control msgs) init’s sender, receiver state before data exchange
- flow controlled:
  - sender will not overwhelm receiver

TCP segment structure

Fields (each row 32 bits wide):

- source port #, dest port #
- sequence number
- acknowledgement number
- head len, not used, flag bits U A P R S F, rcvr window size
- checksum, ptr urgent data
- Options (variable length)
- application data (variable length)

Notes:

- URG: urgent data (generally not used)
- ACK: ACK # valid
- PSH: push data now (generally not used)
- RST, SYN, FIN: connection estab (setup, teardown commands)
- checksum: Internet checksum
- sequence and acknowledgement numbers: counting by bytes of data (not segments!)
- rcvr window size: # bytes rcvr willing to accept

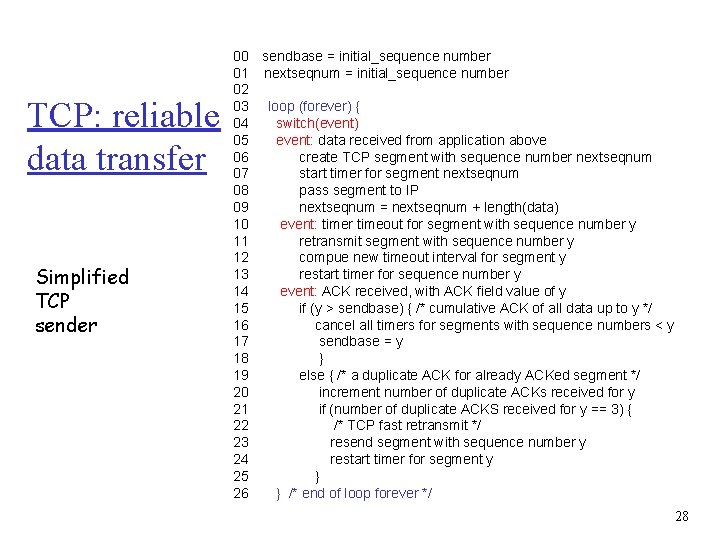

TCP seq. #’s and ACKs

Seq. #’s:

- byte stream “number” of first byte in segment’s data

ACKs:

- seq # of next byte expected from other side
- cumulative ACK

Q: how receiver handles out-of-order segments

- A: TCP spec doesn’t say, up to implementor

Simple telnet scenario:

- User at Host A types ‘C’: A sends Seq=42, ACK=79, data = ‘C’
- Host B ACKs receipt of ‘C’, echoes back ‘C’: B sends Seq=79, ACK=43, data = ‘C’
- Host A ACKs receipt of echoed ‘C’: A sends Seq=43, ACK=80

TCP: reliable data transfer

Simplified sender, assuming:

- one way data transfer
- no flow, congestion control

The sender waits for an event:

- event: data received from application above
  - create, send segment
- event: timer timeout for segment with seq # y
  - retransmit segment
- event: ACK received, with ACK # y
  - ACK processing

TCP: reliable data transfer

Simplified TCP sender:

    sendbase = initial_sequence_number
    nextseqnum = initial_sequence_number

    loop (forever) {
      switch (event) {

        event: data received from application above
          create TCP segment with sequence number nextseqnum
          start timer for segment nextseqnum
          pass segment to IP
          nextseqnum = nextseqnum + length(data)

        event: timer timeout for segment with sequence number y
          retransmit segment with sequence number y
          compute new timeout interval for segment y
          restart timer for sequence number y

        event: ACK received, with ACK field value of y
          if (y > sendbase) { /* cumulative ACK of all data up to y */
            cancel all timers for segments with sequence numbers < y
            sendbase = y
          }
          else { /* a duplicate ACK for already ACKed segment */
            increment number of duplicate ACKs received for y
            if (number of duplicate ACKs received for y == 3) {
              /* TCP fast retransmit */
              resend segment with sequence number y
              restart timer for segment y
            }
          }
      }
    } /* end of loop forever */

![TCP ACK generation RFC 1122 RFC 2581 Event TCP Receiver action inorder segment arrival TCP ACK generation [RFC 1122, RFC 2581] Event TCP Receiver action in-order segment arrival,](https://slidetodoc.com/presentation_image_h/2949b42e4b226b6bd38aae4641920ec2/image-29.jpg)

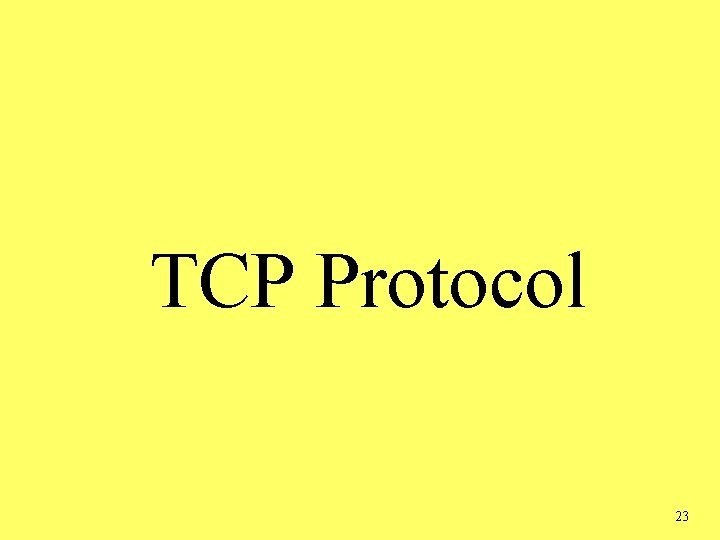

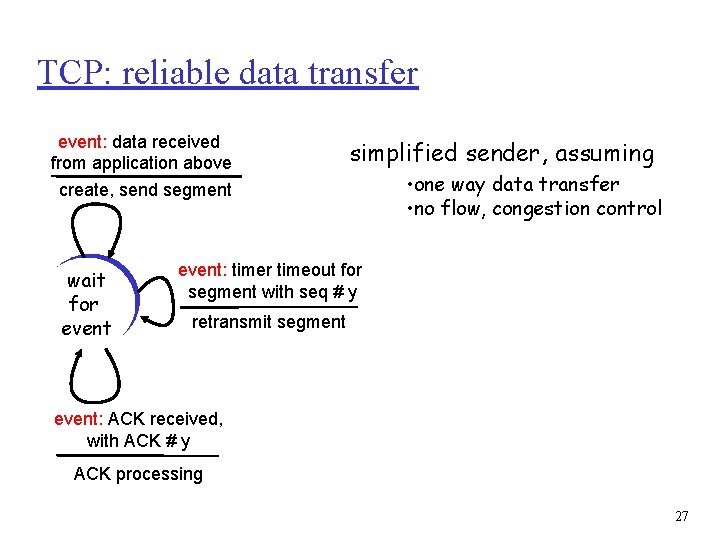

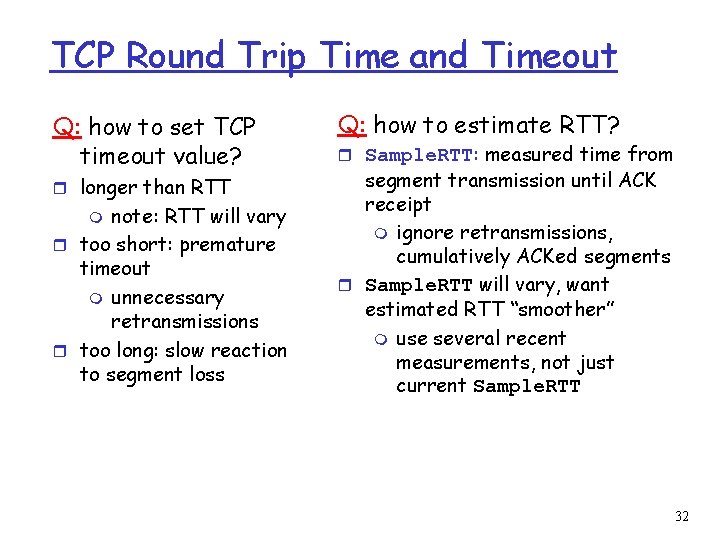

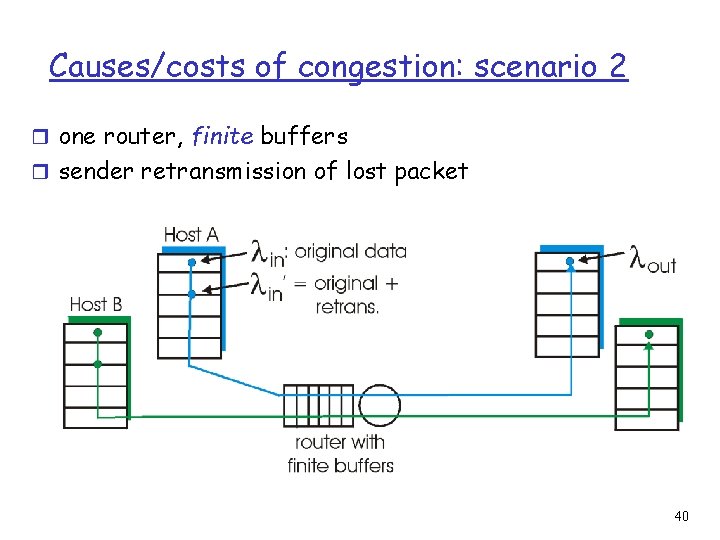

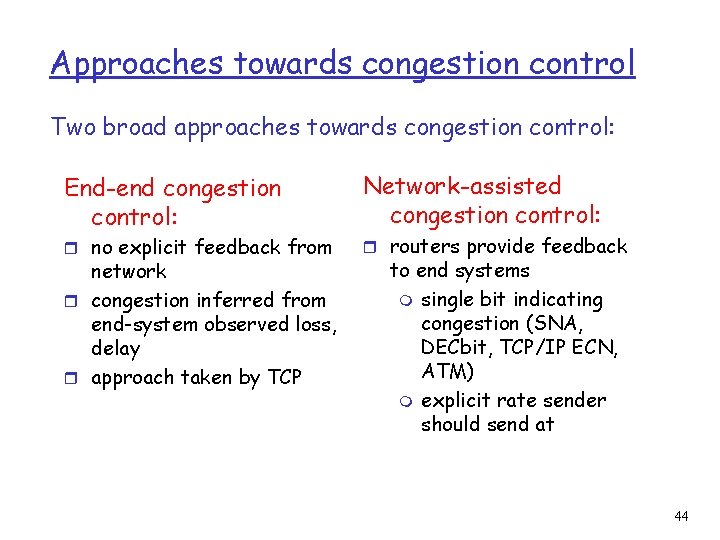

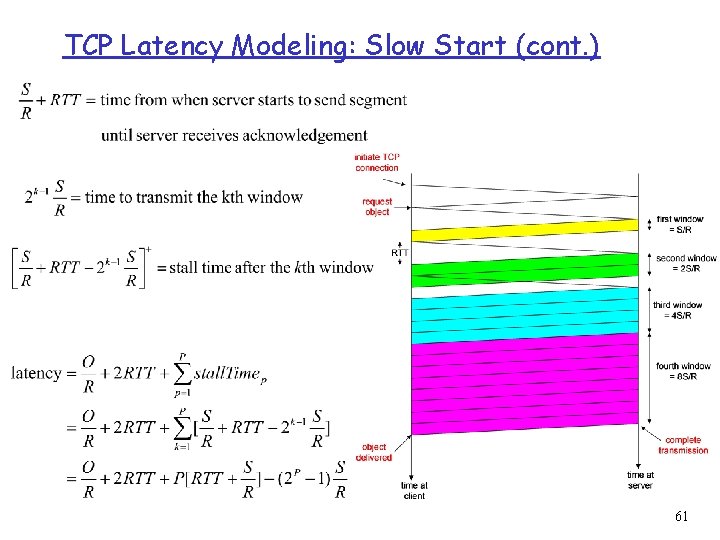

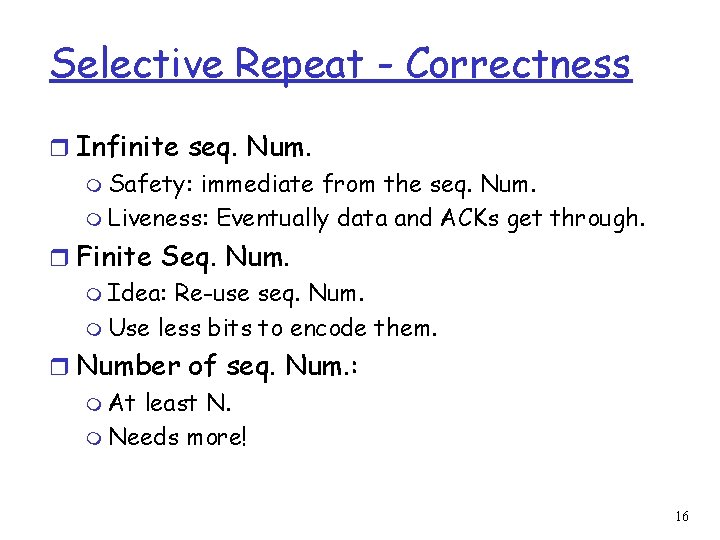

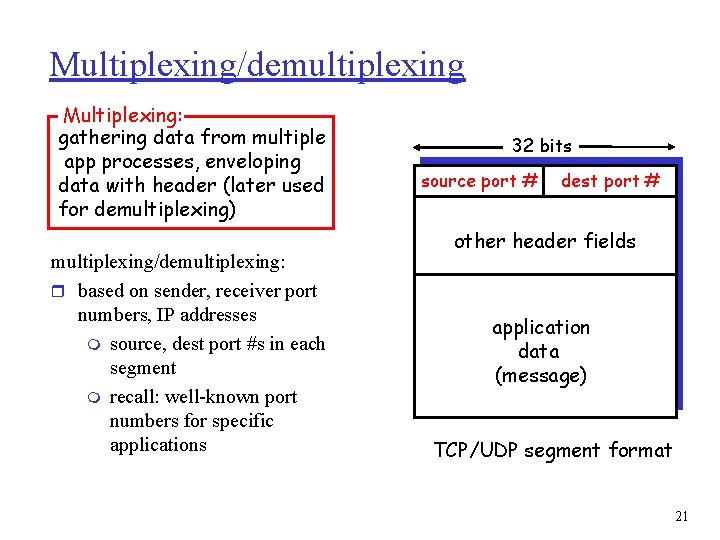

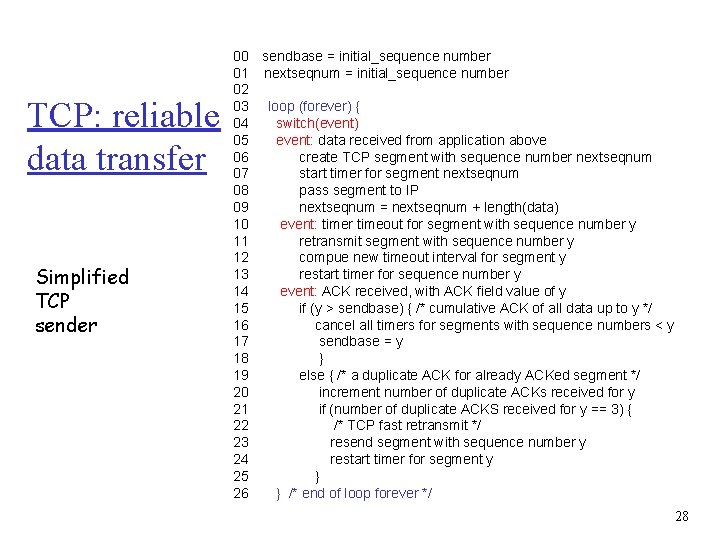

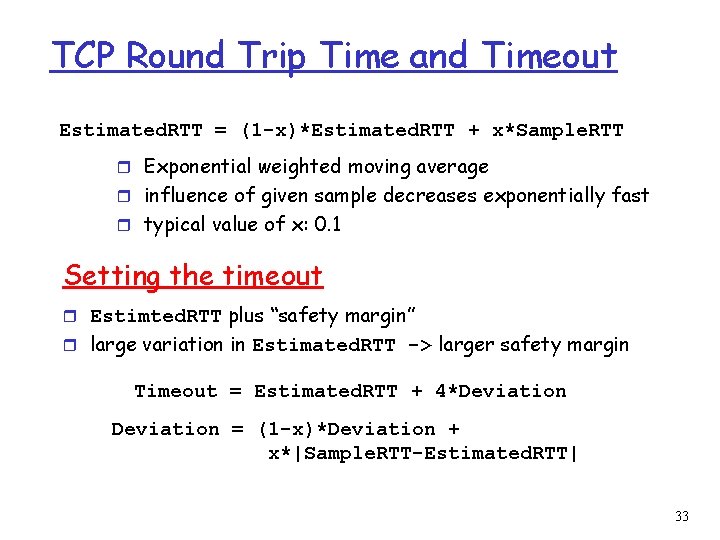

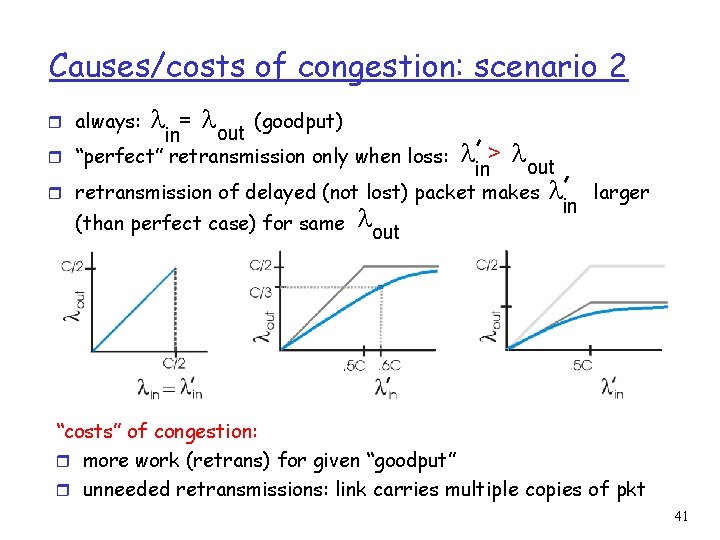

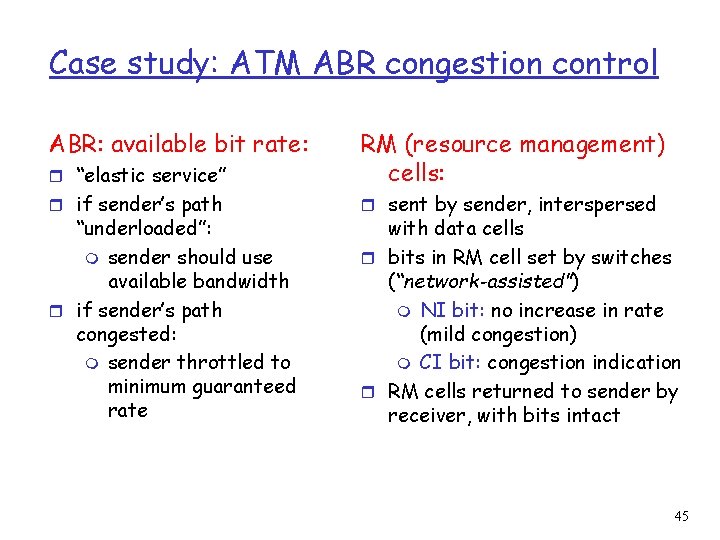

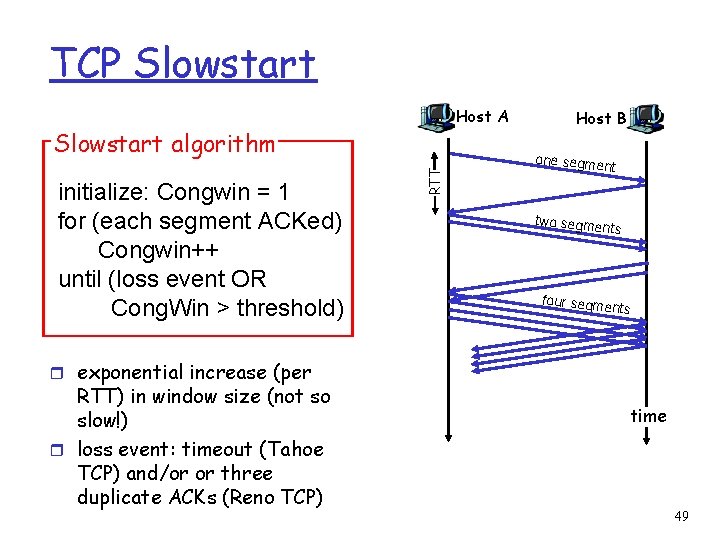

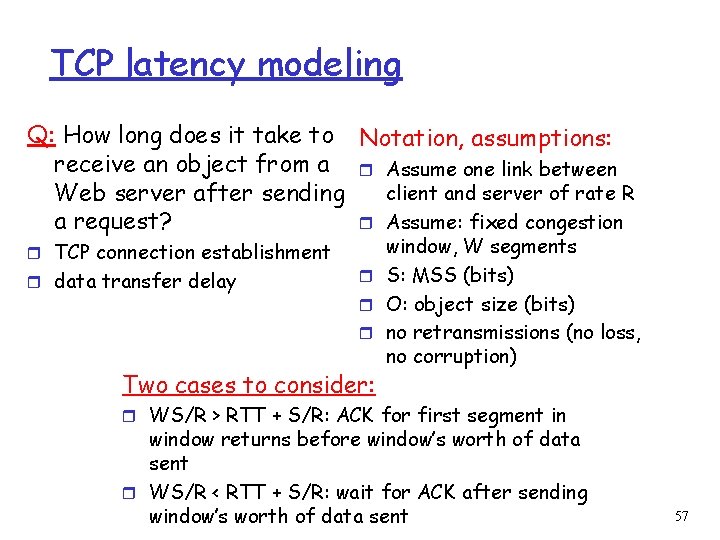

TCP ACK generation [RFC 1122, RFC 2581]

| Event | TCP Receiver action |
| --- | --- |
| in-order segment arrival, no gaps, everything else already ACKed | delayed ACK. Wait up to 500 ms for next segment. If no next segment, send ACK |
| in-order segment arrival, no gaps, one delayed ACK pending | immediately send single cumulative ACK |
| out-of-order segment arrival, higher-than-expected seq. #, gap detected | send duplicate ACK, indicating seq. # of next expected byte |
| arrival of segment that partially or completely fills gap | immediate ACK if segment starts at lower end of gap |

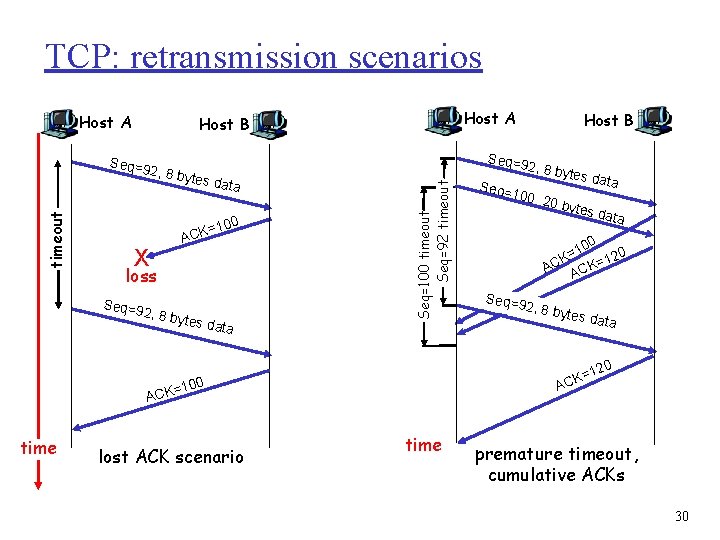

TCP: retransmission scenarios

Lost ACK scenario:

- Host A sends Seq=92, 8 bytes data
- Host B replies ACK=100, which is lost (X)
- Seq=92 timeout at Host A: A resends Seq=92, 8 bytes data
- Host B replies ACK=100

Premature timeout, cumulative ACKs:

- Host A sends Seq=92, 8 bytes data, then Seq=100, 20 bytes data
- Host B replies ACK=100, then ACK=120
- Seq=92 timeout fires at Host A before the ACK arrives: A resends Seq=92, 8 bytes data
- Host B replies with cumulative ACK=120

TCP Flow Control

flow control: sender won’t overrun receiver’s buffers by transmitting too much, too fast

- RcvBuffer = size of TCP Receive Buffer
- RcvWindow = amount of spare room in Buffer

receiver: explicitly informs sender of (dynamically changing) amount of free buffer space

- RcvWindow field in TCP segment

sender: keeps the amount of transmitted, unACKed data less than most recently received RcvWindow

Figure: receiver buffering

TCP Round Trip Time and Timeout

Q: how to set TCP timeout value?

- longer than RTT
  - note: RTT will vary
- too short: premature timeout
  - unnecessary retransmissions
- too long: slow reaction to segment loss

Q: how to estimate RTT?

- SampleRTT: measured time from segment transmission until ACK receipt
  - ignore retransmissions, cumulatively ACKed segments
- SampleRTT will vary, want estimated RTT “smoother”
  - use several recent measurements, not just current SampleRTT

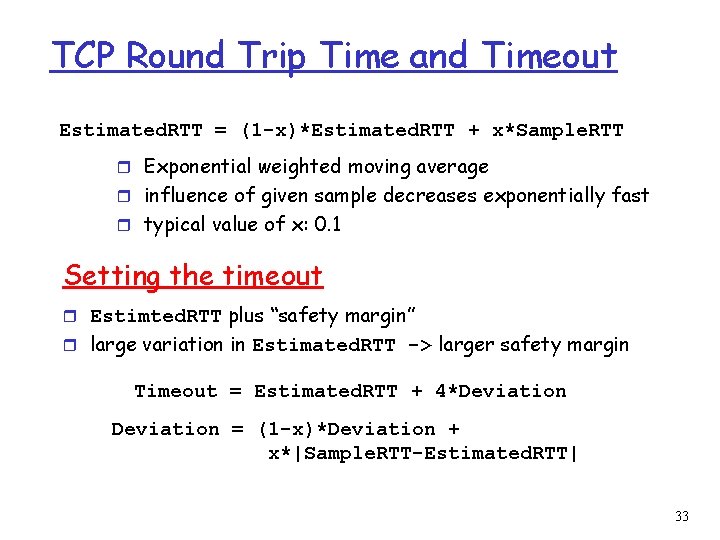

TCP Round Trip Time and Timeout

$$\text{EstimatedRTT} = (1 - x)\cdot\text{EstimatedRTT} + x\cdot\text{SampleRTT}$$

- Exponential weighted moving average
- influence of given sample decreases exponentially fast
- typical value of x: 0.1

Setting the timeout

- EstimatedRTT plus “safety margin”
- large variation in EstimatedRTT -> larger safety margin

$$\text{Timeout} = \text{EstimatedRTT} + 4\cdot\text{Deviation}$$

$$\text{Deviation} = (1 - x)\cdot\text{Deviation} + x\cdot|\text{SampleRTT} - \text{EstimatedRTT}|$$
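The update rules for EstimatedRTT, Deviation, and Timeout translate almost directly into code. The sketch below is one possible reading: the class name `RttEstimator`, seeding the estimate with the first sample, starting Deviation at 0, and updating EstimatedRTT before Deviation are assumptions that the slide does not specify.

```python
class RttEstimator:
    """EWMA-based timeout estimator following the slide's three formulas."""

    def __init__(self, first_sample, x=0.1):
        self.x = x                          # weight of the newest sample
        self.estimated_rtt = first_sample   # assumption: seed with first sample
        self.deviation = 0.0                # assumption: no variation seen yet

    def update(self, sample_rtt):
        """Fold in one SampleRTT and return the new timeout value."""
        x = self.x
        self.estimated_rtt = (1 - x) * self.estimated_rtt + x * sample_rtt
        self.deviation = (1 - x) * self.deviation + x * abs(sample_rtt - self.estimated_rtt)
        return self.timeout()

    def timeout(self):
        return self.estimated_rtt + 4 * self.deviation


est = RttEstimator(first_sample=100.0)   # times in milliseconds
print(est.update(200.0))                 # one slow sample widens the margin
```

A single 200 ms sample moves the estimate only from 100 ms to about 110 ms, but the deviation term raises the timeout to roughly 146 ms, which is the “larger safety margin” behaviour described above.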

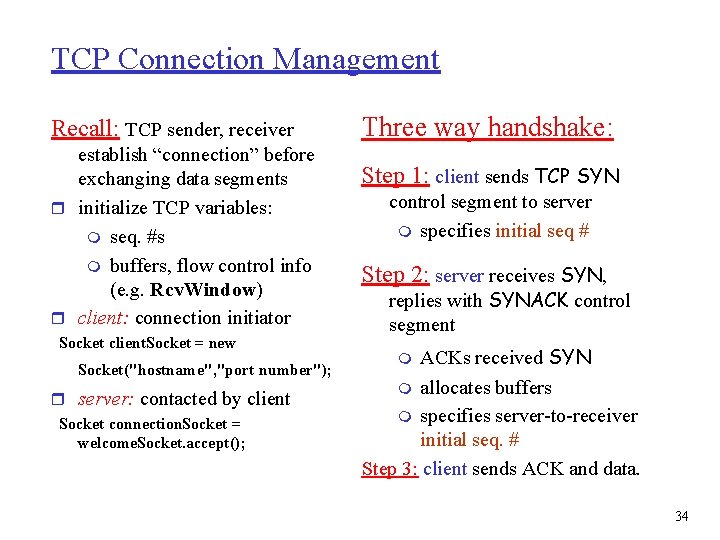

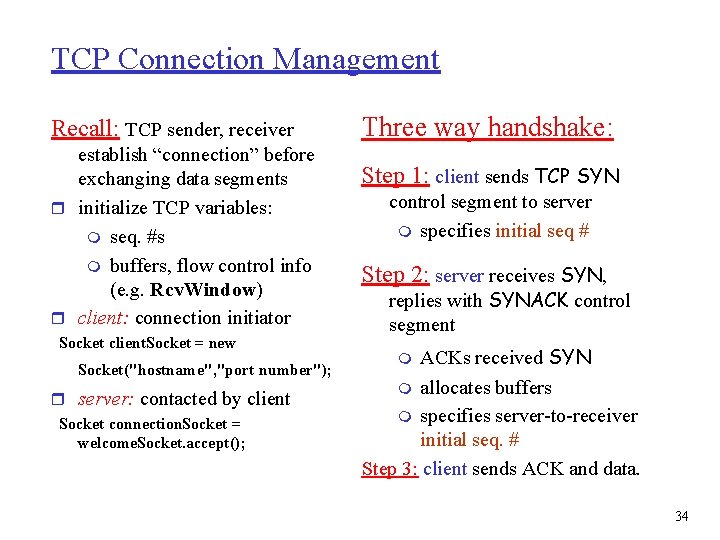

TCP Connection Management

Recall: TCP sender, receiver establish “connection” before exchanging data segments

- initialize TCP variables:
  - seq. #s
  - buffers, flow control info (e.g. RcvWindow)
- client: connection initiator
  `Socket clientSocket = new Socket("hostname", "port number");`
- server: contacted by client
  `Socket connectionSocket = welcomeSocket.accept();`

Three way handshake:

- Step 1: client sends TCP SYN control segment to server
  - specifies initial seq #
- Step 2: server receives SYN, replies with SYNACK control segment
  - ACKs received SYN
  - allocates buffers
  - specifies server-to-client initial seq. #
- Step 3: client sends ACK and data.

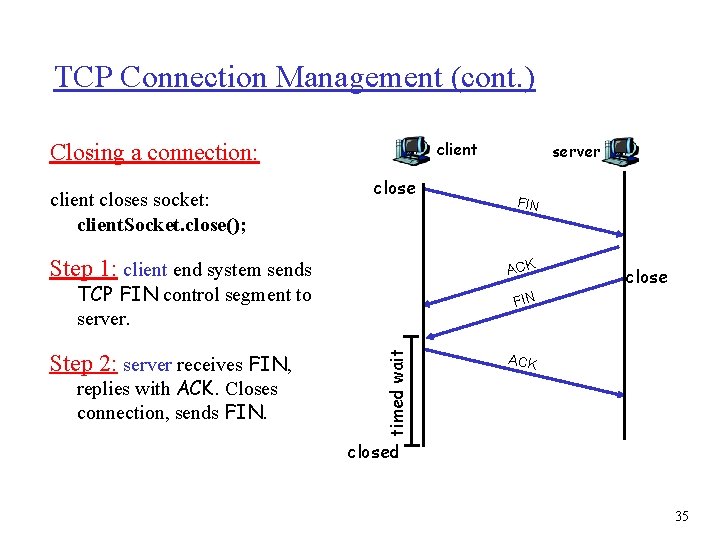

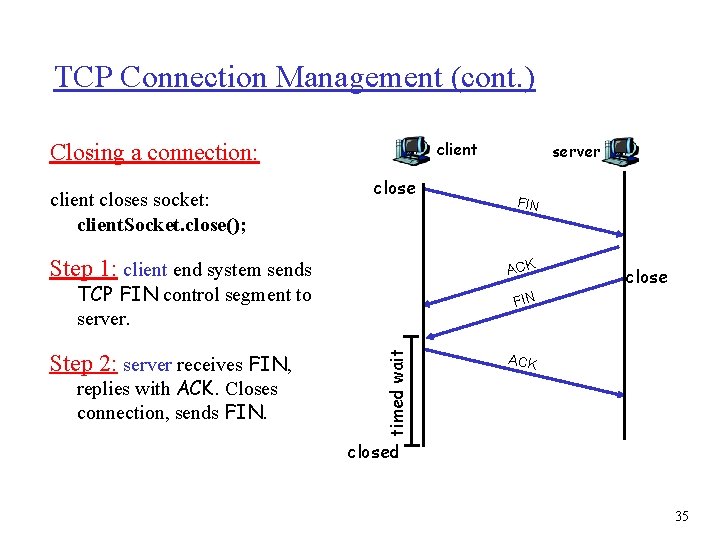

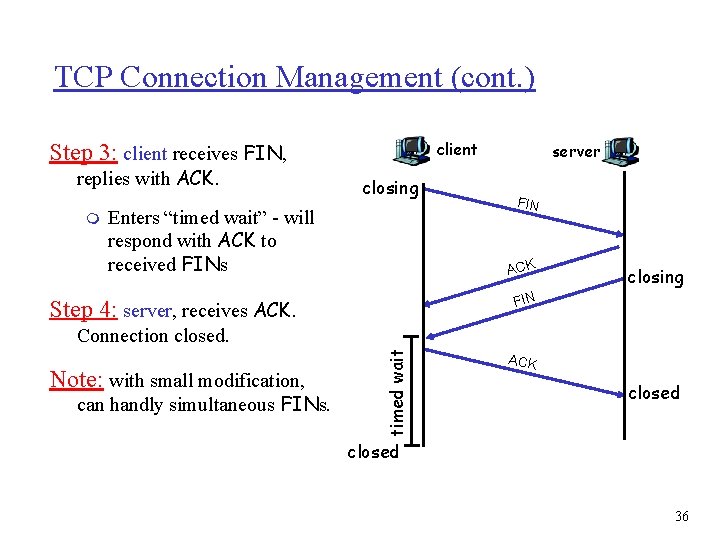

TCP Connection Management (cont.)

Closing a connection:

client closes socket: `clientSocket.close();`

- Step 1: client end system sends TCP FIN control segment to server.
- Step 2: server receives FIN, replies with ACK. Closes connection, sends FIN.

Figure labels: client (close, timed wait, closed), server (close), segments FIN, ACK, FIN, ACK

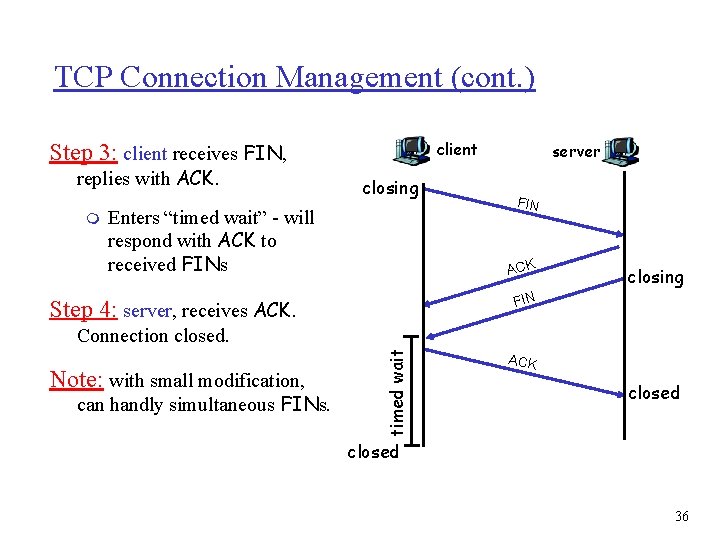

TCP Connection Management (cont.)

- Step 3: client receives FIN, replies with ACK.
  - Enters “timed wait” - will respond with ACK to received FINs
- Step 4: server receives ACK. Connection closed.

Note: with small modification, can handle simultaneous FINs.

Figure labels: client (closing, timed wait, closed), server (closing, closed), segments FIN, ACK, FIN, ACK

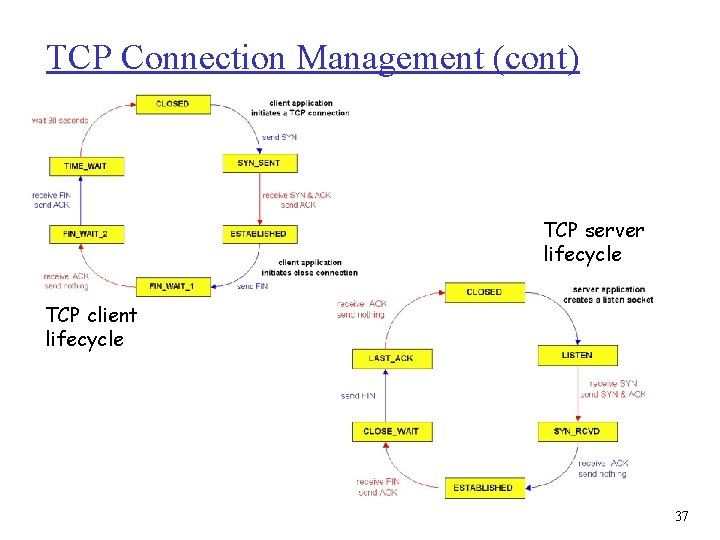

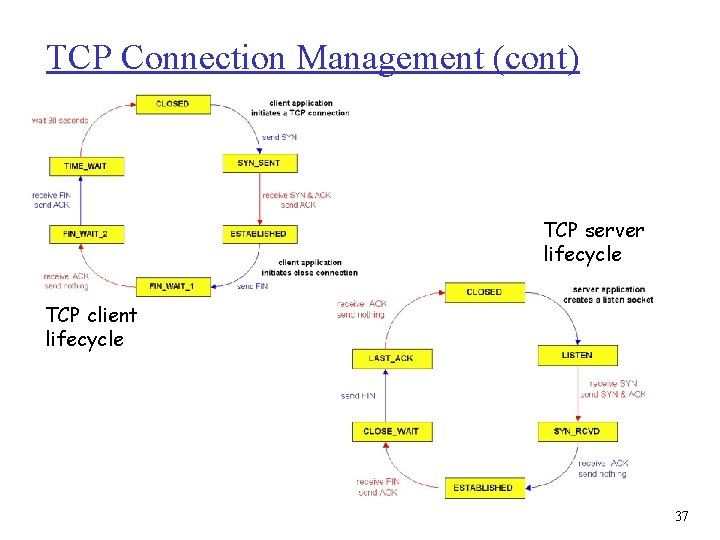

TCP Connection Management (cont.)

Figures: TCP server lifecycle, TCP client lifecycle

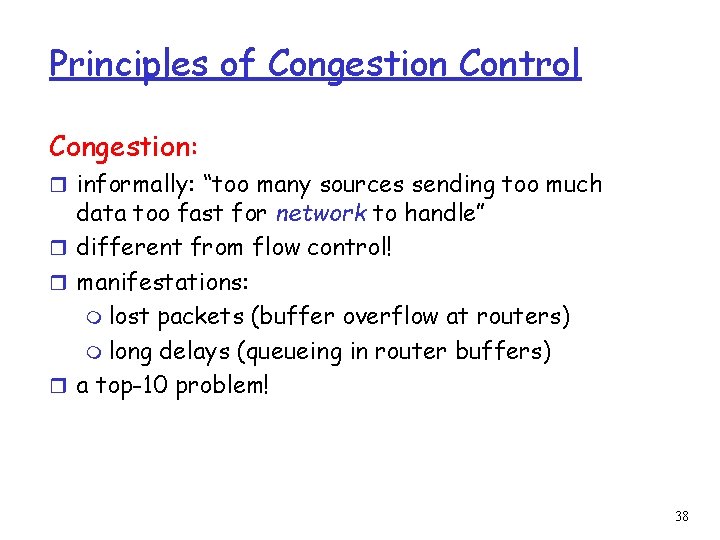

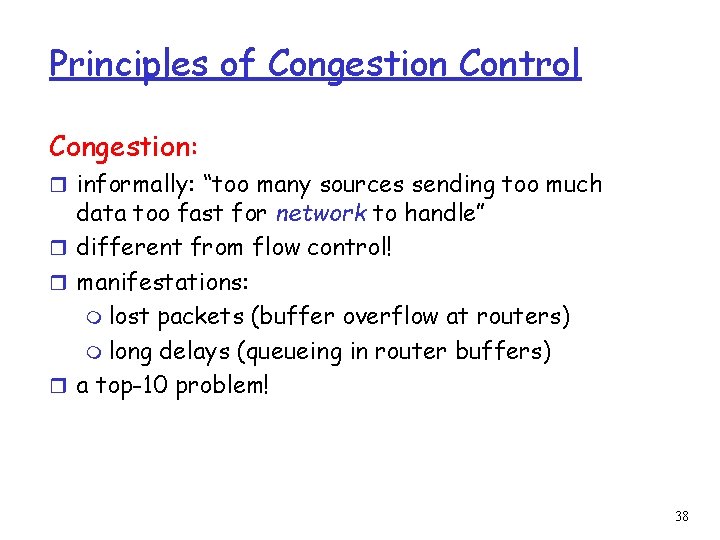

Principles of Congestion Control

Congestion:

- informally: “too many sources sending too much data too fast for network to handle”
- different from flow control!
- manifestations:
  - lost packets (buffer overflow at routers)
  - long delays (queueing in router buffers)
- a top-10 problem!

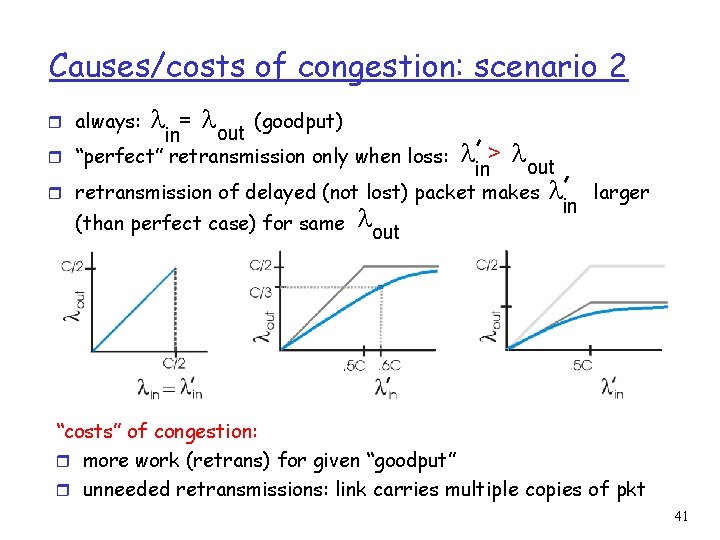

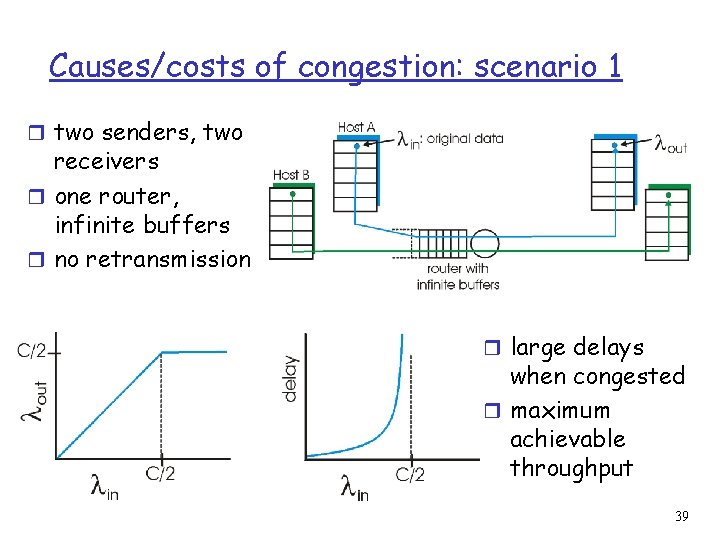

Causes/costs of congestion: scenario 1

- two senders, two receivers
- one router, infinite buffers
- no retransmission
- large delays when congested
- maximum achievable throughput

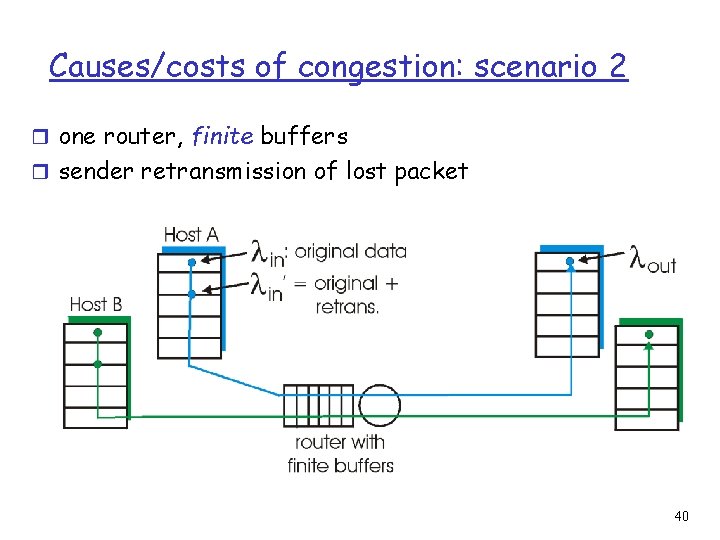

Causes/costs of congestion: scenario 2

- one router, finite buffers
- sender retransmission of lost packet

Causes/costs of congestion: scenario 2

- always: $\lambda_{in} = \lambda_{out}$ (goodput)
- “perfect” retransmission only when loss: $\lambda'_{in} > \lambda_{out}$
- retransmission of delayed (not lost) packet makes $\lambda'_{in}$ larger (than perfect case) for same $\lambda_{out}$

“costs” of congestion:

- more work (retrans) for given “goodput”
- unneeded retransmissions: link carries multiple copies of pkt

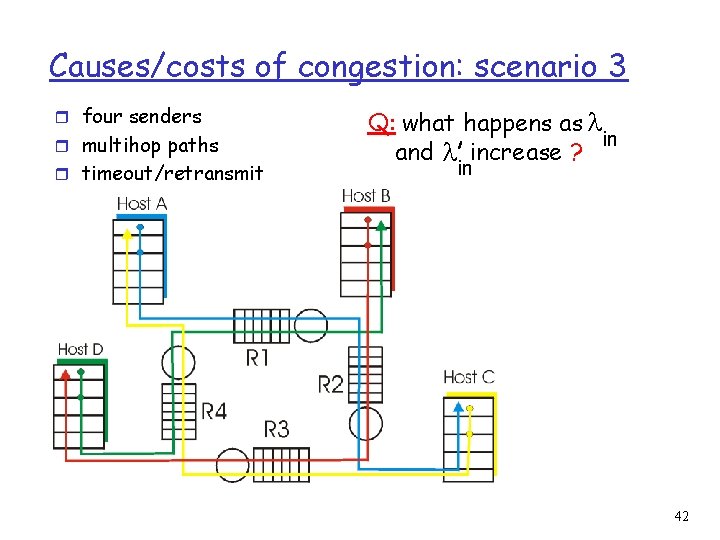

Causes/costs of congestion: scenario 3

- four senders
- multihop paths
- timeout/retransmit

Q: what happens as $\lambda_{in}$ and $\lambda'_{in}$ increase?

Causes/costs of congestion: scenario 3

Another “cost” of congestion:

- when a packet is dropped, any “upstream” transmission capacity used for that packet was wasted!

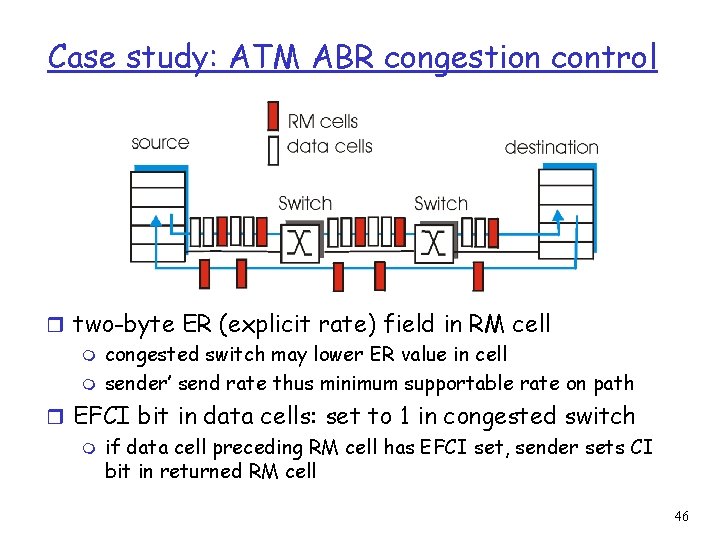

Approaches towards congestion control

Two broad approaches towards congestion control:

End-end congestion control:

- no explicit feedback from network
- congestion inferred from end-system observed loss, delay
- approach taken by TCP

Network-assisted congestion control:

- routers provide feedback to end systems
  - single bit indicating congestion (SNA, DECbit, TCP/IP ECN, ATM)
  - explicit rate sender should send at

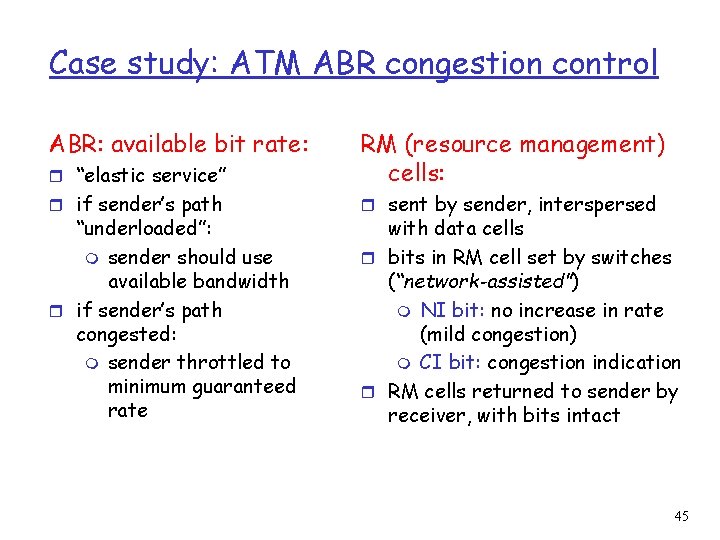

Case study: ATM ABR congestion control

ABR: available bit rate:

- “elastic service”
- if sender’s path “underloaded”:
  - sender should use available bandwidth
- if sender’s path congested:
  - sender throttled to minimum guaranteed rate

RM (resource management) cells:

- sent by sender, interspersed with data cells
- bits in RM cell set by switches (“network-assisted”)
  - NI bit: no increase in rate (mild congestion)
  - CI bit: congestion indication
- RM cells returned to sender by receiver, with bits intact

Case study: ATM ABR congestion control

- two-byte ER (explicit rate) field in RM cell
  - congested switch may lower ER value in cell
  - sender’s send rate thus minimum supportable rate on path
- EFCI bit in data cells: set to 1 in congested switch
  - if data cell preceding RM cell has EFCI set, receiver sets CI bit in returned RM cell

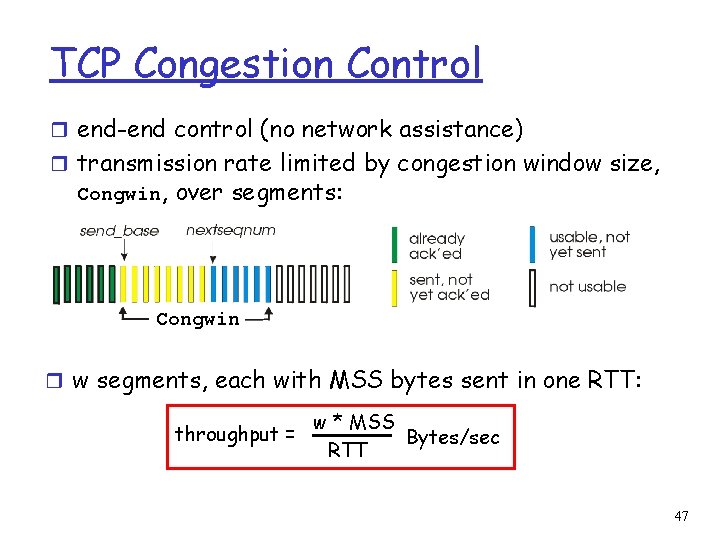

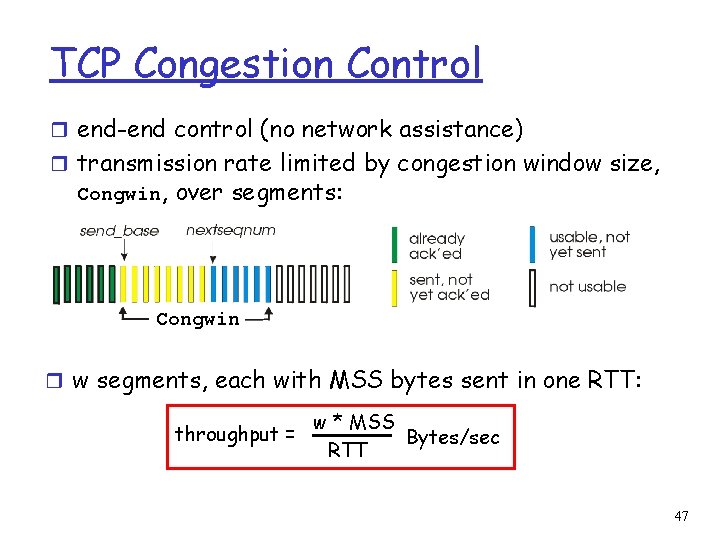

TCP Congestion Control

- end-end control (no network assistance)
- transmission rate limited by congestion window size, Congwin, over segments
- w segments, each with MSS bytes, sent in one RTT:

$$\text{throughput} = \frac{w \cdot \text{MSS}}{\text{RTT}}\ \text{bytes/sec}$$

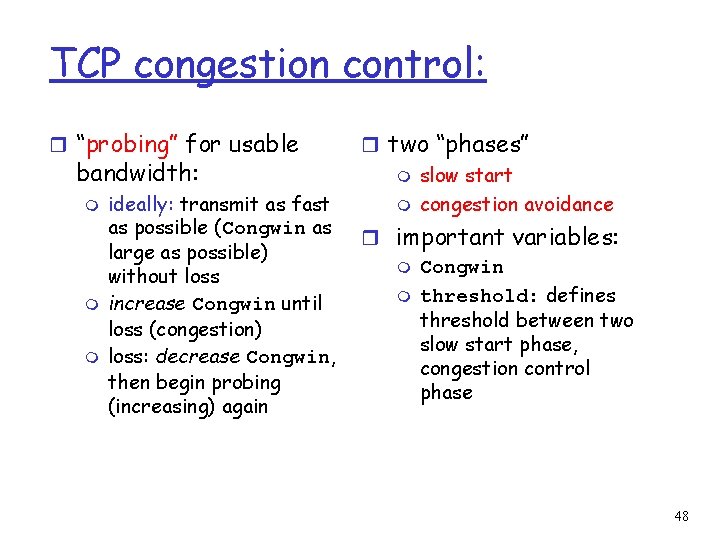

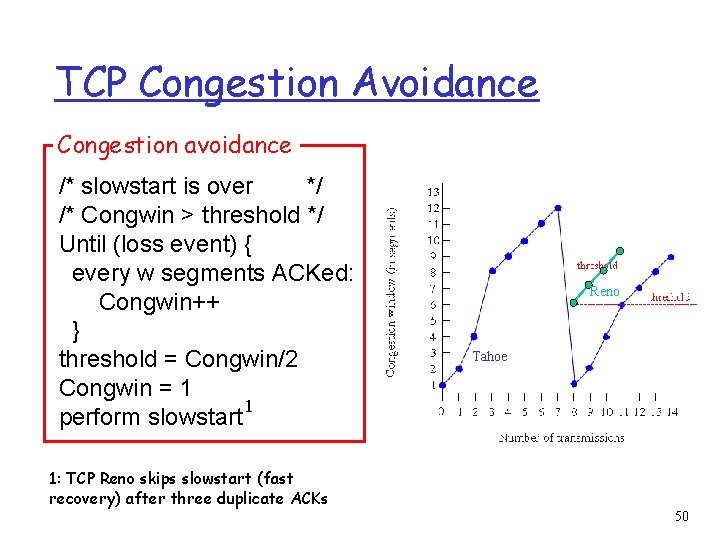

TCP congestion control:

- “probing” for usable bandwidth:
  - ideally: transmit as fast as possible (Congwin as large as possible) without loss
  - increase Congwin until loss (congestion)
  - loss: decrease Congwin, then begin probing (increasing) again
- two “phases”
  - slow start
  - congestion avoidance
- important variables:
  - Congwin
  - threshold: defines threshold between the two phases, slow start and congestion avoidance

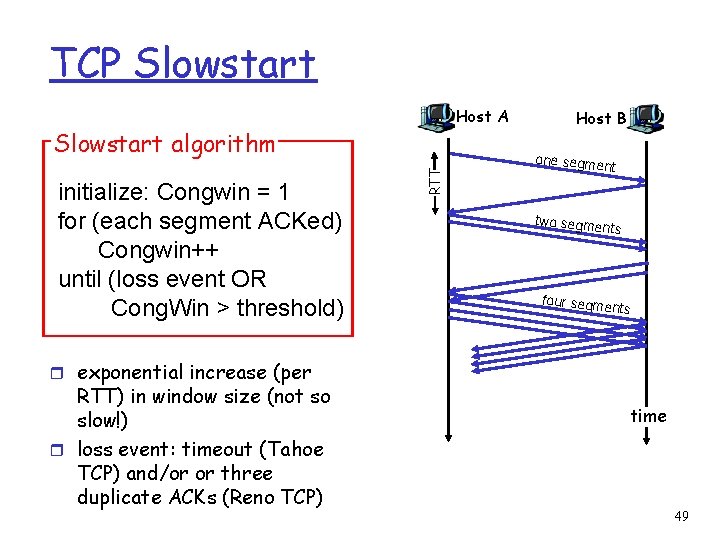

TCP Slowstart

Slowstart algorithm:

    initialize: Congwin = 1
    for (each segment ACKed)
        Congwin++
    until (loss event OR Congwin > threshold)

- exponential increase (per RTT) in window size (not so slow!)
- loss event: timeout (Tahoe TCP) and/or three duplicate ACKs (Reno TCP)

Figure: Host A sends one segment, then two segments, then four segments to Host B in successive RTTs

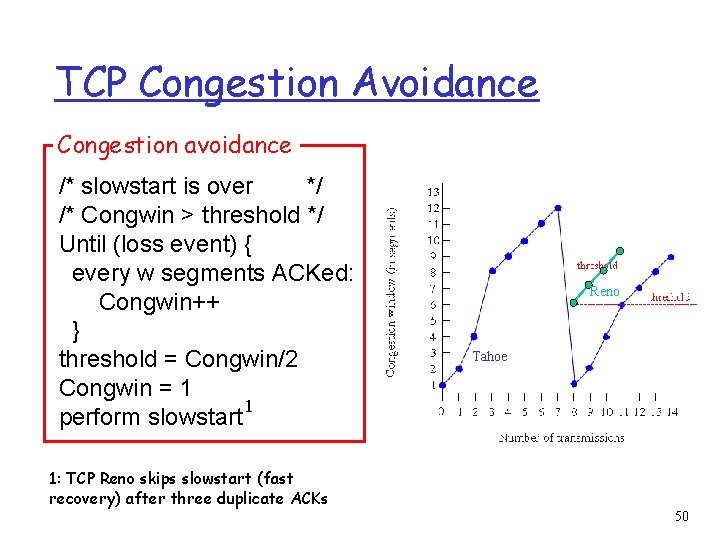

TCP Congestion Avoidance

Congestion avoidance:

    /* slowstart is over */
    /* Congwin > threshold */
    Until (loss event) {
        every w segments ACKed:
            Congwin++
    }
    threshold = Congwin/2
    Congwin = 1
    perform slowstart (1)

(1): TCP Reno skips slowstart (fast recovery) after three duplicate ACKs

Figure: Congwin over time for Reno and Tahoe
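To see how slow start and congestion avoidance interact, the following sketch traces Congwin once per RTT. It is a coarse simplification under stated assumptions: the window doubles per RTT while it is below threshold (capped at threshold), grows by one segment per RTT afterwards, and in Reno mode every loss is treated as a triple-duplicate-ACK event. The function name `congwin_trace` and its parameters are hypothetical.

```python
def congwin_trace(rtts, threshold, loss_at, reno=False):
    """Return Congwin (in segments) at the start of each RTT.

    loss_at: set of RTT indices in which a loss event is detected.
    reno:    if True, restart from the new threshold instead of 1.
    """
    congwin = 1
    trace = []
    for t in range(rtts):
        trace.append(congwin)
        if t in loss_at:
            threshold = max(congwin // 2, 1)
            congwin = threshold if reno else 1            # Tahoe: back to slow start
        elif congwin < threshold:
            congwin = min(2 * congwin, threshold)         # slow start
        else:
            congwin += 1                                  # congestion avoidance
    return trace


print(congwin_trace(10, threshold=8, loss_at={7}))
print(congwin_trace(10, threshold=8, loss_at={7}, reno=True))
```

With threshold 8 and a loss in RTT 7, the Tahoe trace is 1, 2, 4, 8, 9, 10, 11, 12, 1, 2, while the Reno variant resumes at 6 and continues with additive increase.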

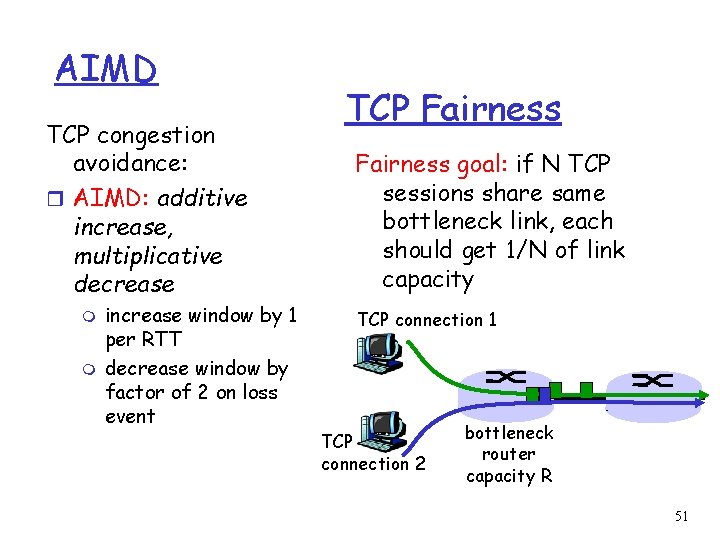

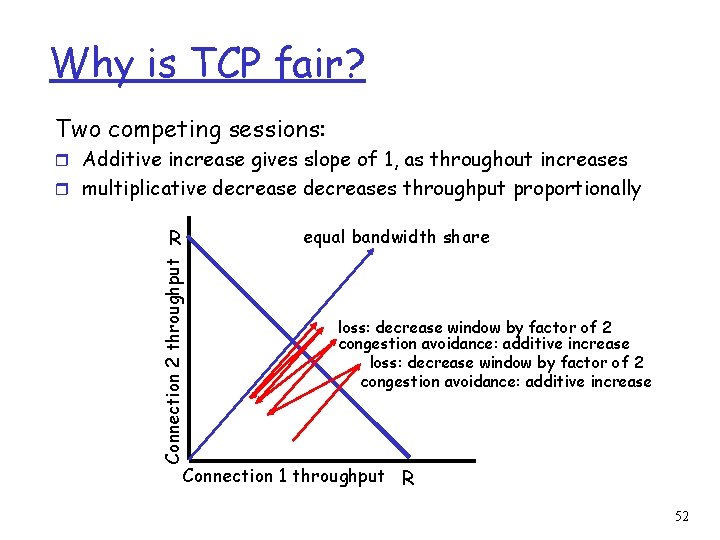

AIMD

TCP congestion avoidance:

- AIMD: additive increase, multiplicative decrease
  - increase window by 1 per RTT
  - decrease window by factor of 2 on loss event

TCP Fairness

Fairness goal: if N TCP sessions share same bottleneck link, each should get 1/N of link capacity

Figure: TCP connection 1 and TCP connection 2 share a bottleneck router of capacity R

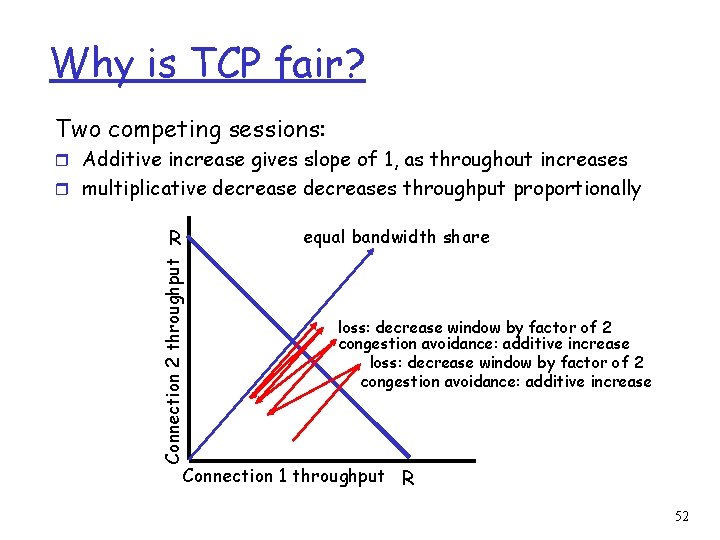

Why is TCP fair?

Two competing sessions:

- additive increase gives slope of 1, as throughput increases
- multiplicative decrease reduces throughput proportionally

Figure: Connection 2 throughput (up to R) plotted against Connection 1 throughput (up to R), with the equal bandwidth share line; loss: decrease window by factor of 2; congestion avoidance: additive increase
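The geometric argument can be checked numerically with a toy model of two AIMD flows. The sketch assumes synchronized rounds, identical RTTs, and that both flows see a loss whenever their combined rate exceeds the capacity; `aimd_two_flows` and the starting values are made up for the example.

```python
def aimd_two_flows(x1, x2, capacity, rounds):
    """Run two AIMD flows sharing one bottleneck; return their final rates."""
    for _ in range(rounds):
        if x1 + x2 > capacity:
            x1, x2 = x1 / 2, x2 / 2      # multiplicative decrease on loss
        else:
            x1, x2 = x1 + 1, x2 + 1      # additive increase per RTT
    return x1, x2


a, b = aimd_two_flows(1.0, 50.0, capacity=60.0, rounds=200)
print(abs(a - b))   # far smaller than the initial gap of 49
```

Additive increase leaves the gap between the flows unchanged, while every multiplicative decrease halves it, so the rates drift toward the equal-share line.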

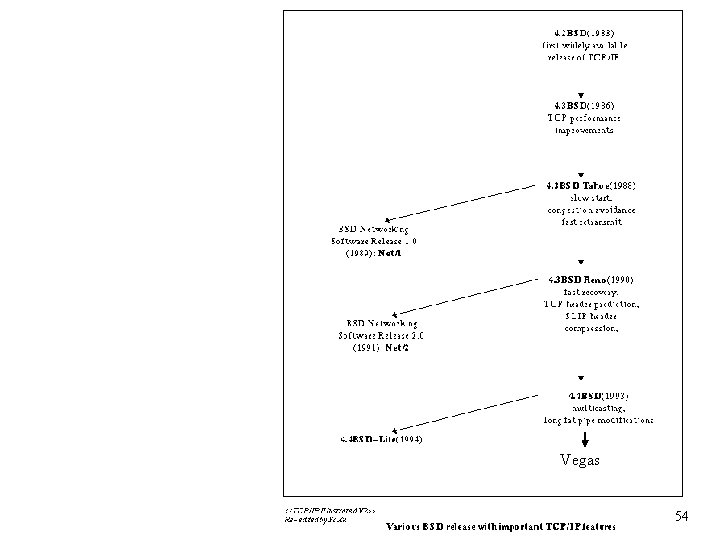

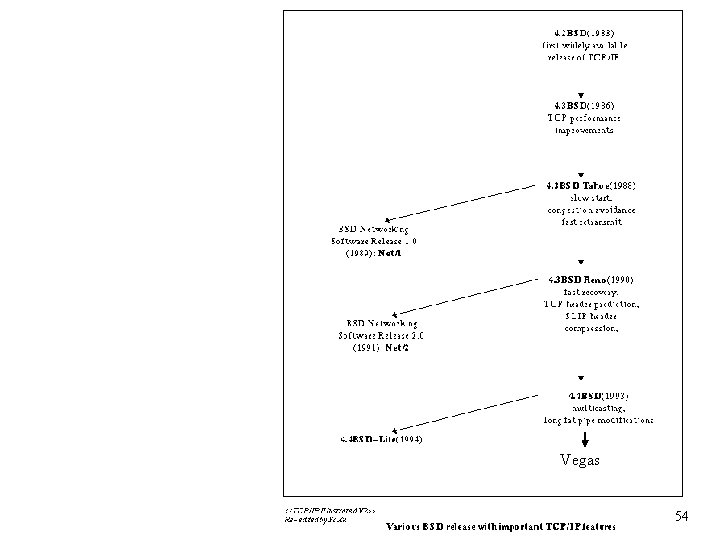

TCP strains

- Tahoe
- Reno
- Vegas

Vegas

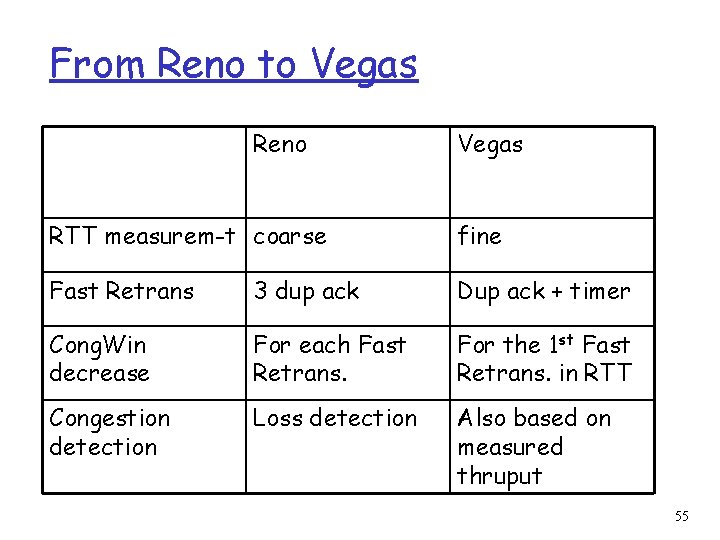

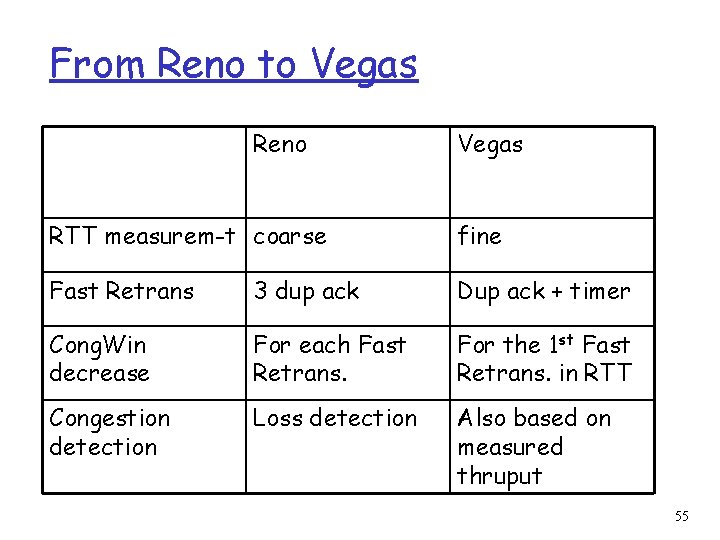

From Reno to Vegas

| | Reno | Vegas |
| --- | --- | --- |
| RTT measurement | coarse | fine |
| Fast Retrans | 3 dup ack | Dup ack + timer |
| CongWin decrease | For each Fast Retrans. | For the 1st Fast Retrans. in RTT |
| Congestion detection | Loss detection | Also based on measured thruput |

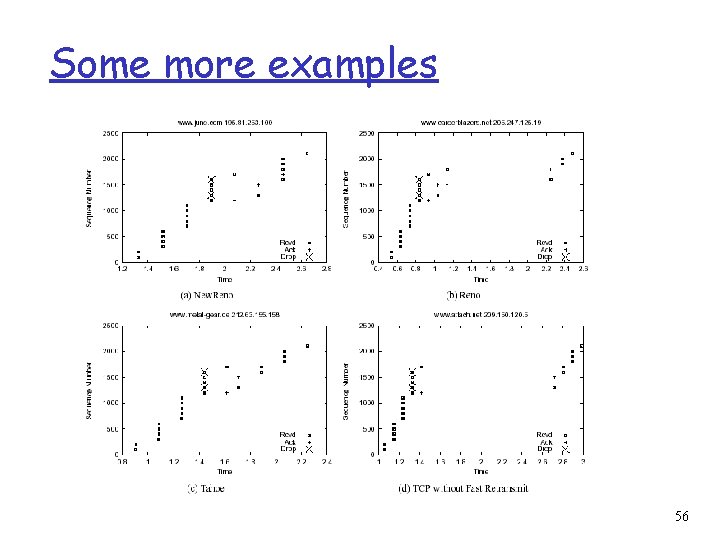

Some more examples

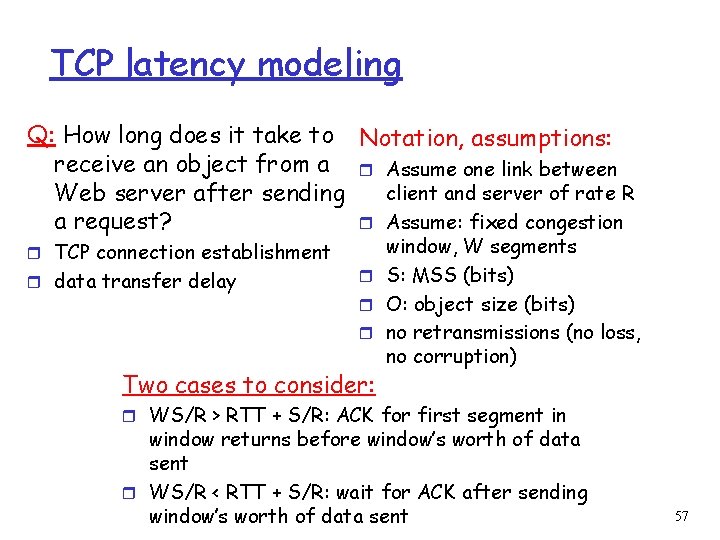

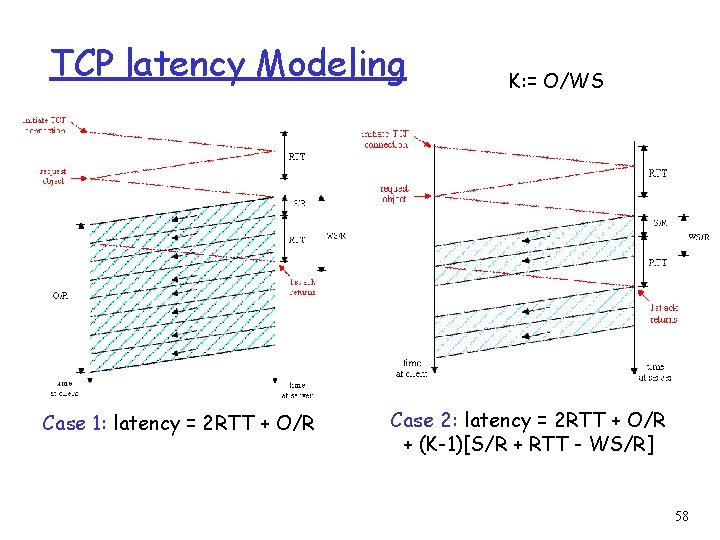

TCP latency modeling

Q: How long does it take to receive an object from a Web server after sending a request?

- TCP connection establishment
- data transfer delay

Notation, assumptions:

- Assume one link between client and server of rate R
- Assume: fixed congestion window, W segments
- S: MSS (bits)
- O: object size (bits)
- no retransmissions (no loss, no corruption)

Two cases to consider:

- WS/R > RTT + S/R: ACK for first segment in window returns before window’s worth of data sent
- WS/R < RTT + S/R: wait for ACK after sending window’s worth of data

TCP latency Modeling

K := O/WS

Case 1:

$$\text{latency} = 2\,\text{RTT} + \frac{O}{R}$$

Case 2:

$$\text{latency} = 2\,\text{RTT} + \frac{O}{R} + (K-1)\left[\frac{S}{R} + \text{RTT} - \frac{WS}{R}\right]$$
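The two cases can be evaluated with a small helper. This is a sketch under assumptions: the function name and sample numbers are invented, K is rounded up when O/WS is not an integer, and the boundary WS/R = RTT + S/R is treated as case 1 (the stall term is zero there, so both formulas agree).

```python
import math

def latency_static_window(O, S, R, W, rtt):
    """Latency for an object of O bits with a fixed window of W segments.

    O: object size (bits), S: MSS (bits), R: link rate (bits/sec),
    rtt: round trip time (sec).
    """
    base = 2 * rtt + O / R
    if W * S / R >= rtt + S / R:
        return base                          # case 1: the sender never stalls
    K = math.ceil(O / (W * S))               # number of windows covering the object
    stall = S / R + rtt - W * S / R          # idle time after each full window
    return base + (K - 1) * stall            # case 2


print(latency_static_window(O=100_000, S=1000, R=1_000_000, W=4, rtt=0.1))
print(latency_static_window(O=100_000, S=1000, R=1_000_000, W=200, rtt=0.1))
```

With W = 4 the sender stalls after each of the first 24 windows and the latency is about 2.628 s; with W = 200 the window is never the bottleneck and the latency drops to about 0.3 s.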

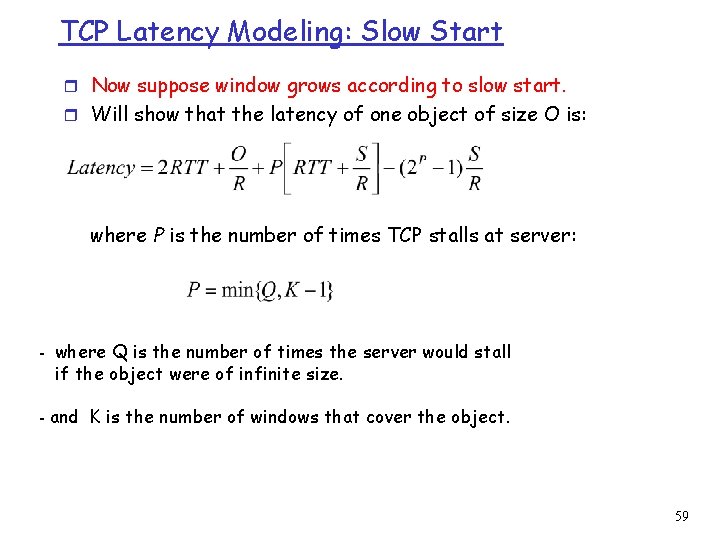

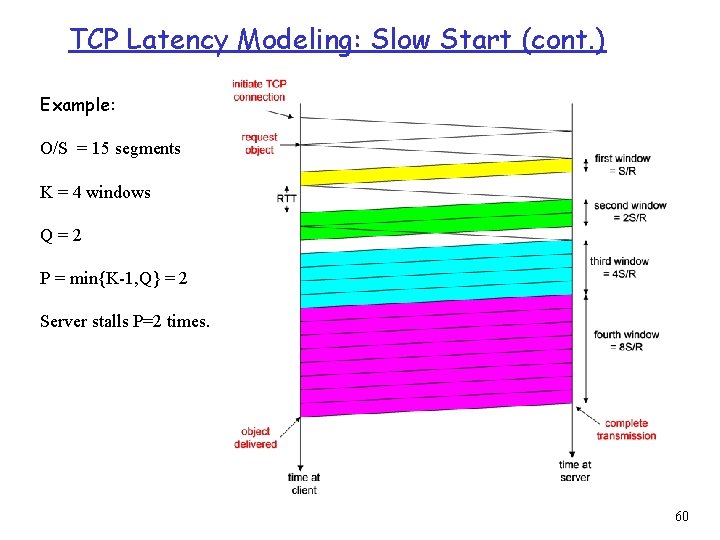

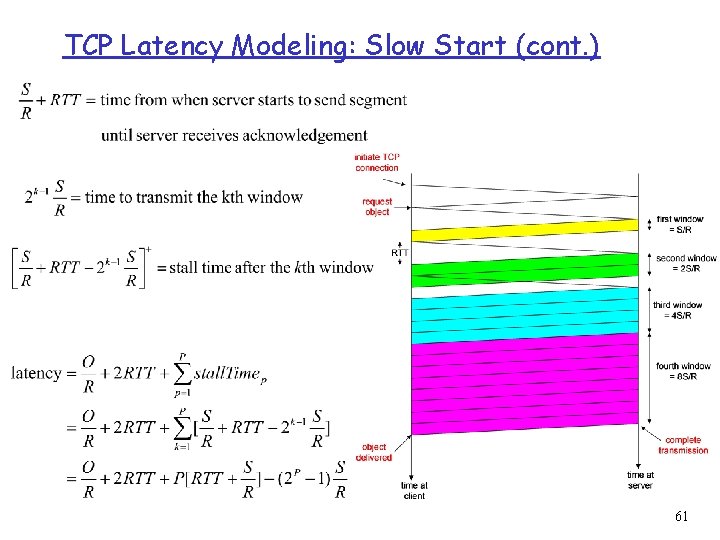

TCP Latency Modeling: Slow Start

- Now suppose window grows according to slow start.
- Will show that the latency of one object of size O is:

$$\text{latency} = 2\,\text{RTT} + \frac{O}{R} + P\left[\text{RTT} + \frac{S}{R}\right] - (2^{P} - 1)\frac{S}{R}$$

where P is the number of times TCP stalls at server:

$$P = \min\{Q, K-1\}$$

- where Q is the number of times the server would stall if the object were of infinite size.
- and K is the number of windows that cover the object.

TCP Latency Modeling: Slow Start (cont.)

Example:

- O/S = 15 segments
- K = 4 windows
- Q = 2
- P = min{K-1, Q} = 2

Server stalls P = 2 times.
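The example values K = 4, Q = 2, P = 2 can be reproduced with a short calculation. The sketch below rests on assumptions commonly made for this model rather than on text in the slide: windows grow as 1, 2, 4, ... segments, the stall after the k-th window is S/R + RTT - 2^(k-1) S/R when positive, and the total latency is 2 RTT + O/R + P (RTT + S/R) - (2^P - 1) S/R. The function name and the sample values of S, R, and RTT are hypothetical.

```python
def slow_start_latency(O, S, R, rtt):
    """Return (K, Q, P, latency) for an object of O bits; O and S are ints."""
    segments = -(-O // S)                    # ceil(O / S)
    # K: number of windows (1, 2, 4, ... segments) needed to cover the object.
    K, covered = 0, 0
    while covered < segments:
        covered += 2 ** K
        K += 1
    # Q: number of stalls if the object were infinitely large.
    Q = 0
    while S / R + rtt - (2 ** Q) * S / R > 0:
        Q += 1
    P = min(K - 1, Q)
    latency = 2 * rtt + O / R + P * (rtt + S / R) - (2 ** P - 1) * S / R
    return K, Q, P, latency


# 15 segments, S/R = 1 time unit, RTT = 2 time units (illustrative values).
print(slow_start_latency(O=15_000, S=1000, R=1000, rtt=2.0))
```

For these inputs the function returns K = 4, Q = 2, P = 2, matching the example, with a latency of 22 time units: 4 for the two RTTs, 15 for transmission, and 3 for the two stalls.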

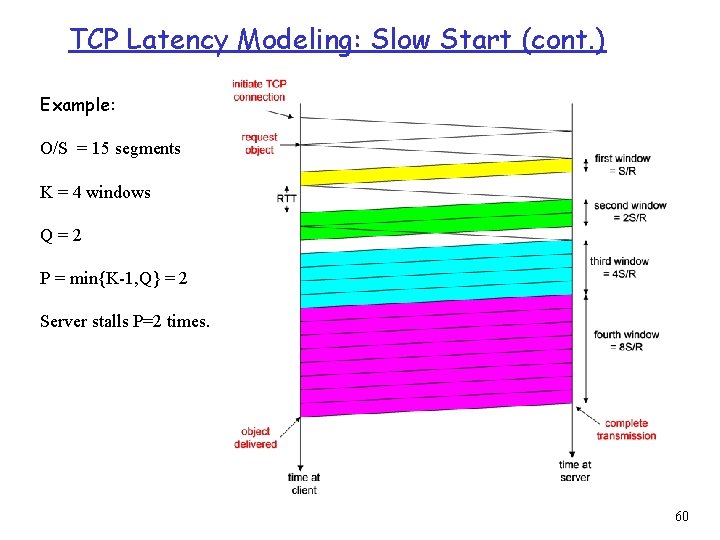

TCP Latency Modeling: Slow Start (cont.)

UDP Protocol

![UDP User Datagram Protocol RFC 768 r no frills bare bones Internet transport UDP: User Datagram Protocol [RFC 768] r “no frills, ” “bare bones” Internet transport](https://slidetodoc.com/presentation_image_h/2949b42e4b226b6bd38aae4641920ec2/image-63.jpg)

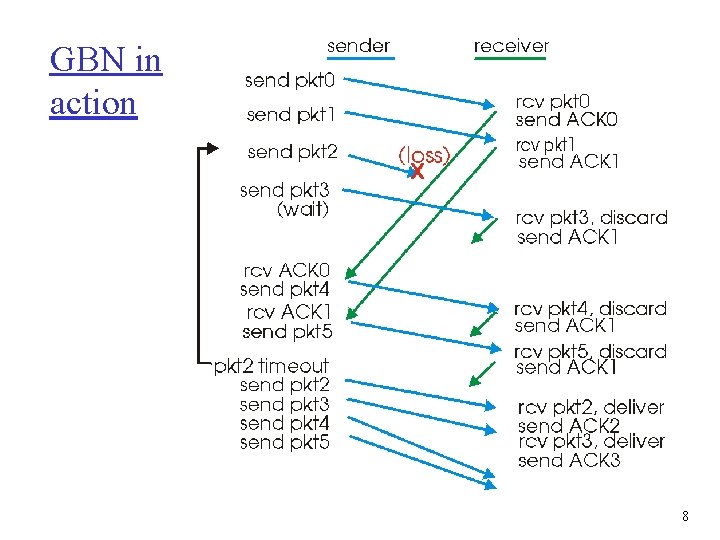

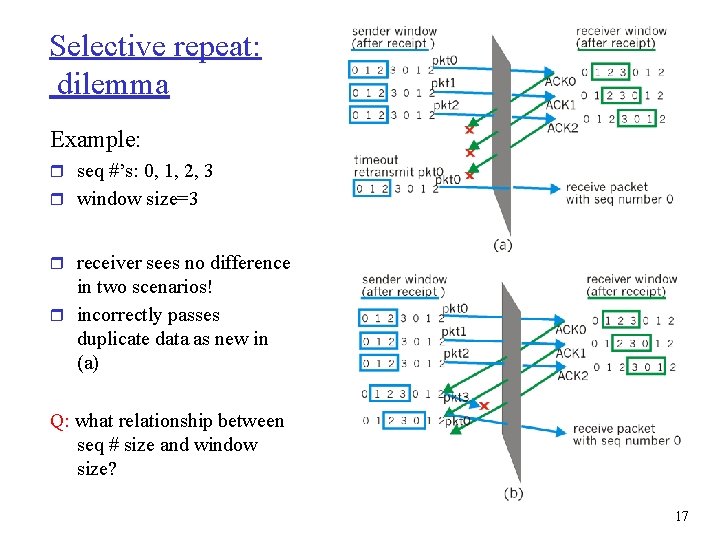

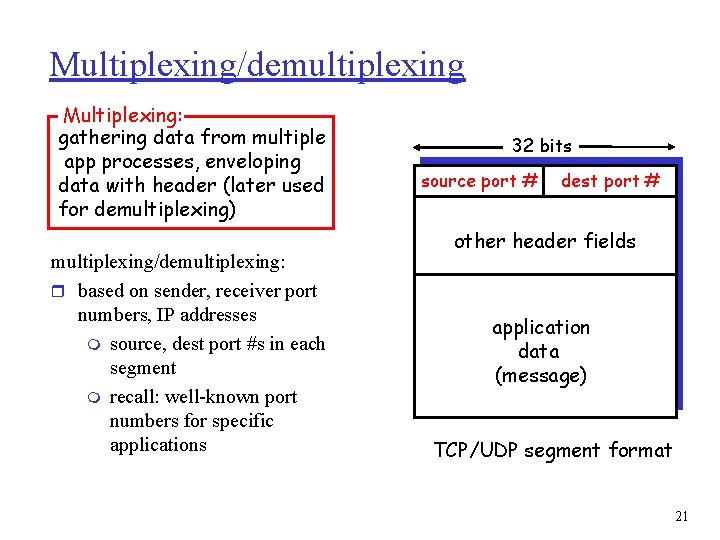

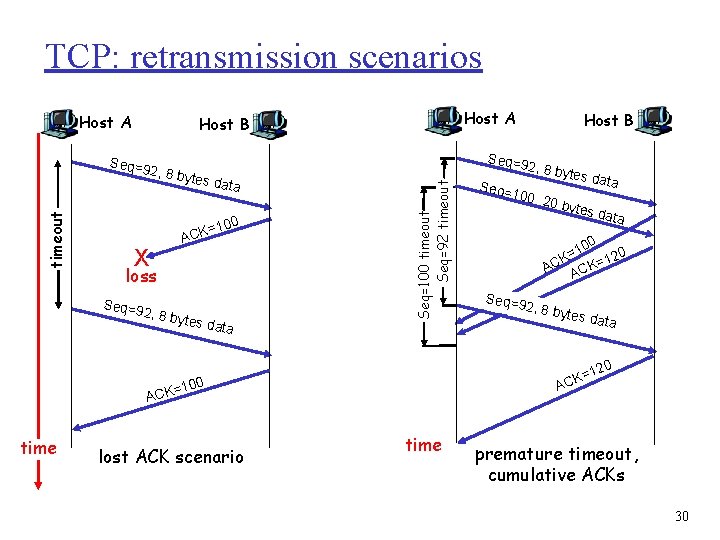

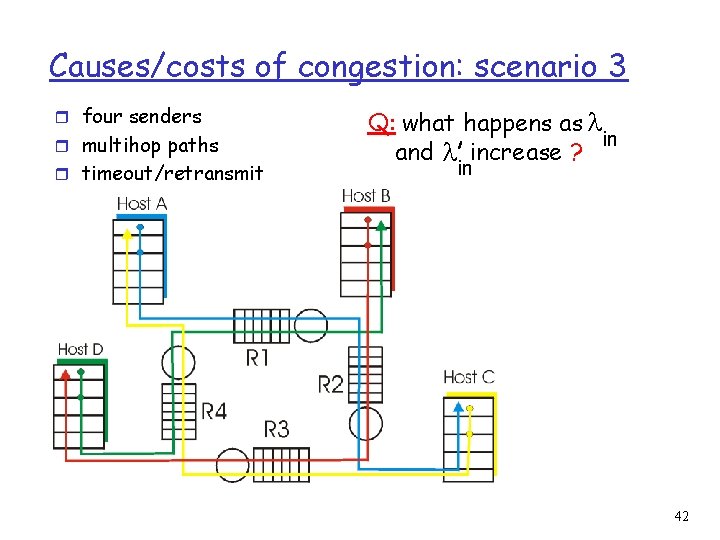

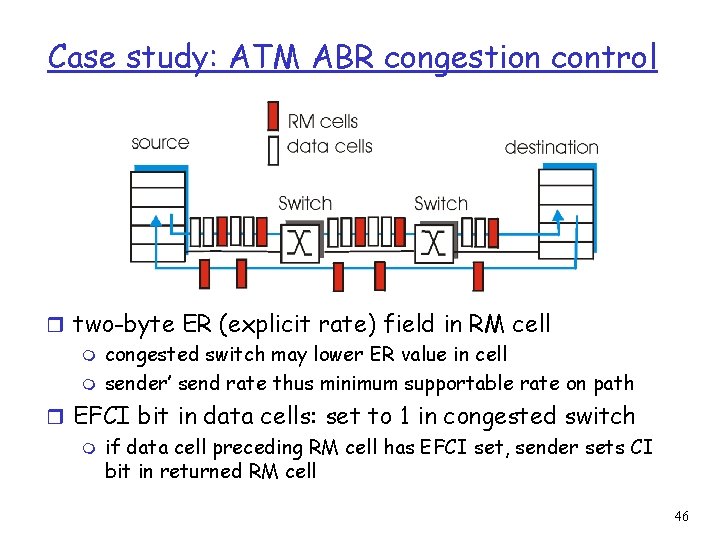

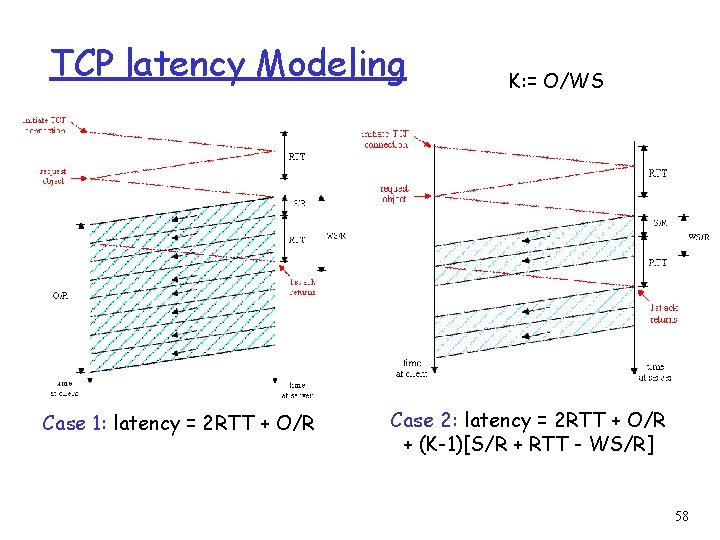

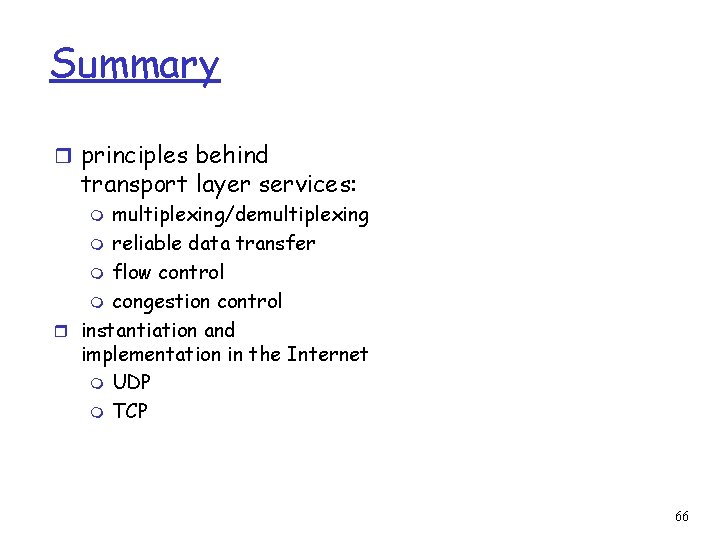

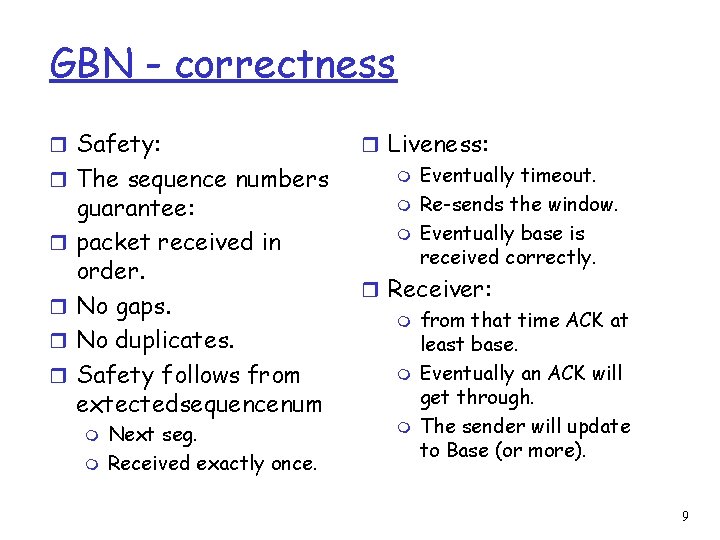

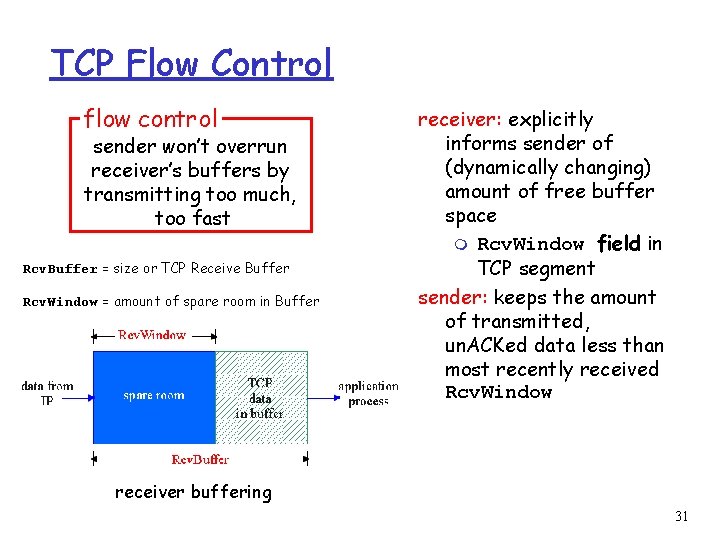

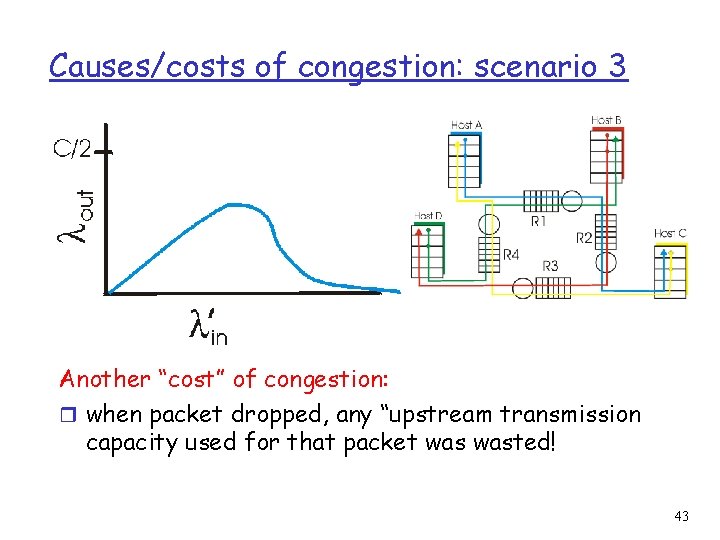

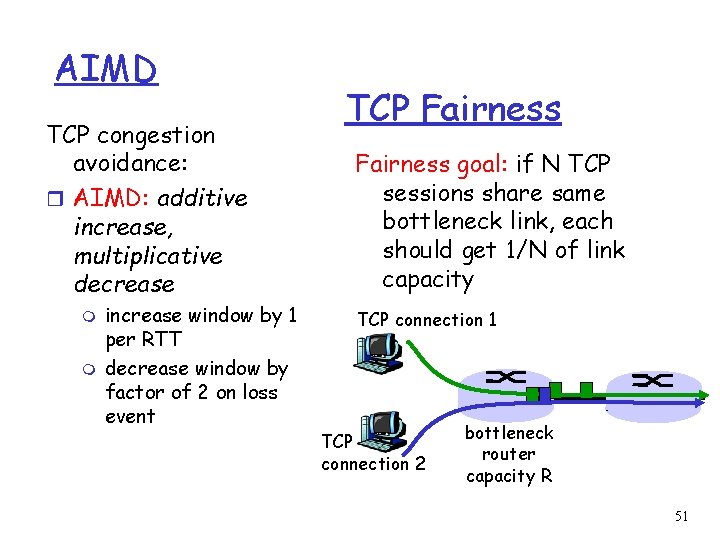

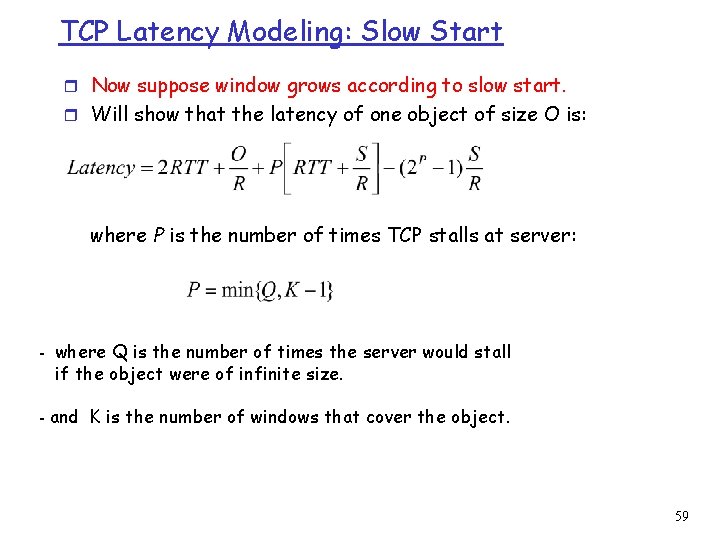

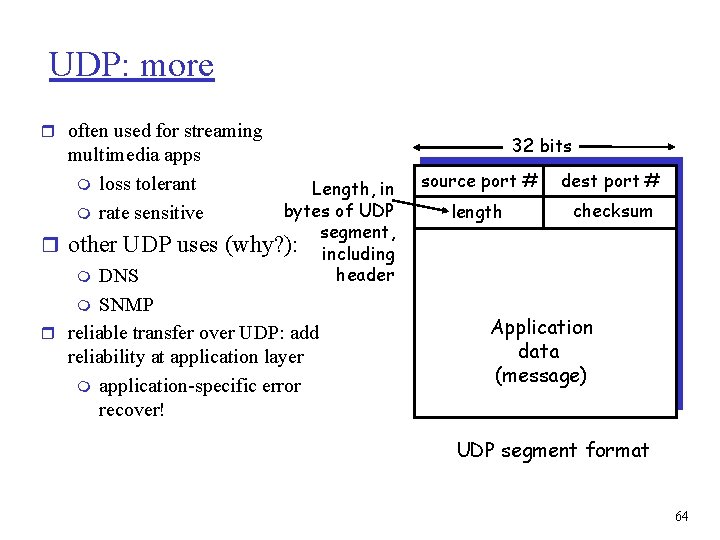

UDP: User Datagram Protocol [RFC 768]

- “no frills,” “bare bones” Internet transport protocol
- “best effort” service, UDP segments may be:
  - lost
  - delivered out of order to app
- connectionless:
  - no handshaking between UDP sender, receiver
  - each UDP segment handled independently of others

Why is there a UDP?

- no connection establishment (which can add delay)
- simple: no connection state at sender, receiver
- small segment header
- no congestion control: UDP can blast away as fast as desired

UDP: more

- often used for streaming multimedia apps
  - loss tolerant
  - rate sensitive
- other UDP uses (why?):
  - DNS
  - SNMP
- reliable transfer over UDP: add reliability at application layer
  - application-specific error recovery!

UDP segment format (32 bits wide):

- source port #, dest port #
- length, checksum
- application data (message)

length: length, in bytes, of UDP segment, including header

UDP checksum

Goal: detect “errors” (e.g., flipped bits) in transmitted segment

Sender:

- treat segment contents as sequence of 16-bit integers
- checksum: addition (1’s complement sum) of segment contents
- sender puts checksum value into UDP checksum field

Receiver:

- compute checksum of received segment
- check if computed checksum equals checksum field value:
  - NO - error detected
  - YES - no error detected. But maybe errors nonetheless?
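The sender and receiver steps can be illustrated with a 16-bit one's-complement checksum routine. This is a sketch with simplifying assumptions: it covers only the bytes passed in (the real UDP checksum also covers a pseudo-header with IP addresses), it stores the complement of the one's-complement sum as the Internet checksum does, and the names `internet_checksum` and `verify` are made up for the example.

```python
def internet_checksum(data: bytes) -> int:
    """Complement of the 16-bit one's-complement sum of the data."""
    if len(data) % 2:
        data += b"\x00"                               # pad to whole 16-bit words
    total = 0
    for i in range(0, len(data), 2):
        total += (data[i] << 8) | data[i + 1]         # next big-endian 16-bit word
        total = (total & 0xFFFF) + (total >> 16)      # wrap the carry around
    return ~total & 0xFFFF

def verify(data: bytes, checksum: int) -> bool:
    """Receiver check: recompute and compare with the checksum field."""
    return internet_checksum(data) == checksum


segment = bytes.fromhex("0001f203f4f5f6f7")
field = internet_checksum(segment)                    # 0x220d

flipped = bytes([segment[0] ^ 0x01]) + segment[1:]    # one flipped bit
swapped = segment[2:4] + segment[0:2] + segment[4:]   # two 16-bit words exchanged
print(verify(flipped, field), verify(swapped, field)) # False True
```

The flipped bit is caught, but swapping two 16-bit words leaves the sum unchanged, which is one concrete case of “no error detected, but maybe errors nonetheless”.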

Summary

- principles behind transport layer services:
  - multiplexing/demultiplexing
  - reliable data transfer
  - flow control
  - congestion control
- instantiation and implementation in the Internet
  - UDP
  - TCP