Encoding Feature Maps of CNNs for Action Recognition

- Slides: 19

Xiaojiang Peng, Cordelia Schmid

LEAR-Inria, Grenoble, France

Summary of LEAR Submission

- Improved dense trajectories (IDT) and Fisher vector
- CNN features from very deep ConvNets
- Key component: encoding CNN feature maps

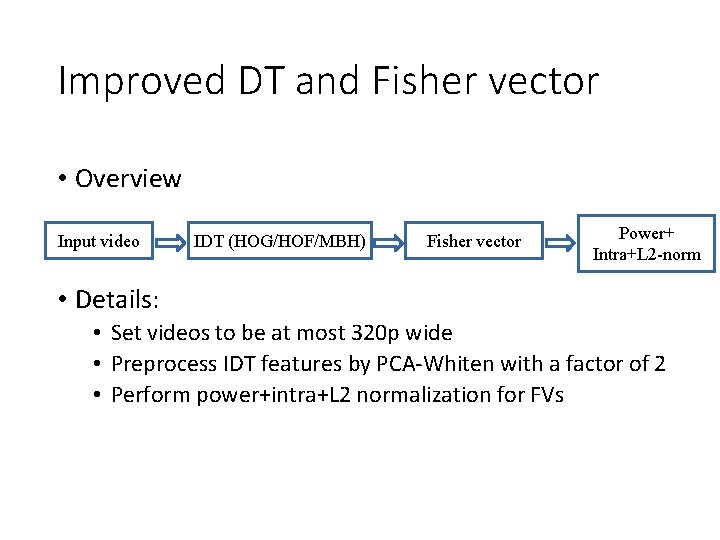

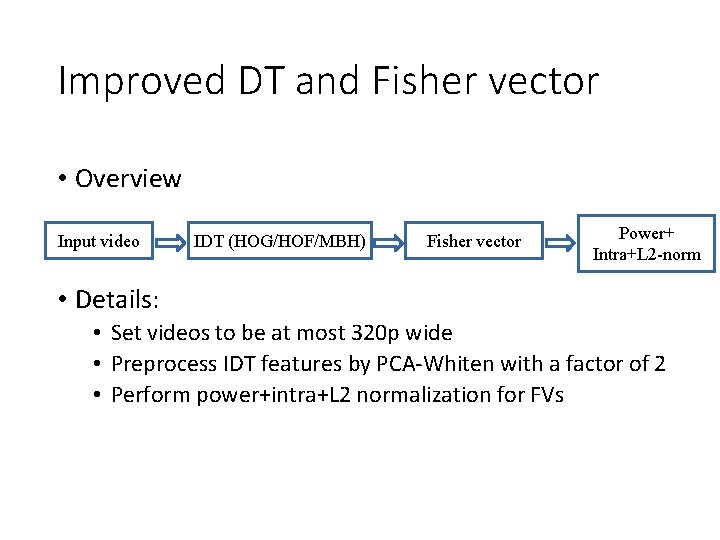

Improved DT and Fisher vector

- Overview: input video → IDT (HOG/HOF/MBH) → Fisher vector → power + intra + L2 normalization
- Details:
  - Rescale videos to be at most 320 pixels wide
  - Preprocess IDT features by PCA-whitening with a factor of 2
  - Apply power + intra + L2 normalization to the Fisher vectors (FVs)
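The PCA-whitening step can be sketched as follows. This is a minimal NumPy sketch, not the authors' code: it reads "a factor of 2" as halving the descriptor dimensionality, which is an assumption, and the function names `fit_pca_whitening` and `apply_pca_whitening` are hypothetical.

```python
import numpy as np

def fit_pca_whitening(X, factor=2, eps=1e-8):
    """Fit PCA-whitening on rows of X, keeping D // factor components.

    Assumption: "factor of 2" means the dimensionality is halved.
    """
    mean = X.mean(axis=0)
    Xc = X - mean
    cov = Xc.T @ Xc / (X.shape[0] - 1)
    eigvals, eigvecs = np.linalg.eigh(cov)           # eigenvalues in ascending order
    keep = np.argsort(eigvals)[::-1][: X.shape[1] // factor]
    # Scale each kept direction so the projected data has unit variance.
    W = eigvecs[:, keep] / np.sqrt(eigvals[keep] + eps)
    return mean, W

def apply_pca_whitening(X, mean, W):
    """Project descriptors with a previously fitted transform."""
    return (X - mean) @ W
```

The transform would be fitted once on a sample of training descriptors and then applied unchanged to every video.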

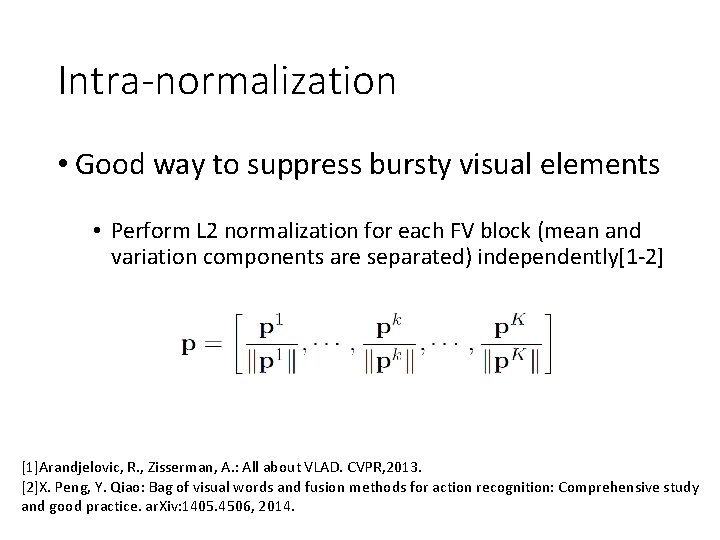

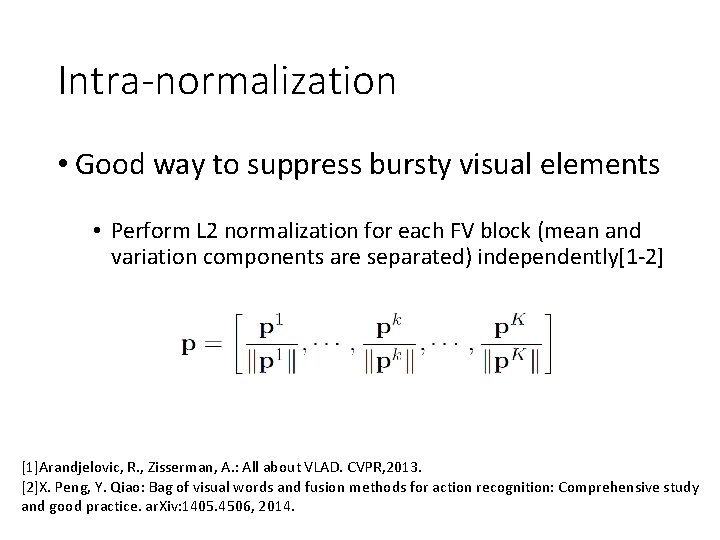

Intra-normalization

- A good way to suppress bursty visual elements
- L2-normalize each FV block independently (the mean and variance components are kept separate) [1-2]

[1] R. Arandjelovic, A. Zisserman: All about VLAD. CVPR, 2013.

[2] X. Peng, Y. Qiao: Bag of visual words and fusion methods for action recognition: Comprehensive study and good practice. arXiv:1405.4506, 2014.
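The power + intra + L2 chain can be illustrated with a short NumPy sketch. It assumes the encoding is stored as `num_blocks` contiguous, equally sized blocks (for a Fisher vector, one block per Gaussian for the mean part and one for the variance part) and uses a power exponent of 0.5; neither detail is stated on the slides, and the function name is hypothetical.

```python
import numpy as np

def power_intra_l2(v, num_blocks, alpha=0.5, eps=1e-12):
    """Power, intra and global L2 normalization of one encoding vector.

    Assumptions: blocks are contiguous and equally sized; alpha=0.5.
    """
    v = np.asarray(v, dtype=float)
    # Power normalization: signed root damps large (bursty) entries.
    v = np.sign(v) * np.abs(v) ** alpha
    # Intra-normalization: L2-normalize every block on its own.
    blocks = v.reshape(num_blocks, -1)
    norms = np.linalg.norm(blocks, axis=1, keepdims=True)
    blocks = blocks / np.maximum(norms, eps)
    # Global L2 normalization of the whole vector.
    v = blocks.reshape(-1)
    return v / max(np.linalg.norm(v), eps)
```

After this chain every non-empty block contributes the same energy, so a single bursty codeword cannot dominate the representation.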

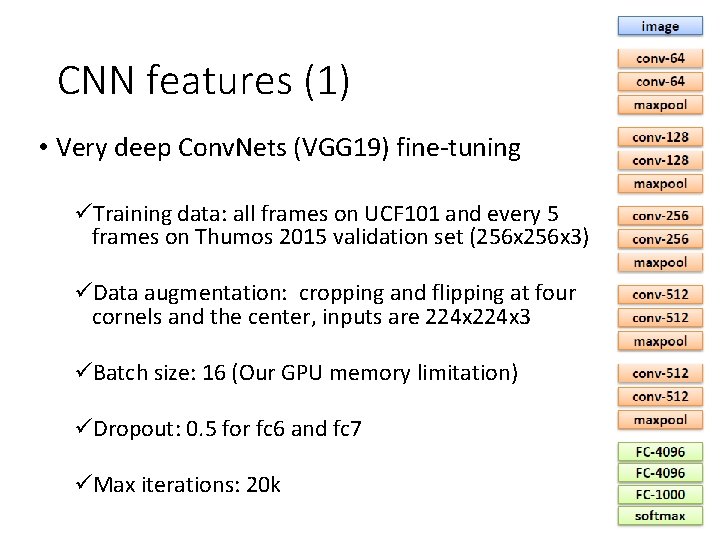

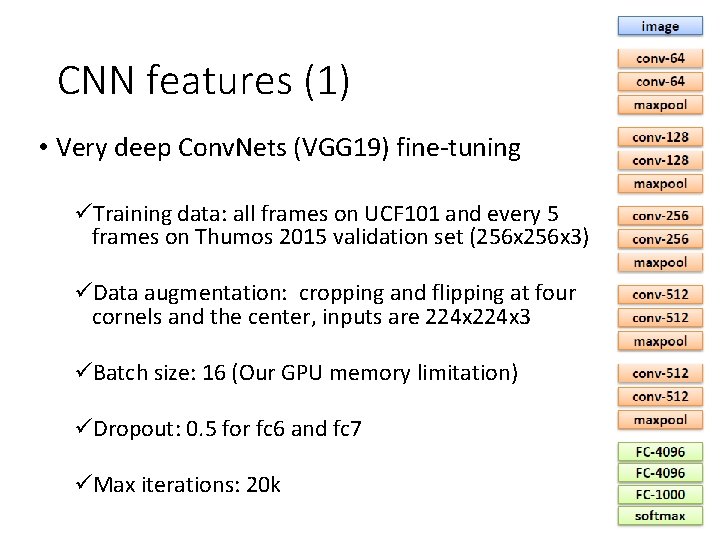

CNN features (1)

- Fine-tuning of a very deep ConvNet (VGG19)
  - Training data: all frames of UCF101 and every 5th frame of the THUMOS 2015 validation set (256 x 3)
  - Data augmentation: cropping at the four corners and the center, plus flipping; inputs are 224 x 3
  - Batch size: 16 (limited by our GPU memory)
  - Dropout: 0.5 for fc6 and fc7
  - Max iterations: 20k
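The corner and center cropping with flipping can be sketched as below. This is an illustration only: it assumes square 256 x 256 frames and 224 x 224 crops (one reading of the sizes on the slide), horizontal flipping, and a height x width x channels array layout; the function name is hypothetical.

```python
import numpy as np

def corner_center_crops(frame, size=224):
    """Return the four corner crops and the center crop, each with its
    horizontal flip, stacked as an array of shape (10, size, size, C).

    Assumption: frame is an (H, W, C) array with H, W >= size.
    """
    h, w = frame.shape[:2]
    if h < size or w < size:
        raise ValueError("frame is smaller than the crop size")
    offsets = [
        (0, 0), (0, w - size),                  # top-left, top-right
        (h - size, 0), (h - size, w - size),    # bottom-left, bottom-right
        ((h - size) // 2, (w - size) // 2),     # center
    ]
    crops = []
    for top, left in offsets:
        crop = frame[top:top + size, left:left + size]
        crops.append(crop)
        crops.append(crop[:, ::-1])             # horizontal flip
    return np.stack(crops)
```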

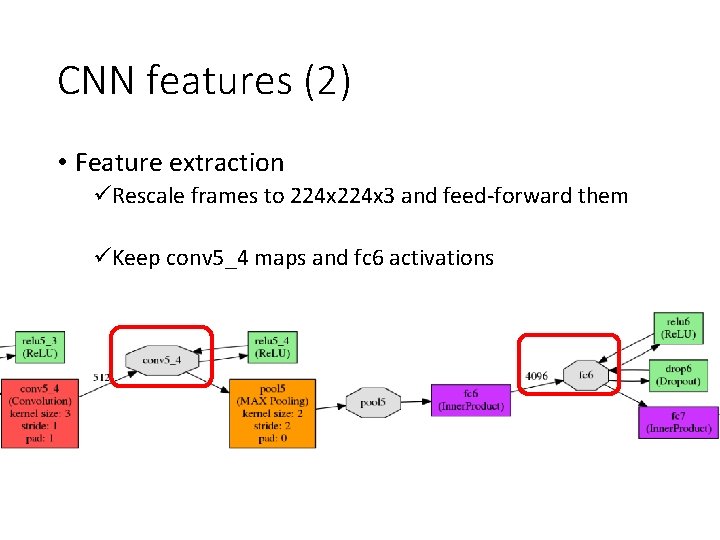

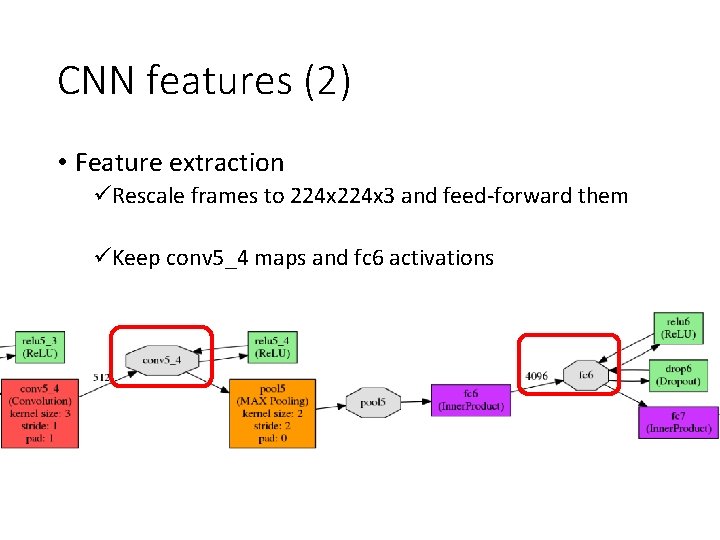

CNN features (2)

- Feature extraction
  - Rescale frames to 224 x 3 and feed them forward through the network
  - Keep the conv5_4 feature maps and the fc6 activations

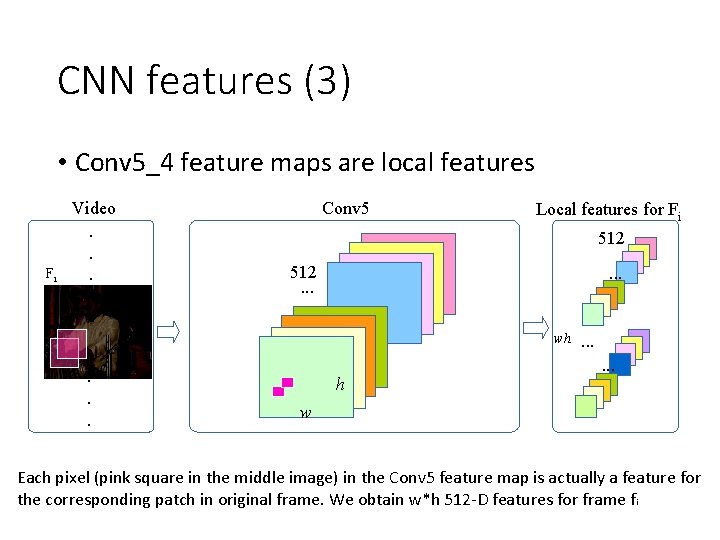

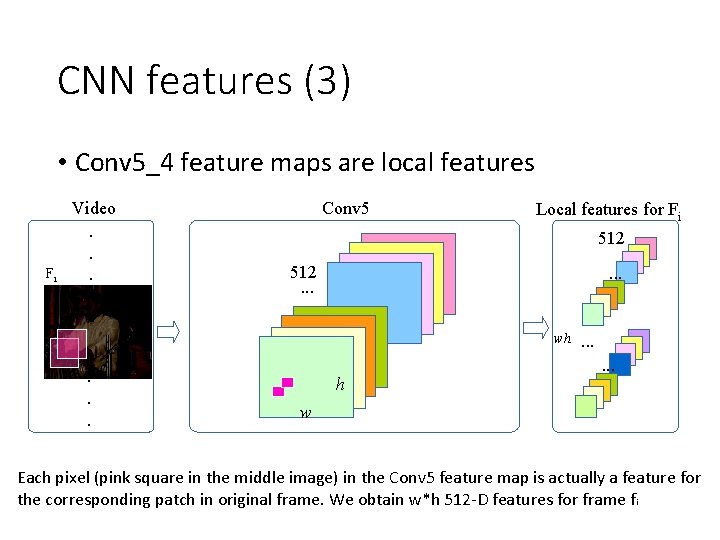

CNN features (3)

- Conv5_4 feature maps are local features

Figure labels: video, frame F_i, Conv5 feature maps (w × h × 512), local features for F_i.

Each pixel of the Conv5 feature map (the pink square in the middle image) is in fact a feature for the corresponding patch of the original frame. We obtain w × h 512-D features for frame F_i.
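Reading feature maps as local features amounts to a reshape. The sketch below assumes a channels-first array of shape (512, h, w) for one frame; the layout and the function name are assumptions, not something the slides specify.

```python
import numpy as np

def maps_to_local_features(feature_maps):
    """Turn (C, h, w) feature maps into h*w local features of dimension C.

    Row y * w + x of the result is the C-dimensional feature at spatial
    position (y, x), i.e. the descriptor of the corresponding frame patch.
    """
    c, h, w = feature_maps.shape
    return feature_maps.reshape(c, h * w).T
```

For a 224 x 224 input, VGG19's conv5_4 maps are 14 x 14 with 512 channels, so each frame would yield 196 local features of 512 dimensions.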

CNN features (4)

- Encoding feature maps
  - Preprocess the Conv5_4 local features by PCA-whitening with a factor of 2
  - Construct a codebook of size 256 using k-means
  - Apply VLAD encoding, then power + intra + L2 normalization, to obtain video representations

Pipeline: input video → IDT features → Fisher vector → power + intra + L2 norm, and input video → video frames → VGG19 Conv5 → VLAD → power + intra + L2 norm; both representations go to fusion & SVM.
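The VLAD step can be sketched as follows, assuming the codebook has already been learned (for example by k-means) and that each local feature is hard-assigned to its nearest codeword. This is a generic VLAD sketch with a hypothetical function name, not the authors' implementation; normalization is left to a separate step.

```python
import numpy as np

def vlad_encode(features, codebook):
    """Encode N local features (N, D) against K codewords (K, D).

    Returns the unnormalized VLAD vector of length K * D: for every
    codeword, the sum of residuals of the features assigned to it.
    """
    features = np.asarray(features, dtype=float)
    codebook = np.asarray(codebook, dtype=float)
    # Squared Euclidean distances via |f|^2 - 2 f.c + |c|^2, shape (N, K).
    d2 = (
        (features ** 2).sum(axis=1)[:, None]
        - 2.0 * features @ codebook.T
        + (codebook ** 2).sum(axis=1)[None, :]
    )
    assign = d2.argmin(axis=1)
    vlad = np.zeros_like(codebook)
    for k in range(codebook.shape[0]):
        members = features[assign == k]
        if len(members):
            vlad[k] = (members - codebook[k]).sum(axis=0)
    return vlad.reshape(-1)
```

With a codebook of size 256, each codeword's residual sum forms one block, which is the unit that intra-normalization would operate on.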

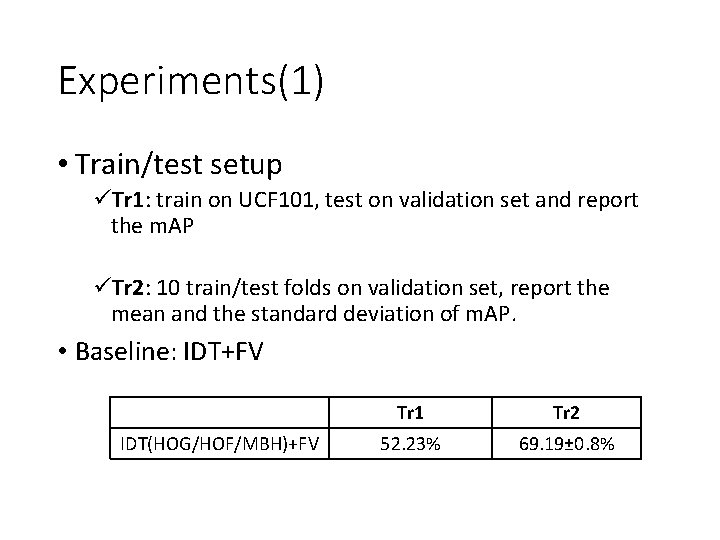

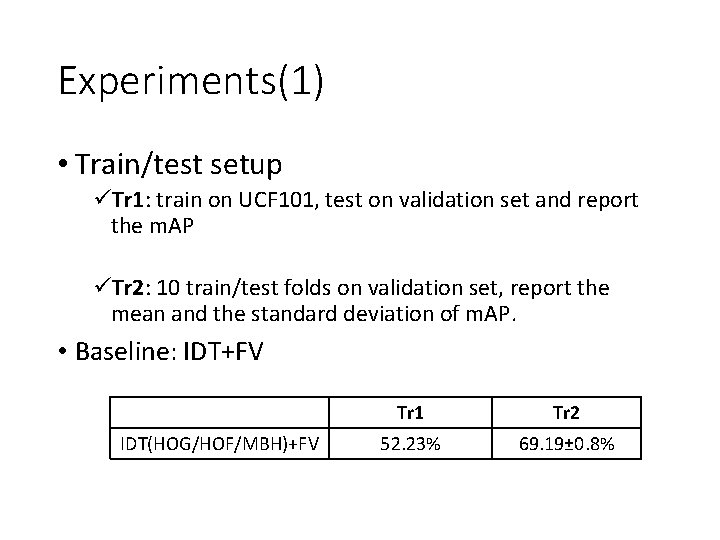

Experiments (1)

- Train/test setup
  - Tr1: train on UCF101, test on the validation set, and report the mAP
  - Tr2: 10 train/test folds on the validation set; report the mean and standard deviation of the mAP
- Baseline: IDT+FV

| Method | Tr1 | Tr2 |
| --- | --- | --- |
| IDT (HOG/HOF/MBH) + FV | 52.23% | 69.19 ± 0.8% |
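How an mAP and its fold statistics are computed can be sketched as below. The sketch uses one common, non-interpolated definition of average precision and the population standard deviation; the challenge's official evaluation may differ on both points, and all function names are hypothetical.

```python
import numpy as np

def average_precision(scores, labels):
    """Non-interpolated AP for one class (labels are 0/1)."""
    scores = np.asarray(scores, dtype=float)
    labels = np.asarray(labels, dtype=float)
    order = np.argsort(-scores, kind="stable")   # highest score first
    ranked = labels[order]
    precision_at_k = np.cumsum(ranked) / np.arange(1, len(ranked) + 1)
    num_pos = ranked.sum()
    if num_pos == 0:
        return 0.0
    # Average the precision values at the ranks of the positives.
    return float((precision_at_k * ranked).sum() / num_pos)

def mean_average_precision(score_matrix, label_matrix):
    """mAP over classes; rows are videos, columns are classes."""
    score_matrix = np.asarray(score_matrix, dtype=float)
    label_matrix = np.asarray(label_matrix, dtype=float)
    aps = [
        average_precision(score_matrix[:, c], label_matrix[:, c])
        for c in range(score_matrix.shape[1])
    ]
    return float(np.mean(aps))

def summarize_folds(fold_maps):
    """Mean and standard deviation of per-fold mAP values (Tr2 style)."""
    fold_maps = np.asarray(fold_maps, dtype=float)
    return float(fold_maps.mean()), float(fold_maps.std())
```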

Experiments (2)

- Evaluation of CNN features and pooling methods

Table 1. Evaluation of Conv5_4 and fc6 without fine-tuning

| Feature | Tr1, avg-pooling | Tr1, max-pooling | Tr1, VLAD | Tr2 |
| --- | --- | --- | --- | --- |
| Conv5_4 | 46.02% | 34.3% | 56.95% | 68.7 ± 1.1% |
| fc6 | 39.38% | 28.38% | - | - |

- Conclusions:
  - Conv5_4 is better than fc6 without fine-tuning
  - The original CNN model is trained to abstract concepts for object classification rather than action recognition
  - Encoding Conv5_4 feature maps significantly outperforms the other pooling methods
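For comparison with VLAD, the average and max pooling baselines of Table 1 reduce all features of a video to a single vector. A minimal sketch, assuming the features of one video are stacked as rows of an (N, D) array; the function names are hypothetical:

```python
import numpy as np

def avg_pool(features):
    """Average-pool N features of dimension D into one D-dim vector."""
    return np.asarray(features, dtype=float).mean(axis=0)

def max_pool(features):
    """Max-pool N features of dimension D into one D-dim vector."""
    return np.asarray(features, dtype=float).max(axis=0)
```

Both keep the descriptor at D dimensions, whereas VLAD with K codewords produces K × D dimensions and retains how features distribute around each codeword.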

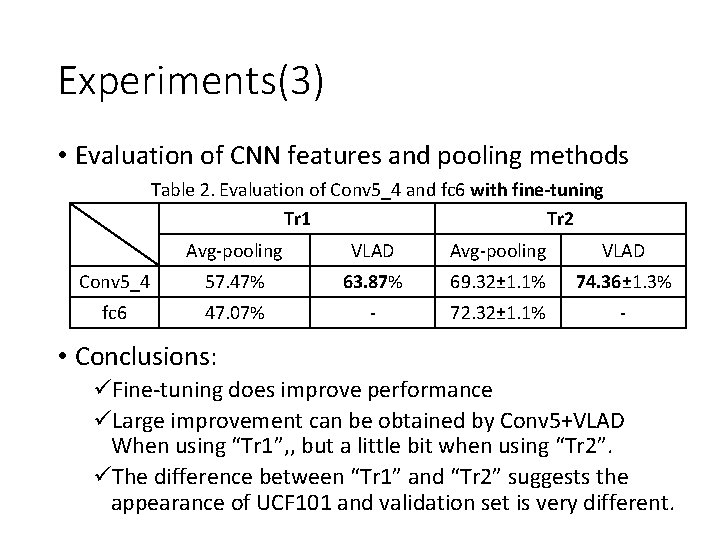

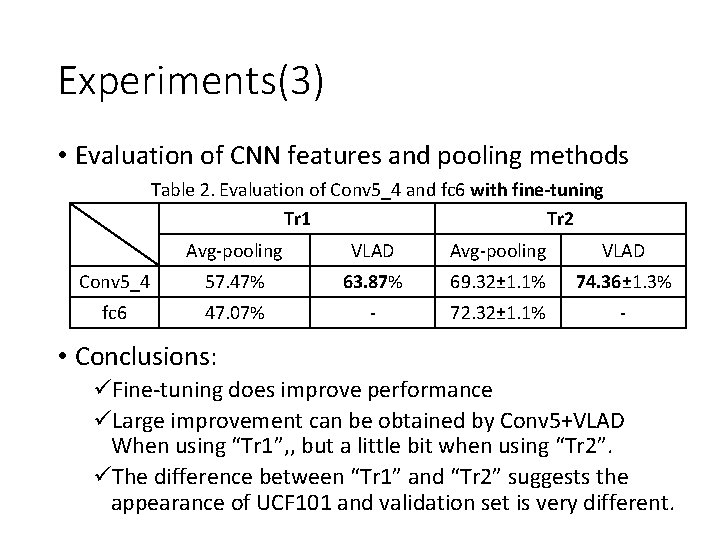

Experiments (3)

- Evaluation of CNN features and pooling methods

Table 2. Evaluation of Conv5_4 and fc6 with fine-tuning

| Feature | Tr1, avg-pooling | Tr1, VLAD | Tr2, avg-pooling | Tr2, VLAD |
| --- | --- | --- | --- | --- |
| Conv5_4 | 57.47% | 63.87% | 69.32 ± 1.1% | 74.36 ± 1.3% |
| fc6 | 47.07% | - | 72.32 ± 1.1% | - |

- Conclusions:
  - Fine-tuning does improve performance
  - Conv5+VLAD gives a large improvement under “Tr1”, but only a small one under “Tr2”
  - The gap between “Tr1” and “Tr2” suggests that the appearance of UCF101 and that of the validation set are very different

Experiments (4)

- Feature combinations

| Index | Method | Tr1 | Tr2 |
| --- | --- | --- | --- |
| 1 | IDT (HOG+HOF+MBH) | 52.23% | 69.19 ± 0.8% |
| 2 | Conv5-VLAD | 63.87% | 74.36 ± 1.3% |
| 3 | Conv5-avg | 57.47% | 69.32 ± 1.1% |
| 4 | fc6-avg | 47.07% | 72.32 ± 1.1% |
| 5 | IDT + Conv5-VLAD | 65.11% | 76.21 ± 1.0% |
| 6 | IDT + Conv5-avg | 62.95% | 75.38 ± 0.8% |
| 7 | IDT + fc6-avg | 58.59% | 76.1 ± 1.0% |
| 8 | IDT + Conv5-VLAD + Conv5-avg | 66.17% | 77.69 ± 0.9% |
| 9 | IDT + Conv5-VLAD + fc6-avg | 64.84% | 79.36 ± 1.0% |
| 10 | IDT + Conv5-VLAD + Conv5-avg + fc6-avg | 66.64% | 79.52 ± 1.1% |
| 11 | IDT + Conv5-VLAD + Conv5-avg + softmax | - | 87.45 ± 0.8% |

Experiments (4)

- Conclusions:
  - CNN features complement the IDT features
  - Every CNN-based method on its own outperforms IDT+FV under “Tr2”
  - Conv5-avg and fc6-avg complement the IDT and Conv5-VLAD features; compare rows 5, 8 and 10 of the table on the previous slide
  - Conv5-avg and fc6-avg capture global information, while Conv5-VLAD does not

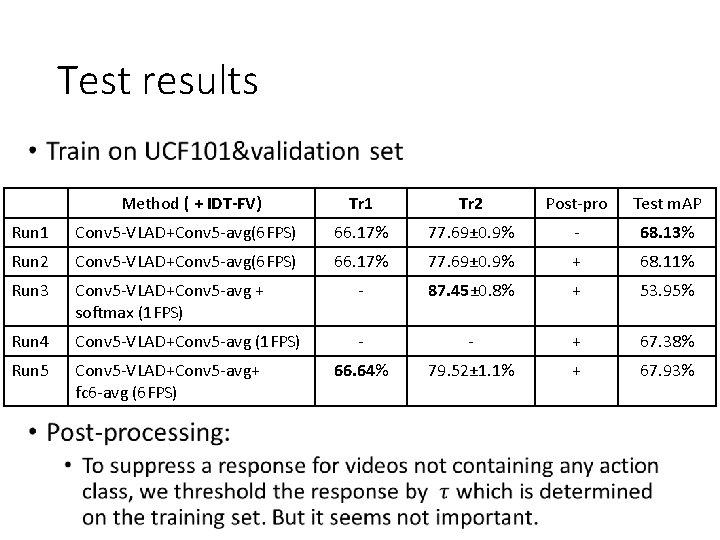

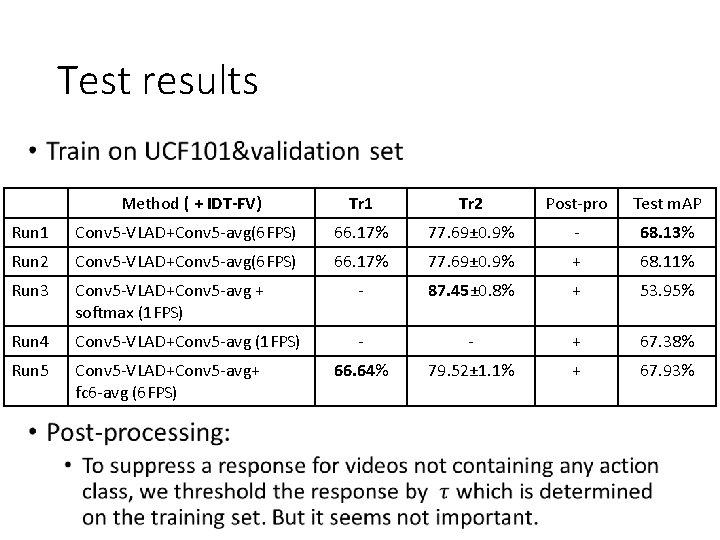

Test results

| Run | Method (+ IDT-FV) | Tr1 | Tr2 | Post-processing | Test mAP |
| --- | --- | --- | --- | --- | --- |
| Run 1 | Conv5-VLAD + Conv5-avg (6 FPS) | 66.17% | 77.69 ± 0.9% | - | 68.13% |
| Run 2 | Conv5-VLAD + Conv5-avg (6 FPS) | 66.17% | 77.69 ± 0.9% | + | 68.11% |
| Run 3 | Conv5-VLAD + Conv5-avg + softmax (1 FPS) | - | 87.45 ± 0.8% | + | 53.95% |
| Run 4 | Conv5-VLAD + Conv5-avg (1 FPS) | - | - | + | 67.38% |
| Run 5 | Conv5-VLAD + Conv5-avg + fc6-avg (6 FPS) | 66.64% | 79.52 ± 1.1% | + | 67.93% |

Conclusions

- Combining fc6 features doesn’t improve test results
- Combining softmax scores leads to overfitting
- The train/test setup is important, since different setups lead to different observations
- Code available at http://lear.inrialpes.fr/software
  - Improved dense trajectories
  - Fisher vector encoding
  - VGG19 fine-tuned model (coming soon)

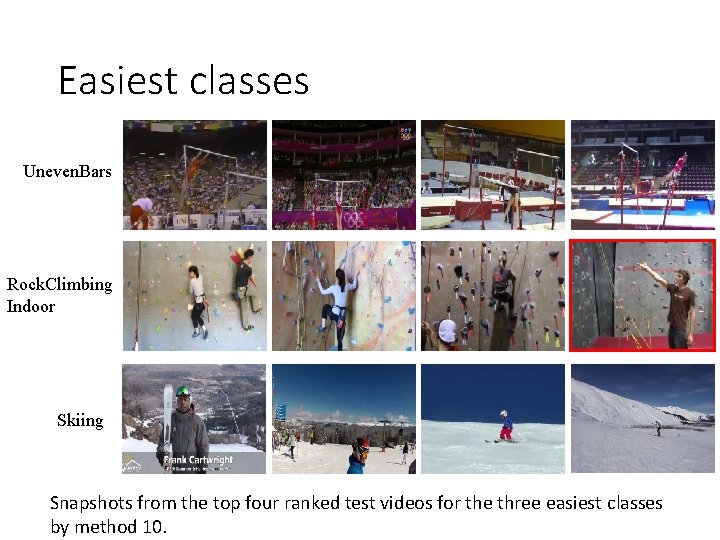

Statistics on validation set (1)

The three easiest classes: UnevenBars, RockClimbingIndoor, Skiing

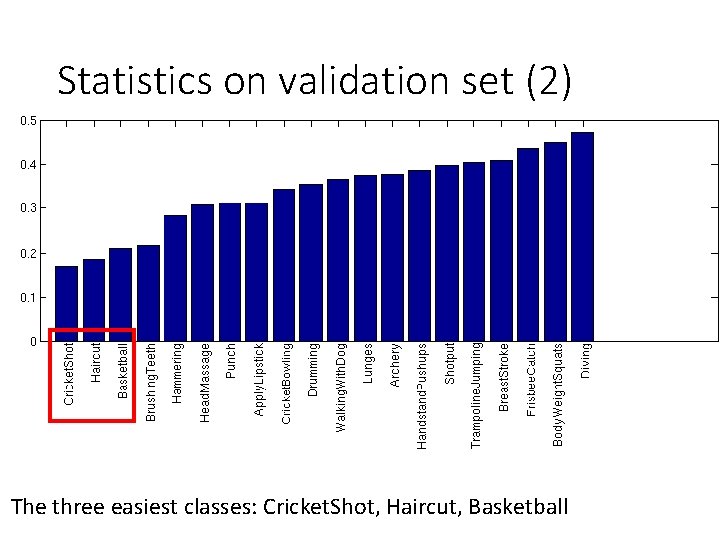

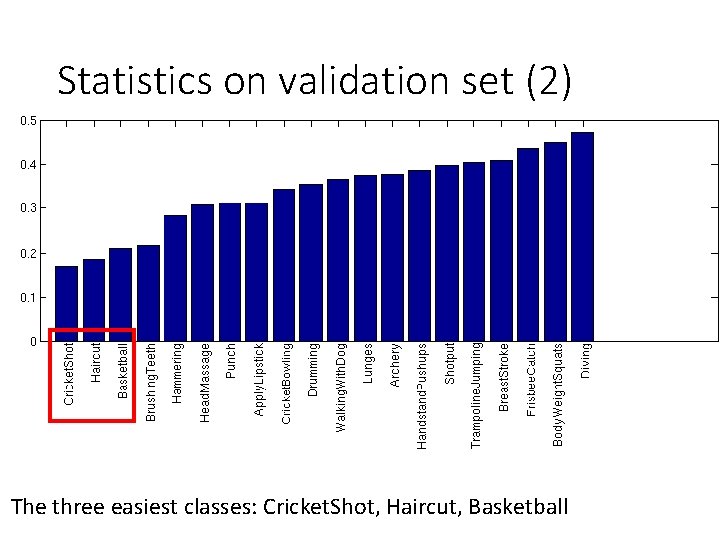

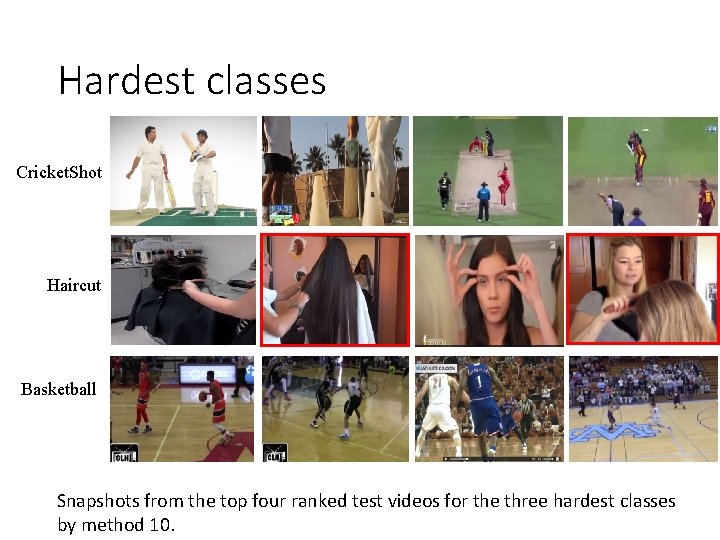

Statistics on validation set (2)

The three hardest classes: CricketShot, Haircut, Basketball

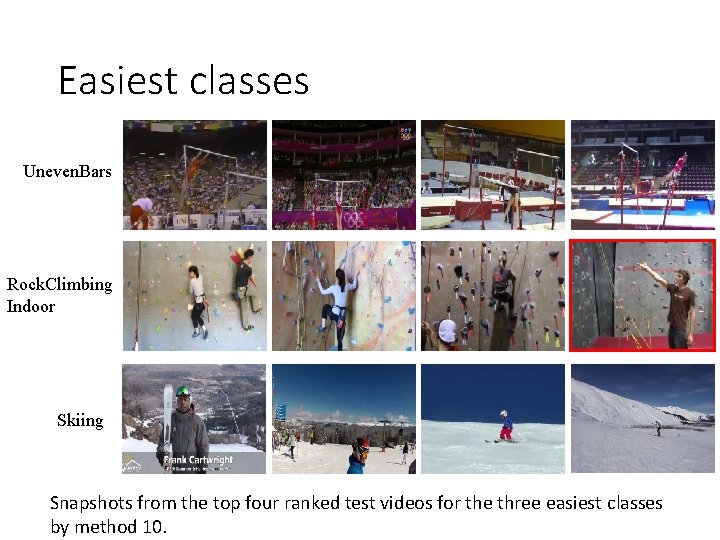

Easiest classes

UnevenBars, RockClimbingIndoor, Skiing

Snapshots from the top four ranked test videos for the three easiest classes by method 10.

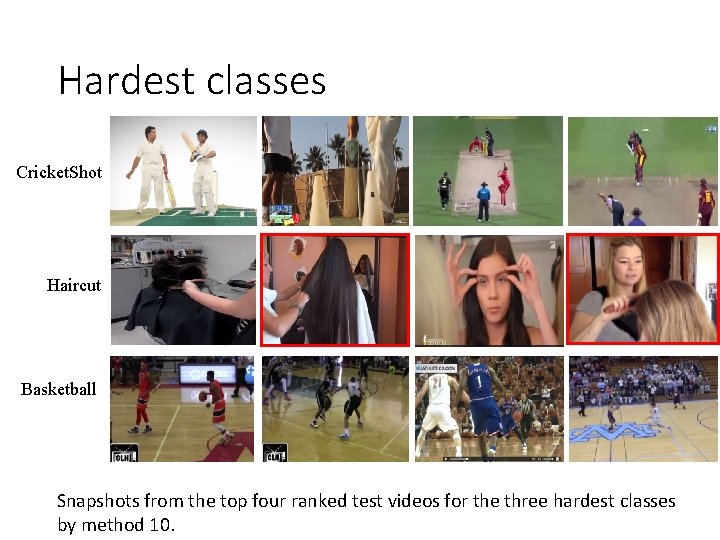

Hardest classes

CricketShot, Haircut, Basketball

Snapshots from the top four ranked test videos for the three hardest classes by method 10.