EGEE and autonomic computing
Enabling Grids for E-sciencE
Cécile Germain-Renaud
Grid Observatory meeting, 19 October 2007, Orsay
www.eu-egee.org
EGEE-II INFSO-RI-031688

e-science infrastructures
• 2003 NSF Atkins Report: Revolutionizing Science and Engineering through Cyberinfrastructure
– Grids of computational centers
– Comprehensive libraries of digital objects
– Well-curated collections of scientific data
– Online instruments and vast sensor arrays
– Convenient software toolkits
• The classical definition of grids
– A computational grid is a hardware and software infrastructure that provides dependable, consistent, pervasive, and inexpensive access to high-end computational capabilities. I. Foster, C. Kesselman, The Grid, 1998

An old example
« Programmers at computer A have a blurred photo which they want to put into focus. Their program transmits the photo to computer B, which specializes in computer graphics (…). If B requires specialized computer assistance, it may call on computer C for help »

Technological facts
• Data acquisition
– LHC: 10 PB/year
– Medical images: 10 TB/centre
– DNA microarrays
• Analysis cost grows more than linearly with the data size
• Computing resources and data storage must be distributed
• Distribution also makes sense from the point of view of technology trends

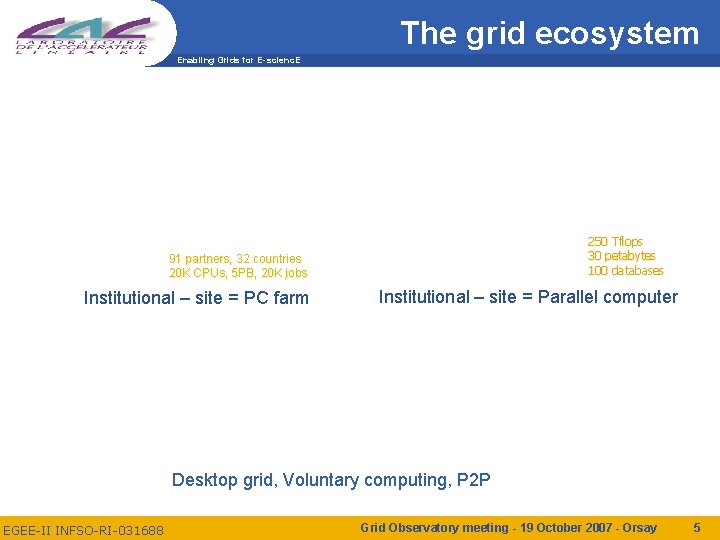

The grid ecosystem
• Institutional, site = parallel computer: 250 Tflops, 30 petabytes, 100 databases
• Institutional, site = PC farm: 91 partners, 32 countries, 20 K CPUs, 5 PB, 20 K jobs
• Desktop grid, voluntary computing, P2P

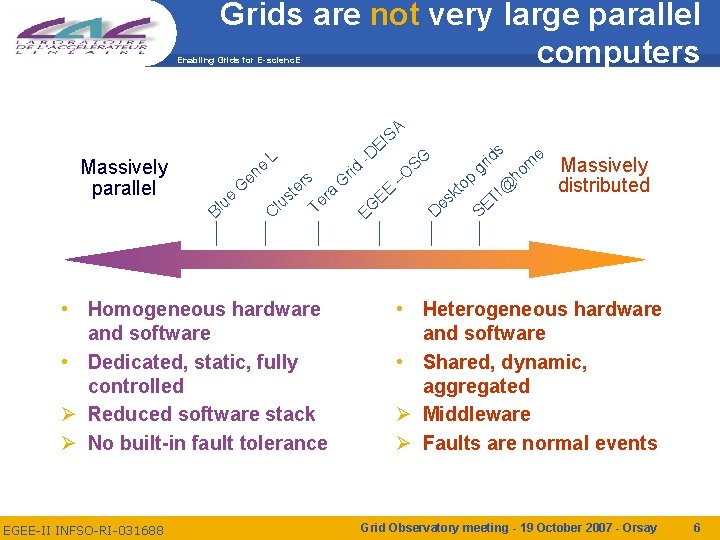

Grids are not very large parallel computers
Figure labels, along an axis from massively parallel to massively distributed: Blue Gene/L, clusters, TeraGrid, DEISA, OSG, EGEE, desktop grids, SETI@home
Massively parallel
• Homogeneous hardware and software
• Dedicated, static, fully controlled
Ø Reduced software stack
Ø No built-in fault tolerance
Massively distributed
• Heterogeneous hardware and software
• Shared, dynamic, aggregated
Ø Middleware
Ø Faults are normal events

Grids are not very large parallel computers
Figure labels, along an axis from massively parallel to massively distributed: Blue Gene/L, clusters, TeraGrid, DEISA, OSG, EGEE, desktop grids, SETI@home
Massively parallel
• CPU-bound applications
Ø Latency
Ø Simple models (N1/2, r∞) or LogP
Massively distributed
• Moldable data-centric applications
• Throughput (more on this later)
Ø Models coupling middleware and applications

Autonomic Computing
Computing systems that manage themselves in accordance with high-level objectives from humans.
Kephart & Chess, The Vision of Autonomic Computing, IEEE Computer, 2003
– Self-*: configuration, optimization, healing, protection
– On open, non-steady-state dynamic systems

Autonomic Grids
• Very large scale, both in geographical extent and in size
• Common goods
– Overall physical infrastructure: gLite tools greatly help in advertising resources
– Each Virtual Organization's share
– Example of abuse: pilot jobs, multiple submissions
• EGEE is a unique opportunity to acquire significant datasets
– Data are already collected for various levels of operational needs

Data Collection and Publication
• Acquisition, consolidation, long-term conservation of traces of EGEE activities
– Permanent storage of reliable, exhaustive, filtered information
– Exhaustive: added value in snapshots of the inputs and grid state, e.g. workload and available services during a relevant time range
– Filtered: from operational to structured
• Publication service: navigation and querying
– Integration of independent sources
– Indexing according to the needs of the user communities
§ Scheduling: ongoing work with CoreGRID
§ Jobs: ongoing work with KDUbik

Data Collection
• Acquisition, consolidation, long-term conservation of traces of EGEE activities
– No monitoring development: rich set of sources, with very different scopes, deployment and institutional status
§ CIC tools (GOCDB, SAM, SFT, …)
§ core gLite (L&B, BDII, …)
§ sites (Maui/PBS logs)
§ gLite integrators (R-GMA, Job Provenance)
§ experiment integrators (Dashboard)
§ external software (MonALISA)
– Centralized “database” in the beginning

Data Collection
• Acquisition, consolidation, long-term conservation of traces of EGEE activities
– Permanent storage of reliable, exhaustive, filtered information: from operational to structured
• In the long term, the major challenge is exhaustiveness
– Some data are outside the scope: external traffic on shared resources
– Inside the scope, we need snapshots of the grid state and inputs
– Privacy-related legal constraints
– Scientific usage will help
– Interaction with EGI
– Long-term: privacy-preserving data mining

Jobs
• Simple
• DAG (directed acyclic graph)
– composed of several simple jobs
– arbitrary mutual dependencies
– handled by Condor DAGMan in WMS
– both the parent job and its subjobs have their own lifecycle, each generating L&B events
– performance limitations
• Collection
– simple container for many subjobs
– no dependencies, but still shared inputs etc.
– handled more efficiently in WMS
– parent job disappears after break-up
– its state is deduced by L&B from the subjobs only
• Annotated lifecycle recorded within the Logging and Bookkeeping (L&B) service
– designed towards operational needs
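
To make the DAG job type concrete, here is a minimal sketch that orders a few subjobs so that each one runs only after the subjobs it depends on. The job names and the dependency map are hypothetical, and the code only illustrates the idea of dependency ordering; in gLite this work is delegated to Condor DAGMan inside the WMS.

```python
from graphlib import TopologicalSorter

# Hypothetical DAG job: each key is a subjob, each value is the set of
# subjobs that must finish before it can start.
dependencies = {
    "reconstruct": {"fetch_raw"},
    "calibrate": {"fetch_raw"},
    "analyse": {"reconstruct", "calibrate"},
}

def submission_order(deps):
    """Return one valid execution order; raises graphlib.CycleError on a cycle."""
    return list(TopologicalSorter(deps).static_order())

order = submission_order(dependencies)
print(order)
```

A collection, by contrast, has no dependencies between subjobs, so any order is valid and no such sorting step is needed.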

The complexities of the L&B
• event order is critical for computing job state
– timestamps are not reliable on the grid: strict clock synchronization cannot be enforced
• an event counter is not sufficient: dead branches of job submission
– addressed by a hierarchical sequence code in the L&B: NS=4:WM=7:JSS=3:LM=6:LRMS=0
• it’s even worse...
– shallow resubmissions allow parallel execution of submission branches
– only one wins, but it may not be the most recent one
– the authoritative branch is not necessarily the one with the highest sequence code
• Thus recovering the job lifecycle is not trivial
Slide courtesy of A. Krenek
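
The contrast between timestamp order and sequence-code order can be illustrated with a small sketch. It parses codes shaped like the one on the slide and sorts events by their counters. The events, the timestamps, and above all the comparison rule (lexicographic over the components in the order shown on the slide) are assumptions made for illustration, not the actual L&B algorithm. The sketch also ignores the shallow-resubmission case, where the highest code is not authoritative.

```python
# Component order taken from the example on the slide; treating it as the
# significance order for comparison is an assumption of this sketch.
COMPONENTS = ("NS", "WM", "JSS", "LM", "LRMS")

def parse_seqcode(code):
    """Turn 'NS=4:WM=7:JSS=3:LM=6:LRMS=0' into a tuple of integer counters."""
    fields = dict(part.strip().split("=") for part in code.split(":"))
    return tuple(int(fields[name]) for name in COMPONENTS)

# Hypothetical events: (timestamp from an unsynchronized clock, sequence code, event name)
events = [
    (1005.0, "NS=4:WM=7:JSS=3:LM=6:LRMS=0", "Running"),
    (1009.0, "NS=4:WM=7:JSS=3:LM=2:LRMS=0", "Transfer"),  # this host's clock runs ahead
    (1001.0, "NS=4:WM=5:JSS=0:LM=0:LRMS=0", "Match"),
]

by_time = [name for ts, code, name in sorted(events)]
by_seqcode = [name for ts, code, name in sorted(events, key=lambda e: parse_seqcode(e[1]))]
print(by_time)     # order suggested by the unreliable timestamps
print(by_seqcode)  # order suggested by the sequence codes
```

In this made-up trace the two orders disagree on whether "Transfer" precedes "Running", which is the kind of ambiguity the sequence code is meant to resolve.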

Job Provenance
• developed at CESNET (JRA1)
• L&B is not, and is not designed for, long-term storage
– data stored as rich structure
– database size affects performance
– index reconfiguration partially interferes with operation
• Job Provenance
– compact storage of huge data
– still allows efficient and frequent queries
– handles changes over time: data format and query patterns
– decoupled architecture: primary storage/index servers
– currently experimental

Data Publication
• Initially, a heap of data « as is »
– Examples based on voluntary contributions
– Goal: bootstrap interactions
• Perspective: ontology
– The Glue Information Model: an ontology of the resources
§ Ongoing integration with the OGF reference model
– Concepts for the grid dynamics, e.g. job lifecycle or user relations
– Expert concepts as prior knowledge of non-trivial correlations: workflows, failure modes, …
– Concepts for elementary analysis

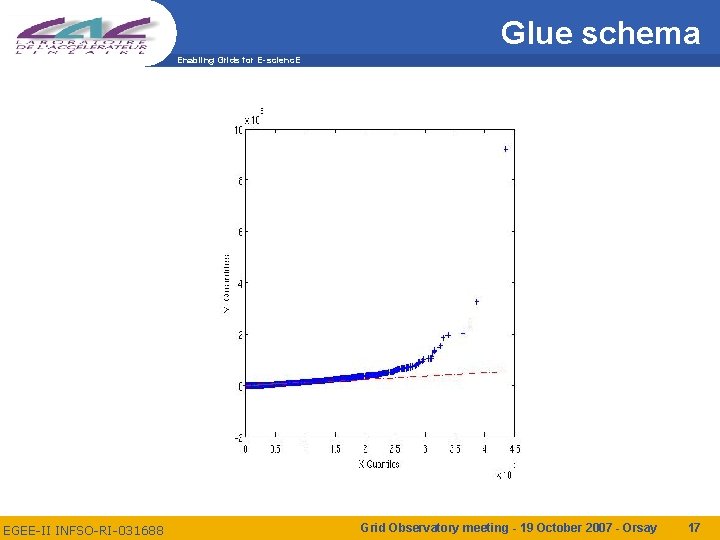

Glue schema

Glue schema: Computing Element

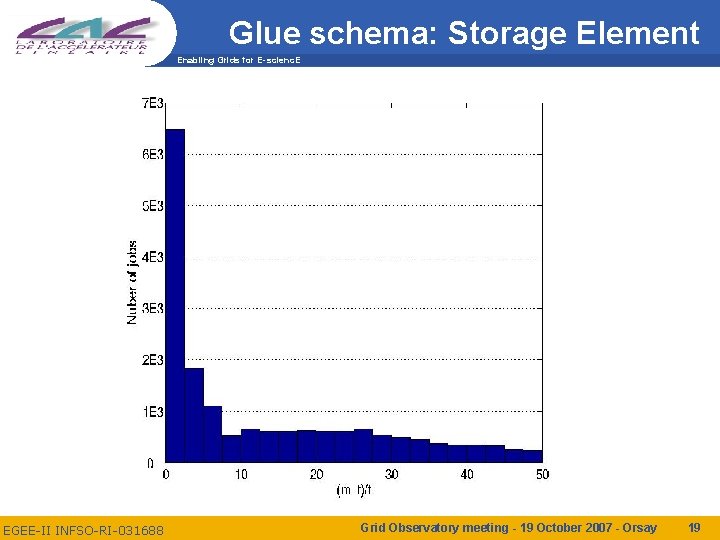

Glue schema: Storage Element

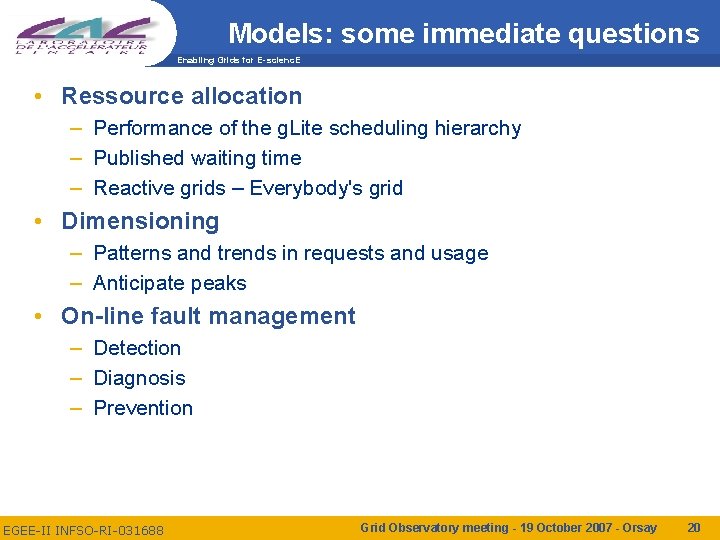

Models: some immediate questions
• Resource allocation
– Performance of the gLite scheduling hierarchy
– Published waiting time
– Reactive grids
– Everybody's grid
• Dimensioning
– Patterns and trends in requests and usage
– Anticipate peaks
• On-line fault management
– Detection
– Diagnosis
– Prevention

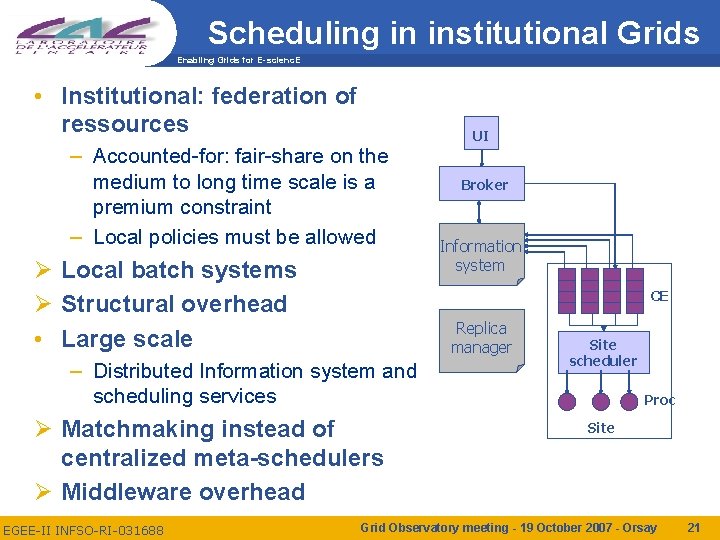

Scheduling in institutional Grids
• Institutional: federation of resources
– Accounted-for: fair-share on the medium to long time scale is a primary constraint
– Local policies must be allowed
Ø Local batch systems
Ø Structural overhead
• Large scale
– Distributed information system and scheduling services
Ø Matchmaking instead of centralized meta-schedulers
Ø Middleware overhead
Figure labels: UI, Broker, Information system, Replica manager, CE, Site scheduler, Proc, Site
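
As a rough illustration of matchmaking, the sketch below filters resource advertisements against a job's requirements and then orders the survivors by a rank expression. The site names, the attribute names (`free_cpus`, `max_wall_h`, `est_wait_s`) and the rank rule are all hypothetical; they are not Glue schema attributes and not the gLite broker's actual logic.

```python
# Hypothetical resource advertisements, as a broker might read them
# from the information system.
sites = [
    {"name": "ce-a", "free_cpus": 0,  "max_wall_h": 48, "est_wait_s": 30},
    {"name": "ce-b", "free_cpus": 12, "max_wall_h": 24, "est_wait_s": 600},
    {"name": "ce-c", "free_cpus": 4,  "max_wall_h": 72, "est_wait_s": 120},
]

def matchmake(job, adverts):
    """Keep the adverts that satisfy the job's requirements, best rank first."""
    candidates = [ad for ad in adverts if job["requirements"](ad)]
    return sorted(candidates, key=job["rank"], reverse=True)

job = {
    "requirements": lambda ad: ad["free_cpus"] > 0 and ad["max_wall_h"] >= 24,
    "rank": lambda ad: -ad["est_wait_s"],  # prefer the shortest published wait
}

matches = matchmake(job, sites)
print([ad["name"] for ad in matches])
```

The decision depends only on what each site publishes, which is why the quality of the published waiting time matters for this style of scheduling.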

Models
• Intrinsic characterizations of « grid traffic »: (distribution of) e.g. job arrival rate, running time, application data locality
– Likely to be similar to IP traffic: many short jobs, and a significant number of long ones, at all scales
– Long-range dependencies

Models
• Intrinsic characterizations of « grid traffic »: (distribution of) e.g. job arrival rate, running time, application data locality
• Characterizations of middleware-dependent metrics, e.g. queuing delays, overhead, SE load

Models for Scheduling
• Considerable work has been done in predicting CPU load in shared environments: desktops, clusters, desktop grids [P. A. Dinda, R. Wolski, J. Schopf]
– The basic technique is linear time-series analysis
– Self-similarity and epochal behavior
– Usual goal is the prediction of the next value
– Applied to soft real-time scheduling on shared clusters
– Practical application in NWS
• Less work on predicting the behavior of dedicated systems
• Papers are on parallel systems, mostly based on time-series techniques, but at least one based on a genetic algorithm [Downey, Foster, Wolski]
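
To show what "prediction of the next value" by linear time-series analysis can look like in its simplest form, here is a sketch of a first-order autoregressive, AR(1), predictor fitted by least squares. The choice of AR(1) and the load values are assumptions for illustration only; the cited work and NWS use richer families of predictors.

```python
def fit_ar1(series):
    """Least-squares fit of x[t] - m = phi * (x[t-1] - m); returns (m, phi)."""
    m = sum(series) / len(series)
    centered = [x - m for x in series]
    num = sum(a * b for a, b in zip(centered[1:], centered[:-1]))
    den = sum(a * a for a in centered[:-1])
    phi = num / den if den else 0.0  # a flat series carries no dynamics to fit
    return m, phi

def predict_next(series):
    """One-step-ahead prediction from the fitted AR(1) model."""
    m, phi = fit_ar1(series)
    return m + phi * (series[-1] - m)

# Hypothetical CPU load measurements on a shared host.
load = [0.42, 0.45, 0.50, 0.48, 0.55, 0.60, 0.58, 0.63]
print(round(predict_next(load), 3))
```

The prediction is pulled from the last observation back towards the series mean, by an amount set by the fitted coefficient.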

Models
• There is more than time series
– Massive use of community programs instead of (?) sparse runs of a very long and complex digital experiment
– Coupled middleware activity
• Inference of models for middleware components and applications, users and usage profiles, user interactions
• First examples in the last two talks

Autonomic dependability
• On-line failure detection and anticipation
• Passive vs. active probing: a lot of information is available from user work
• Black-box
– On-line statistics from « similar » actions (executions, data access, middleware modules)

Autonomic dependability
• On-line failure detection and anticipation
• Passive vs. active probing: a lot of information is available from user work
• Black-box
– e.g. on-line statistics from « similar » actions (executions, data access, middleware modules)
• Interpretation may often be
– Unknown
– Not present in data

Blackhole detection
• Specification: a blackhole is a site fault which results in an ultra-fast (erroneous) execution
• Goal: on-line detection of blackholes
– alarm
• Observed quantities
– Job arrival rate and job service rate
– And user and queue distributions?
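
The observed quantities can be derived from a job trace in a few lines. The sketch below counts arrivals and completions per fixed time window. The record format (pairs of arrival and completion times in seconds), the window length and the trace itself are hypothetical.

```python
from collections import Counter

def rates_per_window(jobs, window_s):
    """Count job arrivals and completions in consecutive windows of window_s seconds.

    jobs: list of (arrival_time_s, completion_time_s) pairs (hypothetical format).
    Returns {window_index: (arrivals, completions)}.
    """
    arrivals = Counter(int(a // window_s) for a, _ in jobs)
    completions = Counter(int(c // window_s) for _, c in jobs)
    windows = sorted(set(arrivals) | set(completions))
    return {w: (arrivals[w], completions[w]) for w in windows}

# Hypothetical site trace: the last three jobs "finish" within seconds of arriving.
trace = [(10, 400), (50, 700), (130, 950), (610, 612), (615, 616), (640, 643)]
rates = rates_per_window(trace, window_s=300)
print(rates)
```

A window in which completions keep pace with, or exceed, a burst of arrivals is the kind of pattern an on-line detector would then have to tell apart from a site that is simply fast.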

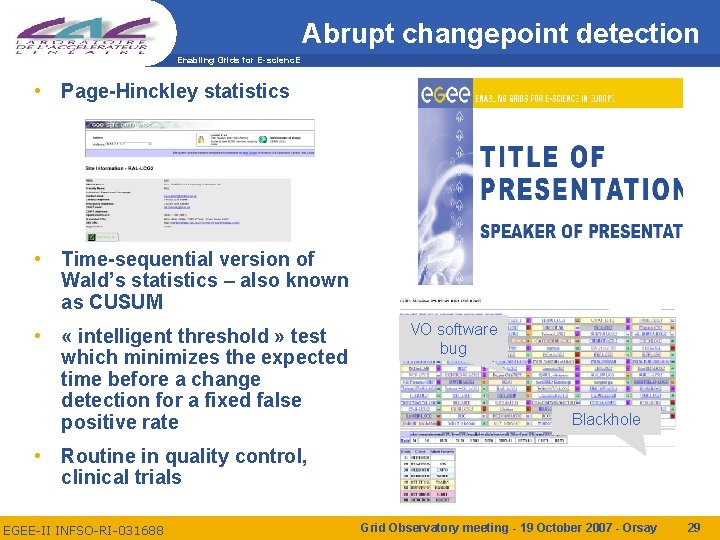

Abrupt changepoint detection
• Page-Hinkley statistics
• Time-sequential version of Wald’s statistics
– also known as CUSUM
• « intelligent threshold » test which minimizes the expected time before a change is detected, for a fixed false positive rate
• Routine in quality control, clinical trials
Figure annotations: VO software bug, Blackhole
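
A minimal sketch of the Page-Hinkley test for an upward shift in the mean follows. It accumulates the deviations of each sample from the running mean, minus a tolerance `delta`, and raises an alarm when the accumulated value exceeds its historical minimum by more than `threshold`. Both parameter values and the sample series are arbitrary choices for illustration; the slides do not give the settings actually used.

```python
def page_hinkley(samples, delta=0.05, threshold=5.0):
    """Return the index of the first alarm for an upward mean shift, or None."""
    mean = 0.0        # running mean of the samples seen so far
    cumulative = 0.0  # m_t: cumulated deviation from the running mean, minus delta
    minimum = 0.0     # M_t: smallest value of m_t seen so far
    for t, x in enumerate(samples, start=1):
        mean += (x - mean) / t
        cumulative += x - mean - delta
        minimum = min(minimum, cumulative)
        if cumulative - minimum > threshold:
            return t - 1
    return None

# Hypothetical job service rate at a site: steady, then an abrupt jump.
service_rate = [1.0] * 20 + [10.0] * 10
print(page_hinkley(service_rate))
```

Raising `threshold` lowers the false alarm rate at the cost of a longer detection delay, which is the trade-off the test is designed to optimize.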

Autonomic dependability
• On-line failure detection and anticipation
• Passive vs. active probing: a lot of information is available from user work
• Black-box
– e.g. on-line statistics from « similar » actions (executions, data access, middleware modules)
• Interpretation may often be
– Unknown
– Not present in data
• Active probing
– Adaptive on-line test selection for best coverage of possibly faulty components [Rish]
– Experiment planning
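
The idea of selecting tests for best coverage can be sketched with a plain greedy set-cover heuristic: repeatedly pick the probe that exercises the most components not yet covered. This is a deliberately simpler stand-in, not the adaptive method of [Rish], and the probe names, the component sets and the budget are hypothetical.

```python
def select_probes(probes, components, budget):
    """Greedily pick up to `budget` probes, each time taking the probe that
    covers the most components not yet covered.

    Returns (chosen probe names, components left uncovered)."""
    uncovered = set(components)
    chosen = []
    while uncovered and len(chosen) < budget:
        # sorted() makes tie-breaking deterministic (alphabetical)
        best = max(sorted(probes), key=lambda p: len(probes[p] & uncovered))
        if not probes[best] & uncovered:
            break  # no remaining probe adds coverage
        chosen.append(best)
        uncovered -= probes[best]
    return chosen, uncovered

# Hypothetical probes and the components each one exercises.
probes = {
    "submit_job": {"WMS", "CE", "LRMS"},
    "copy_file": {"SE", "catalog"},
    "query_bdii": {"BDII"},
    "ce_ping": {"CE"},
}
components = {"WMS", "CE", "LRMS", "SE", "catalog", "BDII"}

chosen, uncovered = select_probes(probes, components, budget=2)
print(chosen, uncovered)
```

An adaptive scheme would go further and choose the next probe based on the outcomes of the previous ones, rather than fixing the selection in advance.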

Service Availability Monitoring
