Empirical Studies in Test-Driven Development, Laurie Williams, NCSU


Empirical Studies in Test-Driven Development, Laurie Williams, NCSU, williams@csc.ncsu.edu

Agenda

- Overview of Test-Driven Development (TDD)
- TDD Case Studies
- TDD within XP Case Studies
- Summary

© Laurie Williams 2007

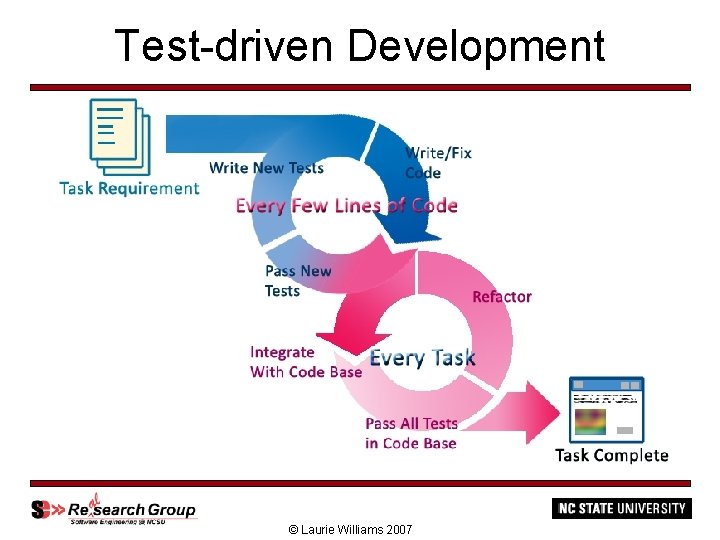

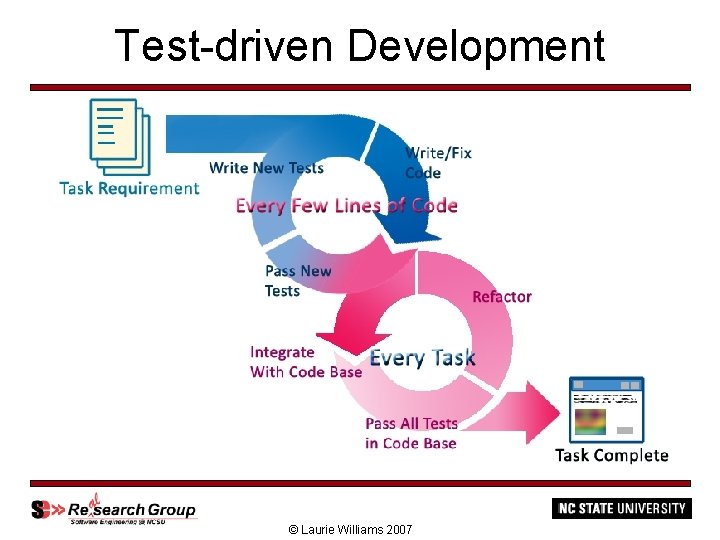

Test-driven Development

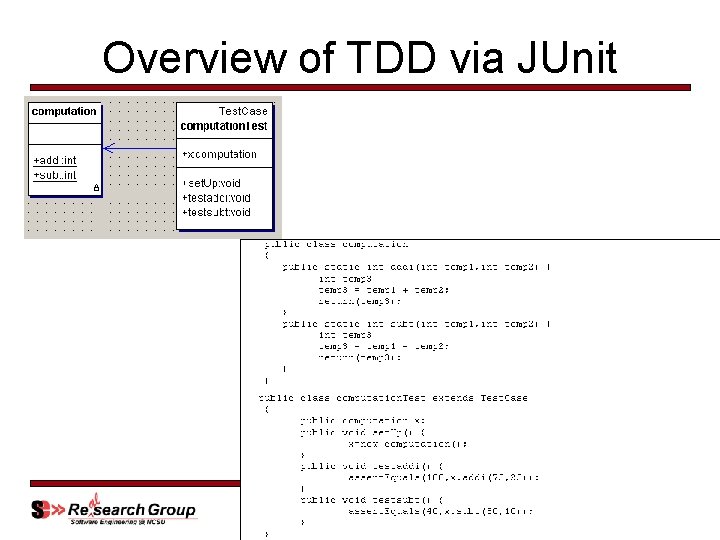

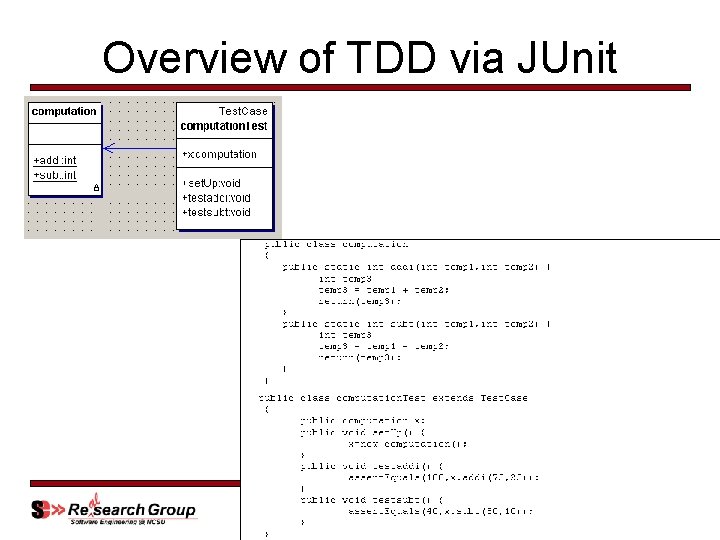

Overview of TDD via JUnit

xUnit Tools
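The two slides above name JUnit and the wider xUnit family but carry no code in this text. As a minimal sketch of one test-first step, the example uses Python's standard `unittest` module, an xUnit-family framework whose documentation describes it as originally inspired by JUnit; it stands in for JUnit here. The `Stack` class and its method names are hypothetical. In TDD the `StackTest` methods are written first and fail until just enough of `Stack` exists to make them pass.

```python
import unittest


class Stack:
    """Hypothetical class under test, written only after its tests (test-first)."""

    def __init__(self):
        self._items = []

    def push(self, item):
        self._items.append(item)

    def pop(self):
        if not self._items:
            raise IndexError("pop from empty stack")
        return self._items.pop()

    def is_empty(self):
        return not self._items


class StackTest(unittest.TestCase):
    # Written before Stack: each test fails ("red") until the code makes it pass ("green").

    def setUp(self):
        # xUnit fixture: every test method gets a fresh Stack
        self.stack = Stack()

    def test_new_stack_is_empty(self):
        self.assertTrue(self.stack.is_empty())

    def test_pop_returns_last_pushed_item(self):
        self.stack.push(1)
        self.stack.push(2)
        self.assertEqual(self.stack.pop(), 2)

    def test_pop_on_empty_stack_raises(self):
        with self.assertRaises(IndexError):
            self.stack.pop()
```

Saved under a hypothetical name such as `test_stack.py`, the suite runs with `python -m unittest test_stack`. The per-test `setUp` fixture is the same idea JUnit provides, so each test starts from a known state and tests cannot depend on one another.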


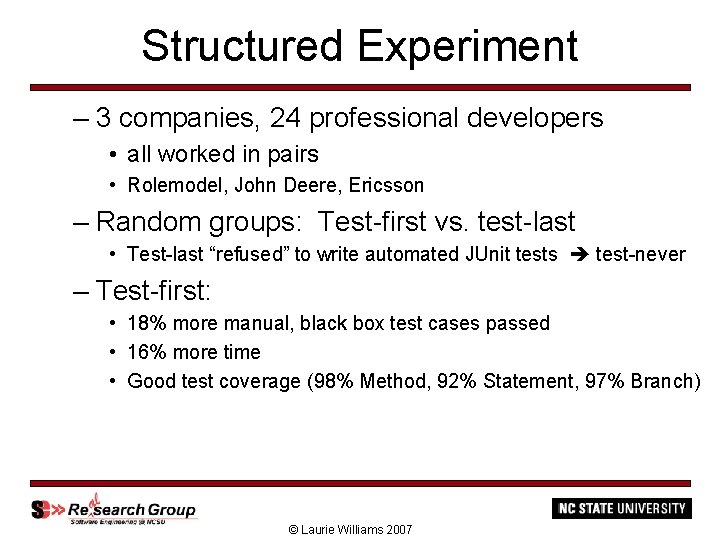

Structured Experiment

- 3 companies, 24 professional developers
  - All worked in pairs
  - Rolemodel, John Deere, Ericsson
- Random groups: test-first vs. test-last
  - The test-last group "refused" to write automated JUnit tests, so in practice it became a test-never group
- Test-first group:
  - Passed 18% more manual, black-box test cases
  - Took 16% more time
  - Achieved good test coverage (98% method, 92% statement, 97% branch)

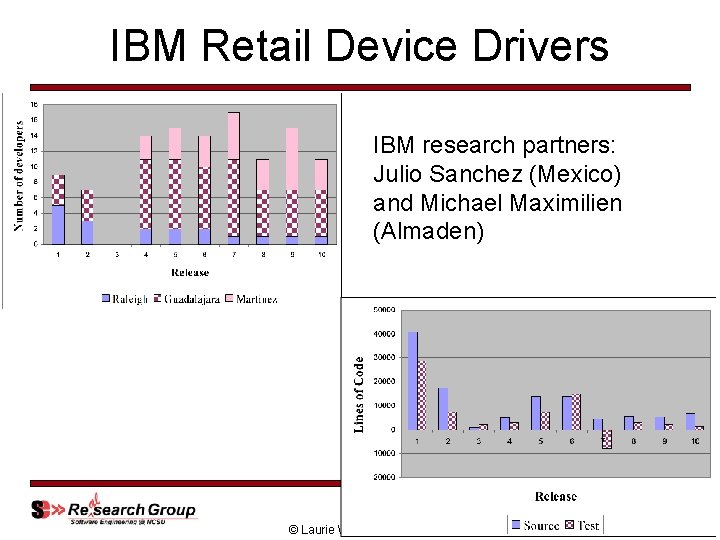

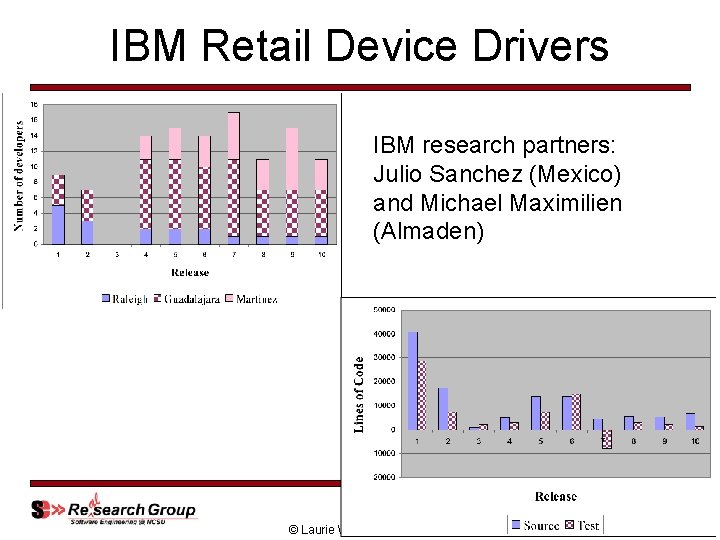

IBM Retail Device Drivers

IBM research partners: Julio Sanchez (Mexico) and Michael Maximilien (Almaden)

Building Test Assets

Defect Density

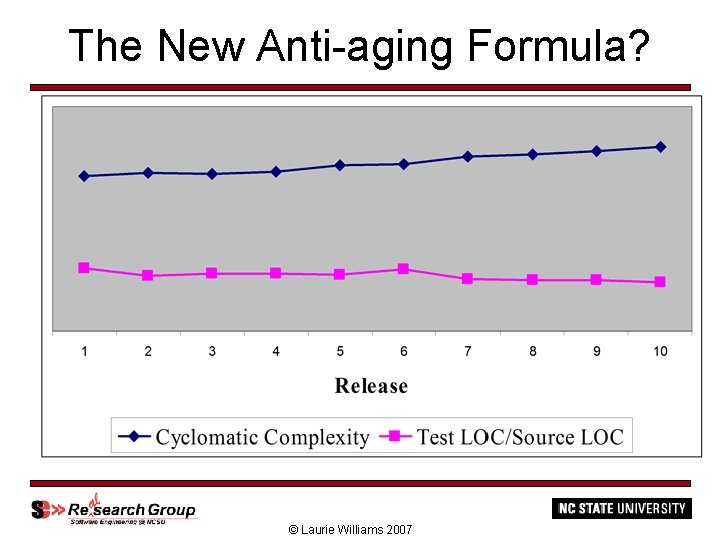

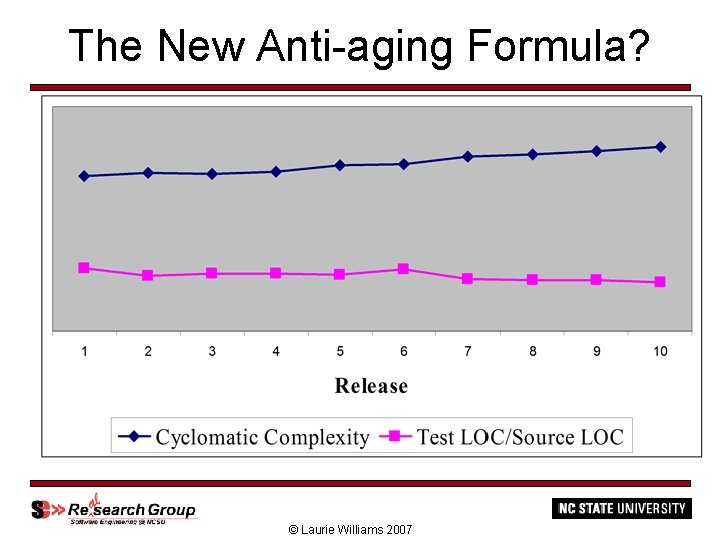

The New Anti-aging Formula?

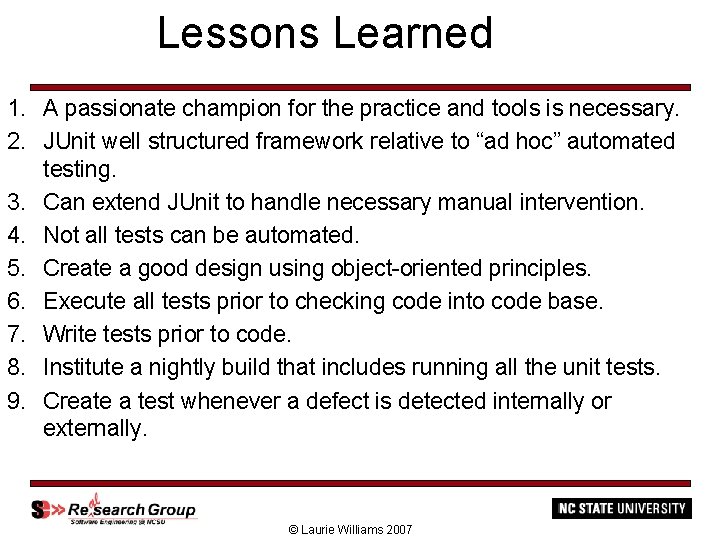

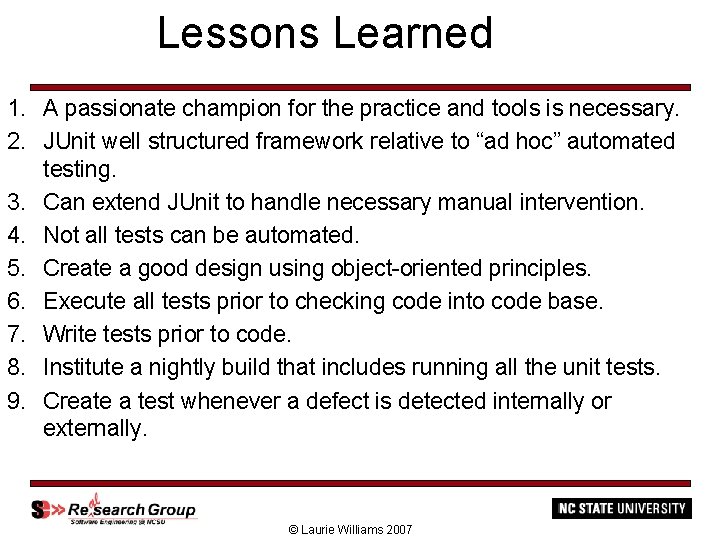

Lessons Learned

1. A passionate champion for the practice and the tools is necessary.
2. JUnit provides a well-structured framework compared with "ad hoc" automated testing.
3. JUnit can be extended to handle necessary manual intervention.
4. Not all tests can be automated.
5. Create a good design using object-oriented principles.
6. Execute all tests before checking code into the code base.
7. Write tests before code.
8. Institute a nightly build that includes running all the unit tests.
9. Create a test whenever a defect is detected, internally or externally.
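Lessons 3, 4, and 9 can be illustrated with a small sketch, again using Python's `unittest` in place of JUnit. Everything named here is hypothetical: `normalize_sku` stands in for code under test, the regression test stands in for a test added after a defect report, and the `RUN_MANUAL_TESTS` environment variable is an assumed switch that keeps a test needing an operator inside the framework while skipping it in unattended runs.

```python
import os
import unittest


def normalize_sku(raw):
    """Hypothetical code under test: canonical form of a product code."""
    # Fix for a hypothetical reported defect: surrounding whitespace and
    # lowercase input used to produce codes that failed to match.
    return raw.strip().upper()


class NormalizeSkuTest(unittest.TestCase):
    def test_already_canonical(self):
        self.assertEqual(normalize_sku("AB-123"), "AB-123")

    def test_regression_whitespace_and_case(self):
        # Lesson 9: a new test for the detected defect, kept in the suite so
        # the defect cannot silently return.
        self.assertEqual(normalize_sku("  ab-123\n"), "AB-123")


# Lessons 3 and 4: a test that needs a person stays in the framework but is
# skipped unless the hypothetical RUN_MANUAL_TESTS switch is set to "1".
MANUAL = os.environ.get("RUN_MANUAL_TESTS") == "1"


class DeviceManualTest(unittest.TestCase):
    @unittest.skipUnless(MANUAL, "requires an operator at the device")
    def test_operator_confirms_output(self):
        answer = input("Did the device produce the expected output? [y/n] ")
        self.assertEqual(answer.strip().lower(), "y")
```

A nightly build (lesson 8) could then run every test module with `python -m unittest discover`; in unattended runs the manual test is reported as skipped rather than failed.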

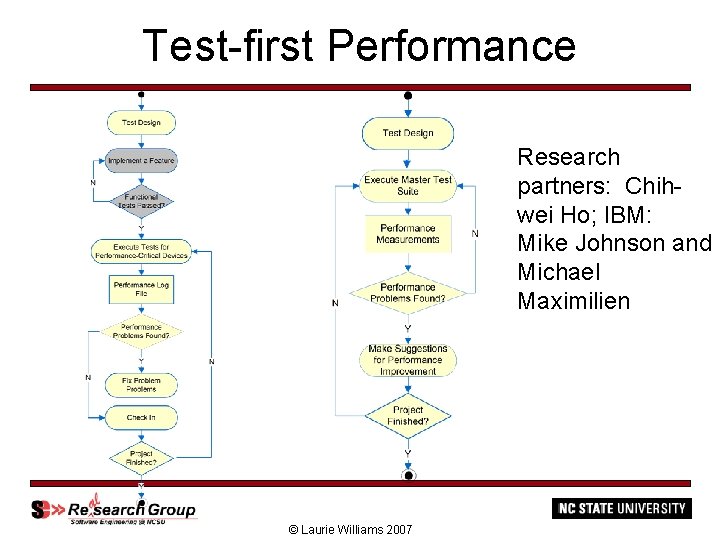

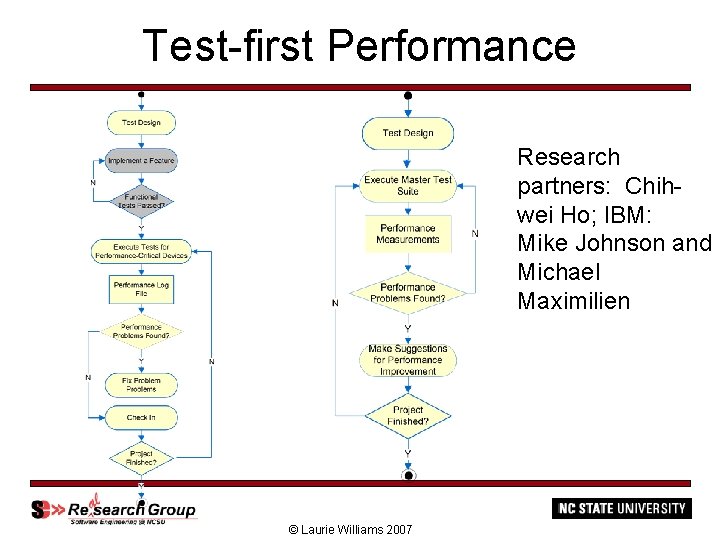

Test-first Performance

Research partners: Chihwei Ho; IBM: Mike Johnson and Michael Maximilien

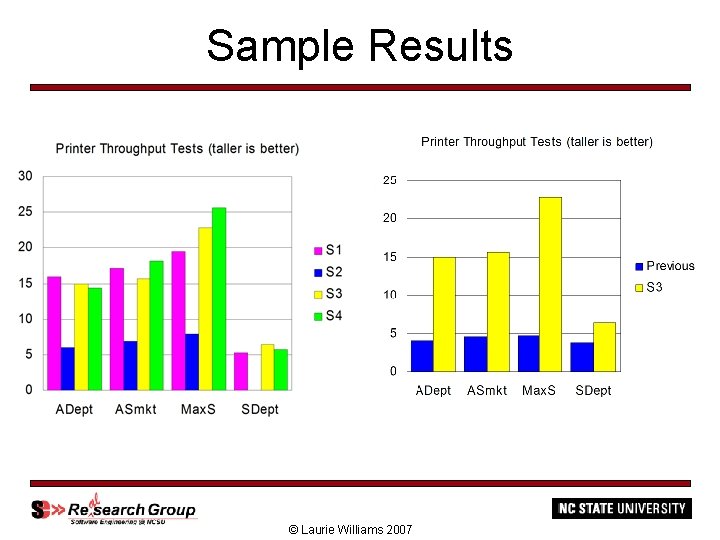

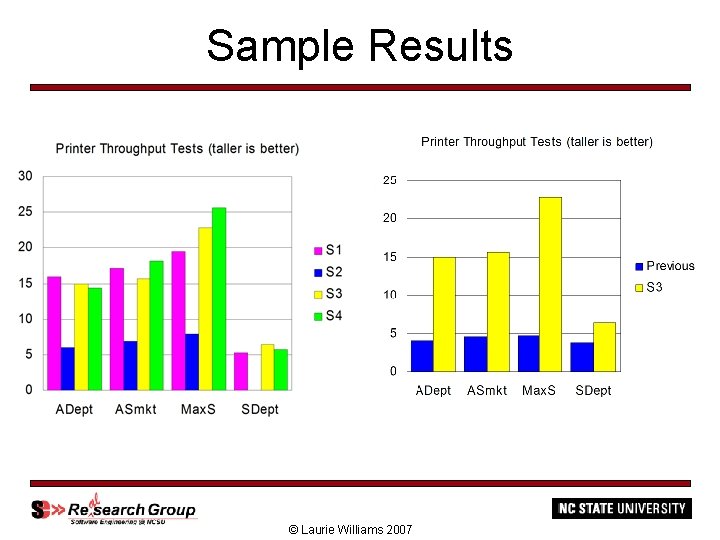

Sample Results


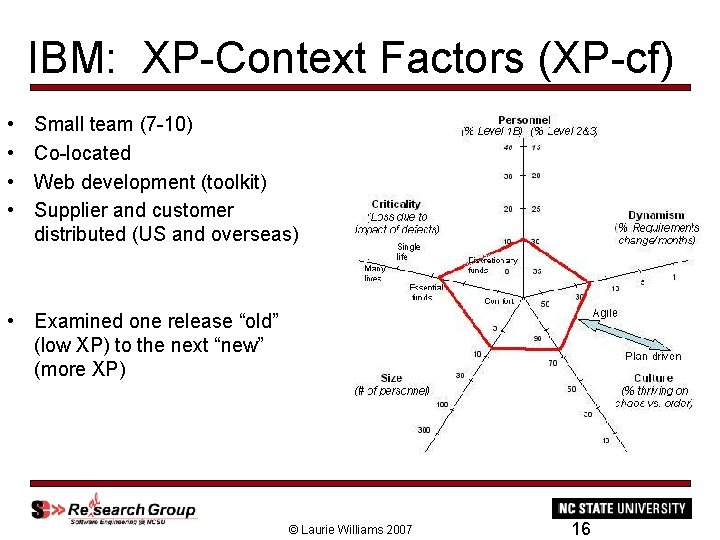

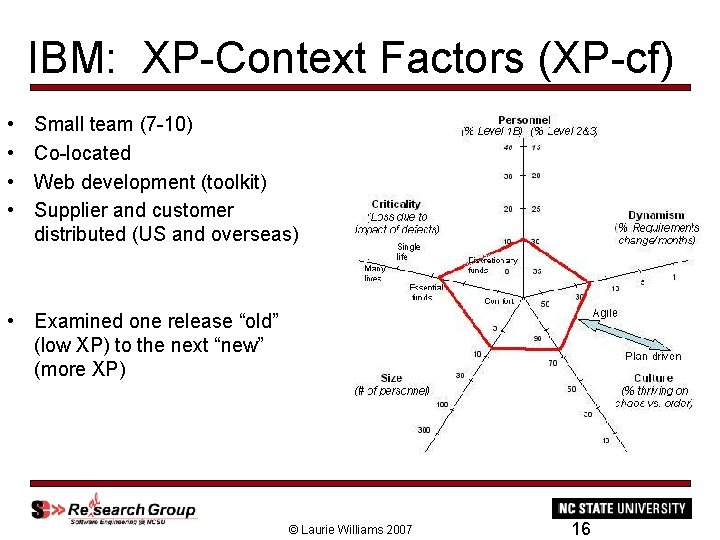

IBM: XP Context Factors (XP-cf)

- Small team (7-10)
- Co-located
- Web development (toolkit)
- Supplier and customer distributed (US and overseas)
- Compared one release ("old", low XP) with the next ("new", more XP)

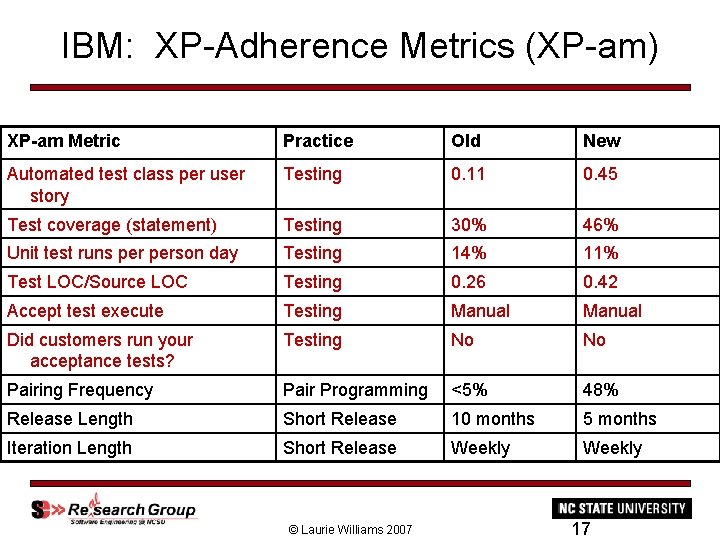

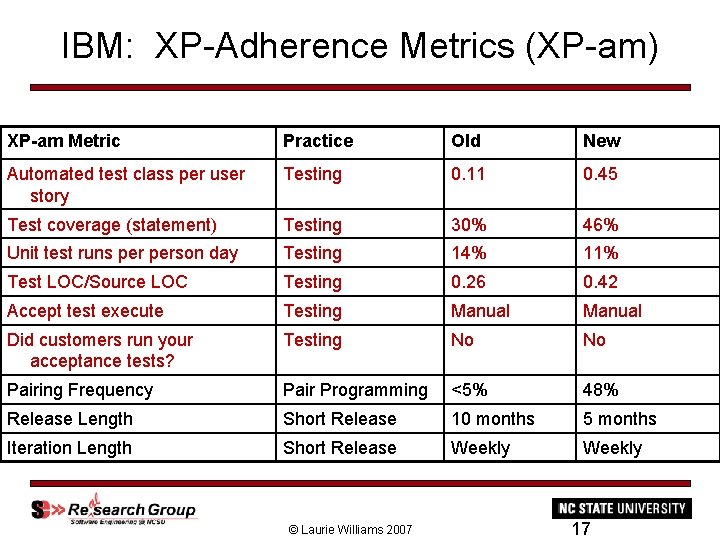

IBM: XP-Adherence Metrics (XP-am)

| XP-am Metric | Practice | Old | New |
|---|---|---|---|
| Automated test classes per user story | Testing | 0.11 | 0.45 |
| Test coverage (statement) | Testing | 30% | 46% |
| Unit test runs per person-day | Testing | 14% | 11% |
| Test LOC / source LOC | Testing | 0.26 | 0.42 |
| Acceptance test execution | Testing | Manual | Manual |
| Did customers run your acceptance tests? | Testing | No | No |
| Pairing frequency | Pair Programming | <5% | 48% |
| Release length | Short Release | 10 months | 5 months |
| Iteration length | Short Release | Weekly | Weekly |

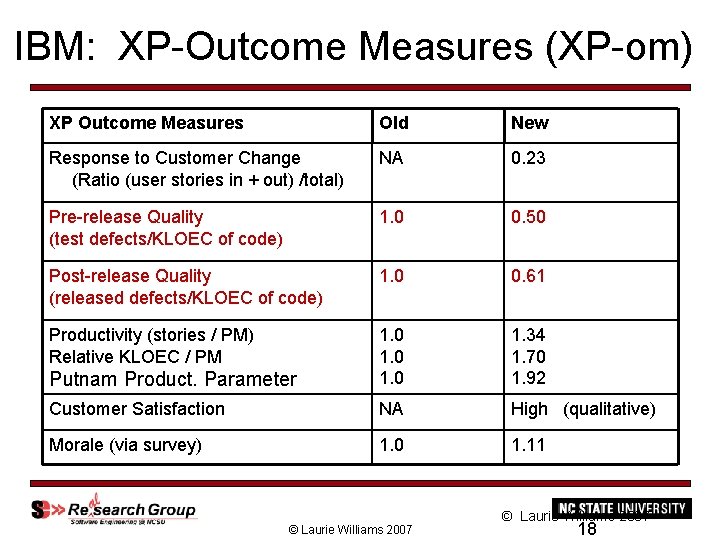

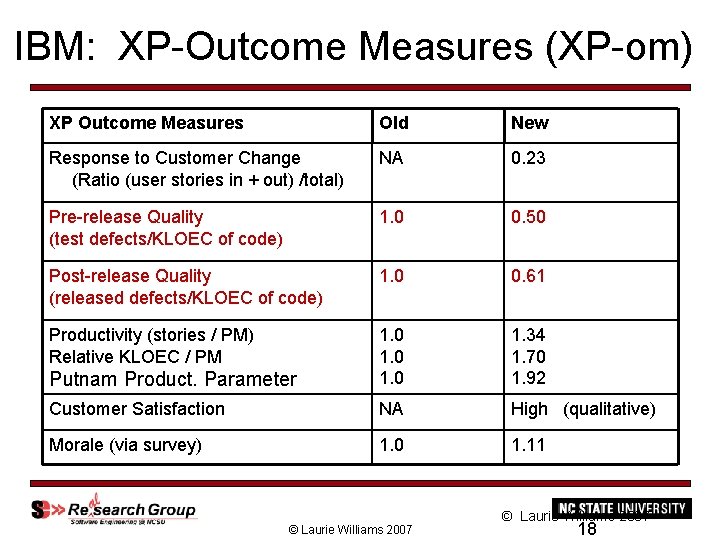

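IBM: XP-Outcome Measures (XP-om)

| XP Outcome Measure | Old | New |
|---|---|---|
| Response to customer change (ratio: (user stories in + out) / total) | NA | 0.23 |
| Pre-release quality (test defects/KLOEC) | 1.0 | 0.50 |
| Post-release quality (released defects/KLOEC) | 1.0 | 0.61 |
| Productivity: stories/PM | 1.0 | 1.34 |
| Productivity: relative KLOEC/PM | 1.0 | 1.70 |
| Productivity: Putnam Productivity Parameter | 1.0 | 1.92 |
| Customer satisfaction | NA | High (qualitative) |
| Morale (via survey) | 1.0 | 1.11 |

KLOEC = thousand lines of executable code; PM = person-month. Where the old release shows 1.0, values are normalized to the old release, so new-release values are relative.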
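The quality and change measures in this table rest on a few simple formulas: defects per KLOEC, a new-release value divided by the old release's value (which is how the old column reads as 1.0), and the response-to-change ratio as labeled. The sketch assumes that reading; the function names and all example numbers are invented, and the denominator of the response ratio is assumed to be the total number of user stories.

```python
def defects_per_kloec(defects: int, executable_loc: int) -> float:
    """Defect density: defects per thousand lines of executable code."""
    return defects / (executable_loc / 1000)


def relative_to_old(new_value: float, old_value: float) -> float:
    """New-release measure relative to the old release, so old == 1.0."""
    return new_value / old_value


def response_to_change(stories_in: int, stories_out: int, total_stories: int) -> float:
    """(user stories added + user stories removed) / total user stories (assumed denominator)."""
    return (stories_in + stories_out) / total_stories


# Invented numbers, for illustration only.
old_density = defects_per_kloec(defects=40, executable_loc=20_000)  # 2.0
new_density = defects_per_kloec(defects=30, executable_loc=30_000)  # 1.0
print(relative_to_old(new_density, old_density))                    # 0.5
print(response_to_change(stories_in=5, stories_out=3, total_stories=40))  # 0.2
```

Under this reading, a relative pre-release quality of 0.50 means the new release had half as many test defects per KLOEC as the old one.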

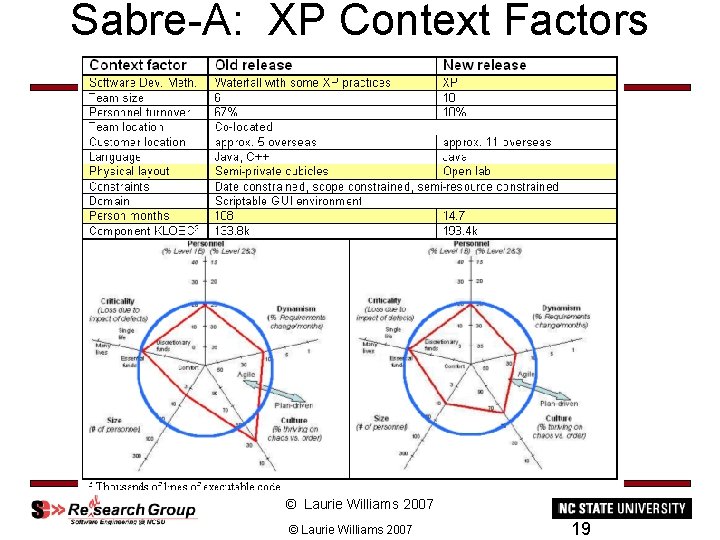

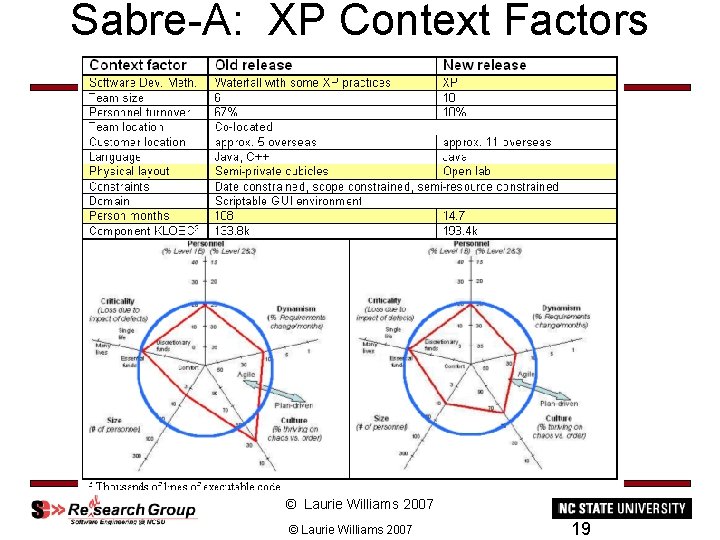

Sabre-A: XP Context Factors (XP-cf)

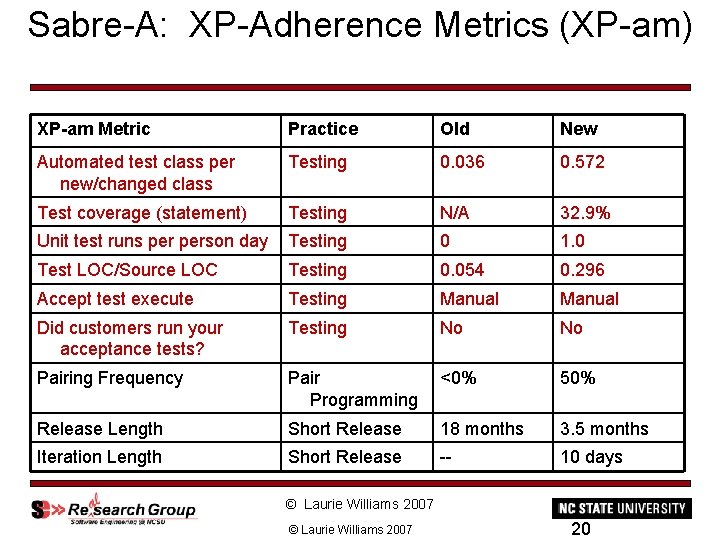

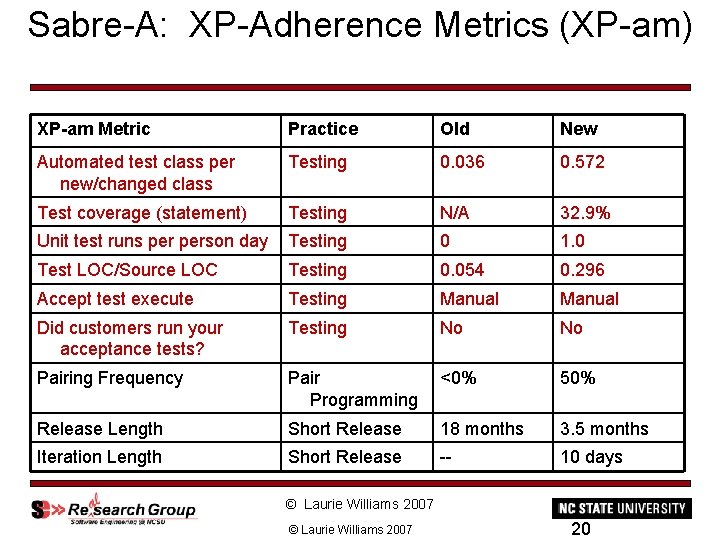

Sabre-A: XP-Adherence Metrics (XP-am)

| XP-am Metric | Practice | Old | New |
|---|---|---|---|
| Automated test classes per new/changed class | Testing | 0.036 | 0.572 |
| Test coverage (statement) | Testing | N/A | 32.9% |
| Unit test runs per person-day | Testing | 0 | 1.0 |
| Test LOC / source LOC | Testing | 0.054 | 0.296 |
| Acceptance test execution | Testing | Manual | Manual |
| Did customers run your acceptance tests? | Testing | No | No |
| Pairing frequency | Pair Programming | <0% | 50% |
| Release length | Short Release | 18 months | 3.5 months |
| Iteration length | Short Release | -- | 10 days |

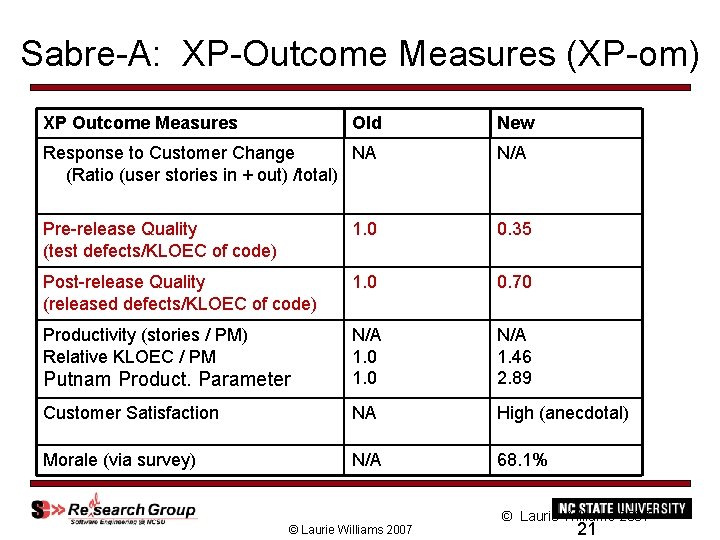

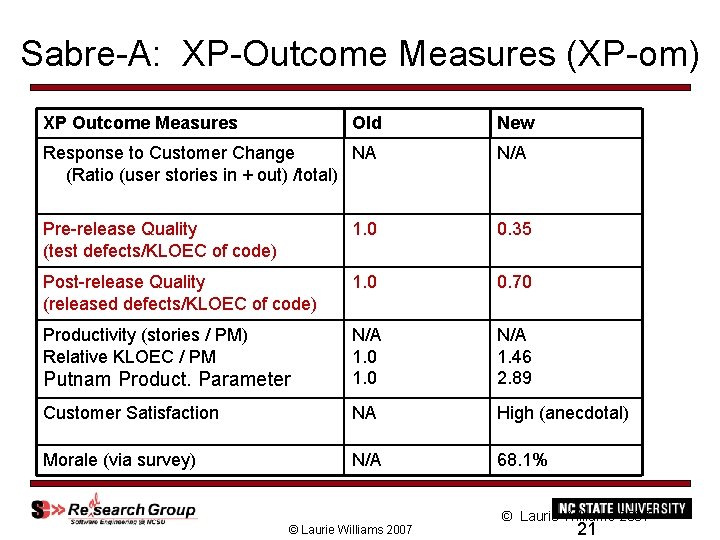

Sabre-A: XP-Outcome Measures (XP-om)

| XP Outcome Measure | Old | New |
|---|---|---|
| Response to customer change (ratio: (user stories in + out) / total) | NA | N/A |
| Pre-release quality (test defects/KLOEC) | 1.0 | 0.35 |
| Post-release quality (released defects/KLOEC) | 1.0 | 0.70 |
| Productivity: stories/PM | N/A | N/A |
| Productivity: relative KLOEC/PM | 1.0 | 1.46 |
| Productivity: Putnam Productivity Parameter | 1.0 | 2.89 |
| Customer satisfaction | NA | High (anecdotal) |
| Morale (via survey) | N/A | 68.1% |

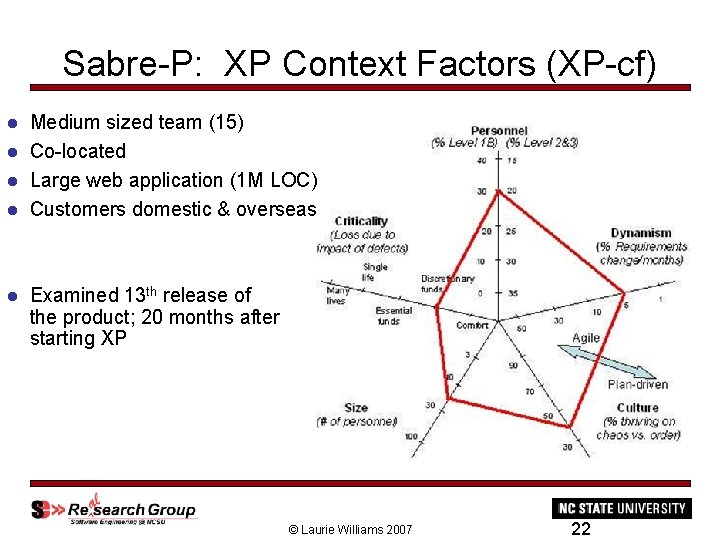

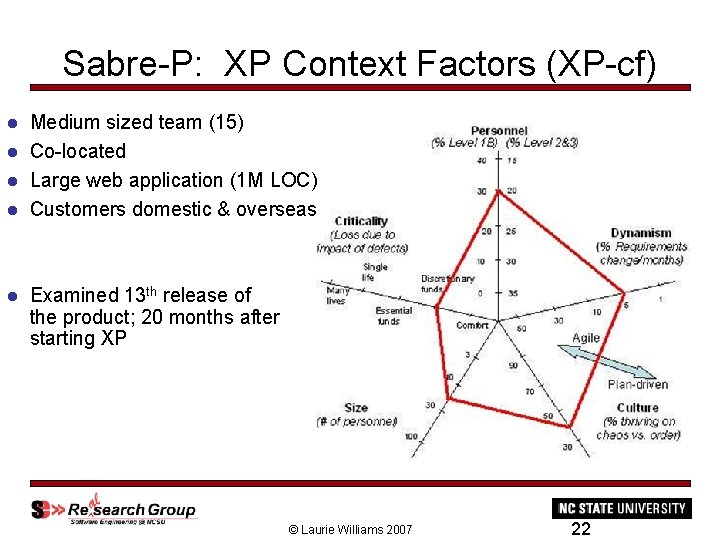

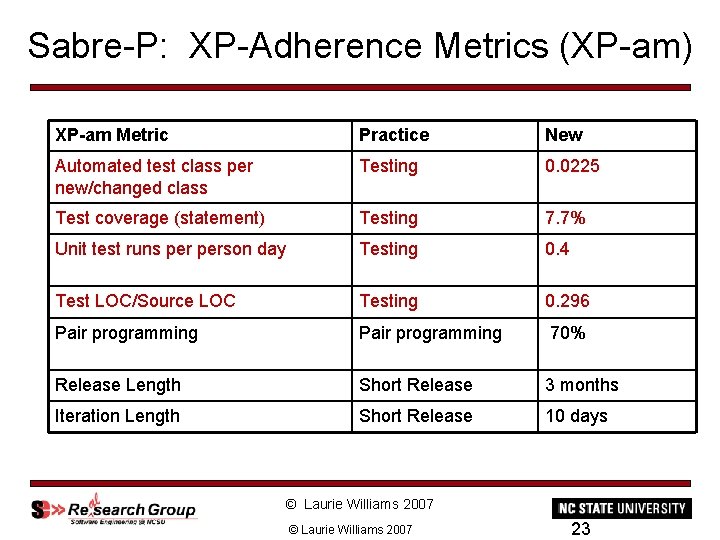

Sabre-P: XP Context Factors (XP-cf)

- Medium-sized team (15)
- Co-located
- Large web application (1M LOC)
- Customers domestic and overseas
- Examined the 13th release of the product, 20 months after starting XP

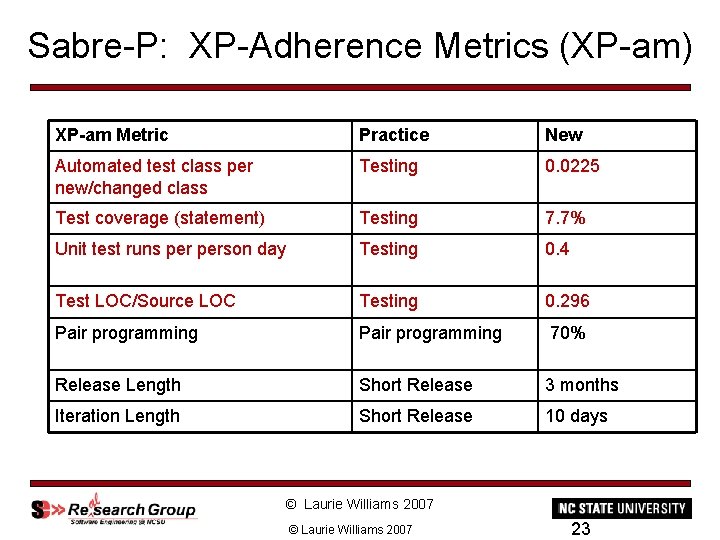

Sabre-P: XP-Adherence Metrics (XP-am)

| XP-am Metric | Practice | New |
|---|---|---|
| Automated test classes per new/changed class | Testing | 0.0225 |
| Test coverage (statement) | Testing | 7.7% |
| Unit test runs per person-day | Testing | 0.4 |
| Test LOC / source LOC | Testing | 0.296 |
| Pairing frequency | Pair Programming | 70% |
| Release length | Short Release | 3 months |
| Iteration length | Short Release | 10 days |

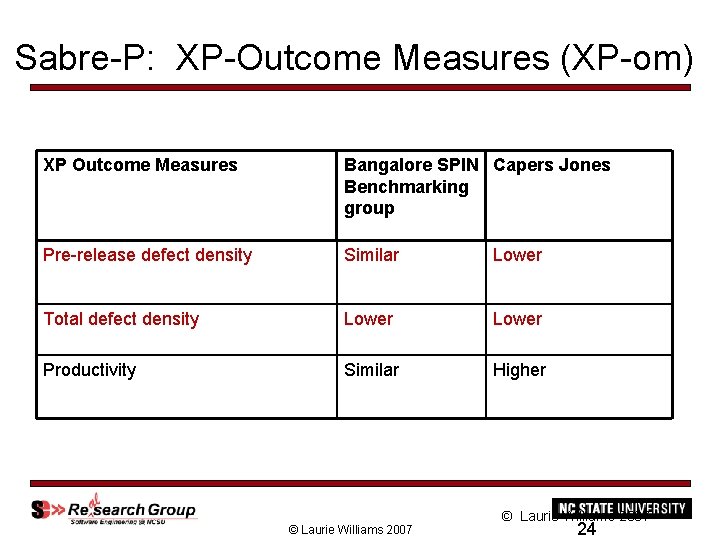

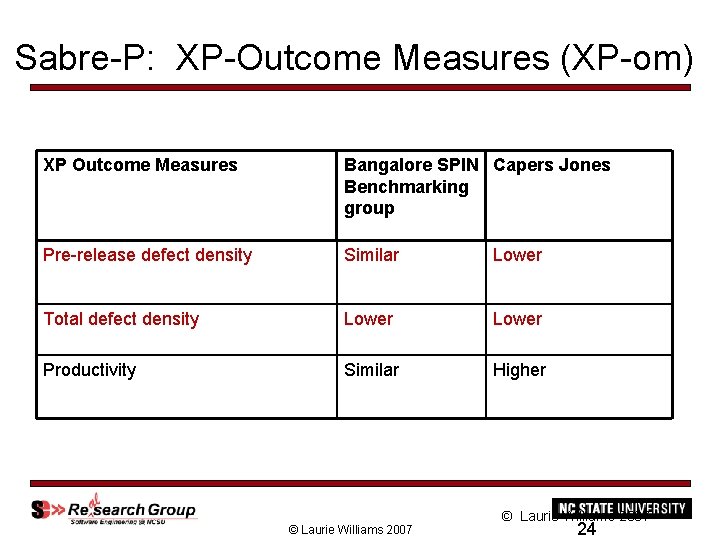

Sabre-P: XP-Outcome Measures (XP-om), compared with the Bangalore SPIN Benchmarking group and Capers Jones

- Pre-release defect density: similar (Bangalore SPIN), lower (Capers Jones)
- Total defect density: lower
- Productivity: similar (Bangalore SPIN), higher (Capers Jones)

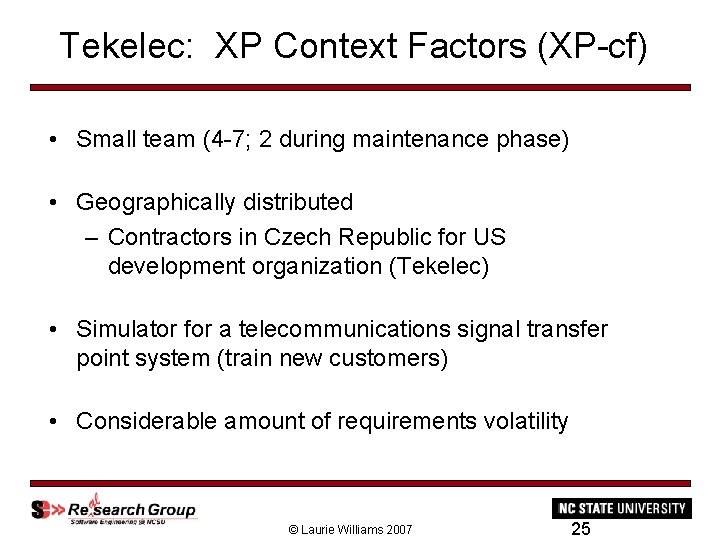

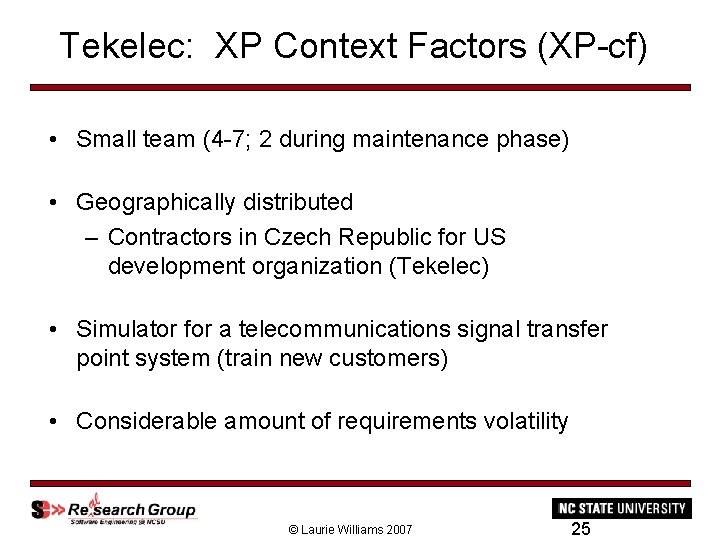

Tekelec: XP Context Factors (XP-cf)

- Small team (4-7; 2 during the maintenance phase)
- Geographically distributed
  - Contractors in the Czech Republic working for a US development organization (Tekelec)
- Simulator for a telecommunications signal transfer point system (used to train new customers)
- Considerable requirements volatility

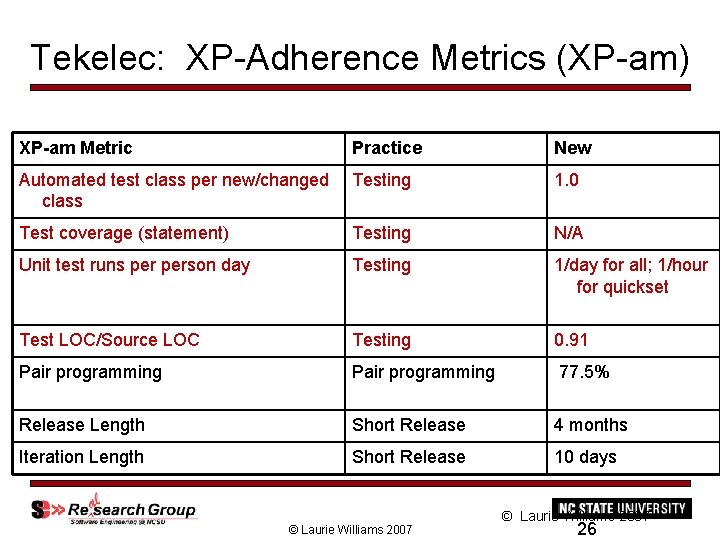

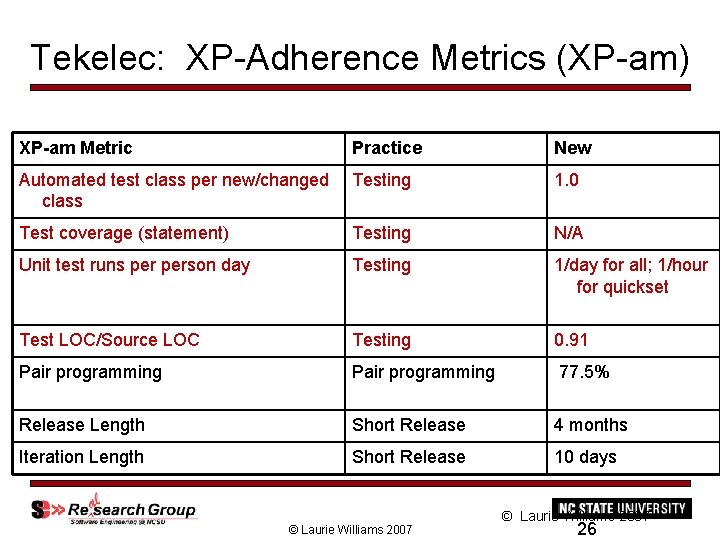

Tekelec: XP-Adherence Metrics (XP-am)

| XP-am Metric | Practice | New |
|---|---|---|
| Automated test classes per new/changed class | Testing | 1.0 |
| Test coverage (statement) | Testing | N/A |
| Unit test runs per person-day | Testing | 1/day for all; 1/hour for quickset |
| Test LOC / source LOC | Testing | 0.91 |
| Pairing frequency | Pair Programming | 77.5% |
| Release length | Short Release | 4 months |
| Iteration length | Short Release | 10 days |

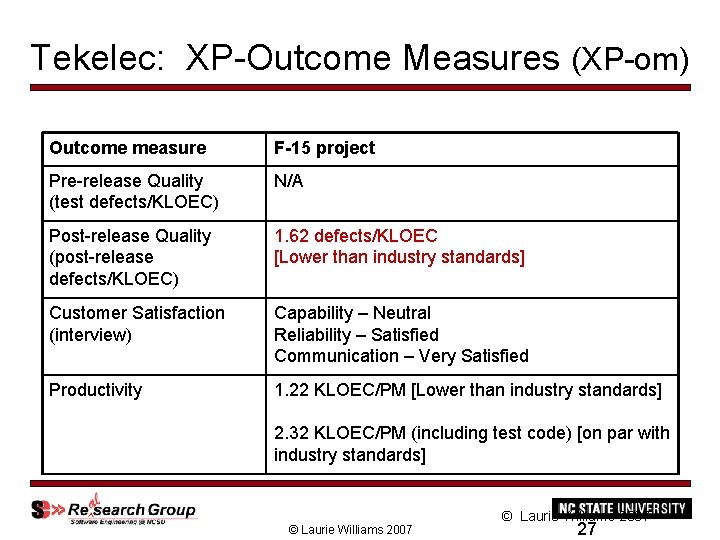

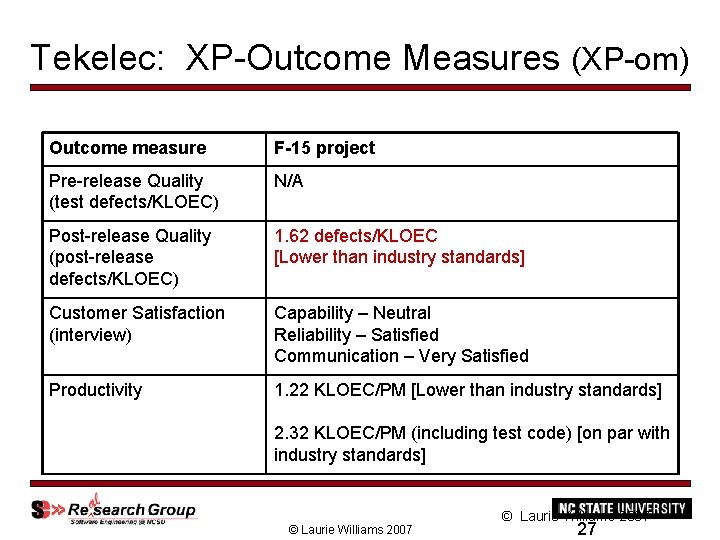

Tekelec: XP-Outcome Measures (XP-om)

| Outcome Measure | F-15 Project |
|---|---|
| Pre-release quality (test defects/KLOEC) | N/A |
| Post-release quality (post-release defects/KLOEC) | 1.62 defects/KLOEC (lower than industry standards) |
| Customer satisfaction (interview) | Capability: neutral; reliability: satisfied; communication: very satisfied |
| Productivity | 1.22 KLOEC/PM (lower than industry standards); 2.32 KLOEC/PM including test code (on par with industry standards) |
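The two productivity figures can be cross-checked with one line of arithmetic. Assuming the gap between them consists entirely of test code, counted the same way as production code (an assumption, not something the slide states), the implied ratio of test code to production code comes out close to the 0.91 Test LOC/Source LOC figure in the Tekelec adherence table.

```python
# Productivity figures from the Tekelec outcome table, in KLOEC per person-month.
production_only = 1.22
including_tests = 2.32

# Assumption: the difference is test code, measured like production code.
test_code = including_tests - production_only
implied_test_to_source = test_code / production_only

print(f"test code: {test_code:.2f} KLOEC/PM")
print(f"implied test/source ratio: {implied_test_to_source:.2f}")
```

This prints about 1.10 KLOEC/PM of test code and an implied ratio of 0.90. The small gap from 0.91 is plausibly down to rounding or to LOC and KLOEC counting lines differently.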

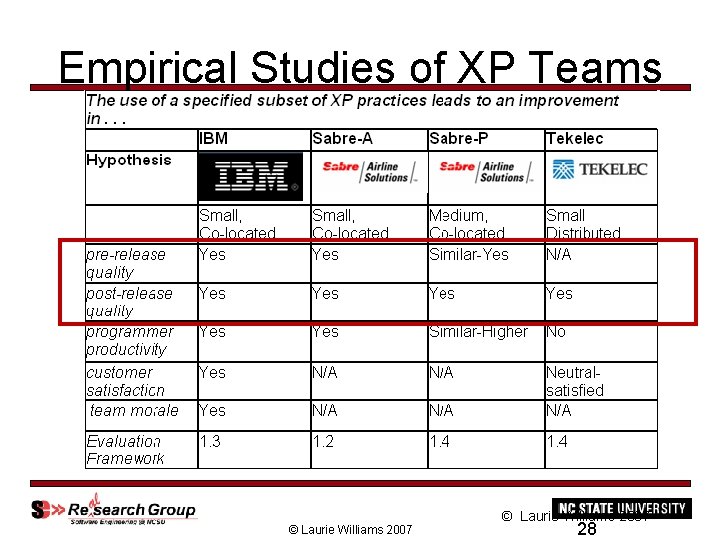

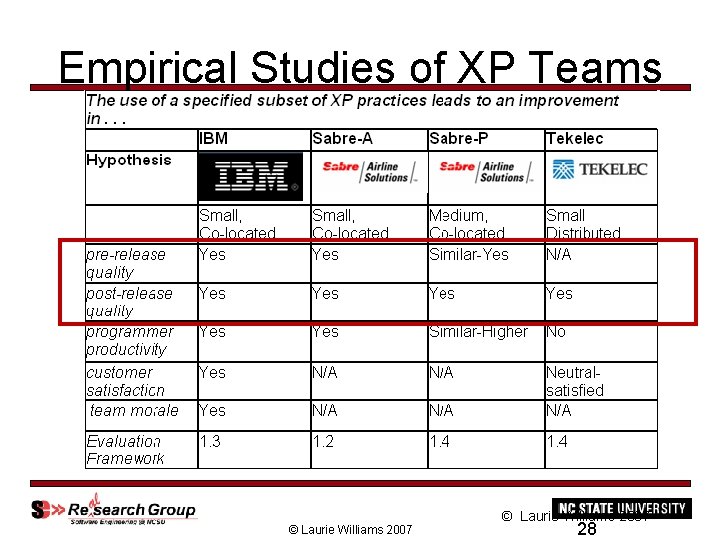

Empirical Studies of XP Teams


Summary

- Increased quality with "no" long-run productivity impact
- Valuable test assets created in the process
- Indications:
  - Improved design
  - Anti-aging