Emerging Database course Second Example of STATISTICAL CODING

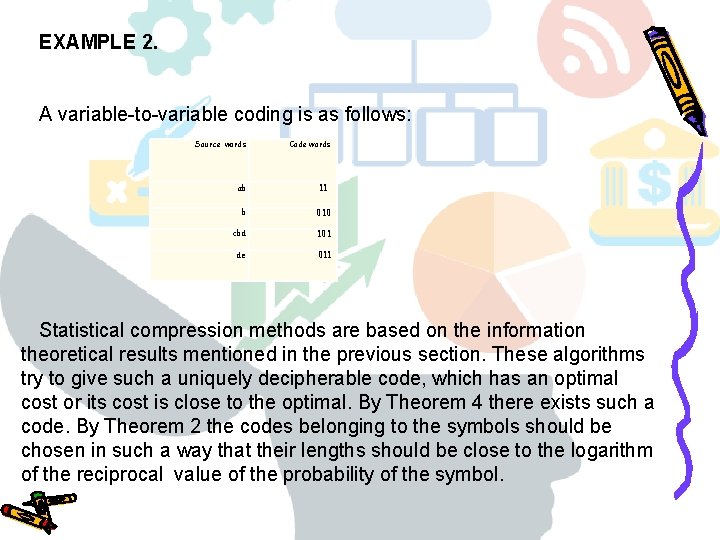

EXAMPLE 2. A variable-to-variable coding is as follows:

| Source words | Code words |
|---|---|
| ab | 11 |
| b | 010 |
| cbd | 101 |
| de | 011 |

Statistical compression methods are based on the information-theoretical results mentioned in the previous section. These algorithms try to construct a uniquely decipherable code whose cost is optimal or close to optimal. By Theorem 4 such a code exists. By Theorem 2 the code words assigned to the symbols should be chosen so that their lengths are close to the logarithm of the reciprocal of the symbol's probability.
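The coding of Example 2 can be tried out with a short sketch. This is a minimal Python illustration, assuming the source string is parsed left to right into the source words of the table; the names `CODE_TABLE`, `encode`, and `decode` are hypothetical. Parsing is unambiguous here because no source word is a prefix of another, and decoding is unambiguous because no code word is a prefix of another, which is what makes this particular code uniquely decipherable.

```python
# Code table of Example 2: variable-length source words -> variable-length code words.
CODE_TABLE = {"ab": "11", "b": "010", "cbd": "101", "de": "011"}


def encode(source: str, table: dict[str, str]) -> str:
    """Parse `source` into source words left to right and concatenate their code words.

    Assumes no source word is a prefix of another (true for this table),
    so at each position at most one source word can match.
    """
    out = []
    i = 0
    while i < len(source):
        for word, code in table.items():
            if source.startswith(word, i):
                out.append(code)
                i += len(word)
                break
        else:
            raise ValueError(f"no source word matches at position {i}")
    return "".join(out)


def decode(bits: str, table: dict[str, str]) -> str:
    """Invert the table; reading bit by bit works because the code words are prefix-free."""
    inverse = {code: word for word, code in table.items()}
    out = []
    buf = ""
    for bit in bits:
        buf += bit
        if buf in inverse:  # a complete code word has been read
            out.append(inverse[buf])
            buf = ""
    if buf:
        raise ValueError("trailing bits do not form a code word")
    return "".join(out)
```

For instance, `encode("abcbdde", CODE_TABLE)` splits the input as ab | cbd | de and returns `"11101011"`, and `decode("11101011", CODE_TABLE)` recovers `"abcbdde"`.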

If the relative frequencies of the characters of the source string can be computed, the optimal code lengths can be derived from them. In practice, the problem is that these logarithms are usually not integers, while a code word always consists of a whole number of bits. Many algorithms were developed to deal with this problem; the most popular are Shannon-Fano coding, Huffman coding, and arithmetic coding. These methods all assume that the probabilities of the symbols are given. In practice, the easiest way to estimate the probabilities is to compute the relative frequencies. As a result, these methods are usually not online: before coding, a preprocessing pass must count the relative frequencies, so the whole string must be known in advance. In the following we present the most widely used statistical compression methods.
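The problem of non-integer lengths can be made concrete with a small sketch. The Python code below estimates probabilities by relative frequencies in a preprocessing pass, computes the ideal length log2(1/p) for each symbol, and rounds each one up to an integer (Shannon's ceil(log2(1/p)) lengths). This rounding is only an illustration of what integer lengths cost; it is not the Shannon-Fano, Huffman, or arithmetic coding algorithm, and the function names are hypothetical. Rounded-up lengths always satisfy the Kraft inequality, so a prefix code with these lengths exists, and their average length L satisfies H ≤ L < H + 1, where H is the entropy.

```python
import math
from collections import Counter
from fractions import Fraction


def code_length_stats(text: str):
    """Return relative frequencies, ideal lengths log2(1/p), and integer lengths ceil(log2(1/p))."""
    counts = Counter(text)  # preprocessing pass over the whole string
    total = len(text)
    probs = {s: c / total for s, c in counts.items()}
    # Ideal (generally non-integer) lengths: log2(1/p) = log2(total / count).
    ideal = {s: math.log2(total / c) for s, c in counts.items()}
    # Integer lengths: smallest l with 2**l >= total / count, i.e. ceil(log2(1/p)),
    # computed with integers to avoid floating-point rounding at exact powers of two.
    lengths = {}
    for s, c in counts.items():
        l = 0
        while (c << l) < total:
            l += 1
        lengths[s] = l
    return probs, ideal, lengths


def kraft_sum(lengths):
    """Exact sum of 2**-l; a prefix code with these lengths exists iff the sum is <= 1."""
    return sum(Fraction(1, 2 ** l) for l in lengths.values())


def entropy(probs):
    """H = sum of p * log2(1/p), the lower bound on the average code length."""
    return sum(p * math.log2(1 / p) for p in probs.values())


def average_length(probs, lengths):
    """Cost of the code: sum of p * l over all symbols."""
    return sum(probs[s] * lengths[s] for s in probs)


if __name__ == "__main__":
    probs, ideal, lengths = code_length_stats("abracadabra")
    print("ideal:", {s: round(v, 3) for s, v in ideal.items()})
    print("integer:", lengths)
    print("Kraft sum:", kraft_sum(lengths))
    print("H =", round(entropy(probs), 3), " L =", round(average_length(probs, lengths), 3))
```

For "abracadabra" the rounded lengths are a: 2, b: 3, r: 3, c: 4, d: 4, the Kraft sum is 5/8, and the average length is 30/11 ≈ 2.73 bits per symbol, compared with an entropy of roughly 2.04 bits.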
