
Embedded System Testing

Testing • Consider how testing techniques might have made your state-machine project implementation go more smoothly – Test the button circuit separately – Test the software with a debugger (instead of on the hardware) – etc.


Testing • You have all [undoubtedly] done software testing • Much of what we talk about here will be similar • Although the differences may be subtle, they can have huge effects on system implementation

Differences • As much as we hate to admit it, more often than not embedded system software is ugly • It’s not that the programmers are subpar; it’s that the system imposes more constraints • What are some of the constraints?

Differences • There’s more to the system than just software • In a pure software system (i.e., a PC-based application) we can safely assume that the hardware is working properly • In an embedded system we’re never quite sure • Thus, we must devise “isolation” techniques
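One common isolation technique is to hide the hardware read behind a function pointer, so the same logic can be exercised on a PC with recorded or simulated input before it ever touches the real circuit. The C below is a minimal sketch under that assumption. The names `read_pin_fn`, `debounce_step`, `replay_read`, and the 3-sample threshold are all hypothetical and are not taken from the original project.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical hardware hook: on the target this would read a GPIO pin;
   on a PC it can be replaced by a function that replays recorded samples. */
typedef bool (*read_pin_fn)(void *ctx);

/* The reported state changes only after the raw input has disagreed
   with it for DEBOUNCE_SAMPLES consecutive samples (assumed value). */
#define DEBOUNCE_SAMPLES 3

typedef struct {
    bool stable;   /* debounced state */
    int  count;    /* consecutive samples that differ from 'stable' */
} debouncer;

bool debounce_step(debouncer *d, read_pin_fn read_pin, void *ctx) {
    bool raw = read_pin(ctx);
    if (raw != d->stable) {
        if (++d->count >= DEBOUNCE_SAMPLES) {
            d->stable = raw;
            d->count = 0;
        }
    } else {
        d->count = 0;   /* a bounce back resets the run */
    }
    return d->stable;
}

/* Host-side stand-in for the pin: replays a fixed sequence of readings. */
typedef struct {
    const bool *samples;
    size_t len;
    size_t pos;
} sample_replay;

bool replay_read(void *ctx) {
    sample_replay *r = ctx;
    bool v = r->samples[r->pos];
    if (r->pos + 1 < r->len) r->pos++;   /* hold the last sample once exhausted */
    return v;
}
```

Because `debounce_step` never touches a register directly, a bouncy press such as `1,0,1,1,1,1` can be replayed through `replay_read` under a debugger; on the target you would pass a function that reads the actual pin.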

Differences • Pure software systems (PC-based applications) are, for the most part, deterministic systems – They [pretty much] know what to expect and when to expect it • Embedded systems generally must react to events at arbitrary times – Example? • How does this affect testing?

Differences • PC-based applications spend a lot of time sitting around waiting for the user to do something • Many embedded systems must operate in real time

Differences • PC-based applications have an operating system to guide them along – This can be good in that the OS does a lot of bookkeeping for us – This can be bad in that the application is sharing the underlying OS and hardware with other applications • Embedded systems may or may not have an OS

Difficulties • Real-time and concurrency are often difficult to design and difficult to test • Resource constraints (speed, size, …) are often difficult to design and test • Testing tools are intrusive • Reliability is absolutely critical • All of these “traits” exist within an embedded system design

Bottom Line…

PC Based Testing

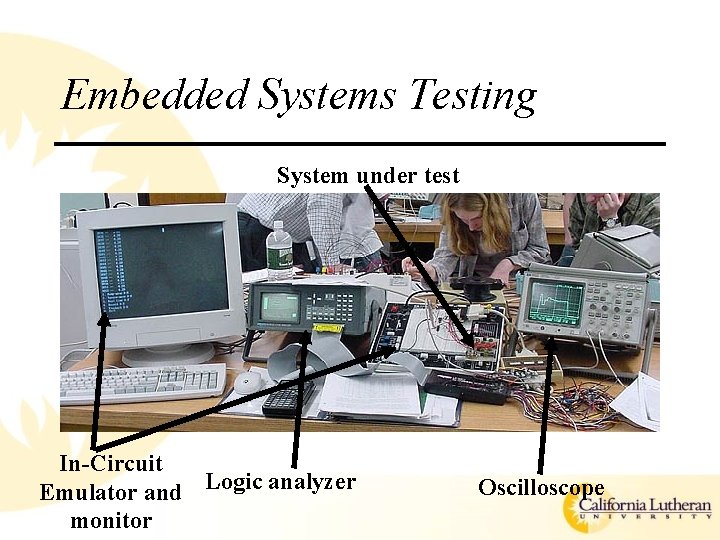

Embedded Systems Testing (figure: system under test; in-circuit emulator and logic analyzer; monitor; oscilloscope)

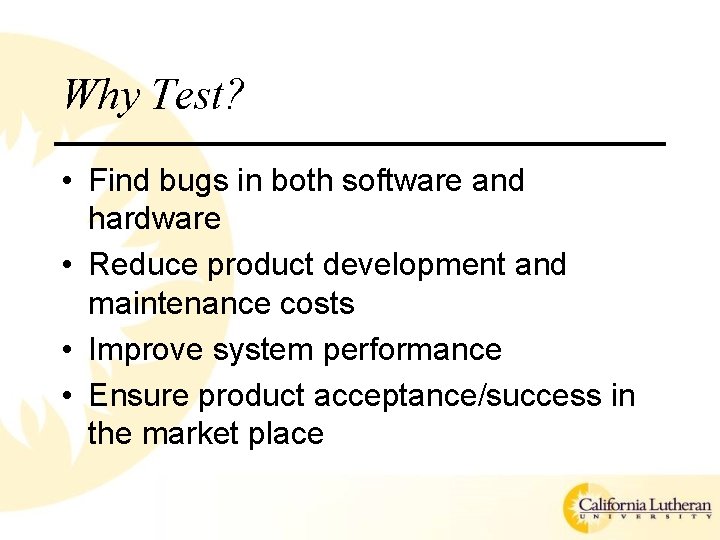

Why Test? • Find bugs in both software and hardware • Reduce product development and maintenance costs • Improve system performance • Ensure product acceptance/success in the marketplace


Finding Bugs • It is theoretically impossible to prove [in the general case] that a program is correct – This follows from the undecidability of “The Halting Problem” • But testing can prove that a program is incorrect (a single failing test is a counterexample) • The goal of testing is to detect all the possible ways that a system might do the wrong thing • Don’t fall into the trap of telling yourself “the system is simple, there’s no need to test it”

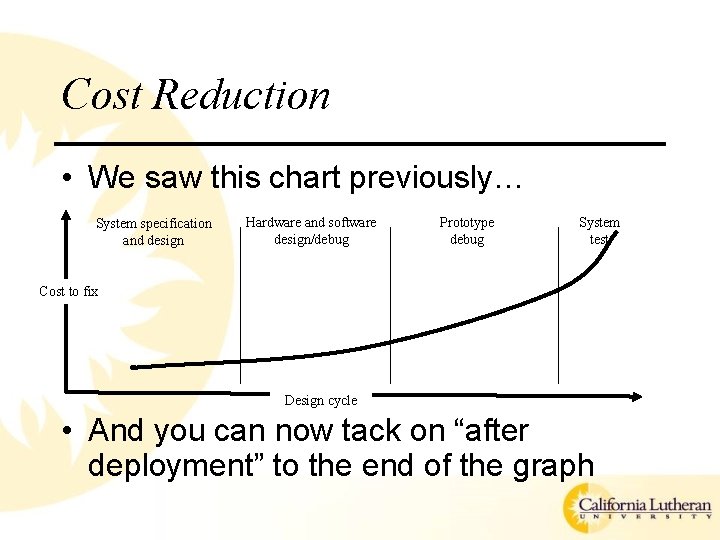

Cost Reduction • We saw this chart previously… (figure: cost to fix vs. design cycle, across system specification and design, hardware and software design/debug, prototype debug, and system test) • And you can now tack on “after deployment” to the end of the graph

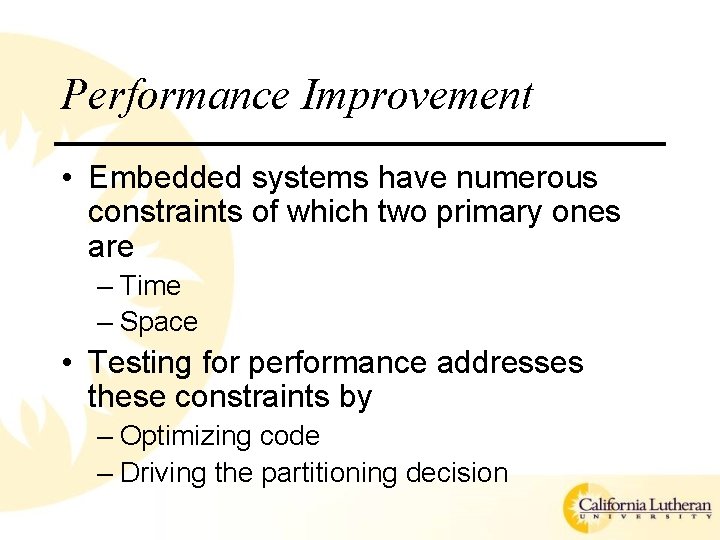

Performance Improvement • Embedded systems have numerous constraints, two primary ones being – Time – Space • Testing for performance addresses these constraints by – Optimizing code – Driving the hardware/software partitioning decision

Market Acceptance • Embedded systems don’t sit in a sterile lab environment • By their very nature they exist in the harsh, real world • The systems must work as specified • Thus, many agencies demand thorough testing prior to introduction into the market – DOD, FAA, FCC, QVC… • And, if the agencies don’t get you, the consumer will

When To Test? • Testing is done in phases – Design phase • Prototypes – Development phase • Unit tests – Integration phase • Regression testing • More often than not, testing within the design and development phases is downplayed, if it exists at all

Design Phase • Software developers write snippets of code to test algorithms • Hardware developers “burn” HDL designs to FPGAs to test algorithms/circuits • These tend to be early “proof of concept” works and may or may not end up in the final design

Development Phase • Unit tests are generally designed and implemented by the designer of the unit (module) under test • Focus is on the operation of the module • Tests may or may not have anything to do [directly] with the final integrated product – The fact that a module works in isolation in no way guarantees that it will work within the system – Example? • But, unit tests are important – When integration begins it’s nice to know that the pieces work individually
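As an illustration of a unit test written by the module's own designer, here is a minimal C sketch. The module (a two-state lock controller with `lock_next`) is entirely hypothetical. The point is that every (state, event) pair is checked in isolation, with no hardware and no other modules involved.

```c
#include <assert.h>

/* Hypothetical module under test: a two-state lock controller. */
typedef enum { LOCKED, UNLOCKED } lock_state;
typedef enum { EV_CODE_OK, EV_CODE_BAD, EV_TIMEOUT } lock_event;

lock_state lock_next(lock_state s, lock_event e) {
    switch (s) {
    case LOCKED:
        return (e == EV_CODE_OK) ? UNLOCKED : LOCKED;
    case UNLOCKED:
        return (e == EV_TIMEOUT) ? LOCKED : UNLOCKED;
    }
    return LOCKED;   /* only reached with a corrupted state: fail safe */
}

/* Unit test: exercises every (state, event) pair of the transition table. */
void test_lock_next(void) {
    assert(lock_next(LOCKED,   EV_CODE_OK)  == UNLOCKED);
    assert(lock_next(LOCKED,   EV_CODE_BAD) == LOCKED);
    assert(lock_next(LOCKED,   EV_TIMEOUT)  == LOCKED);
    assert(lock_next(UNLOCKED, EV_CODE_OK)  == UNLOCKED);
    assert(lock_next(UNLOCKED, EV_CODE_BAD) == UNLOCKED);
    assert(lock_next(UNLOCKED, EV_TIMEOUT)  == LOCKED);
}
```

Passing this test says nothing about whether the events arrive correctly from the keypad or timer once the module is integrated. That is the gap the slide's “works in isolation” caveat points at.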

Integration Phase • This is where “testing to specification” begins – Tests relate directly to the customer specification and may involve environmental testing, temperature range testing…as well as “does it do what it’s supposed to do under all circumstances” testing • The goal is to find out if the system works the way the customer expects it to work • Method of choice is “regression testing” – This involves a suite of tests that are all run from beginning to end whenever a change is made • Quite often these tests are run by an organization other than the design team – A method of “keeping everyone honest”
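A regression suite is often just a table of test functions run from first to last on every change, without stopping at the first failure. The C below is a minimal table-driven sketch. `clamp`, the three test cases, and `run_regression` are hypothetical stand-ins for a real specification-driven suite.

```c
#include <assert.h>
#include <stdio.h>

/* Hypothetical function under test. */
int clamp(int x, int lo, int hi) {
    return x < lo ? lo : (x > hi ? hi : x);
}

/* Each test returns 1 on pass, 0 on failure. */
typedef struct {
    const char *name;
    int (*run)(void);
} test_case;

static int t_inside(void) { return clamp(5, 0, 10) == 5; }
static int t_below(void)  { return clamp(-3, 0, 10) == 0; }
static int t_above(void)  { return clamp(42, 0, 10) == 10; }

static const test_case suite[] = {
    {"inside range", t_inside},
    {"below range",  t_below},
    {"above range",  t_above},
};

/* Run the whole suite from beginning to end (no early exit) and return
   the number of failures, so every change is checked against every test. */
int run_regression(void) {
    int failures = 0;
    for (size_t i = 0; i < sizeof suite / sizeof suite[0]; i++) {
        int ok = suite[i].run();
        printf("%-14s %s\n", suite[i].name, ok ? "PASS" : "FAIL");
        if (!ok) failures++;
    }
    return failures;
}
```

Returning a failure count rather than aborting makes it easy for a separate test organization to log results round by round.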

Testing Techniques • Black-Box testing (Functional Testing) – The test designer does not get to see how the system is implemented when designing/running the tests – These concentrate solely on the system (functional) specification • White-Box testing (Coverage Testing) – The test designer knows exactly how the system has been implemented and designs tests with breaking it in mind • Gray-Box testing – These are tests where you have suspicions about how a system may be implemented based on past experience – You hammer away at what you know were weak spots in similar systems – Competitors will do this as a means of reverse engineering your product

Testing Techniques • Functional tests are the most difficult to design (this is where the halting problem comes into play) – Unfortunately, they’re probably the most important • Coverage tests are easy to implement as there are automated tools for doing them – But if the functional tests are truly complete and the code is not fully covered, who cares?

When To Stop Testing? • Coverage tests are easy (easier)…when all the code has been executed through testing (and the tests pass), you’re done. • Functional tests get trickier – For better or worse, your customer may tell you when you’re done – With each round of regression testing you may analyze the trends and determine when you’re done – Bottom line is you’ll never be 100% sure your system is correct [in the general case] so you play the “statistics game”…and hope you come out a winner – It ultimately becomes a money issue

How To Test? • Obviously, testing every possible input and every possible combination of paths through the system and under every possible environmental [external] condition will ensure success • Equally obviously, you can’t do this • So, once again you play the “statistics game”
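A quick calculation shows why exhaustive testing is out of reach even for a tiny interface. Take a hypothetical pure function with just two 32-bit inputs, and assume (generously) $10^9$ test cases per second:

$$
N = 2^{32}\cdot 2^{32} = 2^{64} \approx 1.84\times 10^{19},
\qquad
t = \frac{N}{10^{9}\ \text{s}^{-1}} \approx 1.84\times 10^{10}\ \text{s} \approx 585\ \text{years}
$$

That is before counting internal state, execution paths, or environmental conditions.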

Functional Test Categories • Stress tests – Try to break the system by flooding input channels with signals, overloading memory, reducing power supply inputs, dropping the device on a hard surface… • Boundary value tests – Check the extreme boundaries on input systems – Especially useful for input power supplies
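Boundary value tests probe just below, at, and just above each limit, because off-by-one mistakes (such as `>` written instead of `>=`) tend to hide there. The C below is a minimal sketch assuming a hypothetical spec where the supply is valid from 4500 mV to 5500 mV inclusive. The names and limits are illustrative only.

```c
#include <assert.h>
#include <stdbool.h>

/* Hypothetical spec: supply is "in range" from 4500 mV to 5500 mV inclusive. */
#define VSUP_MIN_MV 4500
#define VSUP_MAX_MV 5500

bool supply_ok(int millivolts) {
    return millivolts >= VSUP_MIN_MV && millivolts <= VSUP_MAX_MV;
}

/* Boundary value test: min-1, min, min+1, max-1, max, max+1. */
void test_supply_boundaries(void) {
    assert(!supply_ok(VSUP_MIN_MV - 1));
    assert( supply_ok(VSUP_MIN_MV));
    assert( supply_ok(VSUP_MIN_MV + 1));
    assert( supply_ok(VSUP_MAX_MV - 1));
    assert( supply_ok(VSUP_MAX_MV));
    assert(!supply_ok(VSUP_MAX_MV + 1));
}
```

On real hardware the same six points would be applied with an adjustable bench supply rather than a function argument.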

Functional Test Categories • Exception tests – Provide inputs that you know will cause failure conditions and see how your system handles them – Remember, this is an embedded system and a BSOD may not be a suitable option • Error guessing – Figure out how to break a competitor’s system (or your previous version) then check to see how your system handles the same situation
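Exception tests deliberately feed inputs that must fail and then check that the failure is reported cleanly instead of crashing. The C below is a minimal sketch built around a hypothetical command parser, `parse_duty`, which reads a 0 to 100 percent duty cycle from a string. The function, its error codes, and the test strings are assumptions made for illustration.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical status codes: on a device with no console, errors are
   returned to the caller rather than allowed to bring the system down. */
typedef enum { PARSE_OK, PARSE_NULL, PARSE_EMPTY, PARSE_BAD_CHAR, PARSE_RANGE } parse_status;

/* Parse a duty cycle of 0..100 percent from a decimal string.
   *out is written only on success. */
parse_status parse_duty(const char *s, int *out) {
    if (s == NULL || out == NULL) return PARSE_NULL;
    if (*s == '\0') return PARSE_EMPTY;
    int value = 0;
    for (; *s != '\0'; s++) {
        if (*s < '0' || *s > '9') return PARSE_BAD_CHAR;
        value = value * 10 + (*s - '0');
        if (value > 100) return PARSE_RANGE;   /* bail out long before int overflow */
    }
    *out = value;
    return PARSE_OK;
}

/* Exception tests: each input is chosen to trigger a specific failure path. */
void test_parse_duty_exceptions(void) {
    int duty = -1;
    assert(parse_duty(NULL, &duty) == PARSE_NULL);
    assert(parse_duty("", &duty) == PARSE_EMPTY);
    assert(parse_duty("5x", &duty) == PARSE_BAD_CHAR);
    assert(parse_duty("101", &duty) == PARSE_RANGE);
    assert(parse_duty("99999999999999999999", &duty) == PARSE_RANGE);
    assert(duty == -1);   /* output left untouched on every failure */
    assert(parse_duty("100", &duty) == PARSE_OK && duty == 100);
}
```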

Functional Test Categories • Random tests – Use a Monte Carlo simulation to generate input vectors then analyze the outputs/behaviors to check for consistency • Performance tests – The definition of “a working system” may have time/size/environmental constraints as well as “getting the right answer” constraints – These should be spelled out in the specification and, therefore, included in the functional testing
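For random testing there is usually no precomputed answer for each random input, so the outputs are checked against a consistency property. The C below is a minimal sketch. It uses a hypothetical unit under test, `isqrt32` (an integer square root by the digit-by-digit method), checks it with the property $r^2 \le x < (r+1)^2$, and draws inputs from a seeded xorshift32 generator so that any failing vector can be reproduced. Both the PRNG choice and the seed are assumptions, not something the slides prescribe.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical unit under test: floor(sqrt(x)) by the digit-by-digit method. */
uint32_t isqrt32(uint32_t x) {
    uint32_t res = 0;
    uint32_t bit = 1u << 30;   /* highest power of four that fits in 32 bits */
    while (bit > x) bit >>= 2;
    while (bit != 0) {
        if (x >= res + bit) {
            x -= res + bit;
            res = (res >> 1) + bit;
        } else {
            res >>= 1;
        }
        bit >>= 2;
    }
    return res;
}

/* Small seeded PRNG (xorshift32) so a failing run can be replayed exactly. */
static uint32_t xorshift32(uint32_t *state) {
    uint32_t x = *state;
    x ^= x << 13;
    x ^= x >> 17;
    x ^= x << 5;
    *state = x;
    return x;
}

/* Monte Carlo style test: n random inputs, each checked against the
   property r*r <= x < (r+1)*(r+1). Returns the number of failing inputs. */
int random_test_isqrt(uint32_t seed, int n) {
    uint32_t state = seed ? seed : 1u;   /* xorshift state must be nonzero */
    int failures = 0;
    for (int i = 0; i < n; i++) {
        uint32_t x = xorshift32(&state);
        uint64_t r = isqrt32(x);         /* widen so the squares cannot overflow */
        if (!(r * r <= x && (r + 1) * (r + 1) > x)) failures++;
    }
    return failures;
}
```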

Functional Test Categories • And don’t forget to test those cases that “you know will never occur in the real world”… • …because they will!

Coverage Test Categories • Statement coverage – Design tests to execute every line of code at least once • Decision or branch coverage – Make sure every condition of/path through a conditional (if/then/else or switch) statement is executed • Condition coverage – Make sure every piece of a compound condition within a conditional statement is tested – Consider this gem…(if with no else) • These clearly require white-box testing and may need external devices to force certain conditions
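The difference between the three coverage criteria shows up even in a few lines. The C below is an illustrative sketch (not the slide's own “gem”) of an `if` with no `else` and a compound condition. `limit_speed` and its parameters are hypothetical.

```c
#include <assert.h>
#include <stdbool.h>

/* Illustrative only: an if with no else and a compound condition. */
int limit_speed(int speed, bool door_open, bool override) {
    int result = speed;
    if (door_open && !override) {
        result = 0;   /* stop the motor */
    }
    return result;
}

void test_limit_speed_coverage(void) {
    /* Statement coverage: this one test already executes every statement. */
    assert(limit_speed(50, true, false) == 0);
    /* Branch coverage also needs the false outcome of the if, which has
       no else code of its own and is therefore easy to forget. */
    assert(limit_speed(50, false, false) == 50);
    /* Condition coverage: each term must be seen both true and false,
       so 'override' also needs a test where it is true. */
    assert(limit_speed(50, true, true) == 50);
}
```

In this example the three tests also satisfy modified condition/decision coverage. Comparing the first test with the second shows `door_open` alone changing the outcome, and comparing the first with the third shows `override` alone changing it.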

Failure Modes • Always crashes (does the wrong thing) in the same place – Relatively easy to detect and correct – “Standard” techniques usually suffice • Crashes (does the wrong thing) at seemingly arbitrary places – Very difficult to detect and correct – Problems in this category are generally due to timing (“race conditions”), sequencing (ordering) of events, or number (size) of events – Recreating these conditions is often very difficult and time consuming, if not impossible – H/W – S/W co-verification [discussed previously] may be helpful here – These often lead to redesigns in either the software, hardware, or both

Instrumentation For Functional Testing • In general, you don’t get System.out.println() functionality – If you do [via a monitor program like the Stamp IDE] then you’re not testing the “real” system • You may resort to hardware devices (logic analyzers, oscilloscopes, ROM emulators…), but these may add loading [i.e., capacitance] to a system, which either hides the error or causes a different error
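One common compromise when there is no `println()` is a small trace buffer in RAM. Each event costs a couple of stores, and a debugger or dump routine reads the buffer afterwards. This is still intrusive, just less so than formatted output over a serial line. The C below is a minimal sketch. `trace_log`, `trace_get`, and the 8-entry depth are hypothetical, and as written the buffer is not safe to call from both an ISR and the main loop.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical RAM trace buffer; the oldest records are overwritten. */
#define TRACE_DEPTH 8u   /* power of two so the index wraps with a mask */

typedef struct {
    uint8_t  event;
    uint16_t value;
} trace_rec;

static volatile trace_rec trace_buf[TRACE_DEPTH];
static volatile uint32_t  trace_head;   /* total records ever written */

/* Not reentrant: if an ISR also logs, guard this with interrupts disabled. */
void trace_log(uint8_t event, uint16_t value) {
    uint32_t i = trace_head & (TRACE_DEPTH - 1u);
    trace_buf[i].event = event;
    trace_buf[i].value = value;
    trace_head++;
}

/* Fetch the n-th most recent record (0 = newest).
   Returns 0 if that record was never written or has been overwritten. */
int trace_get(uint32_t n, trace_rec *out) {
    if (n >= trace_head || n >= TRACE_DEPTH) return 0;
    uint32_t i = (trace_head - 1u - n) & (TRACE_DEPTH - 1u);
    out->event = trace_buf[i].event;
    out->value = trace_buf[i].value;
    return 1;
}
```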

Instrumentation For Coverage Testing • Three categories (as specified previously) – Statement Coverage – instrumentation to verify that all statements are exercised – Decision Coverage – instrumentation to verify that all paths through all conditionals are exercised – Modified Condition Decision Coverage – instrumentation to verify that all terms of complex conditions are exercised • Basically, the instrumentation consists of memory “spies”, logic analyzers, and PC side software to analyze the recorded results • It’s all statistics gathering • The amount of data gathered can be staggering – consider a recursive function call
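A software-only version of the memory “spies” idea is a fixed array of hit counters, one per probe, that an analysis tool reads back after a test run. Fixed counters (rather than a log of every execution) also keep the data size bounded, which matters for cases like the recursive call mentioned above. The C below is a minimal sketch. The `COVER` macro, the probe IDs, and `sign_of` are hypothetical, and this form records branch outcomes only, not individual condition terms.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical hand-inserted probes: each bumps a RAM counter that a
   debugger or logic analyzer can read; zero means never exercised.
   Counters are 16-bit and will wrap on very long runs. */
enum { PROBE_ENTRY, PROBE_THEN, PROBE_ELSE, PROBE_COUNT };
static uint16_t cov_hits[PROBE_COUNT];
#define COVER(id) (cov_hits[(id)]++)

int sign_of(int x) {
    COVER(PROBE_ENTRY);
    if (x < 0) {
        COVER(PROBE_THEN);
        return -1;
    } else {
        COVER(PROBE_ELSE);
        return x > 0;
    }
}

/* PC-side analysis stand-in: count the probes that never fired. */
int uncovered_probes(void) {
    int missing = 0;
    for (int i = 0; i < PROBE_COUNT; i++)
        if (cov_hits[i] == 0) missing++;
    return missing;
}
```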

Instrumentation For Performance Testing • Again, it’s done with external devices – Logic analyzers and oscilloscopes are set to catch triggering events and measure the time to respond, etc.
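A typical way to give the scope or logic analyzer something to trigger on is to raise a spare output pin at the start of the code being timed and lower it at the end. The C below is a minimal sketch. `DEBUG_PORT` is a plain variable standing in for a memory-mapped GPIO register so that the code runs on a PC, and the pin number and `handle_event` are hypothetical.

```c
#include <assert.h>
#include <stdint.h>

/* Stand-in for a memory-mapped GPIO output register (hypothetical). */
static volatile uint32_t DEBUG_PORT;
#define TIMING_PIN   (1u << 3)
#define MARK_START() (DEBUG_PORT |= TIMING_PIN)
#define MARK_END()   (DEBUG_PORT &= ~TIMING_PIN)

/* Hypothetical handler being timed. With the scope triggered on the input
   event, the delay to the falling edge is the response time, and the
   pulse width is the handler's own execution time. */
uint32_t handle_event(uint32_t sample) {
    MARK_START();
    uint32_t filtered = (sample * 3u + 2u) / 4u;   /* stand-in for real work */
    MARK_END();
    return filtered;
}
```

Two caveats apply. The pin writes themselves take time, and the compiler may move non-volatile work across the volatile marker writes. Check the generated code, or add a compiler barrier, before trusting very short measurements.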

Testing Is Hard • In all three cases (functional, coverage, performance) we’re dependent on external devices that may alter the functionality of the embedded system • In a PC-based application, we see a similar situation, though less often, when we run a debug build vs. a release build

Remember… • It’s safe to assume that the intentions of the designer were good • It’s equally safe to assume that the implementer fouled up the implementation • Even if they’re the same person • Therefore, test even the simplest of things!

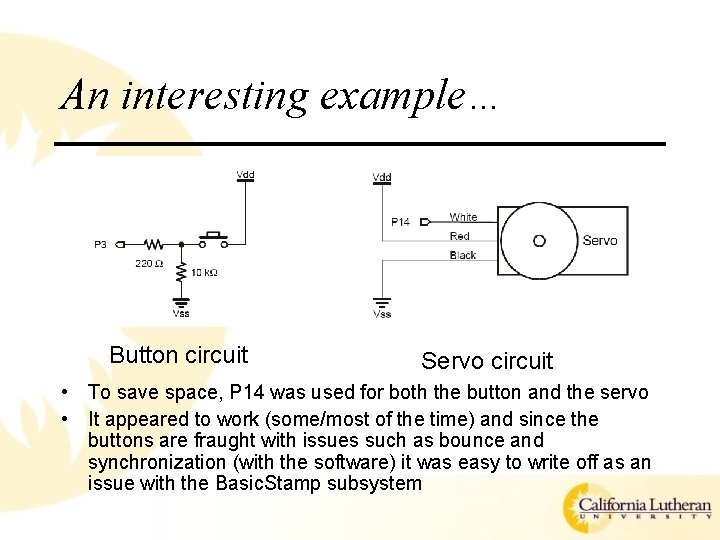

An interesting example… (figure: button circuit and servo circuit) • To save space, P14 was used for both the button and the servo • It appeared to work (some/most of the time), and since buttons are fraught with issues such as bounce and synchronization (with the software), it was easy to write off as an issue with the BASIC Stamp subsystem

An interesting example • But, what was the real problem? – Why did it appear to work sometimes and not others?
