
Embedded System Lab.
Thread and Memory Placement on NUMA Systems: Asymmetry Matters
72151691 김해천 (haecheon100@gmail.com)

Index
- Introduction
  - NUMA
  - Modern OS: thread load balancing
  - Asymmetric architecture
- The Impact of Interconnect Asymmetry on Performance
- New thread and memory placement
  - Algorithm
- Evaluation

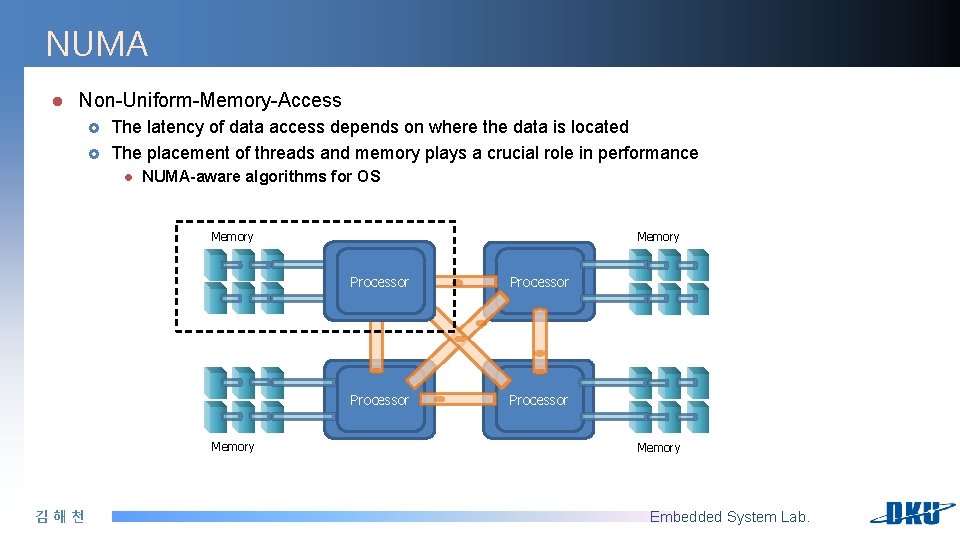

NUMA
- Non-Uniform Memory Access
  - The latency of a data access depends on where the data is located.
  - The placement of threads and memory therefore plays a crucial role in performance.
- This calls for NUMA-aware placement algorithms in the OS.

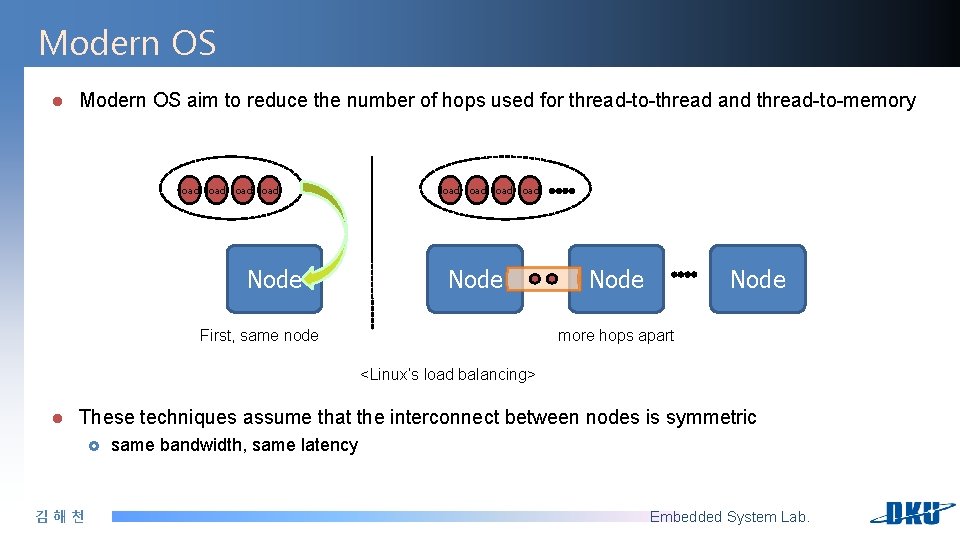
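The placement effect can be made concrete with a toy cost model: the same pages cost more to reach from the wrong node. The latency table, the page counts, and the function name `avg_access_latency` are hypothetical and only illustrate the idea.

```python
# Illustrative NUMA cost model; all numbers are HYPOTHETICAL.
# LATENCY_NS[i][j]: latency (ns) for a thread on node i to access
# memory on node j. Real values must be measured on the target machine.
LATENCY_NS = [
    [100, 200],
    [200, 100],
]


def avg_access_latency(thread_node, pages_per_node):
    """Average latency seen by a thread, assuming (for simplicity) that
    every page is accessed equally often, so each node is weighted by
    the number of the thread's pages it holds."""
    total_pages = sum(pages_per_node)
    if total_pages == 0:
        return 0.0
    weighted = sum(
        LATENCY_NS[thread_node][node] * count
        for node, count in enumerate(pages_per_node)
    )
    return weighted / total_pages


pages = [900, 100]  # 90% of the thread's pages live on node 0
print(avg_access_latency(0, pages))  # 110.0: thread placed next to its data
print(avg_access_latency(1, pages))  # 190.0: same data, thread on the remote node
```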

Modern OS
- Modern OSes aim to reduce the number of hops used for thread-to-thread and thread-to-memory communication.
  - <Linux's load balancing>: load is balanced first within the same node, then with nodes that are more hops apart.
- These techniques assume that the interconnect between nodes is symmetric.
  - Every link has the same bandwidth and the same latency.

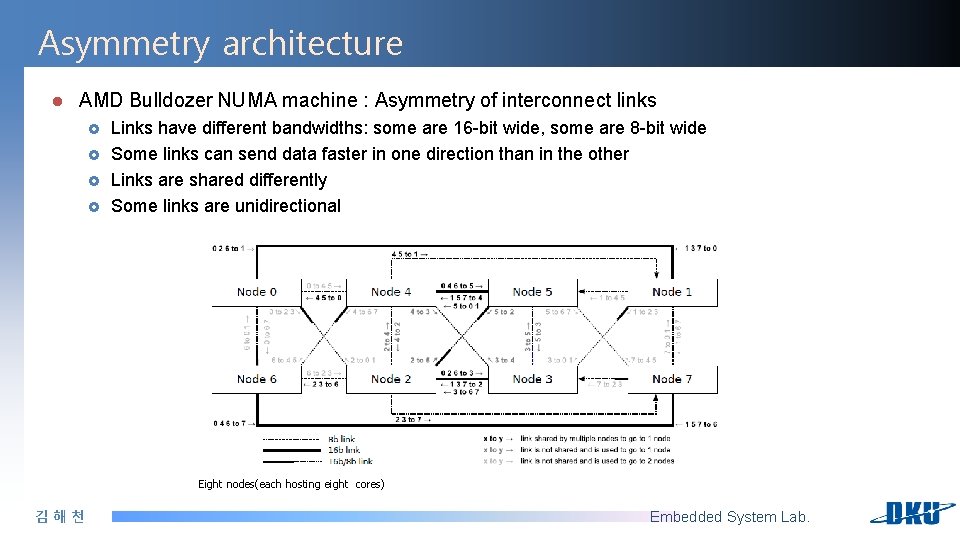
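The symmetric-interconnect assumption shows up clearly in a hop-ordered search: the same node is tried first, then nodes progressively more hops away. This is a simplified, hypothetical sketch (the hop table and `balancing_order` are invented for illustration), not Linux's actual scheduler code.

```python
# HOPS[i][j] is a HYPOTHETICAL hop distance between nodes i and j.
HOPS = [
    [0, 1, 1, 2],
    [1, 0, 2, 1],
    [1, 2, 0, 1],
    [2, 1, 1, 0],
]


def balancing_order(src):
    """Nodes ordered by hop distance from src (same node first),
    ties broken by node id. Only hops are considered: two one-hop
    links are treated as equivalent even if their bandwidths differ,
    which is exactly the symmetry assumption."""
    return sorted(range(len(HOPS)), key=lambda n: (HOPS[src][n], n))


print(balancing_order(0))  # [0, 1, 2, 3]
print(balancing_order(1))  # [1, 0, 3, 2]
```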

Asymmetric architecture
- AMD Bulldozer NUMA machine: the interconnect links are asymmetric.
  - Eight nodes, each hosting eight cores
  - Links have different bandwidths: some are 16-bit wide, some are 8-bit wide.
  - Some links can send data faster in one direction than in the other.
  - Links are shared differently.
  - Some links are unidirectional.

Asymmetry

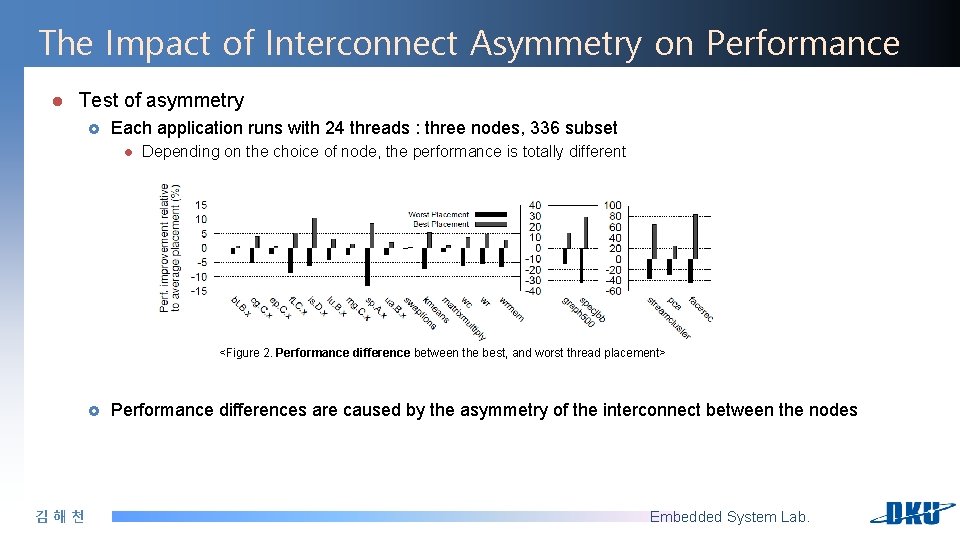
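One way to capture these link properties is a directed bandwidth matrix, in which `BW[a][b]` need not equal `BW[b][a]`. The 4-node matrix below is hypothetical (it is not the Bulldozer topology); it only shows how direction asymmetry and one-way links can be detected, and why a hop count alone cannot tell a 16-bit link from an 8-bit one.

```python
# HYPOTHETICAL directed link widths in bits (not real Bulldozer data).
# BW[a][b] is the width from node a to node b; 0 means no link that way.
BW = [
    [0, 16, 8, 0],   # 0->1 and 0->2 are both one hop, but 16 vs 8 bits
    [16, 0, 8, 8],
    [4, 0, 0, 16],   # 2->0 narrower than 0->2; no 2->1 link at all
    [0, 8, 16, 0],
]


def direction_asymmetric_pairs(bw):
    """Node pairs (a, b), a < b, whose bandwidth differs by direction."""
    n = len(bw)
    return [(a, b) for a in range(n) for b in range(a + 1, n)
            if bw[a][b] != bw[b][a]]


def one_way_links(bw):
    """Directed links (a, b) that exist in only one direction."""
    n = len(bw)
    return [(a, b) for a in range(n) for b in range(n)
            if a != b and bw[a][b] > 0 and bw[b][a] == 0]


print(direction_asymmetric_pairs(BW))  # [(0, 2), (1, 2)]
print(one_way_links(BW))               # [(1, 2)]
```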

The Impact of Interconnect Asymmetry on Performance
- Asymmetry test
  - Each application runs with 24 threads on three nodes, over all 336 possible node subsets.
- Depending on the choice of nodes, performance differs dramatically.
<Figure 2. Performance difference between the best and worst thread placement>
  - These performance differences are caused by the asymmetry of the interconnect between the nodes.

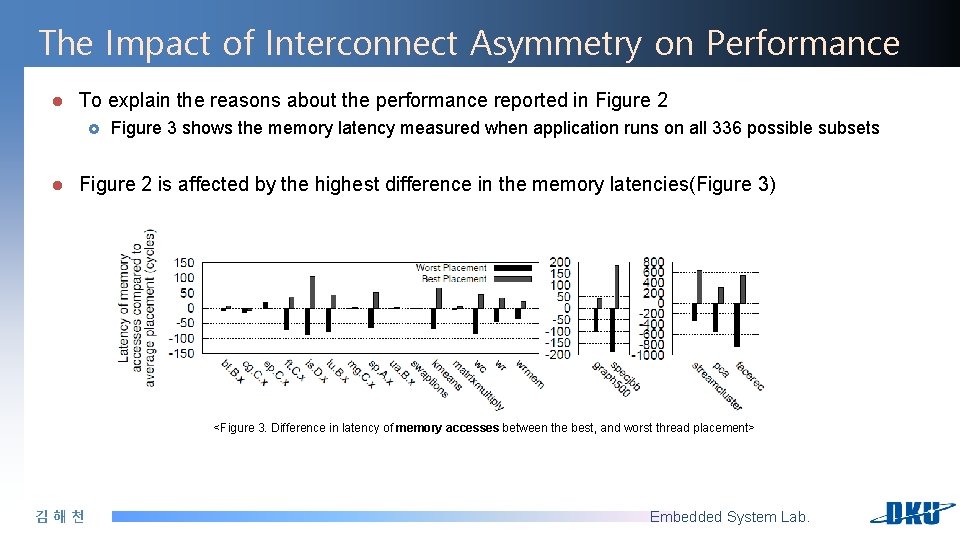
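The count of 336 can be checked with a little arithmetic, assuming one thread per core: 24 threads fill 24 / 8 = 3 nodes, and 8 × 7 × 6 = 336 is the number of ordered choices of 3 out of 8 nodes, whereas unordered node sets would number only 56. The slide does not say why ordering matters, so this is only a consistency check, not the authors' enumeration procedure.

```python
from itertools import combinations, permutations

NODES = 8           # eight nodes on the machine
CORES_PER_NODE = 8  # each node hosts eight cores
APP_THREADS = 24    # assumption: one thread per core

nodes_needed = APP_THREADS // CORES_PER_NODE  # 24 / 8 = 3 nodes
ordered = len(list(permutations(range(NODES), nodes_needed)))
unordered = len(list(combinations(range(NODES), nodes_needed)))
print(nodes_needed, ordered, unordered)  # 3 336 56
```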

The Impact of Interconnect Asymmetry on Performance
- To explain the performance differences reported in Figure 2:
  - Figure 3 shows the memory latency measured when each application runs on all 336 possible subsets.
  - The performance differences in Figure 2 are driven by the large differences in memory latency shown in Figure 3.
<Figure 3. Difference in latency of memory accesses between the best and worst thread placement>

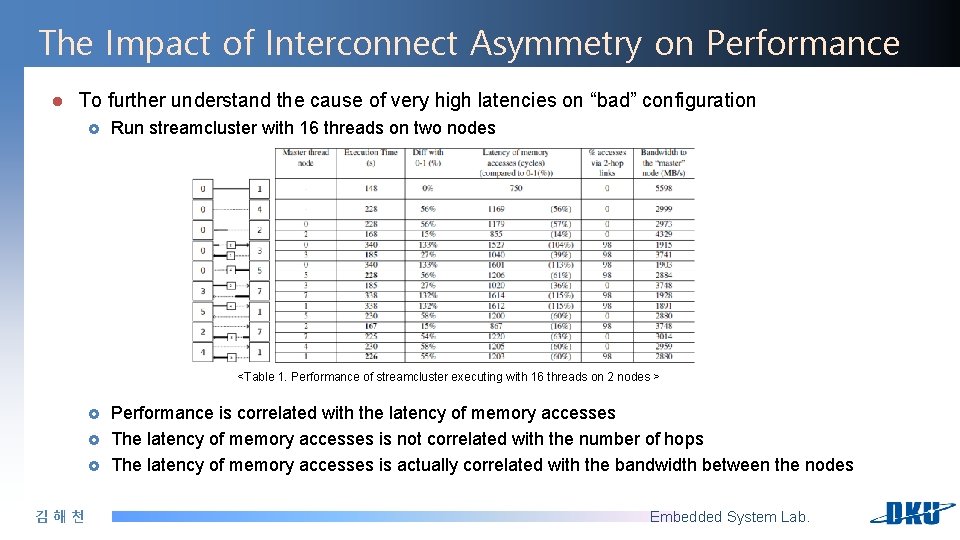

The Impact of Interconnect Asymmetry on Performance
- To further understand the cause of the very high latencies in "bad" configurations, streamcluster is run with 16 threads on two nodes.
<Table 1. Performance of streamcluster executing with 16 threads on 2 nodes>
  - Performance is correlated with the latency of memory accesses.
  - The latency of memory accesses is not correlated with the number of hops.
  - The latency of memory accesses is instead correlated with the bandwidth between the nodes.

New thread and memory placement
- Efficient online measurement of communication patterns is challenging.
- Changing the placement of threads and memory may incur high overhead.
- Accommodating multiple applications simultaneously is challenging.
- Selecting the best placement is combinatorially difficult.

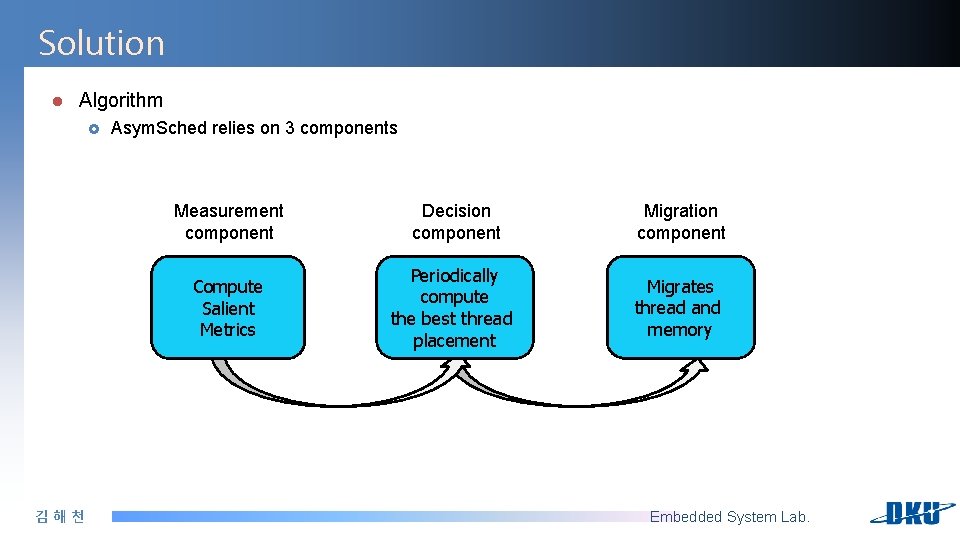

Solution
- Algorithm
  - AsymSched relies on three components:
    - Measurement component: computes salient metrics
    - Decision component: periodically computes the best thread placement
    - Migration component: migrates threads and memory

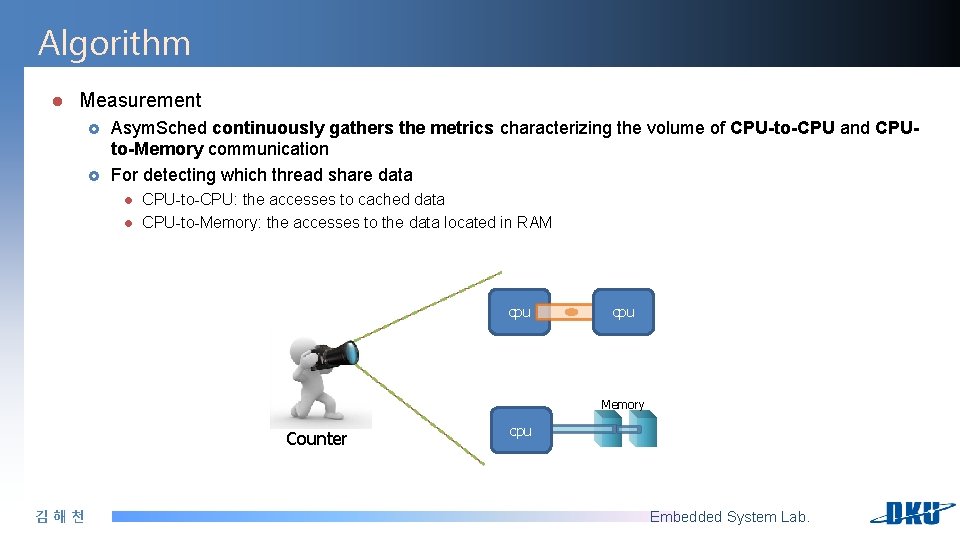
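The interaction of the three components can be sketched as a periodic loop. This is a hypothetical skeleton (`AsymSchedSketch` and its fields are invented names, and the default period is arbitrary), not the AsymSched implementation. One design choice worth noting: migration is triggered only when the chosen placement actually changes, since moving threads and memory has a cost.

```python
import time
from dataclasses import dataclass
from typing import Any, Callable, Optional


@dataclass
class AsymSchedSketch:
    """Hypothetical measure -> decide -> migrate loop.

    measure: returns the current communication metrics
    decide:  maps metrics to the placement it considers best
    migrate: applies a placement (threads and memory)
    """
    measure: Callable[[], Any]
    decide: Callable[[Any], Any]
    migrate: Callable[[Any], None]
    period_s: float = 1.0          # arbitrary illustration value
    current: Optional[Any] = None  # placement currently applied

    def step(self):
        metrics = self.measure()
        best = self.decide(metrics)
        # Migration is costly, so only act when the placement changes.
        if best != self.current:
            self.migrate(best)
            self.current = best
        return best

    def run(self, iterations):
        for _ in range(iterations):
            self.step()
            time.sleep(self.period_s)


if __name__ == "__main__":
    applied = []
    sched = AsymSchedSketch(
        measure=lambda: {"cluster_A": 10},
        decide=lambda metrics: ("cluster_A", "node_0"),
        migrate=applied.append,
        period_s=0.0,
    )
    sched.run(3)
    print(applied)  # [('cluster_A', 'node_0')]: migrated once, then left alone
```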

Algorithm
- Measurement
  - AsymSched continuously gathers metrics characterizing the volume of CPU-to-CPU and CPU-to-memory communication, in order to detect which threads share data.
    - CPU-to-CPU: accesses to cached data
    - CPU-to-memory: accesses to data located in RAM
  (Diagram: CPUs and memory, with a counter measuring the traffic between them.)

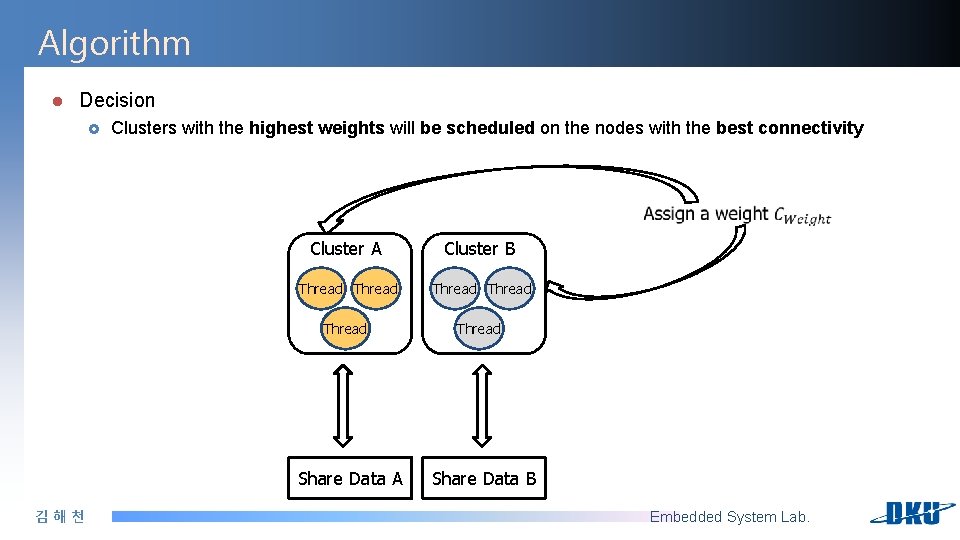
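The measurement step can be pictured as aggregating sampled accesses into two traffic tables. The sample format `(src_cpu, kind, dst)` and the function `aggregate` are assumptions for illustration; the slide's diagram only indicates that a counter measures the traffic, not which hardware events are used.

```python
from collections import Counter


def aggregate(samples):
    """Aggregate HYPOTHETICAL access samples into two traffic tables.

    Each sample is (src_cpu, kind, dst):
      kind == "cpu": the access hit data cached by CPU dst (CPU-to-CPU)
      kind == "mem": the access went to RAM on node dst (CPU-to-memory)
    """
    cpu_to_cpu = Counter()
    cpu_to_mem = Counter()
    for src, kind, dst in samples:
        if kind == "cpu":
            cpu_to_cpu[(src, dst)] += 1
        elif kind == "mem":
            cpu_to_mem[(src, dst)] += 1
        else:
            raise ValueError(f"unknown sample kind: {kind!r}")
    return cpu_to_cpu, cpu_to_mem


samples = [(0, "cpu", 1), (0, "cpu", 1), (1, "cpu", 0), (0, "mem", 2), (3, "mem", 2)]
c2c, c2m = aggregate(samples)
# Heavy CPU-to-CPU traffic between CPUs 0 and 1 hints that their threads share data.
print(c2c[(0, 1)], c2m[(0, 2)], c2m[(3, 2)])  # 2 1 1
```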

Algorithm
- Decision
  - Threads that share data form clusters (e.g., threads sharing data A form Cluster A; threads sharing data B form Cluster B).
  - Clusters with the highest weights are scheduled on the nodes with the best connectivity.

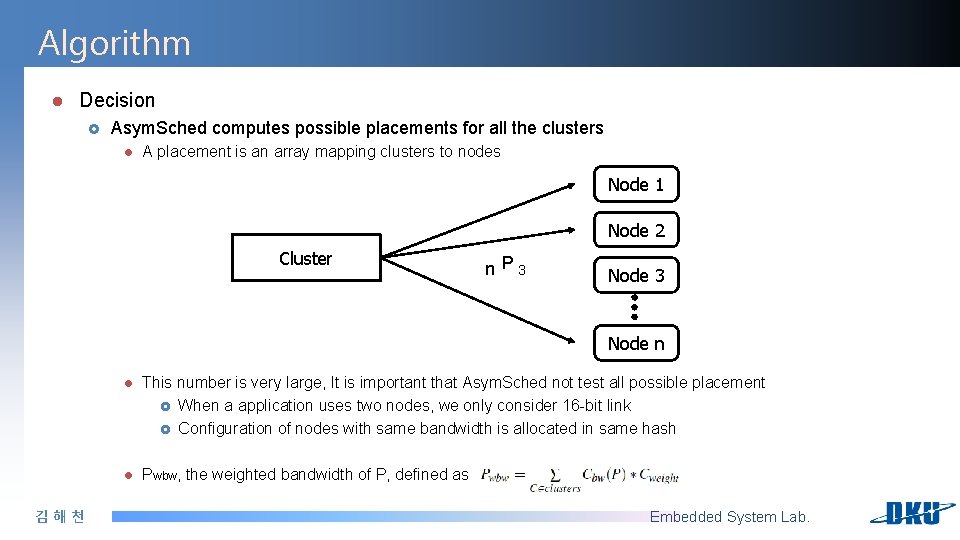
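A minimal sketch of the first half of the decision step: group threads that share data into clusters (here with union-find over a hypothetical pairwise sharing count and threshold), give each cluster a weight, and sort so the heaviest clusters are placed first. Using the summed sharing count as the weight is an assumption made for illustration; the slide does not define the weight, and `clusters_by_sharing` is an invented name.

```python
def clusters_by_sharing(n_threads, sharing, threshold):
    """sharing maps (t1, t2) -> count of shared-data accesses (HYPOTHETICAL).
    Threads linked by at least `threshold` accesses end up in one cluster.
    Returns [(weight, sorted_members)], heaviest cluster first."""
    parent = list(range(n_threads))

    def find(x):
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving
            x = parent[x]
        return x

    for (a, b), count in sharing.items():
        if count >= threshold:
            parent[find(a)] = find(b)

    groups = {}
    for t in range(n_threads):
        groups.setdefault(find(t), []).append(t)

    weighted = []
    for members in groups.values():
        member_set = set(members)
        # Assumed weight: total sharing between threads of this cluster.
        weight = sum(c for (a, b), c in sharing.items()
                     if a in member_set and b in member_set)
        weighted.append((weight, sorted(members)))
    # Heaviest clusters first: they get the best-connected nodes.
    weighted.sort(key=lambda wm: (-wm[0], wm[1]))
    return weighted


sharing = {(0, 1): 50, (2, 3): 120, (1, 2): 1}
print(clusters_by_sharing(4, sharing, threshold=10))
# [(120, [2, 3]), (50, [0, 1])]
```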

Algorithm
- Decision
  - AsymSched computes possible placements for all the clusters.
- A placement P is an array mapping clusters to nodes (Cluster 1 → Node 1, Cluster 2 → Node 2, ..., Cluster n → Node n).
- The number of possible placements is very large, so it is important that AsymSched does not test all of them.
  - When an application uses two nodes, only pairs of nodes connected by a 16-bit link are considered.
  - Node configurations with the same bandwidth are grouped under the same hash, so only one of them needs to be tested.
- P_wbw, the weighted bandwidth of P, is defined as:

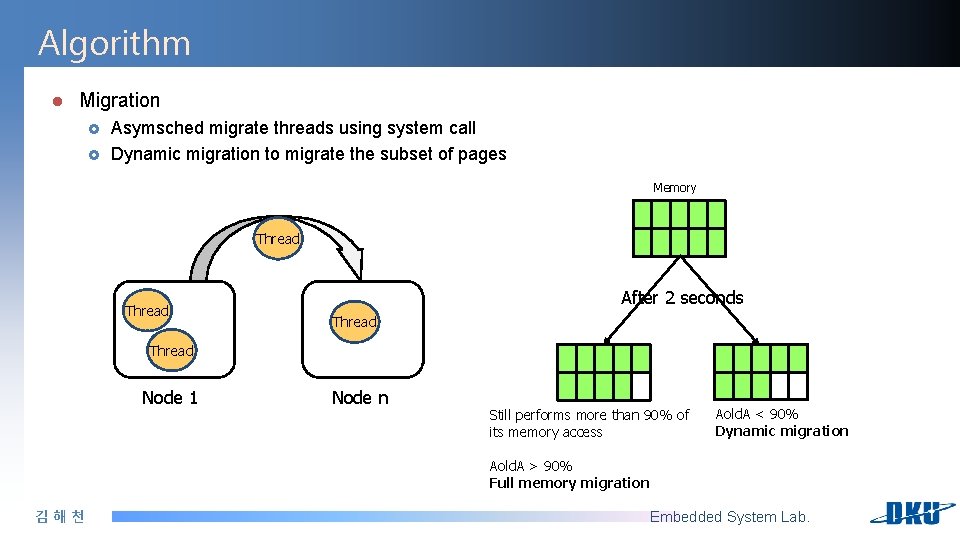
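The two pruning ideas can be sketched on a hypothetical 4-node topology: keep only 16-bit pairs for a two-node application, and bucket larger node configurations by a bandwidth signature so that only one configuration per bucket has to be evaluated. The link widths, the signature (sorted widths of the links among the chosen nodes, ignoring direction), and the function names are all assumptions; the sketch does not compute P_wbw, whose definition is given in the slide figure.

```python
from itertools import combinations

# HYPOTHETICAL undirected link widths (bits) between node pairs.
LINK_BITS = {
    frozenset({0, 1}): 16,
    frozenset({0, 2}): 16,
    frozenset({0, 3}): 8,
    frozenset({1, 2}): 16,
    frozenset({1, 3}): 8,
    frozenset({2, 3}): 8,
}


def link(a, b):
    return LINK_BITS.get(frozenset({a, b}), 0)


def two_node_candidates(nodes):
    """Pruning rule from the slide: a two-node application only
    considers node pairs joined by a 16-bit link."""
    return [pair for pair in combinations(nodes, 2) if link(*pair) == 16]


def group_by_bandwidth(nodes, k):
    """Bucket k-node configurations by an ASSUMED bandwidth signature:
    the sorted widths of all links among the chosen nodes."""
    buckets = {}
    for config in combinations(nodes, k):
        key = tuple(sorted(link(a, b) for a, b in combinations(config, 2)))
        buckets.setdefault(key, []).append(config)
    return buckets


print(two_node_candidates(range(4)))  # [(0, 1), (0, 2), (1, 2)]
buckets = group_by_bandwidth(range(4), 3)
representatives = [configs[0] for configs in buckets.values()]
print(representatives)  # [(0, 1, 2), (0, 1, 3)]: 2 of 4 configs to evaluate
```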

Algorithm
- Migration
  - AsymSched migrates threads using a system call.
  - Dynamic migration is used to migrate only a subset of the pages.
  (Diagram: a thread and its memory on Node 1; after migration, the thread runs on Node n.)
- After 2 seconds, AsymSched checks whether the thread still performs more than 90% of its memory accesses on the old node (A_old / A):
  - A_old / A < 90%: dynamic migration
  - A_old / A > 90%: full memory migration

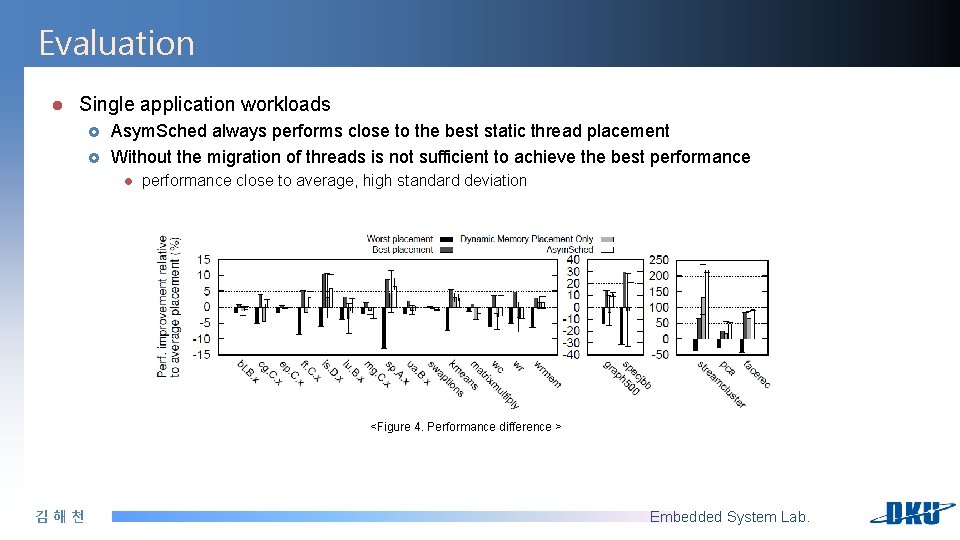

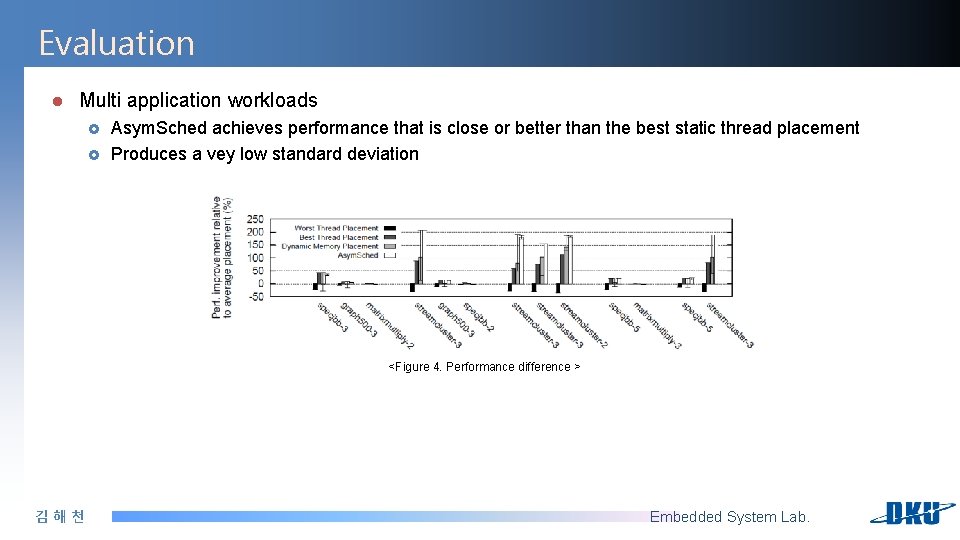
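The memory-migration rule reduces to a threshold test on A_old / A. In the sketch below the 90% threshold comes from the slide, while the function name and the handling of the boundary case (exactly 90%) and of a thread with no recorded accesses are assumptions, since the slide does not specify them.

```python
FULL_MIGRATION_THRESHOLD = 0.90  # from the slide: 90%


def choose_memory_migration(accesses_old_node, accesses_total):
    """Decide how to move memory, evaluated 2 seconds after a thread migration.

    accesses_old_node: accesses still served by the old node (A_old)
    accesses_total:    all memory accesses of the thread (A)
    Returns "full" if A_old / A exceeds 90%, otherwise "dynamic".
    """
    if accesses_total <= 0:
        return "dynamic"  # assumption: no data yet, keep migrating lazily
    ratio = accesses_old_node / accesses_total
    # Assumption: exactly 90% falls on the "dynamic" side.
    return "full" if ratio > FULL_MIGRATION_THRESHOLD else "dynamic"


print(choose_memory_migration(95, 100))  # full
print(choose_memory_migration(40, 100))  # dynamic
```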

Evaluation
- Single-application workloads
  - AsymSched always performs close to the best static thread placement.
  - Without thread migration, the best performance cannot be achieved: performance stays close to the average, with a high standard deviation.
<Figure 4. Performance difference>

Evaluation
- Multi-application workloads
  - AsymSched achieves performance that is close to or better than the best static thread placement.
  - It produces a very low standard deviation.
<Figure 4. Performance difference>

Conclusion
- Asymmetry of the interconnect drastically impacts performance.
- The bandwidth between nodes is more important than the distance between them.
- AsymSched: a new thread and memory placement algorithm
  - It aims to maximize bandwidth.
- As the number of nodes in NUMA systems increases, the interconnect is less likely to remain symmetric.
  - AsymSched's design principles will therefore be of growing importance in the future.
