Automatic NUMA Balancing

Rik van Riel, Principal Software Engineer, Red Hat

Vinod Chegu, Master Technologist, HP

김해천 (haecheon100@gmail.com), Embedded System Lab.

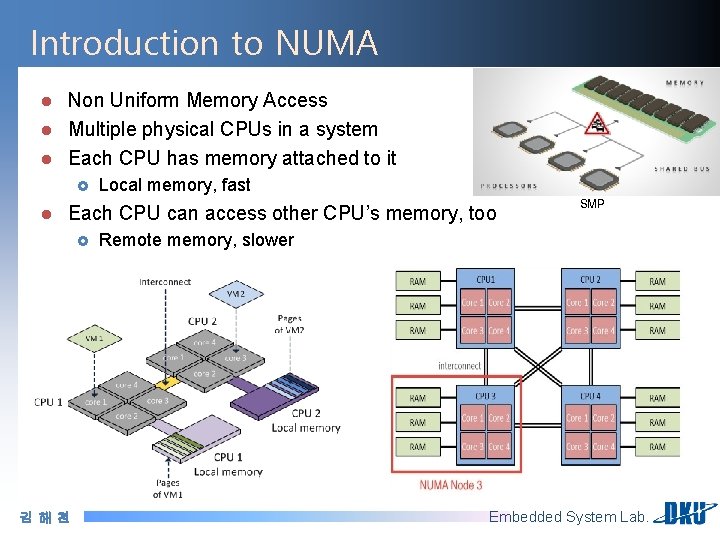

Introduction to NUMA

- Non-Uniform Memory Access
- Multiple physical CPUs in a system
- Each CPU has memory attached to it
  - Local memory: fast
- Each CPU can access other CPUs' memory, too
  - Remote memory: slower
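Linux exposes a per-node distance table that makes "local versus remote" concrete. The table uses the ACPI SLIT convention, in which 10 denotes local access, and lives under `/sys/devices/system/node/node*/distance`. `numactl --hardware` prints the same table. The Python sketch below reads that table when it is available and converts a distance into a cost relative to local access. The 2-node matrix with a remote distance of 21 is an illustrative assumption, not data from these slides. These distances are relative cost hints, not measured latencies.

```python
from pathlib import Path

# Illustrative 2-node table in the ACPI SLIT convention: 10 = local access.
# The remote value 21 is an example, not a measurement from the slides.
EXAMPLE_DISTANCES = [
    [10, 21],
    [21, 10],
]


def read_node_distances(root="/sys/devices/system/node"):
    """Return the node distance matrix from sysfs, or None if it is unavailable.

    Assumes node IDs are contiguous, so row i belongs to node i.
    """
    node_dirs = sorted(
        Path(root).glob("node[0-9]*"),
        key=lambda p: int(p.name[len("node"):]),
    )
    rows = []
    for node_dir in node_dirs:
        dist_file = node_dir / "distance"
        if not dist_file.is_file():
            return None
        rows.append([int(x) for x in dist_file.read_text().split()])
    return rows or None


def relative_access_cost(distances, cpu_node, mem_node):
    """Cost of a CPU on cpu_node touching memory on mem_node, relative to local."""
    return distances[cpu_node][mem_node] / distances[cpu_node][cpu_node]


if __name__ == "__main__":
    # Fall back to the illustrative table on machines without NUMA sysfs data.
    table = read_node_distances() or EXAMPLE_DISTANCES
    for cpu in range(len(table)):
        costs = [relative_access_cost(table, cpu, mem) for mem in range(len(table))]
        print(f"CPU node {cpu}: {costs}")
```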

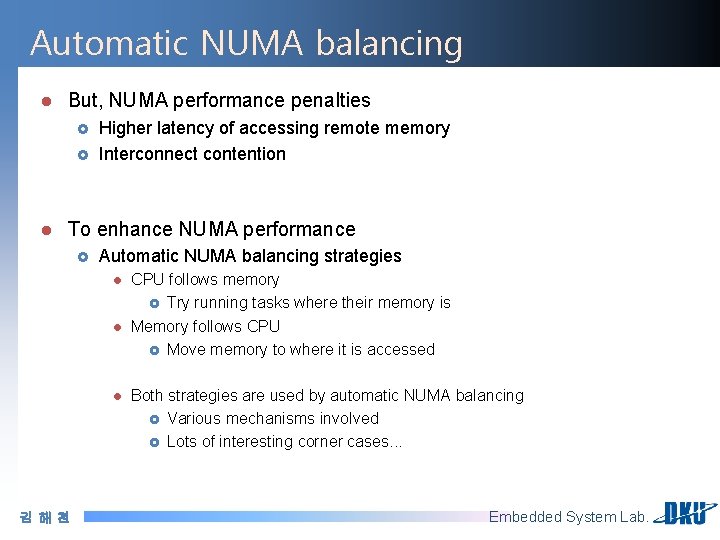

Automatic NUMA balancing

- However, NUMA has performance penalties
  - Higher latency of accessing remote memory
  - Interconnect contention
- To enhance NUMA performance
  - Automatic NUMA balancing strategies
    - CPU follows memory
      - Try running tasks where their memory is
    - Memory follows CPU
      - Move memory to where it is accessed
    - Both strategies are used by automatic NUMA balancing
      - Various mechanisms involved
      - Lots of interesting corner cases...
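The two strategies can be sketched as two small decisions. The kernel learns where a task's memory is through periodic NUMA hinting faults. In this simplified illustration, those faults are modeled as a hypothetical per-node fault-count dictionary. The code is not the kernel's code.

```python
def preferred_node(faults_per_node):
    """CPU follows memory: pick the node where the task took the most NUMA
    hinting faults, i.e. where most of its recently used memory lives.

    faults_per_node is a hypothetical {node_id: fault_count} summary.
    """
    return max(faults_per_node, key=faults_per_node.get)


def page_migration_target(page_node, cpu_node):
    """Memory follows CPU: a page accessed from cpu_node should move there.
    Returns the destination node, or None when the access is already local."""
    return None if page_node == cpu_node else cpu_node


if __name__ == "__main__":
    task_faults = {0: 30, 1: 70}             # hypothetical counts
    print(preferred_node(task_faults))       # run the task on node 1
    print(page_migration_target(0, 1))       # move a node-0 page to node 1
```

Automatic NUMA balancing applies both decisions. It can steer a task toward the node from `preferred_node` and also migrate individual pages toward the CPUs that use them.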

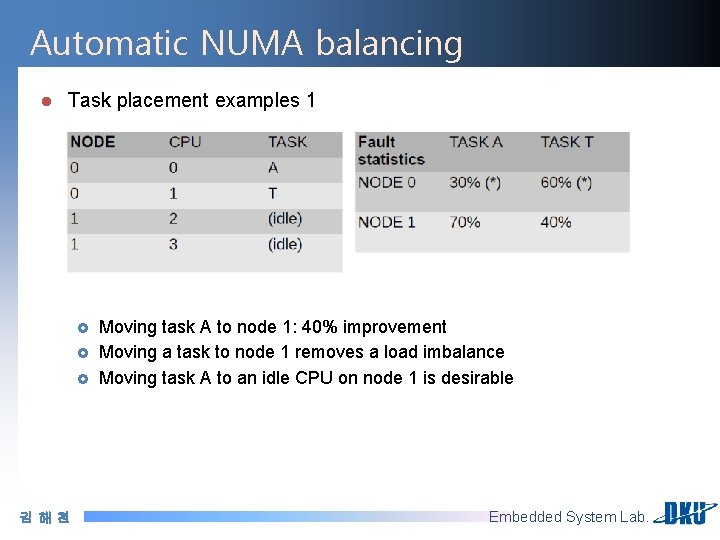

Automatic NUMA balancing

- Task placement example 1
  - Moving task A to node 1: 40% improvement
  - Moving a task to node 1 removes a load imbalance
  - Moving task A to an idle CPU on node 1 is desirable
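Example 1 could be scored as follows. This minimal sketch assumes each task's locality is summarized as the percentage of its NUMA faults on each node. The split used below (30% on node 0, 70% on node 1) is hypothetical, chosen so the gain matches the slide's 40%. The slide does not say how its figure was computed. The load check is also a simplification of the scheduler's real logic.

```python
from dataclasses import dataclass


@dataclass
class Task:
    name: str
    # Hypothetical summary: percentage of this task's NUMA faults on each node.
    fault_pct: dict


def move_gain(task, src, dst):
    """Locality gain, in percentage points, from running task on dst instead of src."""
    return task.fault_pct.get(dst, 0) - task.fault_pct.get(src, 0)


def load_ok(nr_running, nr_cpus, src, dst):
    """Simplified load check: dst must have an idle CPU, and the move must not
    leave the two nodes more imbalanced than before."""
    has_idle_cpu = nr_running[dst] < nr_cpus[dst]
    before = abs(nr_running[src] - nr_running[dst])
    after = abs((nr_running[src] - 1) - (nr_running[dst] + 1))
    return has_idle_cpu and after <= before


def should_move(task, src, dst, nr_running, nr_cpus):
    """Move only if locality improves and the load check passes."""
    return move_gain(task, src, dst) > 0 and load_ok(nr_running, nr_cpus, src, dst)


if __name__ == "__main__":
    a = Task("A", {0: 30, 1: 70})
    # Hypothetical load: node 0 runs 3 tasks on 2 CPUs; node 1 runs 1 task on 2 CPUs.
    print(move_gain(a, 0, 1), should_move(a, 0, 1, {0: 3, 1: 1}, {0: 2, 1: 2}))
```

With these inputs the move gains 40 points and also evens out the task counts. When node 1 has no idle CPU, the same move is rejected.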

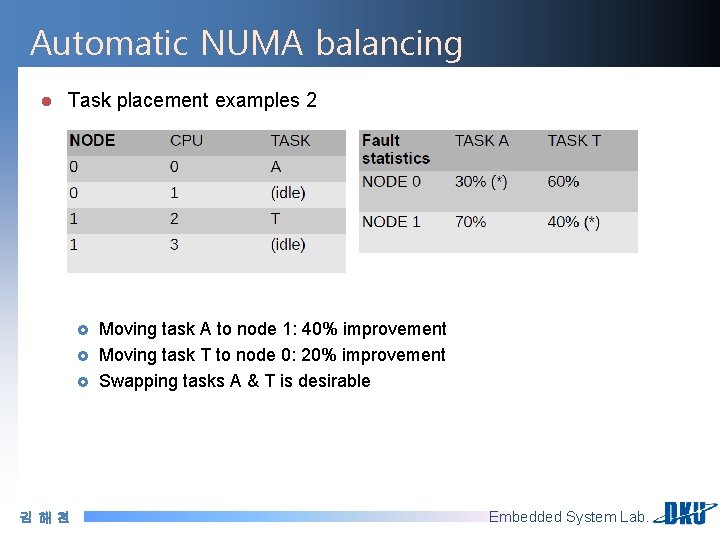

Automatic NUMA balancing

- Task placement example 2
  - Moving task A to node 1: 40% improvement
  - Moving task T to node 0: 20% improvement
  - Swapping tasks A & T is desirable
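Example 2 turns the move into a swap. The swap's value is the sum of both tasks' gains. Because each node keeps the same number of running tasks, the swap does not create a load imbalance. This sketch uses a hypothetical per-node fault-percentage summary. It accepts a swap when the combined gain is positive; a stricter policy could instead require each task to gain individually.

```python
def task_gain(fault_pct, src, dst):
    """Locality gain, in percentage points, of moving a task from src to dst.
    fault_pct is a hypothetical {node_id: percent_of_faults} summary."""
    return fault_pct.get(dst, 0) - fault_pct.get(src, 0)


def swap_gain(a_pct, a_node, t_pct, t_node):
    """Combined gain when task A (on a_node) and task T (on t_node) trade places."""
    return task_gain(a_pct, a_node, t_node) + task_gain(t_pct, t_node, a_node)


def should_swap(a_pct, a_node, t_pct, t_node):
    # A swap leaves each node's task count unchanged, so no load check is
    # needed here; accept it when the combined locality gain is positive.
    return swap_gain(a_pct, a_node, t_pct, t_node) > 0


if __name__ == "__main__":
    a = {0: 30, 1: 70}   # A runs on node 0 but mostly uses node 1 (hypothetical)
    t = {0: 60, 1: 40}   # T runs on node 1 but mostly uses node 0 (hypothetical)
    print(swap_gain(a, 0, t, 1), should_swap(a, 0, t, 1))
```

With these hypothetical splits, A gains 40 and T gains 20, for a combined 60, mirroring the two improvements listed on the slide.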

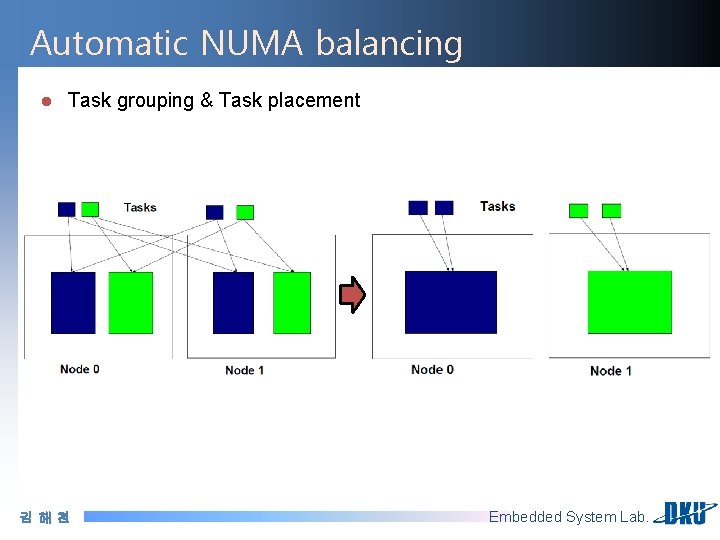

Automatic NUMA balancing

- Task grouping
  - Multiple tasks can access the same memory
  - Use the CPU number & PID stored in `struct page` to detect shared memory
    - At NUMA fault time, check the CPU where the page was last faulted
    - Group tasks together in a `numa_group` if the PID matches
  - Grouping related tasks improves NUMA task placement
    - Only group truly related tasks
- Task grouping & task placement
  - Task placement code is similar to before
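The grouping check can be simulated in a few lines. In this sketch, three hypothetical stand-ins model the kernel structures:

- the `Page` class stands in for the CPU/PID pair the kernel stores in `struct page`;
- `running_on` stands in for the task currently running on each CPU;
- a small union-find structure stands in for `numa_group`.

The first fault on a page counts as private. The kernel applies further filters before joining groups, and this sketch omits them.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass
class Page:
    # Hypothetical stand-in for the CPU/PID pair kept in struct page:
    # who took the last NUMA fault on this page, and on which CPU.
    last_cpu: Optional[int] = None
    last_pid: Optional[int] = None


class NumaGroups:
    """Union-find over PIDs, standing in for the kernel's numa_group."""

    def __init__(self):
        self.parent: Dict[int, int] = {}

    def find(self, pid):
        self.parent.setdefault(pid, pid)
        while self.parent[pid] != pid:
            self.parent[pid] = self.parent[self.parent[pid]]  # path halving
            pid = self.parent[pid]
        return pid

    def join(self, a, b):
        self.parent[self.find(a)] = self.find(b)


def numa_fault(page, cpu, pid, running_on, groups):
    """Handle a NUMA fault by task `pid` on `cpu`; return "private" or "shared".

    running_on maps each CPU to the PID of the task currently running there.
    """
    if page.last_pid is None or page.last_pid == pid:
        kind = "private"   # first access, or same task as last time
    else:
        kind = "shared"    # a different task touched this page last
        # Group only if the task now running on the last-faulting CPU is the
        # one recorded in the page, i.e. the PID still matches.
        if running_on.get(page.last_cpu) == page.last_pid:
            groups.join(pid, page.last_pid)
    page.last_cpu, page.last_pid = cpu, pid
    return kind
```

Checking the task currently running on the recorded CPU is a cheap way to confirm the recorded PID still refers to a live, related task. If the PID no longer matches, the tasks are not grouped.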

Automatic NUMA balancing

- Task grouping & task placement
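Once tasks are grouped, placement can use the group's combined fault statistics instead of each task's own. This minimal sketch assumes a group's preferred node is simply the node with the most combined faults; the kernel's actual weighting is more involved. The task names and counts are hypothetical.

```python
def group_faults(members):
    """Sum per-node NUMA fault counts over all tasks in one group.

    members is a hypothetical {task_name: {node_id: fault_count}} mapping.
    """
    totals = {}
    for faults in members.values():
        for node, count in faults.items():
            totals[node] = totals.get(node, 0) + count
    return totals


def group_preferred_node(members):
    """Assumed policy: place the group on the node with the most combined faults."""
    totals = group_faults(members)
    return max(totals, key=totals.get)


if __name__ == "__main__":
    group = {"A": {0: 10, 1: 5}, "B": {0: 2, 1: 20}}
    print(group_faults(group), group_preferred_node(group))
```

Task A alone faults more on node 0, but together with B the totals are {0: 12, 1: 25}, which points both tasks toward node 1.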

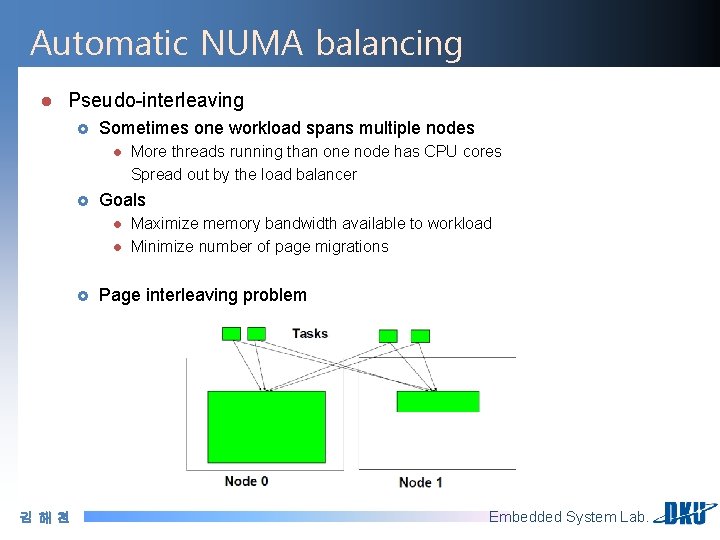

Automatic NUMA balancing

- Pseudo-interleaving
  - Sometimes one workload spans multiple nodes
    - More threads are running than one node has CPU cores
    - They are spread out by the load balancer
  - Goals
    - Maximize the memory bandwidth available to the workload
    - Minimize the number of page migrations
  - Page interleaving problem

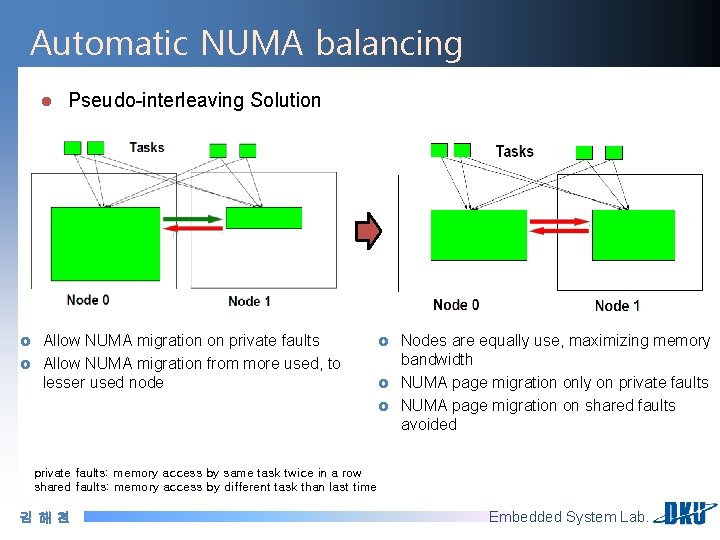

Automatic NUMA balancing

- Pseudo-interleaving solution
  - Allow NUMA migration on private faults
  - Allow NUMA migration from a more-used node to a less-used node
- Result
  - Nodes are equally used, maximizing memory bandwidth
  - NUMA page migration only on private faults
  - NUMA page migration on shared faults is avoided
- Private fault: memory accessed by the same task twice in a row
- Shared fault: memory accessed by a different task than last time
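The migration rule on this slide can be written as a single predicate plus a small replay loop. In this sketch, a node's "usage" is the number of the workload's pages on it. That metric is an assumption for illustration, because the slide does not define usage. Fault classification (private or shared) is taken as given.

```python
from collections import Counter


def should_migrate_page(fault_kind, src_node, dst_node, node_usage):
    """Simplified pseudo-interleaving rule from the slide.

    node_usage maps node id -> how heavily the workload uses that node
    (here assumed to be a page count)."""
    if fault_kind != "private":
        return False      # shared fault: leave the page where it is
    if src_node == dst_node:
        return False      # already local, nothing to do
    # Only move pages from a more-used node toward a less-used one.
    return node_usage[src_node] > node_usage[dst_node]


def run_private_faults(page_nodes, fault_node):
    """Replay one private fault per page; page i is touched from fault_node(i).

    page_nodes is updated in place. Returns the final pages-per-node counts."""
    usage = Counter(page_nodes)
    for i, src in enumerate(page_nodes):
        dst = fault_node(i)
        if should_migrate_page("private", src, dst, usage):
            usage[src] -= 1
            usage[dst] += 1
            page_nodes[i] = dst
    return dict(usage)


if __name__ == "__main__":
    pages = [0] * 10                      # all pages start on node 0
    print(run_private_faults(pages, lambda i: 1))
```

Suppose ten pages all start on node 0 and private faults arrive from node 1. Migration stops once both nodes hold five pages, so the nodes end up equally used with no further migrations.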

The End

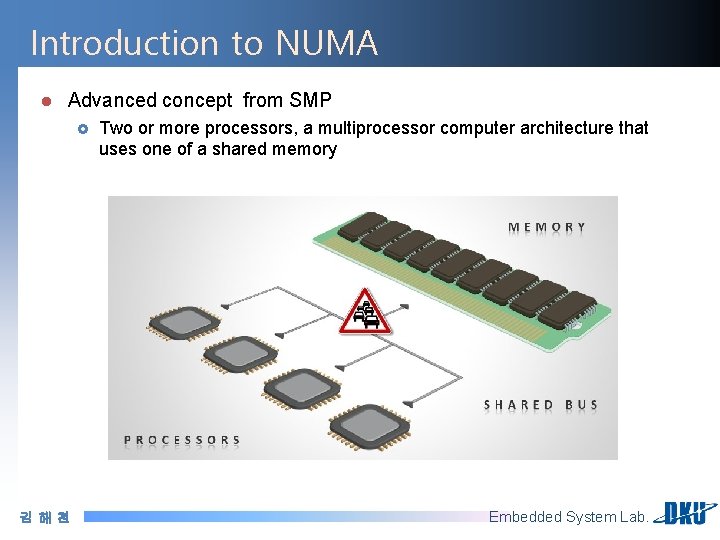

Introduction to NUMA

- NUMA is an advanced concept derived from SMP
  - SMP (symmetric multiprocessing): a multiprocessor computer architecture in which two or more processors share a single main memory
