Eliminating Silent Data Corruptions Caused by Soft Errors

Siva Hari, Sarita Adve, Helia Naeimi, Pradeep Ramachandran
University of Illinois at Urbana-Champaign, shari2@illinois.edu

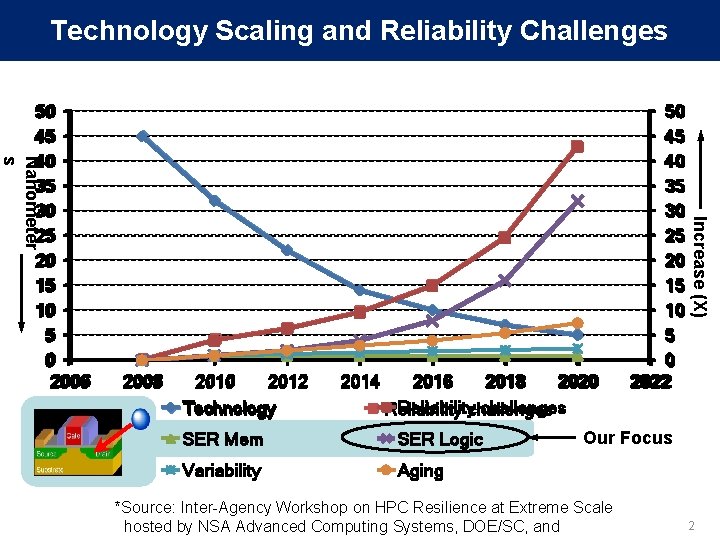

Technology Scaling and Reliability Challenges

[Figure: technology node (nanometers) and increase (X) in reliability challenges by year, 2006 to 2022. Series: SER Mem, SER Logic, Variability, Aging. Callout: "Our Focus".]

*Source: Inter-Agency Workshop on HPC Resilience at Extreme Scale hosted by NSA Advanced Computing Systems, DOE/SC, and

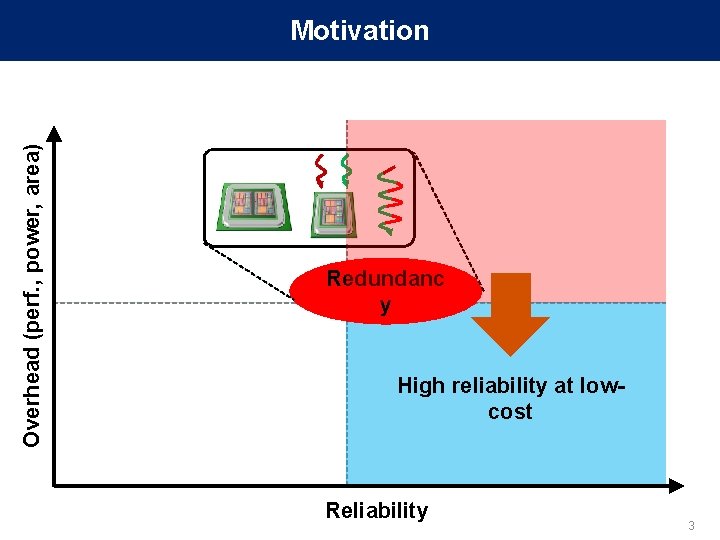

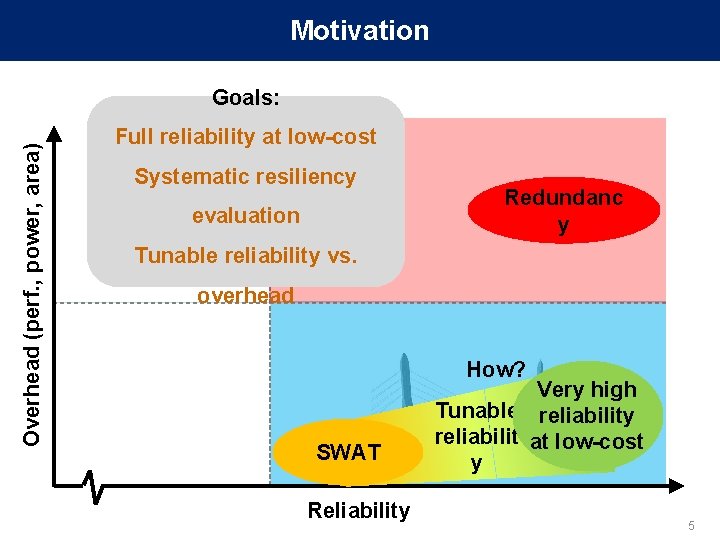

Motivation

[Figure: overhead (perf., power, area) vs. reliability. Labels: "Redundancy"; "High reliability at low cost".]

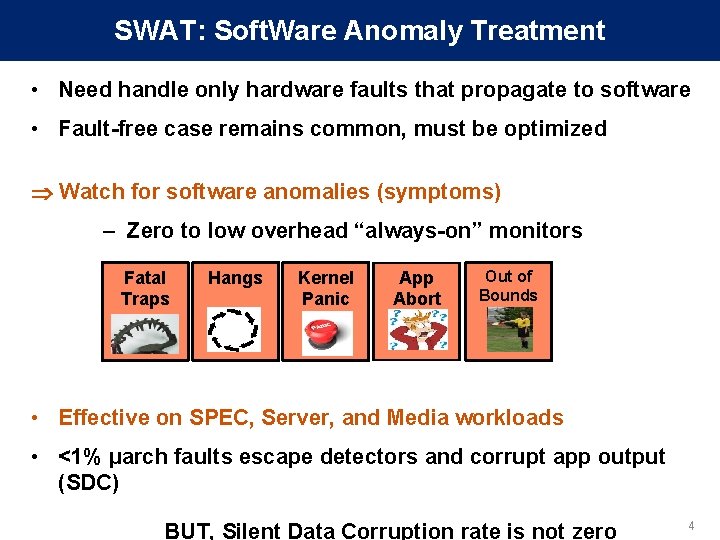

SWAT: SoftWare Anomaly Treatment
• Need to handle only hardware faults that propagate to software
• Fault-free case remains common, must be optimized
• Watch for software anomalies (symptoms)
– Zero to low overhead "always-on" monitors: Fatal Traps, Hangs, Kernel Panic, App Abort, Out of Bounds
• Effective on SPEC, Server, and Media workloads
• <1% of µarch faults escape detectors and corrupt app output (SDC)
BUT, the Silent Data Corruption rate is not zero

Motivation
Goals:
• Full reliability at low cost
• Systematic resiliency evaluation
• Tunable reliability vs. overhead
How?

[Figure: overhead (perf., power, area) vs. reliability. Labels: "Redundancy"; "SWAT"; "Very high reliability at low cost"; "Tunable reliability".]

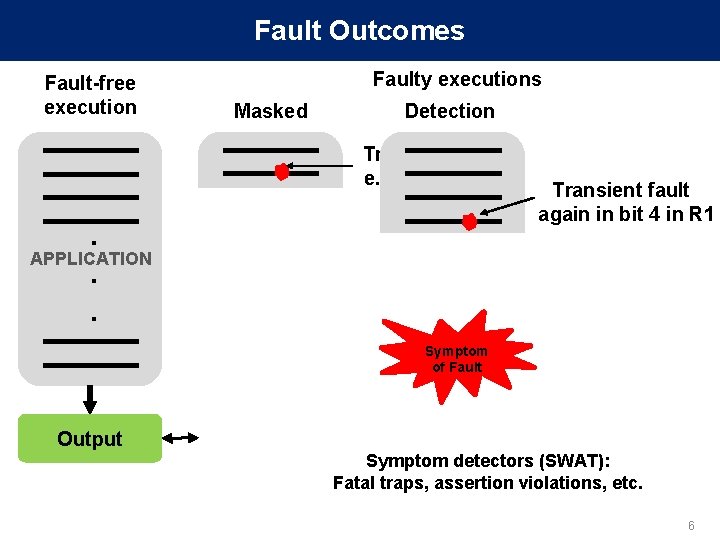

Fault Outcomes

[Figure: a fault-free execution of the application next to faulty executions. Masked: a transient fault (e.g., bit 4 in R1) does not change the output. Detection: the transient fault again in bit 4 in R1 produces a symptom of the fault.]

Symptom detectors (SWAT): fatal traps, assertion violations, etc.

Fault Outcomes

[Figure: a fault-free execution next to faulty executions. Outcomes: Masked; Detection (symptom of fault); Silent Data Corruption (SDC), where the output is wrong (X) and no symptom appears.]

SDCs are the worst of all outcomes. How to eliminate SDCs?
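
The three outcomes on this slide can be illustrated with a toy classifier. This is a minimal sketch, not the SWAT implementation: `toy_app`, its lookup table, and the use of `IndexError` as a stand-in for a symptom (such as an out-of-bounds trap) are all assumptions made for illustration.

```python
from enum import Enum


class Outcome(Enum):
    MASKED = "masked"
    DETECTED = "detected"
    SDC = "sdc"


def flip_bit(value: int, bit: int) -> int:
    """Model a transient fault as a single bit flip in an integer value."""
    return value ^ (1 << bit)


def toy_app(r1: int) -> int:
    """Hypothetical application: uses r1 as an index into a small table.

    An index past the end of the table raises IndexError, which stands in
    for a symptom that a detector would catch.
    """
    table = [5, 5, 7, 9]
    return table[r1]


def classify(golden_output, faulty_output, symptom_raised: bool) -> Outcome:
    """Compare one faulty execution against the fault-free (golden) run."""
    if symptom_raised:
        return Outcome.DETECTED
    if faulty_output == golden_output:
        return Outcome.MASKED
    return Outcome.SDC


def run_with_fault(r1: int, bit: int) -> Outcome:
    """Run the toy app fault-free, then with one bit of r1 flipped."""
    golden = toy_app(r1)
    try:
        faulty = toy_app(flip_bit(r1, bit))
    except IndexError:
        return classify(golden, None, symptom_raised=True)
    return classify(golden, faulty, symptom_raised=False)


if __name__ == "__main__":
    for bit in (0, 1, 4):
        print(f"flip bit {bit} of r1=0:", run_with_fault(0, bit).value)
```

In this toy, flipping bit 0 selects a table entry with the same value (masked), flipping bit 1 selects a different entry with no symptom (SDC), and flipping bit 4 indexes past the table (detected).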

![Approach](http://slidetodoc.com/presentation_image_h2/955acf01fb738ac7552805fb87e27774/image-8.jpg)

Approach
• Find SDC-causing application sites [ASPLOS 2012]
– Comprehensive resiliency analysis, 96% accuracy
– Relyzer: from ~5 years for one app to <2 days for one app
• Detect at low cost [DSN 2012]
– Program-level error detectors for SDC-causing faults: 84% of SDCs detected at 10% cost
– Selective duplication for the rest
New detectors + selective duplication = Tunable resiliency at low cost

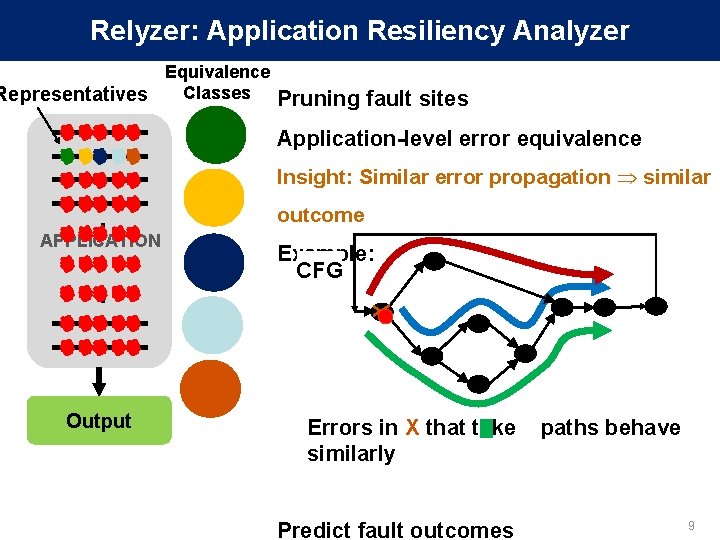

Relyzer: Application Resiliency Analyzer
• Pruning fault sites with application-level error equivalence: equivalence classes, each with a representative
• Predict fault outcomes
• Insight: Similar error propagation → similar outcome
• Example (CFG): Errors in X that take similar paths behave similarly
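
The equivalence-class idea on this slide can be sketched as follows. This is an illustrative sketch only, not Relyzer's actual algorithm: the fault-site encoding, the `path_signature` function, and the `inject` callback are hypothetical names, and the "signature" here is a simple stand-in for the control-flow path an error takes.

```python
from collections import defaultdict
from typing import Callable, Dict, Hashable, List, Tuple

# Hypothetical encoding of a fault site: (static instruction id, dynamic instance).
FaultSite = Tuple[int, int]


def prune_and_predict(
    sites: List[FaultSite],
    path_signature: Callable[[FaultSite], Hashable],
    inject: Callable[[FaultSite], str],
) -> Tuple[Dict[FaultSite, str], int]:
    """Group sites by signature, inject once per group, reuse the outcome.

    Returns the predicted outcome for every site and the number of
    injections actually performed.
    """
    classes = defaultdict(list)
    for site in sites:
        classes[path_signature(site)].append(site)

    predicted: Dict[FaultSite, str] = {}
    for members in classes.values():
        outcome = inject(members[0])  # one representative per class
        for site in members:
            predicted[site] = outcome  # assumed to behave like the representative
    return predicted, len(classes)


# Toy usage: 1000 dynamic instances of one instruction; the assumed signature
# is which of two paths the error follows (here, even vs. odd instance).
injections: List[FaultSite] = []


def fake_inject(site: FaultSite) -> str:
    """Stand-in for a real fault-injection run."""
    injections.append(site)
    return "sdc" if site[1] % 2 == 0 else "masked"


sites = [(7, k) for k in range(1000)]
predicted, n_classes = prune_and_predict(sites, lambda s: s[1] % 2, fake_inject)

if __name__ == "__main__":
    print(f"{len(sites)} fault sites, {len(injections)} injections")
```

The prediction is only as good as the signature: if two sites in the same class actually behave differently, the sketch mispredicts one of them, which is why the accuracy figure reported for the real tool matters.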

![Relyzer Contributions [ASPLOS 2012]](http://slidetodoc.com/presentation_image_h2/955acf01fb738ac7552805fb87e27774/image-10.jpg)

Relyzer Contributions [ASPLOS 2012]
• Relyzer: A complete application resiliency analysis technique
• Developed novel fault pruning techniques
– 3 to 6 orders of magnitude fewer injections for most apps
– 99.78% of app fault sites pruned
§ Only 0.004% represent 99% of all fault sites
§ Can identify all potential SDC-causing fault sites

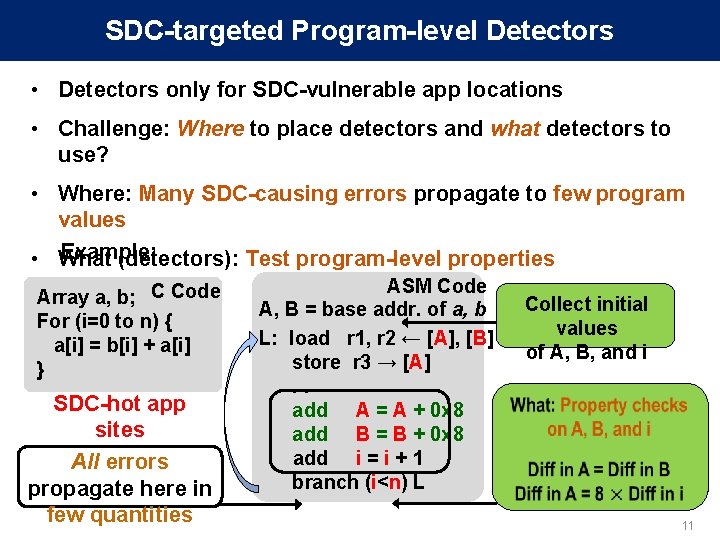

SDC-targeted Program-level Detectors
• Detectors only for SDC-vulnerable app locations
• Challenge: Where to place detectors and what detectors to use?
• Where: Many SDC-causing errors propagate to few program values
• What (detectors): Test program-level properties

Example:

C code:

    Array a, b;
    for (i = 0 to n) { a[i] = b[i] + a[i] }

ASM code (A, B = base addr. of a, b):

    L: load r1, r2 ← [A], [B]
       store r3 → [A]
       ...
       add A = A + 0x8
       add B = B + 0x8
       add i = i + 1
       branch (i<n) L

• SDC-hot app sites: all errors propagate here in few quantities
• Collect initial values of A, B, and i
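
One program-level property that could be tested for this loop is sketched below. The slide only says to collect the initial values of A, B, and i; the specific check used here (at loop exit, A and B must each have advanced by 0x8 per trip counted by i) is an assumption made for illustration, as are the function names and the fault-injection tuple.

```python
STRIDE = 0x8  # bytes per element, from the "A = A + 0x8" update in the ASM


def run_loop(a_base: int, b_base: int, n: int, fault=None):
    """Simulate the loop's address and counter updates.

    fault is an optional (iteration, variable name, bit) triple that flips
    one bit of A, B, or i at the start of that iteration.
    Returns the final values of A, B, and i.
    """
    state = {"A": a_base, "B": b_base, "i": 0}
    iteration = 0
    while state["i"] < n:
        if fault is not None and fault[0] == iteration:
            state[fault[1]] ^= 1 << fault[2]  # transient single-bit flip
        state["A"] += STRIDE
        state["B"] += STRIDE
        state["i"] += 1
        iteration += 1
    return state["A"], state["B"], state["i"]


def detector_passes(initial, final) -> bool:
    """Check the assumed invariant using the values collected before the loop."""
    a0, b0, i0 = initial
    a1, b1, i1 = final
    trips = i1 - i0
    return (a1 - a0 == STRIDE * trips) and (b1 - b0 == STRIDE * trips)


if __name__ == "__main__":
    initial = (0x1000, 0x2000, 0)
    print("fault-free:", detector_passes(initial, run_loop(0x1000, 0x2000, 10)))
    print("flip in A: ", detector_passes(initial, run_loop(0x1000, 0x2000, 10, (3, "A", 6))))
    print("flip in i: ", detector_passes(initial, run_loop(0x1000, 0x2000, 10, (3, "i", 1))))
```

The check runs once at loop exit rather than on every iteration, which is what keeps its cost low. It covers errors in the address and counter updates only; it does not examine the data values stored to `a`.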

![Contributions [DSN 2012]](http://slidetodoc.com/presentation_image_h2/955acf01fb738ac7552805fb87e27774/image-12.jpg)

Contributions [DSN 2012]
• Discovered common program properties around most SDC-causing sites
• Devised low-cost program-level detectors
– Avg. SDC reduction of 84% @ 10% avg. cost
• New detectors + selective duplication = Tunable resiliency at low cost

[Figure: execution overhead (0% to 50%) vs. average SDC reduction (10% to 100%). Curves: "Relyzer + selective duplication" and "Relyzer + new detectors + selective duplication". Callouts: 24% and 18% overhead; 90% and 99% SDC reduction.]

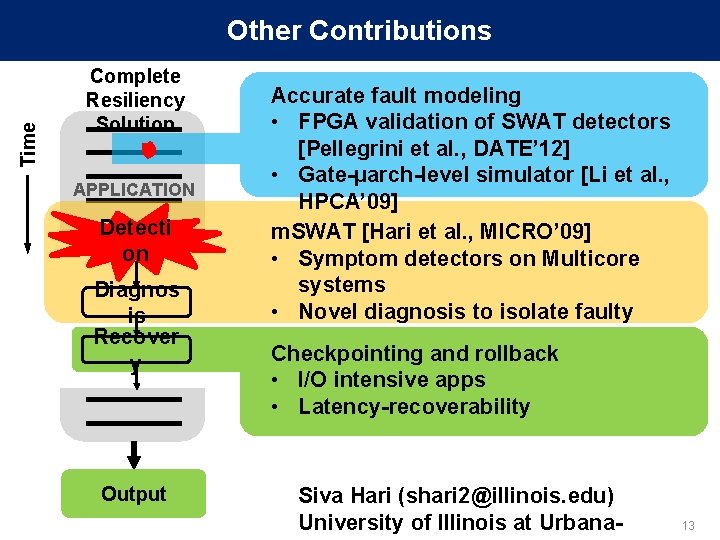

Other Contributions

Complete resiliency solution: Detection, Diagnosis, Recovery

[Figure: timeline of APPLICATION execution to Output, with Detection, Diagnosis, and Recovery stages.]

Accurate fault modeling
• FPGA validation of SWAT detectors [Pellegrini et al., DATE'12]
• Gate-µarch-level simulator [Li et al., HPCA'09]

mSWAT [Hari et al., MICRO'09]
• Symptom detectors on multicore systems
• Novel diagnosis to isolate faulty core

Checkpointing and rollback
• I/O intensive apps
• Latency-recoverability

Siva Hari (shari2@illinois.edu), University of Illinois at Urbana-Champaign

Backup

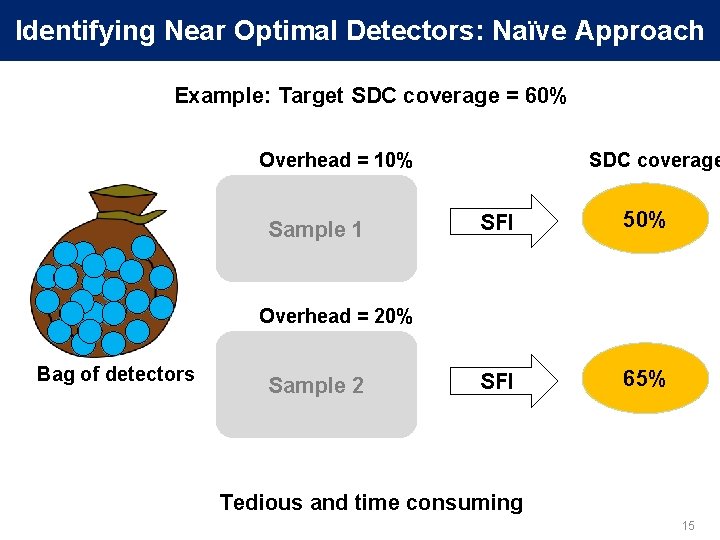

Identifying Near Optimal Detectors: Naïve Approach
Example: Target SDC coverage = 60%
• Sample 1 from the bag of detectors: Overhead = 10%, SFI gives SDC coverage of 50%
• Sample 2 from the bag of detectors: Overhead = 20%, SFI gives SDC coverage of 65%
Tedious and time consuming

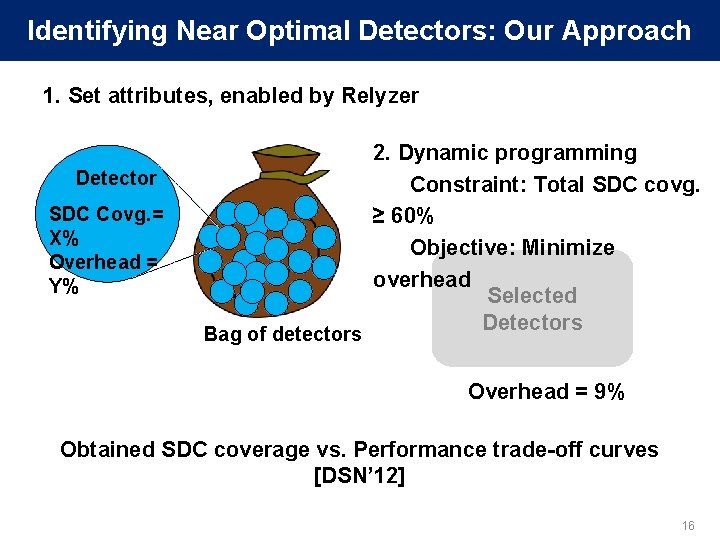

Identifying Near Optimal Detectors: Our Approach
1. Set attributes, enabled by Relyzer
– For each detector in the bag: SDC Covg. = X%, Overhead = Y%
2. Dynamic programming
– Constraint: Total SDC covg. ≥ 60%
– Objective: Minimize overhead
– Selected detectors: Overhead = 9%
Obtained SDC coverage vs. performance trade-off curves [DSN'12]
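
The dynamic-programming step can be sketched as a knapsack-style selection. This is a minimal sketch under stated assumptions, not the formulation from the DSN'12 work: it assumes each detector's SDC coverage is an integer percentage, that coverages of different detectors simply add up (no overlap), and that overheads add up. The detector names and numbers are made up for illustration.

```python
import math
from typing import List, Tuple

# (name, SDC coverage in %, overhead in %), all values hypothetical.
Detector = Tuple[str, int, float]


def select_detectors(detectors: List[Detector], target: int):
    """Pick detectors with total coverage >= target at minimum total overhead.

    best[c] holds (lowest overhead, chosen names) for reaching coverage c,
    where any coverage above the target is capped at the target.
    """
    best = [(math.inf, [])] * (target + 1)
    best[0] = (0.0, [])
    for name, covg, cost in detectors:
        # Walk coverage downward so each detector is used at most once.
        for c in range(target, -1, -1):
            base_cost, chosen = best[c]
            if base_cost == math.inf:
                continue  # coverage c is not reachable yet
            reached = min(target, c + covg)
            if base_cost + cost < best[reached][0]:
                best[reached] = (base_cost + cost, chosen + [name])
    return best[target]


bag = [
    ("d1", 30, 4.0),
    ("d2", 25, 3.0),
    ("d3", 40, 9.0),
    ("d4", 10, 2.5),
    ("d5", 35, 5.0),
]

if __name__ == "__main__":
    overhead, chosen = select_detectors(bag, target=60)
    print(f"selected {chosen} with total overhead {overhead}%")
```

Repeating the selection for a range of targets would give one point per target on a coverage vs. overhead curve. If the coverage of two detectors overlaps, the additive assumption overestimates the total, so a real formulation needs per-detector attributes that account for that.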
