Elements of SAN capacity planning Mark Friedman VP


Mark Friedman, VP, Storage Technology, markf@demandtech.com, (941) 261-8945

DataCore Software Corporation
- Founded 1998: storage networking software
- 170+ employees, private; over $45M raised
  - Top venture firms: NEA, OneLiberty
  - Funds: Van Wagoner, Bank of America, etc.
  - Intel business and technical collaboration agreement
- Executive team
  - Proven storage expertise
  - Proven software company experience
  - Operating systems, high availability, caching, networking
  - Enterprise-level support and training
- Worldwide: Ft. Lauderdale HQ, Silicon Valley, Canada, France, Germany, U.K., Japan

Overview
- How do we take what we know about storage processor performance and apply it to emerging SAN technology?
- What is a SAN?
- Planning for SANs:
  - SAN performance characteristics
  - Backup and replication performance

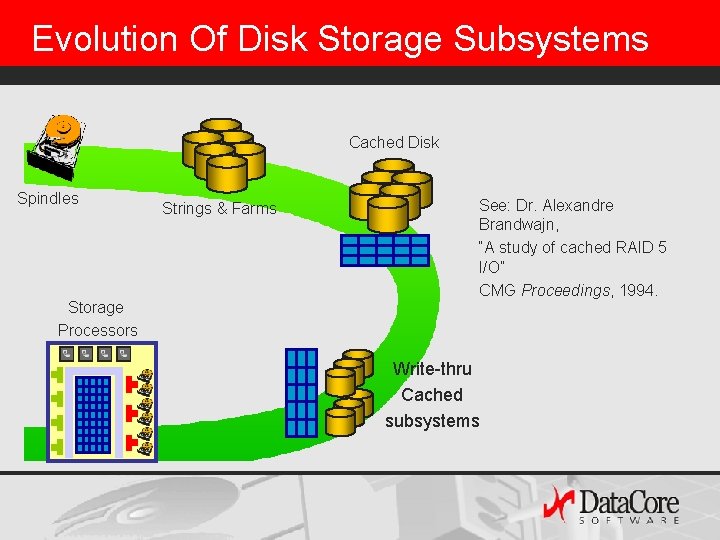

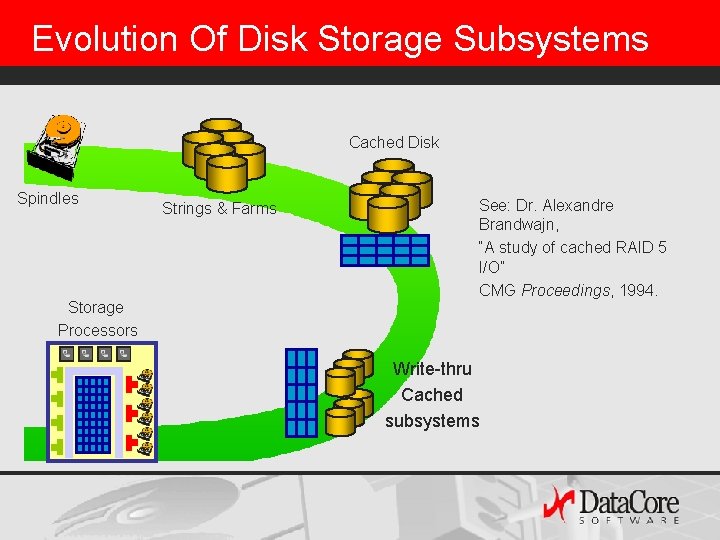

Evolution Of Disk Storage Subsystems
(Figure: spindles; strings & farms; cached disk; write-thru cached subsystems; storage processors.)
See: Dr. Alexandre Brandwajn, “A study of cached RAID 5 I/O,” CMG Proceedings, 1994.

What Is A SAN?
- Storage Area Networks are designed to exploit Fibre Channel plumbing
- Approaches to simplified networked storage:
  - SAN appliances
  - SAN metadata controllers (“out of band”)
  - SAN storage managers (“in band”)

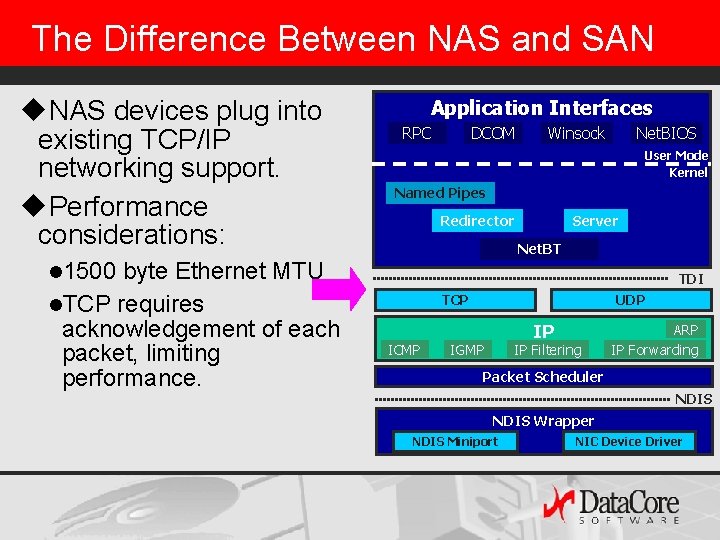

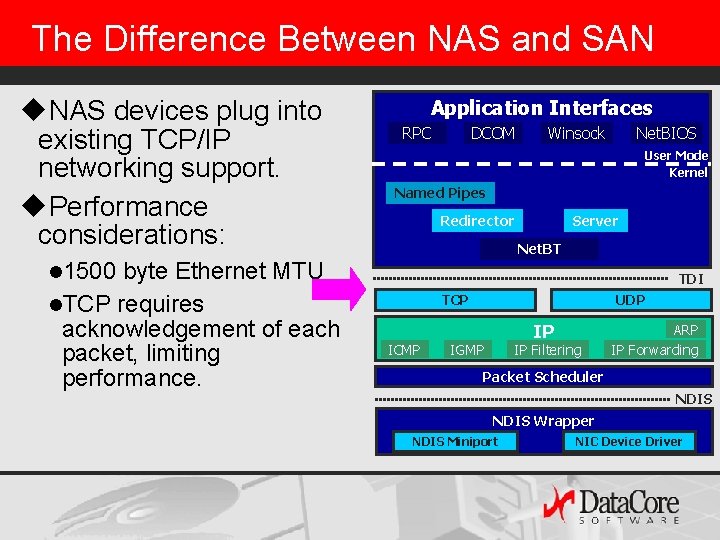

The Difference Between NAS and SAN
- Storage Area Networks (SANs), designed to exploit Fibre Channel plumbing, require a new infrastructure.
- Network Attached Storage (NAS) devices plug into the existing networking infrastructure:
  - Networked file access protocols (NFS, SMB, CIFS)
  - TCP/IP stack
(Figure: a packet passing through the TCP/IP layers: Application (HTTP, RPC), Host-to-Host (TCP, UDP), Internet Protocol (IP), Media Access (Ethernet, FDDI).)

The Difference Between NAS and SAN
- NAS devices plug into existing TCP/IP networking support.
- Performance considerations:
  - 1500-byte Ethernet MTU
  - TCP's acknowledgement protocol adds per-packet overhead, limiting performance.
(Figure: Windows networking stack. User mode: application interfaces (RPC, DCOM, Winsock, NetBIOS, Named Pipes). Kernel mode: Redirector, Server, NetBT, TDI, TCP, UDP, ICMP, IGMP, IP with IP Filtering, IP Forwarding and ARP, Packet Scheduler, NDIS Wrapper, NDIS Miniport, NIC device driver.)

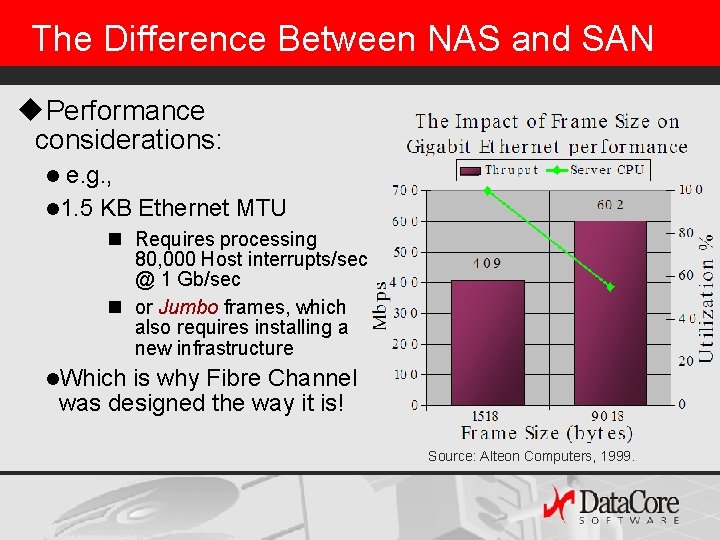

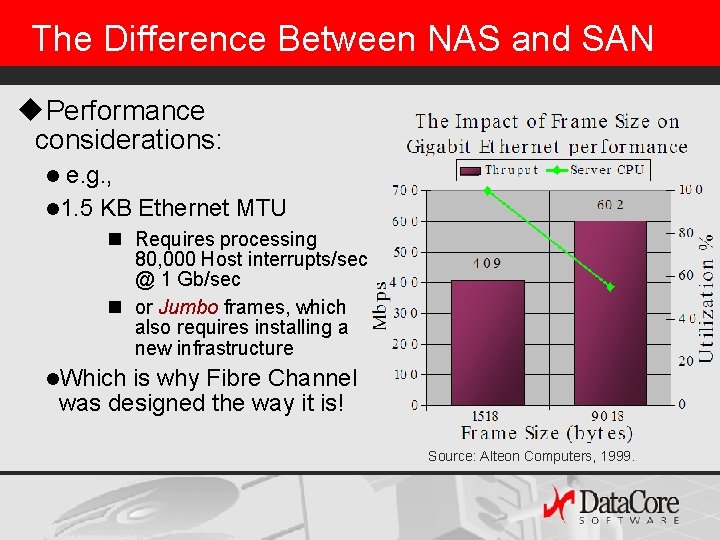

The Difference Between NAS and SAN
- Performance considerations, e.g.:
  - 1.5 KB Ethernet MTU
    - Requires processing 80,000 host interrupts/sec at 1 Gb/sec,
    - or jumbo frames, which also require installing a new infrastructure.
  - Which is why Fibre Channel was designed the way it is!
Source: Alteon Computers, 1999.
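As a rough check on the 80,000 interrupts/sec figure, here is a minimal sketch assuming one interrupt per full-size frame (no interrupt coalescing) and ignoring Ethernet preamble, header, and inter-frame gap overhead. The 9000-byte jumbo frame size is an assumption (a common jumbo MTU), not a figure from the slide.

```python
def frames_per_second(link_bits_per_sec: float, mtu_bytes: int) -> float:
    """Full-size frames per second needed to saturate a link.

    Simplification: every frame carries a full MTU, and framing
    overhead on the wire is ignored.
    """
    return link_bits_per_sec / 8 / mtu_bytes


GIGABIT = 1e9
standard = frames_per_second(GIGABIT, 1500)  # 1.5 KB Ethernet MTU
jumbo = frames_per_second(GIGABIT, 9000)     # assumed 9000-byte jumbo frames

# With one interrupt per frame (no coalescing), interrupts/sec == frames/sec.
print(f"1500-byte MTU: {standard:,.0f} interrupts/sec")
print(f"9000-byte jumbo frames: {jumbo:,.0f} interrupts/sec")
```

The standard-MTU case works out to roughly 83,000 frames per second, in line with the slide's figure; jumbo frames cut that by a factor of six.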

Competing Network File System Protocols
- Universal data sharing is developing ad hoc on top of de facto industry standards designed for network access:
  - Sun NFS
  - HTTP, FTP
  - Microsoft CIFS (and DFS), also known as SMB
    - CIFS-compatible data is the largest and fastest-growing category of data

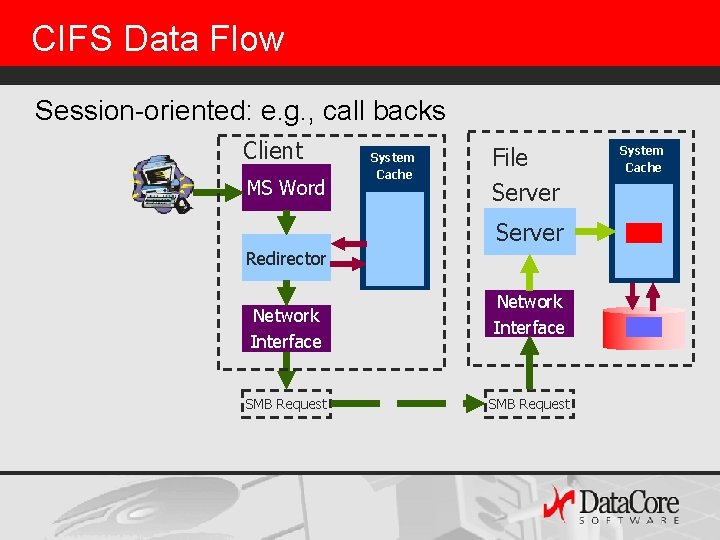

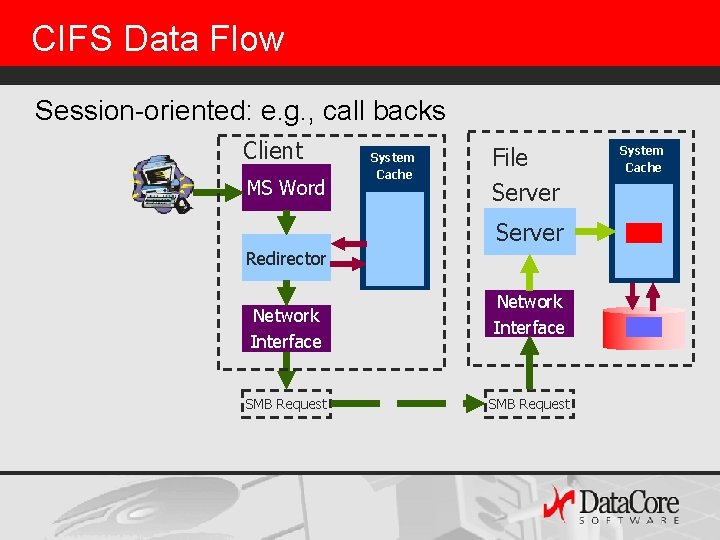

CIFS Data Flow
- Session-oriented: e.g., callbacks
(Figure: on the client, MS Word and the system cache pass requests to the redirector and network interface, which send an SMB request to the file server and its system cache.)

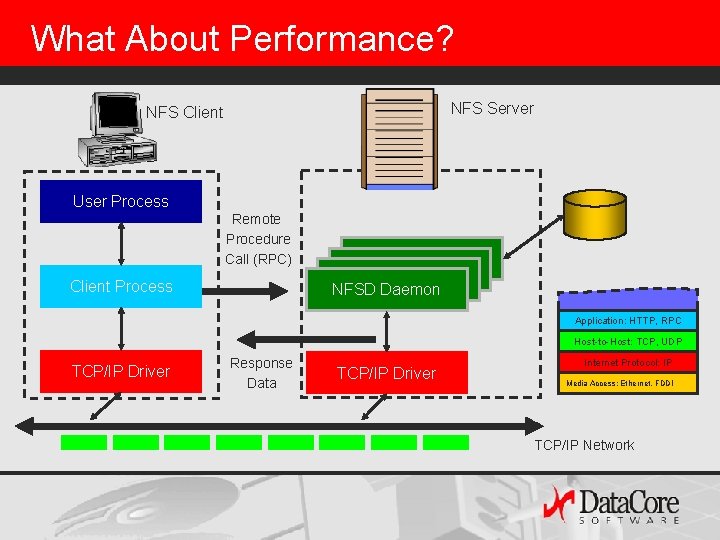

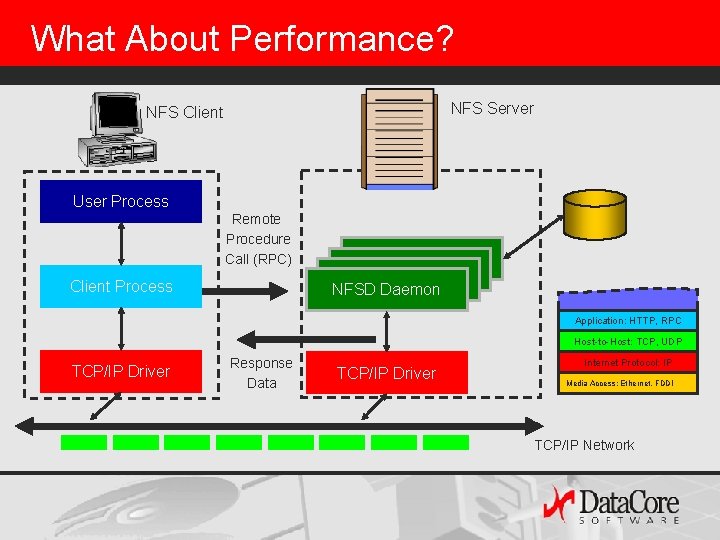

What About Performance?
(Figure: NFS data flow. A user process on the NFS client issues a Remote Procedure Call (RPC) through the client's TCP/IP driver, across the TCP/IP network, to the NFSD daemon on the NFS server, which returns response data. Protocol layers: Application (HTTP, RPC), Host-to-Host (TCP, UDP), Internet Protocol (IP), Media Access (Ethernet, FDDI).)

What About Performance?
- Network-attached storage yields a fraction of the performance of direct-attached drives when the block size does not match the frame size.
(Figure: CIFS client (MS Word, system cache, redirector, network interface) sending SMB requests through the TCP/IP layers to the file server's system cache.)
See ftp://ftp.research.microsoft.com/pub/tr/tr-2000-55.pdf
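To make the block-size/frame-size mismatch concrete, here is a minimal sketch assuming TCP over a standard 1500-byte Ethernet MTU with 20-byte IPv4 and 20-byte TCP headers and no options, which leaves 1460 payload bytes per frame. It only counts frames and how full they are; it does not model the per-frame protocol processing that the cited report measures.

```python
import math

# Assumption: 1500-byte MTU minus 20-byte IPv4 and 20-byte TCP headers
# (no options) leaves 1460 bytes of payload per frame.
PAYLOAD_PER_FRAME = 1460


def frames_for_block(block_bytes: int, payload: int = PAYLOAD_PER_FRAME) -> tuple[int, float]:
    """Return (frames needed to carry one block, fraction of payload capacity used)."""
    frames = math.ceil(block_bytes / payload)
    utilization = block_bytes / (frames * payload)
    return frames, utilization


for block in (512, 4096, 16384, 65536):
    n, util = frames_for_block(block)
    print(f"{block:>6}-byte block: {n:>2} frames, {util:.1%} of payload capacity used")
```

A 16 KB block, for example, is split into 12 frames, each of which the host must build, send, and acknowledge.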

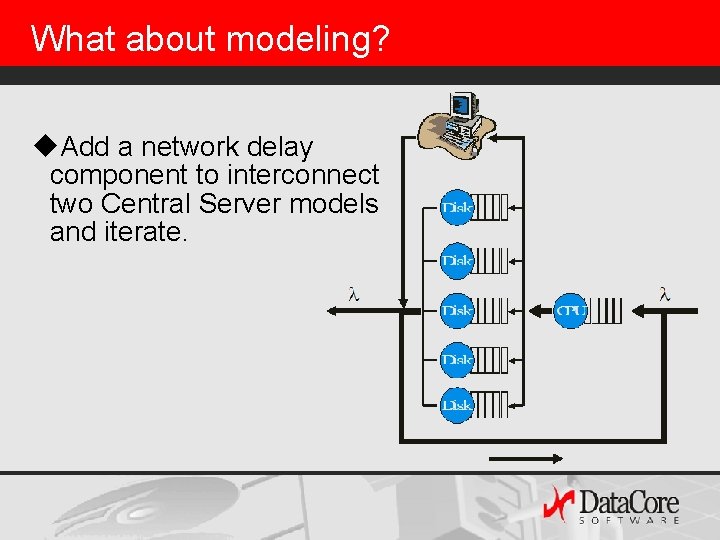

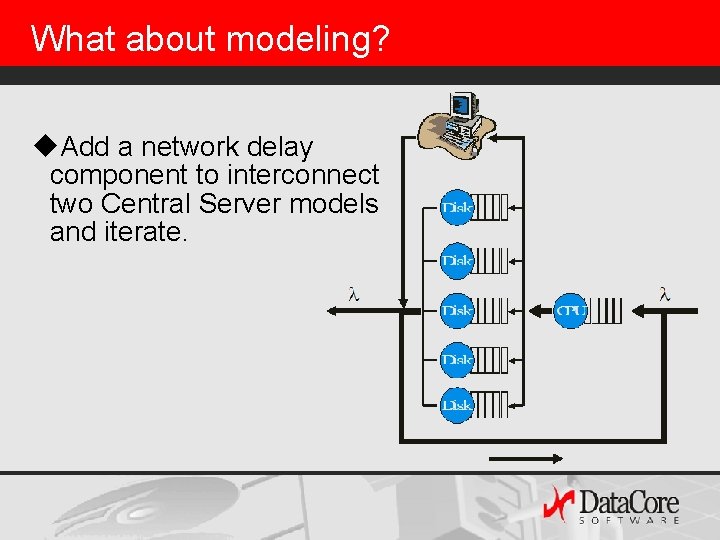

What about modeling?
- Add a network delay component to interconnect two Central Server models, and iterate.
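One way to sketch the idea is exact Mean Value Analysis (MVA) of a single closed queueing network that folds the client and server central-server models together, with the network modeled as a delay (infinite-server) center. This swaps in one combined solve for the iterative coupling of two separate models that the slide describes, and the service demands below are hypothetical.

```python
def mva(demands: list[float], delay: float, users: int) -> tuple[float, float]:
    """Exact MVA for a closed, single-class, product-form network.

    demands: total service demand (sec) at each queueing center, e.g.
             client CPU, client disk, server CPU, server disk (hypothetical).
    delay:   network delay (sec), modeled as a delay center (no queueing).
    users:   number of requests circulating in the closed network.
    Returns (throughput in requests/sec, response time in sec).
    """
    queue = [0.0] * len(demands)
    throughput, response = 0.0, 0.0
    for n in range(1, users + 1):
        # Arrival theorem: an arriving request sees the queues left by n-1 requests.
        per_center = [d * (1 + q) for d, q in zip(demands, queue)]
        response = sum(per_center) + delay
        throughput = n / response
        # Little's law applied at each queueing center.
        queue = [throughput * r for r in per_center]
    return throughput, response


# Hypothetical demands: client CPU, client disk, server CPU, server disk.
x, r = mva([0.002, 0.004, 0.003, 0.008], delay=0.005, users=10)
print(f"throughput {x:.1f} req/sec, response time {r * 1000:.1f} ms")
```

As the user count grows, throughput approaches 1 / (largest demand), which identifies the bottleneck device on either side of the network.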

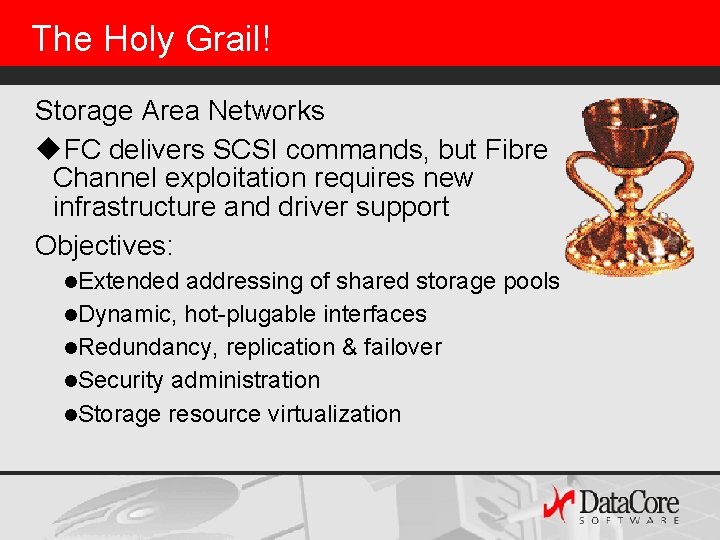

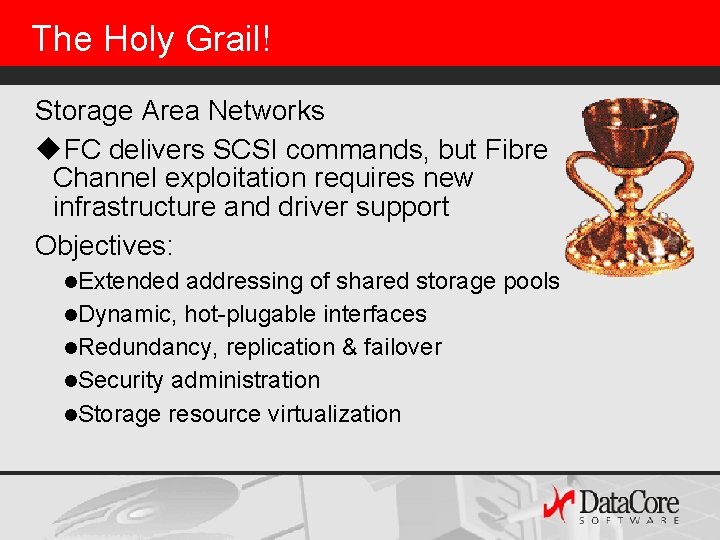

The Holy Grail! Storage Area Networks
- Uses low-latency, high-performance Fibre Channel switching technology (plumbing)
- 100 MB/sec full-duplex serial protocol over copper or fiber
- Extended distance using fiber
- Three topologies:
  - Point-to-point
  - Arbitrated loop: 127 addresses, but can be bridged
  - Fabric: 16 million (2^24) addresses

The Holy Grail! Storage Area Networks
- FC delivers SCSI commands, but Fibre Channel exploitation requires new infrastructure and driver support.
- Objectives:
  - Extended addressing of shared storage pools
  - Dynamic, hot-pluggable interfaces
  - Redundancy, replication & failover
  - Security administration
  - Storage resource virtualization

Distributed Storage & Centralized Administration
(Figure: traditional tethered vs. untethered SAN storage.)
- Untethered storage can (hopefully) be pooled for centralized administration
- Disk space pooling (virtualization):
  - Currently, using LUN virtualization
  - In the future, implementing dynamic virtual:real address mapping (e.g., the IBM Storage Tank)
- Centralized backup:
  - SAN LAN-free backup

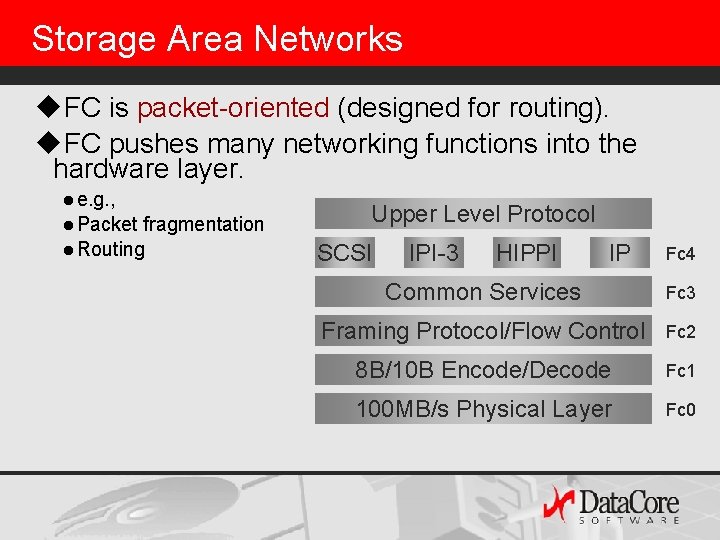

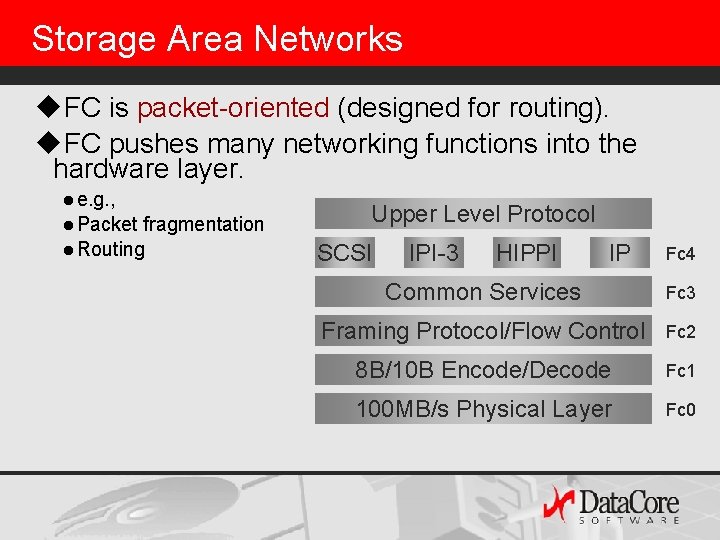

Storage Area Networks
- FC is packet-oriented (designed for routing).
- FC pushes many networking functions into the hardware layer, e.g.:
  - Packet fragmentation
  - Routing
(Figure: Fibre Channel layers. FC-4: upper-level protocols (SCSI, IPI-3, HIPPI, IP); FC-3: common services; FC-2: framing protocol/flow control; FC-1: 8B/10B encode/decode; FC-0: 100 MB/s physical layer.)

Storage Area Networks
- FC is designed to work with optical fiber and lasers consistent with Gigabit Ethernet hardware:
  - 100 MB/sec interfaces
  - 200 MB/sec interfaces
- This creates a new class of hardware that you must budget for: FC hubs and switches.
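The nominal 100 and 200 MB/sec figures follow from the link line rates and the FC-1 8B/10B encoding, which puts 10 bits on the wire for every data byte. A minimal sketch, using the standard 1.0625 and 2.125 Gbaud line rates of 1 Gb and 2 Gb Fibre Channel and ignoring FC-2 framing overhead:

```python
# Line rates (baud) of 1 Gb and 2 Gb Fibre Channel links.
LINE_RATES_BAUD = {"1GFC": 1.0625e9, "2GFC": 2.125e9}


def data_rate_mb_per_sec(baud: float) -> float:
    """8B/10B encoding sends 10 line bits per data byte; result in MB/sec (10^6 bytes)."""
    return baud / 10 / 1e6


for name, baud in LINE_RATES_BAUD.items():
    print(f"{name}: {data_rate_mb_per_sec(baud):.2f} MB/sec before framing overhead")
```

The results (about 106 and 212 MB/sec) are the raw encoded data rates; frame headers and flow control bring the usable rate down toward the rounded 100 and 200 MB/sec figures.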

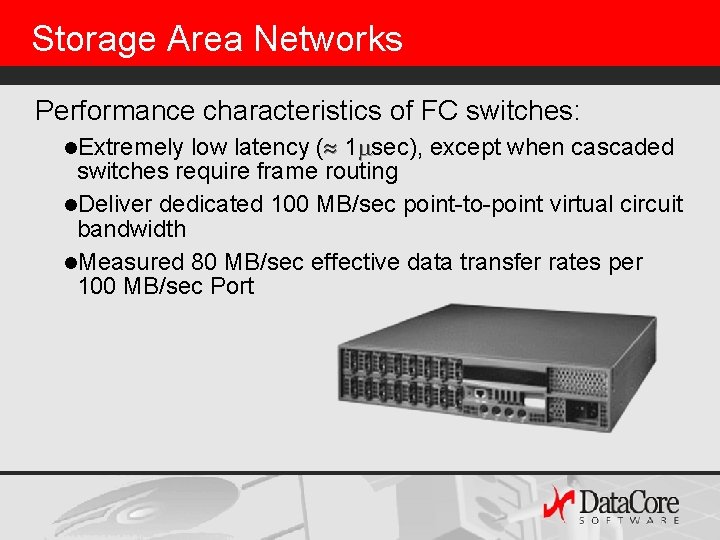

Storage Area Networks
- Performance characteristics of FC switches:
  - Extremely low latency (about 1 µsec), except when cascaded switches require frame routing
  - Deliver dedicated 100 MB/sec point-to-point virtual circuit bandwidth
  - Measured 80 MB/sec effective data transfer rates per 100 MB/sec port

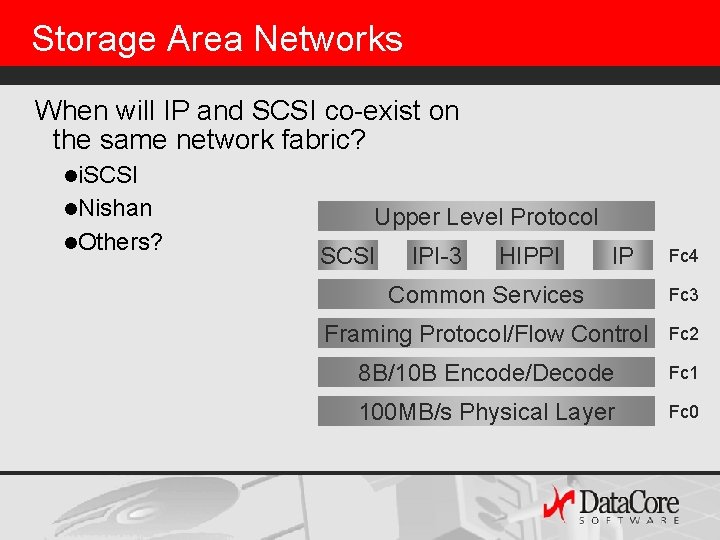

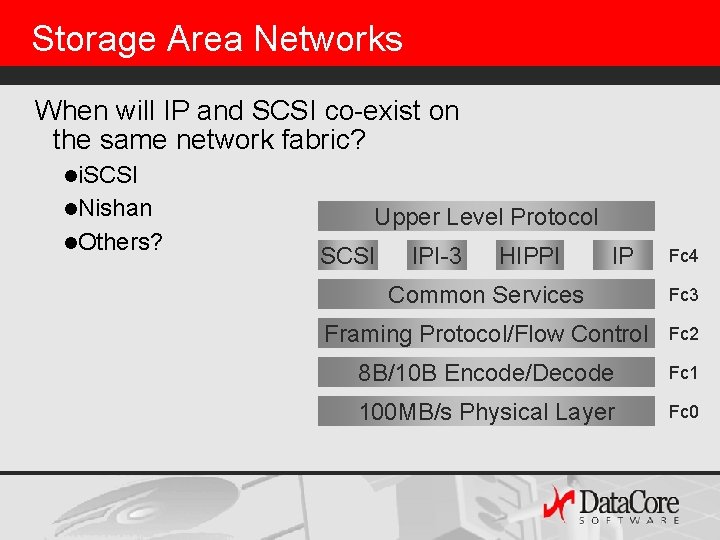

Storage Area Networks
- When will IP and SCSI coexist on the same network fabric?
  - iSCSI
  - Nishan
  - Others?
(Figure: Fibre Channel layers. FC-4: upper-level protocols (SCSI, IPI-3, HIPPI, IP); FC-3: common services; FC-2: framing protocol/flow control; FC-1: 8B/10B encode/decode; FC-0: 100 MB/s physical layer.)

Storage Area Networks
- FC zoning is used to control access to resources (security).
- Two approaches to SAN management:
  - Management functions must migrate to the switch, storage processor, or….
  - The OS must be extended to support FC topologies.
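Zoning restricts which ports can see each other: two ports may communicate only if at least one zone contains both. A simplified sketch of that rule follows; the zone names and port names are hypothetical (real fabrics identify members by WWN or switch port and enforce zoning in the switch).

```python
# Hypothetical zone set: zone name -> member ports (WWPNs in a real fabric).
ZONES = {
    "zone_db": {"host_db", "array_port_a"},
    "zone_mail": {"host_mail", "array_port_b"},
    "zone_backup": {"backup_server", "array_port_a", "array_port_b"},
}


def can_communicate(port_a: str, port_b: str, zones: dict[str, set[str]] = ZONES) -> bool:
    """Ports may talk only if some zone contains both of them."""
    return any(port_a in members and port_b in members for members in zones.values())


print(can_communicate("host_db", "array_port_a"))        # True
print(can_communicate("host_db", "array_port_b"))        # False
print(can_communicate("backup_server", "array_port_b"))  # True
```

Putting the backup server in a zone with both array ports is one way the same fabric can support the centralized, LAN-free backup mentioned earlier.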

Approaches to building SANs
- Fibre Channel-based Storage Area Networks (SANs):
  - SAN appliances
  - SAN metadata controllers
  - SAN storage managers
- Architecture (and performance) considerations

Approaches to building SANs
- Where does the logical device:physical device mapping run?
  - Out-of-band: on the client
  - In-band: inside the SAN appliance, transparent to the client
- Many industry analysts have focused on this relatively unimportant distinction.
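Whichever side runs it, the logical:physical mapping is essentially the same lookup; only its location changes. A minimal sketch of an extent-based virtual LUN map follows. The extent layout, disk names, and function name are hypothetical, and real virtualization products use their own structures.

```python
from bisect import bisect_right

# Hypothetical extent map for one virtual LUN, sorted by virtual start block:
# (first virtual block, physical disk, first physical block, length in blocks).
EXTENTS = [
    (0, "disk0", 5000, 1000),
    (1000, "disk1", 0, 2000),
    (3000, "disk0", 9000, 500),
]
STARTS = [extent[0] for extent in EXTENTS]


def virtual_to_physical(vblock: int) -> tuple[str, int]:
    """Translate a virtual block number to (disk, physical block)."""
    i = bisect_right(STARTS, vblock) - 1  # last extent starting at or before vblock
    if i < 0:
        raise ValueError(f"block {vblock} is not mapped")
    start, disk, pstart, length = EXTENTS[i]
    if vblock >= start + length:
        raise ValueError(f"block {vblock} is not mapped")
    return disk, pstart + (vblock - start)


print(virtual_to_physical(1500))  # ('disk1', 500)
```

Out-of-band, a copy of this table lives on each client; in-band, it lives only in the appliance, which is why the distinction matters less for performance than for where software must be installed.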

SAN appliances
Conventional storage processors with:
- Fibre Channel interfaces
- Fibre Channel support:
  - FC fabric
  - Zoning
  - LUN virtualization

SAN Appliance Performance
- Same as before, except faster Fibre Channel interfaces:
  - Commodity processors, internal buses, disks, front-end and back-end interfaces
  - Proprietary storage processor architecture considerations
(Figure: storage processor with host interfaces, multiple processors, cache memory, an internal bus, and FC disks.)

SAN appliances
- SAN and NAS convergence?
  - Adding Fibre Channel interfaces and Fibre Channel support to a NAS box
  - SAN-NAS hybrids when SAN appliances are connected via TCP/IP
- Current issues:
  - Managing multiple boxes
  - Proprietary management platforms

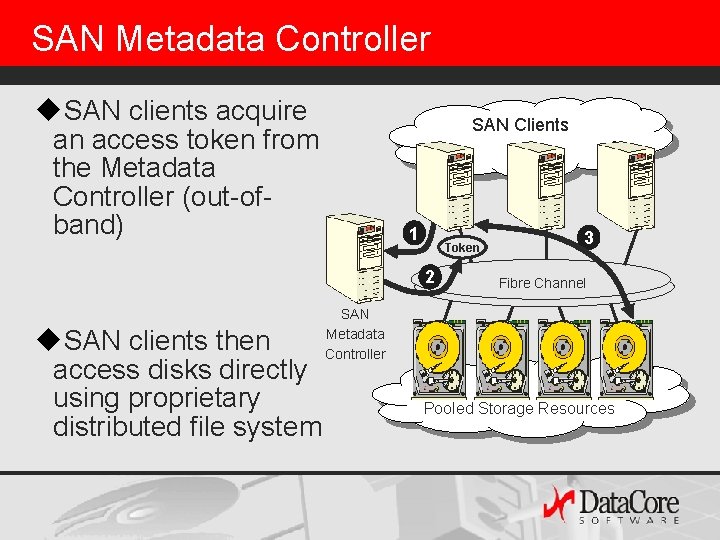

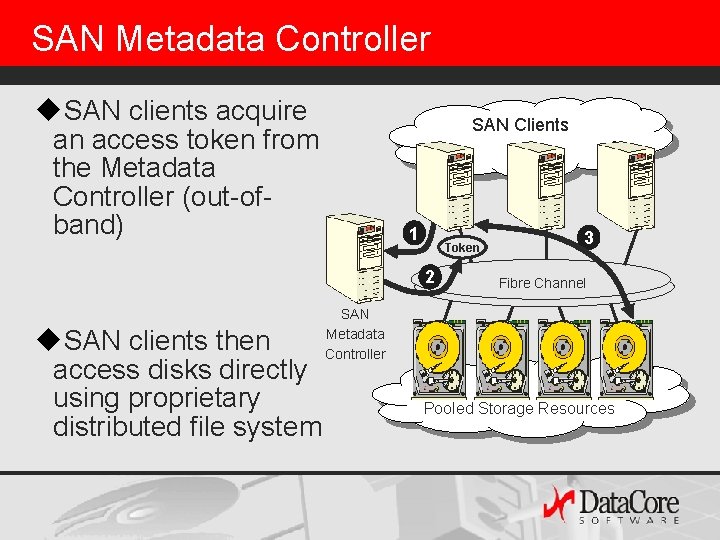

SAN Metadata Controller
- SAN clients acquire an access token from the Metadata Controller (out-of-band).
- SAN clients then access disks directly using a proprietary distributed file system.
(Figure: (1) SAN clients request a token from the SAN Metadata Controller, (2) the token is returned, (3) clients access the pooled storage resources directly over Fibre Channel.)

SAN Metadata Controller
- Performance considerations:
  - MDC latency (low access rate assumed)
  - Additional latency to map client file system requests to the distributed file system
- Other administrative considerations:
  - The requirement for client-side software is a burden!
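The "low access rate assumed" point can be sketched as amortization: one metadata round trip is shared by every I/O that reuses the same token, while the file system mapping cost is paid on each I/O. This is a back-of-the-envelope model under that assumption; the function and parameter names and all values are hypothetical.

```python
def out_of_band_latency_us(disk_us: float, mdc_us: float, ios_per_token: int,
                           mapping_us: float) -> float:
    """Average per-I/O latency (usec) for an out-of-band (MDC) SAN client.

    disk_us:       direct disk access time over Fibre Channel
    mdc_us:        one metadata controller round trip to acquire a token
    ios_per_token: I/Os issued before another MDC request is needed (assumption)
    mapping_us:    client-side cost to map the request to the distributed file system
    """
    return disk_us + mdc_us / ios_per_token + mapping_us


# Hypothetical values: 6 ms disk access, 0.5 ms MDC round trip, 20 usec mapping.
print(out_of_band_latency_us(6000, 500, ios_per_token=100, mapping_us=20))  # 6025.0
print(out_of_band_latency_us(6000, 500, ios_per_token=1, mapping_us=20))    # 6520.0
```

When tokens are reused across many I/Os, the MDC contributes little; when every I/O needs a fresh token, its latency is added in full.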

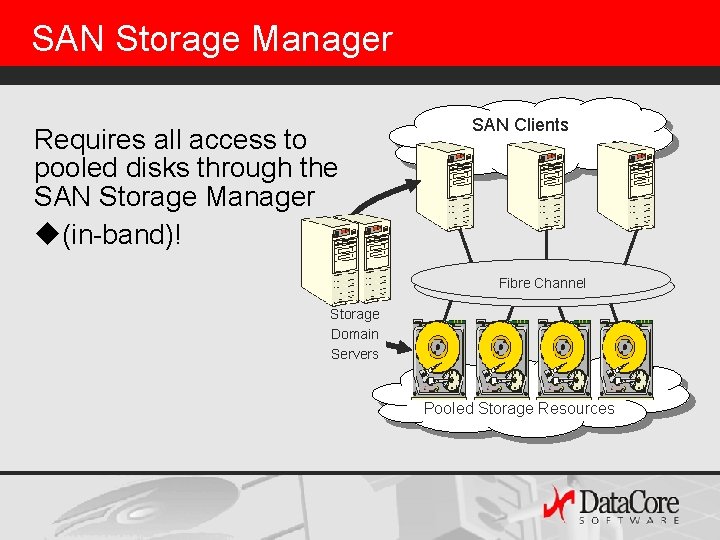

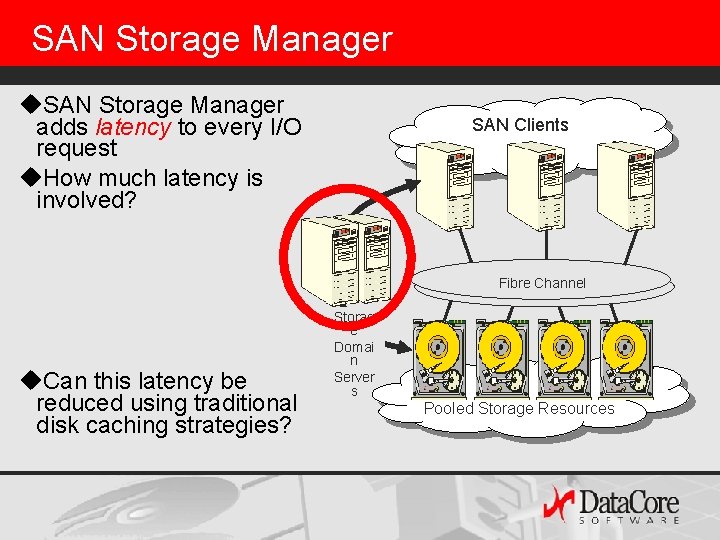

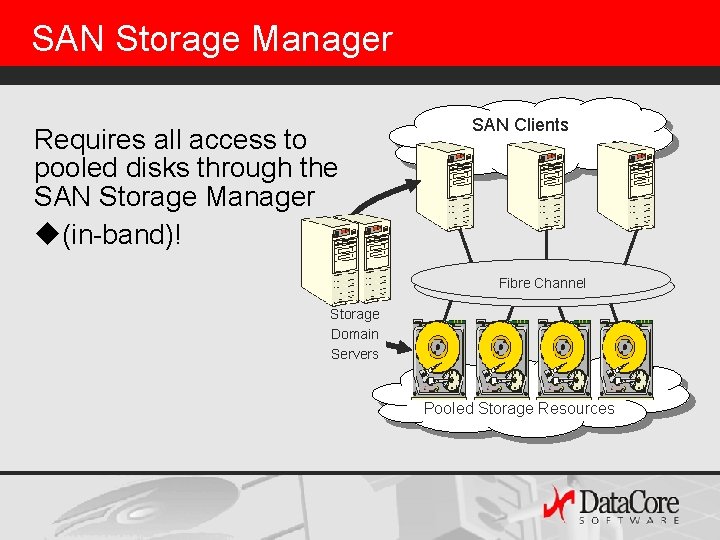

SAN Storage Manager
- Requires all access to pooled disks to go through the SAN Storage Manager (in-band)!
(Figure: SAN clients connect over Fibre Channel to storage domain servers, which front the pooled storage resources.)

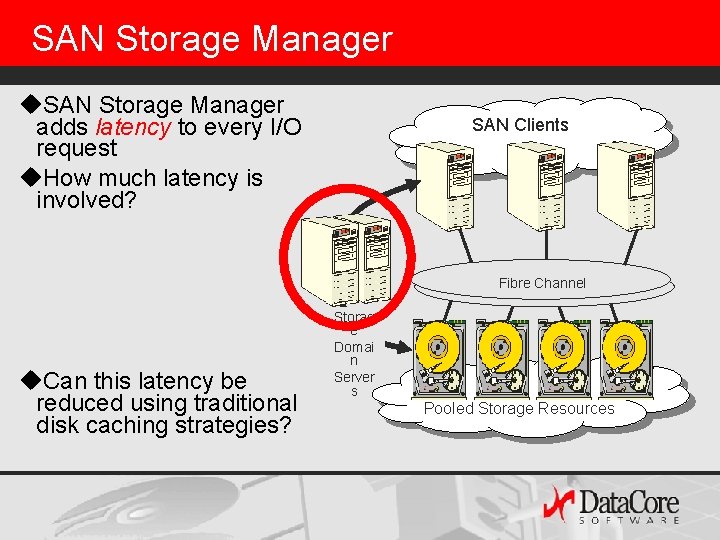

SAN Storage Manager
- The SAN Storage Manager adds latency to every I/O request.
- How much latency is involved?
- Can this latency be reduced using traditional disk caching strategies?
(Figure: SAN clients, Fibre Channel, storage domain servers, pooled storage resources.)

Architecture of a Storage Domain Server
- Runs on an ordinary Win2K Intel server
- The SDS intercepts SAN I/O requests, impersonating a SCSI disk
- Leverages:
  - Native device drivers
  - Disk management
  - Security
  - Native CIFS support
(Figure: SANsymphony Storage Domain Server stack. Client I/O arrives through initiator/target emulation and FC adaptor polling threads, passes through security, fault tolerance, and the data cache, then the native W2K I/O Manager, disk driver, Diskperf (measurement), optional fault tolerance, SCSI miniport driver, and Fibre Channel HBA driver.)

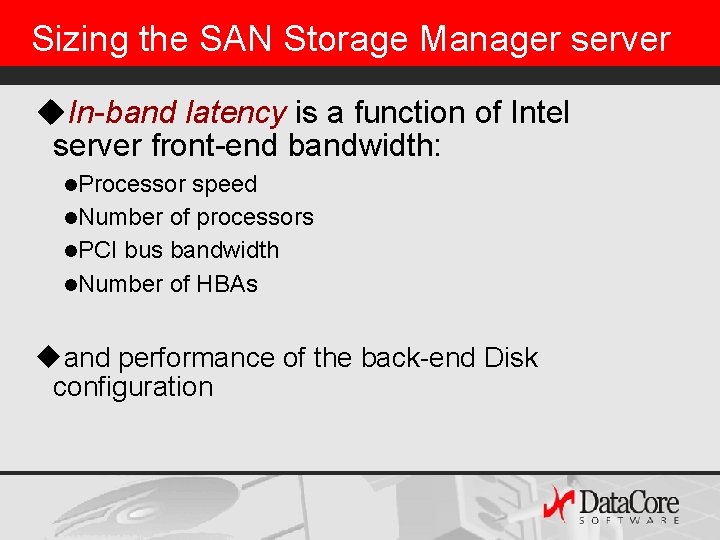

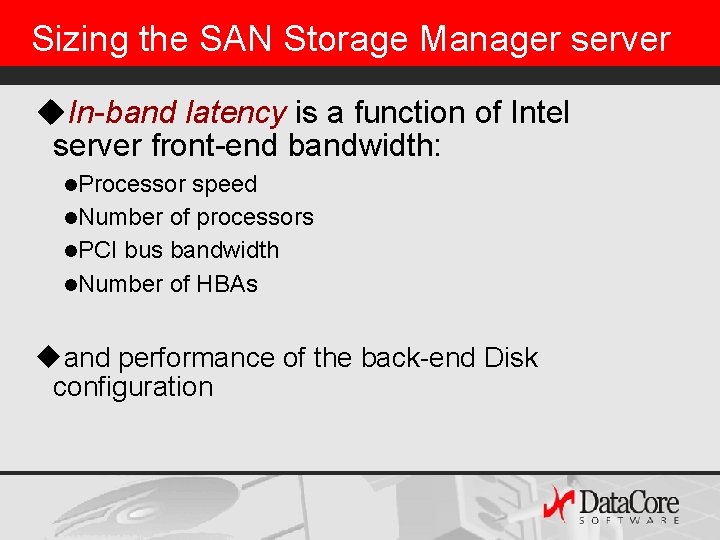

Sizing the SAN Storage Manager server
- In-band latency is a function of Intel server front-end bandwidth:
  - Processor speed
  - Number of processors
  - PCI bus bandwidth
  - Number of HBAs
- and of the performance of the back-end disk configuration

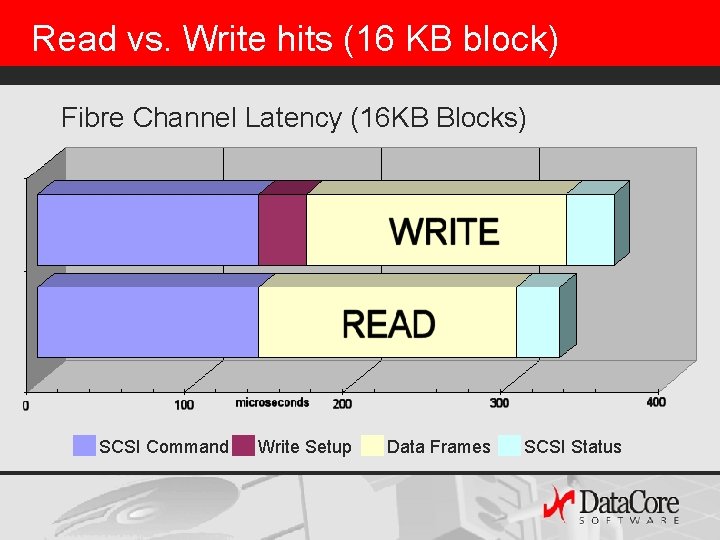

SAN Storage Manager
- Can SAN Storage Manager in-band latency be reduced using traditional disk caching strategies?
  - Read hits
  - Read misses
    - Disk I/O + (2 × data transfer)
  - Fast writes to cache (with mirrored caches)
    - 2 × data transfer
    - Write performance is ultimately determined by the disk configuration
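The cost terms above combine into an expected read latency weighted by the cache hit ratio. A minimal sketch: the read-miss and fast-write costs come from the slide, while the read-hit cost of a single data transfer (cache to client) is an assumption, since the slide gives no figure for it. All numeric values are hypothetical.

```python
def read_latency_us(hit_ratio: float, transfer_us: float, disk_us: float) -> float:
    """Expected in-band read latency (usec) through the storage manager.

    Hit:  1 x data transfer (assumption: cache to client only).
    Miss: disk I/O + 2 x data transfer (disk to cache, cache to client).
    """
    hit_cost = transfer_us
    miss_cost = disk_us + 2 * transfer_us
    return hit_ratio * hit_cost + (1 - hit_ratio) * miss_cost


def fast_write_latency_us(transfer_us: float) -> float:
    """Fast write to mirrored caches: 2 x data transfer."""
    return 2 * transfer_us


# Hypothetical values: 150 usec per 16 KB transfer, 6 ms disk access.
for h in (0.0, 0.5, 0.8, 1.0):
    print(f"hit ratio {h:.0%}: {read_latency_us(h, 150, 6000):,.0f} usec")
print(f"fast write: {fast_write_latency_us(150):,.0f} usec")
```

Because the disk term dominates a miss, the hit ratio matters far more than the extra data transfer the in-band server adds.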

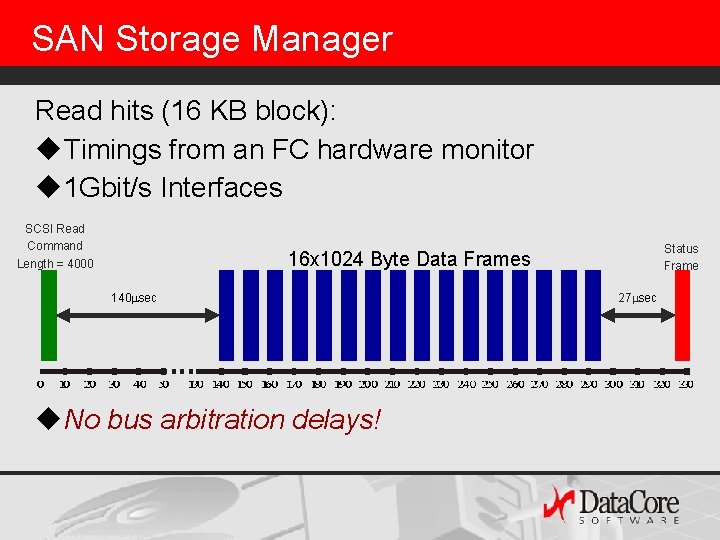

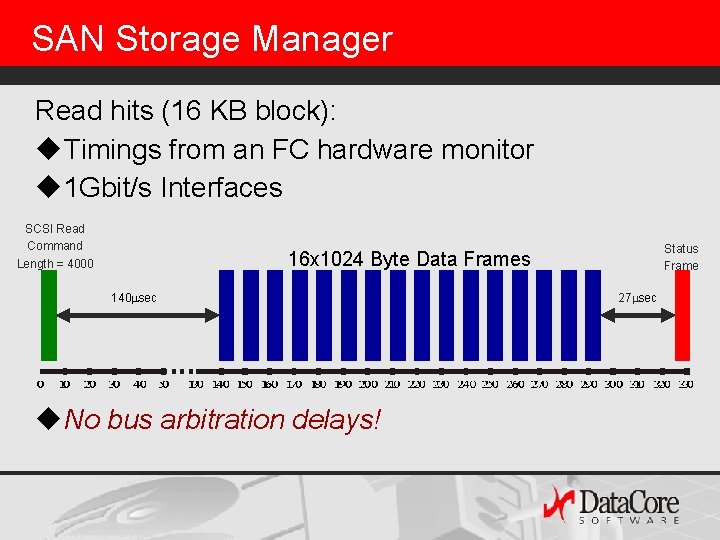

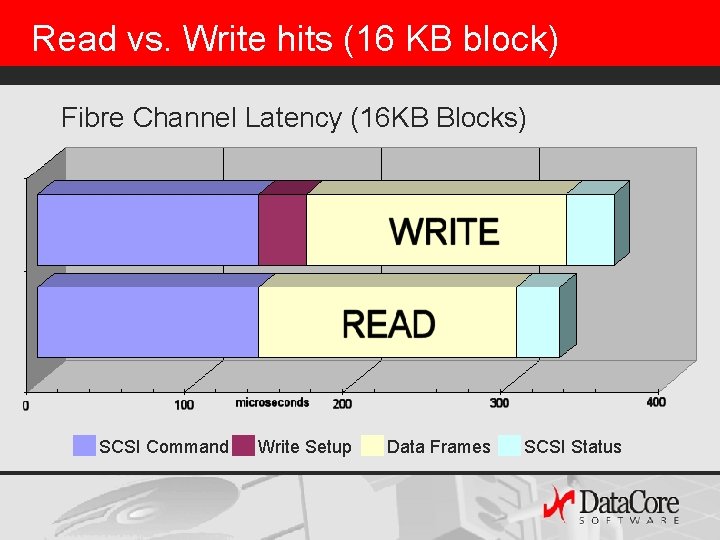

SAN Storage Manager
- Read hits (16 KB block):
  - Timings from an FC hardware monitor
  - 1 Gbit/s interfaces
  - No bus arbitration delays!
(Figure: monitor trace of a SCSI Read command (Length = 4000, hexadecimal for 16 KB), 16 × 1024-byte data frames, and a status frame, with annotated intervals of 140 µsec and 27 µsec.)

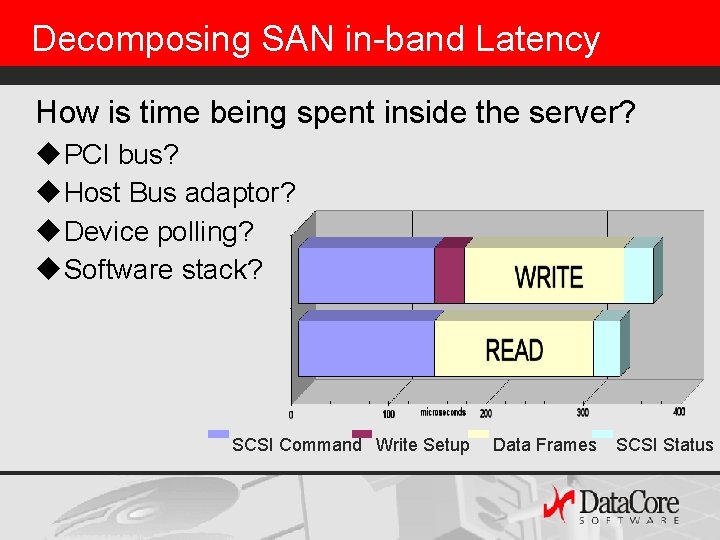

Read vs. Write hits (16 KB block)
(Figure: Fibre Channel latency for 16 KB blocks, broken into SCSI command, write setup, data frames, and SCSI status.)

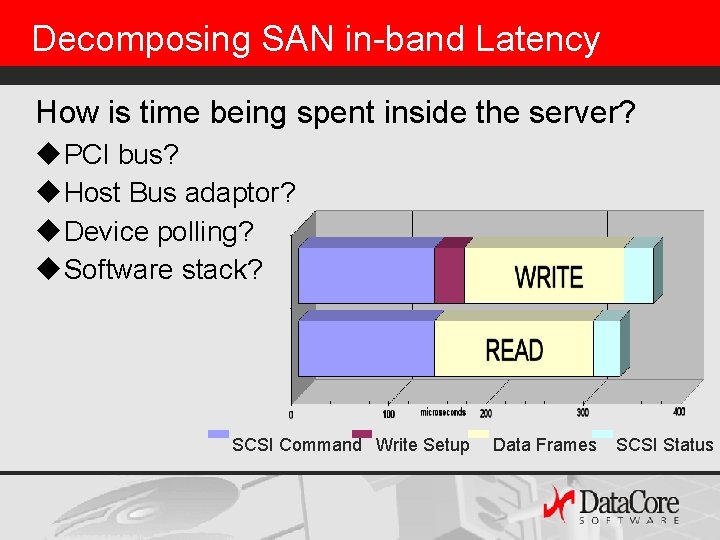

Decomposing SAN in-band Latency
How is time being spent inside the server?
- PCI bus?
- Host bus adaptor?
- Device polling?
- Software stack?
(Figure: latency components: SCSI command, write setup, data frames, SCSI status.)

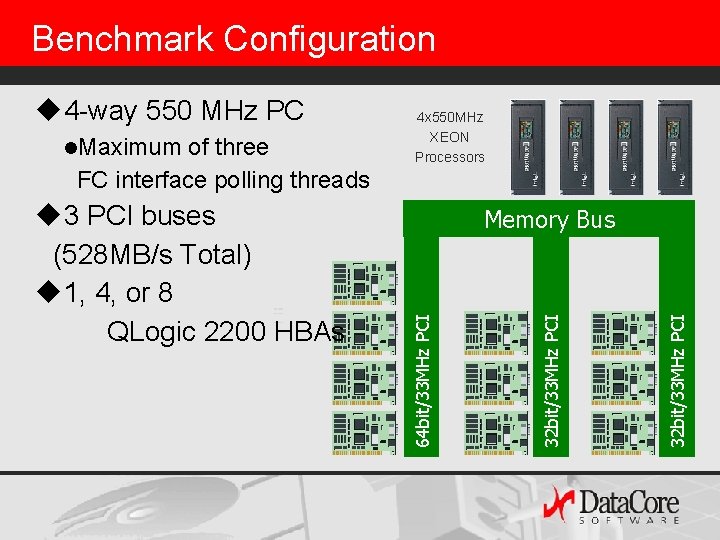

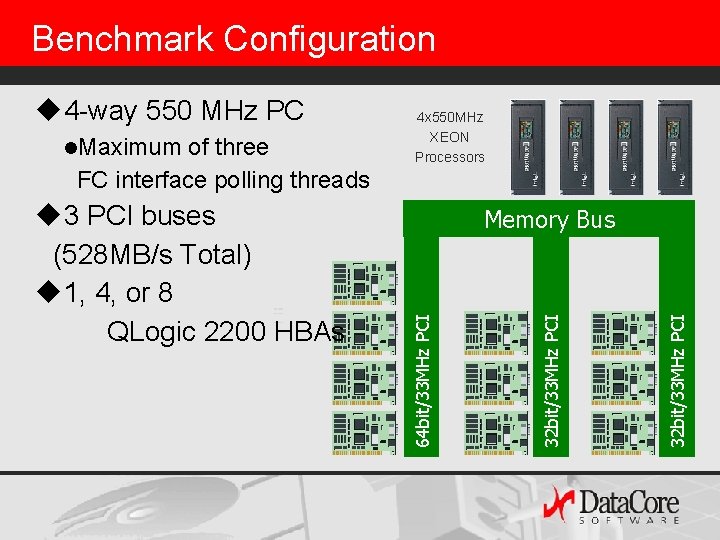

Benchmark Configuration
- 4-way 550 MHz PC
  - Maximum of three FC interface polling threads
- 3 PCI buses (528 MB/s total)
- 1, 4, or 8 QLogic 2200 HBAs
(Figure: 4 × 550 MHz Xeon processors on the memory bus, with two 32-bit/33 MHz PCI buses and one 64-bit/33 MHz PCI bus.)

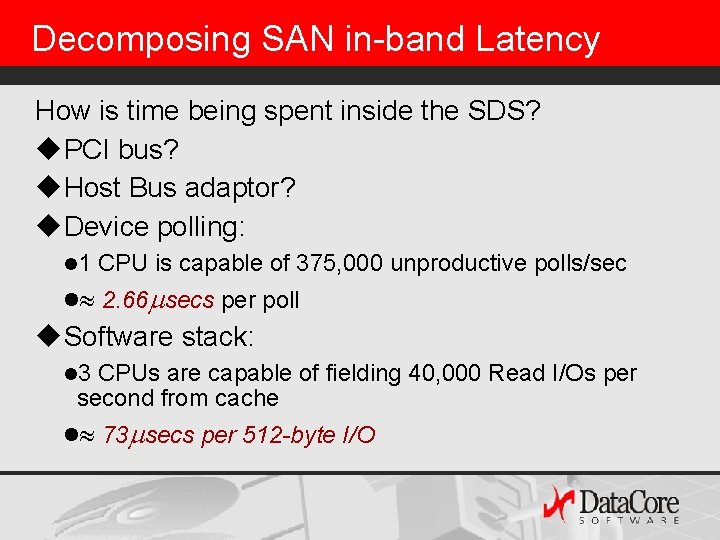

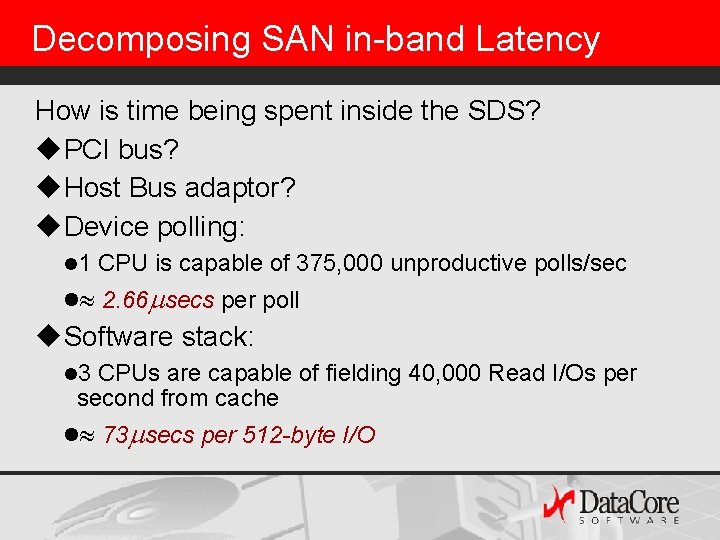

Decomposing SAN in-band Latency
How is time being spent inside the SDS?
- PCI bus?
- Host bus adaptor?
- Device polling:
  - 1 CPU is capable of 375,000 unproductive polls/sec
  - 2.66 µsec per poll
- Software stack:
  - 3 CPUs are capable of fielding 40,000 read I/Os per second from cache
  - 73 µsec per 512-byte I/O
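The per-operation times follow from the measured rates by inverting them and scaling by the number of CPUs doing the work. A small sketch of that arithmetic (the helper name is illustrative):

```python
def usec_per_op(ops_per_sec: float, cpus: int = 1) -> float:
    """CPU time per operation, in microseconds, when `cpus` processors
    together sustain `ops_per_sec` operations per second."""
    return cpus * 1e6 / ops_per_sec


print(f"{usec_per_op(375_000):.2f} usec per poll")
print(f"{usec_per_op(40_000, cpus=3):.0f} usec of CPU time per cached 512-byte read")
```

The polling rate gives about 2.67 µsec per poll; 40,000 reads/sec spread across 3 CPUs works out to about 75 µsec of CPU time per I/O, close to the slide's 73 µsec.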

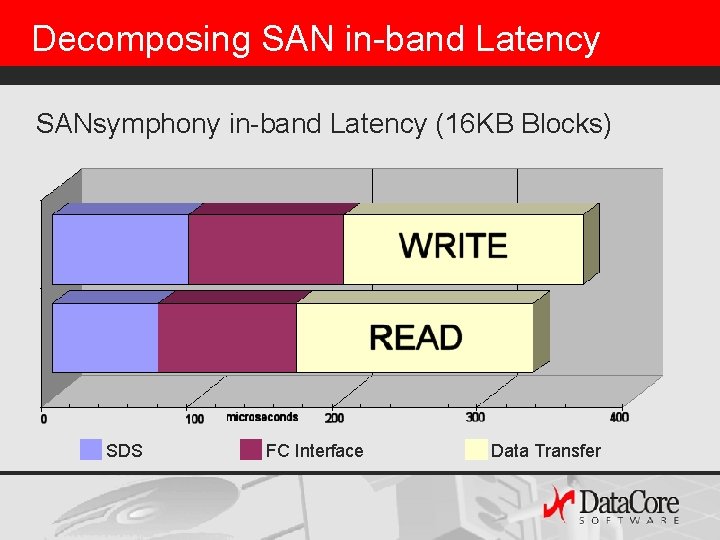

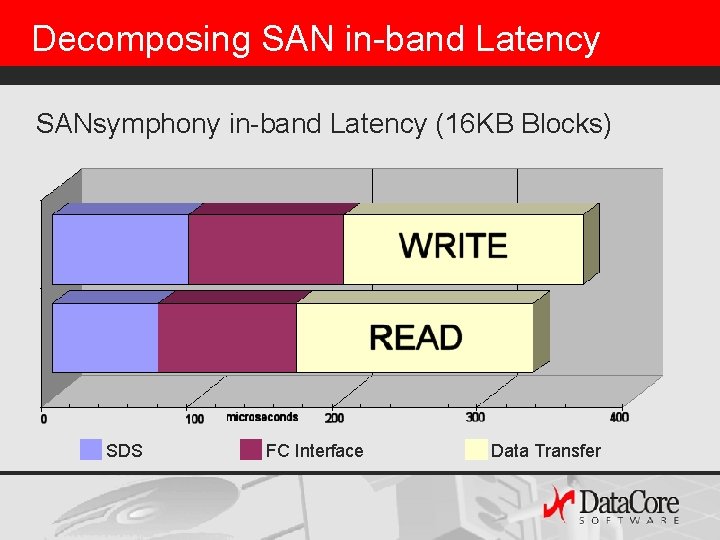

Decomposing SAN in-band Latency
(Figure: SANsymphony in-band latency for 16 KB blocks, broken into SDS, FC interface, and data transfer components.)

Impact Of New Technologies
- Front-end bandwidth:
  - Different speed processors
  - Different number of processors
  - Faster PCI bus
  - Faster HBAs
- e.g., next-generation server:
  - 2 GHz processors (4x benchmark system)
  - 200 MB/sec FC interfaces (2x benchmark system)
  - 4 × 800 MB/s PCI buses (6x benchmark system)
- ...
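The improvement factors can be checked against the benchmark configuration (550 MHz processors, 100 MB/sec FC interfaces, 528 MB/s of total PCI bandwidth). The sketch compares raw clock rates and bandwidths only; per-clock processor improvements are not modeled.

```python
BENCHMARK = {"cpu_mhz": 550, "fc_mb_s": 100, "pci_mb_s": 528}
NEXT_GEN = {"cpu_mhz": 2000, "fc_mb_s": 200, "pci_mb_s": 4 * 800}


def speedups(new: dict[str, float], old: dict[str, float]) -> dict[str, float]:
    """Ratio of each next-generation resource to its benchmark counterpart."""
    return {key: new[key] / old[key] for key in old}


for resource, ratio in speedups(NEXT_GEN, BENCHMARK).items():
    print(f"{resource}: {ratio:.1f}x")
```

The clock ratio is about 3.6x, which the slide rounds to 4x; the PCI ratio of 3200/528 is about 6.1x.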

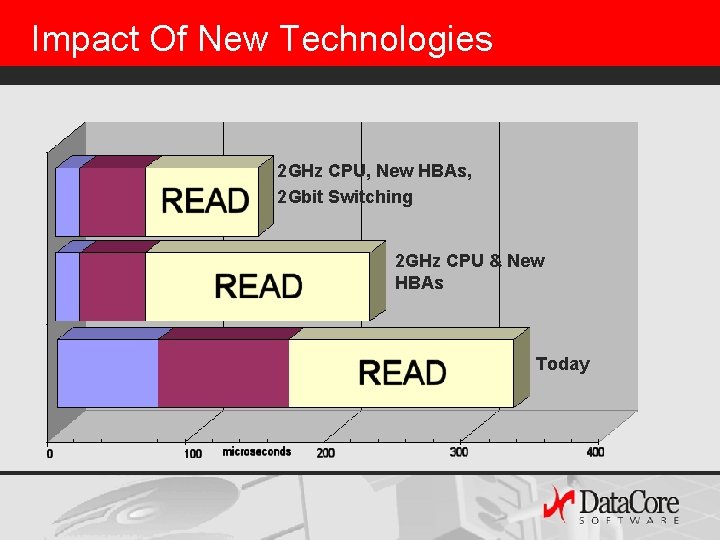

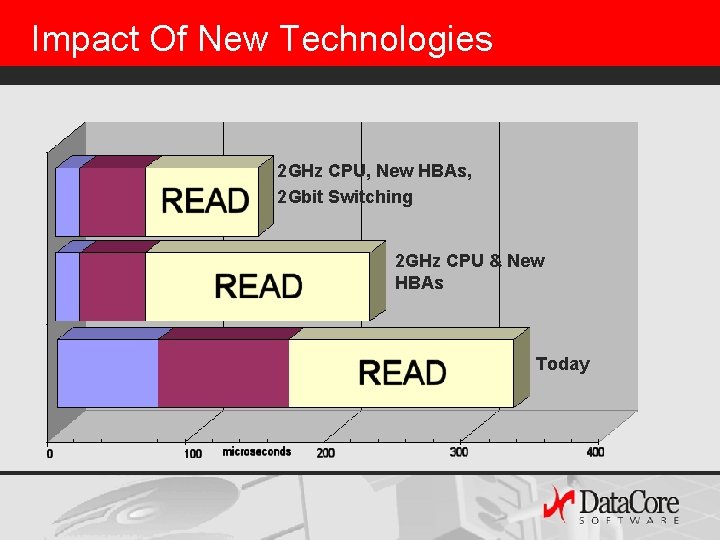

Impact Of New Technologies
(Figure: projected in-band latency for today's configuration, with 2 GHz CPUs and new HBAs, and with 2 GHz CPUs, new HBAs, and 2 Gbit switching.)

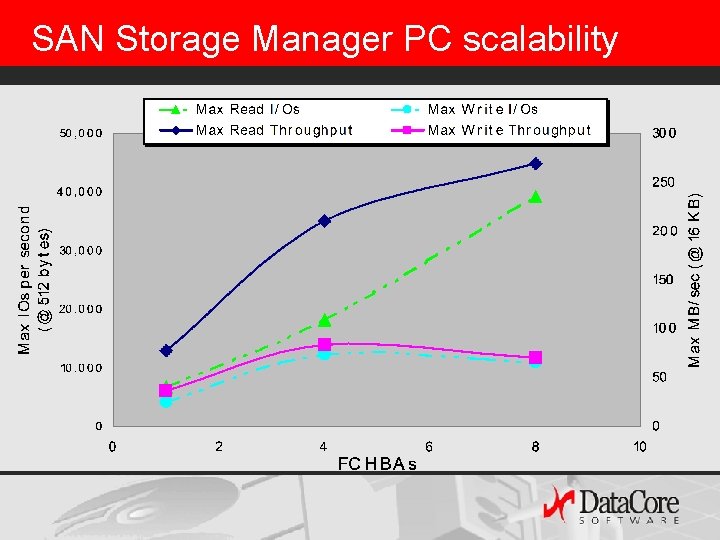

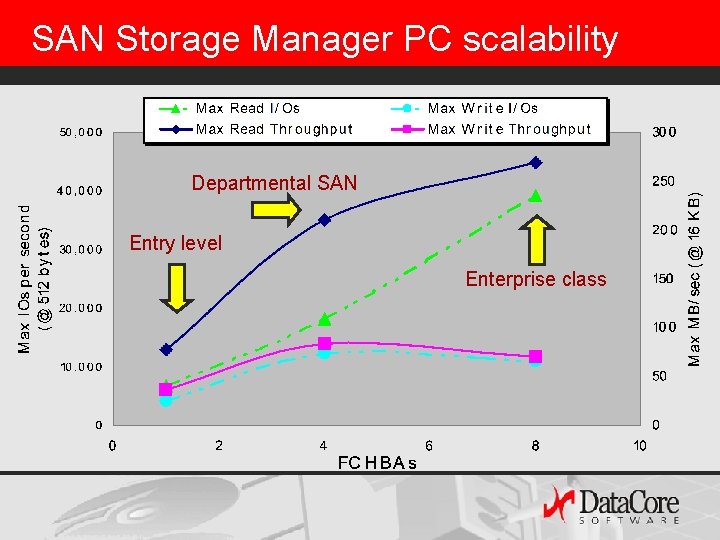

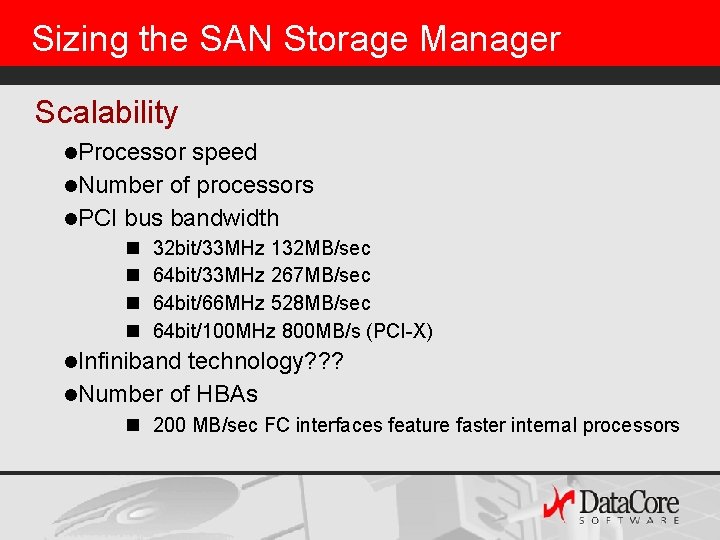

Sizing the SAN Storage Manager
Scalability:
- Processor speed
- Number of processors
- PCI bus bandwidth:
  - 32-bit/33 MHz: 132 MB/sec
  - 64-bit/33 MHz: 267 MB/sec
  - 64-bit/66 MHz: 528 MB/sec
  - 64-bit/100 MHz: 800 MB/s (PCI-X)
- InfiniBand technology???
- Number of HBAs
  - 200 MB/sec FC interfaces feature faster internal processors
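Peak PCI bandwidth is the bus width in bytes times the clock rate, assuming one transfer per clock. The sketch reproduces the figures above and the 528 MB/s total of the benchmark's three buses (two 32-bit/33 MHz plus one 64-bit/33 MHz). The 267 MB/sec figure uses the exact 33.33 MHz clock, while the others use rounded clocks. These are theoretical peaks; sustained throughput is lower.

```python
def pci_bandwidth_mb_s(width_bits: int, clock_mhz: float) -> float:
    """Peak PCI bandwidth in MB/sec: bytes per transfer x transfers per second."""
    return width_bits / 8 * clock_mhz


for width, clock in ((32, 33), (64, 33.33), (64, 66), (64, 100)):
    print(f"{width}-bit/{clock} MHz: {pci_bandwidth_mb_s(width, clock):.0f} MB/sec")

# Benchmark system: two 32-bit/33 MHz buses plus one 64-bit/33 MHz bus.
benchmark_total = 2 * pci_bandwidth_mb_s(32, 33) + pci_bandwidth_mb_s(64, 33)
print(f"benchmark total: {benchmark_total:.0f} MB/sec")
```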

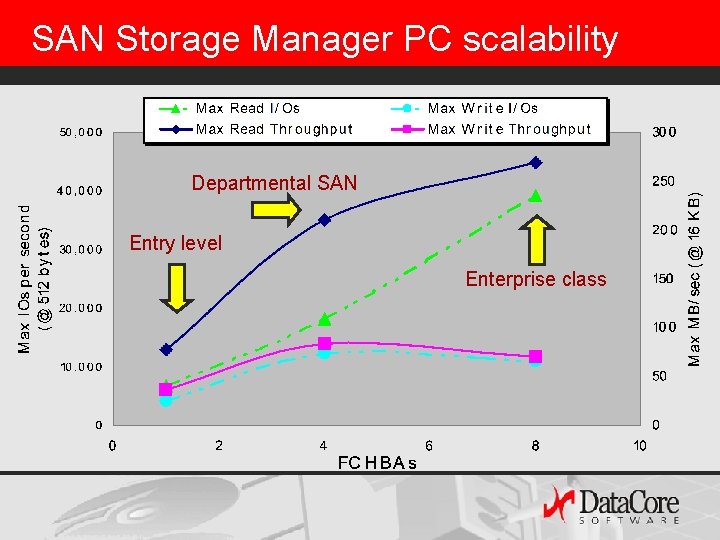

Sizing the SAN Storage Manager
- Entry-level system:
  - Dual processor, single PCI bus, 1 GB RAM
- Mid-level departmental system:
  - Dual processor, dual PCI bus, 2 GB RAM
- Enterprise-class system:
  - Quad processor, triple PCI bus, 4 GB RAM

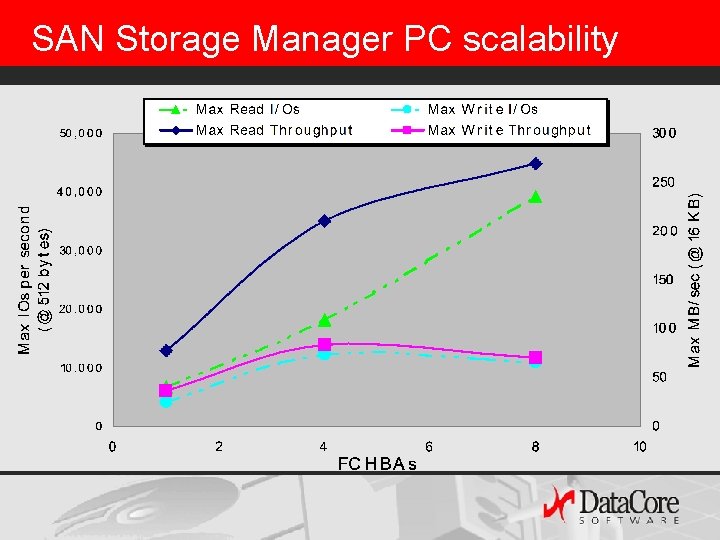

SAN Storage Manager PC scalability

SAN Storage Manager PC scalability
(Figure: entry-level, departmental SAN, and enterprise-class configurations.)

SANsymphony Performance Conclusions
- FC switches provide virtually unlimited bandwidth with exceptionally low latency, so long as you do not cascade switches.
- General-purpose Intel PCs are a great source of inexpensive MIPS.
- In-band SAN management is not a CPU-bound process.
- PCI bandwidth is the most significant bottleneck in the Intel architecture.
- FC interface card speeds and feeds are also very significant.

SAN Storage Manager: Next Steps
- Cacheability of Unix and NT workloads:
  - Domino, MS Exchange
  - Oracle, SQL Server, Apache, IIS
- Given mirrored writes, what is the effect of different physical disk configurations?
  - JBOD
  - RAID 0 disk striping
  - RAID 5 write penalty
- Asynchronous disk mirroring over long distances
- Backup and replication (snapshot)
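The RAID 5 write penalty can be sketched as back-end disk I/Os per front-end write: one for JBOD and RAID 0, four for a small RAID 5 write (read old data, read old parity, write new data, write new parity). The sketch assumes every read and write reaches the disks; it ignores cache hits and full-stripe writes, and the workload numbers are hypothetical.

```python
# Back-end disk I/Os generated by one small random front-end write.
WRITE_PENALTY = {"JBOD": 1, "RAID 0": 1, "RAID 5": 4}


def backend_iops(read_iops: float, write_iops: float, layout: str) -> float:
    """Disk I/Os per second the back end must sustain (no cache hits assumed)."""
    return read_iops + WRITE_PENALTY[layout] * write_iops


# Hypothetical workload: 700 reads/sec and 300 writes/sec after the cache.
for layout in WRITE_PENALTY:
    print(f"{layout}: {backend_iops(700, 300, layout):,.0f} disk I/Os per second")
```

With 30% writes, RAID 5 nearly doubles the back-end load, which is why write performance is ultimately determined by the disk configuration even when fast writes complete in cache.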

Questions?

www.datacore.com
