ELEC 801 PATTERN RECOGNITION, FALL 2018, KNU (9/18/2018)

- Instructor: Gil-Jin Jang
- Textbook: Pattern Classification (2nd ed.) by R. O. Duda, P. E. Hart and D. G. Stork, John Wiley & Sons, 2000
- Slide credit: R. O. Duda, P. E. Hart and D. G. Stork
- Machine Intelligence lab - School of Electronics Engineering - Kyungpook National University

BAYESIAN DECISION THEORY

Pattern Classification

All materials in these slides were taken from Pattern Classification (2nd ed.) by R. O. Duda, P. E. Hart and D. G. Stork, John Wiley & Sons, 2000, with the permission of the authors and the publisher.

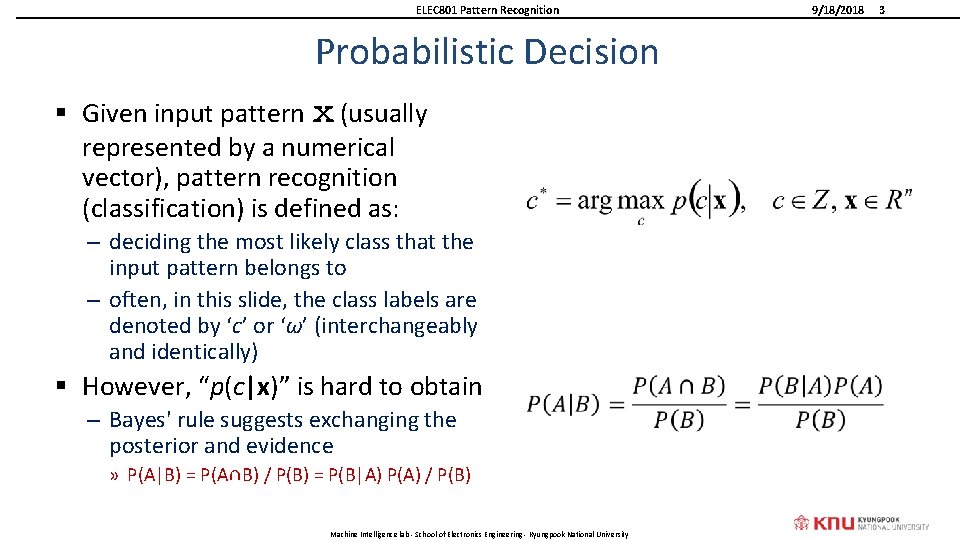

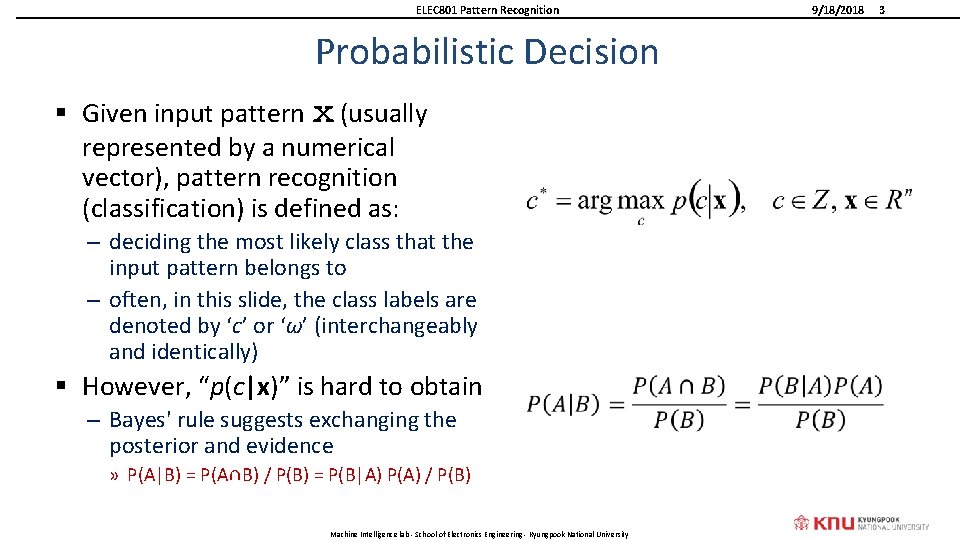

Probabilistic Decision

- Given an input pattern x (usually represented by a numerical vector), pattern recognition (classification) is defined as:
  - deciding the most likely class that the input pattern belongs to
  - in these slides, the class labels are denoted by 'c' or 'ω' (interchangeably and identically)
- However, p(c|x) is hard to obtain directly
  - Bayes' rule lets us exchange the roles of the posterior and the likelihood:
    - P(A|B) = P(A∩B) / P(B) = P(B|A) P(A) / P(B)

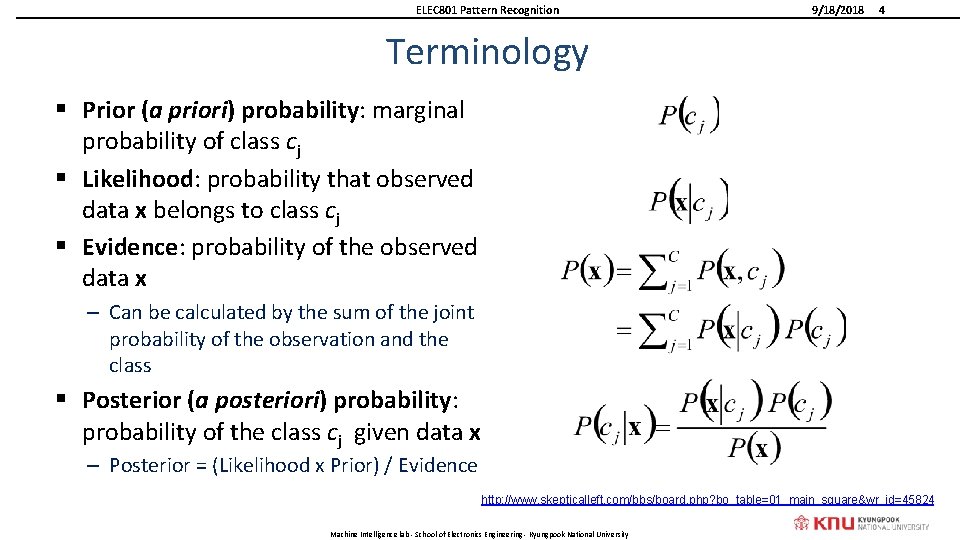

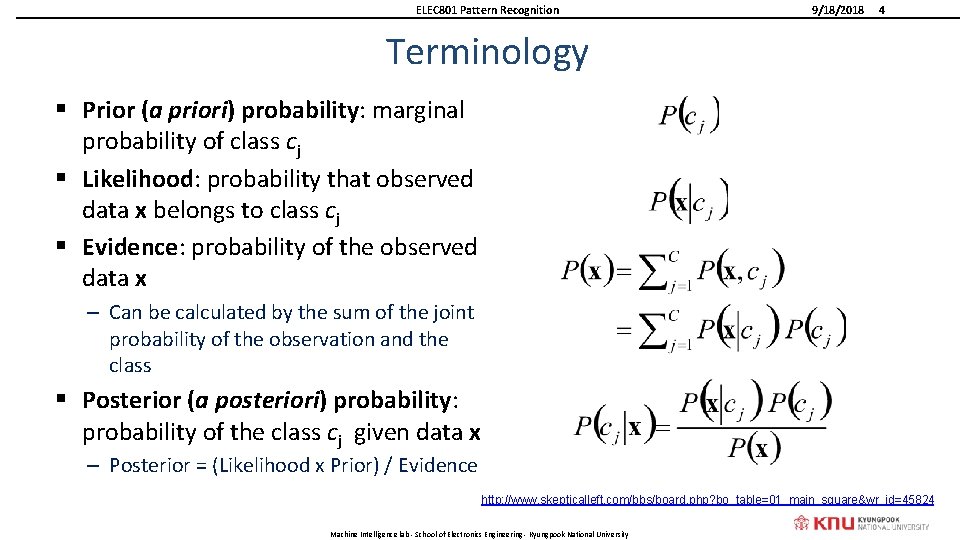

Terminology

- Prior (a priori) probability: marginal probability of class cj
- Likelihood: probability of the observed data x given that it belongs to class cj
- Evidence: probability of the observed data x
  - Can be calculated as the sum, over all classes, of the joint probability of the observation and the class
- Posterior (a posteriori) probability: probability of the class cj given data x
  - Posterior = (Likelihood x Prior) / Evidence

http://www.skepticalleft.com/bbs/board.php?bo_table=01_main_square&wr_id=45824

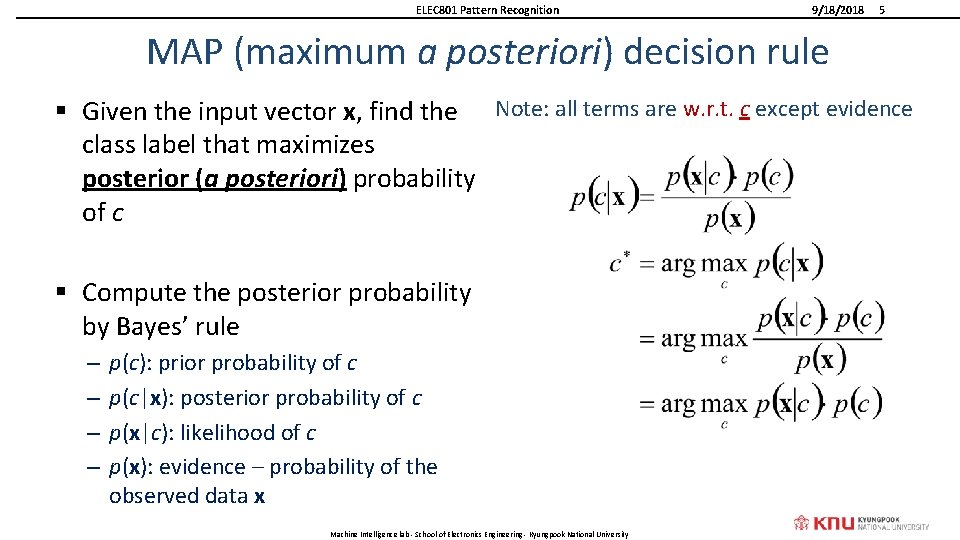

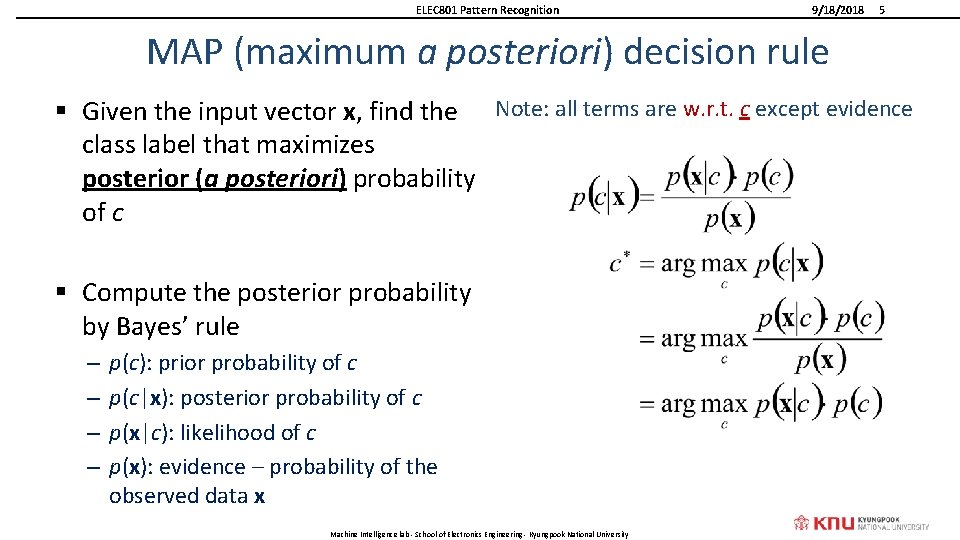

MAP (maximum a posteriori) decision rule

- Given the input vector x, find the class label that maximizes the posterior (a posteriori) probability of c
- Compute the posterior probability by Bayes' rule: p(c|x) = p(x|c) p(c) / p(x)
  - p(c): prior probability of c
  - p(c|x): posterior probability of c
  - p(x|c): likelihood of c
  - p(x): evidence, the probability of the observed data x
- Note: all terms depend on c except the evidence

Approximation of Prior and Likelihood

- Approximation of the likelihood function
  - Collect class-labeled data (called the training data)
  - Use parametric pdf models such as the Gaussian
    - Mean and variance
  - Use non-parametric models
    - Piecewise approximation by the relative frequencies of histogram bins
- Approximation of the prior probabilities
  - By the relative frequency of class c
  - In practical situations, hard to obtain
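The two approximations above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function name `fit_gaussian_classes`, the 1-D toy samples, and the use of the maximum-likelihood (biased) variance are choices made here, not taken from the slides.

```python
import math
from collections import defaultdict

def fit_gaussian_classes(samples, labels):
    """Return {label: (prior, mean, variance)} for 1-D class-labeled samples."""
    groups = defaultdict(list)
    for x, c in zip(samples, labels):
        groups[c].append(x)
    n_total = len(samples)
    params = {}
    for c, xs in groups.items():
        mean = sum(xs) / len(xs)
        var = sum((x - mean) ** 2 for x in xs) / len(xs)  # ML (biased) estimate
        prior = len(xs) / n_total                         # relative frequency of class c
        params[c] = (prior, mean, var)
    return params

def gaussian_pdf(x, mean, var):
    """Parametric likelihood model p(x | c) for one class."""
    return math.exp(-(x - mean) ** 2 / (2 * var)) / math.sqrt(2 * math.pi * var)

# Hypothetical toy data: three samples of class 1, two of class 2.
samples = [1.0, 2.0, 3.0, 6.0, 8.0]
labels = [1, 1, 1, 2, 2]
params = fit_gaussian_classes(samples, labels)
```

With real data the prior estimated this way reflects only how the training set was collected, which is one reason the slide calls it hard to obtain in practice.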

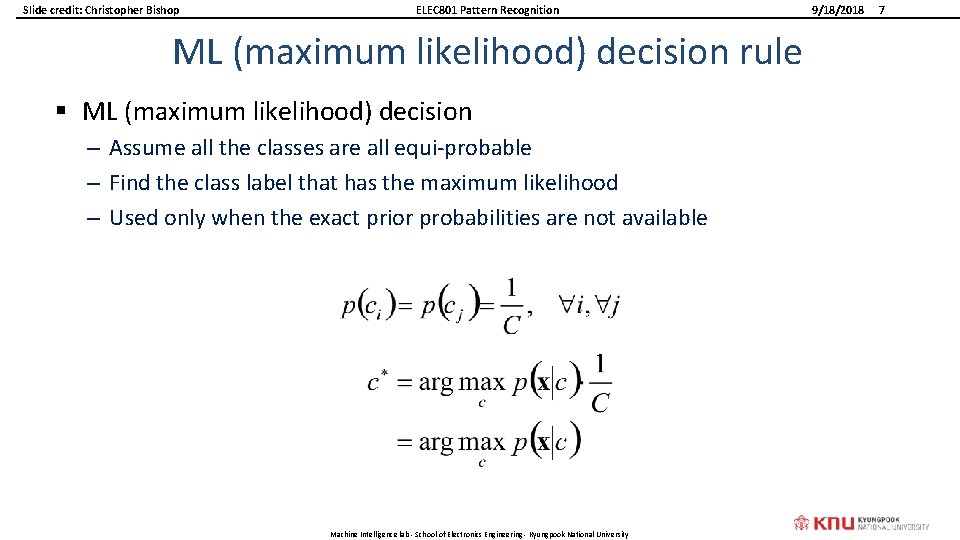

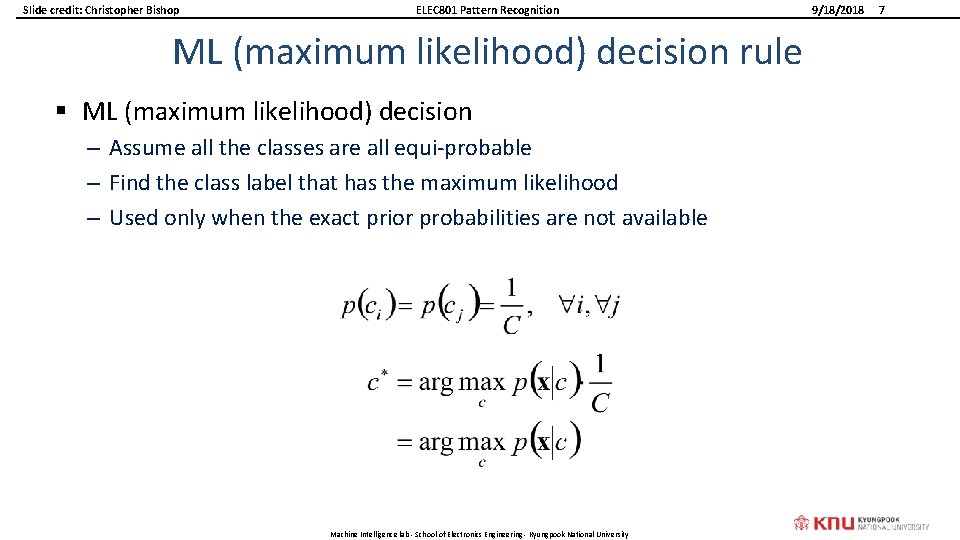

ML (maximum likelihood) decision rule

- ML (maximum likelihood) decision
  - Assume all the classes are equiprobable
  - Find the class label that has the maximum likelihood
  - Used only when the exact prior probabilities are not available

Slide credit: Christopher Bishop

SEA BASS AND SALMON CLASSIFICATION

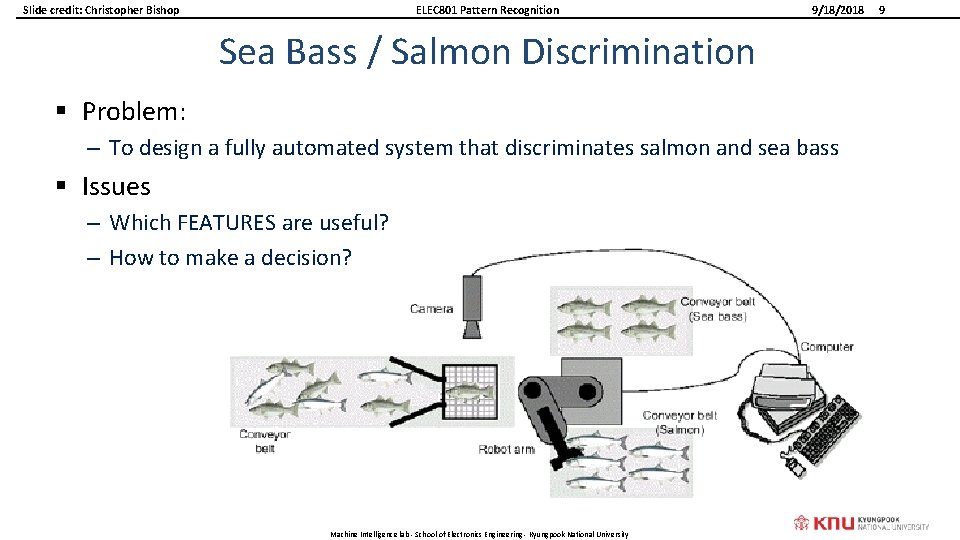

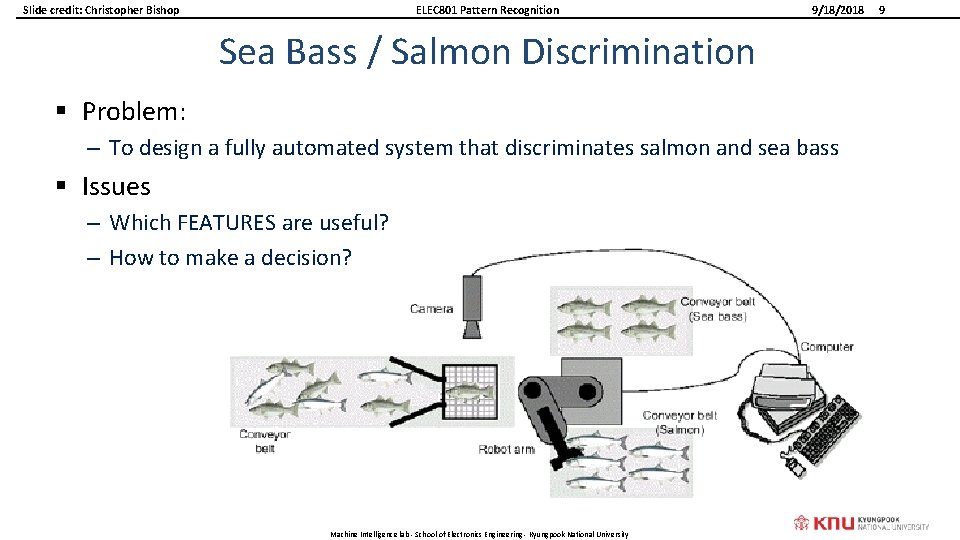

Sea Bass / Salmon Discrimination

- Problem:
  - To design a fully automated system that discriminates between salmon and sea bass
- Issues
  - Which FEATURES are useful?
  - How to make a decision?

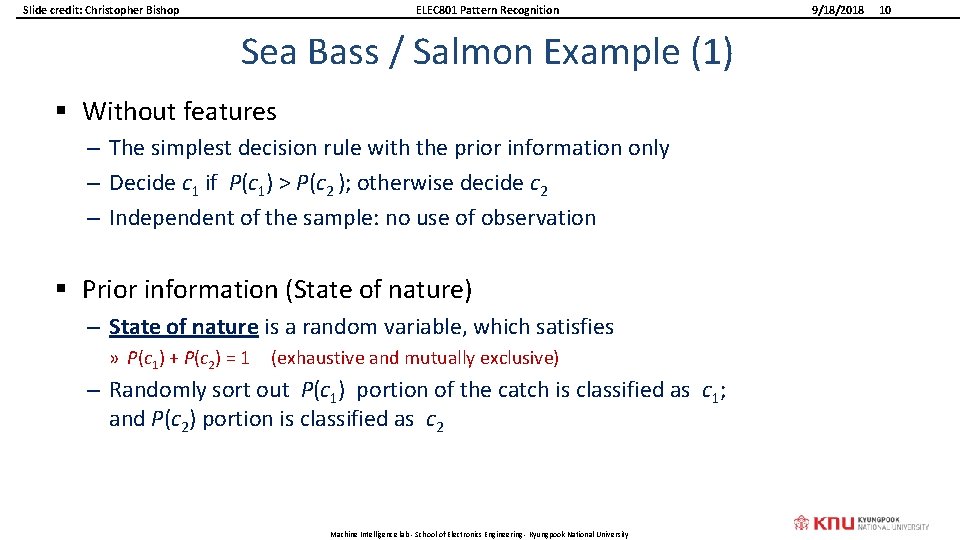

Sea Bass / Salmon Example (1)

- Without features
  - The simplest decision rule uses the prior information only
  - Decide c1 if P(c1) > P(c2); otherwise decide c2
  - Independent of the sample: the observation is not used
- Prior information (state of nature)
  - The state of nature is a random variable, which satisfies
    - P(c1) + P(c2) = 1 (exhaustive and mutually exclusive)
  - Random sorting: a P(c1) portion of the catch is classified as c1, and a P(c2) portion as c2

Sea Bass / Salmon Example (2)

- When features are available
  - Length, thickness, color, etc.
- ML (maximum likelihood) decision
  - Uses the class-conditional information
  - P(x | c1) and P(x | c2):
    - probability of observing the fish's feature x, assuming that it belongs to class c1 or c2
    - i.e. the likelihood of c1 and c2 given the data x
  - The two probabilities describe the difference in lightness between the populations of sea bass and salmon

Likelihood Plot

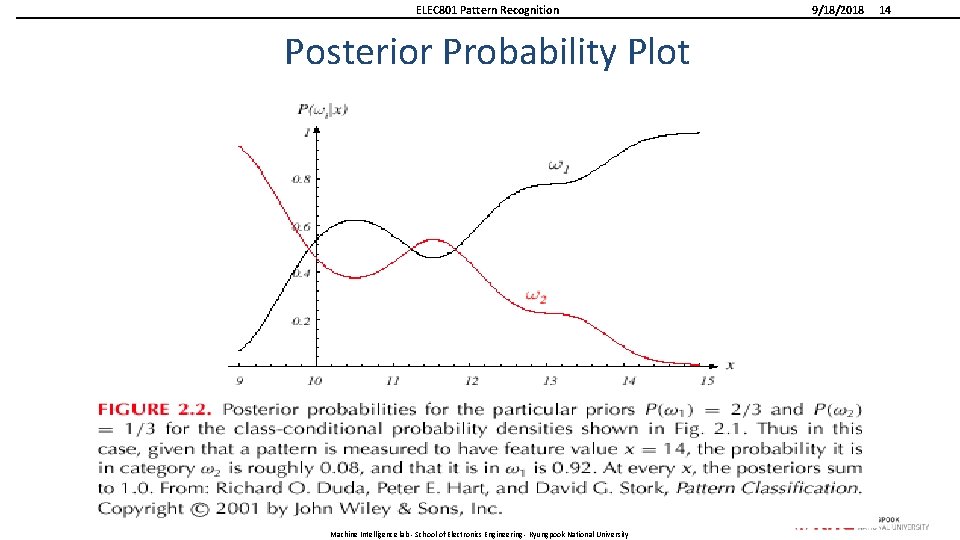

MAP Decision Rule

- If the prior probabilities are known, the posterior probabilities of the classes can be calculated
  - p(c1|x) = p(x|c1) p(c1) / p(x); p(c2|x) = p(x|c2) p(c2) / p(x)
    - where the evidence p(x) = p(x|c1) p(c1) + p(x|c2) p(c2)
    - Certainly, p(c1|x) + p(c2|x) = 1 (WHY?)
- Resultant decision rule:
  - Decide c1 if p(c1|x) ≥ p(c2|x); otherwise decide c2
- Decision error:
  - P(error | x) = min [ p(c1|x), p(c2|x) ]
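As a rough numerical check of the two-class rule above, the sketch below computes the posteriors from given likelihood values and priors. The function names and the numbers (likelihoods 0.3 and 0.5, priors 2/3 and 1/3) are made up for illustration; they are chosen so that the prior reverses the decision that the likelihoods alone would give.

```python
def posteriors(likelihoods, priors):
    """Bayes' rule for a list of classes: p(c_i | x) = p(x | c_i) p(c_i) / p(x)."""
    joint = [l * p for l, p in zip(likelihoods, priors)]
    evidence = sum(joint)            # p(x) = sum_i p(x | c_i) p(c_i)
    return [j / evidence for j in joint]

def map_decide(likelihoods, priors):
    """Two-class MAP rule: returns (index of decided class, P(error | x))."""
    post = posteriors(likelihoods, priors)
    decided = 0 if post[0] >= post[1] else 1   # decide c1 on ties, as in the slide
    return decided, min(post)

# Hypothetical values of p(x | c1), p(x | c2) at one observed x, and the priors.
likelihoods = [0.3, 0.5]
priors = [2.0 / 3.0, 1.0 / 3.0]

post = posteriors(likelihoods, priors)
decided, p_error = map_decide(likelihoods, priors)
ml_decided = max(range(2), key=lambda i: likelihoods[i])  # ML ignores the priors
```

Here the ML rule picks the second class because its likelihood is larger, while the MAP rule picks the first because its prior is twice as large.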

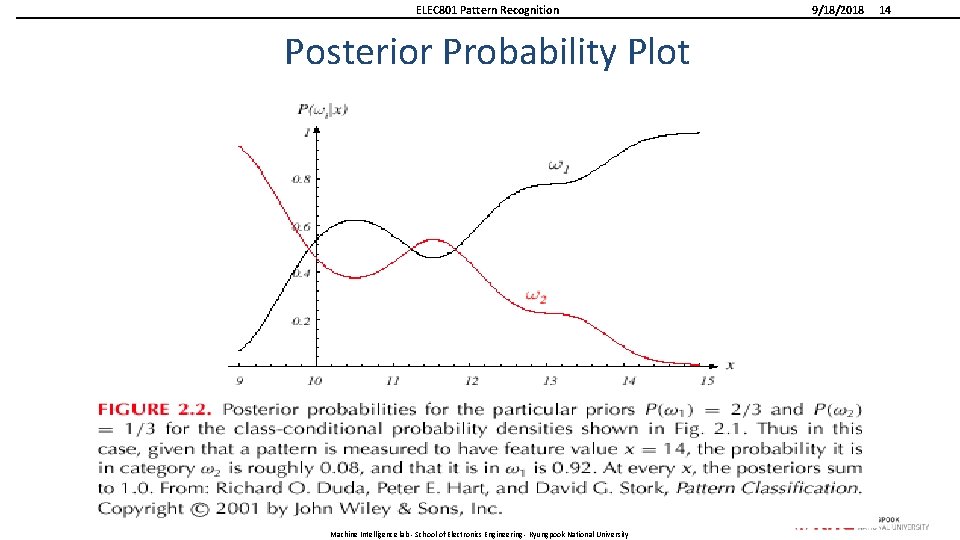

Posterior Probability Plot

RISK MINIMIZATION

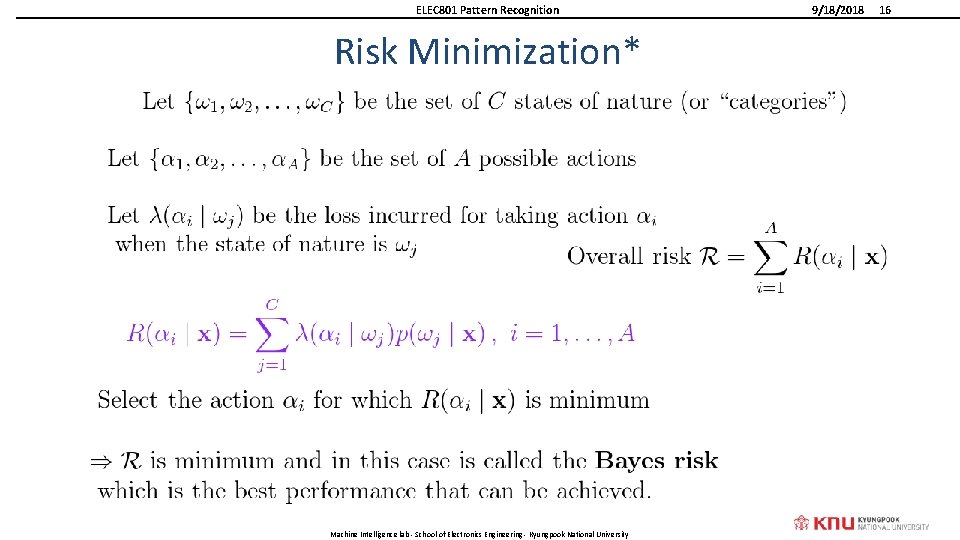

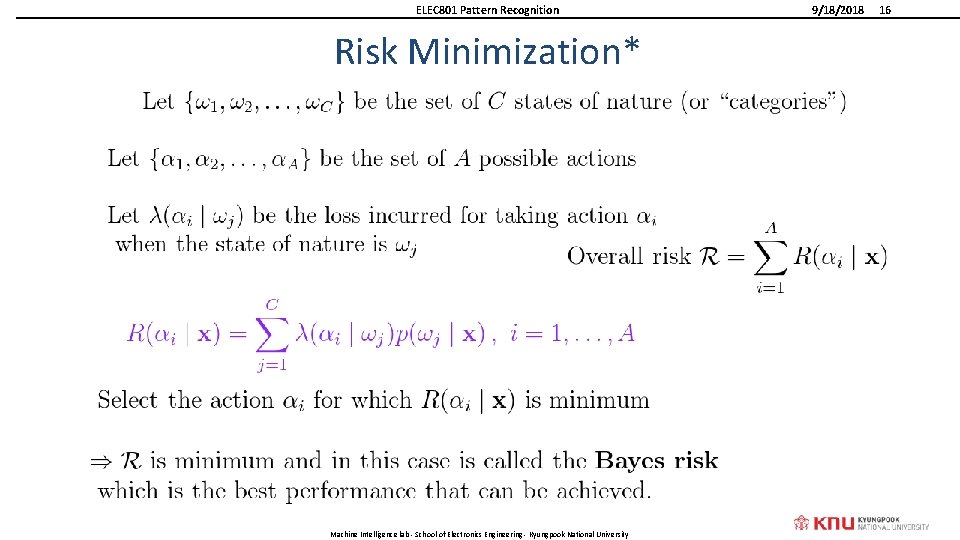

Risk Minimization*

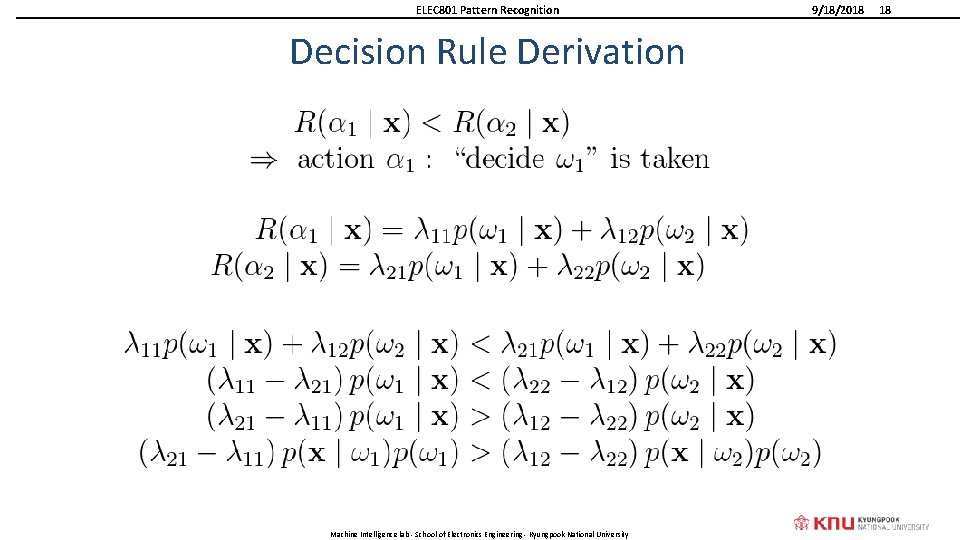

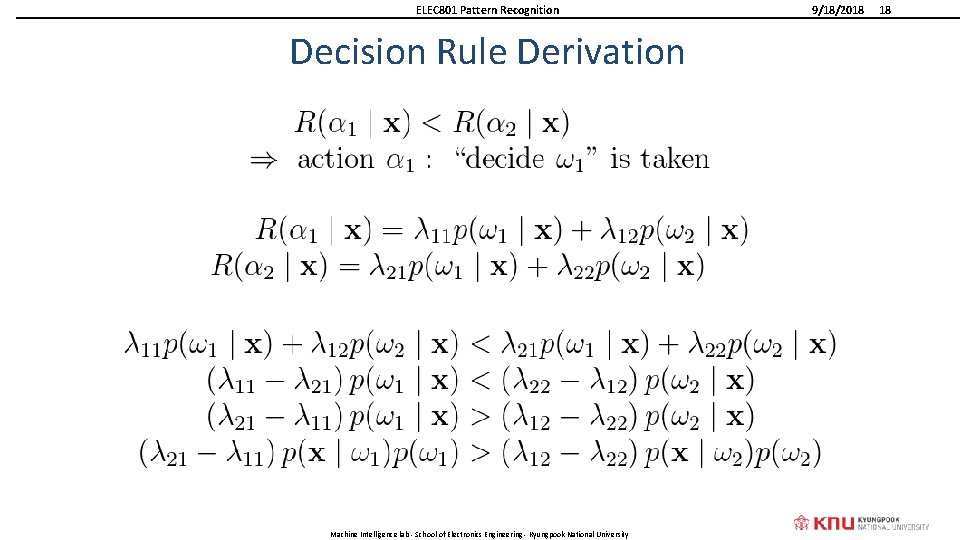

Example

- Two-category classification
  - α1: deciding ω1
  - α2: deciding ω2
  - λij = λ(αi | ωj): loss incurred for deciding ωi when the true state of nature is ωj
- Conditional risk:
  - R(α1 | x) = λ11 P(ω1 | x) + λ12 P(ω2 | x)
  - R(α2 | x) = λ21 P(ω1 | x) + λ22 P(ω2 | x)
- Our rule is the following:
  - if R(α1 | x) < R(α2 | x), action α1 ("decide ω1") is taken
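The conditional risk is a loss-weighted sum of the posteriors, so it is easy to compute once a loss matrix is fixed. The sketch below assumes a hypothetical loss matrix (deciding ω1 when the truth is ω2 costs 5, the opposite mistake costs 1) and hypothetical posteriors; none of these numbers come from the slides.

```python
def conditional_risks(loss, post):
    """loss[i][j]: loss of action i when the true class is j; post[j] = P(w_j | x)."""
    return [sum(l_ij * p_j for l_ij, p_j in zip(row, post)) for row in loss]

def min_risk_action(loss, post):
    """Return (index of the action with the smallest conditional risk, all risks)."""
    risks = conditional_risks(loss, post)
    best = min(range(len(risks)), key=lambda i: risks[i])
    return best, risks

# Hypothetical loss matrix: rows are actions, columns are true classes.
loss = [[0.0, 5.0],   # action 1 (decide class 1)
        [1.0, 0.0]]   # action 2 (decide class 2)
post = [0.7, 0.3]     # hypothetical posteriors at some x

action, risks = min_risk_action(loss, post)
```

Although class 1 has the larger posterior, the asymmetric losses make action 2 the lower-risk choice in this toy case.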

Decision Rule Derivation

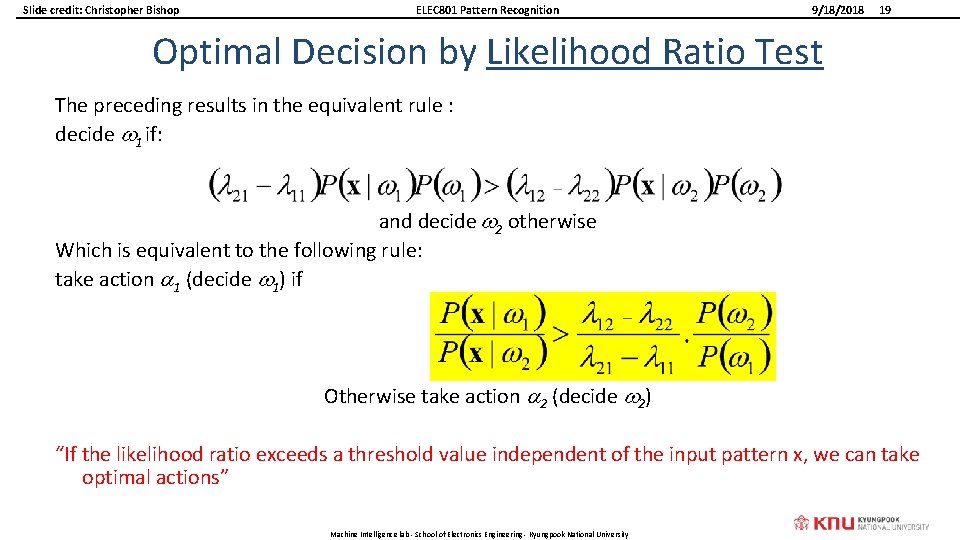

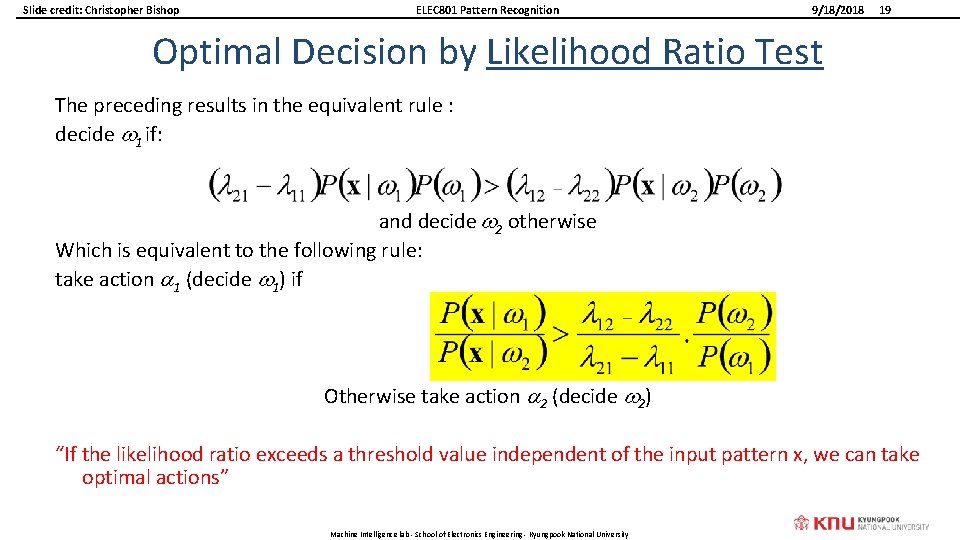

Optimal Decision by Likelihood Ratio Test

The preceding results in the equivalent rule: decide ω1 if

(λ21 − λ11) P(x | ω1) P(ω1) > (λ12 − λ22) P(x | ω2) P(ω2)

and decide ω2 otherwise.

Which is equivalent to the following rule (assuming λ21 > λ11): take action α1 (decide ω1) if

P(x | ω1) / P(x | ω2) > [(λ12 − λ22) / (λ21 − λ11)] · [P(ω2) / P(ω1)]

Otherwise take action α2 (decide ω2).

"If the likelihood ratio exceeds a threshold value independent of the input pattern x, we can take optimal actions"

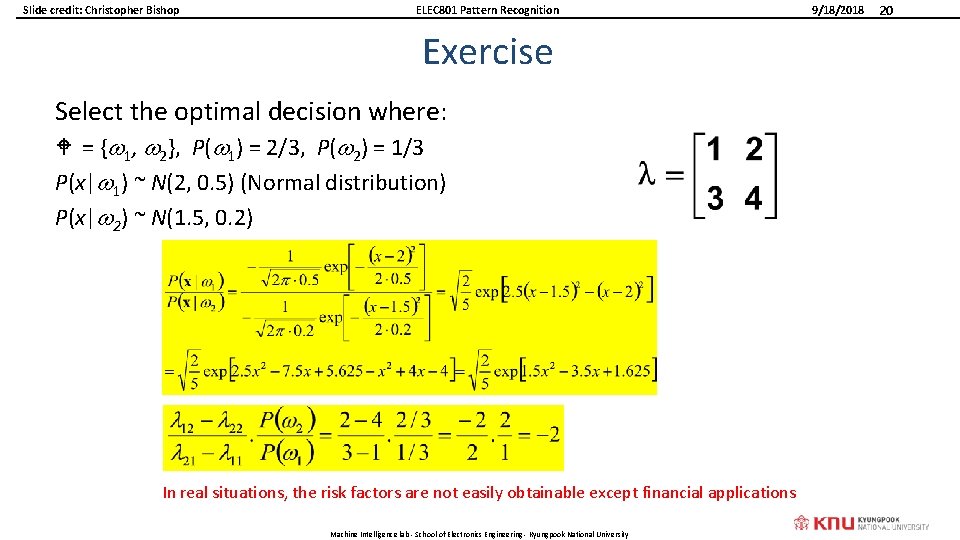

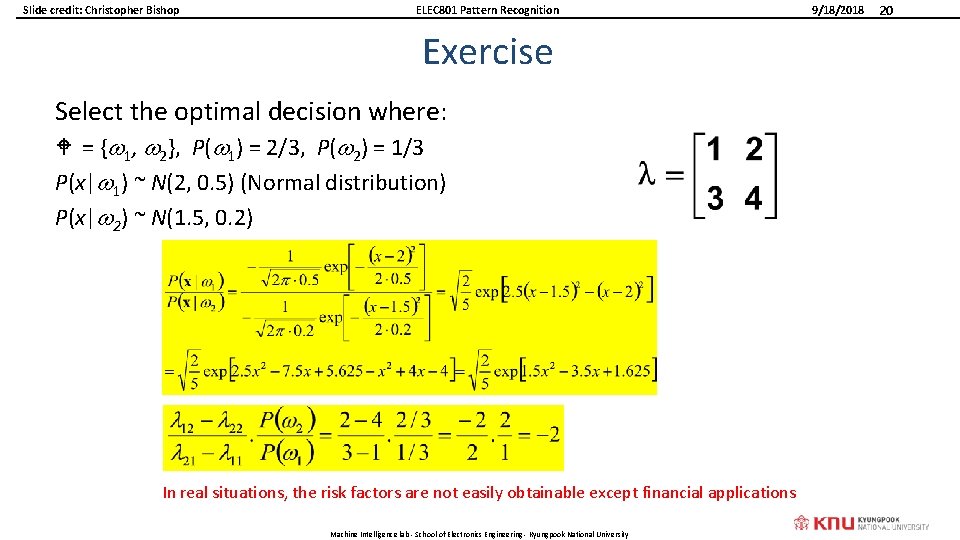

Exercise

Select the optimal decision where:

- Ω = {ω1, ω2}, P(ω1) = 2/3, P(ω2) = 1/3
- P(x | ω1) ~ N(2, 0.5) (Normal distribution)
- P(x | ω2) ~ N(1.5, 0.2)

In real situations, the risk factors are not easily obtainable except in financial applications.

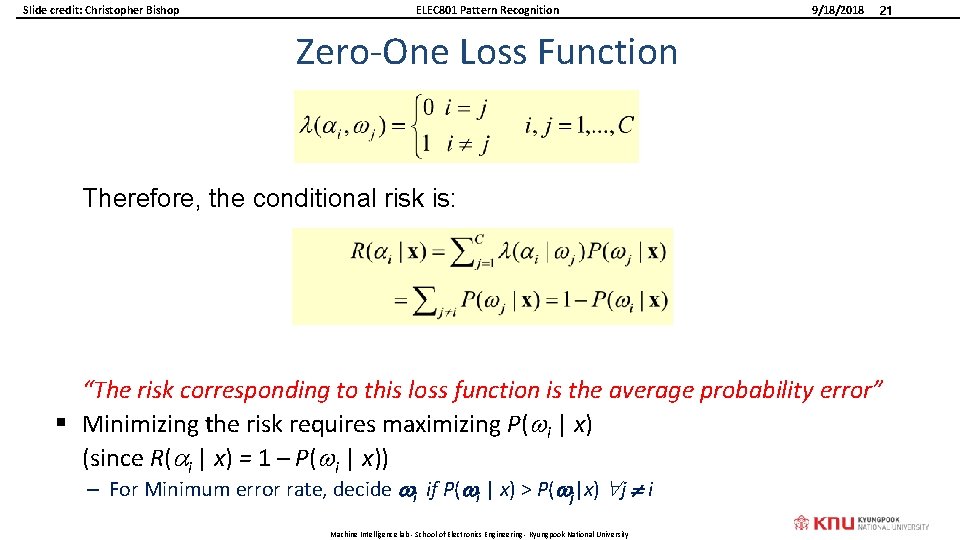

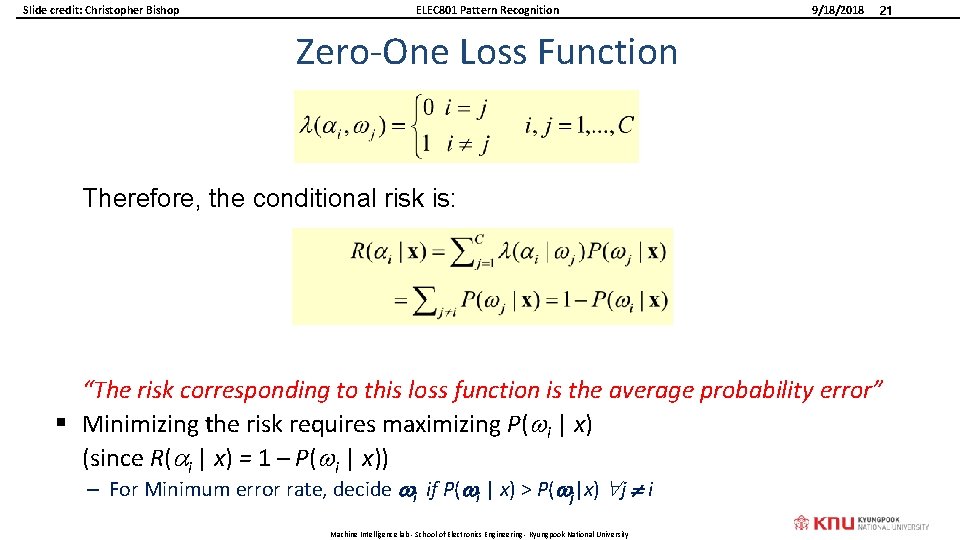

Zero-One Loss Function

With the zero-one loss, λ(αi | ωj) = 0 if i = j and 1 otherwise. Therefore, the conditional risk is:

R(αi | x) = Σ_{j ≠ i} P(ωj | x) = 1 − P(ωi | x)

"The risk corresponding to this loss function is the average probability of error"

- Minimizing the risk requires maximizing P(ωi | x) (since R(αi | x) = 1 − P(ωi | x))
  - For minimum error rate, decide ωi if P(ωi | x) > P(ωj | x) for all j ≠ i

Computing the Probability of Erroneous Decision

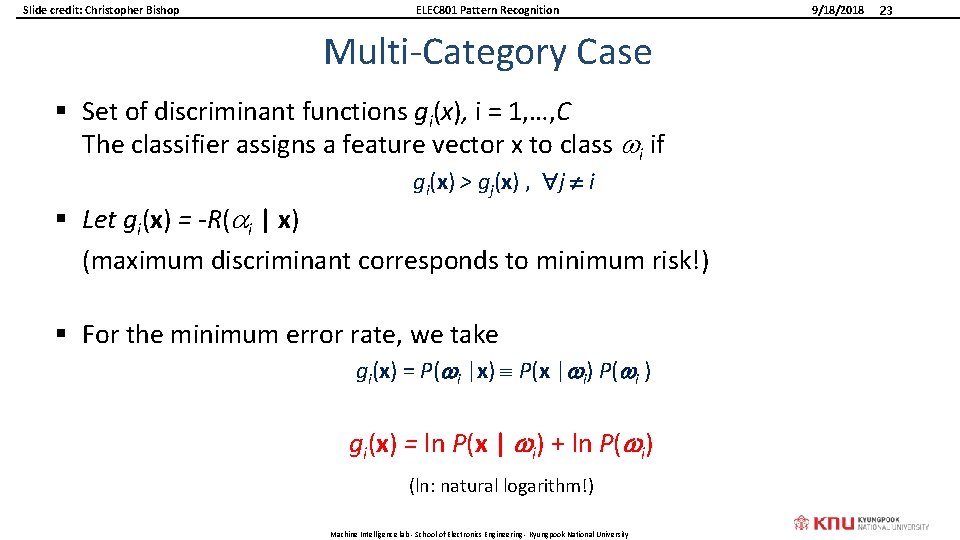

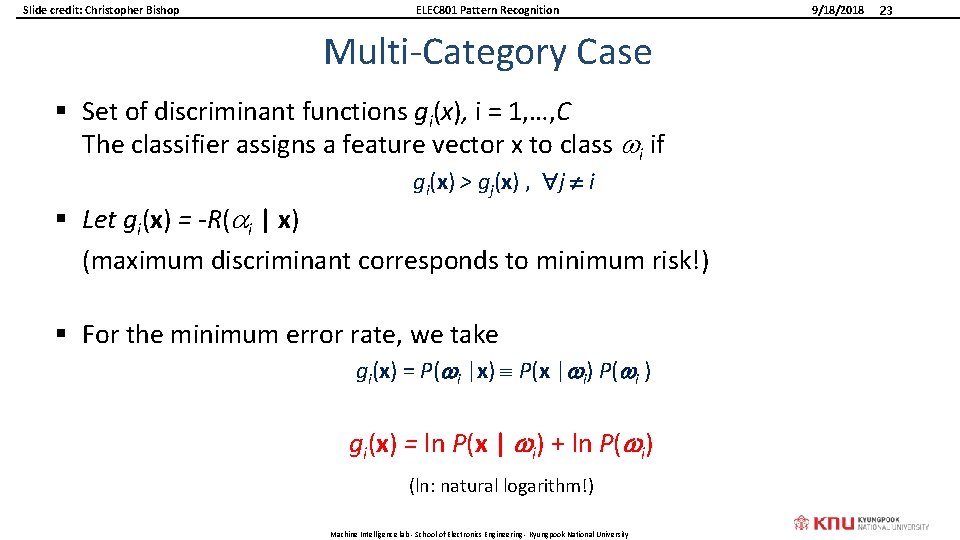

Multi-Category Case

- Set of discriminant functions gi(x), i = 1, …, C
  - The classifier assigns a feature vector x to class ωi if gi(x) > gj(x) for all j ≠ i
- Let gi(x) = −R(αi | x) (maximum discriminant corresponds to minimum risk!)
- For the minimum error rate, we take any of the following equivalent forms:
  - gi(x) = P(ωi | x)
  - gi(x) = P(x | ωi) P(ωi)
  - gi(x) = ln P(x | ωi) + ln P(ωi) (ln: natural logarithm!)

Summary

- The decision rules are characterized by the discriminant functions
  - Bayes' decision rule: gi(x) = −R(αi | x)
  - MAP: gi(x) = P(ωi | x)
  - ML: gi(x) = P(x | ωi)
- In terms of the number of factors taken into account
  - Bayes' decision > MAP > ML

Bayesian Decision for Continuous Features

- Generalization of the preceding ideas
  - Use of more than one feature
  - Use of more than two states of nature (classes)
  - Introduce a loss function which is more general than the probability of error
- Actions other than classification
  - Rejection or verification
  - Refusing to make a decision in close or bad cases!
- The loss function states how costly each action is

PARAMETER ESTIMATION: REGRESSION CASE

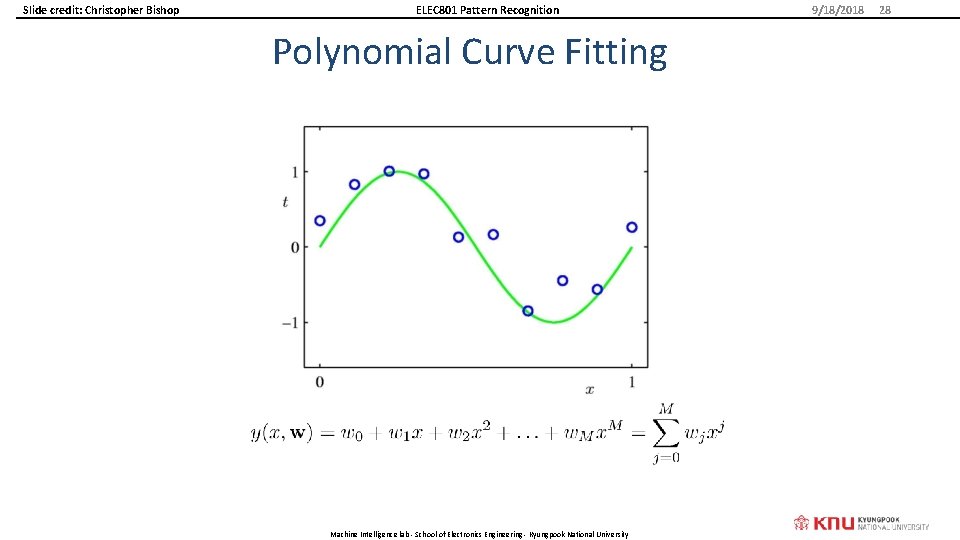

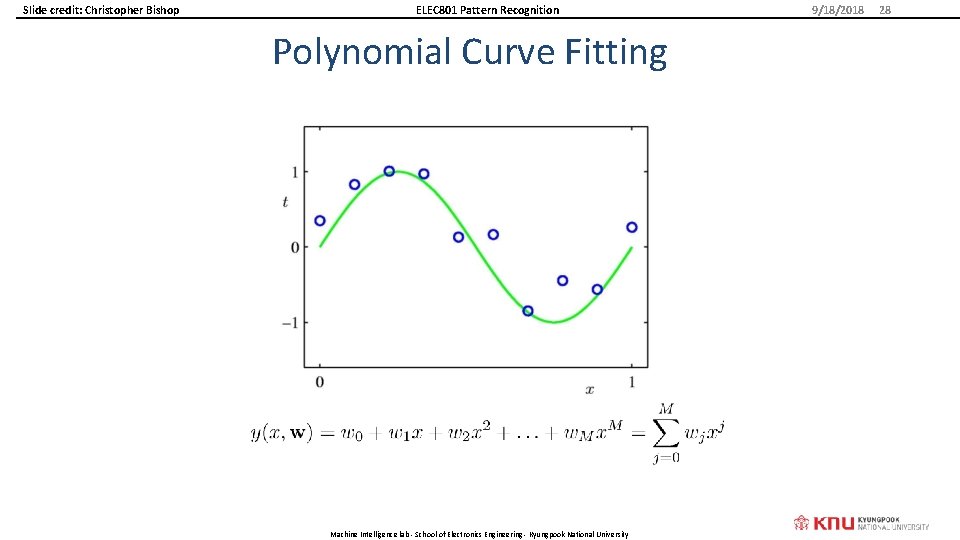

Polynomial Curve Fitting

Slide credit: Christopher Bishop

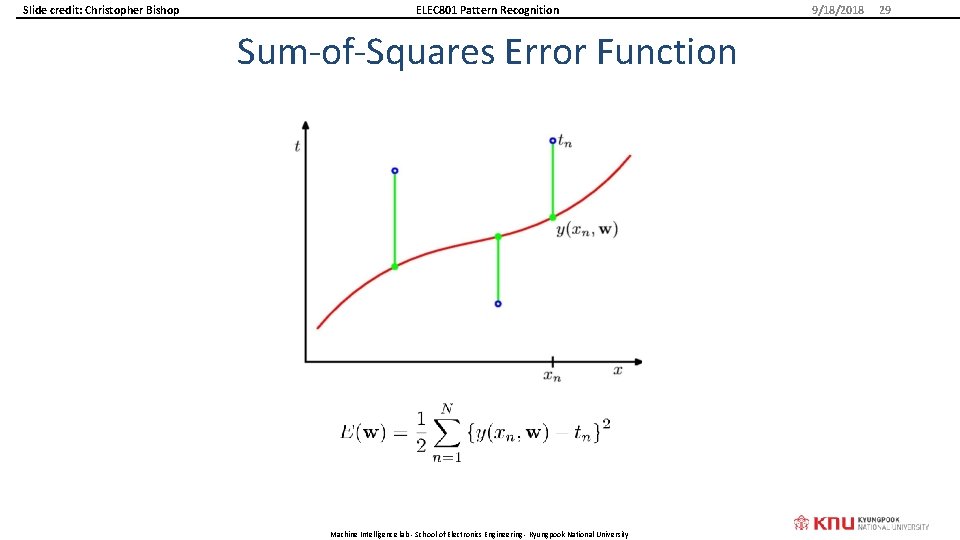

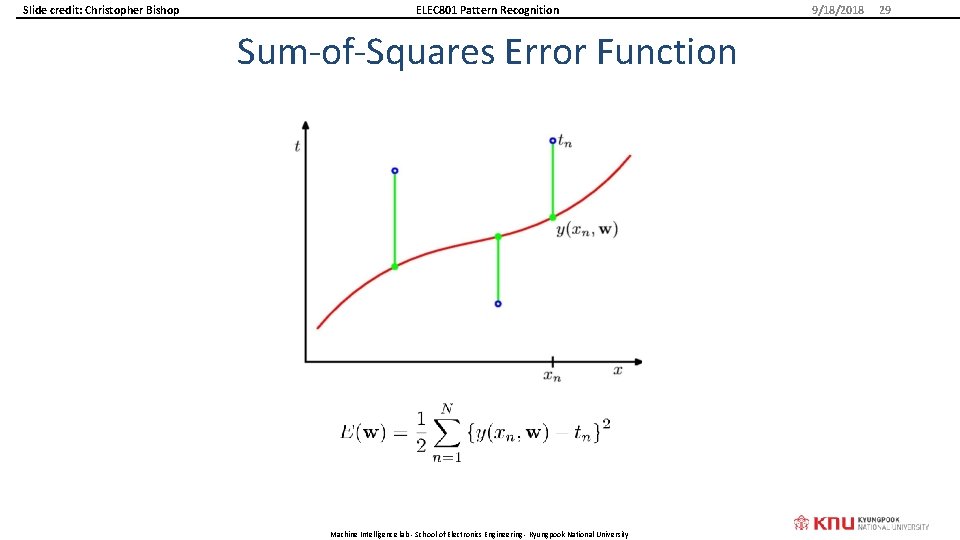

Sum-of-Squares Error Function

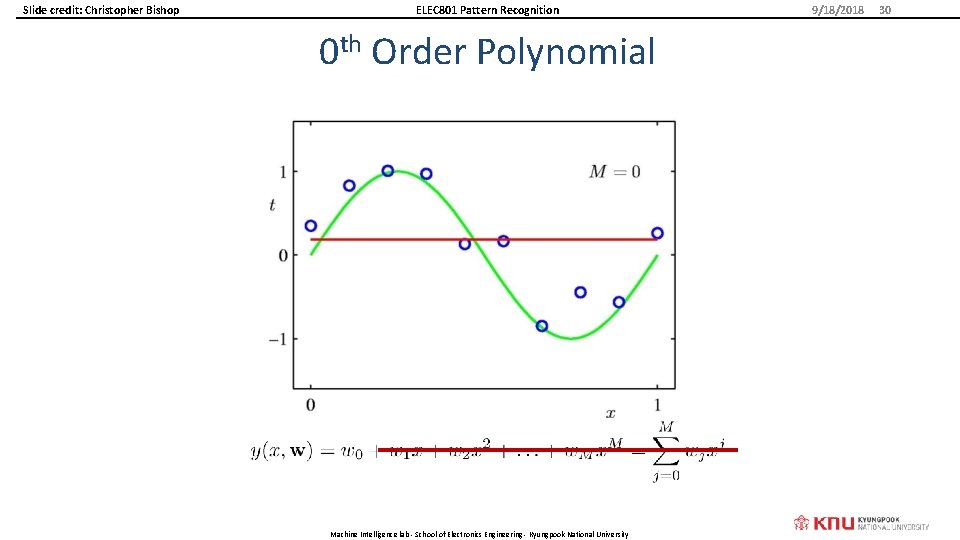

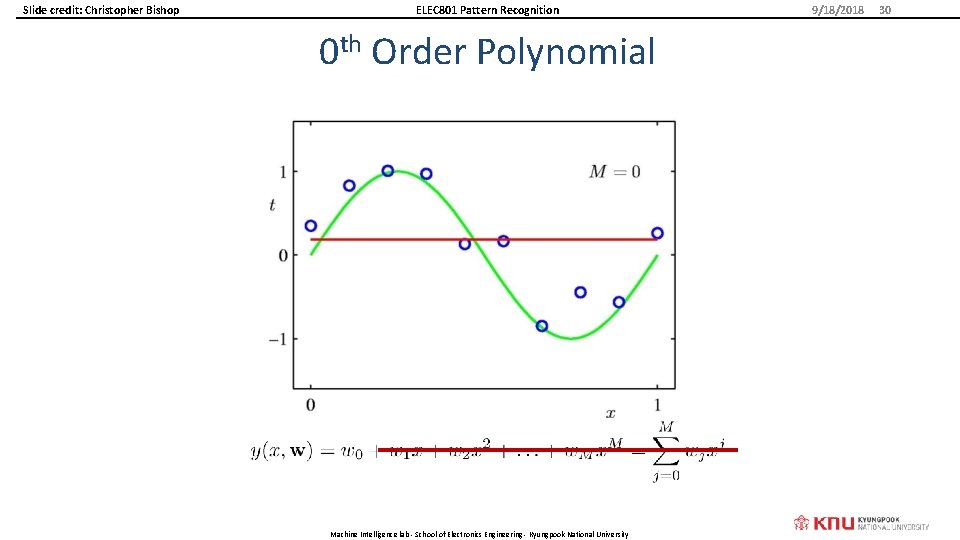

0th Order Polynomial

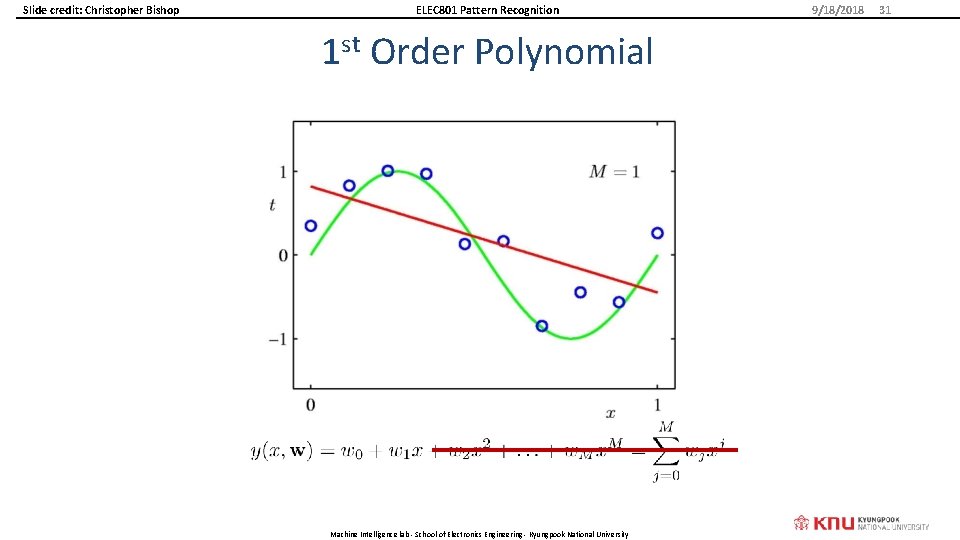

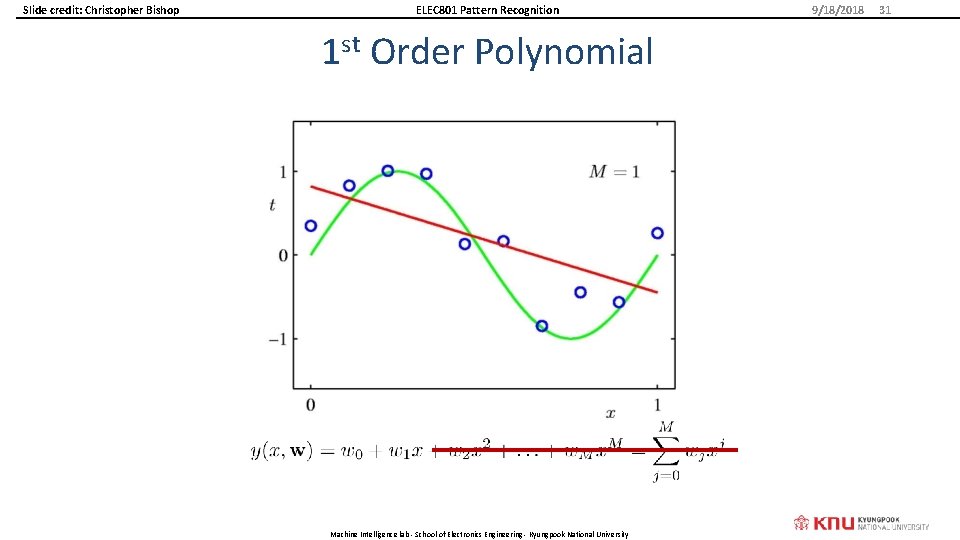

1st Order Polynomial

3rd Order Polynomial

9th Order Polynomial on 10 samples

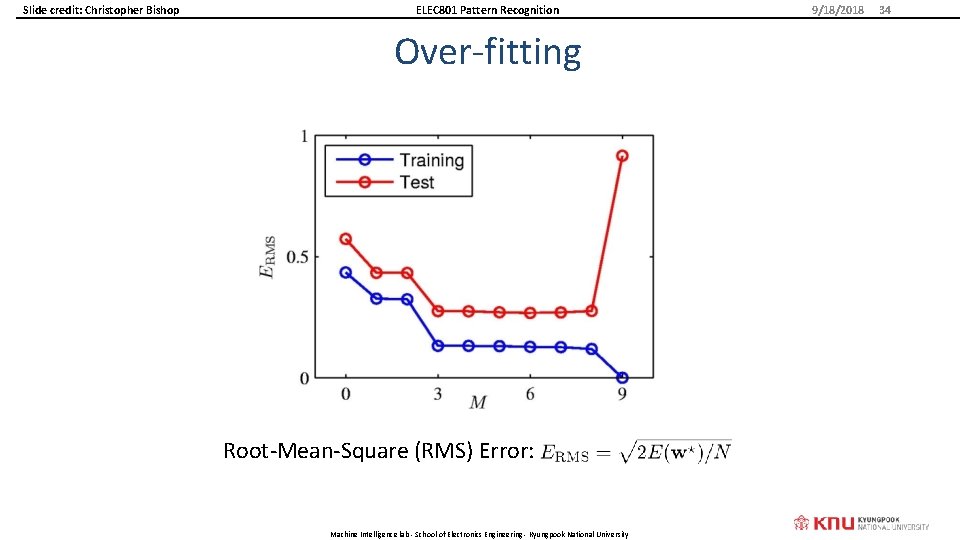

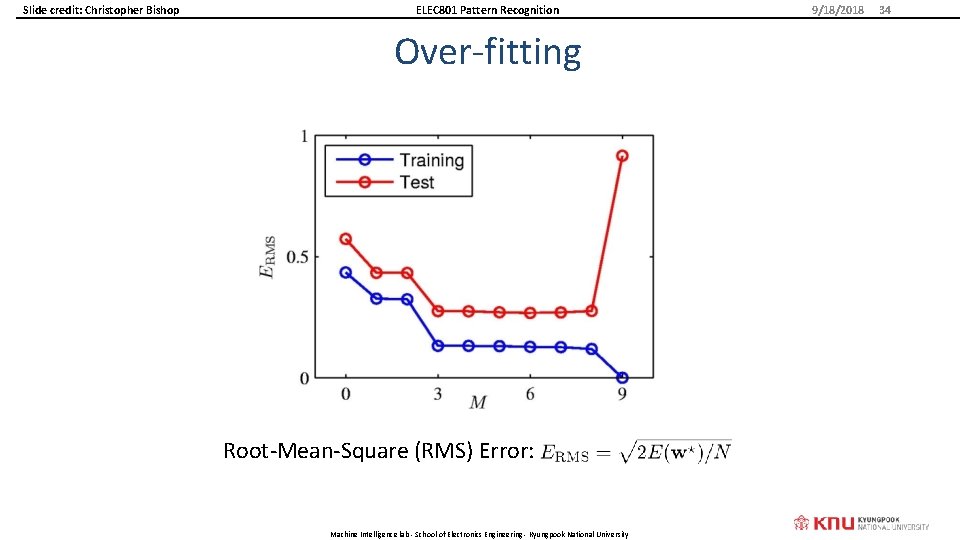

Over-fitting

Root-Mean-Square (RMS) Error: E_RMS = sqrt(2 E(w*) / N), where E is the sum-of-squares error, w* the fitted coefficients, and N the number of samples

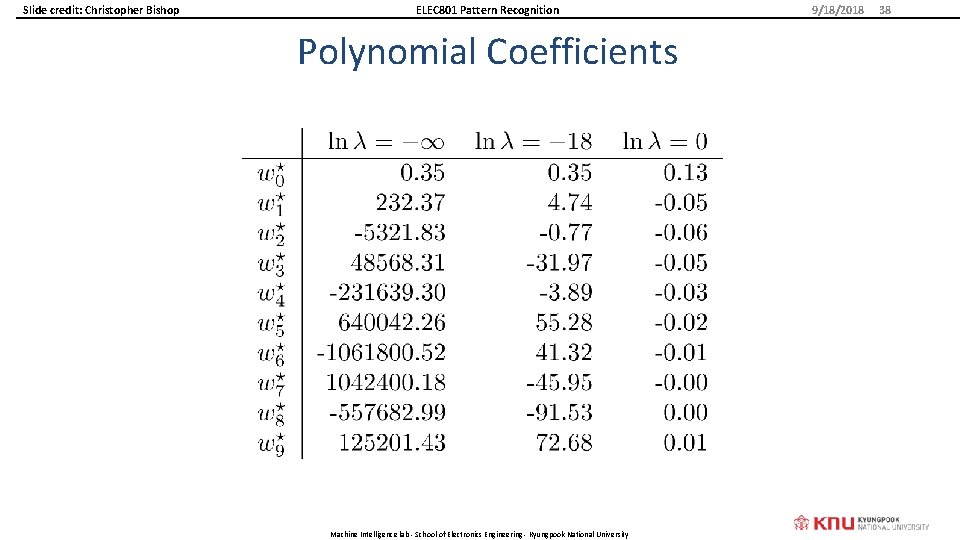

Polynomial Coefficients

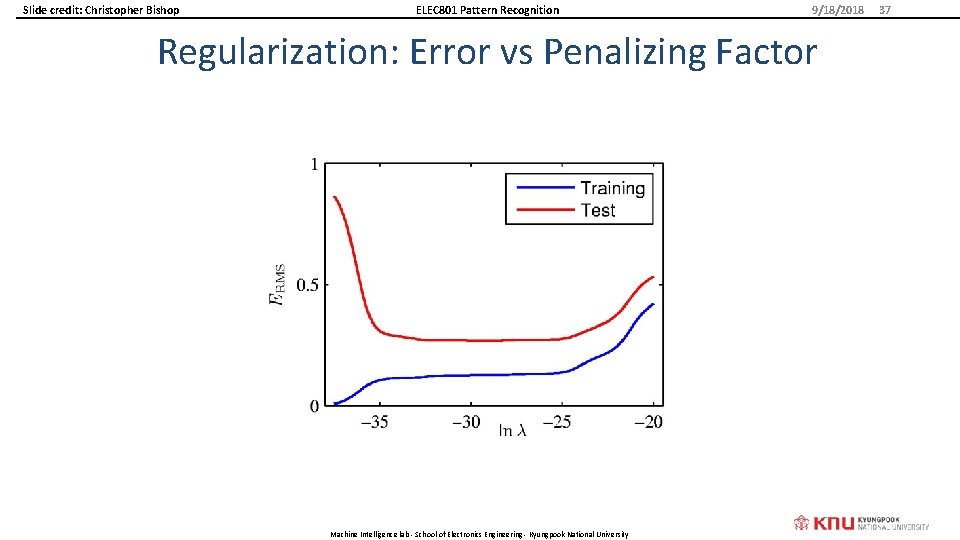

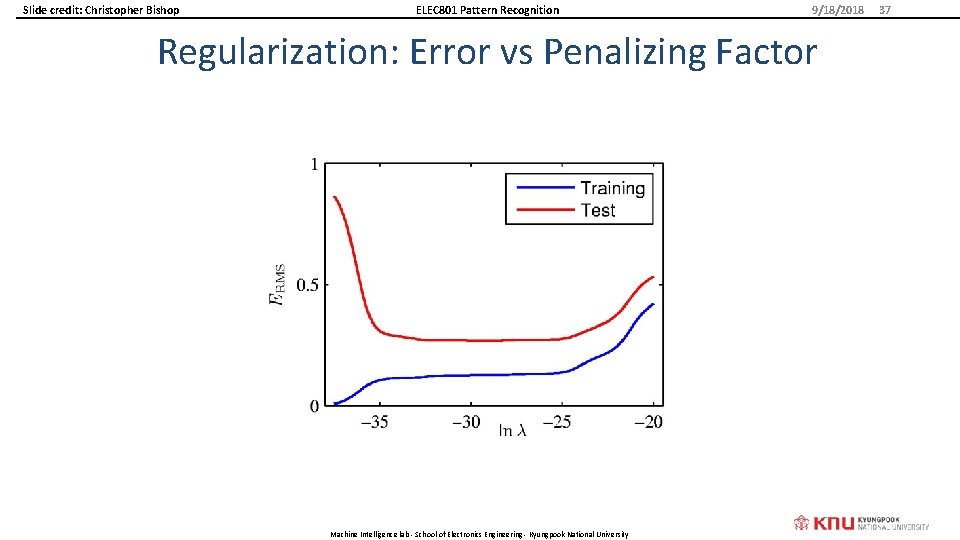

Regularization

- Penalize large coefficient values
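For reference, the penalty is usually written as an extra term added to the sum-of-squares error. The equations below follow the standard polynomial curve-fitting formulation in Bishop's text; the slide's own equation is not preserved in this transcript, so the symbols (coefficients w, targets t_n, order M, sample count N, penalizing factor λ) are assumptions. This λ is unrelated to the loss λij used in the risk-minimization slides.

```latex
% Polynomial model of order M
y(x, \mathbf{w}) = \sum_{j=0}^{M} w_j x^{j}

% Sum-of-squares error over N training pairs (x_n, t_n)
E(\mathbf{w}) = \frac{1}{2} \sum_{n=1}^{N} \bigl\{ y(x_n, \mathbf{w}) - t_n \bigr\}^{2}

% Regularized error: large coefficients are penalized in proportion to \lambda
\tilde{E}(\mathbf{w}) = E(\mathbf{w}) + \frac{\lambda}{2} \lVert \mathbf{w} \rVert^{2},
\qquad \lVert \mathbf{w} \rVert^{2} = \sum_{j=0}^{M} w_j^{2}
```

Setting λ = 0 recovers the unregularized fit, while a very large λ pushes all coefficients toward zero.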

Regularization: Error vs Penalizing Factor

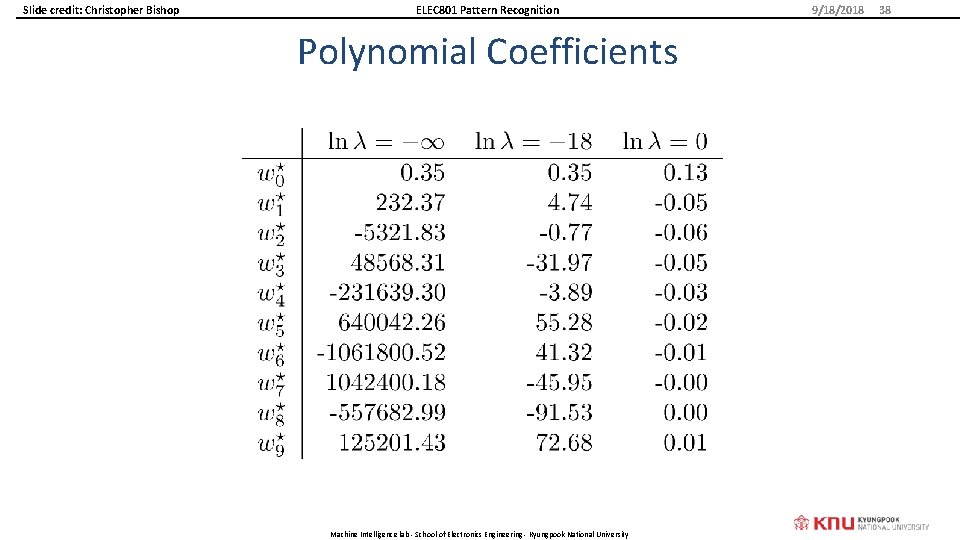

Polynomial Coefficients

Larger Data Set Size: 9th Order Polynomial approximation on 15 samples

Much Larger Data Set Size: 9th Order Polynomial

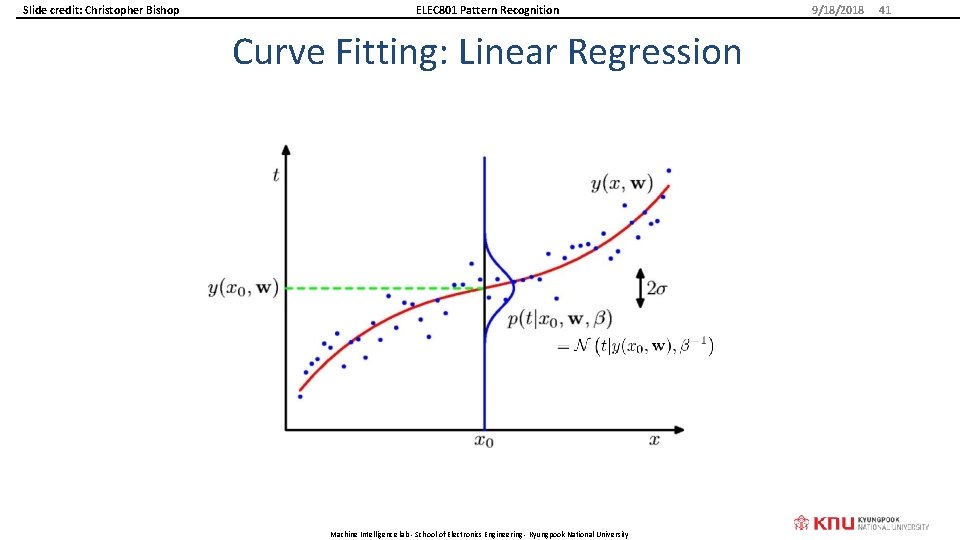

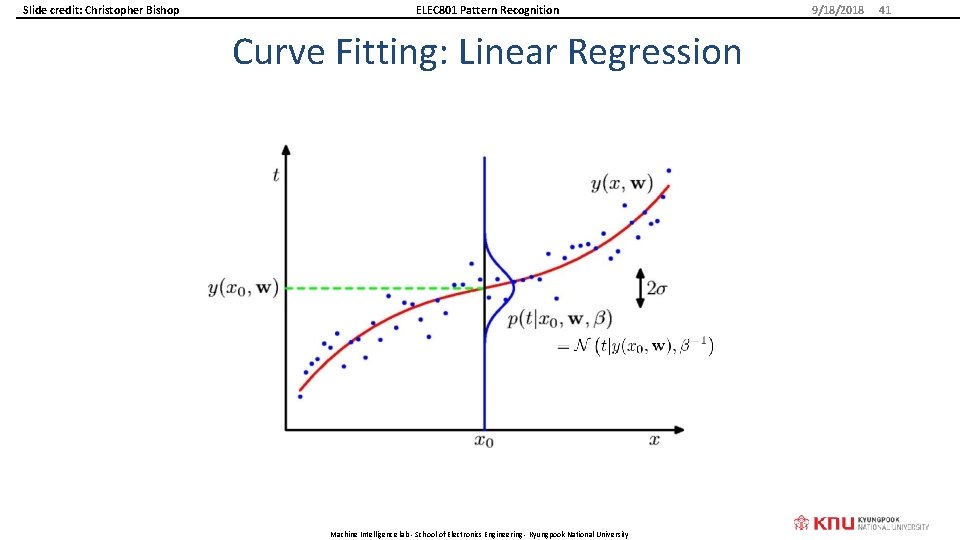

Curve Fitting: Linear Regression

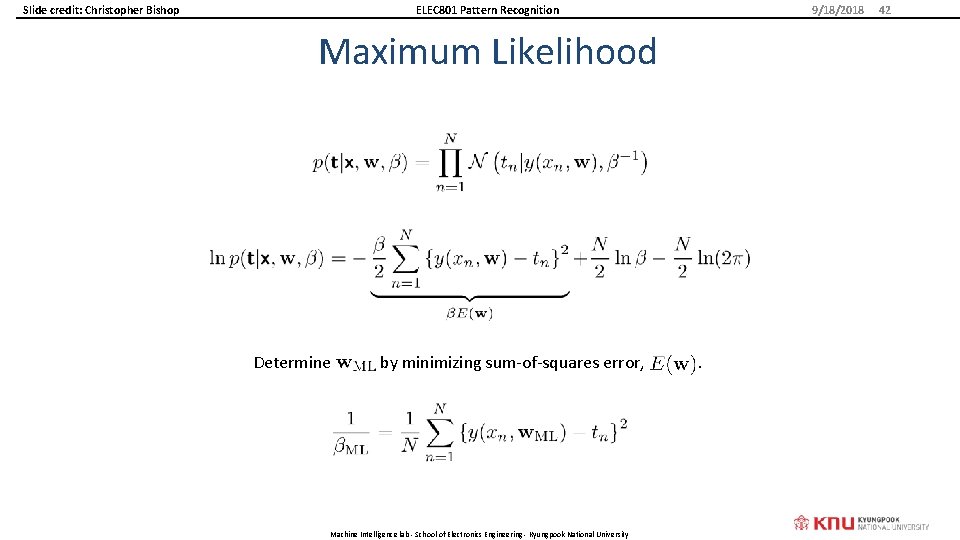

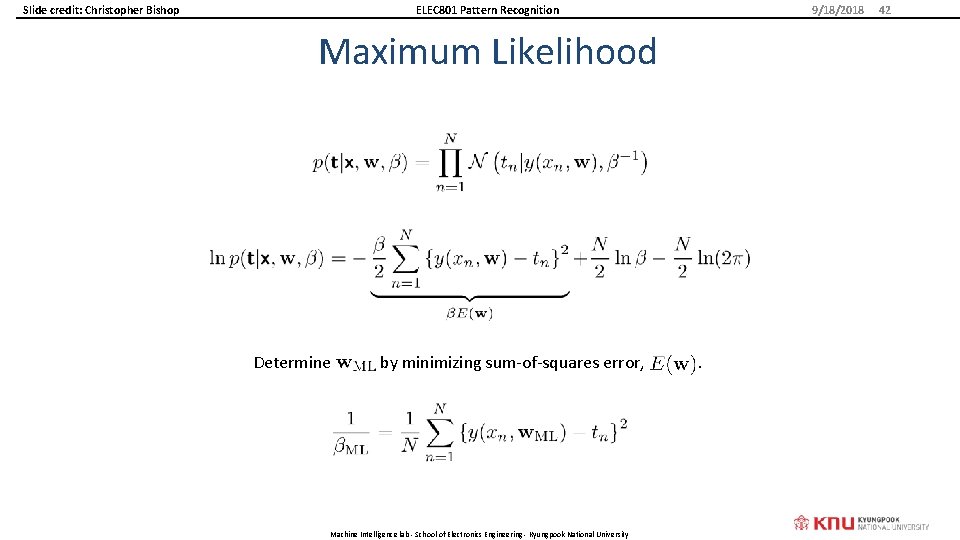

Maximum Likelihood

Determine w_ML by minimizing the sum-of-squares error, E(w).

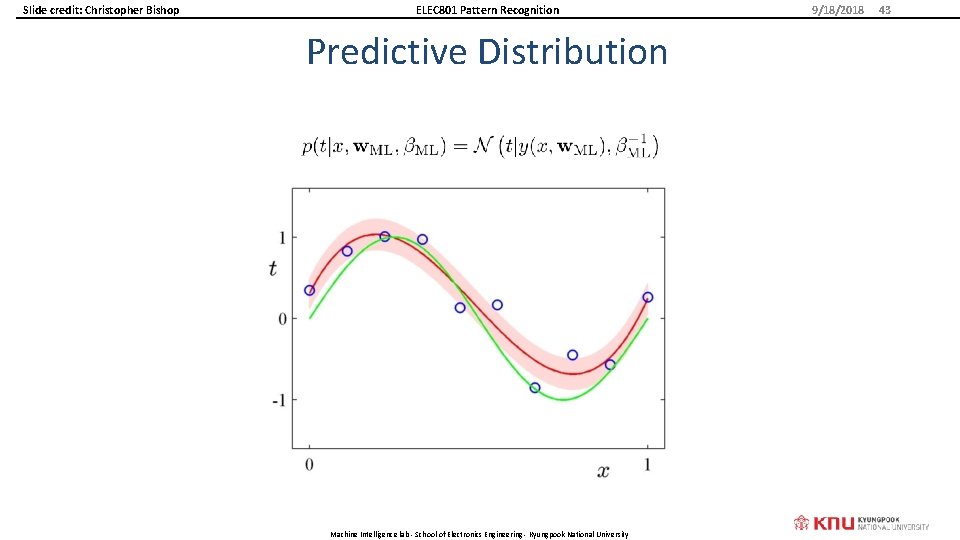

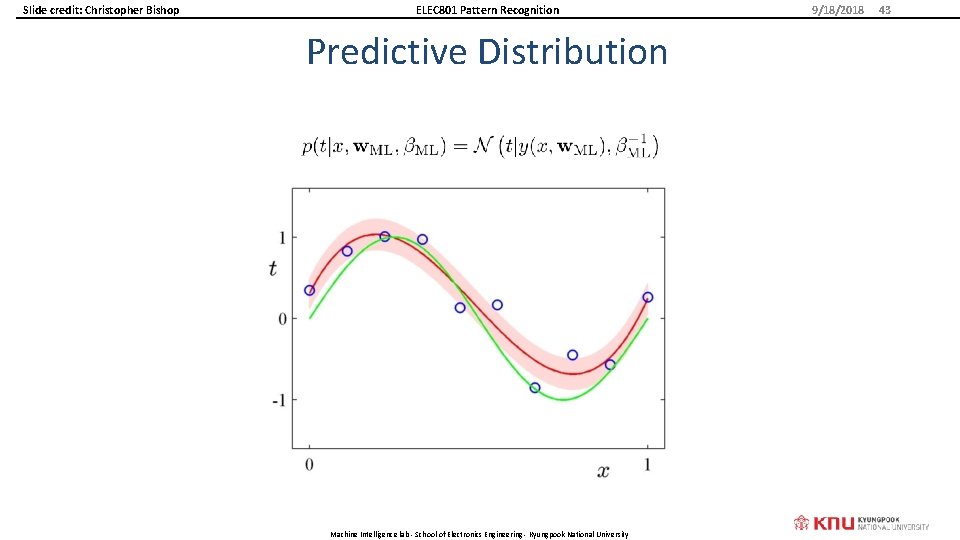

Predictive Distribution

NAÏVE BAYES CLASSIFIER

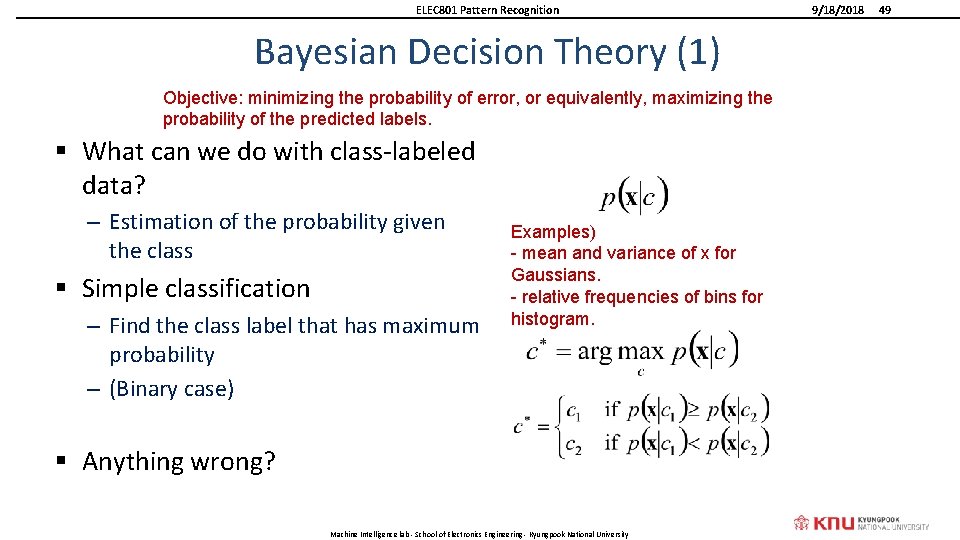

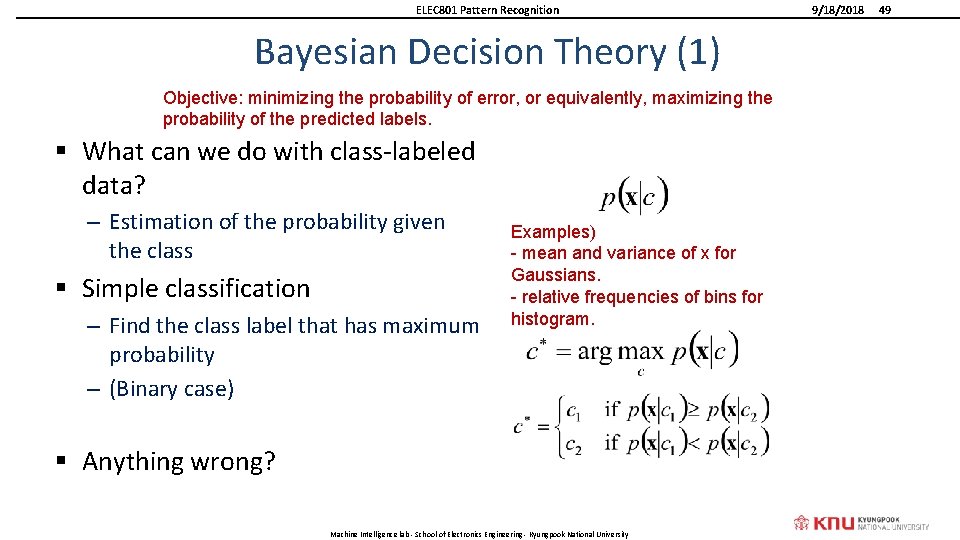

Bayesian Decision Theory (1)

Objective: minimizing the probability of error, or equivalently, maximizing the probability of the predicted labels.

- What can we do with class-labeled data?
  - Estimation of the probability of the data given the class
  - Examples: mean and variance of x for Gaussians; relative frequencies of bins for a histogram
- Simple classification
  - Find the class label that has the maximum probability (binary case shown)
- Anything wrong?

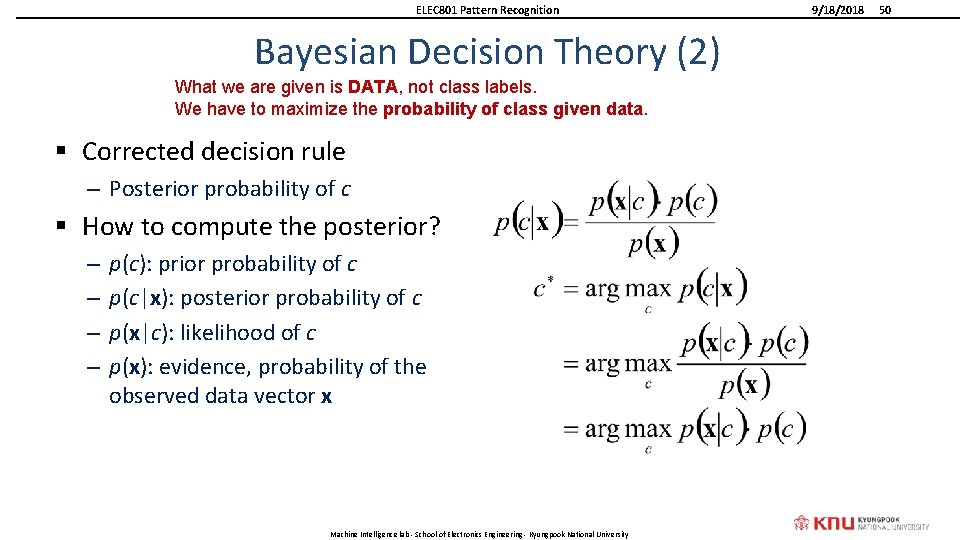

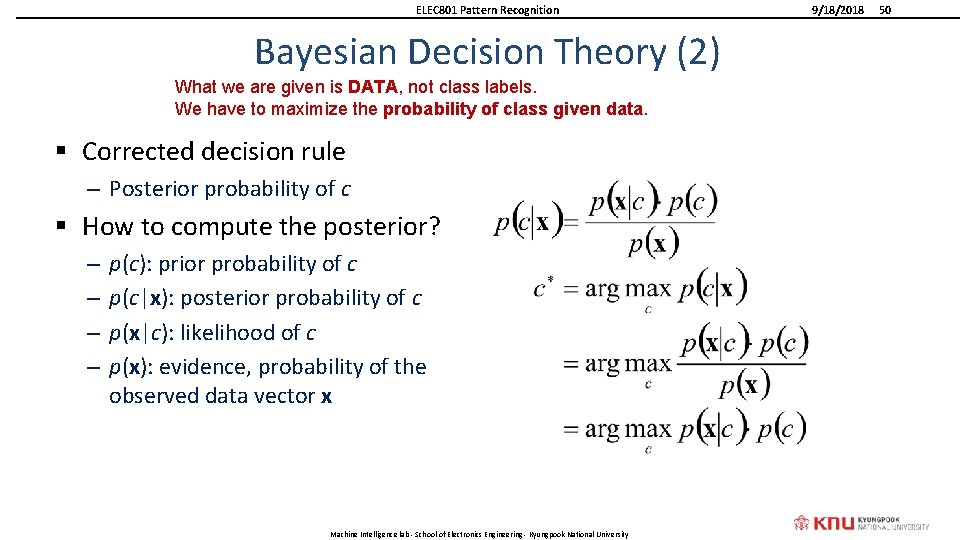

Bayesian Decision Theory (2)

What we are given is DATA, not class labels. We have to maximize the probability of the class given the data.

- Corrected decision rule
  - Choose the class c with the maximum posterior probability p(c|x)
- How to compute the posterior? By Bayes' rule, p(c|x) = p(x|c) p(c) / p(x)
  - p(c): prior probability of c
  - p(c|x): posterior probability of c
  - p(x|c): likelihood of c
  - p(x): evidence, the probability of the observed data vector x

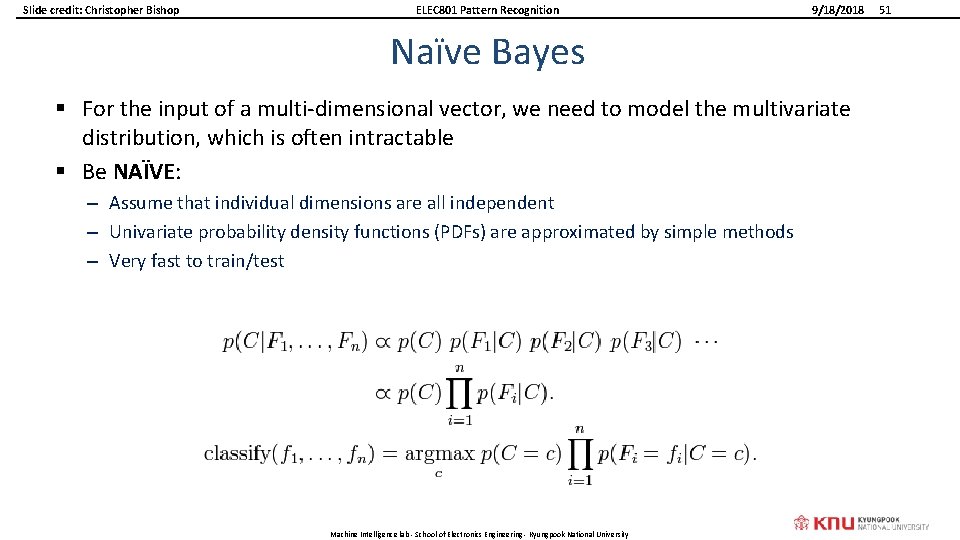

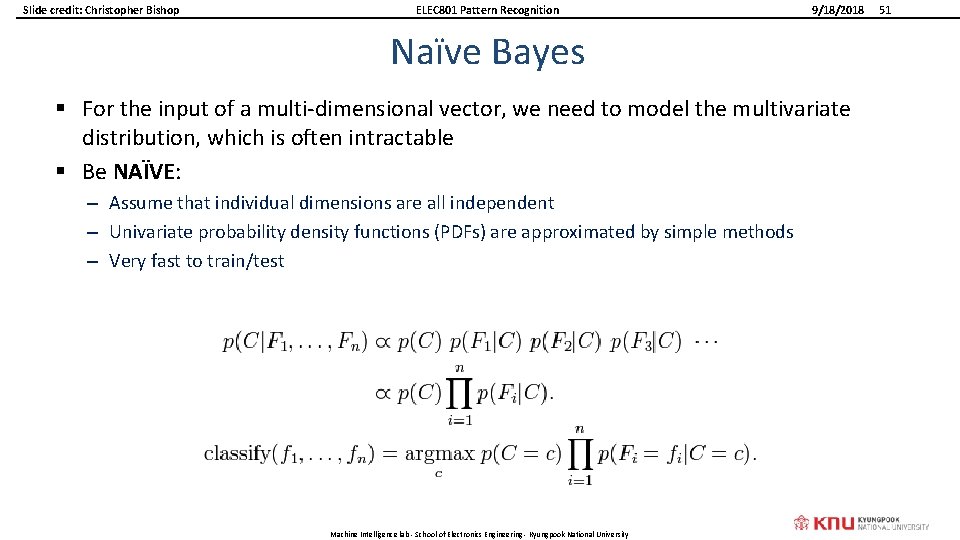

Naïve Bayes

- For a multi-dimensional input vector, we need to model the multivariate distribution, which is often intractable
- Be NAÏVE:
  - Assume that the individual dimensions are all independent
  - Univariate probability density functions (PDFs) are approximated by simple methods
  - Very fast to train/test
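A minimal sketch of the naïve assumption with one univariate Gaussian per feature and per class is given below. The function names, the small variance floor `1e-9`, and the choice of two features (sepal length and petal length, taken from the first three Setosa and Versicolour rows of the Iris listing later in these slides) are assumptions made for illustration only.

```python
import math
from collections import defaultdict

def fit_gaussian_nb(X, y):
    """Return {label: (prior, means, variances)}, one Gaussian per feature."""
    groups = defaultdict(list)
    for row, c in zip(X, y):
        groups[c].append(row)
    model = {}
    for c, rows in groups.items():
        n = len(rows)
        cols = list(zip(*rows))
        means = [sum(col) / n for col in cols]
        # Small floor keeps the variance strictly positive (an assumption here).
        variances = [sum((v - m) ** 2 for v in col) / n + 1e-9
                     for col, m in zip(cols, means)]
        model[c] = (n / len(X), means, variances)
    return model

def log_scores(model, x):
    """ln p(c) + sum_d ln p(x_d | c): independence turns the product into a sum."""
    scores = {}
    for c, (prior, means, variances) in model.items():
        s = math.log(prior)
        for v, m, var in zip(x, means, variances):
            s += -0.5 * math.log(2 * math.pi * var) - (v - m) ** 2 / (2 * var)
        scores[c] = s
    return scores

def predict(model, x):
    scores = log_scores(model, x)
    return max(scores, key=scores.get)

# (sepal length, petal length) of three Setosa (1) and three Versicolour (2) rows.
X = [[5.1, 1.4], [4.9, 1.4], [4.7, 1.3], [7.0, 4.7], [6.4, 4.5], [6.9, 4.9]]
y = [1, 1, 1, 2, 2, 2]
model = fit_gaussian_nb(X, y)
```

Working with log scores avoids numerical underflow when many per-feature densities are multiplied; the evidence p(x) is omitted because it does not change which class is largest.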

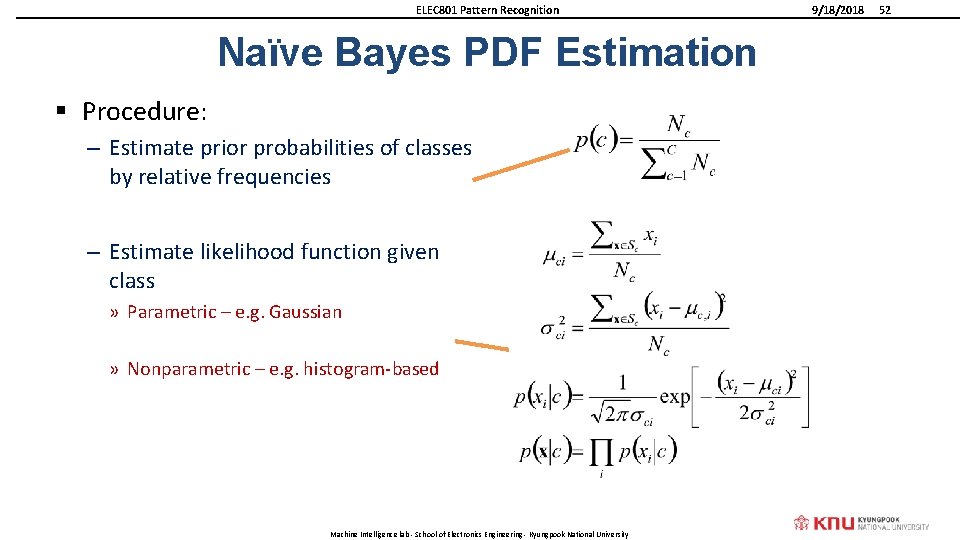

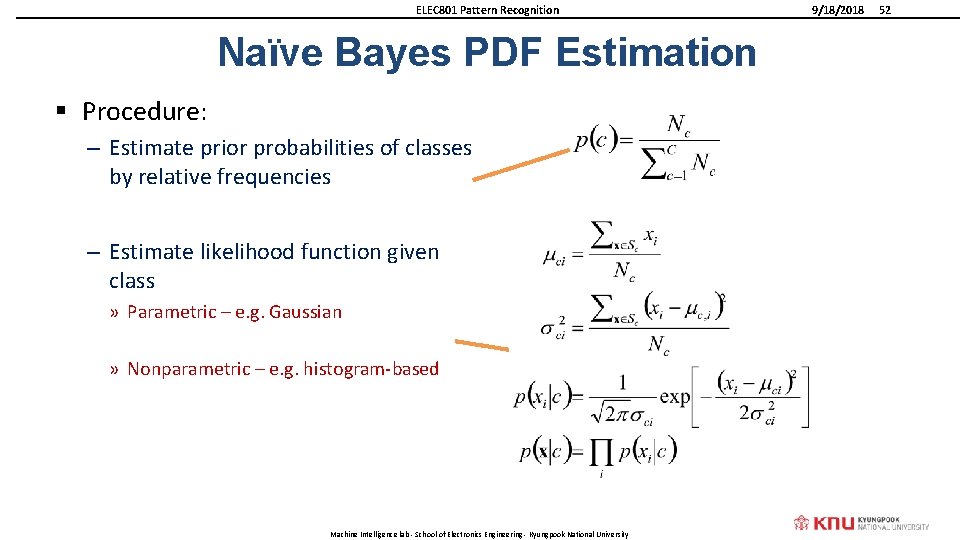

Naïve Bayes PDF Estimation

- Procedure:
  - Estimate the prior probabilities of the classes by relative frequencies
  - Estimate the likelihood function given the class
    - Parametric, e.g. Gaussian
    - Nonparametric, e.g. histogram-based

Other Parametric PDF models

In most cases, the Normal (Gaussian) distribution is adopted.

PROGRAMMING PRACTICE: IRIS FLOWER DATASET

Naïve Bayes implementation on the IRIS dataset

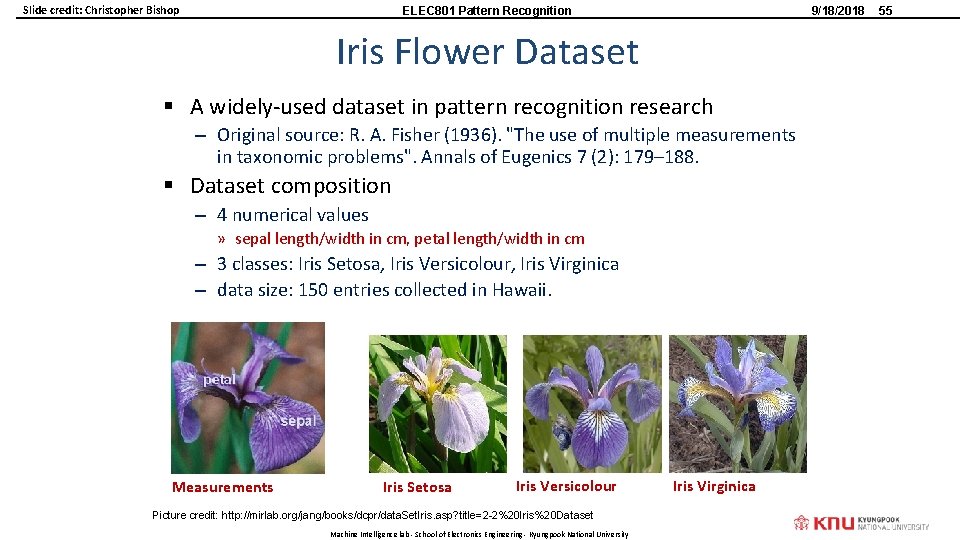

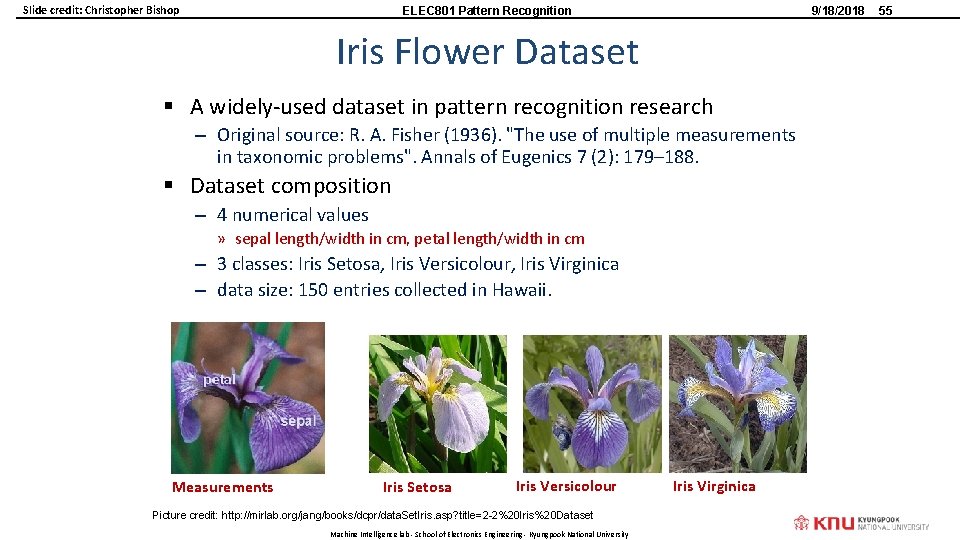

Iris Flower Dataset

- A widely-used dataset in pattern recognition research
  - Original source: R. A. Fisher (1936). "The use of multiple measurements in taxonomic problems". Annals of Eugenics 7 (2): 179–188.
- Dataset composition
  - 4 numerical values (measurements)
    - sepal length/width in cm, petal length/width in cm
  - 3 classes: Iris Setosa, Iris Versicolour, Iris Virginica
  - data size: 150 entries collected in Hawaii.

[Figure: pictures of Iris Setosa, Iris Versicolour and Iris Virginica]

Picture credit: http://mirlab.org/jang/books/dcpr/dataSetIris.asp?title=2-2%20Iris%20Dataset

Iris Dataset Distribution

[Figure: distributions of Sepal Length, Sepal Width, Petal Length and Petal Width; 1 (blue): Setosa, 2 (red): Versicolour, 3 (green): Virginica]

Picture credit: https://www.projectrhea.org/rhea/index.php/Naive_Bayes_OldKiwi

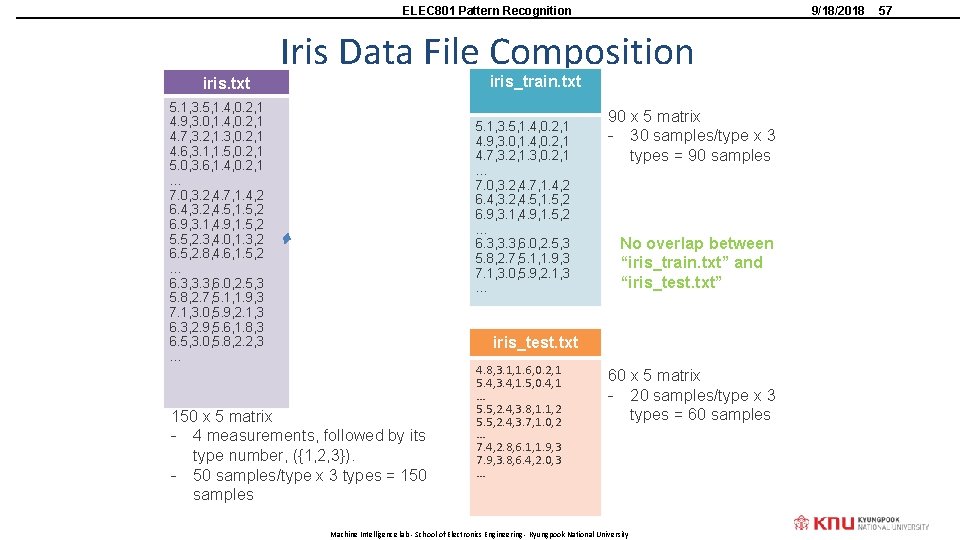

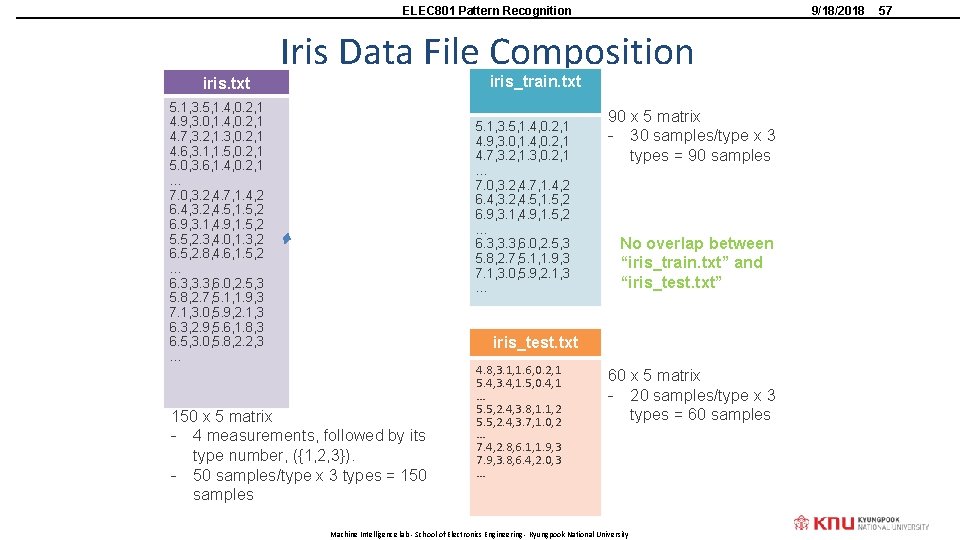

Iris Data File Composition

iris.txt: 150 x 5 matrix. Each row has 4 measurements, followed by its type number ({1, 2, 3}). 50 samples/type x 3 types = 150 samples.

    5.1, 3.5, 1.4, 0.2, 1
    4.9, 3.0, 1.4, 0.2, 1
    4.7, 3.2, 1.3, 0.2, 1
    4.6, 3.1, 1.5, 0.2, 1
    5.0, 3.6, 1.4, 0.2, 1
    …
    7.0, 3.2, 4.7, 1.4, 2
    6.4, 3.2, 4.5, 1.5, 2
    6.9, 3.1, 4.9, 1.5, 2
    5.5, 2.3, 4.0, 1.3, 2
    6.5, 2.8, 4.6, 1.5, 2
    …
    6.3, 3.3, 6.0, 2.5, 3
    5.8, 2.7, 5.1, 1.9, 3
    7.1, 3.0, 5.9, 2.1, 3
    6.3, 2.9, 5.6, 1.8, 3
    6.5, 3.0, 5.8, 2.2, 3
    …

iris_train.txt: 90 x 5 matrix. 30 samples/type x 3 types = 90 samples.

    5.1, 3.5, 1.4, 0.2, 1
    4.9, 3.0, 1.4, 0.2, 1
    4.7, 3.2, 1.3, 0.2, 1
    …
    7.0, 3.2, 4.7, 1.4, 2
    6.4, 3.2, 4.5, 1.5, 2
    6.9, 3.1, 4.9, 1.5, 2
    …
    6.3, 3.3, 6.0, 2.5, 3
    5.8, 2.7, 5.1, 1.9, 3
    7.1, 3.0, 5.9, 2.1, 3
    …

iris_test.txt: 60 x 5 matrix. 20 samples/type x 3 types = 60 samples.

    4.8, 3.1, 1.6, 0.2, 1
    5.4, 3.4, 1.5, 0.4, 1
    …
    5.5, 2.4, 3.8, 1.1, 2
    5.5, 2.4, 3.7, 1.0, 2
    …
    7.4, 2.8, 6.1, 1.9, 3
    7.9, 3.8, 6.4, 2.0, 3
    …

No overlap between "iris_train.txt" and "iris_test.txt".

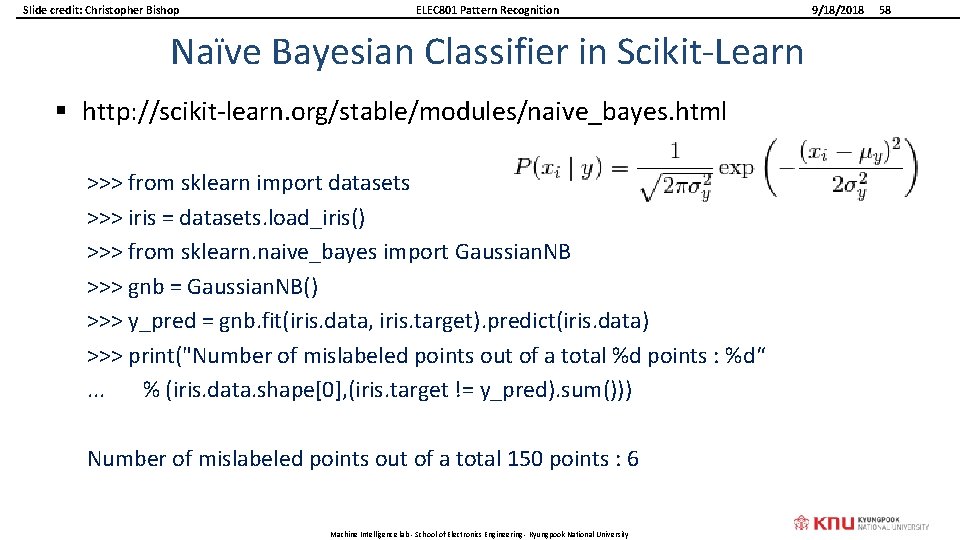

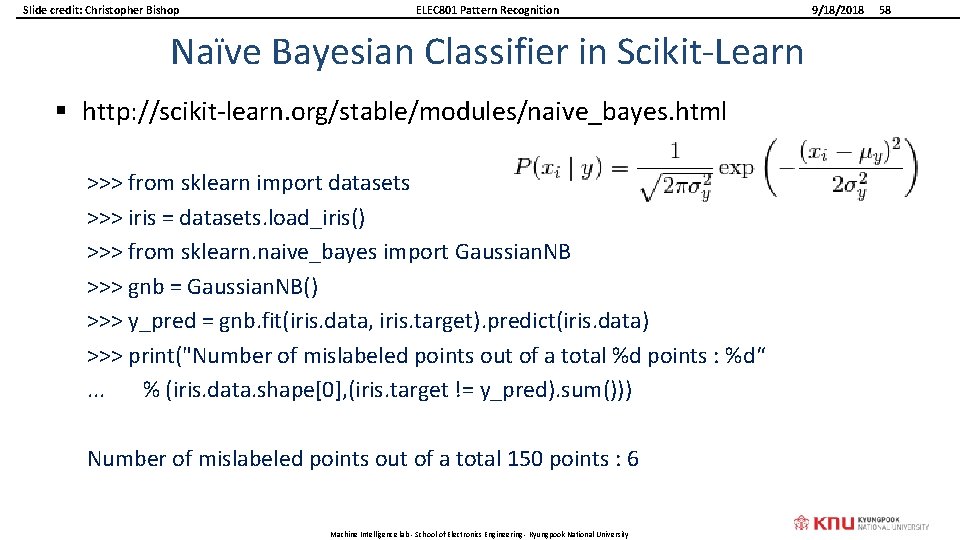

Naïve Bayesian Classifier in Scikit-Learn

See http://scikit-learn.org/stable/modules/naive_bayes.html

    >>> from sklearn import datasets
    >>> iris = datasets.load_iris()
    >>> from sklearn.naive_bayes import GaussianNB
    >>> gnb = GaussianNB()
    >>> # fit and predict on the same 150 samples (training error, not test error)
    >>> y_pred = gnb.fit(iris.data, iris.target).predict(iris.data)
    >>> print("Number of mislabeled points out of a total %d points : %d"
    ...       % (iris.data.shape[0], (iris.target != y_pred).sum()))
    Number of mislabeled points out of a total 150 points : 6

K-NEAREST NEIGHBORS CLASSIFIER (KNN)

"Probably, the simplest classifier"

Classification

- Assign the input vector to one of two or more classes
- Any decision rule divides the input space into decision regions separated by decision boundaries
  - Region-based vs boundary-based classifiers

Slide credit: L. Lazebnik

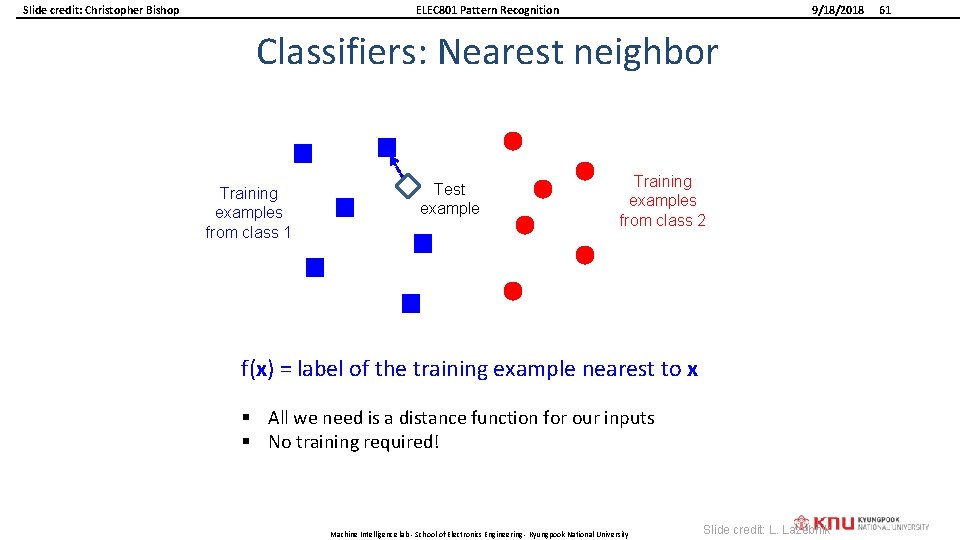

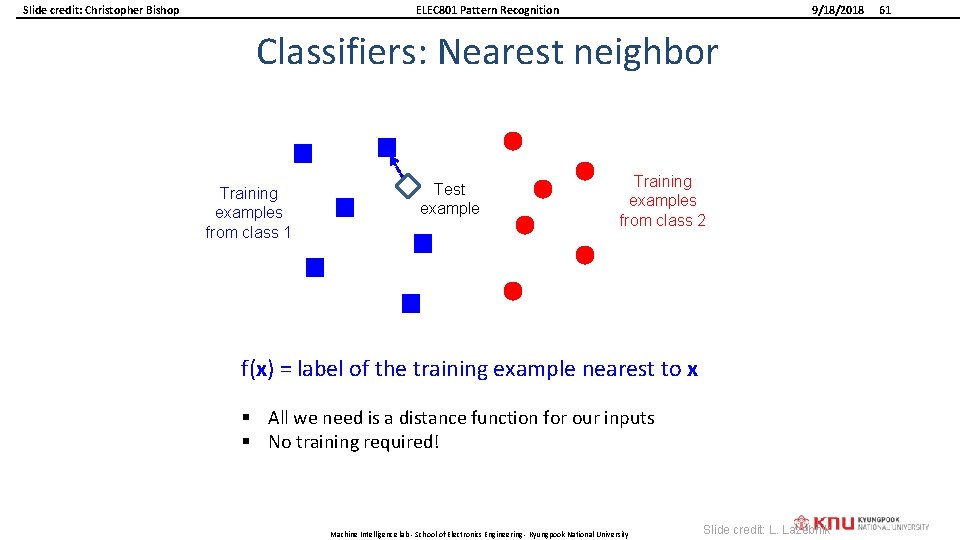

Classifiers: Nearest neighbor

[Figure: a test example among training examples from class 1 and training examples from class 2]

f(x) = label of the training example nearest to x

- All we need is a distance function for our inputs
- No training required!

Slide credit: L. Lazebnik

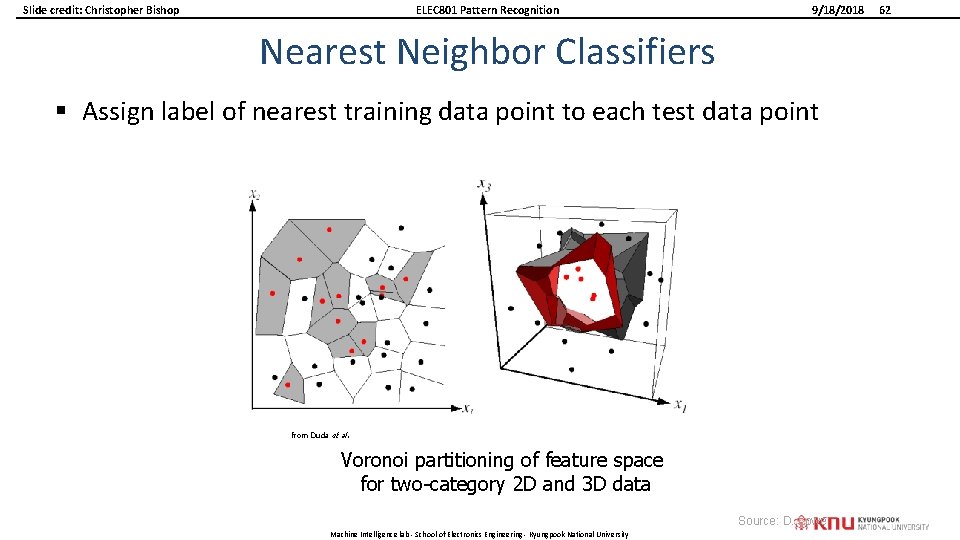

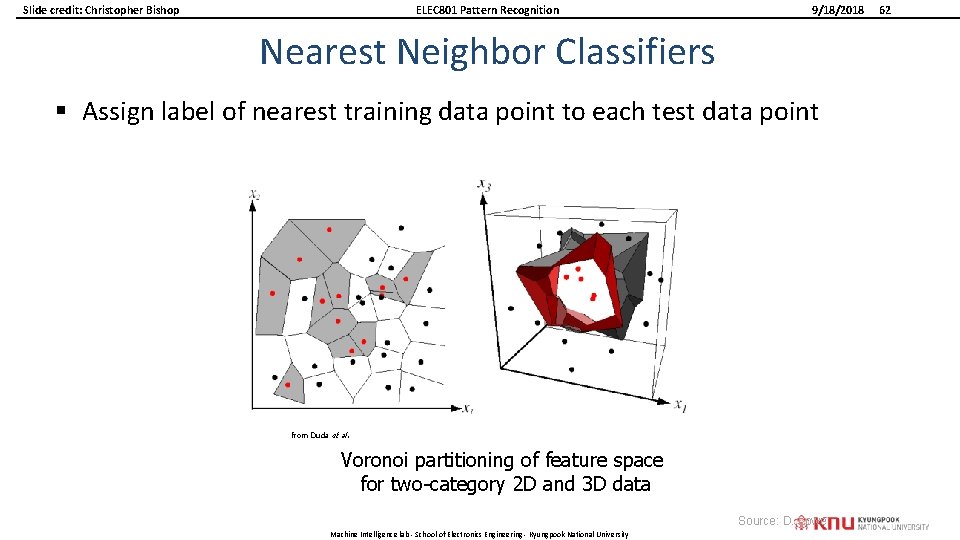

Nearest Neighbor Classifiers

- Assign the label of the nearest training data point to each test data point

[Figure, from Duda et al.: Voronoi partitioning of feature space for two-category 2D and 3D data]

Source: D. Lowe

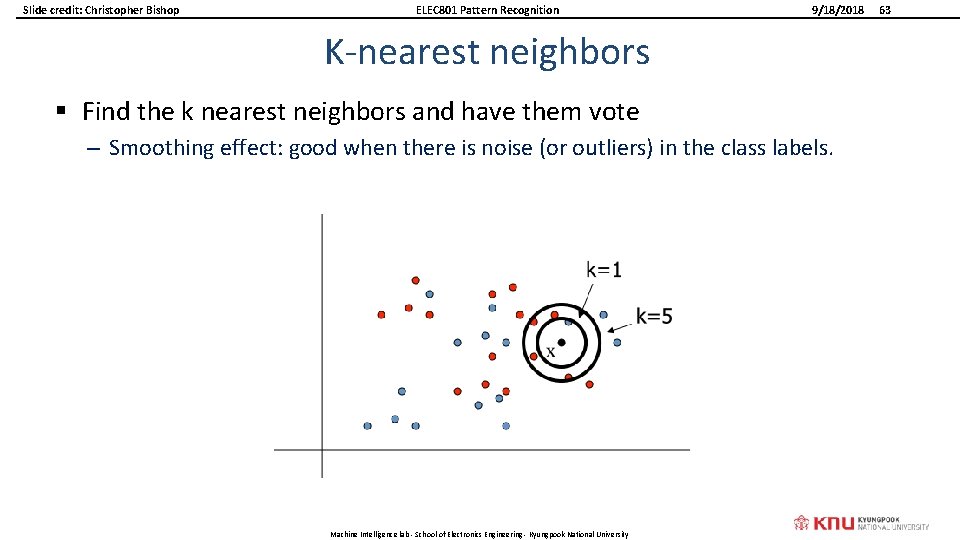

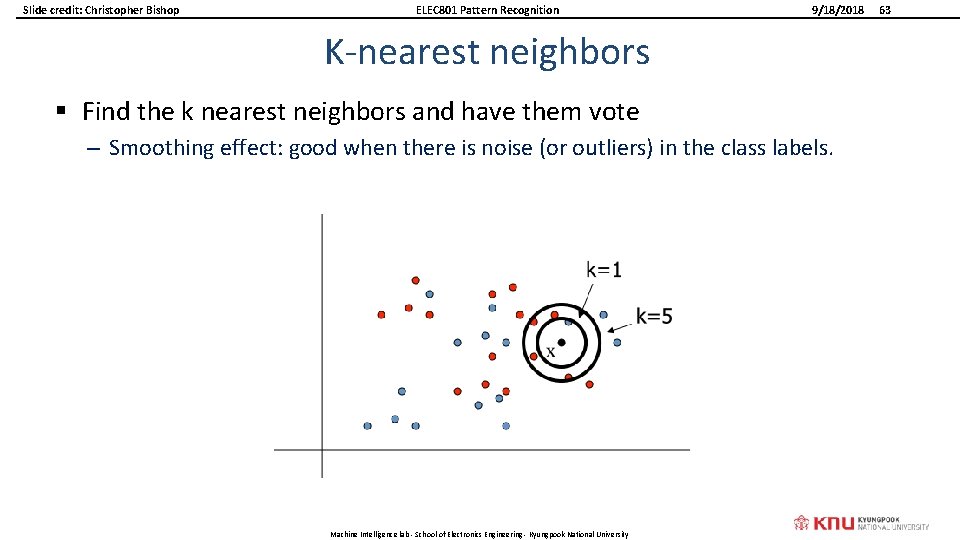

K-nearest neighbors

- Find the k nearest neighbors and have them vote
  - Smoothing effect: good when there is noise (or outliers) in the class labels.

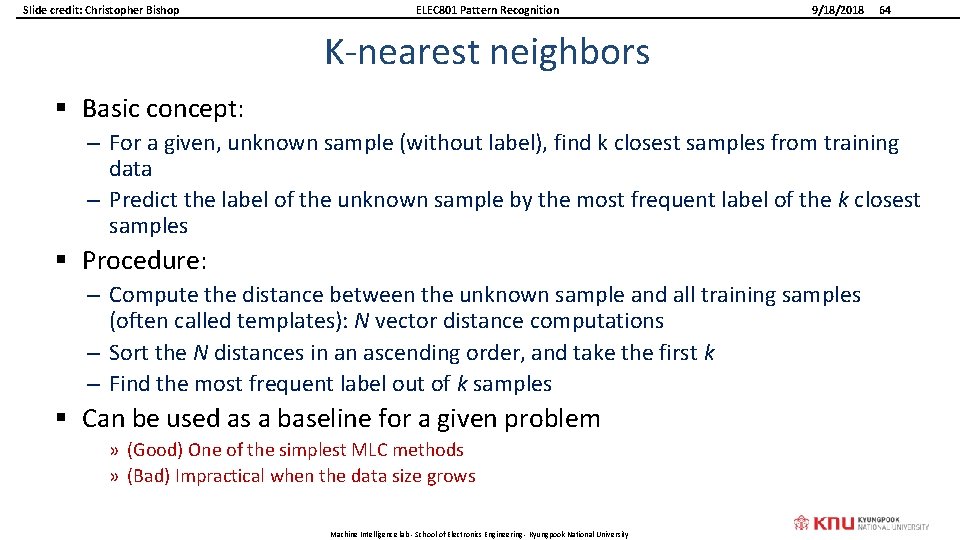

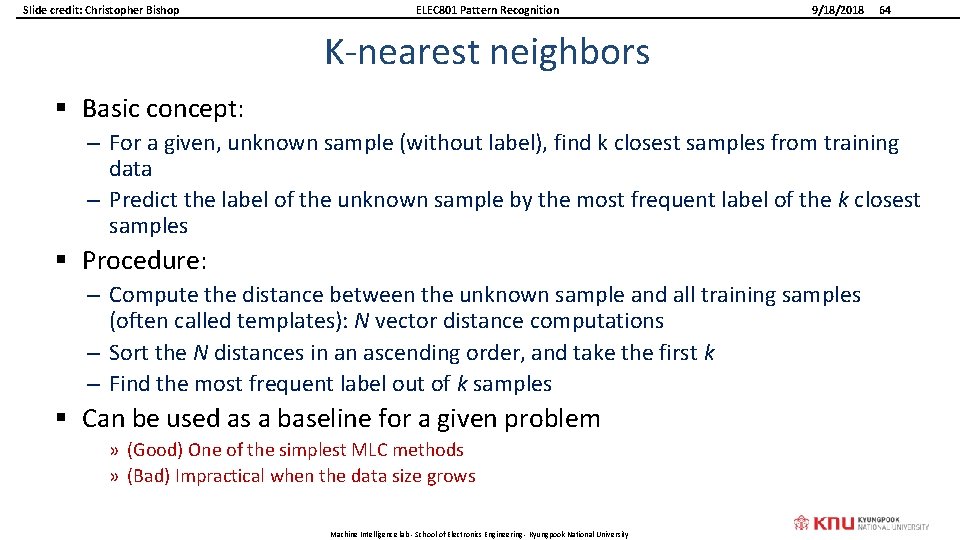

K-nearest neighbors

- Basic concept:
  - For a given, unknown sample (without label), find the k closest samples in the training data
  - Predict the label of the unknown sample by the most frequent label among the k closest samples
- Procedure:
  - Compute the distance between the unknown sample and all training samples (often called templates): N vector distance computations
  - Sort the N distances in ascending order, and take the first k
  - Find the most frequent label among the k samples
- Can be used as a baseline for a given problem
  - (Good) One of the simplest MLC methods
  - (Bad) Impractical when the data size grows
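The three-step procedure above can be sketched directly in Python. The function names are hypothetical, and the nine training rows and three query rows are taken from the Iris file listing shown earlier in these slides; this is an illustration, not the course's reference implementation.

```python
import math
from collections import Counter

def euclidean(a, b):
    return math.sqrt(sum((ai - bi) ** 2 for ai, bi in zip(a, b)))

def knn_predict(train_X, train_y, x, k, dist=euclidean):
    # 1. Distance from the unknown sample to all N templates.
    distances = [(dist(x, t), label) for t, label in zip(train_X, train_y)]
    # 2. Sort the N distances in ascending order and keep the first k.
    distances.sort(key=lambda pair: pair[0])
    nearest_labels = [label for _, label in distances[:k]]
    # 3. Most frequent label among the k neighbors; on a tie, the label
    #    seen first (i.e. from the closer neighbor) is returned.
    return Counter(nearest_labels).most_common(1)[0][0]

train_X = [
    [5.1, 3.5, 1.4, 0.2], [4.9, 3.0, 1.4, 0.2], [4.7, 3.2, 1.3, 0.2],
    [7.0, 3.2, 4.7, 1.4], [6.4, 3.2, 4.5, 1.5], [6.9, 3.1, 4.9, 1.5],
    [6.3, 3.3, 6.0, 2.5], [5.8, 2.7, 5.1, 1.9], [7.1, 3.0, 5.9, 2.1],
]
train_y = [1, 1, 1, 2, 2, 2, 3, 3, 3]

queries = [[4.8, 3.1, 1.6, 0.2], [5.5, 2.4, 3.8, 1.1], [7.4, 2.8, 6.1, 1.9]]
predictions = [knn_predict(train_X, train_y, q, k=3) for q in queries]
```

Sorting all N distances costs O(N log N) per query; for small k a partial selection would be enough, which is one of the places where the method becomes impractical as the data grows.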

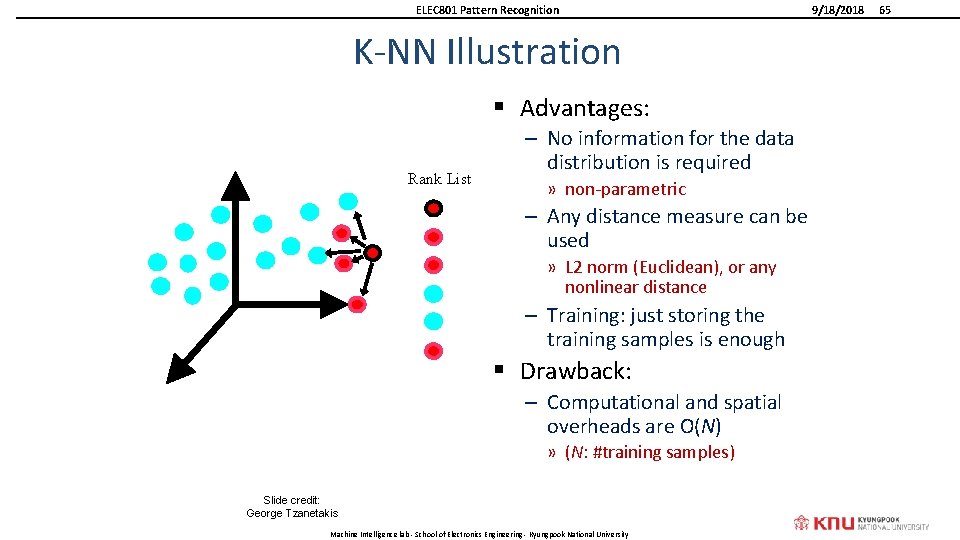

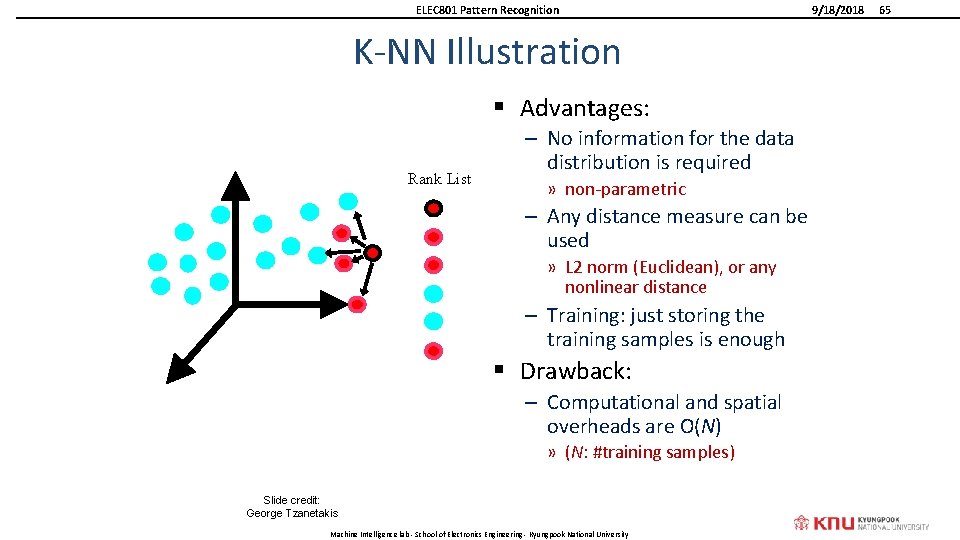

K-NN Illustration

- Advantages:
  - No information about the data distribution is required (non-parametric)
  - Any distance measure can be used: L2 norm (Euclidean), or any nonlinear distance
  - Training: just storing the training samples is enough
- Drawback:
  - Computational (per query) and storage overheads are O(N) (N: number of training samples)

[Figure: rank list of nearest neighbors]

Slide credit: George Tzanetakis

1/3/5-nearest neighbor

[Figure: classification of a query point (+) among samples of classes x and o in the (x1, x2) plane; panels K=1, K=3 and K=5]

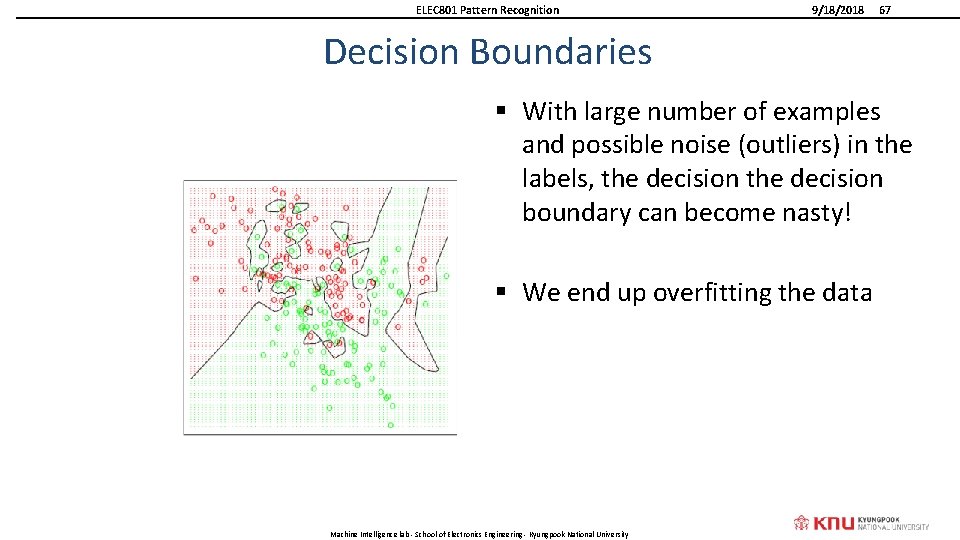

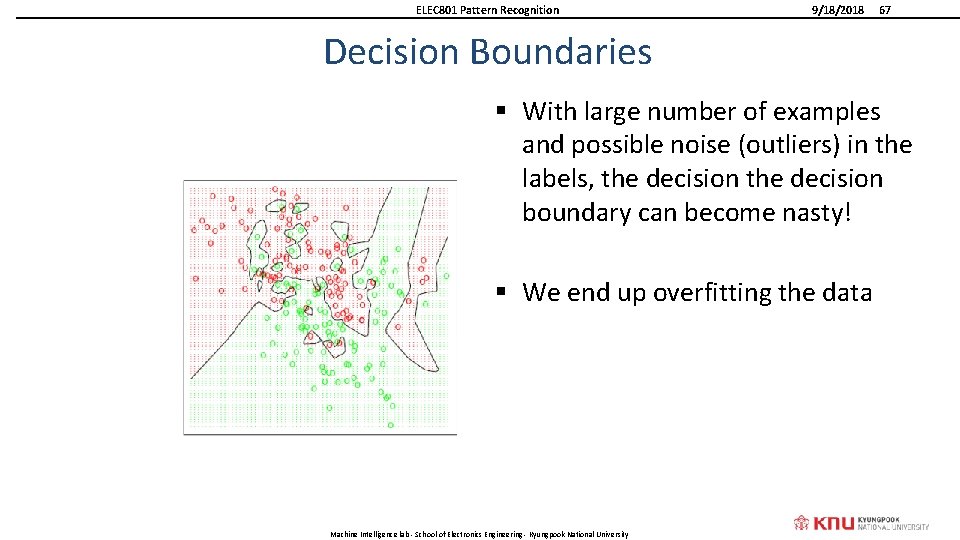

Decision Boundaries

- With a large number of examples and possible noise (outliers) in the labels, the decision boundary can become nasty!
- We end up overfitting the data

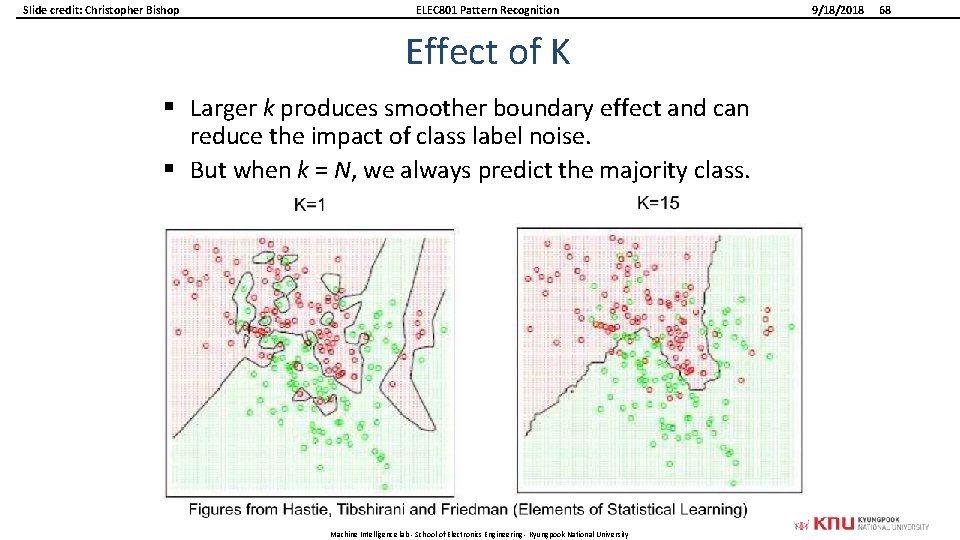

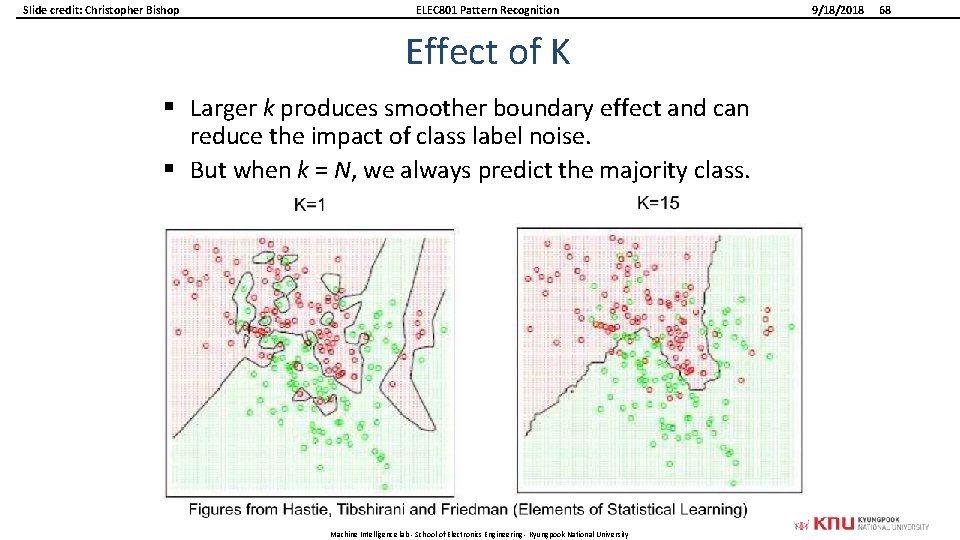

Effect of K

- A larger k produces a smoother decision boundary and can reduce the impact of class label noise.
- But when k = N, we always predict the majority class.

Effect of K

- K acts as a smoother
- For N → ∞, the error rate of the 1-nearest-neighbour classifier is never more than twice the optimal error (obtained from the true conditional class distributions).

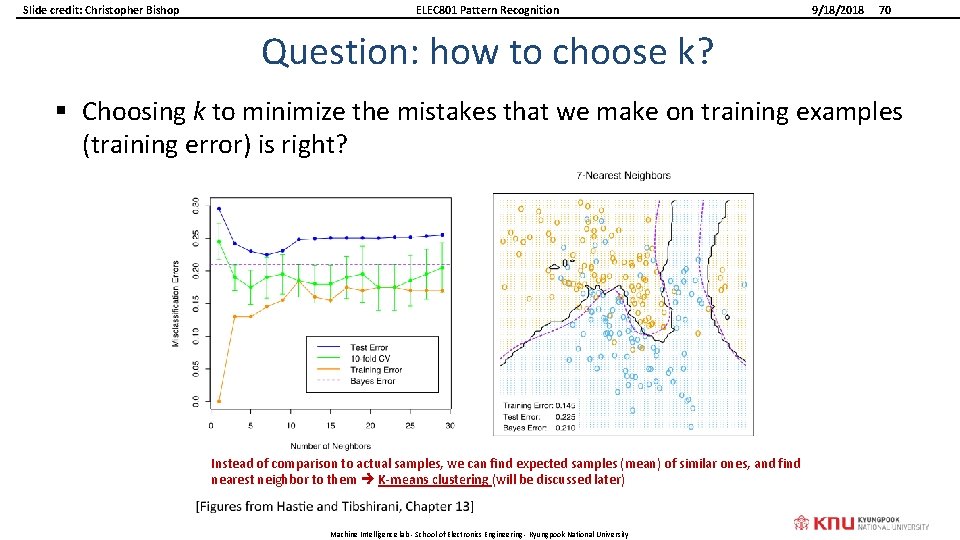

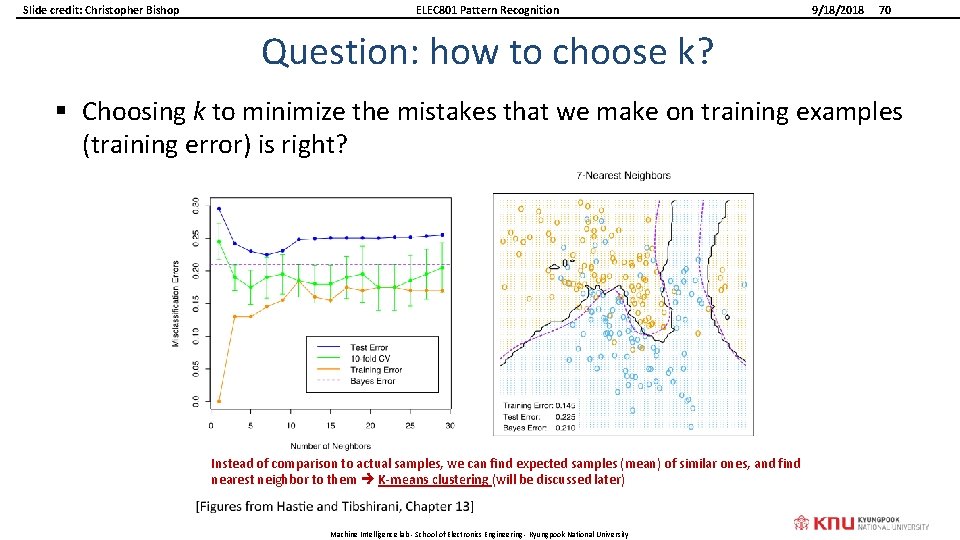

Question: how to choose k?

- Is it right to choose k by minimizing the mistakes that we make on the training examples (training error)?
- Instead of comparing with the actual samples, we can find the expected samples (means) of similar ones, and find the nearest neighbor among them
  - K-means clustering (will be discussed later)

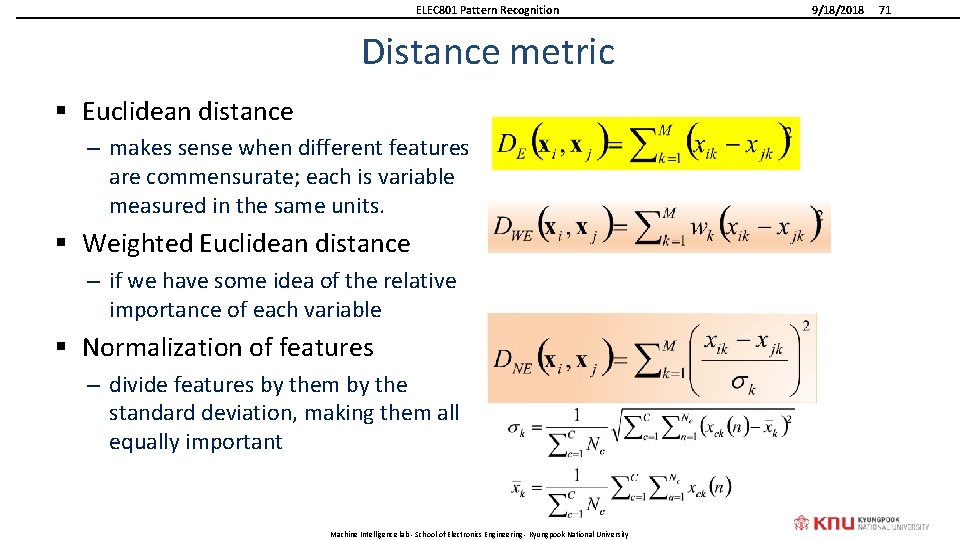

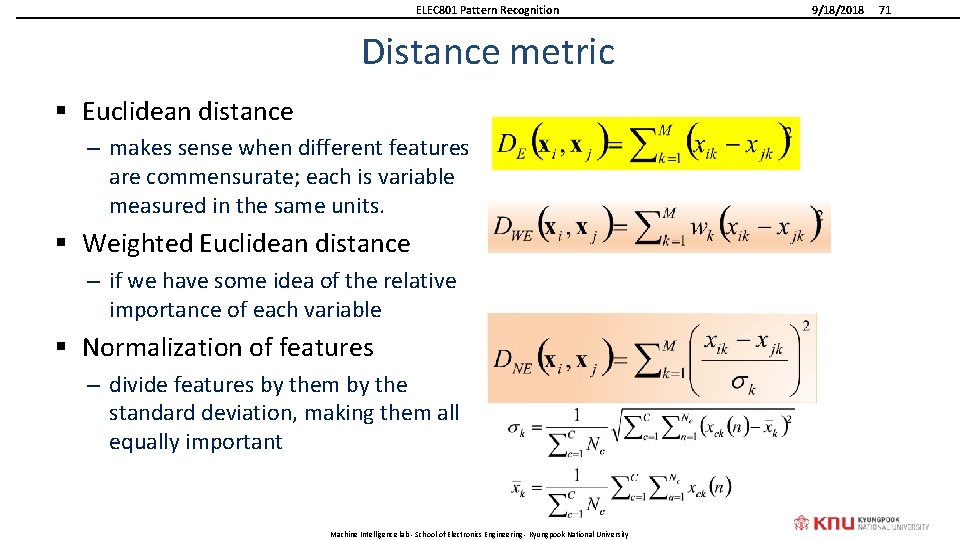

Distance metric

- Euclidean distance
  - makes sense when the different features are commensurate, i.e. each variable is measured in the same units.
- Weighted Euclidean distance
  - if we have some idea of the relative importance of each variable
- Normalization of features
  - divide each feature by its standard deviation, making them all equally important
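The normalization step can be sketched as follows. The function names and the toy matrix are hypothetical, and the code assumes that no feature is constant (a zero standard deviation would cause a division by zero).

```python
import math

def feature_stds(X):
    """Per-feature (column-wise) population standard deviation."""
    n = len(X)
    stds = []
    for col in zip(*X):
        mean = sum(col) / n
        stds.append(math.sqrt(sum((v - mean) ** 2 for v in col) / n))
    return stds

def scale(X, stds):
    """Divide every feature by its standard deviation."""
    return [[v / s for v, s in zip(row, stds)] for row in X]

# Hypothetical data: the second feature is measured on a 100x larger scale.
X = [[1.0, 100.0], [3.0, 300.0]]
stds = feature_stds(X)
X_scaled = scale(X, stds)
```

The standard deviations should be estimated on the training samples only and then reused to scale the test samples, so that both sets live in the same feature space.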

Other distance metric

- Distance Weighted Nearest Neighbor
  - It makes sense to weight the contribution of each example according to its distance to the new query example
  - The weight varies inversely with the distance, such that examples closer to the query point get a higher weight
- Learning a distance metric:
  - that weights each feature by its ability to minimize the prediction error, e.g., its mutual information with the class.
  - that weights each feature differently or uses only a subset of the features, with cross validation used to select the weights or feature subsets
  - Learning a distance function is an active research area
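A minimal sketch of distance-weighted voting is shown below, assuming the common inverse-distance weight 1 / (d + eps). The weight function, the `eps` guard against division by zero, and the toy 1-D data are choices made here; the slides only state that the weight varies inversely with the distance.

```python
import math
from collections import defaultdict

def weighted_knn_predict(train_X, train_y, x, k, eps=1e-12):
    """k-NN where each neighbor votes with weight 1 / (distance + eps)."""
    neighbors = sorted(
        ((math.dist(x, t), label) for t, label in zip(train_X, train_y)),
        key=lambda pair: pair[0],
    )[:k]
    votes = defaultdict(float)
    for d, label in neighbors:
        votes[label] += 1.0 / (d + eps)
    return max(votes, key=votes.get)

# Hypothetical 1-D example: one very close 'a' and two farther 'b' samples.
train_X = [[0.0], [1.0], [1.2]]
train_y = ["a", "b", "b"]
prediction = weighted_knn_predict(train_X, train_y, [0.1], k=3)
```

With k = 3 a plain majority vote would return "b", but the single nearby "a" sample outweighs the two distant "b" samples once the votes are weighted.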

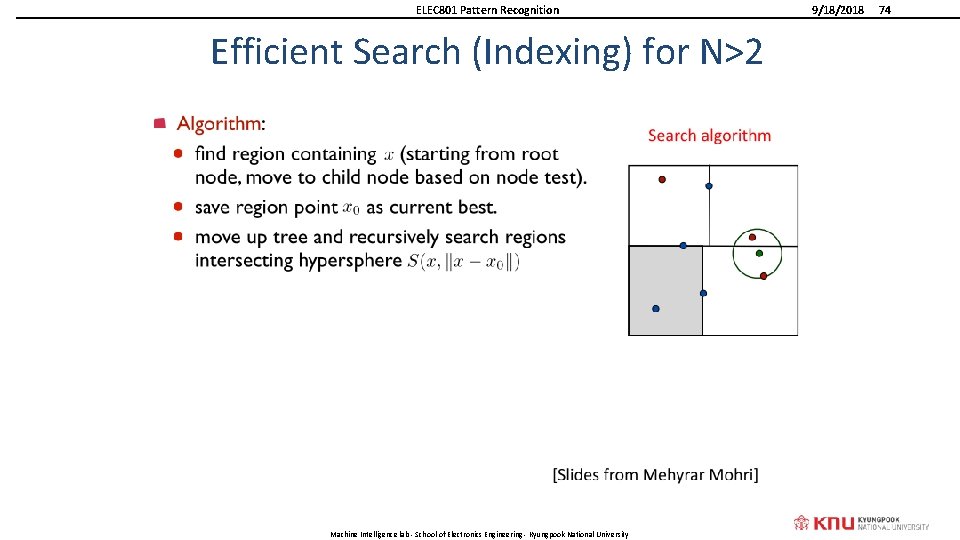

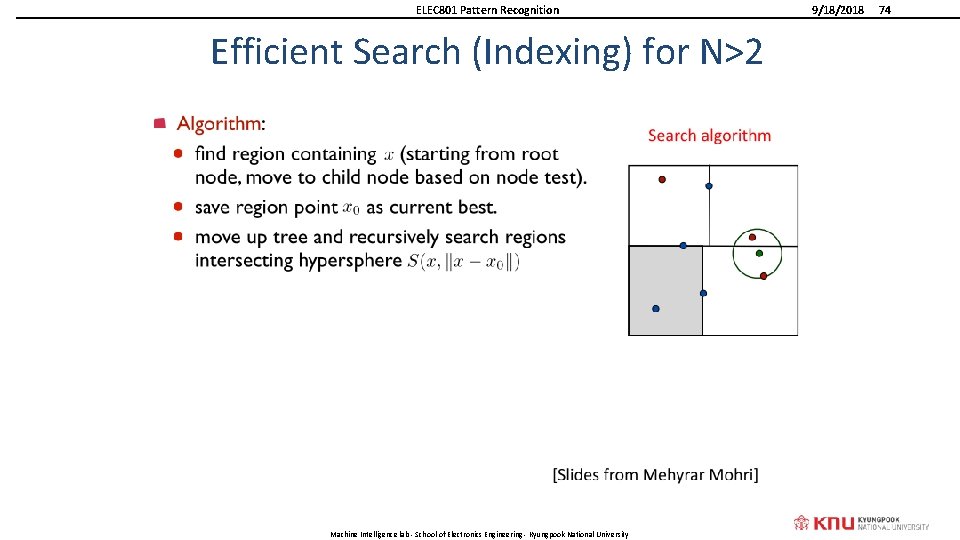

Efficient Search (Indexing) for N>2

- Tree-based data structures: k-d trees (k-dimensional trees).

K-NN in Scikit-Learn

- http://scikit-learn.org/stable/auto_examples/neighbors/plot_classification.html#sphx-glr-auto-examples-neighbors-plot-classification-py

End of Lecture 3.

![MATLAB/Octave implementation of the k-NN Iris example (ex_knn_iris_train.m)](https://slidetodoc.com/presentation_image_h/dade690de8cf5e51c04376d835a5e205/image-71.jpg)

MATLAB/Octave Implementation

[ex_knn_iris_train.m]

    function [Xr, Tr] = ex_knn_iris_train(trainfile)
    [Xr, Tr] = load_iris_textfile(trainfile);
    % nothing to train, return what you read

[ex_knn_iris_test.m]

    function ACC = ex_knn_iris_test(Xr, Tr, testfile, K)
    [Xe, Te] = load_iris_textfile(testfile);
    ACC = knn_test(Xr, Tr, Xe, Te, K);

[knn_test.m]

    function ACC = knn_test(Xr, Tr, X, T, K, distfun)
    % (named knn_test_gj03 on the slide; renamed to match the file name and the call above)
    % distfun: name of a function that accepts (1 x D vector, N x D matrix),
    %          and returns an N x 1 vector of distances between them.
    if nargin < 6, distfun = 'eucdist'; end  % default added: the caller passes no distfun
    n_test = size(X, 1);                     % added: n_test is not defined on the slide
    Tknn = zeros(size(T));
    for ii = 1:n_test
        D = eval(sprintf('%s(X(ii, :), Xr)', distfun));
        % find K nearest indices
        if K == 1
            [~, I] = min(D);
        else
            [~, I] = sort(D, 'ascend');
            I = I(1:K);
        end
        Tknn(ii) = mode(Tr(I));
    end
    % compute accuracy
    n_corr = sum(T == Tknn);
    ACC = n_corr / n_test;

    function dist_vec = eucdist(v_test, Templates)
    % squared Euclidean distance; it gives the same neighbor ranking as the true distance
    n_template = size(Templates, 1);
    dist_vec = zeros(n_template, 1);
    tmp = repmat(v_test, n_template, 1) - Templates;
    dist_vec(:) = sum(tmp .* tmp, 2);