Efficient Placement of Compressed Code for Parallel Decompression


Xiaoke Qin and Prabhat Mishra
Embedded Systems Lab
Computer and Information Science and Engineering
University of Florida, USA

Outline
- Introduction
- Code Compression Techniques
- Efficient Placement of Compressed Binaries
  - Compression Algorithm
  - Code Placement Algorithm
  - Decompression Mechanism
- Experiments
- Conclusion

Why Code Compression?
- Embedded systems are ubiquitous
  - Automobiles, digital cameras, PDAs, cellular phones, medical and military equipment, …
- Memory imposes cost, area and energy constraints during embedded systems design
  - Applications are increasingly complex
- Code compression techniques address this by reducing the size of application programs

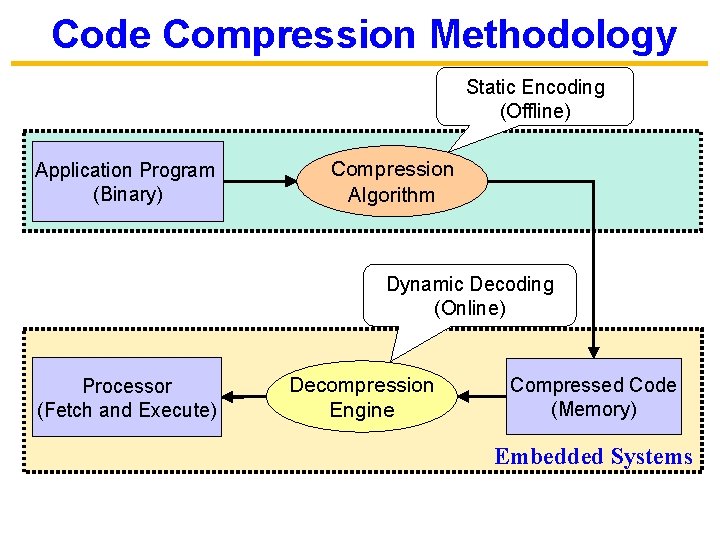

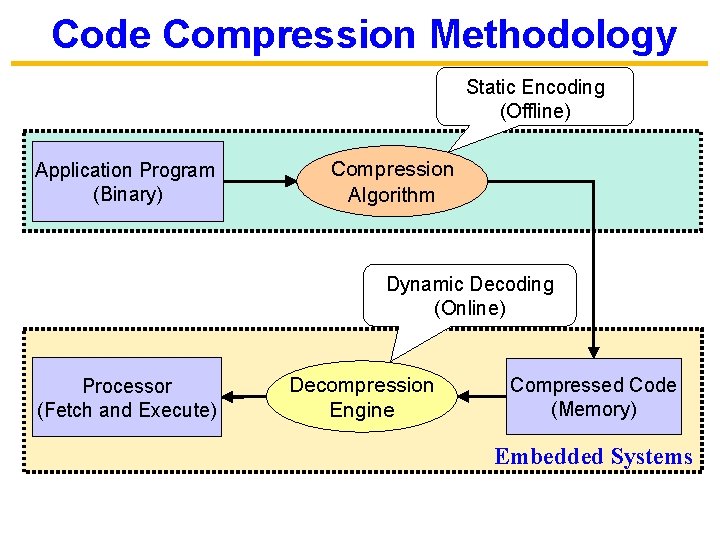

Code Compression Methodology
[Figure: Static encoding (offline): application program (binary) → compression algorithm → compressed code (memory). Dynamic decoding (online), inside the embedded system: compressed code (memory) → decompression engine → processor (fetch and execute).]

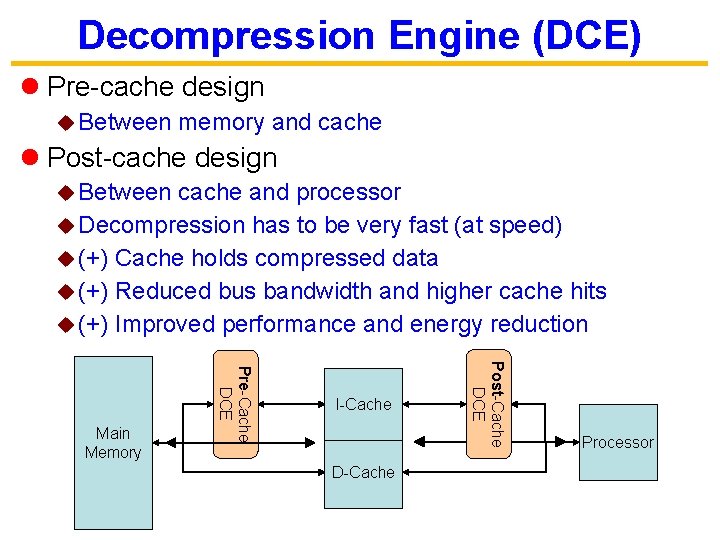

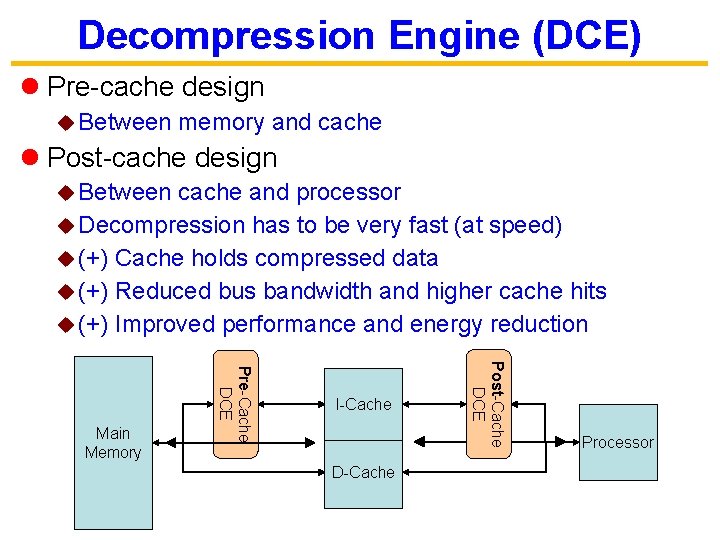

Decompression Engine (DCE)
- Pre-cache design
  - Between memory and cache
- Post-cache design
  - Between cache and processor
  - Decompression has to be very fast (at speed)
  - (+) Cache holds compressed data
  - (+) Reduced bus bandwidth and higher cache hit rate
  - (+) Improved performance and reduced energy
[Figure: main memory, pre-cache DCE, I-cache, post-cache DCE and processor along the instruction path, with a separate D-cache]

Outline l Introduction l Code Compression Techniques l Efficient Placement of Compressed Binaries u. Compression u. Code Algorithm Placement Algorithm u. Decompression l Experiments l Conclusion Mechanism

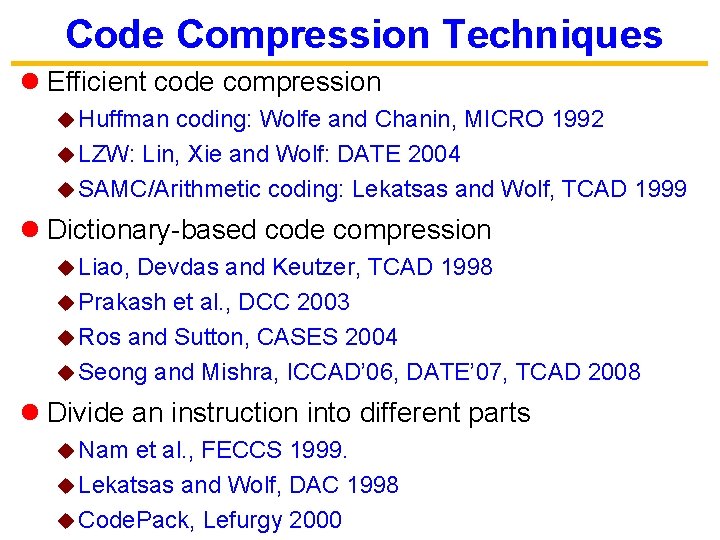

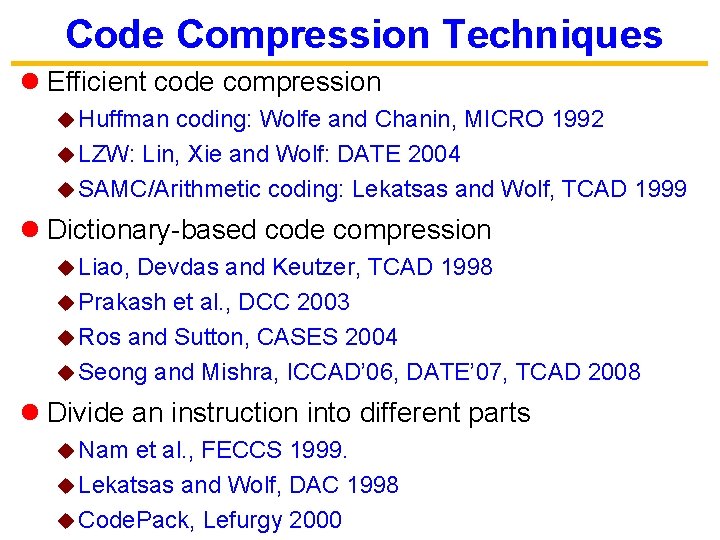

Code Compression Techniques
- Efficient code compression
  - Huffman coding: Wolfe and Chanin, MICRO 1992
  - LZW: Lin, Xie and Wolf, DATE 2004
  - SAMC/arithmetic coding: Lekatsas and Wolf, TCAD 1999
- Dictionary-based code compression
  - Liao, Devadas and Keutzer, TCAD 1998
  - Prakash et al., DCC 2003
  - Ros and Sutton, CASES 2004
  - Seong and Mishra, ICCAD'06, DATE'07, TCAD 2008
- Divide an instruction into different parts
  - Nam et al., FECCS 1999
  - Lekatsas and Wolf, DAC 1998
  - CodePack, Lefurgy 2000

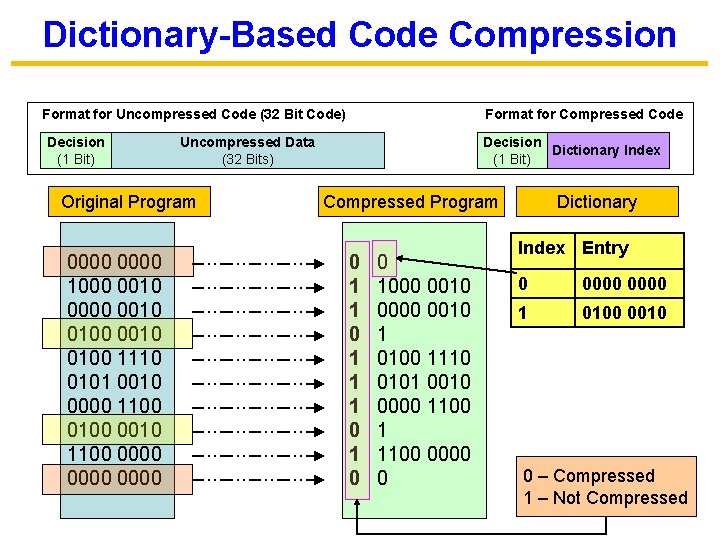

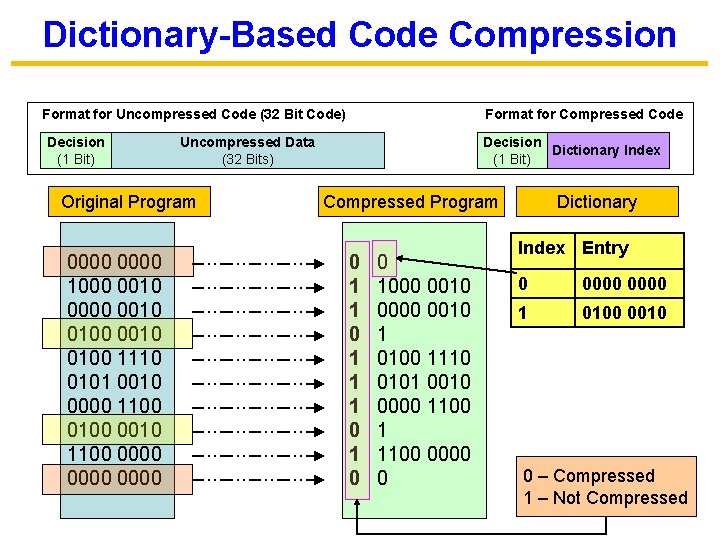

Dictionary-Based Code Compression
- Format for uncompressed code (32-bit code): Decision (1 bit) | Uncompressed Data (32 bits)
- Format for compressed code: Decision (1 bit) | Dictionary Index
- Decision bit: 0 = compressed, 1 = not compressed
[Figure: an example original program of 8-bit words, its compressed form, and a two-entry dictionary (indices 0 and 1)]
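The encoding format above can be sketched in a few lines of Python. This is a minimal illustration, not the authors' implementation. It assumes 8-bit words (as in the figure) rather than 32-bit instructions, a dictionary made of the most frequent words, the decision-bit convention from the slide (0 = compressed, 1 = not compressed), and a made-up six-word program. The helper names are hypothetical.

```python
from collections import Counter

def build_dictionary(words, size):
    """Choose the `size` most frequent words as dictionary entries."""
    return [w for w, _ in Counter(words).most_common(size)]

def index_width(dictionary):
    """Bits needed to address every dictionary entry (at least 1)."""
    return max(1, (len(dictionary) - 1).bit_length())

def compress(words, dictionary):
    """Emit '0' + index for dictionary hits, '1' + raw word otherwise."""
    width = index_width(dictionary)
    out = []
    for w in words:
        if w in dictionary:
            out.append("0" + format(dictionary.index(w), f"0{width}b"))  # compressed
        else:
            out.append("1" + w)  # not compressed
    return "".join(out)

def decompress(bits, dictionary, word_bits):
    """Invert compress(): read the decision bit, then an index or a raw word."""
    width = index_width(dictionary)
    words, i = [], 0
    while i < len(bits):
        if bits[i] == "0":
            words.append(dictionary[int(bits[i + 1:i + 1 + width], 2)])
            i += 1 + width
        else:
            words.append(bits[i + 1:i + 1 + word_bits])
            i += 1 + word_bits
    return words

# Hypothetical program of 8-bit words
program = ["00000000", "01000010", "00000000", "11000000", "01000010", "00000000"]
dictionary = build_dictionary(program, 2)
bits = compress(program, dictionary)
assert decompress(bits, dictionary, 8) == program
print(len(bits), "of", 8 * len(program), "bits")
```

Each dictionary hit costs only 1 + 1 bits here, while a miss costs 1 + 8 bits, so compression depends on how many words the small dictionary covers. Decoding is a fixed-length table lookup, which is why this family of techniques decompresses quickly.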

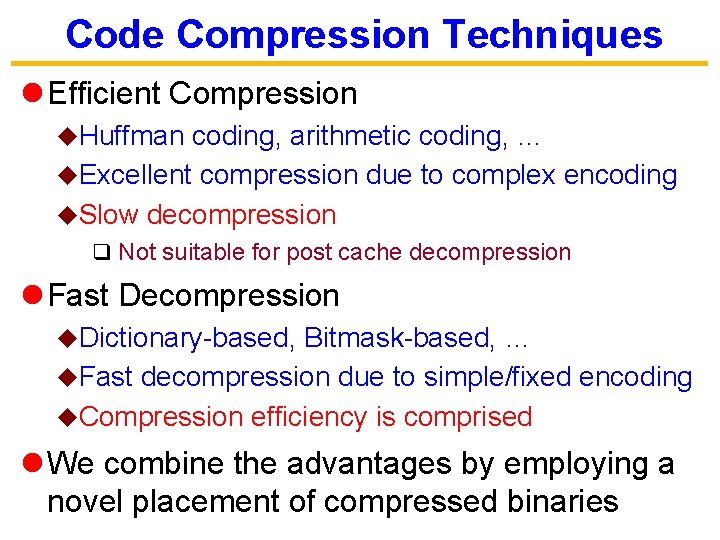

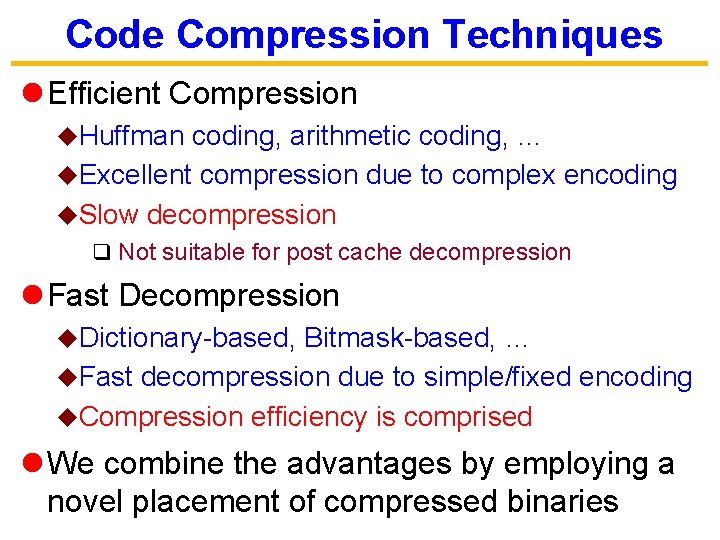

Code Compression Techniques
- Efficient compression
  - Huffman coding, arithmetic coding, …
  - Excellent compression due to complex encoding
  - Slow decompression
    - Not suitable for post-cache decompression
- Fast decompression
  - Dictionary-based, bitmask-based, …
  - Fast decompression due to simple/fixed encoding
  - Compression efficiency is compromised
- We combine the advantages of both by employing a novel placement of compressed binaries

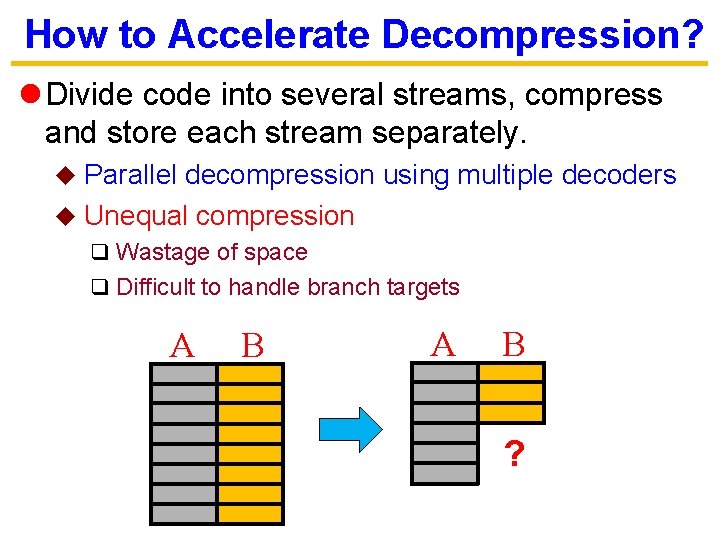

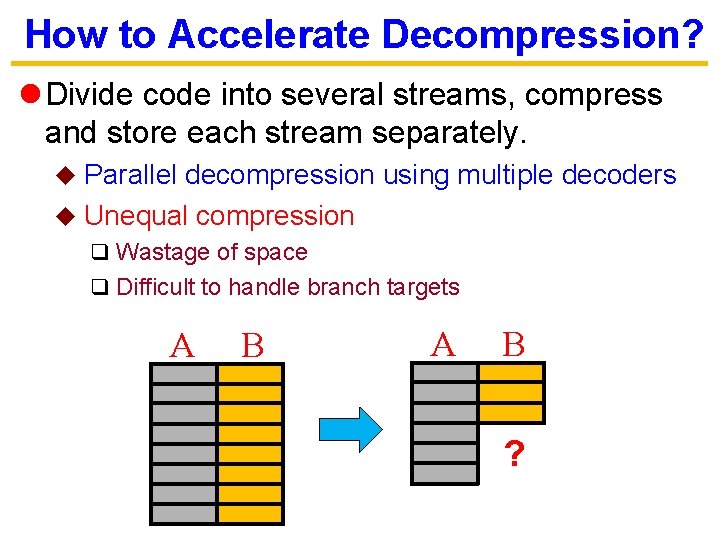

How to Accelerate Decompression?
- Divide code into several streams; compress and store each stream separately
- Decompress in parallel using multiple decoders
- Problem: unequal compression
  - Wasted space
  - Difficult to handle branch targets
[Figure: streams A and B compressed to unequal lengths, leaving the position of a branch target unclear]

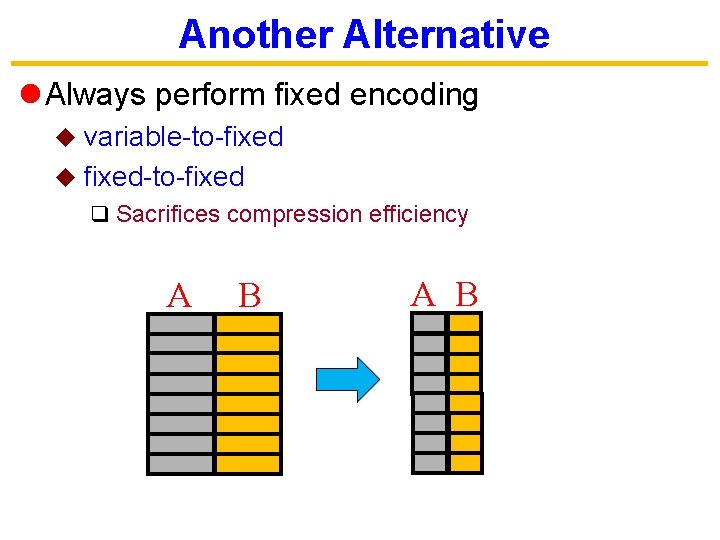

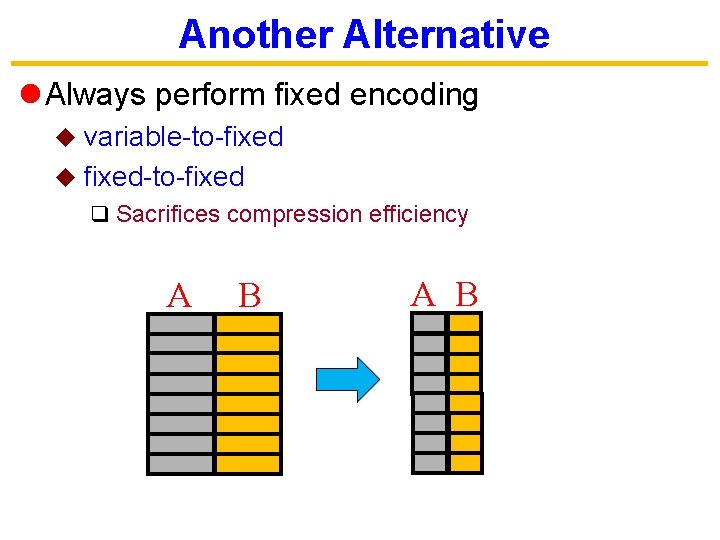

Another Alternative
- Always perform fixed encoding
  - Variable-to-fixed
  - Fixed-to-fixed
  - Sacrifices compression efficiency

Outline l Introduction l Code Compression Techniques l Efficient Placement of Compressed Binaries u. Compression u. Code Algorithm Placement Algorithm u. Decompression l Experiments l Conclusion Mechanism

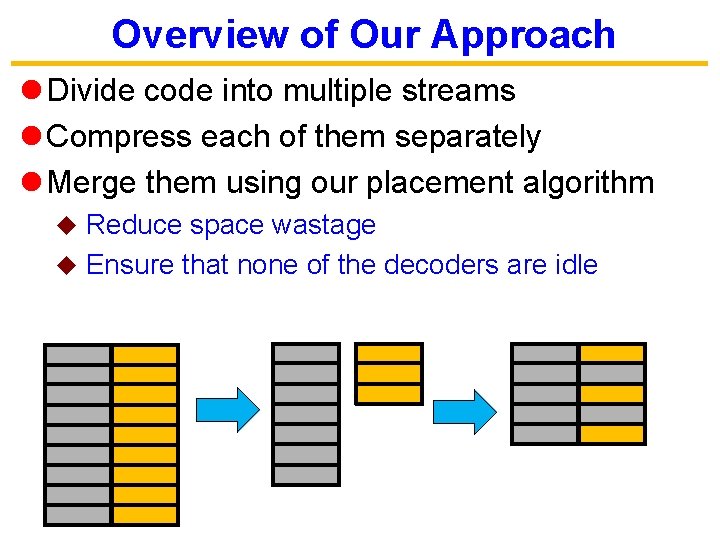

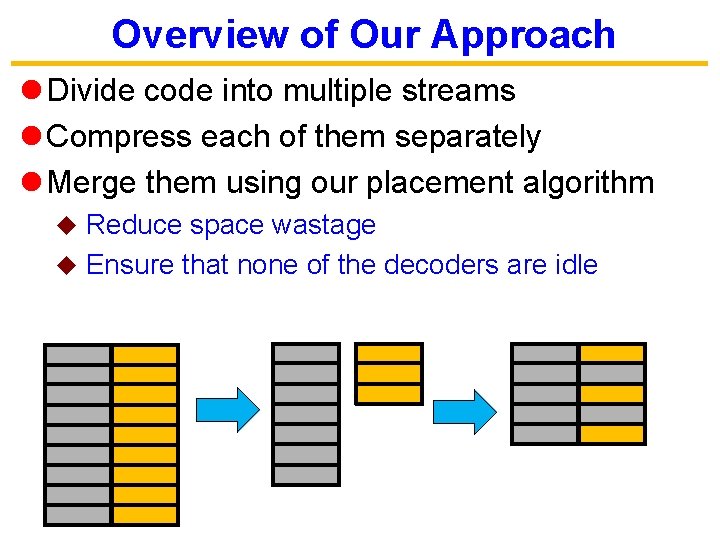

Overview of Our Approach
- Divide code into multiple streams
- Compress each of them separately
- Merge them using our placement algorithm
  - Reduce space wastage
  - Ensure that none of the decoders is idle
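The first step, dividing code into streams, can be illustrated by cutting every fixed-width instruction into equal-width fields and collecting field i of every instruction into stream i. This is a minimal sketch under assumptions: the slides do not state how instructions are split, so equal-width fields taken from the most significant end, 32-bit instructions, and the name `divide_into_streams` are all hypothetical.

```python
def divide_into_streams(instructions, width=32, num_streams=2):
    """Split each `width`-bit instruction into `num_streams` equal fields.

    Stream i collects field i (counting from the most significant end)
    of every instruction, so each stream can be compressed on its own.
    """
    if width % num_streams:
        raise ValueError("width must be divisible by num_streams")
    field = width // num_streams
    mask = (1 << field) - 1
    streams = [[] for _ in range(num_streams)]
    for insn in instructions:
        for i in range(num_streams):
            shift = width - (i + 1) * field
            streams[i].append((insn >> shift) & mask)
    return streams

halves = divide_into_streams([0x12345678, 0xDEADBEEF])
print([[hex(f) for f in s] for s in halves])
```

Because each stream is then compressed independently, the streams generally end up with different compressed lengths; merging them without wasting space or starving a decoder is the job of the placement step.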

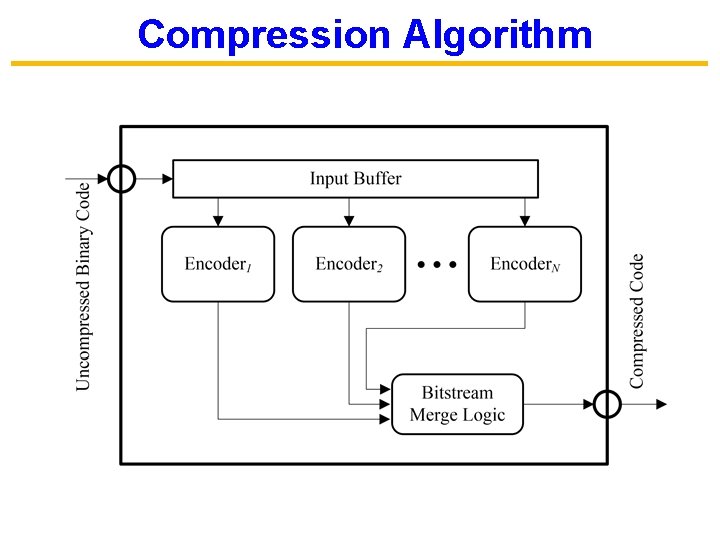

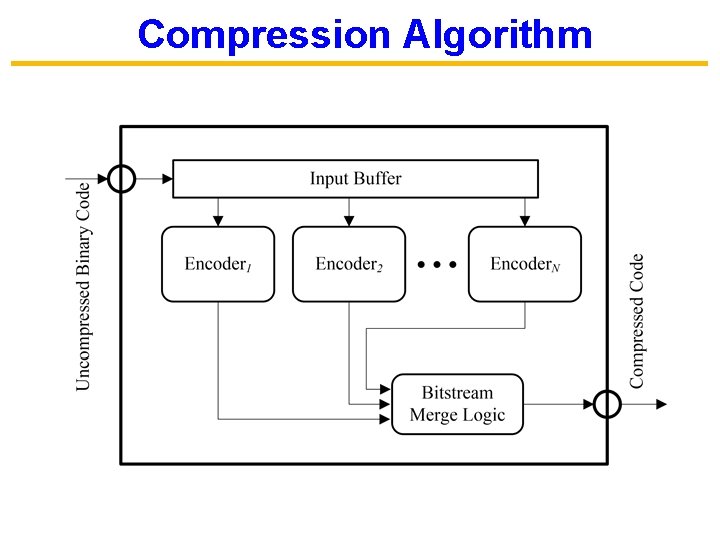

Compression Algorithm

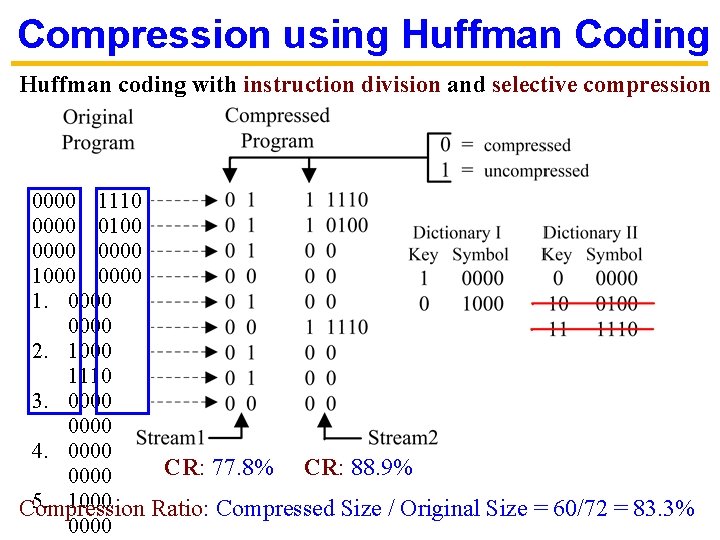

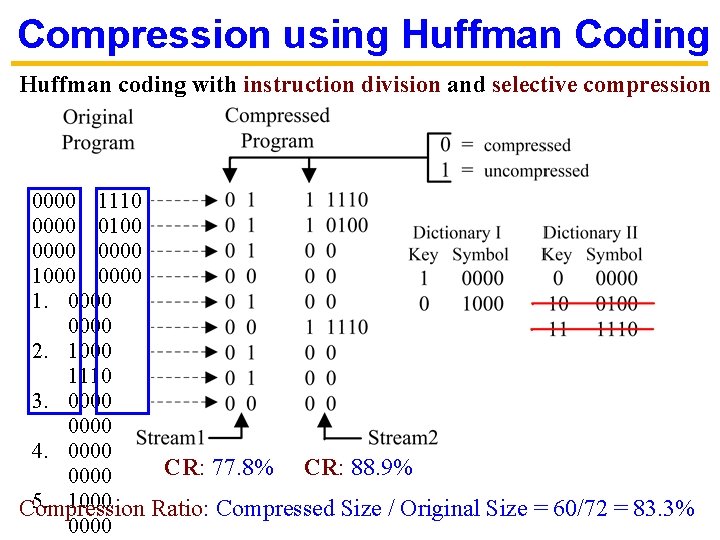

Compression using Huffman Coding
- Huffman coding with instruction division and selective compression
[Figure: an example program whose instructions are divided into two 4-bit streams, each compressed separately]
- Per-stream compression ratios: 77.8% and 88.9%
- Compression ratio = compressed size / original size = 60/72 = 83.3%
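Selective Huffman compression of one stream can be sketched as follows. The details are assumptions, not taken from the slides: only the `k` most frequent 4-bit symbols receive Huffman codewords, a compressed symbol is written as flag bit 0 plus its codeword, an uncompressed symbol as flag bit 1 plus its raw 4 bits, and `k`, the sample stream and the helper names are hypothetical. The last line checks the slide's arithmetic, assuming each of the two streams holds 36 of the 72 original bits, so the ratios 77.8% and 88.9% correspond to 28 and 32 compressed bits.

```python
import heapq
from collections import Counter

def huffman_code(freqs):
    """Build a Huffman code {symbol: codeword} from {symbol: frequency}."""
    if not freqs:
        return {}
    if len(freqs) == 1:
        return {next(iter(freqs)): "0"}
    # Heap entries: (weight, tie-breaker, {symbol: codeword suffix so far})
    heap = [(w, i, {s: ""}) for i, (s, w) in enumerate(freqs.items())]
    heapq.heapify(heap)
    next_id = len(heap)
    while len(heap) > 1:
        w1, _, low = heapq.heappop(heap)
        w2, _, high = heapq.heappop(heap)
        merged = {s: "0" + c for s, c in low.items()}
        merged.update({s: "1" + c for s, c in high.items()})
        heapq.heappush(heap, (w1 + w2, next_id, merged))
        next_id += 1
    return heap[0][2]

def selective_compress(symbols, k, symbol_bits=4):
    """Huffman-code only the k most frequent symbols; store the rest raw."""
    codes = huffman_code(dict(Counter(symbols).most_common(k)))
    out = []
    for s in symbols:
        if s in codes:
            out.append("0" + codes[s])                       # flag 0: compressed
        else:
            out.append("1" + format(s, f"0{symbol_bits}b"))  # flag 1: raw symbol
    return "".join(out)

# Hypothetical 9-symbol stream (36 raw bits) of 4-bit fields
stream = [0b0000] * 5 + [0b1000] * 3 + [0b1110]
encoded = selective_compress(stream, k=2)
print(f"{len(encoded)}/{4 * len(stream)} bits = {len(encoded) / (4 * len(stream)):.1%}")

# Slide arithmetic, assuming two 36-bit streams compressed to 28 and 32 bits
print(f"{28 / 36:.1%} {32 / 36:.1%} {(28 + 32) / (36 + 36):.1%}")  # 77.8% 88.9% 83.3%
```

Limiting Huffman coding to a few frequent symbols keeps codewords short, so the longest possible codeword is bounded by the flag bit plus the raw field width, which is what makes a fixed "sufficient decode length" possible.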

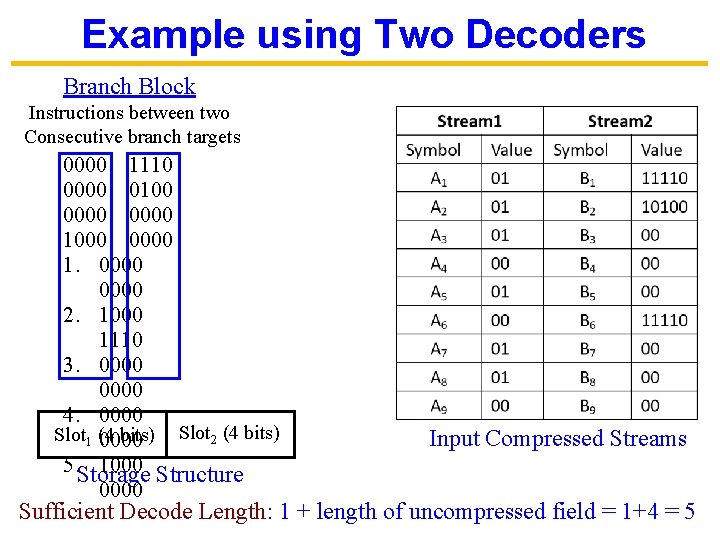

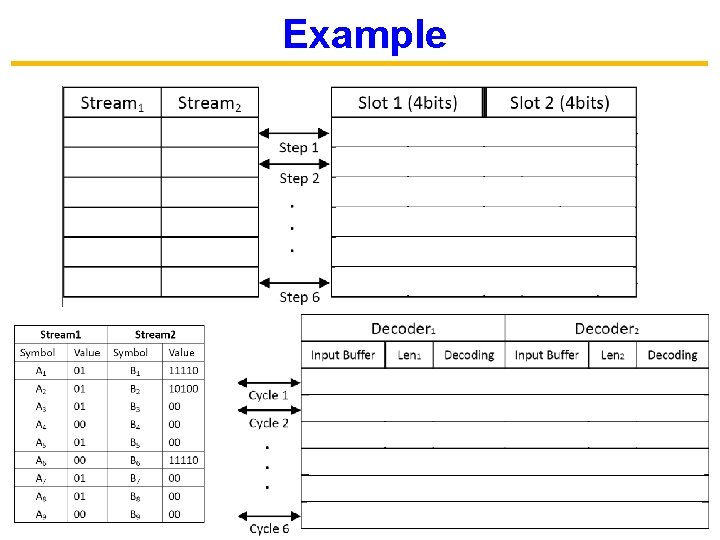

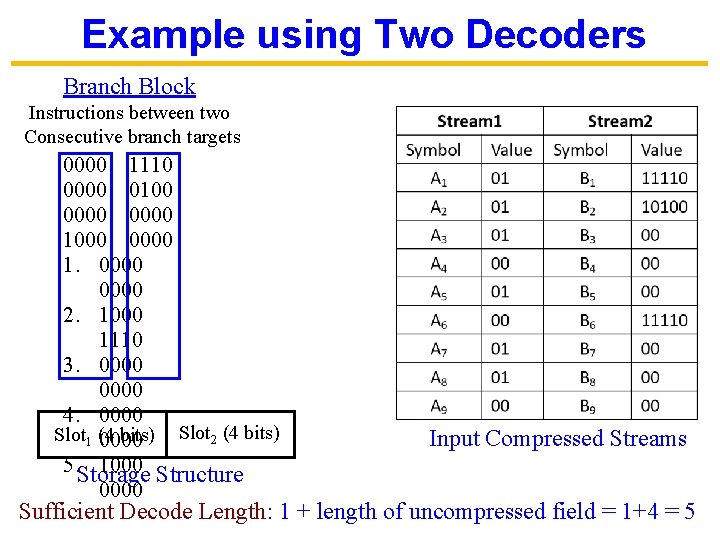

Example using Two Decoders
- Branch block: the instructions between two consecutive branch targets
- Storage structure: each storage block has Slot 1 (4 bits) and Slot 2 (4 bits)
[Figure: the input compressed streams and how they are placed into the storage structure]
- Sufficient decode length: 1 + length of uncompressed field = 1 + 4 = 5 bits
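Since startup stalls are counted per branch block, the branch block appears to be the unit in which compressed streams are placed and decoding restarts, so a program first has to be cut at its branch targets. A minimal Python sketch, assuming instructions are numbered 0..n-1 and the set of branch-target indices is already known (for example, from the compiler); `branch_blocks` is a hypothetical helper name.

```python
def branch_blocks(num_instructions, branch_targets):
    """Group instruction indices into branch blocks.

    A block starts at index 0 and at every branch target, and runs up to
    (but not including) the next branch target.
    """
    starts = sorted({0} | {t for t in branch_targets if 0 < t < num_instructions})
    ends = starts[1:] + [num_instructions]
    return [list(range(s, e)) for s, e in zip(starts, ends)]

print(branch_blocks(8, {3, 6}))  # [[0, 1, 2], [3, 4, 5], [6, 7]]
```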

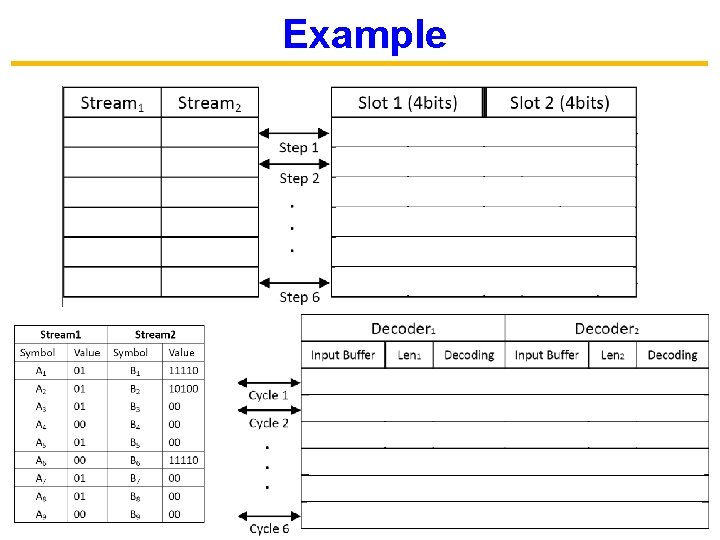

Example

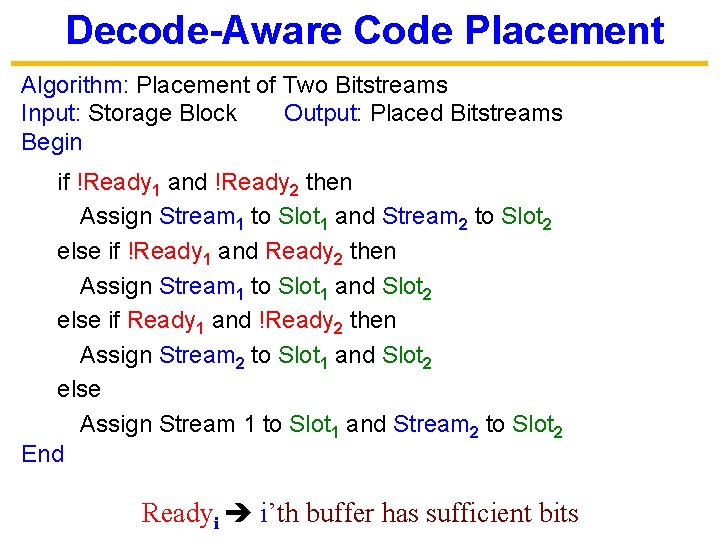

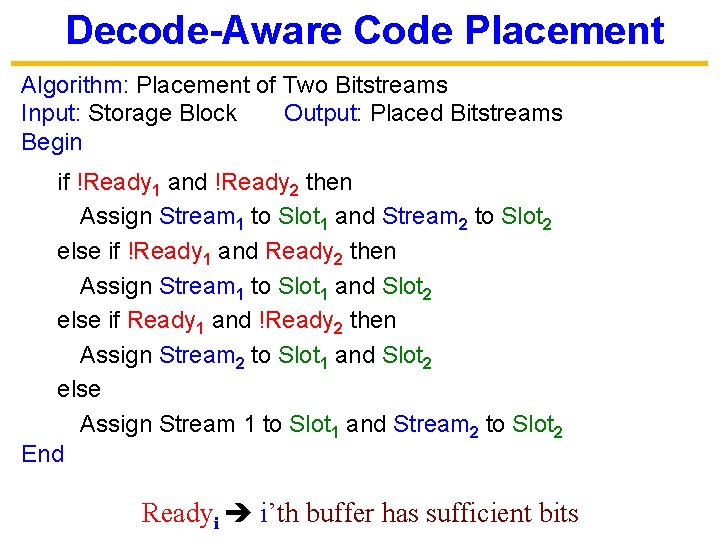

Decode-Aware Code Placement Algorithm: Placement of Two Bitstreams
- Input: storage block
- Output: placed bitstreams
- Ready_i: the i-th buffer has sufficient bits
- Begin
  - if !Ready1 and !Ready2: assign Stream 1 to Slot 1 and Stream 2 to Slot 2
  - else if !Ready1 and Ready2: assign Stream 1 to Slot 1 and Slot 2
  - else if Ready1 and !Ready2: assign Stream 2 to Slot 1 and Slot 2
  - else: assign Stream 1 to Slot 1 and Stream 2 to Slot 2
- End
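The placement rule above can be explored with a small software model that fills storage blocks one at a time while tracking how many bits each decoder has buffered. This is a sketch under stated assumptions, not the authors' implementation: the slot width (4 bits) and sufficient decode length (5 bits) come from the two-decoder example, but the decoder model (each decoder consumes at most one codeword per cycle, after the block is delivered), the treatment of an exhausted stream as Ready and the hand-off of its slot to the other stream, and all names are hypothetical.

```python
from collections import deque

SLOT_BITS = 4              # width of Slot 1 and Slot 2 in the two-decoder example
SUFFICIENT_DECODE_LEN = 5  # 1 flag bit + 4-bit uncompressed field

def place_two_streams(codewords1, codewords2):
    """Interleave two compressed streams into storage blocks of two slots.

    Each argument is a list of codeword bit strings for one decoder.
    Returns a list of blocks; each block is ((owner, bits), (owner, bits)),
    where owner is 1 or 2, or None for an empty (padding) slot.
    """
    streams = ["".join(codewords1), "".join(codewords2)]
    lengths = [deque(len(c) for c in codewords1), deque(len(c) for c in codewords2)]
    pos = [0, 0]  # next unplaced bit in each stream
    buf = [0, 0]  # bits waiting in each decoder's input buffer
    blocks = []
    while pos[0] < len(streams[0]) or pos[1] < len(streams[1]):
        # Ready_i: buffer i has enough bits, or stream i has nothing left to place
        ready = [buf[i] >= SUFFICIENT_DECODE_LEN or pos[i] >= len(streams[i])
                 for i in range(2)]
        if not ready[0] and ready[1]:
            owners = (0, 0)  # Stream 1 gets both slots
        elif ready[0] and not ready[1]:
            owners = (1, 1)  # Stream 2 gets both slots
        else:
            owners = (0, 1)  # Stream 1 -> Slot 1, Stream 2 -> Slot 2
        block = []
        for owner in owners:
            if pos[owner] >= len(streams[owner]):
                owner = 1 - owner  # chosen stream is exhausted: give slot to the other
            bits = streams[owner][pos[owner]:pos[owner] + SLOT_BITS]
            pos[owner] += len(bits)
            buf[owner] += len(bits)
            block.append((owner + 1, bits) if bits else (None, ""))
        blocks.append(tuple(block))
        # Assumed decoder model: each decoder consumes at most one codeword per cycle
        for i in range(2):
            if lengths[i] and buf[i] >= lengths[i][0]:
                buf[i] -= lengths[i].popleft()
    return blocks

for block in place_two_streams(["10000"] * 3, ["0"] * 4):
    print(block)
```

In this run, Stream 2 is short and is fully placed in the first block, so the second block gives both slots to Stream 1, whose decoder still lacks a full 5-bit codeword. This is the "no idle decoder" behaviour the algorithm aims for. Because the rule depends only on buffer state, a decoder that replays the same rule can tell which stream each slot belongs to without extra tag bits.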

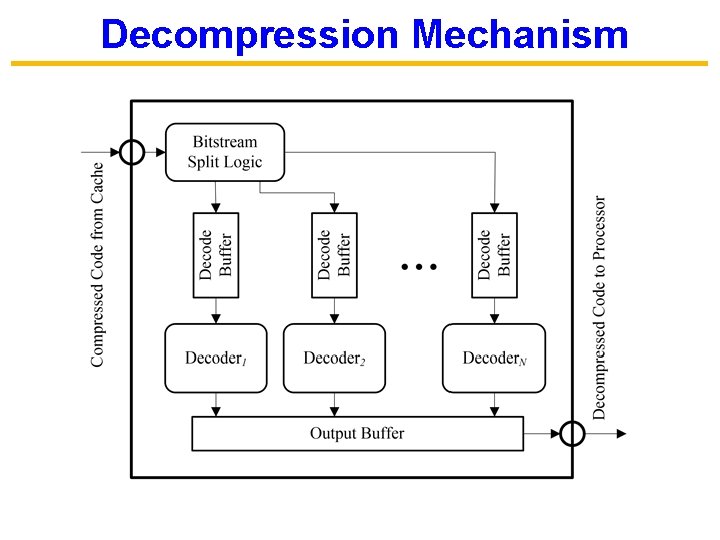

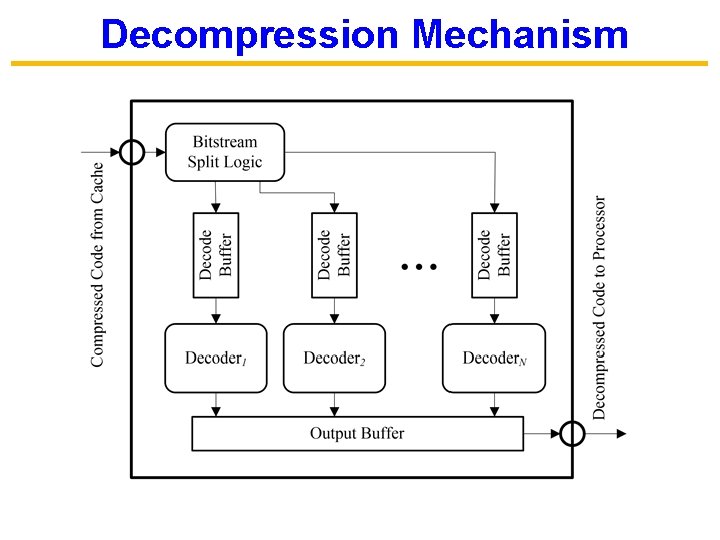

Decompression Mechanism

Outline l Introduction l Code Compression Techniques l Efficient Placement of Compressed Binaries u. Compression u. Code Algorithm Placement Algorithm u. Decompression l Experiments l Conclusion Mechanism

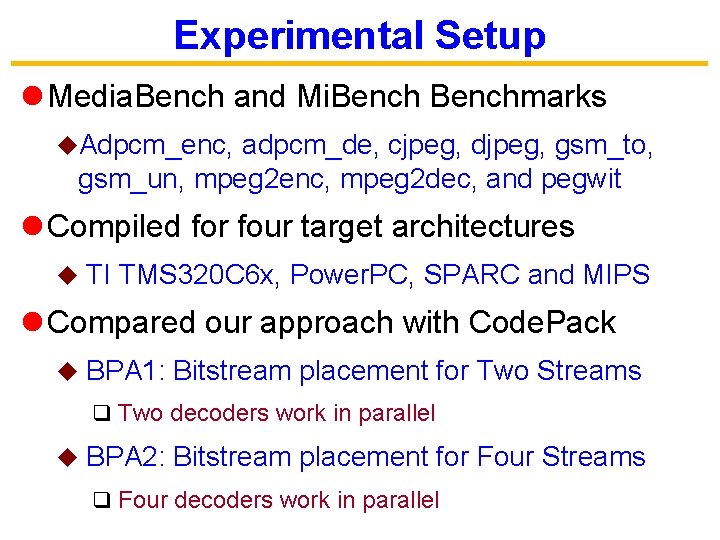

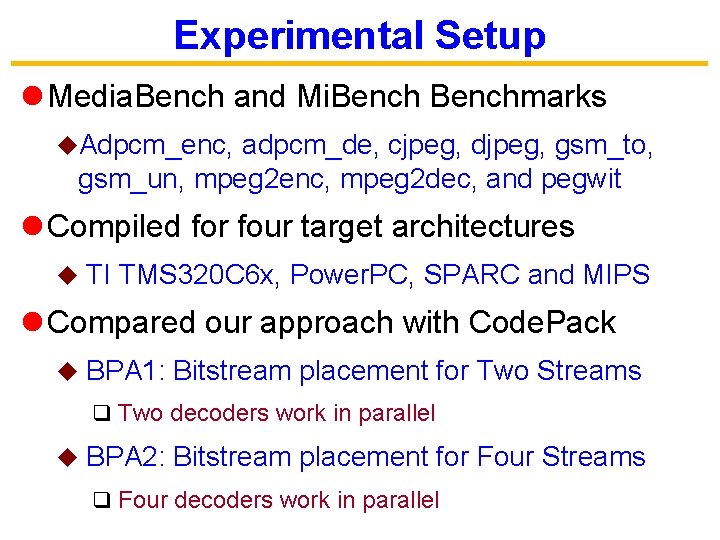

Experimental Setup
- MediaBench and MiBench benchmarks
  - adpcm_enc, adpcm_dec, cjpeg, djpeg, gsm_to, gsm_un, mpeg2enc, mpeg2dec, and pegwit
- Compiled for four target architectures
  - TI TMS320C6x, PowerPC, SPARC and MIPS
- Compared our approach with CodePack
  - BPA1: bitstream placement for two streams
    - Two decoders work in parallel
  - BPA2: bitstream placement for four streams
    - Four decoders work in parallel

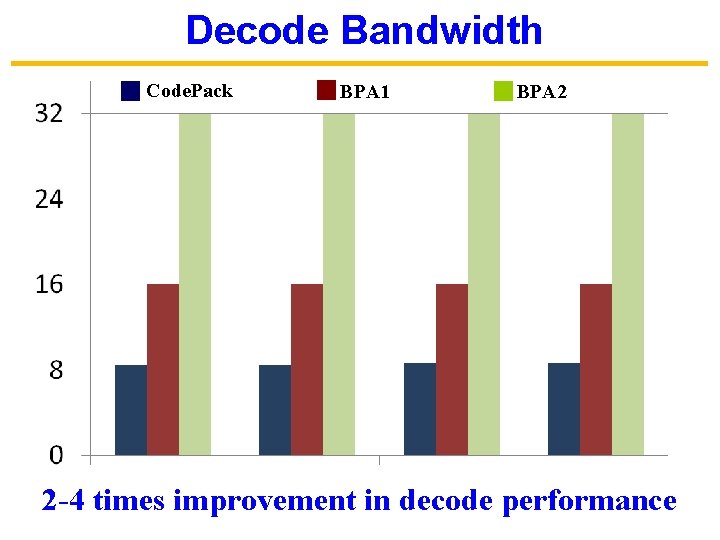

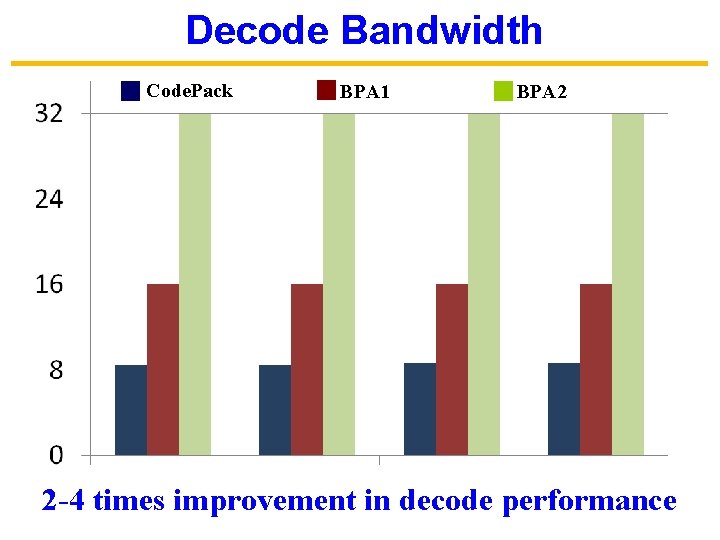

Decode Bandwidth
[Figure: decode bandwidth of CodePack, BPA1 and BPA2]
- 2-4 times improvement in decode performance

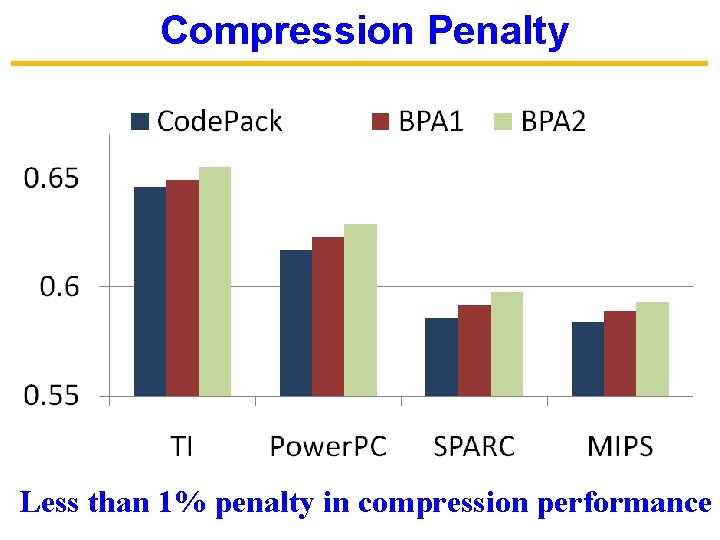

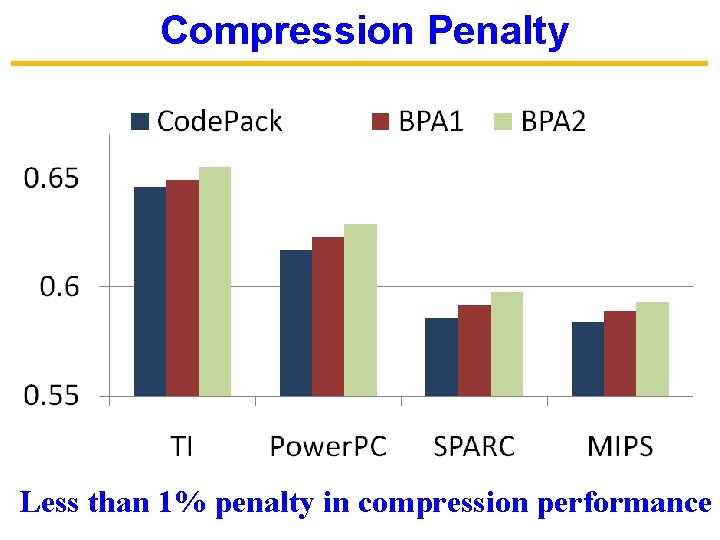

Compression Penalty
- Less than 1% penalty in compression performance

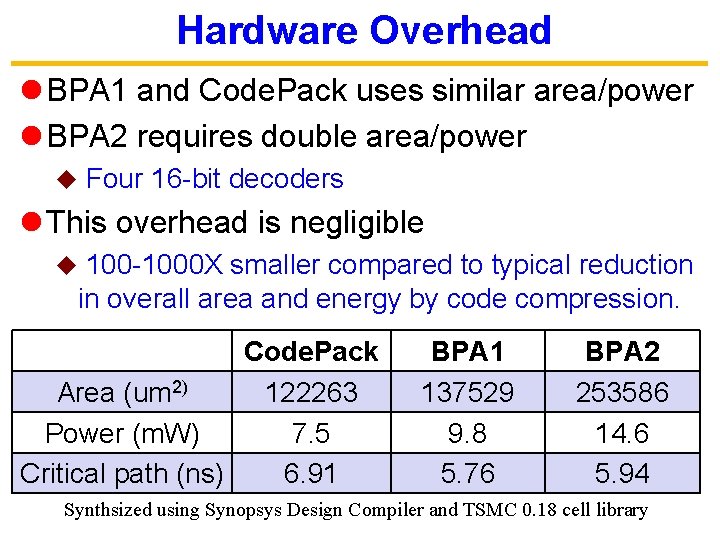

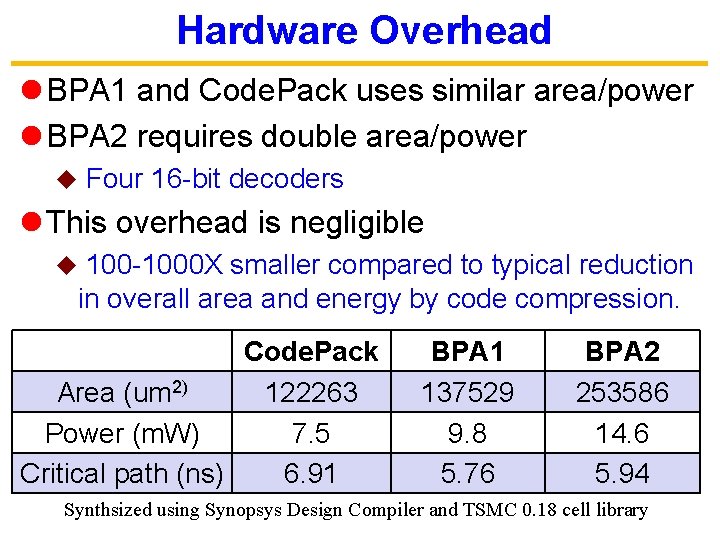

Hardware Overhead
- BPA1 and CodePack use similar area/power
- BPA2 requires double the area/power
  - Four 16-bit decoders
- This overhead is negligible: it is 100-1000X smaller than the typical reduction in overall area and energy achieved by code compression

| Design | Area (µm²) | Power (mW) | Critical path (ns) |
|---|---|---|---|
| CodePack | 122263 | 7.5 | 6.91 |
| BPA1 | 137529 | 9.8 | 5.76 |
| BPA2 | 253586 | 14.6 | 5.94 |

Synthesized using Synopsys Design Compiler and the TSMC 0.18 µm cell library.

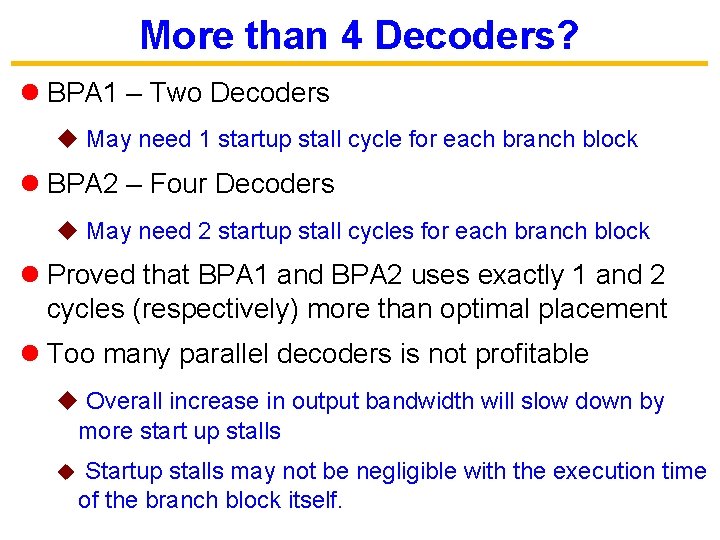

More than 4 Decoders?
- BPA1 (two decoders)
  - May need 1 startup stall cycle for each branch block
- BPA2 (four decoders)
  - May need 2 startup stall cycles for each branch block
- Proved that BPA1 and BPA2 use exactly 1 and 2 cycles (respectively) more than optimal placement
- Too many parallel decoders are not profitable
  - The overall increase in output bandwidth is eroded by additional startup stalls
  - Startup stalls may not be negligible compared to the execution time of the branch block itself
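The trade-off above can be made concrete with a toy cost model. It is purely hypothetical and not from the slides: a branch block needs `work` cycles of decoding on a single decoder, that work splits evenly across the decoders, and the per-block startup stalls (1 for BPA1, 2 for BPA2, as stated above) are added on top. The block sizes are illustrative.

```python
import math

def block_cycles(work, decoders, startup_stalls):
    """Hypothetical model: `work` single-decoder cycles split evenly across
    `decoders`, plus a fixed number of startup stall cycles per branch block."""
    return math.ceil(work / decoders) + startup_stalls

for work in (8, 64):  # a short and a long branch block (illustrative sizes)
    bpa1 = block_cycles(work, decoders=2, startup_stalls=1)
    bpa2 = block_cycles(work, decoders=4, startup_stalls=2)
    print(f"work={work}: BPA1 {bpa1} cycles, BPA2 {bpa2} cycles, "
          f"speedup {bpa1 / bpa2:.2f}x")
```

Under this model, doubling the decoders yields only 5 vs. 4 cycles (1.25x) for the short block but 33 vs. 18 cycles (about 1.83x) for the long one: the fixed stall cost weighs most on short branch blocks, which is why adding ever more decoders stops paying off.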

Conclusion
- Memory is a major constraint
  - Existing compression methods provide either efficient compression or fast decompression
- Our approach combines the benefits
  - Efficient placement for parallel decompression
  - Up to 4 times improvement in decode bandwidth
  - Less than 1% impact on compression efficiency
- Future work
  - Apply it to data compression
    - Data values, FPGA bitstreams, manufacturing test, …

Thank you!