Efficient Convex Relaxation for Transductive Support Vector Machine


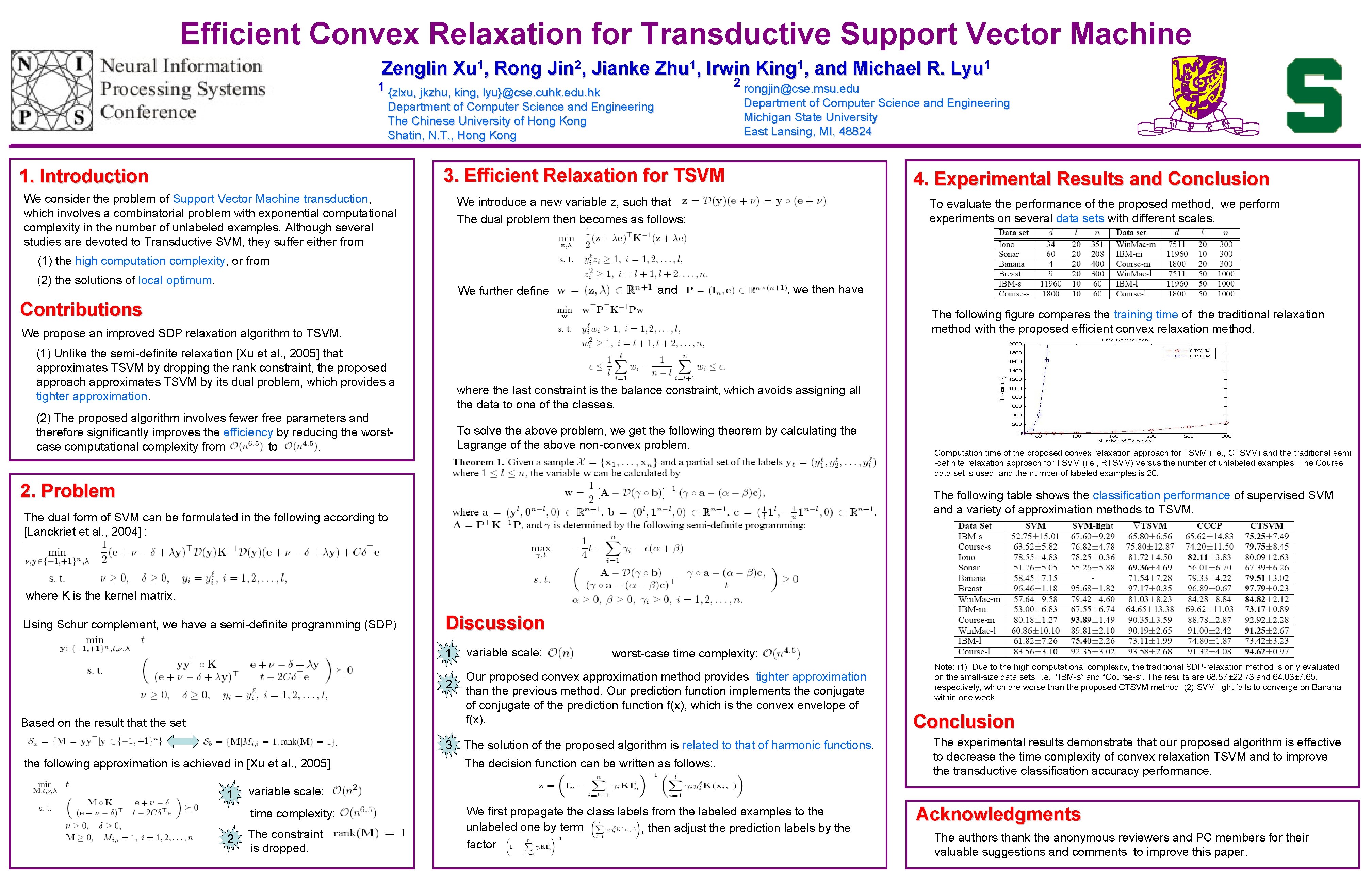

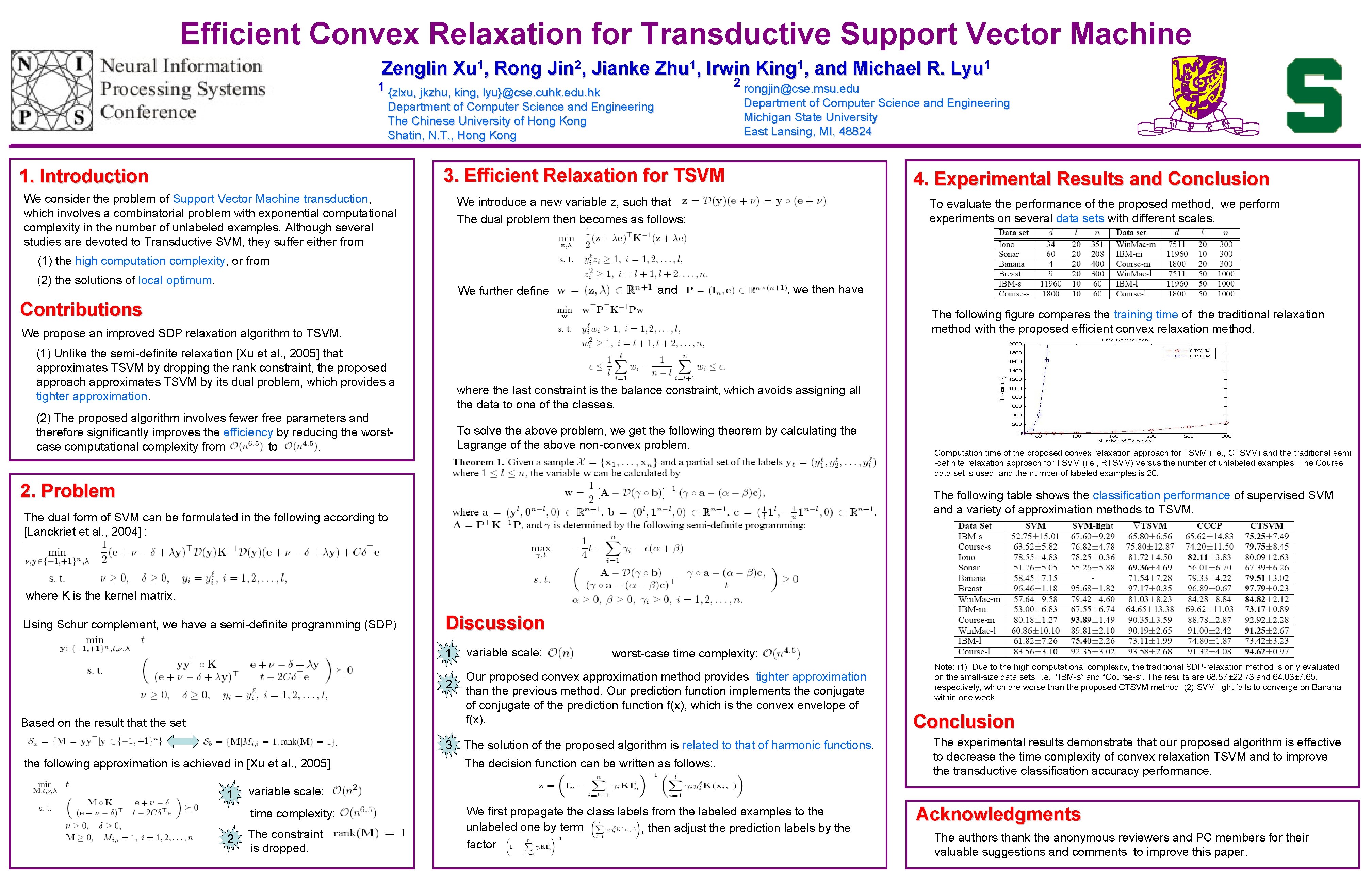

Zenglin Xu¹, Rong Jin², Jianke Zhu¹, Irwin King¹, and Michael R. Lyu¹

¹ Department of Computer Science and Engineering, The Chinese University of Hong Kong, Shatin, N.T., Hong Kong. {zlxu, jkzhu, king, lyu}@cse.cuhk.edu.hk

² Department of Computer Science and Engineering, Michigan State University, East Lansing, MI 48824. rongjin@cse.msu.edu

1. Introduction

We consider the problem of Support Vector Machine (SVM) transduction. It involves a combinatorial problem whose computational complexity is exponential in the number of unlabeled examples. Although several studies are devoted to Transductive SVM (TSVM), they suffer either from (1) high computational complexity or from (2) locally optimal solutions.

Contributions

We propose an improved SDP relaxation algorithm for TSVM.

(1) The semi-definite relaxation of [Xu et al., 2005] approximates TSVM by dropping the rank constraint. The proposed approach instead approximates TSVM by its dual problem, which provides a tighter approximation.

(2) The proposed algorithm involves fewer free parameters and therefore significantly improves efficiency. It reduces the worst-case computational complexity from $O(n^{6.5})$ to $O(n^{4.5})$.

2. Problem

Following [Lanckriet et al., 2004], the dual form of SVM can be formulated as a quadratic program in the dual variables $\alpha$, where $K$ is the kernel matrix. Using the Schur complement, the resulting TSVM problem can be cast as a semi-definite program (SDP) over the label matrix $M = yy^\top$.

Every such label matrix, with $y \in \{-1,+1\}^n$, is positive semi-definite with unit diagonal. Based on this result, [Xu et al., 2005] obtains an approximation by dropping the constraint $\operatorname{rank}(M) = 1$. This relaxation has a variable scale of $O(n^2)$ and a worst-case time complexity of $O(n^{6.5})$.

3. Efficient Relaxation for TSVM

We introduce a new variable $z$ and rewrite the dual problem in terms of it. The last constraint of the rewritten problem is the balance constraint, which prevents all the data from being assigned to one class.

Because this problem is non-convex, we compute its Lagrangian, which yields a theorem giving a convex relaxation. After defining two further auxiliary quantities, we obtain the final formulation. Its variable scale is $O(n)$ and its worst-case time complexity is $O(n^{4.5})$.

Discussion

1. The proposed convex approximation is tighter than that of the previous method.
2. Our prediction function is derived from the conjugate of the prediction function $f(x)$ and corresponds to the convex envelope of $f(x)$.
3. The solution of the proposed algorithm is related to harmonic functions. Its decision function first propagates the class labels from the labeled examples to the unlabeled ones through one term. It then adjusts the predicted labels by a factor.

4. Experimental Results and Conclusion

To evaluate the performance of the proposed method, we perform experiments on several data sets of different scales.

The following figure compares the training time of the traditional relaxation method with that of the proposed efficient convex relaxation method.

Figure: Computation time of the proposed convex relaxation approach for TSVM (CTSVM) and the traditional semi-definite relaxation approach for TSVM (RTSVM) versus the number of unlabeled examples. The Course data set is used, with 20 labeled examples.

The following table shows the classification performance of supervised SVM and a variety of approximation methods for TSVM.

Note: (1) Due to its high computational complexity, the traditional SDP-relaxation method is evaluated only on the small data sets “IBM-s” and “Course-s”. Its results are 68.57 ± 22.73 and 64.03 ± 7.65, respectively, which are worse than those of the proposed CTSVM method. (2) SVM-light fails to converge on Banana within one week.

Conclusion

The experimental results demonstrate that the proposed algorithm effectively decreases the time complexity of convex-relaxation TSVM and improves transductive classification accuracy.

Acknowledgments

The authors thank the anonymous reviewers and PC members for their valuable suggestions and comments to improve this paper.
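The Introduction notes that TSVM is combinatorial: every labeling of the unlabeled examples is a candidate solution. The following minimal Python sketch shows how quickly that search space grows. It assumes a simple balance constraint that fixes the fraction of positive labels among the unlabeled points. The helper names and this exact form of the constraint are hypothetical; they are not the poster's formulation.

```python
from itertools import product


def balanced_labelings(num_unlabeled, target_positive_fraction, tolerance):
    """Yield every +/-1 labeling of the unlabeled points whose fraction of
    positive labels is within `tolerance` of `target_positive_fraction`.

    Hypothetical helper: a simple stand-in for a TSVM balance constraint.
    """
    for labels in product((-1, 1), repeat=num_unlabeled):
        positive_fraction = labels.count(1) / num_unlabeled
        if abs(positive_fraction - target_positive_fraction) <= tolerance:
            yield labels


def count_candidates(num_unlabeled, target_positive_fraction=0.5, tolerance=0.0):
    """Return (all labelings, labelings that satisfy the balance constraint)."""
    total = 2 ** num_unlabeled
    feasible = sum(
        1 for _ in balanced_labelings(num_unlabeled, target_positive_fraction, tolerance)
    )
    return total, feasible


for u in (4, 8, 12):
    total, feasible = count_candidates(u)
    print(f"u={u:2d}: {total:5d} labelings, {feasible:4d} balanced")
```

With an exact 50/50 balance, the number of feasible labelings is $\binom{u}{u/2}$, which is still exponential in $u$. This is why relaxations such as the SDP approaches on this poster are used instead of enumeration.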
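The Problem section starts from the SVM dual of [Lanckriet et al., 2004]. The following Python sketch evaluates that objective and checks feasibility for a given labeling. It assumes that paper's scaling: maximize $2\alpha^\top e - \alpha^\top (K \circ yy^\top)\alpha$ subject to $0 \le \alpha \le C$ and $\alpha^\top y = 0$. The function names are hypothetical, and no QP solver is included.

```python
def linear_kernel(points):
    """Gram matrix K[i][j] = <x_i, x_j> for a list of feature vectors."""
    return [[sum(a * b for a, b in zip(xi, xj)) for xj in points] for xi in points]


def dual_objective(alpha, y, K):
    """2 * alpha^T e - alpha^T (K o y y^T) alpha, with o the elementwise product."""
    n = len(alpha)
    linear_term = 2.0 * sum(alpha)
    quadratic_term = sum(
        alpha[i] * alpha[j] * y[i] * y[j] * K[i][j]
        for i in range(n)
        for j in range(n)
    )
    return linear_term - quadratic_term


def is_dual_feasible(alpha, y, C, tol=1e-9):
    """Check the box constraint 0 <= alpha <= C and the equality alpha^T y = 0."""
    in_box = all(-tol <= a <= C + tol for a in alpha)
    balanced = abs(sum(a * yi for a, yi in zip(alpha, y))) <= tol
    return in_box and balanced


# Two 1-D points at +1 and -1 with opposite labels.
X = [[1.0], [-1.0]]
y = [1, -1]
K = linear_kernel(X)
alpha = [0.5, 0.5]
print(is_dual_feasible(alpha, y, C=1.0), dual_objective(alpha, y, K))  # True 1.0
```

Along $\alpha_1 = \alpha_2 = a$, the objective is $4a - 4a^2$, so $\alpha = (0.5, 0.5)$ is the maximizer. Its value 1 equals $\|w\|^2$ for the hard-margin separator $w = 1$. In TSVM, the labels $y$ of the unlabeled points are optimized as well, which is what makes the problem combinatorial.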
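The Problem section uses the Schur complement to turn the SVM dual into an SDP. The step can be sketched as follows, under three assumptions: the Lanckriet et al. scaling $\max_\alpha 2\alpha^\top e - \alpha^\top G\alpha$, a fixed label vector $y$, and a positive definite $G = K \circ yy^\top$. The multiplier names $\nu, \delta, \lambda$ are our own notation. How the $\lambda y$ term is handled when $y$ itself is unknown differs between formulations and is not shown here.

```latex
% Inner problem (fixed labels y, G = K \circ y y^\top \succ 0):
\omega(K) = \max_{\alpha}\; 2\alpha^\top e - \alpha^\top G \alpha
\quad \text{s.t.}\quad 0 \le \alpha \le C e,\;\; \alpha^\top y = 0 .

% Lagrangian with \nu \ge 0 (for \alpha \ge 0), \delta \ge 0 (for \alpha \le Ce), \lambda \in \mathbb{R}:
\mathcal{L} = 2\alpha^\top u - \alpha^\top G \alpha + 2C\,\delta^\top e,
\qquad u = e + \nu - \delta + \lambda y .

% Maximizing over \alpha gives \alpha^\star = G^{-1} u, so by strong duality
\omega(K) = \min_{\nu \ge 0,\; \delta \ge 0,\; \lambda}\; u^\top G^{-1} u + 2C\,\delta^\top e .

% Schur complement: for G \succ 0,
\begin{pmatrix} G & u \\ u^\top & t - 2C\,\delta^\top e \end{pmatrix} \succeq 0
\iff t - 2C\,\delta^\top e - u^\top G^{-1} u \ge 0 ,

% hence \omega(K) = \min t subject to this linear matrix inequality, \nu \ge 0, \delta \ge 0.
```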
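The Problem section describes the relaxation of [Xu et al., 2005] as dropping the rank constraint, and the efficient relaxation is derived from a Lagrangian. The derivation below shows both routes on a generic Boolean quadratic problem $\min_{y \in \{-1,+1\}^n} y^\top A y$, which stands in for the TSVM objective. It makes the $O(n^2)$ versus $O(n)$ variable count concrete.

```latex
% Label matrices are PSD with unit diagonal and rank one:
M = y y^\top,\; y \in \{-1,+1\}^n
\;\Rightarrow\;
x^\top M x = (y^\top x)^2 \ge 0,\quad \operatorname{diag}(M) = e,\quad \operatorname{rank}(M) = 1 .

% (a) Drop the rank constraint: an SDP over an n x n matrix, O(n^2) variables.
\min_{M}\; \operatorname{tr}(A M)
\quad \text{s.t.}\quad M \succeq 0,\; \operatorname{diag}(M) = e .

% (b) Lagrangian of the constraints y_i^2 = 1 with multipliers \mu \in \mathbb{R}^n:
L(y, \mu) = y^\top A y - \sum_{i} \mu_i (y_i^2 - 1)
          = y^\top \big(A - \operatorname{Diag}(\mu)\big) y + e^\top \mu ,
\qquad
\inf_{y} L(y, \mu) =
\begin{cases} e^\top \mu & \text{if } A - \operatorname{Diag}(\mu) \succeq 0,\\ -\infty & \text{otherwise.} \end{cases}

% Lagrangian dual: an SDP with only n variables.
\max_{\mu \in \mathbb{R}^n}\; e^\top \mu
\quad \text{s.t.}\quad A - \operatorname{Diag}(\mu) \succeq 0 .
```

For this toy problem, (a) and (b) form a primal-dual SDP pair, and both are strictly feasible, so they give the same bound. The tightness advantage claimed on the poster comes from applying the dual construction to the TSVM objective itself, which this sketch does not reproduce.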
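The Discussion relates the prediction function to the convex envelope of $f(x)$. This connection rests on a standard fact from convex analysis: the biconjugate of a function is its closed convex envelope. The one-dimensional example below was chosen for illustration only and is not taken from the poster.

```latex
% Conjugate and biconjugate of f : \mathbb{R}^n \to \mathbb{R} \cup \{+\infty\}:
f^{*}(u) = \sup_{x}\; \big(u^\top x - f(x)\big),
\qquad
f^{**}(x) = \sup_{u}\; \big(x^\top u - f^{*}(u)\big).

% If f has an affine minorant, f^{**} is the largest closed convex function below f
% (its closed convex envelope), so f^{**} \le f everywhere.

% Example: f(x) = 0 for x \in \{-1, +1\}, f(x) = +\infty otherwise (a binary label).
f^{*}(u) = \max_{x \in \{-1,+1\}} u x = |u|,
\qquad
f^{**}(x) = \sup_{u}\; \big(x u - |u|\big) =
\begin{cases} 0 & |x| \le 1,\\ +\infty & |x| > 1. \end{cases}
```

The binary constraint $x \in \{-1,+1\}$ is thus replaced by its convex hull $[-1, 1]$. This is the same kind of loosening that a convex relaxation of TSVM applies to the unknown labels.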
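The Discussion contrasts the variable scale and time complexity of the two relaxations. The back-of-the-envelope Python sketch below shows how the gap grows with $n$. It rests on three assumptions:

- the rank-relaxed SDP has one free variable per off-diagonal entry of a symmetric $n \times n$ matrix with unit diagonal;
- the proposed relaxation has $n$ free variables;
- the worst-case bounds are $O(n^{6.5})$ and $O(n^{4.5})$.

Constants are ignored, so only the ratios are meaningful.

```python
def free_variables(n):
    """Free-variable counts under the stated (hypothetical) assumptions."""
    matrix_relaxation = n * (n - 1) // 2  # off-diagonal entries of M; diag(M) fixed to 1
    vector_relaxation = n                 # one variable per example
    return matrix_relaxation, vector_relaxation


def worst_case_ratio(n):
    """Ratio of the O(n^6.5) and O(n^4.5) bounds, ignoring constants (equals n^2)."""
    return n ** 6.5 / n ** 4.5


for n in (100, 500, 1000):
    matrix_vars, vector_vars = free_variables(n)
    print(f"n={n}: {matrix_vars} vs {vector_vars} free variables, "
          f"bound ratio ~ {worst_case_ratio(n):.0f}")
```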
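The Discussion relates the solution to harmonic functions. For reference, here is a minimal Python sketch of the standard harmonic-function solution on a graph (Zhu, Ghahramani and Lafferty, 2003). It assumes a symmetric nonnegative weight matrix and labels in {-1, +1}. Each unlabeled node's value is the weighted average of its neighbours, while labeled nodes stay clamped. The sketch uses Gauss-Seidel sweeps rather than a direct linear solve. It does not include the additional label-adjustment factor that the poster mentions.

```python
def harmonic_solution(W, labels, max_sweeps=10_000, tol=1e-12):
    """Harmonic function on a graph via Gauss-Seidel sweeps.

    W: symmetric nonnegative weight matrix (list of lists).
    labels: dict mapping a labeled node index to -1 or +1.
    Returns f with f[i] == labels[i] on labeled nodes and, on unlabeled
    nodes, f[i] equal to the W-weighted average of the other nodes' values.
    """
    n = len(W)
    f = [float(labels.get(i, 0.0)) for i in range(n)]
    unlabeled = [i for i in range(n) if i not in labels]
    for _ in range(max_sweeps):
        largest_change = 0.0
        for i in unlabeled:
            degree = sum(W[i][j] for j in range(n) if j != i)
            if degree == 0:
                continue  # isolated node: no label information reaches it
            new_value = sum(W[i][j] * f[j] for j in range(n) if j != i) / degree
            largest_change = max(largest_change, abs(new_value - f[i]))
            f[i] = new_value
        if largest_change < tol:
            break
    return f


# Path graph 0 - 1 - 2 - 3 with node 0 labeled +1 and node 3 labeled -1.
W = [
    [0, 1, 0, 0],
    [1, 0, 1, 0],
    [0, 1, 0, 1],
    [0, 0, 1, 0],
]
f = harmonic_solution(W, {0: 1, 3: -1})
predicted = [1 if value >= 0 else -1 for value in f]
print([round(value, 4) for value in f], predicted)  # [1.0, 0.3333, -0.3333, -1.0] [1, 1, -1, -1]
```

The system is linear, so the same values can be obtained exactly with a direct solve of $(D_{uu} - W_{uu}) f_u = W_{ul} f_l$. The iterative form is used here only to keep the sketch dependency-free.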