Efficient and Portable Virtual NVMe Storage on ARM SoCs

Huaicheng Li, Mingzhe Hao, Stanko Novakovic, Vaibhav Gogte, Sriram Govindan, Dan Ports, Irene Zhang, Ricardo Bianchini, Haryadi S. Gunawi, Anirudh Badam

Today’s Cloud Storage Model
VMs access storage through standard block devices (/dev/sda, /dev/vda, /dev/nvme0n1), while the cloud storage stack behind them provides reliability, security, versioning, performance, scalability, and disaggregation.

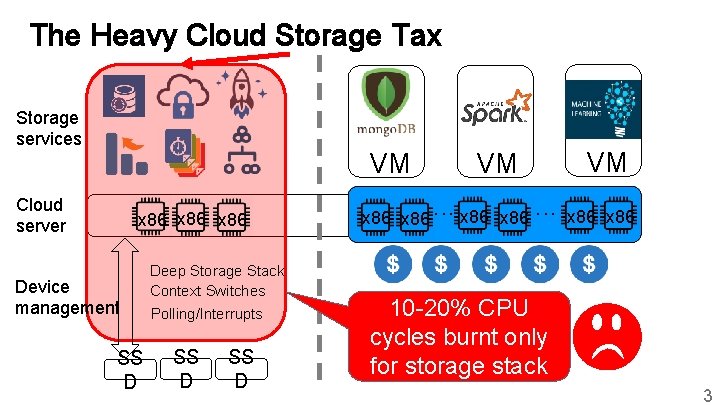

The Heavy Cloud Storage Tax
On every x86 cloud server, storage services and SSD device management run on the same x86 CPUs as the VMs. This deep storage stack, with its context switches and polling/interrupt handling, burns 10-20% of CPU cycles on storage alone.

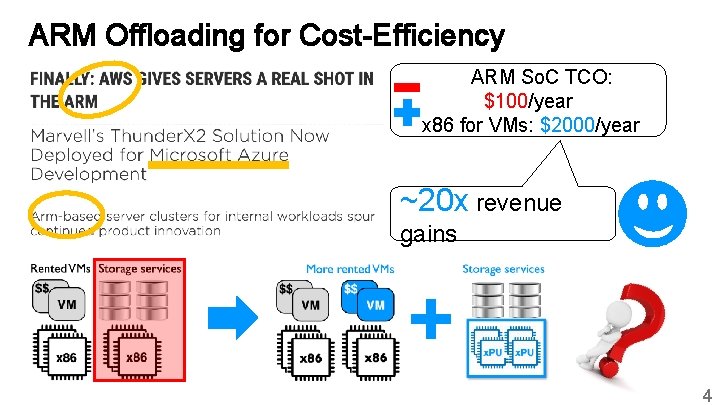
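
To make the 10-20% figure concrete, the sketch below converts it into x86 cores' worth of CPU time lost per server. The 64-core server is a hypothetical example, not a number from the talk; only the 10-20% range comes from the slide.

```python
def storage_tax_cores(total_cores: int, tax_fraction: float) -> float:
    """Cores' worth of CPU time spent on the storage stack instead of on VMs."""
    if not 0.0 <= tax_fraction <= 1.0:
        raise ValueError("tax_fraction must be between 0 and 1")
    return total_cores * tax_fraction


# Hypothetical 64-core x86 server; the 10%-20% range is taken from the slide.
TOTAL_CORES = 64
low = storage_tax_cores(TOTAL_CORES, 0.10)
high = storage_tax_cores(TOTAL_CORES, 0.20)
print(f"{low:.1f} to {high:.1f} cores burnt on storage")
```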

ARM Offloading for Cost-Efficiency
ARM SoC TCO: $100/year. x86 for VMs: $2000/year. Result: ~20x revenue gains.

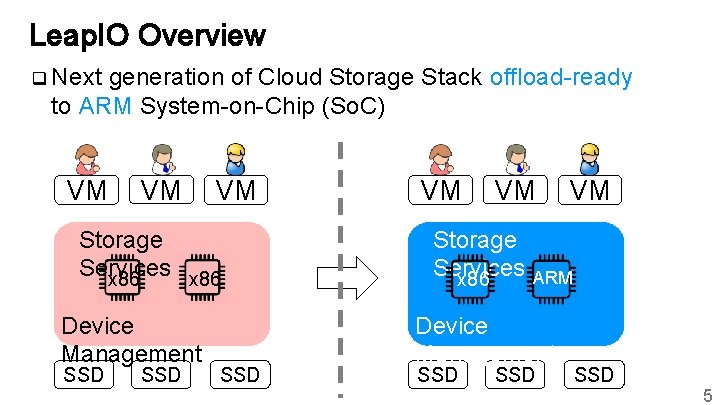
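
The ~20x figure matches the ratio of the two yearly costs on the slide. A minimal sketch of that arithmetic, assuming the gain is simply the yearly x86 value divided by the yearly ARM SoC TCO (the talk does not spell out its exact formula, and `revenue_gain` is a hypothetical helper):

```python
def revenue_gain(x86_value_per_year: float, soc_tco_per_year: float) -> float:
    """Assumed model: x86 capacity freed for VMs per dollar of ARM SoC cost."""
    if soc_tco_per_year <= 0:
        raise ValueError("SoC TCO must be positive")
    return x86_value_per_year / soc_tco_per_year


# Dollar figures from the slide: $2000/year (x86 for VMs) vs. $100/year (ARM SoC TCO).
gain = revenue_gain(x86_value_per_year=2000.0, soc_tco_per_year=100.0)
print(f"~{gain:.0f}x")
```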

LeapIO Overview
- Next-generation cloud storage stack that is offload-ready to an ARM System-on-Chip (SoC): storage services and device management, which today run on x86 alongside the VMs, can move onto the ARM SoC, leaving x86 cycles for VMs.

Motivation & Goals
LeapIO Architecture
LeapIO Designs
- Portability
- Efficiency
Evaluation
Conclusion

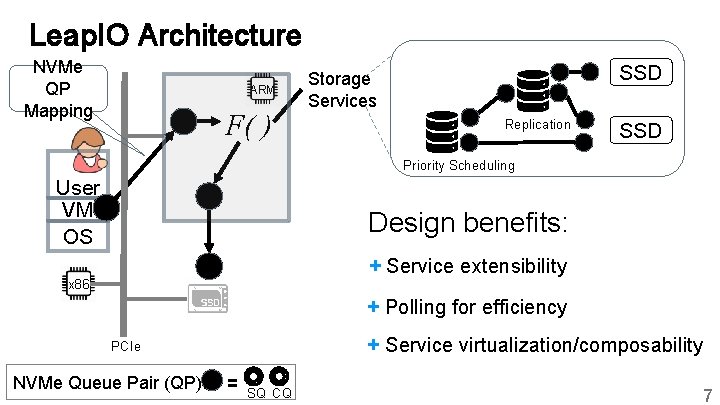

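LeapIO Architecture
The user VM's OS issues I/O through NVMe queue pairs (QPs) on x86. LeapIO maps these QPs over PCIe to storage functions F() on ARM, such as replication and priority scheduling, which sit in front of the SSDs. An NVMe queue pair (QP) consists of a submission queue (SQ) and a completion queue (CQ).
Design benefits:
+ Service extensibility
+ Polling for efficiency
+ Service virtualization/composability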

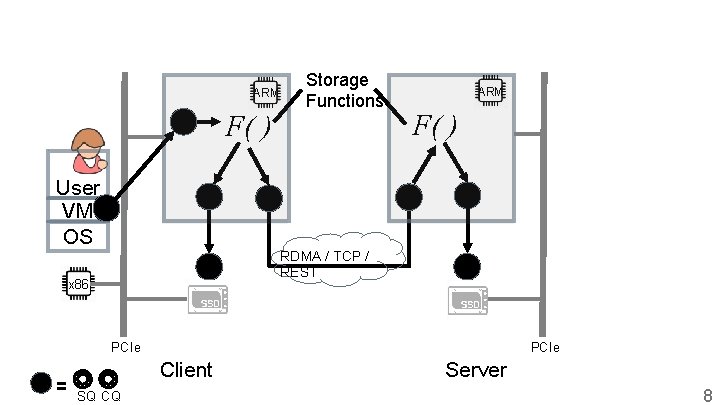
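
An NVMe queue pair is two ring buffers: the host writes commands into the submission queue, and the device side (here, the ARM storage function) consumes them and posts results to the completion queue. The sketch below models this with fixed-size rings and a polling step. The class names, the `handler` callback, and the dictionary command format are hypothetical simplifications, not LeapIO's code; real NVMe also uses doorbell registers and phase bits, which are omitted.

```python
from typing import Callable


class Ring:
    """Fixed-size circular queue with head/tail indices, like an NVMe SQ or CQ."""

    def __init__(self, depth: int) -> None:
        if depth < 2:
            raise ValueError("depth must be at least 2")
        self.slots: list = [None] * depth
        self.head = 0  # next slot the consumer reads
        self.tail = 0  # next slot the producer writes

    def empty(self) -> bool:
        return self.head == self.tail

    def full(self) -> bool:
        # One slot stays unused so that head == tail unambiguously means "empty".
        return (self.tail + 1) % len(self.slots) == self.head

    def push(self, entry) -> None:
        if self.full():
            raise OverflowError("queue full")
        self.slots[self.tail] = entry
        self.tail = (self.tail + 1) % len(self.slots)

    def pop(self):
        if self.empty():
            raise IndexError("queue empty")
        entry = self.slots[self.head]
        self.head = (self.head + 1) % len(self.slots)
        return entry


class QueuePair:
    """Hypothetical NVMe queue pair: a submission queue plus a completion queue."""

    def __init__(self, depth: int = 8) -> None:
        self.sq = Ring(depth)
        self.cq = Ring(depth)


def poll_once(qp: QueuePair, handler: Callable[[dict], int]) -> int:
    """Drain pending submissions, run the storage function, post completions.

    Returns the number of commands processed. A polling runtime would call
    this repeatedly in a loop instead of waiting for interrupts.
    """
    processed = 0
    while not qp.sq.empty() and not qp.cq.full():
        cmd = qp.sq.pop()
        status = handler(cmd)
        qp.cq.push({"cid": cmd["cid"], "status": status})
        processed += 1
    return processed


# Usage: the guest submits two commands; the ARM side polls and completes them.
qp = QueuePair(depth=4)
qp.sq.push({"cid": 1, "opcode": "read", "lba": 0})
qp.sq.push({"cid": 2, "opcode": "write", "lba": 8})
n = poll_once(qp, handler=lambda cmd: 0)  # status 0 = success
```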

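LeapIO follows a client-server model: the user VM's OS on x86 is the PCIe client, which posts NVMe commands to the SQ and reaps completions from the CQ, while the storage functions F() on ARM act as the server and can reach storage over RDMA, TCP, or REST.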
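
Because the server only consumes SQ entries and produces CQ entries, a storage function F() can forward requests to whichever backend transport is available. A minimal sketch, assuming a hypothetical `Transport` protocol: the in-memory class stands in for an RDMA, TCP, or REST client (none of which is implemented here), and `ReplicatedStore` is an illustrative F(), not LeapIO's replication service.

```python
from typing import Protocol


class Transport(Protocol):
    """Hypothetical backend interface; RDMA, TCP, or REST clients would implement it."""

    def write(self, lba: int, data: bytes) -> None: ...
    def read(self, lba: int) -> bytes: ...


class InMemoryTransport:
    """Stand-in backend so the sketch runs without network hardware."""

    def __init__(self) -> None:
        self.blocks: dict[int, bytes] = {}

    def write(self, lba: int, data: bytes) -> None:
        self.blocks[lba] = data

    def read(self, lba: int) -> bytes:
        return self.blocks[lba]


class ReplicatedStore:
    """Example storage function F(): write to every replica, read from the first."""

    def __init__(self, replicas: list[Transport]) -> None:
        if not replicas:
            raise ValueError("need at least one replica")
        self.replicas = replicas

    def handle(self, cmd: dict) -> dict:
        if cmd["opcode"] == "write":
            for replica in self.replicas:
                replica.write(cmd["lba"], cmd["data"])
            return {"cid": cmd["cid"], "status": 0}
        if cmd["opcode"] == "read":
            data = self.replicas[0].read(cmd["lba"])
            return {"cid": cmd["cid"], "status": 0, "data": data}
        return {"cid": cmd["cid"], "status": 1}  # nonzero status = error (simplified)


# Usage: the same F() works no matter which transport backs each replica.
a, b = InMemoryTransport(), InMemoryTransport()
store = ReplicatedStore([a, b])
store.handle({"cid": 1, "opcode": "write", "lba": 7, "data": b"hello"})
reply = store.handle({"cid": 2, "opcode": "read", "lba": 7})
```

Swapping an in-memory replica for a networked one would not change `handle`, which is the property the client-server split is meant to provide.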

LeapIO Challenges
- How to achieve portability?
- How to achieve bare-metal performance?

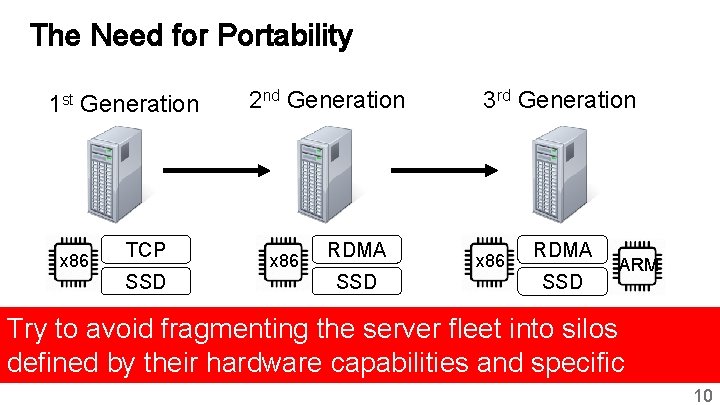

The Need for Portability
The fleet spans several hardware generations:
- 1st generation: x86 + TCP + SSD
- 2nd generation: x86 + RDMA + SSD
- 3rd generation: x86 + RDMA + SSD + ARM SoC
Goal: avoid fragmenting the server fleet into silos defined by their hardware capabilities and specific …

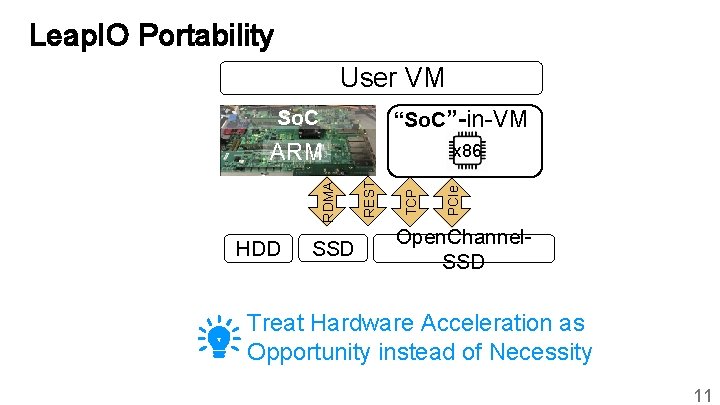

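LeapIO Portability
User VMs are served the same way whether the LeapIO runtime runs on an ARM SoC or as a “SoC”-in-VM on x86, over PCIe, TCP, RDMA, or REST, with HDDs, SSDs, or OpenChannel SSDs behind it. Treat hardware acceleration as an opportunity instead of a necessity.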

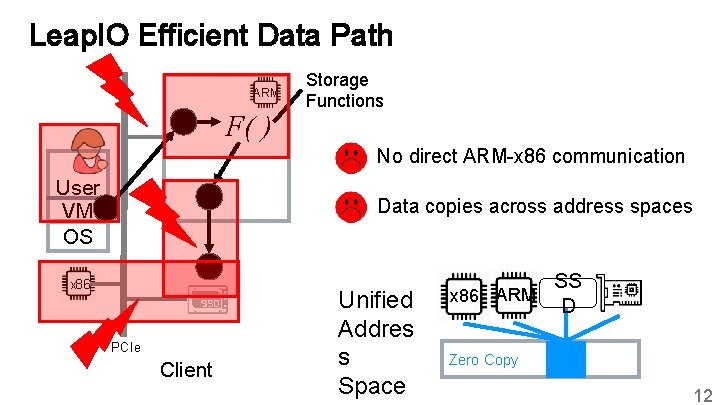
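
"Opportunity instead of necessity" can be read as a capability-driven fallback rule: use acceleration when a server has it, and still produce a working configuration when it does not. The sketch below applies that rule to the server generations listed above. The `ServerCaps` fields and placement labels are hypothetical, and REST and OpenChannel SSD backends are left out for brevity.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ServerCaps:
    """Hypothetical description of what a server generation provides."""
    has_arm_soc: bool
    has_rdma: bool


def plan_deployment(caps: ServerCaps) -> tuple[str, str]:
    """Choose where the storage runtime runs and how it reaches remote storage.

    Acceleration is used when present but never required, so every
    generation gets a working configuration.
    """
    placement = "arm-soc" if caps.has_arm_soc else "soc-in-vm-on-x86"
    transport = "rdma" if caps.has_rdma else "tcp"
    return placement, transport


fleet = {
    "gen1": ServerCaps(has_arm_soc=False, has_rdma=False),  # x86 + TCP
    "gen2": ServerCaps(has_arm_soc=False, has_rdma=True),   # x86 + RDMA
    "gen3": ServerCaps(has_arm_soc=True, has_rdma=True),    # x86 + RDMA + ARM SoC
}
plans = {name: plan_deployment(caps) for name, caps in fleet.items()}
```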

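LeapIO Efficient Data Path
Problem: there is no direct ARM-x86 communication, so data is copied across address spaces between the user VM on x86 and the storage functions F() on ARM. Solution: a unified address space spanning x86 and ARM, which enables zero-copy data movement between the user VM, the ARM storage functions, and the SSD.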

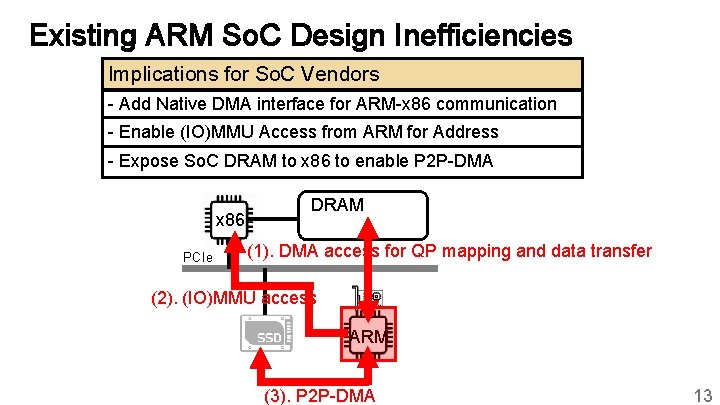
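
Python's `memoryview` offers a compact way to contrast the two data paths: slicing a `bytearray` copies bytes, while slicing a `memoryview` exposes the same memory. In this sketch a single shared buffer (`UNIFIED`, hypothetical) stands in for the unified x86/ARM address space. Real hardware would rely on DMA and address translation rather than a shared Python object.

```python
# Hypothetical model: one bytearray stands in for a unified address space that
# both the user VM (x86) and the storage functions (ARM) can address.
UNIFIED = bytearray(4 * 4096)


def zero_copy_view(offset: int, length: int) -> memoryview:
    """Expose a region without copying: slicing a memoryview shares the buffer."""
    return memoryview(UNIFIED)[offset:offset + length]


def copied_buffer(offset: int, length: int) -> bytearray:
    """Copy a region into separate memory: slicing a bytearray allocates a copy."""
    return UNIFIED[offset:offset + length]


# The guest fills an I/O buffer in place.
guest_buf = zero_copy_view(4096, 5)
guest_buf[:] = b"hello"

arm_copy = copied_buffer(4096, 5)   # separate-address-space path: extra copy
arm_view = zero_copy_view(4096, 5)  # unified-address-space path: no copy

UNIFIED[4096] = ord("j")            # a later in-place update by the guest
# arm_view now reads b"jello"; arm_copy still holds the stale b"hello".
```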

Existing ARM SoC Design Inefficiencies
Needed between x86 and the ARM SoC over PCIe: (1) DMA access for QP mapping and data transfer, (2) (IO)MMU access from ARM, (3) P2P-DMA to SoC DRAM.
Implications for SoC vendors:
- Add a native DMA interface for ARM-x86 communication
- Enable (IO)MMU access from ARM for address translation
- Expose SoC DRAM to x86 to enable P2P-DMA

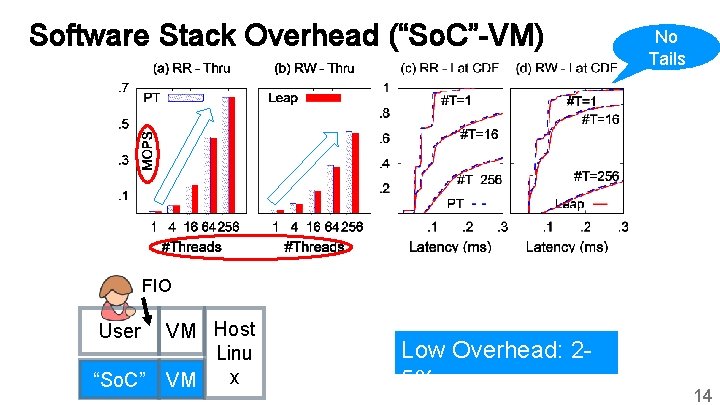
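
(IO)MMU access matters because, in this model, the ARM side receives guest-physical buffer addresses in NVMe commands and must turn them into host addresses before it can DMA. The sketch below models that translation as a single-level page table with 4 KiB pages and splits a buffer into per-page segments, as a scatter-gather list. The class and method names are hypothetical; a real (IO)MMU walks multi-level tables in hardware.

```python
PAGE_SHIFT = 12
PAGE_SIZE = 1 << PAGE_SHIFT  # 4 KiB pages


class TranslationFault(Exception):
    """Raised when a guest address has no mapping (an IOMMU would report a fault)."""


class PageTable:
    """Hypothetical single-level guest-physical -> host-physical page mapping."""

    def __init__(self) -> None:
        self.entries: dict[int, int] = {}  # guest frame number -> host frame number

    def map(self, guest_frame: int, host_frame: int) -> None:
        self.entries[guest_frame] = host_frame

    def translate(self, guest_addr: int) -> int:
        frame, offset = guest_addr >> PAGE_SHIFT, guest_addr & (PAGE_SIZE - 1)
        try:
            return (self.entries[frame] << PAGE_SHIFT) | offset
        except KeyError:
            raise TranslationFault(hex(guest_addr)) from None

    def translate_range(self, guest_addr: int, length: int) -> list[tuple[int, int]]:
        """Split a guest buffer into host (address, length) segments at page
        boundaries, i.e. a scatter-gather list a DMA engine could consume."""
        segments = []
        while length > 0:
            in_page = min(length, PAGE_SIZE - (guest_addr & (PAGE_SIZE - 1)))
            segments.append((self.translate(guest_addr), in_page))
            guest_addr += in_page
            length -= in_page
        return segments


pt = PageTable()
pt.map(guest_frame=0x10, host_frame=0x8A)
pt.map(guest_frame=0x11, host_frame=0x03)  # adjacent guest pages need not be adjacent on the host
sg = pt.translate_range(0x10F00, 0x200)    # a 512-byte buffer crossing a page boundary
```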

Software Stack Overhead (“SoC”-in-VM)
FIO runs in a user VM, with the LeapIO “SoC” VM on the Linux host. Low overhead: 2-5%, with no added tail latency.

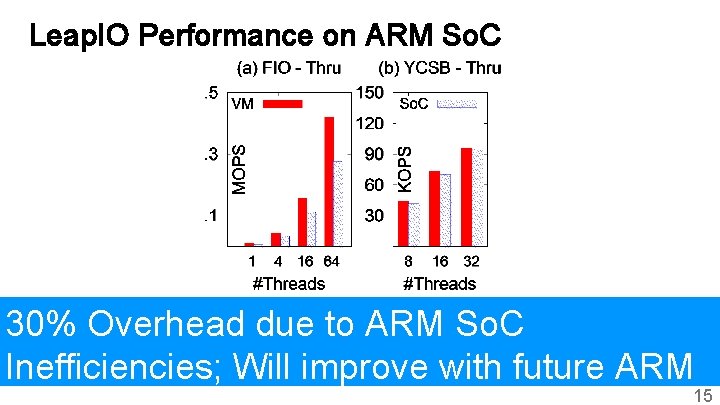
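
Overhead figures on these slides are relative differences against a baseline. The small helper below makes the direction explicit for throughput (higher is better) versus latency (lower is better). The function name is hypothetical, the baseline configuration is an assumption, and the inputs in the usage lines are illustrative placeholders, not measurements from the talk.

```python
def relative_overhead(baseline: float, measured: float, higher_is_better: bool = True) -> float:
    """Fractional slowdown of `measured` relative to `baseline`.

    Throughput (IOPS, MB/s): higher is better, so overhead = (baseline - measured) / baseline.
    Latency: lower is better, so overhead = (measured - baseline) / baseline.
    """
    if baseline <= 0 or measured <= 0:
        raise ValueError("values must be positive")
    if higher_is_better:
        return (baseline - measured) / baseline
    return (measured - baseline) / baseline


# Illustrative placeholder numbers only.
iops_overhead = relative_overhead(100_000, 96_000)
latency_overhead = relative_overhead(80.0, 82.0, higher_is_better=False)
```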

LeapIO Performance on ARM SoC
30% overhead, due to current ARM SoC inefficiencies; expected to improve with future ARM SoCs.

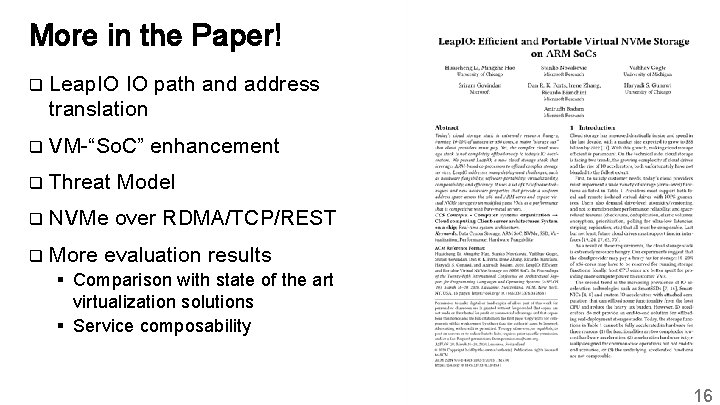

More in the Paper!
- LeapIO I/O path and address translation
- VM-“SoC” enhancement
- Threat model
- NVMe over RDMA/TCP/REST
- More evaluation results
  - Comparison with state-of-the-art virtualization solutions
  - Service composability

LeapIO Summary
- End-to-end offload-ready cloud storage stack
  - Portability
  - Extensibility
  - Efficiency
- 20x revenue gains
- 2-5% software overhead (30% on SoC)
- Implications for SoC vendors to bridge the performance gap between ARM and x86
Thank you! Questions?