EEE 4 ALL PROCESS EVALUATION AND CONCEPTS

- Slides: 17

Process evaluation of complex interventions: Medical Research Council guidance

Key recommendations for process evaluation

1. Planning
- Carefully define the parameters of relationships with intervention developers or implementers.
- Ensure that the research team has the correct expertise.
- Decide the degree of separation or integration between the process and outcome evaluation teams.

Moore, G, Audrey, S, Barker, M, Bond, L, Bonell, C, Hardeman, W, Moore, L, O'Cathain, A, Tinati, T, Wight, D & Baird, J 2015, 'Process evaluation of complex interventions: Medical Research Council guidance', BMJ (Clinical Research Ed.), 350, h1258.

2. Design and conduct
- Clearly describe the intervention and clarify causal assumptions.
- Identify key uncertainties and systematically select the most important questions to address.
- Select a combination of methods appropriate to the research questions.

3. Analysis
- Provide descriptive quantitative information on fidelity, dose, and reach.
- Consider more detailed modelling of variations between participants or sites in terms of factors such as fidelity or reach.
- Collect and analyse qualitative data iteratively, so that themes that emerge in early interviews can be explored in later ones.
- Ensure that quantitative and qualitative analyses build upon one another.
- Transparently report whether process data are being used to generate hypotheses.
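The guidance asks for descriptive quantitative information on fidelity, dose and reach but does not prescribe formulas for them. The sketch below is a minimal illustration, assuming each measure is operationalised as a simple proportion per site: fidelity as core components delivered as intended out of those planned, dose as sessions delivered out of those planned, and reach as participants out of the eligible population. The names `SiteRecord`, `summarise` and `overall`, the field names, and the example figures are all hypothetical; a real evaluation would use its own checklists and definitions.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class SiteRecord:
    """Hypothetical process data for one delivery site (illustrative fields only)."""
    site: str
    components_planned: int      # core components specified in the intervention manual
    components_as_intended: int  # components delivered as specified (e.g. from a fidelity checklist)
    sessions_planned: int
    sessions_delivered: int
    eligible: int                # size of the intended target population
    participants: int            # people who actually took part


def proportion(numerator: int, denominator: int) -> float:
    """Return numerator / denominator, or 0.0 when the denominator is zero."""
    return numerator / denominator if denominator else 0.0


def summarise(records: list[SiteRecord]) -> dict[str, dict[str, float]]:
    """Per-site fidelity, dose and reach.

    Assumption: each measure is a simple proportion (a hypothetical
    operationalisation, not one prescribed by the MRC guidance).
    """
    return {
        r.site: {
            "fidelity": proportion(r.components_as_intended, r.components_planned),
            "dose": proportion(r.sessions_delivered, r.sessions_planned),
            "reach": proportion(r.participants, r.eligible),
        }
        for r in records
    }


def overall(summary: dict[str, dict[str, float]]) -> dict[str, float]:
    """Unweighted mean of each measure across sites."""
    measures = ("fidelity", "dose", "reach")
    return {m: mean(site[m] for site in summary.values()) for m in measures}


if __name__ == "__main__":
    # Made-up example values, for illustration only.
    records = [
        SiteRecord("Site A", 10, 9, 12, 12, 200, 150),
        SiteRecord("Site B", 10, 6, 12, 8, 180, 90),
    ]
    per_site = summarise(records)
    for site, values in per_site.items():
        print(site, {k: round(v, 2) for k, v in values.items()})
    print("Overall", {k: round(v, 2) for k, v in overall(per_site).items()})
```

Keeping the per-site table separate from the cross-site mean makes the variation between sites visible, which is the starting point for the more detailed modelling of variation that the guidance suggests.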

4. Reporting
- Identify existing reporting guidance specific to the methods adopted.
- Report the logic model or intervention theory, and clarify how it was used to guide the selection of research questions and methods.
- Disseminate findings to policy and practice stakeholders.
- If multiple journal articles are published from the same process evaluation, ensure that each article makes clear its context within the evaluation as a whole.

Process-evaluation planning

Important issues: understanding health promotion, defining the process, and considering how program characteristics and context affect performance.

Saunders, R. P., Evans, M. H., & Joshi, P. (2005). Developing a process-evaluation plan for assessing health promotion program implementation: a how-to guide. Health Promotion Practice, 6(2), 134-147.

Steps in developing a process-evaluation plan:
1. Fully describe the planned program, including the expected impacts and outcomes of the intervention.
2. Describe the program in detail: its strategies, its activities, and what constitutes acceptable delivery of the program.
3. List the possible process-evaluation questions.
4. Consider the methods: the measures needed to collect data, data management, and analyses.
5. Consider the program resources, along with the program characteristics, to decide which questions from step 3 and methods from step 4 can be addressed.
6. Finalise the plan: a description of the questions for each program component.

Implementation Matters: A Review of Research on the Influence of Implementation on Program Outcomes and the Factors Affecting Implementation

Purpose: a review of the influence of implementation on outcomes and of the factors that affect implementation. Implementation refers to what a program consists of when it is delivered in a particular setting. There are eight aspects of implementation:
1. Fidelity: the extent to which the innovation corresponds to the originally intended program (adherence, compliance, integrity, faithful replication).
2. Dosage: how much of the original program has been delivered (quantity, intervention strength).
3. Quality: how well the different program components have been conducted (e.g., are the main program elements delivered clearly and correctly?).
4. Participant responsiveness: the degree to which the program stimulates the interest or holds the attention of participants (e.g., are students attentive during program lessons?).
5. Program differentiation: the extent to which a program’s theory and practices can be distinguished from those of other programs (program uniqueness).
6. Monitoring of control/comparison conditions: describing the nature and amount of services received by members of these groups (treatment contamination, usual care, alternative services).
7. Program reach (participation rates, program scope): the rate of involvement and representativeness of program participants.
8. Adaptation: changes made to the original program during implementation (program modification, reinvention).

- Assessing the aspects of implementation is essential in program evaluation.
- Assessing fidelity and dosage gives better coverage when measuring the outcomes of implementation.
- It is necessary to find the appropriate balance between fidelity and adaptation when implementing an intervention. If any adaptation occurs, the changes must be monitored and recorded.

The Ecological Framework and the Interactive Systems Framework were used to identify the contextual factors that affect the success of the implementation process. These factors are:
1. Community-level factors
2. Provider (Relim) characteristics
3. Characteristics of the intervention
4. Factors relevant to the intervention delivery system (organisational capacity)
5. Factors relevant to the intervention support system (e.g., training)

A further 23 sub-categories were identified. Contextual factors must be considered when interventions are implemented.

Empowering community members, through community participation and collaboration, can be an effective way to solve local problems and to enhance implementation. This fits well with the Relim interventions. Community ownership and participation increase the likelihood that the program is effective (as opposed to taking power away from the community). This research review covers only prevention and promotion programs aimed at children and adolescents.

Research on fidelity of implementation: implications for drug abuse prevention in school settings

Fidelity has been measured in five ways: (1) adherence, (2) dose, (3) quality of program delivery, (4) participant responsiveness, and (5) program differentiation. Key elements of high fidelity include teacher/personnel training, program characteristics, teacher characteristics, and organizational characteristics.

High-quality implementation simply did not exist in practice. The Rand report revealed that programs that were mutually adapted were more effective than co-opted programs; only with mutual adaptation did organizational behavior change. Research by Rogers and his colleagues (Rogers, 1977; Rogers et al., 1995) revealed that consumers, or 'local adopters', reinvented or changed innovations to meet their own needs and to derive a sense of ownership (Blakely et al., 1987). This reconceptualization of the theoretical model resulted in a shift in public policy approaches to dissemination.

According to the Rand study (Berman and McLaughlin, 1976), key elements of successful implementation include: (1) adaptive planning that is responsive to the needs of participants, (2) training tailored to local sites, (3) a critical mass of participants (including other implementers) to provide support and prevent isolation, and (4) local material development, ranging from creating original materials to repackaging materials to make them more appealing to the local audience.

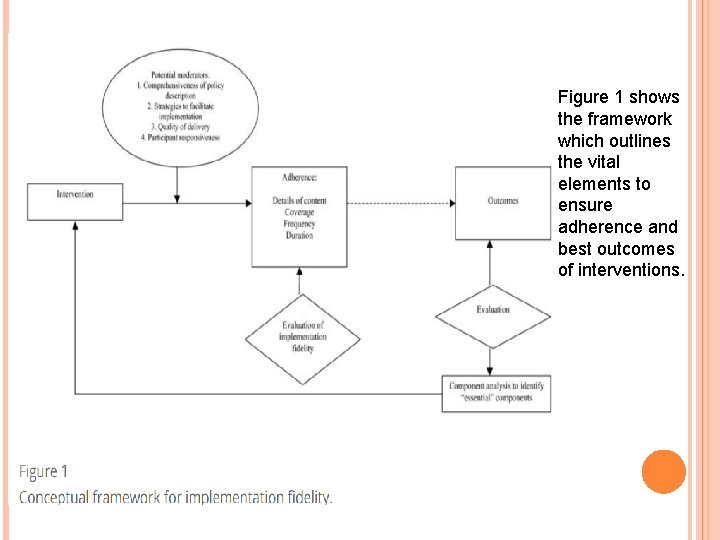

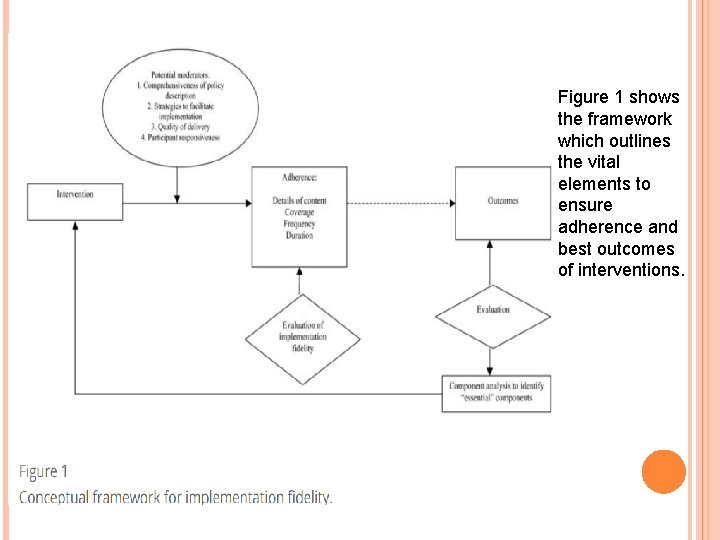

This paper offers evidence-based practitioners a framework that guides them through the processes and factors involved in implementing interventions described in research. It encompasses both the intervention and how it is delivered. Achieving high implementation fidelity is one of the best ways of replicating the success that interventions achieved in the original research. Further research is needed to test the framework itself and to clarify the impact of its components.

Figure 1 shows the framework, which outlines the elements vital to ensuring adherence and the best outcomes of interventions.