EECS 470 Lecture 4: Pipelining & Hazards II

- Slides: 51

EECS 470 Lecture 4: Pipelining & Hazards II

Winter 2021, Jon Beaumont

http://www.eecs.umich.edu/courses/eecs470

Slides developed in part by Profs. Austin, Brehob, Falsafi, Hill, Hoe, Lipasti, Martin, Roth, Shen, Smith, Sohi, Tyson, Vijaykumar, and Wenisch of Carnegie Mellon University, Purdue University, University of Michigan, University of Pennsylvania, and University of Wisconsin.

EECS 470 Lecture 4 Slide 1

Class Question

Which of the following best explains why pipelining results in speedup?

- a) Instructions are executed with shorter latency
- b) Clock period is reduced
- c) More instructions are executed at the same time
- d) Magnets

Announcements

- Reminder
  - Lab #1 due tomorrow by 12:30 pm
    - Get checked off by GSI/IA
  - Verilog assignment #1 due tomorrow
    - Submit to autograder by 11:59 pm
  - HW #1 due Thursday 2/4
    - Submit through Gradescope by 11:59 pm
- I have OH today from 3-4
  - OH format for all staff: Join Zoom link, put yourself on Office Hour Queue
  - You will be let into a breakout room when you are at the head

Last Time

- Baseline processor discussion
  - Review 5-stage pipeline from EECS 370

Today

- Hazards
  - Detection
  - Resolution
    - Software (avoidance)
    - Hardware (stalling, forwarding)

Lingering Questions

- "How recent was the pipeline method developed? What will be the next best method?"
  - Basic pipelines have been used since the very early days of computing (1930s)
  - Deep pipelines became very popular with vector processors in the 1970s
  - Less popular now; we'll discuss why
  - Recent trends have been not towards better performance, but better reliability and power efficiency
    - EECS 573 (Microarchitectures) covers a lot of these interesting topics
- Remember, you can submit lingering questions to cover next lecture at: https://bit.ly/3oSr5FD

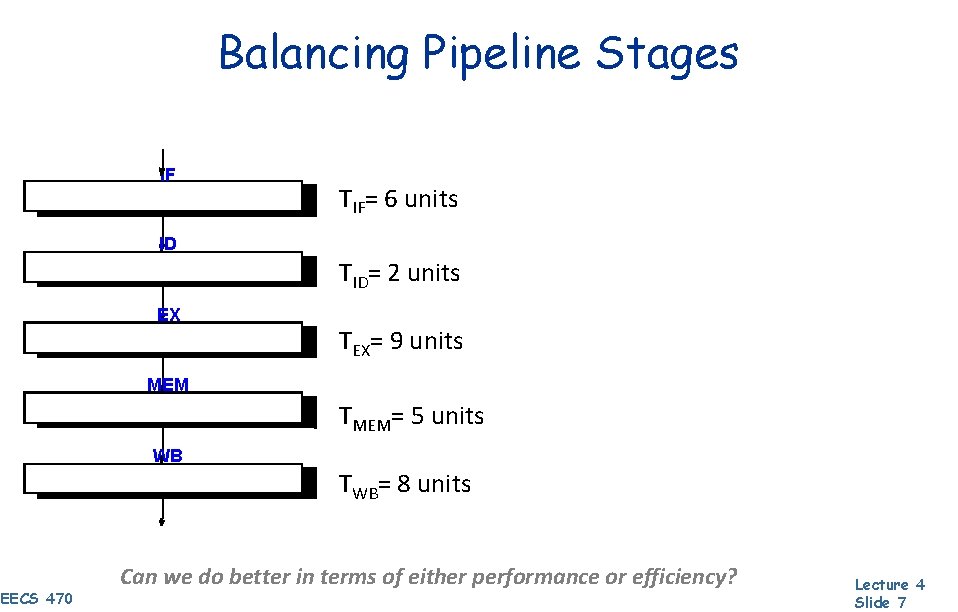

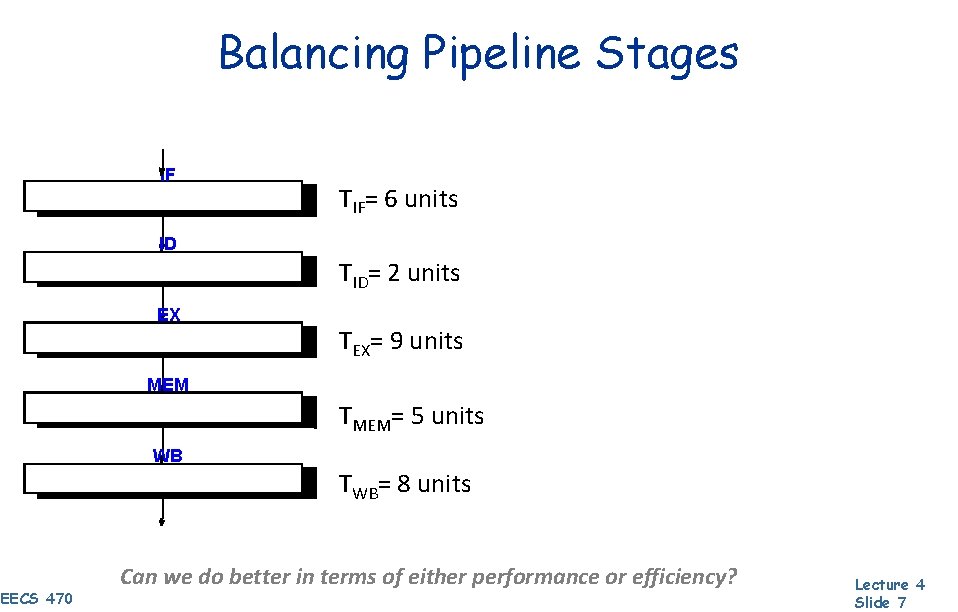

Balancing Pipeline Stages

- IF: T_IF = 6 units
- ID: T_ID = 2 units
- EX: T_EX = 9 units
- MEM: T_MEM = 5 units
- WB: T_WB = 8 units

Can we do better in terms of either performance or efficiency?

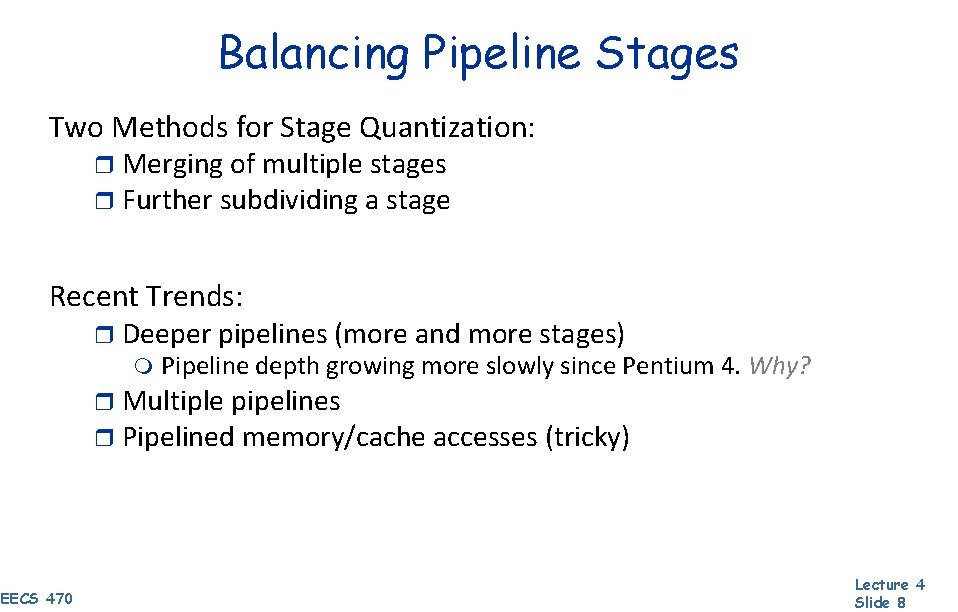

Balancing Pipeline Stages

Two Methods for Stage Quantization:

- Merging of multiple stages
- Further subdividing a stage

Recent Trends:

- Deeper pipelines (more and more stages)
  - Pipeline depth growing more slowly since Pentium 4. Why?
- Multiple pipelines
- Pipelined memory/cache accesses (tricky)
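The two quantization methods can be sketched numerically with the stage latencies from the previous slide (6, 2, 9, 5, 8 units). This is only an illustration under stated assumptions: latch overhead is ignored, EX is assumed to split evenly into two halves, and the helper names `clock_period` and `utilization` are made up for this example.

```python
# Stage latencies from the slide, in arbitrary time units.
STAGES = {"IF": 6, "ID": 2, "EX": 9, "MEM": 5, "WB": 8}

def clock_period(latencies):
    """The clock period is set by the slowest stage (latch overhead ignored)."""
    return max(latencies)

def utilization(latencies):
    """Fraction of the available stage time that does useful work."""
    return sum(latencies) / (clock_period(latencies) * len(latencies))

baseline = list(STAGES.values())   # [6, 2, 9, 5, 8]
merged = [6 + 2, 9, 5, 8]          # merge IF and ID into one 8-unit stage
split = [6, 2, 4.5, 4.5, 5, 8]     # split EX into two halves (assumed even split)

for name, lat in [("baseline", baseline), ("merged", merged), ("split", split)]:
    print(name, clock_period(lat), round(utilization(lat), 3))
```

Under these assumptions, merging IF and ID leaves the period at 9 units while removing a pipeline register (better efficiency), and splitting EX lowers the period to 8 units (better performance) at the cost of an extra stage.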

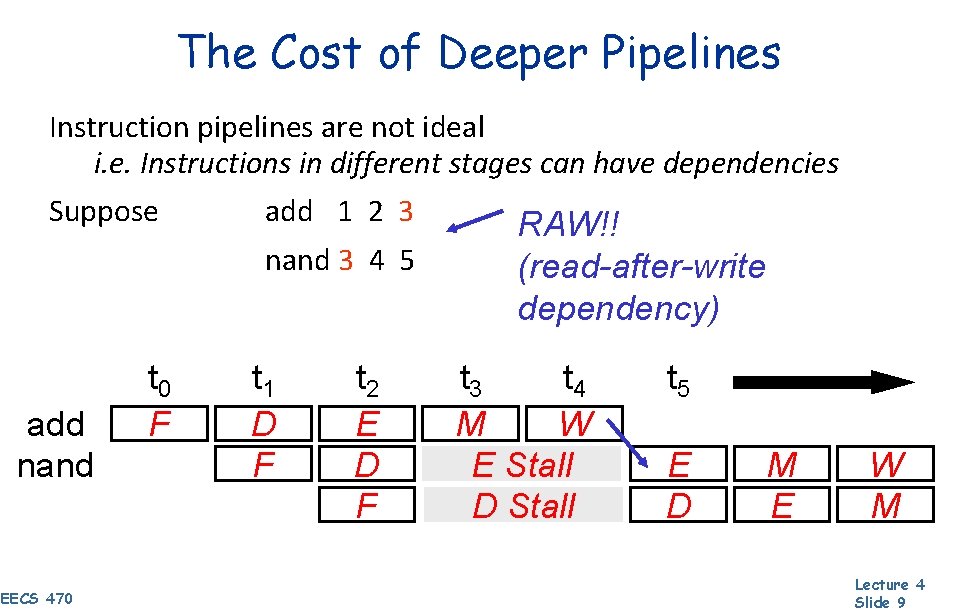

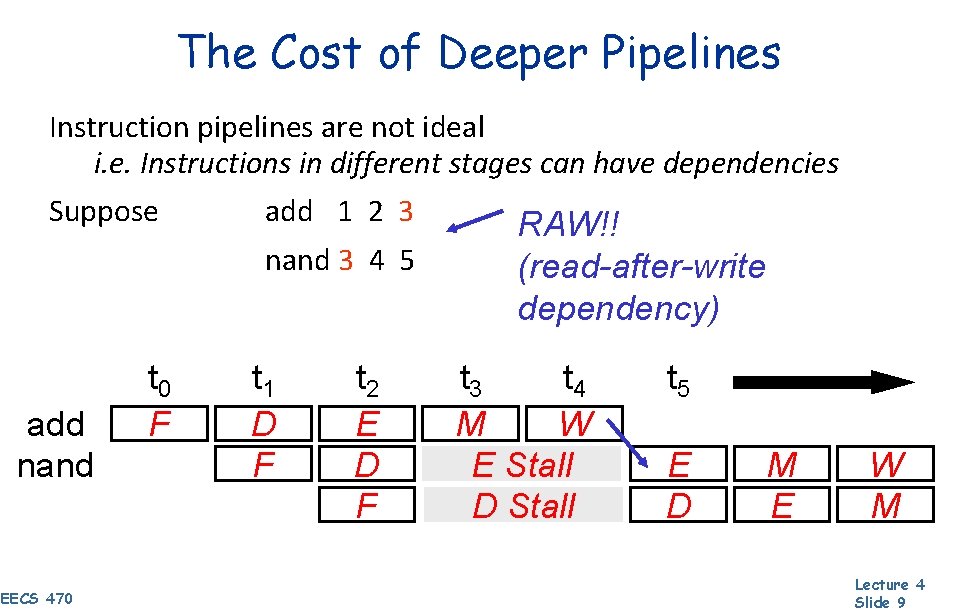

The Cost of Deeper Pipelines

Instruction pipelines are not ideal, i.e. instructions in different stages can have dependencies.

Suppose:

    add 1 2 3    ; Inst 0
    nand 3 4 5   ; Inst 1

RAW!! (read-after-write dependency)

[Pipeline timing diagram, cycles t0 to t5: add passes through F, D, E, M, W; nand is fetched one cycle later and is shown with Stall cycles before it continues.]

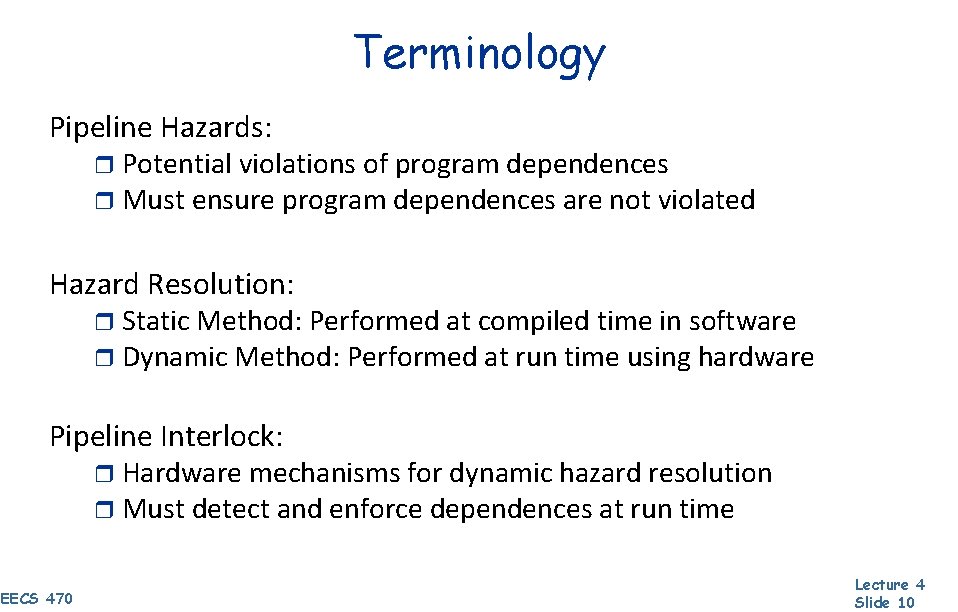

Terminology

Pipeline Hazards:

- Potential violations of program dependences
- Must ensure program dependences are not violated

Hazard Resolution:

- Static Method: Performed at compile time in software
- Dynamic Method: Performed at run time using hardware

Pipeline Interlock:

- Hardware mechanisms for dynamic hazard resolution
- Must detect and enforce dependences at run time

Handling Data Hazards

Avoidance (static)

- Make sure there are no hazards in the code

Detect and Stall (dynamic)

- Stall until earlier instructions finish

Detect and Forward (dynamic)

- Get correct value from elsewhere in pipeline

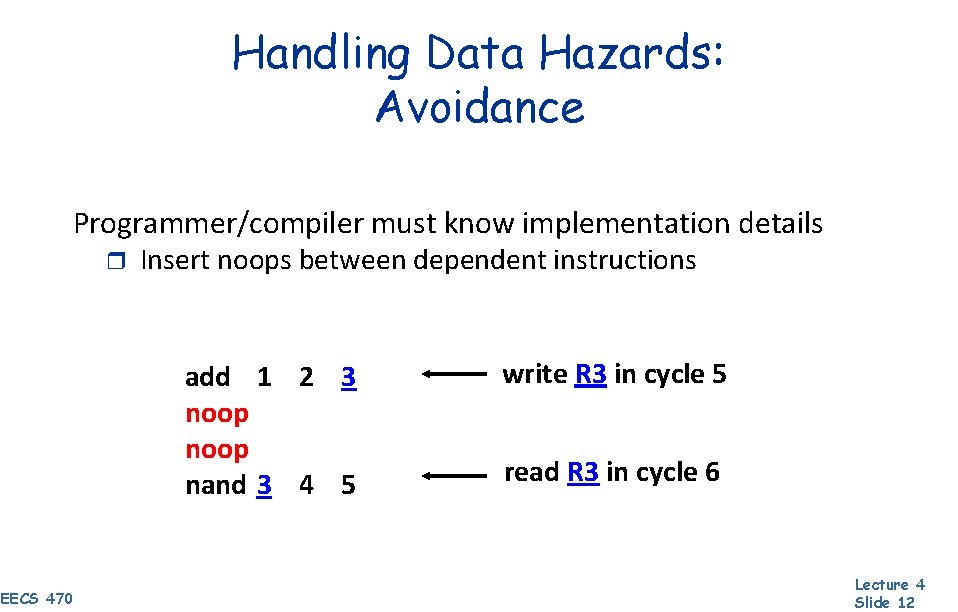

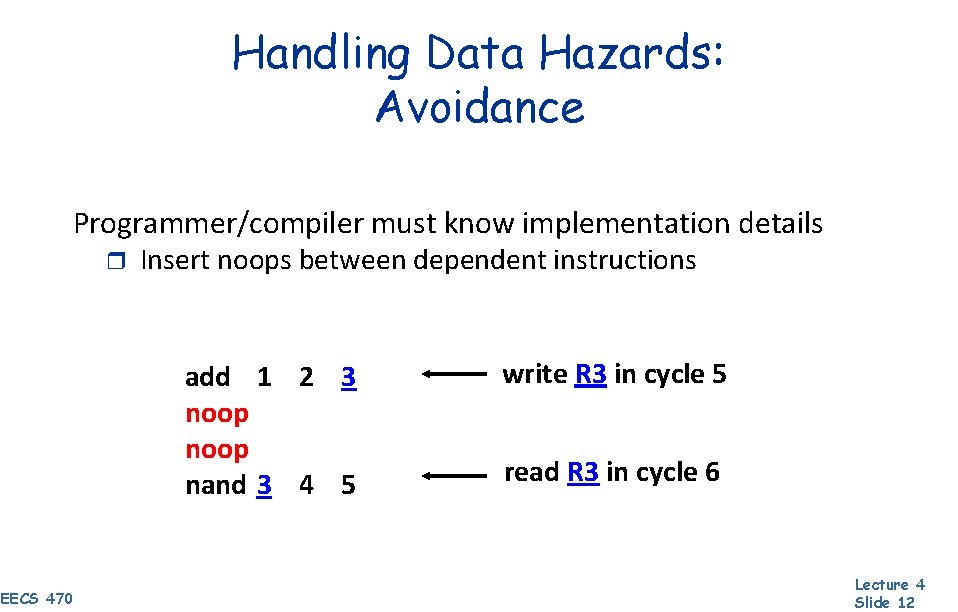

Handling Data Hazards: Avoidance

Programmer/compiler must know implementation details

- Insert noops between dependent instructions

    add 1 2 3    ; write R3 in cycle 5
    noop
    nand 3 4 5   ; read R3 in cycle 6
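A minimal sketch of what a compiler pass for avoidance might look like. The instruction encoding `(opcode, source_registers, dest_register)` and the parameter `min_distance` are hypothetical; `min_distance` stands in for the implementation detail the compiler must know, namely how many instruction slots must separate a producer from its consumer on a given pipeline.

```python
NOOP = ("noop", (), None)

def insert_noops(program, min_distance):
    """Pad `program` so every consumer is at least `min_distance`
    instruction slots after the producer of each register it reads.

    program: list of (opcode, source_registers, dest_register).
    min_distance: pipeline-specific spacing (hypothetical parameter).
    """
    out = []
    for insn in program:
        _, sources, _ = insn
        # Only the last (min_distance - 1) emitted instructions can be too close.
        window = out[-(min_distance - 1):] if min_distance > 1 else []
        needed = 0
        for back, earlier in enumerate(reversed(window), start=1):
            if earlier[2] is not None and earlier[2] in sources:
                needed = max(needed, min_distance - back)
        out.extend([NOOP] * needed)
        out.append(insn)
    return out

program = [("add", (1, 2), 3), ("nand", (3, 4), 5)]
padded = insert_noops(program, min_distance=2)
print([op for op, _, _ in padded])   # ['add', 'noop', 'nand']
```

The same source program needs a different number of noops for a different `min_distance`, so the padded binary is tied to one pipeline implementation.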

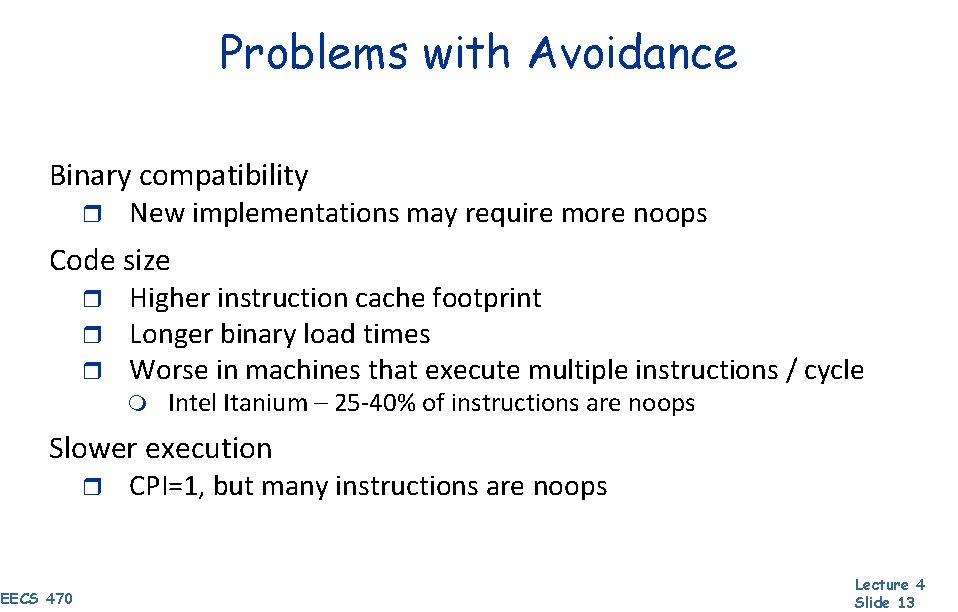

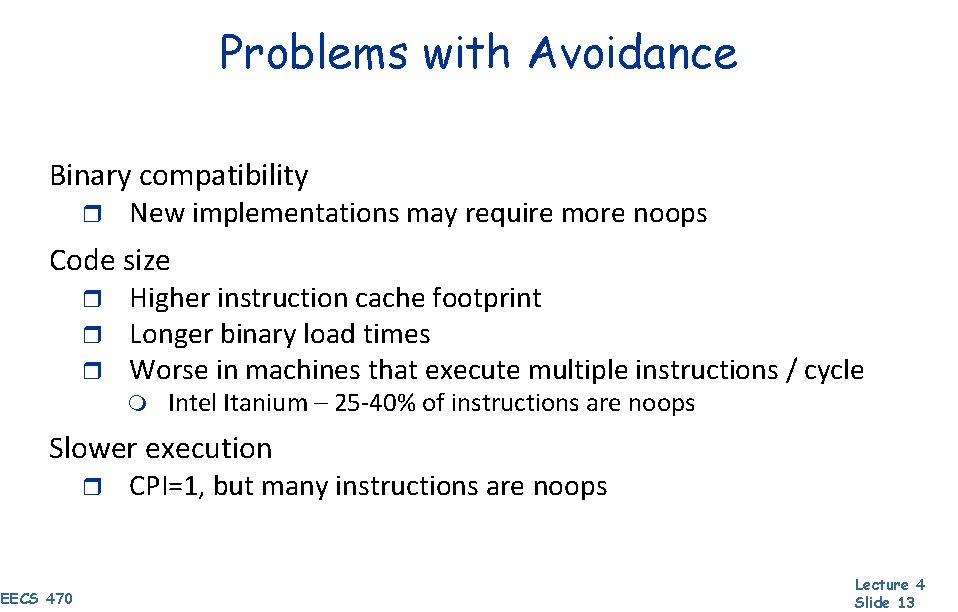

Problems with Avoidance

Binary compatibility

- New implementations may require more noops

Code size

- Higher instruction cache footprint
- Longer binary load times
- Worse in machines that execute multiple instructions / cycle
  - Intel Itanium: 25-40% of instructions are noops

Slower execution

- CPI=1, but many instructions are noops

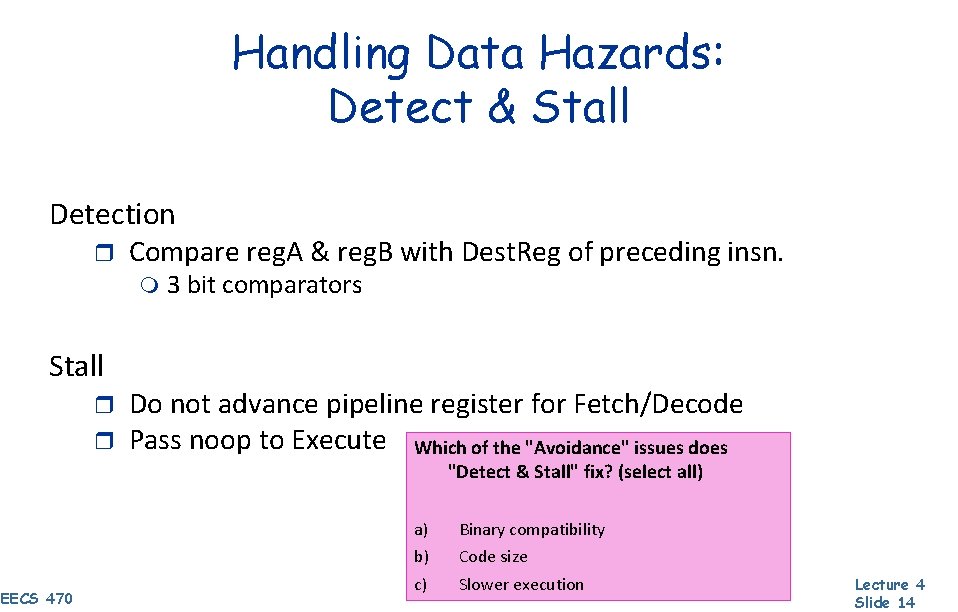

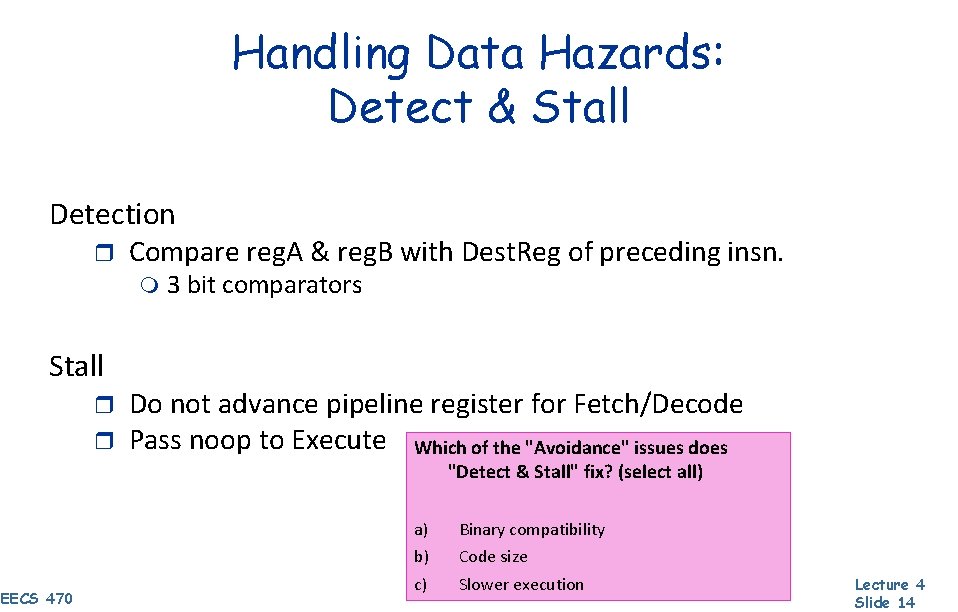

Handling Data Hazards: Detect & Stall

Detection

- Compare regA & regB with DestReg of preceding insn.
  - 3 bit comparators

Stall

- Do not advance pipeline register for Fetch/Decode
- Pass noop to Execute

Which of the "Avoidance" issues does "Detect & Stall" fix? (select all)

- a) Binary compatibility
- b) Code size
- c) Slower execution
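The detection and stall rules can be sketched as a small software model. This is not the course's hardware description: it assumes the instruction in Decode is compared against the destinations held in ID/EX and EX/Mem, and that a value being written back from Mem/WB is readable in the same cycle. The tuple encoding and function names are hypothetical.

```python
NOOP = ("noop", (), None)

def must_stall(decode_sources, dests_ahead):
    """True if any instruction ahead still has to write a register we read."""
    return any(d is not None and d in decode_sources for d in dests_ahead)

def count_stalls(program):
    """Count stall cycles for a list of (opcode, sources, dest) instructions."""
    id_ex, ex_mem = NOOP, NOOP          # instructions ahead of Decode
    stall_cycles = 0
    for insn in program:                # each instruction reaches Decode in order
        while must_stall(insn[1], (id_ex[2], ex_mem[2])):
            # Hold insn in IF/ID and pass a noop to Execute.
            id_ex, ex_mem = NOOP, id_ex
            stall_cycles += 1
        id_ex, ex_mem = insn, id_ex     # insn advances into ID/EX
    return stall_cycles

print(count_stalls([("add", (1, 2), 3), ("nand", (3, 4), 5)]))   # 2
```

Because the stalls are produced by hardware at run time, the program itself contains no noops.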

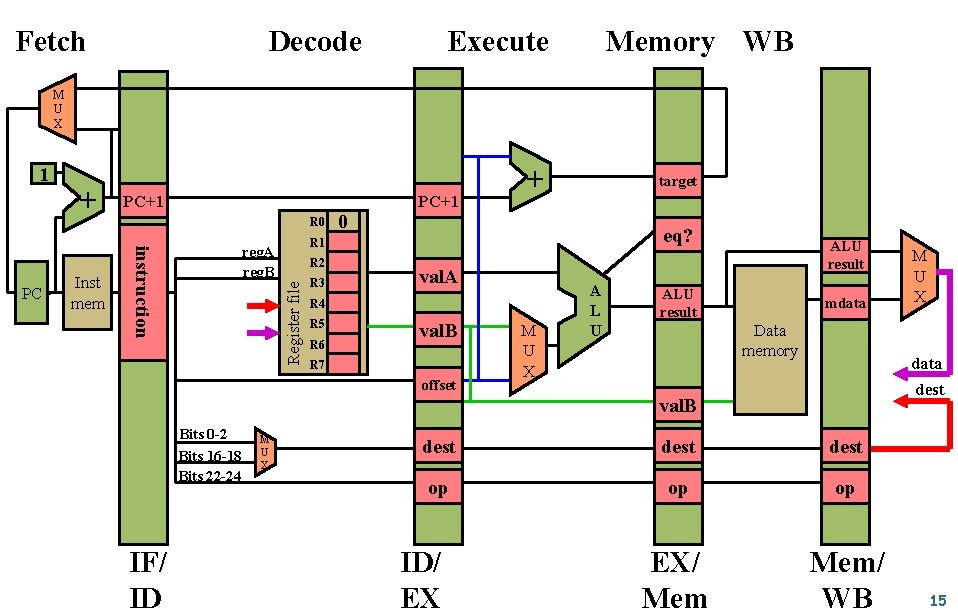

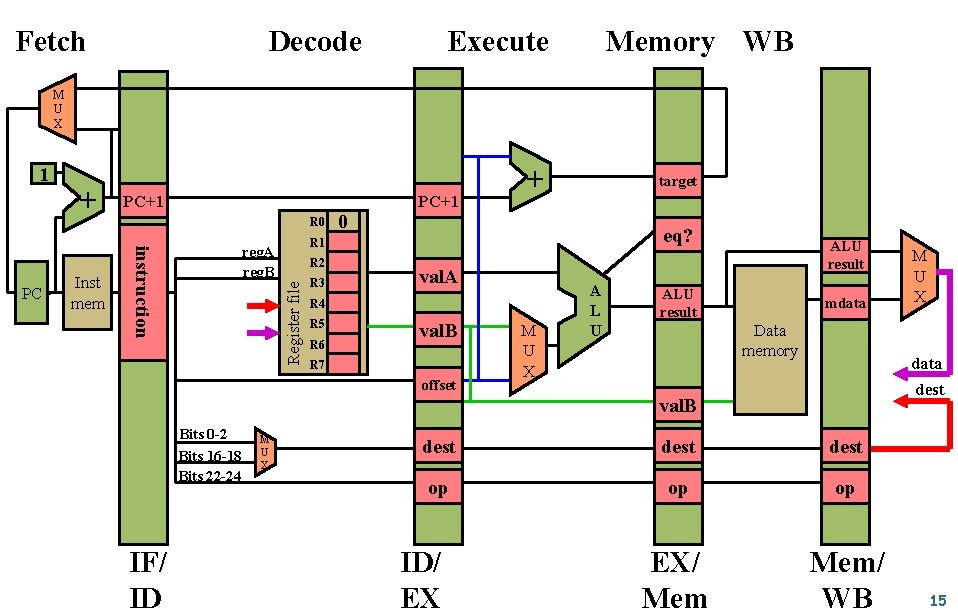

[Figure (slide 15): five-stage pipelined datapath with stages Fetch, Decode, Execute, Memory, and WB, separated by the IF/ID, ID/EX, EX/Mem, and Mem/WB pipeline registers. Instruction fields are labeled Bits 0-2, Bits 16-18, and Bits 22-24.]

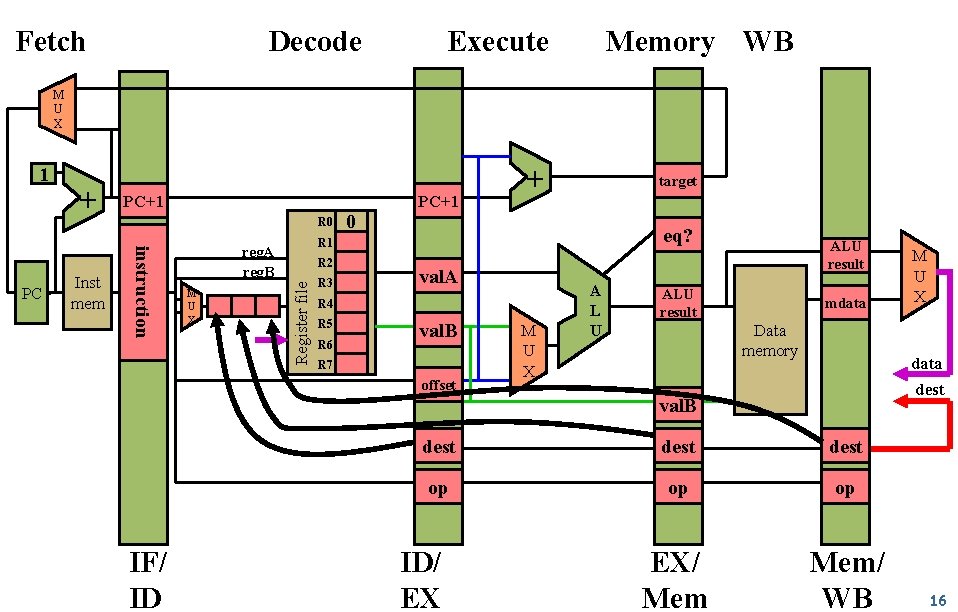

[Figure (slide 16): five-stage pipelined datapath (Fetch, Decode, Execute, Memory, WB) with register file R0-R7, ALU, data memory, and the IF/ID, ID/EX, EX/Mem, and Mem/WB pipeline registers.]

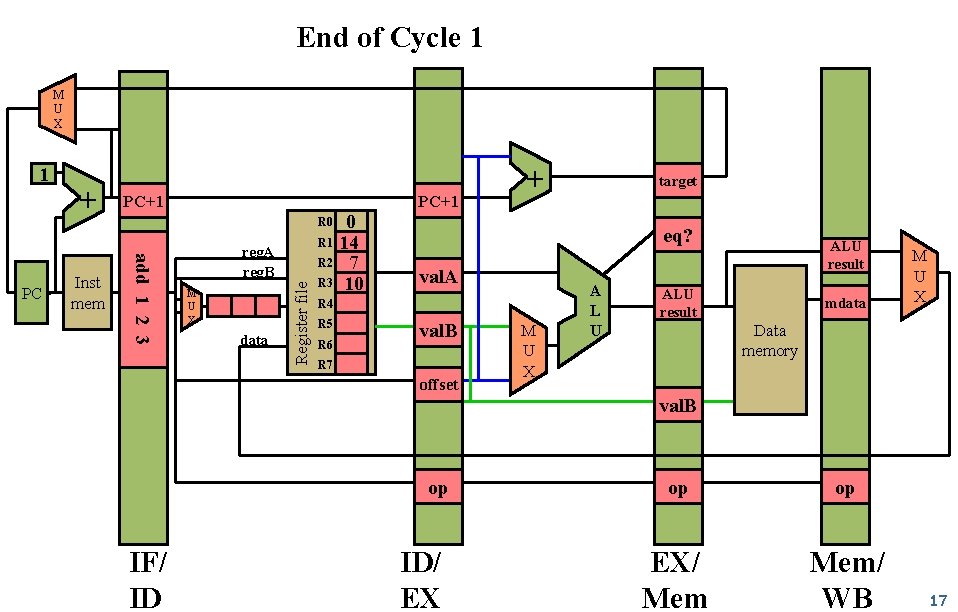

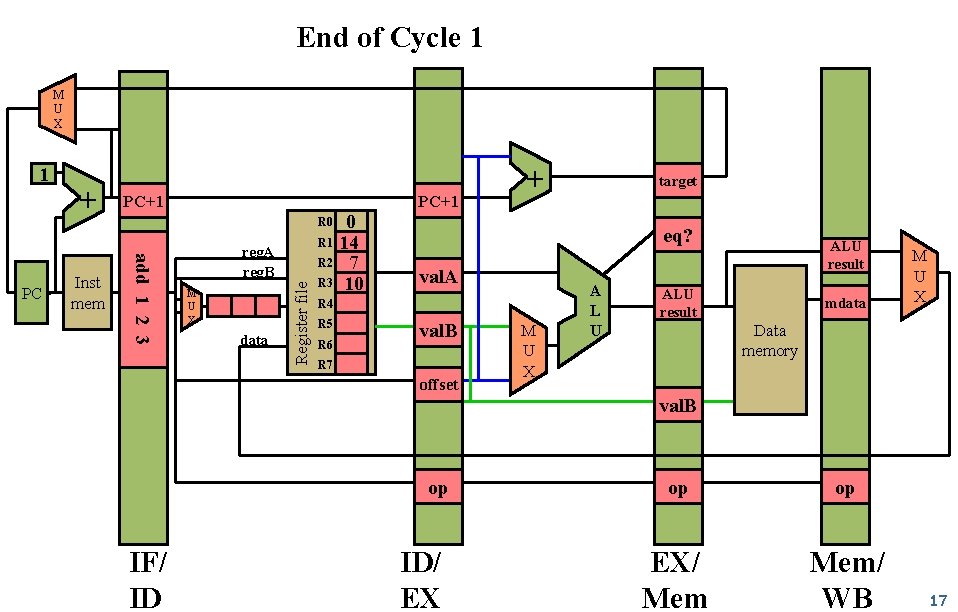

End of Cycle 1

[Datapath snapshot (slide 17): add 1 2 3 has been fetched into IF/ID. Register file: R0=0, R1=14, R2=7, R3=10.]

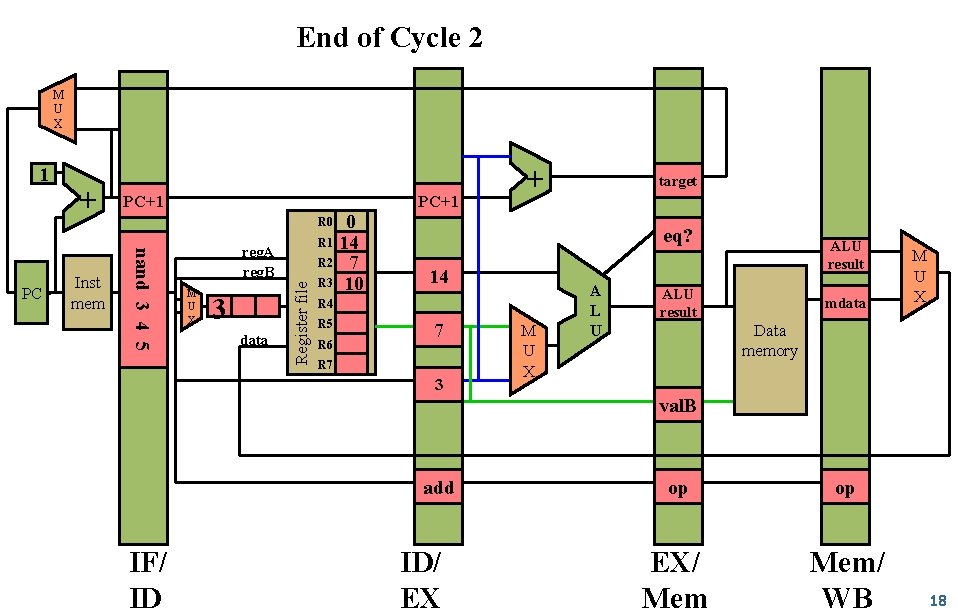

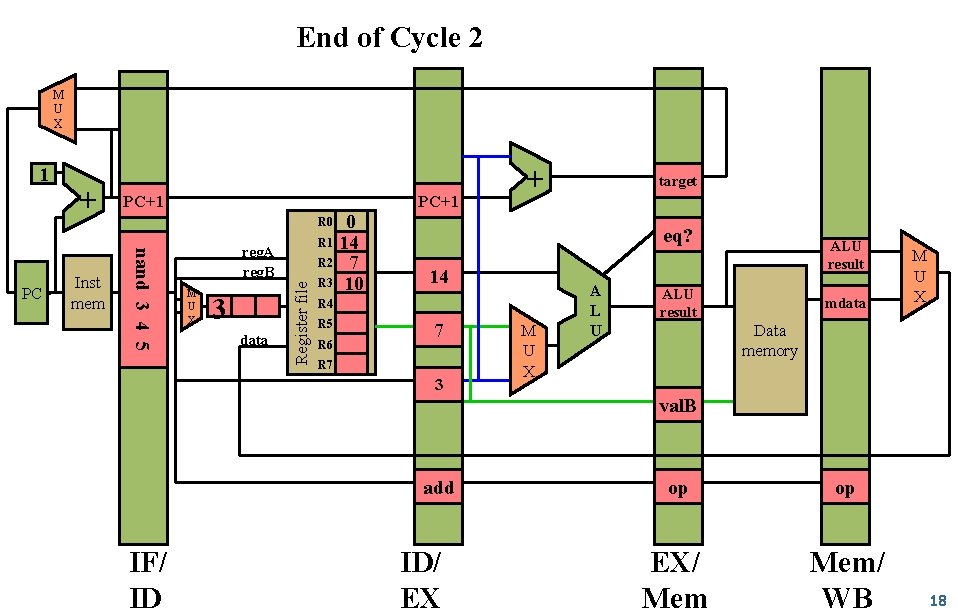

End of Cycle 2

[Datapath snapshot (slide 18): nand 3 4 5 is in IF/ID; add is in ID/EX with valA=14, valB=7, dest=3.]

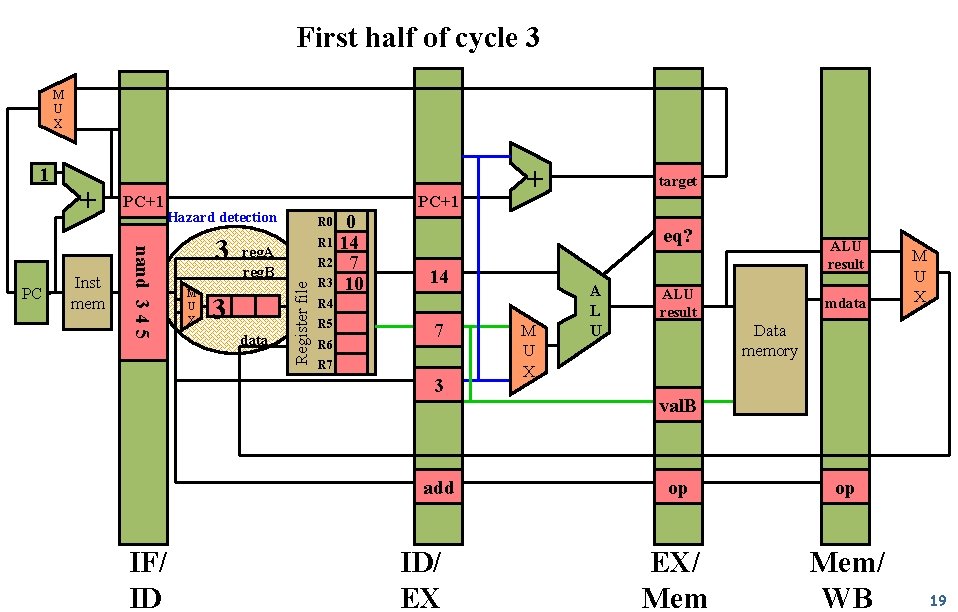

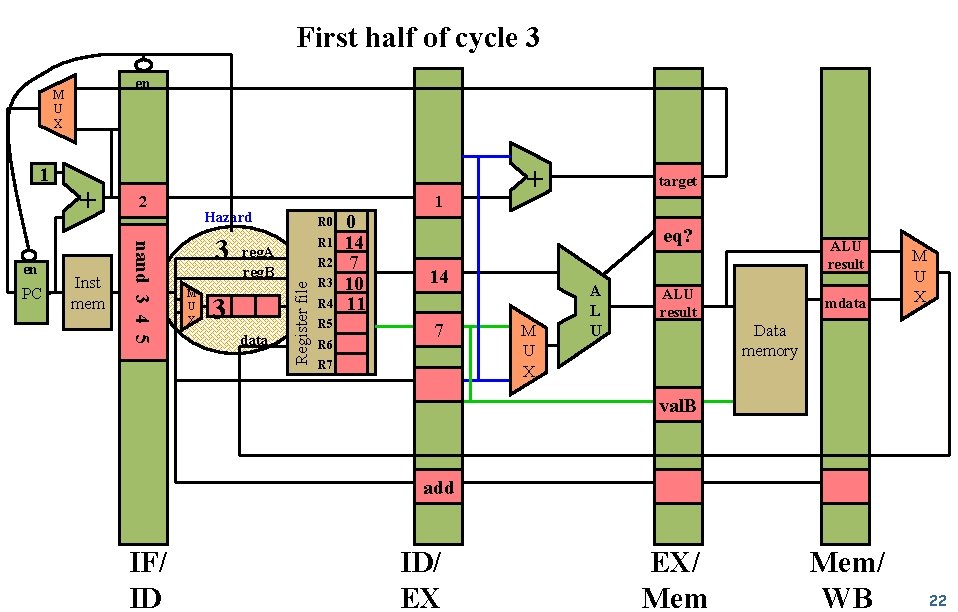

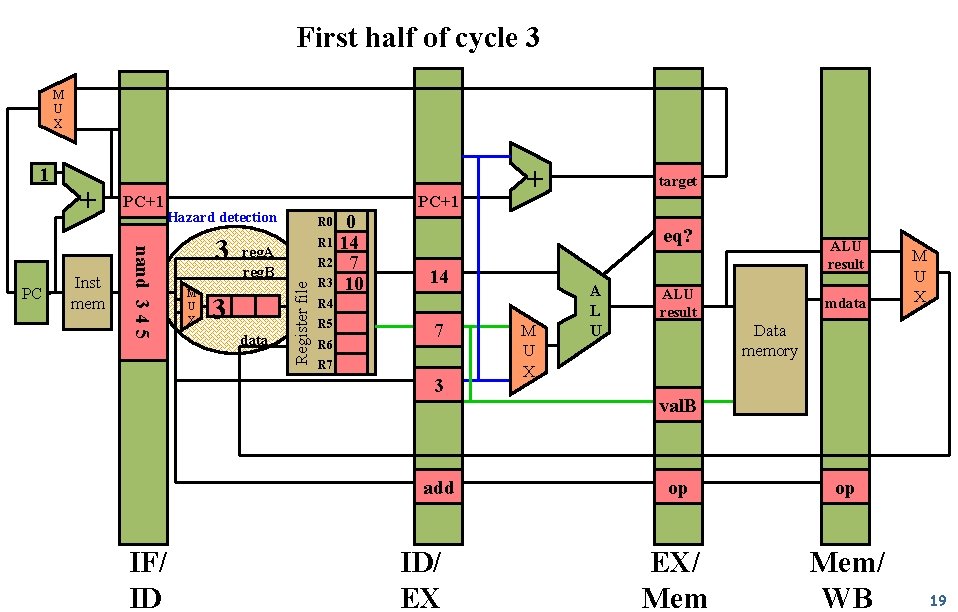

First half of cycle 3

[Datapath snapshot (slide 19): the hazard detection logic examines nand 3 4 5 in IF/ID against add in ID/EX, whose destination is register 3.]

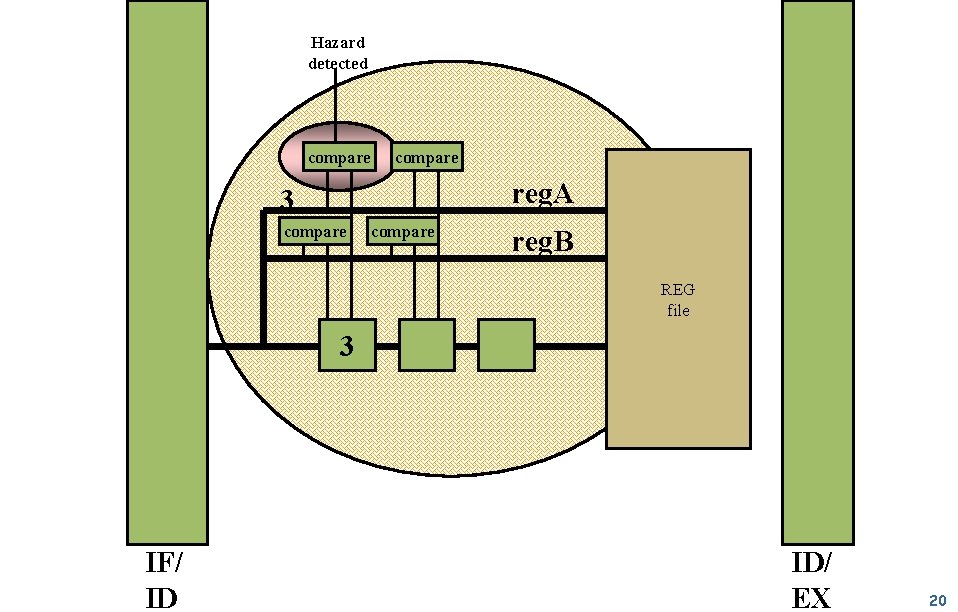

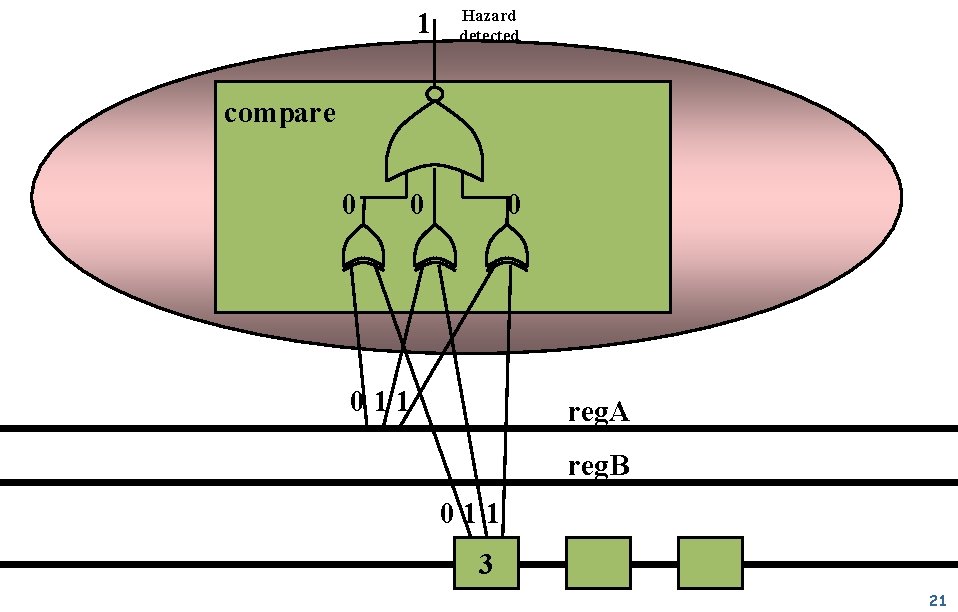

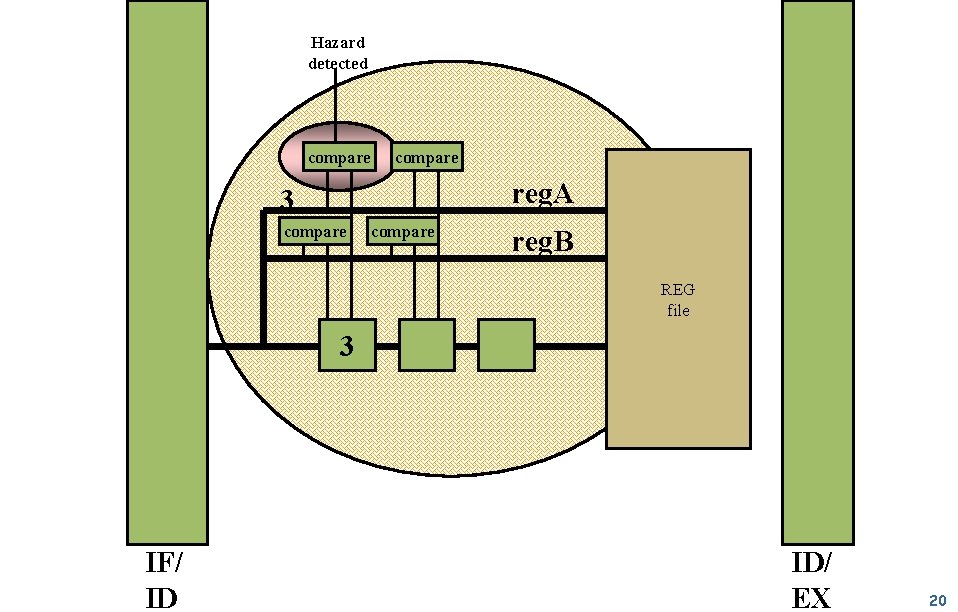

[Figure (slide 20): hazard detected. regA and regB from IF/ID are each compared with the destination register (3) held in ID/EX.]

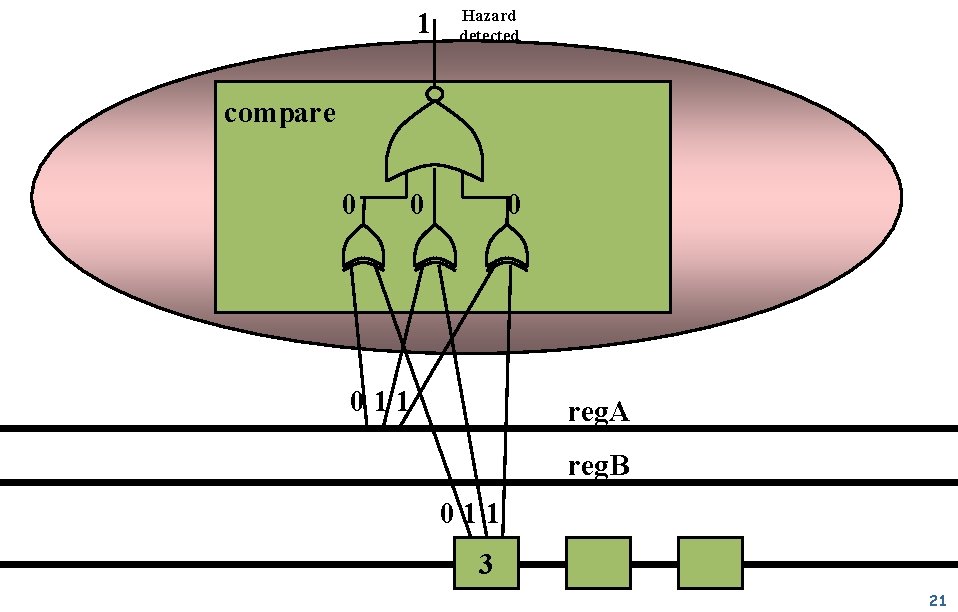

[Figure (slide 21): comparator detail. A 3-bit compare of 011 against the destination register 011 (register 3) raises the hazard signal to 1.]

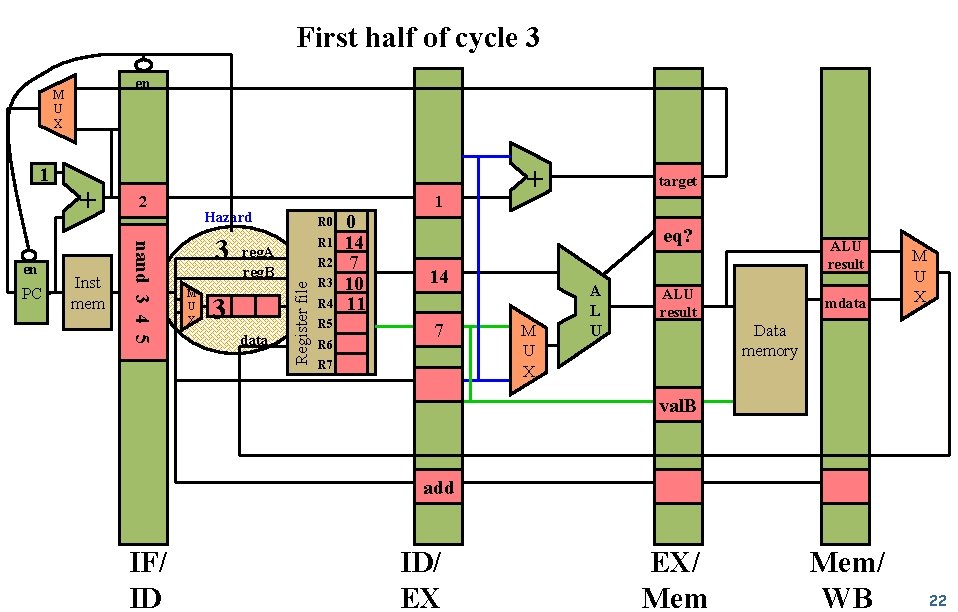

First half of cycle 3

[Datapath snapshot (slide 22): the Hazard signal feeds the enable (en) inputs of the PC and the IF/ID register. nand 3 4 5 is in IF/ID and add is in ID/EX. Register file: R0=0, R1=14, R2=7, R3=10, R4=11.]

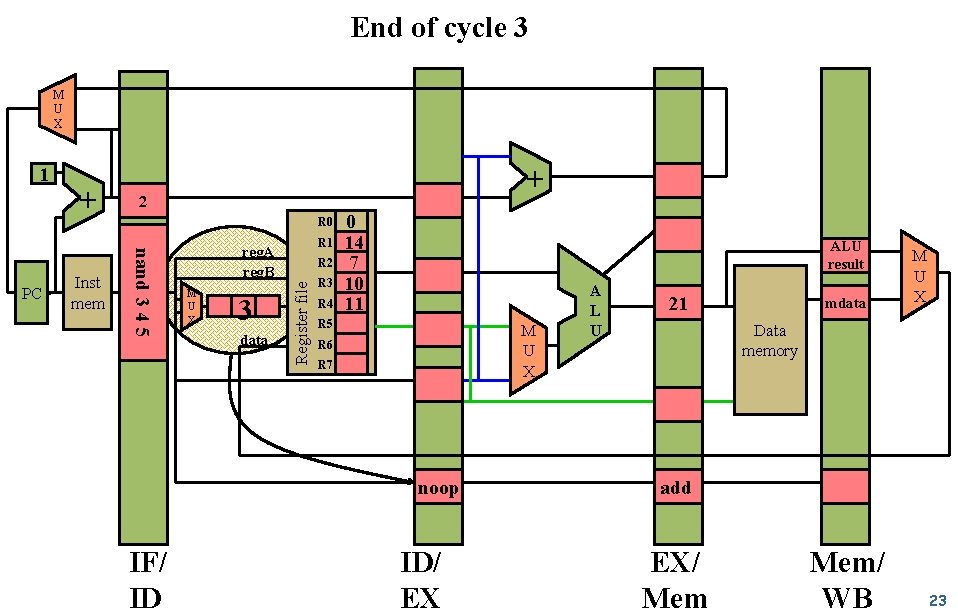

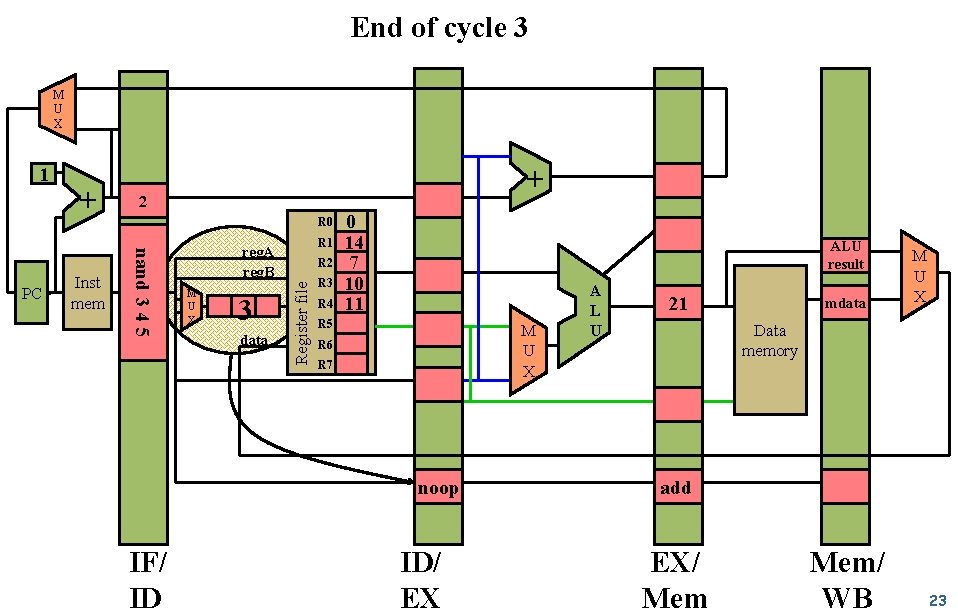

End of cycle 3

[Datapath snapshot (slide 23): nand 3 4 5 is still in IF/ID, a noop is in ID/EX, and add is in EX/Mem with ALU result 21.]

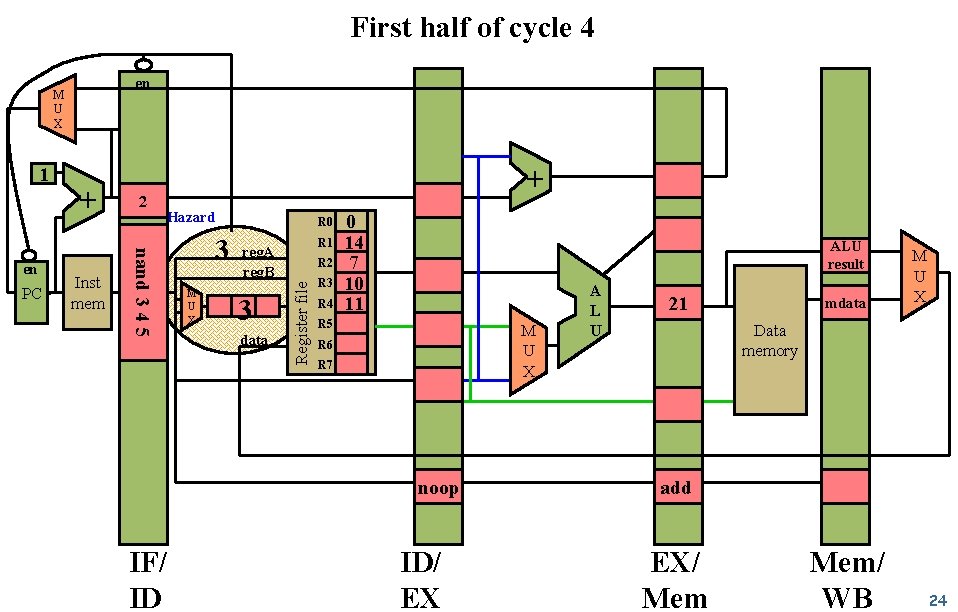

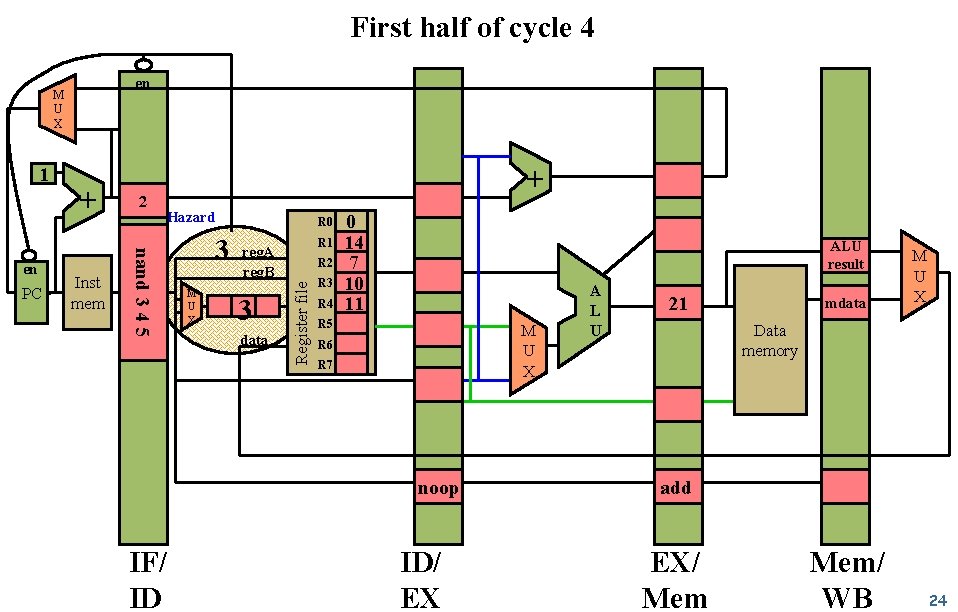

First half of cycle 4

[Datapath snapshot (slide 24): the hazard is still detected, so the enables (en) hold the PC and nand 3 4 5 in IF/ID. A noop is in ID/EX and add is in EX/Mem with ALU result 21.]

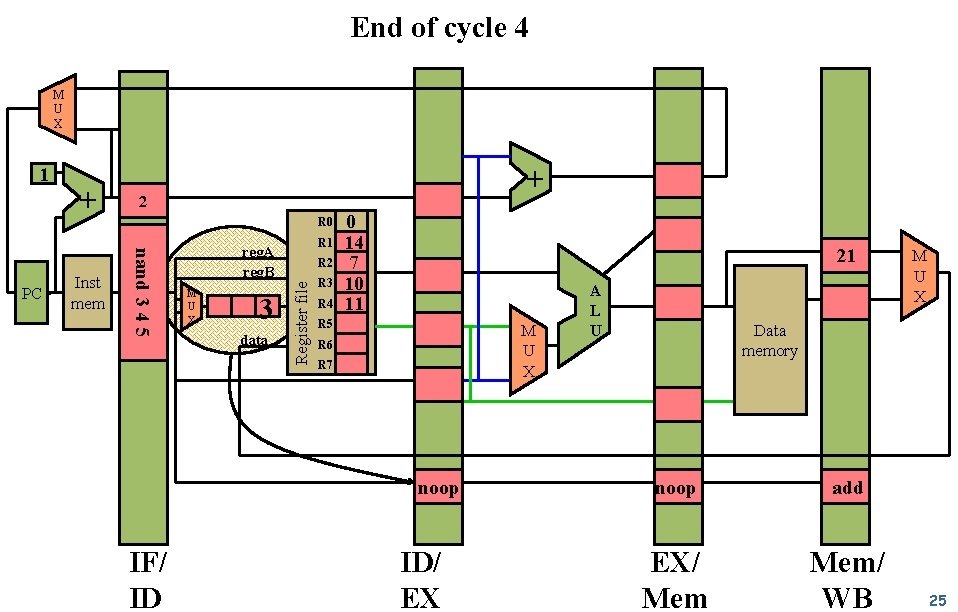

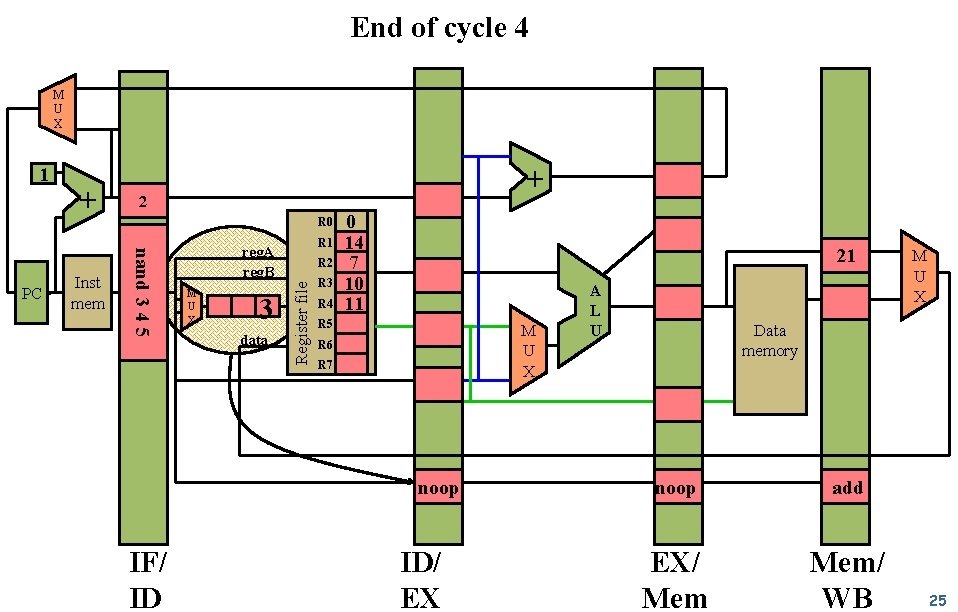

End of cycle 4

[Datapath snapshot (slide 25): nand 3 4 5 is still in IF/ID, noops are in ID/EX and EX/Mem, and add is in Mem/WB with result 21.]

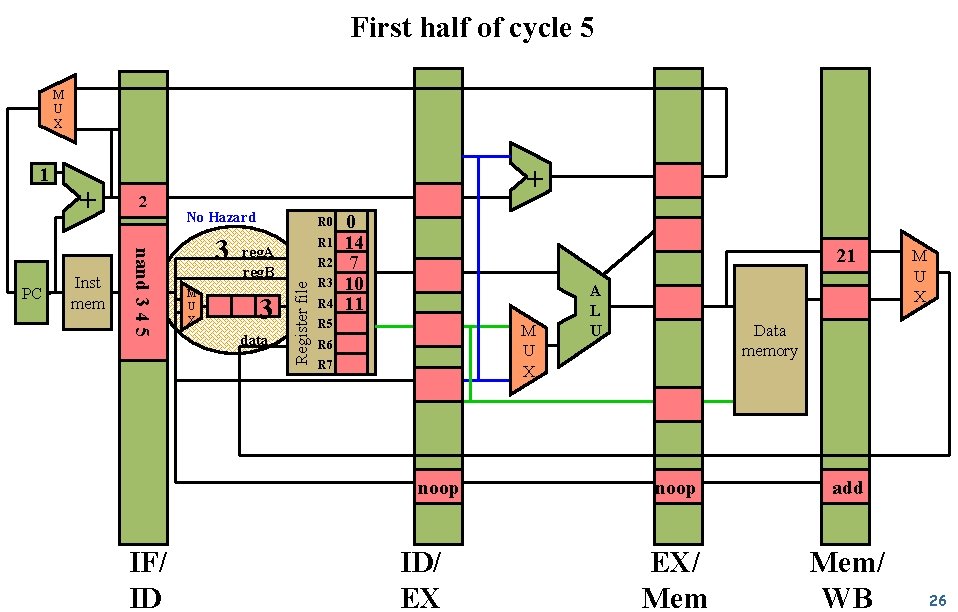

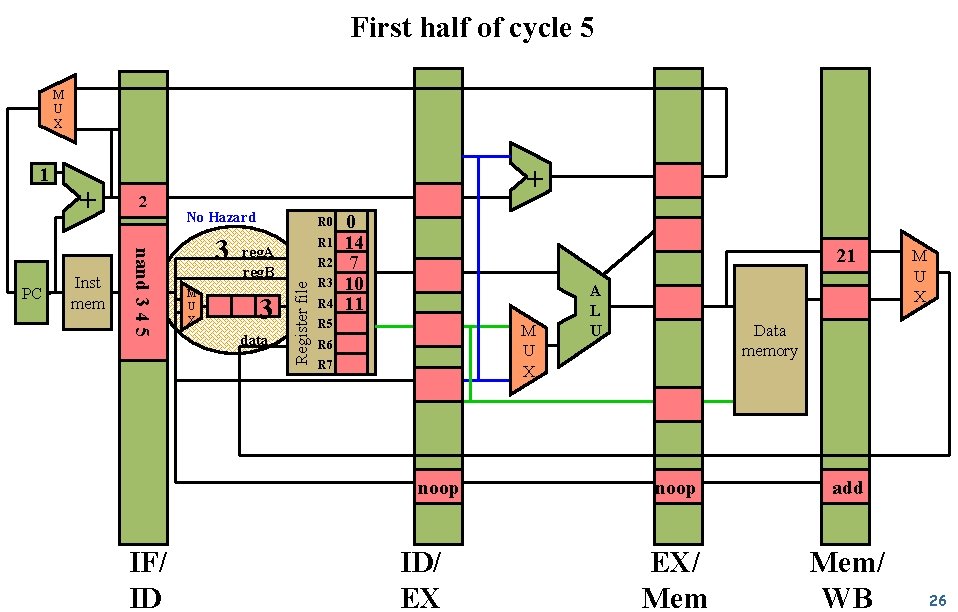

First half of cycle 5

[Datapath snapshot (slide 26): No Hazard is reported for register 3. nand 3 4 5 is in IF/ID, noops are in ID/EX and EX/Mem, and add is in Mem/WB with result 21.]

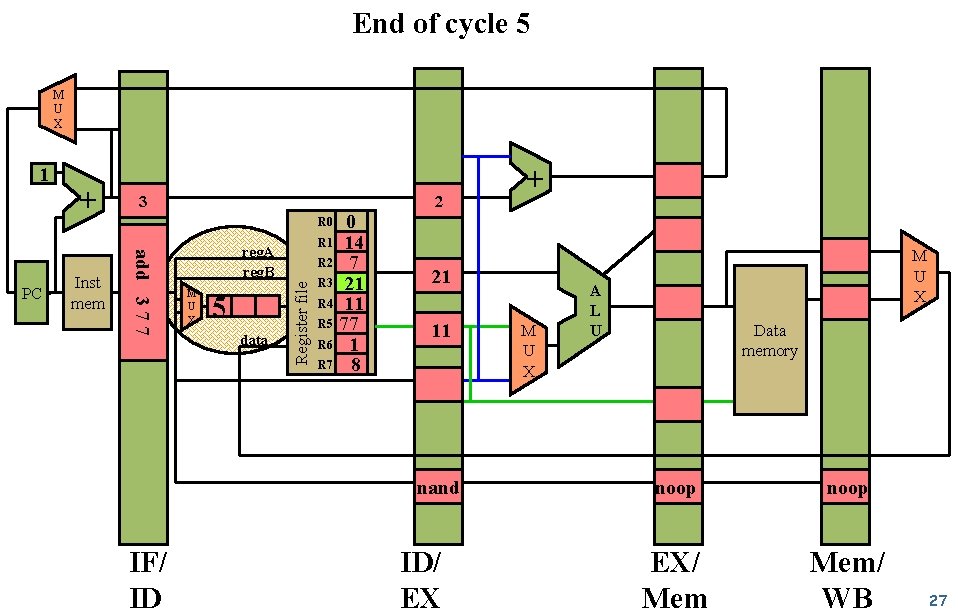

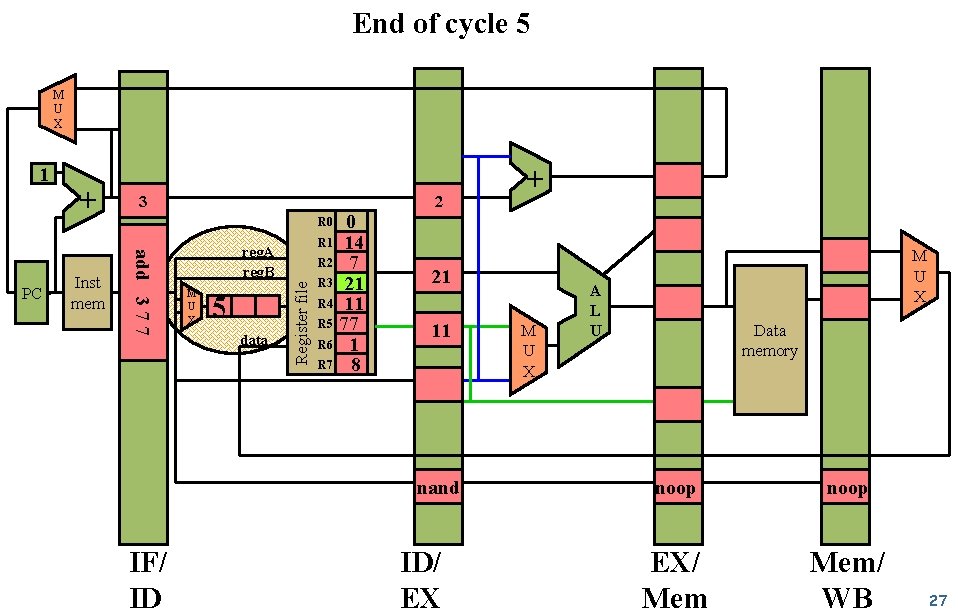

End of cycle 5

[Datapath snapshot (slide 27): add 3 7 7 is in IF/ID; nand is in ID/EX with valA=21, valB=11, dest=5; a noop is ahead of it. Register file: R0=0, R1=14, R2=7, R3=21, R4=11, R5=77, R6=1, R7=8.]

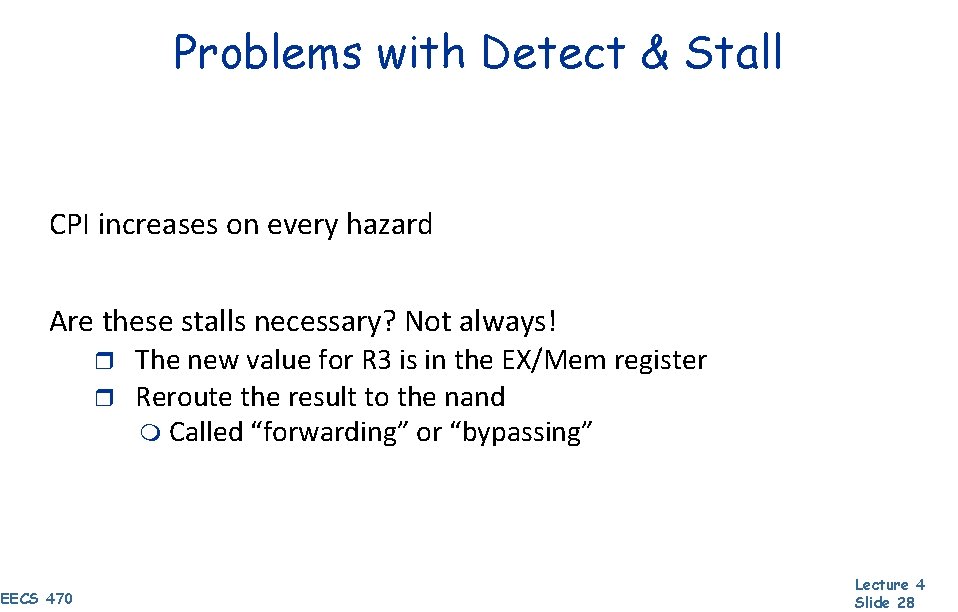

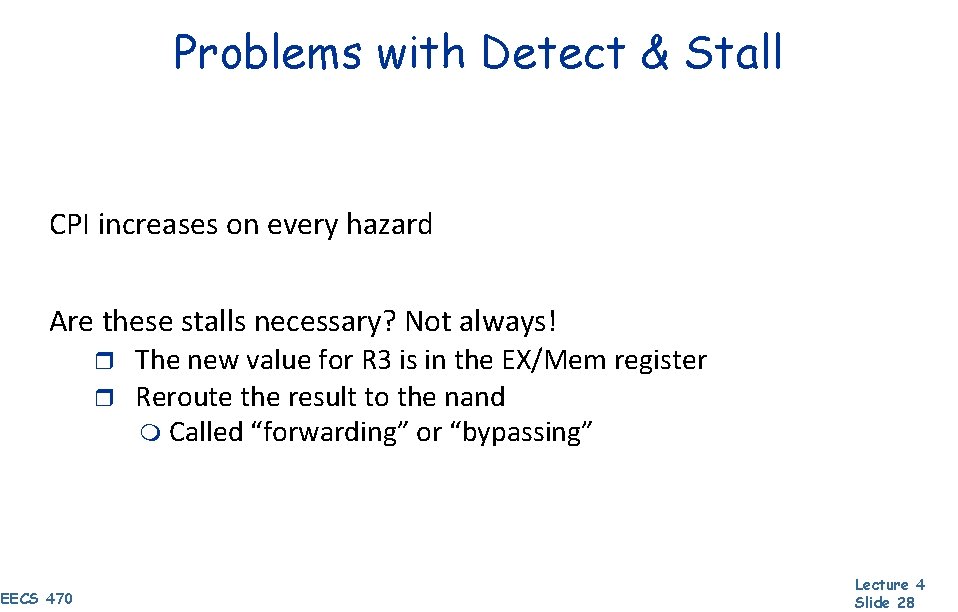

Problems with Detect & Stall

CPI increases on every hazard

Are these stalls necessary? Not always!

- The new value for R3 is in the EX/Mem register
- Reroute the result to the nand
  - Called “forwarding” or “bypassing”

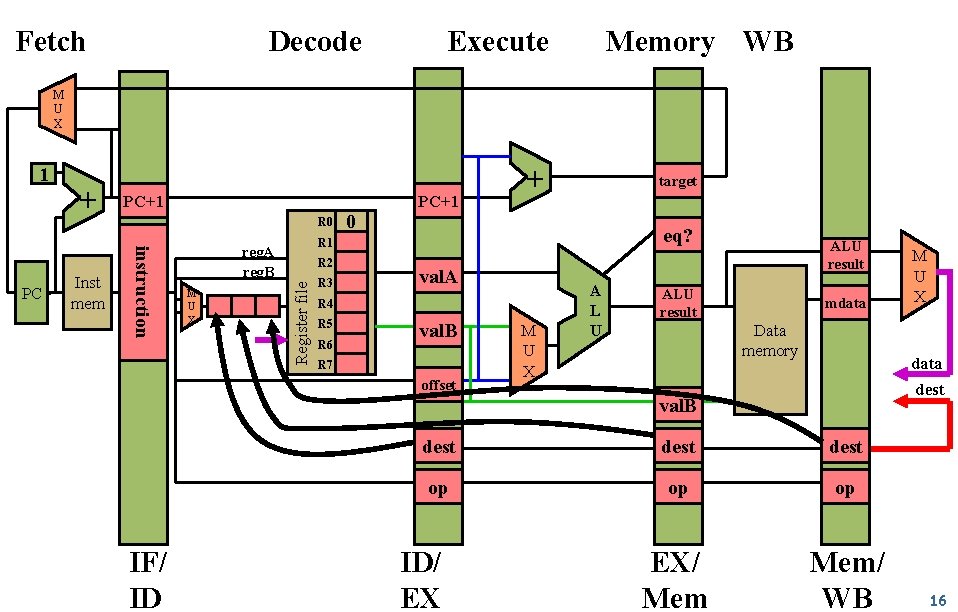

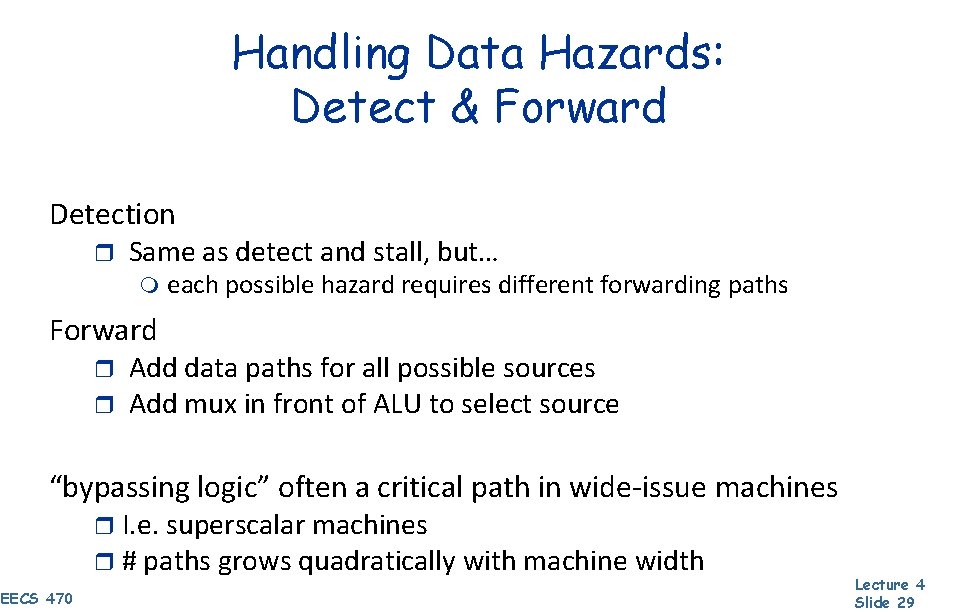

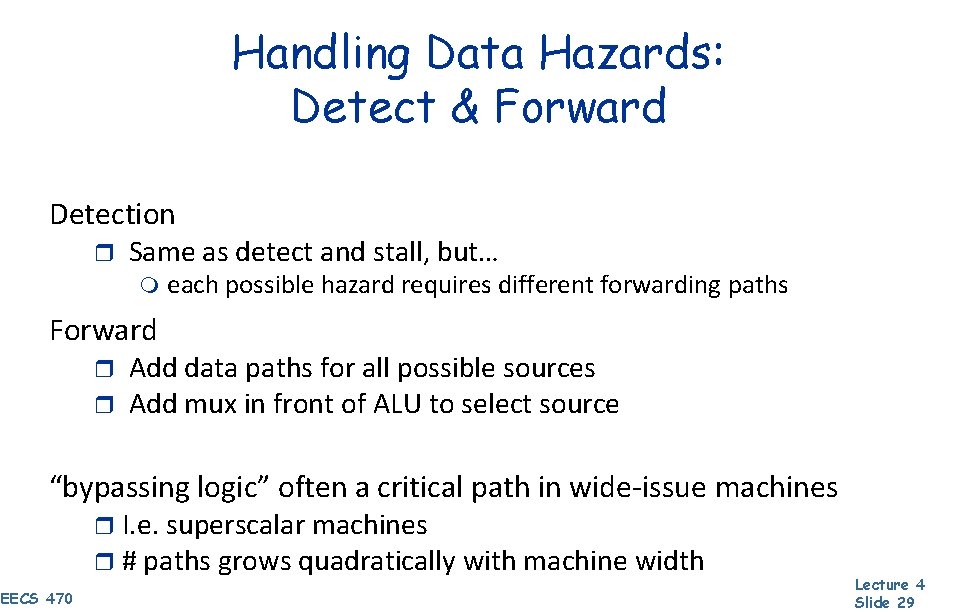

Handling Data Hazards: Detect & Forward

Detection

- Same as detect and stall, but…
  - each possible hazard requires different forwarding paths

Forward

- Add data paths for all possible sources
- Add mux in front of ALU to select source

“bypassing logic” often a critical path in wide-issue machines

- I.e. superscalar machines
- # paths grows quadratically with machine width
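The mux in front of the ALU can be sketched as a selection function. This is a simplified software model, not the course's hardware: it assumes two forwarding sources (EX/Mem and Mem/WB), each described by a hypothetical `(dest_register, value)` pair, with the younger producer taking priority.

```python
def select_operand(src_reg, regfile_value, ex_mem=None, mem_wb=None):
    """Choose the ALU input for `src_reg`.

    ex_mem / mem_wb: (dest_register, value) of the instruction in that
    pipeline register, or None if it writes no register.
    """
    for producer in (ex_mem, mem_wb):          # youngest producer first
        if producer is not None and producer[0] == src_reg:
            return producer[1]                 # forwarded value
    return regfile_value                       # no hazard: use the value read in Decode

# nand 3 4 5 in Execute while add (dest 3, result 21) sits in EX/Mem.
val_a = select_operand(3, 10, ex_mem=(3, 21))  # stale 10 is replaced by 21
val_b = select_operand(4, 11, ex_mem=(3, 21))  # register 4 has no producer in flight
print(val_a, val_b, ~(val_a & val_b))          # 21 11 -2
```

A load in EX/Mem does not have its data yet, so a real design still needs a stall for a load followed immediately by a consumer; this sketch does not model that case.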

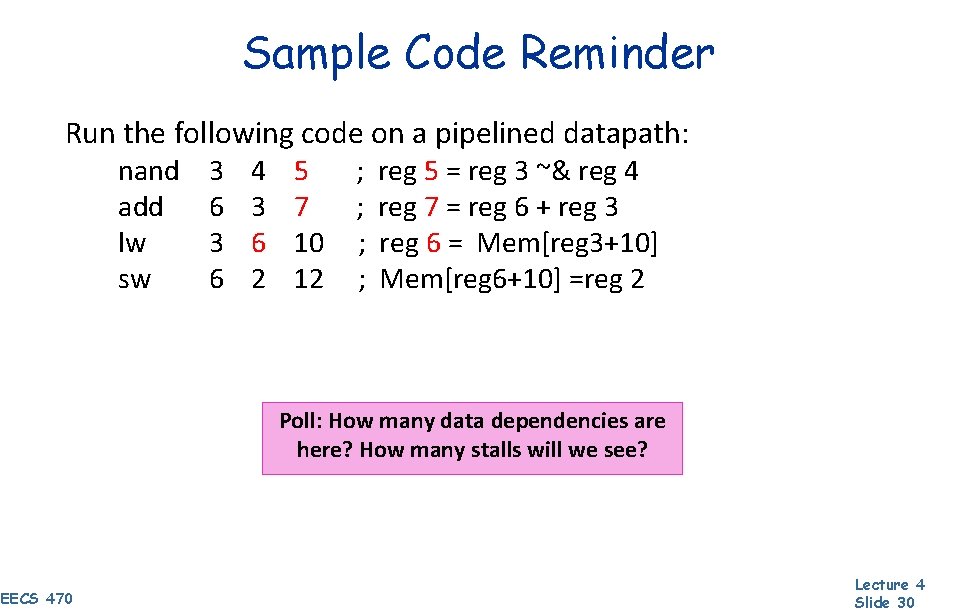

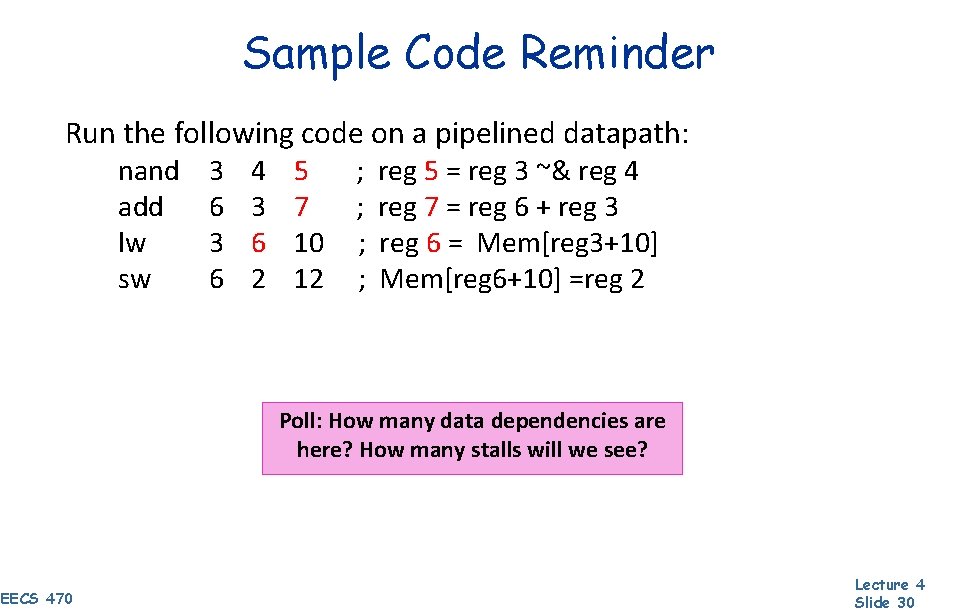

Sample Code Reminder

Run the following code on a pipelined datapath:

    nand 3 4 5   ; reg 5 = reg 3 ~& reg 4
    add 6 3 7    ; reg 7 = reg 6 + reg 3
    lw 3 6 10    ; reg 6 = Mem[reg 3+10]
    sw 6 2 12    ; Mem[reg 6+12] = reg 2

Poll: How many data dependencies are here? How many stalls will we see?
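One way to check an answer to the first poll question is to enumerate read-after-write dependences mechanically. The sketch below uses a hypothetical `(opcode, sources, dest)` encoding of the four instructions above; it reports only RAW dependences and only the most recent writer of each register.

```python
def raw_dependencies(program):
    """Return (producer_index, consumer_index, register) for each RAW dependence."""
    last_writer = {}   # register -> index of the most recent instruction writing it
    deps = []
    for i, (_, sources, dest) in enumerate(program):
        for reg in sources:
            if reg in last_writer:
                deps.append((last_writer[reg], i, reg))
        if dest is not None:
            last_writer[dest] = i
    return deps

program = [
    ("nand", (3, 4), 5),
    ("add", (6, 3), 7),
    ("lw", (3,), 6),      # address register is the only source
    ("sw", (6, 2), None), # sw reads both registers and writes none
]

for producer, consumer, reg in raw_dependencies(program):
    print(f"{program[consumer][0]} reads r{reg} written by {program[producer][0]}")
```

The walkthrough slides that follow run this code with add 1 2 3 still in flight ahead of the nand; prepending `("add", (1, 2), 3)` to `program` models that case.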

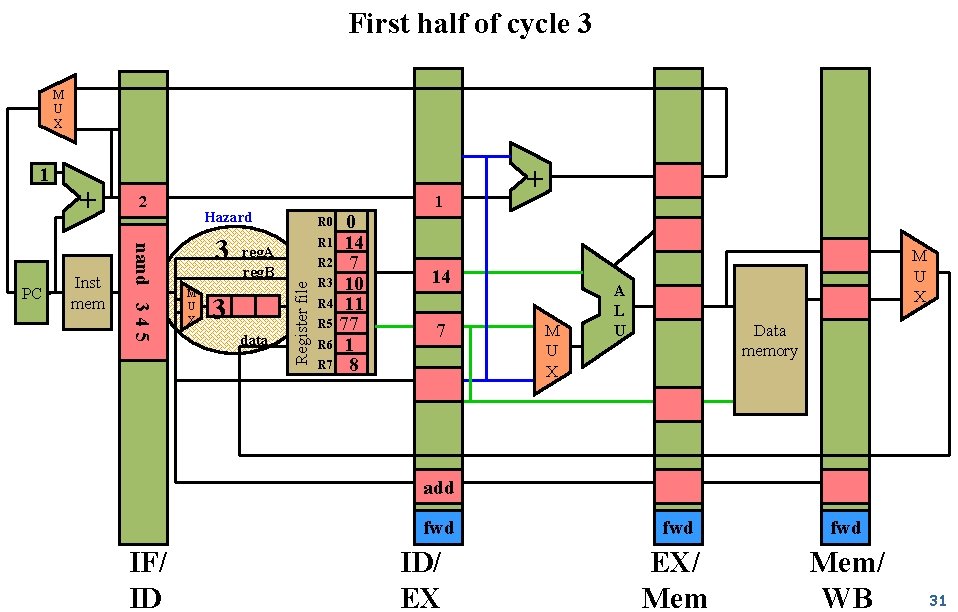

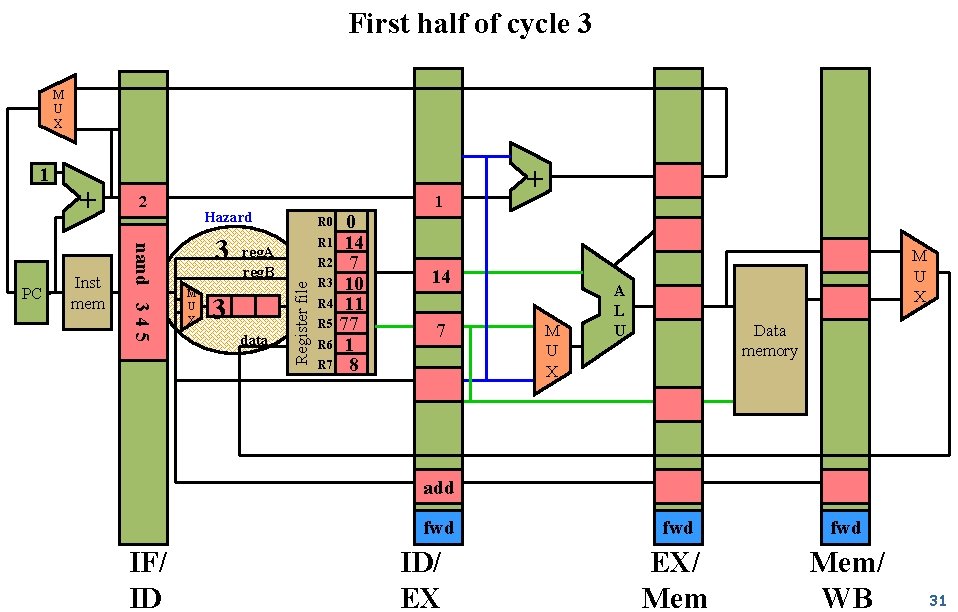

First half of cycle 3

[Datapath snapshot (slide 31): a Hazard on register 3 is detected between nand 3 4 5 in IF/ID and add in ID/EX (valA=14, valB=7); the pipeline registers now carry fwd fields. Register file: R0=0, R1=14, R2=7, R3=10, R4=11, R5=77, R6=1, R7=8.]

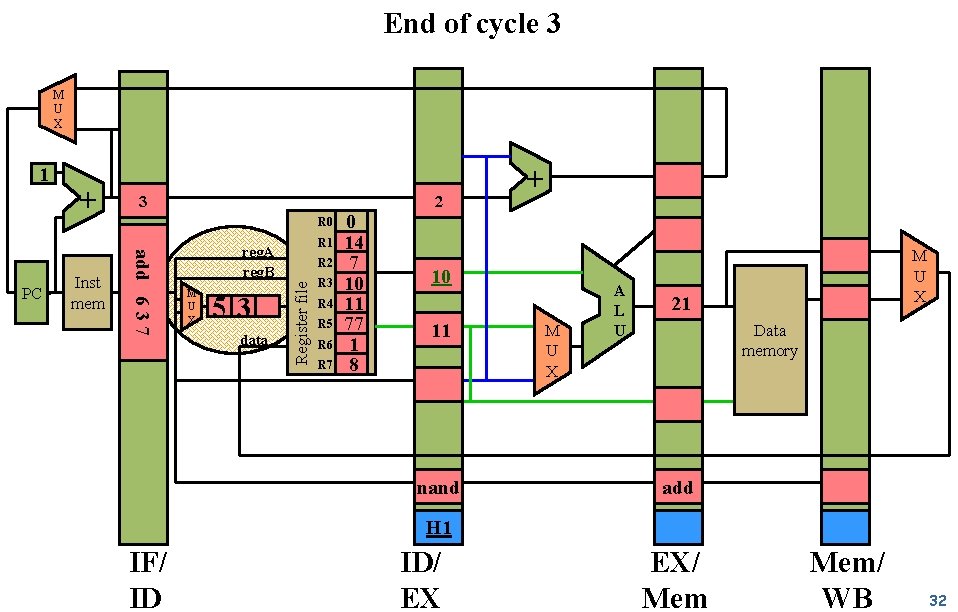

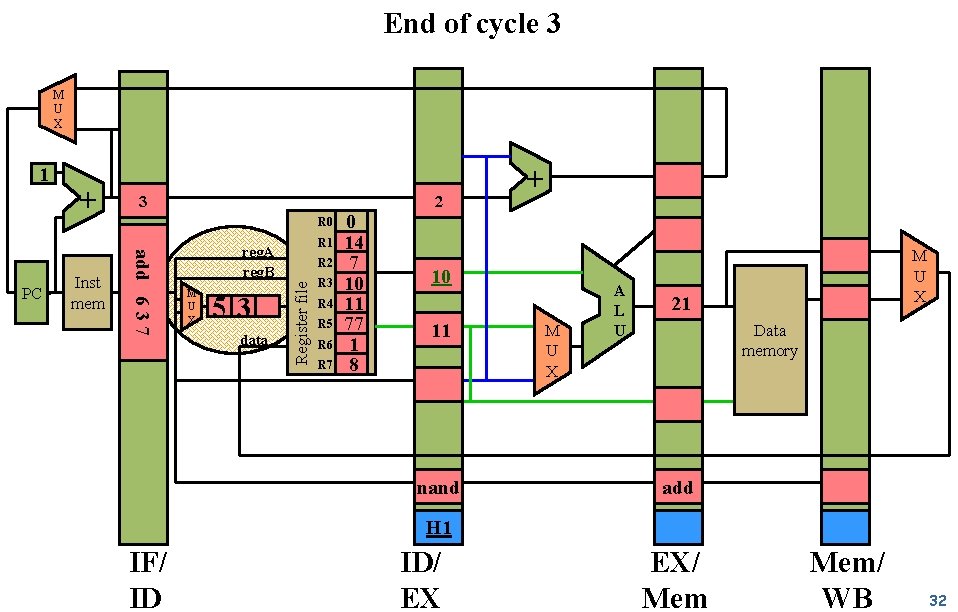

End of cycle 3

[Datapath snapshot (slide 32): add 6 3 7 is in IF/ID; nand is in ID/EX with register-file values 10 and 11 and hazard flag H1; add is in EX/Mem with ALU result 21.]

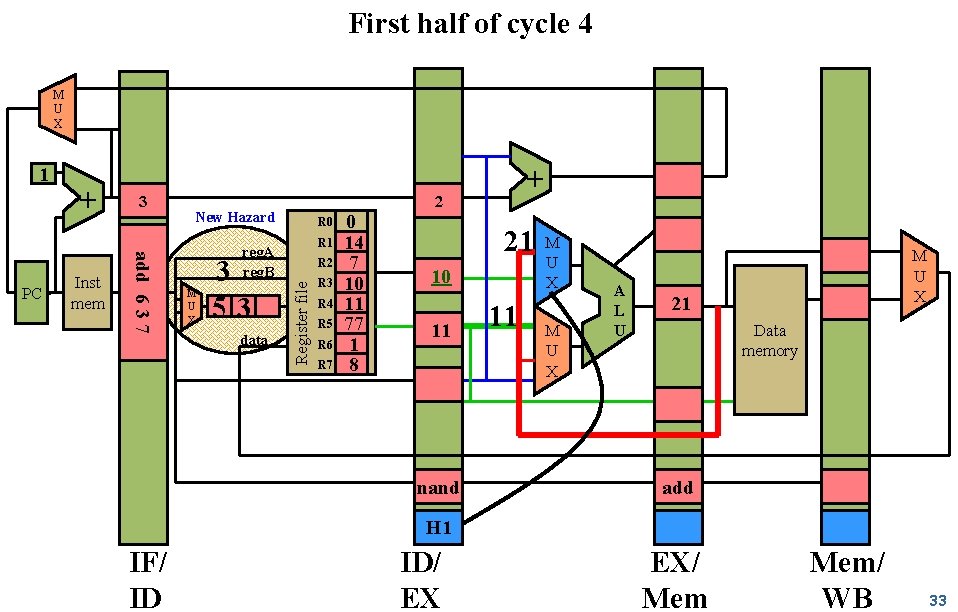

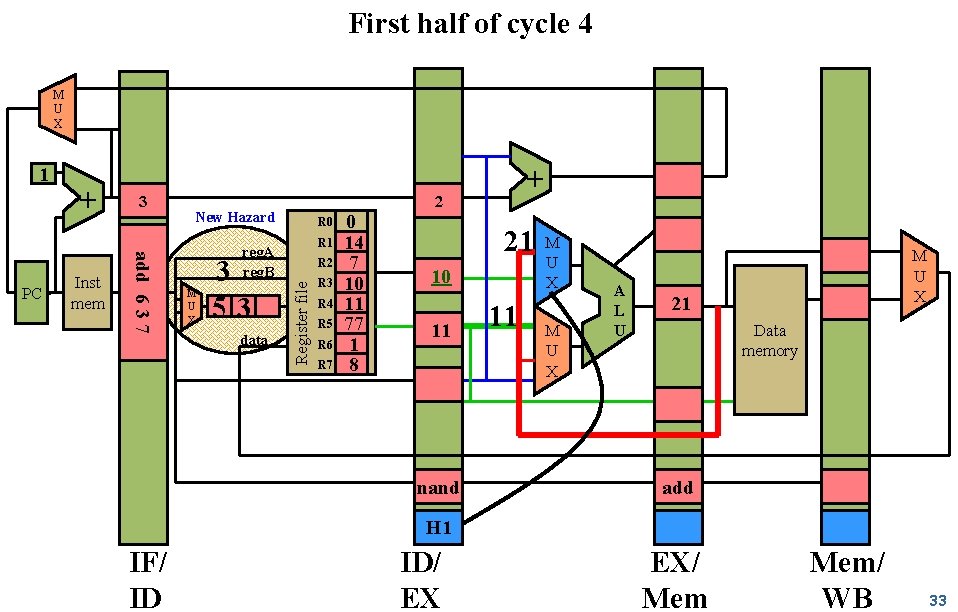

First half of cycle 4

[Datapath snapshot (slide 33): a New Hazard on register 3 is detected for add 6 3 7 in IF/ID. For nand in Execute, 21 from EX/Mem is selected at the ALU input in place of the stale 10; the other input is 11.]

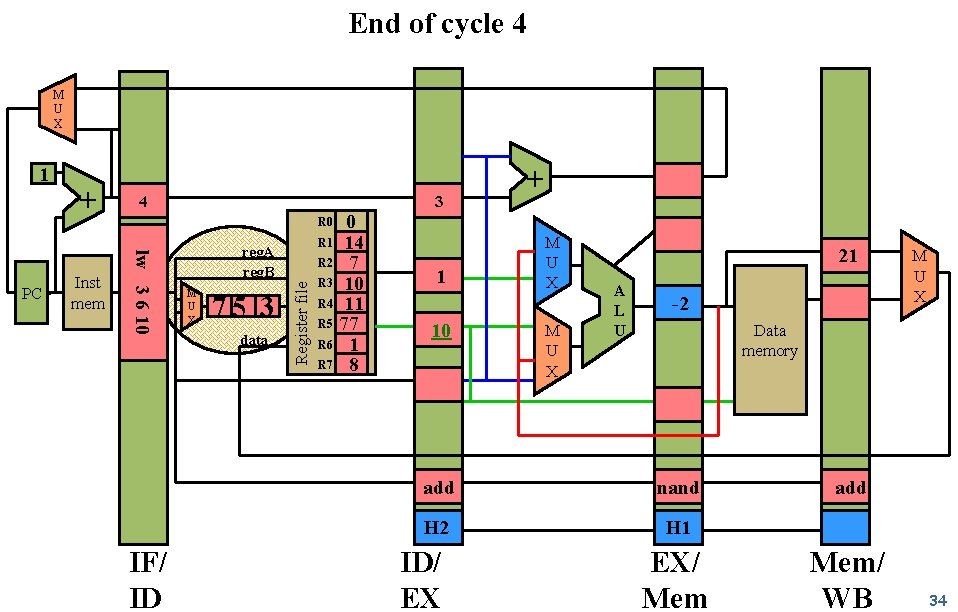

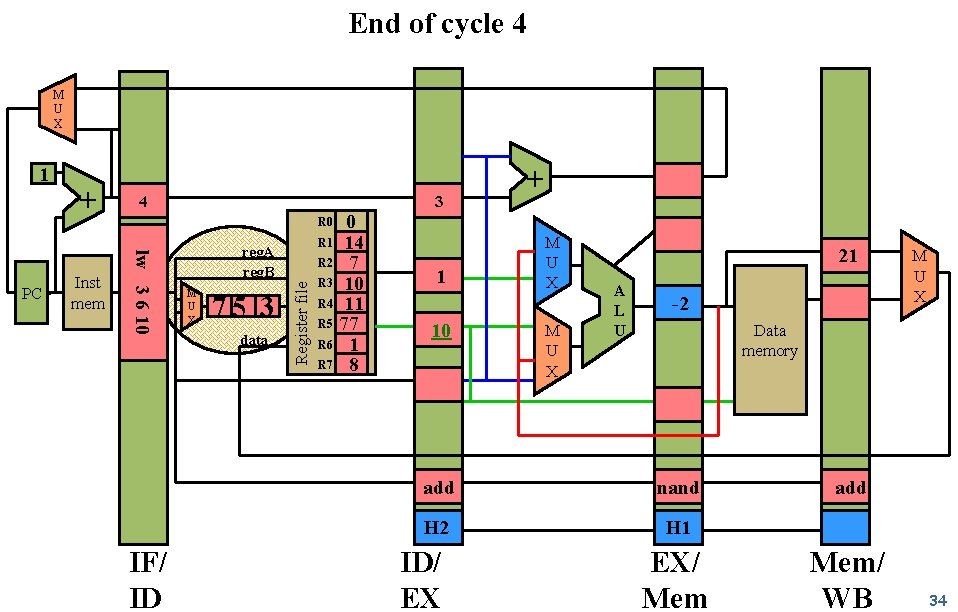

End of cycle 4

[Datapath snapshot (slide 34): lw 3 6 10 is in IF/ID; add is in ID/EX with register-file values 1 and 10; nand is in EX/Mem with ALU result -2; the first add is in Mem/WB with 21. Hazard flags H2 and H1 are shown in the pipeline registers.]

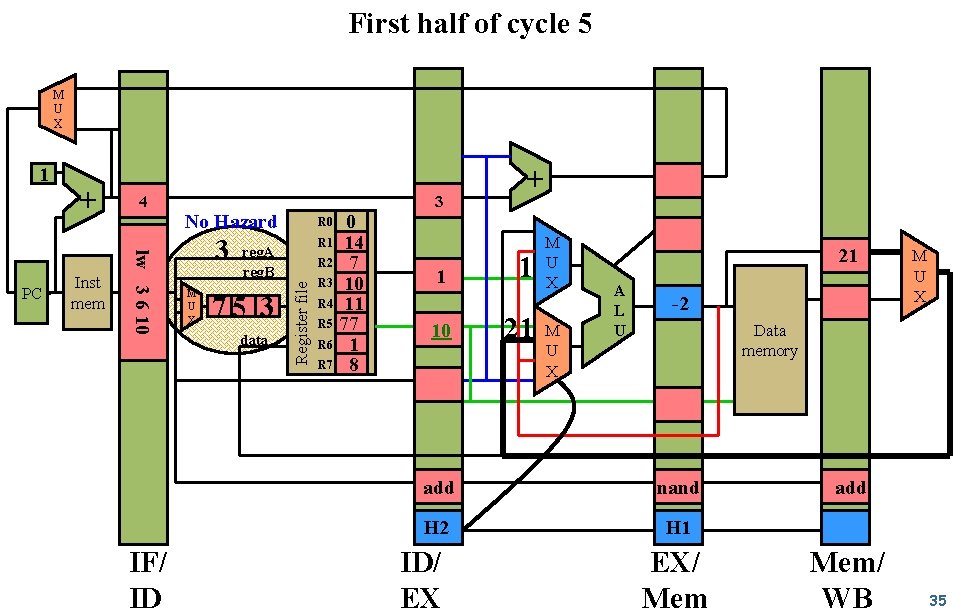

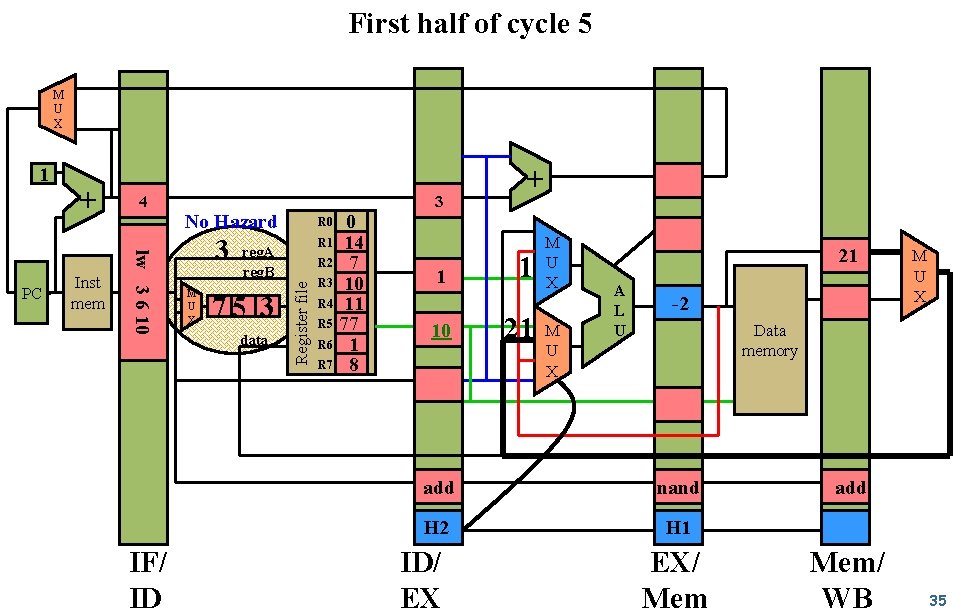

First half of cycle 5

[Datapath snapshot (slide 35): No Hazard is reported for lw 3 6 10 on register 3. For add in Execute, 21 from Mem/WB is selected at the ALU input alongside 1.]

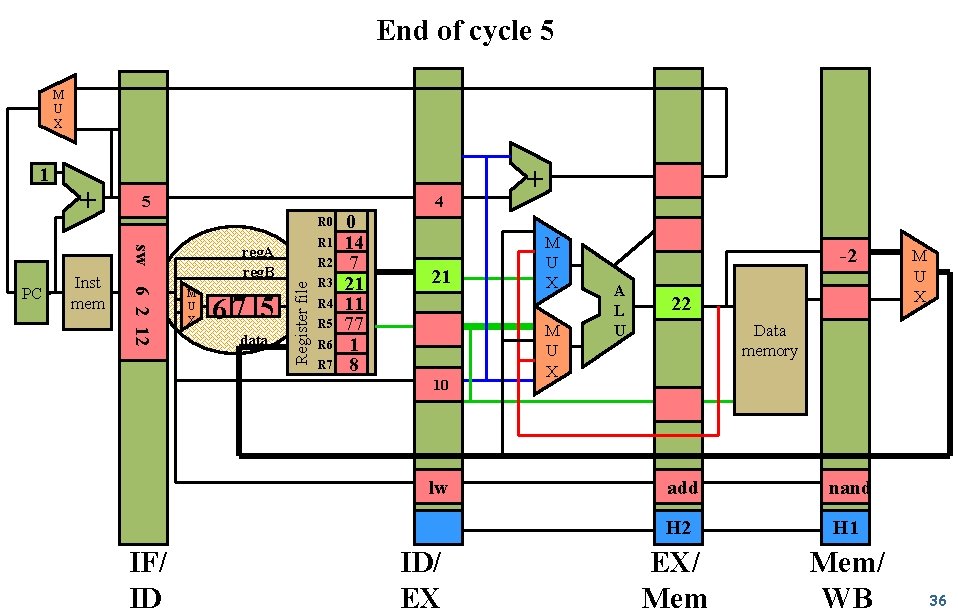

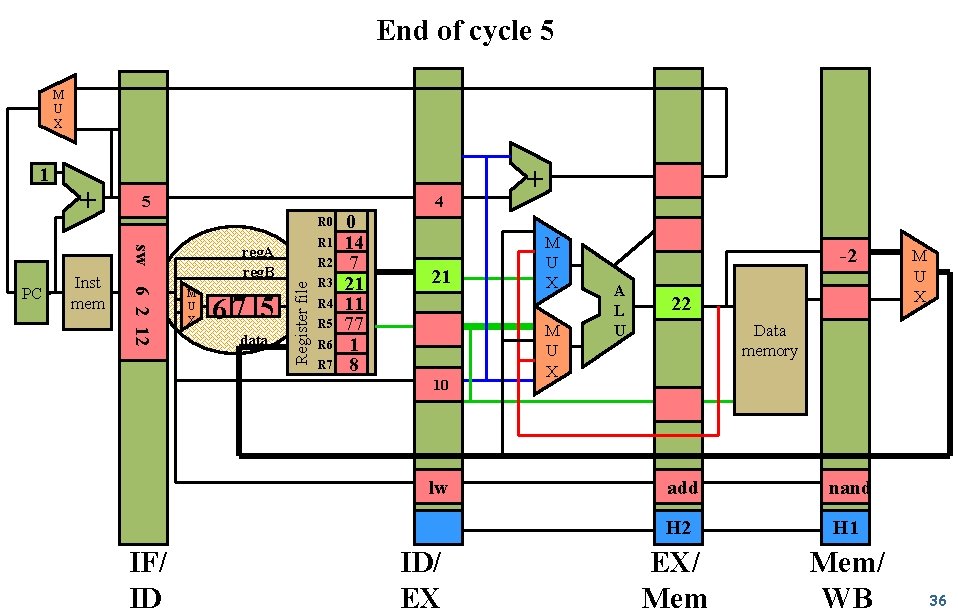

End of cycle 5

[Datapath snapshot (slide 36): sw 6 2 12 is in IF/ID; lw is in ID/EX with values 21 and 10; add is in EX/Mem with ALU result 22; nand is in Mem/WB with -2. R3 is now 21.]

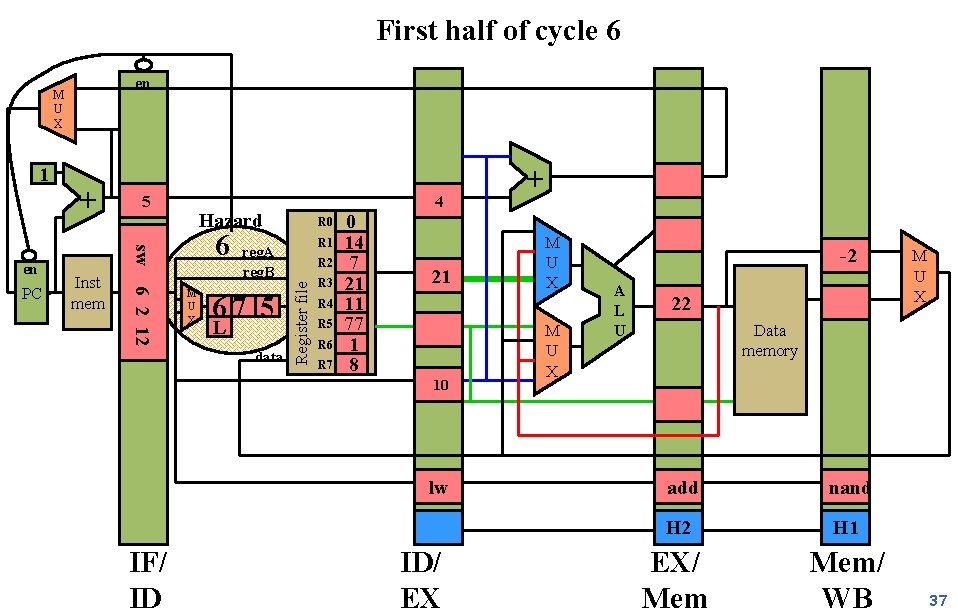

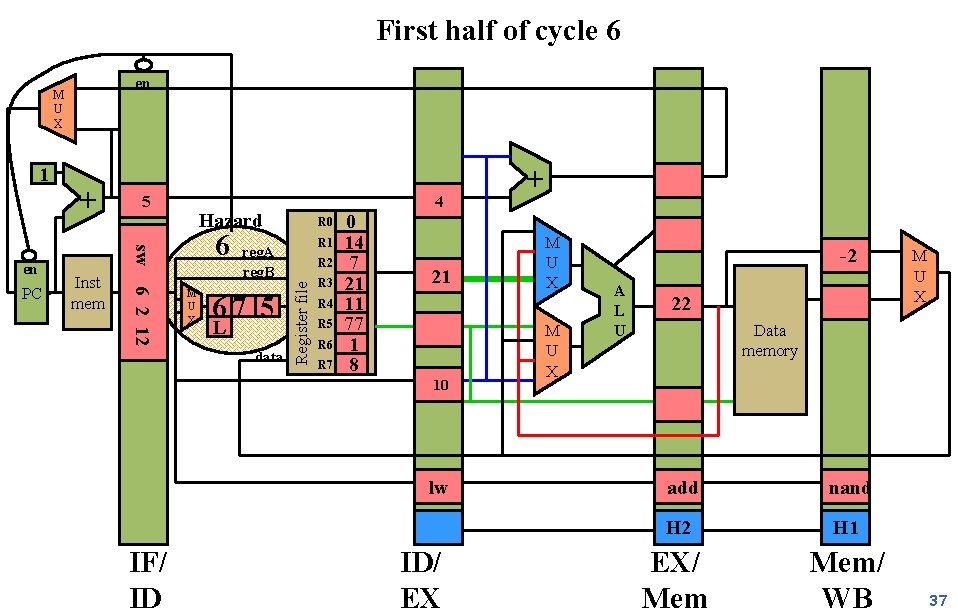

First half of cycle 6

[Datapath snapshot (slide 37): a Hazard on register 6 is detected between sw 6 2 12 in IF/ID and lw in ID/EX; the enables (en) hold the PC and IF/ID.]

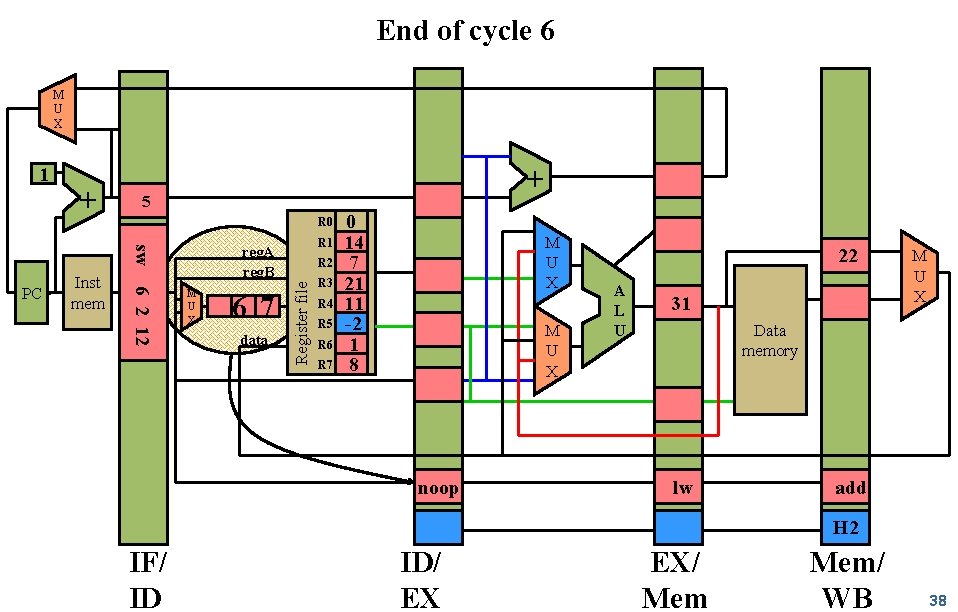

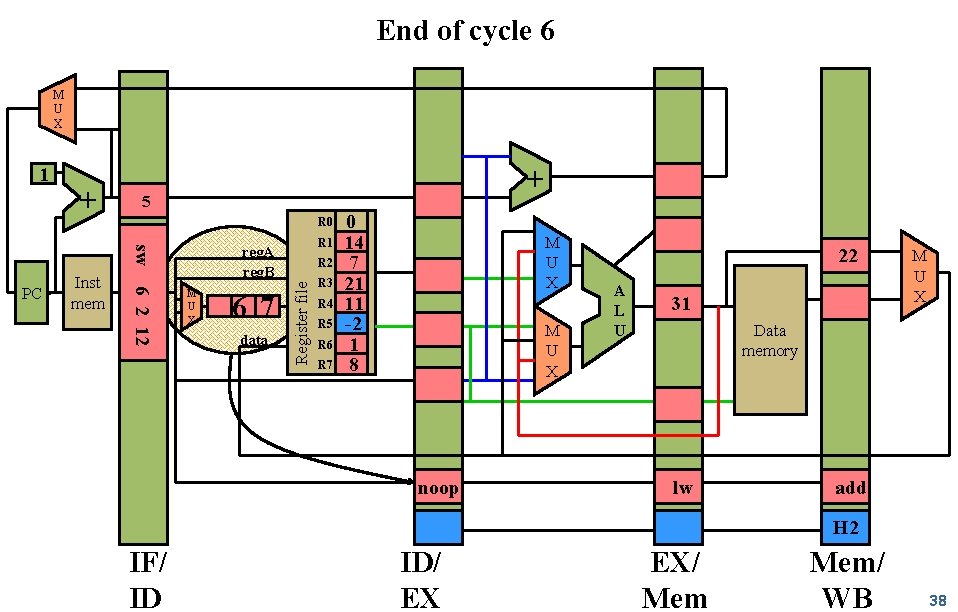

End of cycle 6

[Datapath snapshot (slide 38): sw 6 2 12 is still in IF/ID; a noop is in ID/EX; lw is in EX/Mem with ALU result 31; add is in Mem/WB with 22. R5 is now -2.]

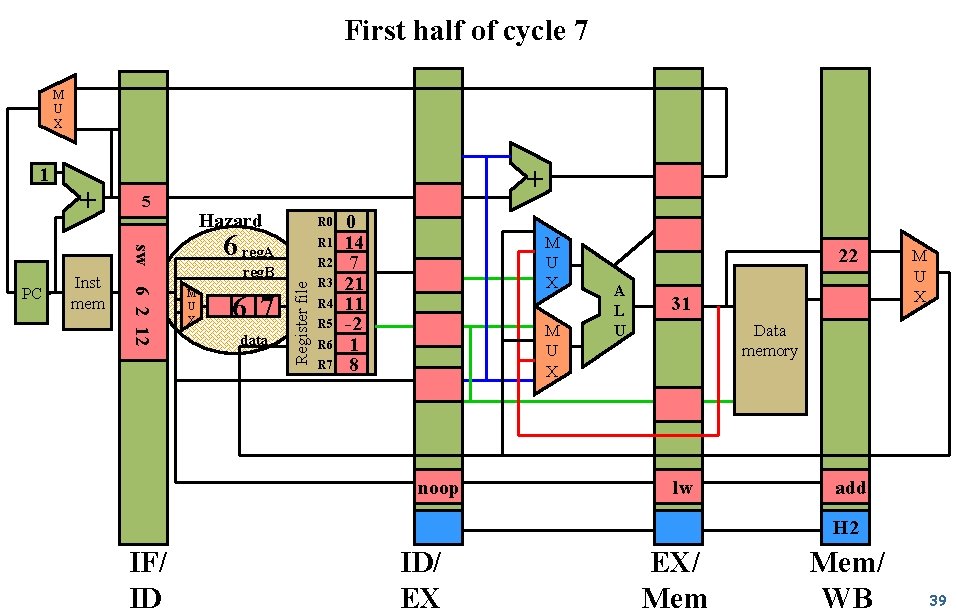

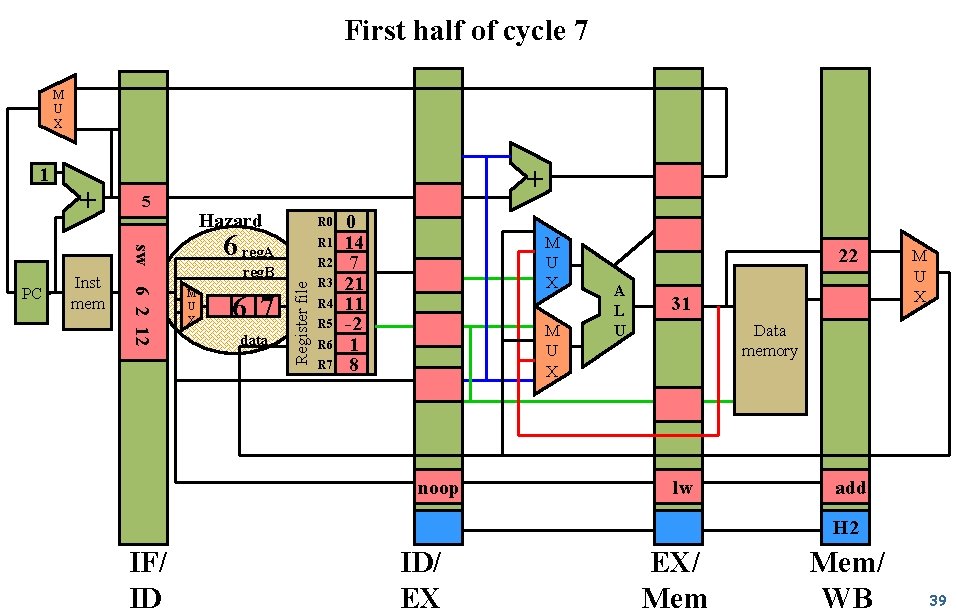

First half of cycle 7

[Datapath snapshot (slide 39): a Hazard on register 6 is detected for sw 6 2 12 in IF/ID; a noop is in ID/EX and lw is in EX/Mem with ALU result 31.]

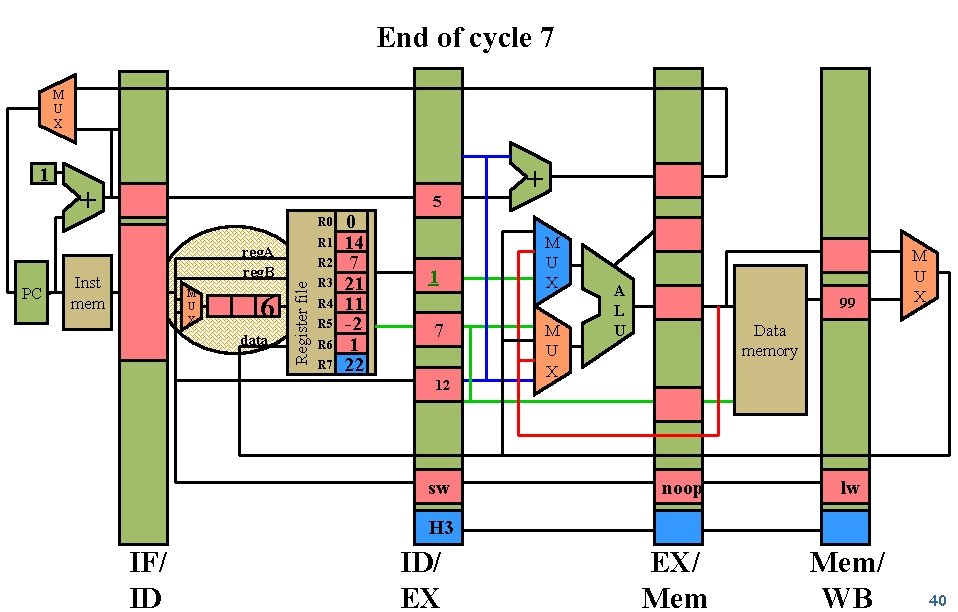

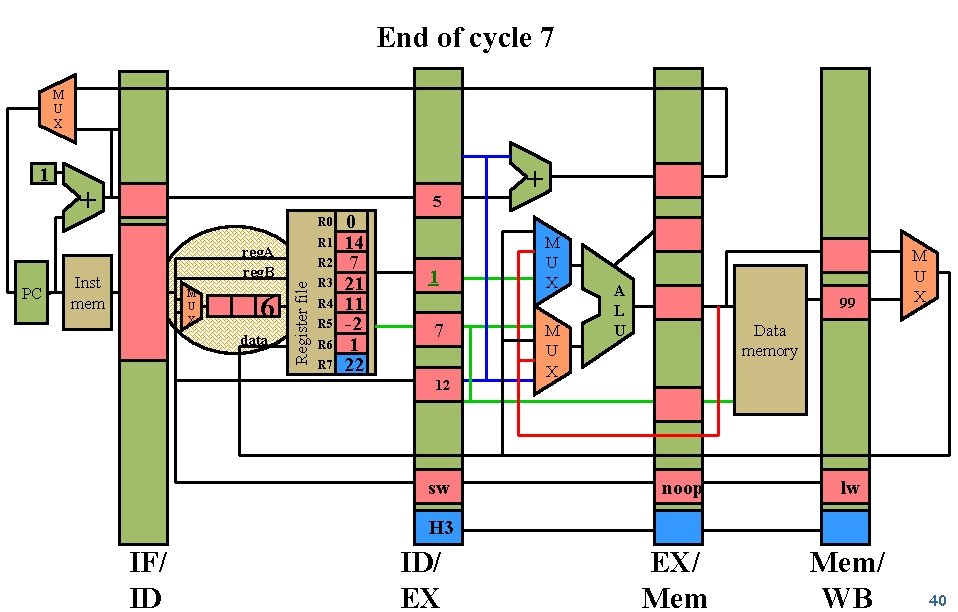

End of cycle 7

[Datapath snapshot (slide 40): sw is in ID/EX with register-file values 1 and 7, offset 12, and hazard flag H3; a noop is in EX/Mem; lw is in Mem/WB with loaded data 99.]

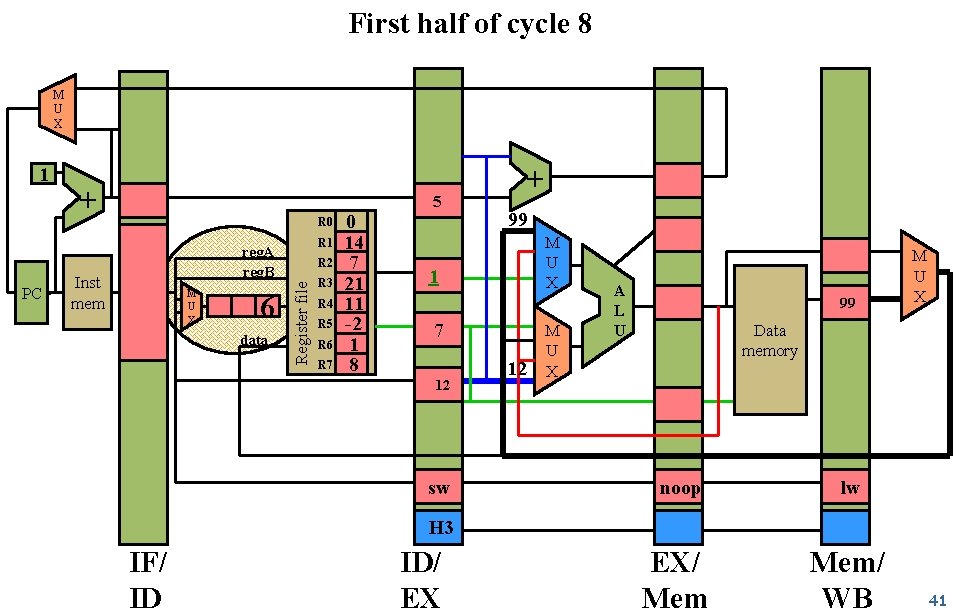

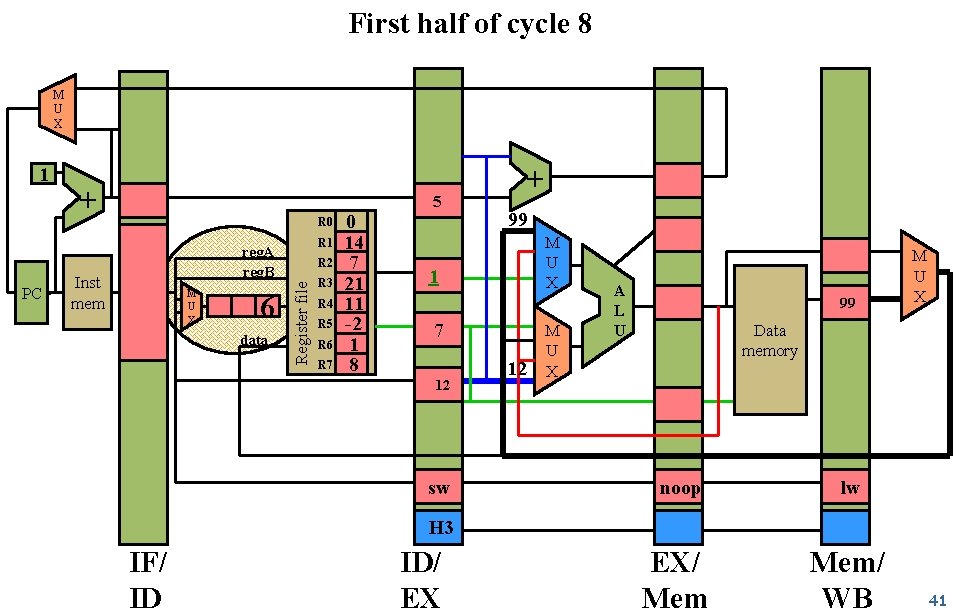

First half of cycle 8

[Datapath snapshot (slide 41): for sw in Execute, 99 from Mem/WB is selected at the ALU input in place of the stale 1; the other input is the offset 12.]

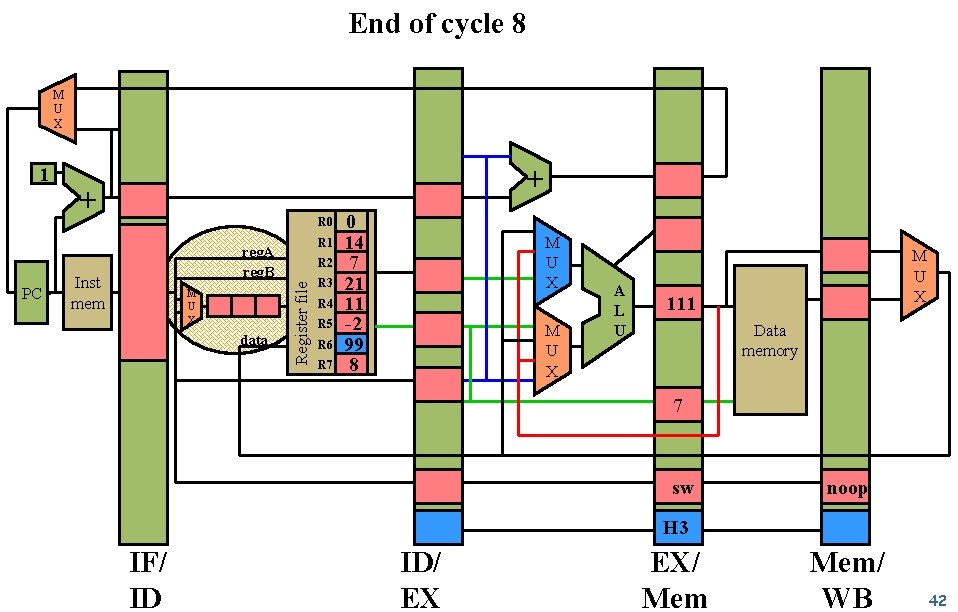

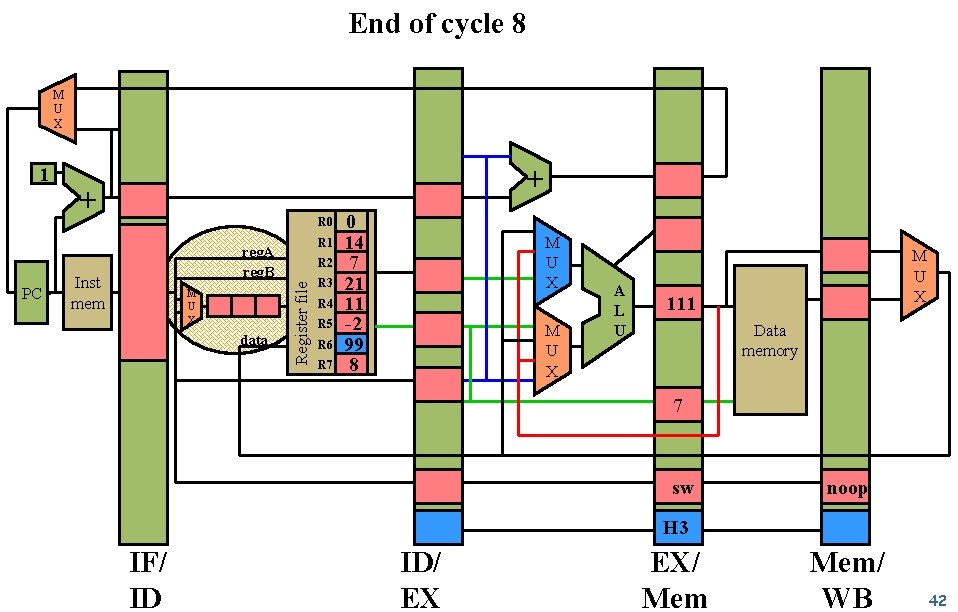

End of cycle 8

[Datapath snapshot (slide 42): sw is in EX/Mem with ALU result 111 and store data 7; a noop is behind it. R6 is now 99.]
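The walkthrough above needs only one stall once forwarding is in place: the sw that immediately follows the lw producing its address register. A rough model of that rule is sketched below, assuming every other dependence is covered by forwarding and using a hypothetical `(opcode, sources, dest)` encoding.

```python
def count_load_use_stalls(program):
    """Count stalls on a forwarding pipeline where only lw results arrive late."""
    stalls = 0
    prev = None                        # instruction directly ahead (in ID/EX)
    for insn in program:
        op, sources, dest = insn
        if prev is not None and prev[0] == "lw" and prev[2] in sources:
            stalls += 1                # one bubble, then forward from Mem/WB
        prev = insn
    return stalls

program = [
    ("add", (1, 2), 3),
    ("nand", (3, 4), 5),
    ("add", (6, 3), 7),
    ("lw", (3,), 6),
    ("sw", (6, 2), None),
]
print(count_load_use_stalls(program))   # 1
```

The values seen on the slides are consistent with this: 14 + 7 = 21, ~(21 & 11) = -2, 1 + 21 = 22, 21 + 10 = 31, and 99 + 12 = 111.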

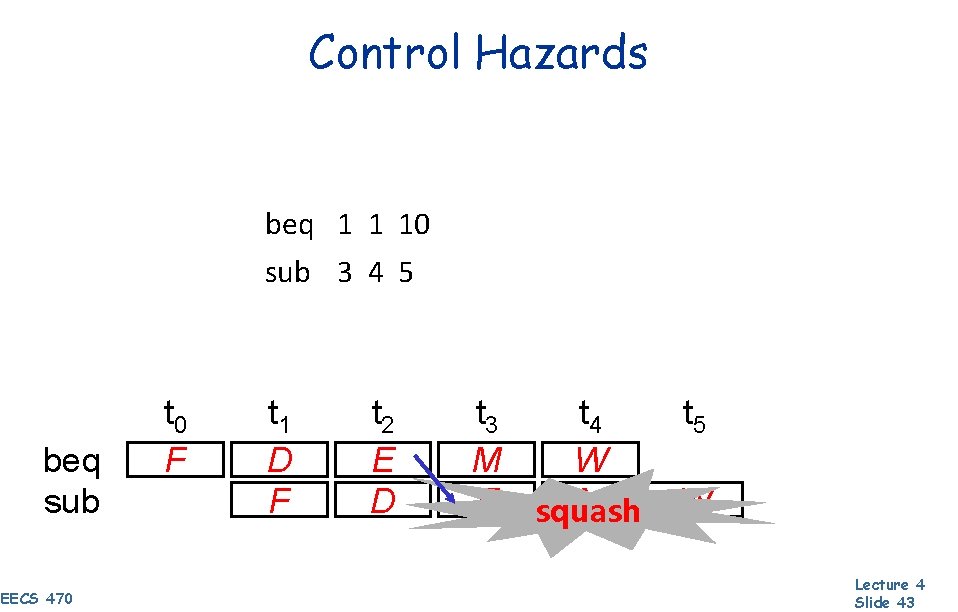

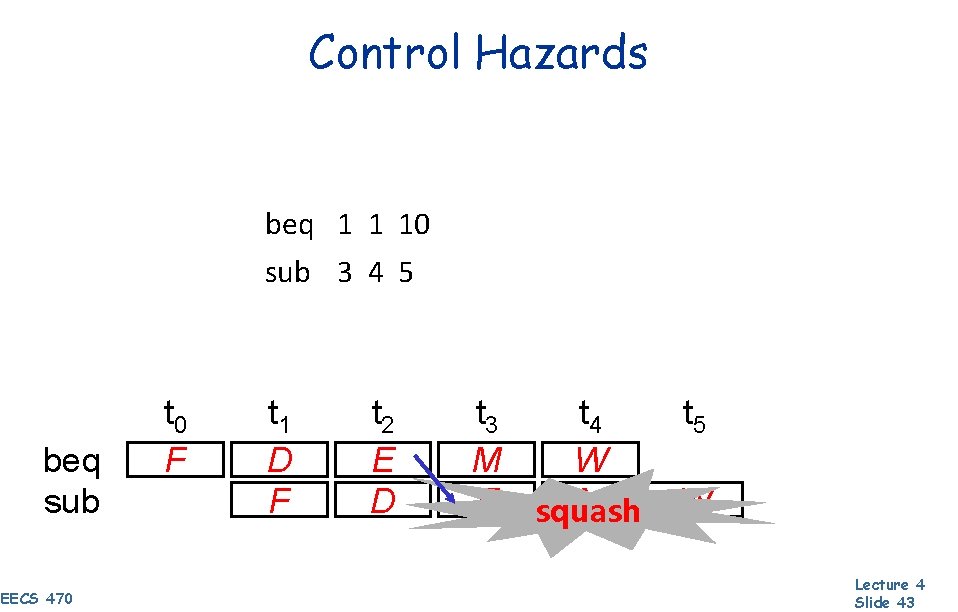

Control Hazards

    beq 1 1 10
    sub 3 4 5

|     | t0 | t1 | t2 | t3 | t4 | t5 |
|-----|----|----|----|----|----|----|
| beq | F  | D  | E  | M  | W  |    |
| sub |    | F  | D  | E  | M  | W  |

The slide marks sub with "squash".

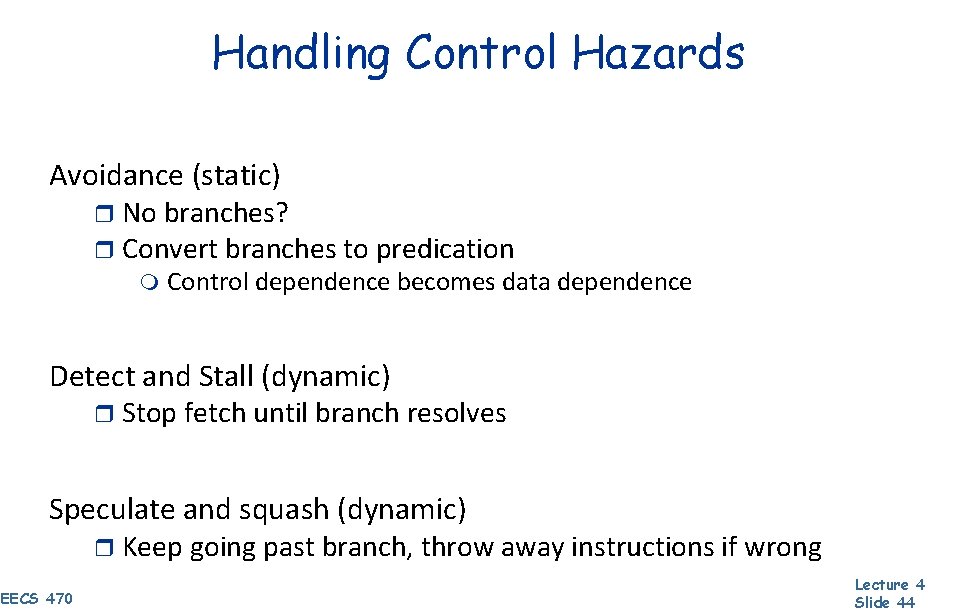

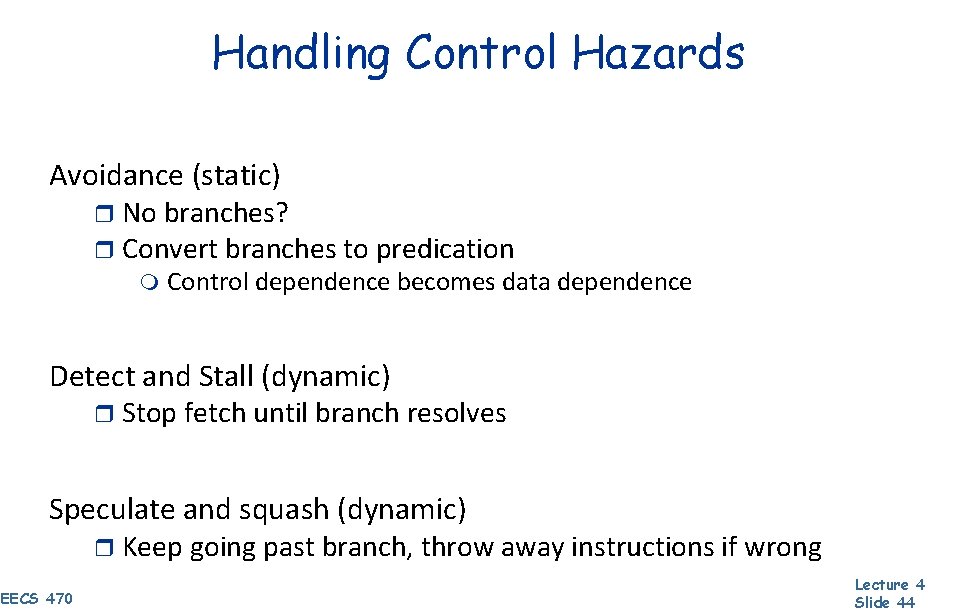

Handling Control Hazards

Avoidance (static)

- No branches?
- Convert branches to predication
  - Control dependence becomes data dependence

Detect and Stall (dynamic)

- Stop fetch until branch resolves

Speculate and squash (dynamic)

- Keep going past branch, throw away instructions if wrong

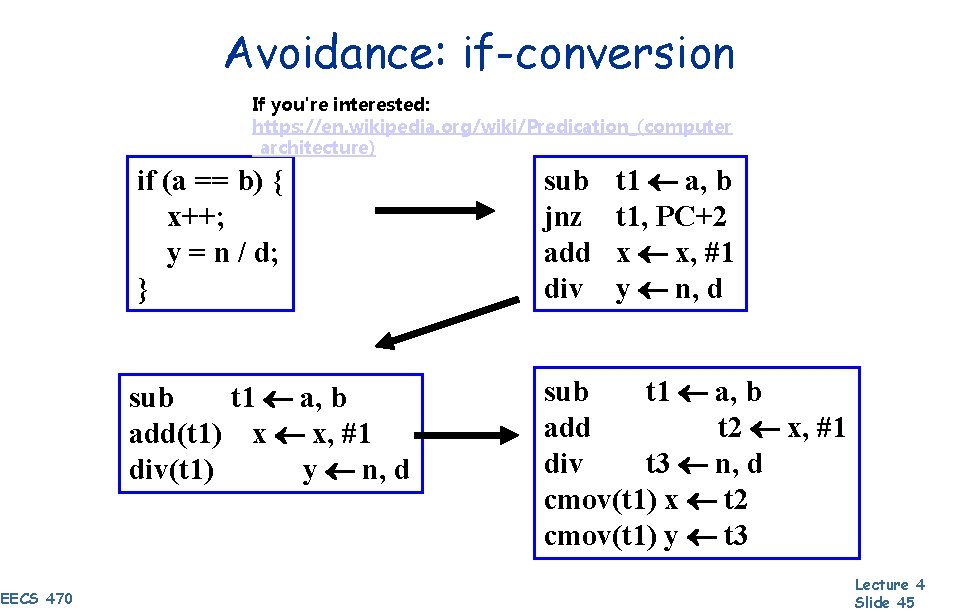

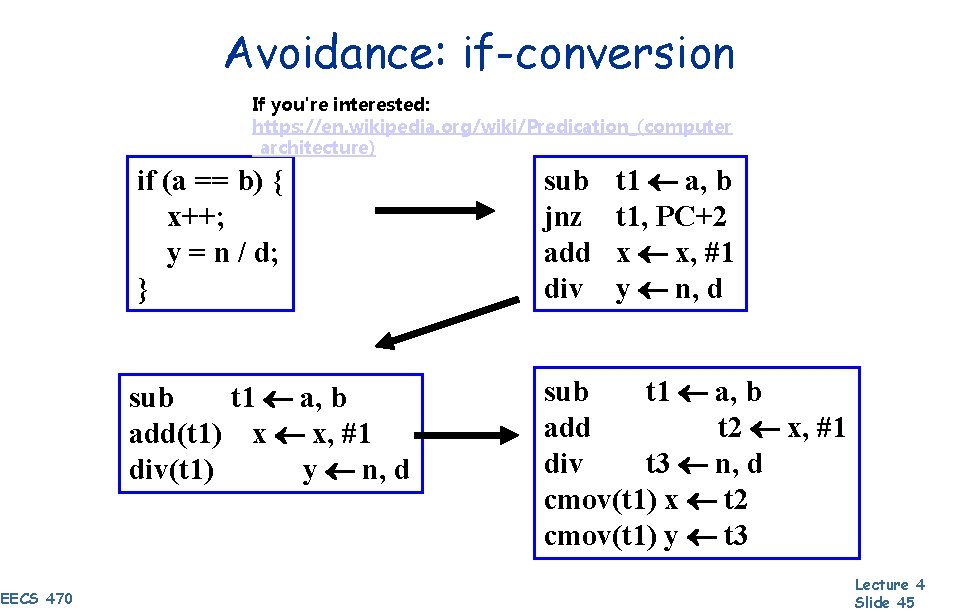

Avoidance: if-conversion

If you're interested: https://en.wikipedia.org/wiki/Predication_(computer_architecture)

Source code:

    if (a == b) { x++; y = n / d; }

With a branch:

    sub t1 a, b
    jnz t1, PC+2
    add x x, #1
    div y n, d

With predicated instructions:

    sub t1 a, b
    add(t1) x x, #1
    div(t1) y n, d

With conditional moves:

    sub t1 a, b
    add t2 x, #1
    div t3 n, d
    cmov(t1) x t2
    cmov(t1) y t3
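The conditional-move version can be mimicked in software to see that it computes the same result without a branch around the body. This is an analogy only: Python's conditional expression stands in for cmov, `//` stands in for the integer divide, the predicate is interpreted as "a equals b", and the function names are made up. Note the assumption that `d` is nonzero, since the if-converted version divides unconditionally.

```python
def with_branch(a, b, x, y, n, d):
    """Original control flow: the body runs only when a == b."""
    if a == b:
        x = x + 1
        y = n // d
    return x, y

def if_converted(a, b, x, y, n, d):
    """cmov-style version: compute both results, then select."""
    t1 = (a - b) == 0      # sub t1 a, b (predicate: true when a == b)
    t2 = x + 1             # add t2 x, #1, executes unconditionally
    t3 = n // d            # div t3 n, d, executes unconditionally (needs d != 0)
    x = t2 if t1 else x    # cmov(t1) x t2
    y = t3 if t1 else y    # cmov(t1) y t3
    return x, y

print(with_branch(2, 2, 5, 0, 12, 4), if_converted(2, 2, 5, 0, 12, 4))   # (6, 3) (6, 3)
print(with_branch(1, 2, 5, 0, 12, 4), if_converted(1, 2, 5, 0, 12, 4))   # (5, 0) (5, 0)
```

The control dependence on the branch has become a data dependence on `t1`, at the cost of always executing the add and the divide.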

Handling Control Hazards: Detect & Stall

Detection

- In decode, check if opcode is branch or jump

Stall

- Hold next instruction in Fetch
- Pass noop to Decode

Problems with Detect & Stall

CPI increases on every branch

Are these stalls necessary? Not always!

- Branch is only taken half the time
- Assume branch is NOT taken
  - Keep fetching, treat branch as noop
  - If wrong, make sure bad instructions don’t complete

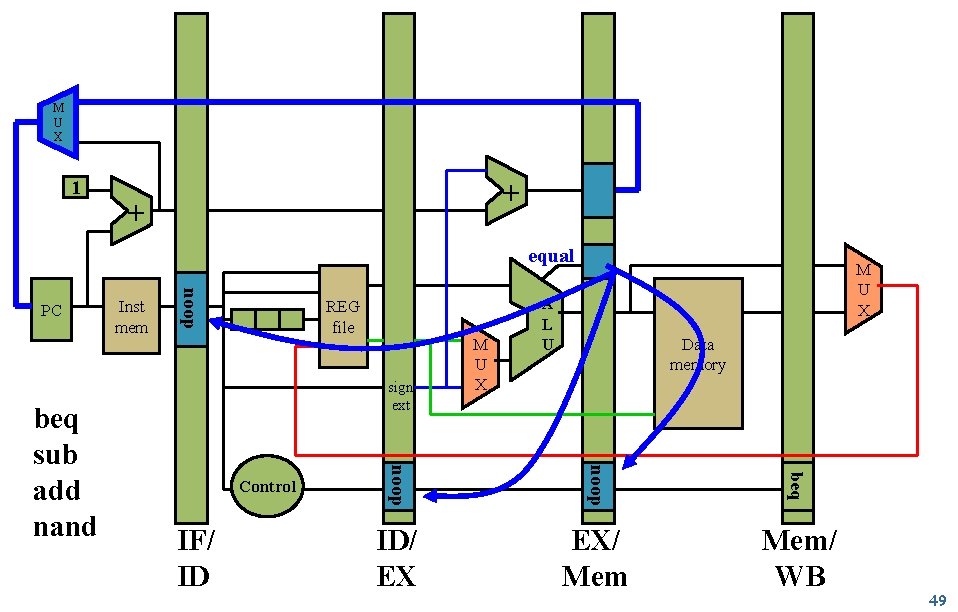

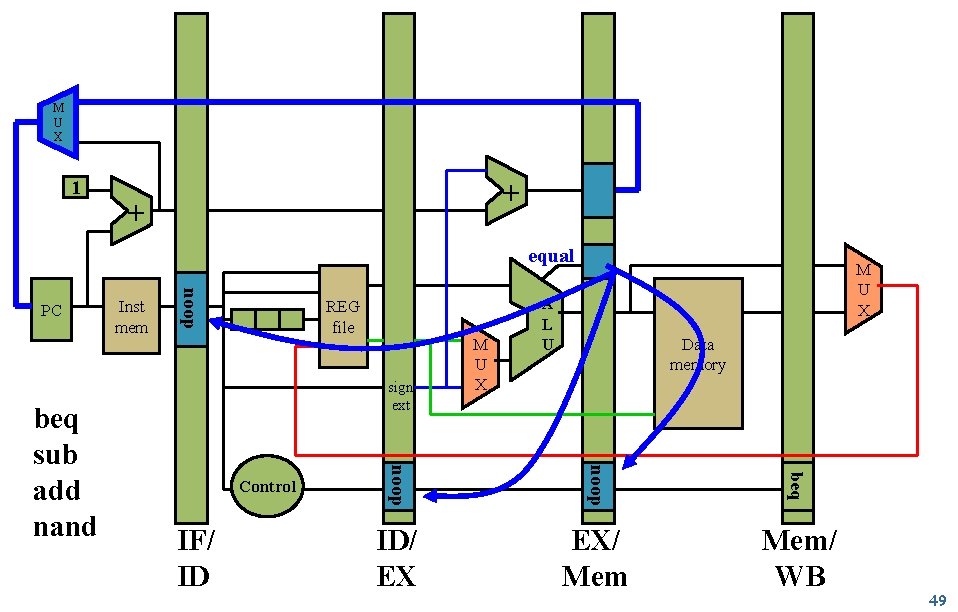

Handling Control Hazards: Speculate & Squash

Speculate

- Assume branch is not taken

Squash

- Overwrite opcodes in Fetch, Decode, Execute with noop
- Pass target to Fetch
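A back-of-the-envelope CPI comparison between stalling on every branch and speculating not-taken. The formulas are a simplified model, and the numbers are assumptions: a 3-cycle penalty (matching the three squashed stages, and assumed to be the same for stalling), branches taken half the time as stated earlier, and a purely hypothetical 20% branch frequency.

```python
def cpi_detect_and_stall(branch_fraction, penalty):
    """Every branch pays the full penalty while fetch waits."""
    return 1 + branch_fraction * penalty

def cpi_speculate_not_taken(branch_fraction, taken_fraction, penalty):
    """Only taken branches pay, because only then is the guess wrong."""
    return 1 + branch_fraction * taken_fraction * penalty

BRANCH_FRACTION = 0.2   # hypothetical workload: 1 in 5 instructions is a branch
TAKEN_FRACTION = 0.5    # "branch is only taken half the time"
PENALTY = 3             # instructions in Fetch, Decode, Execute are squashed

print(round(cpi_detect_and_stall(BRANCH_FRACTION, PENALTY), 2))
print(round(cpi_speculate_not_taken(BRANCH_FRACTION, TAKEN_FRACTION, PENALTY), 2))
```

Under this model, speculation pays off in proportion to how often the not-taken guess is right.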

[Figure (slide 49): speculate-and-squash datapath with the beq in flight; the sub, add, and nand fetched after it are shown being replaced with noops.]

Problems with Speculate & Squash

Always assumes branch is not taken

Can we do better? Yes.

- Predict branch direction and target!
- Why possible? Program behavior repeats.

More on branch prediction to come...

Next Time

- Going one step beyond pipelining: dynamic scheduling (a.k.a. out-of-order processing)
  - Introduce a specific algorithm: scoreboard scheduling
- Lingering questions / feedback? I'll include an anonymous form at the end of every lecture: https://bit.ly/3oSr5FD