EECS 470 Lecture 19 Simultaneous Multithreading Winter 2021

- Slides: 27

Jon Beaumont, http://www.eecs.umich.edu/courses/eecs470

Slides developed in part by Profs. Falsafi, Hill, Hoe, Lipasti, Martin, Roth, Shen, Smith, Sohi, and Vijaykumar of Carnegie Mellon University, Purdue University, University of Pennsylvania, and University of Wisconsin.

EECS 470 Lecture 21 Slide 1

Announcements
- Project due Friday
- Written report due next Tuesday via Gradescope
  - Describe the project, what you've implemented, and how you tested your design (7%)
  - In-depth analysis of your design and its performance (10%)
    - Think about which programs we care about performance on!
    - halt.s, btest.s… not so much
  - Include % contributions of each member
    - If you can't come to a consensus, email me
  - See spec for more details

Announcements
- Presentations
- Homework #5 due next Tuesday
  - Dropping lowest assignment

Last Time
- Learned how to exploit Thread Level Parallelism (TLP) by running multiple threads on multiple cores
- Two problems:
  - Multiple caches mean they can get out of sync, or "incoherent"
    - Fix with coherence protocols: caches send messages to invalidate stale data
  - Out-of-order mechanisms make it harder to ensure an "intuitive" ordering of memory operations across threads
    - Define a specific "consistency" model and require the programmer to specify any additional memory orderings needed
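The invalidation idea from last time can be sketched in a few lines of Python. This is a hypothetical, deliberately simplified model (write-through caches snooping one shared `Bus`, no MSI/MESI states); `Bus` and `Cache` are illustrative names, not from the lecture. It shows why invalidating the other copies on a write keeps a later read from returning stale data.

```python
# Minimal write-invalidate sketch (hypothetical model, not MSI/MESI):
# write-through caches that all snoop one shared bus and memory.

class Bus:
    def __init__(self):
        self.memory = {}   # addr -> value
        self.caches = []   # every cache attached to (snooping) this bus

    def invalidate(self, addr, requester):
        # Broadcast an invalidation: every other cache drops its copy.
        for cache in self.caches:
            if cache is not requester:
                cache.lines.pop(addr, None)


class Cache:
    def __init__(self, bus):
        self.bus = bus
        self.lines = {}    # addr -> cached value
        bus.caches.append(self)

    def read(self, addr):
        if addr not in self.lines:                  # miss: fetch from memory
            self.lines[addr] = self.bus.memory.get(addr, 0)
        return self.lines[addr]

    def write(self, addr, value):
        self.bus.invalidate(addr, requester=self)   # kill other copies first
        self.lines[addr] = value
        self.bus.memory[addr] = value               # write-through keeps memory current


bus = Bus()
core0, core1 = Cache(bus), Cache(bus)
print(core1.read(0x40))  # 0: core1 now holds a copy of 0x40
core0.write(0x40, 7)     # invalidates core1's copy
print(core1.read(0x40))  # 7: core1 misses and refetches the new value
```

Without the `invalidate` call, the second read in core1 would hit on its old copy and return 0.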

Today
- Multicores exploit parallelism when we have many threads, but are useless when we don't
- Any way to improve both TLP and ILP, generally?
- Enter multithreading

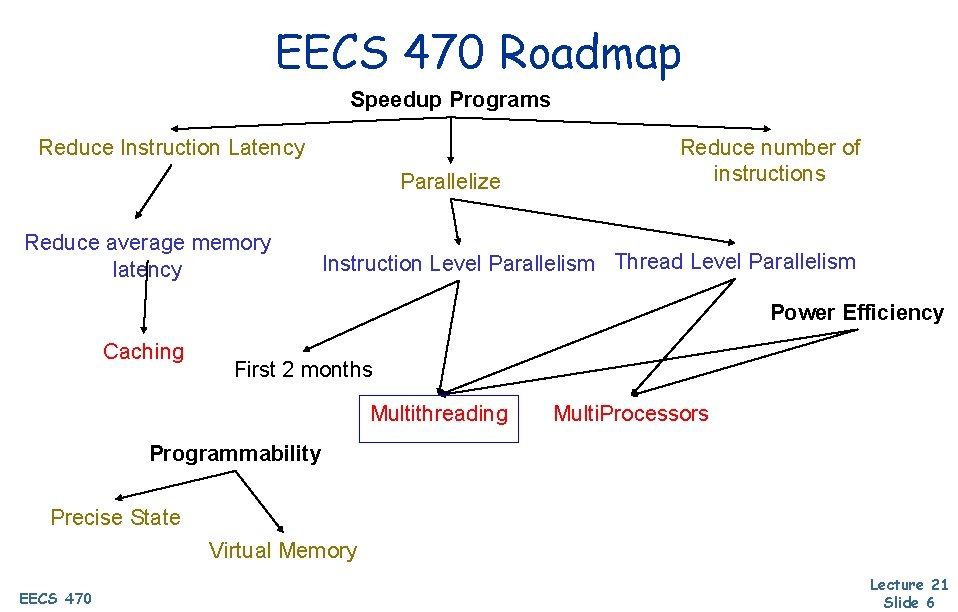

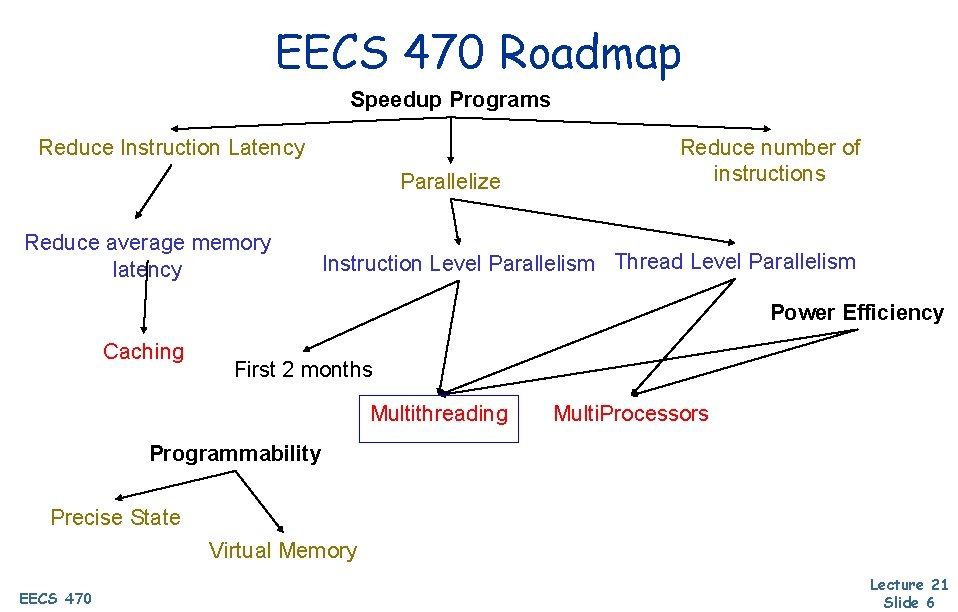

EECS 470 Roadmap (figure): Speedup Programs, Reduce Instruction Latency, Parallelize, Reduce Average Memory Latency, Reduce Number of Instructions, Instruction Level Parallelism, Thread Level Parallelism, Caching, Multithreading, Multiprocessors, Power Efficiency, Programmability, Precise State, Virtual Memory (annotated "First 2 months").

Hardware Multithreading

Threads Reminder
- Thread: a logical sequence of instructions that share register values and an address space
- Hardware may execute multiple instructions:
  - simultaneously (superscalar / pipelining), or
  - out of order (dynamic scheduling)
  - but will always produce the same results as if run on a single-cycle, scalar processor
- Exploit parallelism via ILP across 10s to 100s of instructions
- Multithreading: run multiple threads asynchronously
- Exploit parallelism via TLP across 1000s of instructions

Performance and Utilization
- Performance (IPC) is important
- Utilization (actual IPC / peak IPC) is important too
- Even moderate superscalars (e.g., 4-way) are not fully utilized
  - Average sustained IPC: 1.5 to 2, i.e., at most 50% utilization
    - Mispredicted branches
    - Cache misses, especially L2
    - Data dependences
- Multithreading (MT)
  - Improve utilization by multiplexing multiple threads on a single CPU
  - One thread cannot fully utilize the CPU? Maybe 2, 4 (or 100) can
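As a quick check of the utilization numbers above, here is a tiny Python sketch applying the slide's definition (actual IPC / peak IPC) to a 4-way machine; `utilization` is a hypothetical helper name.

```python
def utilization(actual_ipc: float, peak_ipc: float) -> float:
    """Utilization = actual IPC / peak IPC (definition from the slide)."""
    return actual_ipc / peak_ipc


# A 4-way superscalar sustaining the slide's typical IPC range of 1.5 to 2.
for ipc in (1.5, 2.0):
    print(f"IPC {ipc}: {utilization(ipc, 4):.1%} utilization")
# IPC 1.5: 37.5% utilization
# IPC 2.0: 50.0% utilization
```

So even the good end of the range leaves half of the issue bandwidth unused, which is the slack multithreading tries to fill.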

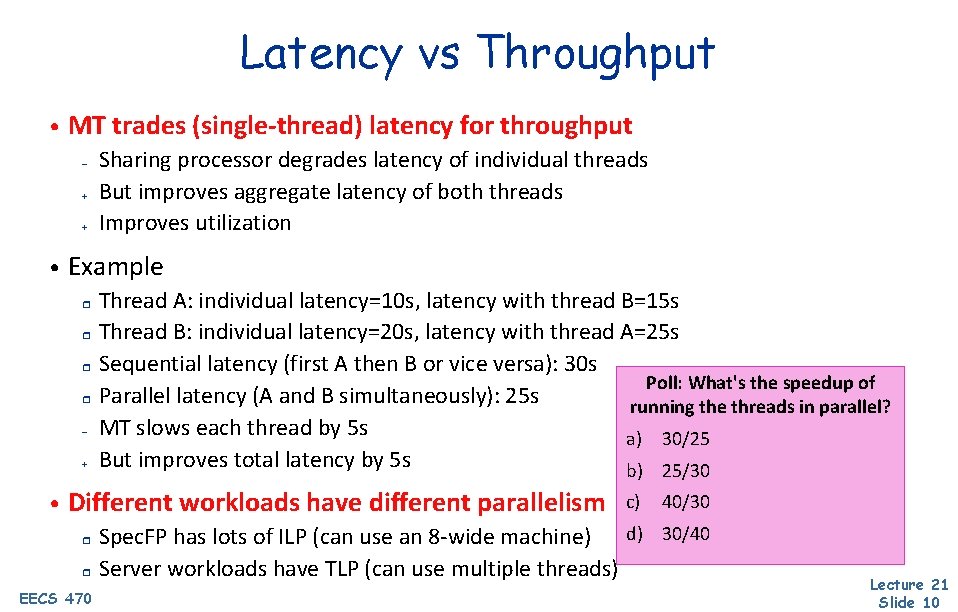

Latency vs Throughput
- MT trades (single-thread) latency for throughput
  - (–) Sharing the processor degrades the latency of individual threads
  - (+) But improves the aggregate latency of both threads
  - (+) Improves utilization
- Example
  - Thread A: individual latency = 10 s, latency with thread B = 15 s
  - Thread B: individual latency = 20 s, latency with thread A = 25 s
  - Sequential latency (first A then B, or vice versa): 30 s
  - Parallel latency (A and B simultaneously): 25 s
  - (–) MT slows each thread by 5 s
  - (+) But improves total latency by 5 s
- Different workloads have different parallelism
  - SpecFP has lots of ILP (can use an 8-wide machine)
  - Server workloads have TLP (can use multiple threads)

Poll: What's the speedup of running the threads in parallel?
- a) 30/25
- b) 25/30
- c) 40/30
- d) 30/40
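The poll can be answered by working the example's numbers directly: speedup is sequential latency over parallel latency, 30 s / 25 s = 1.2, i.e., option a). A minimal Python sketch, assuming (as in the example) that the parallel run is done when the slower thread finishes:

```python
from fractions import Fraction

# Latencies in seconds, taken from the example above.
a_alone, b_alone = 10, 20      # each thread running by itself
a_shared, b_shared = 15, 25    # each thread while sharing the core

sequential = a_alone + b_alone          # run A, then B: 30 s
parallel = max(a_shared, b_shared)      # both done when the slower finishes: 25 s

speedup = Fraction(sequential, parallel)
print(sequential, parallel, speedup, float(speedup))  # 30 25 6/5 1.2
print(a_shared - a_alone, b_shared - b_alone)         # per-thread slowdown: 5 5
```

Each thread individually gets slower by 5 s, yet the pair finishes 5 s sooner, which is exactly the latency-for-throughput trade.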

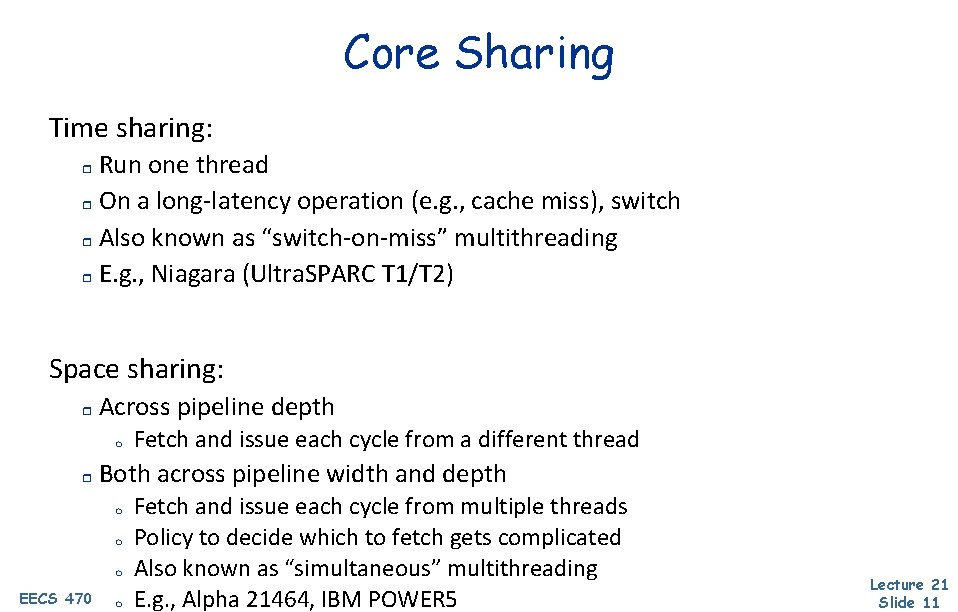

Core Sharing
- Time sharing:
  - Run one thread
  - On a long-latency operation (e.g., cache miss), switch
  - Also known as "switch-on-miss" multithreading
  - E.g., Niagara (UltraSPARC T1/T2)
- Space sharing:
  - Across pipeline depth
    - Fetch and issue each cycle from a different thread
  - Both across pipeline width and depth
    - Fetch and issue each cycle from multiple threads
    - Policy to decide which to fetch gets complicated
    - Also known as "simultaneous" multithreading
    - E.g., Alpha 21464, IBM POWER5

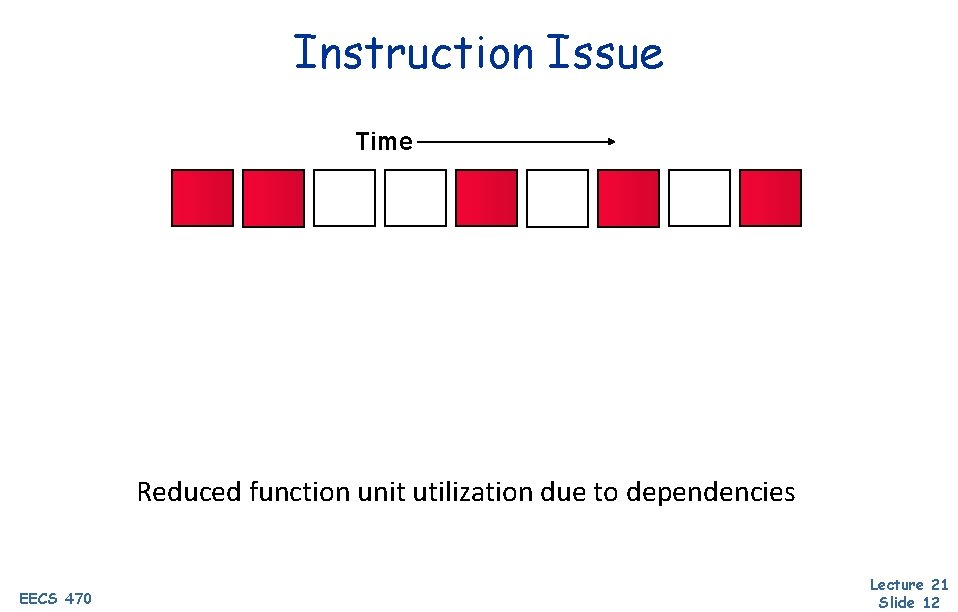

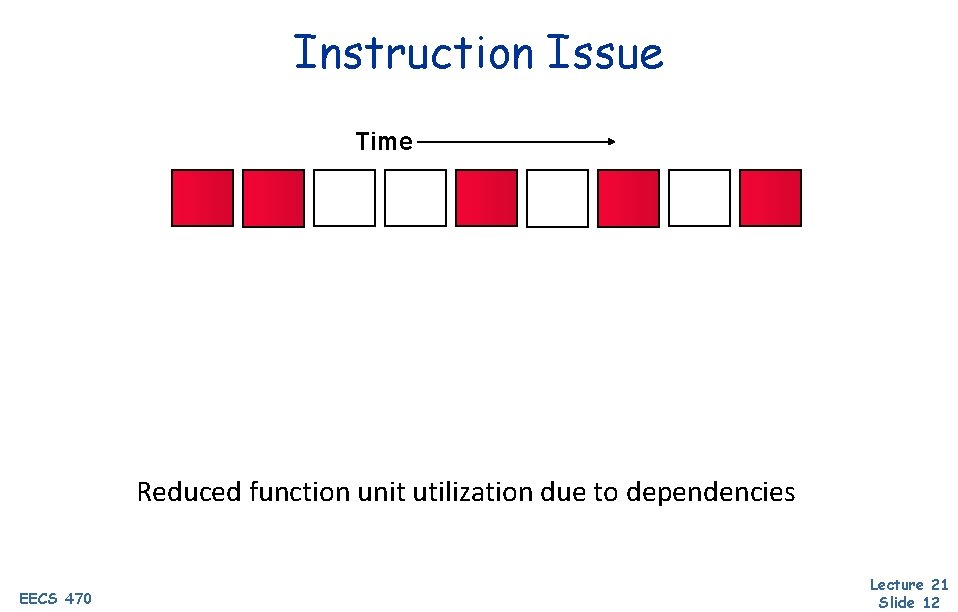

Instruction Issue

Reduced function unit utilization due to dependencies.

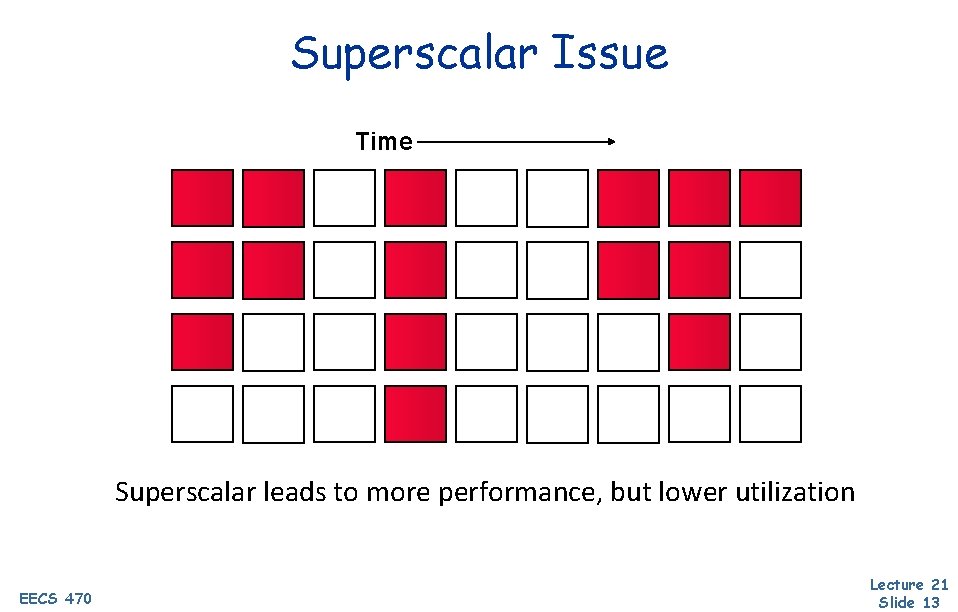

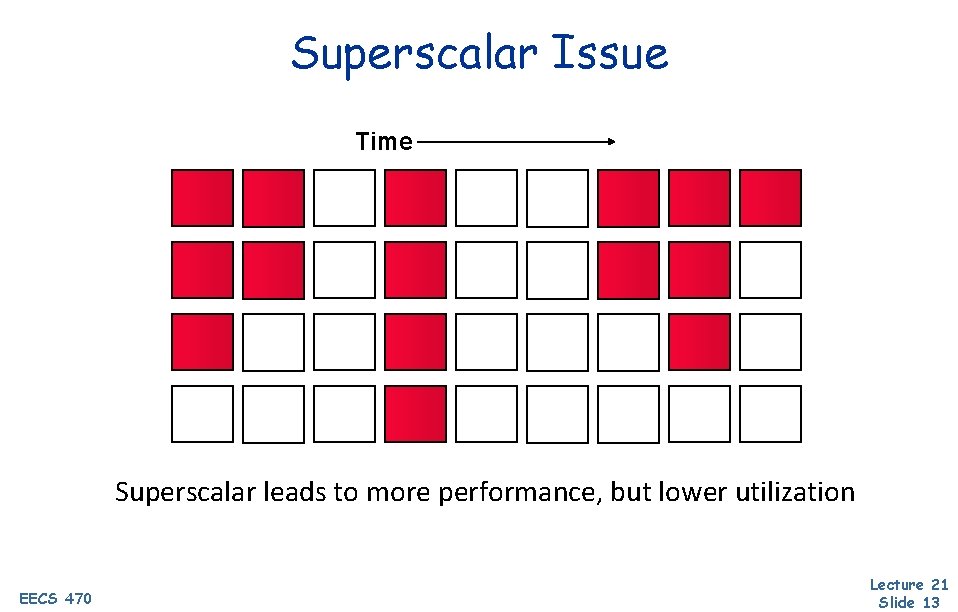

Superscalar Issue

Superscalar leads to more performance, but lower utilization.

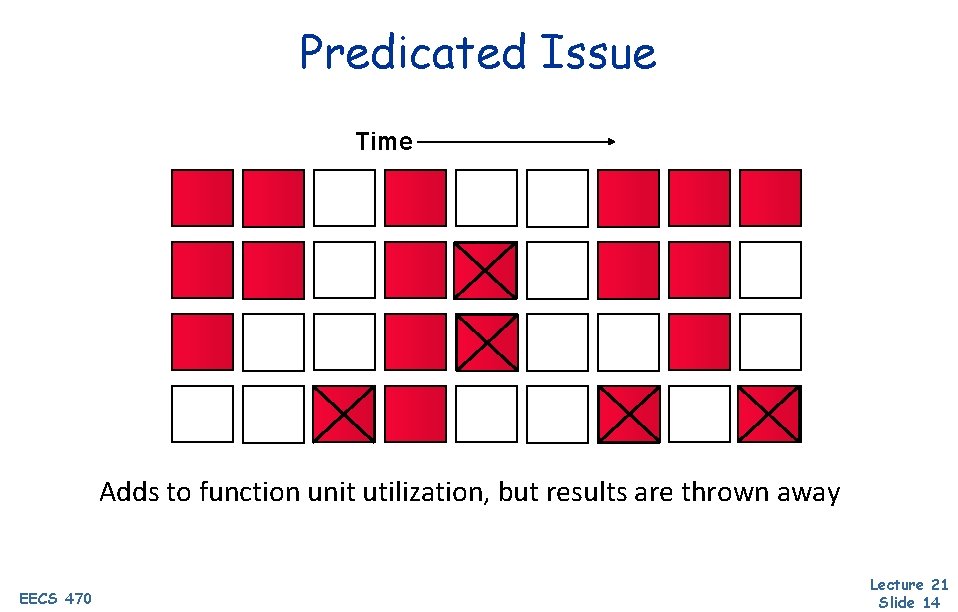

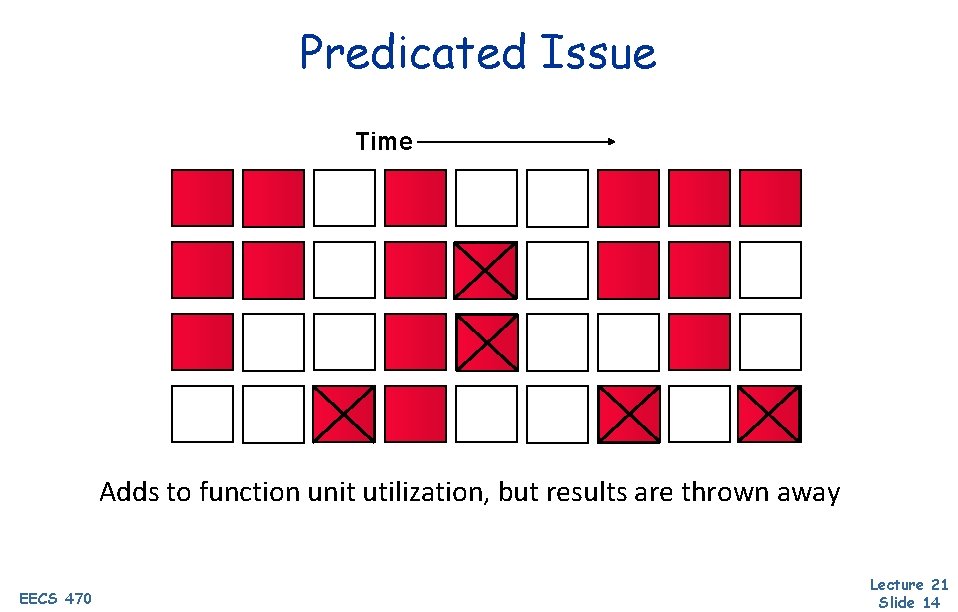

Predicated Issue

Adds to function unit utilization, but results are thrown away.

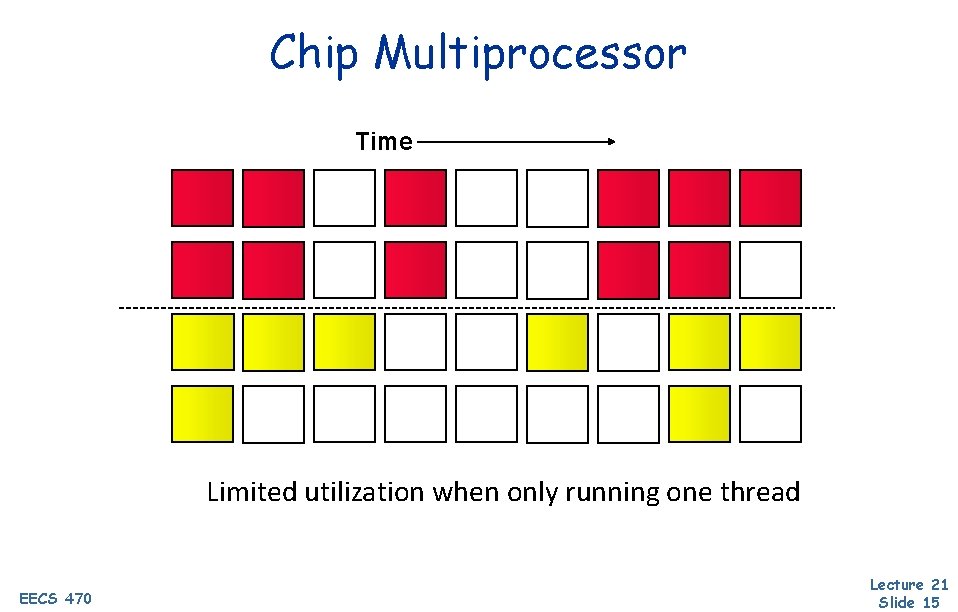

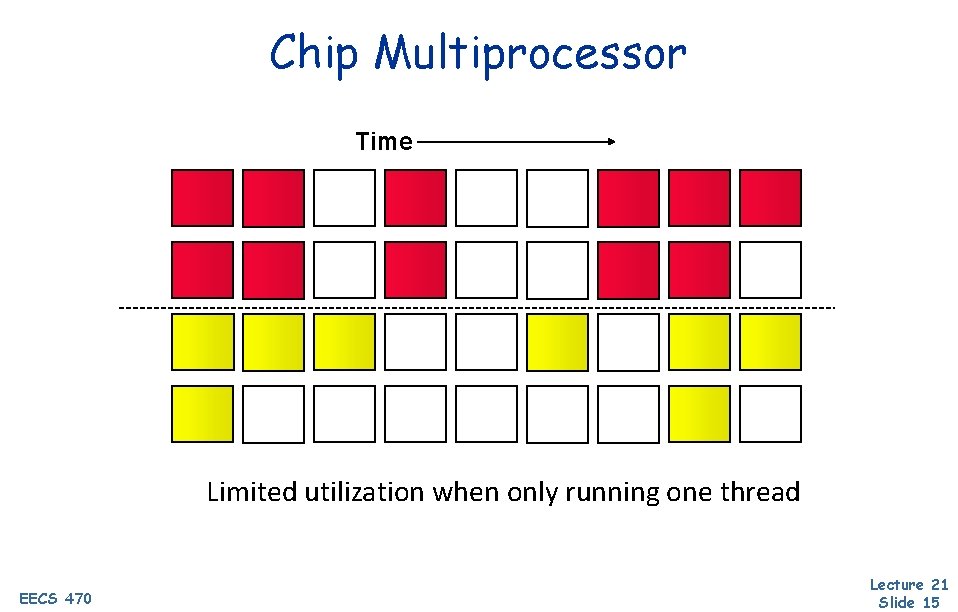

Chip Multiprocessor

Limited utilization when only running one thread.

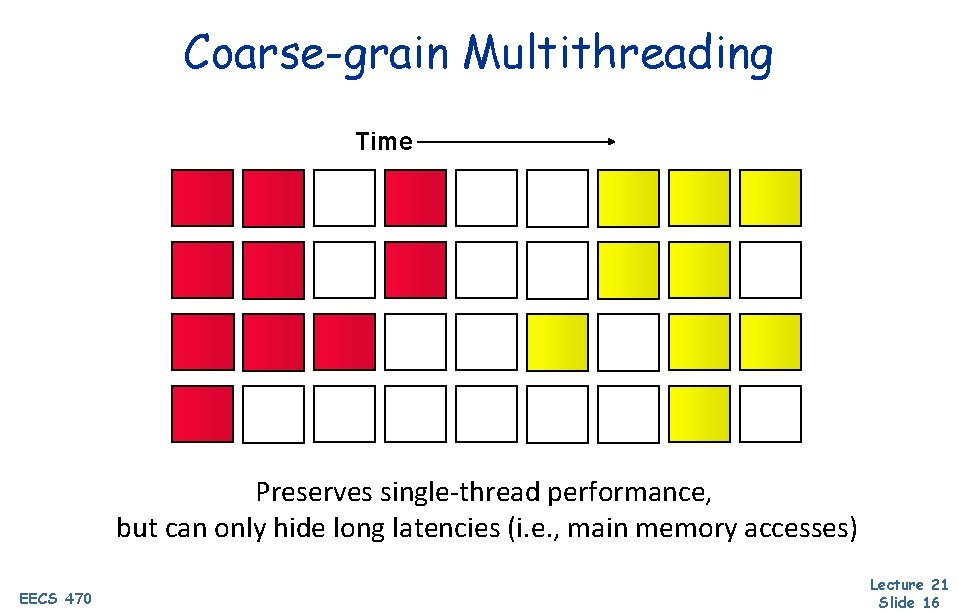

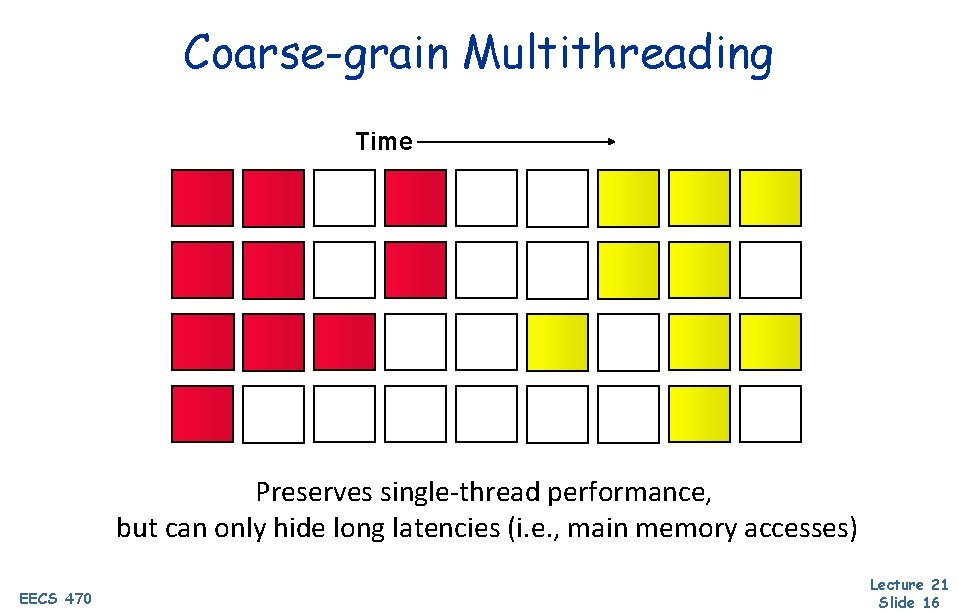

Coarse-Grain Multithreading

Preserves single-thread performance, but can only hide long latencies (i.e., main memory accesses).

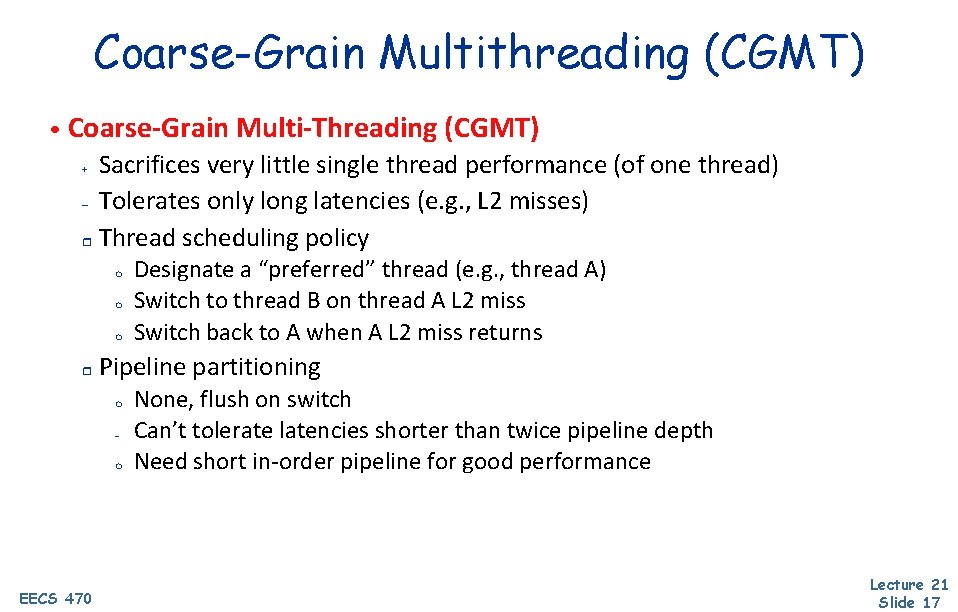

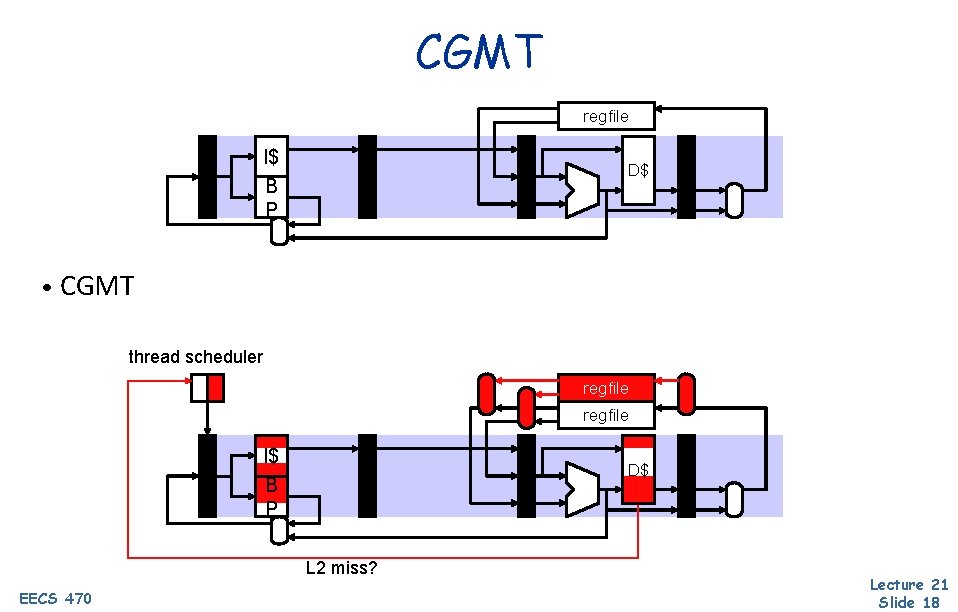

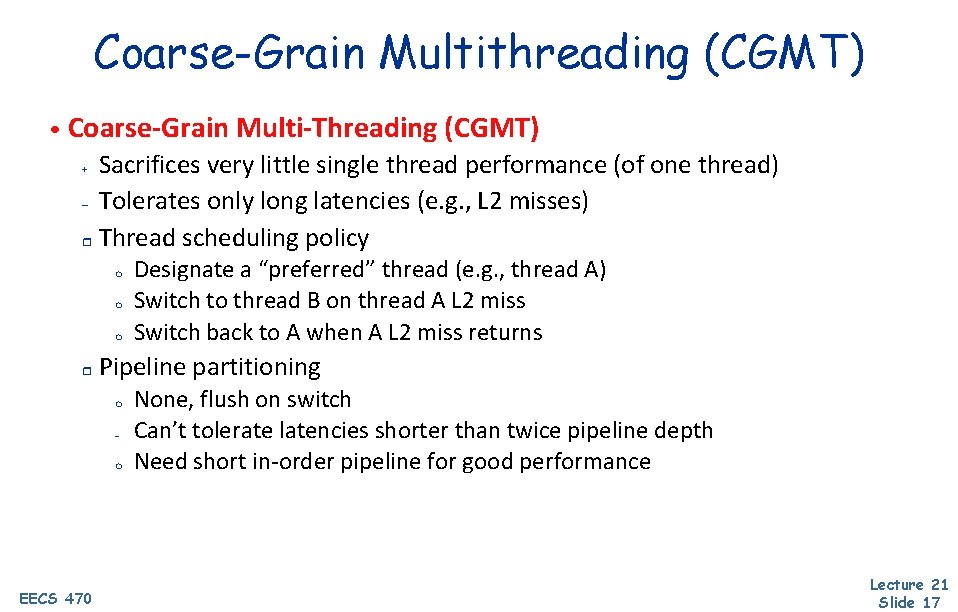

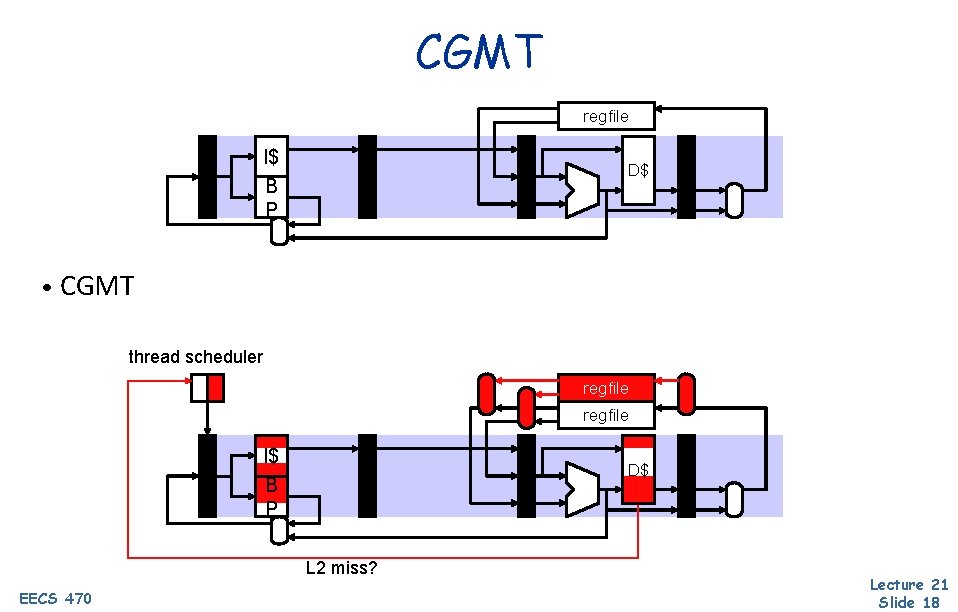

Coarse-Grain Multithreading (CGMT)
- Coarse-Grain Multithreading (CGMT)
  - (+) Sacrifices very little single-thread performance (of one thread)
  - (–) Tolerates only long latencies (e.g., L2 misses)
  - Thread scheduling policy
    - Designate a "preferred" thread (e.g., thread A)
    - Switch to thread B on a thread A L2 miss
    - Switch back to A when A's L2 miss returns
  - Pipeline partitioning
    - None, flush on switch
    - (–) Can't tolerate latencies shorter than twice the pipeline depth
    - Need a short in-order pipeline for good performance
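The CGMT policy above can be sketched as a toy cycle-by-cycle model. Everything here is an assumption for illustration: a single-issue core, two threads, each instruction encoded as 0 (normal) or as an L2-miss latency in cycles, and a hypothetical `flush_penalty` standing in for the pipeline-flush cost of each switch (roughly the pipeline depth). `cgmt_trace` is a hypothetical name, not from the lecture.

```python
from collections import deque


def cgmt_trace(a_insns, b_insns, flush_penalty):
    """Per-cycle trace of a hypothetical single-issue CGMT core.

    Each instruction is an int: 0 for a normal one-cycle instruction, or
    N > 0 for an L2 miss that blocks its thread for N cycles after issue.
    Trace symbols: 'A'/'B' = thread issued, '-' = flush or idle cycle.
    """
    queues = {"A": deque(a_insns), "B": deque(b_insns)}
    ready_at = {"A": 0, "B": 0}   # cycle when each thread may issue again
    current, trace, cycle = "A", [], 0

    def runnable(t):
        return bool(queues[t]) and ready_at[t] <= cycle

    while queues["A"] or queues["B"]:
        if runnable("A"):
            want = "A"            # preferred thread runs whenever it can
        elif runnable("B"):
            want = "B"            # A is blocked on an L2 miss (or finished)
        else:
            trace.append("-")     # both threads blocked: idle
            cycle += 1
            continue
        if want != current:
            trace.extend("-" * flush_penalty)  # flush the pipeline on a switch
            cycle += flush_penalty
            current = want
            continue              # re-check: A's miss may have returned
        latency = queues[current].popleft()
        trace.append(current)
        cycle += 1
        if latency:
            ready_at[current] = cycle + latency  # block until the miss returns
    return "".join(trace)


# Thread A misses in the L2 (6 cycles) on its third instruction.
print(cgmt_trace([0, 0, 6, 0, 0], [0] * 10, flush_penalty=2))
# AAA--BBBB--AA--BBBBBB
```

Here the 6-cycle miss costs 4 flush cycles (out and back) and lets B issue 4 instructions, instead of leaving all 6 cycles idle. Since each miss costs about 2 × `flush_penalty` wasted cycles, switching only helps when the miss is longer than that, matching the "twice the pipeline depth" limit. The final `--` comes from switching to B after A finishes, an artifact of this simple model.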

CGMT (figure): a baseline pipeline (regfile, I$, BP, D$) next to a CGMT pipeline that adds a thread scheduler driven by an "L2 miss?" signal.

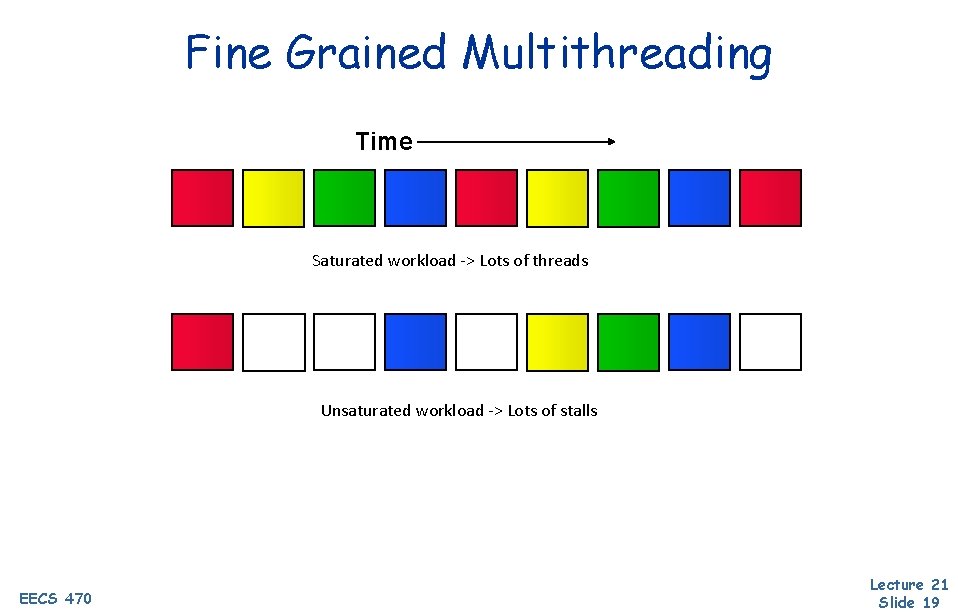

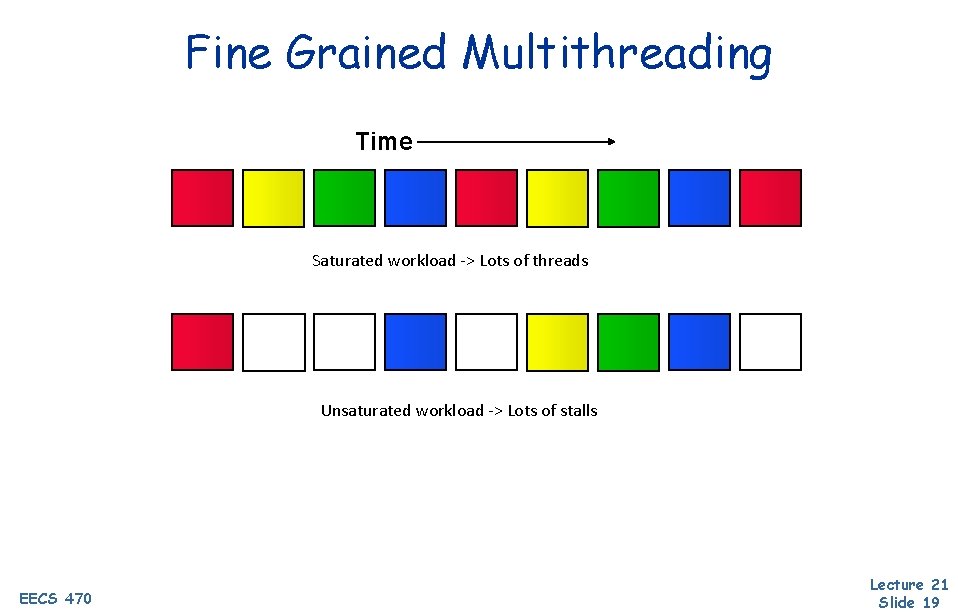

Fine-Grained Multithreading
- Saturated workload -> lots of threads
- Unsaturated workload -> lots of stalls

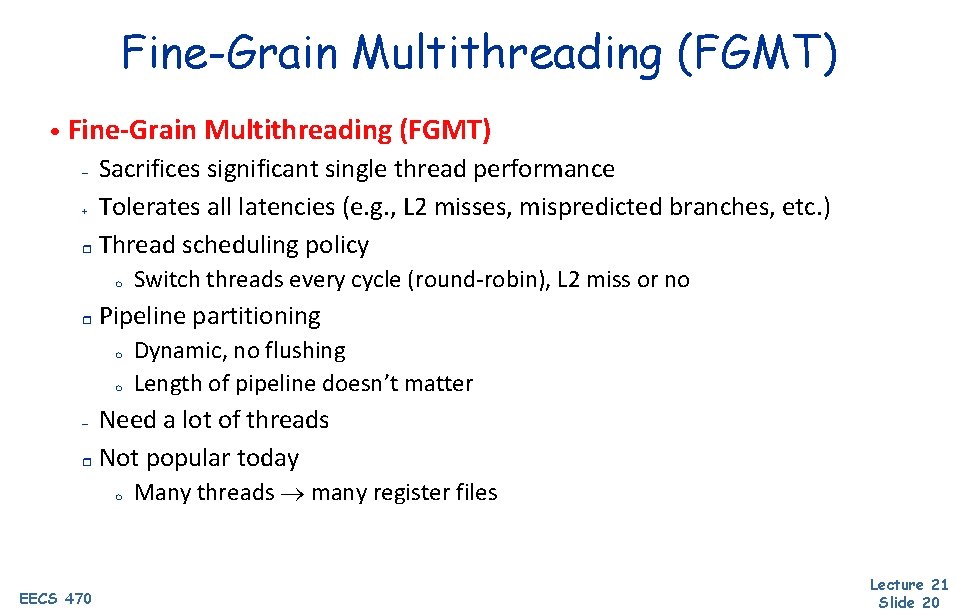

Fine-Grain Multithreading (FGMT)
- Fine-Grain Multithreading (FGMT)
  - (–) Sacrifices significant single-thread performance
  - (+) Tolerates all latencies (e.g., L2 misses, mispredicted branches, etc.)
  - Thread scheduling policy
    - Switch threads every cycle (round-robin), L2 miss or no
  - Pipeline partitioning
    - Dynamic, no flushing
    - Length of pipeline doesn't matter
  - (–) Need a lot of threads
    - Not popular today
      - Many threads means many register files
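A minimal sketch of the FGMT scheduling policy, assuming a strict "barrel" form of round-robin in which cycle c always belongs to thread c mod T, whether or not that thread is stalled (some FGMT designs instead skip stalled threads). Each instruction is encoded by how many cycles its thread must wait before issuing again; the encoding and `fgmt_trace` are hypothetical.

```python
from collections import deque


def fgmt_trace(threads):
    """Strict round-robin FGMT: cycle c belongs to thread c % len(threads).

    threads: one list per thread; each entry is how many cycles that thread
    must wait after issuing the instruction (1 = can issue again next cycle).
    Returns the per-cycle issue trace; '.' marks a wasted issue slot.
    """
    queues = [deque(t) for t in threads]
    ready_at = [0] * len(threads)
    trace, cycle = [], 0
    while any(queues):
        t = cycle % len(threads)
        if queues[t] and ready_at[t] <= cycle:
            ready_at[t] = cycle + queues[t].popleft()
            trace.append(str(t))
        else:
            trace.append(".")   # owner thread not ready: slot is lost
        cycle += 1
    return "".join(trace)


chain = [4, 4, 4]  # each instruction depends on the previous one (4-cycle latency)
print(fgmt_trace([chain]))          # 0...0...0
print(fgmt_trace([chain] * 2))      # 01..01..01
print(fgmt_trace([chain] * 4))      # 012301230123
print(fgmt_trace([[1, 1, 1]] * 2))  # 010101
```

With one thread, the 4-cycle dependence leaves three of every four slots empty; four threads fill every slot. The last line shows the single-thread cost: two threads with fully independent instructions each issue only every other cycle, so each finishes later than it would alone.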

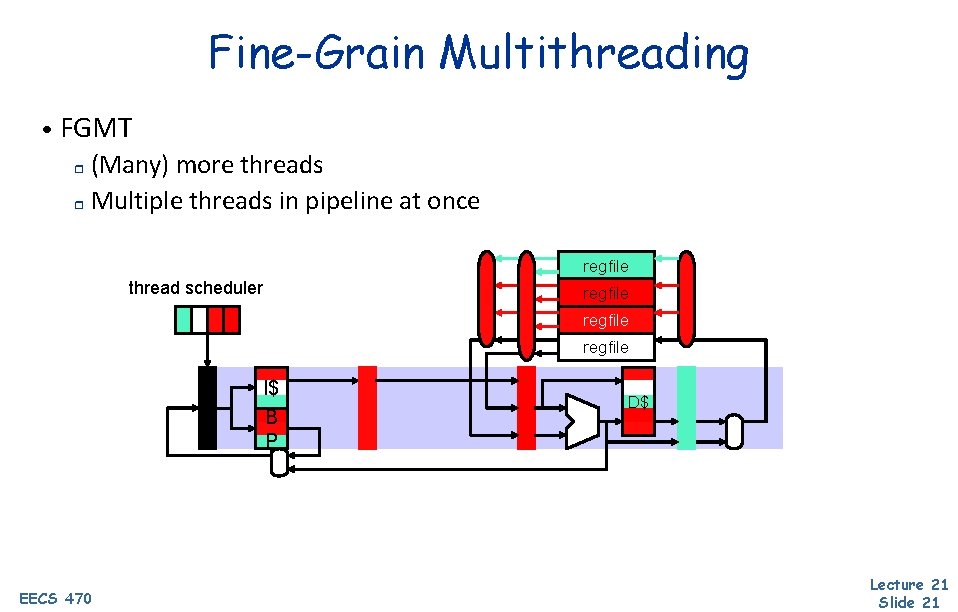

Fine-Grain Multithreading
- FGMT
  - (Many) more threads
  - Multiple threads in pipeline at once

(figure: pipeline with a thread scheduler, multiple register files, I$, BP, and D$)

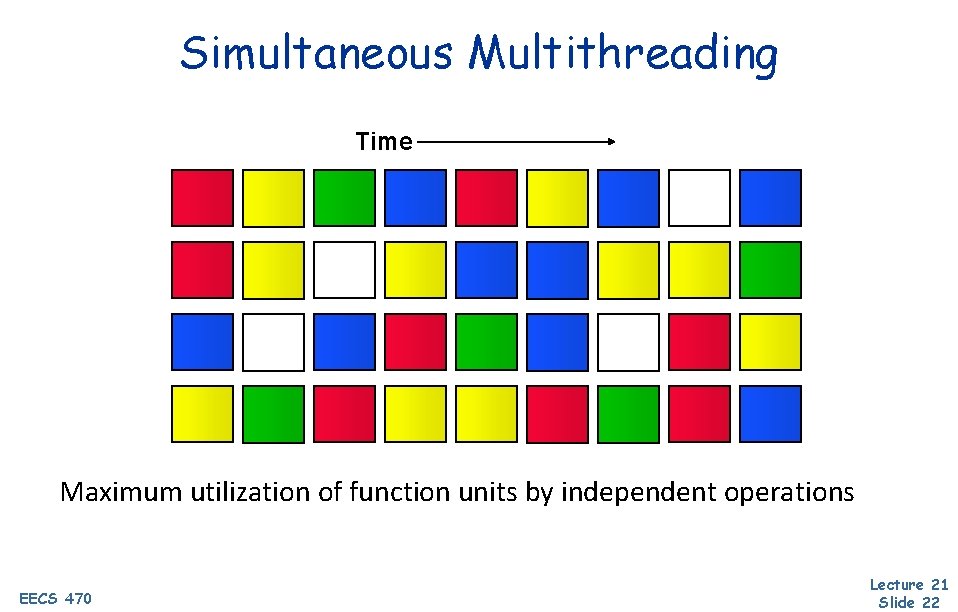

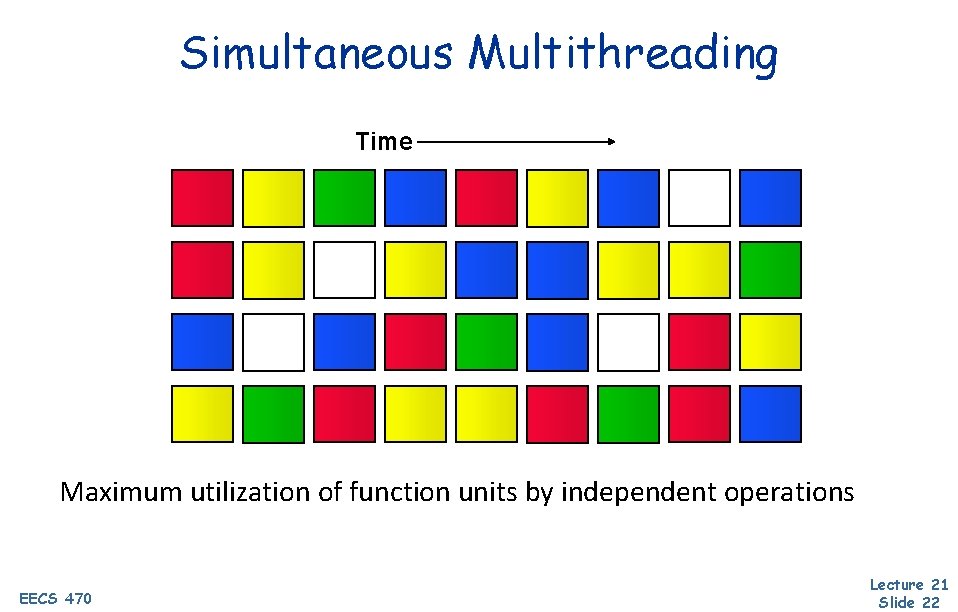

Simultaneous Multithreading

Maximum utilization of function units by independent operations.

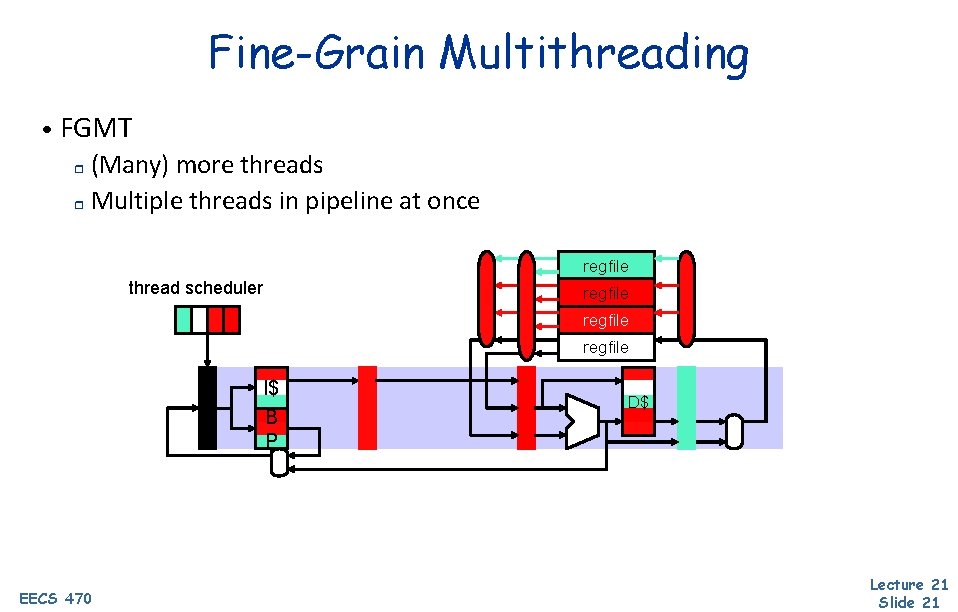

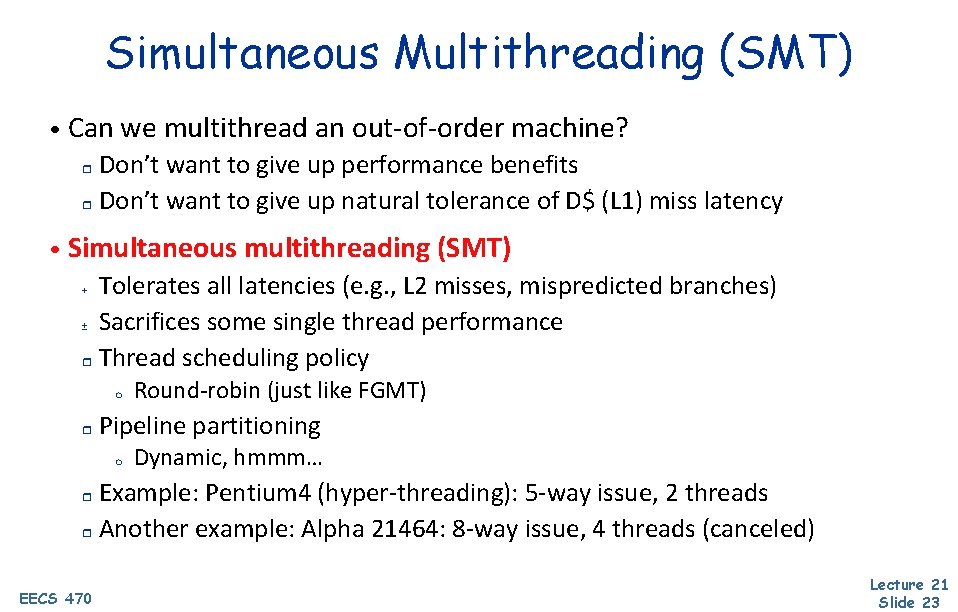

Simultaneous Multithreading (SMT)
- Can we multithread an out-of-order machine?
  - Don't want to give up performance benefits
  - Don't want to give up natural tolerance of D$ (L1) miss latency
- Simultaneous multithreading (SMT)
  - (+) Tolerates all latencies (e.g., L2 misses, mispredicted branches)
  - (±) Sacrifices some single-thread performance
  - Thread scheduling policy
    - Round-robin (just like FGMT)
  - Pipeline partitioning
    - Dynamic, hmmm…
  - Example: Pentium 4 (hyper-threading): 5-way issue, 2 threads
  - Another example: Alpha 21464: 8-way issue, 4 threads (canceled)
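To see why SMT gets the best utilization in the issue-slot diagrams, here is a toy Python model of a 4-wide issue stage. The per-cycle "ready" counts are made-up inputs, and the model ignores that unissued instructions carry over to later cycles; `issue_slots` is a hypothetical helper. FGMT gives each whole cycle to one thread (round-robin), while SMT fills leftover slots from the other threads in rotating priority order.

```python
def issue_slots(ready, width, policy):
    """Count filled issue slots over len(ready[0]) cycles.

    ready[t][c]: how many independent instructions thread t could issue in
    cycle c (hypothetical input; a real core derives this from dependences).
    """
    n_threads, filled = len(ready), 0
    for c in range(len(ready[0])):
        if policy == "fgmt":
            t = c % n_threads                # one thread owns the whole cycle
            filled += min(ready[t][c], width)
        elif policy == "smt":
            free = width
            for k in range(n_threads):       # rotate priority round-robin
                take = min(ready[(c + k) % n_threads][c], free)
                filled += take
                free -= take
        else:
            raise ValueError(f"unknown policy: {policy}")
    return filled


ready = [
    [2, 0, 1, 3],  # thread 0
    [1, 2, 0, 1],  # thread 1
    [3, 1, 2, 0],  # thread 2
]
slots = 4 * len(ready[0])  # 4-wide issue over 4 cycles
print(issue_slots(ready[:1], 4, "fgmt"), "/", slots)  # 6 / 16 (one thread = plain superscalar)
print(issue_slots(ready, 4, "fgmt"), "/", slots)      # 9 / 16
print(issue_slots(ready, 4, "smt"), "/", slots)       # 14 / 16
```

Under these assumed inputs, FGMT removes the completely empty cycles, and SMT additionally fills the unused slots inside each cycle.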

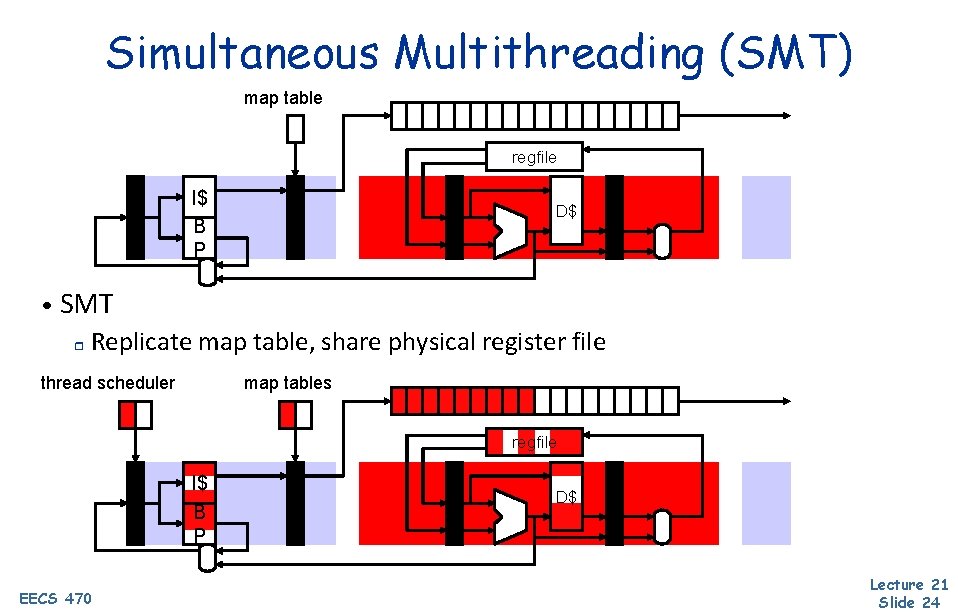

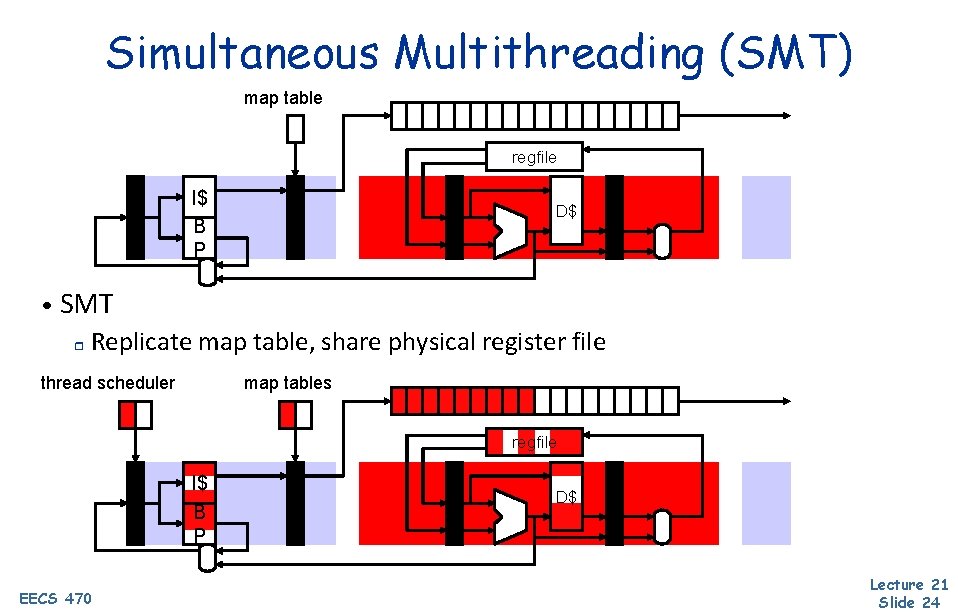

Simultaneous Multithreading (SMT)
- SMT
  - Replicate map table, share physical register file

(figure: baseline out-of-order pipeline with map table, regfile, I$, BP, D$; SMT pipeline with a thread scheduler, per-thread map tables, one regfile, I$, BP, D$)
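Replicating the map table while sharing the physical register file can be sketched as follows. `SMTRename` is a hypothetical class; it assumes a single shared free list and does not model freeing registers at retirement or recovering from mispredictions. The point is that the same architectural register (r1 here) in two threads maps to different physical registers, so threads never see each other's values.

```python
class SMTRename:
    """Hypothetical SMT renamer: one map table per thread, one shared pool
    of physical registers (freeing at retirement is not modeled)."""

    def __init__(self, n_threads, n_arch, n_phys):
        if n_phys < n_threads * n_arch:
            raise ValueError("need >= n_threads * n_arch physical registers")
        # Thread t starts with arch reg r mapped to phys reg t * n_arch + r.
        self.map = [[t * n_arch + r for r in range(n_arch)] for t in range(n_threads)]
        self.free = list(range(n_threads * n_arch, n_phys))  # shared by all threads

    def rename(self, tid, dest, srcs):
        """Rename dest <- op(srcs) for thread tid; returns (srcs, new dest, old dest)."""
        phys_srcs = [self.map[tid][s] for s in srcs]  # only this thread's table
        if not self.free:
            raise RuntimeError("stall: physical register file exhausted")
        new = self.free.pop(0)                        # any thread can take any free reg
        old = self.map[tid][dest]                     # would be freed when the insn retires
        self.map[tid][dest] = new
        return phys_srcs, new, old


renamer = SMTRename(n_threads=2, n_arch=4, n_phys=10)
print(renamer.rename(0, dest=1, srcs=[2, 3]))  # ([2, 3], 8, 1)
print(renamer.rename(1, dest=1, srcs=[1]))     # ([5], 9, 5)
```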

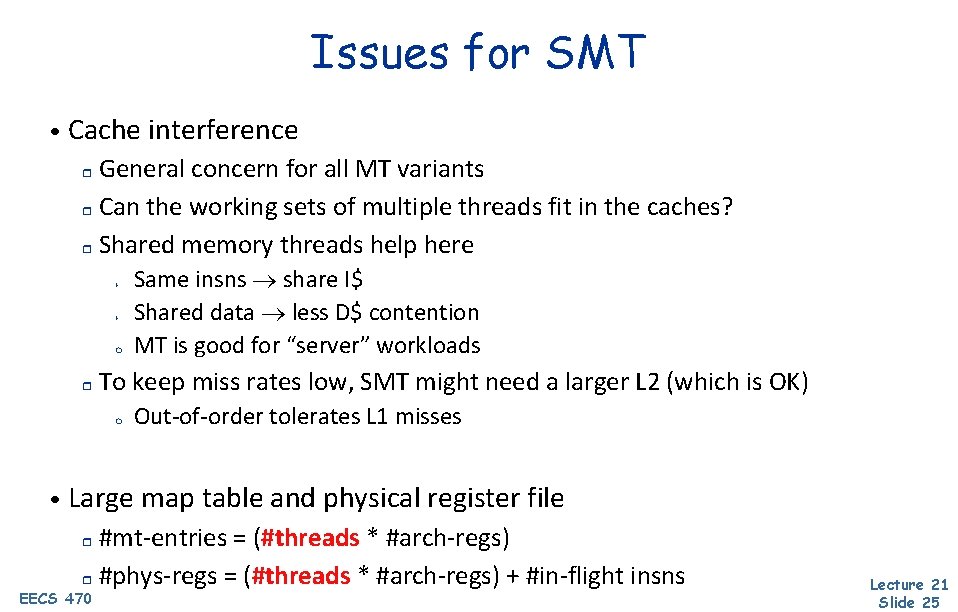

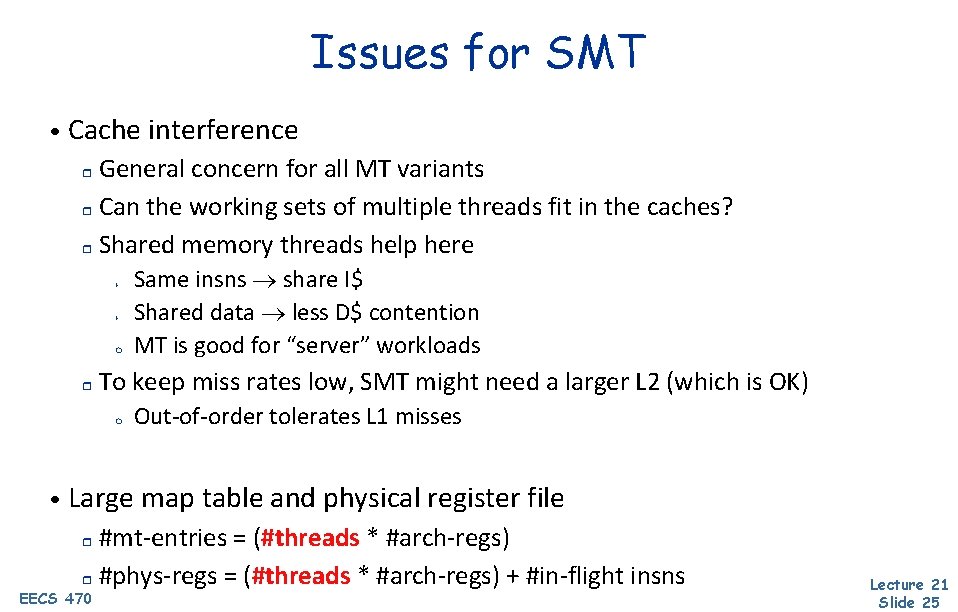

Issues for SMT
- Cache interference
  - General concern for all MT variants
  - Can the working sets of multiple threads fit in the caches?
  - Shared-memory threads help here
    - (+) Same insns share I$
    - (+) Shared data means less D$ contention
    - MT is good for "server" workloads
  - To keep miss rates low, SMT might need a larger L2 (which is OK)
    - Out-of-order tolerates L1 misses
- Large map table and physical register file
  - #mt-entries = #threads × #arch-regs
  - #phys-regs = (#threads × #arch-regs) + #in-flight insns
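Plugging numbers into the sizing formulas above shows how the structures grow with thread count. The 32 architectural registers and 128 in-flight instructions are assumed values for illustration, not figures from the lecture; `smt_rename_sizes` is a hypothetical helper.

```python
def smt_rename_sizes(threads, arch_regs, in_flight):
    """Map-table entries and physical registers, per the slide's formulas."""
    mt_entries = threads * arch_regs
    phys_regs = threads * arch_regs + in_flight
    return mt_entries, phys_regs


# Assumed: 32 architectural registers and 128 in-flight instructions.
for t in (1, 2, 4):
    print(t, smt_rename_sizes(t, arch_regs=32, in_flight=128))
# 1 (32, 160)
# 2 (64, 192)
# 4 (128, 256)
```

The architectural-state term scales linearly with the number of threads, while the in-flight term is shared across them.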

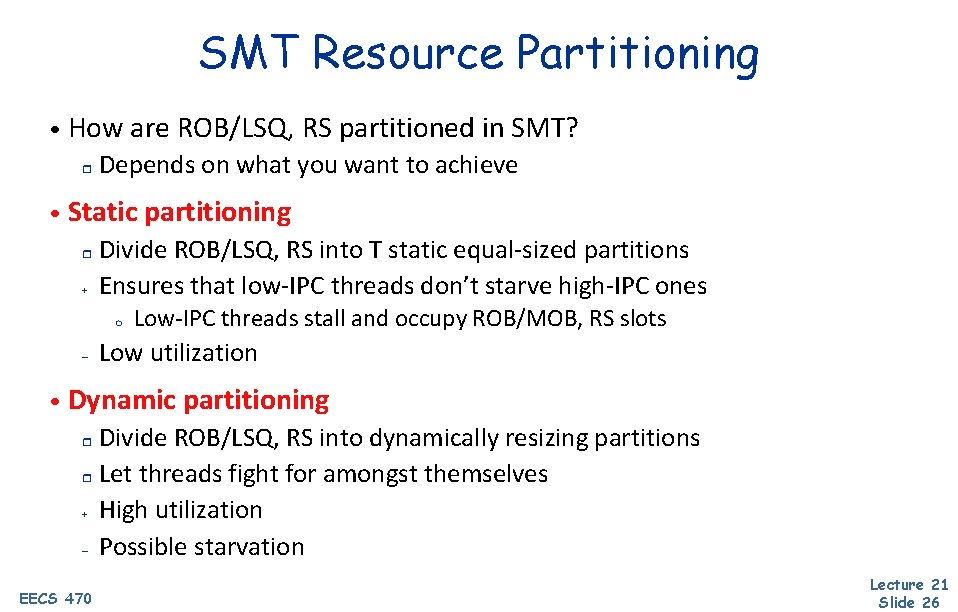

SMT Resource Partitioning
- How are the ROB/LSQ and RS partitioned in SMT?
  - Depends on what you want to achieve
- Static partitioning
  - Divide ROB/LSQ, RS into T static equal-sized partitions
  - (+) Ensures that low-IPC threads don't starve high-IPC ones
    - Low-IPC threads stall and occupy ROB/LSQ, RS slots
  - (–) Low utilization
- Dynamic partitioning
  - Divide ROB/LSQ, RS into dynamically resizing partitions
  - Let threads fight amongst themselves
  - (+) High utilization
  - (–) Possible starvation
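The static vs. dynamic trade-off can be sketched with a toy ROB allocator. Assumptions: a 16-entry ROB shared by 2 threads, instructions dispatch in the order given, and nothing retires during the window (thread 0 is stuck behind an L2 miss). `allocate` is a hypothetical helper.

```python
def allocate(requests, rob_size, n_threads, static):
    """Grant ROB entries in dispatch order; returns entries held per thread.

    requests: thread id of each dispatching instruction. Nothing retires
    during the window (e.g., the oldest thread is stuck on an L2 miss).
    """
    held = [0] * n_threads
    share = rob_size // n_threads       # static partition size
    for tid in requests:
        if sum(held) >= rob_size:
            continue                    # ROB full: instruction stalls
        if static and held[tid] >= share:
            continue                    # this thread's partition is full
        held[tid] += 1
    return held


clog = [0] * 12 + [1] * 8   # thread 0 (stalled on a miss) dispatches first
light = [0] * 12 + [1] * 2  # thread 1 only has 2 instructions to dispatch
print(allocate(clog, 16, 2, static=True), allocate(clog, 16, 2, static=False))
# [8, 8] [12, 4]
print(allocate(light, 16, 2, static=True), allocate(light, 16, 2, static=False))
# [8, 2] [12, 2]
```

With the clogging thread dispatching first, dynamic partitioning lets it take 12 entries and squeezes thread 1 to 4, while static partitioning guarantees thread 1 its 8. When thread 1 needs only 2 entries, static partitioning leaves 6 entries idle (10 of 16 used) whereas dynamic uses 14.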

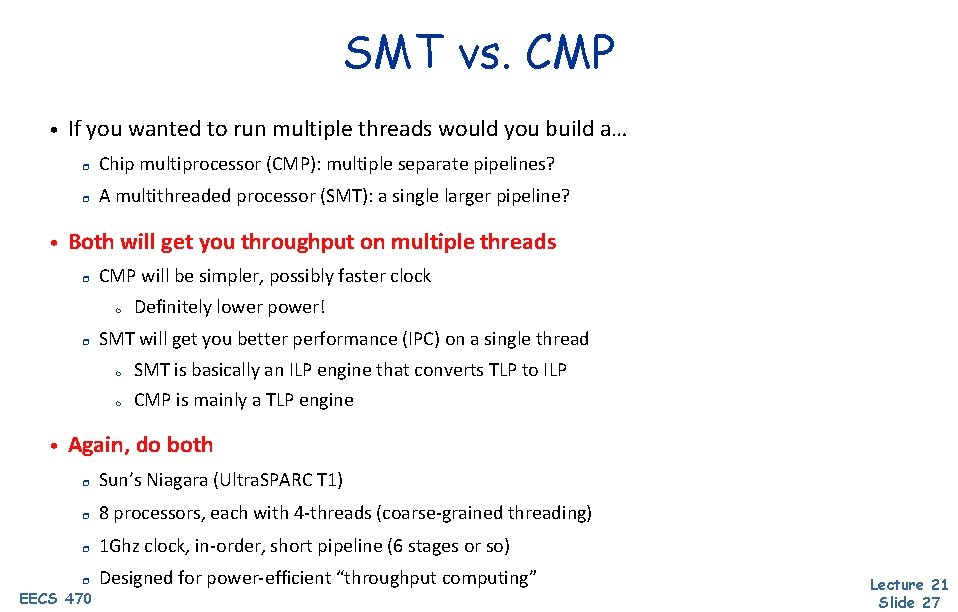

SMT vs. CMP
- If you wanted to run multiple threads, would you build a…
  - Chip multiprocessor (CMP): multiple separate pipelines?
  - A multithreaded processor (SMT): a single larger pipeline?
- Both will get you throughput on multiple threads
  - CMP will be simpler, possibly faster clock
    - Definitely lower power!
  - SMT will get you better performance (IPC) on a single thread
    - SMT is basically an ILP engine that converts TLP to ILP
    - CMP is mainly a TLP engine
- Again, do both
  - Sun's Niagara (UltraSPARC T1)
  - 8 processors, each with 4 threads (coarse-grained threading)
  - 1 GHz clock, in-order, short pipeline (6 stages or so)
  - Designed for power-efficient "throughput computing"