EECS 470 Lecture 17: Multiprocessors (Winter 2021, Jon Beaumont)


EECS 470 Lecture 17: Multiprocessors

Winter 2021

Jon Beaumont

www.eecs.umich.edu/courses/eecs470

Slides developed in part by Profs. Falsafi, Hill, Hoe, Lipasti, Martin, Roth, Shen, Smith, Sohi, and Vijaykumar of Carnegie Mellon University, Purdue University, University of Pennsylvania, and University of Wisconsin.

EECS 470 Lecture 19 Slide 1

Announcements

- Milestone 3 due today
  - Send a 1-page status update as normal
  - Sign up if you would like to meet on Thursday
    - Let the staff know if you aren't available to meet then
- Paid position for EECS 470 course development over the summer
  - See Piazza for details
  - Let me know if you have any questions

EECS 470 Roadmap

[Roadmap diagram. Labels: Speedup Programs; Reduce Instruction Latency; Reduce Number of Instructions; Parallelize; Reduce Average Memory Latency; Instruction-Level Parallelism; Thread-Level Parallelism; First 2 months; Caching; Power Efficiency; MultiProcessors; Programmability; Precise State; Virtual Memory]

Multiprocessors

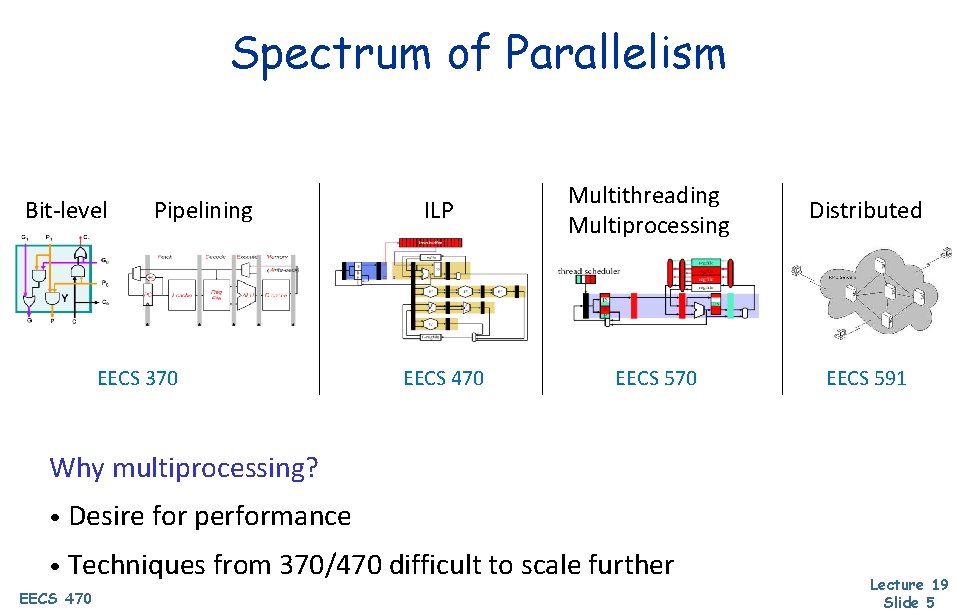

Spectrum of Parallelism

[Figure: spectrum running through Bit-level, Pipelining, ILP, Multithreading, Multiprocessing, and Distributed, annotated with the courses EECS 370, EECS 470, EECS 570, and EECS 591]

Why multiprocessing?

- Desire for performance
- Techniques from 370/470 difficult to scale further

![Thread-Level Parallelism](https://slidetodoc.com/presentation_image_h2/3c1f8e3510a76b9def314fca58ab0e56/image-6.jpg)

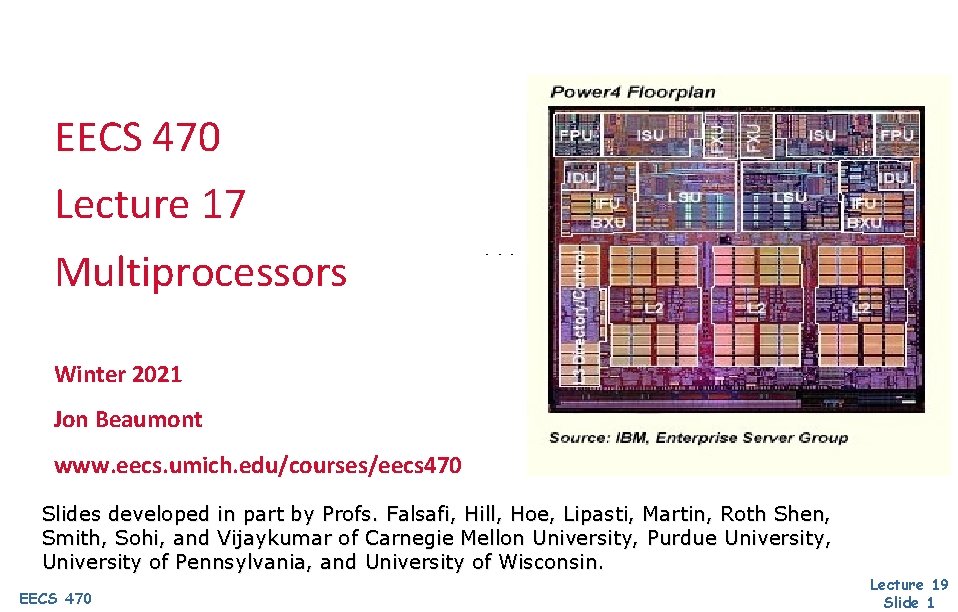

Thread-Level Parallelism

    struct acct_t { int bal; };
    shared struct acct_t accts[MAX_ACCT];
    int id, amt;
    if (accts[id].bal >= amt) {
      accts[id].bal -= amt;
      spew_cash();
    }

    0: addi r1, accts, r3
    1: ld 0(r3), r4
    2: blt r4, r2, 6
    3: sub r4, r2, r4
    4: st r4, 0(r3)
    5: call spew_cash

- Thread-level parallelism (TLP)
  - Collection of asynchronous tasks: not started and stopped together
  - Data shared loosely, dynamically
- Example: database/web server (each query is a thread)
  - accts is shared, can't register allocate even if it were scalar
  - id and amt are private variables, register allocated to r1, r2

Thread-Level Parallelism

- "Coarser" than instruction-level parallelism
  - Usually exploited over thousands of instructions, not 10s-100s
- More independence between different threads than within a thread
  - Fewer data dependencies in TLP than ILP
- Fewer guarantees about how instructions execute relative to one another outside a thread than within
  - Instructions in a thread are guaranteed to execute (as if they were done) sequentially
  - Ordering is only guaranteed across threads if the programmer explicitly indicates so
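To make the ordering point concrete, here is a minimal sketch in C of the withdrawal example with two "threads" interleaved by hand. It is a deterministic simulation rather than real threading; `thread_ctx`, `step_load`, `step_check_and_store`, and `run_withdrawals` are hypothetical names introduced for illustration, and `cash_out` stands in for `spew_cash()`.

```c
#include <assert.h>

#define MAX_ACCT 4

struct acct_t { int bal; };
static struct acct_t accts[MAX_ACCT];   /* shared by both simulated threads */

/* Private per-thread state: id and amt play the role of r1 and r2,
   r4 holds the balance the thread loaded. */
struct thread_ctx { int id, amt, r4, cash_out; };

/* Instruction 1: ld 0(r3), r4 */
static void step_load(struct thread_ctx *t) {
    t->r4 = accts[t->id].bal;
}

/* Instructions 2-5: blt, sub, st, call spew_cash */
static void step_check_and_store(struct thread_ctx *t) {
    if (t->r4 >= t->amt) {
        accts[t->id].bal = t->r4 - t->amt;
        t->cash_out += t->amt;           /* stands in for spew_cash() */
    }
}

/* Two withdrawals of amt from account 0; returns total cash dispensed.
   interleaved != 0 makes both threads load before either one stores. */
int run_withdrawals(int start_bal, int amt, int interleaved) {
    assert(start_bal >= 0 && amt >= 0);
    struct thread_ctx t0 = {0, amt, 0, 0};
    struct thread_ctx t1 = {0, amt, 0, 0};
    accts[0].bal = start_bal;
    if (interleaved) {
        step_load(&t0);
        step_load(&t1);
        step_check_and_store(&t0);
        step_check_and_store(&t1);
    } else {
        step_load(&t0);
        step_check_and_store(&t0);
        step_load(&t1);
        step_check_and_store(&t1);
    }
    return t0.cash_out + t1.cash_out;
}
```

With a starting balance of 100 and two withdrawals of 100, the back-to-back order dispenses 100 in total, while the interleaved order dispenses 200 because both threads pass the balance check on the same loaded value.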

Shared-Memory Multiprocessors

- Multiple execution contexts sharing a single address space
  - Multiple programs (MIMD)
  - Or more frequently: multiple copies of one program (SPMD)
- Implicit (automatic) communication via loads and stores

[Figure: P1, P2, P3, P4 and a shared Memory System]

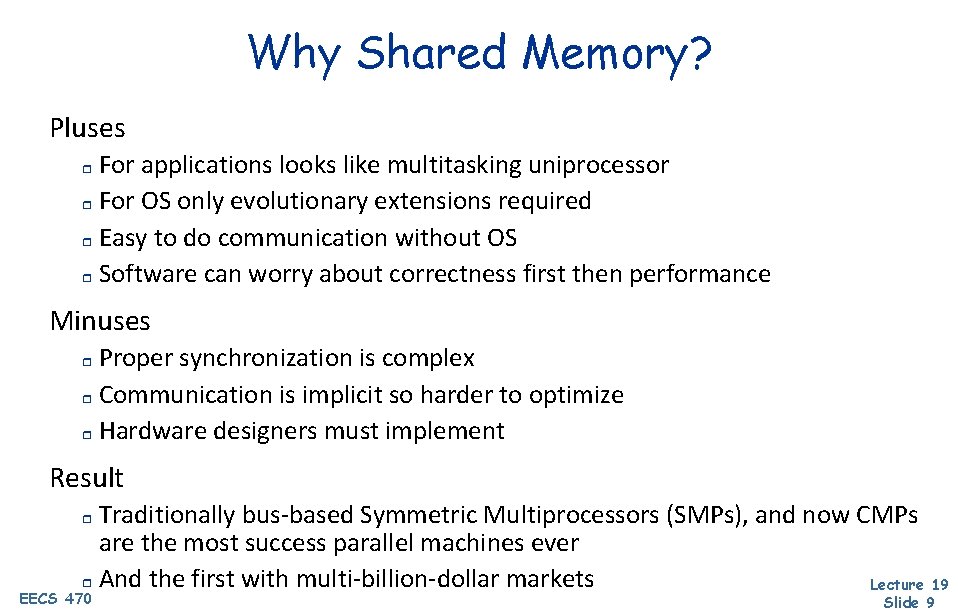

Why Shared Memory?

Pluses

- For applications, looks like a multitasking uniprocessor
- For the OS, only evolutionary extensions required
- Easy to do communication without the OS
- Software can worry about correctness first, then performance

Minuses

- Proper synchronization is complex
- Communication is implicit, so harder to optimize
- Hardware designers must implement

Result

- Traditionally bus-based Symmetric Multiprocessors (SMPs), and now CMPs, are the most successful parallel machines ever
- And the first with multi-billion-dollar markets

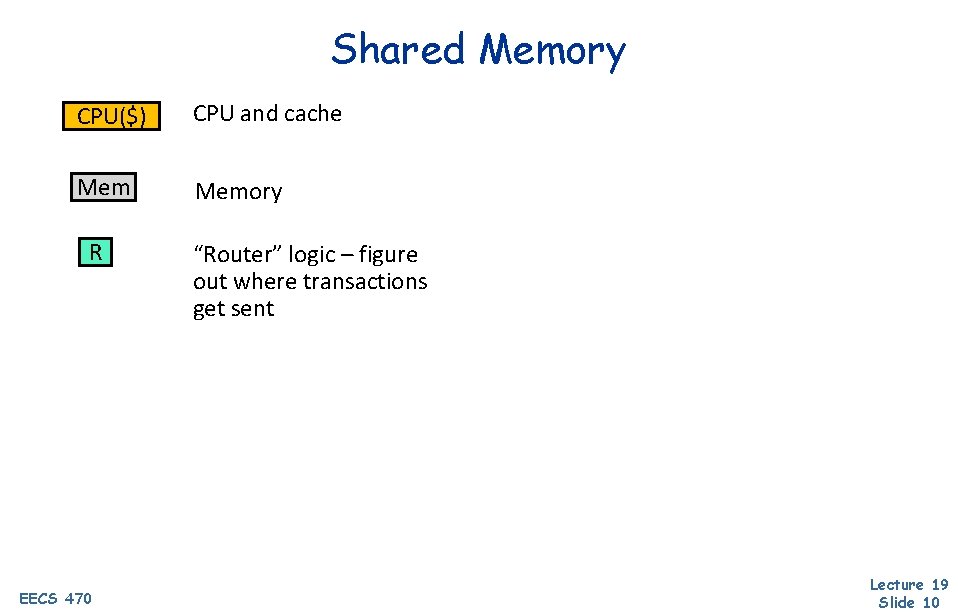

Shared Memory

Figure legend:

- CPU($): CPU and cache
- Mem: memory
- R: "router" logic that figures out where transactions get sent

Paired vs. Separate Processor/Memory?

- Separate processor/memory
  - Uniform memory access (UMA): equal latency to all memory
  - (+) Simple software, doesn't matter where you put data
  - (-) Lower peak performance
  - Bus-based UMAs common: symmetric multi-processors (SMP)
- Paired processor/memory
  - Non-uniform memory access (NUMA): faster to local memory
  - (-) More complex software: where you put data matters
  - (+) Higher peak performance: assuming proper data placement

[Figure: the two organizations drawn with CPU($), Mem, and R blocks]

Shared vs. Point-to-Point Networks

- Shared network: e.g., bus (left)
  - (+) Low latency
  - (-) Low bandwidth: doesn't scale beyond ~16 processors
  - (+) Shared property simplifies cache coherence protocols (later)
- Point-to-point network: e.g., mesh or ring (right)
  - (-) Longer latency: may need multiple "hops" to communicate
  - (+) Higher bandwidth: scales to 1000s of processors
  - (-) Cache coherence protocols are complex

[Figure: shared network (left) and point-to-point network (right), drawn with CPU($), Mem, and R blocks]

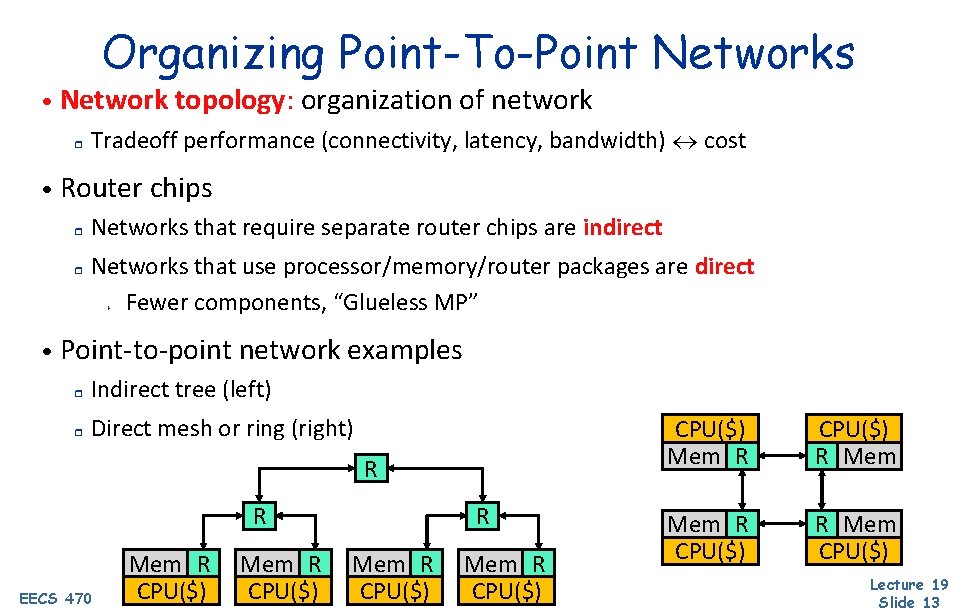

Organizing Point-To-Point Networks

- Network topology: organization of network
  - Trades off performance (connectivity, latency, bandwidth) against cost
- Router chips
  - Networks that require separate router chips are indirect
  - Networks that use processor/memory/router packages are direct
    - (+) Fewer components, "Glueless MP"
- Point-to-point network examples
  - Indirect tree (left)
  - Direct mesh or ring (right)

[Figure: indirect tree (left) and direct mesh or ring (right), drawn with CPU($), Mem, and R blocks]

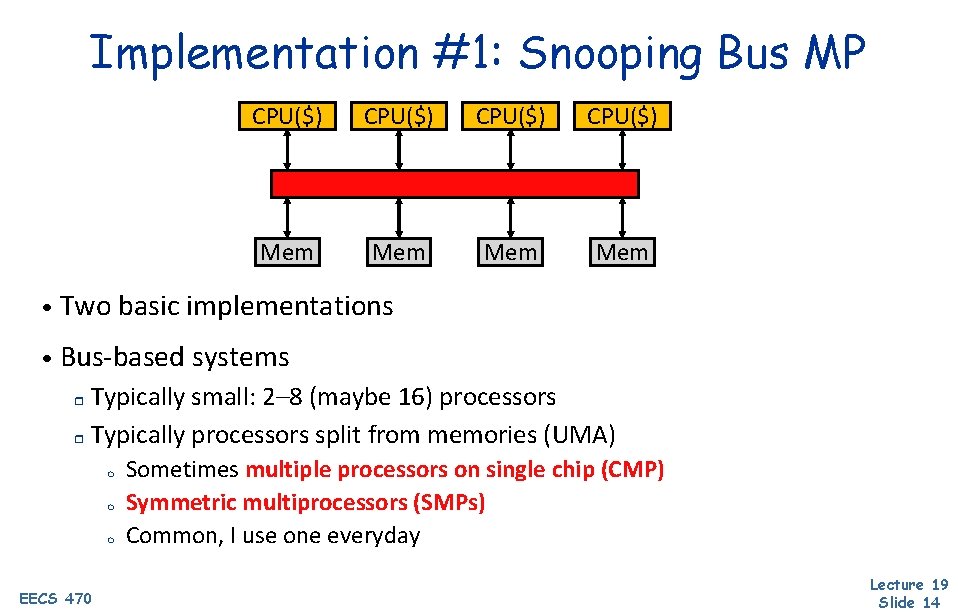

Implementation #1: Snooping Bus MP

[Figure: CPU($) and Mem blocks]

- Two basic implementations
- Bus-based systems
  - Typically small: 2–8 (maybe 16) processors
  - Typically processors split from memories (UMA)
    - Sometimes multiple processors on single chip (CMP)
    - Symmetric multiprocessors (SMPs)
    - Common, I use one every day

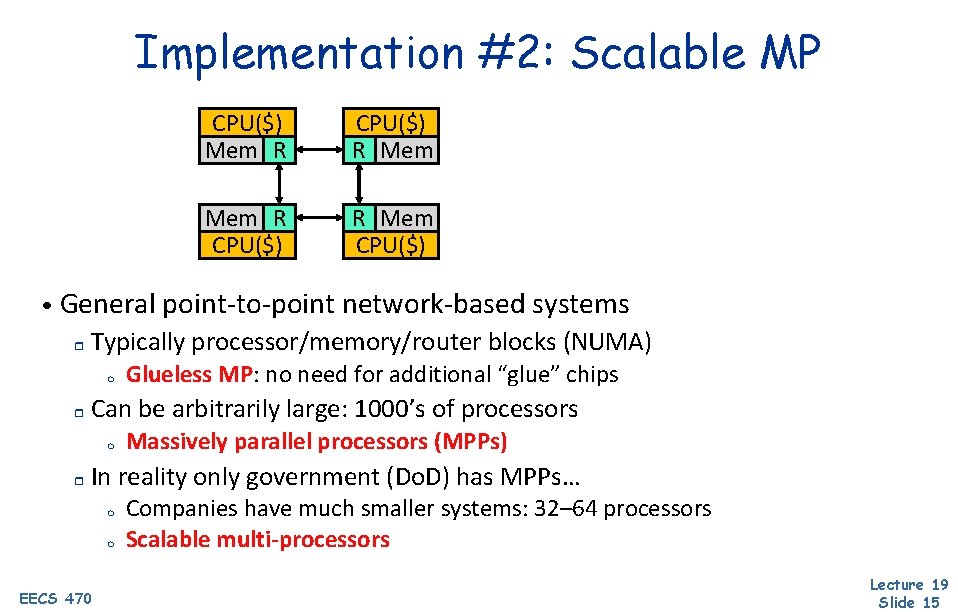

Implementation #2: Scalable MP

[Figure: CPU($), Mem, and R blocks]

- General point-to-point network-based systems
  - Typically processor/memory/router blocks (NUMA)
    - Glueless MP: no need for additional "glue" chips
  - Can be arbitrarily large: 1000s of processors
    - Massively parallel processors (MPPs)
  - In reality only government (DoD) has MPPs…
    - Companies have much smaller systems: 32–64 processors
    - Scalable multi-processors

Issues for Shared Memory Systems

- Two in particular
  - Cache coherence
  - Memory consistency model
  - Closely related to each other
- Different solutions for SMPs and MPPs

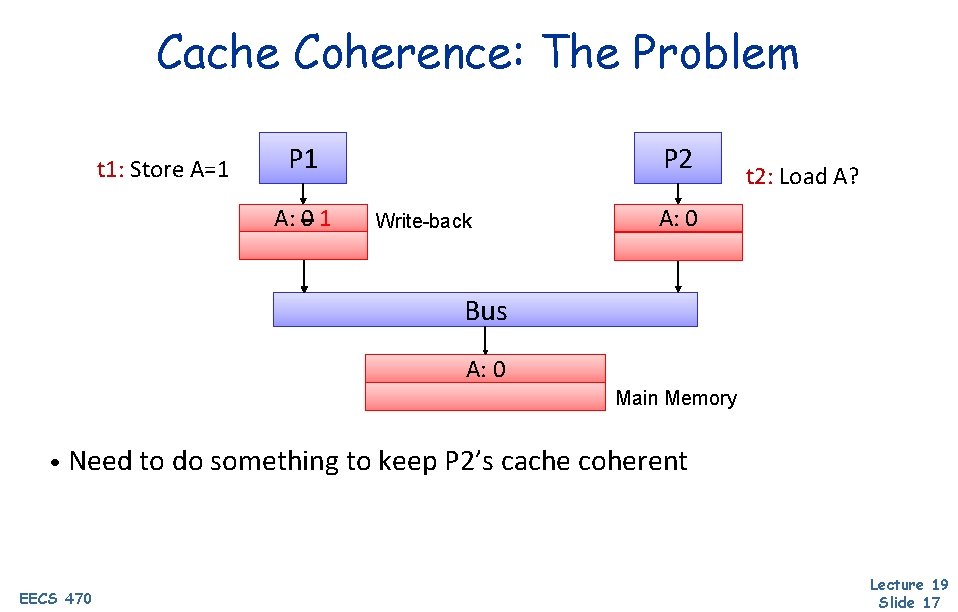

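Cache Coherence: The Problem

[Figure: P1 and P2 with write-back caches on a shared bus, plus main memory. Both caches and main memory start with A: 0. At t1, P1 executes Store A=1, so P1's cached copy becomes A: 1. At t2, P2 executes Load A, but P2's cache and main memory still hold A: 0.]

- Need to do something to keep P2's cache coherent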
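The stale read in this figure can be reproduced with a minimal sketch in C, assuming a toy model of two write-back caches that each hold a single block A and do nothing to stay coherent. `system_t`, `sys_init`, `load_A`, and `store_A` are hypothetical names used only for this illustration.

```c
#include <assert.h>
#include <stdbool.h>

#define NUM_CPUS 2

/* One private cache entry per CPU for the single address A. */
struct cache_line { bool valid; bool dirty; int data; };

struct system_t {
    int mem_A;                          /* main memory copy of A */
    struct cache_line cache[NUM_CPUS];  /* write-back caches, no coherence */
};

void sys_init(struct system_t *s, int initial) {
    s->mem_A = initial;
    for (int i = 0; i < NUM_CPUS; i++) {
        s->cache[i].valid = false;
        s->cache[i].dirty = false;
        s->cache[i].data = 0;
    }
}

/* Load A: hit in the private cache if valid, otherwise fill from memory. */
int load_A(struct system_t *s, int cpu) {
    assert(cpu >= 0 && cpu < NUM_CPUS);
    struct cache_line *c = &s->cache[cpu];
    if (!c->valid) {
        c->data = s->mem_A;
        c->valid = true;
        c->dirty = false;
    }
    return c->data;
}

/* Store A: write-back, so only the private copy changes.
   Memory and the other cache are left untouched. */
void store_A(struct system_t *s, int cpu, int value) {
    assert(cpu >= 0 && cpu < NUM_CPUS);
    struct cache_line *c = &s->cache[cpu];
    c->valid = true;
    c->dirty = true;
    c->data = value;
}
```

After both CPUs load A (value 0) and CPU 0 stores 1, CPU 0 reads back 1, while CPU 1 still hits on its old copy and reads 0, and memory also still holds 0.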

How to solve coherence issues

Poll: Which of the following would eliminate coherency issues? (select all that apply)

- a) Only use write-through caches
- b) Don't use caches at all
- c) Only allow one cache to have a copy of a particular block at a time

Approaches to Cache Coherence

- Software-based solutions
  - Mechanisms:
    - Mark cache blocks/memory pages as cacheable/non-cacheable
    - Add "Flush" and "Invalidate" instructions
    - When is each of these needed?
  - Could be done by compiler or run-time system
  - Difficult to get perfect (e.g., what about memory aliasing?)

Approaches to Cache Coherence

- Hardware solutions are far more common
- Common approach for small multicore systems: snooping bus protocols
  - Each cache block maintains a particular state
  - Under certain state updates, the cache broadcasts messages over a bus
  - Each cache listens ("snoops") on the bus for messages regarding a particular cache block
  - Certain messages trigger a cache to update its own state

![Simple Write-Through Scheme: Valid-Invalid Coherence](https://slidetodoc.com/presentation_image_h2/3c1f8e3510a76b9def314fca58ab0e56/image-21.jpg)

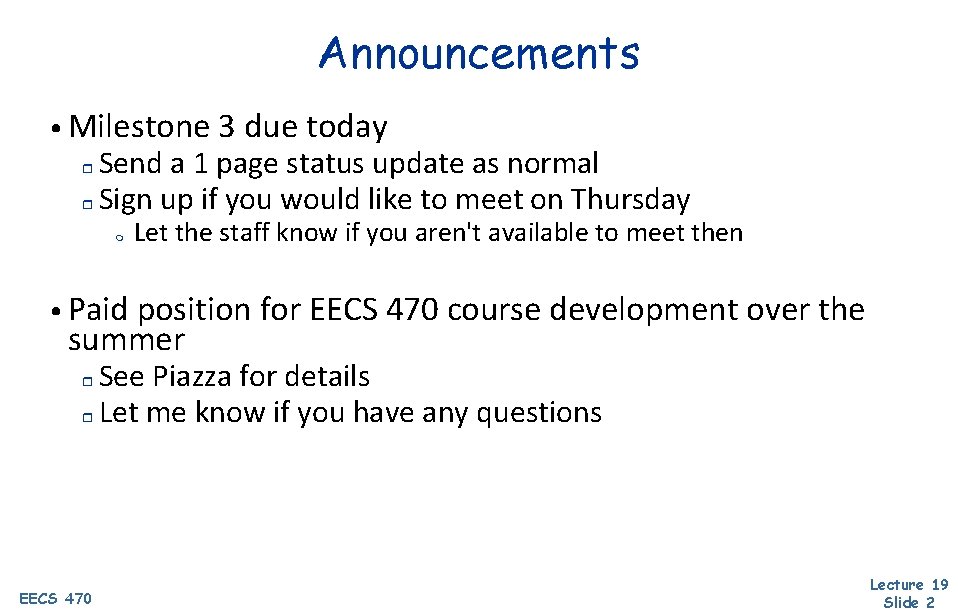

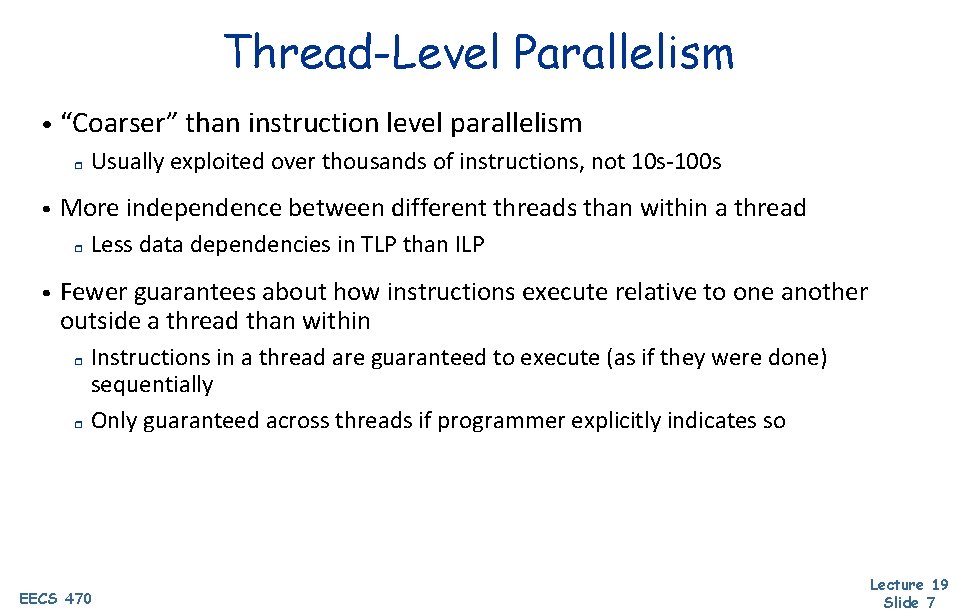

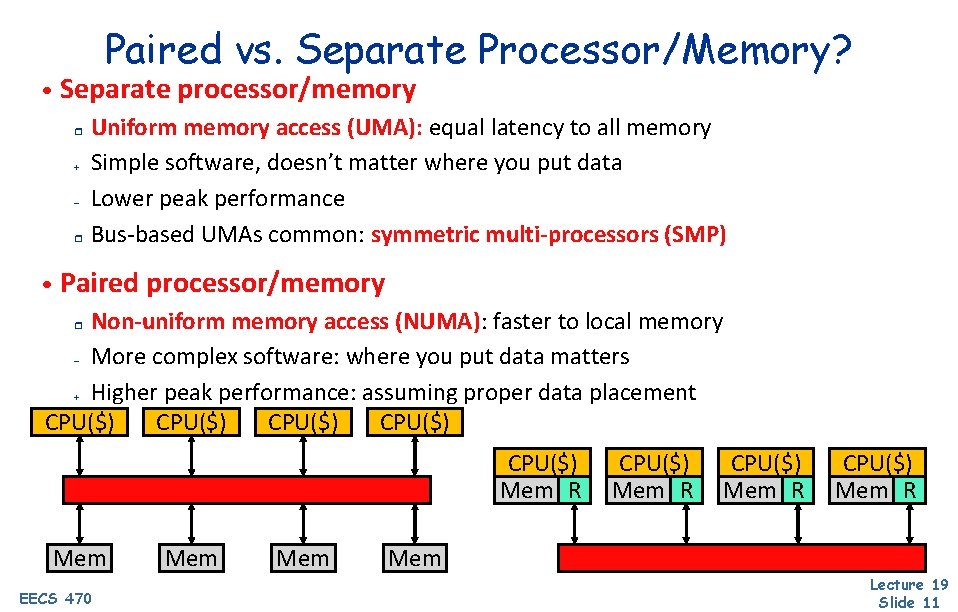

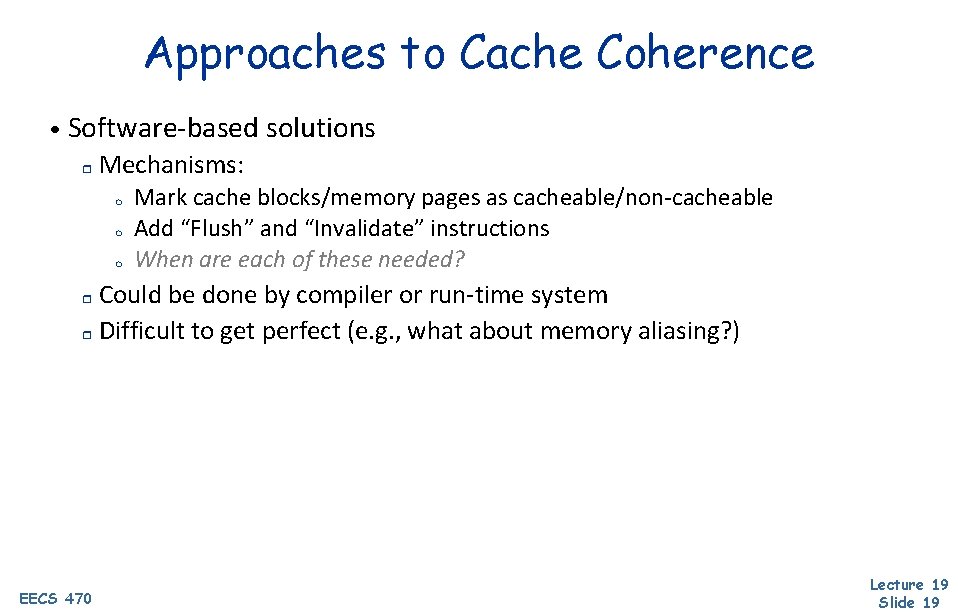

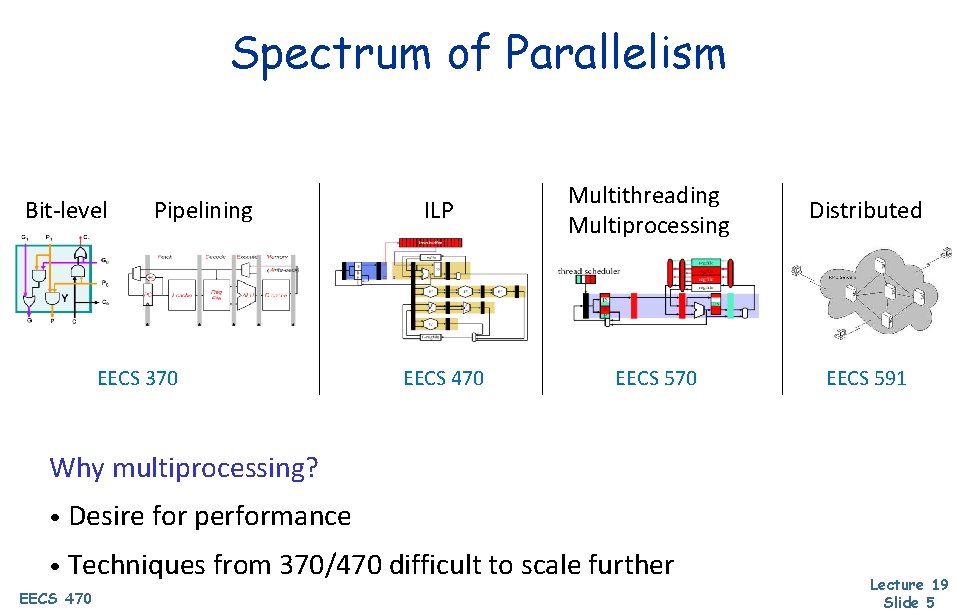

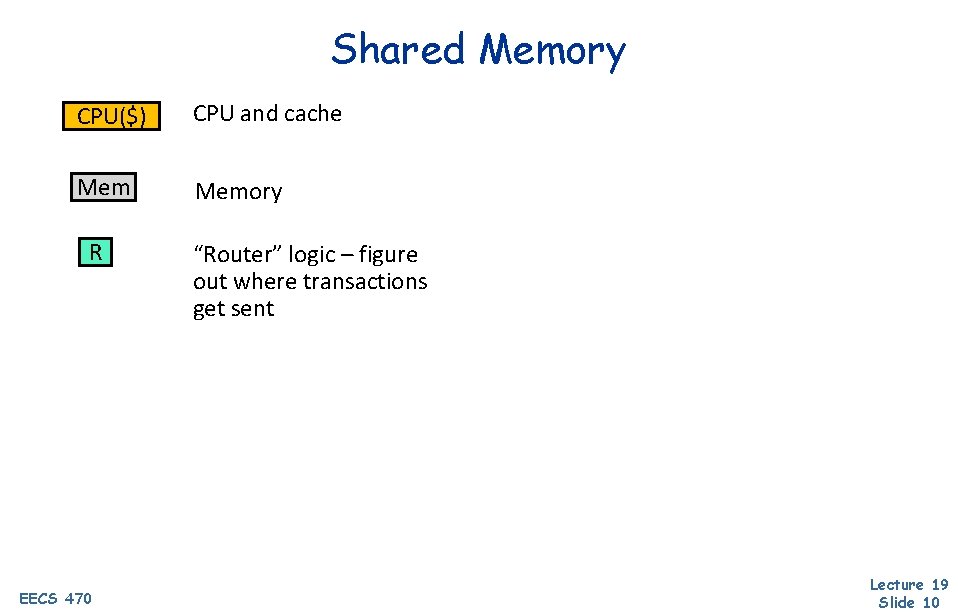

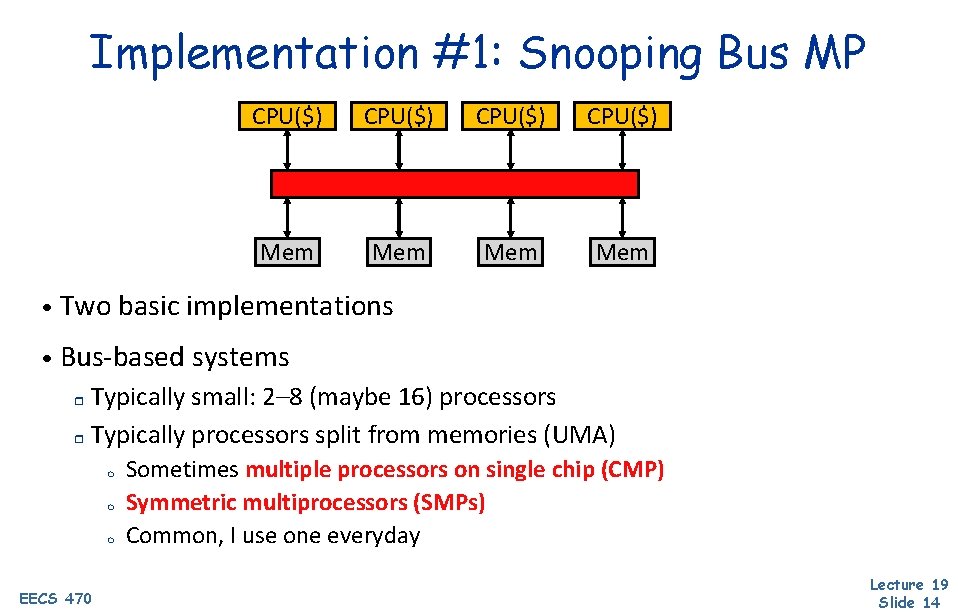

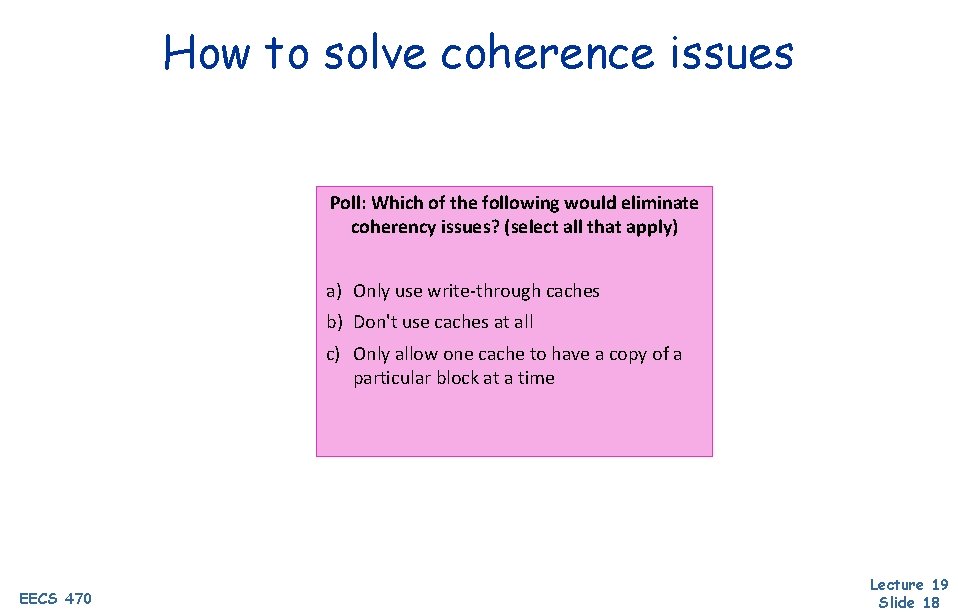

Simple Write-Through Scheme: Valid-Invalid Coherence

[Figure: P1 and P2 with write-through, no-write-allocate caches on a bus, plus main memory. t1: P1 executes Store A=1, and its copy A [V] goes from 0 to 1. t2: BusWr A=1 goes on the bus, and main memory A goes from 0 to 1. t3: P2 invalidates A, and its copy goes from [V] to [I].]

Valid-Invalid Coherence

- Allows multiple readers, but must write through to bus
  - Write-through, no-write-allocate cache
- All caches must monitor (aka "snoop") all bus traffic
  - Simple state machine for each cache frame

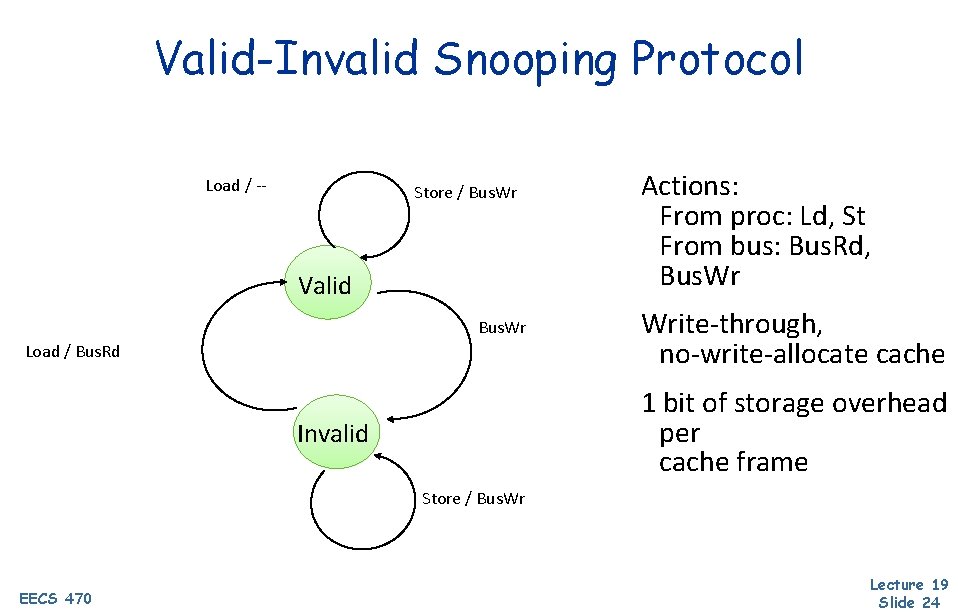

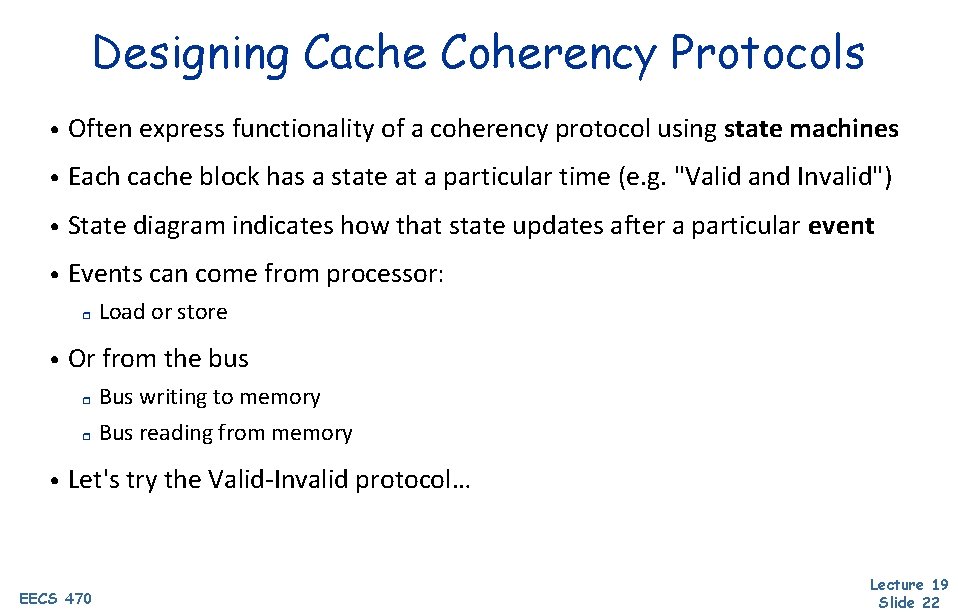

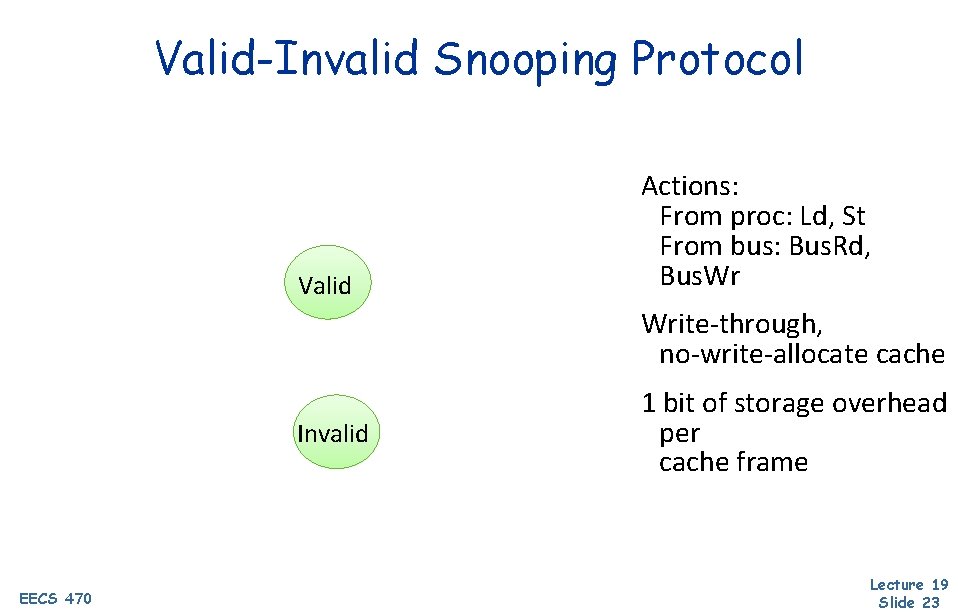

Designing Cache Coherency Protocols

- Often express functionality of a coherency protocol using state machines
- Each cache block has a state at a particular time (e.g., "Valid" or "Invalid")
- State diagram indicates how that state updates after a particular event
- Events can come from the processor:
  - Load or store
- Or from the bus:
  - Bus writing to memory
  - Bus reading from memory
- Let's try the Valid-Invalid protocol…

Valid-Invalid Snooping Protocol

States: Valid, Invalid

Actions:

- From proc: Ld, St
- From bus: BusRd, BusWr

Write-through, no-write-allocate cache

1 bit of storage overhead per cache frame

Valid-Invalid Snooping Protocol

State diagram transitions (event / bus message issued):

- Valid → Valid: Load / --
- Valid → Valid: Store / BusWr
- Valid → Invalid: BusWr seen on the bus
- Invalid → Valid: Load / BusRd
- Invalid → Invalid: Store / BusWr

Actions:

- From proc: Ld, St
- From bus: BusRd, BusWr

Write-through, no-write-allocate cache

1 bit of storage overhead per cache frame
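The Valid-Invalid state machine is small enough to write out directly. The following is a minimal sketch in C, assuming the transitions of the diagram above; the enum and function names (`vi_state`, `vi_event`, `vi_msg`, `vi_step`) are hypothetical and chosen only for this illustration.

```c
#include <assert.h>

enum vi_state { VI_INVALID, VI_VALID };
enum vi_event { EV_LOAD, EV_STORE, EV_SNOOP_BUSRD, EV_SNOOP_BUSWR };
enum vi_msg   { MSG_NONE, MSG_BUSRD, MSG_BUSWR };

/* Next state of one cache frame plus the bus message it issues, if any. */
struct vi_result { enum vi_state next; enum vi_msg msg; };

struct vi_result vi_step(enum vi_state s, enum vi_event e) {
    assert(s == VI_INVALID || s == VI_VALID);
    struct vi_result r = { s, MSG_NONE };
    switch (e) {
    case EV_LOAD:
        /* Load / -- when Valid; Load / BusRd when Invalid */
        if (s == VI_INVALID) { r.next = VI_VALID; r.msg = MSG_BUSRD; }
        break;
    case EV_STORE:
        /* Write-through: every store goes on the bus.
           No-write-allocate: an Invalid frame stays Invalid. */
        r.msg = MSG_BUSWR;
        break;
    case EV_SNOOP_BUSWR:
        /* Another cache wrote the block: drop our copy. */
        r.next = VI_INVALID;
        break;
    case EV_SNOOP_BUSRD:
        /* Other readers are fine: no change. */
        break;
    }
    return r;
}
```

For example, `vi_step(VI_INVALID, EV_LOAD)` yields Valid with a BusRd, and `vi_step(VI_VALID, EV_SNOOP_BUSWR)` yields Invalid with no message.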

Supporting Write-Back Caches

- Write-back caches drastically reduce bus write bandwidth
- Key idea: add notion of "ownership" to Valid-Invalid
  - Mutual exclusion: when the "owner" has the only replica of a cache block, it may update it freely
  - Sharing: multiple readers are ok, but they may not write without gaining ownership
  - Need to find which cache (if any) is an owner on read misses
  - Need to eventually update memory so writes are not lost

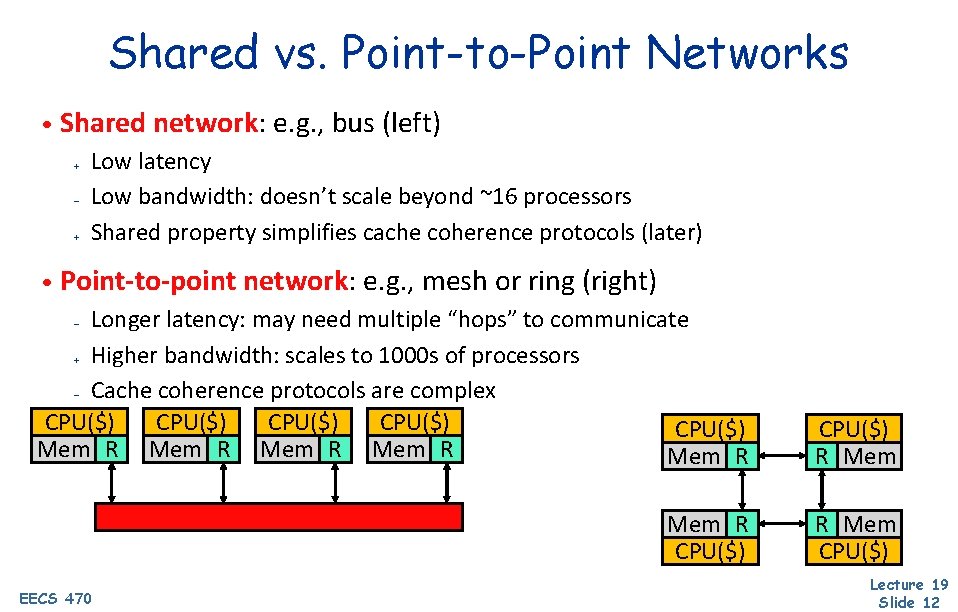

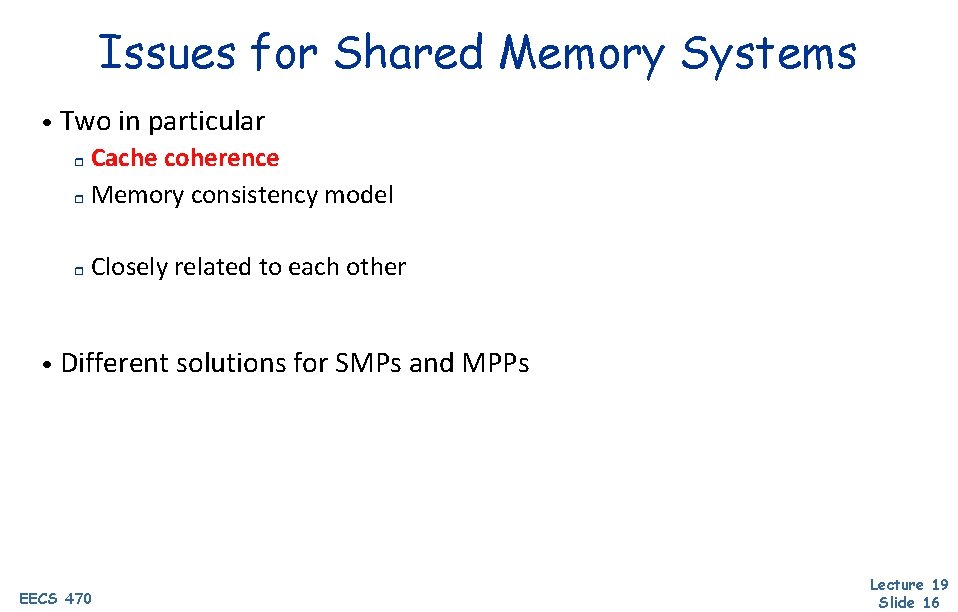

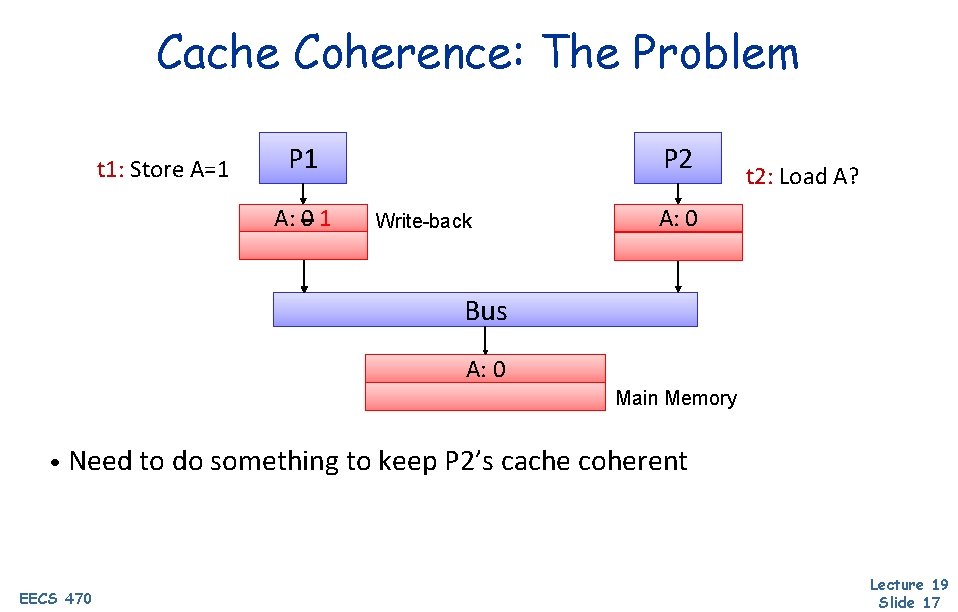

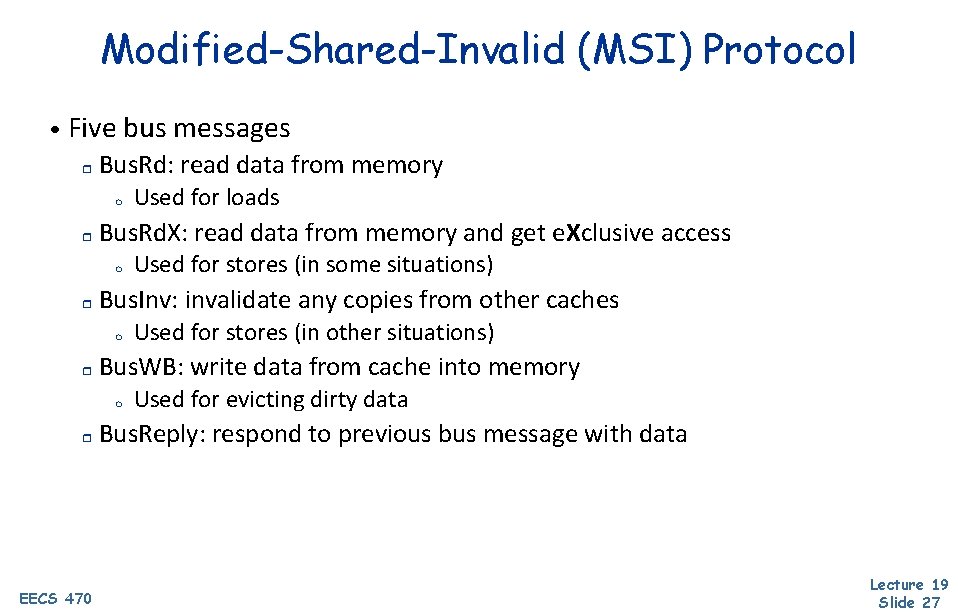

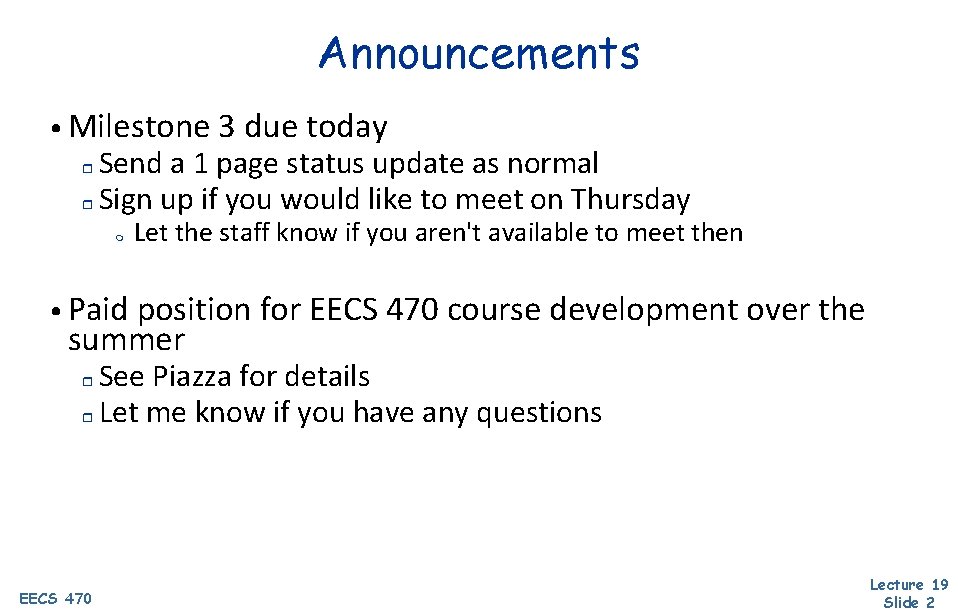

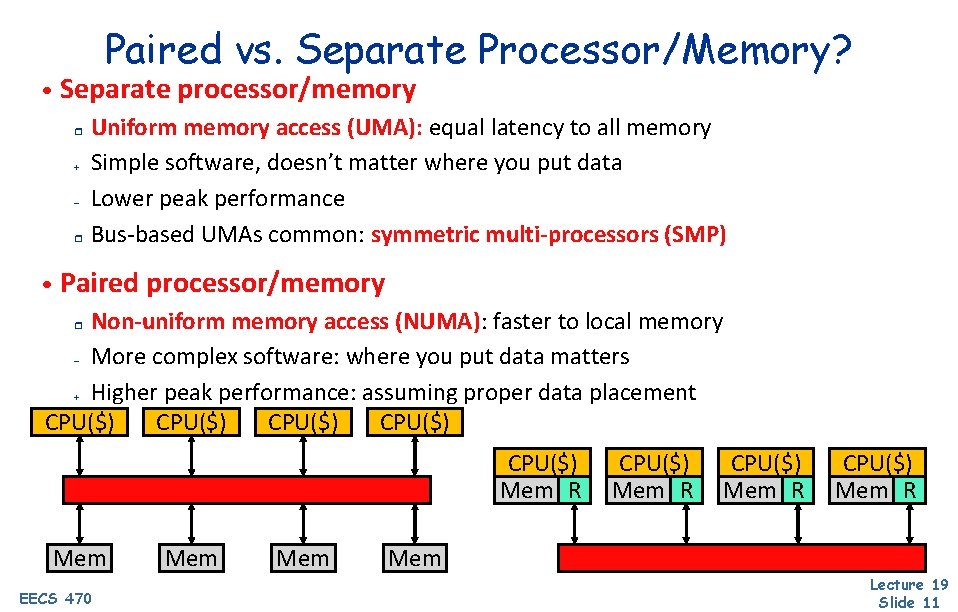

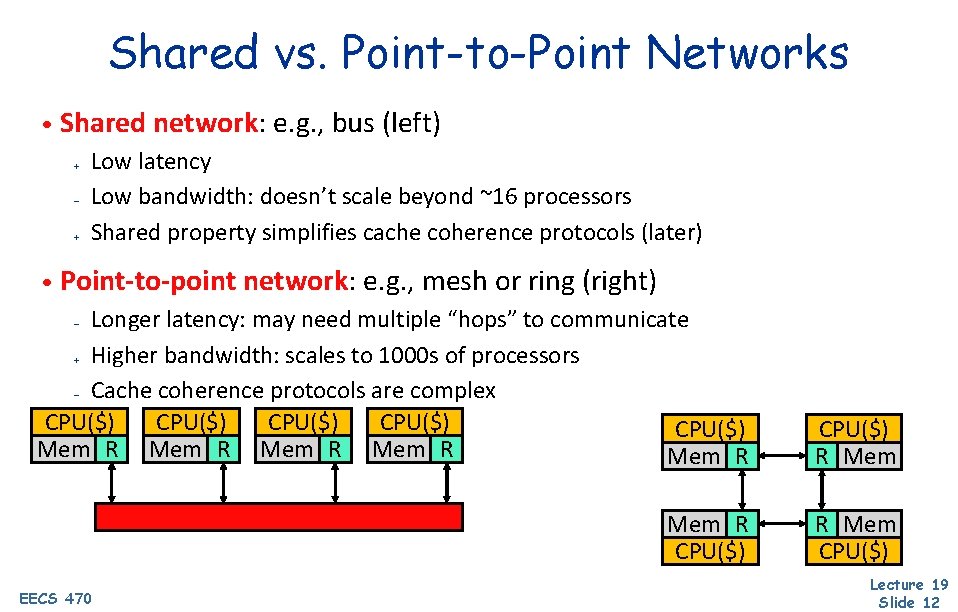

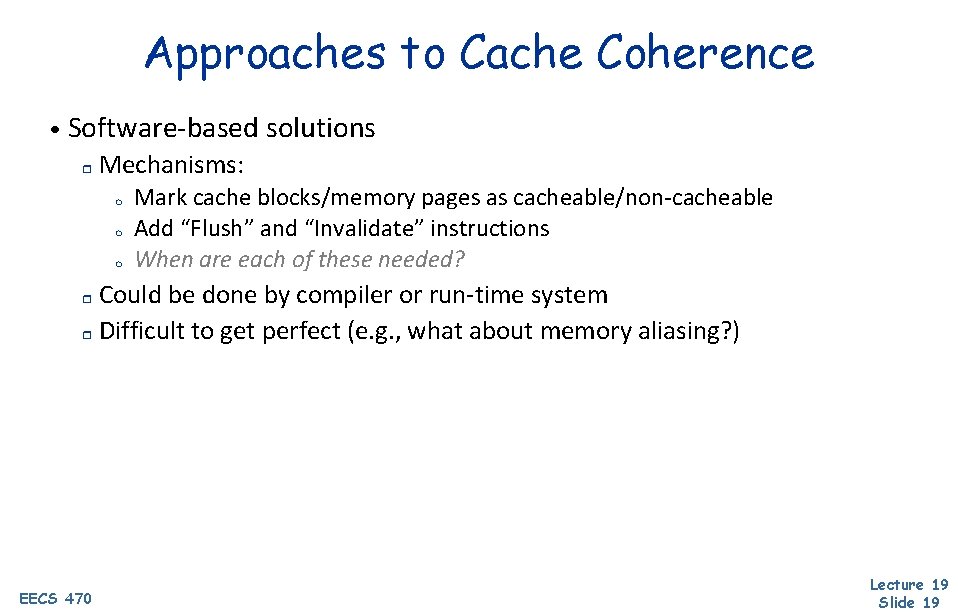

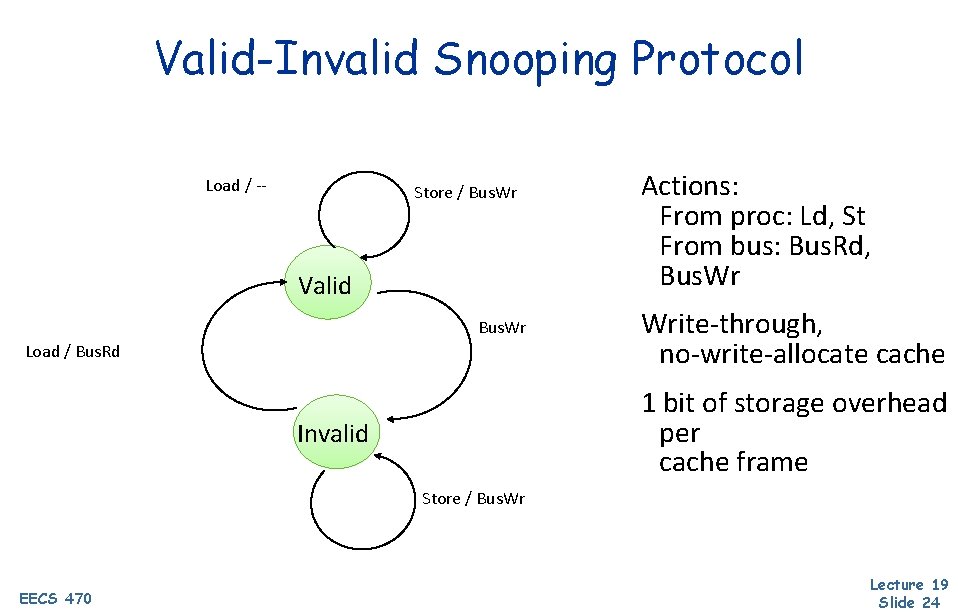

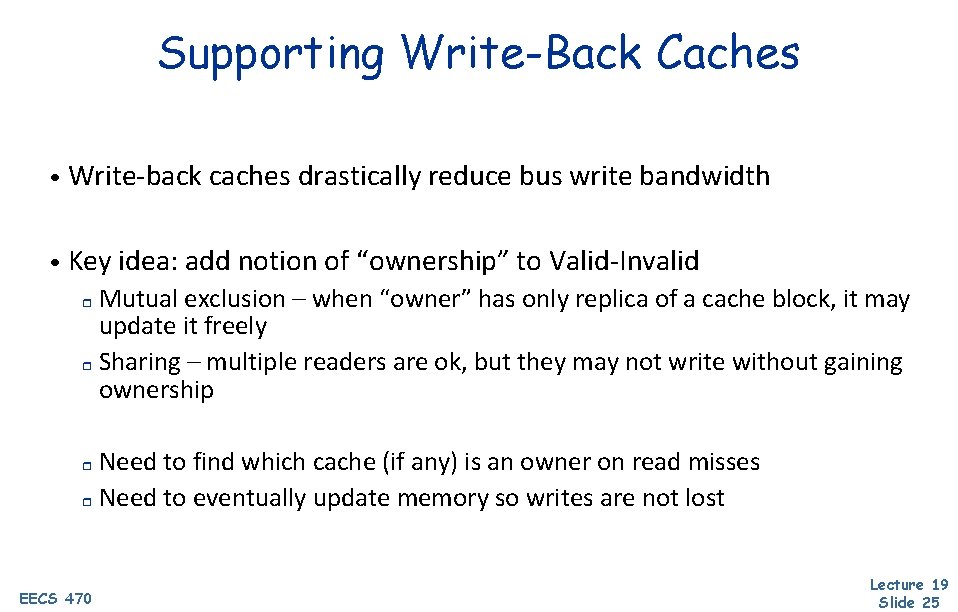

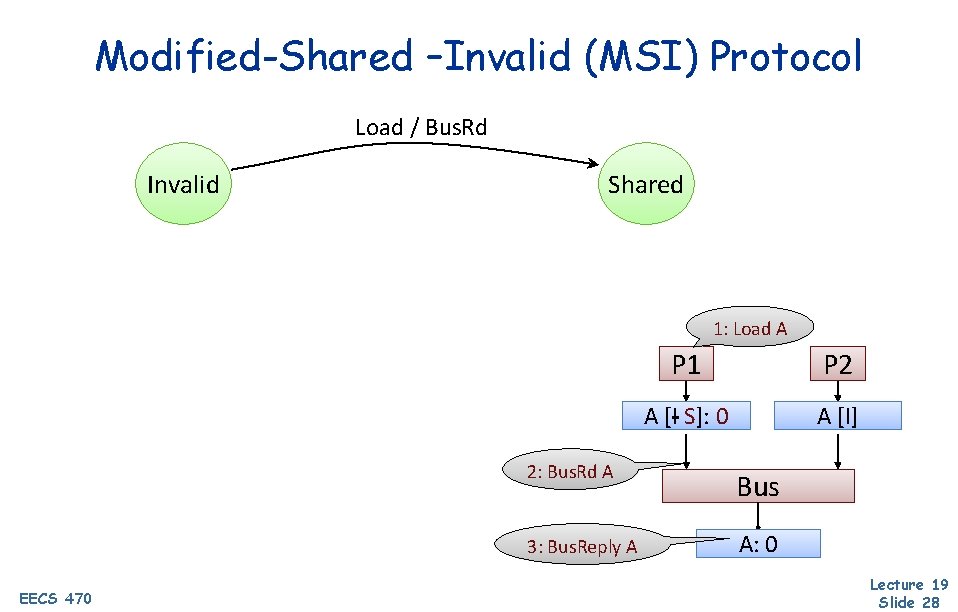

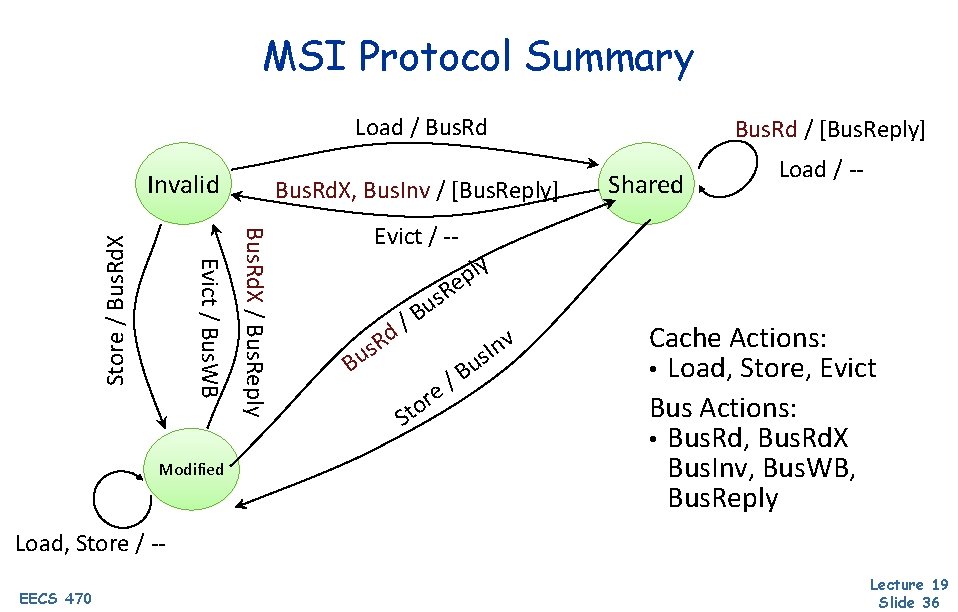

Modified-Shared-Invalid (MSI) Protocol

- Three states tracked per-block at each cache
  - Invalid: cache does not have a copy
  - Shared: cache has a read-only copy; clean
    - Clean == memory is up to date
  - Modified: cache has the only copy; writable; dirty
    - Dirty == memory is out of date
- Three processor actions
  - Load, Store, Evict

Modified-Shared-Invalid (MSI) Protocol

- Five bus messages
  - BusRd: read data from memory
    - Used for loads
  - BusRdX: read data from memory and get eXclusive access
    - Used for stores (in some situations)
  - BusInv: invalidate any copies from other caches
    - Used for stores (in other situations)
  - BusWB: write data from cache into memory
    - Used for evicting dirty data
  - BusReply: respond to previous bus message with data

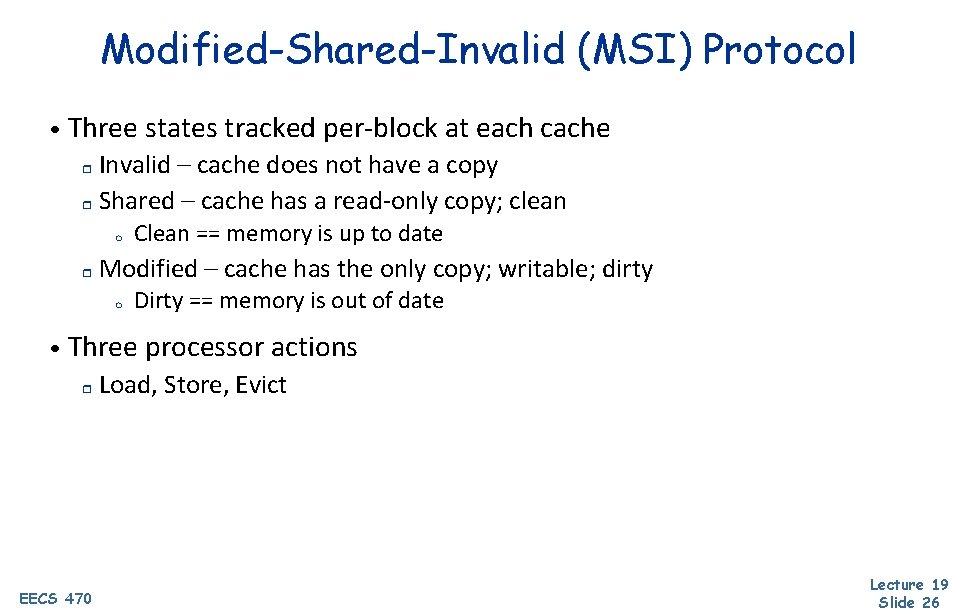

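Modified-Shared-Invalid (MSI) Protocol

Transitions shown (event / bus message):

- Invalid → Shared: Load / BusRd

Example (P1 and P2 both start with A [I]; memory holds A: 0):

1. P1: Load A
2. BusRd A goes on the bus
3. BusReply A returns the data, and P1's copy goes from A [I] to A [S]: 0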

![Modified-Shared-Invalid (MSI) Protocol](https://slidetodoc.com/presentation_image_h2/3c1f8e3510a76b9def314fca58ab0e56/image-29.jpg)

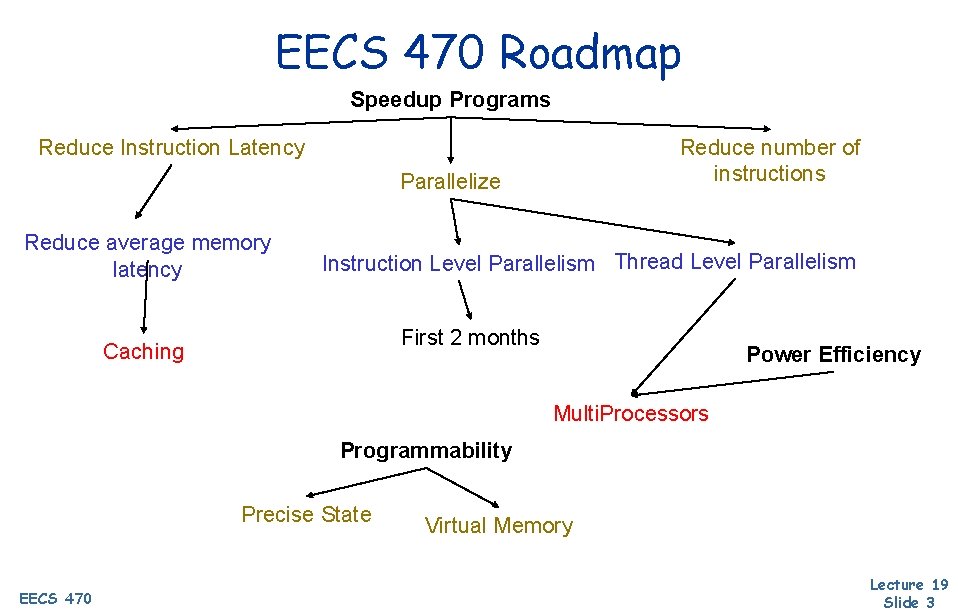

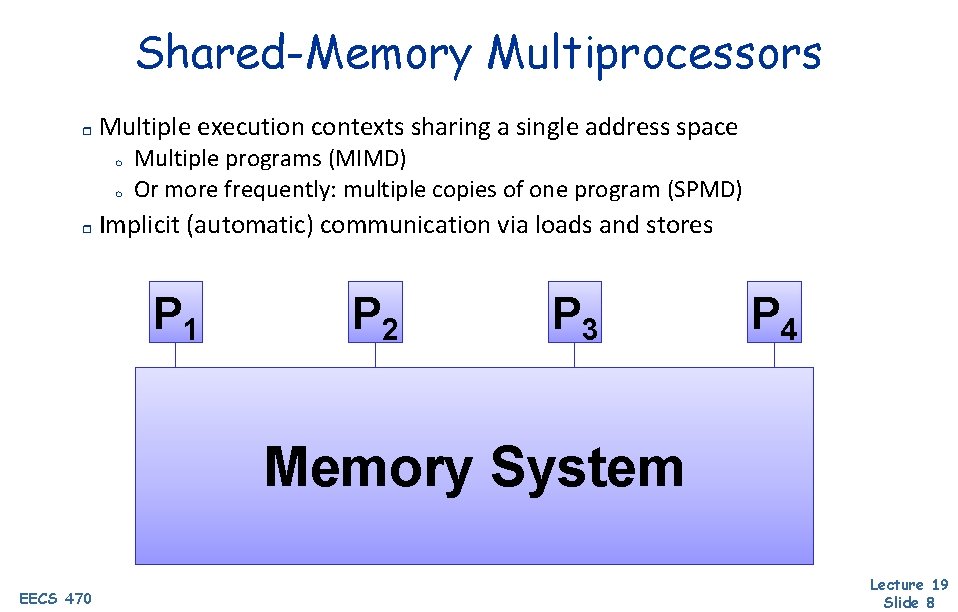

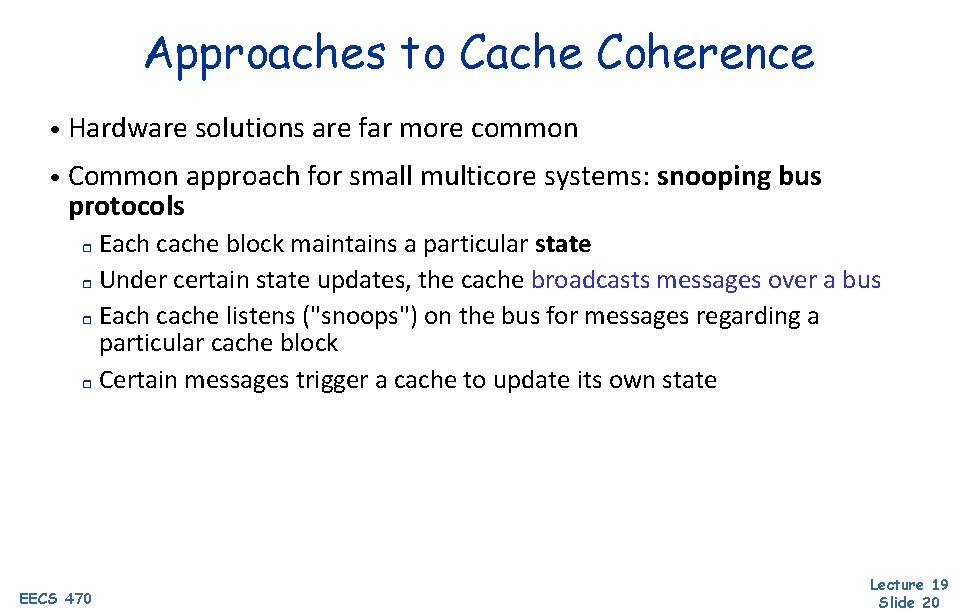

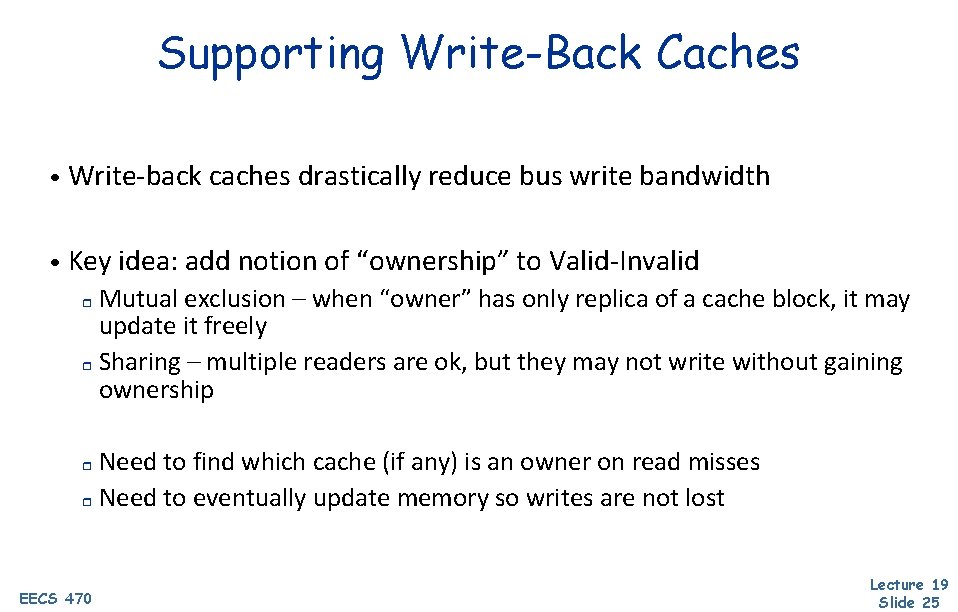

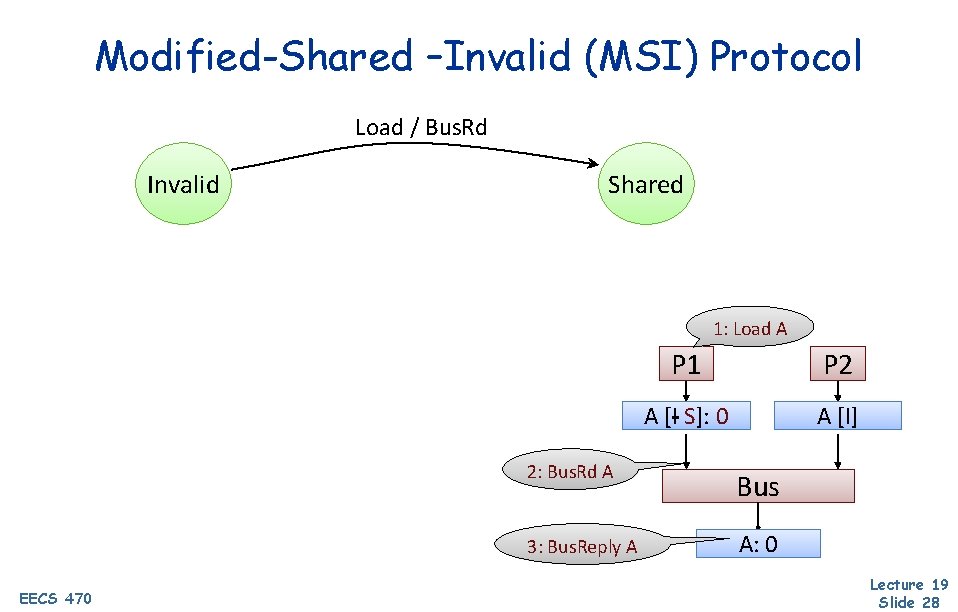

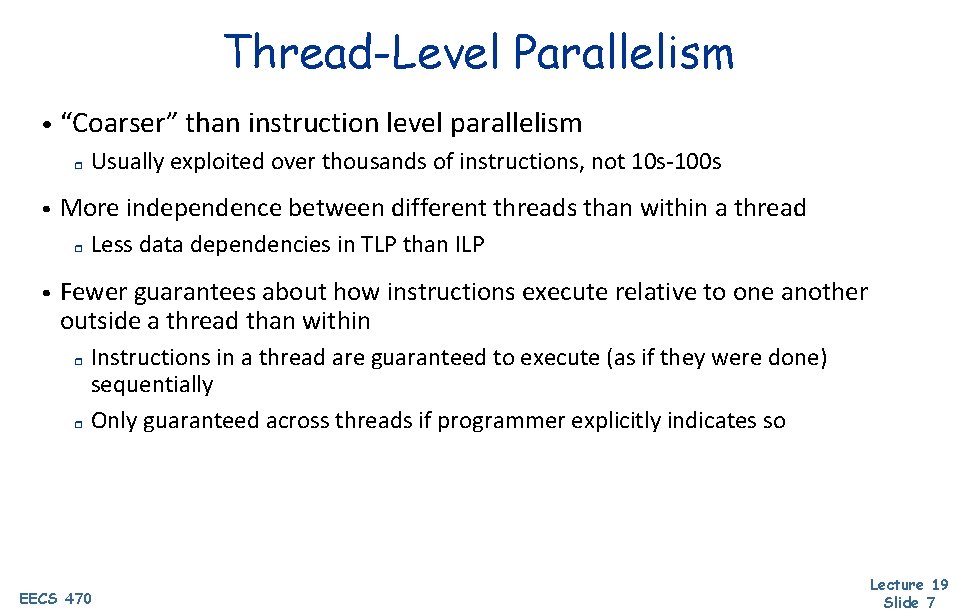

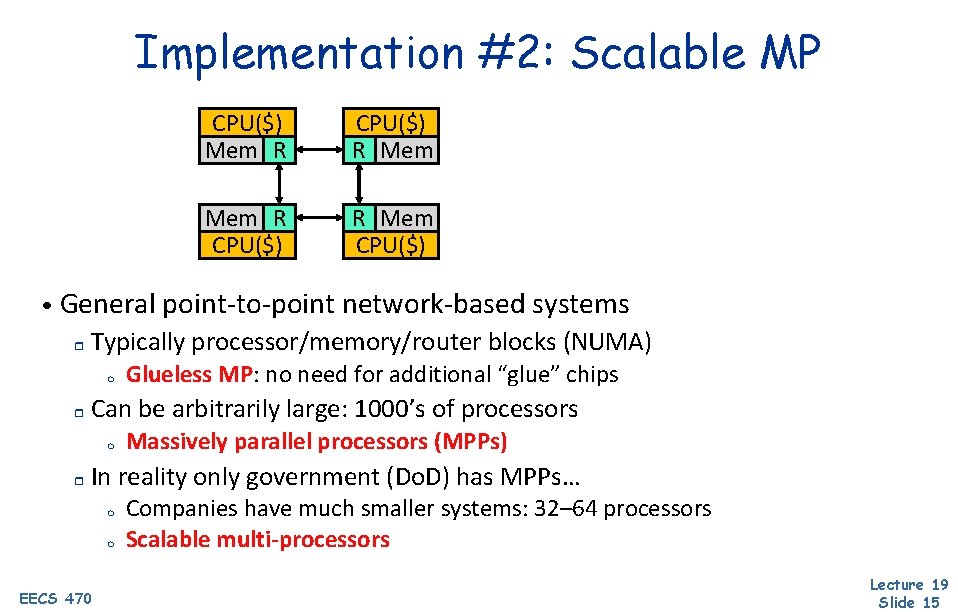

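Modified-Shared-Invalid (MSI) Protocol

Transitions shown (event / bus message):

- Invalid → Shared: Load / BusRd
- Shared → Shared: Load / --
- Shared → Shared: BusRd / [BusReply]

Poll: What state should P1 and P2 be in after this?

Example (P1 holds A [S]: 0; P2 holds A [I]; memory holds A: 0):

1. P2: Load A
2. BusRd A goes on the bus
3. BusReply A returns the data, and P2's copy goes from A [I] to A [S]: 0, while P1 stays at A [S]: 0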

![Modified-Shared-Invalid (MSI) Protocol](https://slidetodoc.com/presentation_image_h2/3c1f8e3510a76b9def314fca58ab0e56/image-30.jpg)

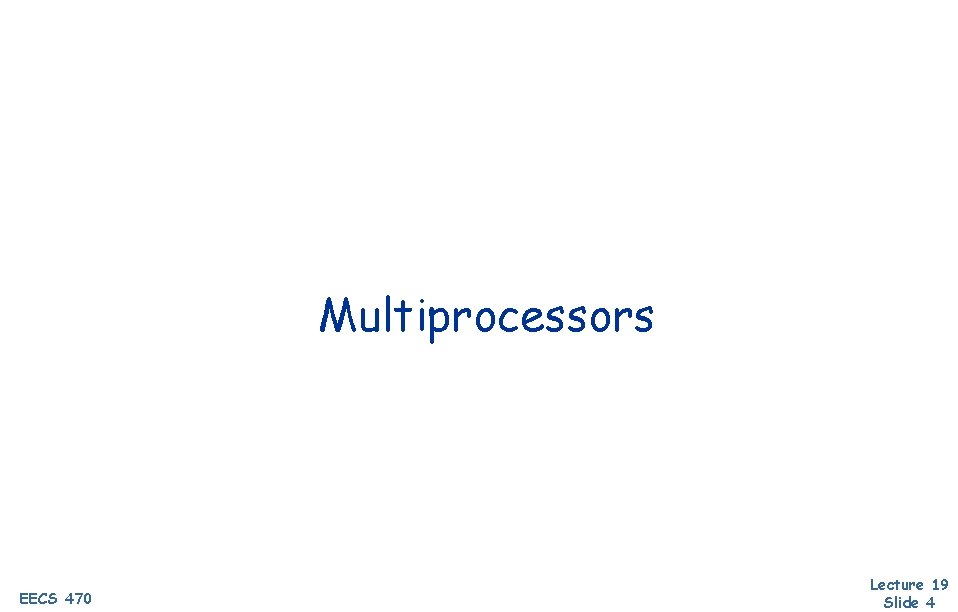

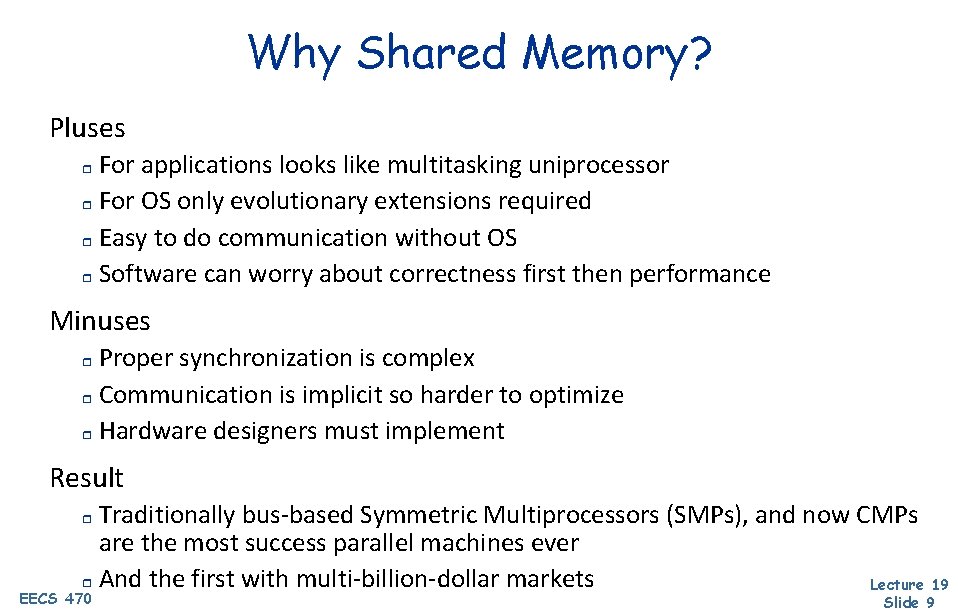

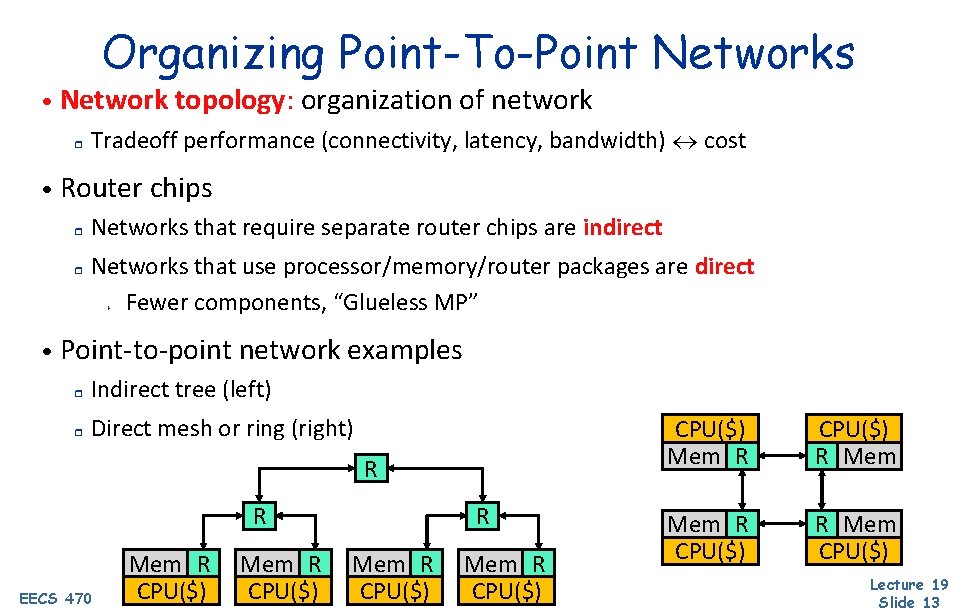

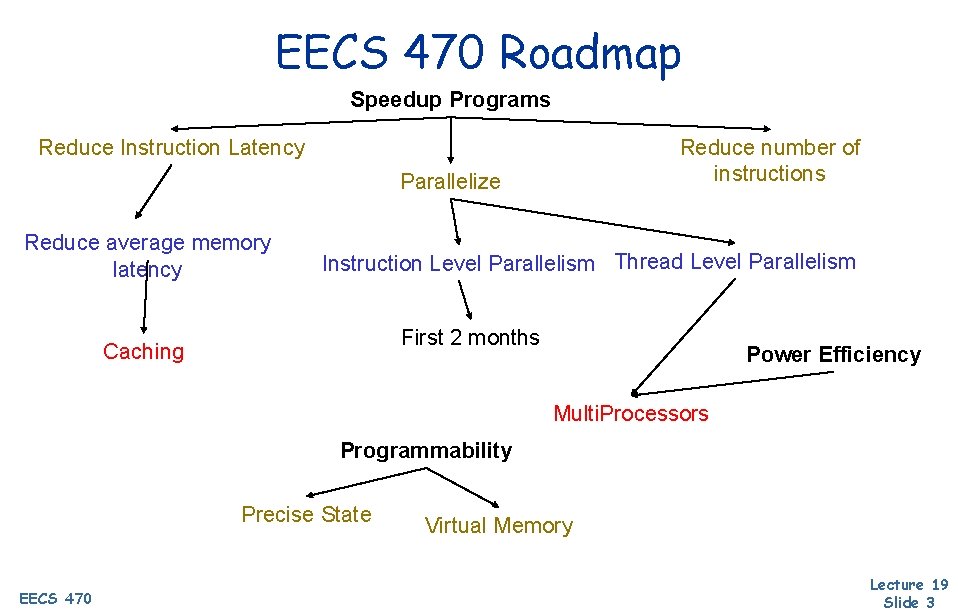

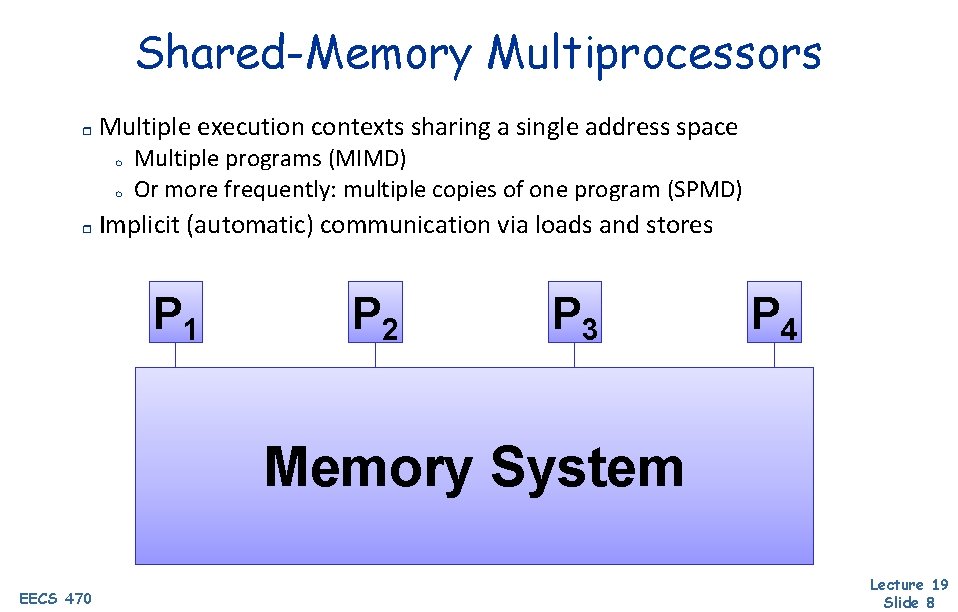

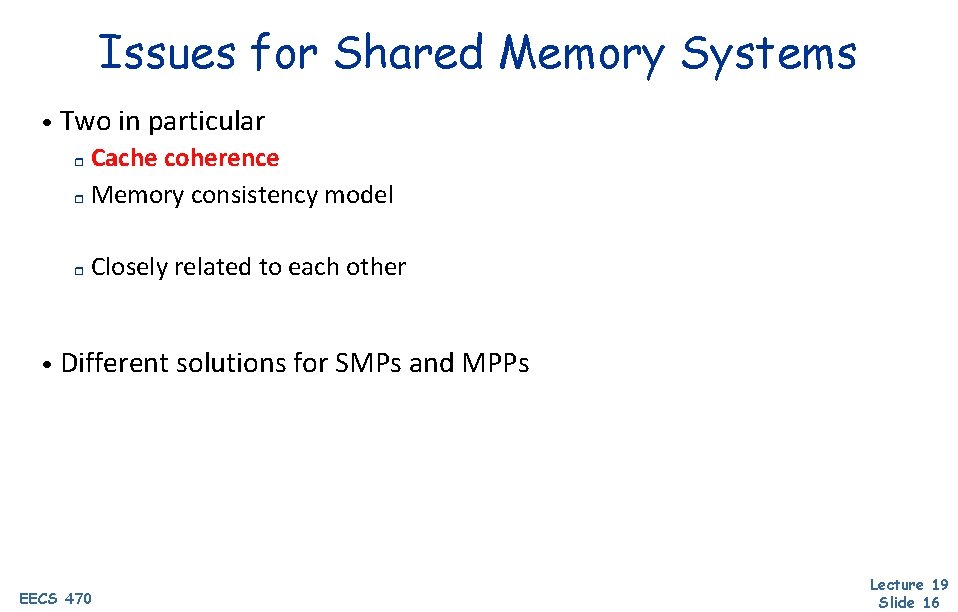

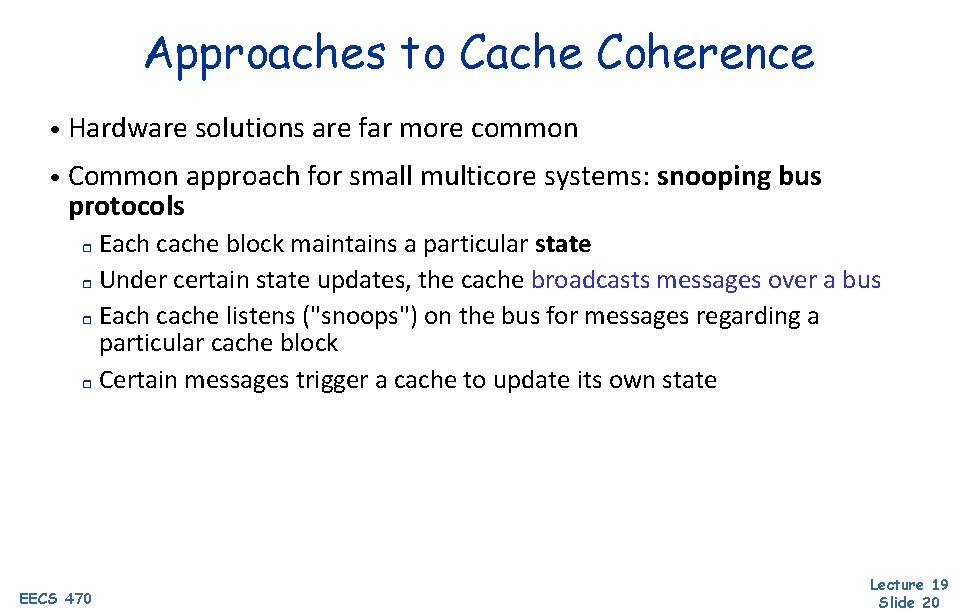

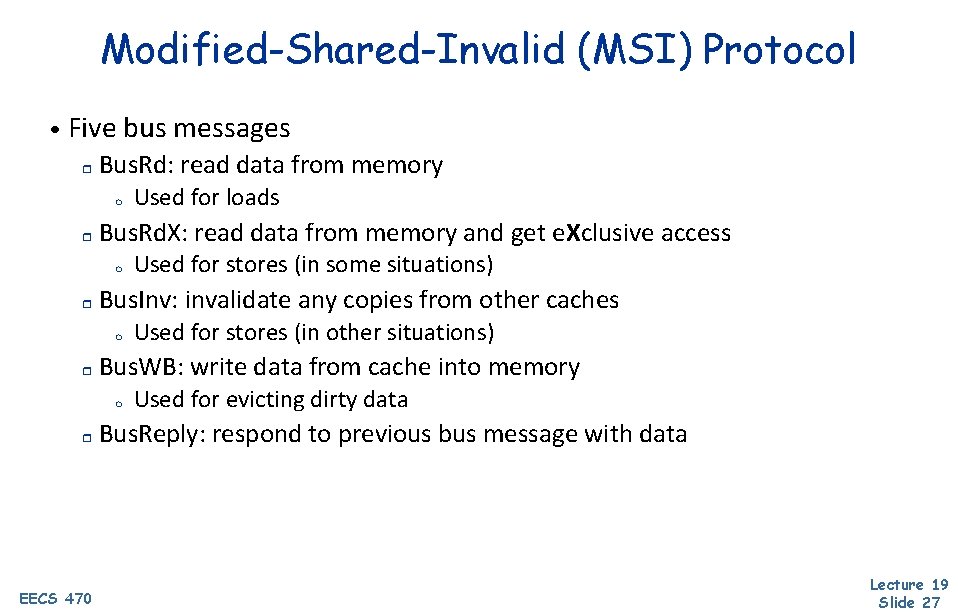

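Modified-Shared-Invalid (MSI) Protocol

Transitions shown (event / bus message):

- Invalid → Shared: Load / BusRd
- Shared → Shared: Load / --
- Shared → Shared: BusRd / [BusReply]
- Shared → Invalid: Evict / --

Example (P1 and P2 both hold A [S]: 0; memory holds A: 0):

1. P2: Evict A, and P2's copy goes from A [S] to A [I] with no bus message; P1 stays at A [S]: 0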

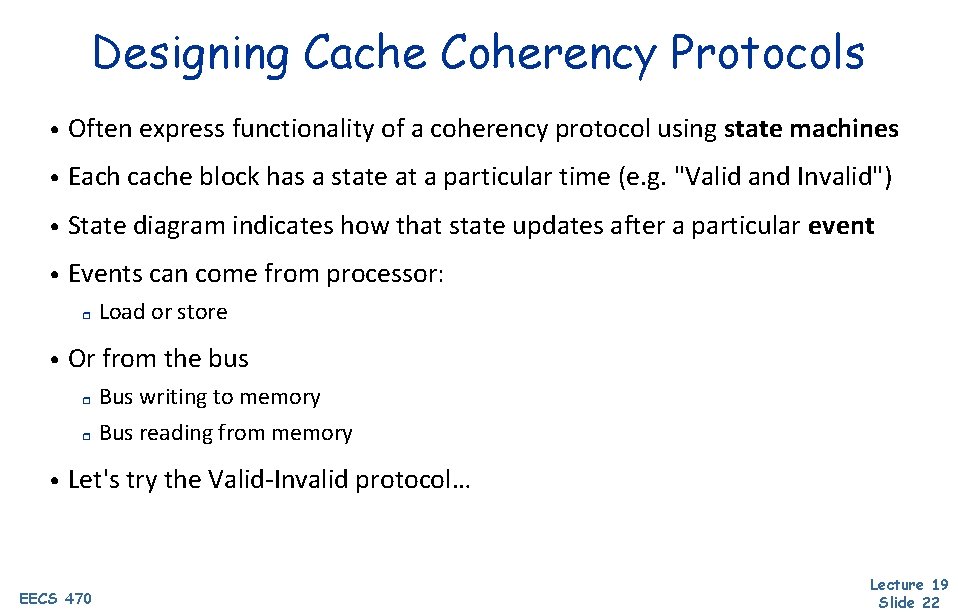

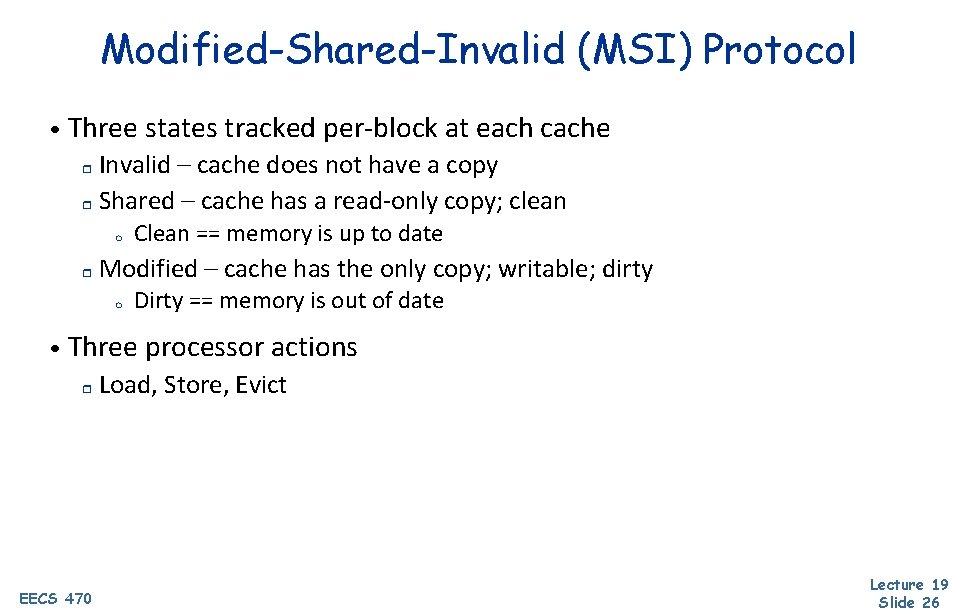

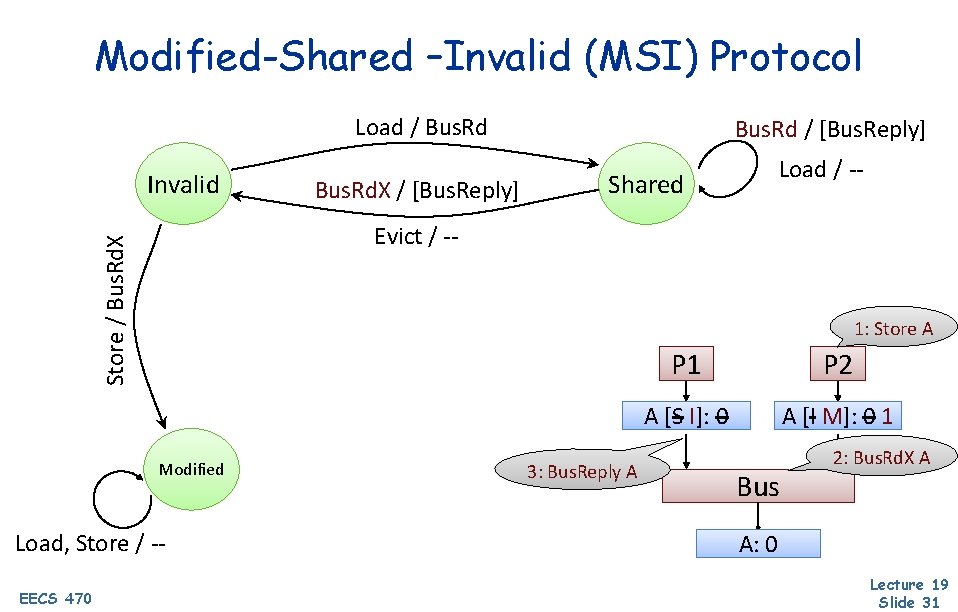

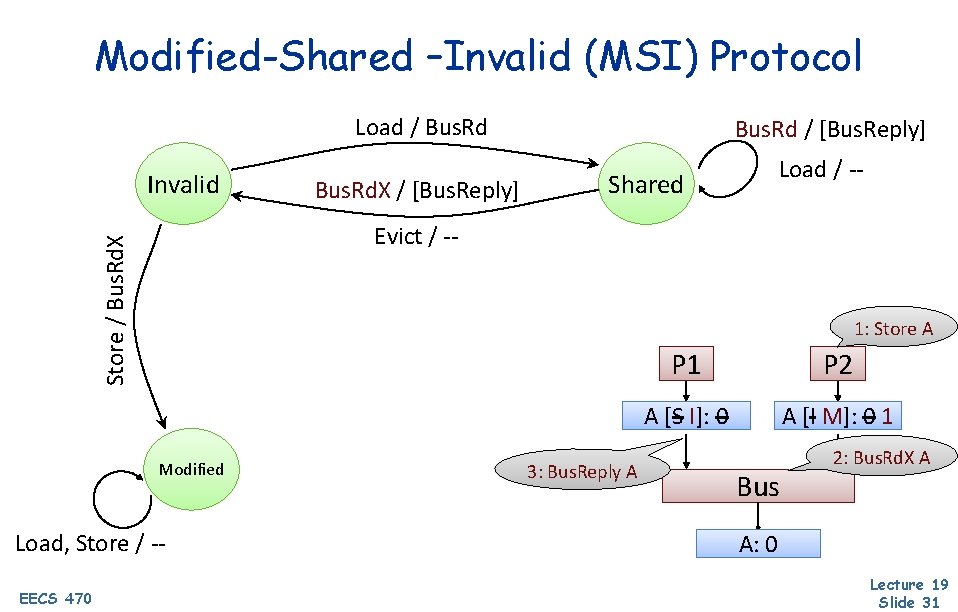

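Modified-Shared-Invalid (MSI) Protocol

Transitions shown (event / bus message):

- Invalid → Shared: Load / BusRd
- Invalid → Modified: Store / BusRdX
- Shared → Shared: Load / --
- Shared → Shared: BusRd / [BusReply]
- Shared → Invalid: Evict / --
- Shared → Invalid: BusRdX / [BusReply]
- Modified → Modified: Load, Store / --

Example (P1 holds A [S]: 0; P2 holds A [I]; memory holds A: 0):

1. P2: Store A
2. BusRdX A goes on the bus
3. BusReply A returns the data; P1's copy goes from A [S] to A [I], and P2's copy goes from A [I] to A [M]: 1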

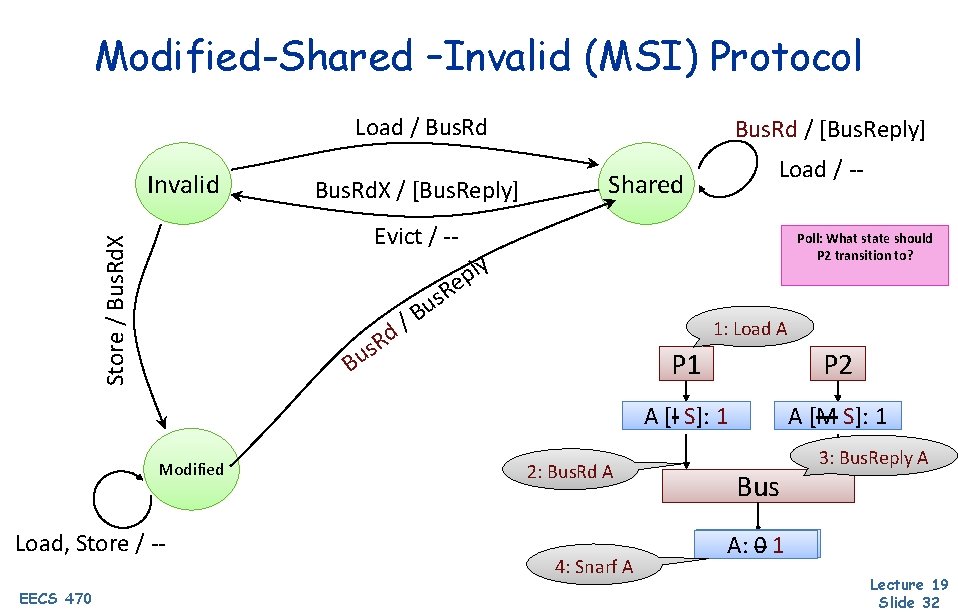

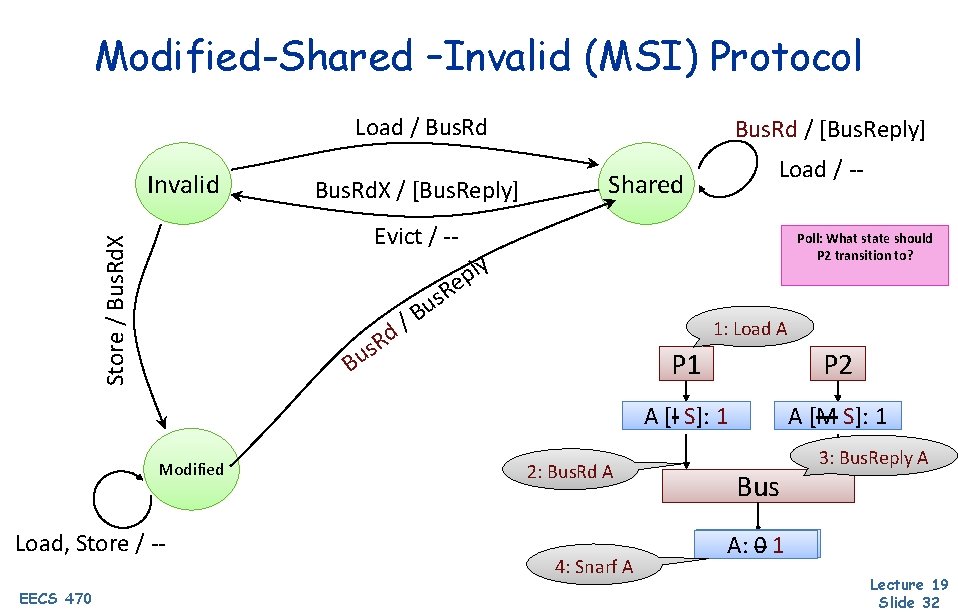

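Modified-Shared-Invalid (MSI) Protocol

Transitions shown (event / bus message):

- Invalid → Shared: Load / BusRd
- Invalid → Modified: Store / BusRdX
- Shared → Shared: Load / --
- Shared → Shared: BusRd / [BusReply]
- Shared → Invalid: Evict / --
- Shared → Invalid: BusRdX / [BusReply]
- Modified → Modified: Load, Store / --
- Modified → Shared: BusRd / BusReply

Poll: What state should P2 transition to?

Example (P1 holds A [I]; P2 holds A [M]: 1; memory holds A: 0):

1. P1: Load A
2. BusRd A goes on the bus
3. BusReply A: P2 supplies the data, and its copy goes from A [M] to A [S]: 1
4. Snarf A: memory picks up the replied data and goes from A: 0 to A: 1; P1's copy goes from A [I] to A [S]: 1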

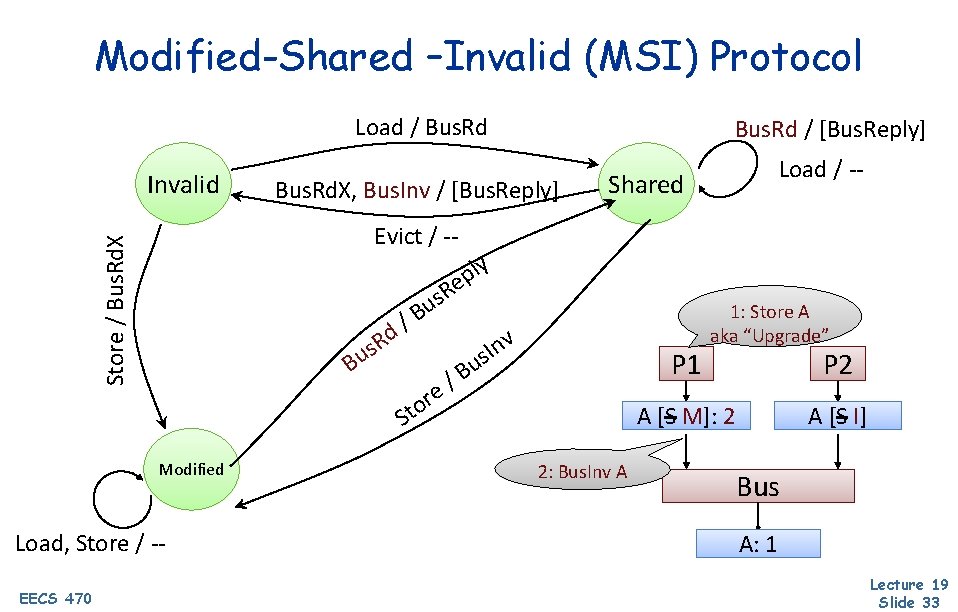

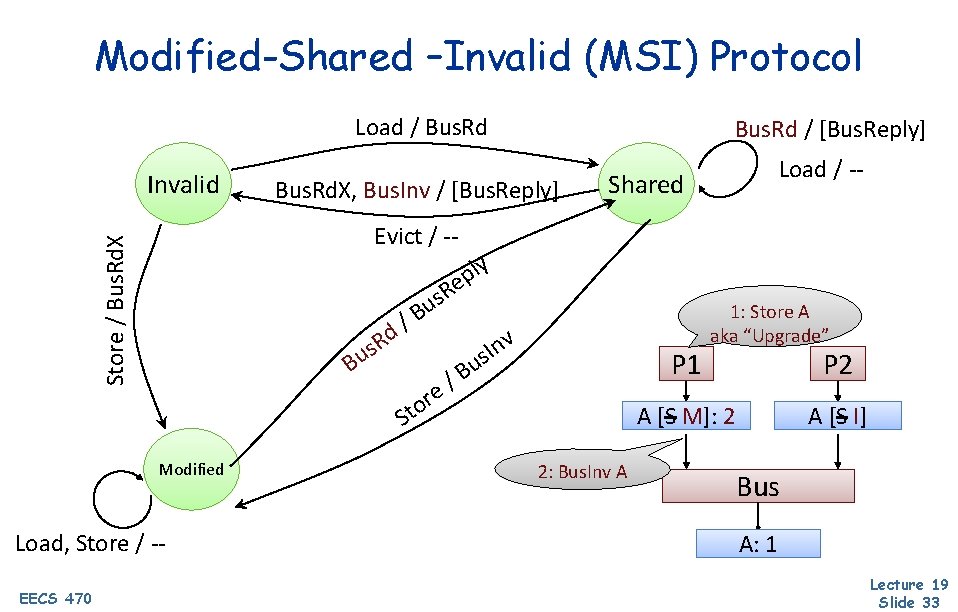

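Modified-Shared-Invalid (MSI) Protocol

Transitions shown (event / bus message):

- Invalid → Shared: Load / BusRd
- Invalid → Modified: Store / BusRdX
- Shared → Shared: Load / --
- Shared → Shared: BusRd / [BusReply]
- Shared → Modified: Store / BusInv (aka "Upgrade")
- Shared → Invalid: Evict / --
- Shared → Invalid: BusRdX, BusInv / [BusReply]
- Modified → Modified: Load, Store / --
- Modified → Shared: BusRd / BusReply

Example (P1 and P2 both hold A [S]: 1; memory holds A: 1):

1. P1: Store A
2. BusInv A goes on the bus; P1's copy goes from A [S]: 1 to A [M]: 2, and P2's copy goes from A [S] to A [I]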

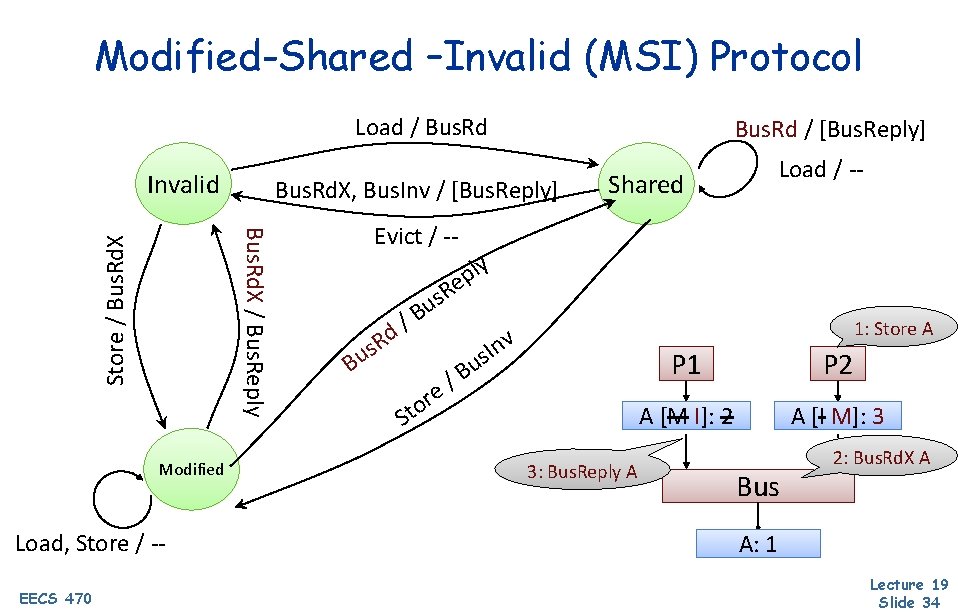

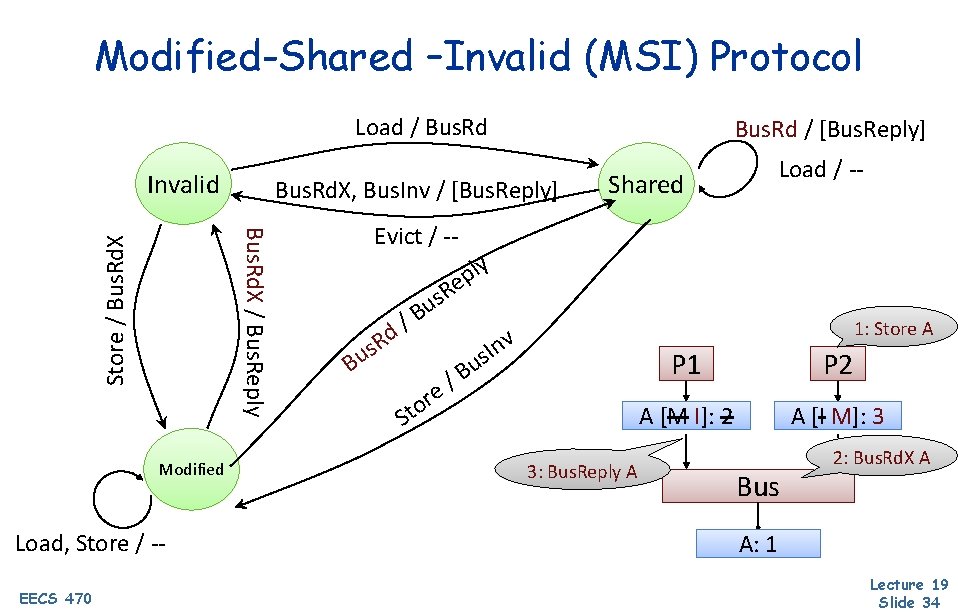

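Modified-Shared-Invalid (MSI) Protocol

Transitions shown (event / bus message):

- Invalid → Shared: Load / BusRd
- Invalid → Modified: Store / BusRdX
- Shared → Shared: Load / --
- Shared → Shared: BusRd / [BusReply]
- Shared → Modified: Store / BusInv
- Shared → Invalid: Evict / --
- Shared → Invalid: BusRdX, BusInv / [BusReply]
- Modified → Modified: Load, Store / --
- Modified → Shared: BusRd / BusReply
- Modified → Invalid: BusRdX / BusReply

Example (P1 holds A [M]: 2; P2 holds A [I]; memory holds A: 1):

1. P2: Store A
2. BusRdX A goes on the bus
3. BusReply A: P1 supplies the data, and its copy goes from A [M] to A [I]; P2's copy goes from A [I] to A [M]: 3; memory still holds A: 1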

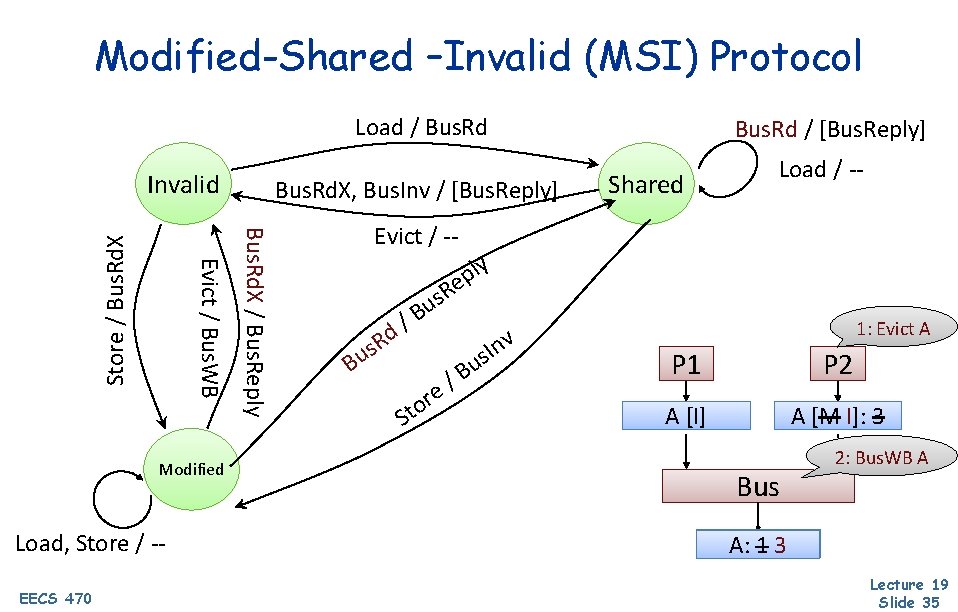

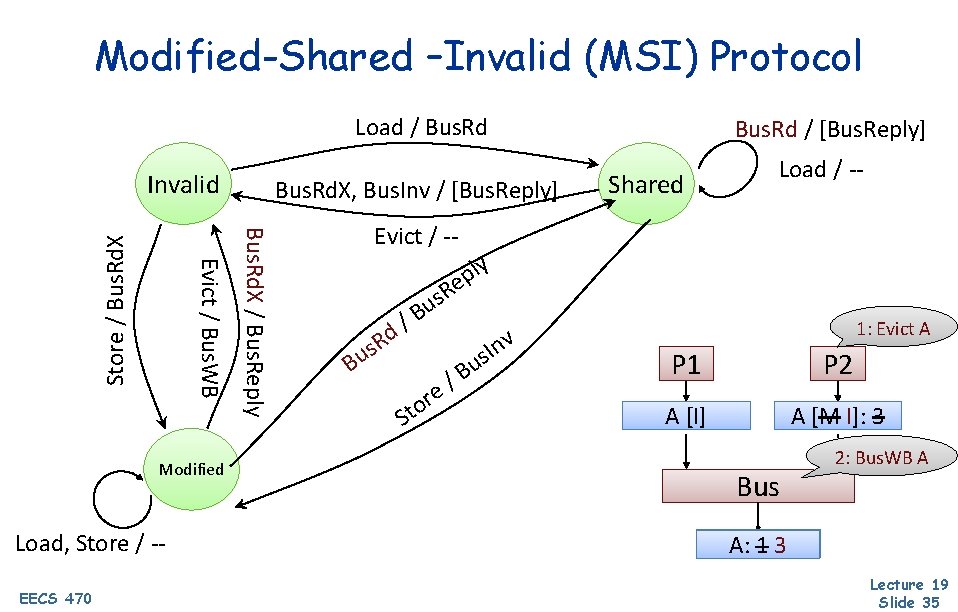

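Modified-Shared-Invalid (MSI) Protocol

Transitions shown (event / bus message):

- Invalid → Shared: Load / BusRd
- Invalid → Modified: Store / BusRdX
- Shared → Shared: Load / --
- Shared → Shared: BusRd / [BusReply]
- Shared → Modified: Store / BusInv
- Shared → Invalid: Evict / --
- Shared → Invalid: BusRdX, BusInv / [BusReply]
- Modified → Modified: Load, Store / --
- Modified → Shared: BusRd / BusReply
- Modified → Invalid: BusRdX / BusReply
- Modified → Invalid: Evict / BusWB

Example (P1 holds A [I]; P2 holds A [M]: 3; memory holds A: 1):

1. P2: Evict A
2. BusWB A goes on the bus; P2's copy goes from A [M] to A [I], and memory goes from A: 1 to A: 3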
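The load/store/evict sequence walked through on the MSI slides can be replayed with a minimal sketch in C. It assumes a toy model of two caches that each track a single block A and a bus that completes each transaction atomically; `bus_system`, `sys_reset`, `bus_read`, `cpu_load`, `cpu_store`, and `cpu_evict` are hypothetical names, and CPU indices 0 and 1 stand for P1 and P2.

```c
#include <assert.h>

#define NUM_CPUS 2

enum msi_state { INVALID, SHARED, MODIFIED };

struct cache_t { enum msi_state state; int data; };

struct bus_system {
    struct cache_t cache[NUM_CPUS];  /* each cache tracks one block, A */
    int mem;                         /* main memory copy of A */
};

void sys_reset(struct bus_system *s, int initial) {
    s->mem = initial;
    for (int i = 0; i < NUM_CPUS; i++) {
        s->cache[i].state = INVALID;
        s->cache[i].data = 0;
    }
}

/* Snoop side of BusRd (exclusive == 0) and BusRdX (exclusive != 0).
   A Modified owner replies with its data. On BusRd the owner drops to
   Shared and memory snarfs the reply; on BusRdX all other copies are
   invalidated. Returns the data handed to the requester. */
static int bus_read(struct bus_system *s, int requester, int exclusive) {
    int data = s->mem;                      /* memory replies by default */
    for (int i = 0; i < NUM_CPUS; i++) {
        if (i == requester) continue;
        struct cache_t *c = &s->cache[i];
        if (c->state == MODIFIED) {
            data = c->data;                 /* owner's BusReply */
            if (!exclusive) s->mem = data;  /* snarf */
        }
        if (exclusive) c->state = INVALID;
        else if (c->state == MODIFIED) c->state = SHARED;
    }
    return data;
}

int cpu_load(struct bus_system *s, int cpu) {
    assert(cpu >= 0 && cpu < NUM_CPUS);
    struct cache_t *c = &s->cache[cpu];
    if (c->state == INVALID) {              /* Load / BusRd */
        c->data = bus_read(s, cpu, 0);
        c->state = SHARED;
    }
    return c->data;                         /* Load / -- in S or M */
}

void cpu_store(struct bus_system *s, int cpu, int value) {
    assert(cpu >= 0 && cpu < NUM_CPUS);
    struct cache_t *c = &s->cache[cpu];
    if (c->state == INVALID) {              /* Store / BusRdX */
        c->data = bus_read(s, cpu, 1);
    } else if (c->state == SHARED) {        /* Store / BusInv (upgrade) */
        for (int i = 0; i < NUM_CPUS; i++)
            if (i != cpu) s->cache[i].state = INVALID;
    }
    c->state = MODIFIED;                    /* Store / -- if already M */
    c->data = value;
}

void cpu_evict(struct bus_system *s, int cpu) {
    assert(cpu >= 0 && cpu < NUM_CPUS);
    struct cache_t *c = &s->cache[cpu];
    if (c->state == MODIFIED) s->mem = c->data;  /* Evict / BusWB */
    c->state = INVALID;                          /* Evict / -- from S */
}
```

Running the same order of operations as the slides (P1 load, P2 load, P2 evict, P2 store 1, P1 load, P1 store 2, P2 store 3, P2 evict) leaves memory at 0, 0, 0, 0, 1, 1, 1, and finally 3, matching the values of A shown on the bus diagrams.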

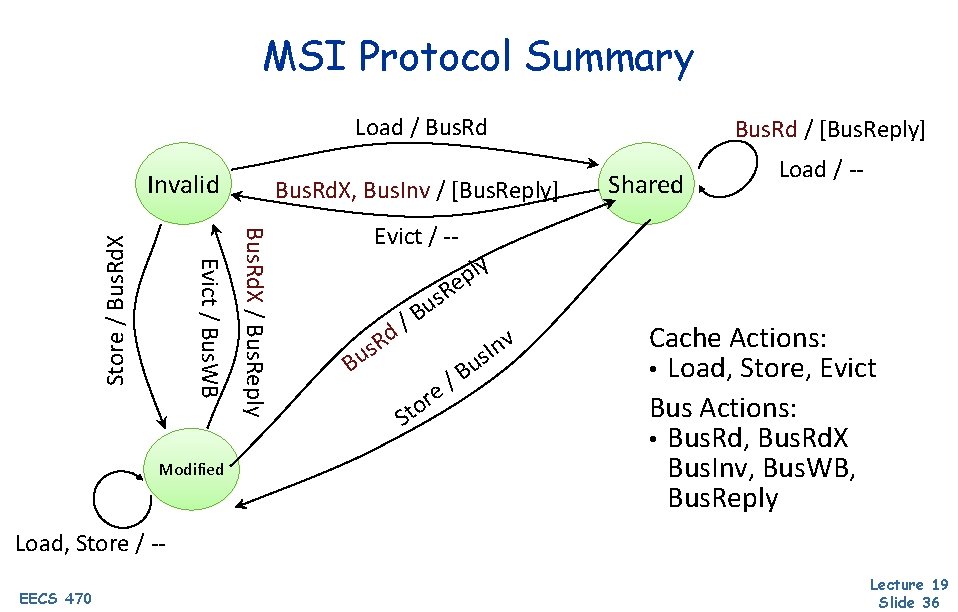

MSI Protocol Summary

State diagram transitions (event / bus message):

- Invalid → Shared: Load / BusRd
- Invalid → Modified: Store / BusRdX
- Shared → Shared: Load / --
- Shared → Shared: BusRd / [BusReply]
- Shared → Modified: Store / BusInv
- Shared → Invalid: Evict / --
- Shared → Invalid: BusRdX, BusInv / [BusReply]
- Modified → Modified: Load, Store / --
- Modified → Shared: BusRd / BusReply
- Modified → Invalid: BusRdX / BusReply
- Modified → Invalid: Evict / BusWB

Cache Actions:

- Load, Store, Evict

Bus Actions:

- BusRd, BusRdX, BusInv, BusWB, BusReply
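The summary diagram amounts to a per-block transition function. Below is a minimal sketch of it in C, assuming that the bracketed `[BusReply]` out of Shared is left to memory and so produces no message from the cache; the names `msi_state`, `msi_event`, `msi_msg`, and `msi_step` are hypothetical.

```c
#include <assert.h>

enum msi_state { MSI_I, MSI_S, MSI_M };
/* Processor-side events, then events snooped from the bus. */
enum msi_event { P_LOAD, P_STORE, P_EVICT, B_RD, B_RDX, B_INV };
enum msi_msg   { M_NONE, M_BUSRD, M_BUSRDX, M_BUSINV, M_BUSWB, M_BUSREPLY };

/* Next state of one cache block plus the bus message this cache issues. */
struct msi_result { enum msi_state next; enum msi_msg msg; };

struct msi_result msi_step(enum msi_state s, enum msi_event e) {
    assert(s == MSI_I || s == MSI_S || s == MSI_M);
    struct msi_result r = { s, M_NONE };   /* default: stay put, stay quiet */
    switch (s) {
    case MSI_I:
        if (e == P_LOAD)  { r.next = MSI_S; r.msg = M_BUSRD; }
        if (e == P_STORE) { r.next = MSI_M; r.msg = M_BUSRDX; }
        break;
    case MSI_S:
        /* Load and snooped BusRd leave the block Shared. */
        if (e == P_STORE) { r.next = MSI_M; r.msg = M_BUSINV; }  /* upgrade */
        if (e == P_EVICT) { r.next = MSI_I; }                    /* clean */
        if (e == B_RDX || e == B_INV) { r.next = MSI_I; }
        break;
    case MSI_M:
        /* Load and Store hit locally with no bus traffic. */
        if (e == P_EVICT) { r.next = MSI_I; r.msg = M_BUSWB; }
        if (e == B_RD)    { r.next = MSI_S; r.msg = M_BUSREPLY; }
        if (e == B_RDX)   { r.next = MSI_I; r.msg = M_BUSREPLY; }
        break;
    }
    return r;
}
```

For instance, `msi_step(MSI_S, P_STORE)` gives Modified with BusInv, and `msi_step(MSI_M, B_RD)` gives Shared with BusReply. Events that the diagram does not draw for a state fall through to the default of no change.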

Next Time

- Continue multicore
  - Memory consistency
- Lingering questions / feedback? I'll include an anonymous form at the end of every lecture: https://bit.ly/3oSr5FD