EECS 470 Lecture 16 Virtual Memory Winter 2021

- Slides: 33

EECS 470 Lecture 16: Virtual Memory, Winter 2021. Jon Beaumont, http://www.eecs.umich.edu/courses/eecs470. Slides developed in part by Profs. Austin, Brehob, Falsafi, Hill, Hoe, Lipasti, Shen, Smith, Sohi, Tyson, and Vijaykumar of Carnegie Mellon University, Purdue University, University of Michigan, and University of Wisconsin. EECS 470 Lecture 18 Slide 1

Administrative
- HW #4 due Friday (4/2)
- Let me know if there are any issues with other HW on Gradescope
- Milestone III next week
  - No submissions needed
  - Should target having simple programs (including memory ops) running correctly
  - Remaining couple of weeks should focus on testing and optimizing

Last Time
- Finished up memory enhancements
  - Caching
  - Prefetching

Today: Virtual Memory
- Why do we need it?
- Evolution: from base and bound through paging
- Page tables and organization
  - Hierarchical
  - Inverted (hash)
- Cache implications

Virtual Memory

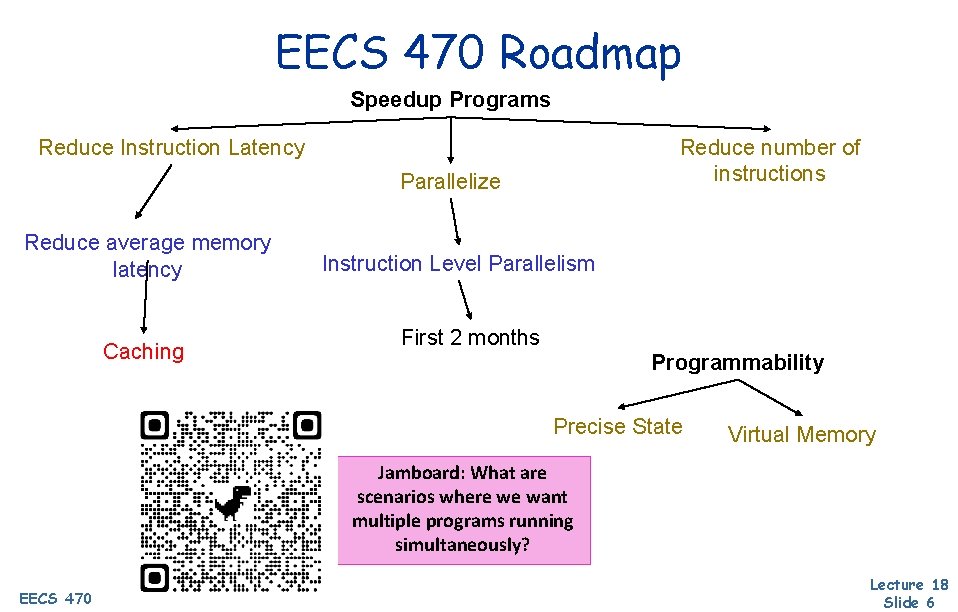

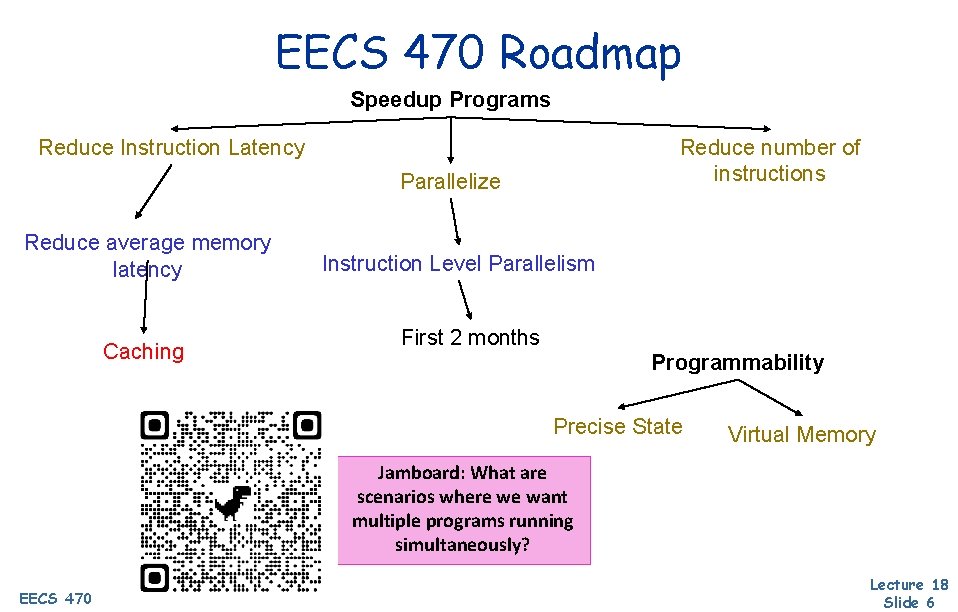

EECS 470 Roadmap
- Speedup programs (first 2 months)
  - Reduce instruction latency
  - Reduce number of instructions
  - Parallelize: instruction-level parallelism
  - Reduce average memory latency: caching
- Programmability
  - Precise state
  - Virtual memory

Jamboard: What are scenarios where we want multiple programs running simultaneously?

Motivation: Multiprogramming
- We like to have multiple processes (program instances) running at the same time
  - Practicality: I/O management, network processing, GUI
  - Performance: it is more power-efficient to do many things slowly than a few things quickly (remember P ∝ V³)
- Different programs might use the same addresses
  - Either statically compile applications to be compatible with one another (inflexible)
  - …or have some way of ensuring the address spaces of different processes are independent, even when they use the same addresses
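
The P ∝ V³ reminder follows from the standard dynamic-power model. A sketch, assuming frequency scales linearly with supply voltage (f ∝ V), perfectly parallel work, and no leakage power; α (activity factor) and C (switched capacitance) are the usual model symbols, not taken from the slide:

```latex
P_{\text{dyn}} = \alpha C V^{2} f, \qquad f \propto V \;\Longrightarrow\; P_{\text{dyn}} \propto V^{3}
\qquad
\frac{P_{\text{2 cores at } V/2}}{P_{\text{1 core at } V}} = 2\left(\tfrac{1}{2}\right)^{3} = \tfrac{1}{4}
```

Two half-speed cores deliver the same total throughput as one full-speed core at roughly a quarter of the dynamic power, which is why running many things slowly pays off.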

Motivation: Demand Paging
- Consider a 32-bit address space
  - 4 GB: not too bad with modern technology
  - Might still be expensive/prohibitive for embedded or otherwise minimal systems
- 48-bit address space?
  - 256 TB… no way
- Use caching principles! Keep data likely to be used in DRAM, and less likely data in slower, cheaper storage (e.g., disk)
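
The sizes above are just 2 raised to the address width, assuming byte-addressable memory and binary units (GB = 2^30 bytes, TB = 2^40 bytes). A quick check:

```python
def addressable_bytes(address_bits: int) -> int:
    """Bytes reachable with an n-bit byte address (assumes byte addressing)."""
    return 1 << address_bits


GiB, TiB = 1 << 30, 1 << 40

print(addressable_bytes(32) // GiB, "GiB")  # 4 GiB
print(addressable_bytes(48) // TiB, "TiB")  # 256 TiB
```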

Motivation: Demand Paging
- But it is a bit different than traditional caching
  - Locality is a lot harder to extract past the L1-L3 caches
  - Penalties of going to disk are much higher (page miss: millions of cycles)
- So, let the OS figure out data placement
- Need a fast way of figuring out where data is on a cycle-to-cycle basis

Motivation: Multiprogramming and Demand Paging
- 2 independent sets of problems, 1 solution: virtual memory
- "Virtual" means "using indirection"
- Map the "virtual" address (VA) specified in the program to a "physical" address (PA), which may be in either DRAM or disk

Evolution of Protection Mechanisms
Earliest machines had no concept of protection and address translation:
- no need: single process, single user
  - automatically "private and uniform" (but not very large)
- programs operated on physical addresses directly
  - no multitasking protection, no dynamic relocation (at least not very easily)

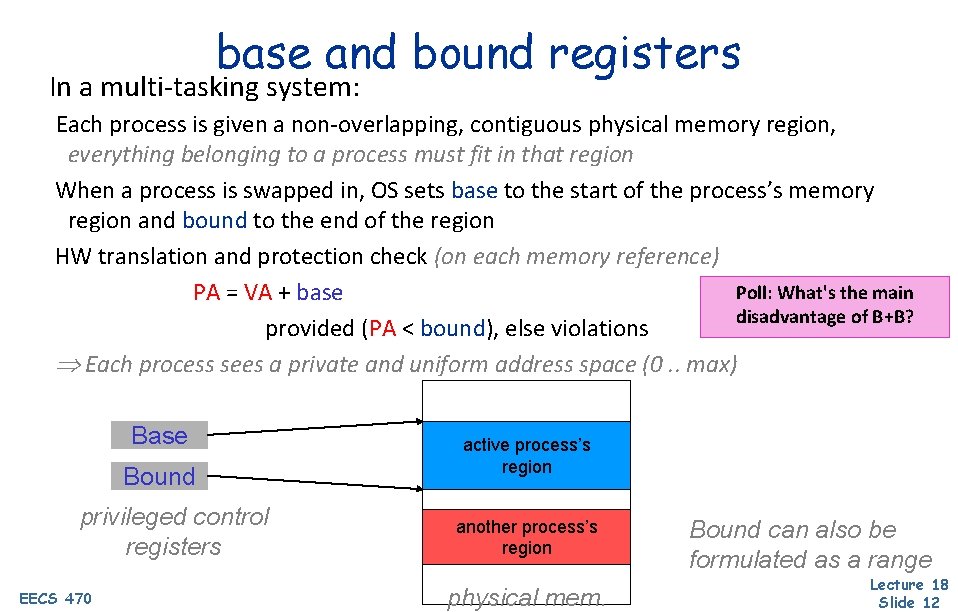

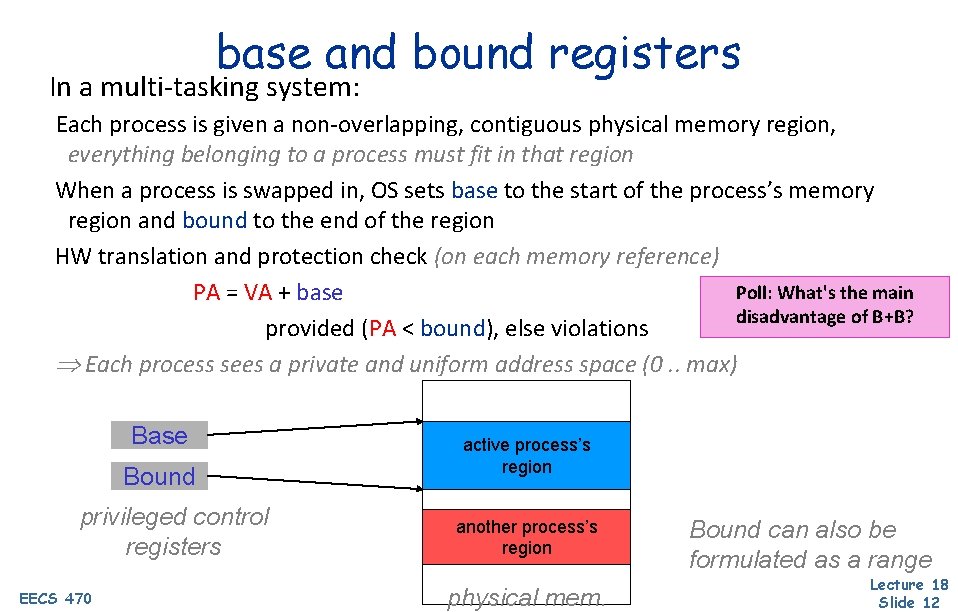

Base and Bound Registers
In a multi-tasking system:
- Each process is given a non-overlapping, contiguous physical memory region; everything belonging to a process must fit in that region
- When a process is swapped in, the OS sets base to the start of the process's memory region and bound to the end of the region
- HW translation and protection check (on each memory reference): PA = VA + base, provided (PA < bound), else violation
- Each process sees a private and uniform address space (0..max)
- Base and bound are privileged control registers
- Bound can also be formulated as a range

[Figure: physical memory containing the active process's region, delimited by Base and Bound, next to another process's region]

Poll: What's the main disadvantage of B+B?
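
A minimal sketch of the per-reference check described above, assuming bound is the exclusive end of the region (the slide's PA < bound form); `bb_translate` and `ProtectionViolation` are hypothetical names standing in for hardware:

```python
class ProtectionViolation(Exception):
    """Raised when a translated address falls outside the process's region."""


def bb_translate(va: int, base: int, bound: int) -> int:
    """Base-and-bound translation, checked on every memory reference.

    base and bound stand in for the privileged registers the OS loads when
    the process is swapped in; bound is the exclusive end of the region.
    """
    pa = va + base                 # relocation: PA = VA + base
    if va < 0 or pa >= bound:      # protection: allowed only if PA < bound
        raise ProtectionViolation(f"VA {va:#x} maps outside [{base:#x}, {bound:#x})")
    return pa


# A process whose region is physical [0x4000, 0x8000) sees VAs 0..0x3fff.
print(hex(bb_translate(0x10, base=0x4000, bound=0x8000)))  # 0x4010
```

Relocation is a single add, which is cheap, but the whole process must fit in one contiguous physical region.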

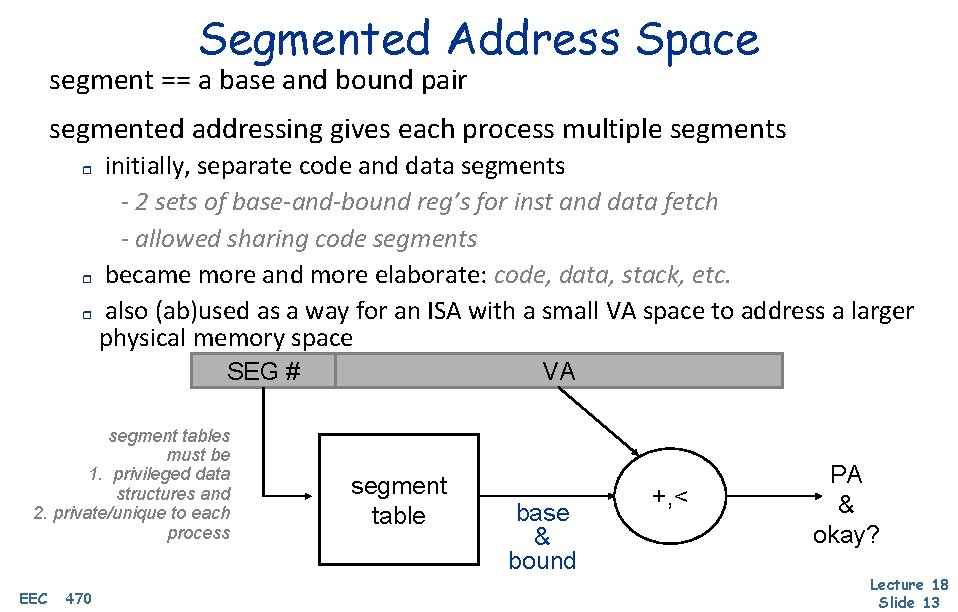

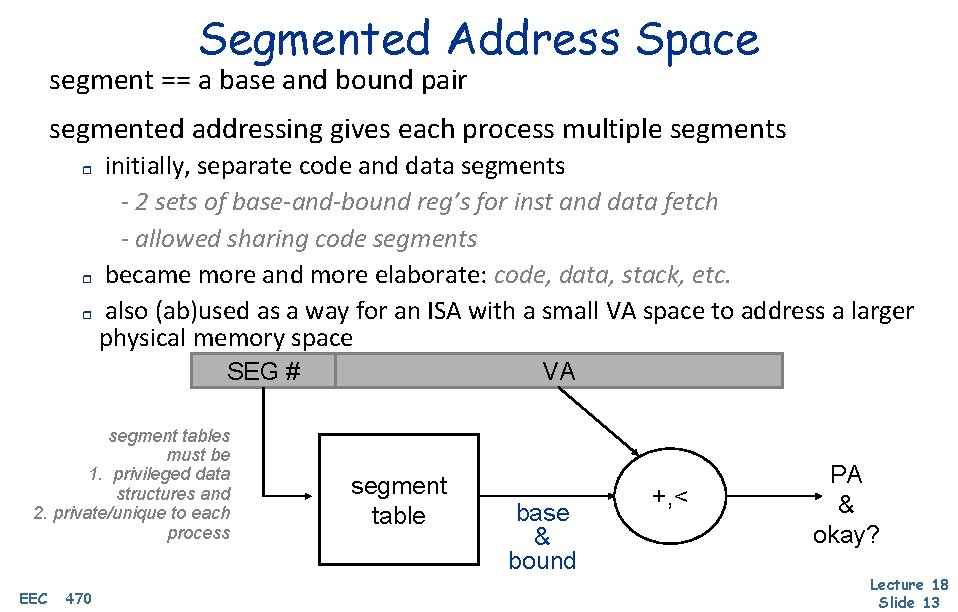

Segmented Address Space
- segment == a base and bound pair
- segmented addressing gives each process multiple segments
  - initially, separate code and data segments
    - 2 sets of base-and-bound reg's for inst and data fetch
    - allowed sharing code segments
  - became more and more elaborate: code, data, stack, etc.
  - also (ab)used as a way for an ISA with a small VA space to address a larger physical memory space
- segment tables must be 1. privileged data structures and 2. private/unique to each process

[Figure: the VA's SEG # selects a segment table entry (base & bound); an adder (+) forms the PA and a comparator (<) produces the okay? signal]
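
A minimal sketch of segmented translation, assuming a 16-bit offset field below the segment number and bound expressed as the segment's length (the "range" formulation); the table contents and names are hypothetical:

```python
from typing import Dict, Tuple


class SegmentationFault(Exception):
    """Missing segment, or offset beyond the segment's bound."""


# Hypothetical per-process segment table: segment # -> (base, bound),
# where bound is the segment length in bytes.
SegmentTable = Dict[int, Tuple[int, int]]


def seg_translate(va: int, table: SegmentTable, offset_bits: int = 16) -> int:
    seg = va >> offset_bits                   # SEG # field of the VA
    offset = va & ((1 << offset_bits) - 1)    # offset within the segment
    if seg not in table:
        raise SegmentationFault(f"no segment {seg}")
    base, bound = table[seg]
    if offset >= bound:                       # the "<" check: okay only if offset < bound
        raise SegmentationFault(f"offset {offset:#x} beyond bound of segment {seg}")
    return base + offset                      # the "+" adder produces the PA


table = {0: (0x10000, 0x2000),   # e.g., code
         1: (0x40000, 0x1000)}   # e.g., data
print(hex(seg_translate(0x10123, table)))  # segment 1, offset 0x123 -> 0x40123
```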

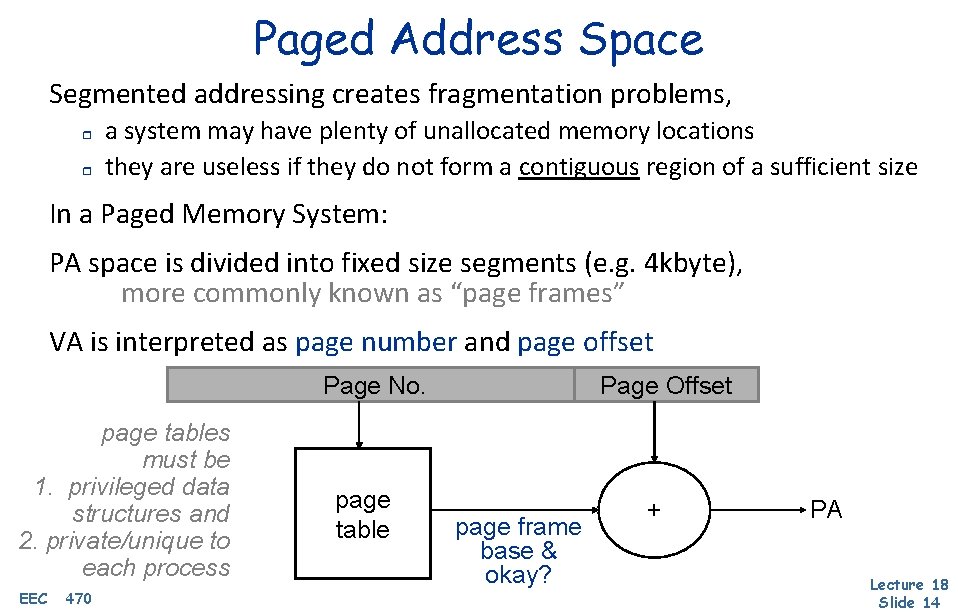

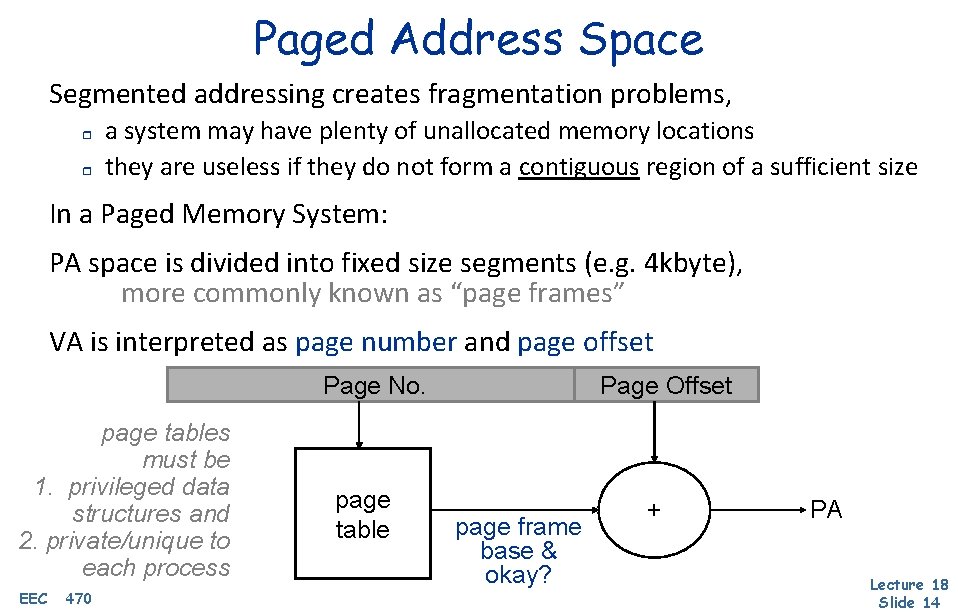

Paged Address Space
Segmented addressing creates fragmentation problems:
- a system may have plenty of unallocated memory locations
- they are useless if they do not form a contiguous region of a sufficient size

In a paged memory system:
- PA space is divided into fixed-size segments (e.g., 4 KB), more commonly known as "page frames"
- VA is interpreted as page number and page offset
- page tables must be 1. privileged data structures and 2. private/unique to each process

[Figure: the VA's Page No. indexes the page table to get the page frame base & okay?; frame base + Page Offset gives the PA]
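
A minimal sketch of single-level paging with 4 KB pages, assuming the page table is a mapping from virtual page number to physical frame number (a dict here, purely for illustration):

```python
from typing import Dict, Tuple

PAGE_OFFSET_BITS = 12                  # 4 KB pages, as in the slide's example
PAGE_SIZE = 1 << PAGE_OFFSET_BITS


class PageFault(Exception):
    """The page table has no valid mapping for this virtual page."""


def split_va(va: int) -> Tuple[int, int]:
    """Interpret a VA as (virtual page number, page offset)."""
    return va >> PAGE_OFFSET_BITS, va & (PAGE_SIZE - 1)


def page_translate(va: int, page_table: Dict[int, int]) -> int:
    vpn, offset = split_va(va)
    if vpn not in page_table:
        raise PageFault(f"VPN {vpn:#x} is not mapped")
    frame_base = page_table[vpn] << PAGE_OFFSET_BITS   # page frame base
    return frame_base + offset                        # PA = frame base + offset


# Contiguous virtual pages 0 and 1 live in non-contiguous frames 7 and 3.
page_table = {0x0: 0x7, 0x1: 0x3}
print(hex(page_translate(0x1ABC, page_table)))  # 0x3abc
```

Because any free frame can hold any virtual page, free memory never has to be contiguous, which is what removes the fragmentation problem above.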

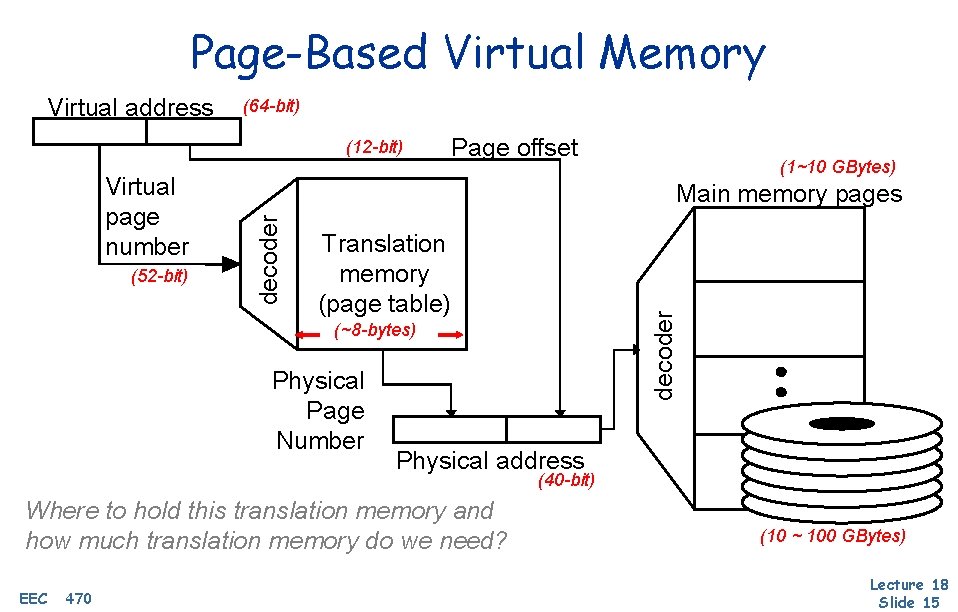

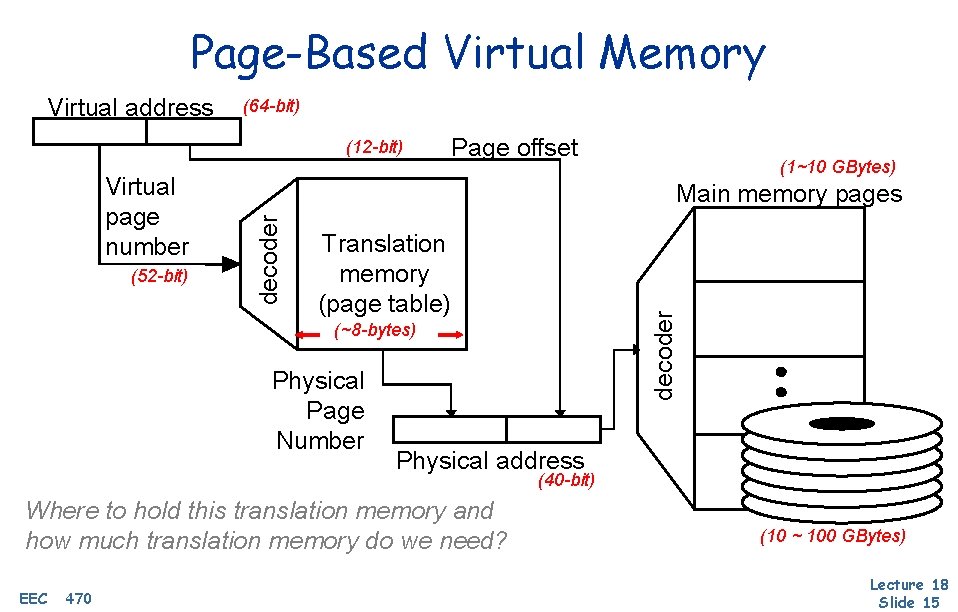

Page-Based Virtual Memory

[Figure: a 64-bit virtual address (~8 bytes) splits into a 52-bit virtual page number and a 12-bit page offset. The VPN is decoded into the translation memory (page table), which supplies the physical page number; PPN + page offset form a 40-bit physical address that is decoded into main memory pages (1~10 GBytes).]

Where to hold this translation memory, and how much translation memory do we need? (10 ~ 100 GBytes)
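
To size the translation memory, a back-of-the-envelope sketch: a single flat table needs one entry per virtual page. The 8-byte PTE for the 64-bit case and the 4-byte PTE for the 32-bit comparison are assumptions, not figures from the slide:

```python
def flat_page_table_bytes(va_bits: int, page_offset_bits: int, pte_bytes: int) -> int:
    """Size of one flat, contiguous table holding a PTE for every virtual page."""
    num_virtual_pages = 1 << (va_bits - page_offset_bits)
    return num_virtual_pages * pte_bytes


MiB, PiB = 1 << 20, 1 << 50

# The slide's 64-bit VA with 4 KB pages (52-bit VPN), assuming 8-byte PTEs:
print(flat_page_table_bytes(64, 12, 8) // PiB, "PiB per process")  # 32 PiB per process
# For comparison, a 32-bit VA with 4 KB pages and 4-byte PTEs:
print(flat_page_table_bytes(32, 12, 4) // MiB, "MiB per process")  # 4 MiB per process
```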

Page table organization

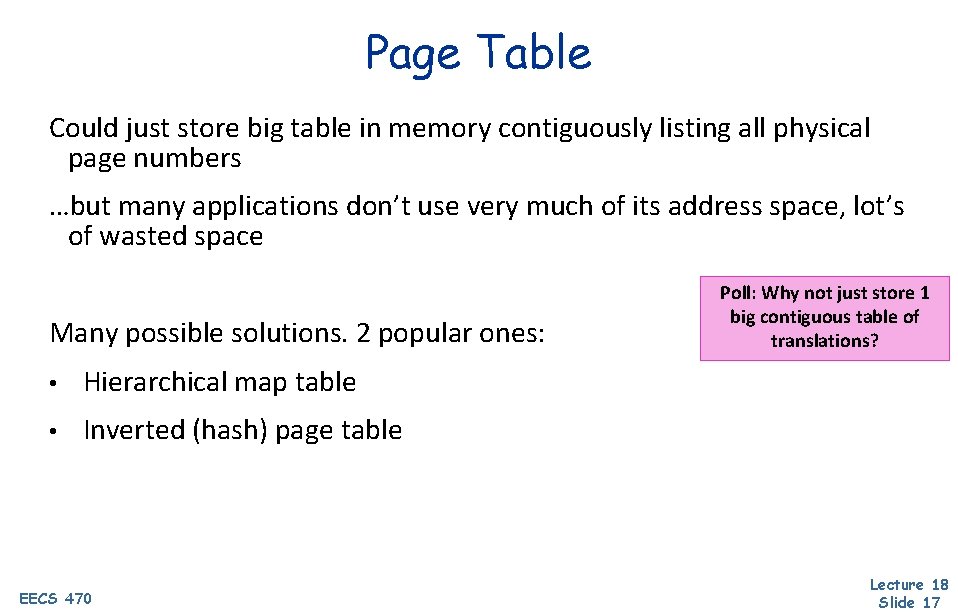

Page Table
- Could just store one big table in memory, contiguously listing all physical page numbers
- …but many applications don't use very much of their address space: lots of wasted space
- Many possible solutions; 2 popular ones:
  - Hierarchical map table
  - Inverted (hash) page table

Poll: Why not just store 1 big contiguous table of translations?

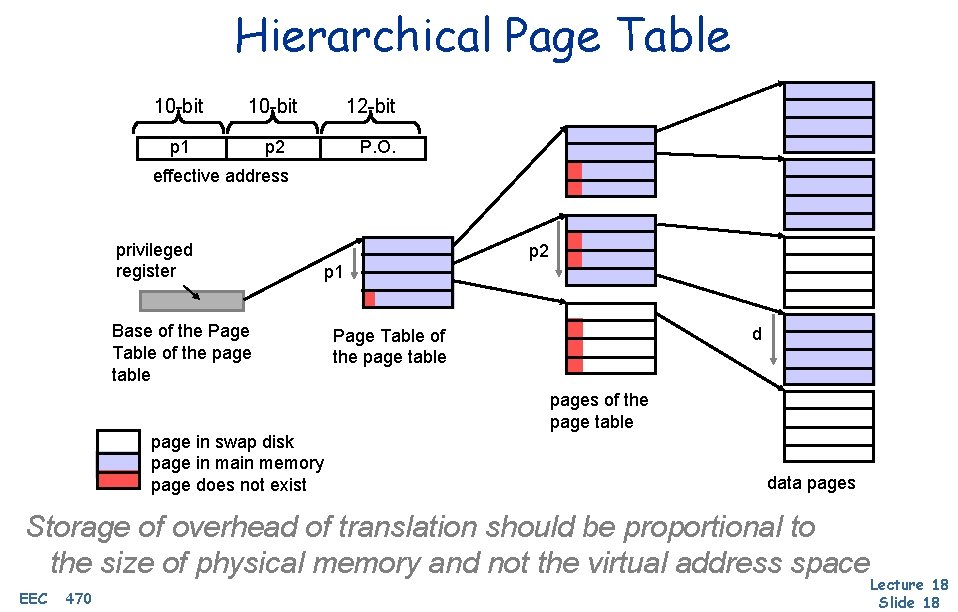

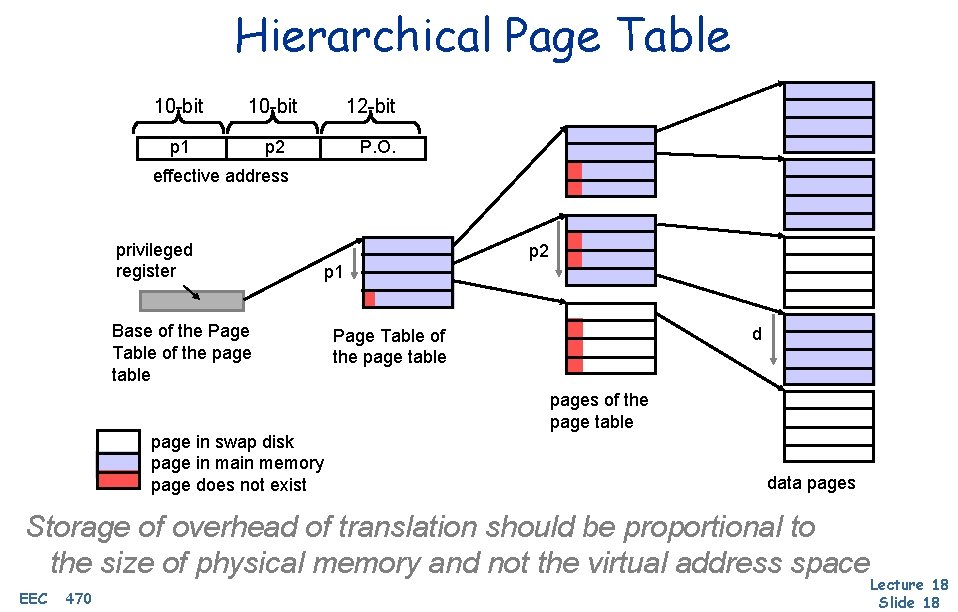

Hierarchical Page Table

[Figure: the effective address splits into p1 (10 bits), p2 (10 bits), and a 12-bit page offset d. A privileged register holds the base of the page table of the page table; p1 selects a page of the page table, and p2 selects the data page within it. Legend: page in main memory, page in swap disk, page does not exist.]

Storage overhead of translation should be proportional to the size of physical memory, not the virtual address space.
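
A minimal sketch of the two-level walk with the 10/10/12 split shown, assuming dicts stand in for page-table pages so that missing keys model "page does not exist" (a real walker would also handle pages swapped out to disk). `root` plays the role of the page table of the page table:

```python
from typing import Dict

P1_BITS, P2_BITS, OFFSET_BITS = 10, 10, 12     # 32-bit VA split from the slide


class PageFault(Exception):
    """No page-table page or no mapping for this address."""


def two_level_translate(va: int, root: Dict[int, Dict[int, int]]) -> int:
    p1 = (va >> (P2_BITS + OFFSET_BITS)) & ((1 << P1_BITS) - 1)
    p2 = (va >> OFFSET_BITS) & ((1 << P2_BITS) - 1)
    d = va & ((1 << OFFSET_BITS) - 1)
    second_level = root.get(p1)                # 1st memory access: root table
    if second_level is None:
        raise PageFault(f"no page-table page for p1={p1:#x}")
    frame = second_level.get(p2)               # 2nd memory access: page of the page table
    if frame is None:
        raise PageFault(f"no mapping for p1={p1:#x}, p2={p2:#x}")
    return (frame << OFFSET_BITS) | d


# Only two second-level pages exist: one near the bottom of the address
# space (e.g., code/data) and one at the very top (e.g., stack).
root = {0x000: {0x001: 0x55},
        0x3FF: {0x3FF: 0x09}}
print(hex(two_level_translate(0x1234, root)))  # 0x55234
```

Unused regions of the address space cost only an absent root entry, so storage tracks the pages actually in use rather than the full 4 GB virtual space.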

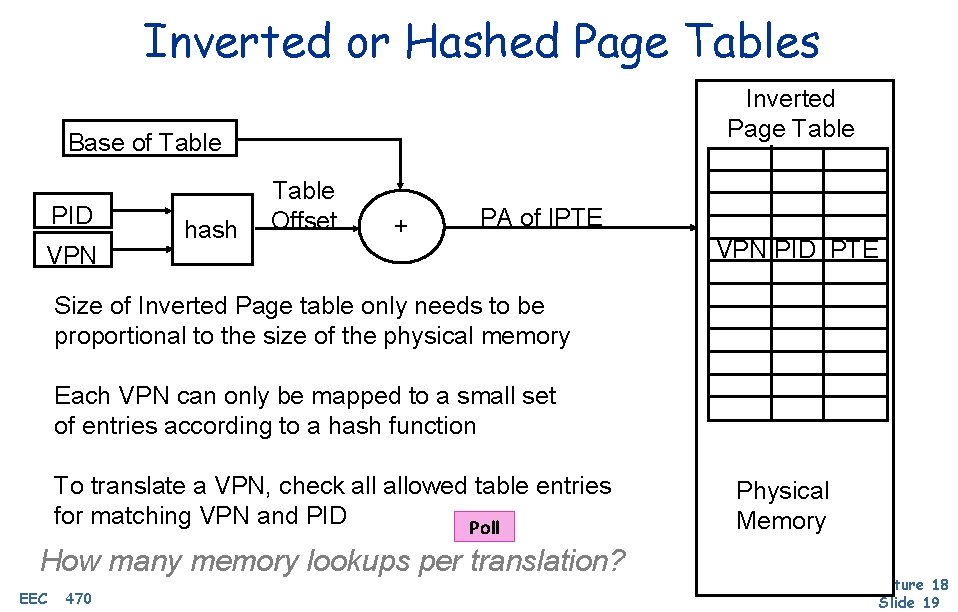

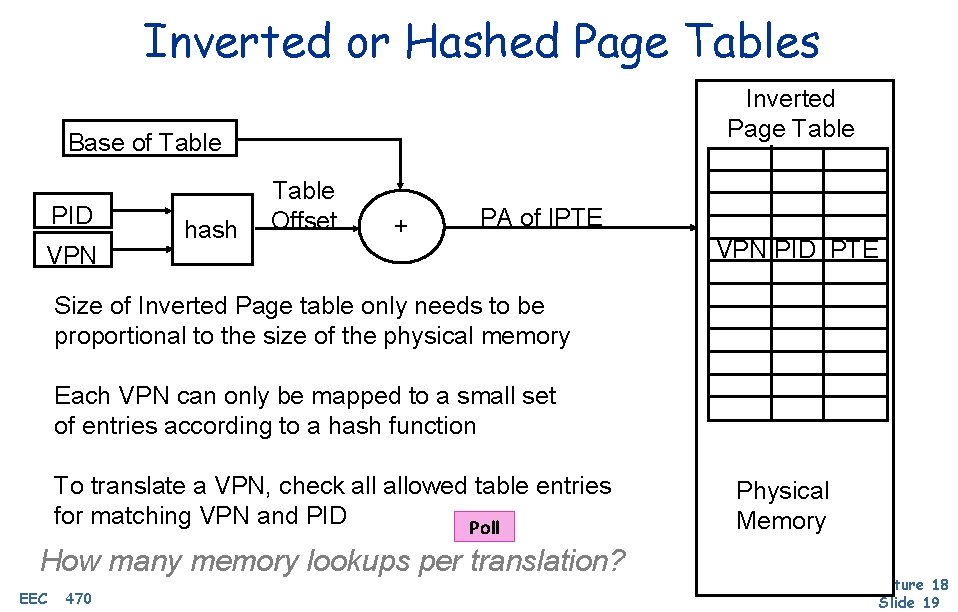

Inverted or Hashed Page Tables

[Figure: PID and VPN are hashed into a table offset; the base of the inverted page table + offset gives the PA of the inverted page table entry (IPTE), which holds VPN, PID, and PTE and resides in physical memory]

- The size of the inverted page table only needs to be proportional to the size of physical memory
- Each VPN can only be mapped to a small set of entries according to a hash function
- To translate a VPN, check the allowed table entries for a matching VPN and PID

Poll: How many memory lookups per translation?
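
A minimal sketch of an inverted table with one entry per physical frame. Assumptions not taken from the slide: the hash is Python's `hash()` of `(pid, vpn)`, the allowed set is `ways` consecutive entries starting at the hashed slot, and an entry's index doubles as the physical frame number; the class and method names are hypothetical:

```python
from typing import List, Optional, Tuple


class InvertedPageTable:
    """One entry per physical frame; entry i describes frame i."""

    def __init__(self, num_frames: int, ways: int = 4):
        self.entries: List[Optional[Tuple[int, int]]] = [None] * num_frames  # (pid, vpn)
        self.ways = ways

    def _candidates(self, pid: int, vpn: int) -> List[int]:
        """The small set of entries this (pid, vpn) is allowed to occupy."""
        start = hash((pid, vpn)) % len(self.entries)
        return [(start + i) % len(self.entries) for i in range(self.ways)]

    def map(self, pid: int, vpn: int) -> int:
        """OS side: place the page in a free allowed entry; return its frame number."""
        for slot in self._candidates(pid, vpn):
            if self.entries[slot] is None:
                self.entries[slot] = (pid, vpn)
                return slot
        raise MemoryError("all allowed entries are taken; the OS must evict one")

    def lookup(self, pid: int, vpn: int) -> Optional[int]:
        """Check each allowed entry for a matching PID and VPN."""
        for slot in self._candidates(pid, vpn):   # each probe is a memory access in HW
            if self.entries[slot] == (pid, vpn):
                return slot                       # frame number
        return None                               # not resident: page fault


ipt = InvertedPageTable(num_frames=16)
frame = ipt.map(pid=1, vpn=0x42)
print(ipt.lookup(1, 0x42) == frame, ipt.lookup(2, 0x42))  # same VPN, other PID misses
```

In this sketch a translation costs between 1 and `ways` table probes, depending on where in its allowed set the entry landed.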

Virtual-to-Physical Translation

Translation Look-aside Buffer (TLB)
- Essentially a cache of recent address translations
  - avoids going to the page table on every reference
- indexed by the lower bits of the VPN (virtual page #)
- tag = unused bits of VPN + process ID
- data = a page-table entry, i.e., PPN (physical page #) and access permission
- status = valid, dirty
- the usual cache design choices (placement, replacement policy, multi-level, etc.) apply here too

[Figure: the virtual address's VPN splits into tag and index; the indexed TLB entry's tag is compared (=) and supplies the physical page no., which is combined with the page offset to form the physical address]

Poll: What should be the relative sizes of ITLB and I-cache?
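
A minimal set-associative TLB sketch following the bullets above: index from the low VPN bits, tag from the remaining VPN bits plus the process ID, data as (PPN, permissions). LRU replacement is an assumption, and a present entry simply counts as valid (no dirty bit is modeled):

```python
from collections import OrderedDict
from typing import Optional, Tuple


class TLB:
    def __init__(self, num_sets: int, ways: int):
        assert num_sets > 0 and num_sets & (num_sets - 1) == 0, "num_sets must be a power of two"
        self.index_bits = num_sets.bit_length() - 1
        self.ways = ways
        self.sets = [OrderedDict() for _ in range(num_sets)]   # per set: tag -> (ppn, perms)

    def _split(self, vpn: int, pid: int):
        index = vpn & ((1 << self.index_bits) - 1)
        tag = (vpn >> self.index_bits, pid)    # unused VPN bits + process ID
        return index, tag

    def lookup(self, vpn: int, pid: int) -> Optional[Tuple[int, str]]:
        index, tag = self._split(vpn, pid)
        entry = self.sets[index].get(tag)
        if entry is not None:
            self.sets[index].move_to_end(tag)  # mark as most recently used
        return entry                           # None means TLB miss

    def insert(self, vpn: int, pid: int, ppn: int, perms: str) -> None:
        index, tag = self._split(vpn, pid)
        entries = self.sets[index]
        if tag not in entries and len(entries) >= self.ways:
            entries.popitem(last=False)        # evict the least recently used entry
        entries[tag] = (ppn, perms)
        entries.move_to_end(tag)


tlb = TLB(num_sets=4, ways=2)
tlb.insert(vpn=0x5, pid=1, ppn=0x80, perms="rw")
print(tlb.lookup(0x5, 1), tlb.lookup(0x5, 2))  # hit for PID 1, miss for PID 2
```

Including the PID in the tag lets entries from several processes coexist, so the TLB need not be flushed on every context switch.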

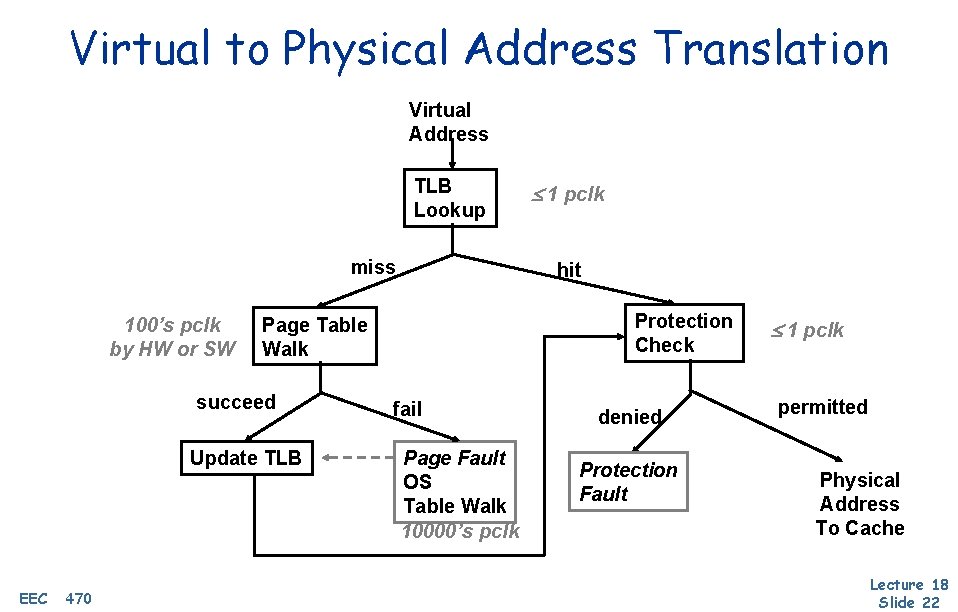

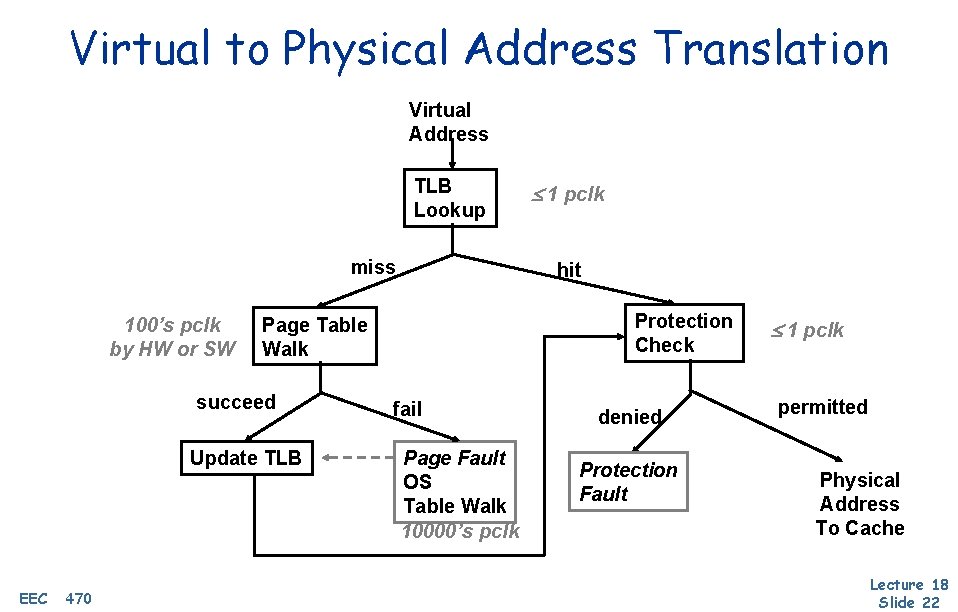

Virtual to Physical Address Translation (flowchart)
- Virtual Address → TLB Lookup (~1 pclk)
  - hit → Protection Check (~1 pclk)
    - permitted → Physical Address → to cache
    - denied → Protection Fault
  - miss → Page Table Walk (100's pclk, by HW or SW)
    - succeed → Update TLB
    - fail → Page Fault → OS Table Walk (10000's pclk)
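
The flowchart above can be sketched as one function, assuming a single process, 4 KB pages, and dicts standing in for the TLB and page table; permissions are a string of single-letter access types ("r", "w", "x"), and all names are hypothetical:

```python
from typing import Dict, Tuple

PAGE_OFFSET_BITS = 12
Entry = Tuple[int, str]    # (PPN, permissions), e.g. (0x20, "rw")


class PageFault(Exception):
    """Page table walk failed: the OS must handle it (10000's of cycles)."""


class ProtectionFault(Exception):
    """The access type is not permitted by the page's permissions."""


def translate(va: int, access: str, tlb: Dict[int, Entry],
              page_table: Dict[int, Entry]) -> int:
    vpn = va >> PAGE_OFFSET_BITS
    offset = va & ((1 << PAGE_OFFSET_BITS) - 1)
    entry = tlb.get(vpn)                     # TLB lookup
    if entry is None:                        # miss: walk the page table (HW or SW)
        entry = page_table.get(vpn)
        if entry is None:
            raise PageFault(f"VPN {vpn:#x}")
        tlb[vpn] = entry                     # update TLB, then proceed as on a hit
    ppn, perms = entry
    if access not in perms:                  # protection check
        raise ProtectionFault(f"{access!r} not allowed on VPN {vpn:#x}")
    return (ppn << PAGE_OFFSET_BITS) | offset    # physical address, sent to the cache


tlb: Dict[int, Entry] = {}
page_table = {0x1: (0x20, "r")}
print(hex(translate(0x1ABC, "r", tlb, page_table)))  # 0x20abc (miss, then TLB filled)
```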

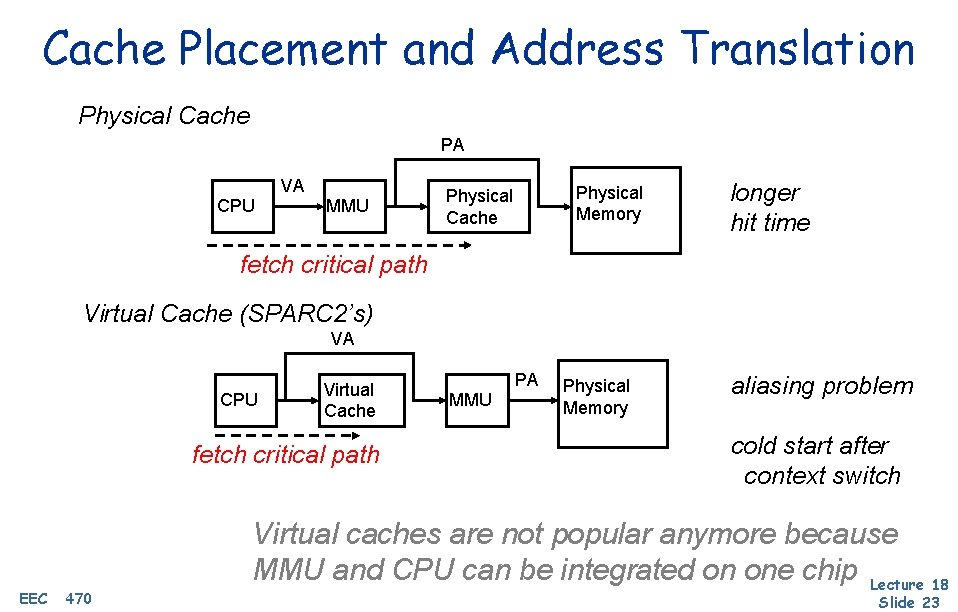

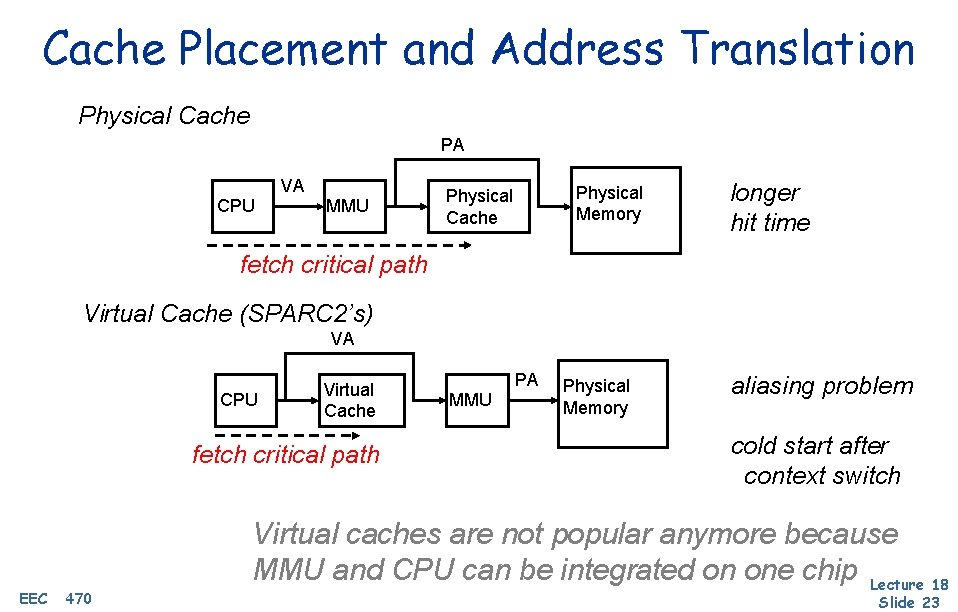

Cache Placement and Address Translation
- Physical cache: CPU → VA → MMU → PA → physical cache → physical memory
  - longer hit time: the MMU sits on the fetch critical path
- Virtual cache (SPARC 2's): CPU → VA → virtual cache → MMU → PA → physical memory
  - only the cache is on the fetch critical path
  - problems: aliasing, cold start after context switch
- Virtual caches are not popular anymore because the MMU and CPU can be integrated on one chip

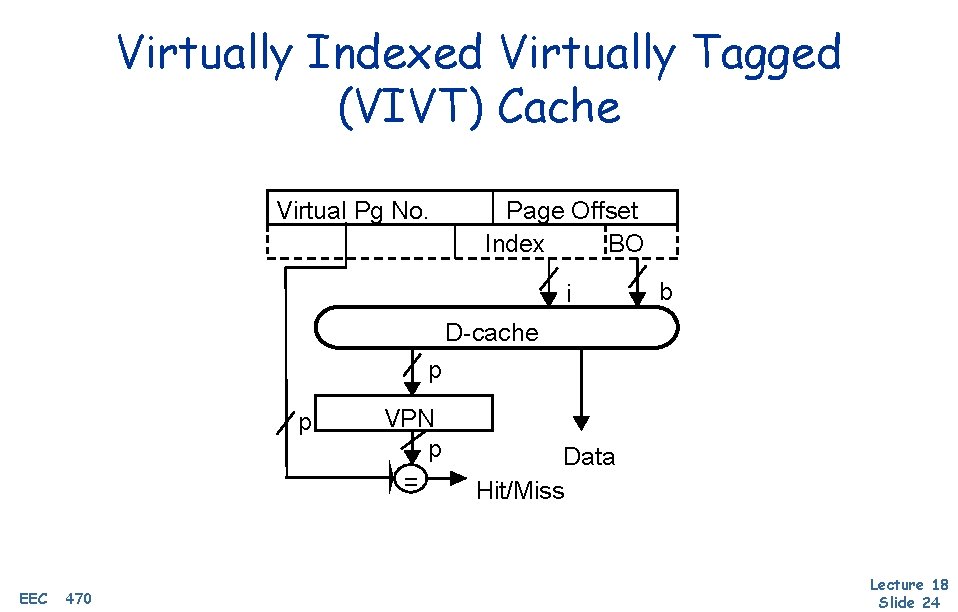

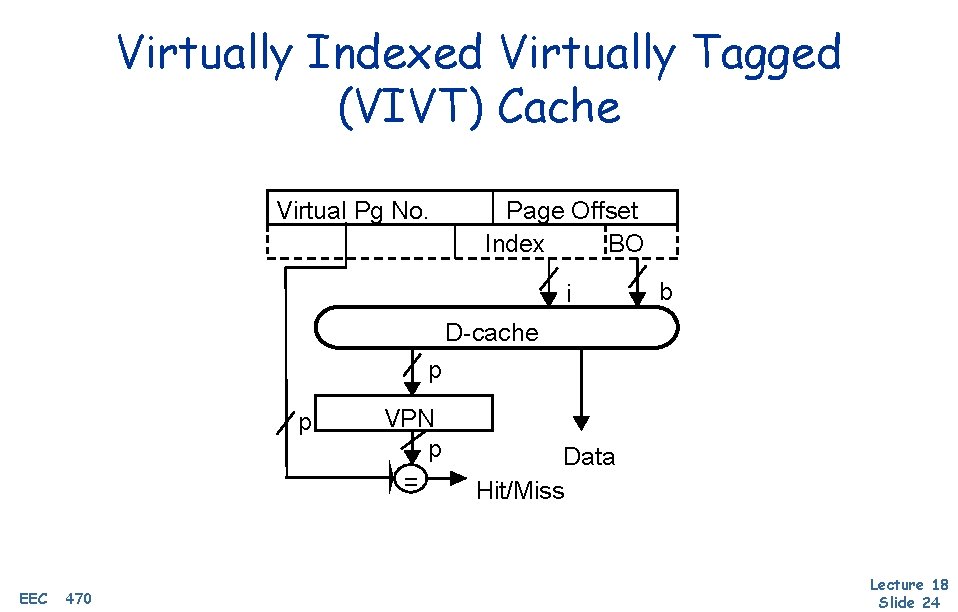

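Virtually Indexed Virtually Tagged (VIVT) Cache

[Figure: the virtual address (virtual page no. + page offset) directly supplies the index (i bits) and block offset (BO, b bits) to the D-cache; the stored p-bit tag is compared (=) with the VPN to produce Hit/Miss, and the D-cache returns Data]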

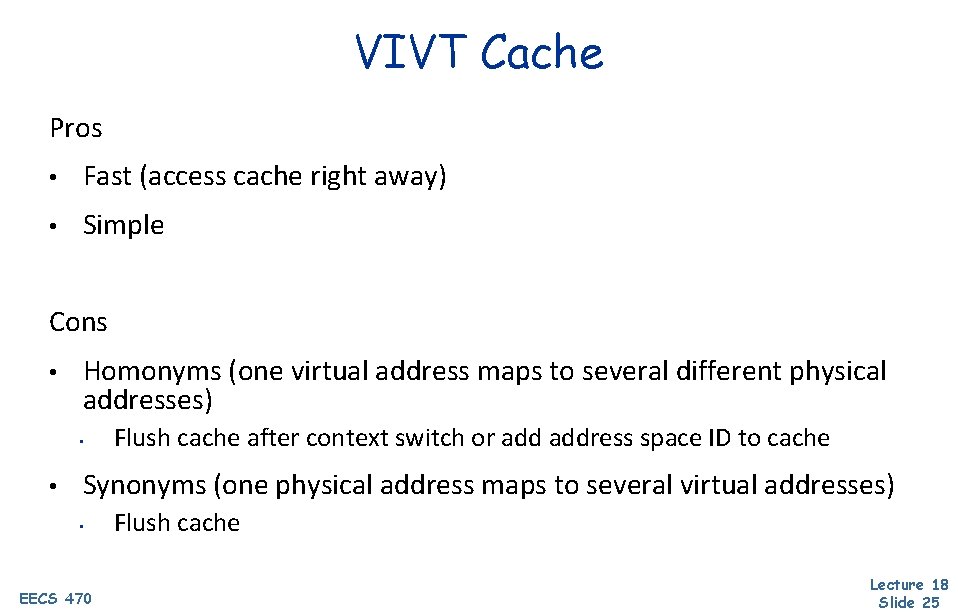

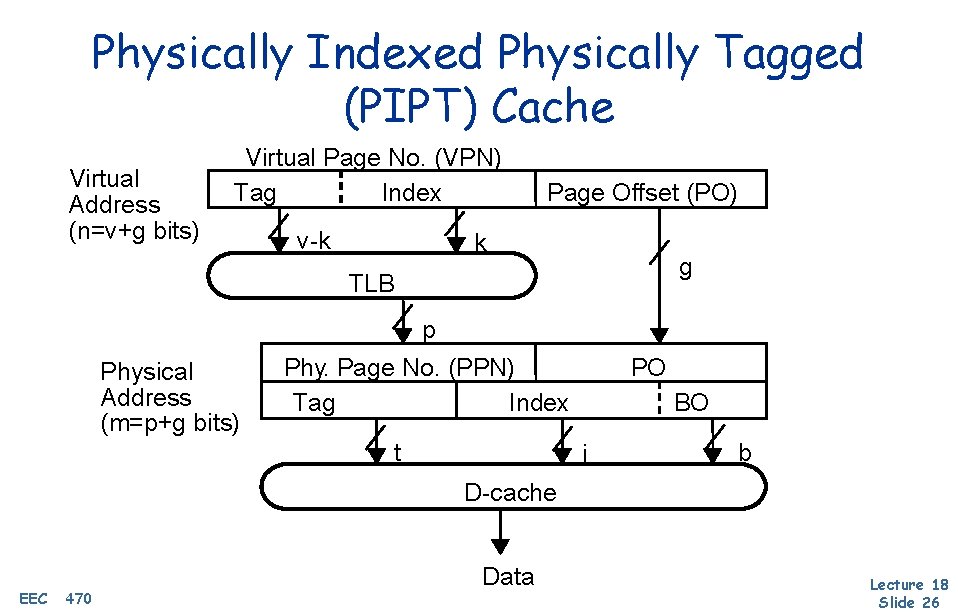

VIVT Cache
Pros
- Fast (access cache right away)
- Simple

Cons
- Homonyms (one virtual address maps to several different physical addresses)
  - Flush cache after a context switch, or add an address space ID to the cache
- Synonyms (one physical address maps to several virtual addresses)
  - Flush cache
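
A toy sketch of the address-space-ID fix for homonyms, assuming a fully associative, unbounded cache with 64-byte blocks purely for brevity; `VIVTCache` is a hypothetical name:

```python
from typing import Dict, Optional, Tuple


class VIVTCache:
    """Lookups use only the virtual address plus an ASID; no translation needed."""

    def __init__(self, block_bits: int = 6):   # 64-byte blocks (assumption)
        self.block_bits = block_bits
        self.lines: Dict[Tuple[int, int], bytes] = {}

    def _key(self, asid: int, va: int) -> Tuple[int, int]:
        return asid, va >> self.block_bits     # tag = ASID + virtual block address

    def fill(self, asid: int, va: int, data: bytes) -> None:
        self.lines[self._key(asid, va)] = data

    def lookup(self, asid: int, va: int) -> Optional[bytes]:
        return self.lines.get(self._key(asid, va))


cache = VIVTCache()
cache.fill(asid=1, va=0x1000, data=b"process 1")
cache.fill(asid=2, va=0x1000, data=b"process 2")   # homonym: same VA, different process
print(cache.lookup(1, 0x1000), cache.lookup(2, 0x1000))
```

The ASID keeps homonyms apart without a flush, but it does nothing for synonyms: two different VAs mapping to one PA still occupy two separate lines, so a write through one leaves the other stale.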

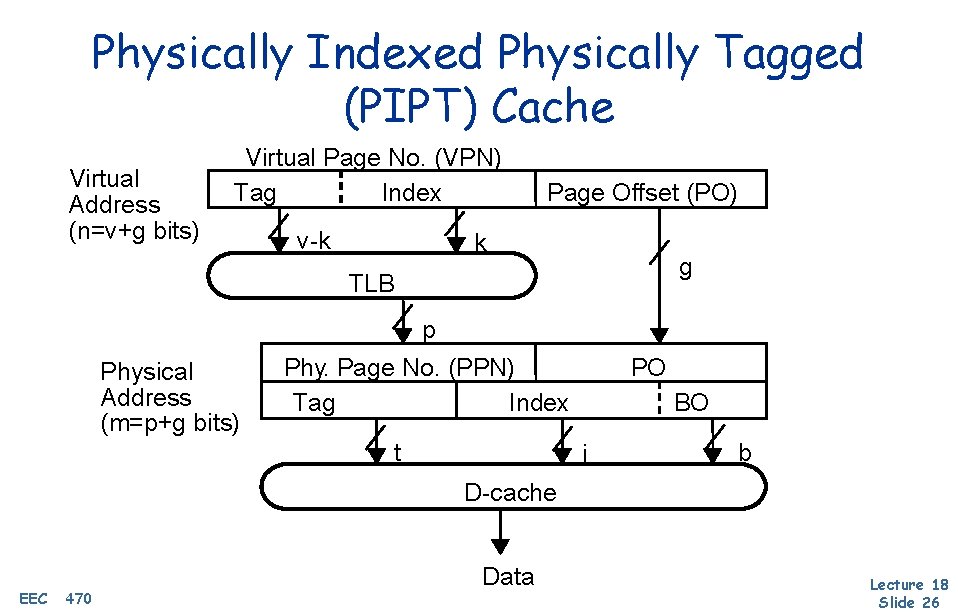

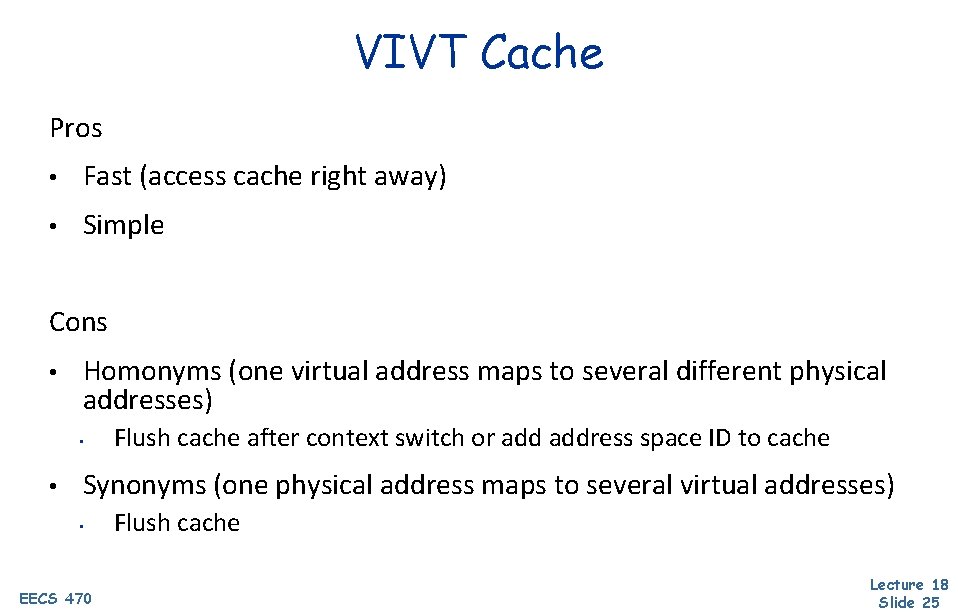

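Physically Indexed Physically Tagged (PIPT) Cache

[Figure: the virtual address (n = v + g bits) splits into the virtual page no. (VPN: tag v−k bits, TLB index k bits) and the page offset (PO, g bits). The TLB translates the VPN into the physical page no. (PPN, p bits); the physical address (m = p + g bits) then splits into tag (t bits), index (i bits), and block offset (BO, b bits) for the D-cache, which returns Data.]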

PIPT Cache
Pros
- Simple
- No aliasing (homonyms or synonyms)

Cons
- Slow (address translation is added to the critical path)

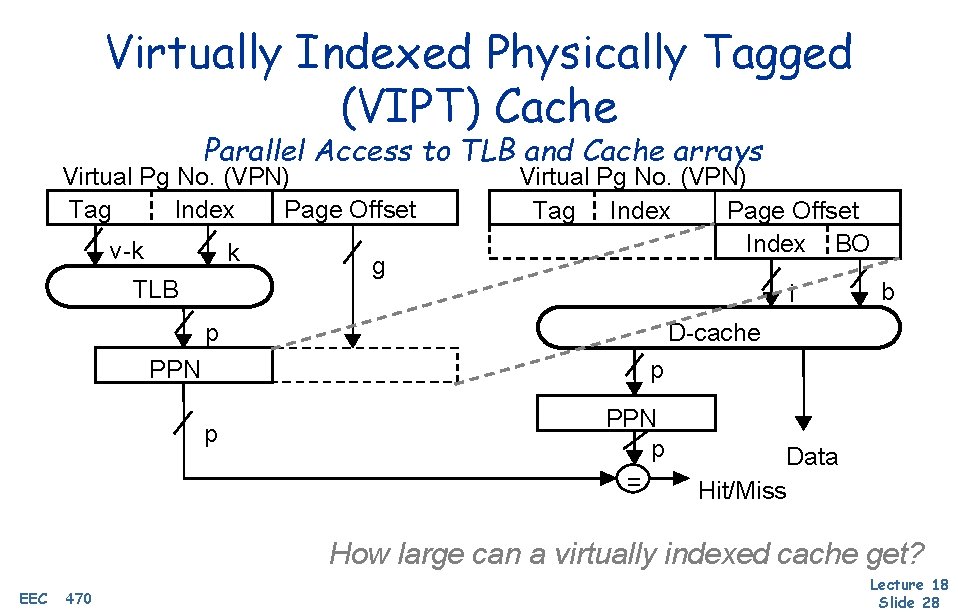

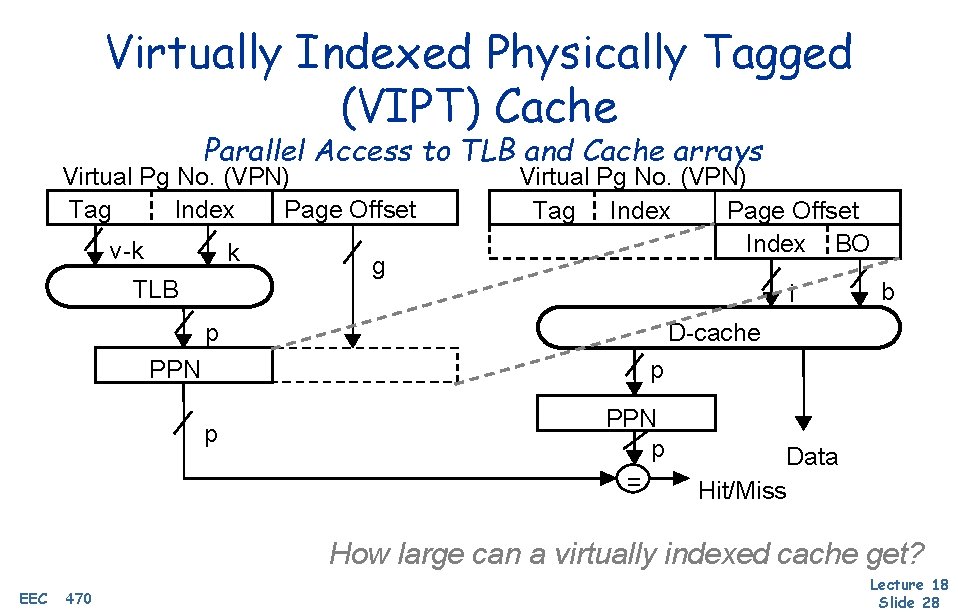

Virtually Indexed Physically Tagged (VIPT) Cache
Parallel access to TLB and cache arrays

[Figure: the VPN (tag v−k bits, index k bits) goes to the TLB while the page offset's index (i bits) and block offset (BO, b bits) index the D-cache in parallel; the PPN from the TLB (p bits) is compared (=) with the cache tag to produce Hit/Miss, and the D-cache returns Data]

How large can a virtually indexed cache get?

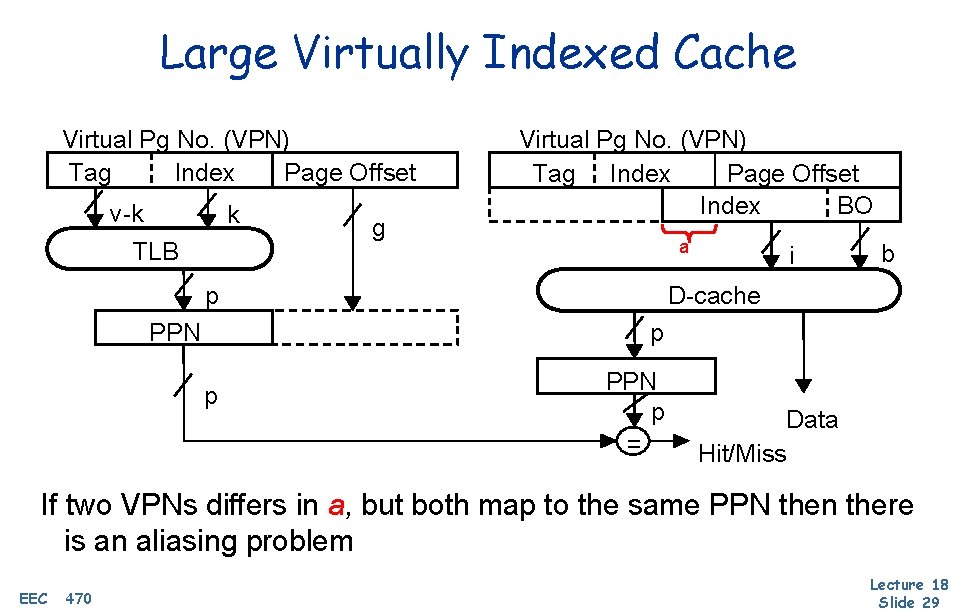

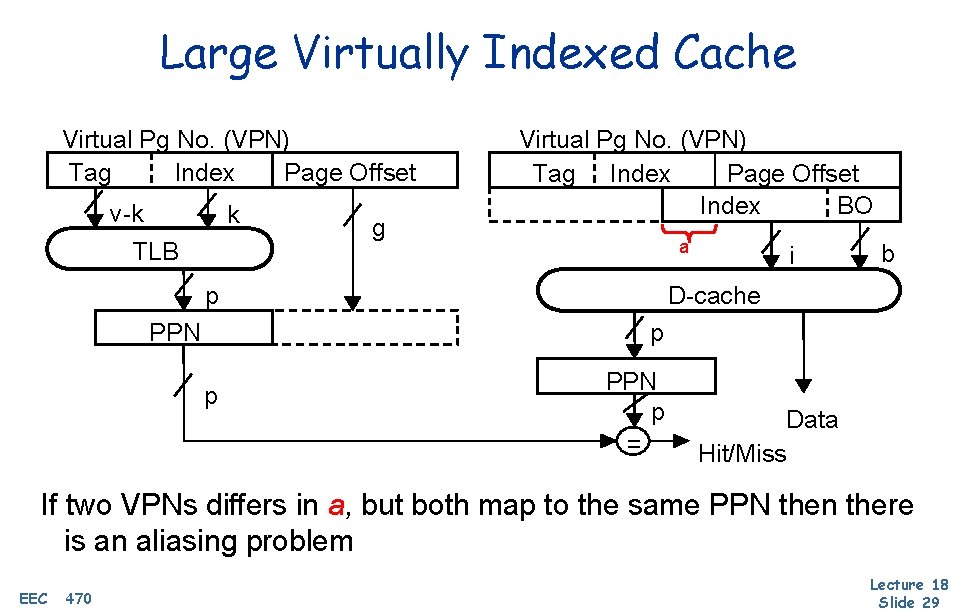

Large Virtually Indexed Cache

[Figure: same as VIPT, but the cache index now extends a bits beyond the page offset into the VPN]

If two VPNs differ in a but both map to the same PPN, then there is an aliasing problem.
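
A sketch of how many index bits (the slide's a) spill into the VPN: each way spans cache_size / ways bytes, and anything above the page-offset bits must come from the virtual page number. The function names are hypothetical and all sizes are assumed to be powers of two:

```python
def log2_exact(x: int) -> int:
    assert x > 0 and x & (x - 1) == 0, "sizes must be powers of two"
    return x.bit_length() - 1


def vipt_alias_bits(cache_bytes: int, ways: int, page_bytes: int) -> int:
    """Index bits taken from the VPN ('a' on the slide); 0 means alias-free."""
    way_span_bits = log2_exact(cache_bytes // ways)    # index + block-offset bits
    return max(0, way_span_bits - log2_exact(page_bytes))


def max_alias_free_vipt_bytes(ways: int, page_bytes: int) -> int:
    """Largest VIPT cache whose index comes entirely from the page offset."""
    return ways * page_bytes


print(vipt_alias_bits(64 * 1024, 4, 4096))    # 2: a 64 KB 4-way cache with 4 KB pages
print(max_alias_free_vipt_bytes(4, 4096))     # 16384: at most 16 KB alias-free
```

This is one way to answer "how large can a virtually indexed cache get?": without extra synonym handling, page size × associativity; growing further requires more ways or larger pages.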

VIPT Cache
Pros
- Fast (the TLB lookup and the cache array access can be done in parallel)

Cons
- More complicated
- Cache size is constrained

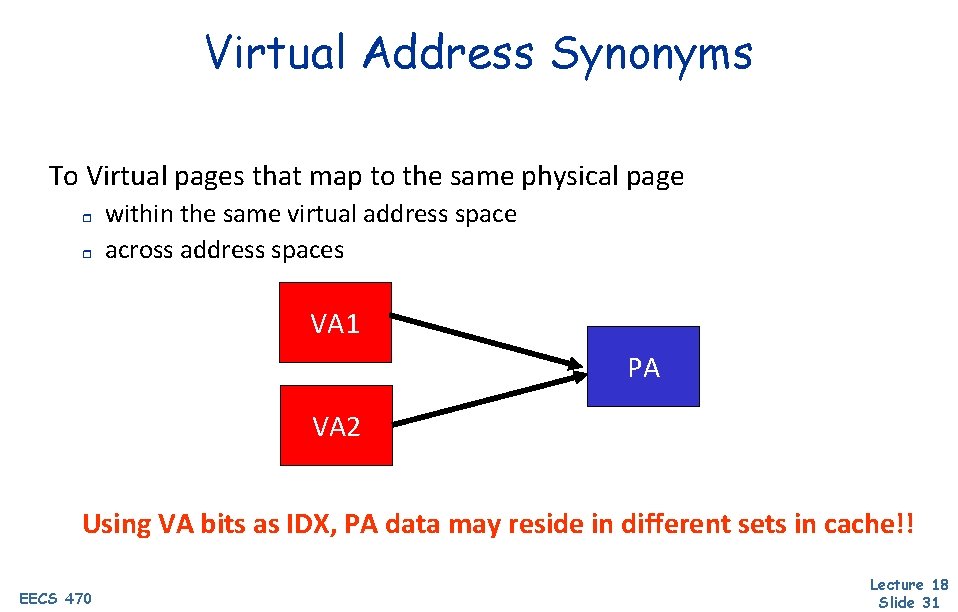

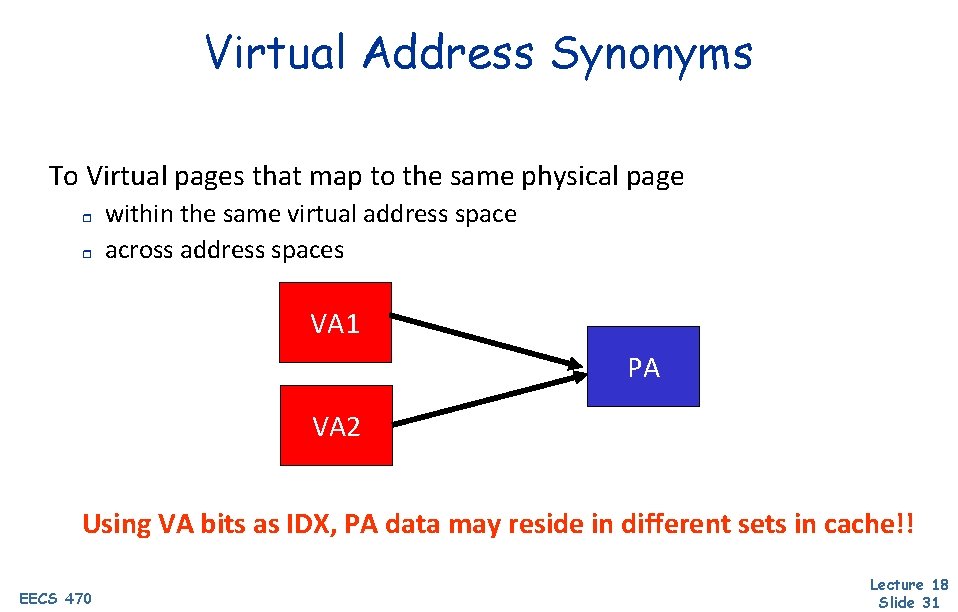

Virtual Address Synonyms
Two virtual pages that map to the same physical page:
- within the same virtual address space
- across address spaces

[Figure: VA1 and VA2 both map to the same PA]

Using VA bits as the index (IDX), the PA's data may reside in different sets in the cache!
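
A small demonstration of the problem, assuming 4 KB pages, 64-byte blocks, and 256 sets (16 KB per way, e.g. a 64 KB 4-way cache), so the index uses 2 VA bits above the page offset; the page-table contents are hypothetical:

```python
PAGE_OFFSET_BITS = 12   # 4 KB pages (assumption)
BLOCK_OFFSET_BITS = 6   # 64-byte blocks (assumption)
INDEX_BITS = 8          # 256 sets: index spans VA bits 6..13, 2 bits above the page offset


def set_index(addr: int) -> int:
    return (addr >> BLOCK_OFFSET_BITS) & ((1 << INDEX_BITS) - 1)


# Hypothetical mapping: two virtual pages share physical page 0x80 (synonyms).
page_table = {0x10: 0x80, 0x23: 0x80}


def to_pa(va: int) -> int:
    vpn, off = va >> PAGE_OFFSET_BITS, va & ((1 << PAGE_OFFSET_BITS) - 1)
    return (page_table[vpn] << PAGE_OFFSET_BITS) | off


va1 = (0x10 << PAGE_OFFSET_BITS) | 0x040
va2 = (0x23 << PAGE_OFFSET_BITS) | 0x040
print(hex(to_pa(va1)), hex(to_pa(va2)))          # same physical address
print(hex(set_index(va1)), hex(set_index(va2)))  # 0x1 vs 0xc1: different sets
```

The two VPNs differ in their low 2 bits (the a bits), so the same physical block can be cached twice, and a write through one copy leaves the other stale.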

Synonym Solutions
- Limit cache size to page size times associativity
  - get index from page offset
- Search all sets in parallel
  - 64K 4-way cache, 4K pages: search 4 sets (16 entries)
  - Slow!
- Restrict page placement in OS
  - make sure index(VA) = index(PA)
- Eliminate by OS convention
  - single virtual space
  - restrictive sharing model
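
The "restrict page placement" option is often called page coloring: the OS only places a virtual page in a physical frame whose low page-number bits (the a index bits) match, so index(VA) = index(PA). A minimal allocator sketch, assuming the slide's 64K 4-way cache with 4K pages (2 color bits) and a hypothetical free-frame list:

```python
from typing import List, Optional


def color(page_number: int, color_bits: int) -> int:
    """Low page-number bits that also feed the cache index (the 'a' bits)."""
    return page_number & ((1 << color_bits) - 1)


def pick_frame(vpn: int, free_frames: List[int], color_bits: int) -> Optional[int]:
    """Choose a free frame with the same color as the virtual page."""
    want = color(vpn, color_bits)
    for ppn in free_frames:
        if color(ppn, color_bits) == want:
            free_frames.remove(ppn)   # safe: we return right after removing
            return ppn
    return None                       # no free frame of the right color


free = [0x100, 0x101, 0x102, 0x103]
print(hex(pick_frame(0x23, free, color_bits=2)))  # 0x103: both colors are 3
```

The cost is allocation flexibility: a page can only use 1/2^a of the free frames, and allocation fails when its color runs out even if other frames are free.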

Next Time
- Multicore!

Lingering questions / feedback? I'll include an anonymous form at the end of every lecture: https://bit.ly/3oSr5FD