EECS 470 Lecture 14 Advanced Caches DEC Alpha


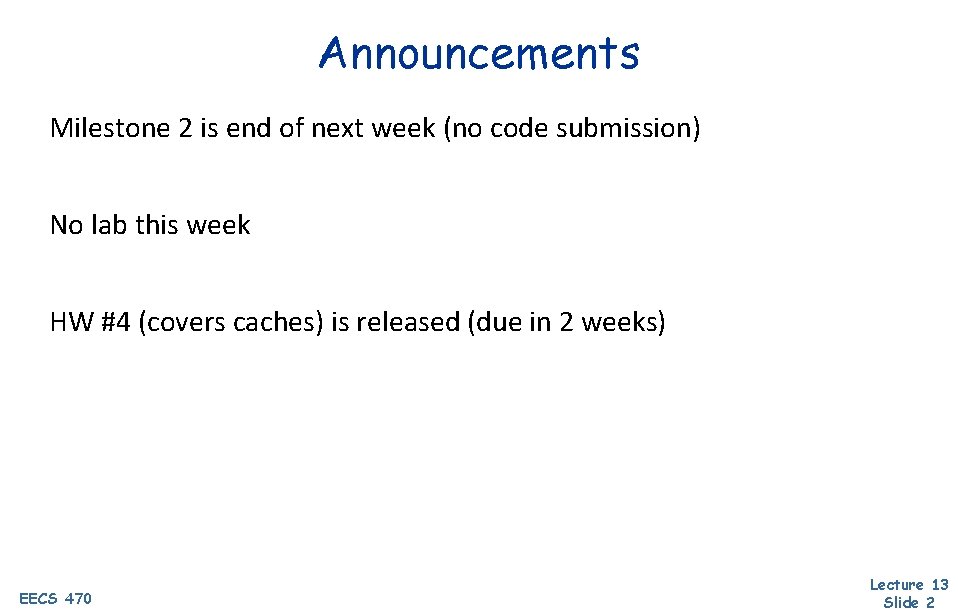

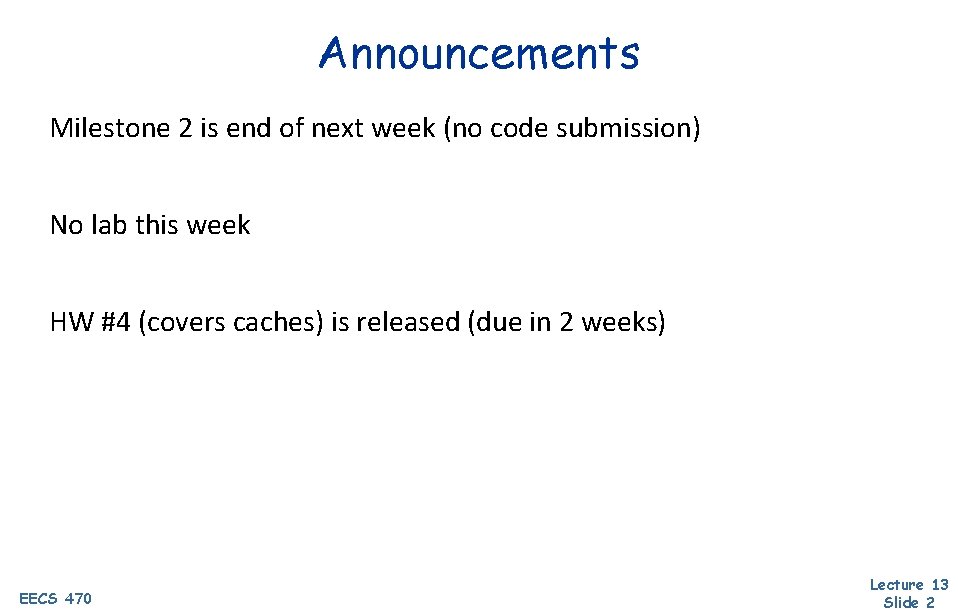

EECS 470 Lecture 14: Advanced Caches, Winter 2021

Jon Beaumont, www.eecs.umich.edu/courses/eecs470/

(Title-slide figure labels: DEC Alpha, Instruction Cache, Data Cache, BIU)

Slides developed in part by Profs. Austin, Brehob, Falsafi, Hill, Hoe, Lipasti, Mudge, Shen, Smith, Sohi, Tyson, and Vijaykumar of Carnegie Mellon University, Purdue University, University of Michigan, and University of Wisconsin.

EECS 470 Lecture 13 Slide 1

Announcements

- Milestone 2 is due at the end of next week (no code submission)
- No lab this week
- HW #4 (covers caches) is released (due in 2 weeks)

Last Time: Basic Caches

- Memory is much slower than the processor
- However, data accesses are predictable: many programs exhibit spatial and temporal locality
- When a piece of data is accessed:
  - Store it in the cache in case it is needed again later (temporal locality)
  - Read the whole line/block around it into the cache as well (spatial locality)
- Cache considerations: cache size, block size, associativity

Today: Survey of other cache topics

- Classifying and reducing misses
  - Software techniques
  - Hardware hashing
  - Victim cache
  - Subblocking
- Multi-level caches
- Inclusivity
- Non-blocking caches

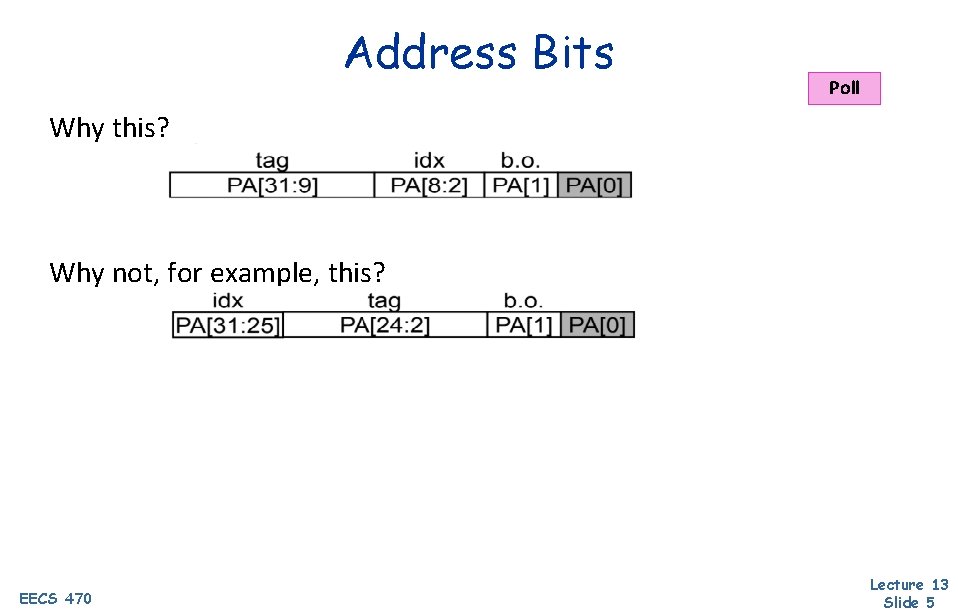

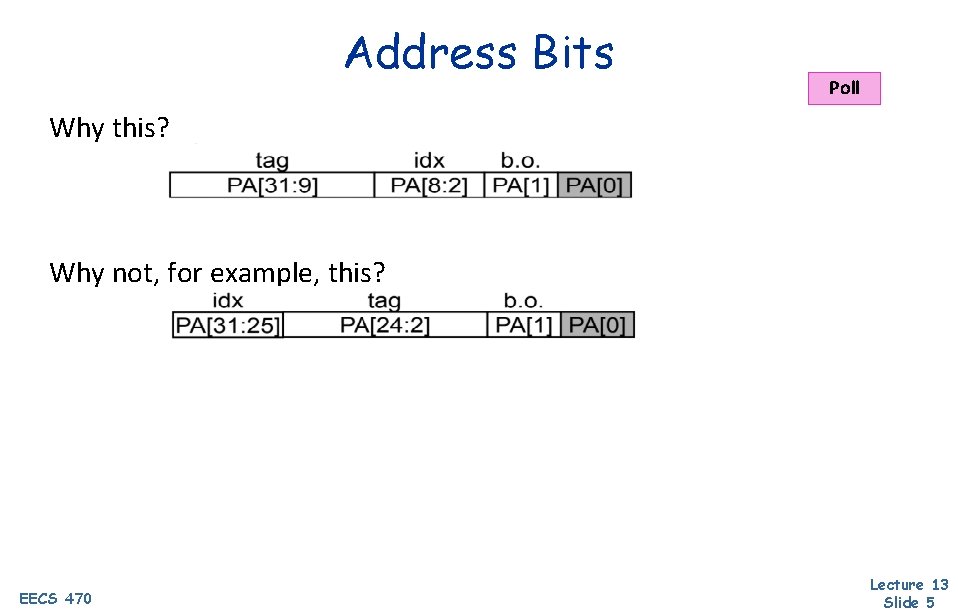

Address Bits (Poll): Why this split of the address bits? Why not, for example, this one?
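The poll's figures are not reproduced here. The sketch below therefore assumes the comparison is between the conventional tag | index | offset split and a split that takes the index from the high-order bits. It uses hypothetical parameters (32-bit addresses, 64-byte blocks, 128 sets) and shows where four consecutive blocks land under each choice.

```python
def split_address(addr, offset_bits, index_bits):
    """Conventional split: | tag | index | block offset |, index taken just above the offset."""
    offset = addr & ((1 << offset_bits) - 1)
    index = (addr >> offset_bits) & ((1 << index_bits) - 1)
    tag = addr >> (offset_bits + index_bits)
    return tag, index, offset


def high_order_index(addr, addr_bits, index_bits):
    """Alternative split, for comparison only: index taken from the top of the address."""
    return addr >> (addr_bits - index_bits)


# Hypothetical parameters: 32-bit addresses, 64-byte blocks (6 offset bits), 128 sets (7 index bits).
OFFSET_BITS, INDEX_BITS, ADDR_BITS = 6, 7, 32

# Four consecutive blocks, as a sequential sweep through an array would touch them.
blocks = [0x1000 + 64 * k for k in range(4)]
print([split_address(a, OFFSET_BITS, INDEX_BITS)[1] for a in blocks])
print([high_order_index(a, ADDR_BITS, INDEX_BITS) for a in blocks])
```

Under the conventional split, neighboring blocks spread across neighboring sets. With high-order index bits, a sequential sweep keeps landing in the same set.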

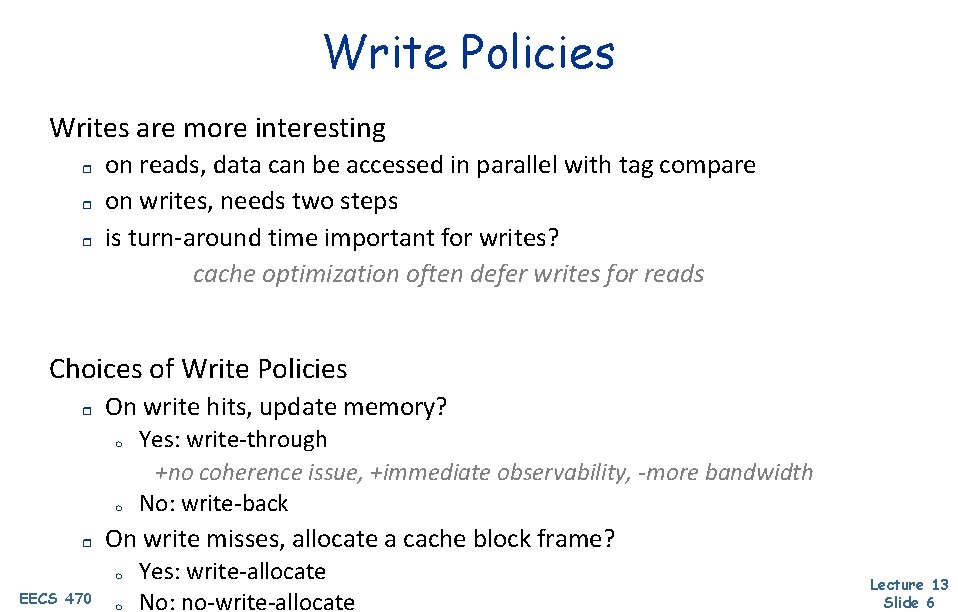

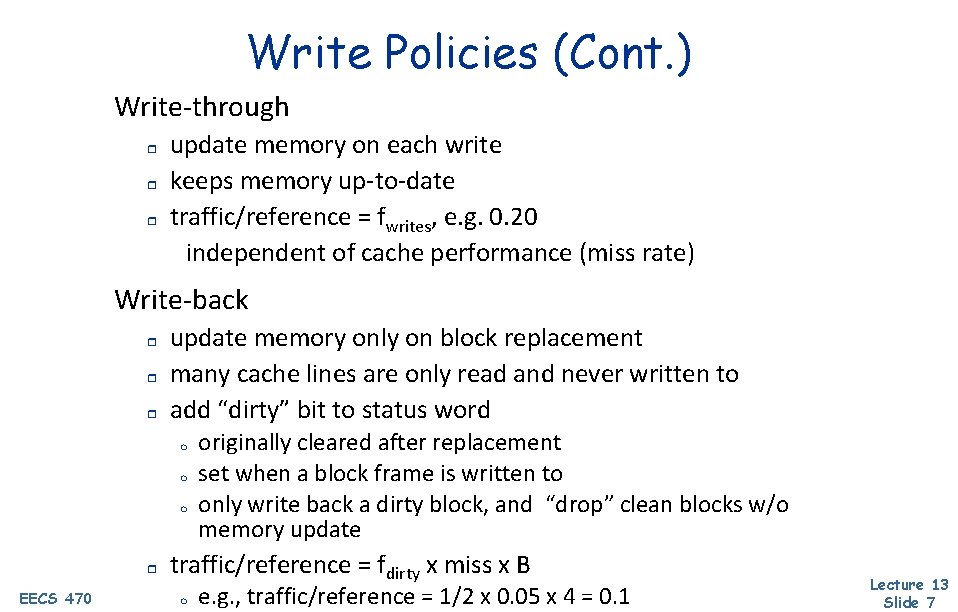

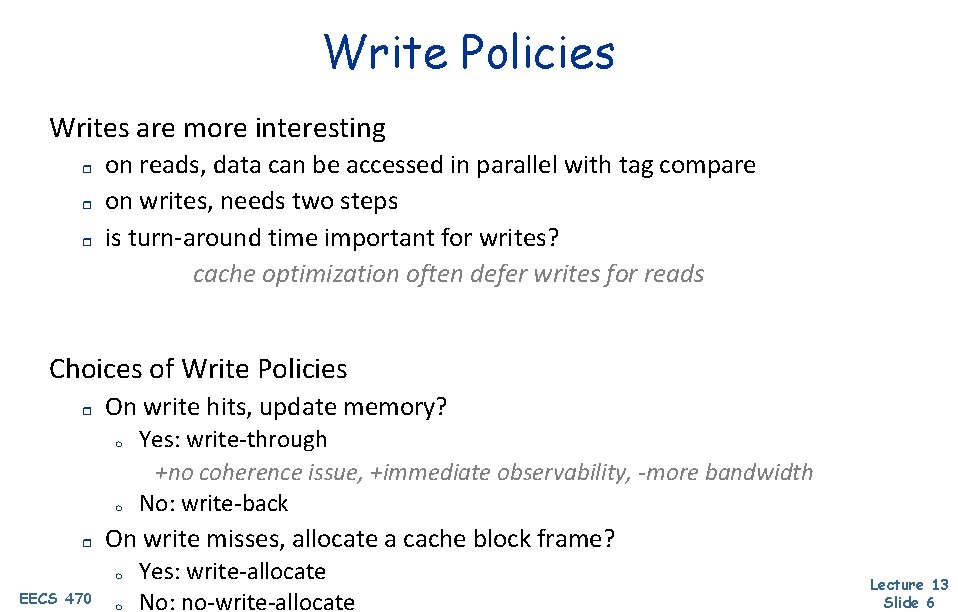

Write Policies

- Writes are more interesting than reads:
  - On reads, data can be accessed in parallel with the tag compare
  - On writes, two steps are needed (check the tag, then write)
  - Is turnaround time important for writes? Cache optimizations often defer writes in favor of reads
- Choices of write policy:
  - On write hits, update memory?
    - Yes: write-through (+ no coherence issue, + immediate observability, - more bandwidth)
    - No: write-back
  - On write misses, allocate a cache block frame?
    - Yes: write-allocate
    - No: no-write-allocate

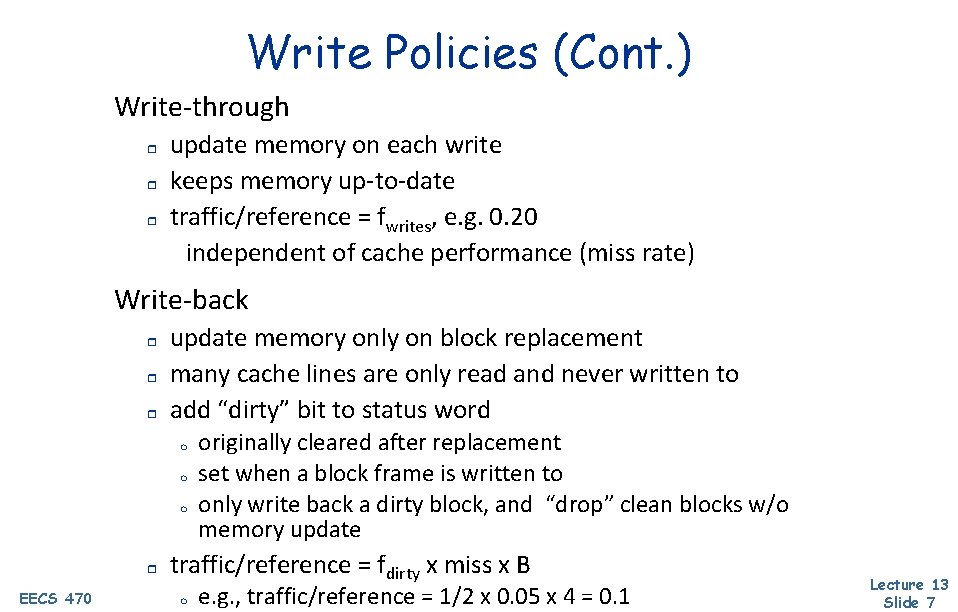

Write Policies (Cont.)

- Write-through
  - Update memory on each write, which keeps memory up to date
  - Traffic/reference = $f_{\text{writes}}$, e.g., 0.20, independent of cache performance (miss rate)
- Write-back
  - Update memory only on block replacement; many cache lines are only read and never written to
  - Add a "dirty" bit to the status word:
    - Cleared when a new block is filled (on replacement)
    - Set when the block frame is written to
    - Only write back a dirty block; "drop" clean blocks without a memory update
  - Traffic/reference = $f_{\text{dirty}} \times \text{miss} \times B$ ($B$ = block size in words), e.g., $\tfrac{1}{2} \times 0.05 \times 4 = 0.1$

Write Policies (Cont.)

Common misconception: that (write-through vs. write-back) and (write-allocate vs. no-write-allocate) are coupled. They are two independent design decisions; one does not preclude the other.
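To see that the two choices are orthogonal, the sketch below parameterizes a toy direct-mapped cache by both flags independently, and all four combinations are well defined. Several conventions are simplifying assumptions: the trace format, counting memory writes as transactions, and leaving dirty lines at the end of the trace unflushed.

```python
def simulate(trace, num_sets, write_back, write_allocate):
    """Direct-mapped cache; trace is a list of ("ld" | "st", block_number).

    Counts block fills from memory and write transactions to memory. Dirty
    lines still resident at the end of the trace are not flushed or counted.
    """
    lines = [None] * num_sets      # block number held by each set
    dirty = [False] * num_sets     # only ever set under write-back
    stats = {"fills": 0, "mem_writes": 0}
    for op, block in trace:
        idx = block % num_sets
        hit = lines[idx] == block
        # Loads always allocate on a miss; stores allocate only under write-allocate.
        if not hit and (op == "ld" or write_allocate):
            if write_back and dirty[idx]:
                stats["mem_writes"] += 1   # write back the dirty victim
            lines[idx] = block
            dirty[idx] = False
            stats["fills"] += 1
            hit = True
        if op == "st":
            if hit and write_back:
                dirty[idx] = True          # defer the memory update
            else:
                stats["mem_writes"] += 1   # write-through, or write-around on a no-allocate miss
    return stats


stores = [("st", 0)] * 4
for wb in (True, False):
    for wa in (True, False):
        print("write-back" if wb else "write-through",
              "allocate" if wa else "no-allocate",
              simulate(stores, num_sets=1, write_back=wb, write_allocate=wa))
```

Write-back is usually paired with write-allocate and write-through with no-write-allocate. The model simply shows that nothing forces those pairings.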

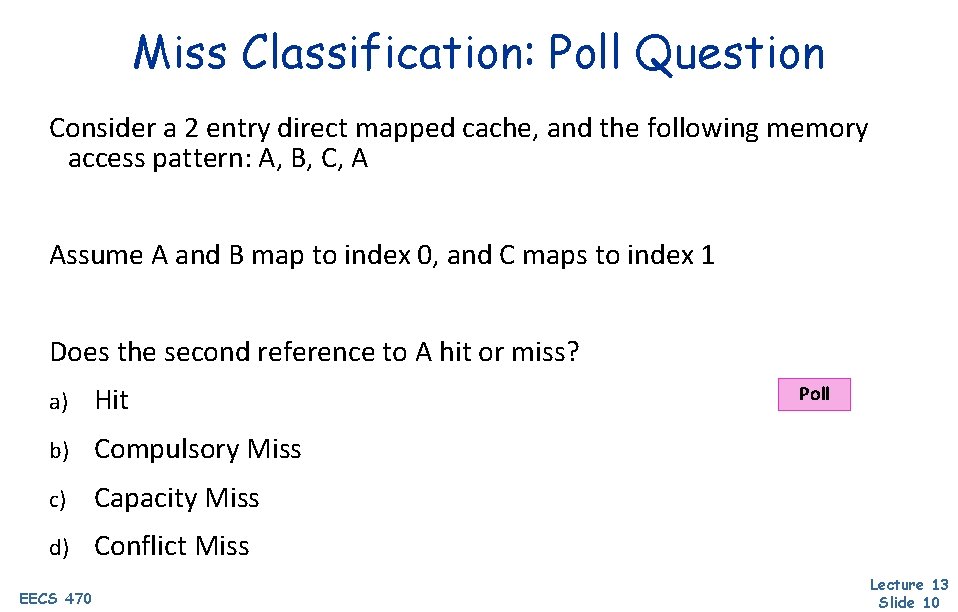

Miss Classification (3+1 C's)

- Compulsory
  - "Cold miss" on the first access to a block
  - Defined as: a miss in an infinite cache
- Capacity
  - Misses occur because the cache is not large enough
  - Defined as: a miss in a fully-associative cache (with an optimal replacement policy)
- Conflict
  - Misses occur because of a restrictive mapping strategy
  - Only in set-associative or direct-mapped caches
  - Defined as: not attributable to compulsory or capacity
- Coherence
  - Misses occur because of sharing among multiprocessors (more in a couple of weeks)
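The three uniprocessor definitions above can be turned into a classifier by running three models side by side:

- an "infinite cache", which is just the set of blocks seen so far;
- a fully-associative cache of the same total size, using Belady's optimal (OPT) replacement, which looks ahead in the trace;
- the real set-associative cache, assumed here to use LRU within each set.

This is a minimal sketch. The trace format and the `set_of` mapping are assumptions for illustration.

```python
import math


def classify_misses(trace, num_sets, ways, set_of):
    """Label each access as hit / compulsory / capacity / conflict.

    trace:  list of block identifiers
    set_of: function mapping a block to its set index in the real cache
    """
    capacity = num_sets * ways
    sets = [[] for _ in range(num_sets)]   # real cache; each list ordered LRU -> MRU
    fa = set()                             # fully-associative OPT reference cache
    seen = set()                           # infinite cache: every block ever referenced
    labels = []

    def next_use(i, block):
        for j in range(i + 1, len(trace)):
            if trace[j] == block:
                return j
        return math.inf

    for i, block in enumerate(trace):
        # Reference cache: on a miss when full, evict the block used farthest in the future.
        fa_hit = block in fa
        if not fa_hit:
            if len(fa) == capacity:
                fa.remove(max(fa, key=lambda b: next_use(i, b)))
            fa.add(block)

        # Real cache with LRU replacement within the set.
        s = sets[set_of(block)]
        if block in s:
            s.remove(block)
            s.append(block)
            labels.append("hit")
        else:
            if len(s) == ways:
                s.pop(0)
            s.append(block)
            if block not in seen:
                labels.append("compulsory")
            elif not fa_hit:
                labels.append("capacity")
            else:
                labels.append("conflict")
        seen.add(block)
    return labels


# Two blocks that collide in a 2-set direct-mapped cache, which is still large enough for both.
print(classify_misses(["A", "B", "A"], num_sets=2, ways=1, set_of={"A": 0, "B": 0}.get))
```

Some texts use LRU rather than OPT for the fully-associative reference cache. That choice can move a miss between the capacity and conflict categories.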

Miss Classification: Poll Question

Consider a 2-entry direct-mapped cache and the following memory access pattern: A, B, C, A. Assume A and B map to index 0, and C maps to index 1. Does the second reference to A hit or miss?

- a) Hit
- b) Compulsory miss
- c) Capacity miss
- d) Conflict miss

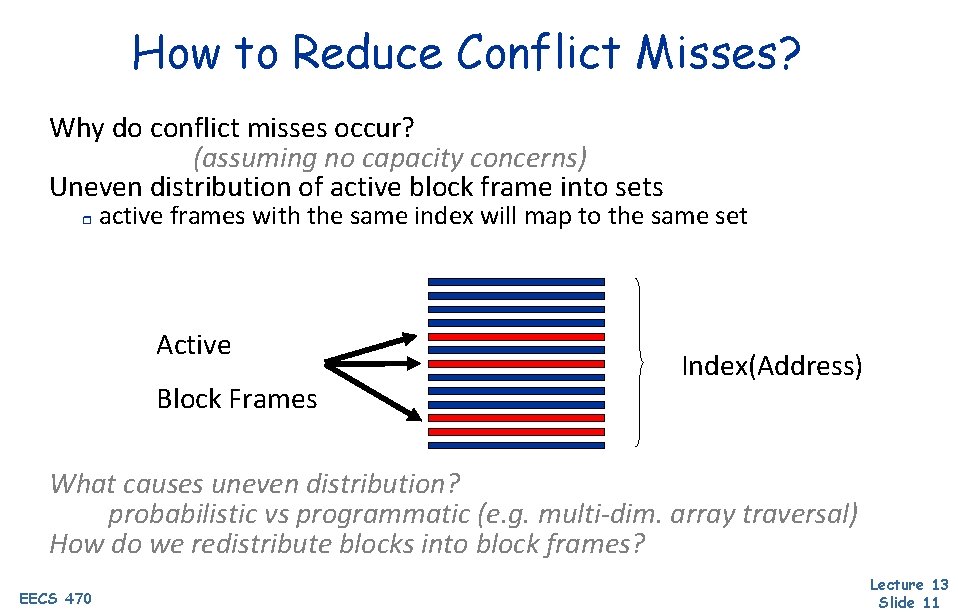

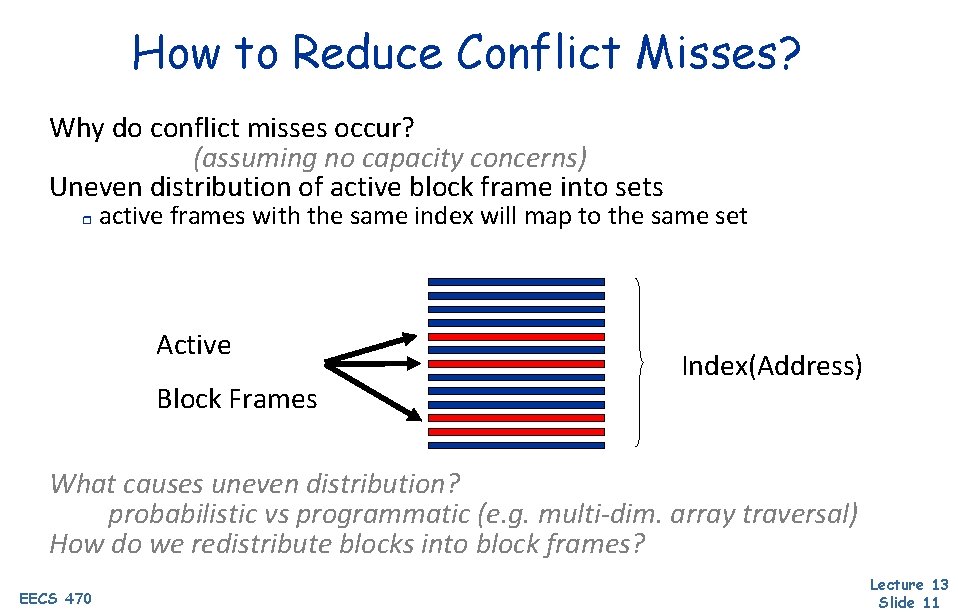

How to Reduce Conflict Misses?

- Why do conflict misses occur (assuming no capacity concerns)?
  - Uneven distribution of active block frames into sets: active frames with the same index map to the same set
  - (Figure: active block frames plotted against Index(Address))
- What causes the uneven distribution? Probabilistic vs. programmatic causes (e.g., multi-dimensional array traversal)
- How do we redistribute blocks into block frames?

![Software Approach: Restructuring Loops and Array Layout](https://slidetodoc.com/presentation_image_h2/1029e700c2c92f5cafd8bf475e823153/image-12.jpg)

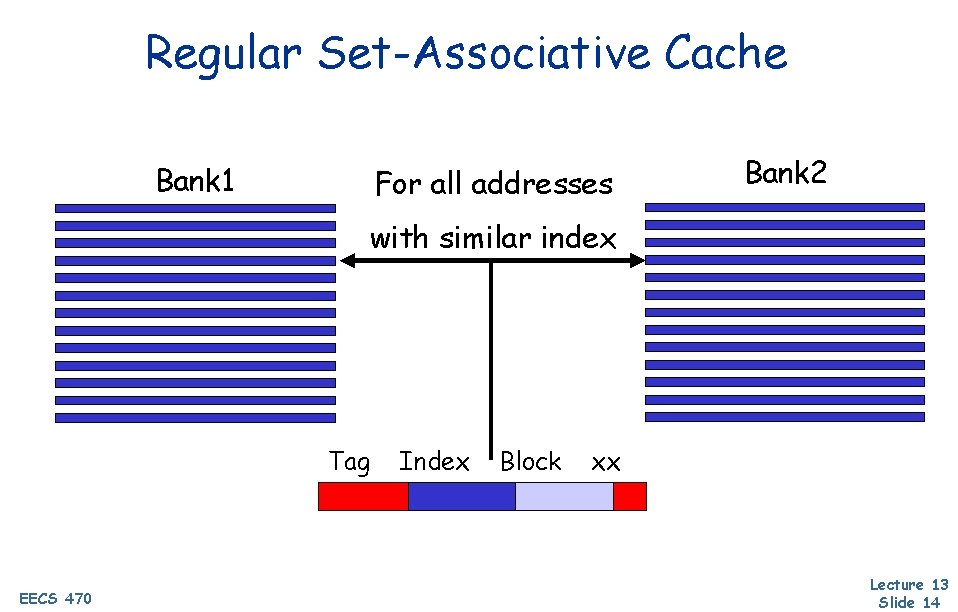

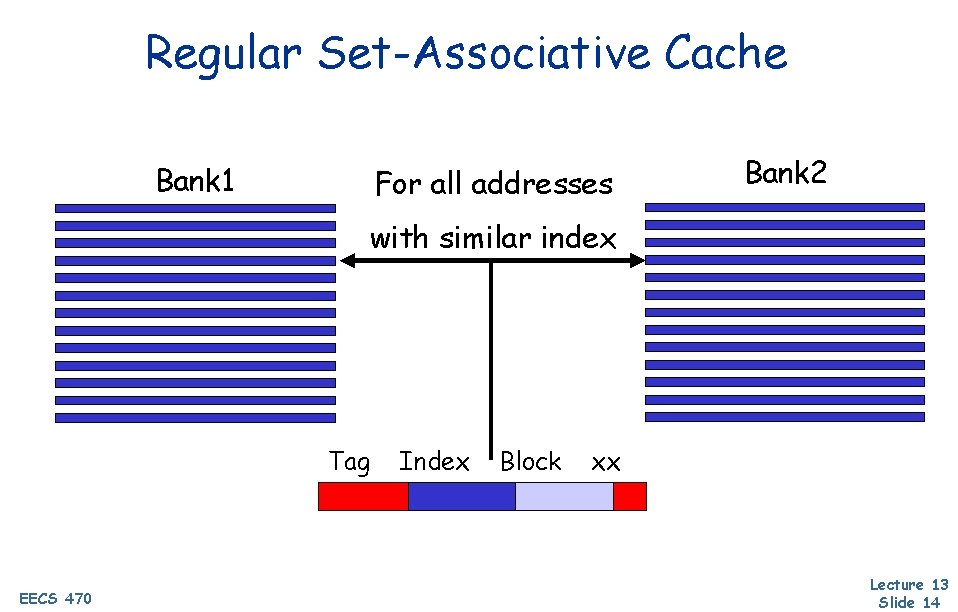

Software Approach: Restructuring Loops and Array Layout

- If the array is column-major:
  - x[i+1, j] follows x[i, j] in memory
  - x[i, j+1] is a long way from x[i, j]
- Poor code (conflict misses + loss of spatial locality): `for i = 1, rows` / `for j = 1, columns` / `sum = sum + x[i, j]`
- Better code: `for j = 1, columns` / `for i = 1, rows` / `sum = sum + x[i, j]`
- Optimizations: merging arrays, data layout, padding array dimensions
- But:
  - There can be conflicts among different arrays
  - Array sizes may be unknown at compile/programming time
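The effect of the loop interchange can be reproduced by generating each loop order's address stream and feeding it to a toy direct-mapped cache. This sketch makes several assumptions:

- 0-based indices and 8-byte elements;
- a 64 x 64 column-major array (32 KB);
- a hypothetical 2 KB direct-mapped cache with 32-byte blocks (64 sets).

```python
def col_major_addr(i, j, rows, elem_size=8):
    """Byte offset of x[i, j] in a column-major array: x[i+1, j] directly follows x[i, j]."""
    return (j * rows + i) * elem_size


def dm_misses(addrs, num_sets, block_size):
    """Count misses in a direct-mapped cache (each set remembers the block number it holds)."""
    resident = [None] * num_sets
    misses = 0
    for a in addrs:
        block = a // block_size
        s = block % num_sets
        if resident[s] != block:
            resident[s] = block
            misses += 1
    return misses


ROWS = COLS = 64          # 64 x 64 doubles = 32 KB
NUM_SETS, BLOCK = 64, 32  # hypothetical 2 KB direct-mapped cache

# "Poor code": i outer, j inner, giving a stride of ROWS * 8 = 512 bytes between accesses.
poor = [col_major_addr(i, j, ROWS) for i in range(ROWS) for j in range(COLS)]
# "Better code": j outer, i inner, which walks memory sequentially.
better = [col_major_addr(i, j, ROWS) for j in range(COLS) for i in range(ROWS)]

print(dm_misses(poor, NUM_SETS, BLOCK), dm_misses(better, NUM_SETS, BLOCK))
```

With these parameters the poor order misses on every one of the 4096 accesses. Its 512-byte stride folds a row's 64 elements onto only 4 of the 64 sets. The interchanged loop misses once per 32-byte block (1024 misses).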

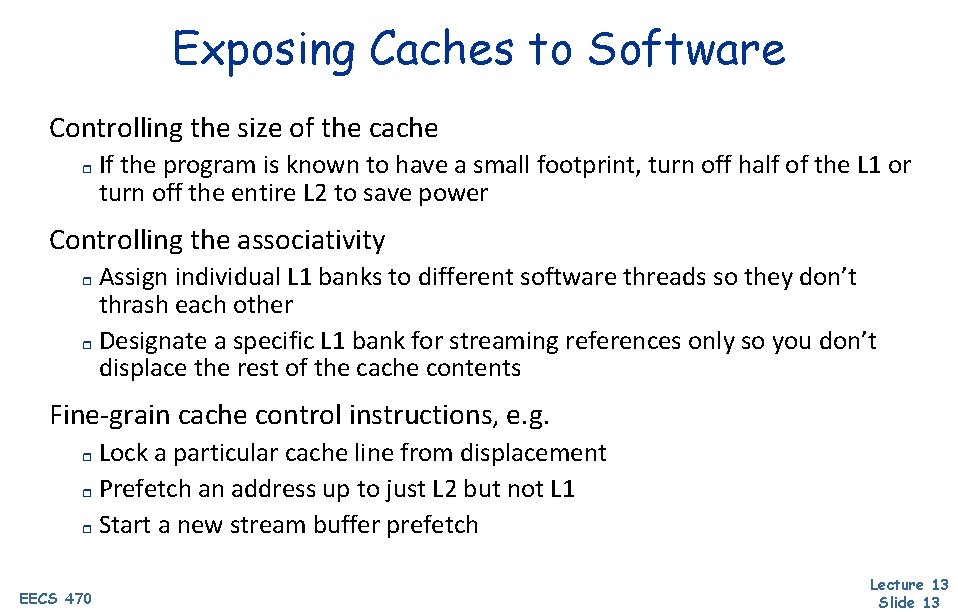

Exposing Caches to Software

- Controlling the size of the cache
  - If the program is known to have a small footprint, turn off half of the L1 or turn off the entire L2 to save power
- Controlling the associativity
  - Assign individual L1 banks to different software threads so they don't thrash each other
  - Designate a specific L1 bank for streaming references only, so they don't displace the rest of the cache contents
- Fine-grain cache control instructions, e.g.:
  - Lock a particular cache line against displacement
  - Prefetch an address into L2 but not L1
  - Start a new stream buffer prefetch

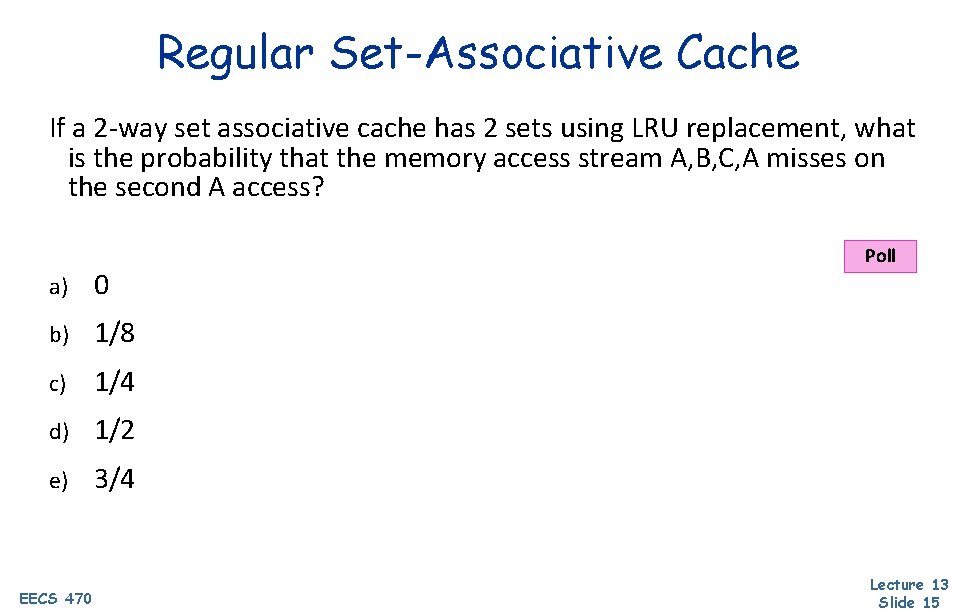

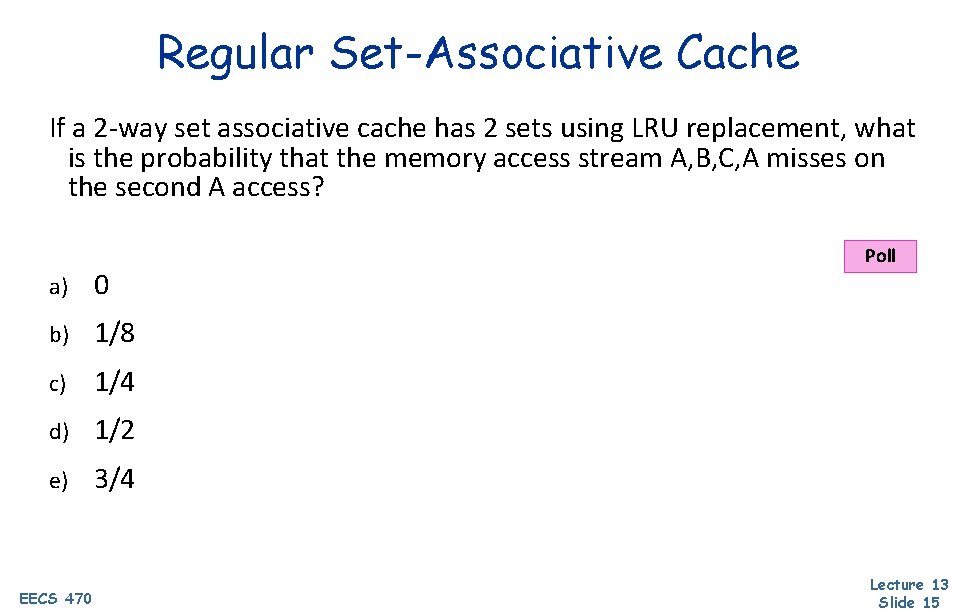

Regular Set-Associative Cache

(Figure: Bank 1 and Bank 2 are indexed by the same Index bits, so all addresses with a similar index compete for the same set. Address fields: Tag | Index | Block offset.)

Regular Set-Associative Cache (Poll)

If a 2-way set-associative cache has 2 sets and uses LRU replacement, what is the probability that the memory access stream A, B, C, A misses on the second access to A?

- a) 0
- b) 1/8
- c) 1/4
- d) 1/2
- e) 3/4

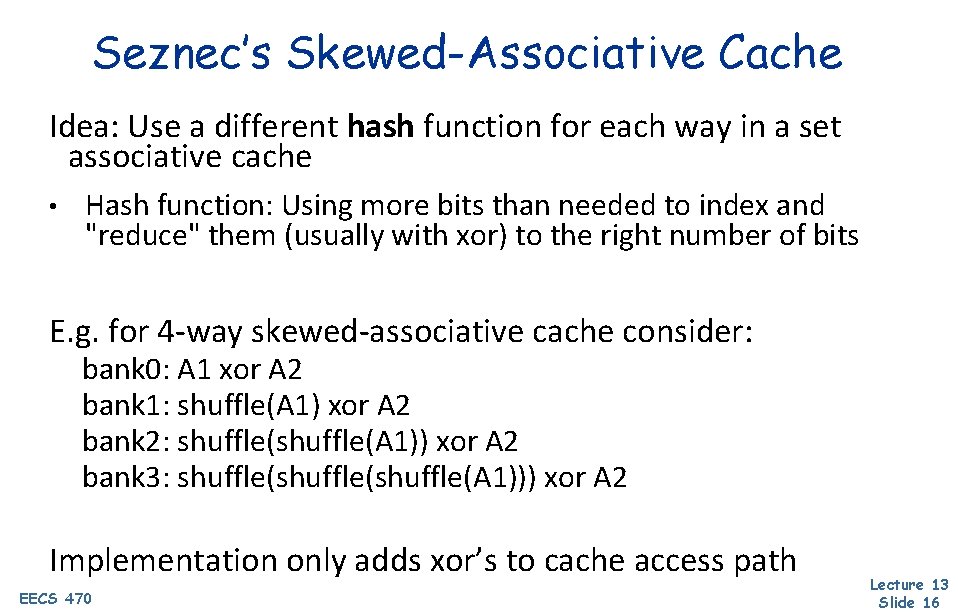

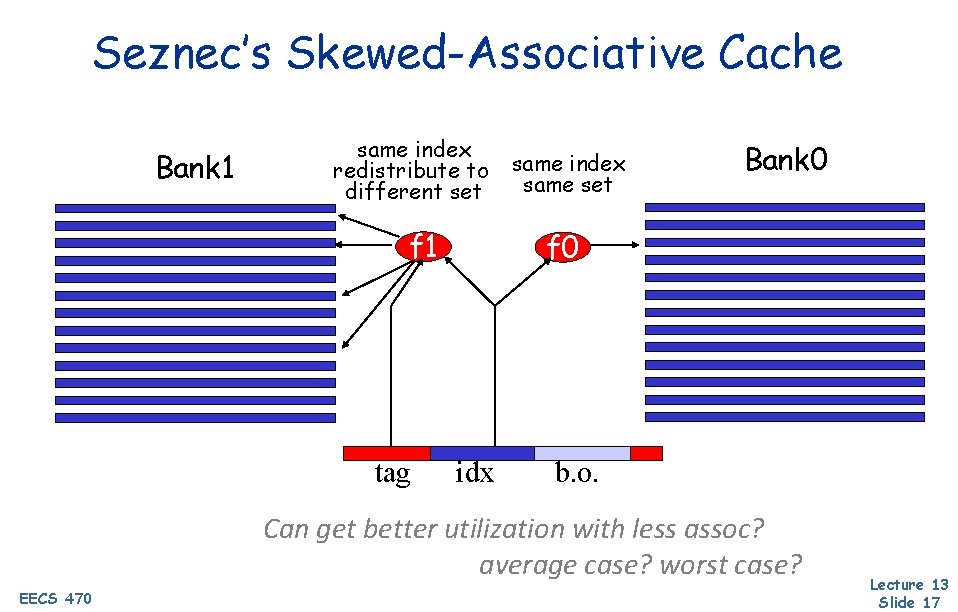

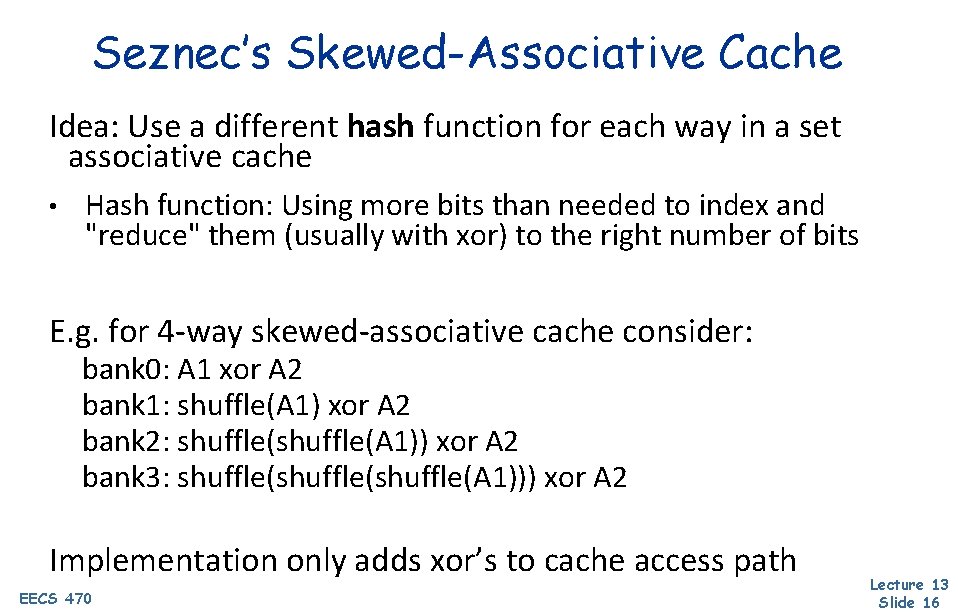

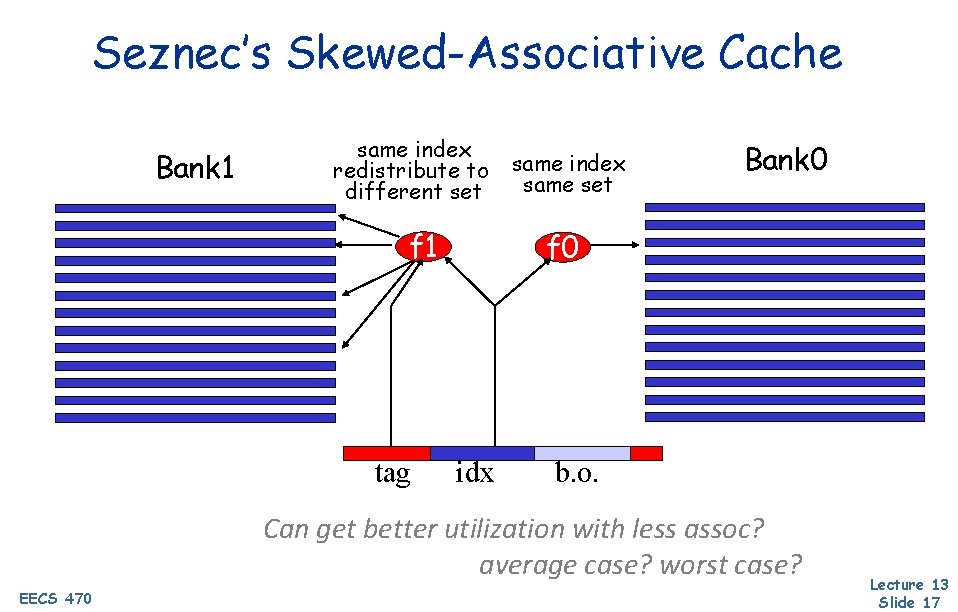

Seznec's Skewed-Associative Cache

- Idea: use a different hash function for each way of a set-associative cache
- Hash function: use more address bits than are needed for the index and "reduce" them (usually with XOR) to the right number of bits
- E.g., for a 4-way skewed-associative cache, consider:
  - Bank 0: A1 xor A2
  - Bank 1: shuffle(A1) xor A2
  - Bank 2: shuffle(shuffle(A1)) xor A2
  - Bank 3: shuffle(shuffle(shuffle(A1))) xor A2
- The implementation only adds XORs to the cache access path
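The slide does not define `shuffle()`, so this sketch uses a hypothetical stand-in: a 1-bit rotate within the index-width field. It also assumes A2 is the 4-bit field just above a 32-byte block offset and A1 is the 4-bit field above that. The point it illustrates is that two addresses colliding in bank 0 need not collide in bank 1.

```python
def rotl(x, bits):
    """Rotate a `bits`-wide field left by one (hypothetical stand-in for shuffle())."""
    mask = (1 << bits) - 1
    return ((x << 1) | (x >> (bits - 1))) & mask


def skewed_index(addr, bank, index_bits=4, offset_bits=5):
    """Set index of `addr` in the given bank: shuffle^bank(A1) xor A2."""
    mask = (1 << index_bits) - 1
    a2 = (addr >> offset_bits) & mask                  # field just above the block offset
    a1 = (addr >> (offset_bits + index_bits)) & mask   # next field up
    for _ in range(bank):                              # bank k applies shuffle() k times
        a1 = rotl(a1, index_bits)
    return a1 ^ a2


x = 1 << 9   # A1 = 1, A2 = 0
y = 1 << 5   # A1 = 0, A2 = 1
print([skewed_index(x, b) for b in range(4)])
print([skewed_index(y, b) for b in range(4)])
```

Both addresses map to set 1 in bank 0 but to different sets in every other bank. In hardware, the rotate is only wiring, so each bank adds just an XOR level.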

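Seznec's Skewed-Associative Cache (Cont.)

(Figure: the address's tag | idx | b.o. fields feed hash function f0 for Bank 0 and f1 for Bank 1. Addresses that share an index, and therefore a set, in one bank are redistributed to different sets in the other bank.)

- Can we get better utilization with less associativity? In the average case? In the worst case?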

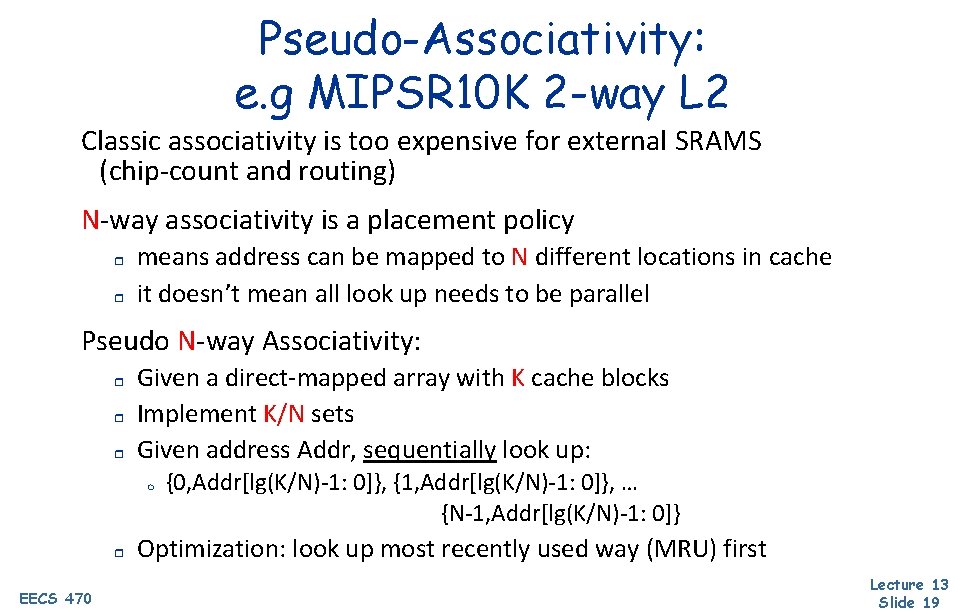

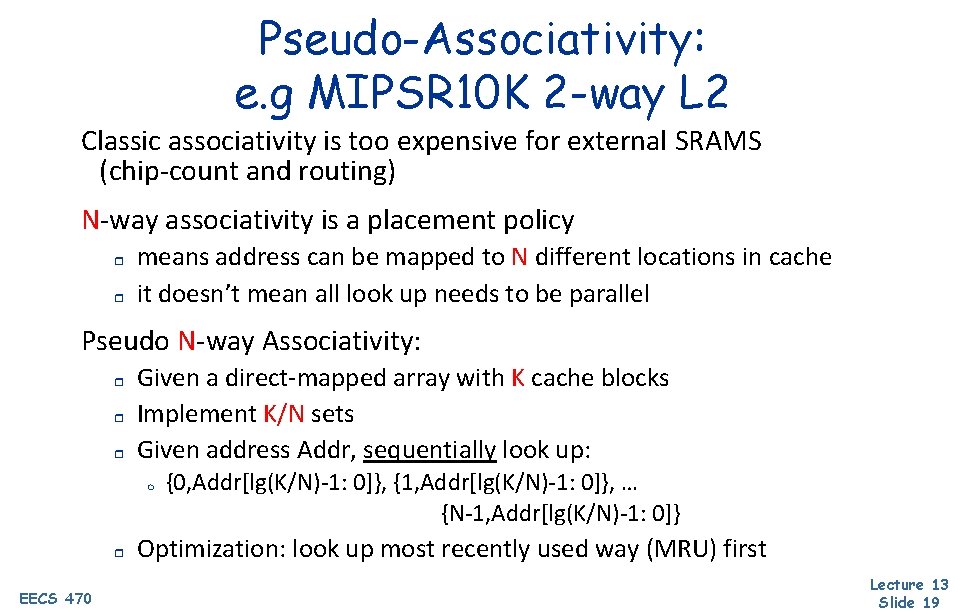

Seznec's Skewed-Associative Cache (Poll)

If a 2-way set-associative cache has 2 sets and uses LRU replacement, and each bank is indexed with an independent hash function, what is the probability that the memory access stream A, B, C, A misses on the second access to A?

- a) 0
- b) 1/8
- c) 1/4
- d) 1/2
- e) 3/4

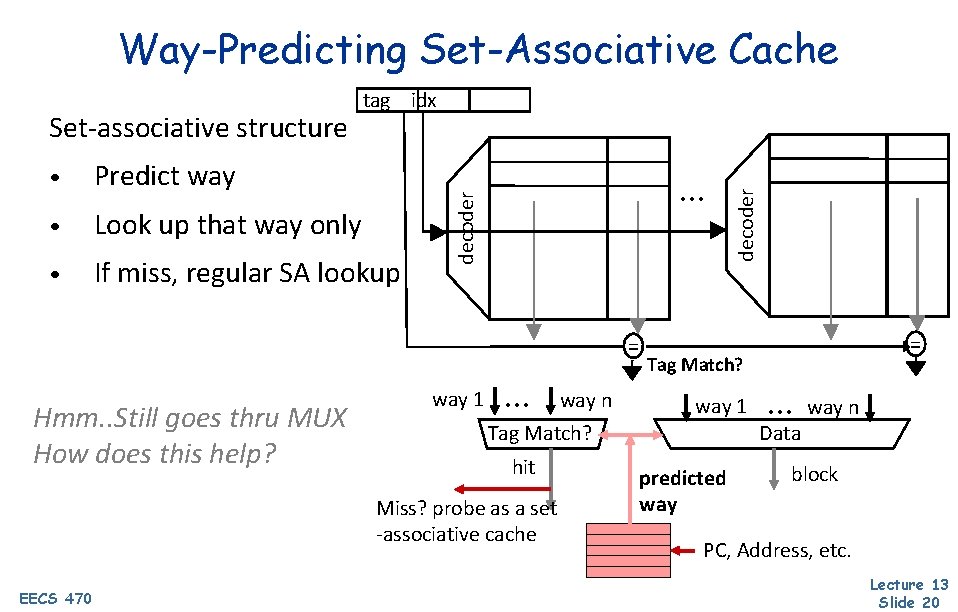

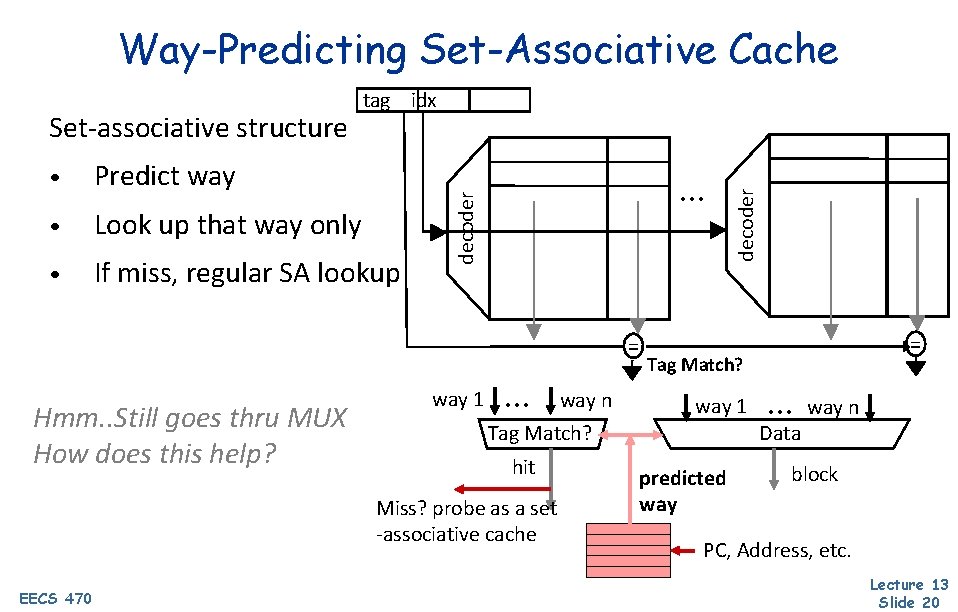

Pseudo-Associativity: e.g., MIPS R10K 2-way L2

- Classic associativity is too expensive for external SRAMs (chip count and routing)
- N-way associativity is a placement policy:
  - It means an address can be mapped to N different locations in the cache
  - It doesn't mean all lookups need to be parallel
- Pseudo N-way associativity:
  - Given a direct-mapped array with K cache blocks
  - Implement K/N sets
  - Given address Addr, sequentially look up {0, Addr[lg(K/N)-1:0]}, {1, Addr[lg(K/N)-1:0]}, …, {N-1, Addr[lg(K/N)-1:0]}
  - Optimization: look up the most recently used (MRU) way first
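The sequential lookup above can be written as a function that lists the direct-mapped slots probed, in order. This is a sketch that assumes `block_addr` is the block address (offset bits already stripped) and that K/N is a power of two. The `mru_way` argument models the MRU-first optimization.

```python
def probe_order(block_addr, k_blocks, n_ways, mru_way=None):
    """Direct-mapped slots probed, in order, for a pseudo N-way lookup.

    The K-entry array is treated as K/N sets; way w of a set lives at slot
    {w, Addr[lg(K/N)-1:0]}, i.e. (w << lg(K/N)) | (block_addr mod K/N).
    """
    num_sets = k_blocks // n_ways
    set_bits = num_sets.bit_length() - 1   # lg(K/N); K/N assumed to be a power of two
    low = block_addr & (num_sets - 1)      # Addr[lg(K/N)-1:0]
    ways = list(range(n_ways))
    if mru_way is not None:                # optimization: try the MRU way first
        ways.remove(mru_way)
        ways.insert(0, mru_way)
    return [(w << set_bits) | low for w in ways]


print(probe_order(0b1011, k_blocks=8, n_ways=2))
print(probe_order(0b1011, k_blocks=8, n_ways=2, mru_way=1))
```

A hit in the first slot costs the same as a direct-mapped hit. Each extra probe adds another array access, which is why MRU ordering pays off.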

Way-Predicting Set-Associative Cache

- Predict the way (using the PC, address, etc.)
- Look up that way only
- If it misses, do a regular set-associative lookup
- (Figure: a set-associative structure with decoders, ways 1 … n, and tag comparators. The predicted way's tag is compared first: a match is a hit; a mismatch probes the structure as a regular set-associative cache.)
- Hmm... the data still goes through the MUX. How does this help?
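The slide leaves the predictor open ("PC, Address, etc."). This sketch assumes a per-set table that predicts the most recently used way, and LRU replacement. Each access is reported in one of three ways:

- "fast hit": the predicted way matched, so only one tag compare was needed;
- "slow hit": the block was found by the full set-associative probe;
- "miss": the block was not in the set.

```python
class WayPredictedCache:
    """Set-associative cache with an (assumed) per-set MRU way predictor."""

    def __init__(self, num_sets, ways):
        self.num_sets = num_sets
        self.ways = ways
        self.tags = [[None] * ways for _ in range(num_sets)]
        self.lru = [list(range(ways)) for _ in range(num_sets)]  # front = least recently used
        self.pred = [0] * num_sets                               # predicted way per set

    def _touch(self, s, w):
        self.lru[s].remove(w)
        self.lru[s].append(w)
        self.pred[s] = w          # predict the most recently used way next time

    def access(self, block):
        s = block % self.num_sets
        tag = block // self.num_sets
        p = self.pred[s]
        if self.tags[s][p] == tag:                 # probe only the predicted way
            self._touch(s, p)
            return "fast hit"
        for w in range(self.ways):                 # fall back to a full set-associative probe
            if w != p and self.tags[s][w] == tag:
                self._touch(s, w)
                return "slow hit"
        victim = self.lru[s][0]
        self.tags[s][victim] = tag
        self._touch(s, victim)
        return "miss"


c = WayPredictedCache(num_sets=1, ways=2)
print([c.access(b) for b in [0, 1, 1, 0, 0]])
```

When the prediction is right, the access behaves like a direct-mapped lookup: one tag compare, with no wait for the way select.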

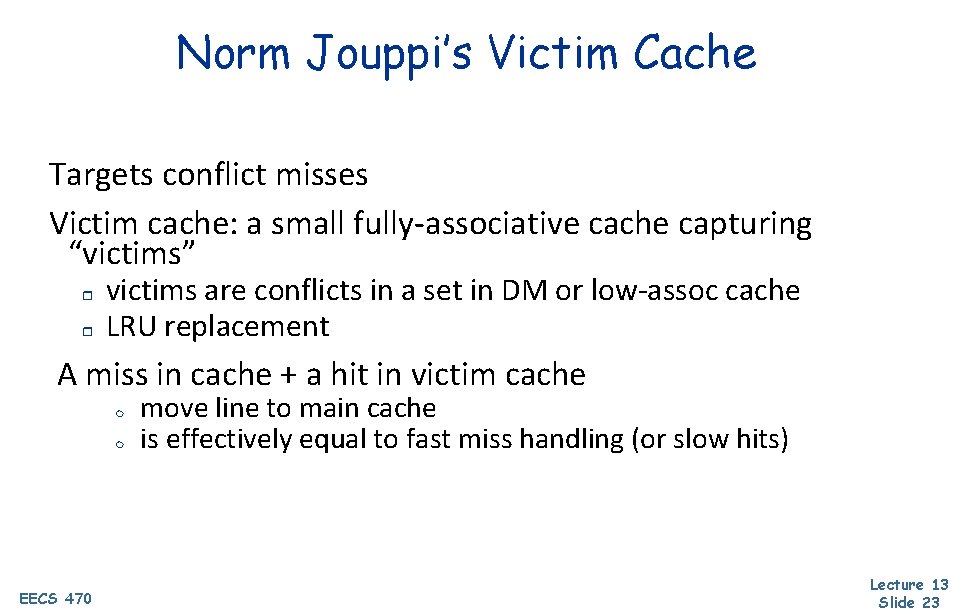

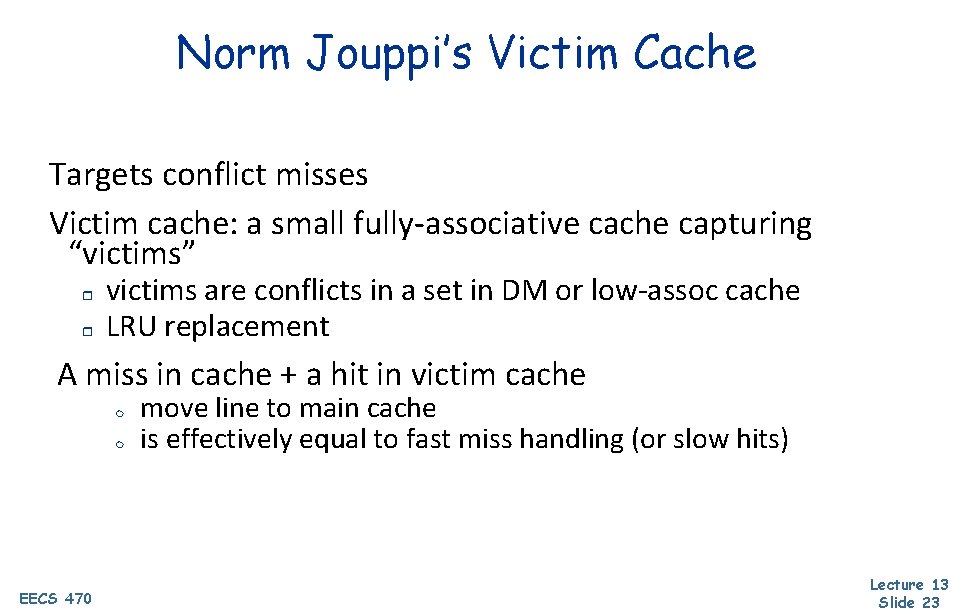

Norm Jouppi's Victim Cache

- Situation: the working set fits inside the cache, except for a few memory lines that map to the same cache block and keep knocking each other out
- We don't want to increase associativity, since most data accesses don't have conflict misses

Norm Jouppi's Victim Cache (Cont.)

(Figure: DM $ → small fully-associative victim $ → next level)

- Motivation: the working set fits inside the cache, except for a few memory lines that map to the same cache block and keep knocking each other out
- Lines evicted from the direct-mapped cache due to collisions are stored in the victim cache
- This avoids ping-ponging when the working set contains a few addresses that collide

Norm Jouppi's Victim Cache (Cont.)

- Targets conflict misses
- Victim cache: a small fully-associative cache capturing "victims"
  - Victims are blocks evicted by conflicts in a set of a DM or low-associativity cache
  - LRU replacement
- A miss in the cache + a hit in the victim cache:
  - Move the line into the main cache
  - Effectively equal to fast miss handling (or slow hits)
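A minimal model of a direct-mapped cache backed by a small fully-associative victim cache is sketched below. It assumes that on a victim hit the two lines swap, so the main cache's displaced line goes into the victim cache. It also assumes LRU replacement in the victim cache via `OrderedDict`. Block numbers 0 and 4 are chosen so they collide in a 4-set cache.

```python
from collections import OrderedDict


def run_victim_cache(trace, num_sets, victim_entries):
    main = [None] * num_sets          # direct-mapped: block number held per set
    victim = OrderedDict()            # fully-associative victim cache, LRU first
    stats = {"hit": 0, "victim_hit": 0, "miss": 0}
    for block in trace:
        idx = block % num_sets
        if main[idx] == block:
            stats["hit"] += 1
            continue
        evicted = main[idx]
        main[idx] = block             # move the requested line into the main cache
        if block in victim:
            stats["victim_hit"] += 1  # "fast miss": no trip to the next level
            del victim[block]
        else:
            stats["miss"] += 1
        if evicted is not None and victim_entries > 0:
            victim[evicted] = None    # the displaced line becomes a victim (MRU position)
            victim.move_to_end(evicted)
            if len(victim) > victim_entries:
                victim.popitem(last=False)   # drop the LRU victim
    return stats


ping_pong = [0, 4, 0, 4, 0, 4]        # both map to set 0 of a 4-set cache
print(run_victim_cache(ping_pong, num_sets=4, victim_entries=0))
print(run_victim_cache(ping_pong, num_sets=4, victim_entries=1))
```

Even a single entry turns the ping-pong pattern from all misses into two compulsory misses followed by victim hits. This matches the observation that one entry helps some benchmarks.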

Victim Cache's Performance

- Removing conflict misses: even one entry helps some benchmarks
- Compared to cache size: generally, a victim cache helps more for smaller caches
- Compared to line size: it helps more with larger line sizes (a corollary of the above)

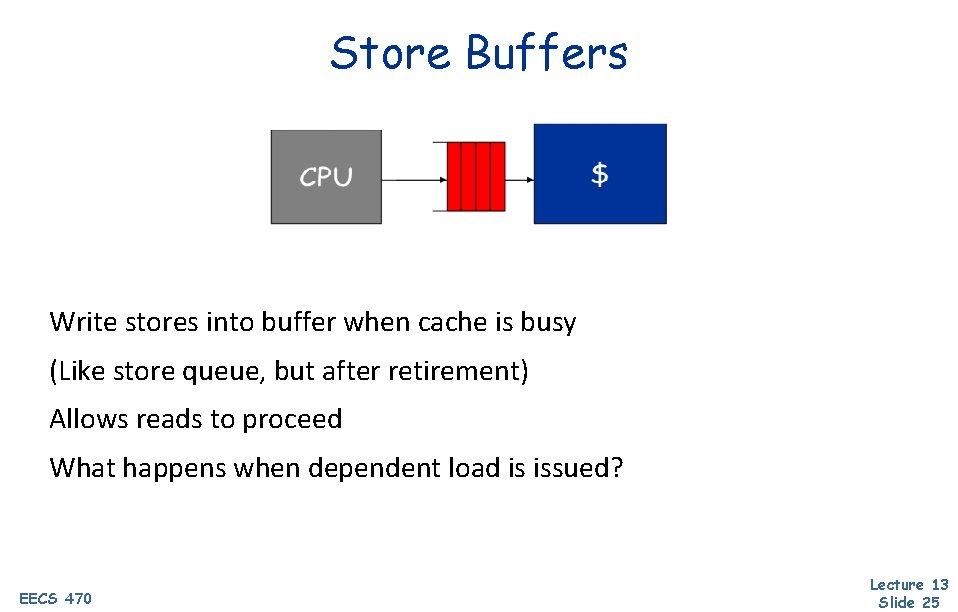

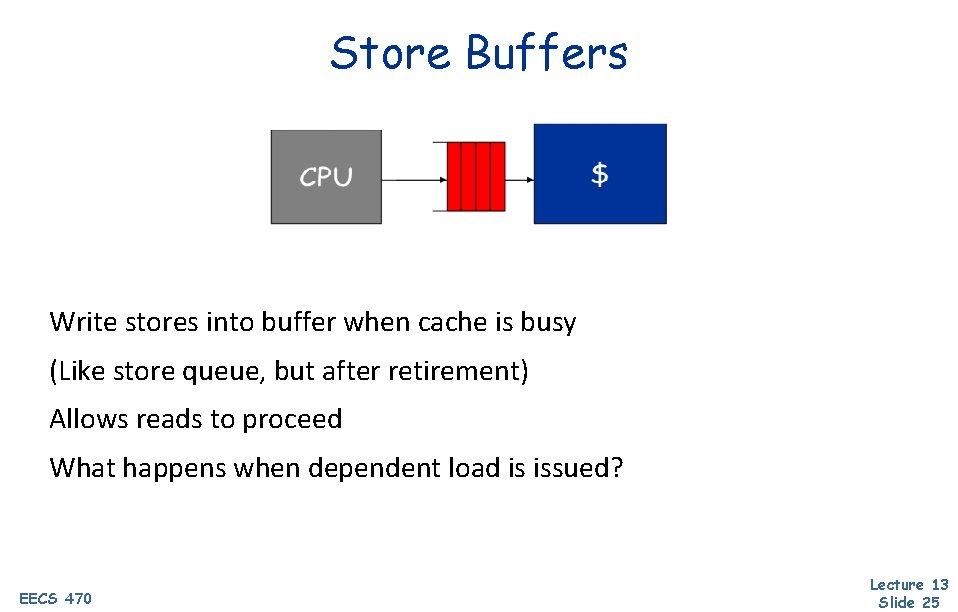

Store Buffers

- Write stores into a buffer when the cache is busy (like the store queue, but after retirement)
- Allows reads to proceed
- What happens when a dependent load is issued?
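One common answer to the dependent-load question is for the load to search the store buffer and forward the youngest matching store's value. An alternative is to stall the load until the buffer drains. This sketch models forwarding under simplifying assumptions: exact word-address matches only (no partial overlaps), and the cache modeled as a plain dict.

```python
from collections import deque


class StoreBuffer:
    """Post-retirement store buffer; the cache is modeled as a dict addr -> value."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.entries = deque()           # (addr, value), oldest first

    def store(self, addr, value):
        """Buffer a retired store; returns False (the pipeline must stall) if full."""
        if len(self.entries) == self.capacity:
            return False
        self.entries.append((addr, value))
        return True

    def load(self, addr, cache):
        """Check the buffer first: the youngest matching store forwards its value."""
        for a, v in reversed(self.entries):
            if a == addr:
                return v
        return cache.get(addr, 0)

    def drain_one(self, cache):
        """When the cache is free, write the oldest buffered store into it."""
        addr, value = self.entries.popleft()
        cache[addr] = value


cache = {0x20: 7}
sb = StoreBuffer(capacity=2)
sb.store(0x10, 1)
sb.store(0x10, 2)
print(sb.load(0x10, cache), sb.load(0x20, cache))
```

Draining strictly oldest-first keeps the cache's final value consistent with program order, even when several buffered stores hit the same address.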

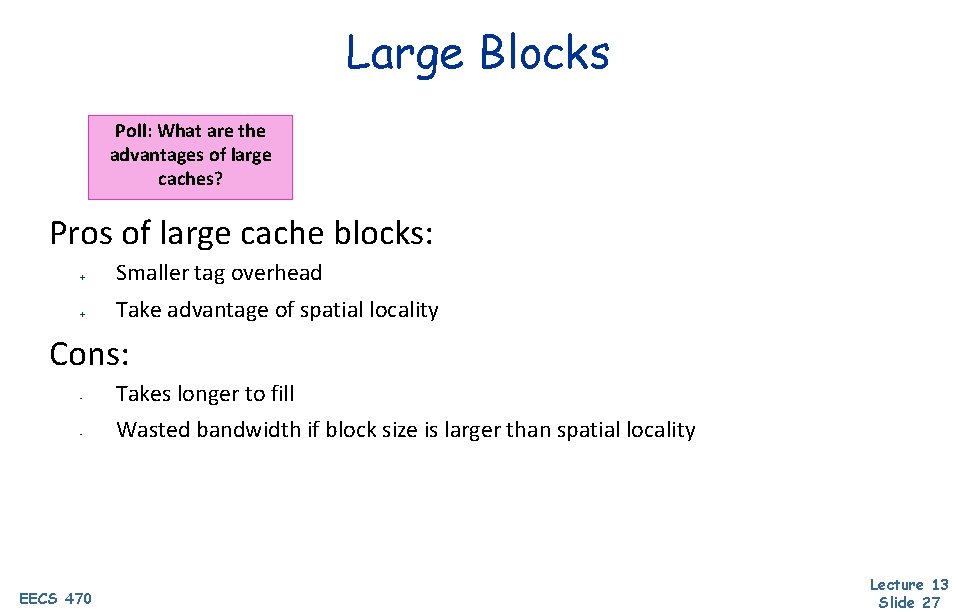

Writeback Buffers

Between the write-back cache and the next level:

1. Move replaced, dirty blocks to the buffer
2. Read the new line
3. Move the replaced data to memory

Usually only 1 or 2 write-back buffer entries are needed.

Large Blocks

Poll: What are the advantages of large cache blocks?

- Pros of large cache blocks:
  - Smaller tag overhead
  - Take advantage of spatial locality
- Cons:
  - Take longer to fill
  - Wasted bandwidth if the block size is larger than the spatial locality

Large Blocks and Subblocking

We can get the best of both worlds:

- Large cache blocks can take a long time to refill:
  - Fill the cache line critical word first
  - Restart the cache access before the refill completes
- Large cache blocks can waste bus bandwidth if the block size is larger than the spatial locality:
  - Divide a block into subblocks
  - Associate a separate valid bit with each subblock
  - Only load a subblock on access, while still getting the reduced tag overhead
- (Figure: one tag shared by several subblocks, each with its own valid bit: tag | v | subblock | v | subblock)
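Both remedies fit in a few lines:

- a single line with one shared tag and per-subblock valid bits;
- the arrival order of words under critical-word-first refill.

This is a sketch. The wrap-around fill order is an assumption (a common choice), and the names are hypothetical.

```python
class SubblockedLine:
    """One cache line: a single tag shared by several subblocks, each with its own valid bit."""

    def __init__(self, num_subblocks):
        self.tag = None
        self.valid = [False] * num_subblocks

    def access(self, tag, sub):
        if self.tag != tag:                     # tag mismatch: reallocate the whole line...
            self.tag = tag
            self.valid = [False] * len(self.valid)
            self.valid[sub] = True              # ...but fetch only the requested subblock
            return "line miss"
        if not self.valid[sub]:                 # tag matches, subblock not fetched yet
            self.valid[sub] = True
            return "subblock miss"
        return "hit"


def critical_word_first(words_per_block, critical):
    """Order in which a block's words arrive when the requested word is sent first, wrapping."""
    return [(critical + k) % words_per_block for k in range(words_per_block)]


line = SubblockedLine(4)
print([line.access(7, 0), line.access(7, 0), line.access(7, 2), line.access(9, 2)])
print(critical_word_first(8, 5))
```

The processor can restart as soon as word 5 arrives, instead of waiting for all 8 words.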

Multi-Level Caches

- Processors are getting faster w.r.t. main memory
  - Larger caches reduce the frequency of more costly misses
  - But larger caches are too slow for the processor
  - ⇒ Gradually reduce the miss cost with multiple levels
- $t_{\text{avg}} = t_{\text{hit}} + \text{miss ratio} \times t_{\text{miss}}$

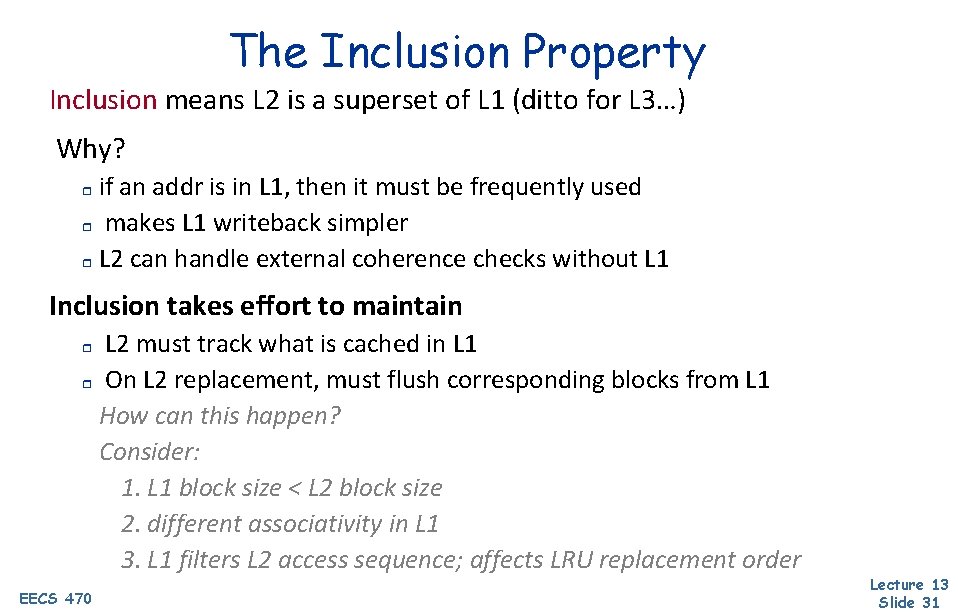

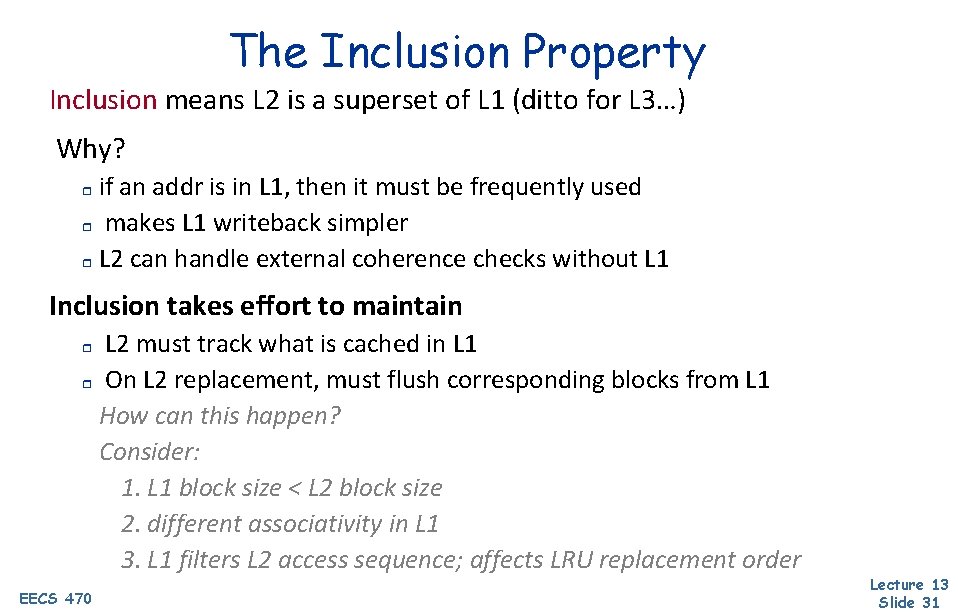

Multi-Level Cache Design

(Figure: Proc → L1 I / L1 D → L2 → …) Different technology and different requirements ⇒ different choices of capacity, block size, and associativity.

$$t_{\text{avg-L1}} = t_{\text{hit-L1}} + \text{miss-ratio}_{\text{L1}} \times t_{\text{avg-L2}}$$

$$t_{\text{avg-L2}} = t_{\text{hit-L2}} + \text{miss-ratio}_{\text{L2}} \times t_{\text{memory}}$$

What is the miss ratio?

- Global: L2 misses / L1 accesses
- Local: L2 misses / L1 misses
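The two equations nest: the average time of level i uses the average time of level i+1 as its miss penalty. In that recursion, each level's miss ratio is its local one. The sketch below evaluates the recursion from the outermost level inward and converts local miss ratios to global ones. All numbers are hypothetical:

- L1: 1 cycle, 5% local miss ratio;
- L2: 10 cycles, 20% local miss ratio;
- memory: 100 cycles.

```python
import math


def amat(levels, t_memory):
    """levels: [(t_hit, local_miss_ratio), ...] ordered from L1 outward.

    Applies t_avg(Li) = t_hit(Li) + miss_ratio(Li) * t_avg(Li+1),
    with the level beyond the last cache taking t_memory.
    """
    t = t_memory
    for t_hit, local_miss in reversed(levels):
        t = t_hit + local_miss * t
    return t


def global_miss_ratios(levels):
    """Global miss ratio of each level = misses at that level / L1 accesses."""
    ratios, running = [], 1.0
    for _, local_miss in levels:
        running *= local_miss
        ratios.append(running)
    return ratios


hierarchy = [(1, 0.05), (10, 0.2)]
print(amat(hierarchy, 100), amat(hierarchy[1:], 100))
print(global_miss_ratios(hierarchy))
```

Here the L2 looks poor by its local miss ratio (20%), but only 1% of all L1 accesses go to memory. That gap is why the global/local distinction matters.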

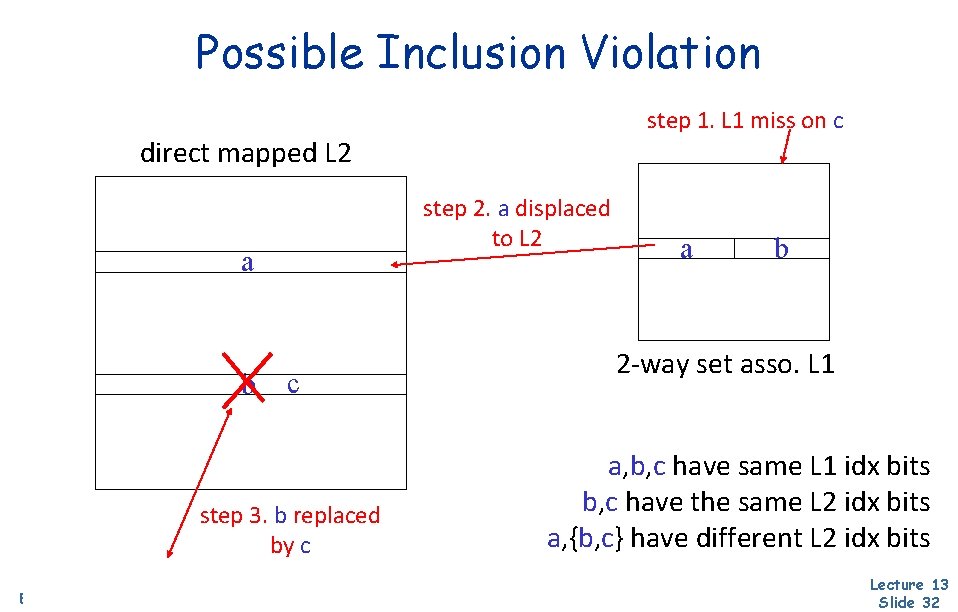

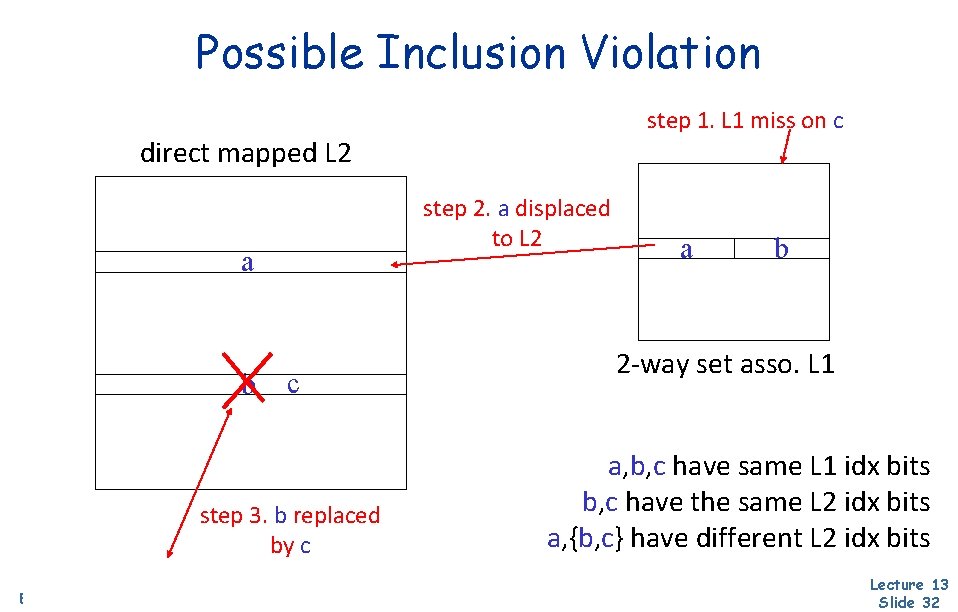

The Inclusion Property

- Inclusion means L2 is a superset of L1 (ditto for L3…)
- Why?
  - If an address is in L1, it must be frequently used
  - It makes L1 writeback simpler
  - L2 can handle external coherence checks without L1
- Inclusion takes effort to maintain:
  - L2 must track what is cached in L1
  - On L2 replacement, the corresponding blocks must be flushed from L1
- How can inclusion be violated? Consider:
  1. L1 block size < L2 block size
  2. Different associativity in L1
  3. L1 filters the L2 access sequence, which affects the LRU replacement order

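Possible Inclusion Violation

Setup: a 2-way set-associative L1 and a direct-mapped L2. a, b, and c have the same L1 index bits; b and c have the same L2 index bits; a and {b, c} have different L2 index bits. L1 holds a and b, and L2 holds a and b.

- Step 1: L1 miss on c
- Step 2: a is displaced from L1 (it remains in L2)
- Step 3: in L2, b is replaced by c

Result: b is still in L1 but no longer in L2, so inclusion is violated.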
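The violation above can be replayed in a few lines. The sketch uses the slide's block names and index relationships and reaches the starting state by accessing a, then b. It assumes L2 is updated only on L1 misses and ignores block-size differences. The hypothetical `back_invalidate` flag models the remedy from the previous slide: on L2 replacement, flush the corresponding block from L1.

```python
def run(trace, l1_index, l2_index, l1_ways=2, back_invalidate=False):
    """Return the blocks present in L1 but missing from L2 after the trace."""
    l1 = {}   # L1 set -> list of blocks, LRU first
    l2 = {}   # L2 set -> block (direct-mapped)
    for blk in trace:
        ways = l1.setdefault(l1_index[blk], [])
        if blk in ways:                    # L1 hit: L2 never sees this access
            ways.remove(blk)
            ways.append(blk)
            continue
        if len(ways) == l1_ways:
            ways.pop(0)                    # evict the L1 LRU block
        ways.append(blk)
        slot = l2_index[blk]
        old = l2.get(slot)
        if back_invalidate and old is not None and old != blk:
            for s in l1.values():          # flush the L2 victim from L1
                if old in s:
                    s.remove(old)
        l2[slot] = blk                     # the L1 miss is filled through the direct-mapped L2
    in_l1 = {b for ws in l1.values() for b in ws}
    return in_l1 - set(l2.values())


L1_IDX = {"a": 0, "b": 0, "c": 0}          # same L1 index bits
L2_IDX = {"a": 0, "b": 1, "c": 1}          # b, c share an L2 set; a does not
print(run(["a", "b", "c"], L1_IDX, L2_IDX))
print(run(["a", "b", "c"], L1_IDX, L2_IDX, back_invalidate=True))
```

Without back-invalidation, b is left in L1 only. With it, L1 loses b as well, which restores inclusion at the cost of an extra L1 miss later.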

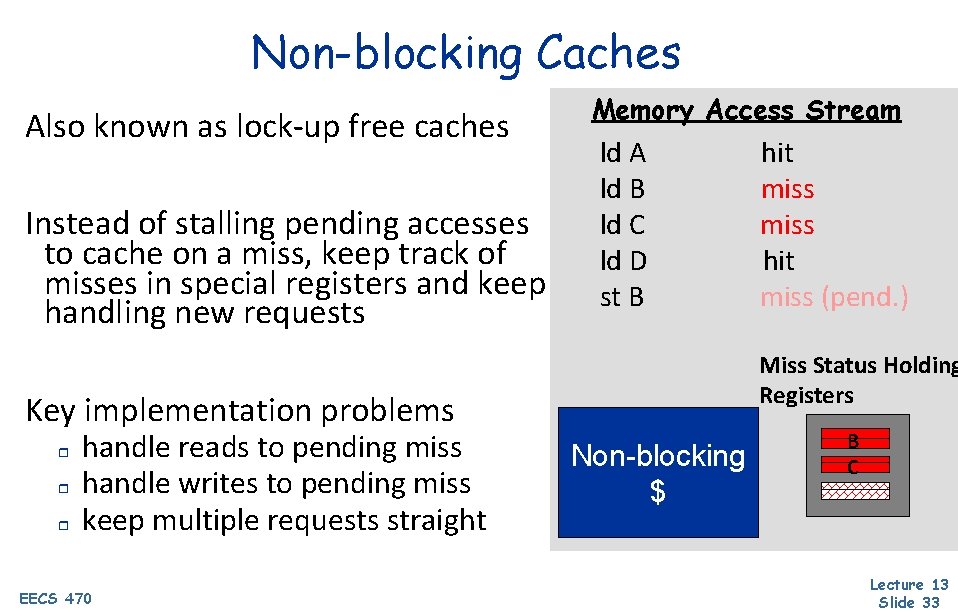

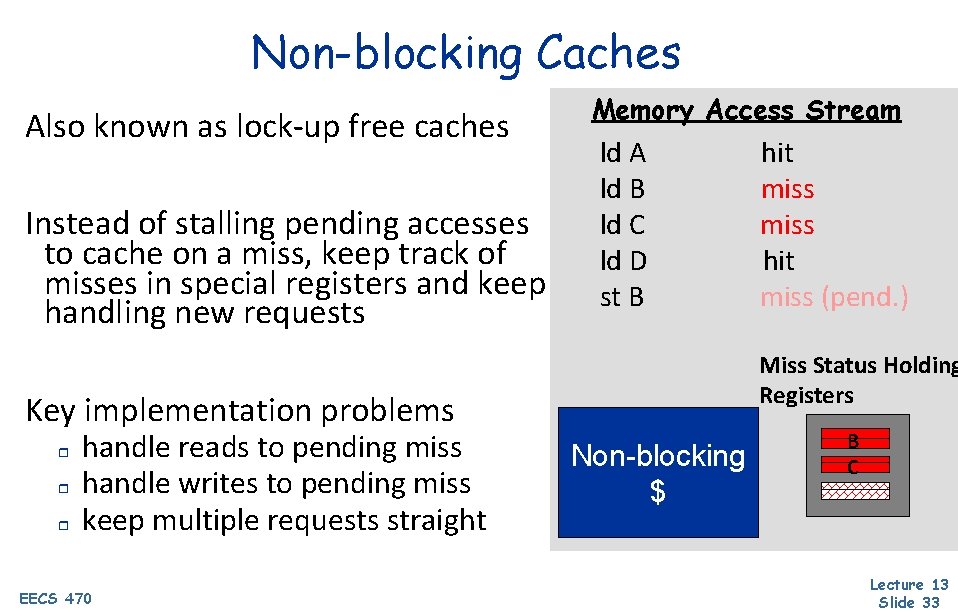

Non-blocking Caches

- Also known as lockup-free caches
- Instead of stalling pending accesses to the cache on a miss, keep track of misses in special registers (Miss Status Holding Registers, MSHRs) and keep handling new requests
- (Figure: the memory access stream ld A, ld B, ld C, ld D, st B feeds a non-blocking $; accesses are marked hit or miss (pending), and the MSHRs hold the outstanding misses B and C)
- Key implementation problems:
  - Handle reads to a pending miss
  - Handle writes to a pending miss
  - Keep multiple requests straight
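A minimal sketch of an MSHR file: one entry per outstanding missed block. Later reads or writes to that block are recorded as secondary misses instead of issuing a new memory request. It replays the slide's access stream and assumes, from the figure's hit/miss labels, that A and D are already cached. The entry layout (a list of waiting operations per block) is a simplification.

```python
class MSHRFile:
    """Miss Status Holding Registers: one entry per outstanding missed block."""

    def __init__(self, num_entries):
        self.num_entries = num_entries
        self.pending = {}                 # block -> waiting ops ("ld" / "st"), in order

    def access(self, op, block, cached):
        if block in cached:
            return "hit"                  # hits proceed under outstanding misses
        if block in self.pending:         # read or write to a block already being fetched
            self.pending[block].append(op)
            return "secondary miss"
        if len(self.pending) == self.num_entries:
            return "stall"                # no free MSHR: only now must the cache block
        self.pending[block] = [op]
        return "primary miss"

    def fill(self, block, cached):
        """Memory returns the block: install it and release the waiting ops in order."""
        cached.add(block)
        return self.pending.pop(block)


cached = {"A", "D"}
mshrs = MSHRFile(num_entries=2)
stream = [("ld", "A"), ("ld", "B"), ("ld", "C"), ("ld", "D"), ("st", "B")]
print([mshrs.access(op, blk, cached) for op, blk in stream])
print(mshrs.fill("B", cached))
```

Releasing the waiting operations in arrival order is one simple way to "keep multiple requests straight". Here the `st B` is applied after the `ld B` it followed.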