EECS 470 Lecture 13 Basic Caches Winter 2021

Jon Beaumont
http://www.eecs.umich.edu/courses/eecs470
Slides developed in part by Profs. Austin, Brehob, Falsafi, Hill, Hoe, Lipasti, Shen, Smith, Sohi, Tyson, and Vijaykumar of Carnegie Mellon University, Purdue University, University of Michigan, and University of Wisconsin.

Announcements
• Milestone 1 meetings finishing today

Midterm Feedback
Thank you to everyone who submitted feedback! Some common requests:
• More professor office hours during the evening (particularly for folks in other time zones)
  • I'll look into alternating times
  • In the meantime, I'm always happy to set up appointments if there's something you specifically need to talk with me about
• Website improvements
  • Upgrade to something similar to EECS 370
  • If anyone has web or scripting experience, there may be funding available over the summer to upgrade the autograder and website for 470

Lingering questions
"Can you go over trace caches again?"
• Don't think I'll have time today, but I'll make a note to come back to it if there's time later
• In the meantime, Wikipedia has a pretty accessible article you might find helpful: https://en.wikipedia.org/wiki/Trace_cache
Remember to post any lingering questions / give feedback here: https://bit.ly/3oSr5FD

Readings
For today:
• H&P 2.1
For Wednesday:
• N. Jouppi, "Improving direct-mapped cache performance…"

Memory Systems: Basic Caches

Memory Systems
• Basic caches (start today)
  • introduction
  • fundamental questions
  • cache size, block size, associativity
• Advanced caches
• Main memory
• Virtual memory

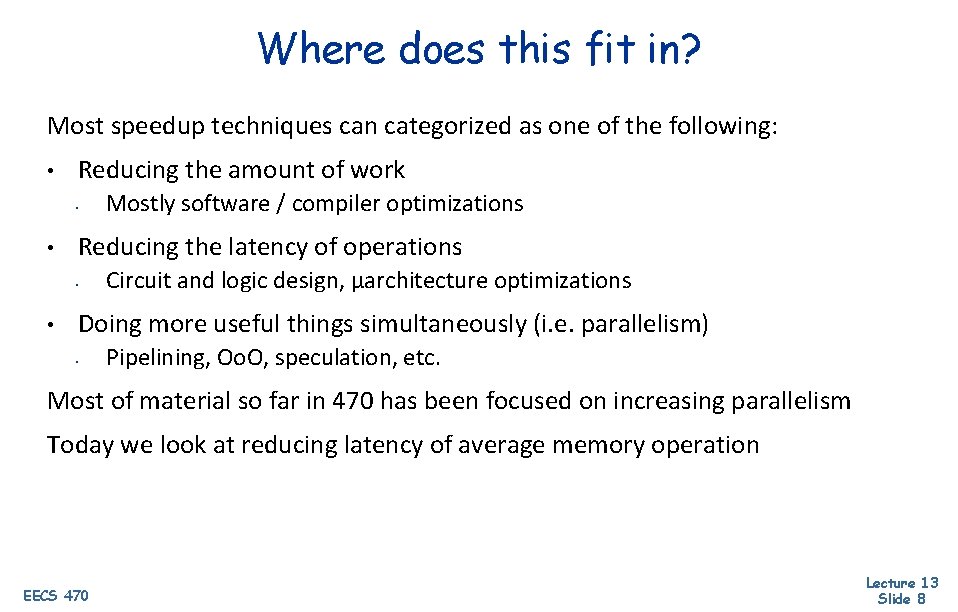

Where does this fit in?
Most speedup techniques can be categorized as one of the following:
• Reducing the amount of work
  • Mostly software / compiler optimizations
• Reducing the latency of operations
  • Circuit and logic design, μarchitecture optimizations
• Doing more useful things simultaneously (i.e. parallelism)
  • Pipelining, OoO, speculation, etc.
Most of the material so far in 470 has focused on increasing parallelism.
Today we look at reducing the latency of the average memory operation.

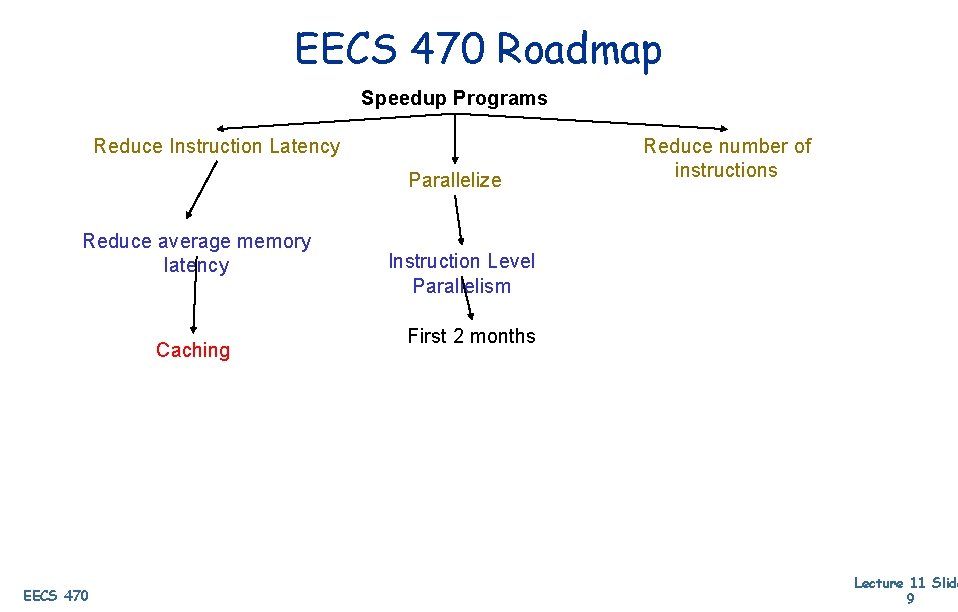

EECS 470 Roadmap
Speed up programs:
• Reduce instruction latency
  • Reduce average memory latency
    • Caching
• Parallelize
  • Instruction-level parallelism (first 2 months)
• Reduce number of instructions

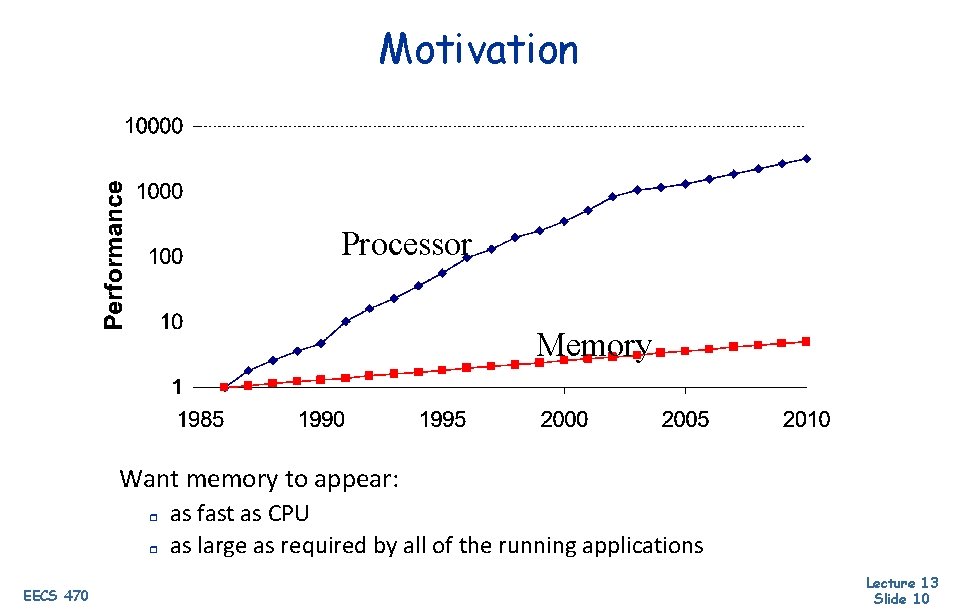

Motivation
[Diagram: Processor ↔ Memory]
Want memory to appear:
• as fast as the CPU
• as large as required by all of the running applications

Motivation
This would be a problem, except… why?
Memory accesses are NOT random. Specifically, most programs exhibit:
• spatial locality (e.g. indexing through an array)
• temporal locality (e.g. reading a variable shortly after referencing it)
Idea: include smaller (and faster) memory structures to hold the data most likely to be accessed
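As a rough illustration of spatial locality, the sketch below measures how often an access lands in the same block as the access just before it. The function name `same_block_fraction`, the 16-byte block size, and the two address traces are assumptions made up for this example, not part of the lecture.

```python
def same_block_fraction(addresses, block_size=16):
    """Fraction of accesses that fall in the same block as the previous access."""
    blocks = [addr // block_size for addr in addresses]
    repeats = sum(1 for prev, cur in zip(blocks, blocks[1:]) if prev == cur)
    return repeats / (len(blocks) - 1)


# Indexing through an array of 4-byte elements, one element after another.
sequential = [4 * i for i in range(64)]
# Touching only every 16th element of the same array (64-byte stride).
strided = [64 * i for i in range(64)]

print("sequential:", same_block_fraction(sequential))
print("strided:   ", same_block_fraction(strided))
```

The sequential trace reuses each block several times before moving on, while the strided trace never touches the same block twice in a row, so a cache that fetches whole blocks helps the first pattern far more than the second.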

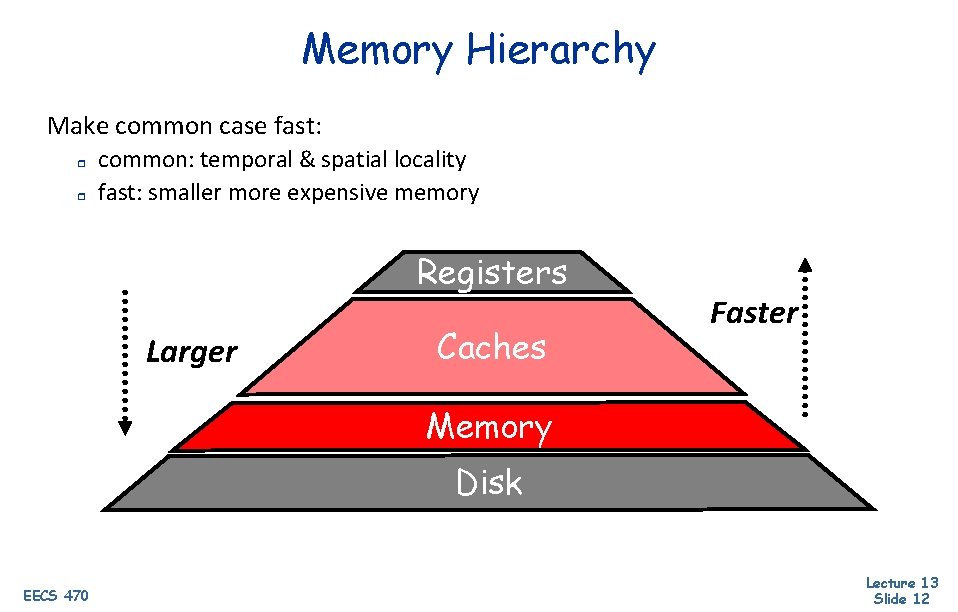

Memory Hierarchy
Make common case fast:
• common: temporal & spatial locality
• fast: smaller, more expensive memory
[Diagram: Registers → Caches → Memory → Disk; levels get larger going down and faster going up.]

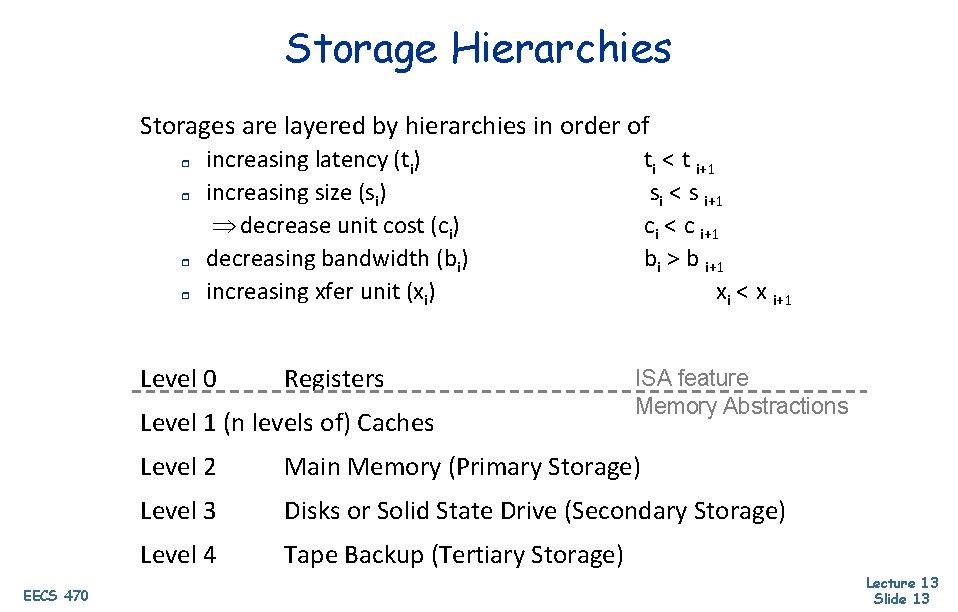

Storage Hierarchies
Storage is layered into a hierarchy in order of
• increasing latency ($t_i < t_{i+1}$)
• increasing size ($s_i < s_{i+1}$) ⇒ decreasing unit cost ($c_i > c_{i+1}$)
• decreasing bandwidth ($b_i > b_{i+1}$)
• increasing transfer unit ($x_i < x_{i+1}$)
Levels:
• Level 0: Registers
• Level 1: (n levels of) Caches
• Level 2: Main Memory (Primary Storage)
• Level 3: Disks or Solid State Drive (Secondary Storage)
• Level 4: Tape Backup (Tertiary Storage)
[Diagram labels: "ISA feature" (registers), "Memory Abstractions"]

Locality Example - Poll Question
Poll: How do the code samples rank w.r.t. temporal locality? Spatial?

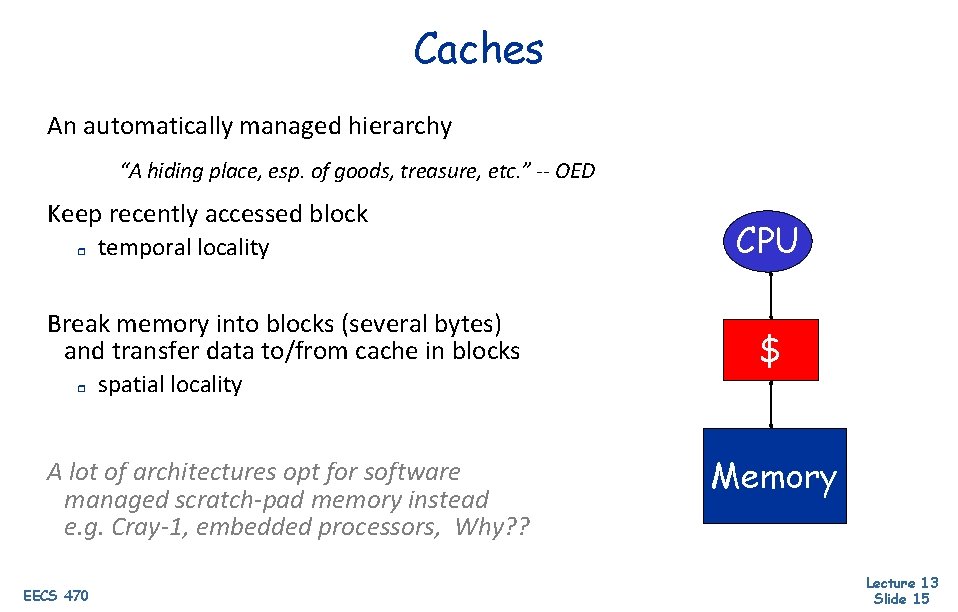

Caches
An automatically managed hierarchy
"A hiding place, esp. of goods, treasure, etc." -- OED
• Keep recently accessed blocks
  • temporal locality
• Break memory into blocks (several bytes) and transfer data to/from cache in blocks
  • spatial locality
• A lot of architectures opt for software-managed scratch-pad memory instead, e.g. Cray-1, embedded processors. Why?
[Diagram: CPU ↔ $ ↔ Memory]

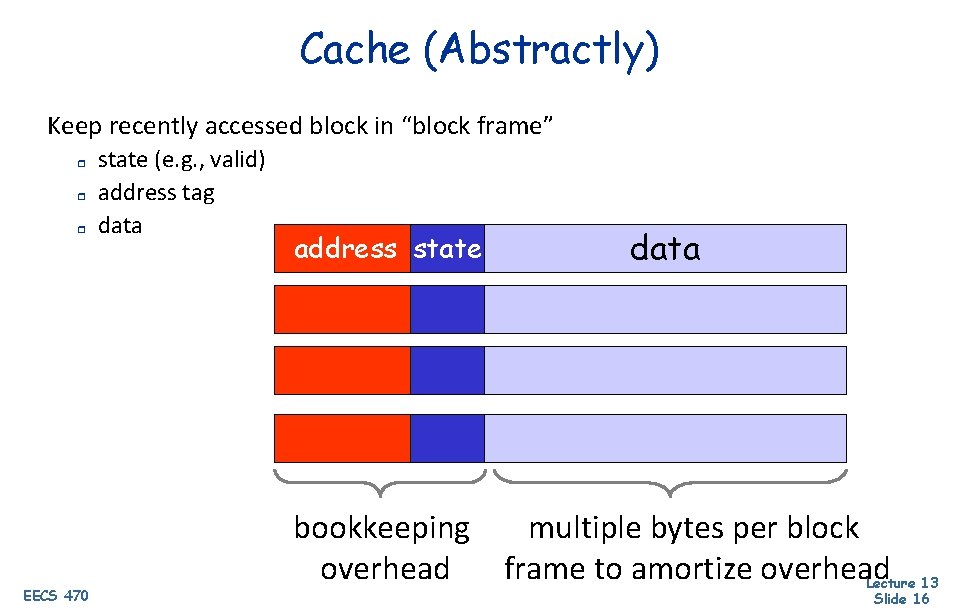

Cache (Abstractly)
Keep recently accessed block in a "block frame"
• state (e.g., valid)
• address tag
• data
[Diagram: a block frame holds address tag | state | data. The tag and state are bookkeeping overhead; each frame holds multiple bytes per block to amortize that overhead.]

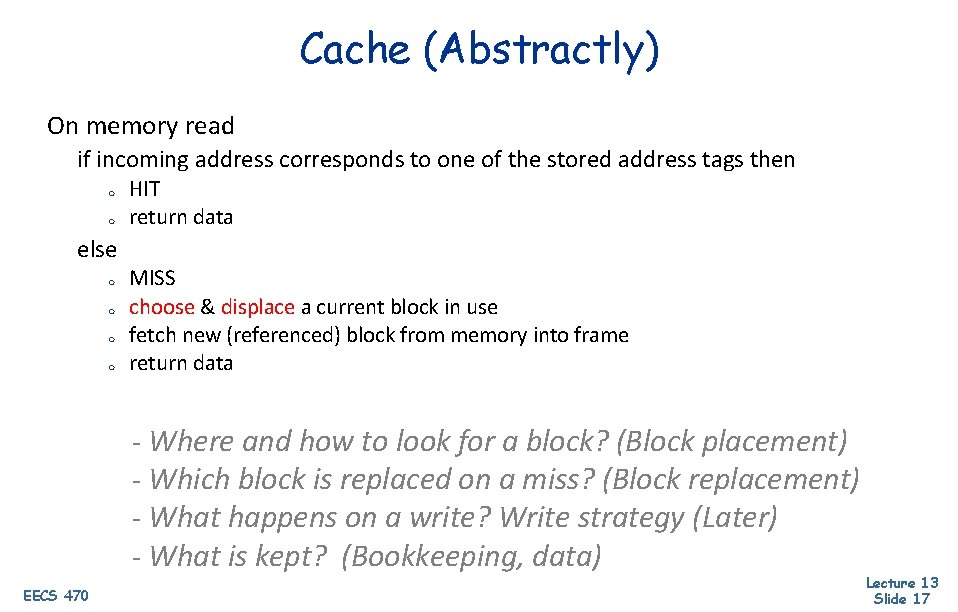

Cache (Abstractly)
On memory read:
if incoming address corresponds to one of the stored address tags then
  • HIT
  • return data
else
  • MISS
  • choose & displace a current block in use
  • fetch new (referenced) block from memory into frame
  • return data
- Where and how to look for a block? (Block placement)
- Which block is replaced on a miss? (Block replacement)
- What happens on a write? Write strategy (Later)
- What is kept? (Bookkeeping, data)
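The read path above can be sketched as a toy model. Everything here is an assumption for illustration: the class name `TinyCache`, the fully associative organization, and the first-in-first-out choice of victim (the slide leaves the replacement policy open).

```python
class TinyCache:
    """A fully associative toy cache holding at most `num_frames` blocks."""

    def __init__(self, num_frames, block_size, memory):
        self.num_frames = num_frames
        self.block_size = block_size
        self.memory = memory  # backing store: any indexable sequence of bytes
        self.frames = {}      # address tag -> block data (only valid frames are stored)
        self.hits = 0
        self.misses = 0

    def read(self, address):
        tag, offset = divmod(address, self.block_size)
        if tag in self.frames:
            self.hits += 1    # HIT: the tag matches a stored block
        else:
            self.misses += 1  # MISS: displace a block if full, then fetch
            if len(self.frames) == self.num_frames:
                victim = next(iter(self.frames))  # oldest inserted block (FIFO, an assumption)
                del self.frames[victim]
            start = tag * self.block_size
            self.frames[tag] = self.memory[start:start + self.block_size]
        return self.frames[tag][offset]


memory = bytes(range(64))
cache = TinyCache(num_frames=2, block_size=4, memory=memory)
values = [cache.read(a) for a in (5, 6, 9, 17, 5)]
print(values, "hits:", cache.hits, "misses:", cache.misses)
```

Reading address 6 right after address 5 hits because both bytes live in the same 4-byte block; the second read of address 5 misses because its block was displaced in between.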

Terminology
• block (cache line): minimum unit that may be present
• hit: block is found in the cache
• miss: block is not found in the cache
• miss ratio: fraction of references that miss
• hit time: time to access the cache
• miss penalty
  • time to replace block in the cache + deliver to upper level
  • access time: time to get first word
  • transfer time: time for remaining words

Cache Performance Poll Question
Given a cache with a particular:
• Hit time (HT or AT)
• Miss ratio (MR)
• Miss penalty (MP)
Poll: What is the mean access time for the cache?

Cache Performance
Assume
• Cache access time is equal to 1 cycle
• Cache miss ratio is 0.01
• Cache miss penalty is 20 cycles
Mean access time = cache access time + miss ratio × miss penalty = 1 + 0.01 × 20 = 1.2 cycles
Typically
• level-1 is 16K-512K, level-2 is 512K-16M, memory is 128M-16G
• level-1 is as fast as the processor (increasingly 2 cycles)
• level-1 is 1/10000 the capacity but contains 98% of references
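The mean access time formula is easy to check numerically. The helper name `mean_access_time` is made up for this sketch; the inputs are the values from the slide, plus one extra miss ratio chosen only to show the sensitivity.

```python
def mean_access_time(hit_time, miss_ratio, miss_penalty):
    """Mean access time in cycles: every access pays the hit time, misses also pay the penalty."""
    return hit_time + miss_ratio * miss_penalty


slide_example = mean_access_time(hit_time=1, miss_ratio=0.01, miss_penalty=20)
higher_miss_ratio = mean_access_time(hit_time=1, miss_ratio=0.05, miss_penalty=20)
print(f"miss ratio 0.01: {slide_example:.2f} cycles")
print(f"miss ratio 0.05: {higher_miss_ratio:.2f} cycles")
```

With these numbers, raising the miss ratio from 1% to 5% moves the mean access time from 1.2 to 2.0 cycles, which is why the parameters that affect miss rate get so much attention.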

Fundamental cache parameters that affect miss rate
• Cache size (C)
• Block size (b)
• Cache associativity (a)
Jamboard

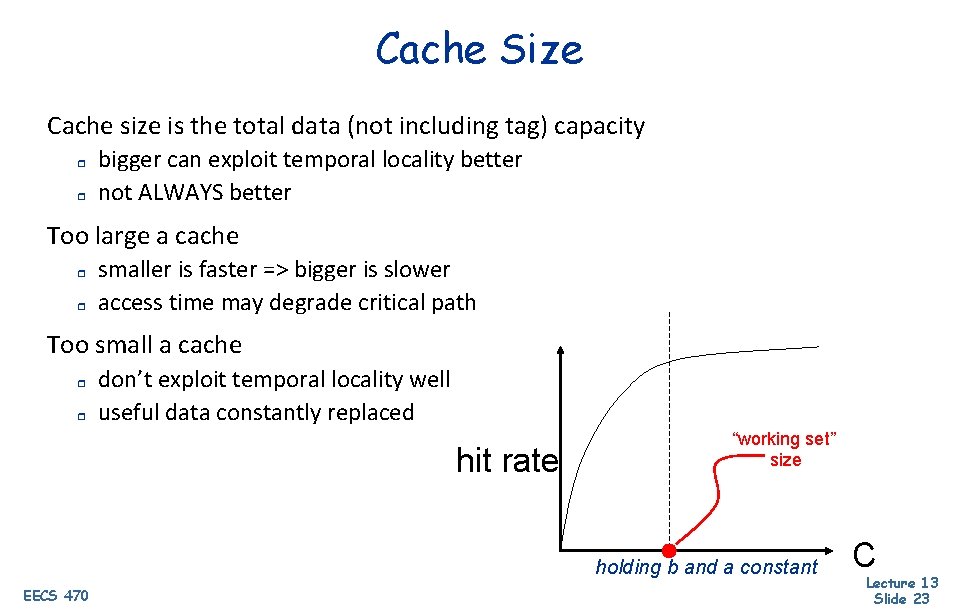

Cache Size
Cache size is the total data (not including tag) capacity
• bigger can exploit temporal locality better
• not ALWAYS better
Too large a cache
• smaller is faster => bigger is slower
• access time may degrade critical path
Too small a cache
• don't exploit temporal locality well
• useful data constantly replaced
[Figure: hit rate vs. cache size C, holding b and a constant; the "working set" size is marked on the C axis.]

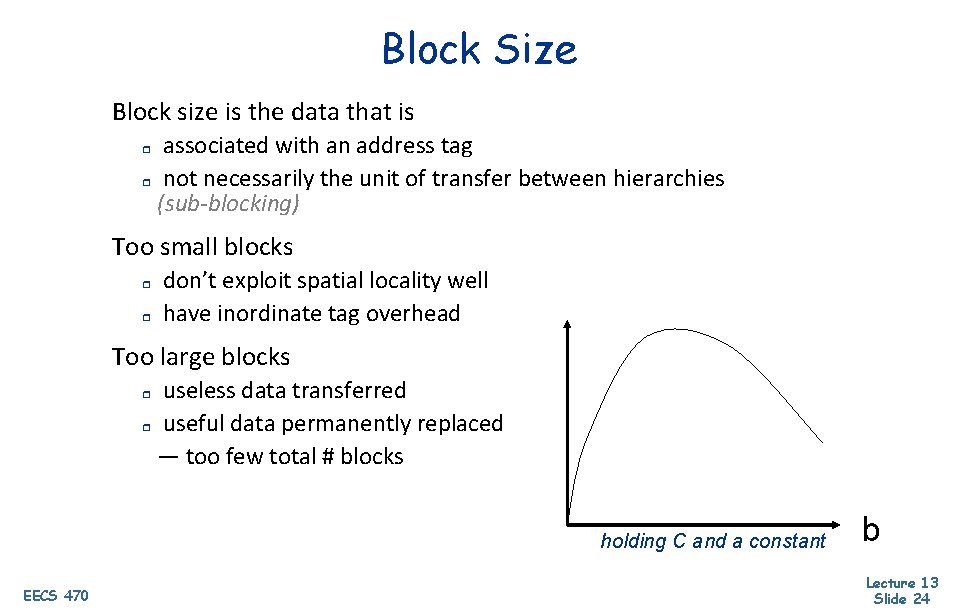

Block Size
Block size is the data that is associated with an address tag
• not necessarily the unit of transfer between hierarchies (sub-blocking)
Too small blocks
• don't exploit spatial locality well
• have inordinate tag overhead
Too large blocks
• useless data transferred
• useful data prematurely replaced: too few total # blocks
[Figure: hit rate vs. block size b, holding C and a constant.]

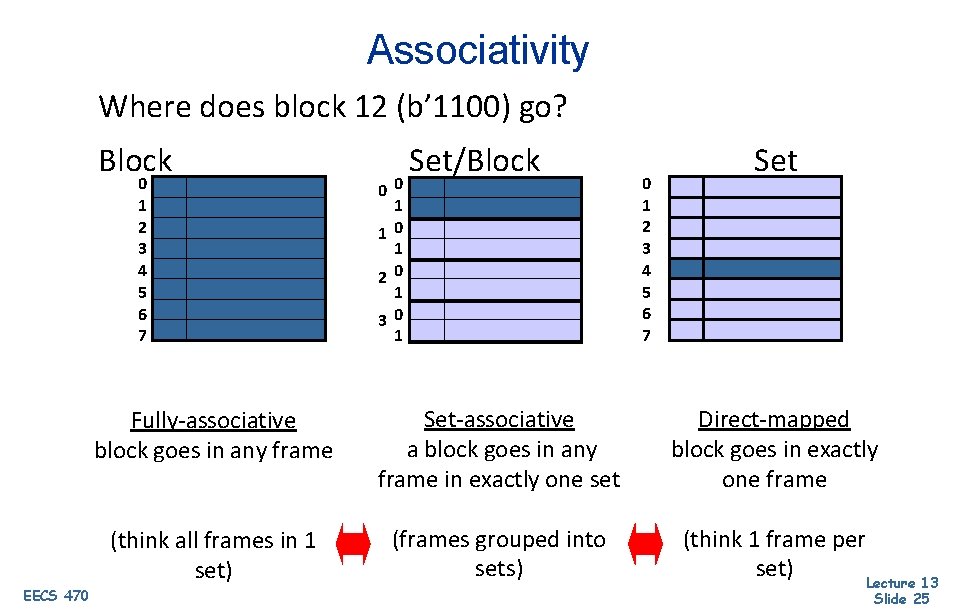

Associativity
Where does block 12 (b'1100) go?
• Fully-associative: block goes in any frame (think all frames in 1 set)
• Set-associative: a block goes in any frame in exactly one set (frames grouped into sets)
• Direct-mapped: block goes in exactly one frame (think 1 frame per set)
[Diagram: the same 8 frames (0-7) drawn three ways: one set holding all frames (fully-associative), 4 sets of 2 blocks each (set-associative), and 8 sets of 1 frame each (direct-mapped).]
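One way to work the block 12 question is to compute the candidate frames for each organization. This sketch assumes the usual modulo mapping (set index = block number mod number of sets) and that the frames of a set are numbered contiguously; the function name `candidate_frames` is hypothetical.

```python
def candidate_frames(block, num_frames, ways):
    """Frames that may hold `block` in a cache with `num_frames` frames and `ways` frames per set."""
    num_sets = num_frames // ways
    set_index = block % num_sets  # modulo placement (assumed mapping)
    first = set_index * ways      # frames of one set are assumed contiguous
    return list(range(first, first + ways))


direct_mapped = candidate_frames(12, num_frames=8, ways=1)
two_way = candidate_frames(12, num_frames=8, ways=2)
fully_associative = candidate_frames(12, num_frames=8, ways=8)
print("direct-mapped:    ", direct_mapped)
print("2-way set-assoc.: ", two_way)
print("fully-associative:", fully_associative)
```

Under these assumptions block 12 has exactly one home in the direct-mapped cache (frame 12 mod 8 = 4), two candidate frames in set 12 mod 4 = 0 of the 2-way cache, and all eight frames in the fully-associative cache.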

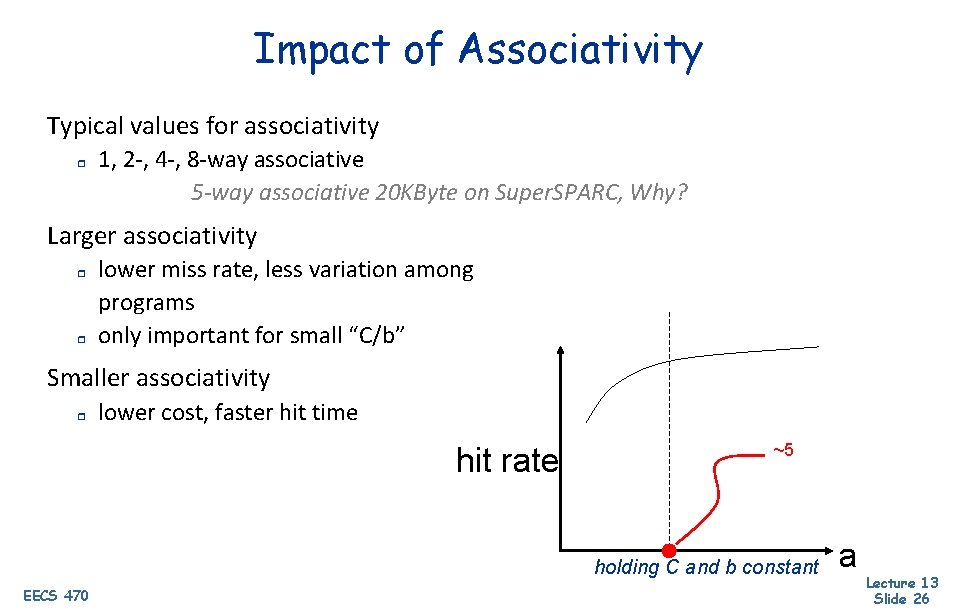

Impact of Associativity
Typical values for associativity
• 1-, 2-, 4-, 8-way associative
• 5-way associative 20 KByte on SuperSPARC. Why?
Larger associativity
• lower miss rate, less variation among programs
• only important for small "C/b"
Smaller associativity
• lower cost, faster hit time
[Figure: hit rate vs. associativity a, holding C and b constant; "~5" is marked on the a axis.]

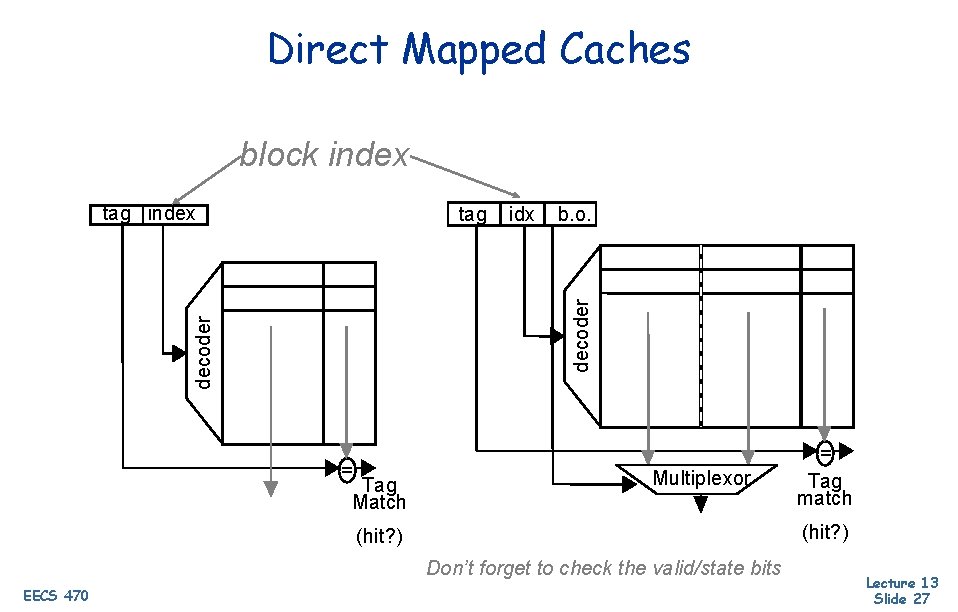

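Direct Mapped Caches
[Diagram: the address is split into tag, index (idx), and block offset (b.o.); a decoder driven by the index selects a frame; the stored tag is compared (=) with the address tag to produce the tag match (hit?); a multiplexor selects the data.]
Don't forget to check the valid/state bits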

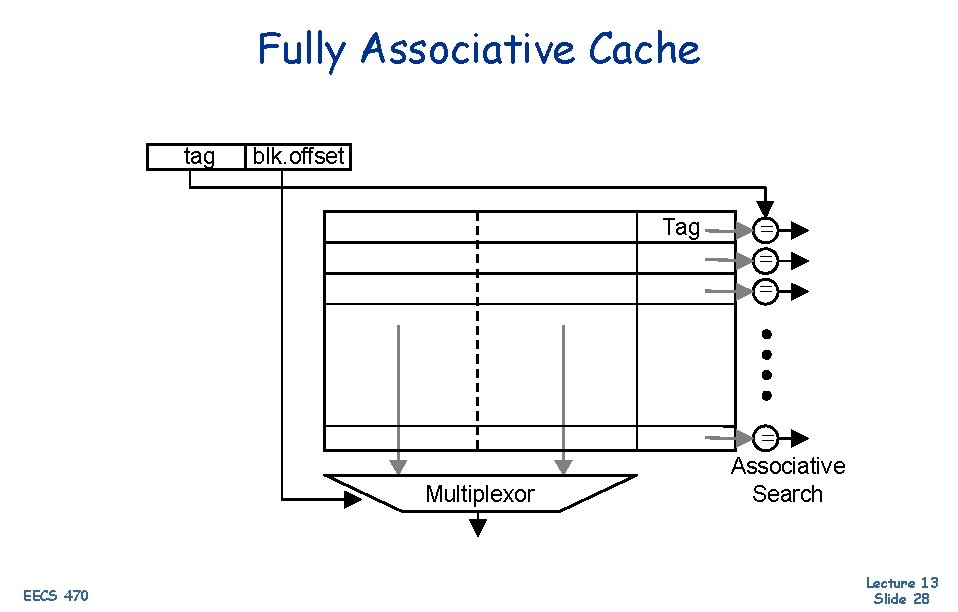

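Fully Associative Cache
[Diagram: the address is split into tag and block offset (blk. offset); the address tag is compared (=) against every stored tag (associative search); a multiplexor selects the data.]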

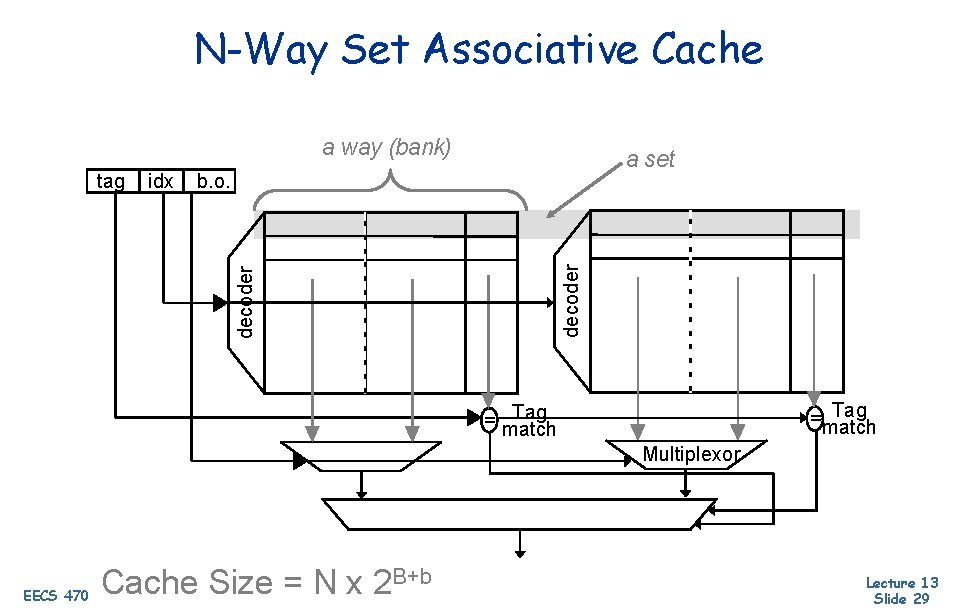

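N-Way Set Associative Cache
[Diagram: the address is split into tag, index (idx), and block offset (b.o.); decoders select a set across the ways (banks); each way compares (=) its stored tag with the address tag to produce a match; a multiplexor selects the data. Labels: "a way (bank)", "a set".]
Cache size = $N \times 2^{B+b}$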

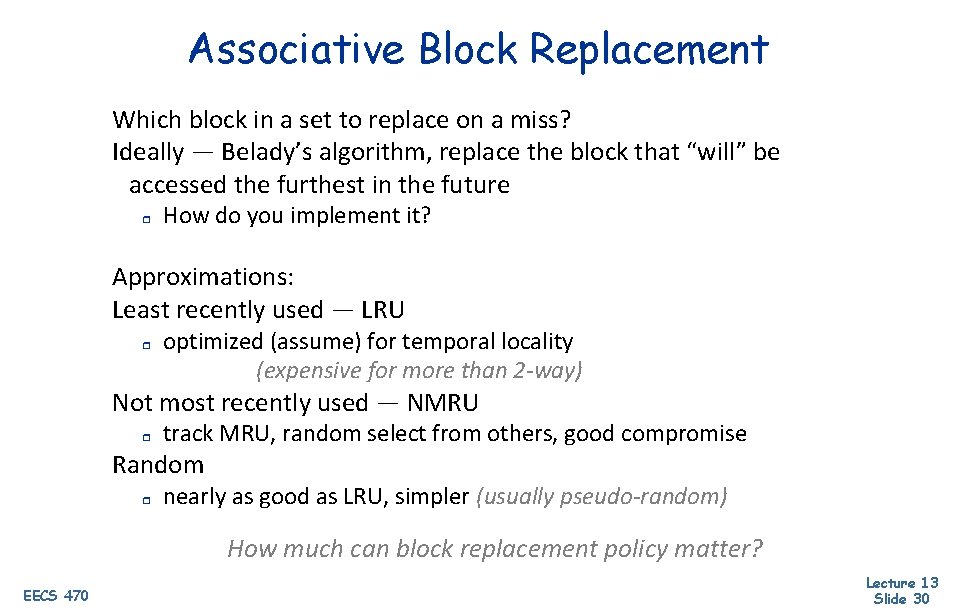

Associative Block Replacement
Which block in a set to replace on a miss?
Ideally: Belady's algorithm, replace the block that "will" be accessed the furthest in the future
• How do you implement it?
Approximations:
• Least recently used (LRU)
  • optimized (assume) for temporal locality (expensive for more than 2-way)
• Not most recently used (NMRU)
  • track MRU, random select from others, good compromise
• Random
  • nearly as good as LRU, simpler (usually pseudo-random)
How much can block replacement policy matter?
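A minimal software sketch of LRU replacement within a single set is shown below. The class name `LRUSet` and the use of Python's `collections.OrderedDict` to track recency are choices made for this illustration; hardware tracks recency with a few state bits per set rather than an ordered map.

```python
from collections import OrderedDict


class LRUSet:
    """One cache set with `ways` frames and least-recently-used replacement."""

    def __init__(self, ways):
        self.ways = ways
        self.blocks = OrderedDict()  # tag -> None, ordered from least to most recently used

    def access(self, tag):
        """Return True on a hit; on a miss, install `tag`, evicting the LRU block if the set is full."""
        if tag in self.blocks:
            self.blocks.move_to_end(tag)      # mark as most recently used
            return True
        if len(self.blocks) == self.ways:
            self.blocks.popitem(last=False)   # evict the least recently used block
        self.blocks[tag] = None
        return False


lru = LRUSet(ways=2)
results = [lru.access(tag) for tag in ["A", "B", "A", "C", "B"]]
print(results, list(lru.blocks))
```

In this trace the second access to A hits, C then evicts B (the least recently used block at that point), and the final access to B misses again and evicts A.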

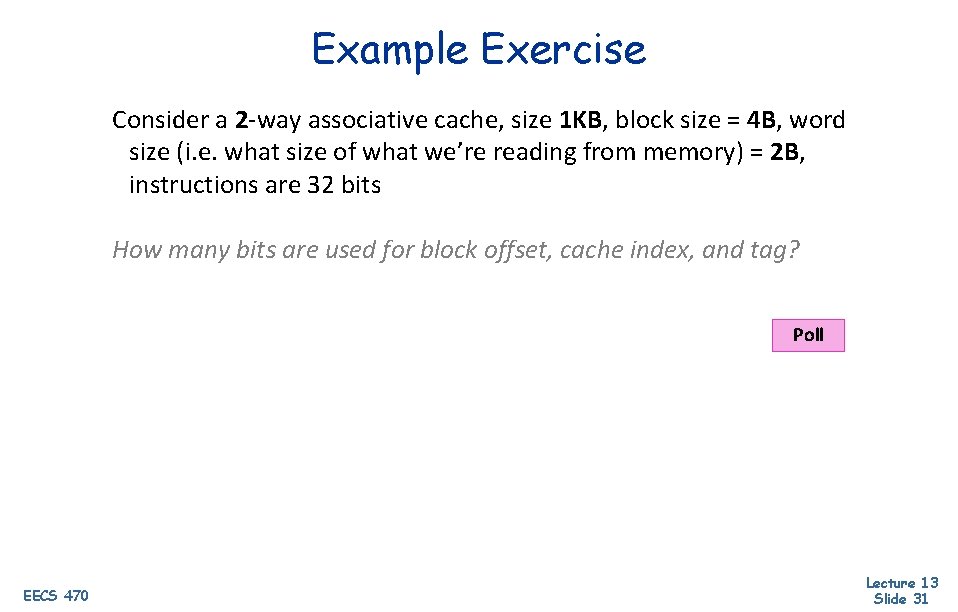

Example Exercise (Poll)
Consider a 2-way associative cache, size 1 KB, block size = 4 B, word size (i.e. the size of what we're reading from memory) = 2 B, instructions are 32 bits.
How many bits are used for block offset, cache index, and tag?

![Example: a=2, C=1 KB, b=4 B, word size=2 B, basic solution](http://slidetodoc.com/presentation_image_h2/4c9d774244a20372fdad79b8bba034cf/image-32.jpg)

Example: a=2, C=1 KB, b=4 B, word size=2 B
Basic Solution
• tag = PA[31:9] (23 bits)
• idx = PA[8:2] (7 bits)
• b.o. = PA[1], PA[0]
[Diagram: each of the two ways holds 128 lines × (23-bit tag + 1-bit valid + 4 bytes of data). The 7-bit idx selects one line in each way (tag0/v0/data0 and tag1/v1/data1); each stored tag is compared (=) with the address tag to produce hit0 and hit1, which combine into HIT; 2-to-1 muxes select the 16-bit DATA.]
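The field widths in this example can be recomputed from the cache parameters. This is a sketch assuming power-of-two sizes and a 32-bit physical address (the solution uses PA[31:0]); the function names and the sample address `0x12345678` are invented for illustration.

```python
def address_fields(cache_bytes, block_bytes, ways, address_bits=32):
    """Return (tag_bits, index_bits, offset_bits), assuming all sizes are powers of two."""
    num_sets = cache_bytes // (block_bytes * ways)
    offset_bits = block_bytes.bit_length() - 1  # log2 of a power of two
    index_bits = num_sets.bit_length() - 1
    tag_bits = address_bits - index_bits - offset_bits
    return tag_bits, index_bits, offset_bits


def split_address(address, index_bits, offset_bits):
    """Split an address into (tag, index, block offset)."""
    offset = address & ((1 << offset_bits) - 1)
    index = (address >> offset_bits) & ((1 << index_bits) - 1)
    tag = address >> (offset_bits + index_bits)
    return tag, index, offset


tag_bits, index_bits, offset_bits = address_fields(cache_bytes=1024, block_bytes=4, ways=2)
print("tag / index / offset bits:", tag_bits, index_bits, offset_bits)

tag, index, offset = split_address(0x12345678, index_bits, offset_bits)
print(f"tag={tag:#x} index={index} offset={offset}")
```

For this cache, 1 KB / (4 B × 2 ways) gives 128 sets, so 7 index bits and 2 block offset bits, leaving 23 tag bits, which matches PA[31:9], PA[8:2], and PA[1:0].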

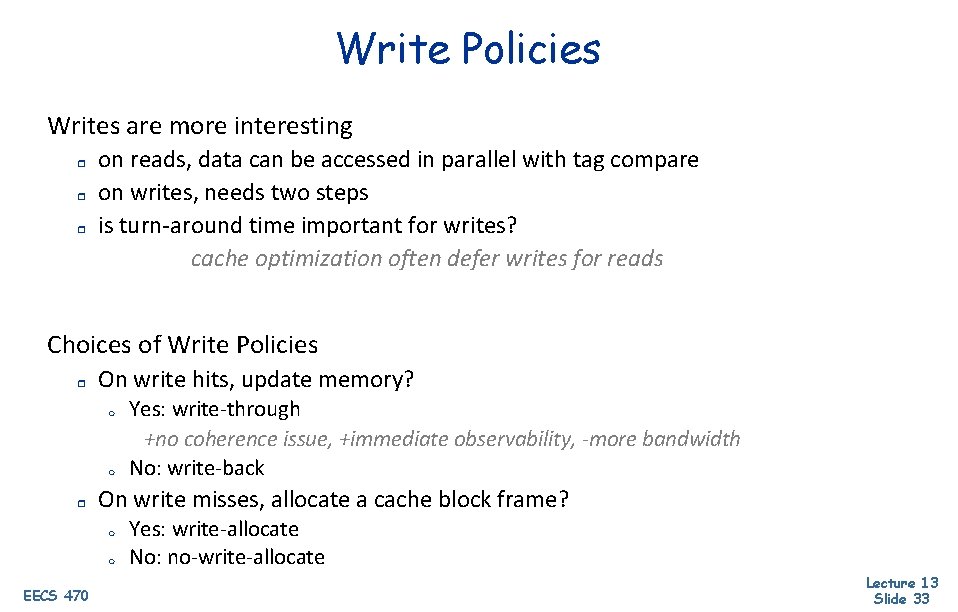

Write Policies
Writes are more interesting
• on reads, data can be accessed in parallel with tag compare
• on writes, needs two steps
• is turn-around time important for writes?
• cache optimizations often defer writes for reads
Choices of write policies
• On write hits, update memory?
  • Yes: write-through (+no coherence issue, +immediate observability, -more bandwidth)
  • No: write-back
• On write misses, allocate a cache block frame?
  • Yes: write-allocate
  • No: no-write-allocate

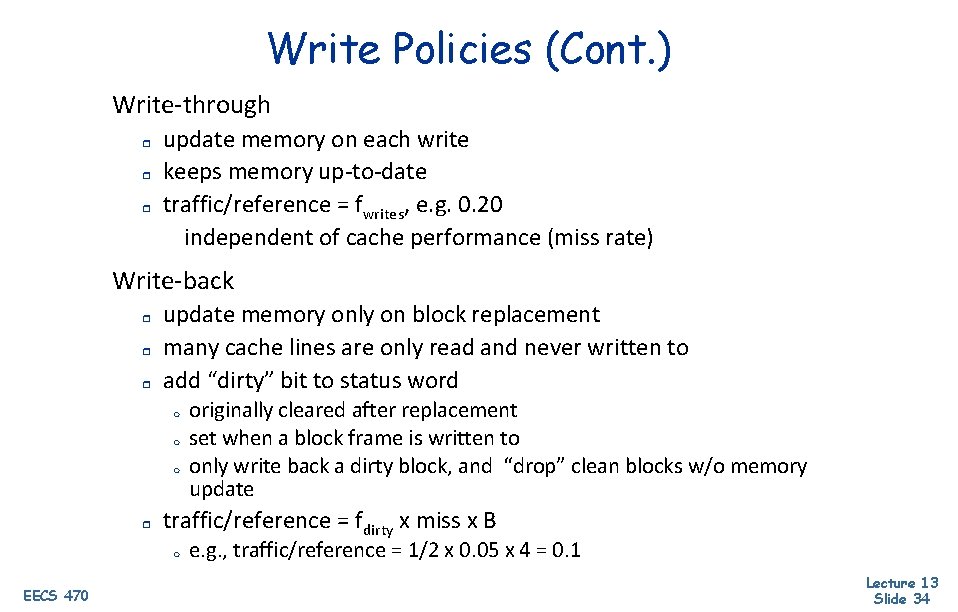

Write Policies (Cont.)
Write-through
• update memory on each write
• keeps memory up-to-date
• traffic/reference = f_writes, e.g. 0.20, independent of cache performance (miss rate)
Write-back
• update memory only on block replacement
• many cache lines are only read and never written to
• add "dirty" bit to status word
  • originally cleared after replacement
  • set when a block frame is written to
  • only write back a dirty block, and "drop" clean blocks w/o memory update
• traffic/reference = f_dirty × miss × B
  • e.g., traffic/reference = 1/2 × 0.05 × 4 = 0.1
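The two traffic estimates can be compared with a couple of one-line helpers. The function names are hypothetical, and reading B as the number of words written back per block is an assumption; the input values are the ones used on the slide.

```python
def write_through_traffic(write_fraction):
    """Memory writes per reference: every write goes to memory, regardless of miss rate."""
    return write_fraction


def write_back_traffic(dirty_fraction, miss_ratio, words_per_block):
    """Memory writes per reference: only dirty blocks are written back, and only on replacement."""
    return dirty_fraction * miss_ratio * words_per_block


wt = write_through_traffic(write_fraction=0.20)
wb = write_back_traffic(dirty_fraction=0.5, miss_ratio=0.05, words_per_block=4)
print(f"write-through: {wt:.2f} per reference")
print(f"write-back:    {wb:.2f} per reference")
```

With the slide's numbers, write-back generates about half the write traffic of write-through, and unlike write-through its traffic shrinks further as the miss ratio improves.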

Next Time
Continue to more advanced cache designs
Lingering questions / feedback? I'll include an anonymous form at the end of every lecture: https://bit.ly/3oSr5FD
