EECS 470 Lecture 12 Wide Instruction Fetch Fall


EECS 470 Lecture 12: Wide Instruction Fetch, Fall 2021. Jon Beaumont, http://www.eecs.umich.edu/courses/eecs470. Slides developed in part by Profs. Austin, Brehob, Falsafi, Hill, Hoe, Lipasti, Shen, Smith, Sohi, Tyson, and Vijaykumar of Carnegie Mellon University, Purdue University, University of Michigan, and University of Wisconsin. EECS 470 Lecture 12 Slide 1

Announcements
- Milestone 1 due Friday by midnight
  - Label a git branch with what you want us to grade
  - "make" should test your module; "make syn" should synthesize it
  - Short (<1 page) progress report, including your group #, submitted to Gradescope
    - Don't spend more than ~20 minutes on this
- I'll also be sending out info on peer evaluations
- Lab 6 (last assignment) due by 11:59 pm Friday via Canvas

Announcements
- Midterm grades released
  - Mean: 72%
  - Stdev: 15%
  - Max: 95%
- Submit regrade requests through Gradescope by next Monday

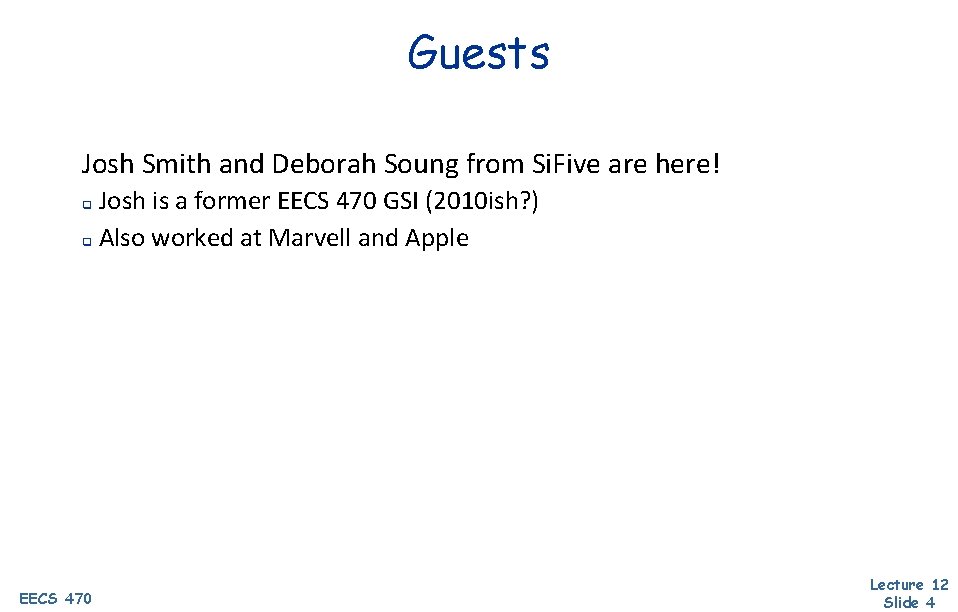

Guests
- Josh Smith and Deborah Soung from SiFive are here!
- Josh is a former EECS 470 GSI (2010-ish?)
  - Also worked at Marvell and Apple

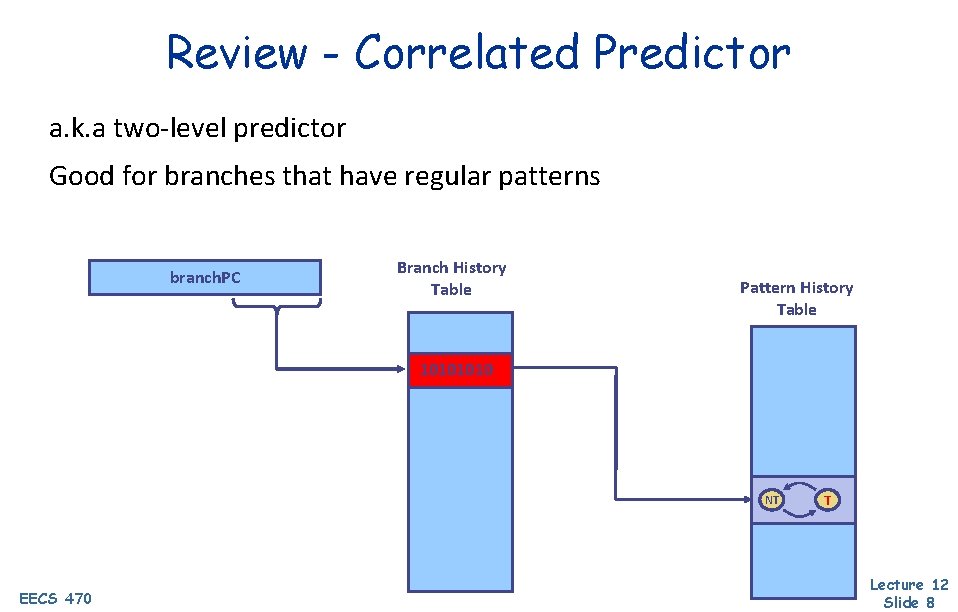

Readings
- H & P 3.3, 3.9

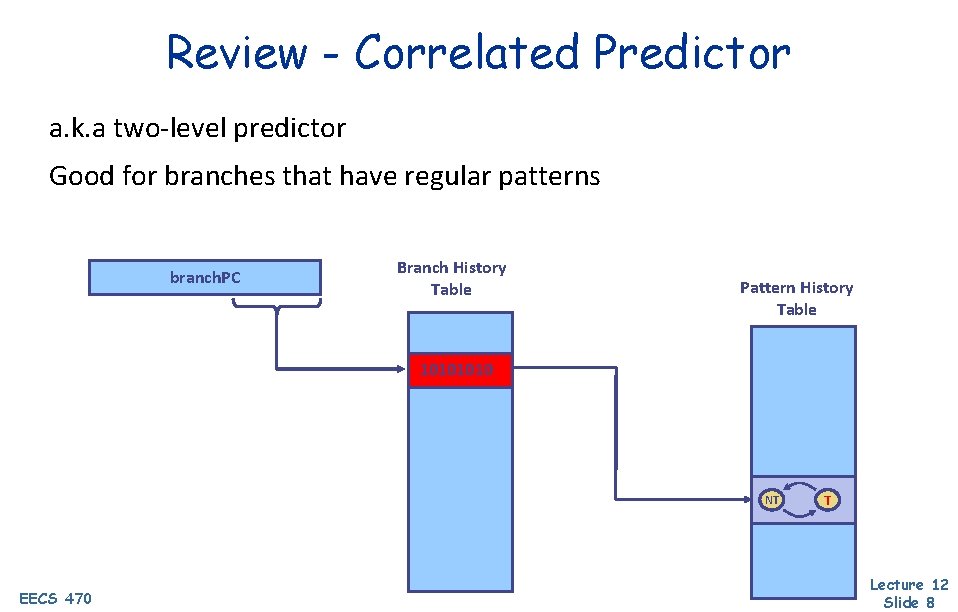

Review: Simple 1-bit predictor
- Good for branches that always do the same thing

[Figure: Branch History Table indexed by branch PC; each entry's state is its prediction (N or T), shown against a sequence of outcomes with mispredictions marked *]
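A minimal sketch of the 1-bit scheme, assuming a single BHT entry with no aliasing between branches; the function name and the loop trace are illustrative, not from the lecture.

```python
def one_bit_mispredicts(outcomes, initial=False):
    """Simulate a single BHT entry holding a 1-bit predictor.

    outcomes: sequence of booleans (True = taken).
    Returns the number of mispredictions.
    """
    state = initial              # predict whatever this branch did last time
    mispredicts = 0
    for taken in outcomes:
        if state != taken:
            mispredicts += 1
        state = taken            # overwrite with the latest outcome
    return mispredicts


# A loop-closing branch: taken 3 times, then not taken; the loop runs twice.
loop = [True, True, True, False] * 2
print(one_bit_mispredicts(loop))
```

Once warmed up, every loop exit and every re-entry mispredicts, so the loop branch costs two mispredictions per loop execution.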

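Review: Two-bit saturating counter
- Good for branches that usually do the same thing

[Figure: Branch History Table indexed by branch PC; each entry is a 2-bit saturating counter with states SN, n, t, ST (strongly/weakly not taken, weakly/strongly taken), shown against a sequence of outcomes with mispredictions marked *]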
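The same loop trace run through a 2-bit saturating counter, as a sketch that assumes the counter starts weakly not taken (state 1); the encoding 0–3 for SN, n, t, ST is our choice.

```python
def two_bit_mispredicts(outcomes, initial=1):
    """Simulate one 2-bit saturating counter; returns the misprediction count."""
    # States: 0 = SN (strongly not taken), 1 = n, 2 = t, 3 = ST (strongly taken)
    counter = initial
    mispredicts = 0
    for taken in outcomes:
        prediction = counter >= 2          # upper half of the range predicts taken
        if prediction != taken:
            mispredicts += 1
        # Saturate at 0 and 3 instead of wrapping around
        counter = min(counter + 1, 3) if taken else max(counter - 1, 0)
    return mispredicts


loop = [True, True, True, False] * 2
print(two_bit_mispredicts(loop))
```

A single not-taken exit only moves the counter from ST to t, so re-entering the loop is still predicted taken: one misprediction per loop execution instead of two.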

Review: Correlated predictor (a.k.a. two-level predictor)
- Good for branches that have regular patterns

[Figure: branch PC selects a history (e.g., 1010) in the Branch History Table, which indexes a Pattern History Table of NT/T predictions]

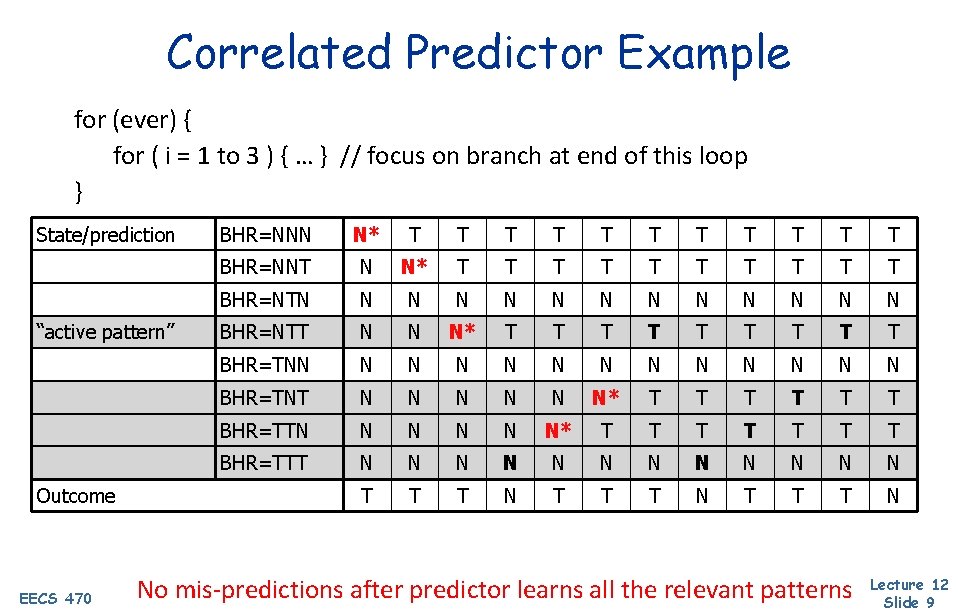

Correlated Predictor Example
- for (ever) { for (i = 1 to 3) { … } }, focusing on the branch at the end of the inner loop

[Figure: state/prediction for each BHR value from NNN to TTT, with the "active pattern" highlighted as outcomes arrive]
- No mispredictions after the predictor learns all the relevant patterns
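A sketch of this example, assuming a 3-bit local BHR for the inner-loop branch and a PHT of 2-bit counters initialized weakly not taken (the slide's exact PHT state encoding may differ). The inner loop's closing branch repeats taken, taken, not taken.

```python
def two_level_local(outcomes, history_bits=3):
    """One branch's local BHR indexes a PHT of 2-bit counters.

    Returns a list of booleans, True where the prediction was wrong.
    """
    mask = (1 << history_bits) - 1
    bhr = 0                               # 1 = taken, newest outcome in the LSB
    pht = [1] * (1 << history_bits)       # 2-bit counters, start weakly not taken
    wrong = []
    for taken in outcomes:
        counter = pht[bhr]
        wrong.append((counter >= 2) != taken)
        pht[bhr] = min(counter + 1, 3) if taken else max(counter - 1, 0)
        bhr = ((bhr << 1) | int(taken)) & mask
    return wrong


# for (i = 1 to 3): the closing branch is taken, taken, then not taken
outcomes = [True, True, False] * 20
wrong = two_level_local(outcomes)
print(sum(wrong), sum(wrong[-30:]))
```

Each of the three histories that occur in steady state is always followed by the same outcome, so once those PHT entries are trained the branch never mispredicts again.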

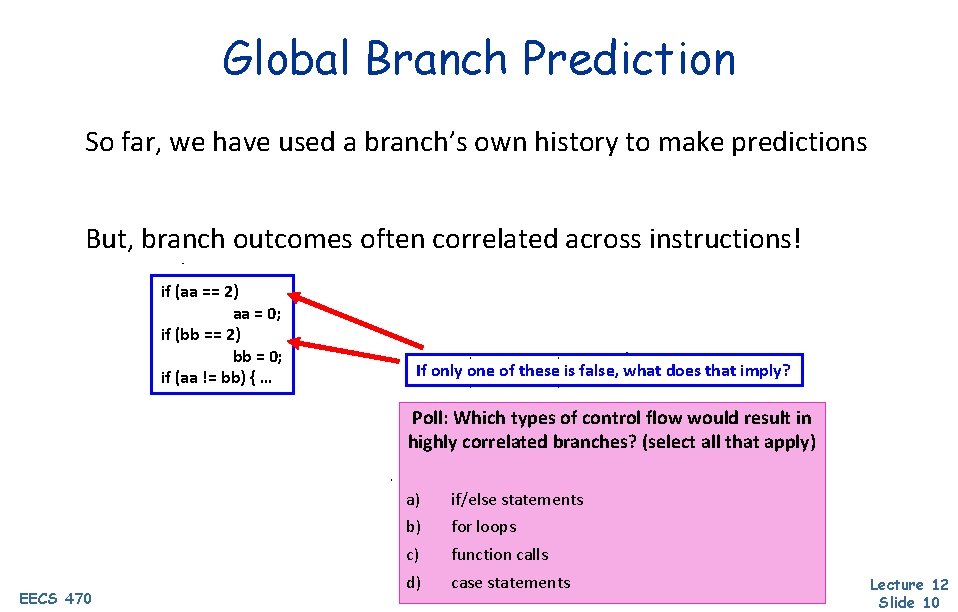

Global Branch Prediction
- So far, we have used a branch's own history to make predictions
- But branch outcomes are often correlated across instructions!
  - if (aa == 2) aa = 0; if (bb == 2) bb = 0; if (aa != bb) { … }
  - If only one of these is false, what does that imply?
- Poll: Which types of control flow would result in highly correlated branches? (select all that apply)
  - a) if/else statements
  - b) for loops
  - c) function calls
  - d) case statements
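A small exhaustive check of the correlation in the code above. It tracks whether each if-condition is true rather than the taken direction, since the compiler chooses branch polarity; the helper name is hypothetical.

```python
def condition_outcomes(aa, bb):
    """Return (c1, c2, c3): whether each if-condition is true for these inputs."""
    c1 = aa == 2
    if c1:
        aa = 0
    c2 = bb == 2
    if c2:
        bb = 0
    c3 = aa != bb
    return c1, c2, c3


# Whenever the first two conditions both hold, aa == bb == 0,
# so the third condition is guaranteed to be false.
for aa in range(-3, 4):
    for bb in range(-3, 4):
        c1, c2, c3 = condition_outcomes(aa, bb)
        if c1 and c2:
            assert not c3
print(condition_outcomes(2, 2), condition_outcomes(2, 5))
```

A predictor that sees the first two outcomes in its global history can therefore learn the third branch, even though that branch's own history shows no such pattern.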

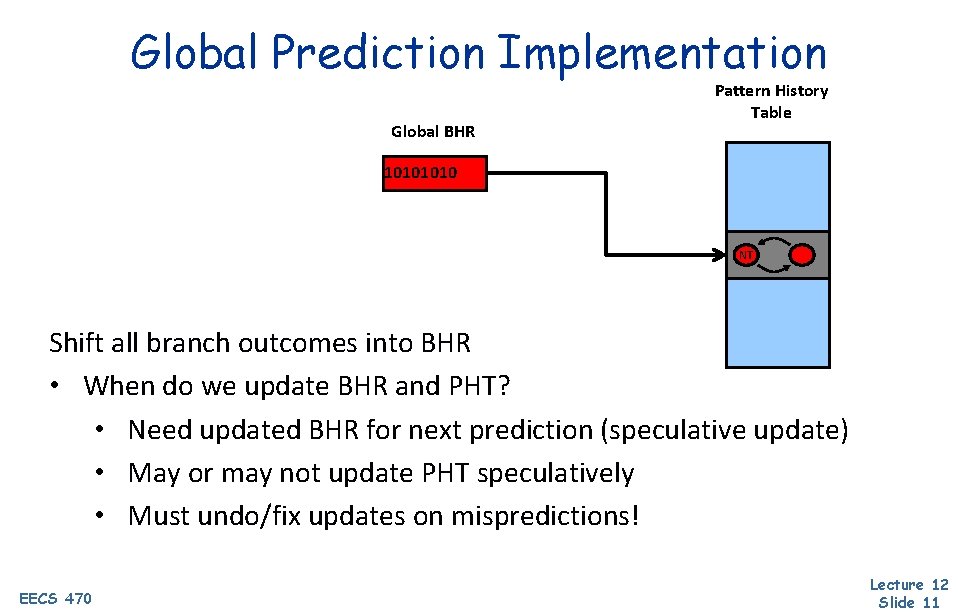

Global Prediction Implementation

[Figure: a single global BHR (e.g., 1010) indexes a Pattern History Table of NT/T predictions]
- Shift all branch outcomes into the BHR
- When do we update the BHR and PHT?
  - Need the updated BHR for the next prediction (speculative update)
  - May or may not update the PHT speculatively
  - Must undo/fix updates on mispredictions!
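A sketch of speculative BHR update with checkpoint repair, assuming each predicted branch saves the BHR value from just before its own shift; the class and method names are hypothetical, and PHT updates are left out.

```python
class GlobalHistory:
    """Global BHR with speculative update and checkpoint-based repair."""

    def __init__(self, bits=4):
        self.mask = (1 << bits) - 1
        self.bhr = 0

    def speculate(self, predicted_taken):
        """Shift the predicted outcome in immediately; return a checkpoint."""
        checkpoint = self.bhr
        self.bhr = ((self.bhr << 1) | int(predicted_taken)) & self.mask
        return checkpoint

    def repair(self, checkpoint, actual_taken):
        """On a mispredict: discard all younger speculative bits, insert the real outcome."""
        self.bhr = ((checkpoint << 1) | int(actual_taken)) & self.mask


ghr = GlobalHistory(bits=4)
ghr.speculate(True)           # older branch, predicted taken (turns out correct)
cp_a = ghr.speculate(True)    # branch A, predicted taken
ghr.speculate(False)          # younger branch B, on A's predicted path
ghr.repair(cp_a, False)       # A resolves not taken: B's bit is thrown away
print(bin(ghr.bhr))
```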

Correlated Predictor Design Space
- Design choice I: one global BHR or one per PC (local)?
  - Each one captures different kinds of patterns
  - Global is better: it also captures local patterns for tight loop branches
- Design choice II: how many history bits (BHR size)?
  - (+) Given unlimited resources, longer BHRs are better, but…
  - (–) BHT utilization decreases
    - Many history patterns are never seen
    - Many branches are history independent (don't care)
  - (–) Predictor takes longer to train
  - Typical length: 8–12
- Design choice III: separate per-PC pattern tables?
  - (+) Different branches want different predictions for the same pattern
  - (–) Storage cost of each PHT is high, and only a few patterns matter
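To make "BHT utilization decreases" concrete, a sketch that counts how many of the 2^h possible histories a branch with the repeating pattern T, T, N actually produces; the function name and pattern are illustrative. With 2-bit counters, a PHT costs 2 · 2^h bits, so storage grows exponentially while the number of useful entries here stays at 3.

```python
def histories_seen(pattern, history_bits, repeats=50):
    """Count distinct BHR values observed for a branch that repeats `pattern`."""
    mask = (1 << history_bits) - 1
    bhr, seen = 0, set()
    for i, taken in enumerate(pattern * repeats):
        if i >= history_bits:      # skip warm-up values left over from the all-zero BHR
            seen.add(bhr)
        bhr = ((bhr << 1) | int(taken)) & mask
    return len(seen)


for h in (4, 8, 12):
    used = histories_seen([True, True, False], h)
    print(f"h={h}: {used} of {1 << h} PHT entries used ({used / (1 << h):.2%})")
```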

![Gshare (McFarling)](https://slidetodoc.com/presentation_image_h2/05ccf04259e41c416140099fb8e14698/image-13.jpg)

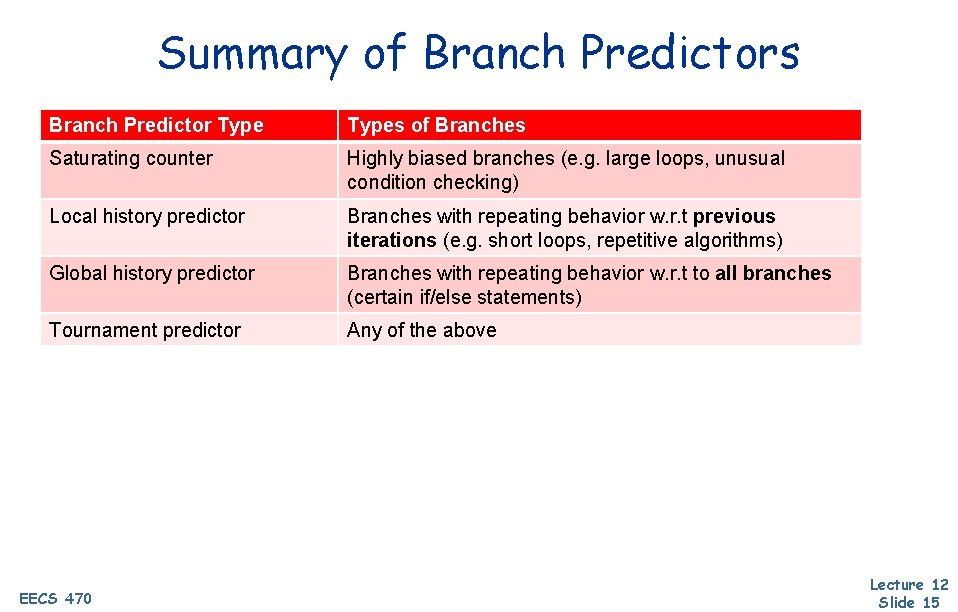

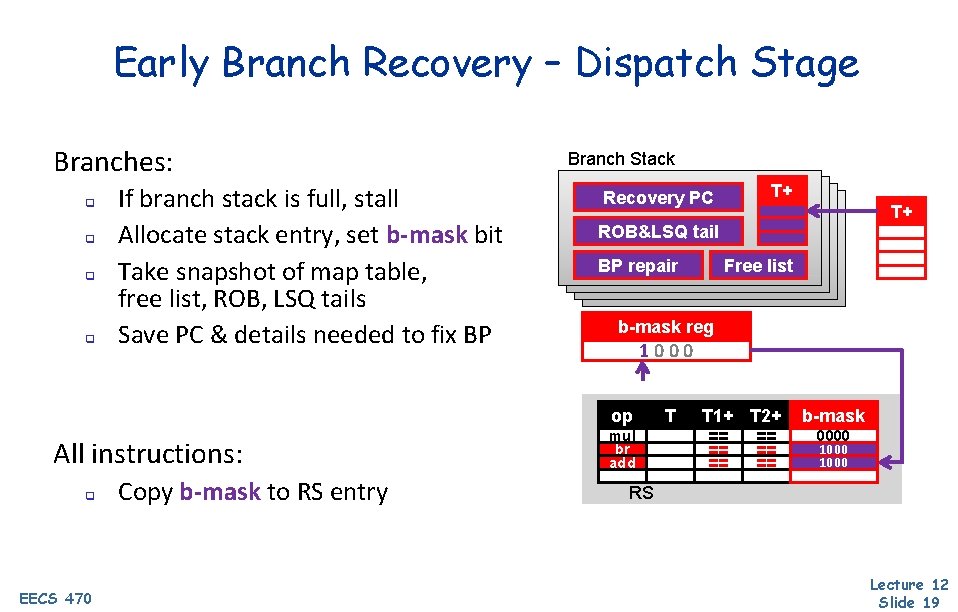

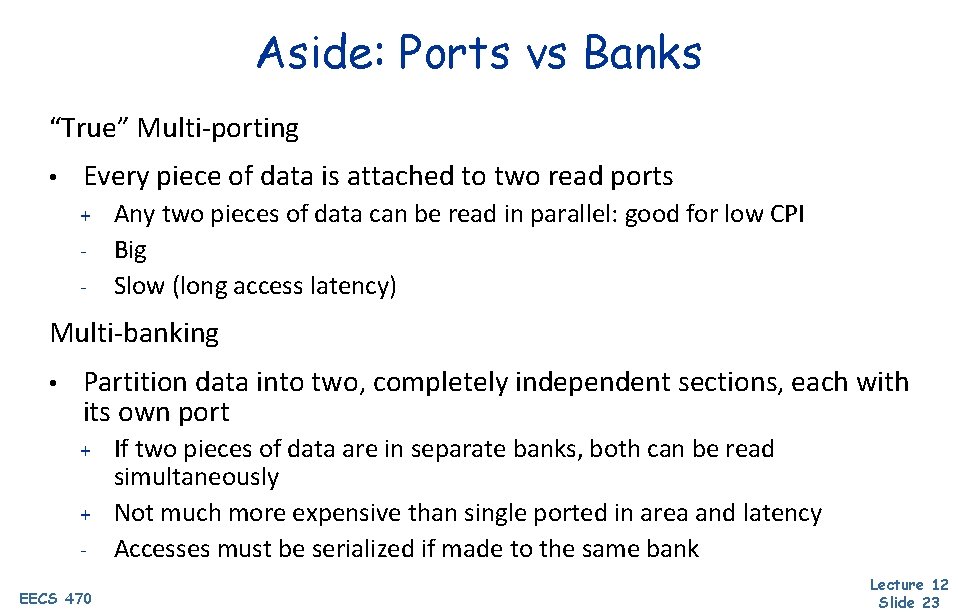

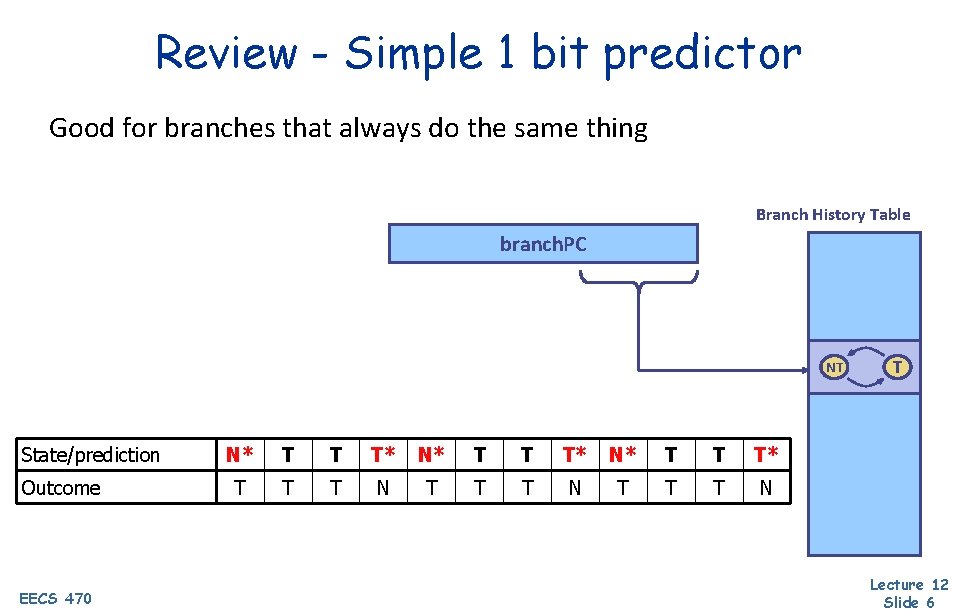

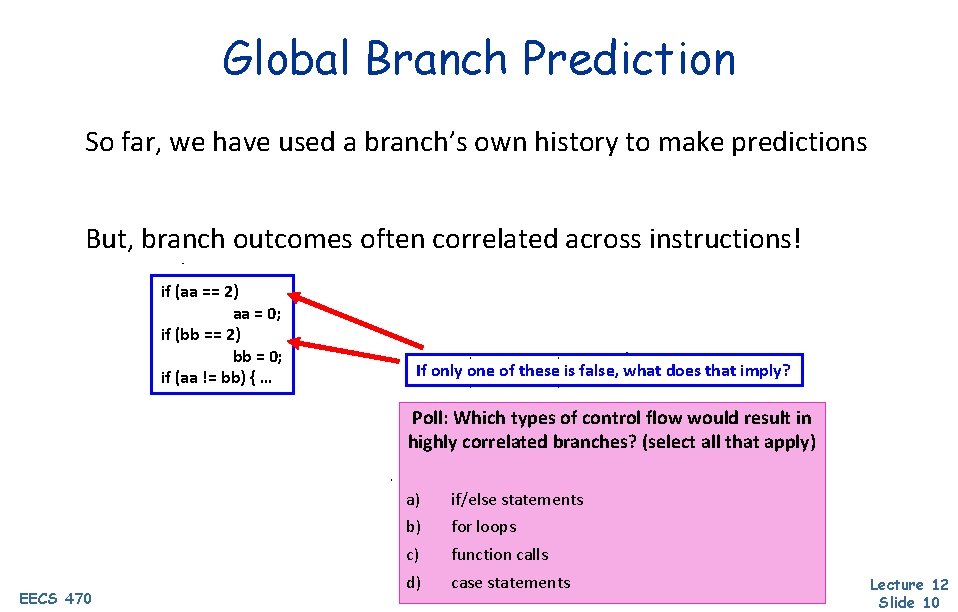

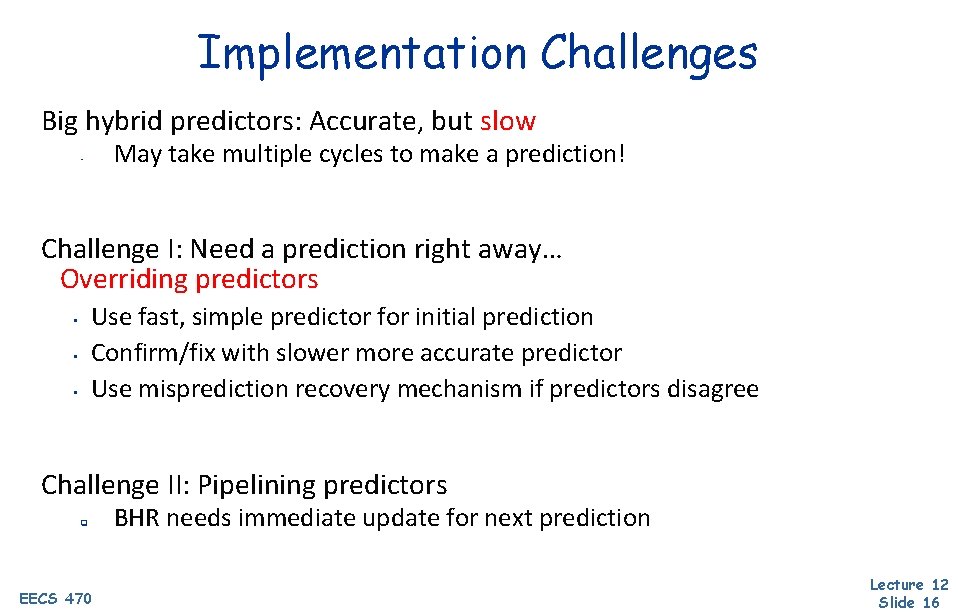

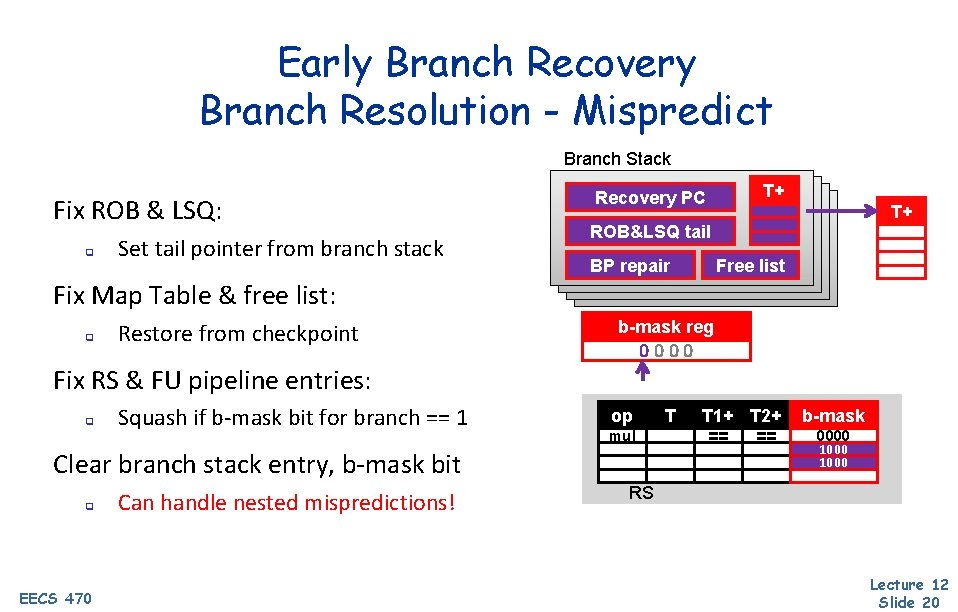

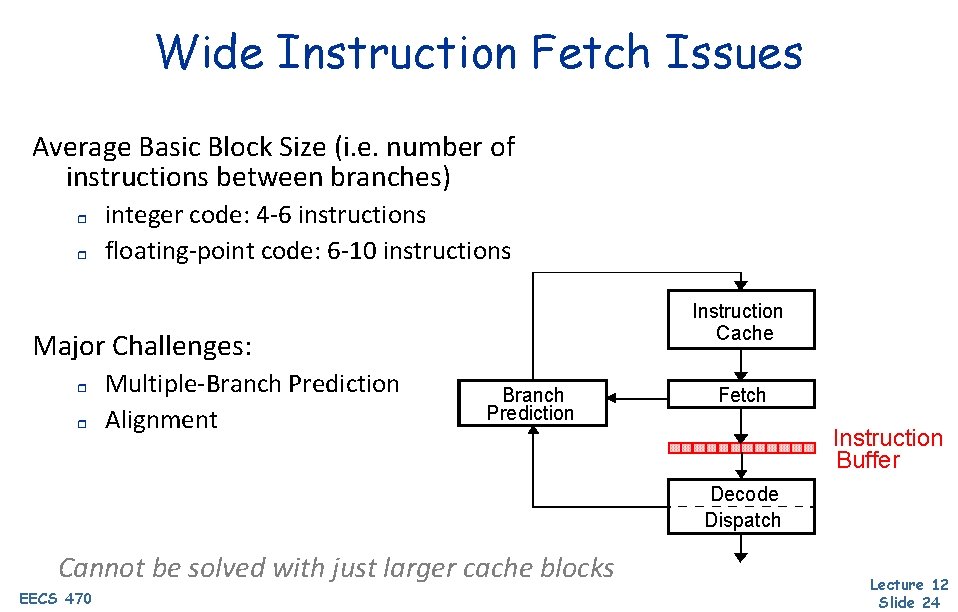

Gshare [McFarling]
- Poll: What would make a good hash function? (select all that apply)

[Figure: branch PC XORed with the global BHR (e.g., 1010) to index a Pattern History Table (shared, as shown) of NT/T predictions]
- Cheap way to implement global predictors
  - Global branch history
  - Per-address patterns (sort of)
- Try to make the PHT big enough to avoid collisions
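A gshare sketch using the XOR hash from the figure. Assumptions, not from the slide: 4-byte instructions (so PC bits [1:0] are dropped), history length equal to the index width, 2-bit counters, and non-speculative updates for simplicity; class and method names are hypothetical.

```python
class Gshare:
    """(PC XOR global history) indexes one shared table of 2-bit counters."""

    def __init__(self, index_bits=10):
        self.mask = (1 << index_bits) - 1
        self.ghr = 0
        self.pht = [1] * (1 << index_bits)    # start weakly not taken

    def _index(self, pc):
        return ((pc >> 2) ^ self.ghr) & self.mask

    def predict(self, pc):
        return self.pht[self._index(pc)] >= 2

    def update(self, pc, taken):
        i = self._index(pc)
        self.pht[i] = min(self.pht[i] + 1, 3) if taken else max(self.pht[i] - 1, 0)
        self.ghr = ((self.ghr << 1) | int(taken)) & self.mask


bp = Gshare(index_bits=10)
pc = 0x400
outcomes = [True, False] * 100          # a branch that alternates T, N, T, N, ...
misses = []
for taken in outcomes:
    misses.append(bp.predict(pc) != taken)
    bp.update(pc, taken)
print(sum(misses), sum(misses[-100:]))
```

The alternating branch defeats a 2-bit counter on its own, but each of its two steady-state histories hashes to a different PHT entry, so gshare stops mispredicting once the history register fills.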

![Hybrid (tournament) predictor (McFarling)](https://slidetodoc.com/presentation_image_h2/05ccf04259e41c416140099fb8e14698/image-14.jpg)

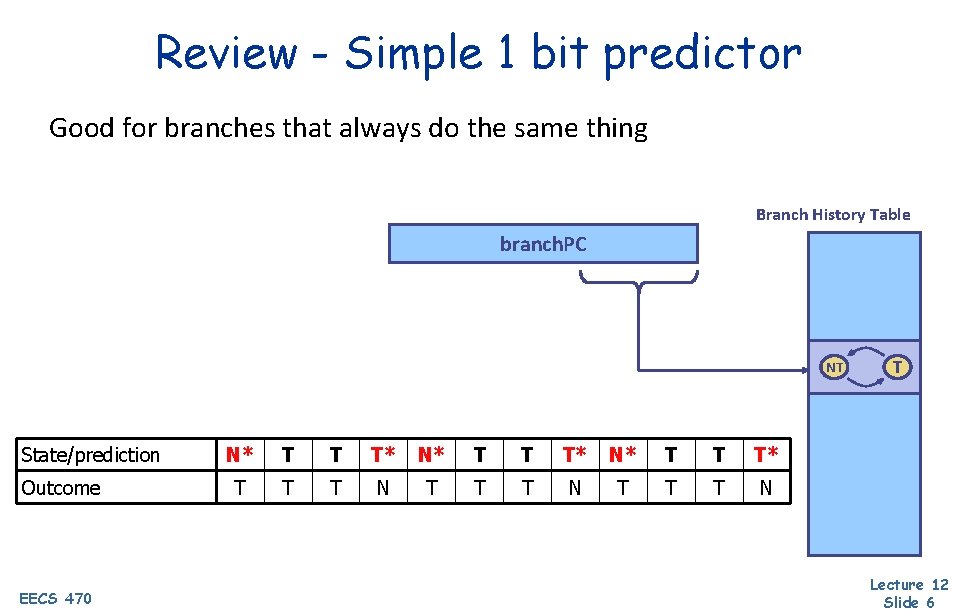

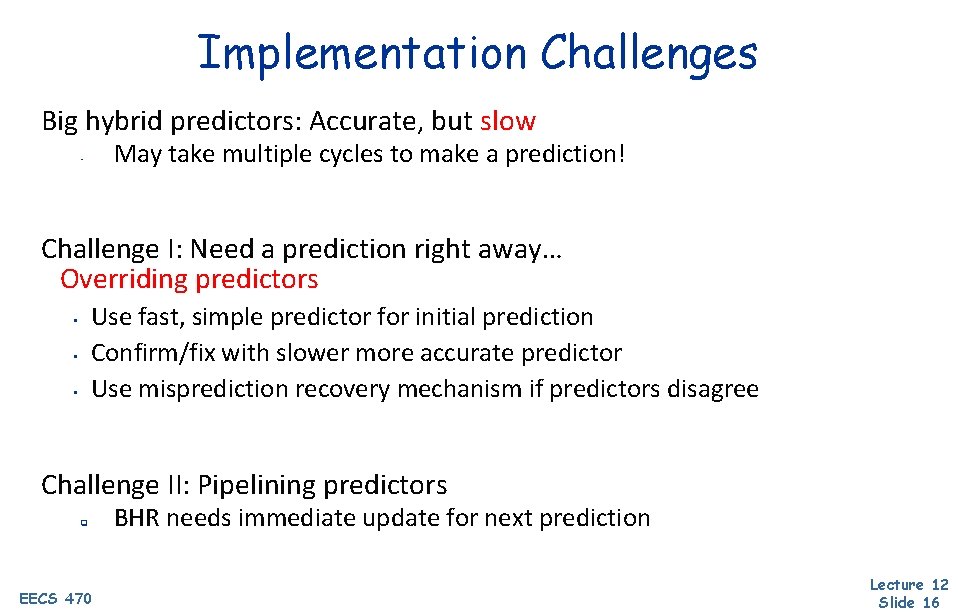

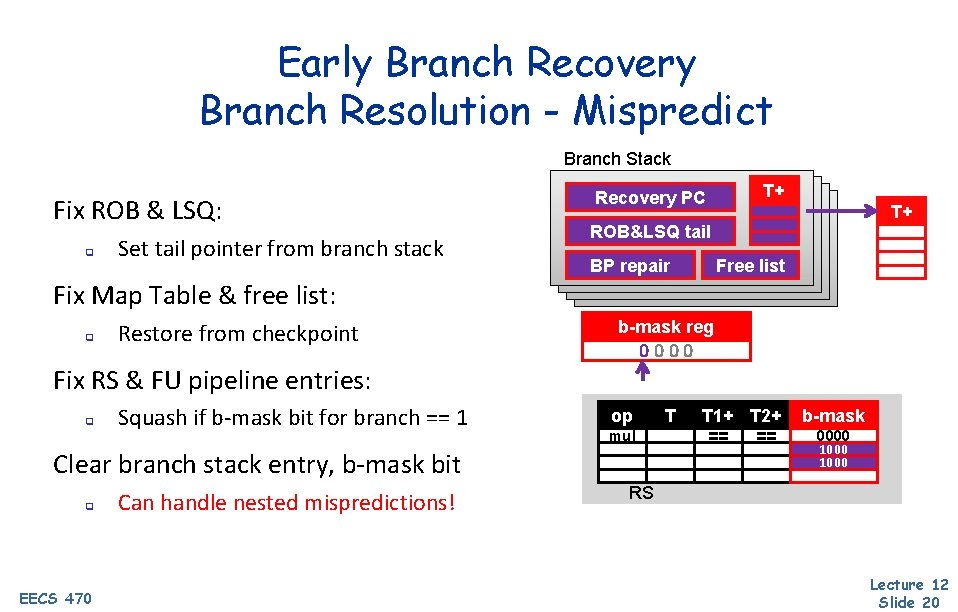

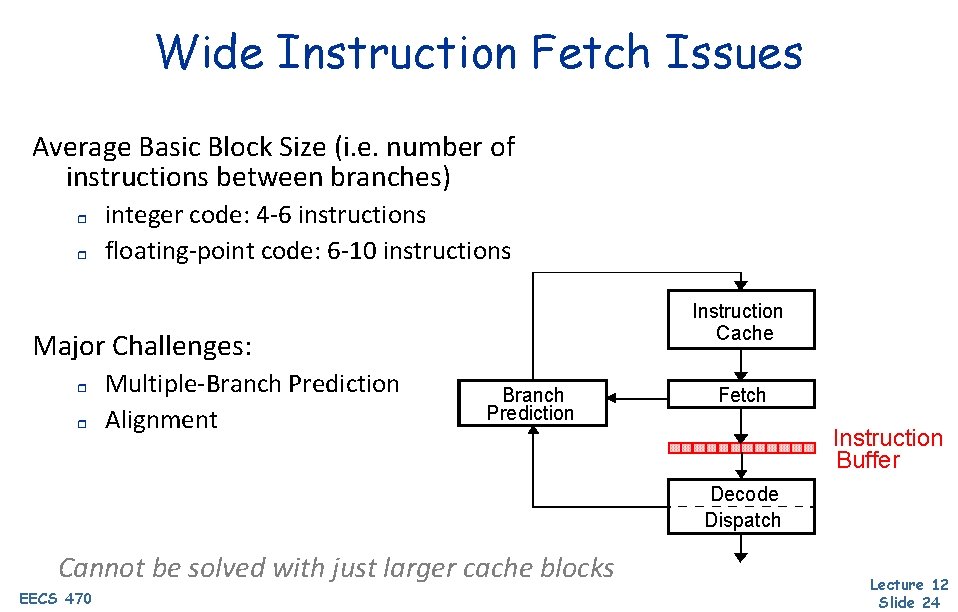

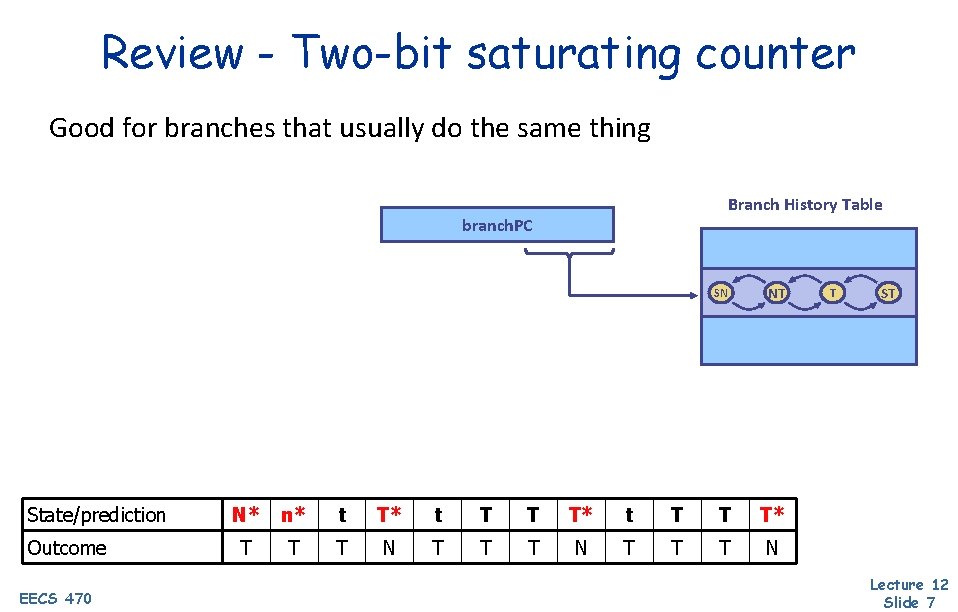

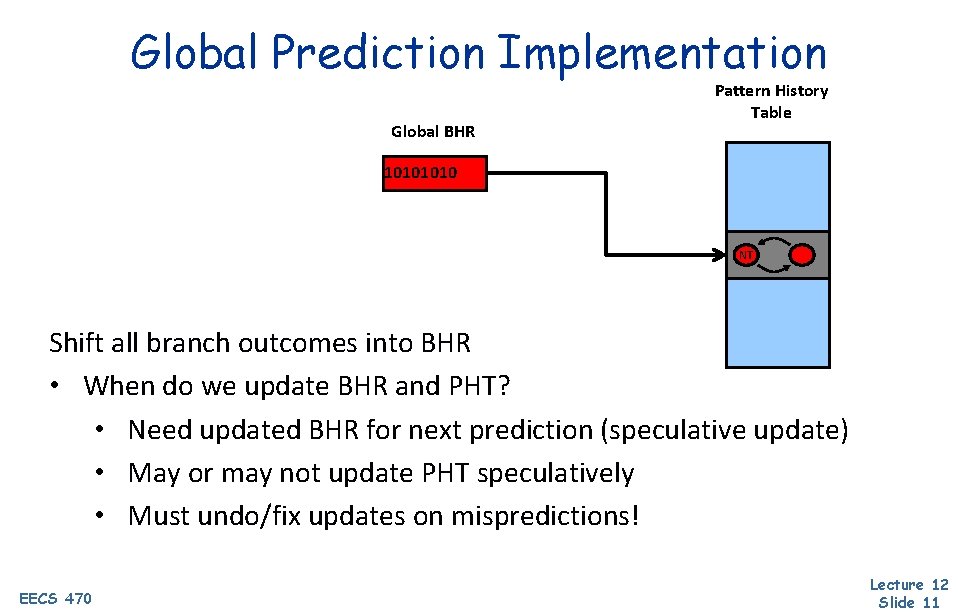

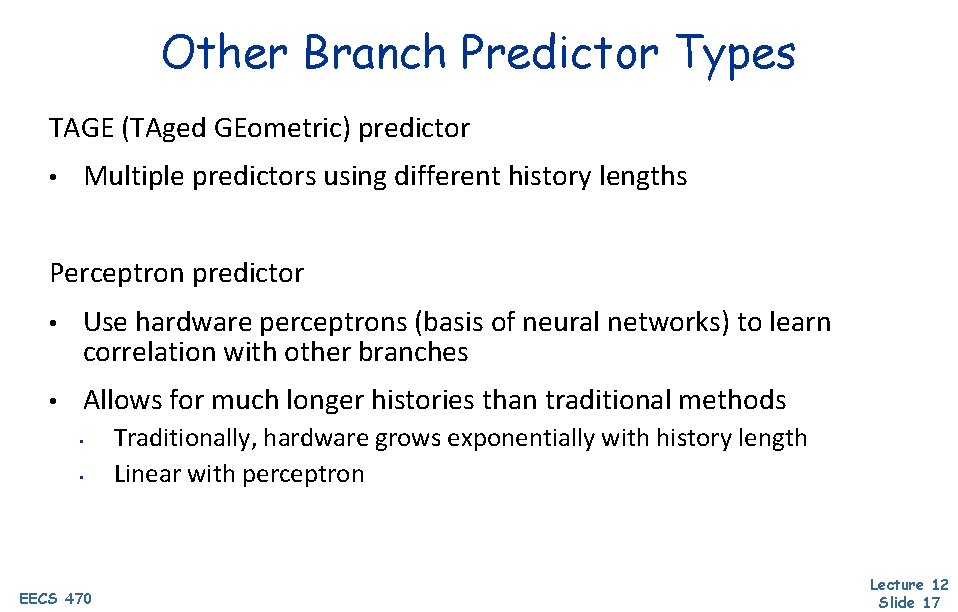

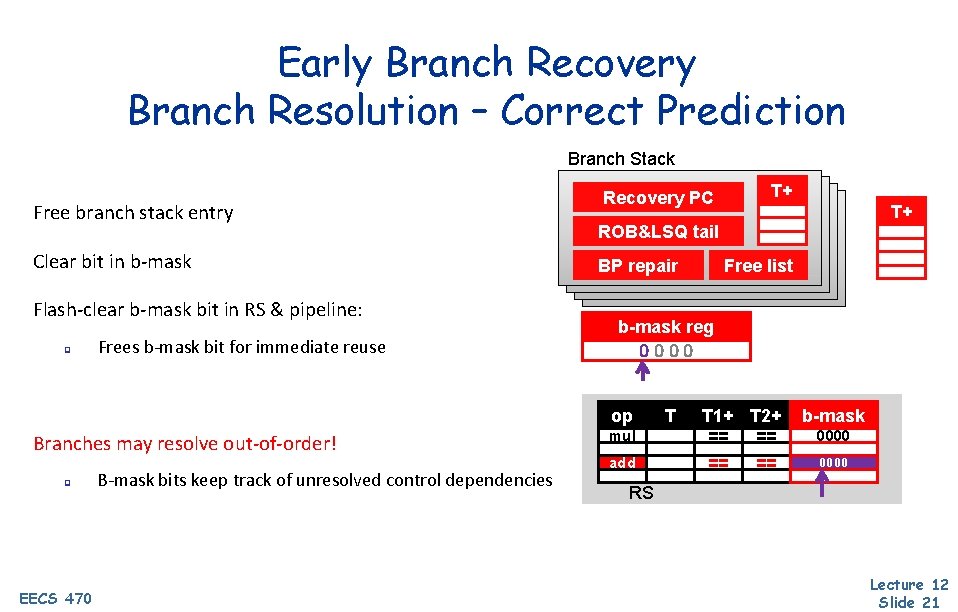

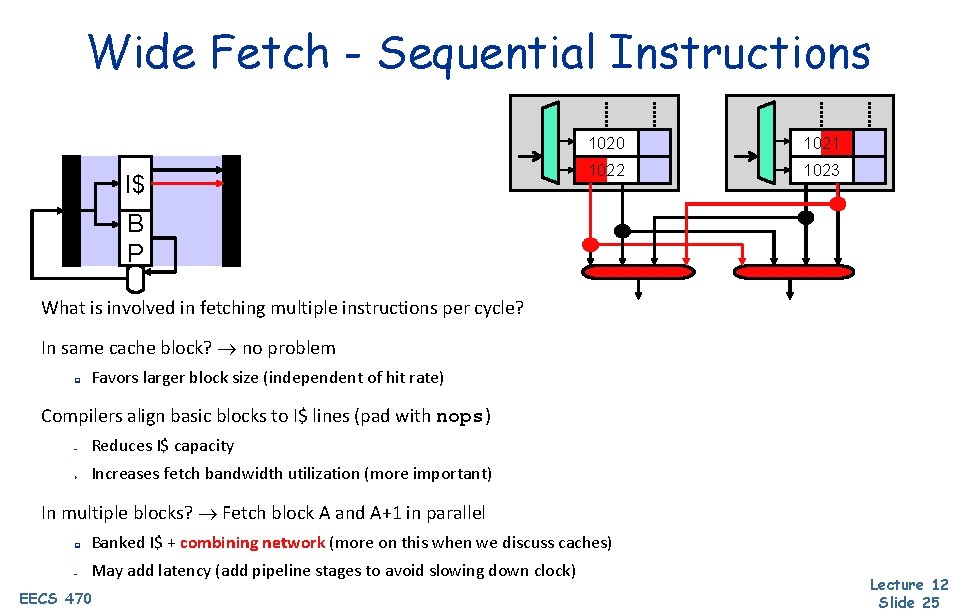

Hybrid Predictor
- Hybrid (tournament) predictor [McFarling]
  - Attacks the correlated predictor's BHT utilization problem
- Idea: combine two predictors
  - A simple BHT predicts history-independent branches
  - A correlated predictor predicts only the branches that need history
  - A chooser assigns each branch to one predictor or the other
  - Branches start in the simple BHT and move to the correlated predictor once they pass a misprediction threshold
- (+) The correlated predictor can be made smaller, since it handles fewer branches
- (+) 90–95% accuracy
- Alpha 21264: hybrid of Gshare & 2-bit saturating counters

[Figure: PC indexes the chooser and a simple BHT; the BHR indexes the correlated predictor's BHT; the chooser selects between their predictions]
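A tournament sketch under stated assumptions: a PC-indexed bimodal table as the simple BHT, gshare as the correlated predictor, and a PC-indexed 2-bit chooser trained only when the two disagree. Starting the chooser at 1 makes every branch begin on the simple BHT, a stand-in for the slide's misprediction threshold. All names and table sizes are hypothetical (equal sizes here for brevity).

```python
def bump(counter, up):
    """2-bit saturating increment/decrement."""
    return min(counter + 1, 3) if up else max(counter - 1, 0)


class Tournament:
    def __init__(self, bits=10):
        self.mask = (1 << bits) - 1
        self.ghr = 0
        self.bimodal = [1] * (1 << bits)   # simple BHT, indexed by PC
        self.gshare = [1] * (1 << bits)    # correlated predictor, PC XOR history
        self.chooser = [1] * (1 << bits)   # >= 2 means "use gshare" for this PC

    def _idx(self, pc):
        b = (pc >> 2) & self.mask
        return b, (b ^ self.ghr) & self.mask

    def predict(self, pc):
        b, g = self._idx(pc)
        table, i = (self.gshare, g) if self.chooser[b] >= 2 else (self.bimodal, b)
        return table[i] >= 2

    def update(self, pc, taken):
        b, g = self._idx(pc)
        bim_ok = (self.bimodal[b] >= 2) == taken
        gsh_ok = (self.gshare[g] >= 2) == taken
        if bim_ok != gsh_ok:               # move toward whichever one was right
            self.chooser[b] = bump(self.chooser[b], gsh_ok)
        self.bimodal[b] = bump(self.bimodal[b], taken)
        self.gshare[g] = bump(self.gshare[g], taken)
        self.ghr = ((self.ghr << 1) | int(taken)) & self.mask


bp = Tournament()
misses = []
for k in range(200):
    # Branch at 0x100 is always taken; branch at 0x200 alternates T, N, T, ...
    for pc, taken in ((0x100, True), (0x200, k % 2 == 0)):
        misses.append(bp.predict(pc) != taken)
        bp.update(pc, taken)
print(sum(misses[-200:]), bp.chooser[0x200 >> 2])
```

The always-taken branch is handled by either table, while the alternating branch, which the bimodal counter always gets wrong, is handed to gshare by the chooser.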

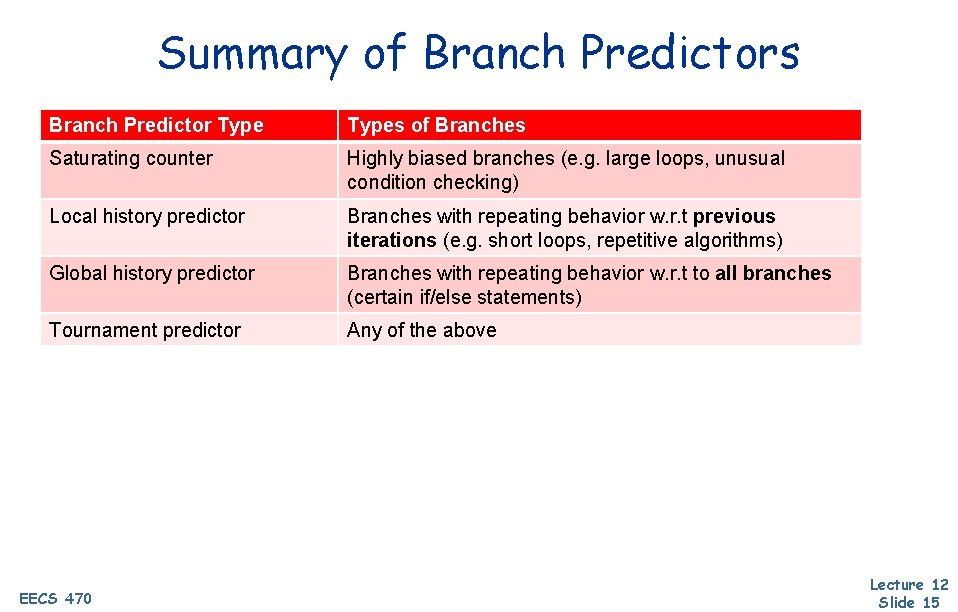

Summary of Branch Predictors

| Branch Predictor | Types of Branches |
|---|---|
| Saturating counter | Highly biased branches (e.g., large loops, unusual condition checking) |
| Local history predictor | Branches with repeating behavior w.r.t. previous iterations (e.g., short loops, repetitive algorithms) |
| Global history predictor | Branches with repeating behavior w.r.t. all branches (e.g., certain if/else statements) |
| Tournament predictor | Any of the above |

Implementation Challenges
- Big hybrid predictors: accurate, but slow
  - May take multiple cycles to make a prediction!
- Challenge I: need a prediction right away…
  - Overriding predictors:
    - Use a fast, simple predictor for the initial prediction
    - Confirm/fix it with a slower, more accurate predictor
    - Use the misprediction recovery mechanism if the predictors disagree
- Challenge II: pipelining predictors
  - The BHR needs an immediate update for the next prediction
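A rough sketch of the overriding cost model, assuming the slow predictor answers a fixed `slow_latency` cycles after the fast one and that every disagreement squashes exactly that many cycles of fetch; the function and parameter names are hypothetical.

```python
def overriding_fetch(fast_preds, slow_preds, slow_latency=2):
    """Return (final predictions, fetch bubbles) for an overriding predictor pair."""
    bubbles = 0
    final = []
    for fast, slow in zip(fast_preds, slow_preds):
        if fast != slow:
            # Fetch followed the fast guess for slow_latency cycles; squash and redirect
            bubbles += slow_latency
        final.append(slow)   # the slower, more accurate predictor has the last word
    return final, bubbles


print(overriding_fetch([True, True, False], [True, False, False], slow_latency=2))
```

The override only pays off if a disagreement bubble is much cheaper than the full branch misprediction penalty it avoids.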

Other Branch Predictor Types
- TAGE (TAgged GEometric) predictor
  - Multiple predictors using different history lengths
- Perceptron predictor
  - Uses hardware perceptrons (the basis of neural networks) to learn correlation with other branches
  - Allows much longer histories than traditional methods
    - Traditionally, hardware grows exponentially with history length
    - With a perceptron, it grows linearly
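A single-perceptron sketch following the commonly cited Jiménez and Lin formulation: y = w0 + Σ wi·xi with xi = +1 (taken) or −1 (not taken), predict taken when y ≥ 0, and train when the prediction is wrong or |y| ≤ θ, with θ = ⌊1.93h + 14⌋. Real designs use small saturating integer weights; this sketch leaves them unbounded. The test branch, whose outcome copies the branch two back in history, is hypothetical.

```python
import itertools


class Perceptron:
    def __init__(self, history_len):
        self.w = [0] * (history_len + 1)           # w[0] is the bias weight
        self.theta = int(1.93 * history_len + 14)  # training threshold

    def output(self, history):
        # history: +1 / -1 outcomes, most recent first
        return self.w[0] + sum(wi * xi for wi, xi in zip(self.w[1:], history))

    def predict(self, history):
        return self.output(history) >= 0

    def train(self, history, taken):
        t = 1 if taken else -1
        y = self.output(history)
        if (y >= 0) != taken or abs(y) <= self.theta:
            self.w[0] += t
            for i, xi in enumerate(history, start=1):
                self.w[i] += t * xi


p = Perceptron(history_len=4)
patterns = [list(bits) for bits in itertools.product((1, -1), repeat=4)]
for _ in range(100):
    for h in patterns:
        p.train(h, taken=h[1] == 1)   # outcome copies the branch two back in history
print(all(p.predict(h) == (h[1] == 1) for h in patterns), len(p.w))
```

Storage is h + 1 weights per perceptron, linear in the history length, whereas a PHT indexed by h history bits needs 2^h counters.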

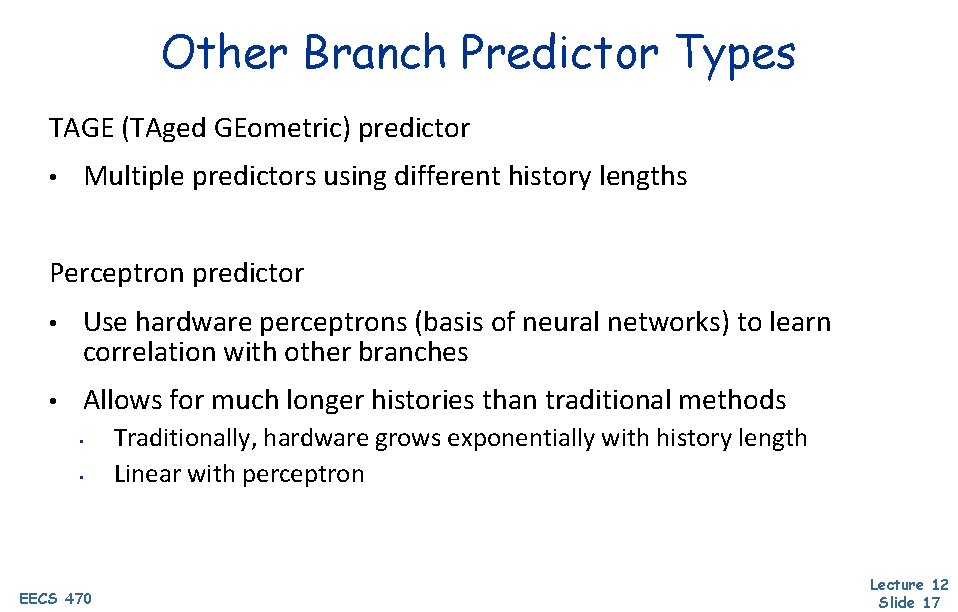

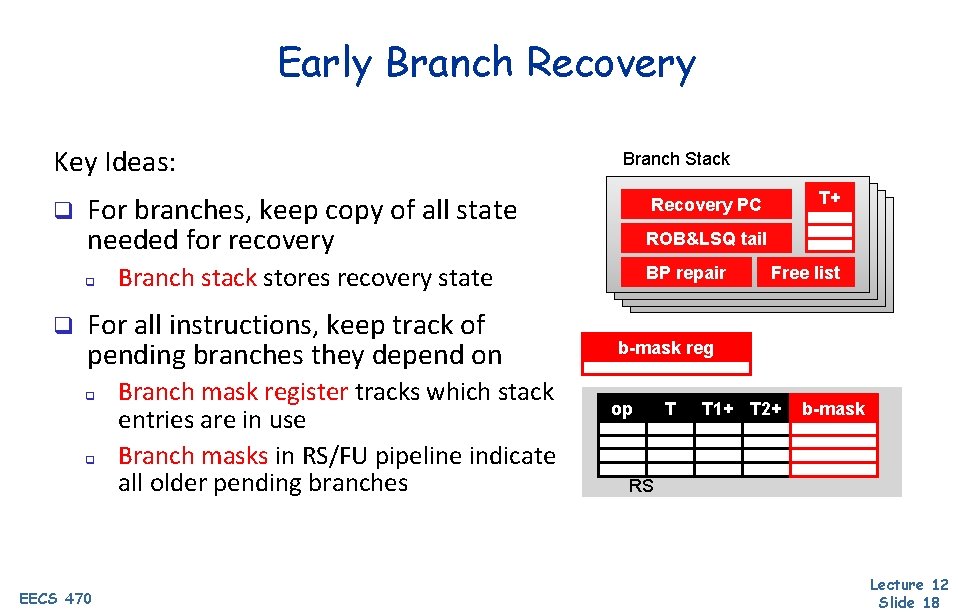

Early Branch Recovery
Key ideas:
- For branches, keep a copy of all state needed for recovery
  - Branch stack stores recovery state
- For all instructions, keep track of the pending branches they depend on
  - Branch mask register tracks which stack entries are in use
  - Branch masks in the RS/FU pipeline indicate all older pending branches

[Figure: branch stack entries (recovery PC, ROB & LSQ tail, BP repair, free list) alongside the b-mask register and RS entries (op, T, T1+, T2+, b-mask)]

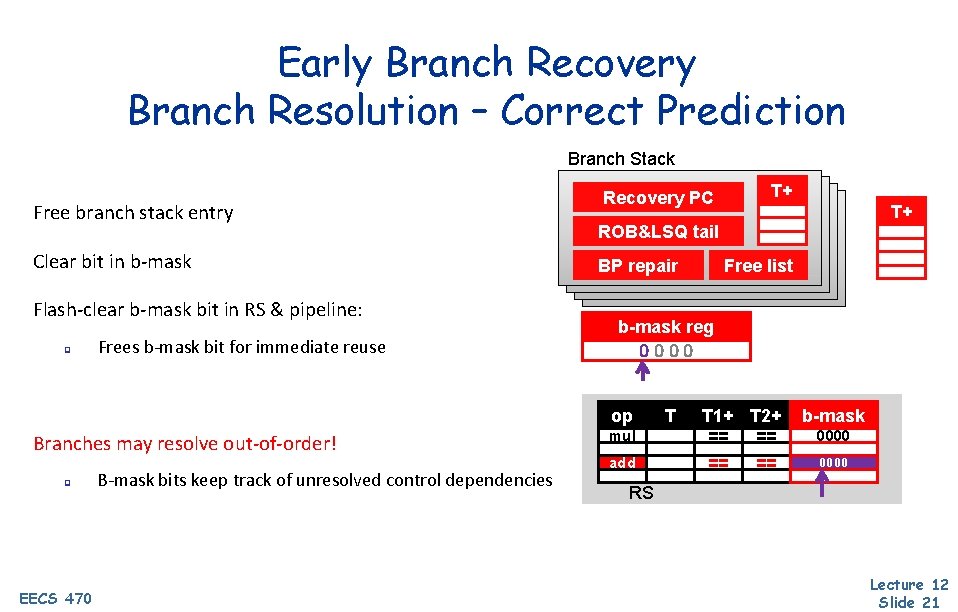

Early Branch Recovery: Dispatch Stage
Branches:
- If the branch stack is full, stall
- Allocate a stack entry and set its b-mask bit
- Take a snapshot of the map table, free list, and ROB & LSQ tails
- Save the PC & the details needed to fix the BP

All instructions:
- Copy the b-mask to the RS entry

[Figure: branch stack (recovery PC, ROB & LSQ tail, BP repair, free list), b-mask register set to 1000, and RS entries mul, br, add with b-masks 0000 and 1000]
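A sketch of b-mask bookkeeping at dispatch, assuming bit i of the mask corresponds to branch-stack entry i (the slide draws entry 0 as the leftmost bit; here it is the LSB) and that a branch's own RS entry records only older branches. The class name and snapshot contents are hypothetical.

```python
class DispatchBMask:
    def __init__(self, stack_entries=4):
        self.stack_entries = stack_entries
        self.b_mask_reg = 0        # bits of branch-stack entries currently in use
        self.branch_stack = {}     # entry -> recovery snapshot
        self.rs = []               # (op, b_mask) pairs

    def dispatch(self, op, is_branch=False, snapshot=None):
        """Dispatch one instruction; return False to signal a stall."""
        deps = self.b_mask_reg     # all older, still-pending branches
        if is_branch:
            free = [i for i in range(self.stack_entries)
                    if not (self.b_mask_reg >> i) & 1]
            if not free:
                return False       # branch stack full: stall
            entry = free[0]
            self.b_mask_reg |= 1 << entry
            # Map table, free list, ROB/LSQ tails, PC, BP repair info
            self.branch_stack[entry] = snapshot
        self.rs.append((op, deps))  # copy the b-mask into the RS entry
        return True


d = DispatchBMask(stack_entries=4)
d.dispatch("mul")
d.dispatch("br", is_branch=True, snapshot={"rob_tail": 2})
d.dispatch("add")
print([(op, format(mask, "04b")) for op, mask in d.rs])
```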

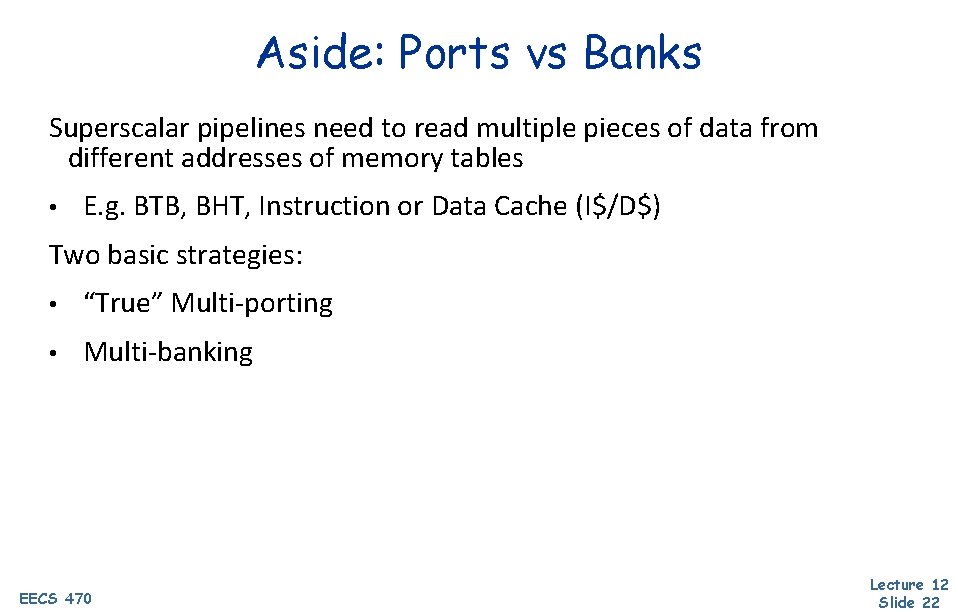

Early Branch Recovery: Branch Resolution, Mispredict
- Fix ROB & LSQ:
  - Set the tail pointer from the branch stack
- Fix the map table & free list:
  - Restore from the checkpoint
- Fix RS & FU pipeline entries:
  - Squash if the b-mask bit for the branch == 1
- Clear the branch stack entry and b-mask bit
  - Can handle nested mispredictions!

[Figure: b-mask register (now 0000) and RS entries mul, br, add with b-masks 0000 and 1000; entries with the mispredicted branch's bit set are squashed]

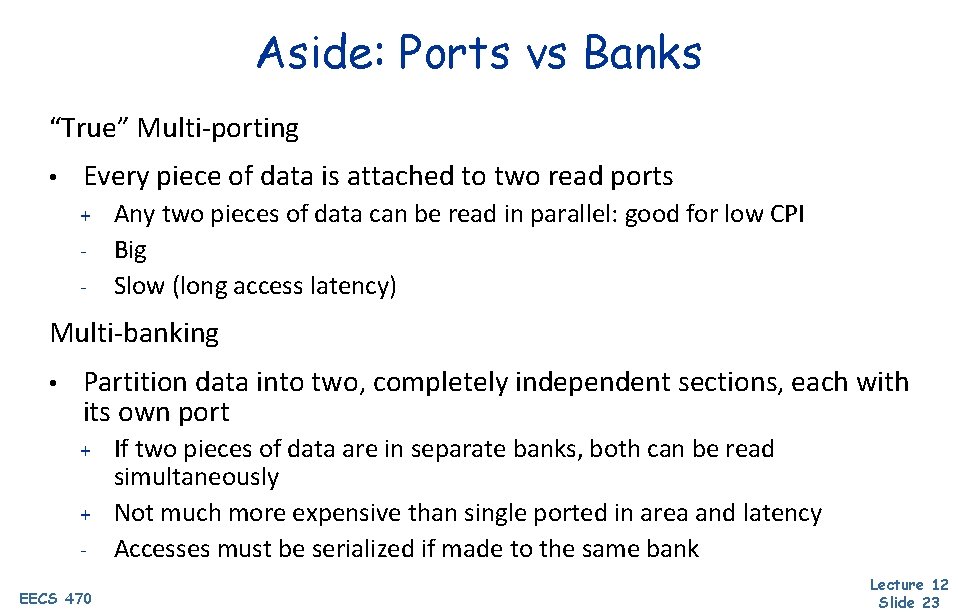

Early Branch Recovery: Branch Resolution, Correct Prediction
- Free the branch stack entry
- Clear the bit in the b-mask register
- Flash-clear the b-mask bit in the RS & pipeline:
  - Frees the b-mask bit for immediate reuse
- B-mask bits keep track of unresolved control dependencies
- Branches may resolve out of order!

[Figure: b-mask register (now 0000) and RS entries mul, br, add with their b-mask bit flash-cleared to 0000]
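A sketch of both resolution cases, assuming bit i of a mask corresponds to branch-stack entry i and that each stack entry stores the dependency mask of its own branch (so younger branches can be found and freed on a nested mispredict). Free-list restore and BP repair are omitted; `BranchResolution` and `make_example` are hypothetical.

```python
class BranchResolution:
    def __init__(self, rs, branch_stack, b_mask_reg, map_table):
        self.rs = rs                      # list of [op, b_mask]
        self.branch_stack = branch_stack  # entry -> {"deps", "map_table", "rob_tail"}
        self.b_mask_reg = b_mask_reg
        self.map_table = map_table
        self.rob_tail = None

    def mispredict(self, entry):
        bit = 1 << entry
        cp = self.branch_stack[entry]
        self.map_table = dict(cp["map_table"])   # restore map table from checkpoint
        self.rob_tail = cp["rob_tail"]           # reset ROB/LSQ tail
        # Squash every RS/FU entry that depends on this branch
        self.rs = [e for e in self.rs if not e[1] & bit]
        # Younger branches depend on this one; free their entries too (nested case)
        doomed = [i for i, s in self.branch_stack.items() if s["deps"] & bit] + [entry]
        for i in doomed:
            del self.branch_stack[i]
            self.b_mask_reg &= ~(1 << i)

    def correct(self, entry):
        bit = 1 << entry
        del self.branch_stack[entry]
        self.b_mask_reg &= ~bit
        # Flash-clear the bit everywhere so it can be reused immediately
        for e in self.rs:
            e[1] &= ~bit
        for s in self.branch_stack.values():
            s["deps"] &= ~bit


def make_example():
    # br0 uses entry 0; br1 (younger) uses entry 1 and depends on br0
    rs = [["mul", 0b00], ["br0", 0b00], ["add", 0b01], ["br1", 0b01], ["sub", 0b11]]
    stack = {0: {"deps": 0b00, "map_table": {"r1": "p1"}, "rob_tail": 2},
             1: {"deps": 0b01, "map_table": {"r1": "p4"}, "rob_tail": 4}}
    return BranchResolution(rs, stack, 0b11, {"r1": "p9"})


r = make_example()
r.mispredict(0)                      # nested: br1 and everything after br0 go away
print([e[0] for e in r.rs], r.b_mask_reg, r.map_table)
```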

Aside: Ports vs Banks
- Superscalar pipelines need to read multiple pieces of data from different addresses of memory tables
  - E.g., BTB, BHT, instruction or data cache (I$/D$)
- Two basic strategies:
  - "True" multi-porting
  - Multi-banking

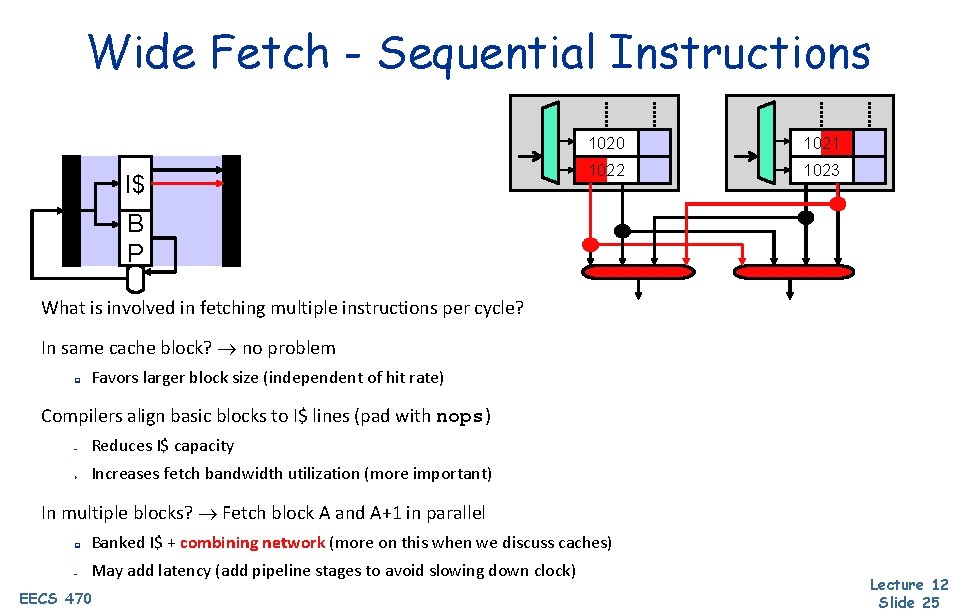

Aside: Ports vs Banks
- "True" multi-porting
  - Every piece of data is attached to two read ports
  - (+) Any two pieces of data can be read in parallel: good for low CPI
  - (–) Big
  - (–) Slow (long access latency)
- Multi-banking
  - Partition data into two completely independent sections, each with its own port
  - (+) If two pieces of data are in separate banks, both can be read simultaneously
  - (+) Not much more expensive than single-ported in area and latency
  - (–) Accesses must be serialized if made to the same bank
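A sketch of bank conflicts, assuming single-ported banks selected by the low-order bits of the line address (64-byte lines) and that each read in the batch targets a distinct line; the function name is hypothetical.

```python
def cycles_to_serve(addresses, num_banks=2, line_bytes=64):
    """Cycles needed to serve a batch of reads issued together to a banked table."""
    per_bank = [0] * num_banks
    for addr in addresses:
        bank = (addr // line_bytes) % num_banks   # low line-address bits pick the bank
        per_bank[bank] += 1
    return max(per_bank)      # same-bank reads serialize; different banks overlap


print(cycles_to_serve([0x000, 0x040]))   # consecutive lines: different banks
print(cycles_to_serve([0x000, 0x080]))   # both map to bank 0: conflict
```

A true dual-ported table would serve either pair in one cycle; the banked version matches it only when the accesses fall in different banks.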

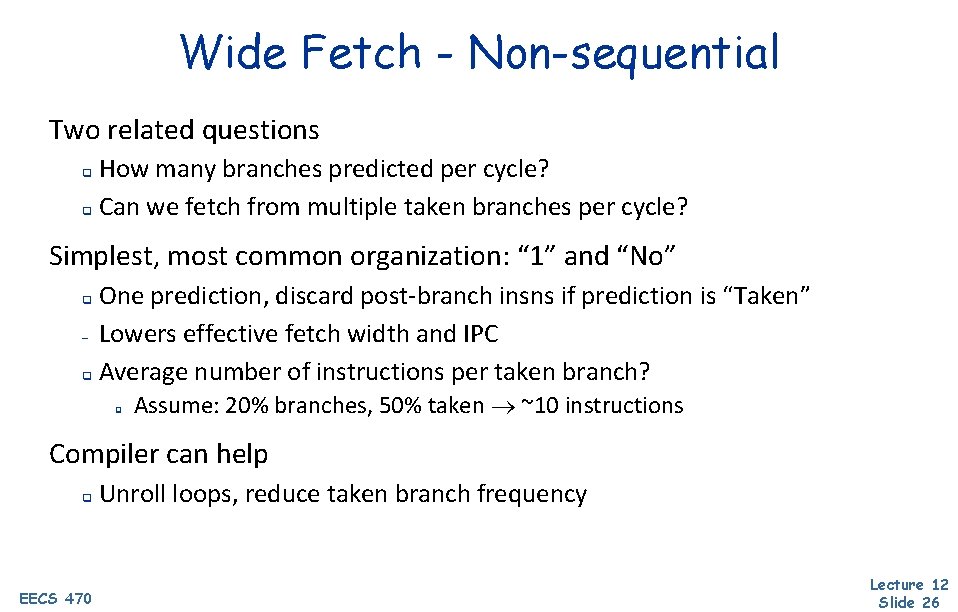

Wide Instruction Fetch Issues
- Average basic block size (i.e., number of instructions between branches):
  - Integer code: 4–6 instructions
  - Floating-point code: 6–10 instructions
- Major challenges:
  - Multiple-branch prediction
  - Alignment
- Cannot be solved with just larger cache blocks

[Figure: front end in which the instruction cache and branch prediction feed fetch, followed by the instruction buffer, decode, and dispatch]

Wide Fetch - Sequential Instructions

[Figure: I$ block holding instructions 1020–1023, read alongside the branch predictor (BP)]
- What is involved in fetching multiple instructions per cycle?
- In the same cache block? No problem
  - Favors larger block size (independent of hit rate)
- Compilers align basic blocks to I$ lines (pad with nops)
  - (–) Reduces I$ capacity
  - (+) Increases fetch bandwidth utilization (more important)
- In multiple blocks? Fetch blocks A and A+1 in parallel
  - Banked I$ + combining network (more on this when we discuss caches)
  - (–) May add latency (add pipeline stages to avoid slowing down the clock)
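A sketch of the alignment problem, assuming PCs are instruction indices as in the 1020–1023 figure, 4-instruction cache blocks, a fetch width of 4, and no taken branches; the function and its flag are hypothetical.

```python
def insns_fetched(start_pc, fetch_width=4, block_insns=4, fetch_two_blocks=False):
    """Sequential instructions delivered in one cycle starting at start_pc.

    With fetch_two_blocks=True, blocks A and A+1 are read in parallel
    (e.g., from a banked I$), so fetch can cross one block boundary.
    """
    offset = start_pc % block_insns
    available = block_insns - offset          # instructions left in block A
    if fetch_two_blocks:
        available += block_insns              # plus all of block A+1
    return min(fetch_width, available)


print(insns_fetched(1020))                         # aligned: full width
print(insns_fetched(1021))                         # misaligned: rest of block A only
print(insns_fetched(1021, fetch_two_blocks=True))  # A and A+1 together
```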

Wide Fetch - Non-sequential
- Two related questions:
  - How many branches are predicted per cycle?
  - Can we fetch from multiple taken branches per cycle?
- Simplest, most common organization: "1" and "No"
  - One prediction; discard post-branch insns if the prediction is "Taken"
  - (–) Lowers effective fetch width and IPC
- Average number of instructions per taken branch?
  - Assume 20% branches, 50% taken: 1 / (0.20 × 0.50) ≈ 10 instructions
- Compiler can help
  - Unroll loops, reduce taken-branch frequency
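A back-of-the-envelope sketch of how a taken branch cuts off a fetch group. It assumes, as a simplification not made on the slide, that each instruction is independently a taken branch with probability p = 0.20 × 0.50 = 0.1, and it ignores block alignment; instruction i is delivered only if none of the i − 1 instructions before it was a taken branch. Function names are hypothetical.

```python
def insns_per_taken_branch(branch_frac=0.20, taken_frac=0.50):
    return 1.0 / (branch_frac * taken_frac)


def expected_fetch(width, p_taken_branch):
    """Expected instructions delivered per cycle when fetch stops after a taken branch."""
    return sum((1 - p_taken_branch) ** (i - 1) for i in range(1, width + 1))


p = 0.20 * 0.50
print(insns_per_taken_branch())
for width in (4, 8, 16):
    print(width, round(expected_fetch(width, p), 2))
```

Even though a taken branch appears only every ~10 instructions on average, a 16-wide front end under these assumptions delivers well under 10 instructions per cycle.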

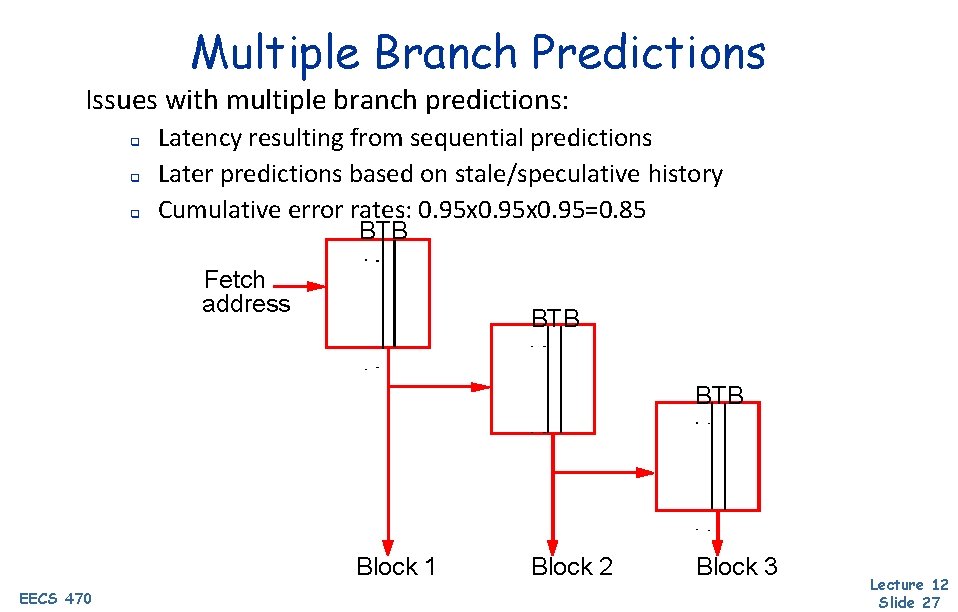

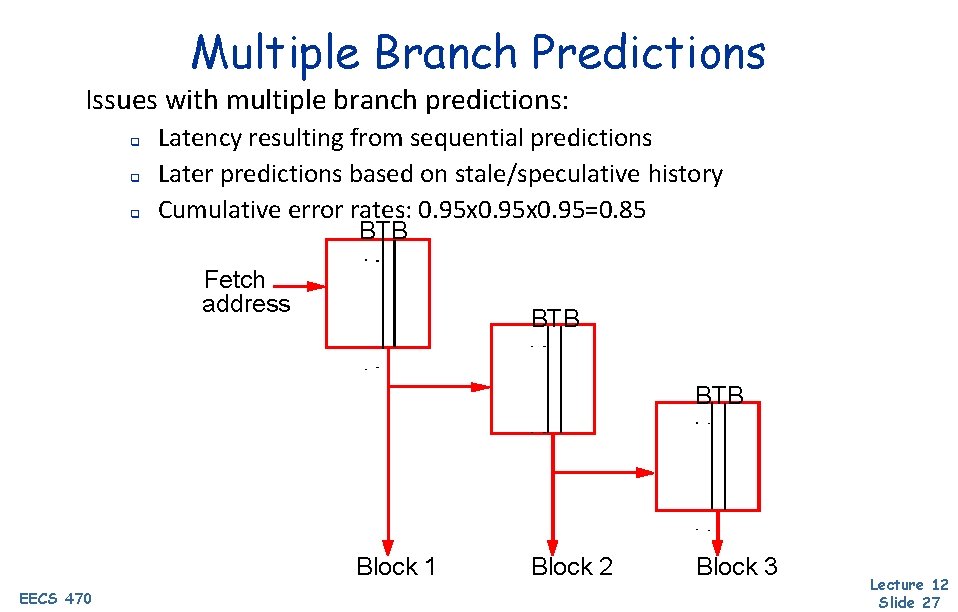

Multiple Branch Predictions
- Issues with multiple branch predictions:
  - Latency resulting from sequential predictions
  - Later predictions based on stale/speculative history
  - Cumulative error rates: 0.95 × 0.95 ≈ 0.90 (and 0.95³ ≈ 0.86 for three predictions)

[Figure: fetch address → BTB → Block 1 → BTB → Block 2 → Block 3, with each prediction serialized behind the previous one]
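The cumulative-accuracy arithmetic, as a sketch that assumes predictions are independent and each is 95% accurate; the function name is hypothetical.

```python
def all_correct_prob(accuracy, n_predictions):
    """Probability that n independent predictions in one fetch group are all correct."""
    return accuracy ** n_predictions


for n in (1, 2, 3):
    print(n, round(all_correct_prob(0.95, n), 4))
```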

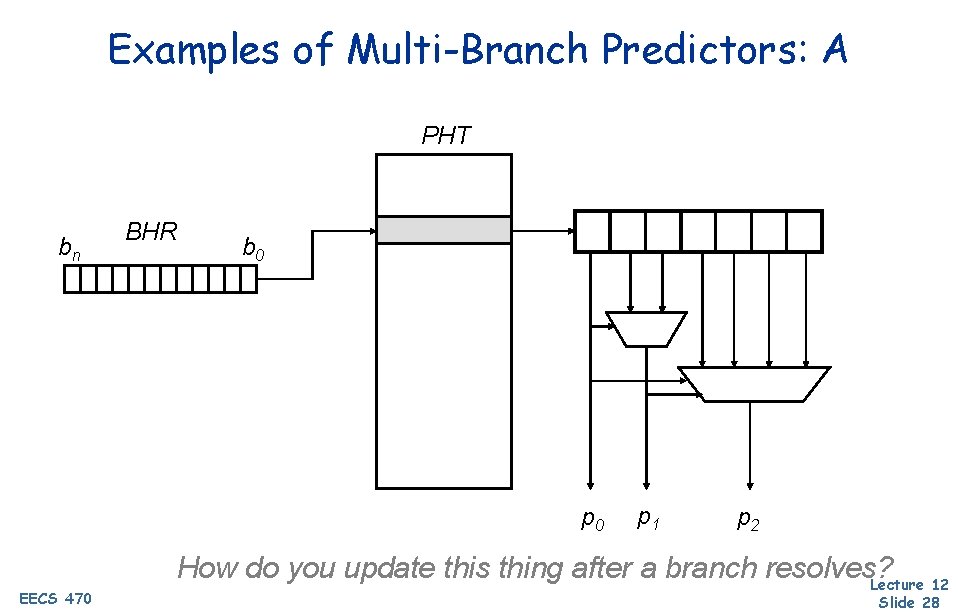

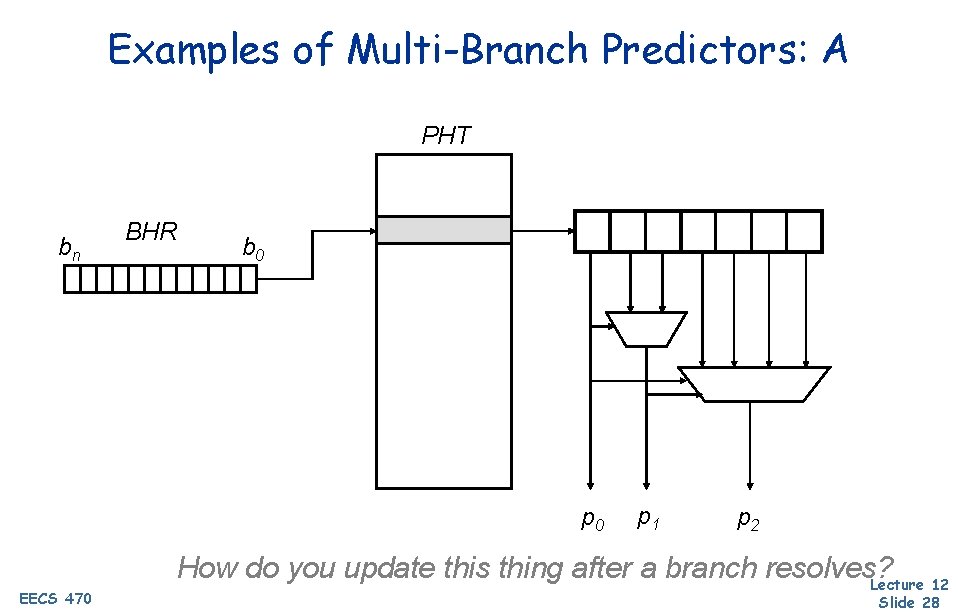

Examples of Multi-Branch Predictors: A

[Figure: BHR bits b0…bn index a PHT that produces predictions p0, p1, and p2]
- How do you update this thing after a branch resolves?

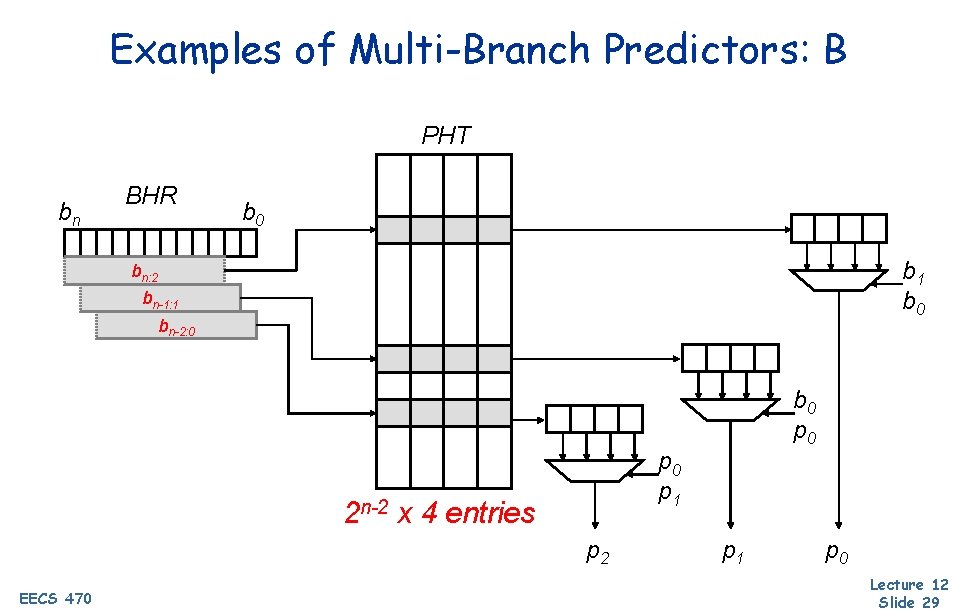

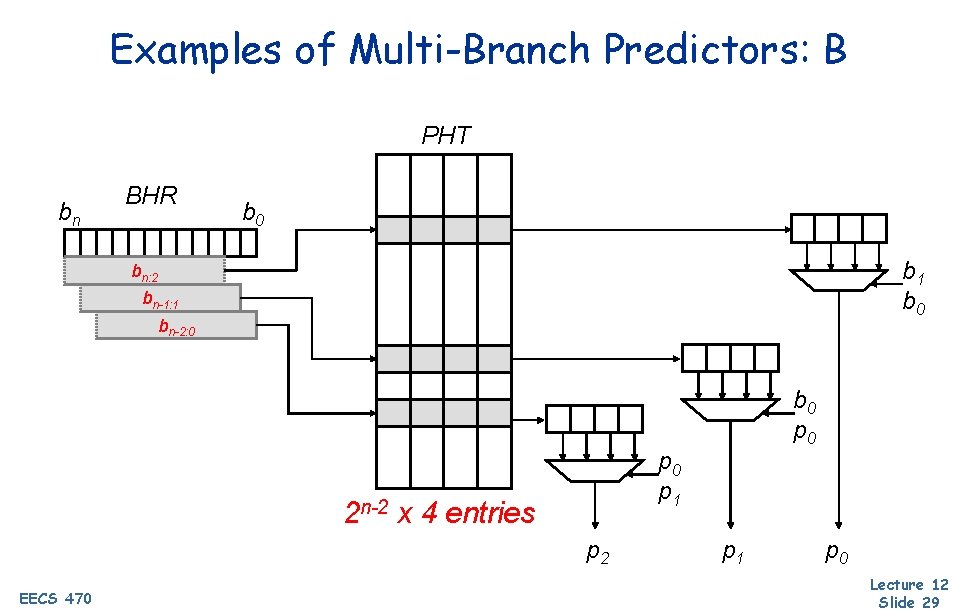

Examples of Multi-Branch Predictors: B

[Figure: BHR bits bn…b0 index a PHT with 2^(n-2) × 4 entries, producing predictions p0, p1, and p2]
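A generic sketch of predicting several branches in one cycle, not necessarily the exact organization of schemes A or B above. Instead of waiting for each prediction to be shifted into the history, it reads every candidate counter up front (1 + 2 + 4 lookups for three branches) and uses earlier predictions as mux selects. The PHT contents, sizes, and function names are hypothetical; the check confirms it matches one-at-a-time prediction.

```python
import random


def predict_sequential(pht, bhr, k, bits):
    """Reference: predict k branches one after another, shifting each into history."""
    mask = (1 << bits) - 1
    preds, h = [], bhr
    for _ in range(k):
        p = int(pht[h & mask] >= 2)
        preds.append(p)
        h = (h << 1) | p
    return preds


def predict_parallel(pht, bhr, k, bits):
    """Read all candidate counters at once, then select with earlier predictions."""
    mask = (1 << bits) - 1
    candidates = [[int(pht[((bhr << j) | path) & mask] >= 2) for path in range(1 << j)]
                  for j in range(k)]           # 2^j candidates for the j-th branch
    preds, path = [], 0
    for j in range(k):
        p = candidates[j][path]                # mux driven by p0 .. p(j-1)
        preds.append(p)
        path = (path << 1) | p
    return preds


rng = random.Random(470)
bits = 8
pht = [rng.randrange(4) for _ in range(1 << bits)]
for bhr in range(1 << bits):
    assert predict_sequential(pht, bhr, 3, bits) == predict_parallel(pht, bhr, 3, bits)
print("parallel lookup matches sequential prediction")
```

The number of counter reads doubles with each additional branch predicted per cycle, which is one reason multi-branch predictors are expensive.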

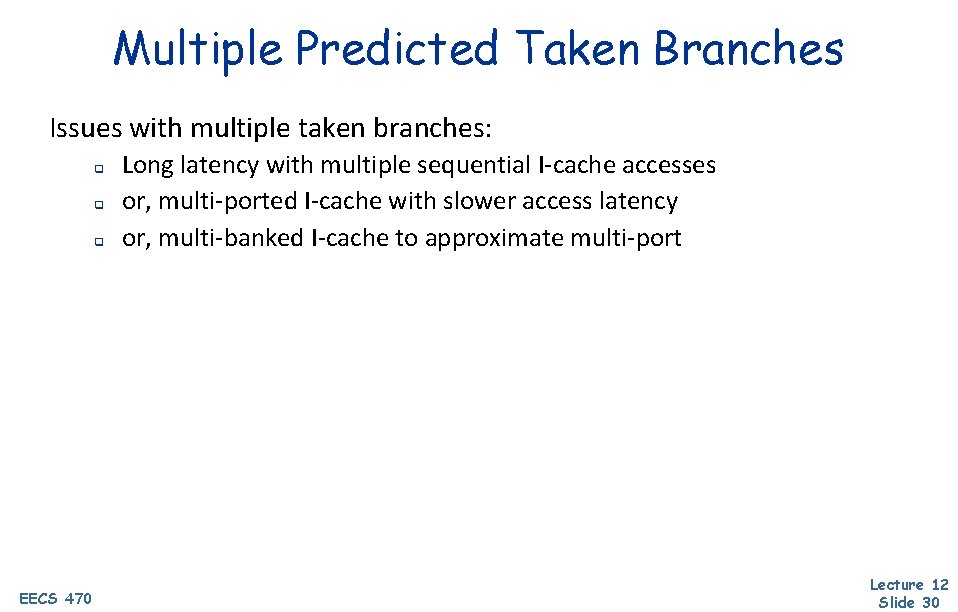

Multiple Predicted Taken Branches
- Issues with multiple taken branches:
  - Long latency with multiple sequential I-cache accesses
  - or, a multi-ported I-cache with slower access latency
  - or, a multi-banked I-cache to approximate multi-porting

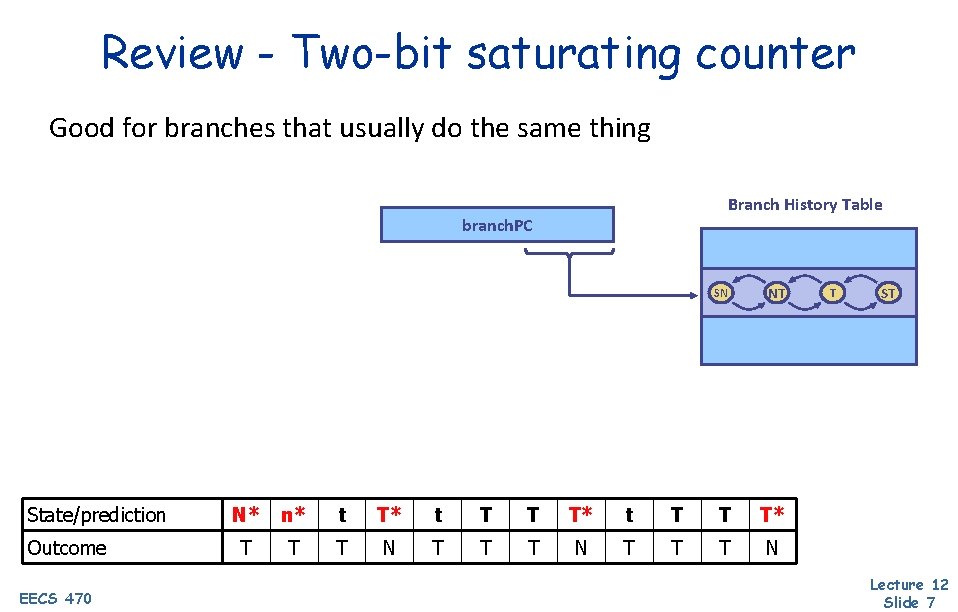

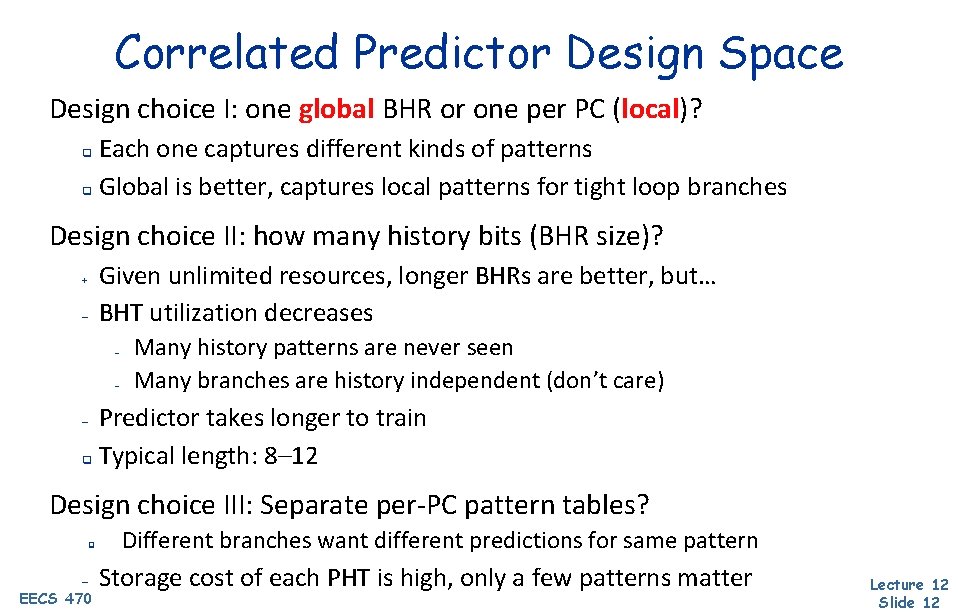

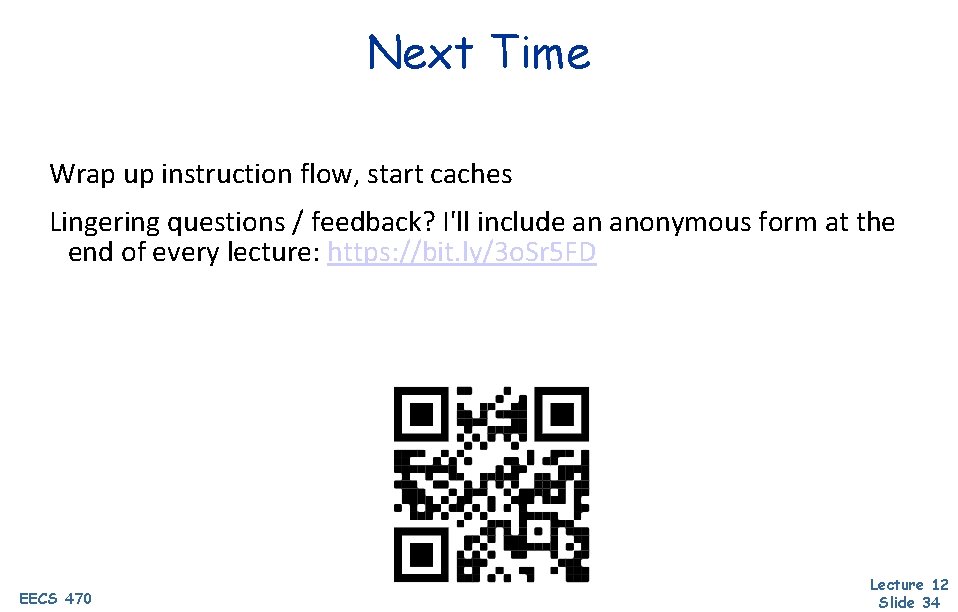

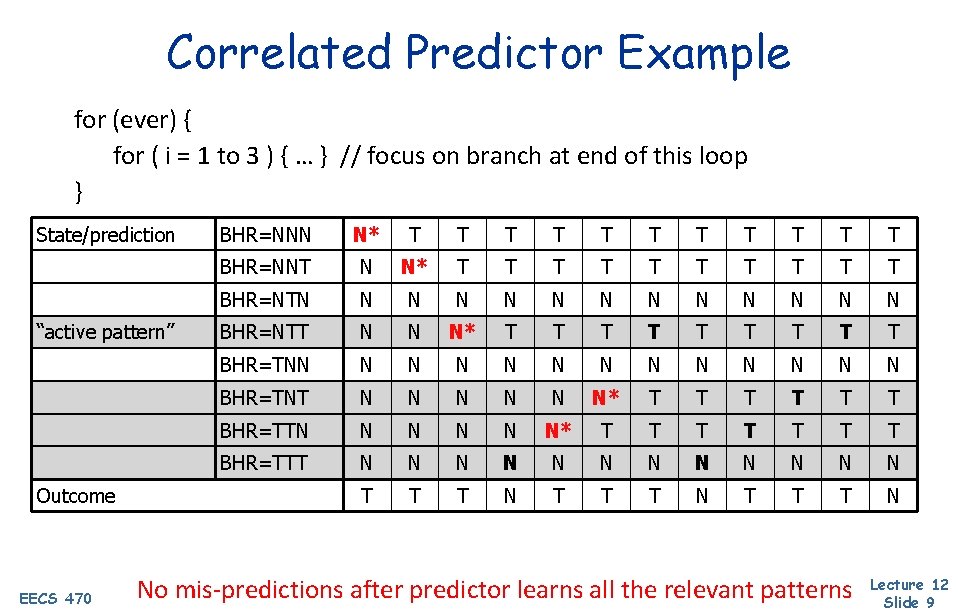

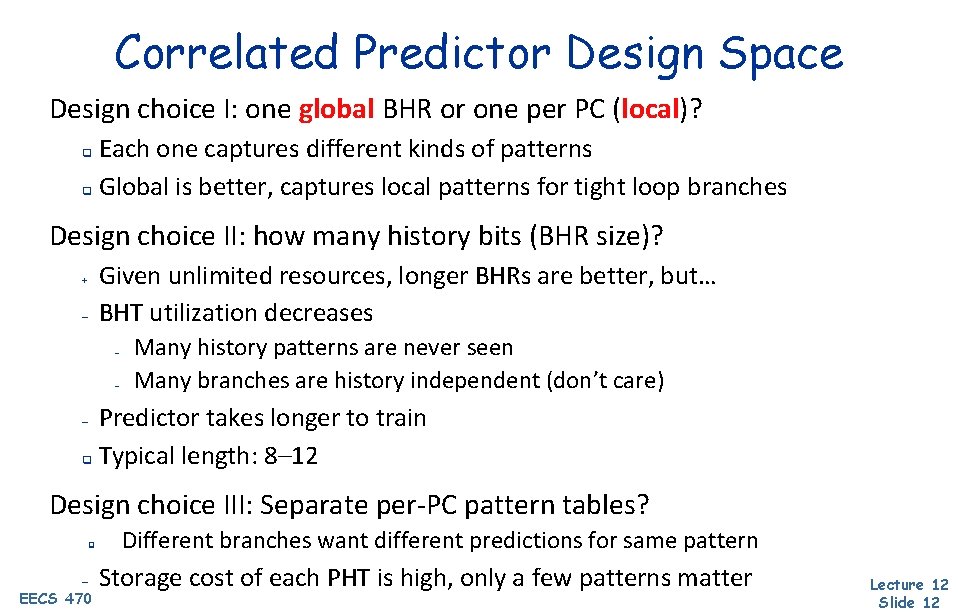

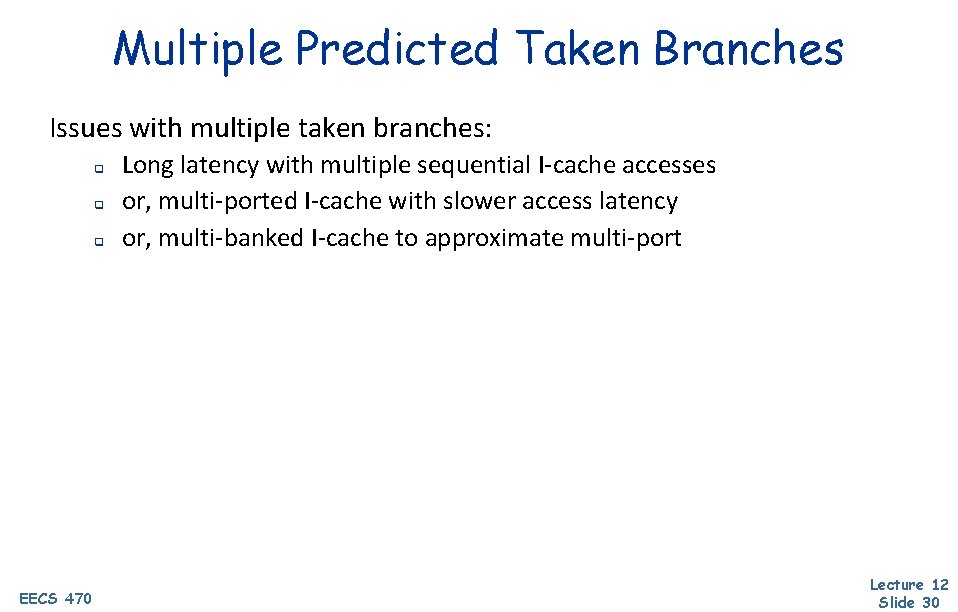

Trace Cache Motivation

[Figure: control-flow graph with basic blocks A–G (one branch edge taken 10% of the time, the other 90%), comparing I-cache line boundaries with trace-cache line boundaries]
- Storing traces (ABC, DFG) improves code density and fetch continuity

![Trace cache (Peleg+Weiser, Rotenberg+)](https://slidetodoc.com/presentation_image_h2/05ccf04259e41c416140099fb8e14698/image-32.jpg)

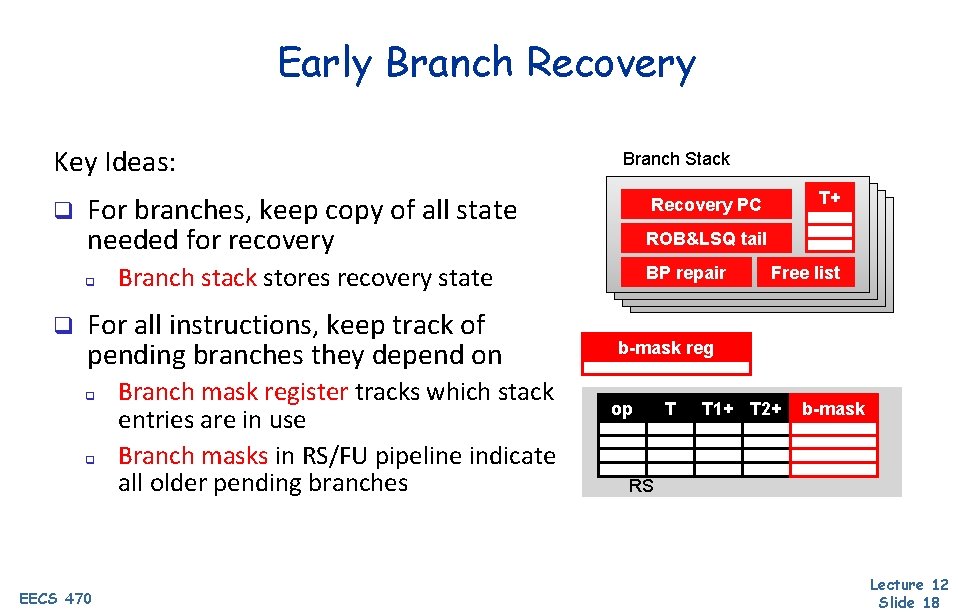

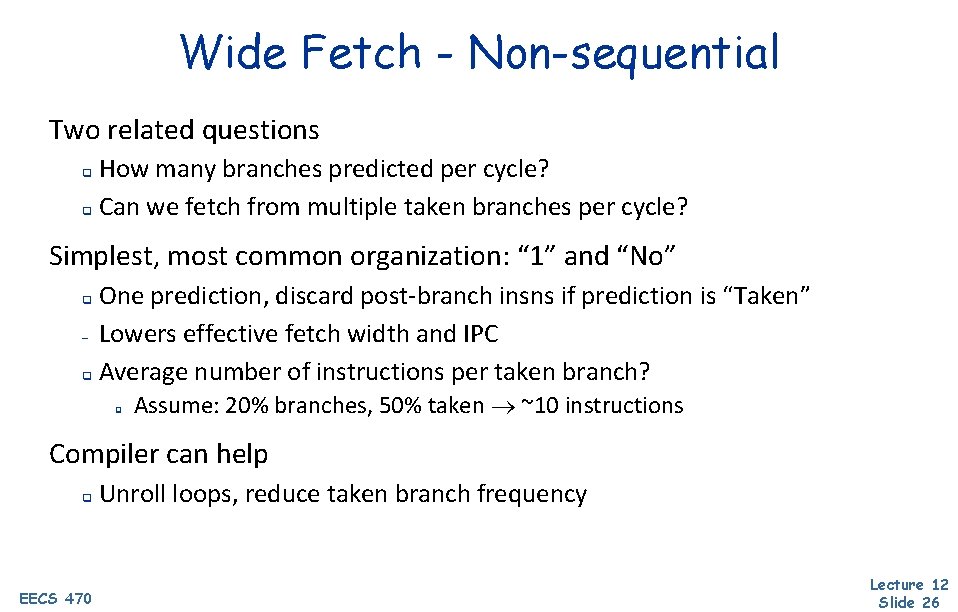

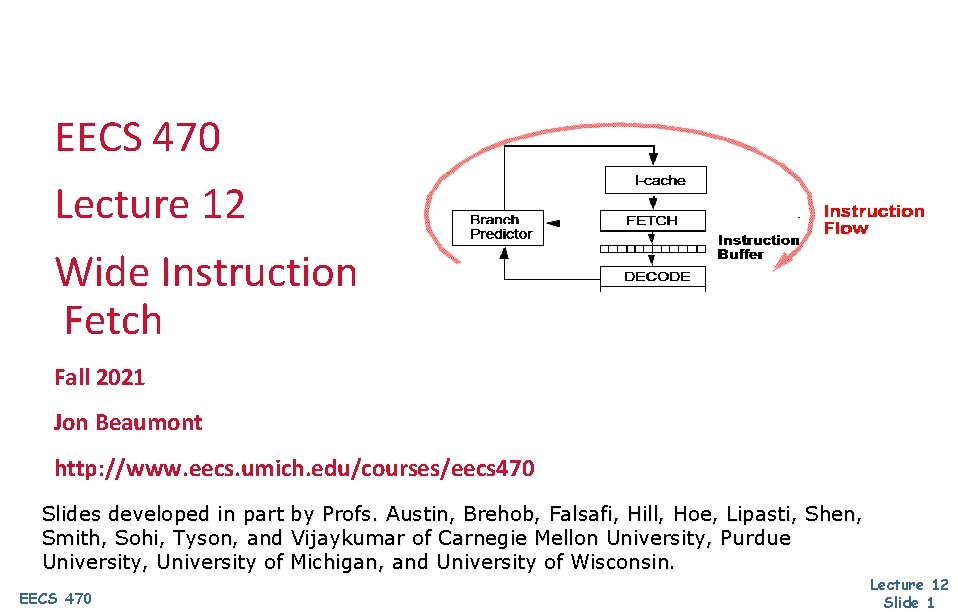

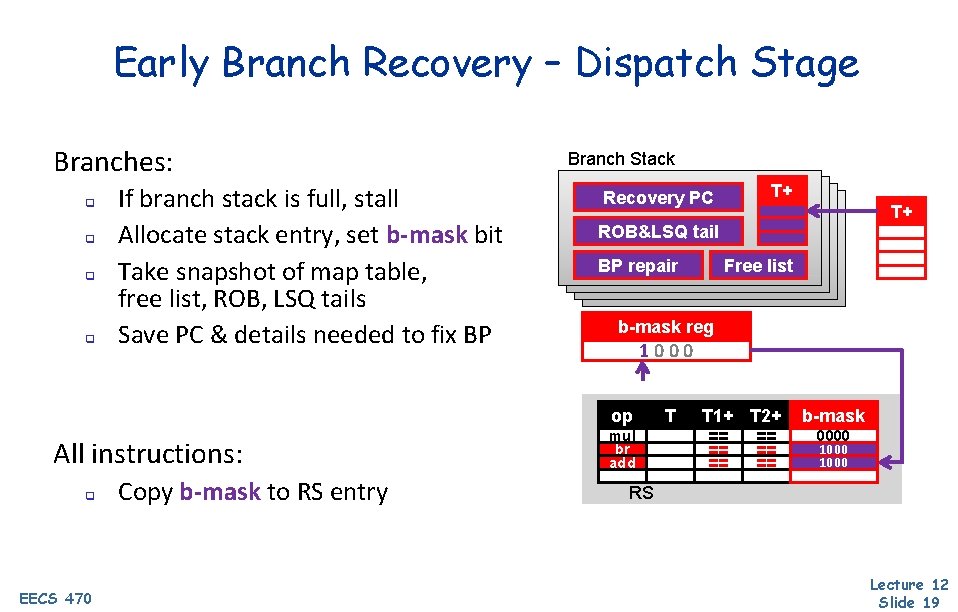

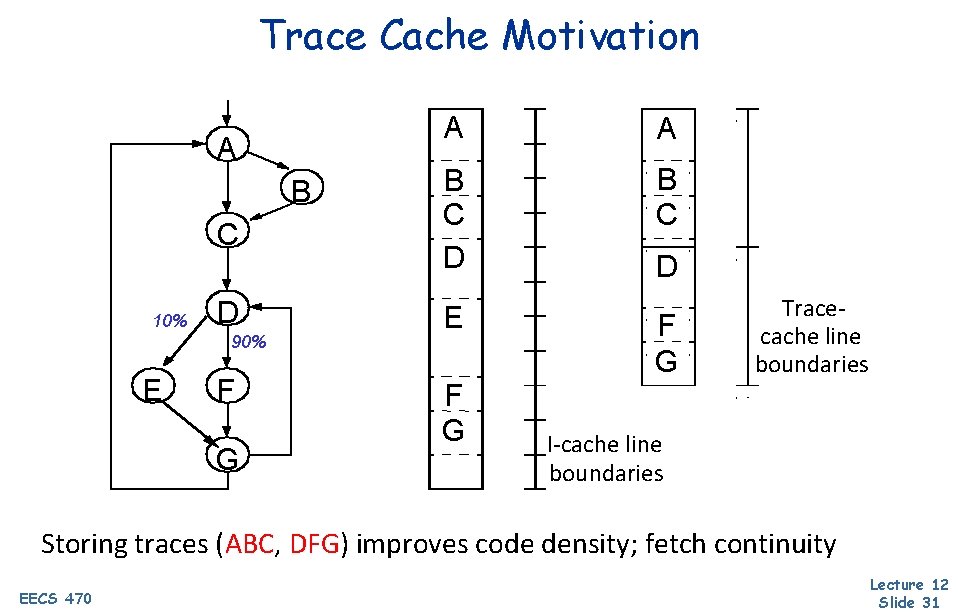

Trace Cache
- Trace cache (T$) [Peleg+Weiser, Rotenberg+]
  - Overcomes serialization of prediction and fetch by combining them
- New kind of I$ that stores dynamic, not static, insn sequences
  - Blocks can contain statically non-contiguous insns
  - Tag: PC of first insn + assumed direction of embedded branches
- Coupled with a trace predictor (TP)
  - Predicts the next trace, not the next branch
  - Trace identified by initial address & internal branch outcomes

[Figure: trace cache (T$) paired with a trace predictor (TP)]

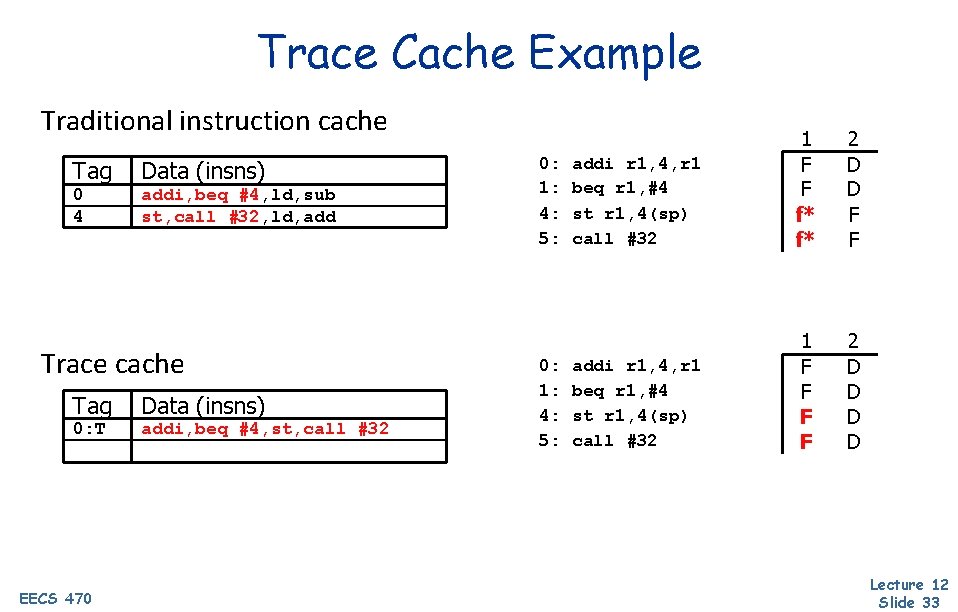

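Trace Cache Example

Traditional instruction cache:

| Tag | Data (insns) |
|---|---|
| 0 | addi, beq #4, ld, sub |
| 4 | st, call #32, ld, add |

Trace cache:

| Tag | Data (insns) |
|---|---|
| 0: T | addi, beq #4, st, call #32 |

Dynamic instruction sequence:
- 0: addi r1, 4, r1
- 1: beq r1, #4
- 4: st r1, 4(sp)
- 5: call #32

[Figure: pipeline diagrams. With the traditional I$, addi and beq are fetched in cycle 1 (the ld and sub after the taken beq are fetched and discarded, f*) and st and call are fetched in cycle 2; with the trace cache, all four come from a single line]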
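A sketch of trace cache tagging using the trace from this example (tag 0: T). It assumes a line is keyed by the start PC plus the directions of its embedded branches, and hits only if the predicted directions match; the class and method names are hypothetical, and capacity, replacement, and trace construction are ignored.

```python
class TraceCache:
    def __init__(self):
        self.lines = {}

    def fill(self, start_pc, branch_dirs, insns):
        self.lines[(start_pc, tuple(branch_dirs))] = list(insns)

    def lookup(self, start_pc, predicted_dirs):
        """Hit only if both the start PC and the predicted branch directions match."""
        return self.lines.get((start_pc, tuple(predicted_dirs)))


tc = TraceCache()
# Trace starting at PC 0 whose embedded beq (PC 1) is taken to PC 4
tc.fill(0, ["T"], ["addi", "beq #4", "st", "call #32"])
print(tc.lookup(0, ["T"]))   # hit: the whole trace in one access
print(tc.lookup(0, ["N"]))   # miss: a different path from the same start PC
```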

Next Time
- Wrap up instruction flow, start caches
- Lingering questions / feedback? I'll include an anonymous form at the end of every lecture: https://bit.ly/3oSr5FD