EECS 470 Lecture 11: Instruction Flow


EECS 470 Lecture 11: Instruction Flow
Winter 2021, Jon Beaumont, http://www.eecs.umich.edu/courses/eecs470
Slides developed in part by Profs. Austin, Brehob, Falsafi, Hill, Hoe, Lipasti, Shen, Smith, Sohi, Tyson, and Vijaykumar of Carnegie Mellon University, Purdue University, University of Michigan, and University of Wisconsin. EECS 470 Lecture 11 Slide 1

Announcements
- P3 due Thursday
  - Plan on needing a day or two to thoroughly test combinations of hazards and debug
- Project Proposal due Friday
- HW 3 due next Tuesday
- Midterm on Thursday 3/4 @ 6-8 pm
  - Will be administered on Gradescope
  - Alternate exam on 3/4 at 8 am
    - You must fill out the form listed on Piazza in order to take the alternate exam
  - Sample exams available on the website
    - One of the exams will be converted to a Gradescope format for practice
  - Covers everything in lecture and lab up through MIPS R10K (Lecture 9)
- No lecture Thursday (2/25); exam review next Tuesday (3/2)

Last Time
- Memory disambiguation: how to do loads/stores out of order

Today
How to effectively fetch instructions (particularly considering branches). Several features that can be useful for your final project:
- Instruction buffers
- Return Address Stack
- Correlated branch predictors

Readings
- For today: H&P 3.3, 3.9

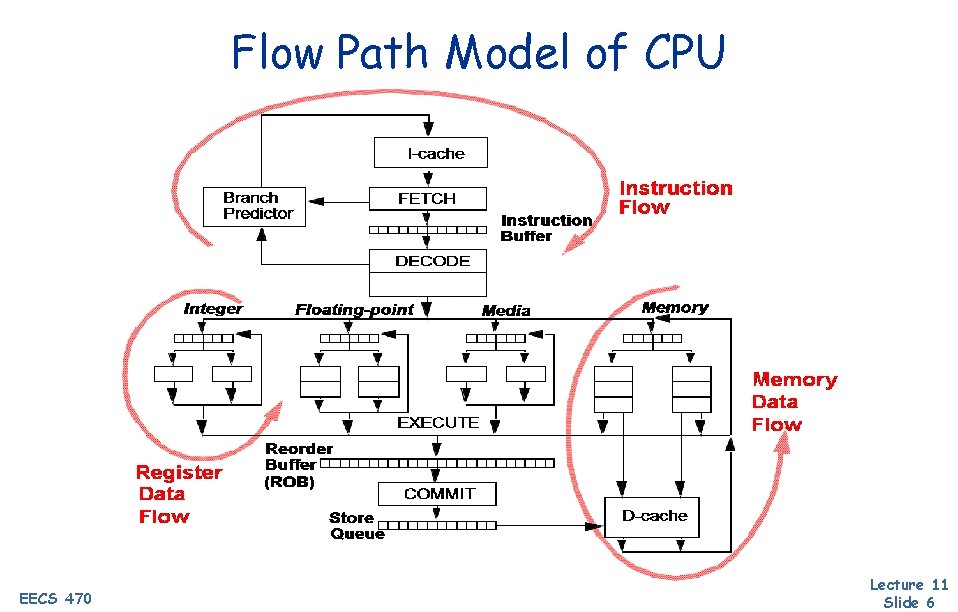

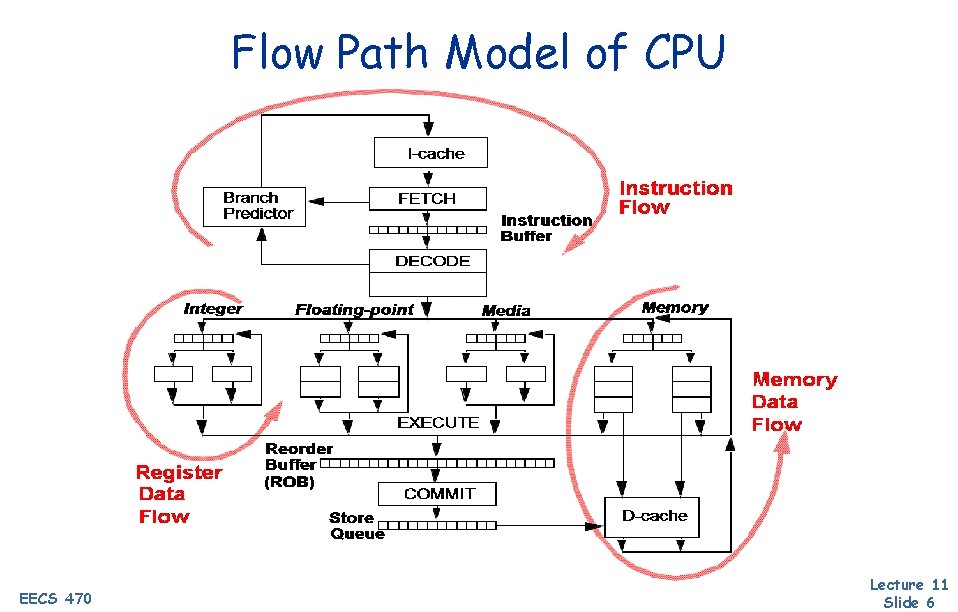

Flow Path Model of CPU

EECS 470 Roadmap [diagram]
- Speedup programs: reduce instruction latency, reduce number of instructions, parallelize
- Parallelize: thread, process, etc. level parallelism (programmability); instruction level parallelism
- Instruction level parallelism: pipelining, superscalar execution, dynamic scheduling, precise state, register dataflow, memory data flow, instruction flow
- Memory data flow: memory disambiguation
- Instruction flow: fetch buffer, branch prediction
- Branch prediction: target prediction (BTB, RAS) and direction prediction (local, global, hybrid)
- Diagram annotations: "First month… done", "Revisit later with caches…"

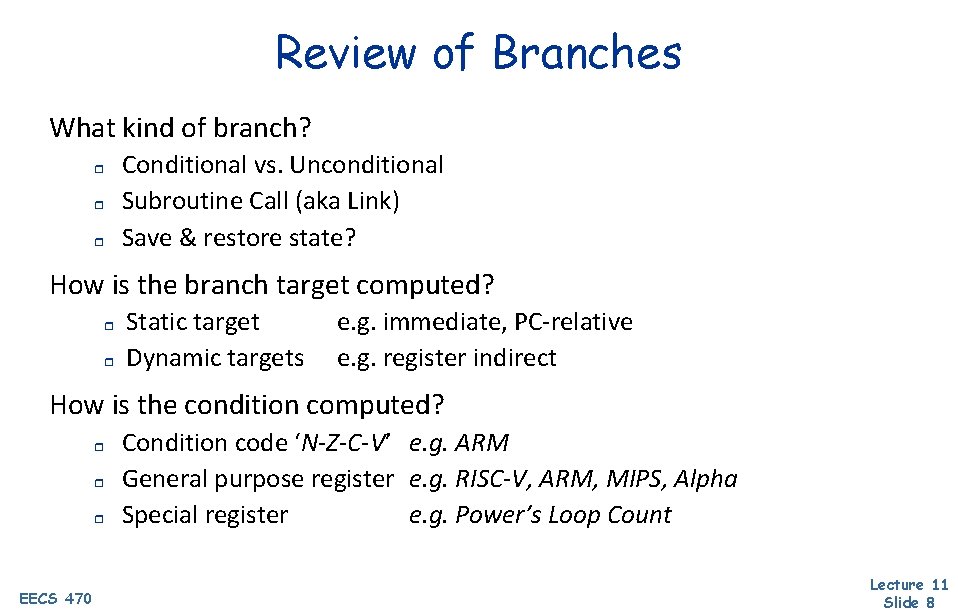

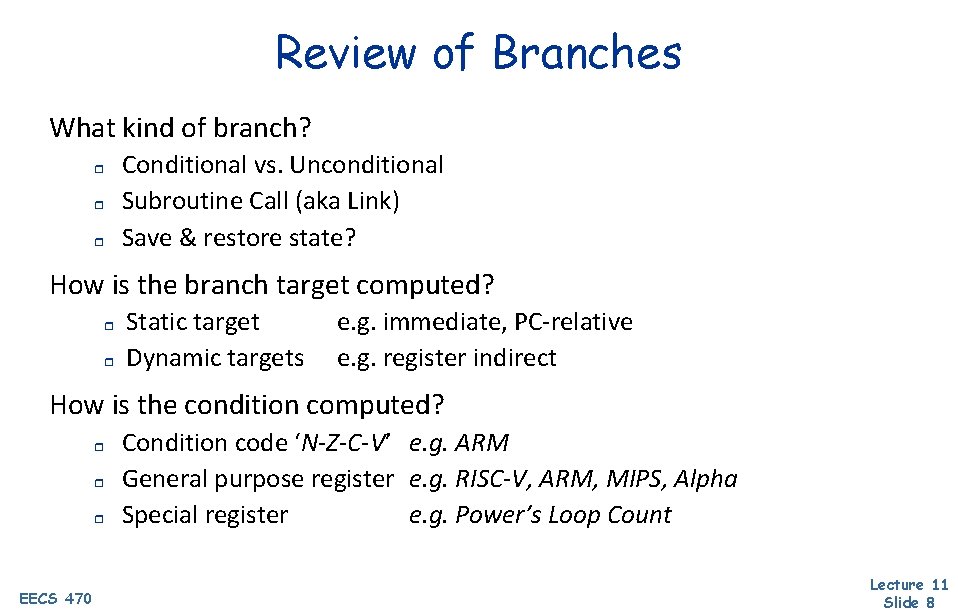

Review of Branches
- What kind of branch?
  - Conditional vs. unconditional
  - Subroutine call (aka link): save & restore state?
- How is the branch target computed?
  - Static target, e.g., immediate, PC-relative
  - Dynamic targets, e.g., register indirect
- How is the condition computed?
  - Condition code 'N-Z-C-V', e.g., ARM
  - General purpose register, e.g., RISC-V, ARM, MIPS, Alpha
  - Special register, e.g., Power's Loop Count

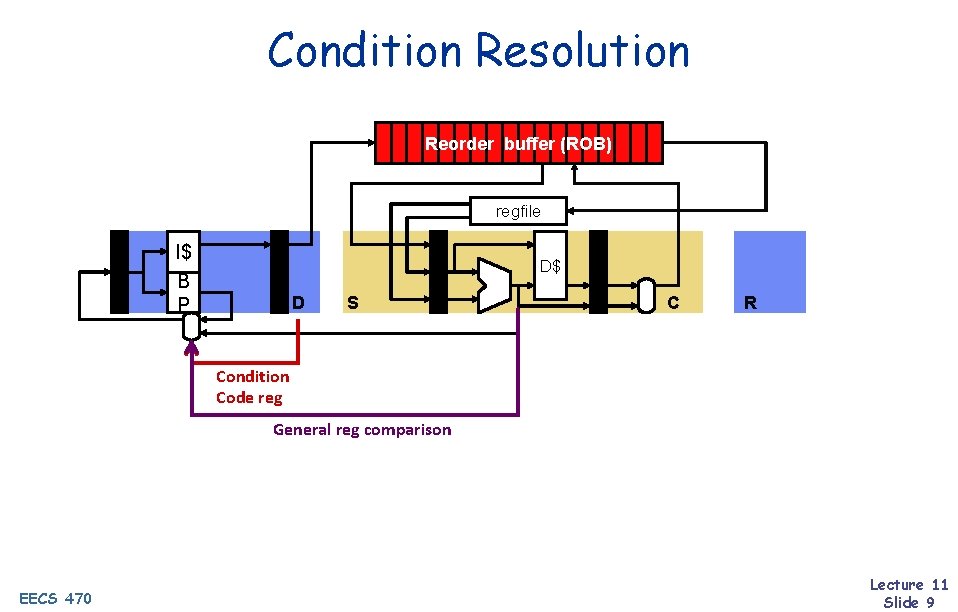

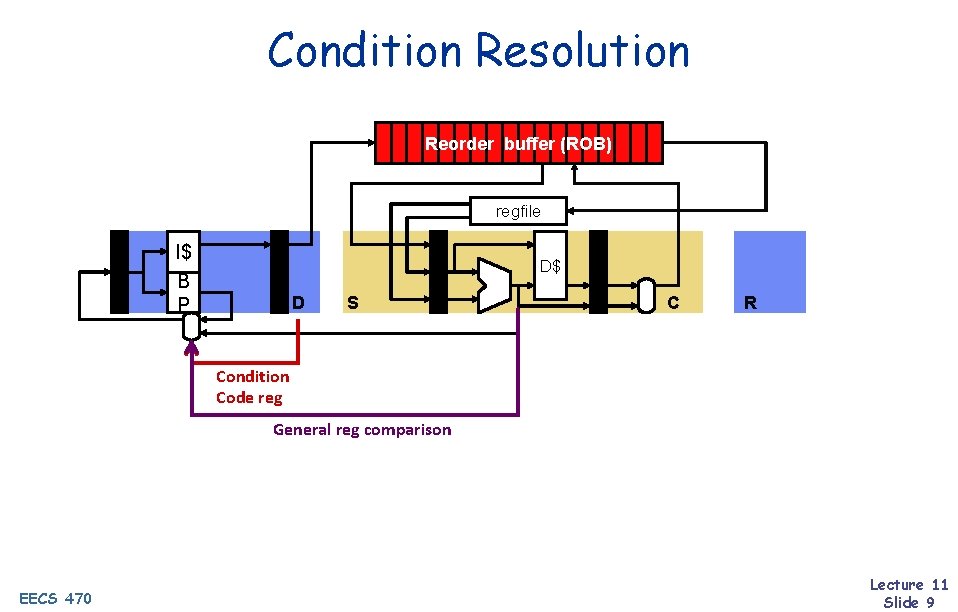

Condition Resolution
[diagram: pipeline with I$, BP, regfile, reorder buffer (ROB), D$, and stages D, S, C, R; the branch condition comes from a condition code reg or from a general reg comparison]

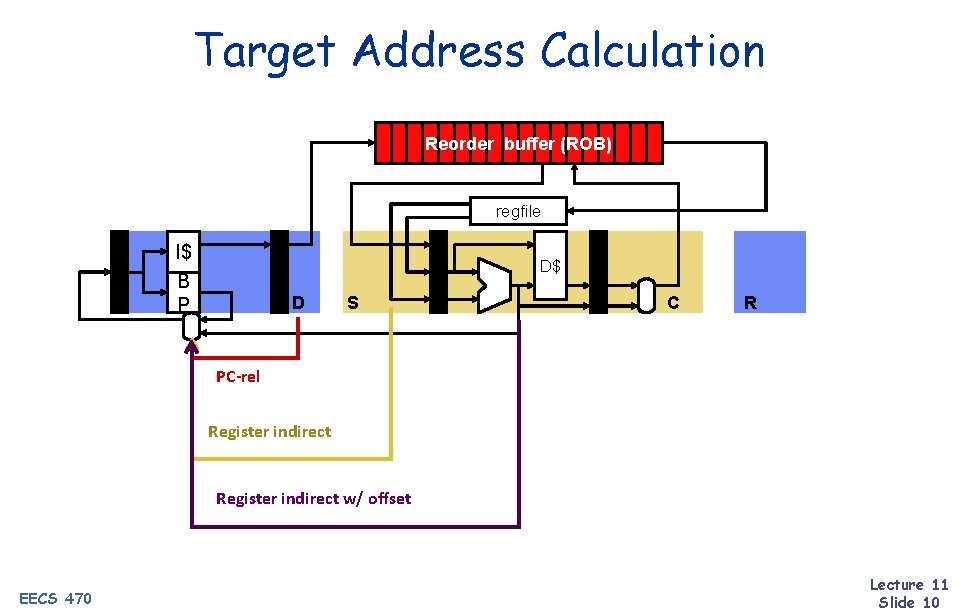

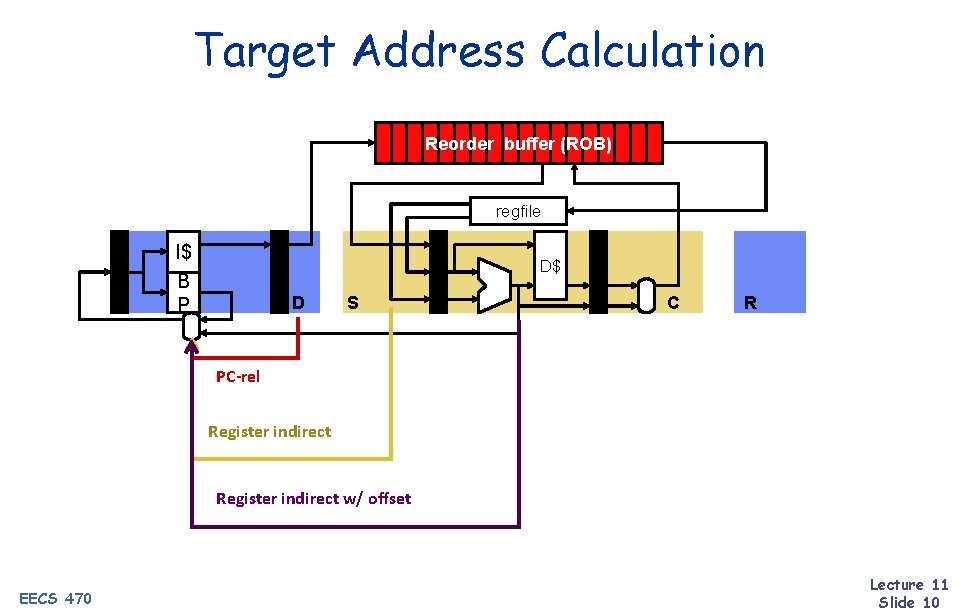

Target Address Calculation
[diagram: same pipeline (I$, BP, regfile, ROB, D$; stages D, S, C, R); the target is computed PC-relative or register indirect w/ offset]

What's So Bad About Branches?
Performance penalties:
- Use up execution resources
- Fragmentation of I$ lines
- Disruption of sequential control flow
  - Need to determine direction
  - Need to determine target
  - Often results in stalls
- Robs instruction fetch bandwidth and ILP

Instruction Fetch Buffer
[diagram: Fetch Unit → fetch buffer → Out-of-order Core]
We use buffers whenever we want to decouple two components of the pipeline. Where have we seen this before?
- The fetch buffer smooths the rate mismatch between F and D
  - Simple FIFO queue
  - Decouples fetch stalls and execution stalls
  - Can help hide I$ latencies
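A minimal sketch of the fetch buffer as a bounded FIFO. It assumes fetch deposits a whole fetch group only when all of it fits (otherwise fetch stalls) and decode drains up to a fixed width per cycle; the class name, method names, and sizes are hypothetical, not a required project interface.

```python
from collections import deque


class FetchBuffer:
    """Bounded FIFO decoupling fetch (producer) from decode (consumer)."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.q = deque()

    def can_accept(self, n):
        # Fetch may deposit a group of n insns only if all of them fit.
        return len(self.q) + n <= self.capacity

    def push(self, insns):
        """Called by fetch; returns False (fetch stalls) when the group does not fit."""
        if not self.can_accept(len(insns)):
            return False
        self.q.extend(insns)
        return True

    def pop(self, width):
        """Called by decode; removes up to `width` insns in program order."""
        out = []
        while self.q and len(out) < width:
            out.append(self.q.popleft())
        return out


# Hypothetical 8-entry buffer: fetch brings 4 insns per cycle, decode takes 2.
fb = FetchBuffer(capacity=8)
fb.push(["i0", "i1", "i2", "i3"])
fb.push(["i4", "i5", "i6", "i7"])   # decode stalled; fetch keeps running
print(fb.push(["i8"]))               # False: buffer full, fetch stalls
print(fb.pop(2))                     # ['i0', 'i1']
```

Because the queue absorbs bursts, a decode stall does not immediately stall fetch, and an I$ miss does not immediately starve decode while the buffer still holds instructions.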

Branch Prediction
Two high-level strategies:
- Static: the same prediction is made for a branch every time it executes
  - Made by the compiler, programmer, or hardware
- Dynamic: runtime information is used to make a (potentially) different prediction each time
  - Made by hardware

What are the advantages/disadvantages of each?

Branch Prediction Strategies
Static:
- Predict not taken
  - Pipelines do this naturally
  - Does not affect ISA
- Software prediction
  - Extra bit in the branch instruction
  - Bit set by compiler or user; can use profiling
  - Static prediction, same behavior every time
- Prediction based on branch offsets
  - Positive offset: predict not taken
  - Negative offset: predict taken

Dynamic:
- Prediction based on history in HW

What about branch target?

Poll: What control flow would likely result in the worst performance with predict "not taken"?
- a) if/else statement
- b) case statement
- c) for loop
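The two hardware-free static schemes can be compared on a branch trace. This sketch assumes each trace record is a hypothetical `(pc, target, taken)` tuple; the offset-based rule predicts taken exactly when the target lies below the branch PC (negative offset). The function names and the trace are made up for illustration.

```python
def predict_not_taken(pc, target):
    """Static: always predict fall-through."""
    return False


def predict_by_offset(pc, target):
    """Static, offset-based: negative offset (backward) -> taken, positive -> not taken."""
    return target < pc


def accuracy(predictor, trace):
    """Fraction of (pc, target, taken) records the predictor gets right."""
    correct = sum(predictor(pc, target) == taken for pc, target, taken in trace)
    return correct / len(trace)


# Hypothetical trace: a backward branch at 0x40 to 0x10 that is taken 9 times,
# then falls through once, plus a forward branch at 0x50 to 0x80 that is never taken.
trace = [(0x40, 0x10, True)] * 9 + [(0x40, 0x10, False)] + [(0x50, 0x80, False)] * 10

print(accuracy(predict_not_taken, trace))   # 0.55
print(accuracy(predict_by_offset, trace))   # 0.95
```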

Dynamic Branch Prediction
[diagram: regfile, I$, BP, D$]
- BP part I: target predictor (easy)
  - Applies to all control transfers
  - Supplies target PC, tells if insn is a branch prior to decode
- BP part II: direction predictor (harder)
  - Applies to conditional branches only
  - Predicts taken/not-taken

![Branch Target Buffer](https://slidetodoc.com/presentation_image_h2/35c9c229896119d61db78caa2f850bc5/image-16.jpg)

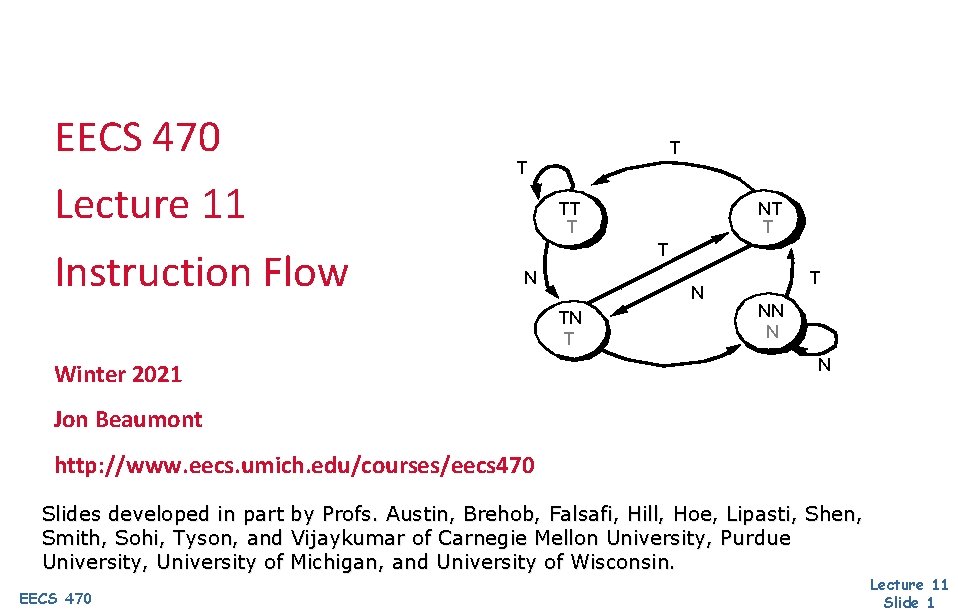

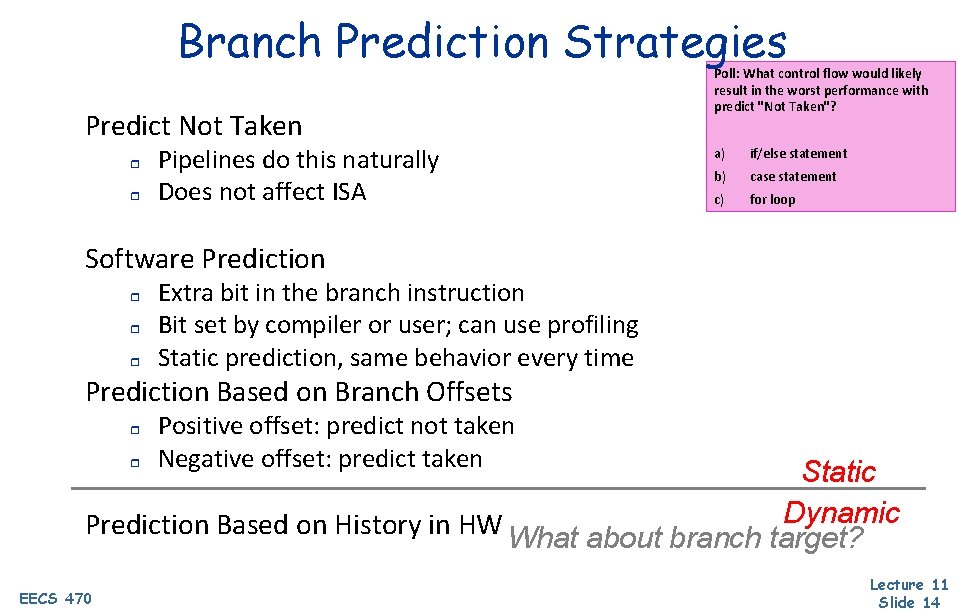

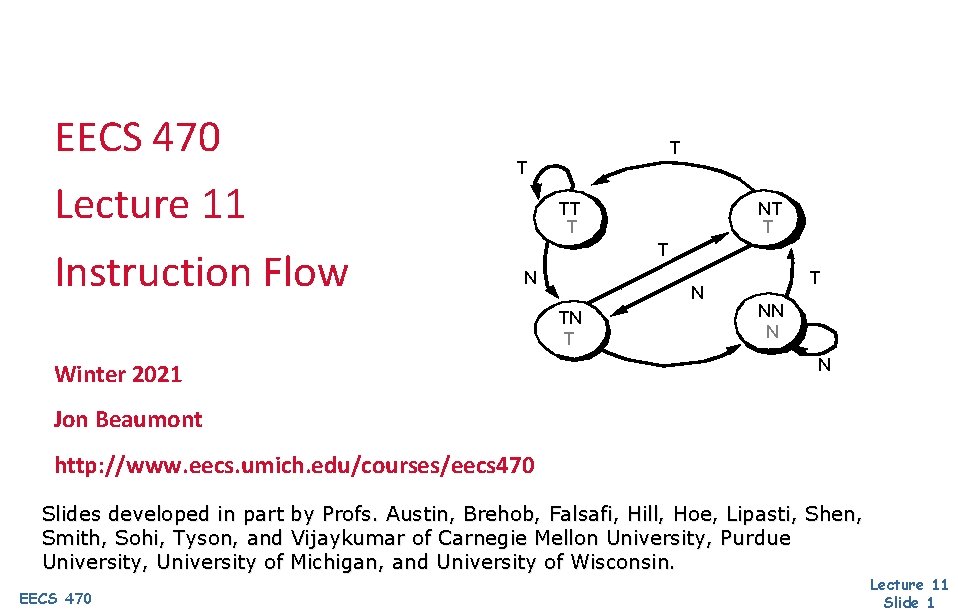

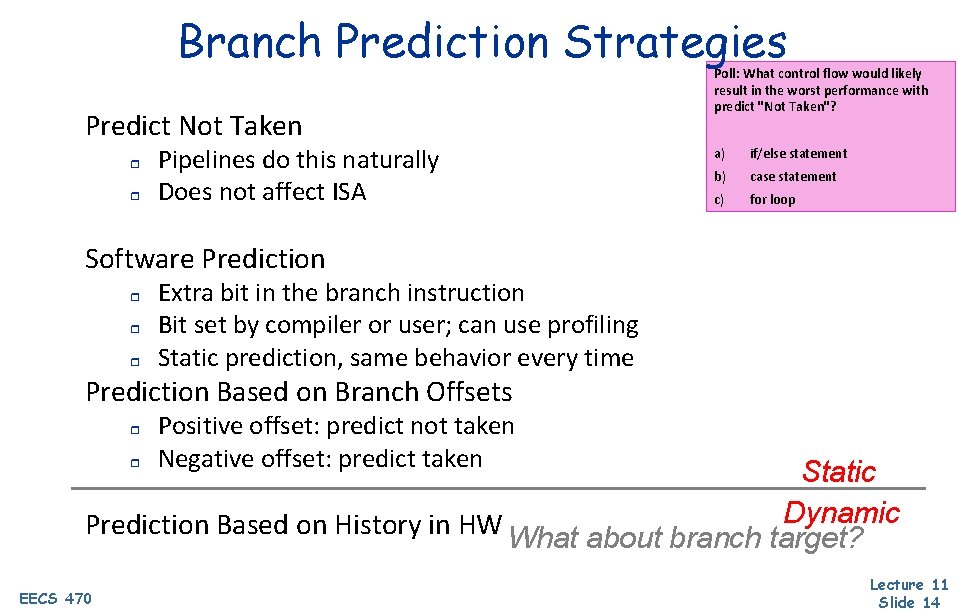

Branch Target Buffer
[diagram: PC fields [31:10], [9:2], [1:0]; BTB entries hold a partial tag [19:10] and a partial target [13:2]; a tag compare (=) answers "branch?"; target-PC is assembled from [31:14], [13:2], and [1:0]]
Branch target buffer (BTB)
- Used to predict the target of branches
- A small cache: address = PC, data = target-PC
- Hit? This is a control insn and it's going to target-PC (if "taken")
- Miss? Not a control insn, or one I have never seen before
- Partial data/tags: full tag not necessary, target-PC is just a guess
- Aliasing: tag match, but not actual match (OK for BTB)
- Pentium 4 BTB: 2K entries, 4-way set-associative
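A sketch of a direct-mapped BTB following one reading of the figure: PC[9:2] indexes 256 entries, a partial tag PC[19:10] is compared to answer "branch?", and only target bits [13:2] are stored, with the upper target bits borrowed from the fetch PC. The sizes and bit fields are assumptions taken from the figure labels, and the class is hypothetical. The example shows both a partial-tag miss and an alias hit that yields a wrong target guess.

```python
class BranchTargetBuffer:
    """Direct-mapped BTB with partial tags and partial targets (sizes assumed from the figure)."""

    INDEX_BITS = 8    # PC[9:2]   -> 256 entries
    TAG_BITS = 10     # PC[19:10] partial tag
    TARGET_BITS = 12  # target[13:2] stored per entry

    def __init__(self):
        self.entries = [None] * (1 << self.INDEX_BITS)  # each entry: (tag, target_bits) or None

    def _index(self, pc):
        return (pc >> 2) & ((1 << self.INDEX_BITS) - 1)

    def _tag(self, pc):
        return (pc >> 10) & ((1 << self.TAG_BITS) - 1)

    def update(self, pc, target):
        """Install or overwrite the entry for a taken control insn at pc."""
        target_bits = (target >> 2) & ((1 << self.TARGET_BITS) - 1)
        self.entries[self._index(pc)] = (self._tag(pc), target_bits)

    def lookup(self, pc):
        """Return a predicted target-PC on a partial-tag hit, or None on a miss."""
        entry = self.entries[self._index(pc)]
        if entry is None or entry[0] != self._tag(pc):
            return None                      # not a control insn, or never seen
        upper = pc & ~((1 << 14) - 1)        # PC[31:14] taken from the fetch PC
        return upper | (entry[1] << 2)       # target[13:2] from the BTB, [1:0] = 00


btb = BranchTargetBuffer()
btb.update(0x1000, 0x1040)
print(hex(btb.lookup(0x1000)))     # 0x1040: hit
print(btb.lookup(0x2000))          # None: same index, different partial tag
print(hex(btb.lookup(0x101000)))   # 0x101040: alias (PC bits above 19 are not checked)
```

A real design such as the 4-way set-associative Pentium 4 BTB would also need a replacement policy; direct mapping just keeps the sketch short.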

Why Does a BTB Work?
Because control insn targets are stable:
- Direct means constant target, indirect means register target
- Direct conditional branches? Check
- Direct calls? Check
- Direct unconditional jumps? Check
- Indirect conditional branches? Not that useful, not widely supported
- Indirect calls? Two idioms:
  - Dynamically linked functions (DLLs)? Check
  - Dynamically dispatched (virtual) functions? Probably as good as we can do
- Indirect unconditional jumps?
  - Returns? Nope, but…

Poll: What's the best way to predict "return" instruction targets?
- a) Remember last target for that return instr
- b) Calculate target before decoding
- c) Keep track of PCs of jump instrs

Return Address Stack (RAS)
[diagram: PC feeds I$, DIRP, BTB, RAS, and +4, which together select next-PC]
Return addresses are easy to predict without a BTB:
- Hardware return address stack (RAS) tracks the call sequence
- Calls push PC+4 onto the RAS
- Prediction for returns is RAS[TOS]

Q: How can you tell if an insn is a return before decoding it?
- The RAS is not a cache

A: Attach pre-decode bits to the I$
- Written after the first time the insn executes
- Two useful bits: return?, conditional-branch?
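A minimal RAS sketch, assuming fixed 4-byte instructions and a policy of discarding the oldest entry when the stack overflows (real designs differ, and the repair of speculative pushes/pops after mispredictions is omitted). The class name and default depth are hypothetical.

```python
from collections import deque


class ReturnAddressStack:
    """Fixed-depth return address stack; on overflow the oldest entry is discarded."""

    def __init__(self, depth=16, insn_bytes=4):
        # deque(maxlen=...) silently drops the leftmost (oldest) item when full
        self.entries = deque(maxlen=depth)
        self.insn_bytes = insn_bytes

    def on_call(self, pc):
        """A call at pc pushes its return address, PC+4."""
        self.entries.append(pc + self.insn_bytes)

    def predict_return(self):
        """A return pops RAS[TOS]; None means the stack is empty (no prediction)."""
        return self.entries.pop() if self.entries else None


ras = ReturnAddressStack()
ras.on_call(0x100)                # main calls f
ras.on_call(0x200)                # f calls g
print(hex(ras.predict_return()))  # 0x204: g returns into f
print(hex(ras.predict_return()))  # 0x104: f returns into main
```

Overflow only loses the outermost return addresses, so deep recursion mispredicts on the way out while shallow call chains stay exact.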

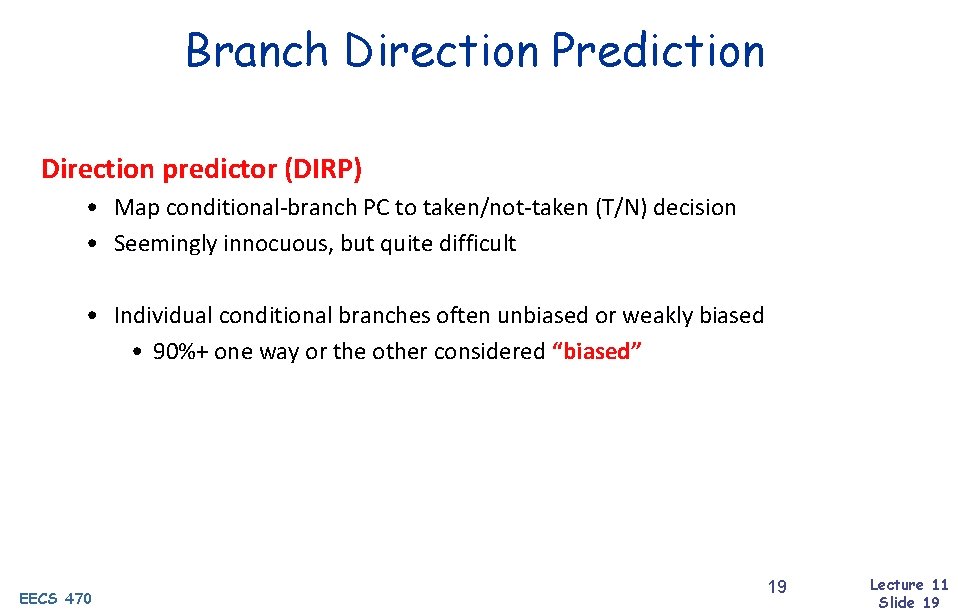

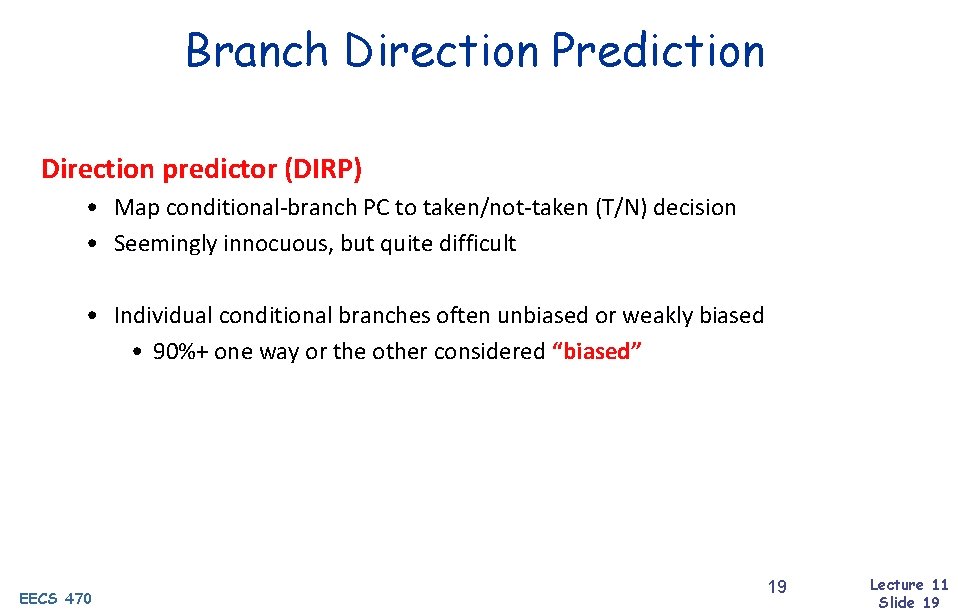

Branch Direction Prediction
Direction predictor (DIRP)
- Maps a conditional-branch PC to a taken/not-taken (T/N) decision
- Seemingly innocuous, but quite difficult
- Individual conditional branches are often unbiased or weakly biased
  - 90%+ one way or the other is considered "biased"

Branch History Table (BHT)
Branch history table (BHT): simplest direction predictor
- PC indexes a table of bits (0 = N, 1 = T), no tags (why not?)
- Essentially: the branch will go the same way it went last time

[diagram: branch PC indexes the branch history table; each entry predicts NT or T]

How big should the BHT be?
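A sketch of the 1-bit BHT, assuming 4-byte instructions (so PC[1:0] is dropped) and a hypothetical table size. Because there are no tags, two branches whose PCs share index bits silently share an entry; the example shows that aliasing.

```python
class OneBitBHT:
    """Untagged table of 1-bit predictions (0 = N, 1 = T), indexed by low PC bits."""

    def __init__(self, index_bits=10):
        self.mask = (1 << index_bits) - 1
        self.bits = [0] * (1 << index_bits)  # every entry starts as not-taken

    def _index(self, pc):
        return (pc >> 2) & self.mask  # skip the byte offset of 4-byte insns

    def predict(self, pc):
        return self.bits[self._index(pc)] == 1

    def update(self, pc, taken):
        # The branch will go the same way it went last time.
        self.bits[self._index(pc)] = 1 if taken else 0


bht = OneBitBHT(index_bits=4)   # tiny 16-entry table to make aliasing easy to see
bht.update(0x40, True)
print(bht.predict(0x40))        # True
print(bht.predict(0x80))        # True: 0x80 maps to the same entry as 0x40
```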

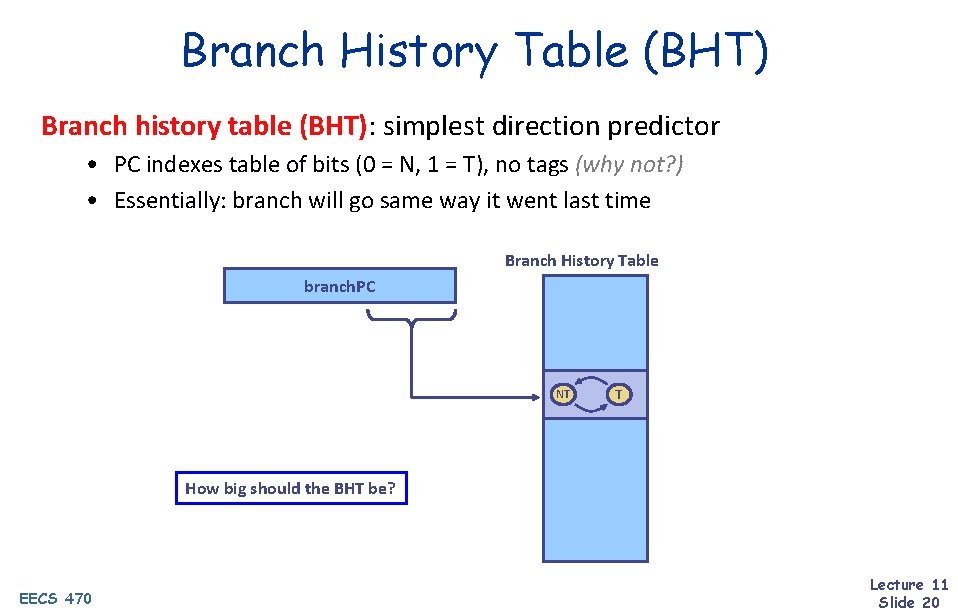

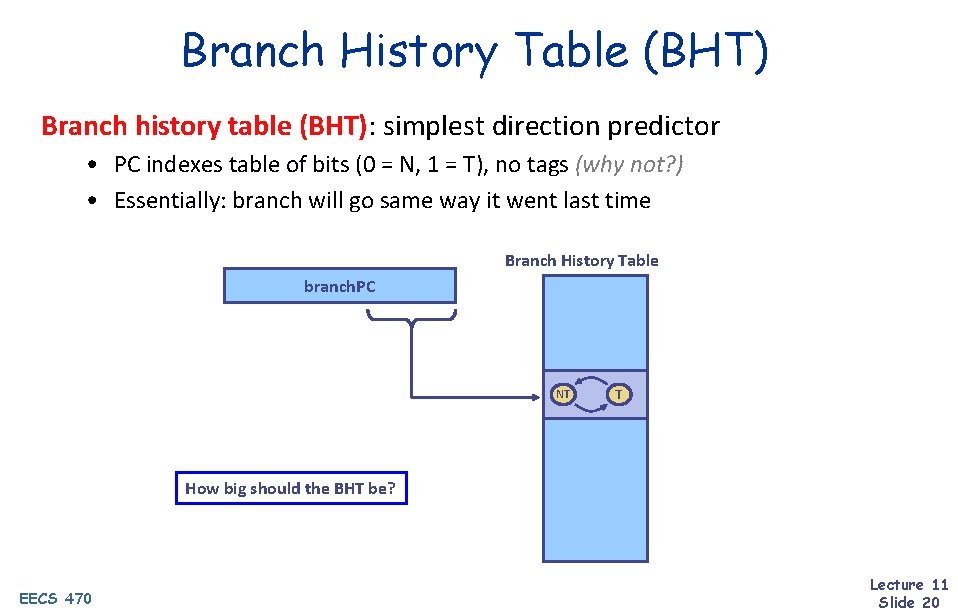

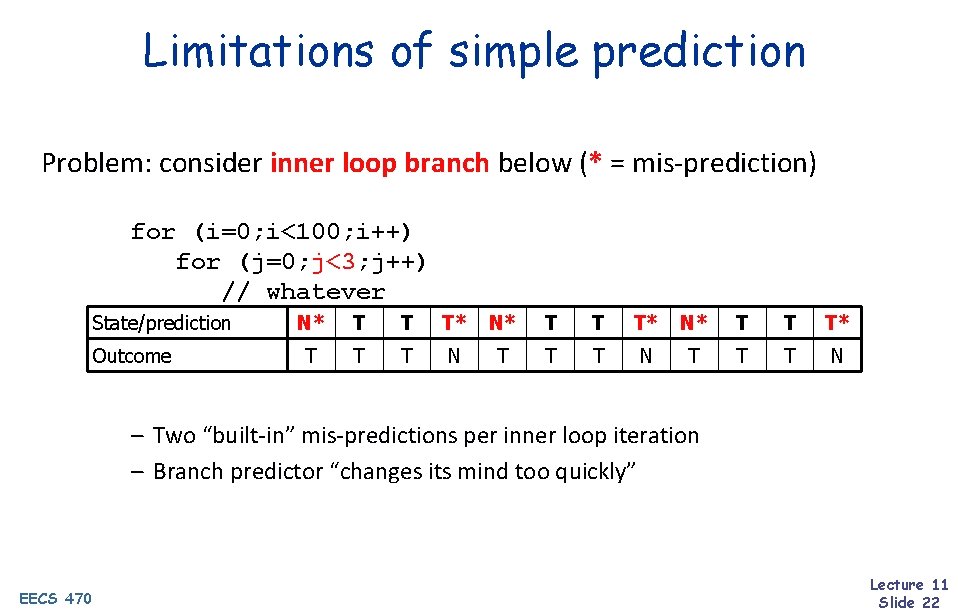

Limitations of simple prediction
Problem: consider the inner loop branch below (* = mis-prediction):
`for (i=0; i<100; i++) for (j=0; j<3; j++) // whatever`
- State/prediction: N* …
- Outcome: T T T …

Poll: What is the "steady-state" misprediction rate of the inner loop?
- Two "built-in" mis-predictions per inner loop iteration
- Branch predictor "changes its mind too quickly"

Limitations of simple prediction
Problem: consider the inner loop branch below (* = mis-prediction):
`for (i=0; i<100; i++) for (j=0; j<3; j++) // whatever`
- State/prediction: N* T T T* N* …
- Outcome: T T T N T …
- Two "built-in" mis-predictions per inner loop iteration
- Branch predictor "changes its mind too quickly"
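The steady-state rate can be checked by simulating a single 1-bit entry on the inner-loop outcome stream T T T N, repeated once per outer iteration (the condition `j<3` is evaluated four times per inner loop: true three times, false once). The helper name is hypothetical.

```python
def count_mispredictions_1bit(outcomes, initial=False):
    """Simulate one 1-bit BHT entry; outcomes are True (taken) / False (not taken)."""
    state = initial
    misses = 0
    for taken in outcomes:
        if state != taken:
            misses += 1
        state = taken  # remember only the last outcome
    return misses


inner = [True, True, True, False]        # T T T N
trace = inner * 100                      # 100 outer iterations
misses = count_mispredictions_1bit(trace)
print(misses, misses / len(trace))       # 200 0.5
```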

![Two-Bit Saturating Counters (2bc)](https://slidetodoc.com/presentation_image_h2/35c9c229896119d61db78caa2f850bc5/image-23.jpg)

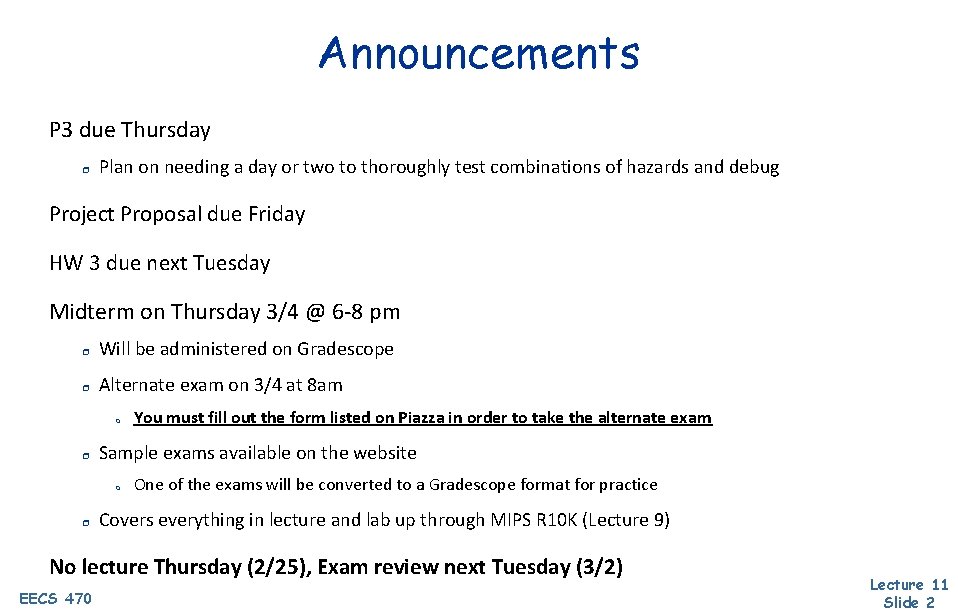

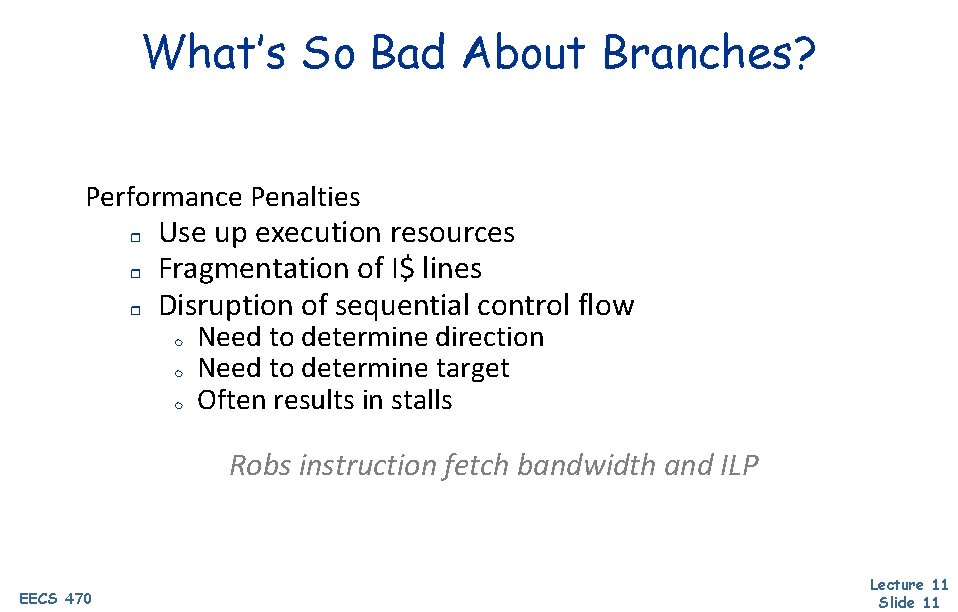

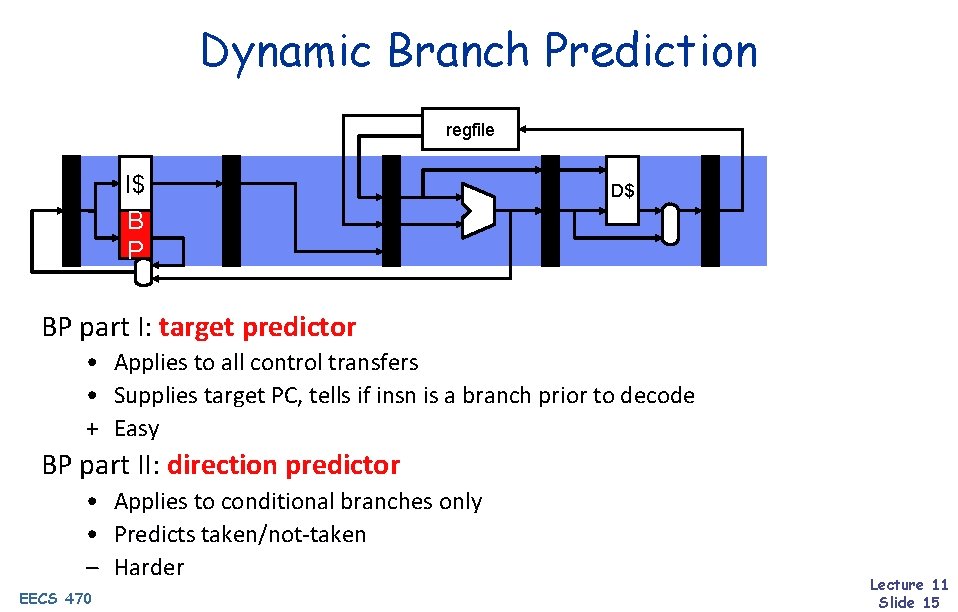

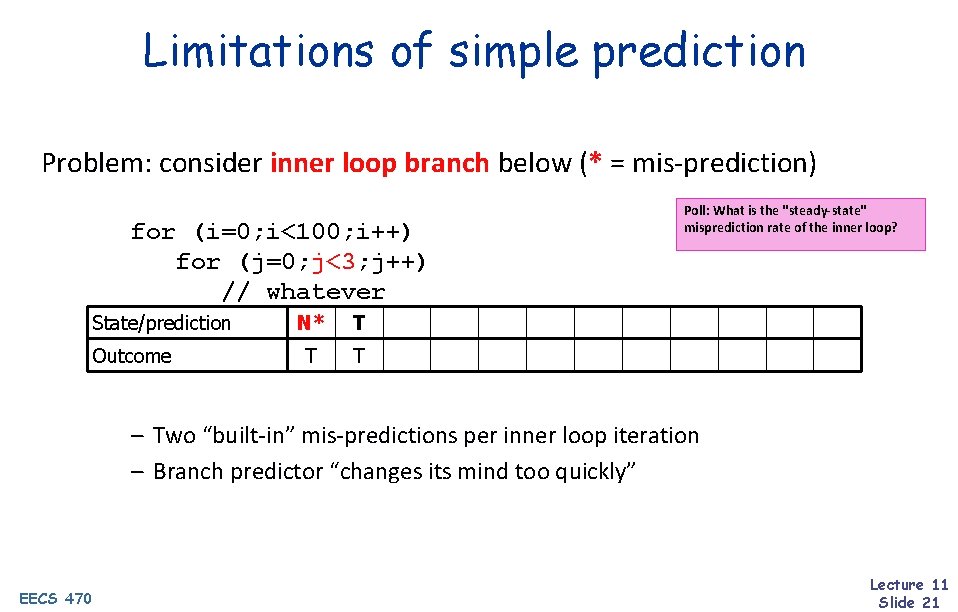

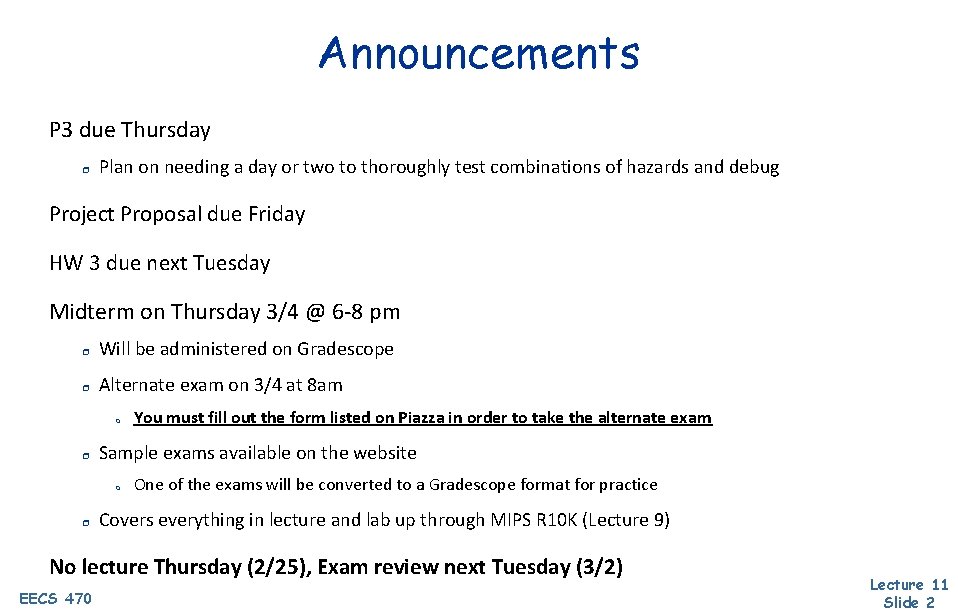

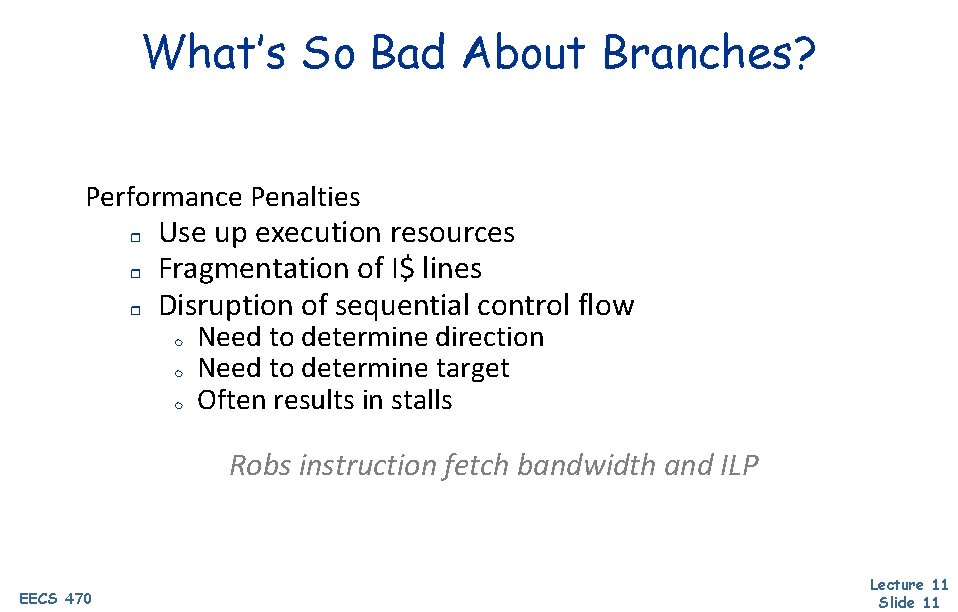

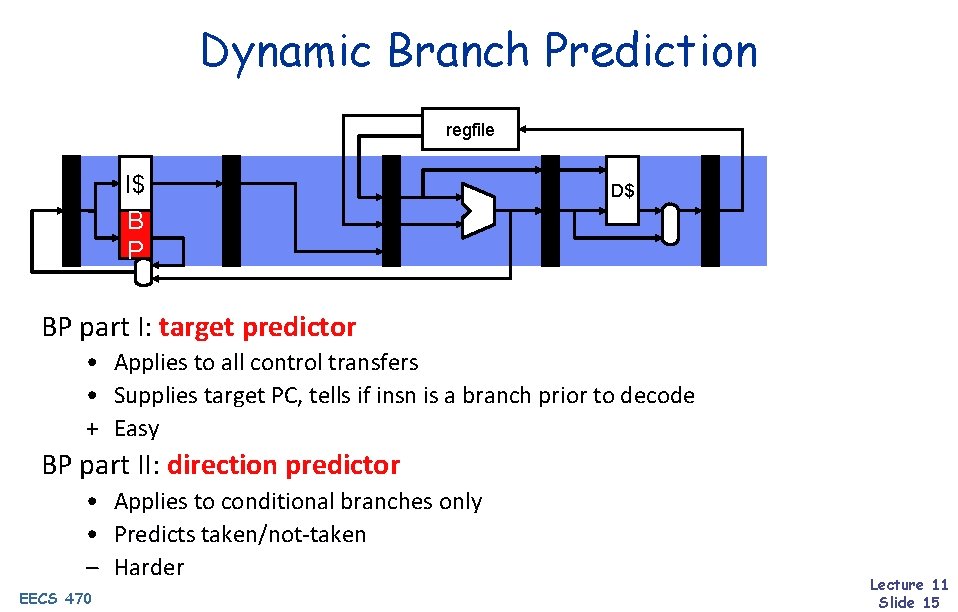

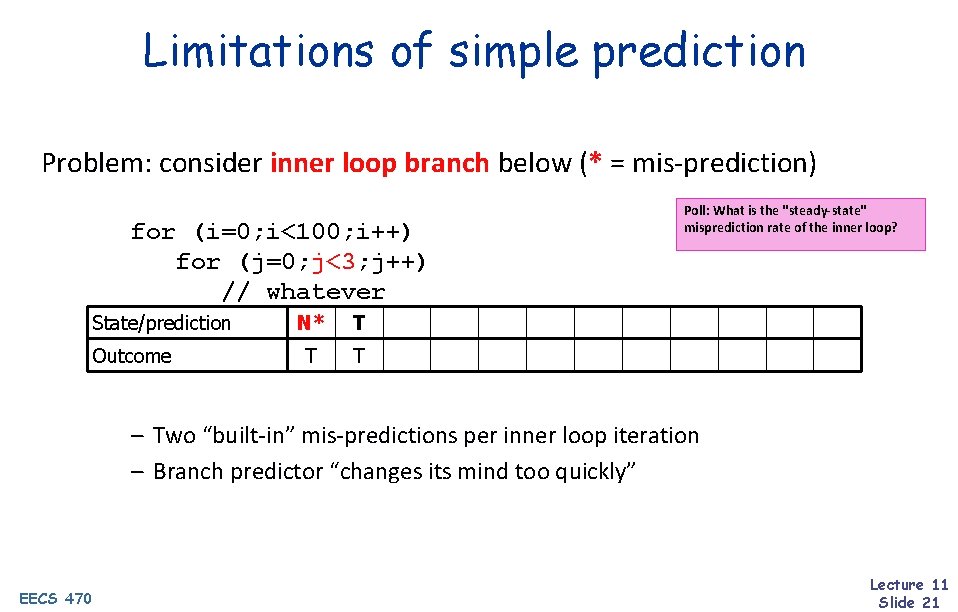

Two-Bit Saturating Counters (2bc)
Two-bit saturating counters (2bc) [Smith]
- Replace each single-bit prediction with a counter
  - (0, 1, 2, 3) = (N, n, t, T)
- Force DIRP to mis-predict twice before "changing its mind"
- State/prediction: N* n* …
- Outcome: T T T N …
- Fixes this pathology (which is not contrived, by the way)

[diagram: branch PC indexes the branch history table; counter states SN, NT, T, ST]

![Two-Bit Saturating Counters (2bc)](https://slidetodoc.com/presentation_image_h2/35c9c229896119d61db78caa2f850bc5/image-24.jpg)

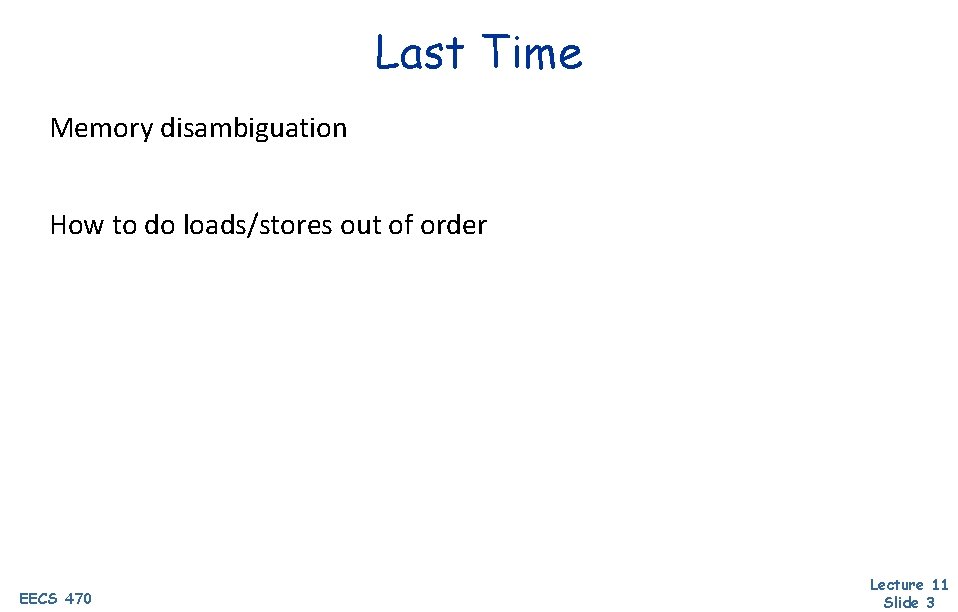

Two-Bit Saturating Counters (2bc)
Two-bit saturating counters (2bc) [Smith]
- Replace each single-bit prediction with a counter
  - (0, 1, 2, 3) = (N, n, t, T)
- Force DIRP to mis-predict twice before "changing its mind"
- State/prediction: N* n* t T* t T T T* …
- Outcome: T T T N T T T N …
- Fixes this pathology (which is not contrived, by the way)

[diagram: branch PC indexes the branch history table; counter states SN, NT, T, ST]
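The same T T T N outcome stream can be run through a single two-bit saturating counter, encoded as 0..3 = N, n, t, T and predicting taken in states t and T. The helper name is hypothetical; the counter starts in state N, as in the slide.

```python
def count_mispredictions_2bc(outcomes, initial=0):
    """Simulate one 2-bit saturating counter: 0=N, 1=n, 2=t, 3=T; predict taken if >= 2."""
    counter = initial
    misses = 0
    for taken in outcomes:
        if (counter >= 2) != taken:
            misses += 1
        # saturate at 0 and 3 instead of wrapping around
        counter = min(counter + 1, 3) if taken else max(counter - 1, 0)
    return misses


inner = [True, True, True, False]                # T T T N
print(count_mispredictions_2bc(inner))          # 3: warm-up (N*, n*) plus the loop exit
print(count_mispredictions_2bc(inner * 100))    # 102: one miss per inner loop after warm-up
```

In steady state the counter only dips from T to t at the loop exit and still predicts taken on re-entry, so it mis-predicts once per inner loop instead of twice.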

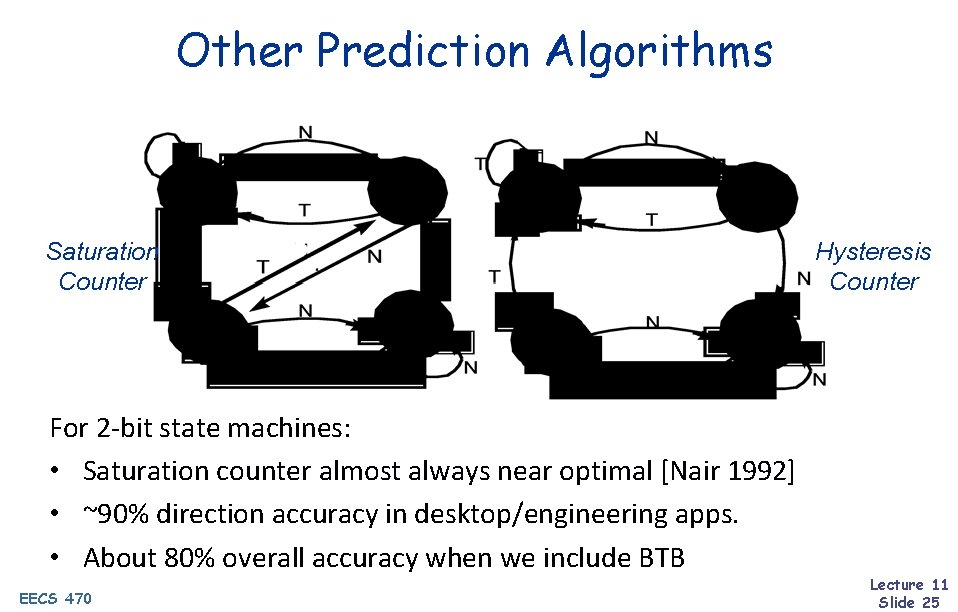

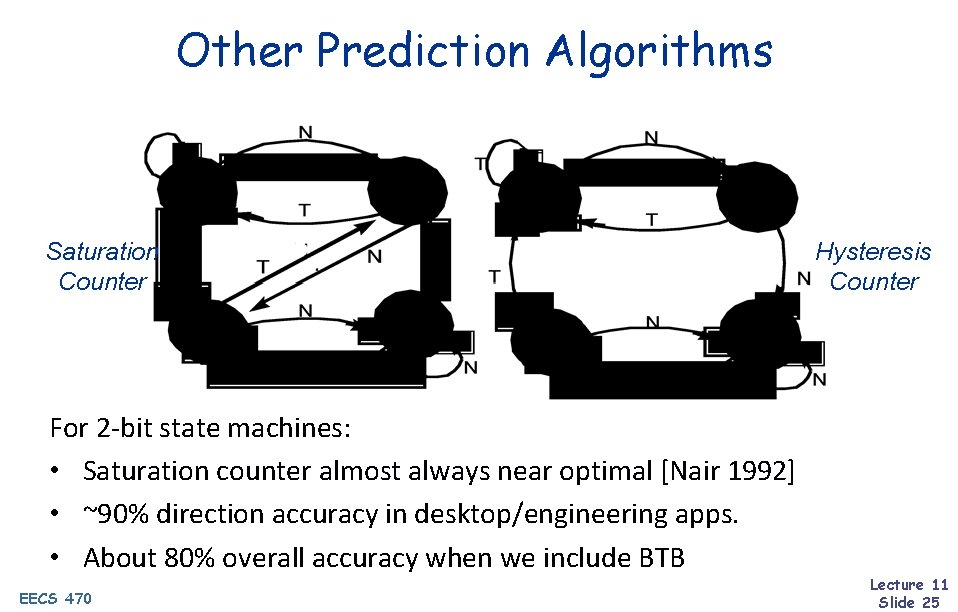

Other Prediction Algorithms
[diagrams: Saturation Counter, Hysteresis Counter]
For 2-bit state machines:
- Saturation counter almost always near optimal [Nair 1992]
- ~90% direction accuracy in desktop/engineering apps
- About 80% overall accuracy when we include the BTB

![Using More History: Correlated Predictor](https://slidetodoc.com/presentation_image_h2/35c9c229896119d61db78caa2f850bc5/image-26.jpg)

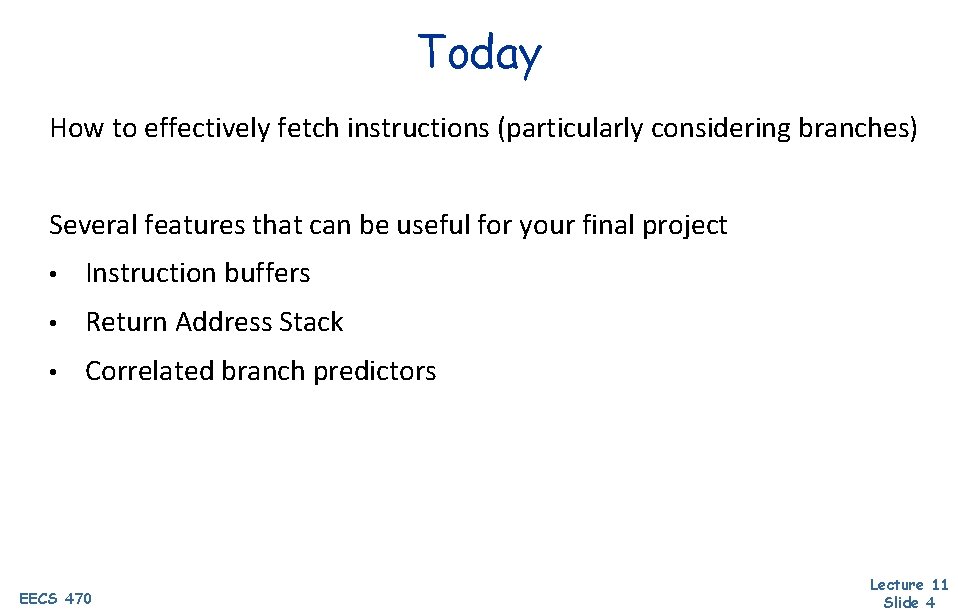

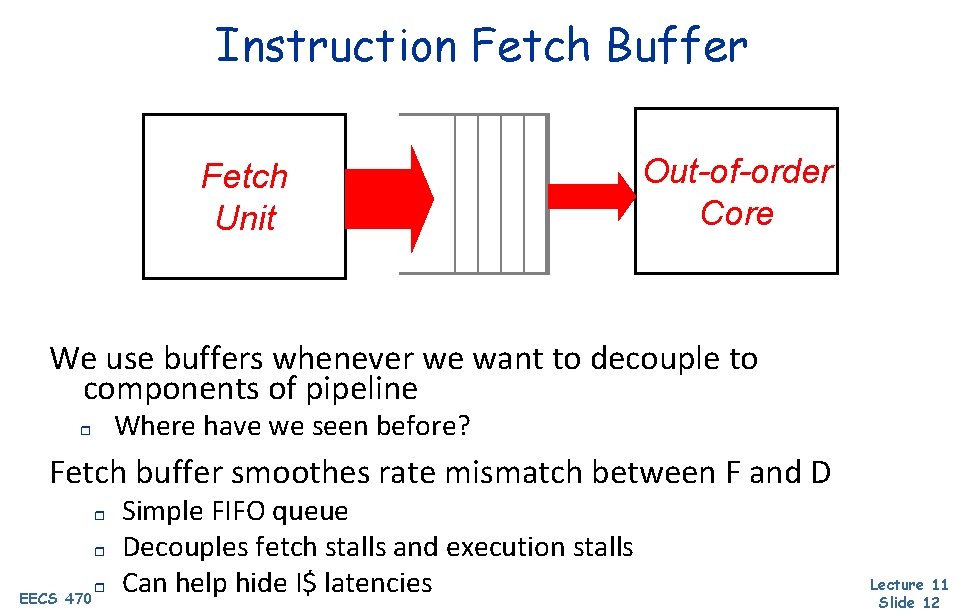

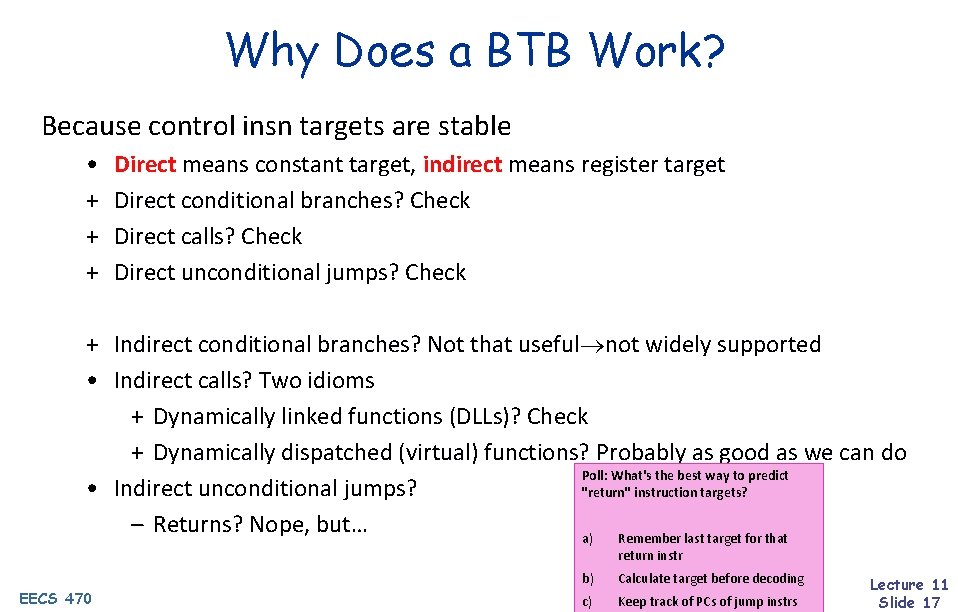

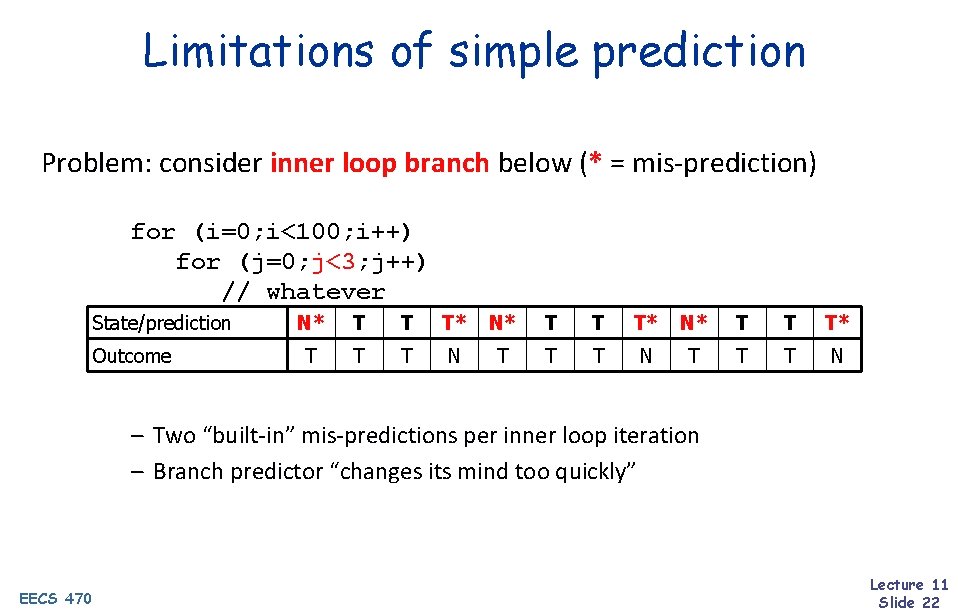

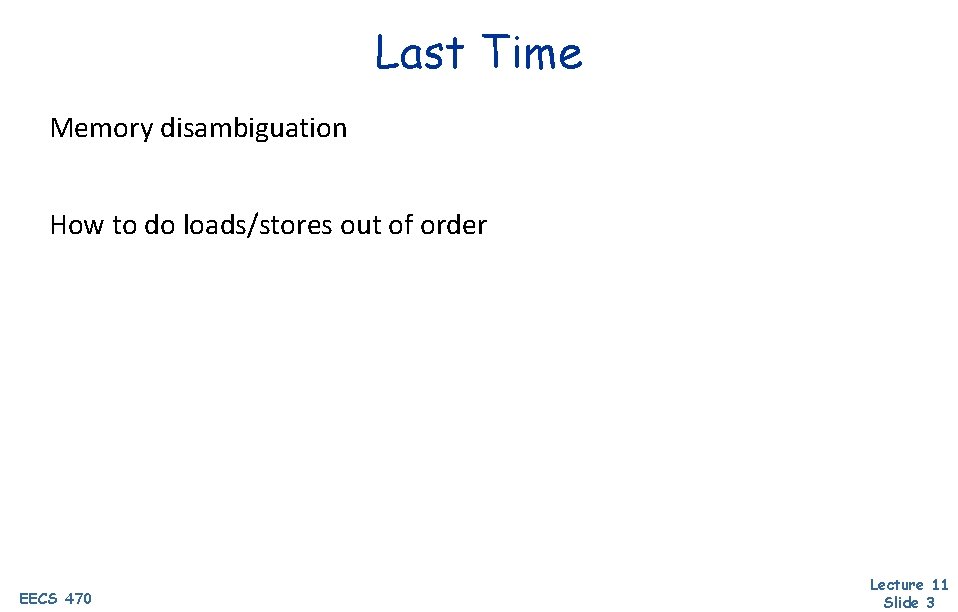

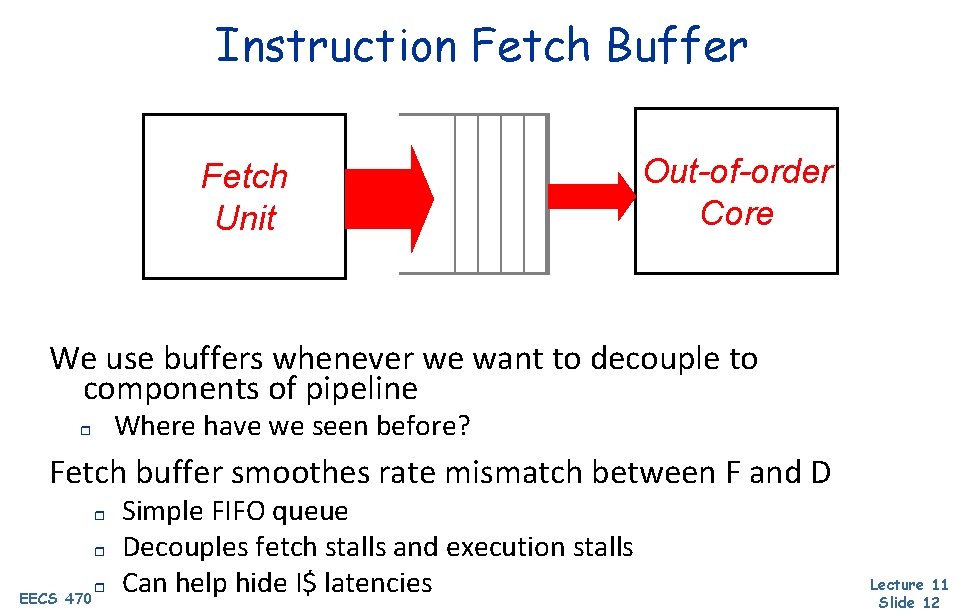

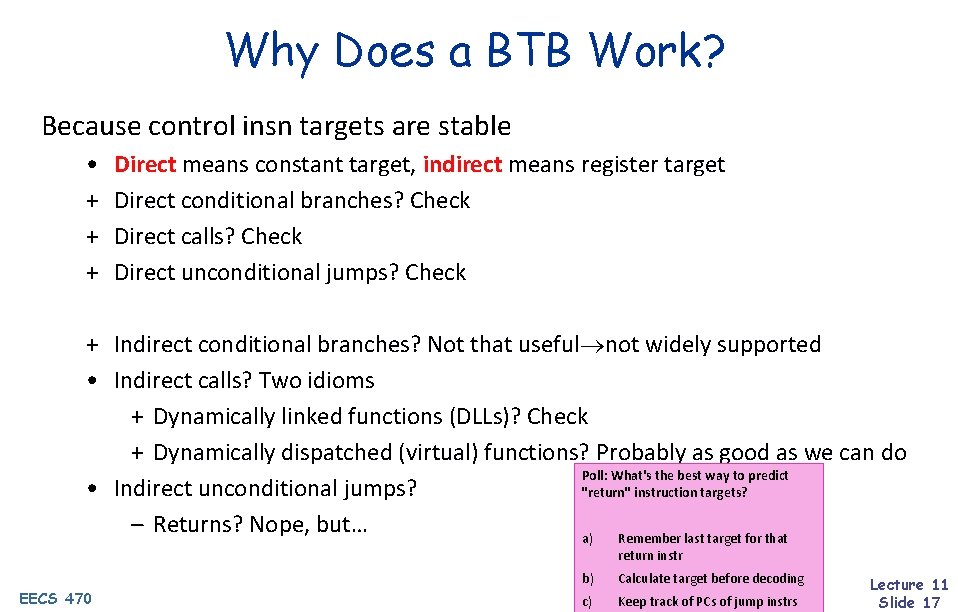

Using More History: Correlated Predictor
Correlated (a.k.a. two-level) predictor [Patt]
- Exploits the observation that branch outcomes are correlated
- Maintains a separate prediction per (PC, history) pair
- Branch history table (BHT): recent per-PC branch outcomes

[diagram: branch PC indexes the BHT; the selected history (e.g., 1010) indexes the pattern history table, which gives NT/T]

What is the prediction for the history 1010 in this BHT entry? When do I update the tables?

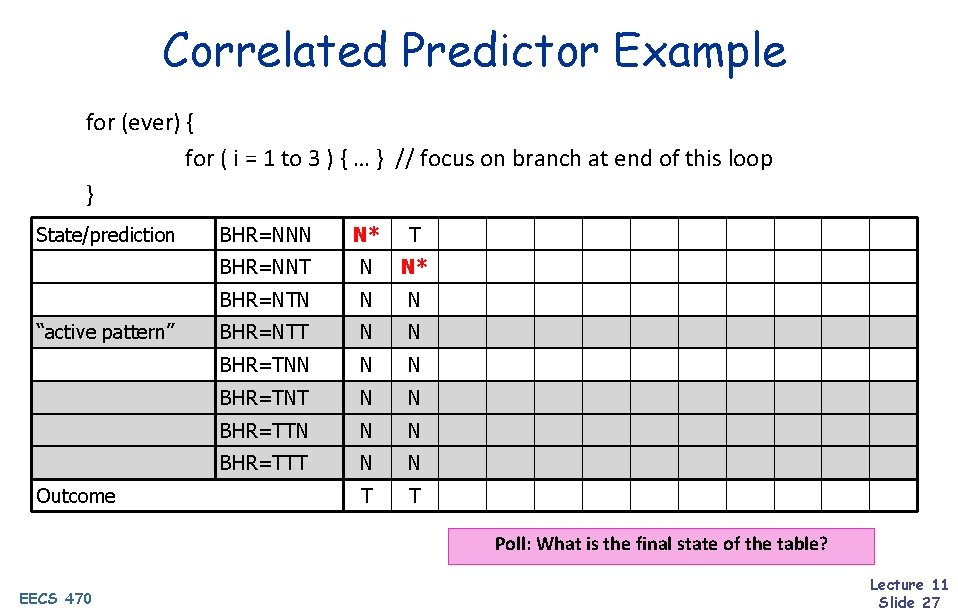

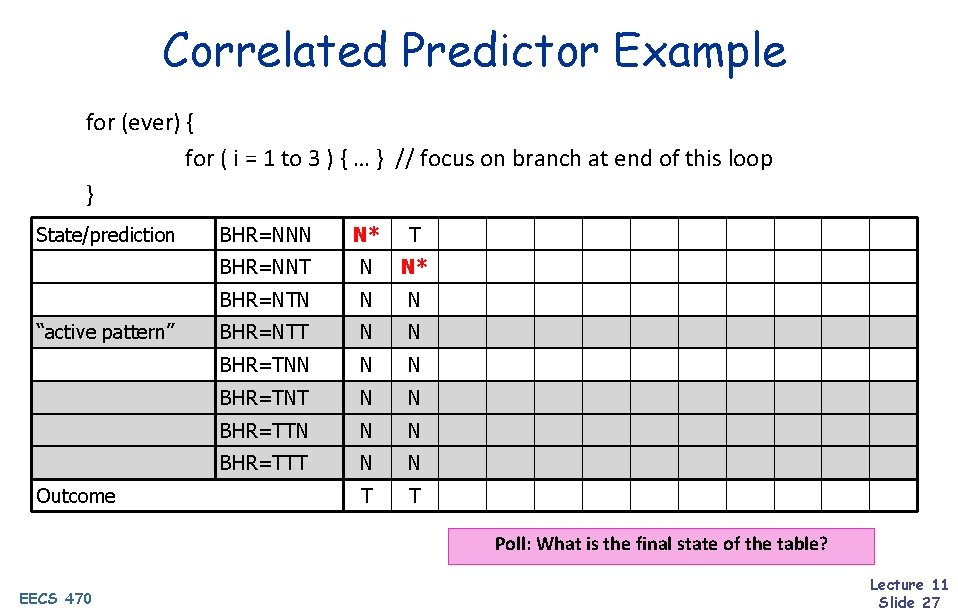

Correlated Predictor Example
`for (ever) { for (i = 1 to 3) { … } }`, focusing on the branch at the end of the inner loop.
State/prediction for each "active pattern" (* = mis-prediction):
- BHR=NNN: N* T
- BHR=NNT: N N*
- BHR=NTN: N N
- BHR=NTT: N N
- BHR=TNN: N N
- BHR=TNT: N N
- BHR=TTN: N N
- BHR=TTT: N N
- Outcome: T T …

Poll: What is the final state of the table?

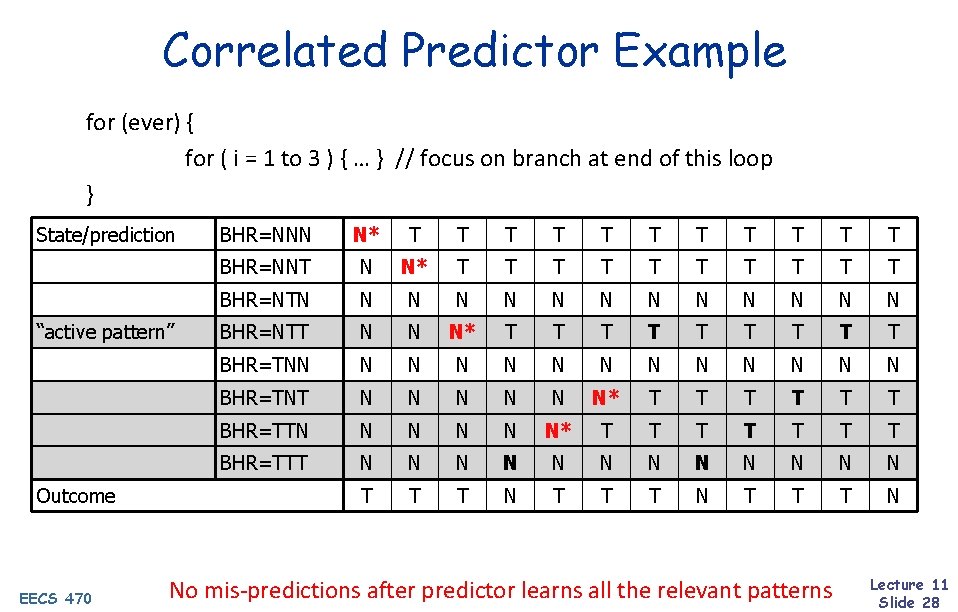

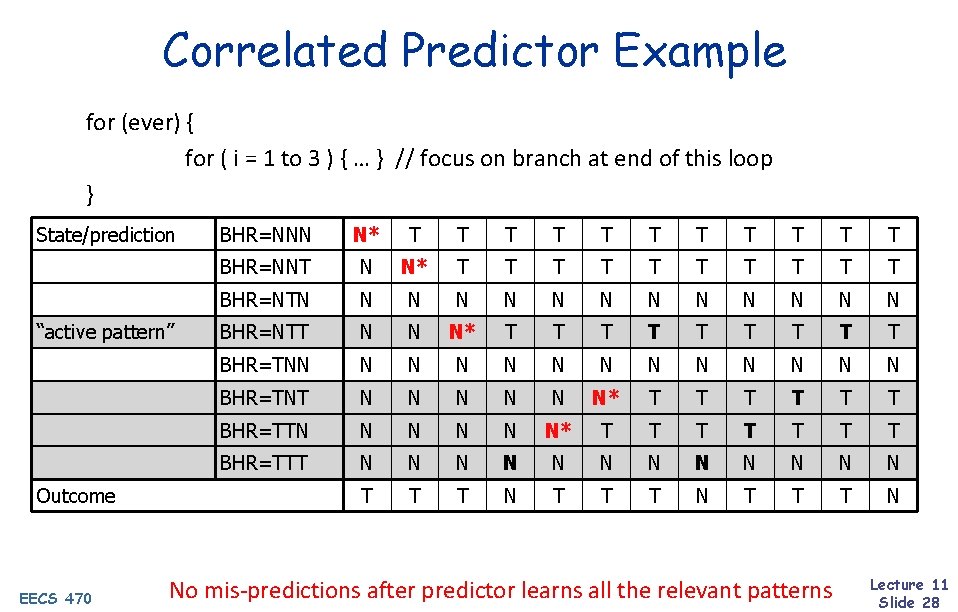

Correlated Predictor Example
`for (ever) { for (i = 1 to 3) { … } }`, focusing on the branch at the end of the inner loop.
State/prediction for each "active pattern" (* = mis-prediction):
- BHR=NNN: N* T T T
- BHR=NNT: N N* T T T T T
- BHR=NTN: N N N
- BHR=NTT: N N N* T T T T T
- BHR=TNN: N N N
- BHR=TNT: N N N* T T T
- BHR=TTN: N N N* T T T T
- BHR=TTT: N N N
- Outcome: T T T N …

No mis-predictions after the predictor learns all the relevant patterns.
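A sketch of the two-level (local) predictor used in this example: per-PC history registers (the BHT) index one shared pattern history table of 1-bit entries, updated non-speculatively once each branch resolves. Table sizes, the 3-bit history, and the class name are assumptions chosen to match the example; history is kept with the newest outcome in the least significant bit, so the pattern written TTN above is `0b110`.

```python
class LocalTwoLevelPredictor:
    """Per-PC branch history registers (BHT) feeding one shared 1-bit pattern history table (PHT)."""

    def __init__(self, pc_bits=5, history_bits=3):
        self.pc_mask = (1 << pc_bits) - 1
        self.hist_mask = (1 << history_bits) - 1
        self.bht = [0] * (1 << pc_bits)            # recent outcomes per (hashed) PC
        self.pht = [False] * (1 << history_bits)   # one prediction per history pattern

    def _bht_index(self, pc):
        return (pc >> 2) & self.pc_mask

    def predict(self, pc):
        return self.pht[self.bht[self._bht_index(pc)]]

    def update(self, pc, taken):
        i = self._bht_index(pc)
        history = self.bht[i]
        self.pht[history] = taken                                      # train the pattern just used
        self.bht[i] = ((history << 1) | int(taken)) & self.hist_mask   # shift in the outcome


p = LocalTwoLevelPredictor()
outcomes = [True, True, True, False] * 50   # inner-loop branch: T T T N, repeated
misses_after_warmup = 0
for k, taken in enumerate(outcomes):
    if k >= 8 and p.predict(0x80) != taken:
        misses_after_warmup += 1
    p.update(0x80, taken)
print(misses_after_warmup)   # 0
```

After training, TTT maps to N while TTN, TNT, and NTT map to T, and the never-seen patterns NTN and TNN stay at N, which matches the final table above.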

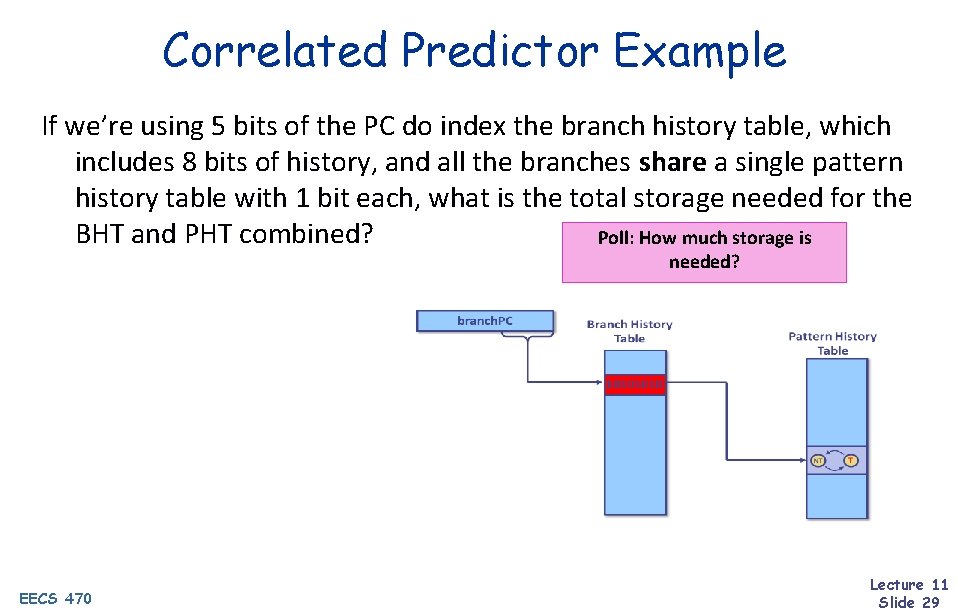

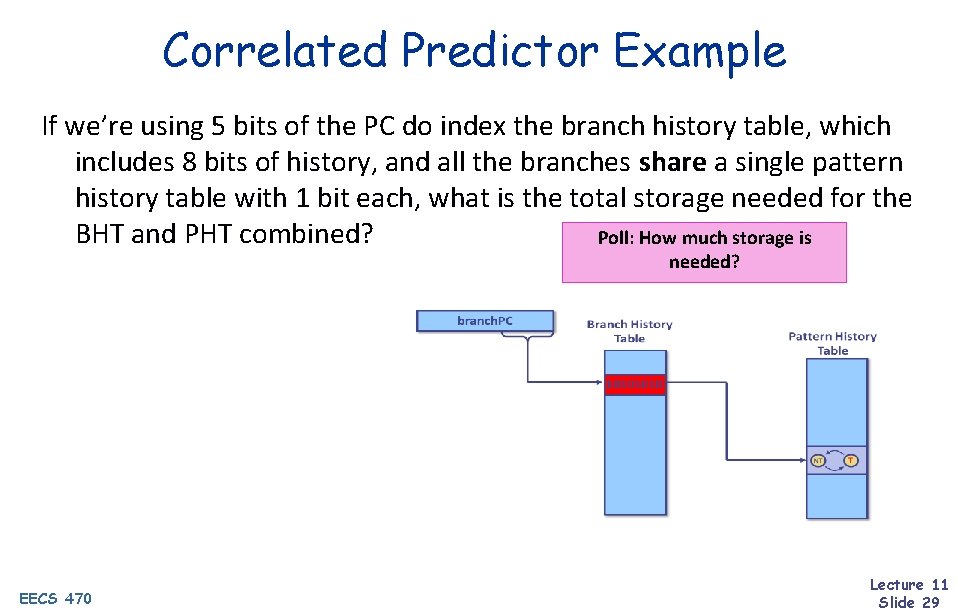

Correlated Predictor Example
If we're using 5 bits of the PC to index the branch history table, which holds 8 bits of history per entry, and all the branches share a single pattern history table with 1 bit per entry, what is the total storage needed for the BHT and PHT combined?

Poll: How much storage is needed?
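Working the arithmetic, assuming the shared PHT is indexed by the 8-bit history alone (no PC bits):

$$
\underbrace{2^{5} \times 8\ \text{bits}}_{\text{BHT}} \;+\; \underbrace{2^{8} \times 1\ \text{bit}}_{\text{PHT}} \;=\; 256 + 256 \;=\; 512\ \text{bits}\ (64\ \text{bytes})
$$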

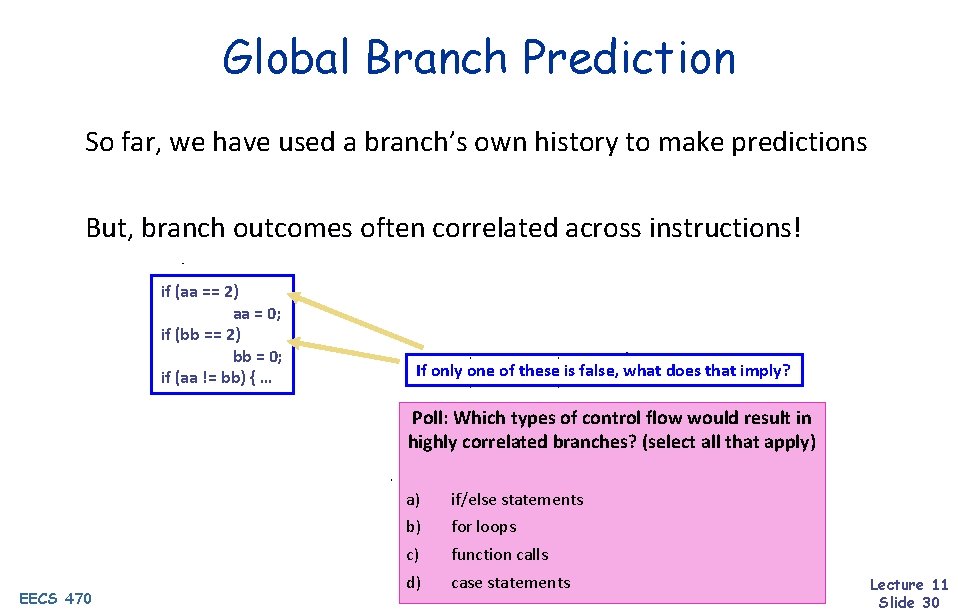

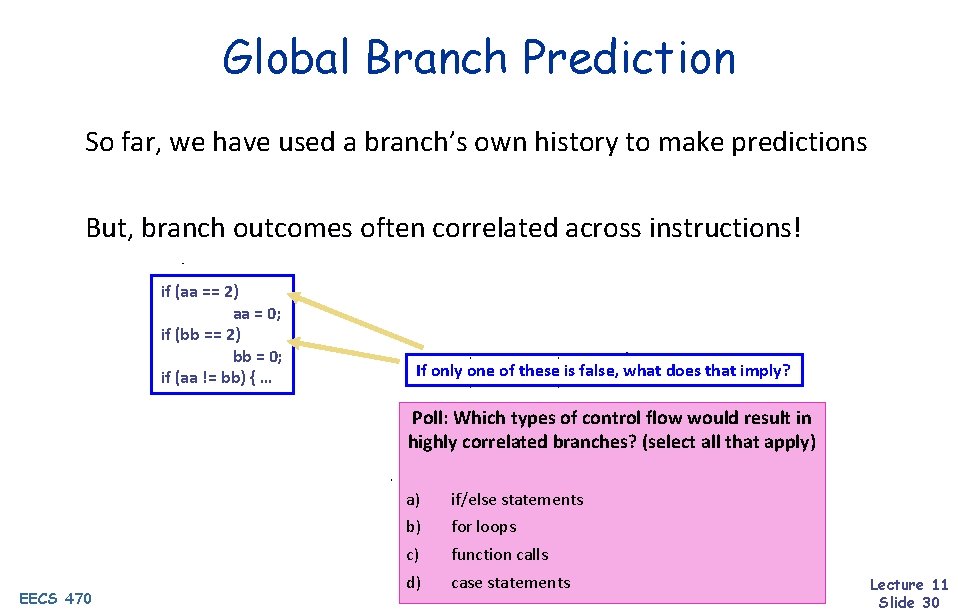

Global Branch Prediction
So far, we have used a branch's own history to make predictions. But branch outcomes are often correlated across instructions!
`if (aa == 2) aa = 0; if (bb == 2) bb = 0; if (aa != bb) { …`
If only one of these is false, what does that imply?

Poll: Which types of control flow would result in highly correlated branches? (select all that apply)
- a) if/else statements
- b) for loops
- c) function calls
- d) case statements

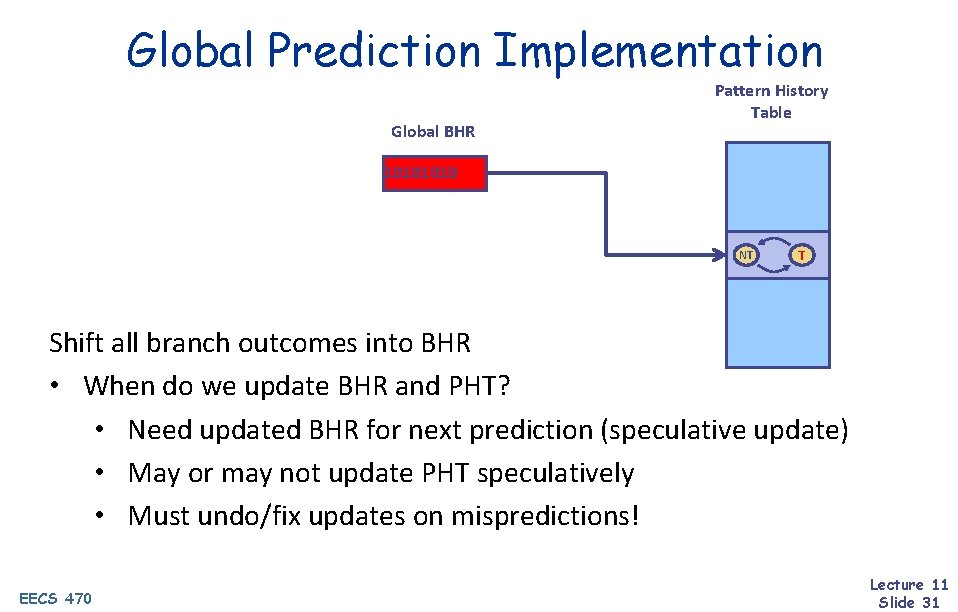

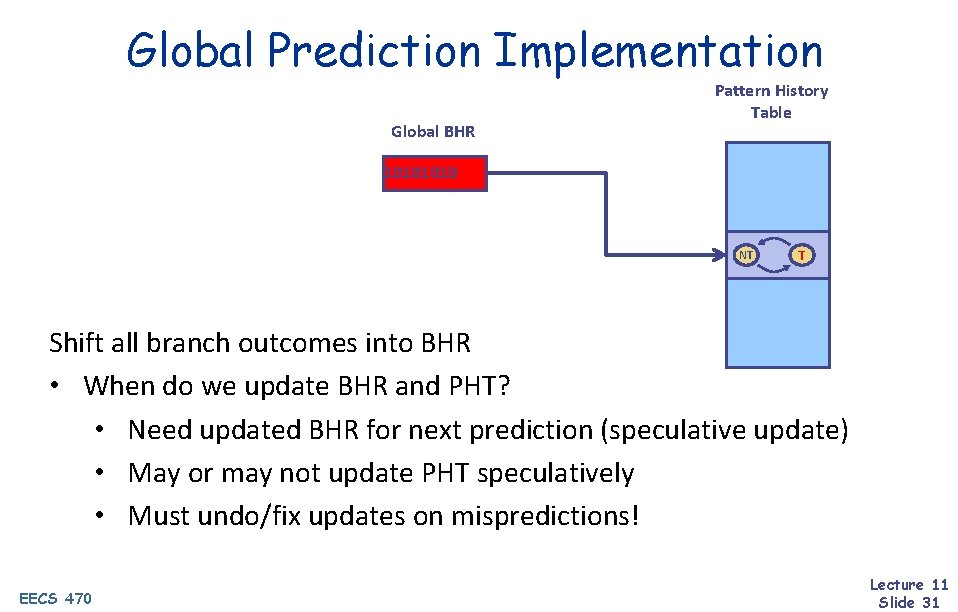

Global Prediction Implementation
[diagram: a single global BHR (e.g., 1010) indexes the pattern history table, which gives NT/T]
- Shift all branch outcomes into the BHR
- When do we update the BHR and PHT?
  - Need the updated BHR for the next prediction (speculative update)
  - May or may not update the PHT speculatively
  - Must undo/fix updates on mispredictions!
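One way to realize speculative BHR update with repair is to checkpoint the BHR at each predicted branch. This sketch assumes one checkpoint per in-flight branch and that everything younger than a mispredicted branch is squashed; the class and method names are hypothetical, and the PHT is left out.

```python
class SpeculativeGlobalHistory:
    """Global BHR updated with predictions at fetch and repaired from checkpoints."""

    def __init__(self, bits=4):
        self.mask = (1 << bits) - 1
        self.bhr = 0

    def predict_and_shift(self, predicted_taken):
        """Shift the *predicted* outcome in so the next branch sees it; return a checkpoint."""
        checkpoint = self.bhr
        self.bhr = ((self.bhr << 1) | int(predicted_taken)) & self.mask
        return checkpoint

    def repair(self, checkpoint, actual_taken):
        """On a mispredict, rebuild the BHR from the branch's checkpoint plus its real outcome.

        Bits shifted in by younger (squashed) branches are discarded along with them.
        """
        self.bhr = ((checkpoint << 1) | int(actual_taken)) & self.mask


gh = SpeculativeGlobalHistory(bits=4)
cp_a = gh.predict_and_shift(True)    # branch A predicted T -> BHR 0001
cp_b = gh.predict_and_shift(True)    # branch B predicted T -> BHR 0011
cp_c = gh.predict_and_shift(False)   # branch C predicted N -> BHR 0110
gh.repair(cp_b, False)               # B resolves N: squash C, BHR = 0001 -> 0010
print(format(gh.bhr, "04b"))         # 0010
```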

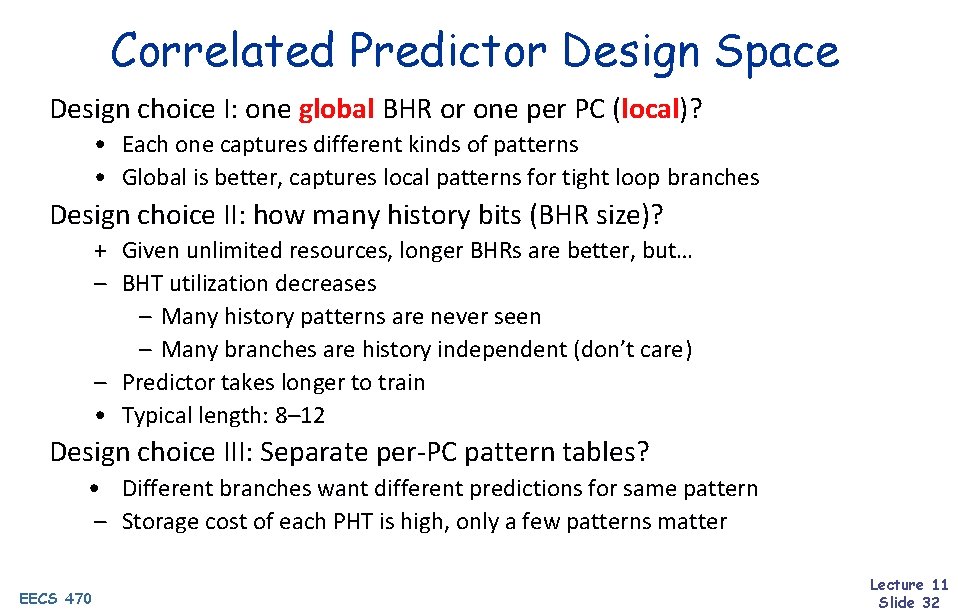

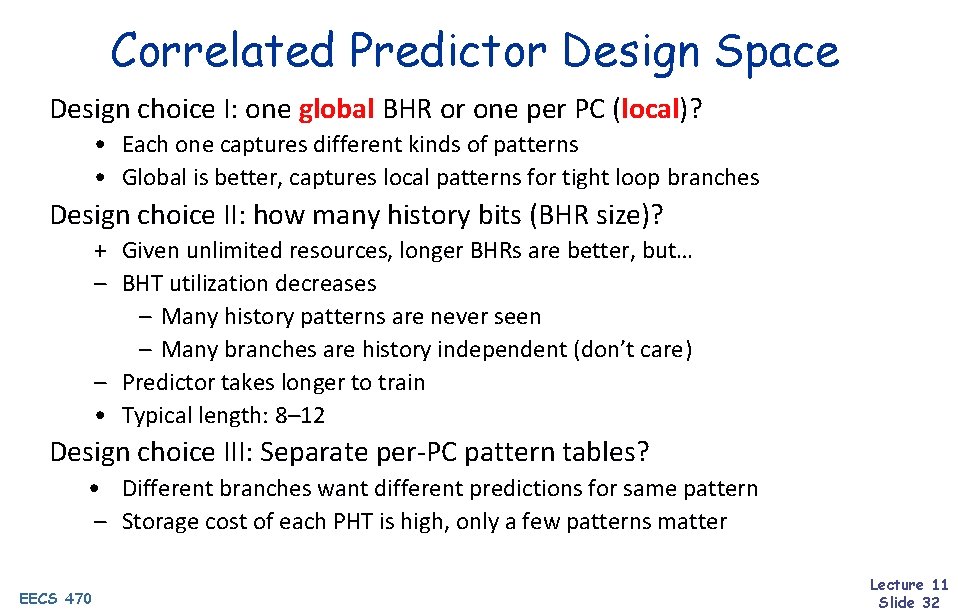

Correlated Predictor Design Space
Design choice I: one global BHR or one per PC (local)?
- Each one captures different kinds of patterns
- Global is better, captures local patterns for tight loop branches

Design choice II: how many history bits (BHR size)?
- Given unlimited resources, longer BHRs are better, but:
  - BHT utilization decreases
  - Many history patterns are never seen
  - Many branches are history independent (don't care)
  - Predictor takes longer to train
- Typical length: 8 to 12

Design choice III: separate per-PC pattern tables?
- Different branches want different predictions for the same pattern
- But the storage cost of each PHT is high, and only a few patterns matter

![Gshare (McFarling)](https://slidetodoc.com/presentation_image_h2/35c9c229896119d61db78caa2f850bc5/image-33.jpg)

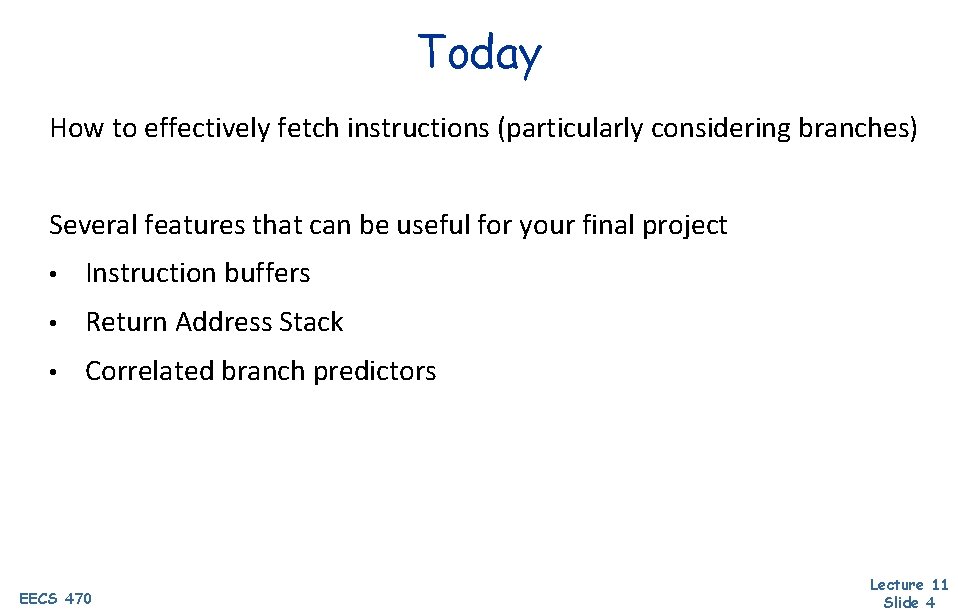

Gshare [McFarling]
[diagram: the branch PC and the global BHR (e.g., 1010) are combined by a hash (xor) to index a pattern history table (shared, as shown), which gives NT/T]

Poll: What would make a good hash function? (select all that apply)
- Cheap way to implement global predictors
  - Global branch history
  - Per-address patterns (sort of)
- Try to make the PHT big enough to avoid collisions
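A minimal gshare sketch: PC bits XOR global history index a PHT of 2-bit counters. For brevity it updates the history non-speculatively at resolution (a pipelined design would update speculatively and repair, as described for global prediction above); the table size and class name are hypothetical. The example is a single branch that alternates T, N, T, N: a lone 2-bit counter mis-predicts at least half of such a branch's outcomes, while the global history tells gshare which way comes next.

```python
class Gshare:
    """PHT of 2-bit saturating counters indexed by (PC bits XOR global history)."""

    def __init__(self, index_bits=10):
        self.mask = (1 << index_bits) - 1
        self.ghr = 0
        self.pht = [1] * (1 << index_bits)   # start weakly not-taken

    def _index(self, pc):
        return ((pc >> 2) ^ self.ghr) & self.mask

    def predict(self, pc):
        return self.pht[self._index(pc)] >= 2

    def update(self, pc, taken):
        i = self._index(pc)                  # same history that produced the prediction
        c = self.pht[i]
        self.pht[i] = min(c + 1, 3) if taken else max(c - 1, 0)
        self.ghr = ((self.ghr << 1) | int(taken)) & self.mask


g = Gshare()
misses = 0
for k in range(120):
    taken = (k % 2 == 0)                     # T, N, T, N, ...
    if k >= 20 and g.predict(0x100) != taken:
        misses += 1
    g.update(0x100, taken)
print(misses)   # 0 once the history has filled and the two relevant counters are trained
```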

![Hybrid Predictor](https://slidetodoc.com/presentation_image_h2/35c9c229896119d61db78caa2f850bc5/image-34.jpg)

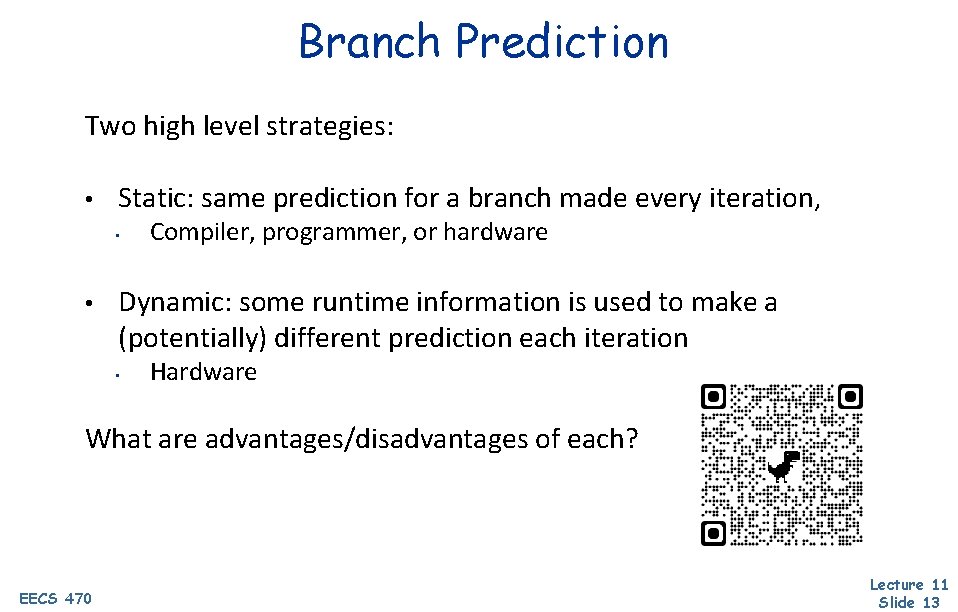

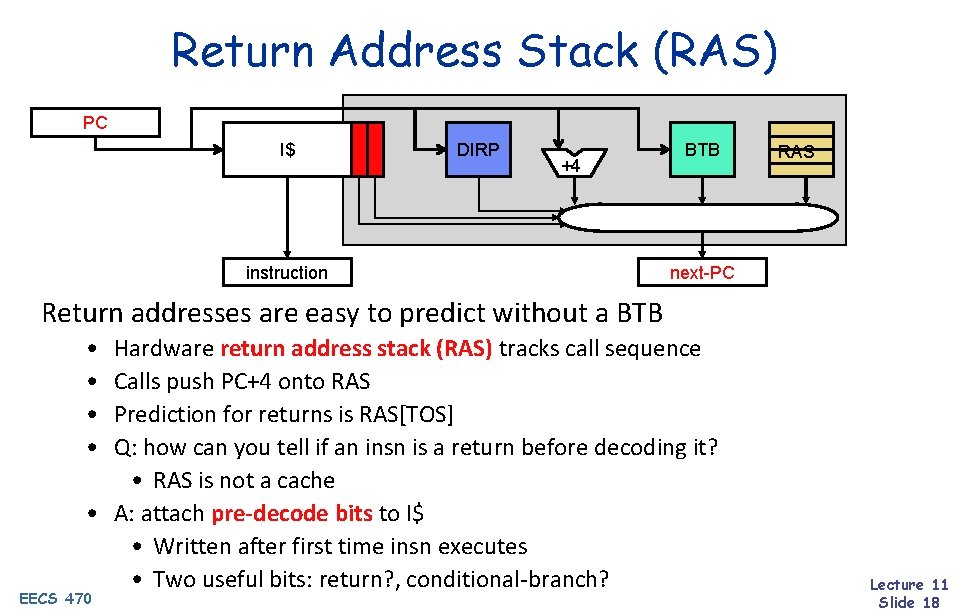

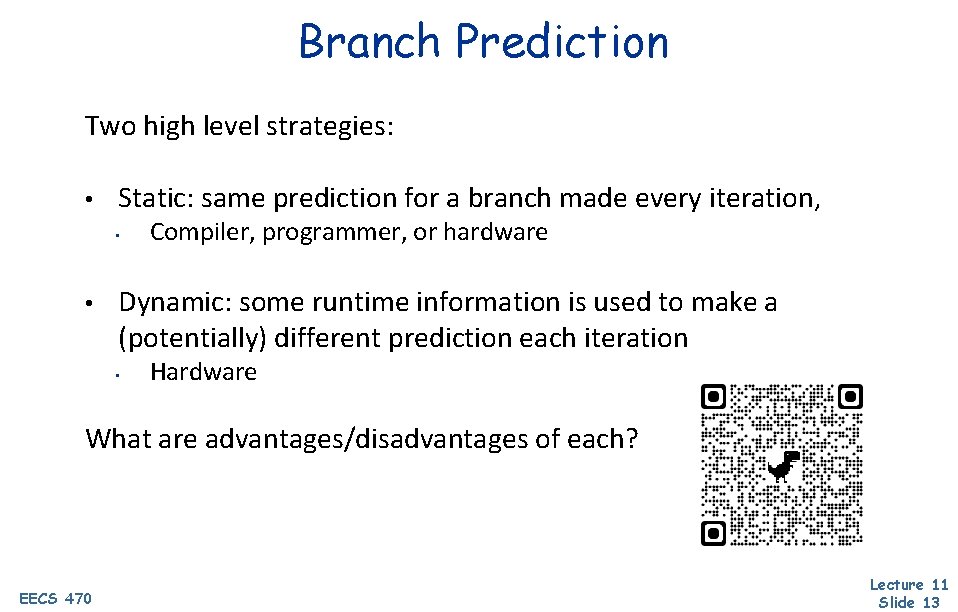

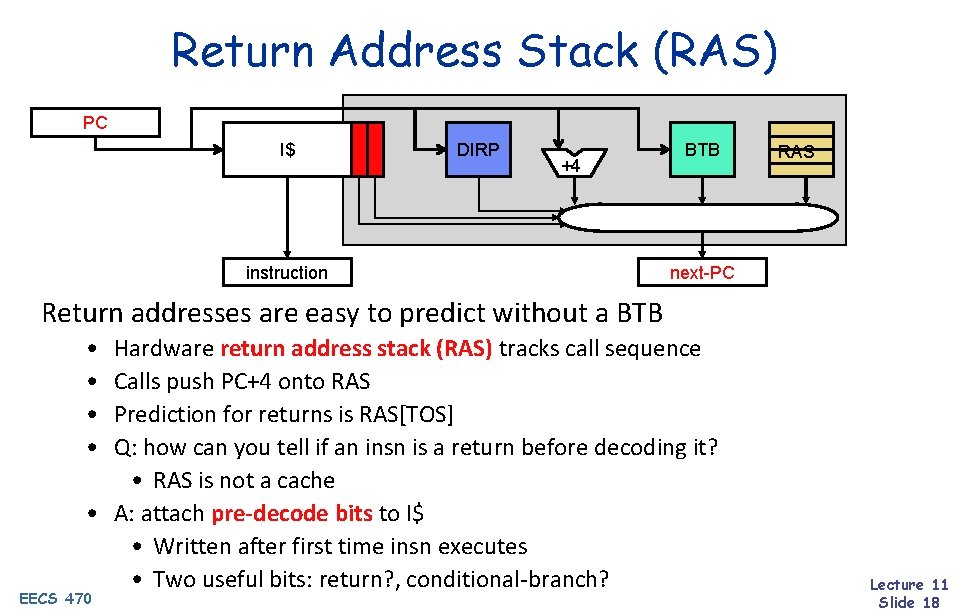

Hybrid Predictor
Hybrid (tournament) predictor [McFarling]
- Attacks the correlated predictor's BHT utilization problem
- Idea: combine two predictors
  - Simple BHT predicts history-independent branches
  - Correlated predictor predicts only branches that need history
  - Chooser assigns branches to one predictor or the other
  - Branches start in the simple BHT and move to the correlated predictor after a mis-prediction threshold
- Correlated predictor can be made smaller, since it handles fewer branches
- 90-95% accuracy
- Alpha 21264: hybrid of a local-history predictor and a global-history predictor

[diagram: PC and BHR feed a chooser that selects between the simple BHT and the correlated BHT]
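A McFarling-style tournament sketch: a PC-indexed table of 2-bit counters (the simple BHT), a gshare table, and a PC-indexed chooser of 2-bit counters that starts out favoring the simple BHT and is trained only when exactly one component is right. This is a hedged illustration of the idea, not the Alpha 21264 organization; sizes and names are hypothetical, and the history is updated non-speculatively for brevity.

```python
class TournamentPredictor:
    """Chooser picks, per branch, between a simple 2-bit BHT and a gshare predictor."""

    def __init__(self, index_bits=10):
        self.mask = (1 << index_bits) - 1
        size = 1 << index_bits
        self.simple = [1] * size    # 2-bit counters indexed by PC only
        self.gshare = [1] * size    # 2-bit counters indexed by PC XOR global history
        self.chooser = [1] * size   # < 2: use simple BHT, >= 2: use gshare
        self.ghr = 0

    @staticmethod
    def _bump(counter, up):
        # 2-bit saturating increment/decrement
        return min(counter + 1, 3) if up else max(counter - 1, 0)

    def _indices(self, pc):
        p = (pc >> 2) & self.mask
        return p, p ^ self.ghr

    def predict(self, pc):
        p, g = self._indices(pc)
        table, i = (self.gshare, g) if self.chooser[p] >= 2 else (self.simple, p)
        return table[i] >= 2

    def update(self, pc, taken):
        p, g = self._indices(pc)
        simple_ok = (self.simple[p] >= 2) == taken
        gshare_ok = (self.gshare[g] >= 2) == taken
        if simple_ok != gshare_ok:
            # Move the branch toward whichever component was right.
            self.chooser[p] = self._bump(self.chooser[p], gshare_ok)
        self.simple[p] = self._bump(self.simple[p], taken)
        self.gshare[g] = self._bump(self.gshare[g], taken)
        self.ghr = ((self.ghr << 1) | int(taken)) & self.mask


t = TournamentPredictor()
misses = 0
for k in range(120):
    taken = (k % 2 == 0)                # alternating branch: the simple BHT cannot learn it
    if k >= 20 and t.predict(0x100) != taken:
        misses += 1
    t.update(0x100, taken)
print(misses)                           # 0: the chooser has moved this branch to gshare
```

A history-independent branch would instead keep the chooser below 2 and stay with the simple BHT, which is what lets the correlated component be smaller.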

Next Time
- Midterm Review

Lingering questions / feedback? I'll include an anonymous form at the end of every lecture: https://bit.ly/3oSr5FD