EECS 252 Graduate Computer Architecture Lec 3 Memory

- Slides: 20

EECS 252 Graduate Computer Architecture, Lec 3 – Memory Hierarchy Review: Caches

Rose Liu, Electrical Engineering and Computer Sciences, University of California, Berkeley

http://www-inst.eecs.berkeley.edu/~cs252

CS 252, Fall '07

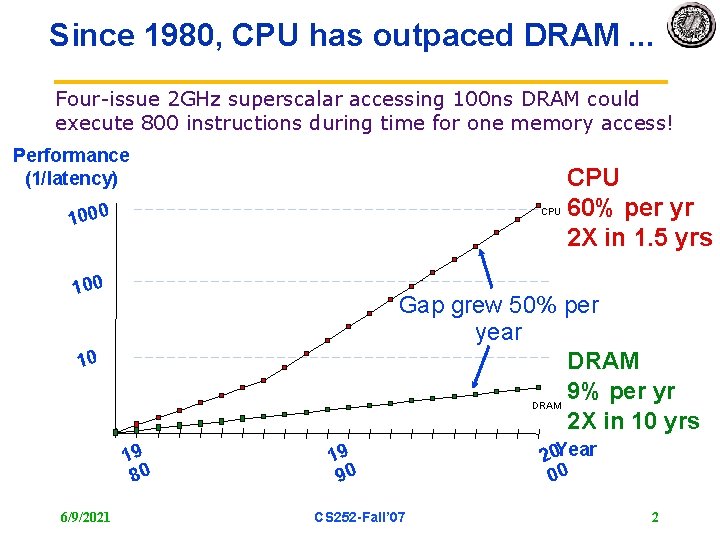

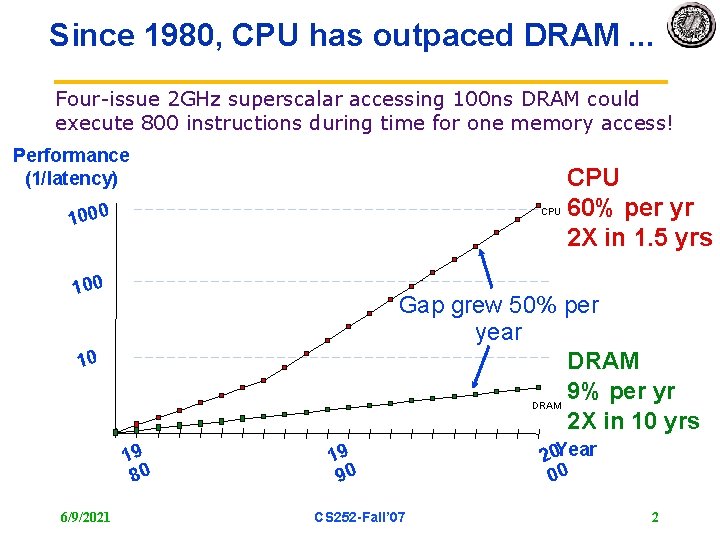

Since 1980, CPU has outpaced DRAM...

A four-issue 2 GHz superscalar accessing 100 ns DRAM could execute 800 instructions during the time for one memory access!

[Figure: performance (1/latency, log scale from 10 to 1000) versus year (1980 to 2000). CPU: 60% per year (2X in 1.5 years). DRAM: 9% per year (2X in 10 years). The gap grew 50% per year.]
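The 800-instruction figure follows directly from the numbers on the slide. The short calculation below is a sketch of that arithmetic; the variable names are illustrative, and the last line only checks that the quoted growth rates are roughly consistent with a gap growing about 50% per year.

```python
# All figures come from the slide; names are illustrative.
cycles_per_ns = 2          # a 2 GHz clock completes 2 cycles per nanosecond
issue_width = 4            # four-issue superscalar: up to 4 instructions per cycle
dram_latency_ns = 100      # one DRAM access

cycles_per_access = cycles_per_ns * dram_latency_ns      # cycles spent waiting
instruction_slots = issue_width * cycles_per_access      # issue slots in that time

# Ratio of annual improvement rates: CPU 60% per year vs. DRAM 9% per year.
gap_growth_per_year = 1.60 / 1.09

print(cycles_per_access, instruction_slots, round(gap_growth_per_year, 2))
```

The ratio comes out a little under 1.5, which matches the slide's rounded "50% per year".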

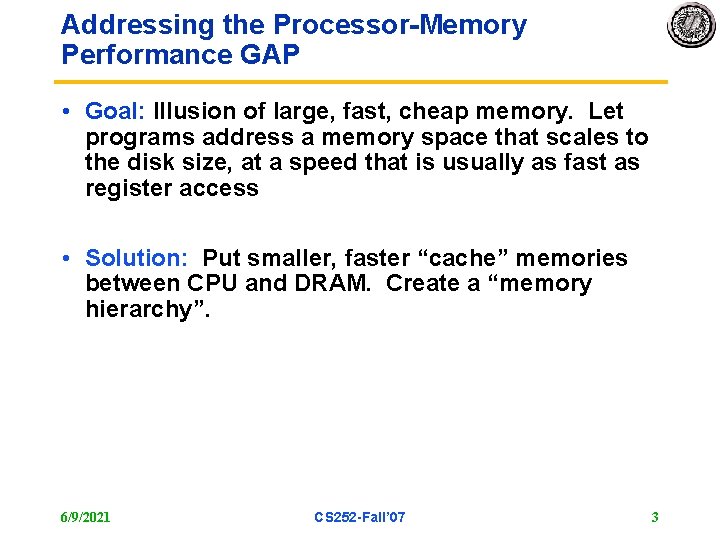

Addressing the Processor-Memory Performance Gap

- Goal: the illusion of large, fast, cheap memory. Let programs address a memory space that scales to the disk size, at a speed that is usually as fast as register access.
- Solution: put smaller, faster "cache" memories between the CPU and DRAM, creating a "memory hierarchy".

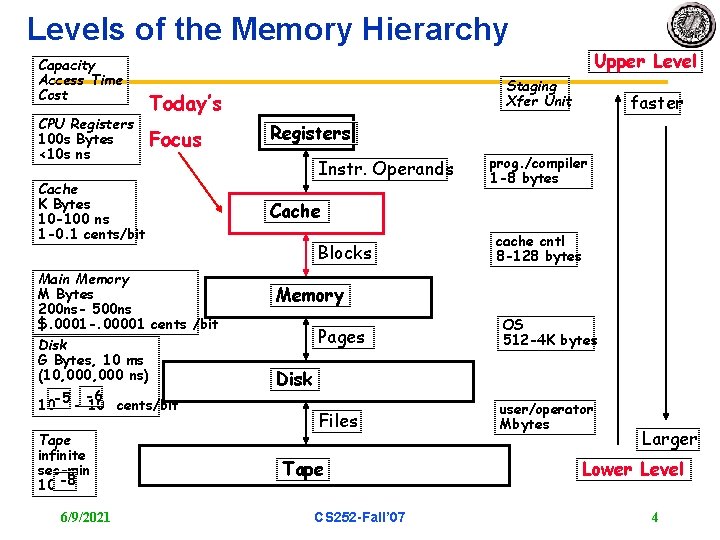

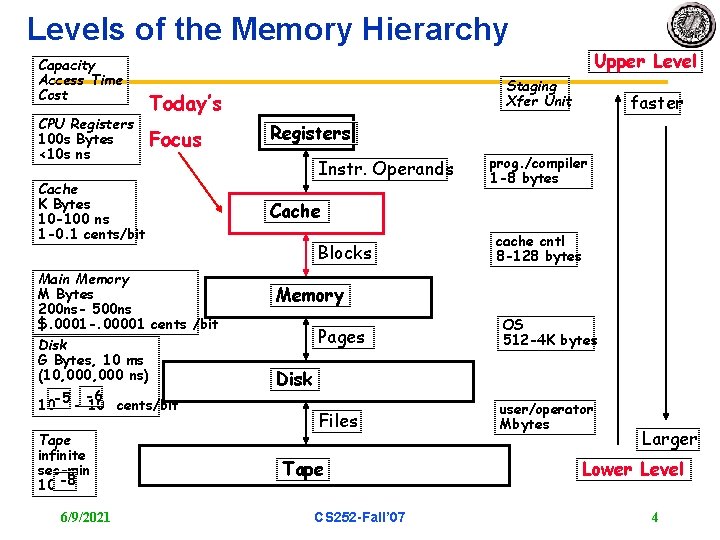

Levels of the Memory Hierarchy

| Level | Capacity | Access time | Cost |
|---|---|---|---|
| CPU Registers | 100s Bytes | <10s ns | |
| Cache | K Bytes | 10-100 ns | 1-0.1 cents/bit |
| Main Memory | M Bytes | 200 ns-500 ns | $.0001-.00001 cents/bit |
| Disk | G Bytes | 10 ms (10,000,000 ns) | 10^-5 to 10^-6 cents/bit |
| Tape | infinite | sec-min | 10^-8 |

| Between levels | Staging transfer unit | Managed by | Unit size |
|---|---|---|---|
| Registers and Cache | Instr. operands | prog./compiler | 1-8 bytes |
| Cache and Memory | Blocks | cache controller | 8-128 bytes |
| Memory and Disk | Pages | OS | 512-4K bytes |
| Disk and Tape | Files | user/operator | Mbytes |

Upper levels are faster; lower levels are larger. The slide's diagram marks part of this hierarchy as "Today's Focus".

Common Predictable Patterns

Two predictable properties of memory references:

- Temporal Locality: if a location is referenced, it is likely to be referenced again in the near future (e.g., loops, reuse).
- Spatial Locality: if a location is referenced, it is likely that locations near it will be referenced in the near future (e.g., straight-line code, array access).

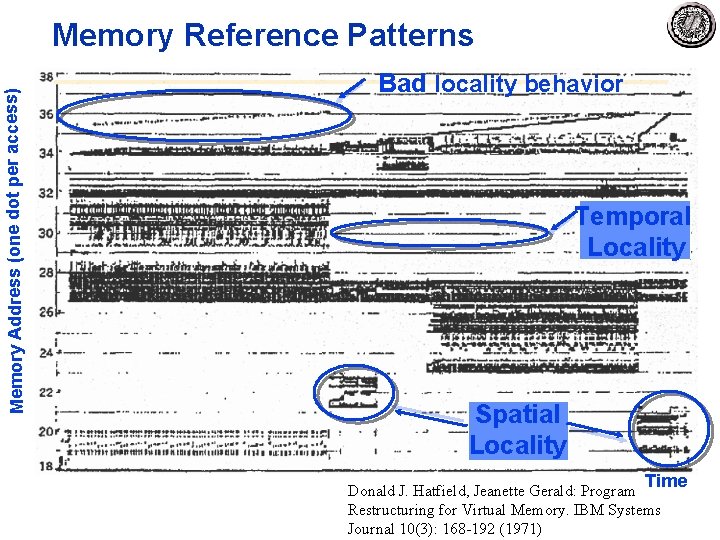

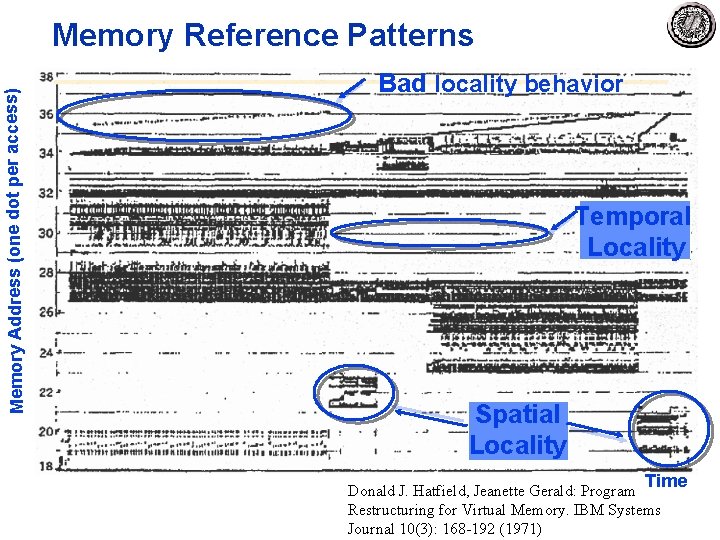

Memory Reference Patterns

[Figure: memory address (one dot per access) plotted against time, with regions labeled temporal locality, spatial locality, and bad locality behavior.]

Source: Donald J. Hatfield, Jeanette Gerald: Program Restructuring for Virtual Memory. IBM Systems Journal 10(3): 168-192 (1971)

Caches exploit both types of predictability:

- Exploit temporal locality by remembering the contents of recently accessed locations.
- Exploit spatial locality by fetching blocks of data around recently accessed locations.

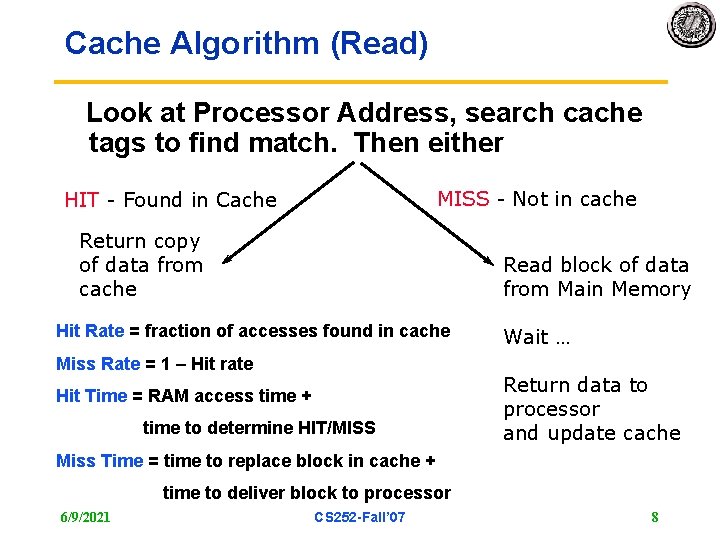

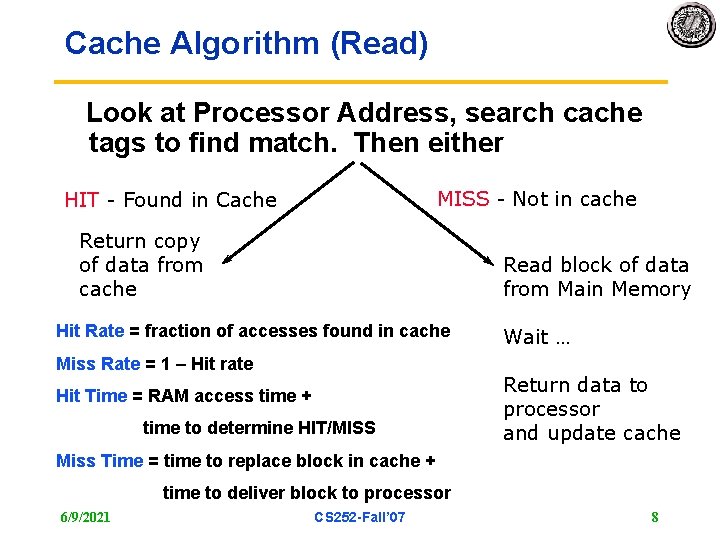

Cache Algorithm (Read)

Look at the processor address and search the cache tags to find a match. Then either:

- HIT (found in cache): return a copy of the data from the cache.
- MISS (not in cache): read the block of data from main memory, wait, then return the data to the processor and update the cache.

Definitions:

- Hit Rate = fraction of accesses found in cache
- Miss Rate = 1 - Hit Rate
- Hit Time = RAM access time + time to determine HIT/MISS
- Miss Time = time to replace block in cache + time to deliver block to processor
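The read algorithm can be sketched in a few lines. The `SimpleCache` class below is a hypothetical toy, not anything from the lecture: it is keyed by block number, never evicts, and models main memory as a Python list, so it only illustrates the hit/miss decision and the hit-rate bookkeeping.

```python
class SimpleCache:
    """Toy cache keyed by block number; unbounded, so nothing is ever evicted."""

    def __init__(self, memory, block_size=4):
        self.memory = memory          # backing "main memory": a list of words
        self.block_size = block_size  # words per block (an arbitrary choice)
        self.blocks = {}              # tag (block number) -> list of words
        self.hits = 0
        self.misses = 0

    def read(self, address):
        tag, offset = divmod(address, self.block_size)
        if tag in self.blocks:        # HIT: tag match, serve from the cache
            self.hits += 1
        else:                         # MISS: fetch the whole block, update cache
            self.misses += 1
            start = tag * self.block_size
            self.blocks[tag] = self.memory[start:start + self.block_size]
        return self.blocks[tag][offset]

    def hit_rate(self):
        total = self.hits + self.misses
        return self.hits / total if total else 0.0


memory = list(range(100, 116))        # 16 words of made-up data
cache = SimpleCache(memory)
values = [cache.read(a) for a in [0, 1, 2, 3, 0, 8]]
print(values, cache.hits, cache.misses, cache.hit_rate())
```

In this trace the first access to each block misses and the remaining accesses to the same block hit, which is spatial and temporal locality at work.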

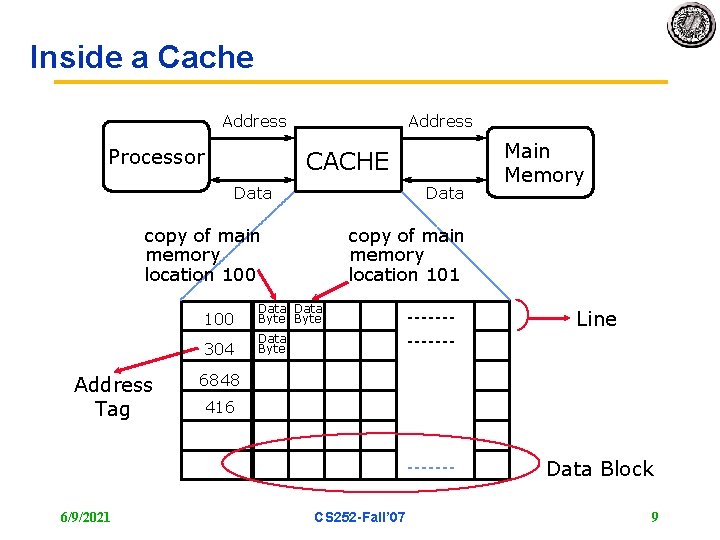

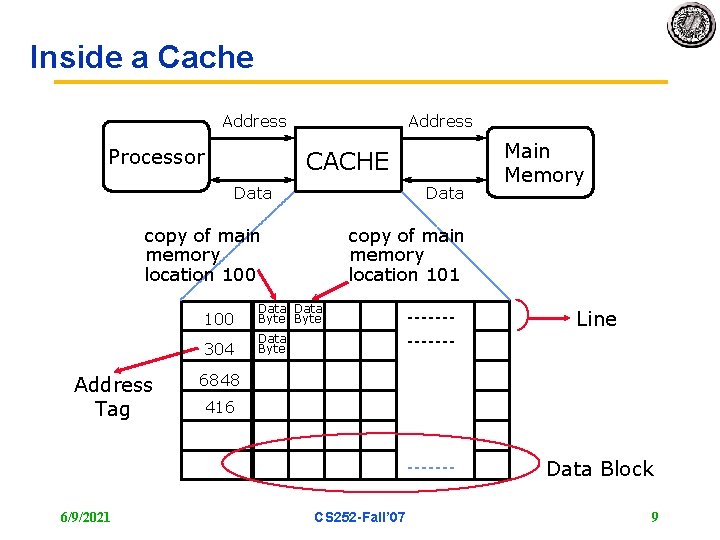

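Inside a Cache

[Figure: the processor exchanges addresses and data with the cache, and the cache exchanges addresses and data with main memory. Each cache line holds an address tag (100, 304, 6848, and 416 in the example) and a data block made of data bytes. The line tagged 100 holds a copy of main memory location 100 and a copy of main memory location 101.]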

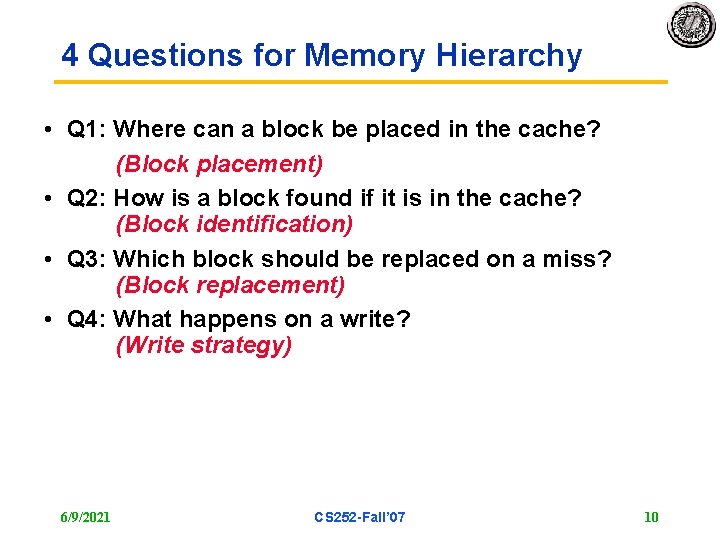

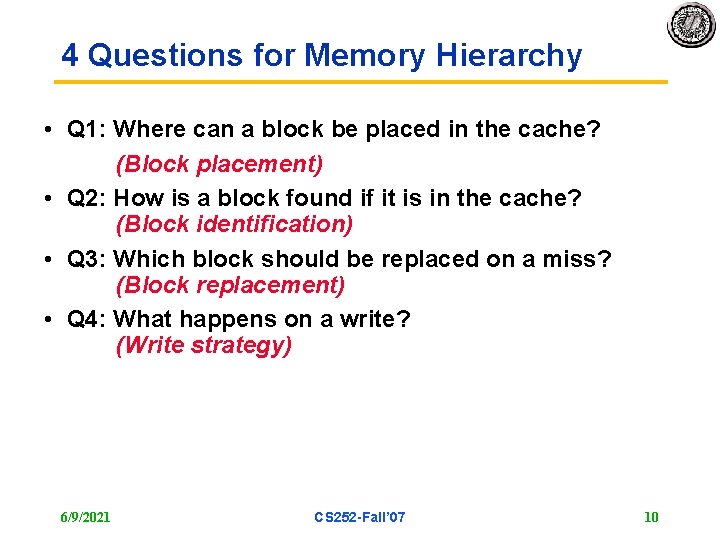

4 Questions for Memory Hierarchy

- Q1: Where can a block be placed in the cache? (Block placement)
- Q2: How is a block found if it is in the cache? (Block identification)
- Q3: Which block should be replaced on a miss? (Block replacement)
- Q4: What happens on a write? (Write strategy)

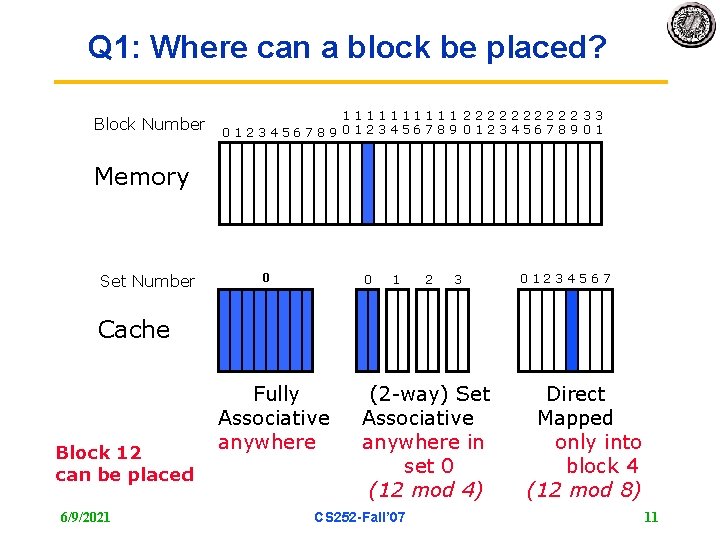

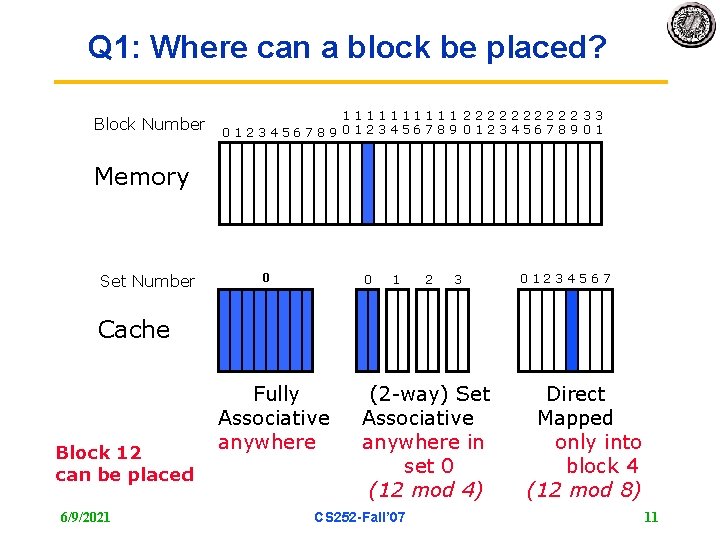

Q1: Where can a block be placed?

[Figure: a memory with blocks numbered 0 to 31 and a cache with 8 blocks numbered 0 to 7, grouped into sets 0 to 3 for the 2-way set-associative case.]

Block 12 can be placed:

- Fully associative: anywhere
- (2-way) Set associative: anywhere in set 0 (12 mod 4)
- Direct mapped: only into block 4 (12 mod 8)
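The three placement rules reduce to a modulo operation on the block number. The helper functions below are a sketch with hypothetical names; they assume the sets are laid out contiguously, so that set `s` of a 2-way cache occupies frames `2s` and `2s + 1`.

```python
def direct_mapped_frame(block_number, num_frames):
    """Direct mapped: exactly one legal frame."""
    return block_number % num_frames


def set_associative_frames(block_number, num_frames, ways):
    """Set associative: any frame within one set."""
    num_sets = num_frames // ways
    set_index = block_number % num_sets
    first = set_index * ways          # assumes sets are stored contiguously
    return set_index, list(range(first, first + ways))


def fully_associative_frames(num_frames):
    """Fully associative: any frame at all."""
    return list(range(num_frames))


# The slide's example: memory block 12 and an 8-block cache.
print(direct_mapped_frame(12, 8))
print(set_associative_frames(12, 8, ways=2))
print(fully_associative_frames(8))
```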

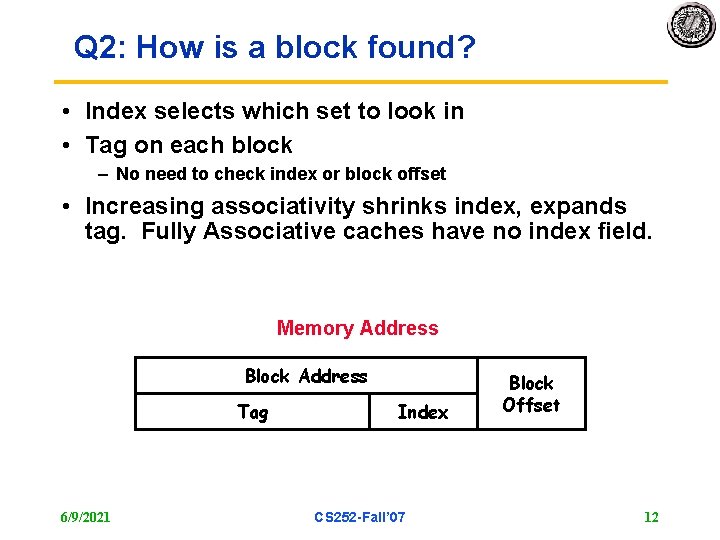

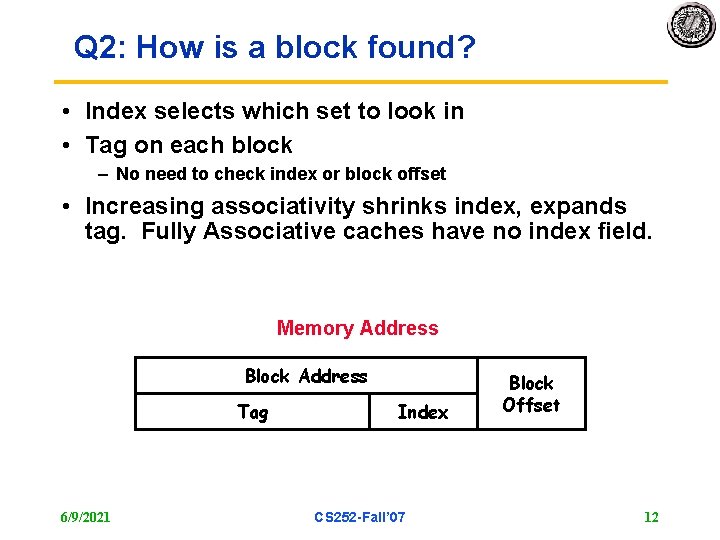

Q2: How is a block found?

- Index selects which set to look in.
- Tag on each block is compared.
  - No need to check index or block offset.
- Increasing associativity shrinks the index and expands the tag. Fully associative caches have no index field.

Memory address layout, from high bits to low bits: Tag, Index, Block Offset. The Tag and Index together form the Block Address.
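The address split can be illustrated with a small function. This is a sketch under the assumption that both the number of sets and the block size are powers of two; `split_address` and its parameters are hypothetical names chosen for this example.

```python
def split_address(address, num_sets, block_size_bytes):
    """Split a byte address into (tag, index, block offset).

    Assumes num_sets and block_size_bytes are powers of two.
    """
    offset_bits = block_size_bytes.bit_length() - 1   # log2(block size)
    index_bits = num_sets.bit_length() - 1            # log2(number of sets)
    offset = address & (block_size_bytes - 1)
    index = (address >> offset_bits) & (num_sets - 1)
    tag = address >> (offset_bits + index_bits)
    return tag, index, offset


address = 0x1234
print(split_address(address, num_sets=64, block_size_bytes=16))  # 6 index bits
print(split_address(address, num_sets=32, block_size_bytes=16))  # 5 index bits
print(split_address(address, num_sets=1, block_size_bytes=16))   # fully associative
```

With the total number of blocks held fixed, doubling the associativity halves the number of sets, so one bit moves from the index to the tag. With a single set the index field disappears entirely.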

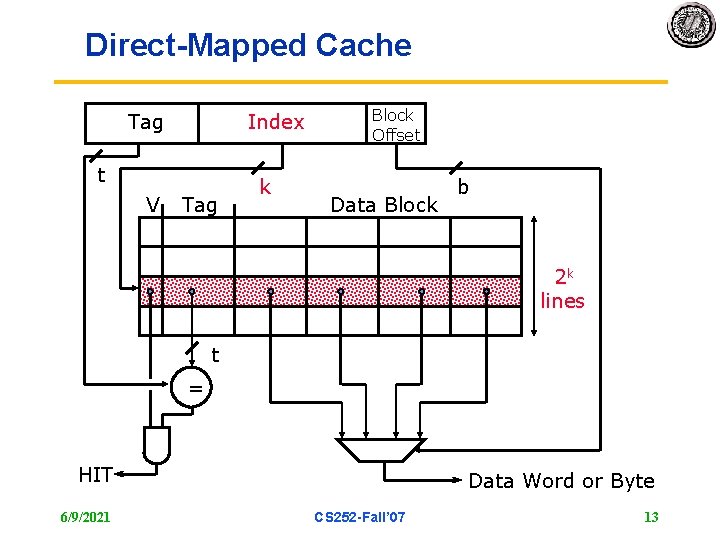

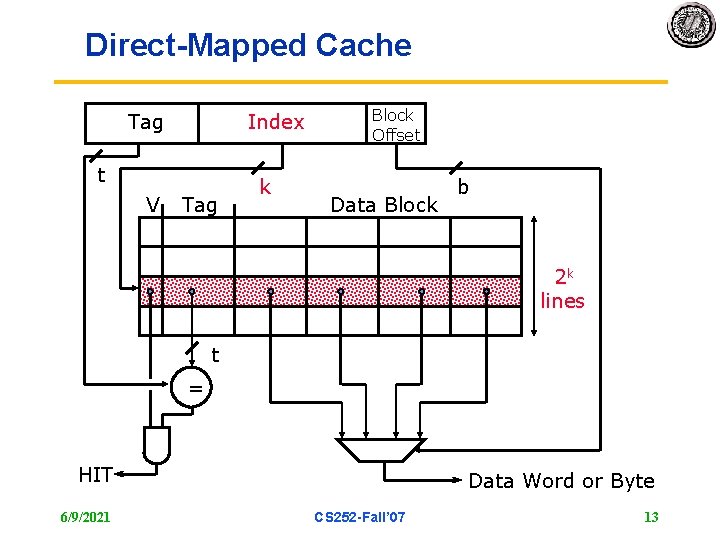

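Direct-Mapped Cache

[Figure: the address is split into a t-bit tag, a k-bit index, and a b-bit block offset. The index selects one of 2^k lines, each holding a valid bit (V), a tag, and a data block. The stored tag is compared (=) with the address tag to produce HIT, and the block offset selects the data word or byte.]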

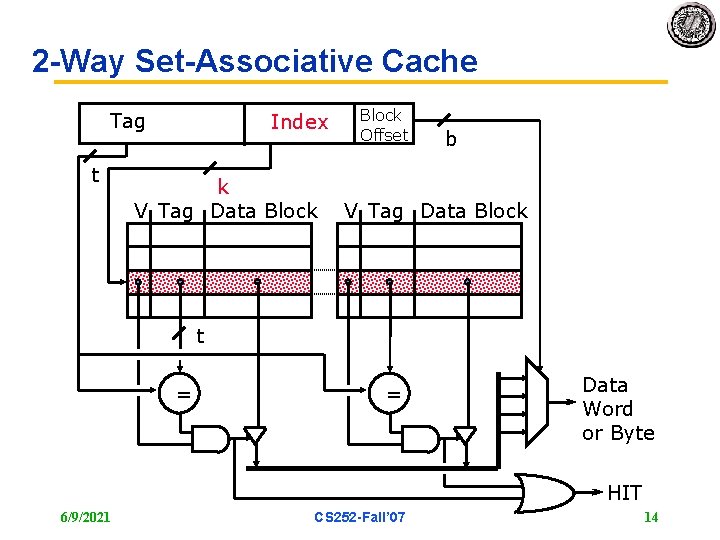

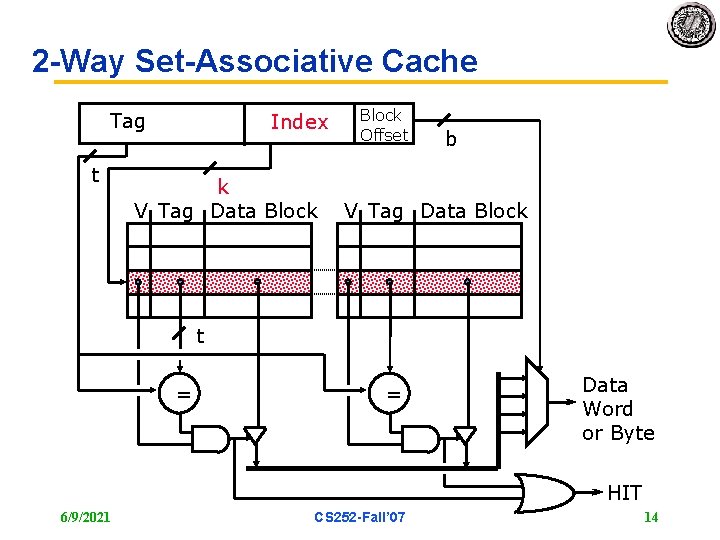

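2-Way Set-Associative Cache

[Figure: the address is split into a t-bit tag, a k-bit index, and a b-bit block offset. The index selects a set with two ways, each holding a valid bit (V), a tag, and a data block. Two comparators (=) check the stored tags against the address tag; a match in either way produces HIT, and the block offset selects the data word or byte.]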

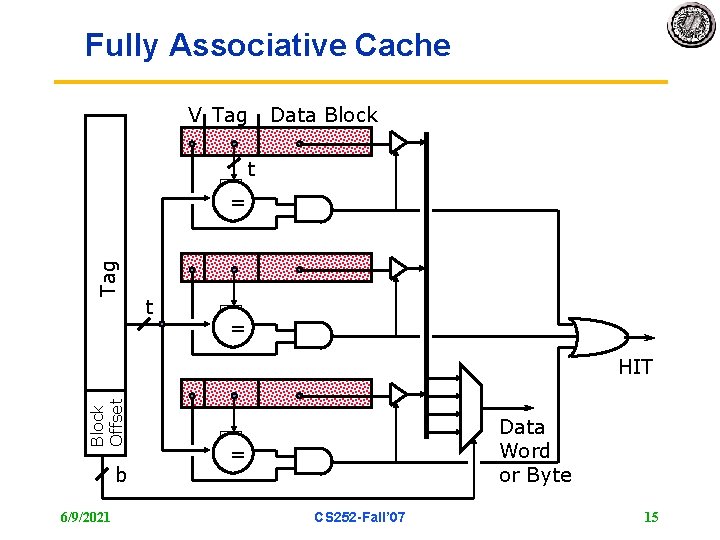

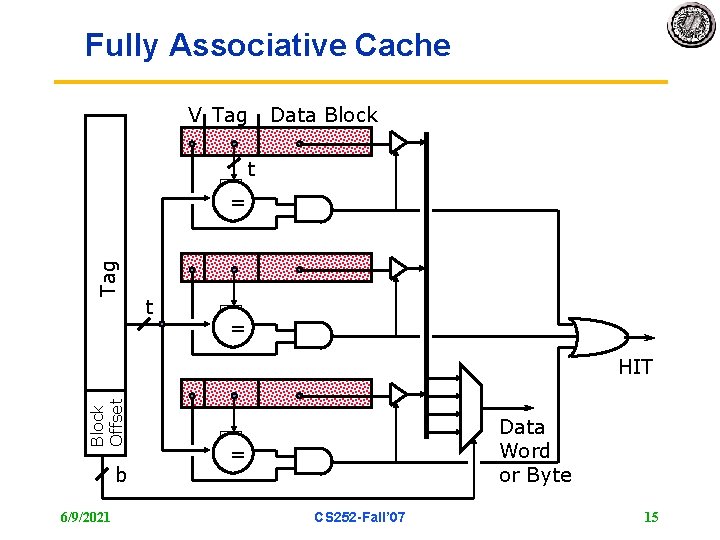

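Fully Associative Cache

[Figure: the address is split into a t-bit tag and a b-bit block offset, with no index. Every line holds a valid bit (V), a tag, and a data block, and each has its own comparator (=) against the address tag. Any match produces HIT, and the block offset selects the data word or byte.]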

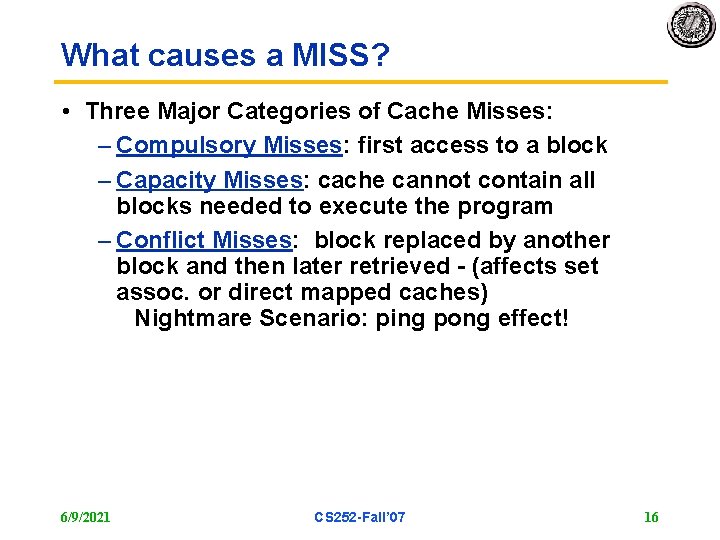

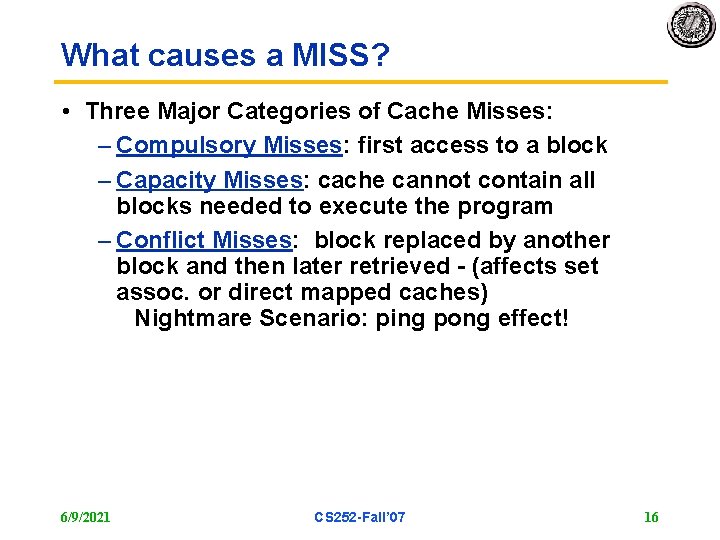

What causes a MISS?

Three major categories of cache misses:

- Compulsory misses: first access to a block.
- Capacity misses: the cache cannot contain all blocks needed to execute the program.
- Conflict misses: a block is replaced by another block and then later retrieved (affects set-associative or direct-mapped caches).
  - Nightmare scenario: the ping-pong effect!
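The ping-pong effect is easy to reproduce in a small simulation. The function below is a minimal sketch, not the lecture's model: it tracks block numbers only and assumes LRU replacement within each set. Blocks 0 and 8 are a hypothetical trace chosen because both map to the same set in either configuration.

```python
def count_misses(block_trace, num_sets, ways):
    """Count misses for a set-associative cache with LRU within each set."""
    sets = [[] for _ in range(num_sets)]   # each list is ordered LRU -> MRU
    misses = 0
    for block in block_trace:
        lines = sets[block % num_sets]
        if block in lines:
            lines.remove(block)            # hit: re-appended below as MRU
        else:
            misses += 1
            if len(lines) == ways:
                lines.pop(0)               # evict the least recently used block
        lines.append(block)
    return misses


trace = [0, 8] * 4                         # two blocks accessed alternately

# Both caches hold 8 blocks in total; only the organization differs.
direct_mapped = count_misses(trace, num_sets=8, ways=1)
two_way = count_misses(trace, num_sets=4, ways=2)
print(direct_mapped, two_way)
```

In the direct-mapped cache the two blocks keep evicting each other, so every access misses. In the 2-way cache only the two compulsory misses remain.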

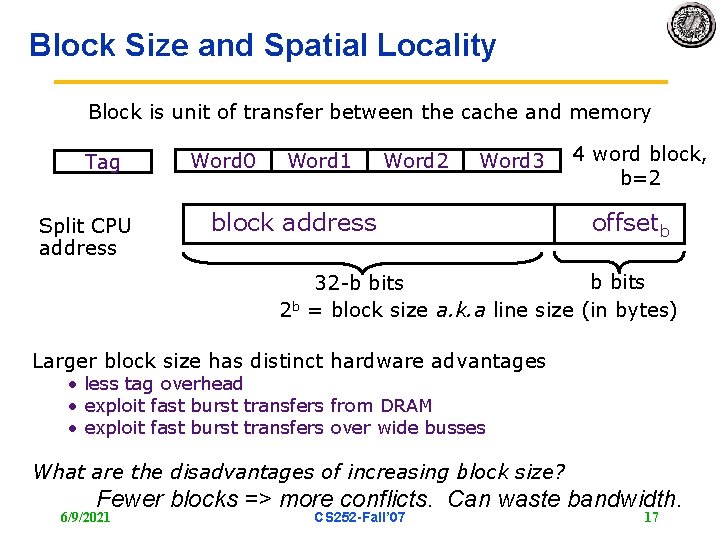

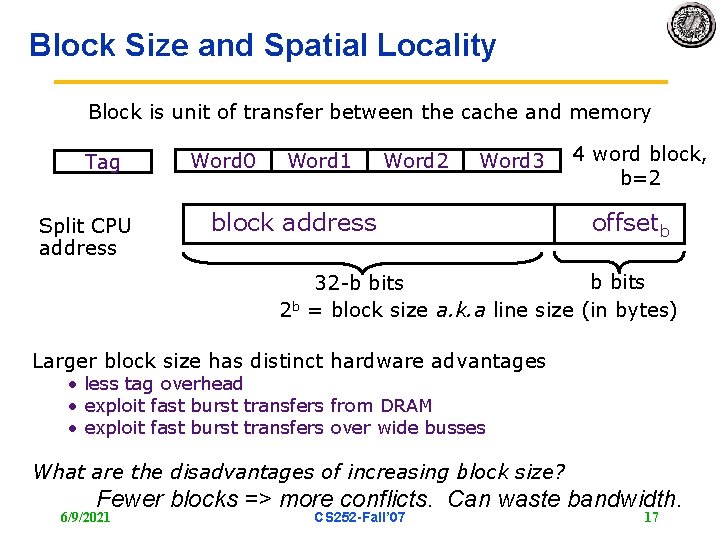

Block Size and Spatial Locality

Block is the unit of transfer between the cache and memory.

[Figure: a cache line with a tag and a 4-word block (Word 0, Word 1, Word 2, Word 3), b = 2. The CPU address is split into a block address (32 - b bits) and an offset (b bits).]

2^b = block size, a.k.a. line size (in bytes)

Larger block size has distinct hardware advantages:

- less tag overhead
- exploit fast burst transfers from DRAM
- exploit fast burst transfers over wide busses

What are the disadvantages of increasing block size? Fewer blocks => more conflicts. Can waste bandwidth.
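Two of these effects can be put into numbers with a short sketch. The functions are hypothetical, and the 20-bit tag is an assumed value held fixed purely for illustration; in a real cache the tag width depends on the address width and the cache organization.

```python
def sequential_scan_misses(num_bytes, block_size_bytes, access_size_bytes=4):
    """Misses for one sequential pass over num_bytes, starting with an empty cache."""
    seen_blocks = set()
    misses = 0
    for address in range(0, num_bytes, access_size_bytes):
        block = address // block_size_bytes
        if block not in seen_blocks:       # first touch of this block
            seen_blocks.add(block)
            misses += 1
    return misses


def tag_overhead(block_size_bytes, tag_bits):
    """Tag bits stored per data bit."""
    return tag_bits / (block_size_bytes * 8)


for block_size in (16, 64):
    print(block_size,
          sequential_scan_misses(1024, block_size),
          tag_overhead(block_size, tag_bits=20))
```

For a sequential scan, a block four times larger cuts both the miss count and the tag overhead by a factor of four. The sketch does not model the downsides named on the slide, namely more conflicts and wasted bandwidth when the extra bytes are never used.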

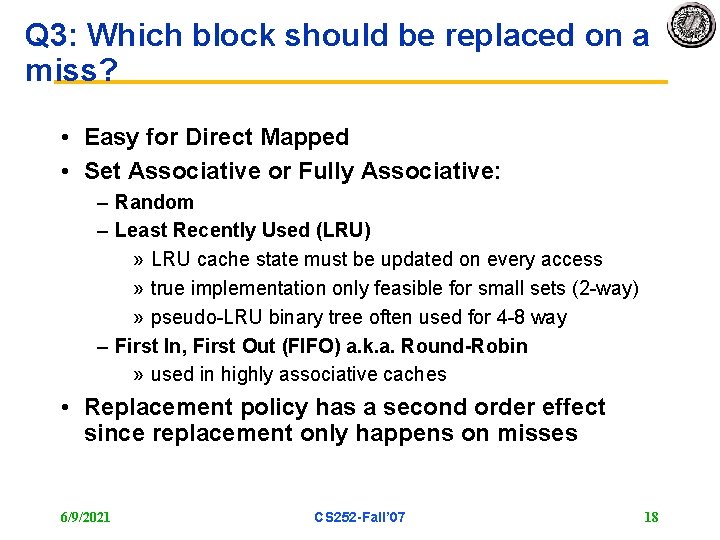

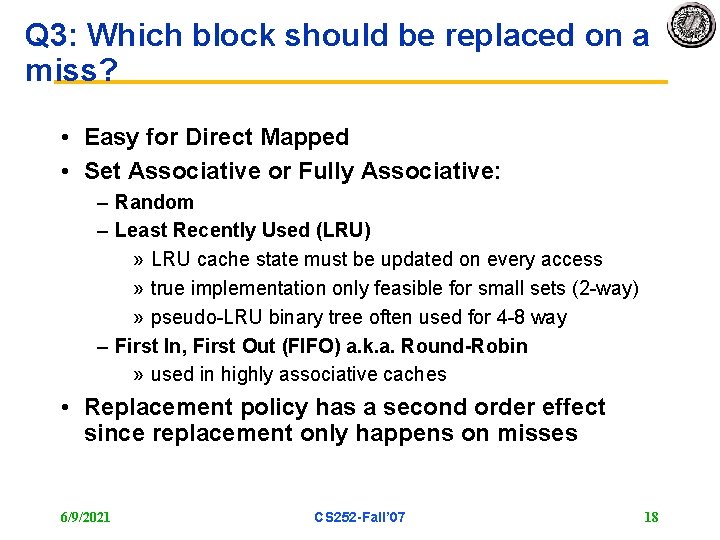

Q3: Which block should be replaced on a miss?

- Easy for direct mapped
- Set associative or fully associative:
  - Random
  - Least Recently Used (LRU)
    - LRU cache state must be updated on every access
    - true implementation only feasible for small sets (2-way)
    - pseudo-LRU binary tree often used for 4-8 way
  - First In, First Out (FIFO), a.k.a. Round-Robin
    - used in highly associative caches
- Replacement policy has a second-order effect, since replacement only happens on misses
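The difference between LRU and FIFO shows up in how a hit is handled. The `CacheSet` class below is a hypothetical sketch of a single set that uses an `OrderedDict` to track eviction order; it is a software illustration, not a description of how hardware implements either policy.

```python
from collections import OrderedDict


class CacheSet:
    """One cache set with LRU or FIFO replacement."""

    def __init__(self, ways, policy="LRU"):
        self.ways = ways
        self.policy = policy
        self.lines = OrderedDict()         # tag -> None; first key is the next victim

    def access(self, tag):
        """Return True on a hit, False on a miss (the block is then installed)."""
        if tag in self.lines:
            if self.policy == "LRU":
                self.lines.move_to_end(tag)   # LRU state changes on every access
            return True                       # FIFO order is untouched by hits
        if len(self.lines) == self.ways:
            self.lines.popitem(last=False)    # evict the entry at the front
        self.lines[tag] = None
        return False


def count_hits(trace, ways, policy):
    cache_set = CacheSet(ways, policy)
    return sum(cache_set.access(tag) for tag in trace)


trace = ["A", "B", "A", "C", "A"]
lru_hits = count_hits(trace, ways=2, policy="LRU")
fifo_hits = count_hits(trace, ways=2, policy="FIFO")
print(lru_hits, fifo_hits)
```

On this trace LRU keeps the reused block A when C arrives, while FIFO evicts A because it was installed first, costing one extra miss.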

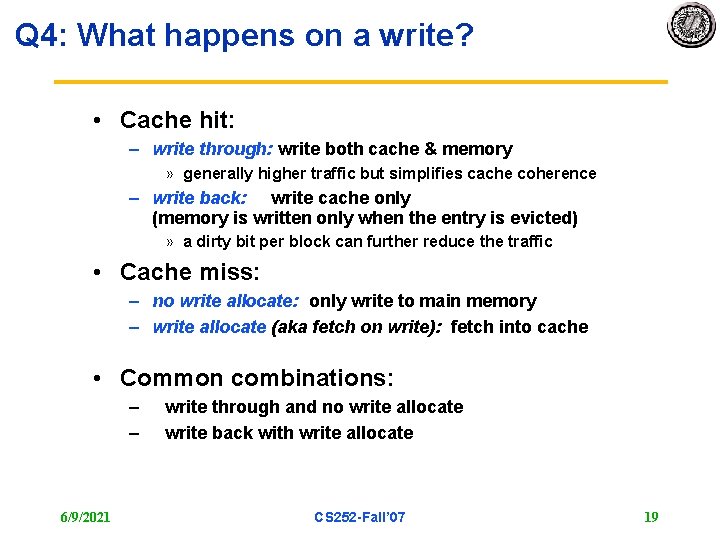

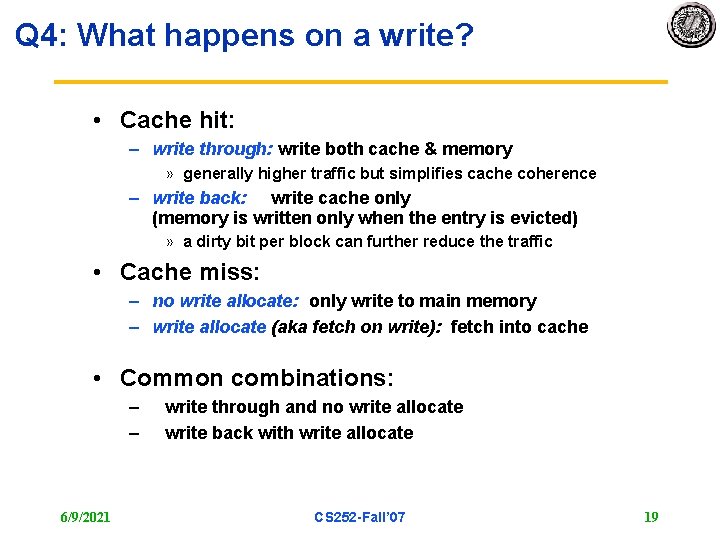

Q4: What happens on a write?

- Cache hit:
  - write through: write both cache and memory
    - generally higher traffic but simplifies cache coherence
  - write back: write cache only (memory is written only when the entry is evicted)
    - a dirty bit per block can further reduce the traffic
- Cache miss:
  - no write allocate: only write to main memory
  - write allocate (a.k.a. fetch on write): fetch into cache
- Common combinations:
  - write through and no write allocate
  - write back with write allocate
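The traffic difference between the two write-hit policies can be sketched by counting memory writes. The function below is a deliberately simplified, hypothetical model: every write is assumed to hit, each dirty block is evicted exactly once at the end, and a write-through word write and a write-back block write are each counted as one operation even though they move different amounts of data.

```python
def memory_writes(write_trace, policy):
    """Count write operations that reach main memory.

    write_trace lists the block number touched by each write; all are
    assumed to be write hits.
    """
    if policy == "write-through":
        return len(write_trace)            # every write also goes to memory
    if policy == "write-back":
        dirty_blocks = set(write_trace)    # dirty bit set on first write to a block
        return len(dirty_blocks)           # one write-back per dirty block on eviction
    raise ValueError(f"unknown policy: {policy}")


trace = [5, 5, 5, 7, 5, 7]                 # repeated writes to two blocks
write_through = memory_writes(trace, "write-through")
write_back = memory_writes(trace, "write-back")
print(write_through, write_back)
```

The more often a block is rewritten while it stays in the cache, the larger the gap between the two counts.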

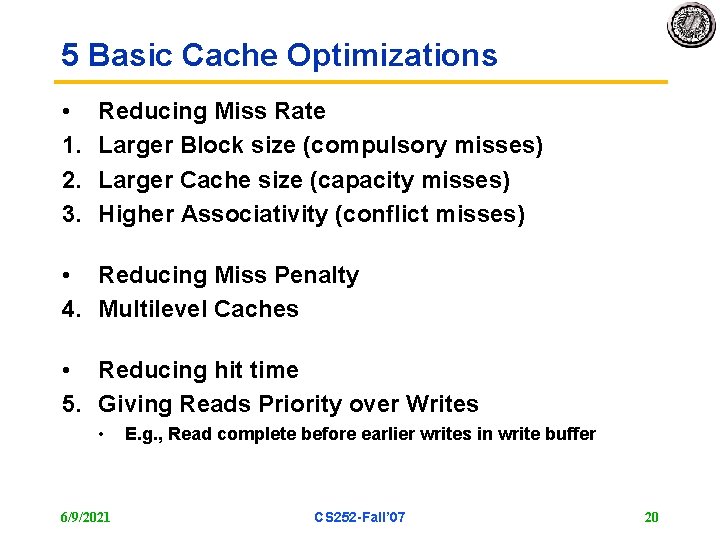

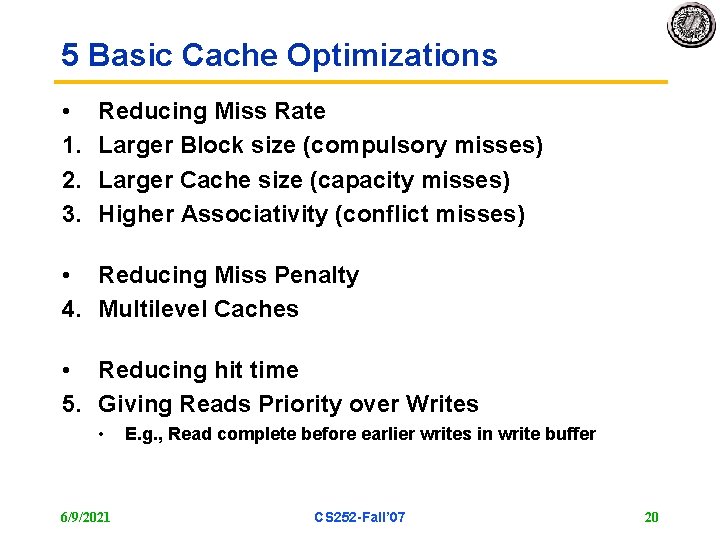

5 Basic Cache Optimizations

- Reducing Miss Rate
  1. Larger block size (compulsory misses)
  2. Larger cache size (capacity misses)
  3. Higher associativity (conflict misses)
- Reducing Miss Penalty
  4. Multilevel caches
- Reducing Hit Time
  5. Giving reads priority over writes
     - E.g., read completes before earlier writes in the write buffer
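The three targets (miss rate, miss penalty, hit time) are the three terms of the standard average memory access time formula, AMAT = hit time + miss rate × miss penalty. The slide does not state this formula, so the sketch below is offered as context only, and every number in it is made up for illustration.

```python
def amat(hit_time, miss_rate, miss_penalty):
    """Average memory access time, in the same units as hit_time."""
    return hit_time + miss_rate * miss_penalty


# Hypothetical parameters, in cycles.
l1_hit_time = 1
l1_miss_rate = 0.05
memory_latency = 100

# Single-level cache: every L1 miss pays the full memory latency.
l1_only = amat(l1_hit_time, l1_miss_rate, memory_latency)

# Multilevel cache: the L1 miss penalty becomes the AMAT of the L2.
l2_hit_time = 10
l2_local_miss_rate = 0.2
l1_miss_penalty = amat(l2_hit_time, l2_local_miss_rate, memory_latency)
with_l2 = amat(l1_hit_time, l1_miss_rate, l1_miss_penalty)

print(l1_only, with_l2)
```

With these assumed values the second-level cache cuts the L1 miss penalty from 100 cycles to about 30, which is the sense in which multilevel caches reduce miss penalty rather than miss rate.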