EE 193 Parallel Computing Fall 2017 Tufts University



EE 193: Parallel Computing, Fall 2017, Tufts University

Instructor: Joel Grodstein, joel.grodstein@tufts.edu

Lecture 6: Hot spots and false sharing

Goals

- Primary goals:
  - Understand how cache coherency can affect software performance
  - Understand what false sharing is, and how it can affect software performance

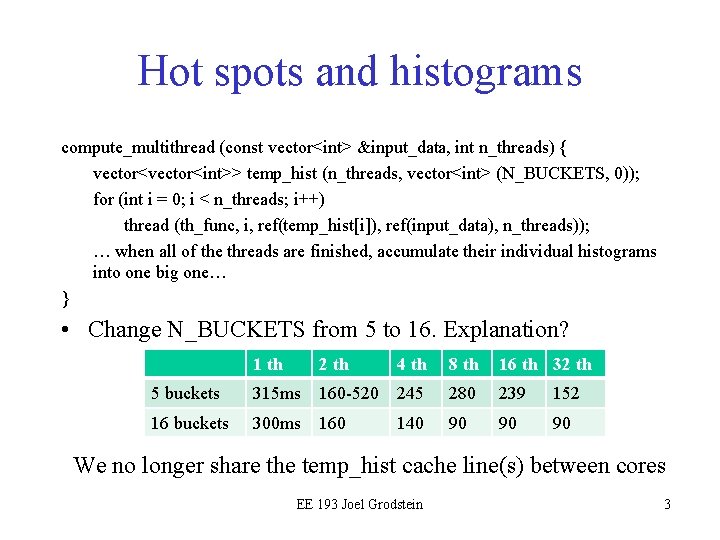

Hot spots and histograms

    compute_multithread (const vector<int> &input_data, int n_threads) {
        vector<vector<int>> temp_hist (n_threads, vector<int> (N_BUCKETS, 0));
        for (int i = 0; i < n_threads; i++)
            thread (th_func, i, ref(temp_hist[i]), ref(input_data), n_threads);
        … when all of the threads are finished, accumulate their individual histograms into one big one…
    }

- Change N_BUCKETS from 5 to 16. Explanation?

Run times (ms):

| | 1 th | 2 th | 4 th | 8 th | 16 th | 32 th |
|---|---|---|---|---|---|---|
| 5 buckets | 315 | 160-520 | 245 | 280 | 239 | 152 |
| 16 buckets | 300 | 160 | 140 | 90 | 90 | 90 |

- We no longer share the temp_hist cache line(s) between cores.
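One way to get the 16-bucket behavior without changing the bucket count is to pad each thread's histogram out to its own cache line. The following is a minimal sketch, not the course's code: it assumes 64-byte cache lines, C++17 (so that `std::vector` honors the over-alignment), and non-negative input values. The names `PaddedHist` and `kLineBytes`, and the body and signature of `th_func`, are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <thread>
#include <vector>

constexpr int N_BUCKETS = 5;            // the slow case from the slide
constexpr std::size_t kLineBytes = 64;  // assumed cache-line size

// Hypothetical wrapper: alignas rounds sizeof(PaddedHist) up to 64 bytes,
// so adjacent per-thread histograms never share a cache line.
struct alignas(kLineBytes) PaddedHist {
    int count[N_BUCKETS] = {};
};

// Assumed worker: thread `me` handles elements me, me+n_threads, ...
// Input values are assumed to be non-negative.
void th_func(int me, PaddedHist &hist, const std::vector<int> &input, int n_threads) {
    for (std::size_t i = me; i < input.size(); i += n_threads)
        ++hist.count[input[i] % N_BUCKETS];
}

std::vector<int> compute_multithread(const std::vector<int> &input, int n_threads) {
    assert(n_threads > 0);
    std::vector<PaddedHist> temp_hist(n_threads);  // one padded histogram per thread
    std::vector<std::thread> workers;
    for (int i = 0; i < n_threads; ++i)
        workers.emplace_back(th_func, i, std::ref(temp_hist[i]), std::cref(input), n_threads);
    for (auto &w : workers) w.join();

    // All threads are finished: accumulate the private histograms.
    std::vector<int> total(N_BUCKETS, 0);
    for (const auto &h : temp_hist)
        for (int b = 0; b < N_BUCKETS; ++b) total[b] += h.count[b];
    return total;
}
```

The padding wastes 44 bytes per thread here, which is usually a small price compared with cache lines bouncing between cores.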

Chapter 4: Shared Memory Programming with Pthreads

- This lecture covers section 4.10
- Caches, Cache-Coherence and False Sharing

Copyright © 2010, Elsevier Inc. All rights Reserved

Goals

- We're going to
  - take a simple program (multiply a matrix * vector)
  - collect performance data for various cases
  - use our knowledge of memory systems to understand the data

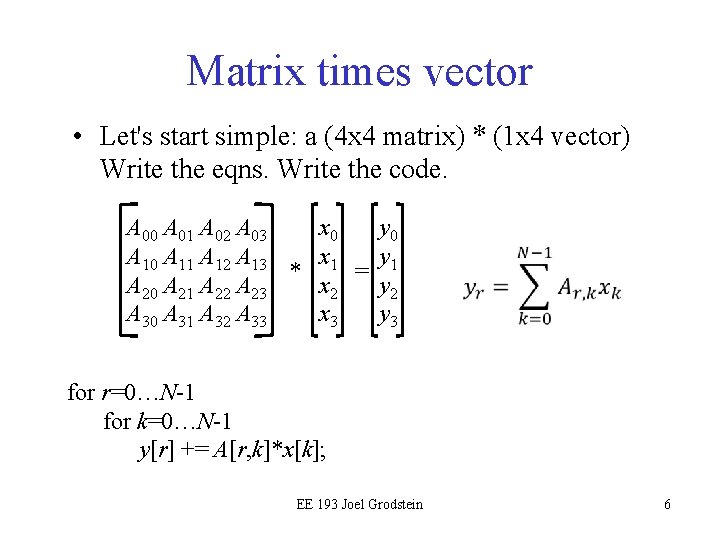

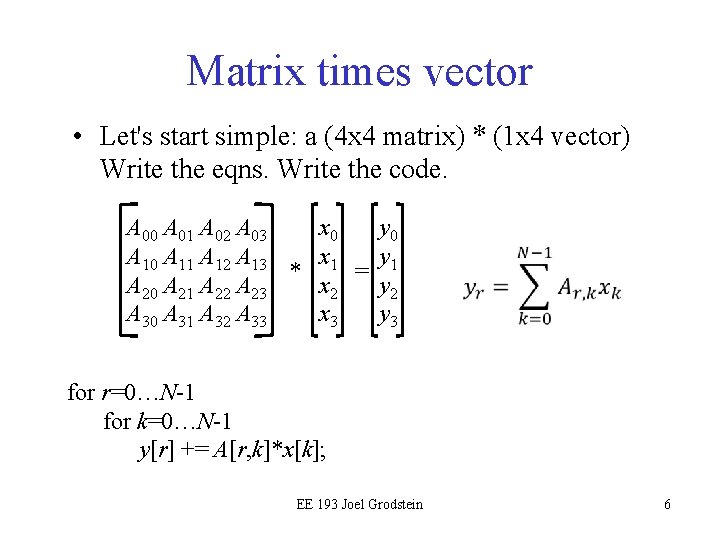

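Matrix times vector

- Let's start simple: a (4 x 4 matrix) * (4 x 1 vector). Write the eqns. Write the code.

$$
\begin{pmatrix}
A_{00} & A_{01} & A_{02} & A_{03} \\
A_{10} & A_{11} & A_{12} & A_{13} \\
A_{20} & A_{21} & A_{22} & A_{23} \\
A_{30} & A_{31} & A_{32} & A_{33}
\end{pmatrix}
\begin{pmatrix} x_0 \\ x_1 \\ x_2 \\ x_3 \end{pmatrix}
=
\begin{pmatrix} y_0 \\ y_1 \\ y_2 \\ y_3 \end{pmatrix}
$$

    for r=0…N-1
        for k=0…N-1
            y[r] += A[r, k]*x[k];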
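The double loop on this slide can be written out as a short, single-threaded C++ function. This is only a sketch: the `Matrix` alias, the function name `mat_vec`, and the choice of `float` elements are assumptions, not something the slides specify.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical representation: a vector of rows.
using Matrix = std::vector<std::vector<float>>;

// Computes y = A * x, one dot product per row of A.
std::vector<float> mat_vec(const Matrix &A, const std::vector<float> &x) {
    std::vector<float> y(A.size(), 0.0f);
    for (std::size_t r = 0; r < A.size(); ++r) {
        assert(A[r].size() == x.size());  // each row must match the length of x
        for (std::size_t k = 0; k < x.size(); ++k)
            y[r] += A[r][k] * x[k];
    }
    return y;
}
```

For example, `mat_vec({{1, 2}, {3, 4}}, {1, 1})` yields the vector (3, 7).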

Add multiple threads

- Divide up the output by threads. In this case, 4 y values and 2 threads → 2 values per thread (thread 0 computes y0 and y1; thread 1 computes y2 and y3).

    for r=0…N-1
        for k=0…N-1
            y[r] += A[r, k]*x[k];

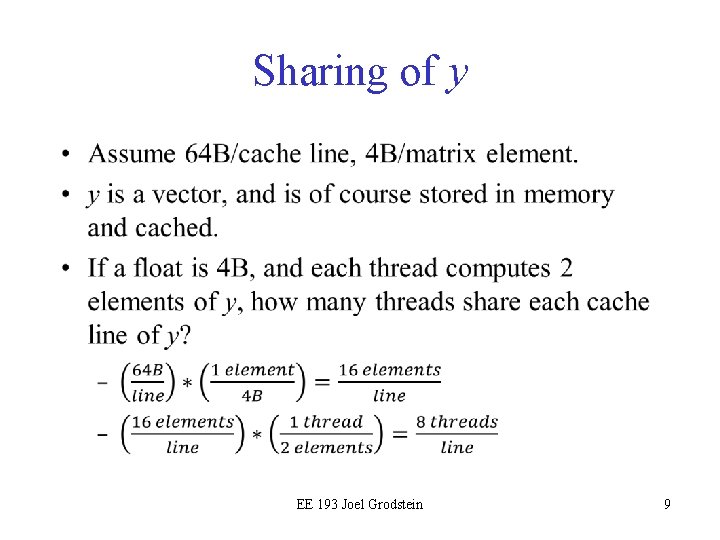

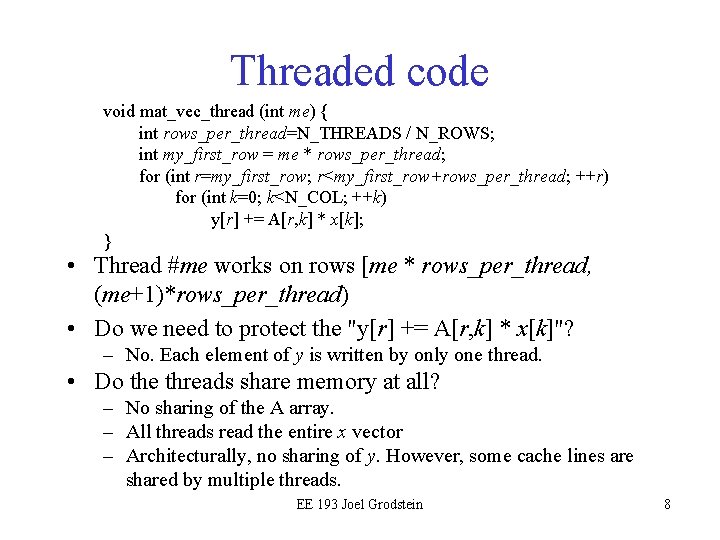

Threaded code

    void mat_vec_thread (int me) {
        int rows_per_thread = N_ROWS / N_THREADS;
        int my_first_row = me * rows_per_thread;
        for (int r=my_first_row; r<my_first_row+rows_per_thread; ++r)
            for (int k=0; k<N_COL; ++k)
                y[r] += A[r][k] * x[k];
    }

- Thread #me works on rows [me * rows_per_thread, (me+1)*rows_per_thread)
- Do we need to protect the "y[r] += A[r][k] * x[k]"?
  - No. Each element of y is written by only one thread.
- Do the threads share memory at all?
  - No sharing of the A array.
  - All threads read the entire x vector.
  - Architecturally, no sharing of y. However, some cache lines are shared by multiple threads.
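A self-contained version of the threaded routine might look like the sketch below. It uses `std::thread` instead of Pthreads and passes the arrays as arguments rather than globals; both are choices made for this illustration. It assumes the row count divides evenly by the thread count, and `Matrix` and `mat_vec_parallel` are hypothetical names.

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <thread>
#include <vector>

using Matrix = std::vector<std::vector<float>>;  // hypothetical: vector of rows

// Thread `me` computes rows [me*rows_per_thread, (me+1)*rows_per_thread).
void mat_vec_thread(int me, int rows_per_thread, const Matrix &A,
                    const std::vector<float> &x, std::vector<float> &y) {
    const int first = me * rows_per_thread;
    for (int r = first; r < first + rows_per_thread; ++r)
        for (std::size_t k = 0; k < x.size(); ++k)
            y[r] += A[r][k] * x[k];  // no lock: each y[r] has exactly one writer
}

std::vector<float> mat_vec_parallel(const Matrix &A, const std::vector<float> &x,
                                    int n_threads) {
    const int n_rows = static_cast<int>(A.size());
    assert(n_threads > 0 && n_rows % n_threads == 0);  // assumed: even split
    const int rows_per_thread = n_rows / n_threads;
    std::vector<float> y(n_rows, 0.0f);
    std::vector<std::thread> workers;
    for (int me = 0; me < n_threads; ++me)
        workers.emplace_back(mat_vec_thread, me, rows_per_thread,
                             std::cref(A), std::cref(x), std::ref(y));
    for (auto &w : workers) w.join();
    return y;
}
```

The result is correct without any locking, but neighboring elements of `y` can still sit in the same cache line, which is where the performance trouble discussed next comes from.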

Sharing of y

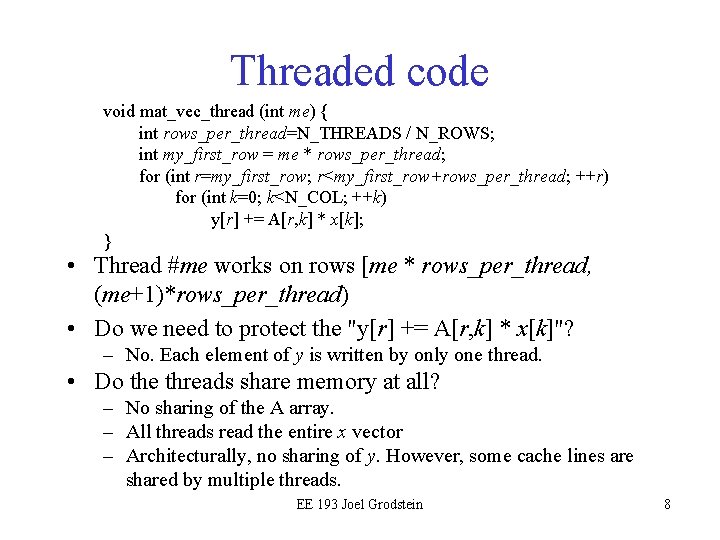

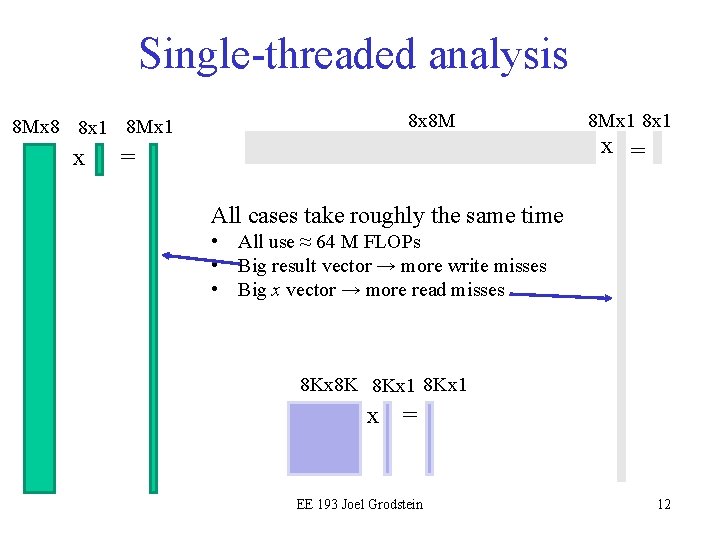

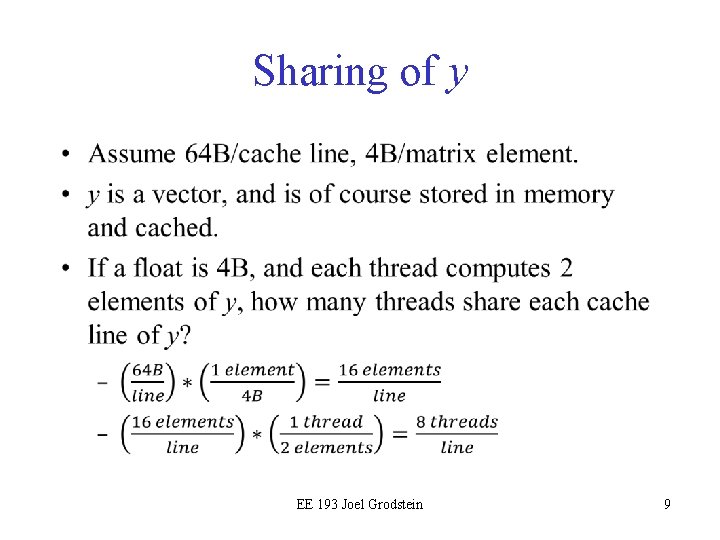

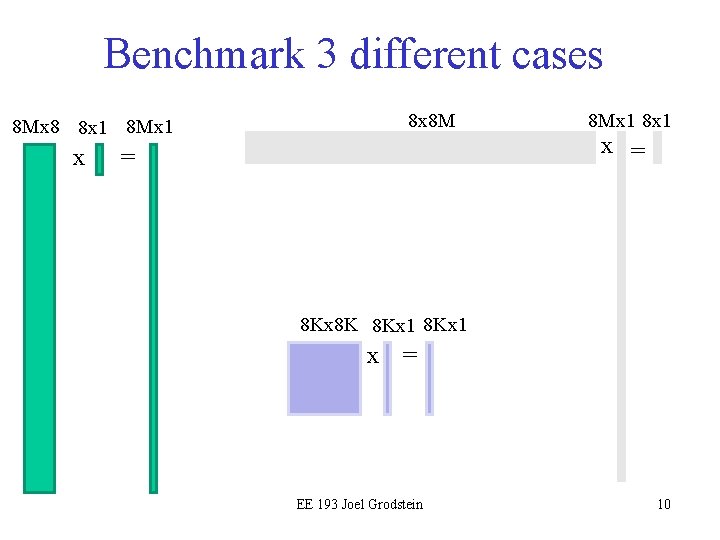

Benchmark 3 different cases

- (8M x 8) * (8 x 1) = (8M x 1)
- (8K x 8K) * (8K x 1) = (8K x 1)
- (8 x 8M) * (8M x 1) = (8 x 1)

Run-times and efficiencies of matrix-vector multiplication

- Cases: (8M x 8)*(8 x 1) → (8M x 1); (8K x 8K)*(8K x 1) → (8K x 1); (8 x 8M)*(8M x 1) → (8 x 1)
- Single-threaded times: all roughly equal
- How about what happens with 4 threads?
  - Faster than 1 thread
  - Quite inefficient for 8 x 8M

(times are in seconds)

Single-threaded analysis

- All three cases (8M x 8, 8K x 8K, 8 x 8M) take roughly the same time
- All use ≈ 64M FLOPs
- Big result vector → more write misses
- Big x vector → more read misses

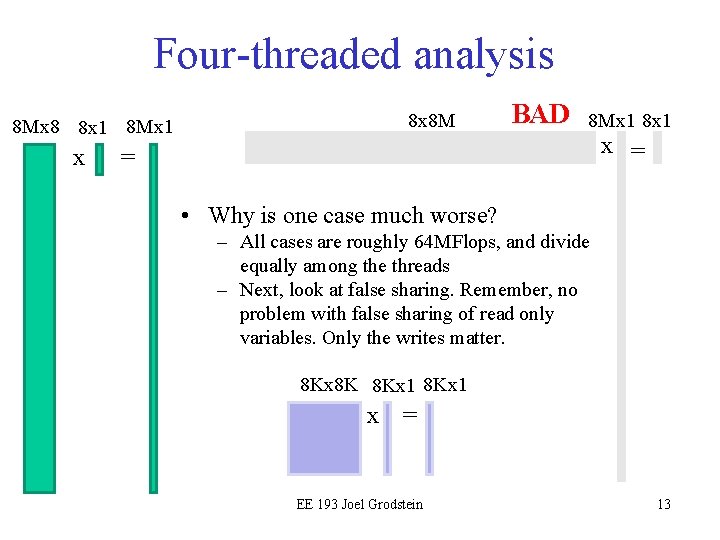

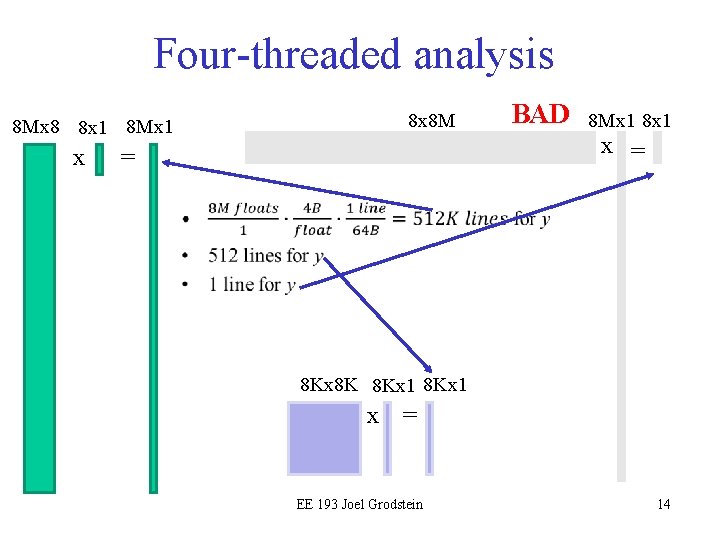

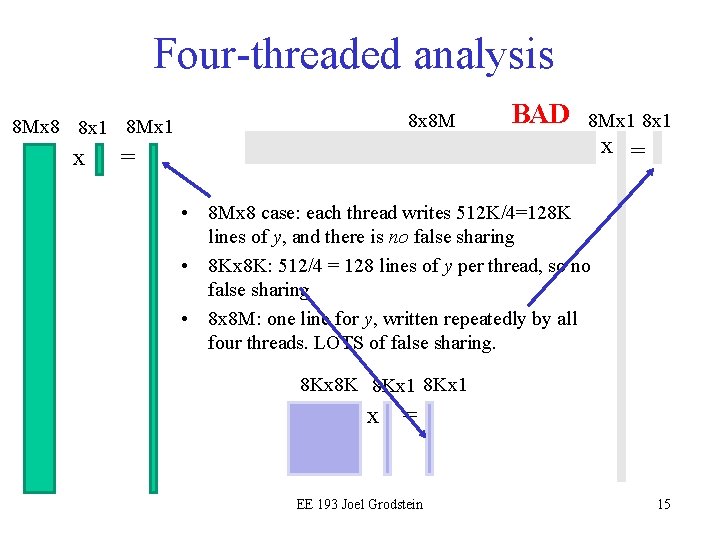

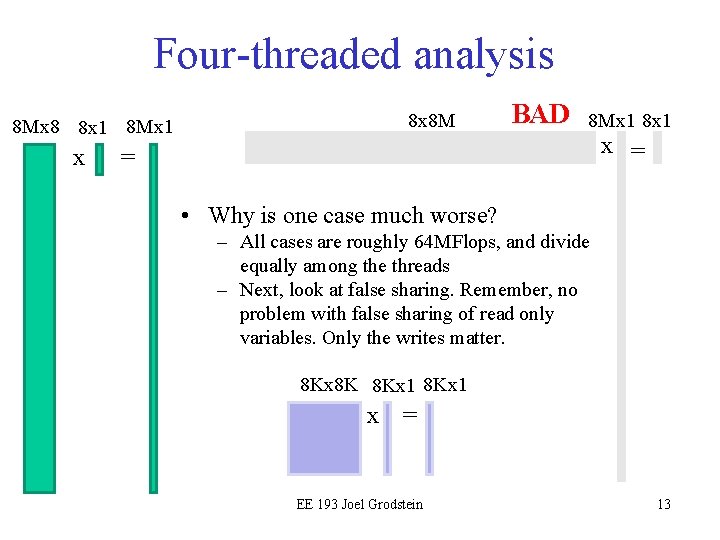

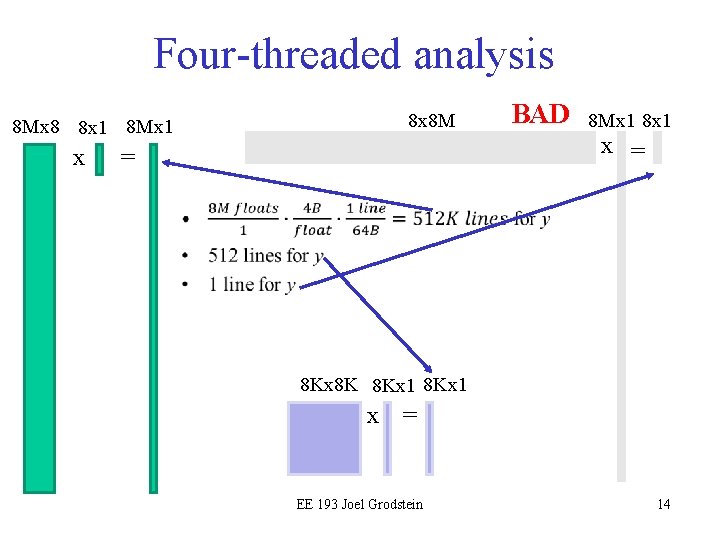

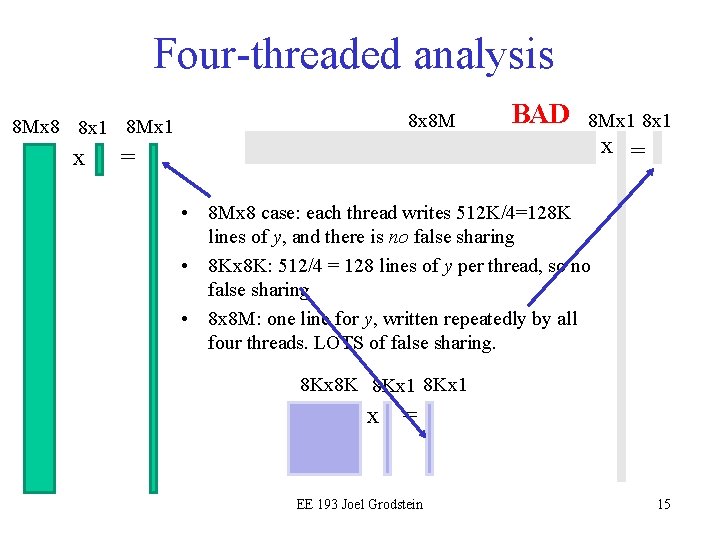

Four-threaded analysis

- Why is one case (8 x 8M, marked BAD) much worse?
  - All cases are roughly 64M FLOPs, and divide equally among the threads
  - Next, look at false sharing. Remember, no problem with false sharing of read-only variables. Only the writes matter.


Four-threaded analysis

- 8M x 8 case: each thread writes 512K/4 = 128K lines of y, and there is no false sharing
- 8K x 8K: 512/4 = 128 lines of y per thread, so no false sharing
- 8 x 8M (BAD): one line for y, written repeatedly by all four threads. LOTS of false sharing.
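The line counts above can be reproduced with a little arithmetic. The sketch below assumes 64-byte cache lines and 4-byte elements (16 elements per line), which is what makes 8M elements come out to 512K lines. The slides do not state either number, so treat both constants, and the helper names, as assumptions.

```cpp
#include <cassert>
#include <cstddef>

// Assumptions (not stated on the slide): 64-byte lines, 4-byte elements.
constexpr std::size_t kLineBytes = 64;
constexpr std::size_t kElemBytes = 4;

// Number of cache lines occupied by a y vector of n_elems elements (rounded up).
constexpr std::size_t lines_of_y(std::size_t n_elems) {
    return (n_elems * kElemBytes + kLineBytes - 1) / kLineBytes;
}

// Whole lines available per thread when y is split evenly.
// A result of 0 means the threads cannot avoid sharing a line.
constexpr std::size_t lines_per_thread(std::size_t n_elems, std::size_t n_threads) {
    return lines_of_y(n_elems) / n_threads;
}
```

With these assumptions, `lines_per_thread(8 * 1024 * 1024, 4)` is 128K and `lines_per_thread(8 * 1024, 4)` is 128, while `lines_per_thread(8, 4)` is 0: the entire 8-element result fits in one line that all four threads write.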

Analysis of Matrix Vector

- Solutions to false sharing?
  - Pad the output vector with dummy elements
  - Use private storage for multiplication loop and share at the end

The loop in question:

    for r=0…N-1
        for k=0…N-1
            y[r] += A[r, k]*x[k];
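The second solution (private storage, shared at the end) could be sketched as follows. Each thread accumulates a row's dot product in a local variable and stores to `y` once per row, so the shared line is written 8 times in total instead of 64M times in the 8 x 8M case. The names `mat_vec_private` and `mat_vec_no_false_sharing` are hypothetical, and an even split of rows across threads is assumed.

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <thread>
#include <vector>

using Matrix = std::vector<std::vector<float>>;  // hypothetical: vector of rows

void mat_vec_private(int me, int rows_per_thread, const Matrix &A,
                     const std::vector<float> &x, std::vector<float> &y) {
    const int first = me * rows_per_thread;
    for (int r = first; r < first + rows_per_thread; ++r) {
        float acc = 0.0f;            // private storage: this thread's own local
        for (std::size_t k = 0; k < x.size(); ++k)
            acc += A[r][k] * x[k];   // inner loop never touches the shared line
        y[r] = acc;                  // share at the end: one store per row
    }
}

std::vector<float> mat_vec_no_false_sharing(const Matrix &A,
                                            const std::vector<float> &x,
                                            int n_threads) {
    const int n_rows = static_cast<int>(A.size());
    assert(n_threads > 0 && n_rows % n_threads == 0);  // assumed: even split
    std::vector<float> y(n_rows, 0.0f);
    std::vector<std::thread> workers;
    for (int me = 0; me < n_threads; ++me)
        workers.emplace_back(mat_vec_private, me, n_rows / n_threads,
                             std::cref(A), std::cref(x), std::ref(y));
    for (auto &w : workers) w.join();
    return y;
}
```

An optimizing compiler may sometimes make this transformation on its own, but it cannot always prove that `y` does not alias `A` or `x`, so writing the local accumulator explicitly is the safer choice.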

![False sharing details](https://slidetodoc.com/presentation_image_h2/c6fb3e407c650dd97093ccc4db01cfad/image-17.jpg)

False sharing details

- What happens when
  - multiple threads all do y[r] += A[r, i]*x[i]
  - each thread has a different r, but they may be in the same cache line
- Architecturally, each vector element is a different memory location
  - The architecture guarantees that they do not interact.
  - But the cache-coherence microarchitecture goes through handstands to ensure that, and it's slow.
- Sequence
  - Lots of threads all read the line
  - One thread does a store first
  - Every other thread is forced to invalidate its copy of the line, then re-read it after the store completes
  - Only one thread can modify the line at a time.

Summary

- Frequent read and write access by many threads to a single cache line can cause hot spots.
- False sharing is when
  - different threads use the same cache line, so there is sharing
  - but they use different memory addresses, so architecturally there is no overlap (i.e., the sharing is false).
- Usually, it's a bad thing for performance.
  - But now we'll look at a case where we can exploit it.

Multi-core CPUs are complex

- We've seen how hard the ring is
  - Making distributed processors, memory & caches look like they're not distributed (and making it fast)
  - Lots of crazy cross products. Core #1 requests an address just one cycle after core #2 has dirtied it. But right after you tell core #1 no, core #2 evicts it…

Our problem for today

- Problem: you don't want to sell chips that don't work!
- Question: how many of you have designed projects that didn't work the first time?
  - How did you know they didn't work?
  - You probably ran some tests
- Question: how many of you have designed and tested projects that still had bugs?
  - Probably a lot of you!
  - (Even if you didn't know it)

Our strategy

- But when you have real jobs, you'll write really good tests for your products, right?
  - Good idea. But that's easier said than done.
  - The stakes are high: if you keep shipping defective products, your company doesn't last real long.
- 2014 industry study
  - More verification engineers than design engineers
  - And design engineers spend 47% of their time in verification!
  - And still, only 30% of all chips work the first time
  - Culprits: design errors (65%), spec changes (45%).

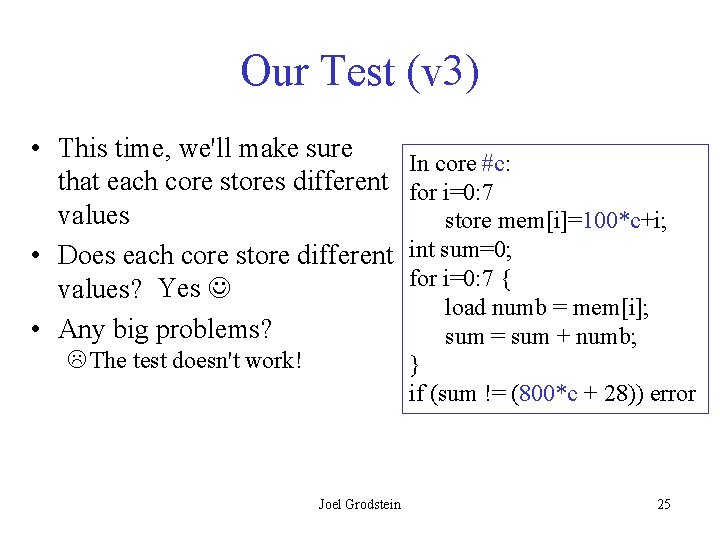

What makes a good test?

- Our strategy nonetheless… is to write really good tests. Let's plan out how.
- What things should our test do, to stress the shared cache?
  - Lots of loads and stores
  - From lots of different cores
  - Lots of collisions
  - Lots of randomness in inter-core timing
  - Check that the answers are right
- Writing that test is really hard
  - Most multi-threaded code is harder than it looks.
  - Hint: we're going to have to take advantage of our knowledge of computer engineering.

![Our Test (v 1)](https://slidetodoc.com/presentation_image_h2/c6fb3e407c650dd97093ccc4db01cfad/image-23.jpg)

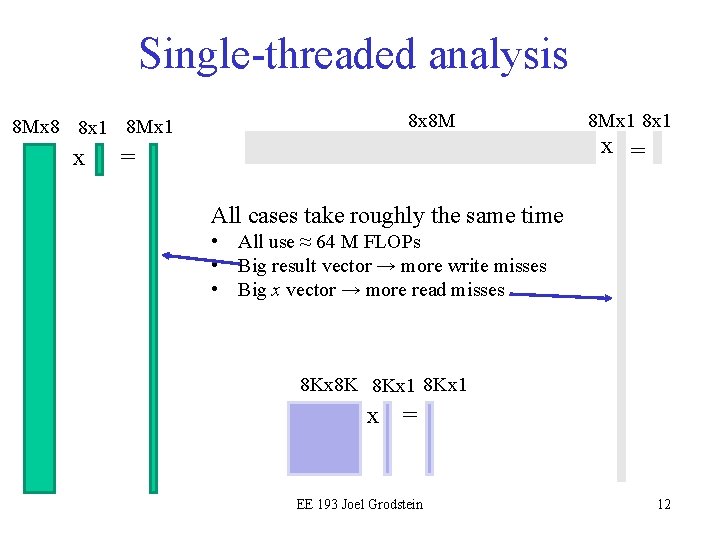

Our Test (v 1)

    JUST ONCE
    for i=0:7 {
        store mem[i] = i;
    }

    EVERY CORE
    int sum=0;
    for i=0:7 {
        load numb = mem[i];
        sum = sum + numb;
    }
    if (sum != 28) error

- Do we have lots of cores all accessing the same memory locations? Yes
- Is our test long, with lots of variety? No. The main part of the test has no stores!

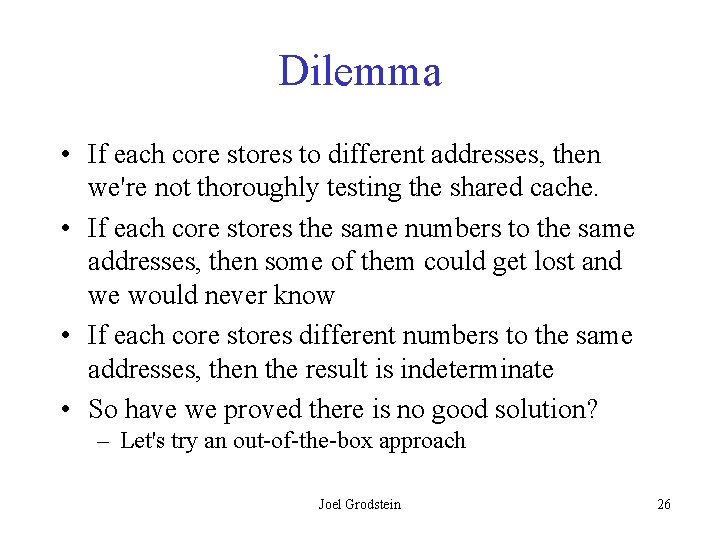

Our Test (v 2)

    EVERY CORE
    for i=0:7
        store mem[i]=i;
    int sum=0;
    for i=0:7 {
        load numb = mem[i];
        sum = sum + numb;
    }
    if (sum != 28) error

- Move the one-time initialization to every core.
- Does every core do both loads & stores now? Yes
- Any issues?
  - Each core stores the same thing. So we could have all but one core dropping its stores, and never know.

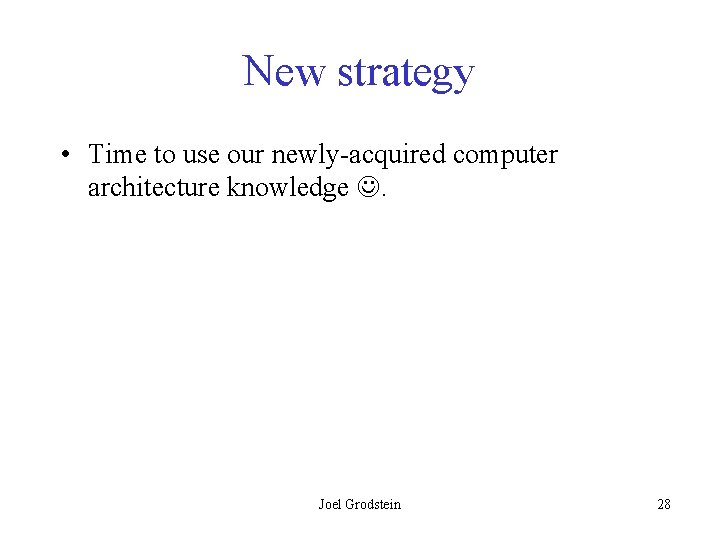

Our Test (v 3)

    In core #c:
    for i=0:7
        store mem[i]=100*c+i;
    int sum=0;
    for i=0:7 {
        load numb = mem[i];
        sum = sum + numb;
    }
    if (sum != (800*c + 28)) error

- This time, we'll make sure that each core stores different values
- Does each core store different values? Yes
- Any big problems? The test doesn't work! Another core can overwrite mem[i] between this core's store and its load, so the sum is unpredictable.

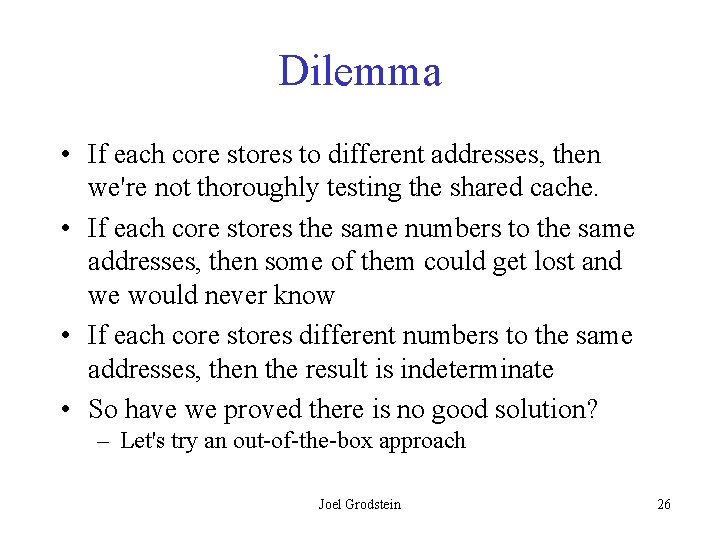

Dilemma

- If each core stores to different addresses, then we're not thoroughly testing the shared cache.
- If each core stores the same numbers to the same addresses, then some of them could get lost and we would never know.
- If each core stores different numbers to the same addresses, then the result is indeterminate.
- So have we proved there is no good solution?
  - Let's try an out-of-the-box approach

Our Test (v 4)

    In core #c:
    while (I don't have the lock)
        request the lock;
    for i=0:7
        store mem[i]=100*c+i;
    int sum=0;
    for i=0:7 {
        load numb = mem[i];
        sum = sum + numb;
    }
    if (sum != (800*c + 28)) error
    return the lock;

- Still have each core store different values.
- Use a lock to prevent conflicts.
- Does it work predictably? Yes
- How much does it stress the cache?
  - Other than the lock itself, only one core accesses the cache at a time.
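For readers who want to run something like v4 in software, here is a rough C++ analogue in which threads stand in for cores and a `std::mutex` stands in for the lock. It is a sketch under those assumptions, not the hardware test itself; `core_v4` and `run_v4` are hypothetical names.

```cpp
#include <cassert>
#include <mutex>
#include <thread>
#include <vector>

int mem[8];           // the shared locations every "core" stores to
std::mutex mem_lock;  // stands in for the lock on the slide

// Body of the test for core #c; returns true if the checksum matches.
bool core_v4(int c) {
    std::lock_guard<std::mutex> guard(mem_lock);  // request the lock; returned on exit
    for (int i = 0; i < 8; ++i)
        mem[i] = 100 * c + i;
    int sum = 0;
    for (int i = 0; i < 8; ++i)
        sum += mem[i];
    return sum == 800 * c + 28;
}

// Runs the test on n_cores threads and returns the number of failures.
int run_v4(int n_cores) {
    std::vector<int> ok(n_cores, 0);
    std::vector<std::thread> cores;
    for (int c = 0; c < n_cores; ++c)
        cores.emplace_back([&ok, c] { ok[c] = core_v4(c) ? 1 : 0; });
    for (auto &t : cores) t.join();
    int failures = 0;
    for (int v : ok) failures += (v == 0);
    return failures;
}
```

Because the whole store/load sequence runs under the lock, the threads execute it one at a time. That is why the result is predictable, and also why this version puts little concurrent pressure on the cache.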

New strategy

- Time to use our newly-acquired computer architecture knowledge.

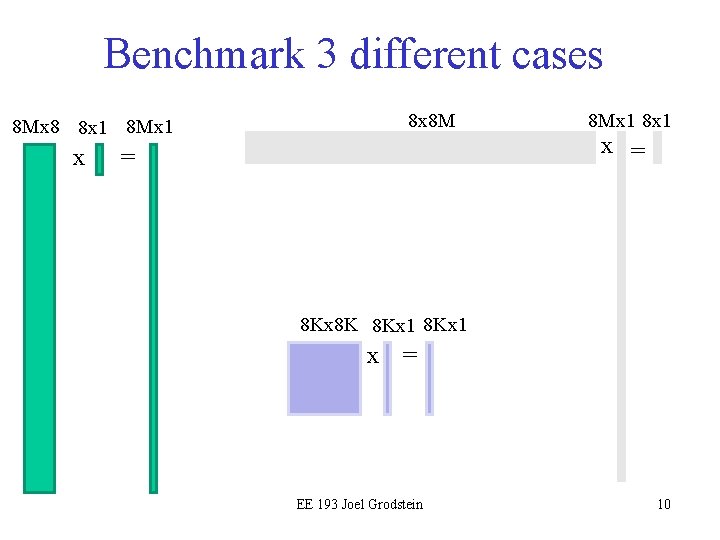

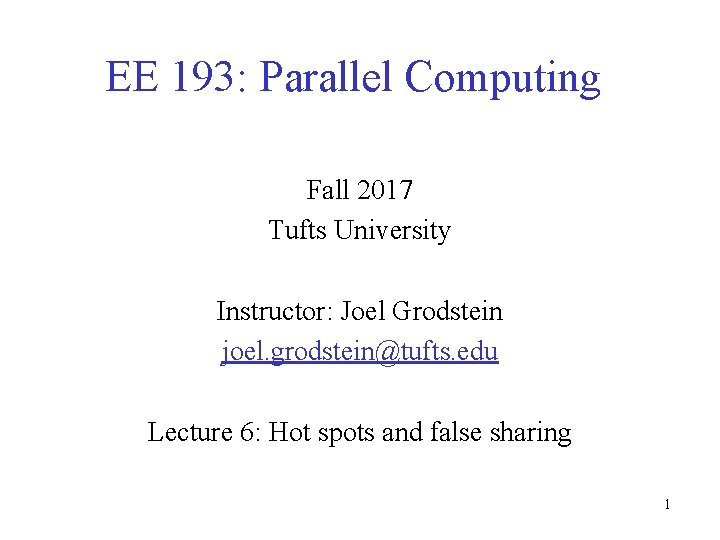

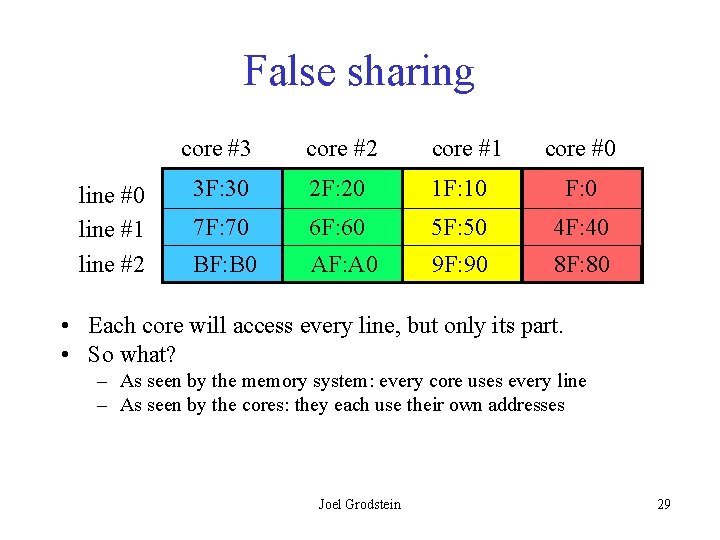

False sharing

Byte addresses (hex, high:low) used by each core within each line:

| | core #3 | core #2 | core #1 | core #0 |
|---|---|---|---|---|
| line #0 | 3F:30 | 2F:20 | 1F:10 | F:0 |
| line #1 | 7F:70 | 6F:60 | 5F:50 | 4F:40 |
| line #2 | BF:B0 | AF:A0 | 9F:90 | 8F:80 |

- Each core will access every line, but only its part.
- So what?
  - As seen by the memory system: every core uses every line
  - As seen by the cores: they each use their own addresses

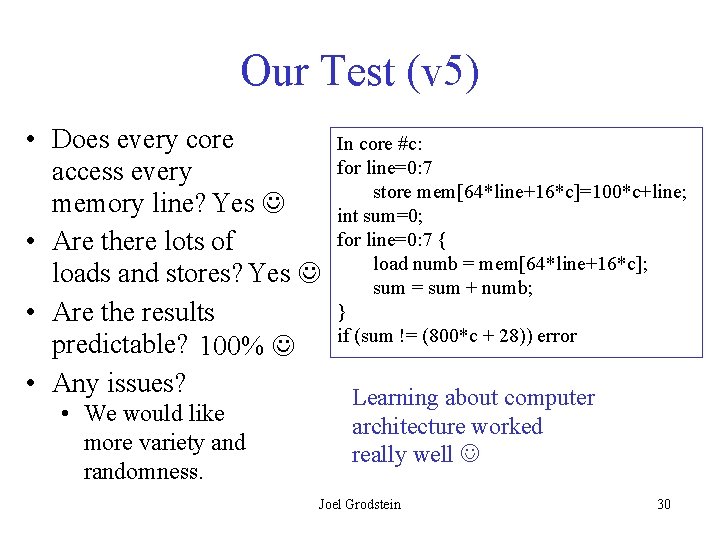

Our Test (v 5)

    In core #c:
    for line=0:7
        store mem[64*line+16*c]=100*c+line;
    int sum=0;
    for line=0:7 {
        load numb = mem[64*line+16*c];
        sum = sum + numb;
    }
    if (sum != (800*c + 28)) error

- Does every core access every memory line? Yes
- Are there lots of loads and stores? Yes
- Are the results predictable? 100%
- Any issues?
  - We would like more variety and randomness.

Learning about computer architecture worked really well.
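A software analogue of v5 can be sketched in C++ with threads standing in for cores. The slide's `mem[64*line+16*c]` is a byte address. The sketch below assumes a 4-byte `int` and 64-byte lines, so the same location becomes `int` index `16*line + 4*c`. The names `core_v5` and `run_v5`, the choice of four cores, and the use of `volatile` to keep the compiler from removing the loads and stores are all assumptions of this illustration.

```cpp
#include <cassert>
#include <thread>
#include <vector>

constexpr int kLines = 8;
constexpr int kCores = 4;
constexpr int kIntsPerLine = 16;  // 64-byte line / 4-byte int (assumed sizes)
constexpr int kIntsPerCore = 4;   // each core owns 16 bytes of every line
static_assert(sizeof(int) == 4, "sketch assumes 4-byte int");

// Line-aligned so that "line" in the code matches a real cache line.
alignas(64) volatile int mem[kLines * kIntsPerLine];

// Core #c touches every line, but only its own 16-byte slice of each.
bool core_v5(int c) {
    for (int line = 0; line < kLines; ++line)
        mem[kIntsPerLine * line + kIntsPerCore * c] = 100 * c + line;
    int sum = 0;
    for (int line = 0; line < kLines; ++line)
        sum += mem[kIntsPerLine * line + kIntsPerCore * c];
    return sum == 800 * c + 28;
}

// Runs all cores concurrently, with no lock; returns the number of failures.
int run_v5() {
    std::vector<int> ok(kCores, 0);
    std::vector<std::thread> cores;
    for (int c = 0; c < kCores; ++c)
        cores.emplace_back([&ok, c] { ok[c] = core_v5(c) ? 1 : 0; });
    for (auto &t : cores) t.join();
    int failures = 0;
    for (int v : ok) failures += (v == 0);
    return failures;
}
```

No two threads ever touch the same `int`, so the checksum is deterministic even without a lock, yet every line is written by every thread, which keeps the coherence hardware busy.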