
E-Discovery and E-Recordkeeping: The Litigation Risk Factor As A Driver of Institutional Change
Collaborative Expedition Workshop #63, National Science Foundation, Washington, D.C.
Jason R. Baron, Director of Litigation, Office of General Counsel, U.S. National Archives and Records Administration
July 18, 2007
National Archives and Records Administration

Searching Through E-Haystacks: A Big Challenge

Overview
+ Introduction: Myth, Hype, Reality – Information Retrieval and the Problem of Language
+ Case Study: U.S. v. Philip Morris
+ The TREC Legal Track: Initial Results
+ Strategic Challenges in Thinking About Search Issues Across the Enterprise
+ References

The Myth of Search & Retrieval
When lawyers request production of “all” relevant documents (and now electronically stored information, or ESI), all or substantially all will in fact be retrieved by existing manual or automated methods of search.
Corollary: in conducting automated searches, the use of “keywords” alone will reliably produce all or substantially all relevant documents from a large document collection.

The “Hype” on Search & Retrieval
Claims in the legal tech sector that a very high rate of “recall” (i.e., finding all relevant documents) is easily obtainable, provided one uses a particular software product or service.

The Reality of Search & Retrieval
+ Past research (Blair & Maron, 1985) has shown a gap between lawyers’ perceptions of their ability to ferret out relevant documents and their actual ability to do so:
-- in a 40,000-document case (350,000 pages), lawyers estimated that a manual search would find 75% of the relevant documents, when in fact the research showed that only about 20% had been found.

More Reality: IR is Hard
+ Information retrieval (IR) is a hard problem: difficult even with English-language text, and even harder with non-textual forms of ESI (audio, video, etc.) caught up in litigation.
+ A vast field of IR research exists, including fundamental concepts and terminology, to which lawyers would benefit from greater exposure.

Why is IR hard (in general)?
+ Fundamental ambiguity of language
+ Human errors
+ OCR problems
+ Non-English language texts
+ Non-textual ESI (in .wav, .mpg, .jpg formats, etc.)
+ Lack of helpful metadata

Problems of language
+ Polysemy: ambiguous terms (e.g., “George Bush,” “strike”)
+ Synonymy: variation in describing the same person or thing in a multiplicity of ways (e.g., “diplomat,” “consul,” “official,” “ambassador”)
+ Pace of change: text messaging and computer gaming as the latest examples (e.g., “POS,” “1337”)
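To make synonymy and polysemy concrete, here is a minimal Python sketch over a hypothetical five-document collection. It assumes plain word matching and a hand-built synonym table (`SYNONYMS`, hypothetical and for illustration only); real systems would draw on thesauri, taxonomies, or learned models.

```python
import re

# Hypothetical mini-collection, invented for illustration.
DOCS = {
    1: "The diplomat met the minister.",
    2: "Our consul filed the report.",
    3: "The ambassador declined comment.",
    4: "A labor strike closed the port.",
    5: "The umpire called a third strike.",
}

# Hypothetical synonym table; a real one would come from a thesaurus or taxonomy.
SYNONYMS = {"diplomat": {"diplomat", "consul", "official", "ambassador"}}


def words(text):
    """Lowercased set of alphanumeric words in a document."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def keyword_hits(docs, term):
    """Ids of documents containing the exact keyword."""
    return {doc_id for doc_id, text in docs.items() if term in words(text)}


def expanded_hits(docs, term, synonyms):
    """Ids of documents containing the keyword or any listed synonym."""
    variants = synonyms.get(term, {term})
    return {doc_id for doc_id, text in docs.items() if words(text) & variants}


print(keyword_hits(DOCS, "diplomat"), expanded_hits(DOCS, "diplomat", SYNONYMS))
print(keyword_hits(DOCS, "strike"))
```

Here the bare keyword “diplomat” finds only document 1 and misses the consul and ambassador documents (synonymy), while the expanded query finds documents 1, 2, and 3. Conversely, “strike” returns documents 4 and 5 even though only one concerns a labor dispute (polysemy); adding a broad synonym such as “official” would likewise pull in noise.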

Why is IR hard (for lawyers)?
+ Lawyers are not technically grounded
+ Traditional lawyering doesn’t emphasize front-end “process” issues that would help simplify or focus the search problem in particular contexts
+ Huge sources of heterogeneous ESI exist, presenting an array of technical issues
+ Deadlines and resource constraints
+ Failure to employ best strategic practices

Snapshot of 2007 ESI Heterogeneity
E-mail (integrated with voice mail and VoIP), word processing files (including non-English text), spreadsheets, dynamic databases, instant messaging, Web pages (including intranet sites), blogs, wikis, and RSS feeds, backup tapes, hard drives, removable media, flash drives, new storage devices, remote PDAs, and audit logs and metadata of all types.

Sedona Guideline 11 (2007)
A responding party may satisfy its good faith obligation to preserve and produce potentially relevant electronically stored information by using electronic tools and processes, such as data sampling, searching, or the use of selection criteria, to identify data reasonably likely to contain relevant information.
www.thesedonaconference.org

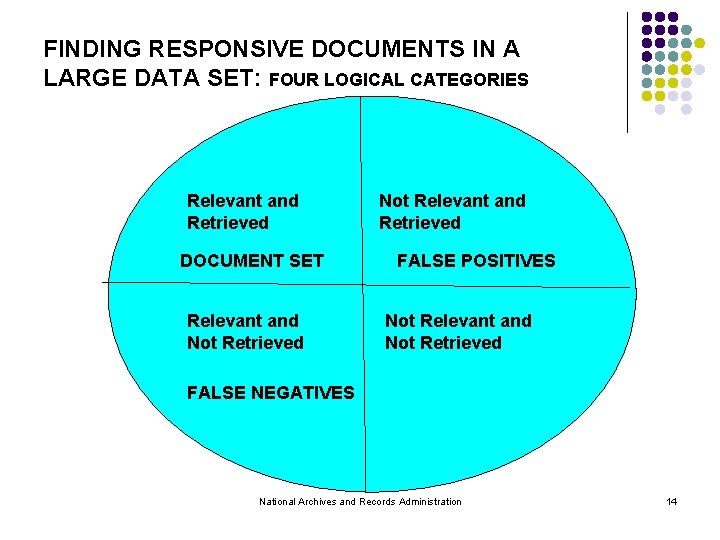

Litigation Targets
+ Defining “relevance”
+ Maximizing the number of responsive documents retrieved
+ Minimizing retrieval “noise,” i.e., false positives (non-responsive documents)

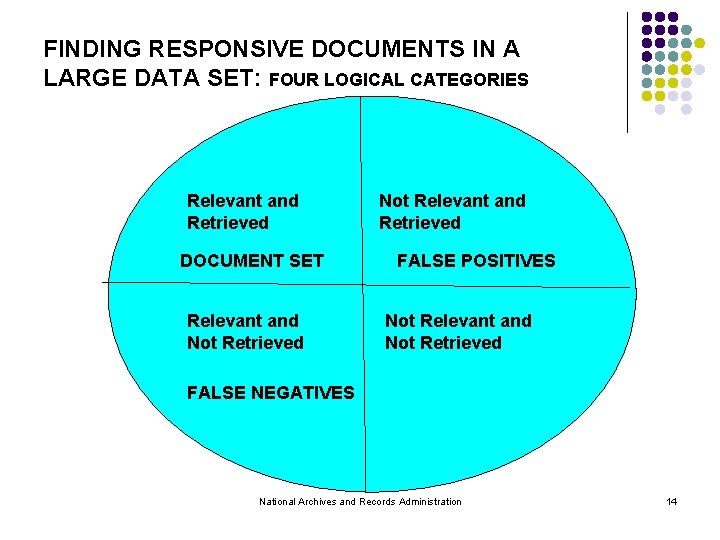

FINDING RESPONSIVE DOCUMENTS IN A LARGE DATA SET: FOUR LOGICAL CATEGORIES
Every document in the DOCUMENT SET falls into one of four categories:
+ Relevant and Retrieved
+ Relevant and Not Retrieved (FALSE NEGATIVES)
+ Not Relevant and Retrieved (FALSE POSITIVES)
+ Not Relevant and Not Retrieved
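Once relevance is known, the four categories reduce to simple set operations. A minimal Python sketch, assuming documents carry hypothetical integer ids and that relevance judgments exist for the whole collection (in real discovery they do not, which is the heart of the problem):

```python
def categorize(collection, relevant, retrieved):
    """Split document ids into the four logical categories.

    Assumes `relevant` and `retrieved` are subsets of `collection`.
    """
    collection, relevant, retrieved = set(collection), set(relevant), set(retrieved)
    return {
        "relevant_retrieved": relevant & retrieved,
        "relevant_not_retrieved": relevant - retrieved,  # false negatives
        "not_relevant_retrieved": retrieved - relevant,  # false positives
        "not_relevant_not_retrieved": collection - relevant - retrieved,
    }


# Hypothetical example: 10 documents, 4 relevant, 4 retrieved.
parts = categorize(range(1, 11), relevant={1, 2, 3, 4}, retrieved={3, 4, 5, 6})
for name, ids in parts.items():
    print(name, sorted(ids))
```

The four sets never overlap and together cover the whole collection, which is why every document lands in exactly one box.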

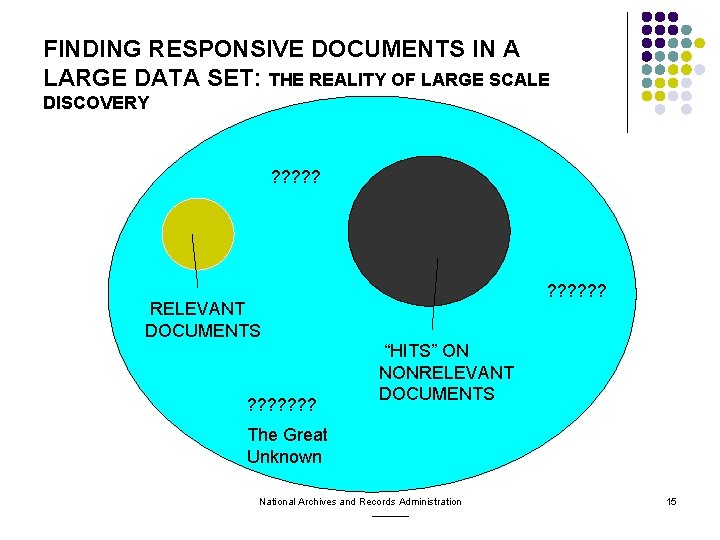

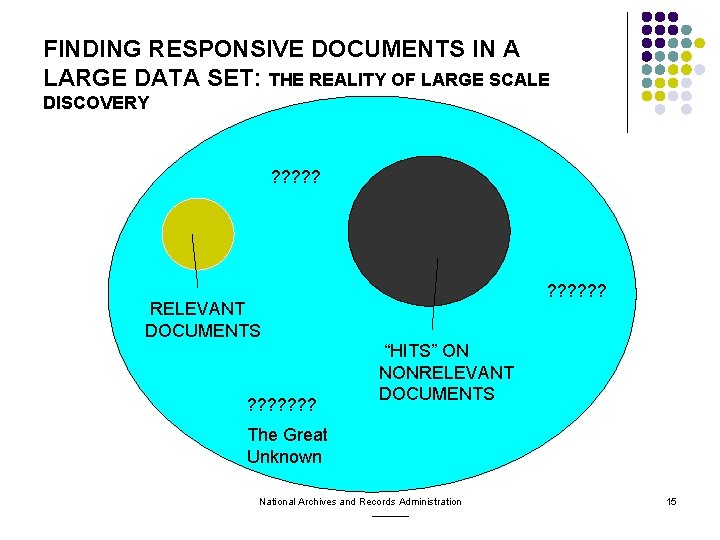

FINDING RESPONSIVE DOCUMENTS IN A LARGE DATA SET: THE REALITY OF LARGE-SCALE DISCOVERY
[Figure: relevant documents and “hits” on non-relevant documents scattered through the collection, marked with question marks and labeled “The Great Unknown”]

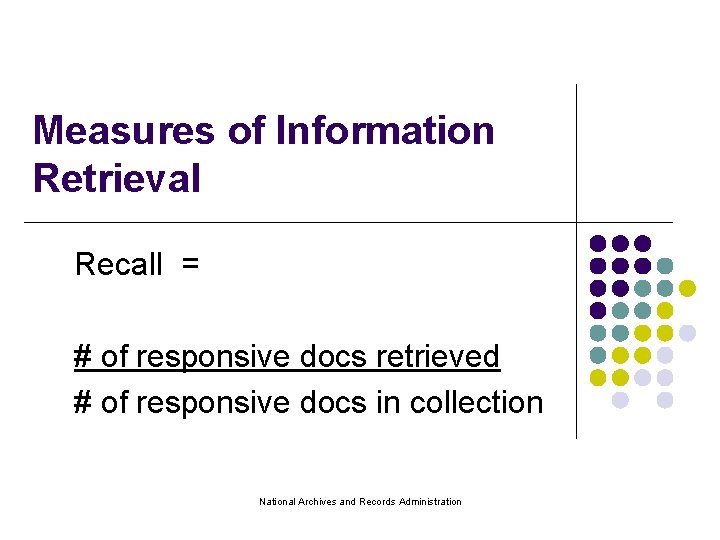

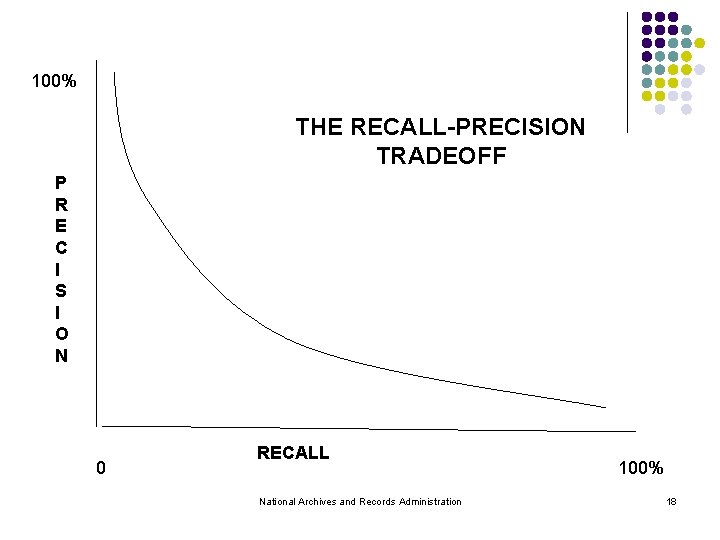

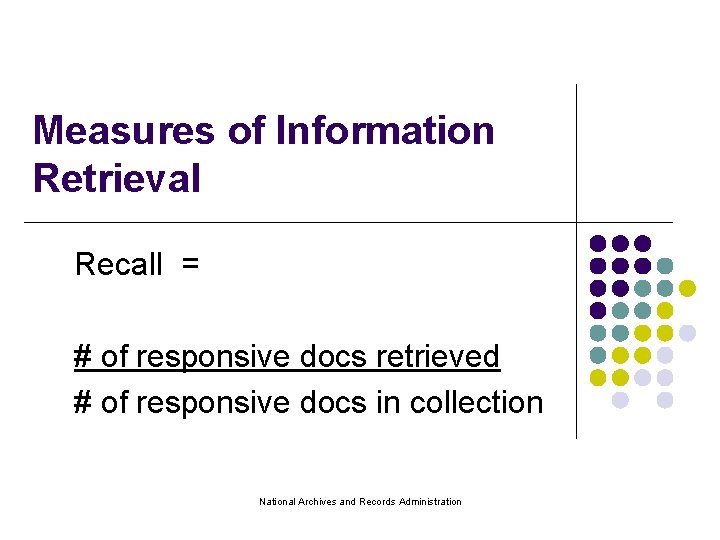

Measures of Information Retrieval
$$\text{Recall} = \frac{\text{\# of responsive docs retrieved}}{\text{\# of responsive docs in collection}}$$

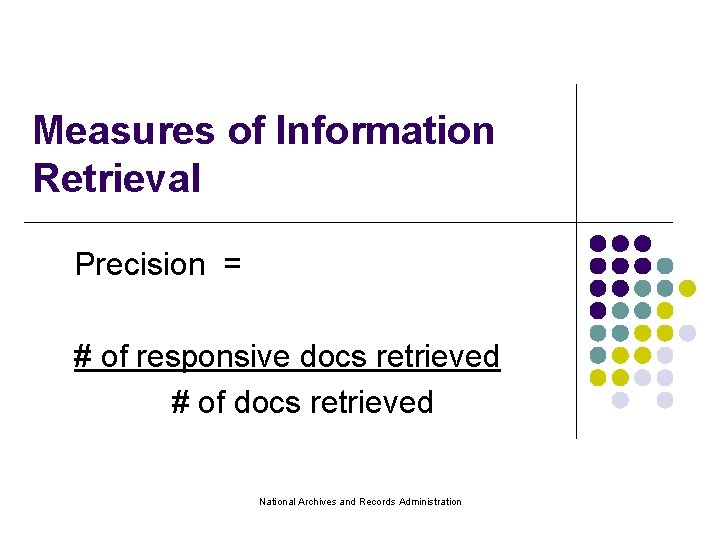

Measures of Information Retrieval
$$\text{Precision} = \frac{\text{\# of responsive docs retrieved}}{\text{\# of docs retrieved}}$$
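Both measures translate directly into code. A minimal Python sketch, assuming the full set of responsive document ids is known; for a large collection it usually is not, so the recall denominator has to be estimated. The document ids below are hypothetical.

```python
def recall(relevant, retrieved):
    """Responsive docs retrieved divided by responsive docs in the collection."""
    relevant, retrieved = set(relevant), set(retrieved)
    if not relevant:
        raise ValueError("recall is undefined when there are no responsive documents")
    return len(relevant & retrieved) / len(relevant)


def precision(relevant, retrieved):
    """Responsive docs retrieved divided by all docs retrieved."""
    relevant, retrieved = set(relevant), set(retrieved)
    if not retrieved:
        raise ValueError("precision is undefined when nothing is retrieved")
    return len(relevant & retrieved) / len(retrieved)


# Hypothetical judgments: 4 responsive documents; the search returns 8, 3 of them responsive.
RELEVANT = {1, 2, 3, 4}
RETRIEVED = {2, 3, 4, 5, 6, 7, 8, 9}
print(recall(RELEVANT, RETRIEVED), precision(RELEVANT, RETRIEVED))
```

For these hypothetical sets, recall is 3/4 = 0.75 and precision is 3/8 = 0.375: the search found most of what matters but buried it in noise.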

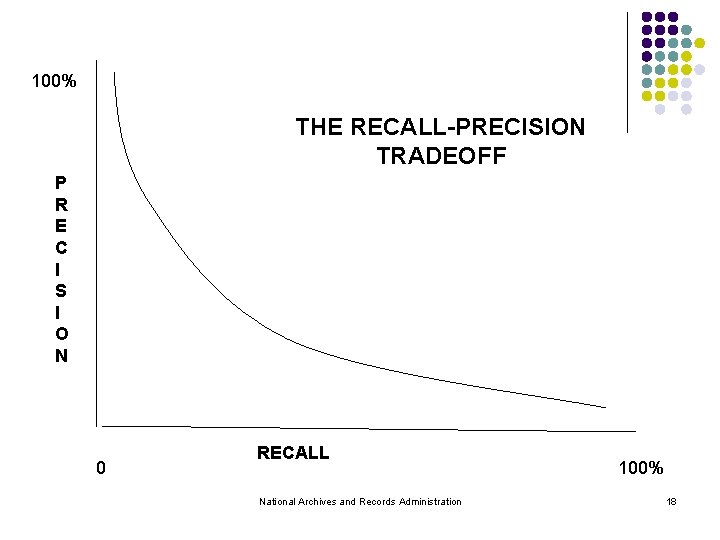

THE RECALL-PRECISION TRADEOFF
[Figure: PRECISION (0 to 100%) on the vertical axis plotted against RECALL (0 to 100%) on the horizontal axis; gains in recall tend to come at the cost of precision]
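One way to see the tradeoff is to walk down a ranked result list and compute both measures at each cutoff. A minimal Python sketch, assuming a hypothetical ranked list whose relevance labels are already known:

```python
def tradeoff_curve(ranked_relevance, total_relevant):
    """Return (k, recall, precision) for each cutoff k down a ranked result list.

    ranked_relevance: True where the document at that rank is responsive.
    total_relevant: responsive documents in the whole collection, retrieved or not.
    """
    rows = []
    hits = 0
    for k, is_relevant in enumerate(ranked_relevance, start=1):
        hits += int(is_relevant)
        rows.append((k, hits / total_relevant, hits / k))
    return rows


# Hypothetical ranking of 10 documents; 5 responsive documents exist, 4 appear in this list.
RANKED = [True, True, False, True, False, False, True, False, False, False]
for k, r, p in tradeoff_curve(RANKED, total_relevant=5):
    print(f"top {k:2d}: recall {r:.2f}  precision {p:.2f}")
```

Reviewing deeper into the list can only raise recall, while precision drifts downward as more non-responsive documents are swept in (here from 1.00 at the top document to 0.40 at ten).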

Three Questions
(1) How can one improve rates of recall and precision, so as to find a greater number of relevant documents while spending less overall time and cost sifting through noise?
(2) What alternatives to keyword searching exist?
(3) Are there ways to benchmark alternative search methodologies so as to evaluate their efficacy?

Beyond Reliance on Keywords Alone: Alternative Search Methods
+ Greater use made of Boolean strings
+ Fuzzy search models
+ Probabilistic models (Bayesian)
+ Statistical methods (clustering)
+ Machine learning approaches to semantic representation
+ Categorization tools: taxonomies and ontologies
+ Social network analysis
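As one illustration of a fuzzy search model, terms can be matched by string similarity rather than exact spelling, which helps with OCR errors. This Python sketch uses the standard library's `difflib.get_close_matches`; the vocabulary and the 0.8 cutoff are assumptions chosen for illustration, not a recommended setting or any particular product's method.

```python
import difflib

# Hypothetical index vocabulary, including OCR-style misspellings.
VOCABULARY = ["tobacco", "tobaceo", "t0bacco", "toddler"]


def fuzzy_terms(term, vocabulary, cutoff=0.8):
    """Vocabulary words whose difflib similarity ratio to `term` is at least `cutoff`, best first."""
    return difflib.get_close_matches(term.lower(), vocabulary, n=10, cutoff=cutoff)


print(fuzzy_terms("Tobacco", VOCABULARY))
```

A query for “Tobacco” then also reaches the garbled “tobaceo” and “t0bacco” while excluding “toddler”; lowering the cutoff widens the net at the cost of more noise.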

What is TREC?
+ Conference series co-sponsored by the National Institute of Standards and Technology (NIST) and the Advanced Research and Development Activity (ARDA) of the Department of Defense
+ Designed to promote research into the science of information retrieval
+ First TREC conference was in 1992
+ 15th conference held November 15-17, 2006, in Gaithersburg, Maryland (NIST headquarters)

TREC 2006 Legal Track
+ The TREC 2006 Legal Track was designed to evaluate the effectiveness of search technologies in a real-world legal context
+ First-of-its-kind study using non-proprietary data since the Blair/Maron research in 1985
+ 5 hypothetical complaints and 43 “requests to produce” drafted by Sedona Conference members
+ “Boolean negotiations” conducted as a baseline for search efforts
+ Documents to be searched were drawn from a publicly available 7-million-document tobacco litigation Master Settlement Agreement database
+ 6 participating teams submitted 33 runs. Teams consisted of: Hummingbird, National University of Singapore, Sabir Research, University of Maryland, University of Missouri-Kansas City, and York University

TREC 2006 LEGAL TRACK XML-ENCODED TOPICS WITH NEGOTIATION HISTORY – ONE EXAMPLE
<?xml version="1.0" encoding="ISO-8859-1" ?>
<Trec.Legal.Production.Request>
  <Production.Request>
    <Request.Number>6</Request.Number>
    <Request.Text>All documents discussing, referencing, or relating to company guidelines or internal approval for placement of tobacco products, logos, or signage, in television programs (network or cable), where the documents expressly refer to the programs being watched by children.</Request.Text>
    <Boolean.Query>
      <Final.Query>(guide! OR strateg! OR approval) AND (place! OR promot! OR logos OR sign! OR merchandise) AND (TV OR "T.V." OR televis! OR cable OR network) AND ((watch! OR view!) W/5 (child! OR teen! OR juvenile OR kid! OR adolescent!))</Final.Query>
      <Negotiation.History>
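To show how a negotiated Boolean string like Request 6's final query behaves, here is a small Python sketch that evaluates it against plain text. It assumes common Lexis-style semantics, with `!` as a root expander (prefix match) and `W/5` as "within five words, in either order"; the tokenizer is deliberately crude, and the sample sentences are invented.

```python
import re

# Term groups from the Request 6 final query; "!" marks a root expander (assumed prefix match).
GUIDE = ["guide!", "strateg!", "approval"]
PLACE = ["place!", "promot!", "logos", "sign!", "merchandise"]
TV = ["TV", "T.V.", "televis!", "cable", "network"]
WATCH = ["watch!", "view!"]
CHILD = ["child!", "teen!", "juvenile", "kid!", "adolescent!"]


def tokenize(text):
    """Lowercase tokens; periods inside a token (as in "t.v") are kept, edge periods dropped."""
    raw = re.findall(r"[a-z0-9.]+", text.lower())
    return [tok.strip(".") for tok in raw if tok.strip(".")]


def matches(token, term):
    """Prefix match for root-expanded terms ending in '!', exact match otherwise."""
    term = term.lower().strip(".")
    if term.endswith("!"):
        return token.startswith(term[:-1])
    return token == term


def positions(tokens, terms):
    """Token positions matching any term in an OR group."""
    return [i for i, tok in enumerate(tokens) if any(matches(tok, t) for t in terms)]


def within(tokens, left, right, n):
    """W/n: some left-group match lies within n words of some right-group match."""
    return any(abs(i - j) <= n
               for i in positions(tokens, left)
               for j in positions(tokens, right))


def request_6(text):
    """Evaluate (GUIDE) AND (PLACE) AND (TV) AND ((WATCH) W/5 (CHILD))."""
    tokens = tokenize(text)
    return (bool(positions(tokens, GUIDE))
            and bool(positions(tokens, PLACE))
            and bool(positions(tokens, TV))
            and within(tokens, WATCH, CHILD, 5))


print(request_6("Marketing guidelines on placement of logos in television programs often viewed by children."))
print(request_6("Strategy memo on sign placement for cable network ads."))
```

The first invented sentence satisfies every clause; the second has no language about children viewing, so the W/5 clause fails. Note also that `sign!` matches “significant,” a reminder that root expanders buy recall at the price of false positives.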

TREC Legal Track 2006: Percentage of Unique Documents By Topic Found By Boolean, Expert Searcher, and Other Combined Methods of Search

TREC Legal Track 2006: Sort by Increasing Percentage of Unique Documents Per Topic Found By Combined Methods Other Than A Baseline Boolean Search

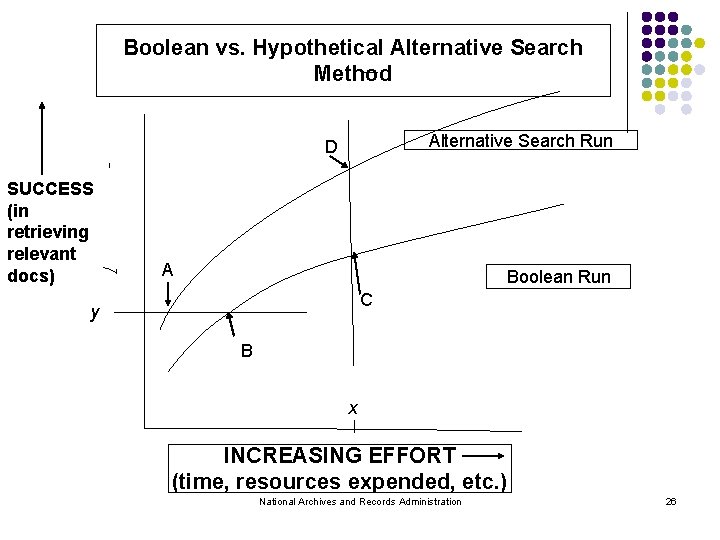

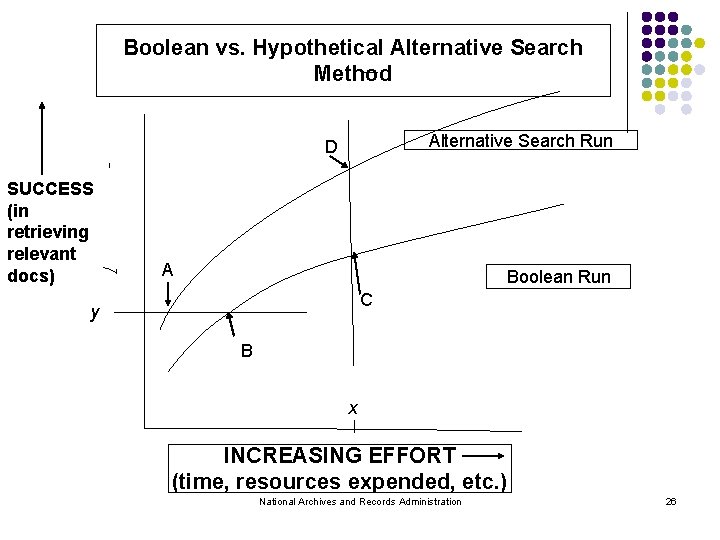

Boolean vs. Hypothetical Alternative Search Method
[Figure: SUCCESS (in retrieving relevant docs) plotted against INCREASING EFFORT (time, resources expended, etc.), comparing a Boolean run with an alternative search run; labeled points A through D and gaps x and y mark the comparison]

Managing Litigation Risk
↑ Success per amount of effort = ↓ Litigation Risk

Strategic Challenges
Convincing lawyers and judges that automated searches are not just desirable but necessary in response to large e-discovery demands.

Challenges (cont’d)
Having all parties and adjudicators understand that the use of automated methods does not guarantee that all responsive documents will be identified in a large data collection.

Challenges (cont’d)
Designing an overall review process that maximizes the potential to find responsive documents in a large data collection (no matter which search tool is used), and using sampling and other analytic techniques to test hypotheses early on.
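Sampling can also put a rough number on the “great unknown.” A minimal Python sketch of one simple approach (a swapped-in illustration, not a protocol taken from this presentation): review a random sample from the retrieved set and from the set left behind, scale each sample's responsive rate up to its pile, and estimate recall as found / (found + missed). The `reviewer` function and the 1,000-document collection are hypothetical.

```python
import random


def estimate_recall(retrieved, not_retrieved, is_relevant, sample_size, seed=0):
    """Estimate recall from reviewed random samples of the retrieved and unretrieved piles.

    is_relevant: hypothetical stand-in for a human reviewer's call on one document.
    Simple proportional estimate only; no confidence interval is computed.
    """
    rng = random.Random(seed)

    def estimated_relevant(pile):
        pile = list(pile)
        if not pile:
            return 0.0
        sample = rng.sample(pile, min(sample_size, len(pile)))
        rate = sum(1 for doc in sample if is_relevant(doc)) / len(sample)
        return rate * len(pile)  # scale the sample rate up to the whole pile

    found = estimated_relevant(retrieved)
    missed = estimated_relevant(not_retrieved)
    if found + missed == 0:
        raise ValueError("no responsive documents seen in either sample")
    return found / (found + missed)


# Hypothetical collection of 1,000 ids in which every tenth document is responsive.
def reviewer(doc_id):
    return doc_id % 10 == 0


RETRIEVED = range(0, 500)
NOT_RETRIEVED = range(500, 1000)
print(estimate_recall(RETRIEVED, NOT_RETRIEVED, reviewer, sample_size=100))
```

Because the rates come from samples, the result is only an estimate (the true recall in this toy setup is 0.5); a real protocol would size the samples deliberately and report a confidence interval.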

Challenges (cont’d)
Parties making a good-faith attempt to collaborate on the use of particular search methods, including holding multiple “meet and confers” as necessary based on initial sampling or surveying of retrieved ESI, whatever methods are used.

Challenges (cont’d)
Being open to using new and evolving search and information retrieval methods and tools.

Leading U.S. Case Precedent on Automated Searches
-- Current cases emphasize parties’ discussing proposed keyword searches under the rubric of coming up with a “search protocol.” Treppel v. Biovail, 233 F.R.D. 363 (S.D.N.Y. 2006)
-- First case discussing parties’ employing or relying on concept searches as an alternative search methodology. Disability Rights Council v. WMATA, 2007 WL 1585452 (June 1, 2007)

Future Research
+ TREC 2007 Legal Track
+ The Sedona Conference Search & Retrieval Commentary & additional activities

References
+ J. Baron, “Toward A Federal Benchmarking Standard for Evaluating Information Retrieval Products Used in E-Discovery,” 6 Sedona Conference Journal 237 (2005) (available on Westlaw and LEXIS)
+ J. Baron, “Information Inflation: Can The Legal System Adapt?” (with co-author George L. Paul), 13 Richmond J. Law & Technology (2007), vol. 3, article 10 (available online at http://law.richmond.edu/jolt/index.asp)

+ J. Baron, D. Oard, and D. Lewis, “TREC 2006 Legal Track Overview,” available at http://trec.nist.gov/pubs/trec15/t15_proceedings.html (document 3)
+ The Sedona Conference, Best Practices Commentary on The Use of Search & Retrieval Methods in E-Discovery (forthcoming 2007)
+ TREC 2007 Legal Track Home Page, see http://trec-legal.umiacs.umd.edu/

+ ICAIL 2007 (International Conference on Artificial Intelligence and the Law), Workshop on Supporting Search and Sensemaking for ESI in Discovery Proceedings, see http://www.umiacs.umd.edu/~oard/desi-ws/
+ See also J. Baron and P. Thompson, “The Search Problem Posed By Large Heterogeneous Data Sets in Litigation: Possible Future Approaches to Research,” ICAIL 2007 Conference Paper, June 4-8, 2007, available at http://www.umiacs.umd.edu/~oard/desi-ws/ (click link to conference paper).

Jason R. Baron
Director of Litigation
Office of General Counsel
National Archives and Records Administration
8601 Adelphi Road, Suite 3110
College Park, MD 20740
(301) 837-1499
Email: jason.baron@nara.gov