ECE 8443 Pattern Recognition LECTURE 14 SUFFICIENT STATISTICS

- Slides: 7

ECE 8443 – Pattern Recognition LECTURE 14: SUFFICIENT STATISTICS

- Objectives:
  - Sufficient Statistics
  - Dimensionality
  - Complexity
  - Overfitting
- Resources:
  - DHS – Chap. 3 (Part 2)
  - Rice – Sufficient Statistics
  - Ellem – Sufficient Statistics
  - TAMU – Dimensionality
- URL: .../publications/courses/ece_8443/lectures/current/lecture_14.ppt

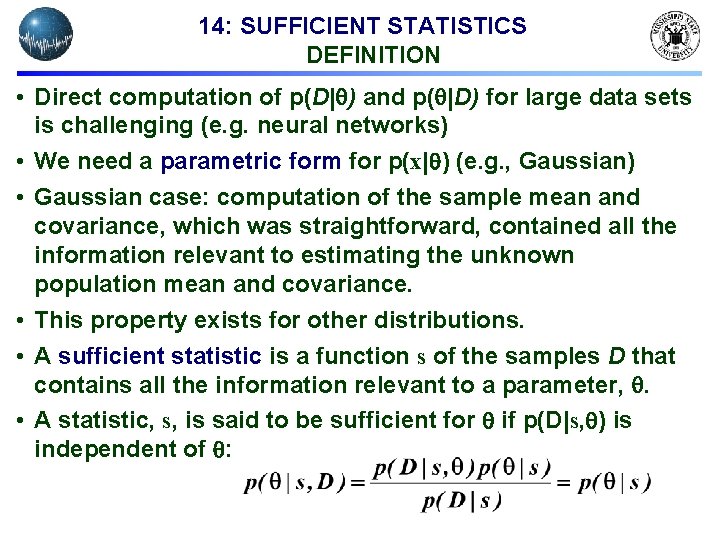

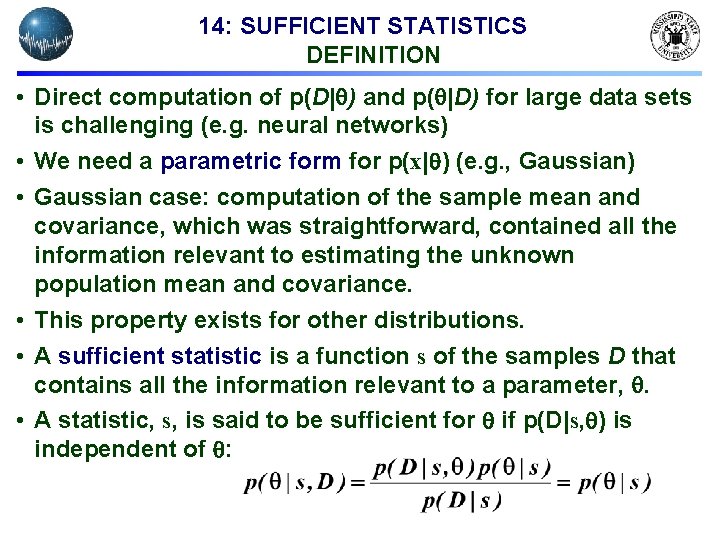

14: SUFFICIENT STATISTICS DEFINITION
- Direct computation of $p(\mathcal{D}\mid\boldsymbol{\theta})$ and $p(\boldsymbol{\theta}\mid\mathcal{D})$ for large data sets is challenging (e.g., neural networks).
- We need a parametric form for $p(\mathbf{x}\mid\boldsymbol{\theta})$ (e.g., Gaussian).
- Gaussian case: the sample mean and covariance, which were straightforward to compute, contained all the information relevant to estimating the unknown population mean and covariance.
- This property also holds for other distributions.
- A sufficient statistic is a function $\mathbf{s}$ of the samples $\mathcal{D}$ that contains all the information relevant to estimating a parameter $\boldsymbol{\theta}$.
- A statistic $\mathbf{s}$ is said to be sufficient for $\boldsymbol{\theta}$ if $p(\mathcal{D}\mid\mathbf{s},\boldsymbol{\theta})$ is independent of $\boldsymbol{\theta}$:

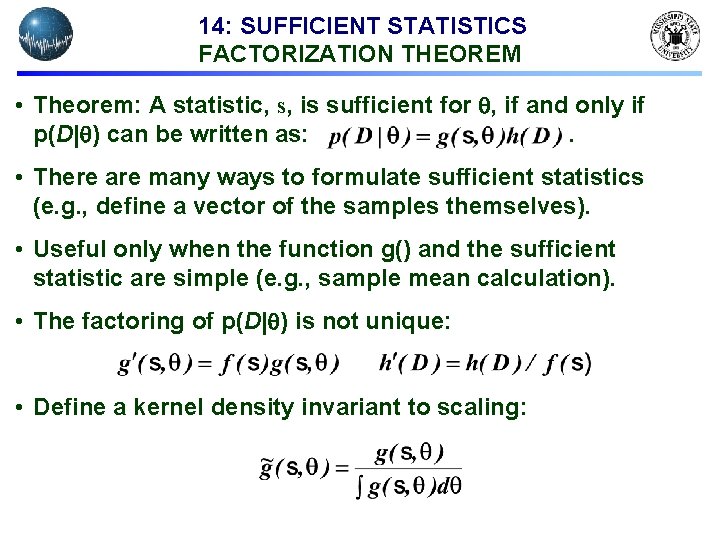

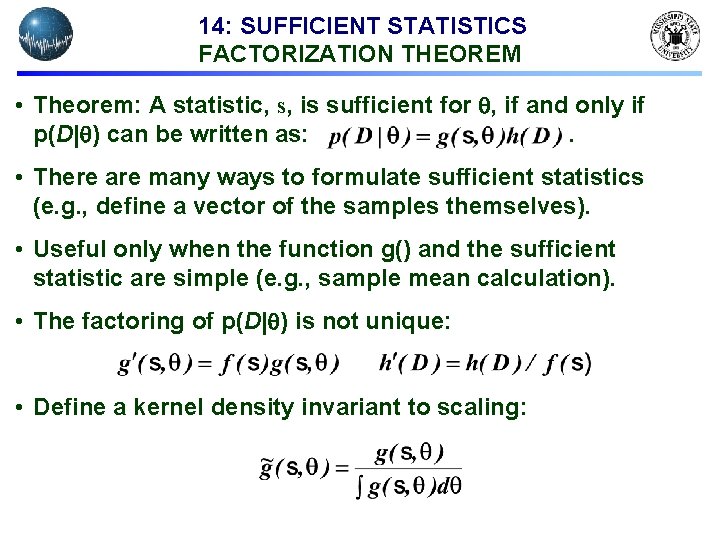
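The definition can be checked numerically on a simple model. The sketch below assumes i.i.d. Bernoulli samples (a model chosen here for illustration, not taken from the slide) with $\mathbf{s}$ equal to the number of ones. Because $\mathbf{s}$ is a function of $\mathcal{D}$, $p(\mathcal{D}\mid\mathbf{s},\boldsymbol{\theta}) = p(\mathcal{D}\mid\boldsymbol{\theta})/p(\mathbf{s}\mid\boldsymbol{\theta})$, and the printed value should not change with $\theta$. The data and helper names are hypothetical.

```python
from math import comb, prod


def likelihood(data, theta):
    """p(D | theta) for i.i.d. Bernoulli samples coded as 0/1."""
    return prod(theta if x == 1 else 1.0 - theta for x in data)


def prob_of_statistic(n, s, theta):
    """p(s | theta): binomial probability that n trials contain s ones."""
    return comb(n, s) * theta**s * (1.0 - theta) ** (n - s)


def conditional_given_s(data, theta):
    """p(D | s, theta) = p(D | theta) / p(s | theta), because s is a function of D."""
    s = sum(data)
    return likelihood(data, theta) / prob_of_statistic(len(data), s, theta)


# Hypothetical sample: n = 8 trials, s = 4 ones.
data = [1, 0, 1, 1, 0, 0, 1, 0]
for theta in (0.1, 0.3, 0.5, 0.9):
    # Same value (1 / C(8, 4) = 1/70) for every theta, up to rounding.
    print(theta, conditional_given_s(data, theta))
```

Every ordering of the 0/1 samples with the same count is equally likely given $\mathbf{s}$, which is why the conditional collapses to $1/\binom{n}{s}$ and carries no information about $\theta$.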

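14: SUFFICIENT STATISTICS FACTORIZATION THEOREM
- Theorem: A statistic $\mathbf{s}$ is sufficient for $\boldsymbol{\theta}$ if and only if $p(\mathcal{D}\mid\boldsymbol{\theta})$ can be written as $p(\mathcal{D}\mid\boldsymbol{\theta}) = g(\mathbf{s},\boldsymbol{\theta})\,h(\mathcal{D})$.
- There are many ways to formulate sufficient statistics (e.g., define a vector of the samples themselves).
- The theorem is useful only when the function $g(\cdot)$ and the sufficient statistic are simple (e.g., the sample mean).
- The factoring of $p(\mathcal{D}\mid\boldsymbol{\theta})$ is not unique: for any nonzero function $f(\mathbf{s})$, $g'(\mathbf{s},\boldsymbol{\theta}) = f(\mathbf{s})\,g(\mathbf{s},\boldsymbol{\theta})$ and $h'(\mathcal{D}) = h(\mathcal{D})/f(\mathbf{s})$ are equally valid factors.
- Define a kernel density invariant to this scaling: $\bar{g}(\mathbf{s},\boldsymbol{\theta}) = \dfrac{g(\mathbf{s},\boldsymbol{\theta})}{\int g(\mathbf{s},\boldsymbol{\theta}')\,d\boldsymbol{\theta}'}$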

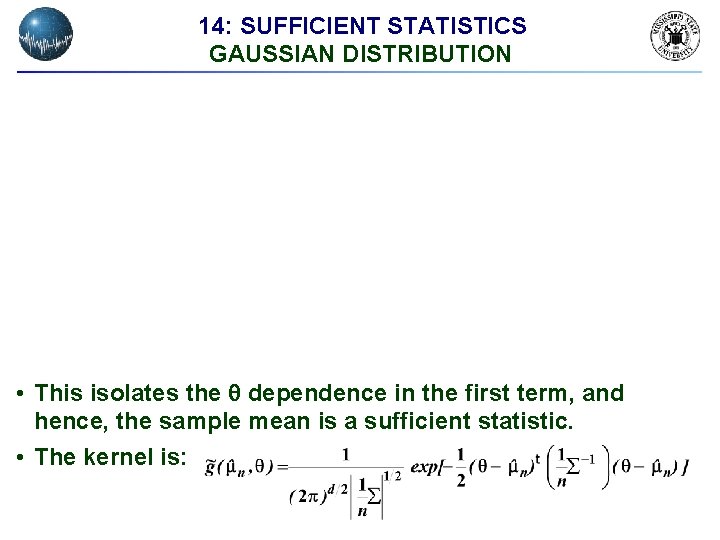

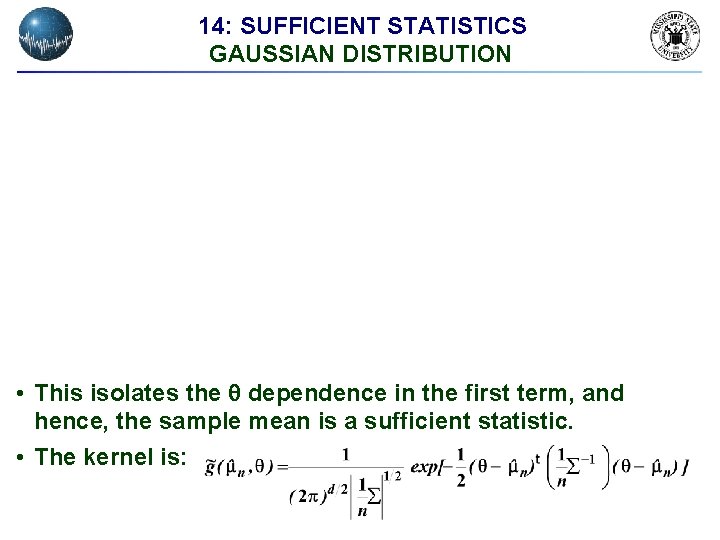
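The scaling invariance of the kernel density can be sketched numerically. The example below assumes Bernoulli samples, for which $g(s,\theta) = \theta^s(1-\theta)^{n-s}$ with $h(\mathcal{D}) = 1$, and uses $f(s) = 1 + s^2$ as an arbitrary, hypothetical rescaling. Both factors are normalized over $\theta$ with a trapezoid rule on a grid (a numerical shortcut chosen here, not part of the lecture). For this model the kernel should equal the Beta$(s+1, n-s+1)$ density, $(n+1)\binom{n}{s}\theta^s(1-\theta)^{n-s}$.

```python
from math import comb


def g(s, n, theta):
    """One valid factor g(s, theta) for n Bernoulli samples with s ones (h(D) = 1)."""
    return theta**s * (1.0 - theta) ** (n - s)


def g_scaled(s, n, theta):
    """Another valid factor f(s) * g(s, theta); f(s) = 1 + s**2 is a hypothetical choice."""
    return (1 + s**2) * g(s, n, theta)


def kernel(factor, s, n, grid):
    """Normalize factor(s, n, theta) over theta using the trapezoid rule on a uniform grid."""
    values = [factor(s, n, t) for t in grid]
    step = grid[1] - grid[0]
    area = step * (sum(values) - 0.5 * (values[0] + values[-1]))
    return [v / area for v in values]


n, s = 10, 3
grid = [i / 2000 for i in range(2001)]  # uniform grid on [0, 1]
k1 = kernel(g, s, n, grid)
k2 = kernel(g_scaled, s, n, grid)

# Both factorizations yield the same kernel density (difference at rounding level).
print(max(abs(x - y) for x, y in zip(k1, k2)))

# For this model the kernel matches the Beta(s+1, n-s+1) density.
exact = [(n + 1) * comb(n, s) * g(s, n, t) for t in grid]
print(max(abs(x - y) for x, y in zip(k1, exact)))
```

Any constant in $\boldsymbol{\theta}$ that is pushed into $g$ is divided out again by the normalizing integral, which is why $\bar{g}$ does not depend on how the factorization was chosen.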

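14: SUFFICIENT STATISTICS GAUSSIAN DISTRIBUTION
- For a Gaussian with known covariance $\Sigma$ and unknown mean $\boldsymbol{\theta}$, the likelihood factors as $p(\mathcal{D}\mid\boldsymbol{\theta}) = \exp\left[-\frac{n}{2}\left(\boldsymbol{\theta}^t\Sigma^{-1}\boldsymbol{\theta} - 2\boldsymbol{\theta}^t\Sigma^{-1}\hat{\boldsymbol{\mu}}_n\right)\right]\cdot\frac{1}{(2\pi)^{nd/2}|\Sigma|^{n/2}}\exp\left[-\frac{1}{2}\sum_{k=1}^{n}\mathbf{x}_k^t\Sigma^{-1}\mathbf{x}_k\right]$, where $\hat{\boldsymbol{\mu}}_n = \frac{1}{n}\sum_{k=1}^{n}\mathbf{x}_k$.
- This isolates the dependence on $\boldsymbol{\theta}$ in the first term; hence, the sample mean $\hat{\boldsymbol{\mu}}_n$ is a sufficient statistic for $\boldsymbol{\theta}$.
- The kernel is $\bar{g}(\hat{\boldsymbol{\mu}}_n,\boldsymbol{\theta}) = \frac{1}{(2\pi)^{d/2}|\Sigma/n|^{1/2}}\exp\left[-\frac{1}{2}(\boldsymbol{\theta}-\hat{\boldsymbol{\mu}}_n)^t(\Sigma/n)^{-1}(\boldsymbol{\theta}-\hat{\boldsymbol{\mu}}_n)\right]$, i.e., a normal density in $\boldsymbol{\theta}$ with mean $\hat{\boldsymbol{\mu}}_n$ and covariance $\Sigma/n$: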

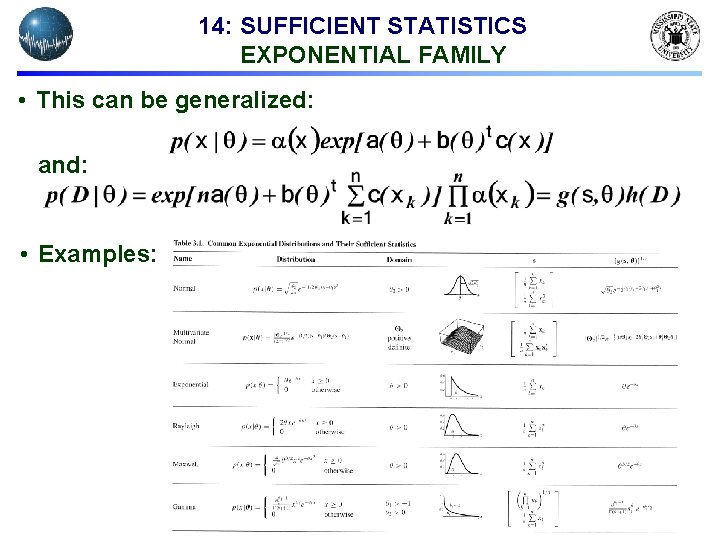

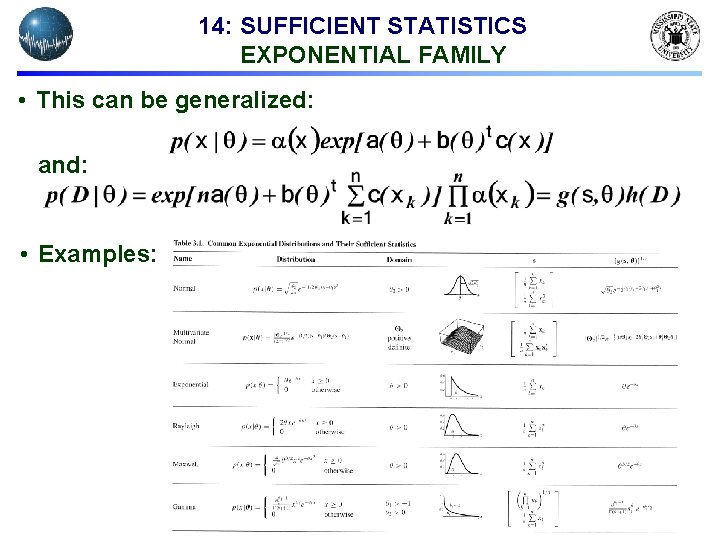
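A minimal sketch of the Gaussian factorization in the univariate case ($d = 1$), assuming a known standard deviation $\sigma$ and hypothetical data. It checks that $\log g + \log h$ reproduces the directly computed log-likelihood, and that the kernel $N(\hat{\mu}_n, \sigma^2/n)$ is proportional to $g$ as a function of $\mu$ (the ratio is constant), which is what normalizing $g$ over $\mu$ should give.

```python
import math


def log_likelihood(data, mu, sigma):
    """log p(D | mu) for i.i.d. N(mu, sigma^2) samples, computed term by term."""
    n = len(data)
    return (-0.5 * n * math.log(2 * math.pi * sigma**2)
            - sum((x - mu) ** 2 for x in data) / (2 * sigma**2))


def log_g(mean, n, mu, sigma):
    """log g(s, mu): the only mu-dependent factor; it sees the data only through the sample mean."""
    return -n * (mu**2 - 2 * mu * mean) / (2 * sigma**2)


def log_h(data, sigma):
    """log h(D): depends on the data but not on mu."""
    n = len(data)
    return (-0.5 * n * math.log(2 * math.pi * sigma**2)
            - sum(x * x for x in data) / (2 * sigma**2))


def kernel(mean, n, mu, sigma):
    """Kernel density: g normalized over mu, i.e. N(mu; mean, sigma^2 / n)."""
    var = sigma**2 / n
    return math.exp(-(mu - mean) ** 2 / (2 * var)) / math.sqrt(2 * math.pi * var)


data = [2.1, 1.7, 2.9, 2.4, 1.5]  # hypothetical samples
sigma = 0.8                       # assumed known standard deviation
n = len(data)
mean = sum(data) / n              # sufficient statistic

mus = (0.0, 1.0, 2.0, 3.5)
for mu in mus:
    direct = log_likelihood(data, mu, sigma)
    factored = log_g(mean, n, mu, sigma) + log_h(data, sigma)
    print(mu, direct, factored)  # the two columns agree up to rounding

# kernel / g is the same for every mu, so the kernel is g rescaled to integrate to 1.
ratios = [kernel(mean, n, mu, sigma) / math.exp(log_g(mean, n, mu, sigma)) for mu in mus]
print(ratios)
```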

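14: SUFFICIENT STATISTICS EXPONENTIAL FAMILY
- This can be generalized to any member of the exponential family, $p(\mathbf{x}\mid\boldsymbol{\theta}) = \alpha(\mathbf{x})\exp\left[a(\boldsymbol{\theta}) + \mathbf{b}(\boldsymbol{\theta})^t\mathbf{c}(\mathbf{x})\right]$, for which $\mathbf{s} = \frac{1}{n}\sum_{k=1}^{n}\mathbf{c}(\mathbf{x}_k)$ is sufficient, with $g(\mathbf{s},\boldsymbol{\theta}) = \exp\left[n\left\{a(\boldsymbol{\theta}) + \mathbf{b}(\boldsymbol{\theta})^t\mathbf{s}\right\}\right]$ and $h(\mathcal{D}) = \prod_{k=1}^{n}\alpha(\mathbf{x}_k)$.
- Examples: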

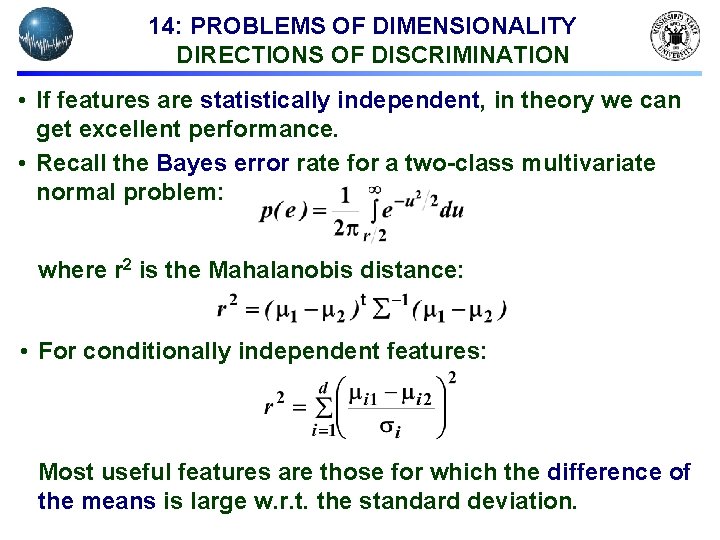

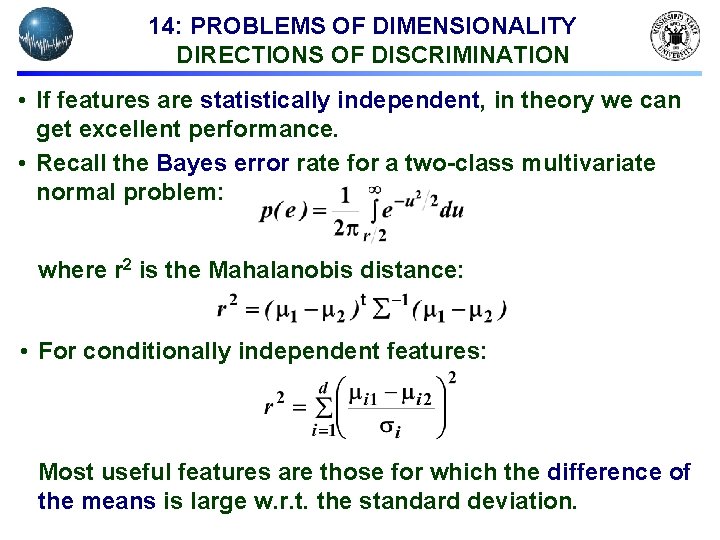
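The exponential-family factorization can be sketched for one concrete member. The Poisson distribution is our choice here (not necessarily one of the slide's examples): $\alpha(x) = 1/x!$, $a(\theta) = -\theta$, $b(\theta) = \ln\theta$, $c(x) = x$, so $s$ is the sample mean of the counts. The sketch checks that $\log g(s,\theta) + \log h(\mathcal{D})$ equals the log-likelihood summed directly from the pmf; the counts are hypothetical.

```python
import math


# Poisson(theta) in exponential-family form:
# p(x | theta) = alpha(x) * exp[a(theta) + b(theta) * c(x)]
def alpha(x):
    return 1.0 / math.factorial(x)


def a(theta):
    return -theta


def b(theta):
    return math.log(theta)


def c(x):
    return x


def log_likelihood_direct(data, theta):
    """log p(D | theta) summed term by term from the Poisson pmf."""
    return sum(x * math.log(theta) - theta - math.lgamma(x + 1) for x in data)


def log_likelihood_factored(data, theta):
    """log g(s, theta) + log h(D); only the statistic s enters the theta-dependent part."""
    n = len(data)
    s = sum(c(x) for x in data) / n                # sufficient statistic
    log_g = n * (a(theta) + b(theta) * s)          # g = exp[n {a(theta) + b(theta) s}]
    log_h = sum(math.log(alpha(x)) for x in data)  # h = prod alpha(x_k)
    return log_g + log_h


counts = [3, 0, 2, 5, 1, 4]  # hypothetical observed counts
for theta in (0.5, 2.0, 7.3):
    print(theta, log_likelihood_direct(counts, theta), log_likelihood_factored(counts, theta))
```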

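14: PROBLEMS OF DIMENSIONALITY DIRECTIONS OF DISCRIMINATION
- If features are statistically independent, in theory we can get excellent performance.
- Recall the Bayes error rate for a two-class multivariate normal problem with equal priors and a common covariance, $p(\mathbf{x}\mid\omega_j) \sim N(\boldsymbol{\mu}_j,\Sigma)$, $j = 1, 2$: $P(e) = \frac{1}{\sqrt{2\pi}}\int_{r/2}^{\infty} e^{-u^2/2}\,du$, where $r^2$ is the Mahalanobis distance: $r^2 = (\boldsymbol{\mu}_1-\boldsymbol{\mu}_2)^t\Sigma^{-1}(\boldsymbol{\mu}_1-\boldsymbol{\mu}_2)$.
- For conditionally independent features, $\Sigma = \mathrm{diag}(\sigma_1^2,\ldots,\sigma_d^2)$ and $r^2 = \sum_{i=1}^{d}\left(\frac{\mu_{i1}-\mu_{i2}}{\sigma_i}\right)^2$. Each feature with $\mu_{i1} \neq \mu_{i2}$ increases $r$ and therefore decreases $P(e)$; the most useful features are those for which the difference of the means is large with respect to the standard deviation.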

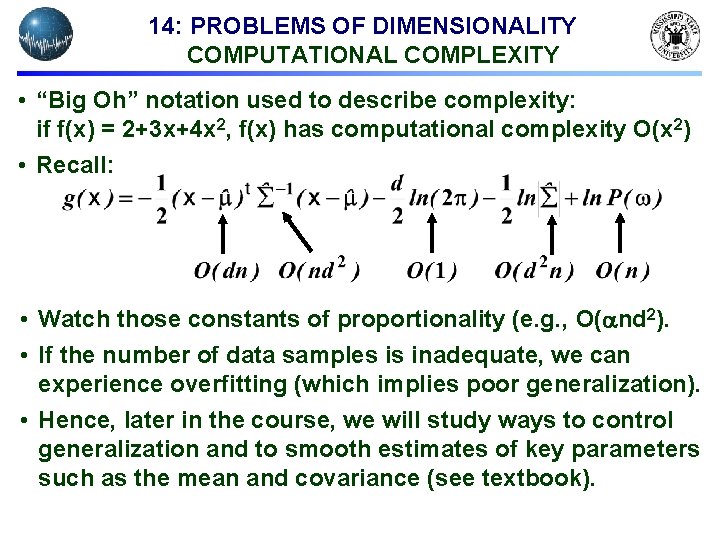

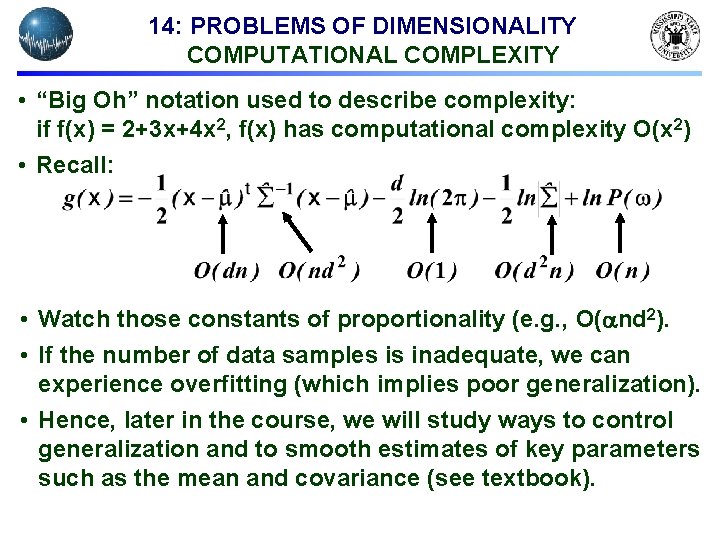
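The error formula above can be evaluated directly, since $\frac{1}{\sqrt{2\pi}}\int_{r/2}^{\infty} e^{-u^2/2}\,du = \tfrac{1}{2}\operatorname{erfc}\!\left(r/(2\sqrt{2})\right)$. This sketch assumes independent features with hypothetical means and unit standard deviations, and shows $P(e)$ falling as features are added one at a time.

```python
import math


def bayes_error(r):
    """P(e) = (1/sqrt(2*pi)) * integral_{r/2}^inf exp(-u^2/2) du = 0.5 * erfc(r / (2*sqrt(2)))."""
    return 0.5 * math.erfc(r / (2.0 * math.sqrt(2.0)))


def mahalanobis_independent(mu1, mu2, sigma):
    """r for a diagonal covariance: r^2 = sum_i ((mu_i1 - mu_i2) / sigma_i)^2."""
    return math.sqrt(sum(((m1 - m2) / s) ** 2 for m1, m2, s in zip(mu1, mu2, sigma)))


# Hypothetical class means and per-feature standard deviations.
mu1 = [0.0, 0.0, 0.0, 0.0]
mu2 = [1.0, 0.5, 2.0, 0.1]
sigma = [1.0, 1.0, 1.0, 1.0]

for d in range(1, len(mu1) + 1):
    r = mahalanobis_independent(mu1[:d], mu2[:d], sigma[:d])
    print(d, round(r, 3), round(bayes_error(r), 4))
```

The third feature (mean difference of two standard deviations) cuts the error sharply, while the fourth (0.1 standard deviations) barely moves it, matching the point that separation relative to $\sigma_i$ is what matters.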

14: PROBLEMS OF DIMENSIONALITY COMPUTATIONAL COMPLEXITY
- "Big Oh" notation is used to describe complexity: if $f(x) = 2 + 3x + 4x^2$, $f(x)$ has computational complexity $O(x^2)$.
- Recall:
- Watch those constants of proportionality (e.g., $O(nd^2)$).
- If the number of data samples is inadequate, we can experience overfitting (which implies poor generalization).
- Hence, later in the course, we will study ways to control generalization and to smooth estimates of key parameters such as the mean and covariance (see textbook).
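The $O(nd^2)$ cost of estimating a covariance matrix can be made concrete by counting operations. This is a minimal, unoptimized sketch (plain Python, hypothetical random data, our own `sample_covariance` helper) that counts the multiply-adds in the outer-product accumulation; with $n$ fixed, doubling $d$ quadruples the count.

```python
import random


def sample_covariance(samples):
    """ML (divide-by-n) covariance estimate; also counts multiply-adds in the outer-product loop."""
    n, d = len(samples), len(samples[0])
    mean = [sum(x[j] for x in samples) / n for j in range(d)]  # O(nd) pass for the mean
    cov = [[0.0] * d for _ in range(d)]
    ops = 0
    for x in samples:  # n samples
        diff = [x[j] - mean[j] for j in range(d)]
        for i in range(d):  # d * d updates per sample -> O(n d^2) overall
            for j in range(d):
                cov[i][j] += diff[i] * diff[j]
                ops += 1
    cov = [[v / n for v in row] for row in cov]
    return cov, ops


random.seed(0)
n = 100
counts = {}
for d in (5, 10, 20):
    samples = [[random.gauss(0.0, 1.0) for _ in range(d)] for _ in range(n)]
    _, counts[d] = sample_covariance(samples)
    print(d, counts[d])  # 2500, 10000, 40000: doubling d quadruples the work
```

Exploiting symmetry (updating only entries with $i \le j$) would cut the count to $n\,d(d+1)/2$, which changes the constant of proportionality but not the $O(nd^2)$ order.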