ECE 6554 Advanced Computer Vision, Spring 2017: Self-supervision


- Slides: 43

ECE 6554: Advanced Computer Vision, Spring 2017. Self-supervision, or Unsupervised Learning of Visual Representation. Badour Al. Bahar

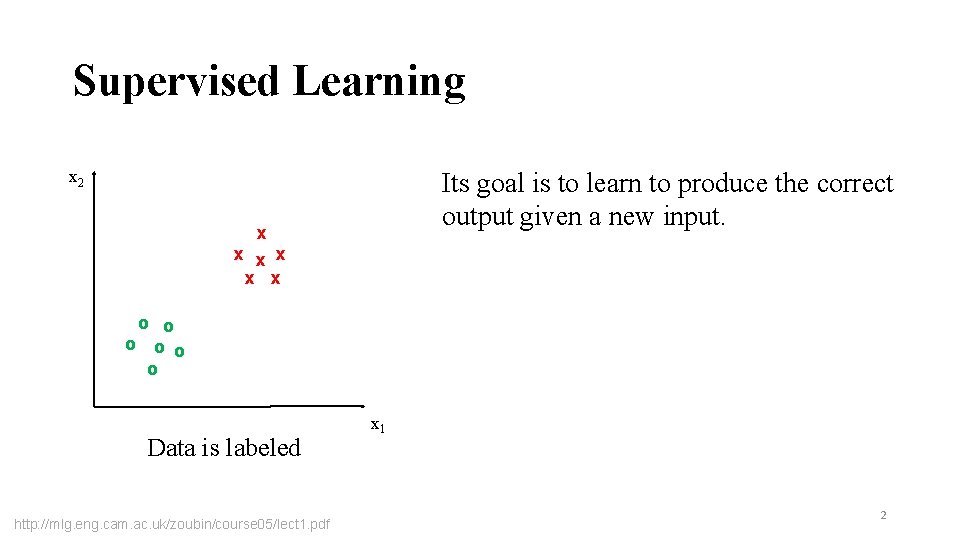

Supervised Learning: Its goal is to learn to produce the correct output given a new input. Data is labeled. (Figure: labeled points from two classes, x and o, plotted on axes x1 and x2.) Source: http://mlg.eng.cam.ac.uk/zoubin/course05/lect1.pdf

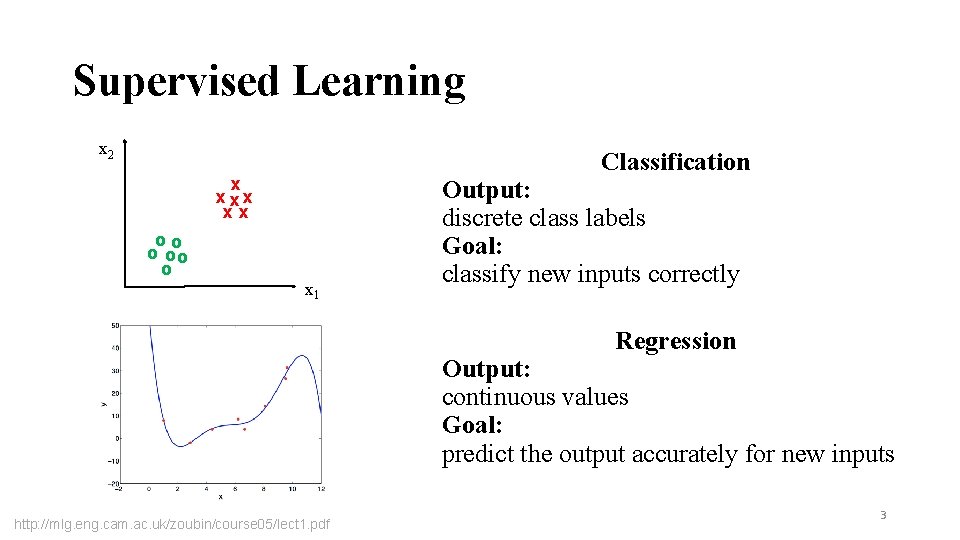

Supervised Learning

- Classification: the output is a discrete class label; the goal is to classify new inputs correctly. (Figure: x and o points on axes x1 and x2.)
- Regression: the output is a continuous value; the goal is to predict the output accurately for new inputs.

Source: http://mlg.eng.cam.ac.uk/zoubin/course05/lect1.pdf
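To make the classification/regression distinction concrete, here is a minimal pure-Python sketch on made-up toy data. The data, the 1-nearest-neighbor classifier, and the least-squares line are illustrative choices, not methods from the lecture; the point is only that one model returns a discrete label and the other a continuous value.

```python
# Toy illustration only: hypothetical data, not from the lecture.

def nearest_neighbor_classify(train, query):
    """Classification: return the discrete label of the training point closest to query."""
    def sq_dist(p, q):
        return (p[0] - q[0]) ** 2 + (p[1] - q[1]) ** 2
    _, label = min(train, key=lambda item: sq_dist(item[0], query))
    return label

def fit_line(xs, ys):
    """Regression: ordinary least-squares fit of y = a * x + b, returning (a, b)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    a = cov / var
    return a, mean_y - a * mean_x

# Labeled 2-D points (x1, x2) from two classes.
train = [((1.0, 1.0), "x"), ((1.5, 2.0), "x"), ((5.0, 5.0), "o"), ((6.0, 5.5), "o")]
print(nearest_neighbor_classify(train, (1.2, 1.4)))  # prints: x

# Labeled 1-D inputs with continuous outputs.
a, b = fit_line([0.0, 1.0, 2.0, 3.0], [1.0, 3.0, 5.0, 7.0])
print(a * 4.0 + b)  # prints: 9.0
```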

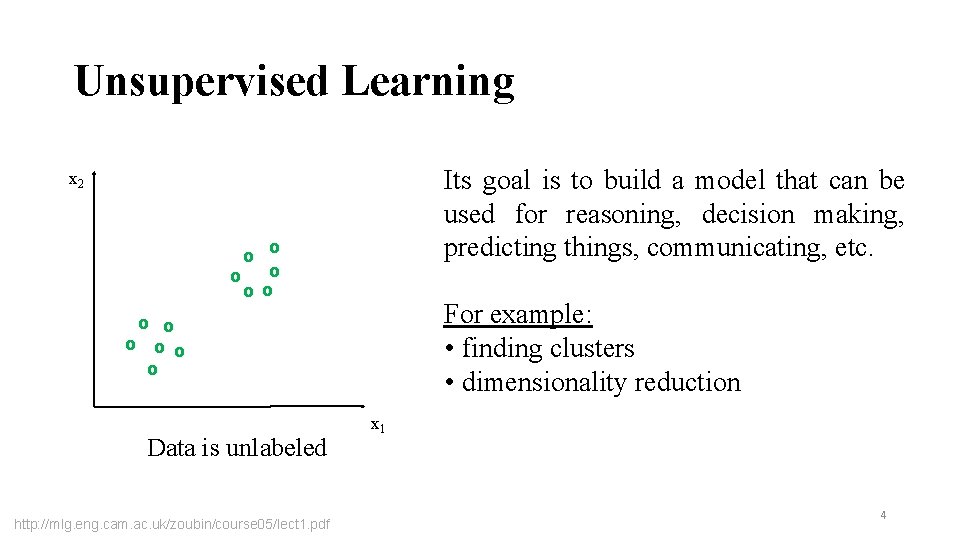

Unsupervised Learning: Its goal is to build a model that can be used for reasoning, decision making, prediction, communication, etc. Data is unlabeled. (Figure: unlabeled points, all marked o, on axes x1 and x2.) For example:

- finding clusters
- dimensionality reduction

Source: http://mlg.eng.cam.ac.uk/zoubin/course05/lect1.pdf
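Finding clusters, the first example above, is often illustrated with k-means. The sketch below is plain Lloyd's-algorithm k-means in pure Python on hypothetical unlabeled 2-D points; k-means is our choice of illustration, not an algorithm named on the slide.

```python
import random

def sq_dist(p, q):
    return (p[0] - q[0]) ** 2 + (p[1] - q[1]) ** 2

def kmeans(points, k, iters=20, seed=0):
    """Lloyd's algorithm: alternately assign points to the nearest center and re-center."""
    rng = random.Random(seed)
    centers = rng.sample(points, k)  # start from k distinct data points
    clusters = []
    for _ in range(iters):
        # Assignment step: each point joins the cluster of its nearest center.
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda j: sq_dist(p, centers[j]))
            clusters[nearest].append(p)
        # Update step: move each center to its cluster mean (keep the old center if empty).
        centers = [
            (sum(x for x, _ in cl) / len(cl), sum(y for _, y in cl) / len(cl)) if cl else centers[j]
            for j, cl in enumerate(clusters)
        ]
    return centers, clusters

# Unlabeled toy points forming two obvious groups; no labels are used anywhere.
points = [(0, 0), (0, 1), (1, 0), (10, 10), (10, 11), (11, 10)]
centers, clusters = kmeans(points, k=2)
print(sorted(centers))  # two centers, near (0.33, 0.33) and (10.33, 10.33)
```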

Motivation and Strengths:

- Unsupervised learning is not as expensive and time-consuming as supervised learning, because no labels have to be collected.
- Unsupervised learning requires no human annotation.
- Unlabeled data is easy to find in large quantities, unlike labeled data, which is scarce.

Weaknesses: Unsupervised learning is more difficult than supervised learning because there is no:

- gold standard (like an outcome variable)
- single objective (like test-set accuracy)

Unsupervised Visual Representation Learning by Context Prediction. C. Doersch, A. Gupta, A. A. Efros, ICCV 2015.

- Semantic labels from humans are expensive. Do we need semantic labels to learn a useful representation, or is there some other “less expensive” pretext task that will learn something similar?

Slide: Carl Doersch

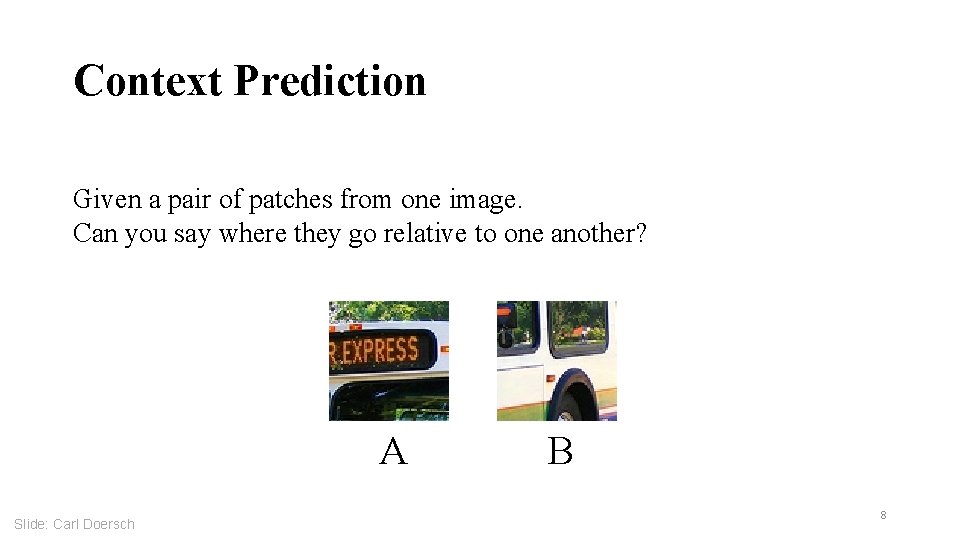

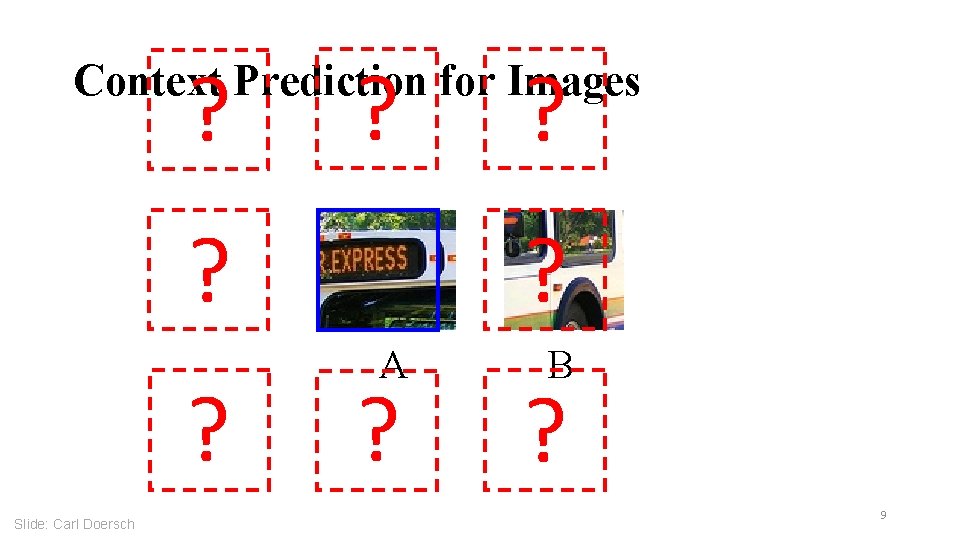

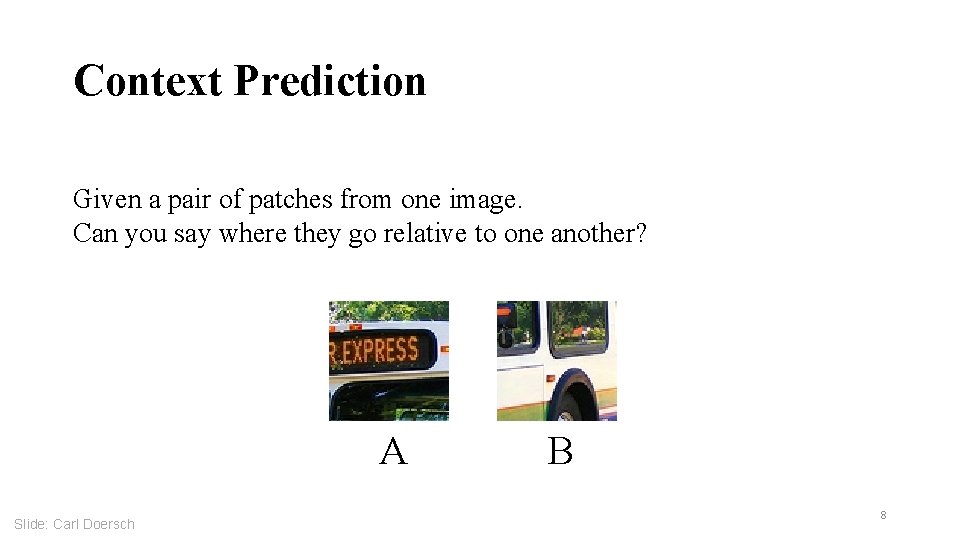

Context Prediction: Given a pair of patches (A and B) from one image, can you say where they go relative to one another? Slide: Carl Doersch

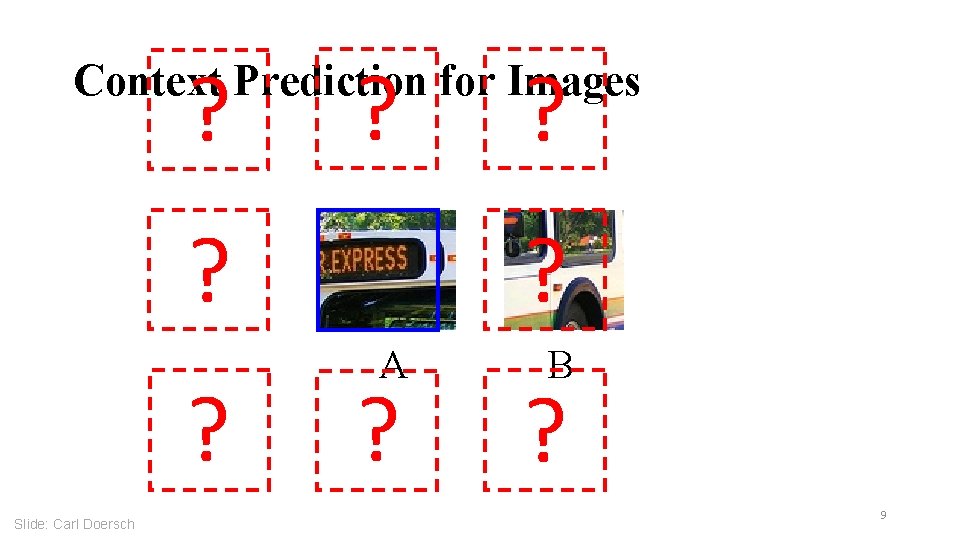

Context Prediction for Images (Figure: patch A with question marks at the candidate positions of patch B around it.) Slide: Carl Doersch

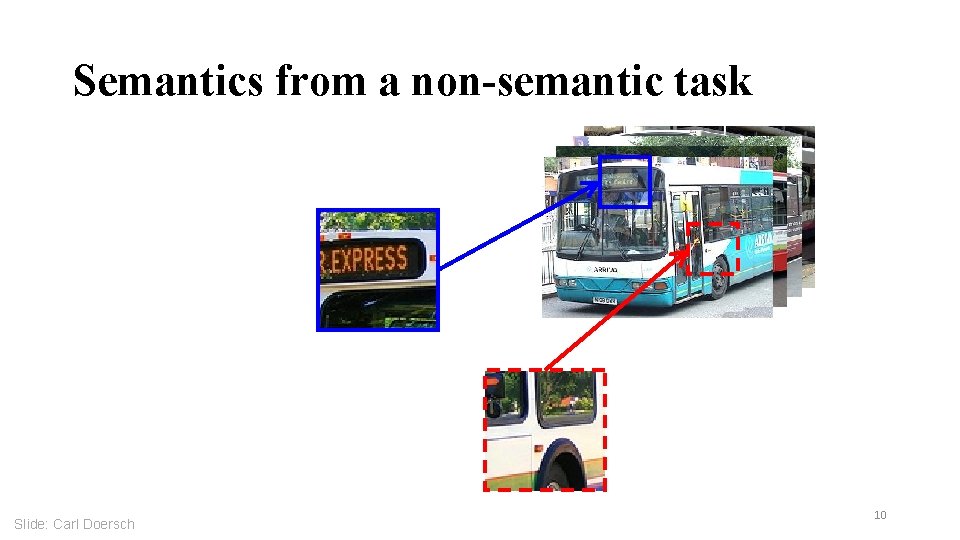

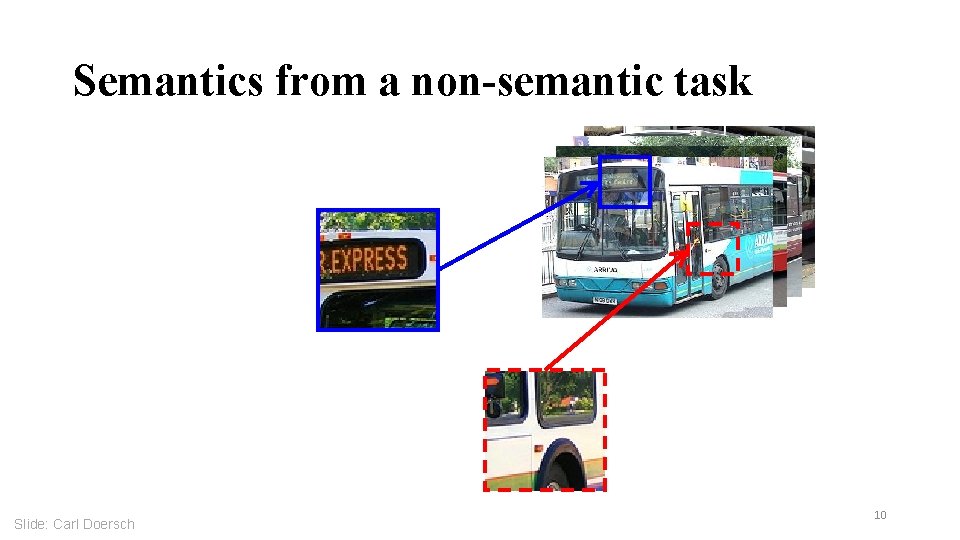

Semantics from a non-semantic task. Slide: Carl Doersch

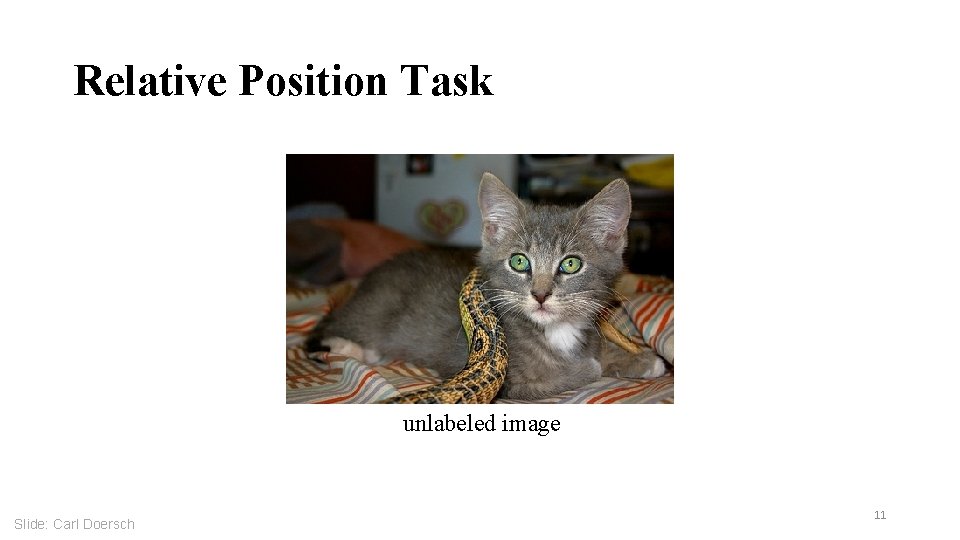

Relative Position Task (Figure: an unlabeled image.) Slide: Carl Doersch

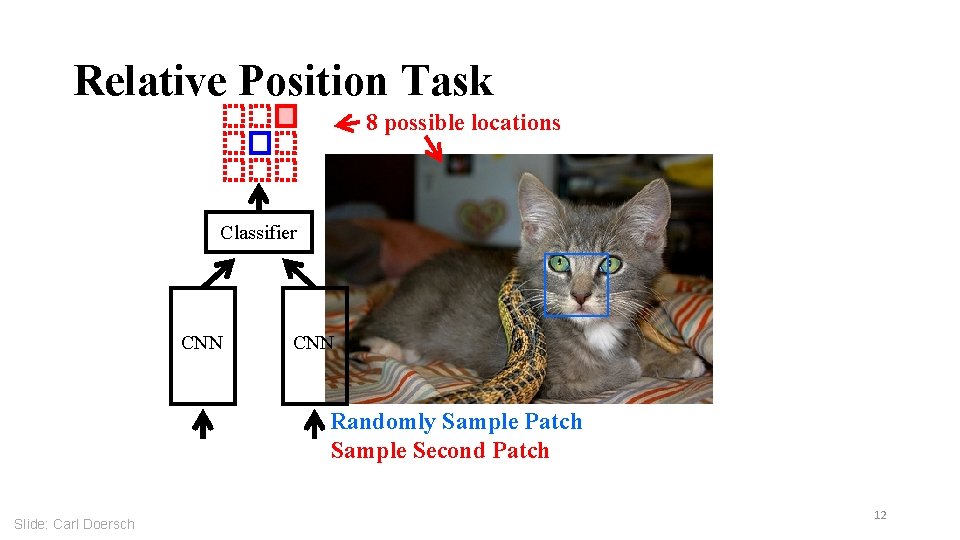

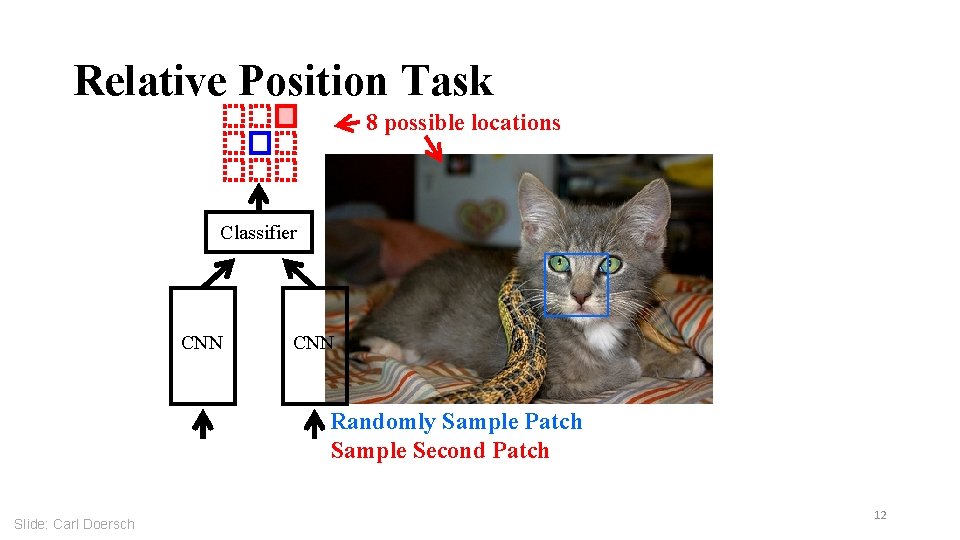

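Relative Position Task: Randomly sample a patch, then sample a second patch from one of 8 possible locations around it. Each patch is passed through a CNN, and a classifier predicts which of the 8 locations the second patch came from. Slide: Carl Doersch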
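The pretext task above reduces to an 8-way classification problem. Below is a minimal sketch of the label bookkeeping only, assuming a 3x3 grid with the first patch in the center cell and the second patch in one of the 8 surrounding cells. The label ordering and all function names are hypothetical conventions chosen for illustration, not taken from the paper.

```python
import random

# Hypothetical label convention: (row, col) offset of the second patch relative to the
# first, listed in reading order around the center cell of a 3x3 grid.
OFFSETS = [(-1, -1), (-1, 0), (-1, 1),
           (0, -1),           (0, 1),
           (1, -1),  (1, 0),  (1, 1)]

def sample_label(rng):
    """Choose which of the 8 neighbors the second patch is taken from; this is the training target."""
    return rng.randrange(len(OFFSETS))

def label_for_offset(offset):
    """Map a relative (row, col) offset to its class label in 0..7."""
    return OFFSETS.index(offset)

def second_patch_cell(first_cell, label):
    """Grid cell (row, col) of the second patch, given the first patch's cell and a label."""
    dr, dc = OFFSETS[label]
    return first_cell[0] + dr, first_cell[1] + dc

label = label_for_offset((0, 1))                # second patch directly to the right
print(label, second_patch_cell((1, 1), label))  # prints: 4 (1, 2)
```

The network never sees the label directly: it sees the two patches and must output the index, so any cue that reveals the offset can be exploited.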

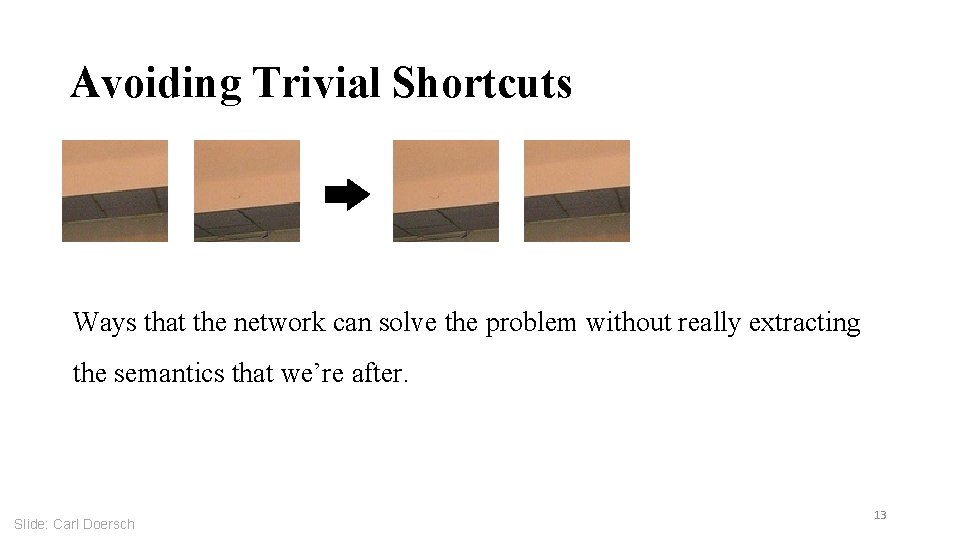

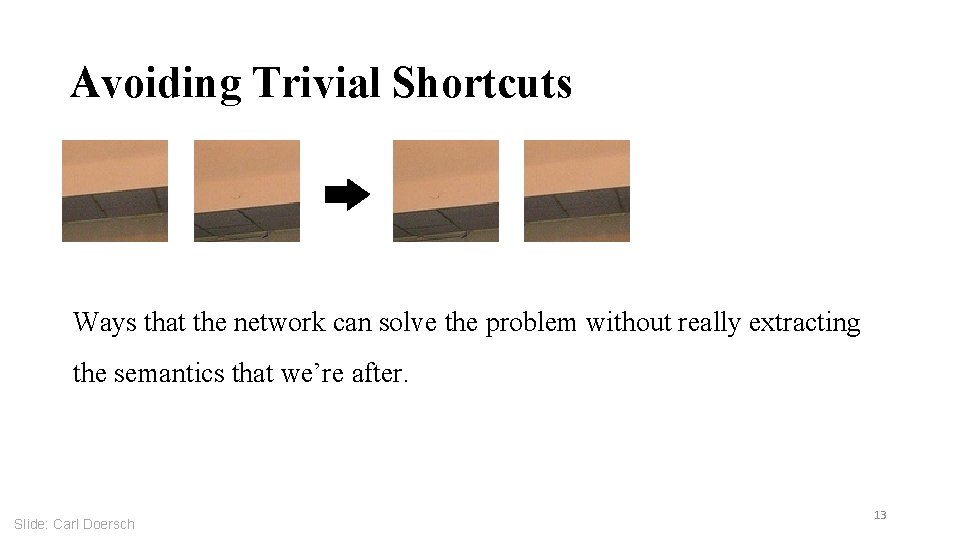

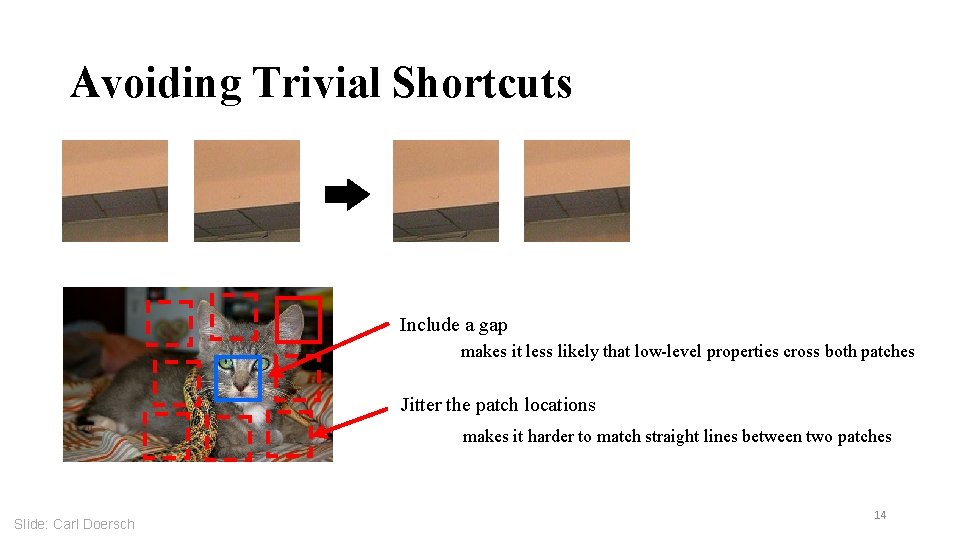

Avoiding Trivial Shortcuts: Trivial shortcuts are ways the network can solve the problem without really extracting the semantics we're after. Slide: Carl Doersch

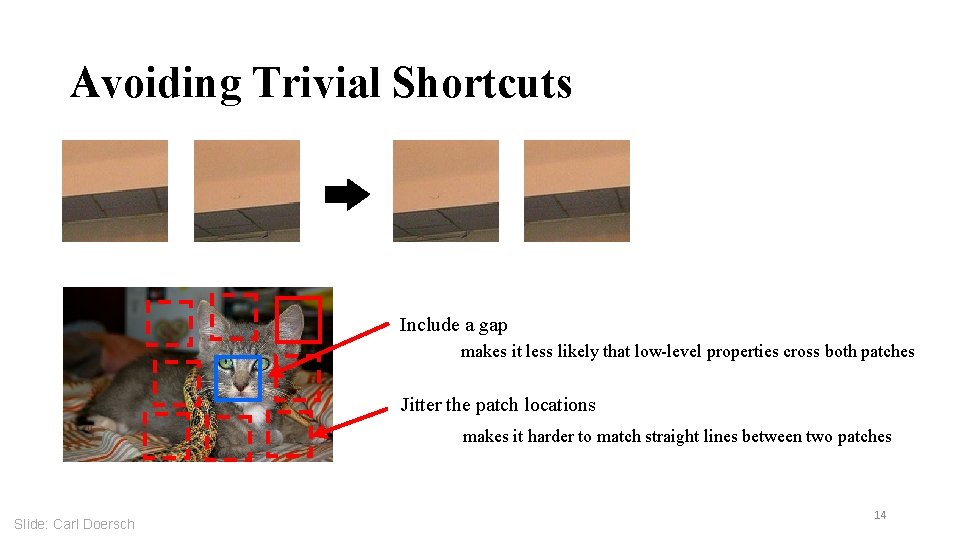

Avoiding Trivial Shortcuts:

- Including a gap between the patches makes it less likely that low-level properties carry across both patches.
- Jittering the patch locations makes it harder to match straight lines between the two patches.

Slide: Carl Doersch
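Both countermeasures above can be expressed in the coordinates used to cut out the second patch. This is a minimal sketch; the patch size, gap, and jitter defaults are illustrative assumptions rather than values from the slide, and the function name is hypothetical.

```python
import random

def second_patch_origin(first_origin, offset, patch=96, gap=48, jitter=7, rng=random):
    """Top-left (y, x) of the second patch in a relative-position training pair.

    first_origin: top-left (y, x) of the first patch.
    offset: (dy, dx) with entries in {-1, 0, 1}, not (0, 0): one of the 8 neighbor positions.
    Neighbors are placed patch + gap pixels apart, so a band of `gap` pixels separates them;
    each coordinate is then shifted by a random jitter in [-jitter, jitter].
    """
    step = patch + gap
    y = first_origin[0] + offset[0] * step + rng.randint(-jitter, jitter)
    x = first_origin[1] + offset[1] * step + rng.randint(-jitter, jitter)
    return y, x

rng = random.Random(0)
y, x = second_patch_origin((200, 200), (0, 1), rng=rng)  # neighbor to the right
# Horizontal distance between the patches' facing edges stays in [gap - jitter, gap + jitter].
print(x - (200 + 96))
```

Keeping the jitter smaller than the gap means the two patches still never touch after jittering, while lines and edges no longer continue at a predictable position.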

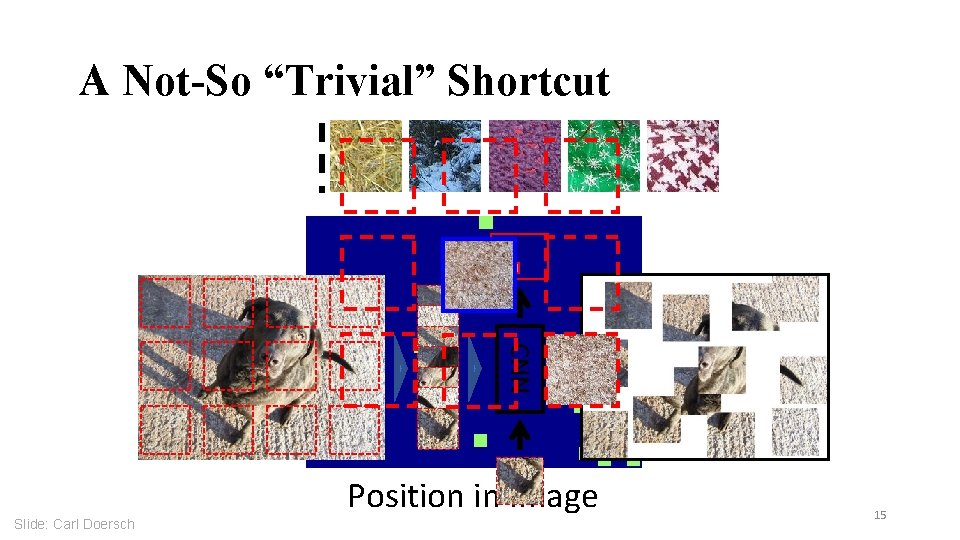

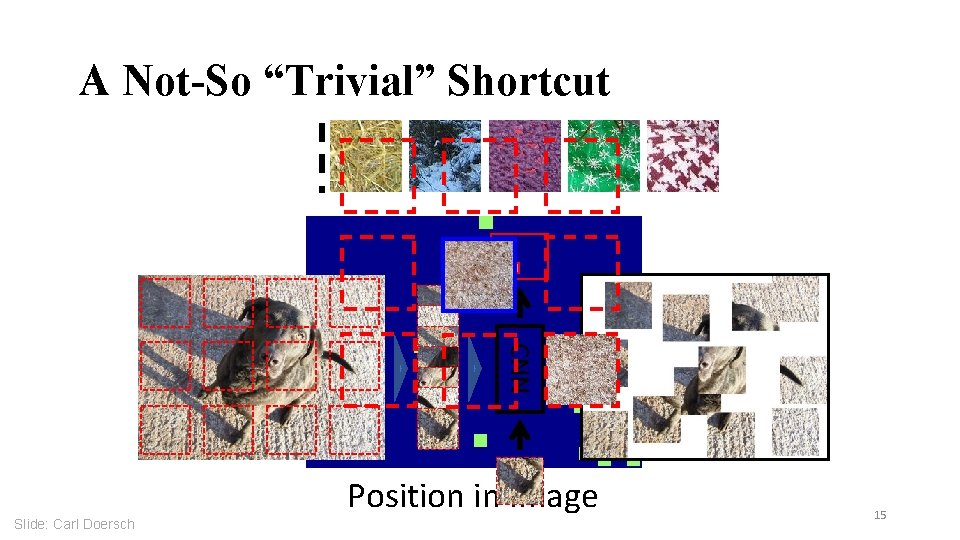

A Not-So “Trivial” Shortcut (Figure: a CNN predicting a patch's position in the image.) Slide: Carl Doersch

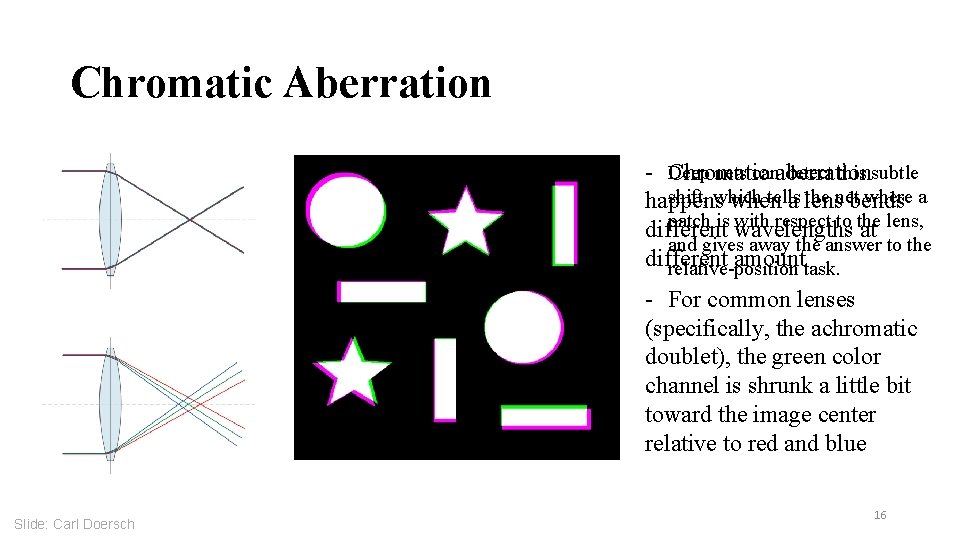

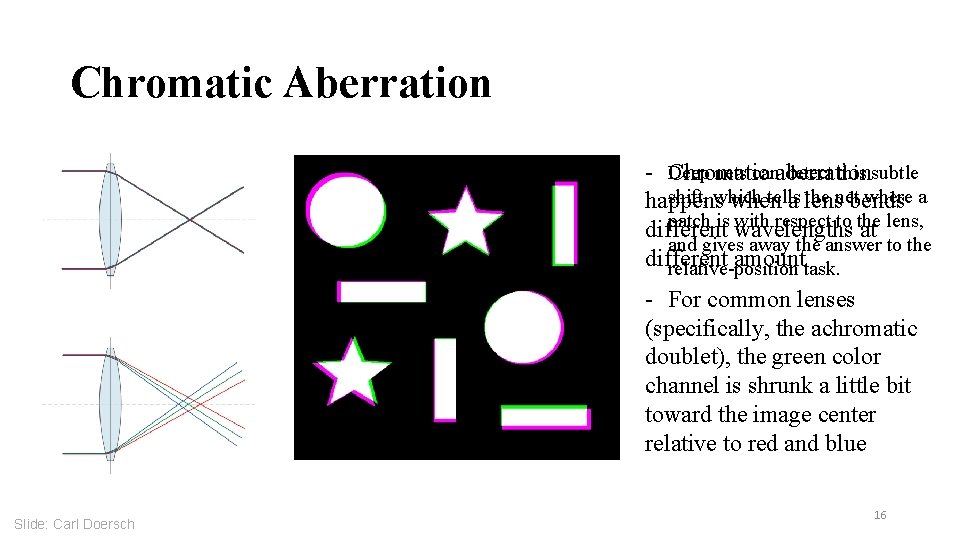

Chromatic Aberration

- Chromatic aberration happens when a lens bends different wavelengths by different amounts.
- For common lenses (specifically, the achromatic doublet), the green color channel is shrunk a little bit toward the image center relative to red and blue.
- Deep nets can detect this subtle shift, which tells the net where a patch is with respect to the lens and gives away the answer to the relative-position task.

Slide: Carl Doersch
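To see why this leaks position, model the effect, as a simplifying assumption, as a uniform radial scaling of the green channel by a factor s slightly below 1 about the image center c. Green content that would sit at position q then lands at c + s(q - c), a displacement of (s - 1)(q - c) that points toward the center and grows with distance from it. The function name and the value of s are hypothetical.

```python
def green_shift(x, y, width, height, s=0.999):
    """Displacement (dx, dy) of the green channel relative to red/blue at pixel (x, y).

    Simplified model (assumption): green is scaled by s < 1 about the image center,
    so content at q moves to c + s * (q - c), i.e. it shifts by (s - 1) * (q - c).
    """
    cx, cy = (width - 1) / 2, (height - 1) / 2
    return (s - 1) * (x - cx), (s - 1) * (y - cy)

# No shift at the center; at the right edge green shifts left (toward the center).
# Direction and size both depend on location, so a net can read position from them.
print(green_shift(499.5, 374.5, 1000, 750))
print(green_shift(999, 374.5, 1000, 750))
```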

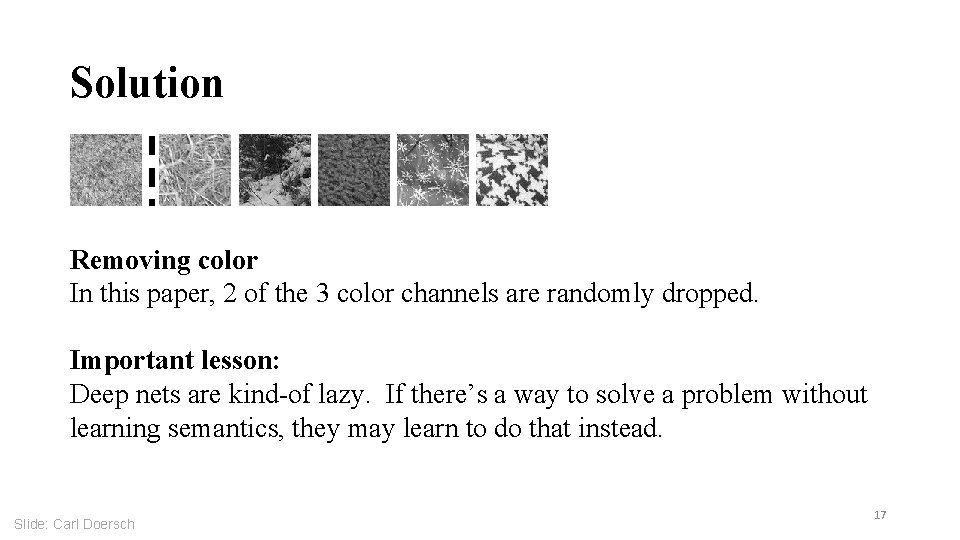

Solution: Remove color. In this paper, 2 of the 3 color channels are randomly dropped from each patch. Important lesson: deep nets are kind of lazy. If there's a way to solve a problem without learning semantics, they may learn to do that instead. Slide: Carl Doersch
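A minimal NumPy sketch of the color-dropping fix: keep one randomly chosen channel per patch and overwrite the other two, so the relative shift between channels can no longer be measured. What the dropped channels are replaced with is an assumption here (small Gaussian noise scaled to the kept channel); the function name and the noise scale are hypothetical.

```python
import numpy as np

def drop_two_channels(patch, rng, noise_scale=0.01):
    """Keep one random color channel of an (H, W, 3) patch; replace the other two.

    Assumption: dropped channels become zero-mean Gaussian noise whose standard deviation
    is noise_scale times the kept channel's standard deviation.
    """
    out = np.array(patch, dtype=np.float64, copy=True)
    keep = rng.integers(3)  # index of the channel that survives
    sigma = noise_scale * out[..., keep].std()
    for c in range(3):
        if c != keep:
            out[..., c] = rng.normal(0.0, sigma, size=out.shape[:2])
    return out, keep

rng = np.random.default_rng(0)
patch = rng.random((96, 96, 3))
dropped, kept = drop_two_channels(patch, rng)
```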

![Pre-Training for R-CNN: pre-train on relative-position task, w/o labels (Girshick et al. 2014)](https://slidetodoc.com/presentation_image_h/9faf38e548c07c1f42de92952de5330a/image-18.jpg)

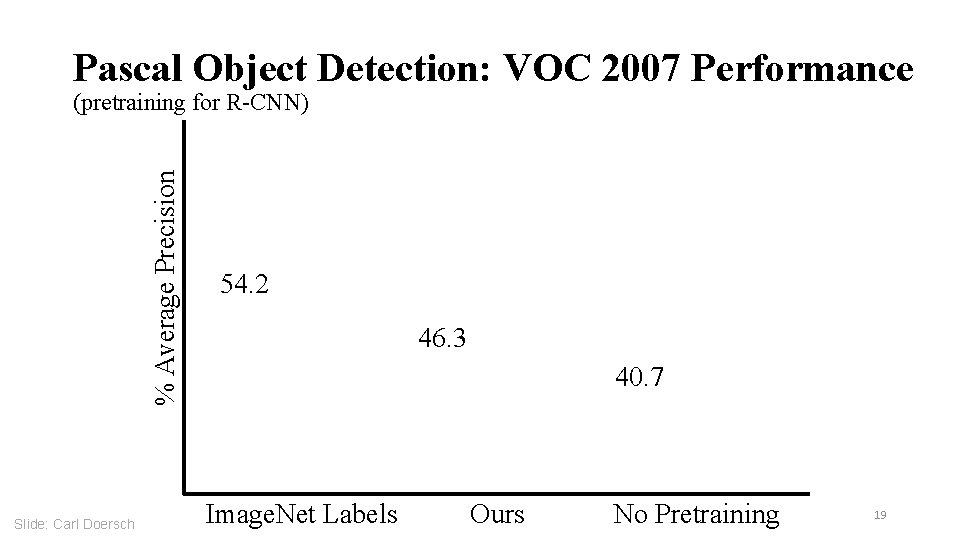

Pre-Training for R-CNN [Girshick et al. 2014]: pre-train on the relative-position task, without labels.

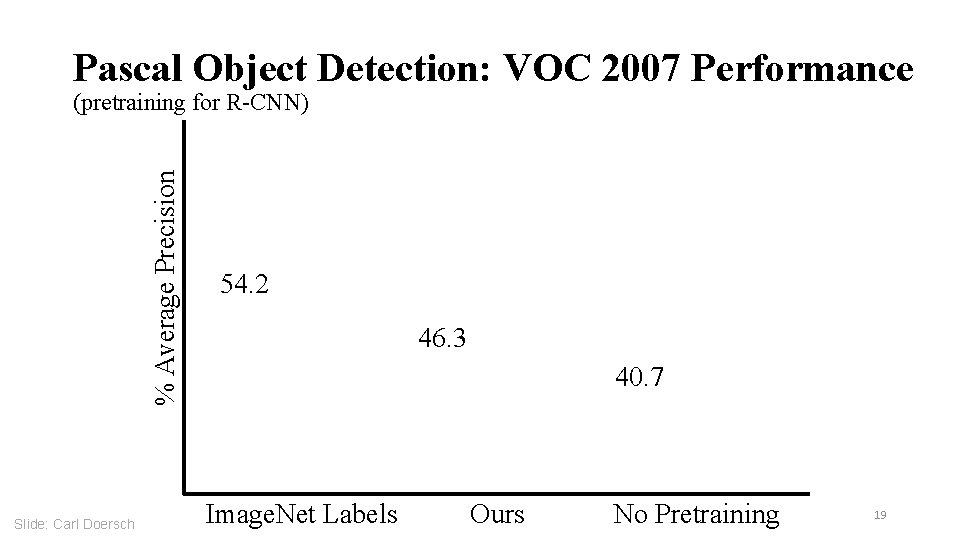

Pascal Object Detection: VOC 2007 performance (pretraining for R-CNN)

| Pretraining | Average Precision (%) |
|---|---|
| ImageNet labels | 54.2 |
| Ours (relative position, no labels) | 46.3 |
| No pretraining | 40.7 |

Slide: Carl Doersch
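One way to read the table is as the fraction of the labeled-pretraining benefit that unlabeled pretraining recovers. The arithmetic below uses only the three numbers on the slide.

```python
# Average precision (%) on VOC 2007, as reported on the slide.
ap_imagenet_labels = 54.2
ap_relative_position = 46.3
ap_no_pretraining = 40.7

gain = ap_relative_position - ap_no_pretraining    # benefit of unlabeled pretraining
full_gap = ap_imagenet_labels - ap_no_pretraining  # benefit of labeled pretraining
print(f"gain: {gain:.1f} AP points, gap closed: {gain / full_gap:.0%}")
# prints: gain: 5.6 AP points, gap closed: 41%
```

So relative-position pretraining, with no labels at all, recovers roughly two fifths of the improvement that ImageNet labels provide.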

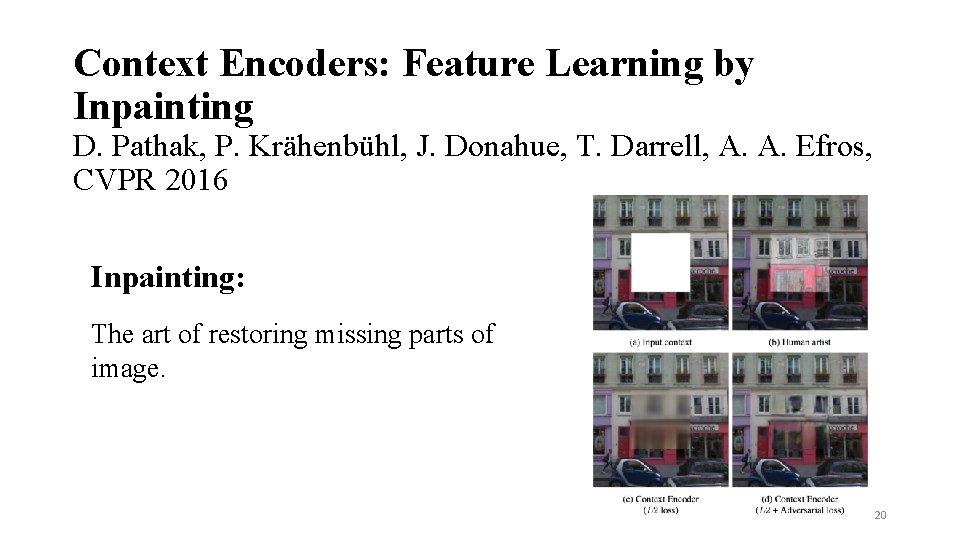

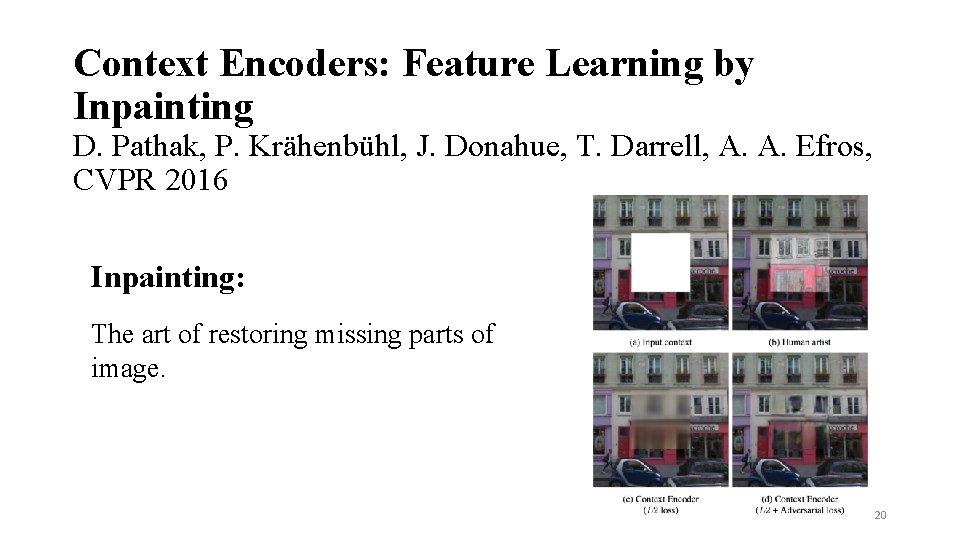

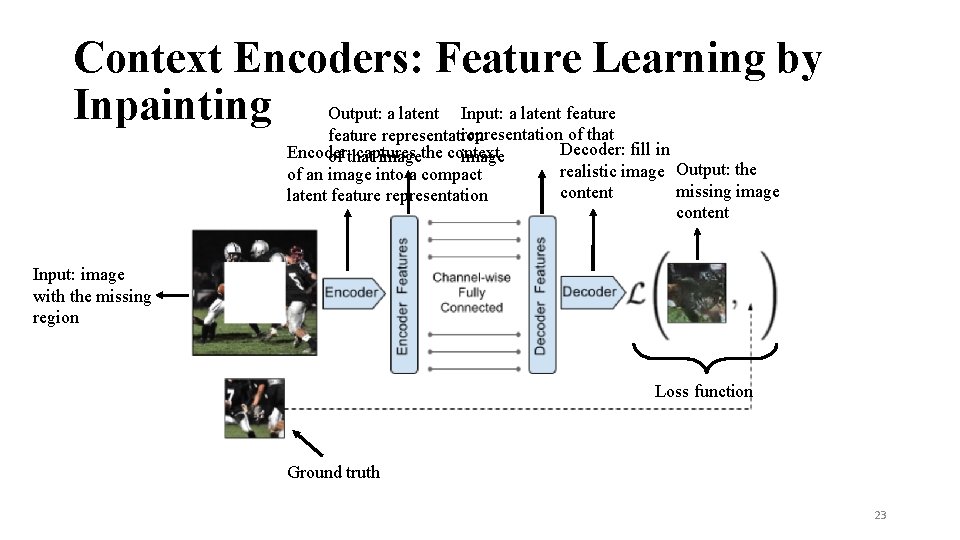

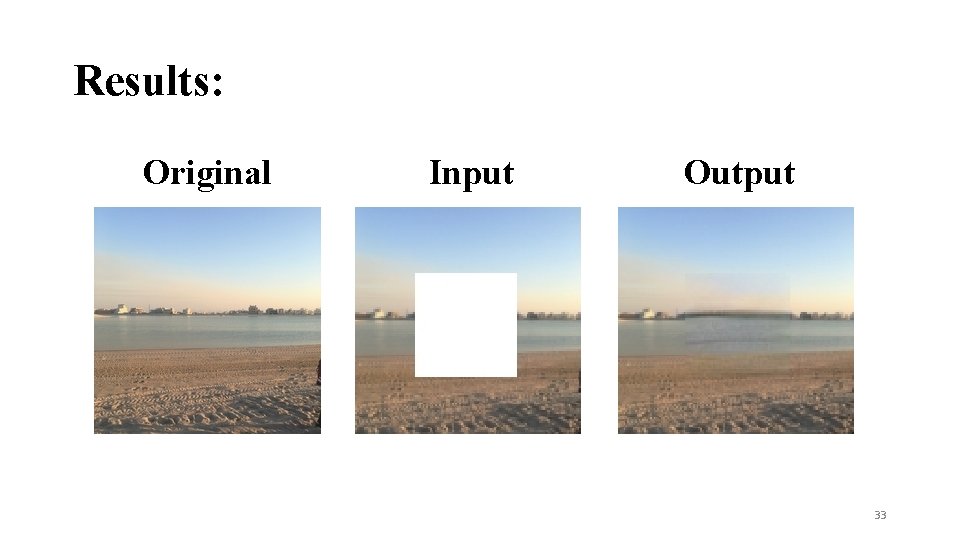

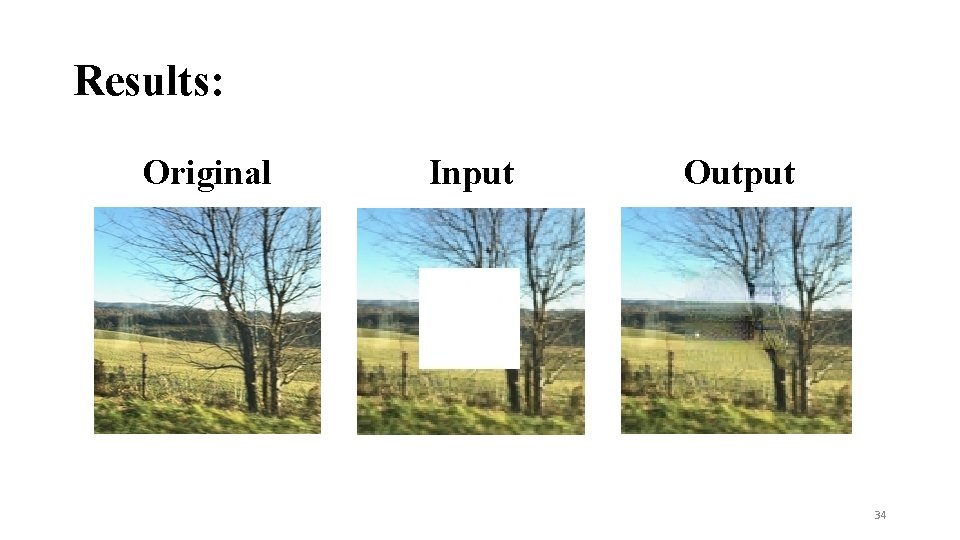

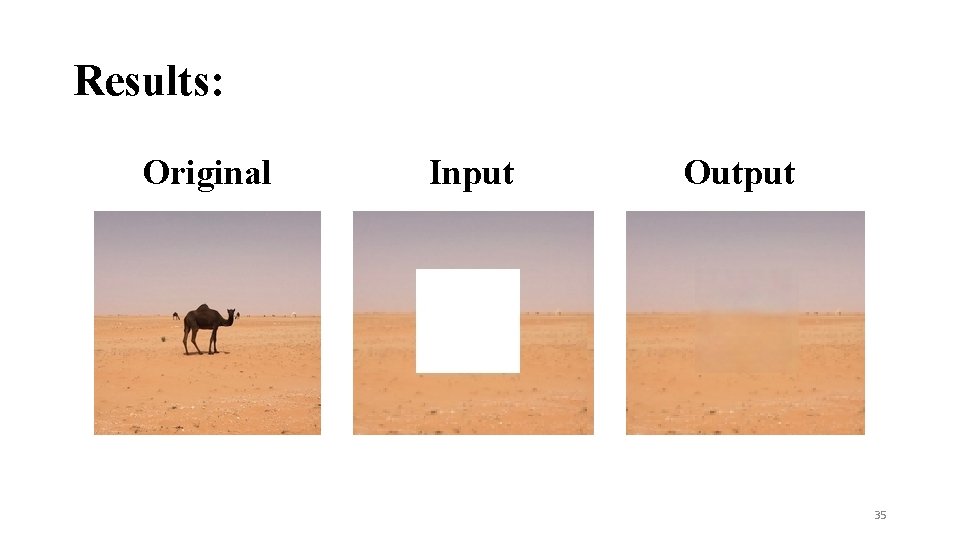

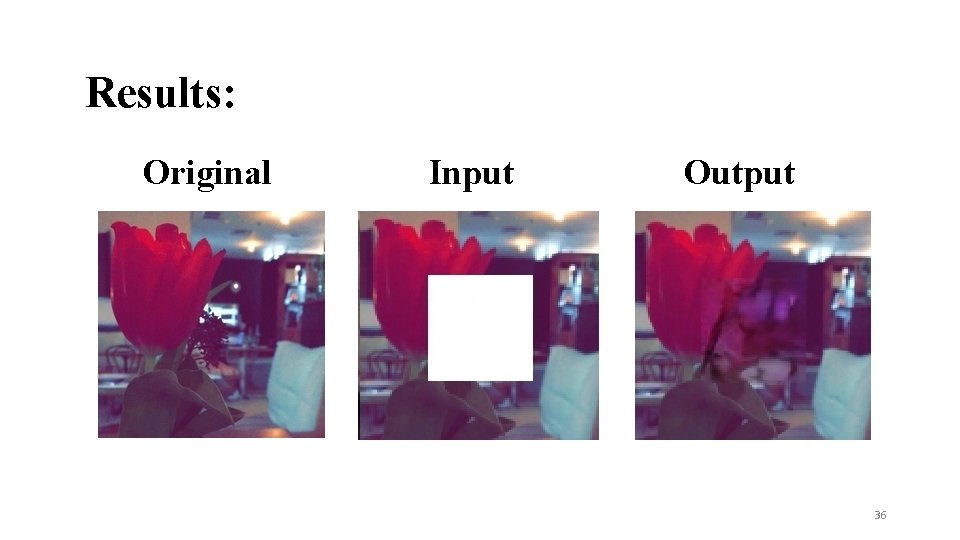

Context Encoders: Feature Learning by Inpainting. D. Pathak, P. Krähenbühl, J. Donahue, T. Darrell, A. A. Efros, CVPR 2016. Inpainting: the art of restoring missing parts of an image.

Context Encoders: Feature Learning by Inpainting. Classical inpainting and texture-synthesis approaches are local, non-semantic methods; hence, they cannot handle large missing regions.

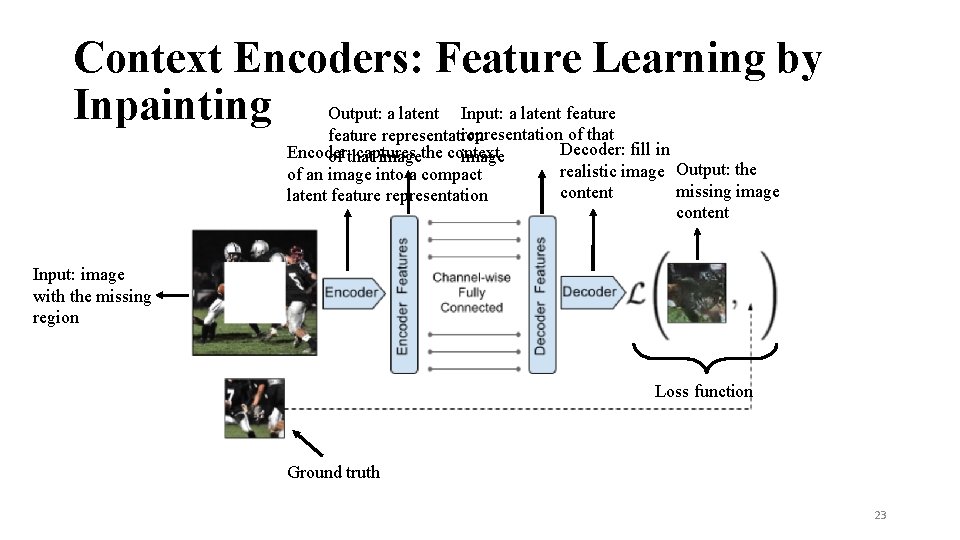

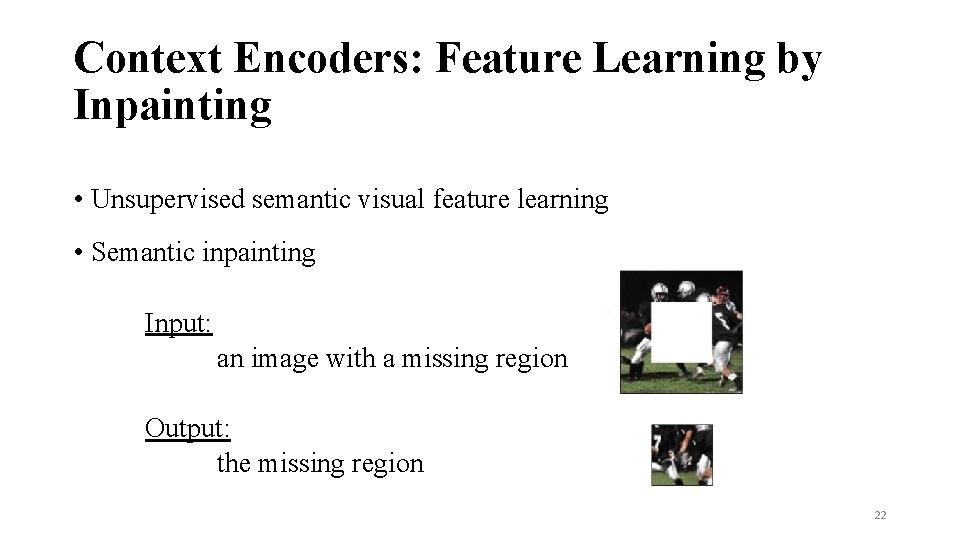

Context Encoders: Feature Learning by Inpainting

- Unsupervised semantic visual feature learning
- Semantic inpainting

Input: an image with a missing region. Output: the missing region.
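The input/output pair described above can be built from any unlabeled image. A minimal NumPy sketch, assuming a centered square hole filled with zeros; the hole shape, fill value, and helper name are hypothetical choices for illustration.

```python
import numpy as np

def make_inpainting_pair(image, hole=64):
    """Split an (H, W, C) image into (masked input, missing-region target, boolean mask).

    Assumption: the missing region is a centered hole x hole square, zeroed in the input.
    """
    h, w = image.shape[:2]
    top, left = (h - hole) // 2, (w - hole) // 2
    mask = np.zeros((h, w), dtype=bool)
    mask[top:top + hole, left:left + hole] = True
    target = image[top:top + hole, left:left + hole].copy()  # what the network must predict
    masked_input = image.copy()
    masked_input[mask] = 0                                   # what the network sees
    return masked_input, target, mask

image = np.random.default_rng(0).random((128, 128, 3))
x, y, m = make_inpainting_pair(image)
print(x.shape, y.shape, m.sum())  # prints: (128, 128, 3) (64, 64, 3) 4096
```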

Context Encoders: Feature Learning by Inpainting

- Encoder: captures the context of an image into a compact latent feature representation. Input: the image with the missing region. Output: a latent feature representation of that image.
- Decoder: fills in realistic image content. Input: the latent feature representation. Output: the missing image content.
- Loss function: compares the decoder's output with the ground-truth missing region.
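The encoder/decoder data flow above can be sketched by shapes alone. This toy uses untrained random linear maps (with a ReLU) in NumPy purely to show what goes into and comes out of each stage; the actual context encoder is a convolutional network, and every size below is an assumption.

```python
import numpy as np

rng = np.random.default_rng(0)
IMG, HOLE, LATENT = 32, 16, 128  # hypothetical: 32x32 RGB context, 16x16 hole, 128-d code

# Untrained random weights: stand-ins for the real convolutional encoder and decoder.
W_enc = rng.normal(0.0, 0.01, size=(LATENT, IMG * IMG * 3))
W_dec = rng.normal(0.0, 0.01, size=(HOLE * HOLE * 3, LATENT))

def encode(masked_image):
    """Encoder: image with the missing region -> compact latent feature representation."""
    return np.maximum(W_enc @ masked_image.reshape(-1), 0.0)  # linear map + ReLU

def decode(latent):
    """Decoder: latent representation -> predicted content of the missing region."""
    return (W_dec @ latent).reshape(HOLE, HOLE, 3)

masked = rng.random((IMG, IMG, 3))
z = encode(masked)
prediction = decode(z)
print(z.shape, prediction.shape)  # prints: (128,) (16, 16, 3)
```

The bottleneck is the design point: because the decoder only sees z, the encoder must summarize the surrounding context well enough to predict the hole, which is what makes z useful as a learned feature.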

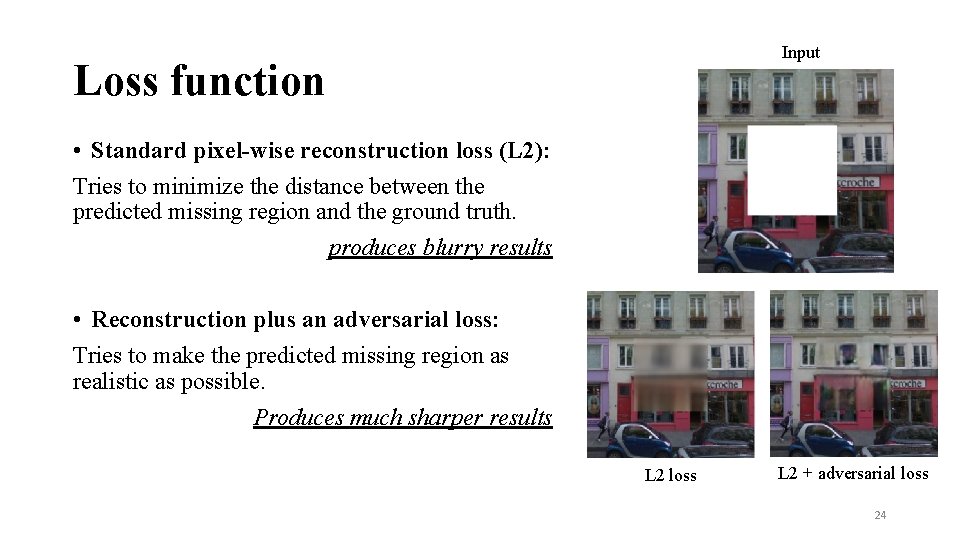

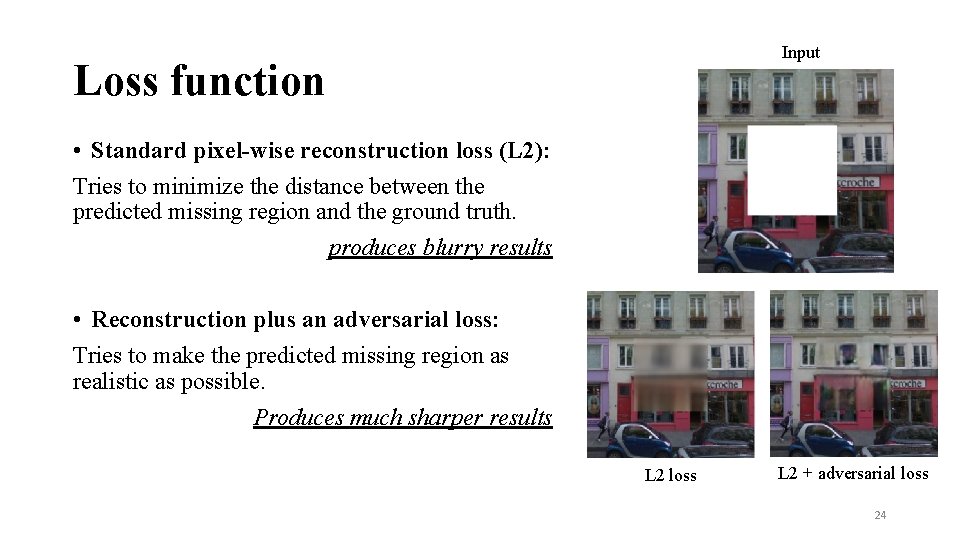

Loss function

- Standard pixel-wise reconstruction loss (L2): minimizes the distance between the predicted missing region and the ground truth. It produces blurry results, because the L2-optimal prediction averages over the many plausible completions.
- Reconstruction plus an adversarial loss: additionally tries to make the predicted missing region as realistic as possible. It produces much sharper results.

(Figure: input, result with L2 loss, and result with L2 + adversarial loss.)
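The two objectives above can be combined as a weighted sum, lam_rec * L2 + lam_adv * adversarial. A minimal NumPy sketch, assuming the discriminator's probability that the prediction is real is already available as a number. The generator-side term uses the common non-saturating form -log D; the default weights (0.999 and 0.001) are the values we believe the Context Encoders paper reports, so treat them as an assumption. All function names are hypothetical.

```python
import numpy as np

def reconstruction_loss(pred, target):
    """Pixel-wise L2: mean squared distance between predicted and ground-truth missing region."""
    return np.mean((pred - target) ** 2)

def adversarial_loss(d_prob_real):
    """Generator-side adversarial loss, non-saturating form: -log D(pred).

    d_prob_real: discriminator's probability, in (0, 1], that the predicted region is real.
    The loss is small when the discriminator is fooled, i.e. when the fill looks realistic.
    """
    return -np.log(d_prob_real)

def joint_loss(pred, target, d_prob_real, lam_rec=0.999, lam_adv=0.001):
    # Assumed weights: reconstruction dominates; the adversarial term pushes toward sharp detail.
    return lam_rec * reconstruction_loss(pred, target) + lam_adv * adversarial_loss(d_prob_real)

target = np.ones((4, 4, 3))
blurry = np.full((4, 4, 3), 0.9)
# Same pixels in both calls: the loss is lower when the discriminator believes the fill is real.
print(joint_loss(blurry, target, d_prob_real=0.1))
print(joint_loss(blurry, target, d_prob_real=0.9))
```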

Results

ECE 6554: Advanced Computer Vision, Spring 2017. Experiment: Context Encoders: Feature Learning by Inpainting. Badour Al. Bahar

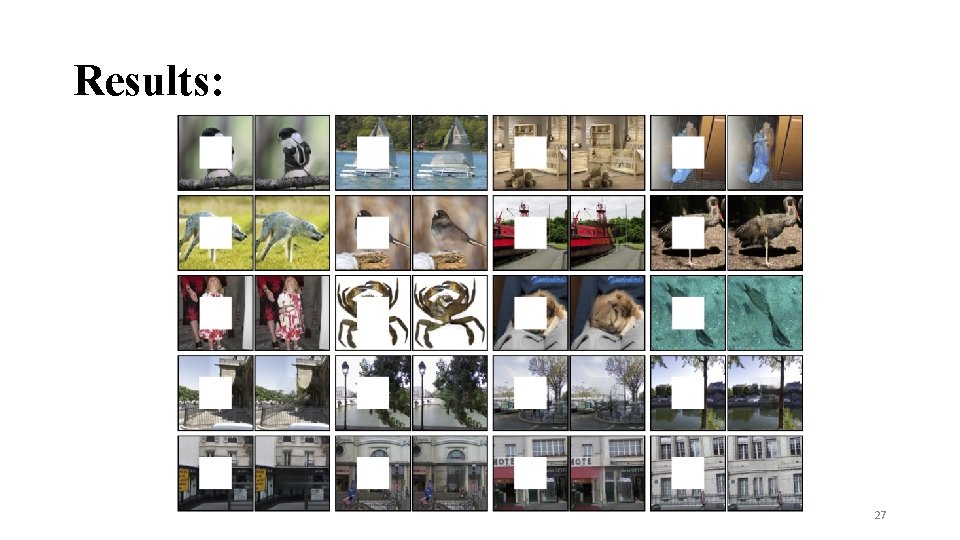

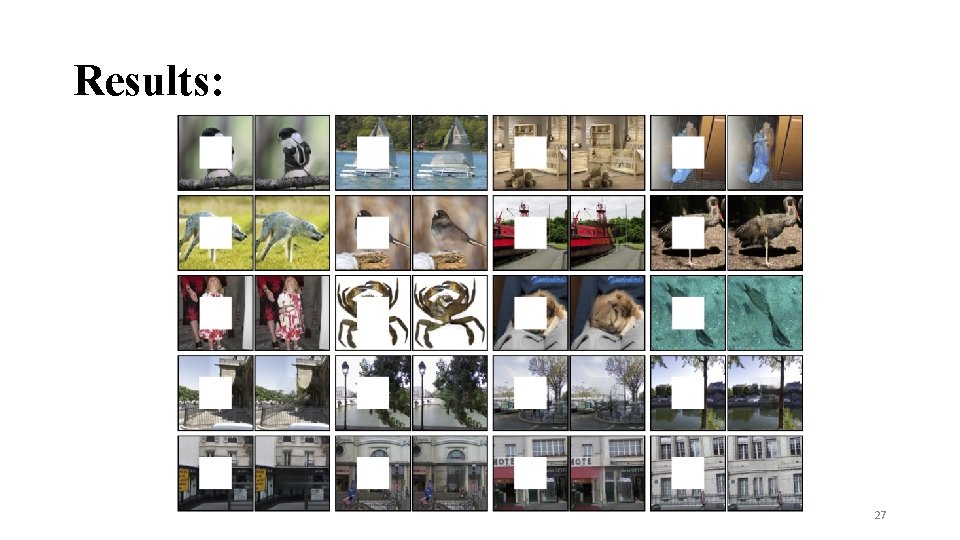

Results

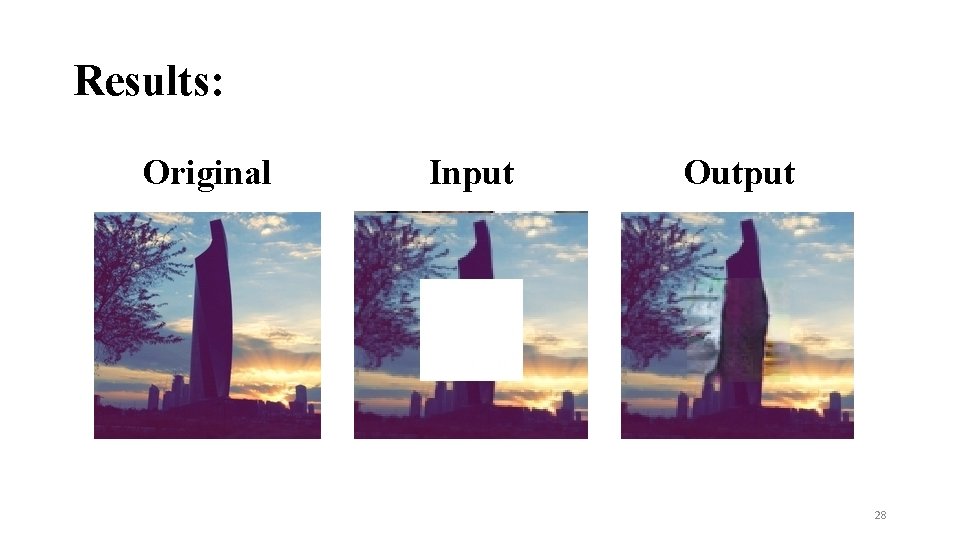

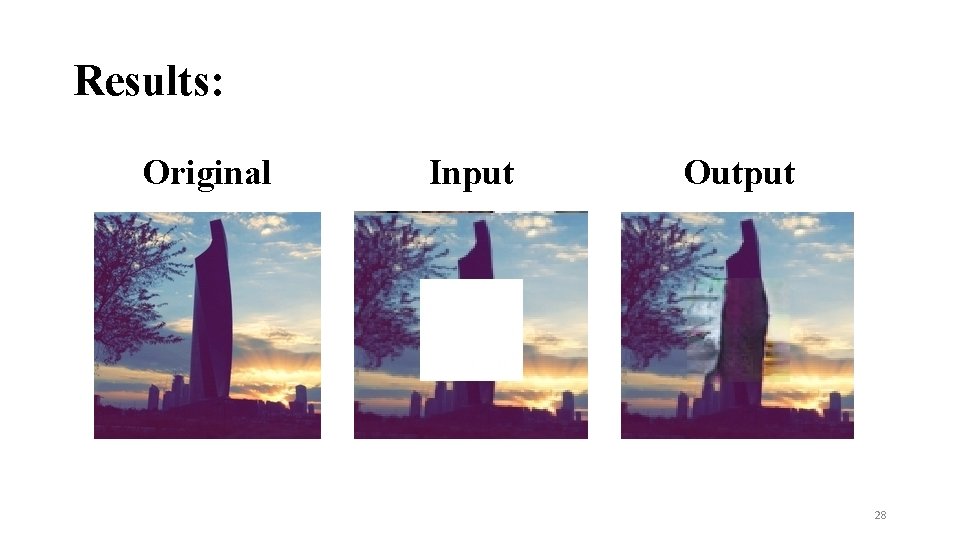

Results: original, input, and output.

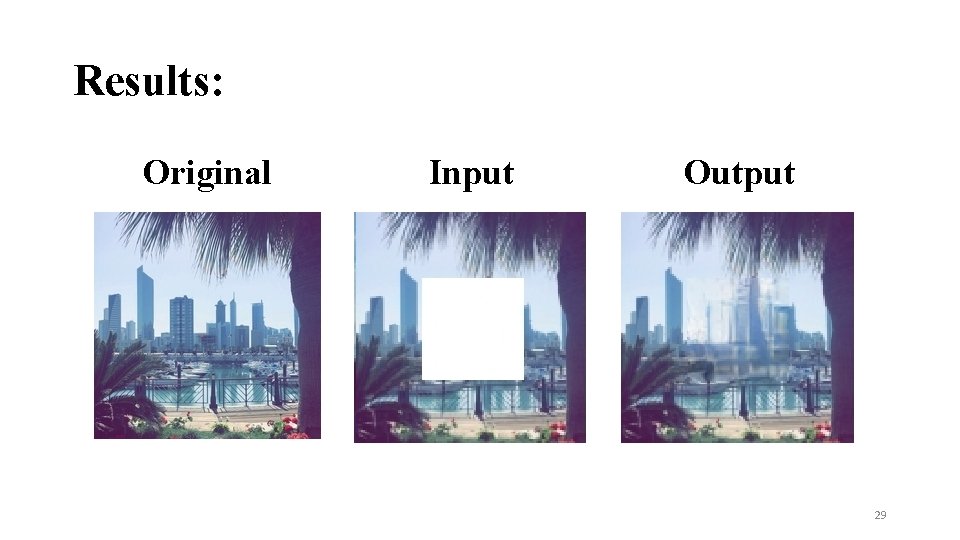

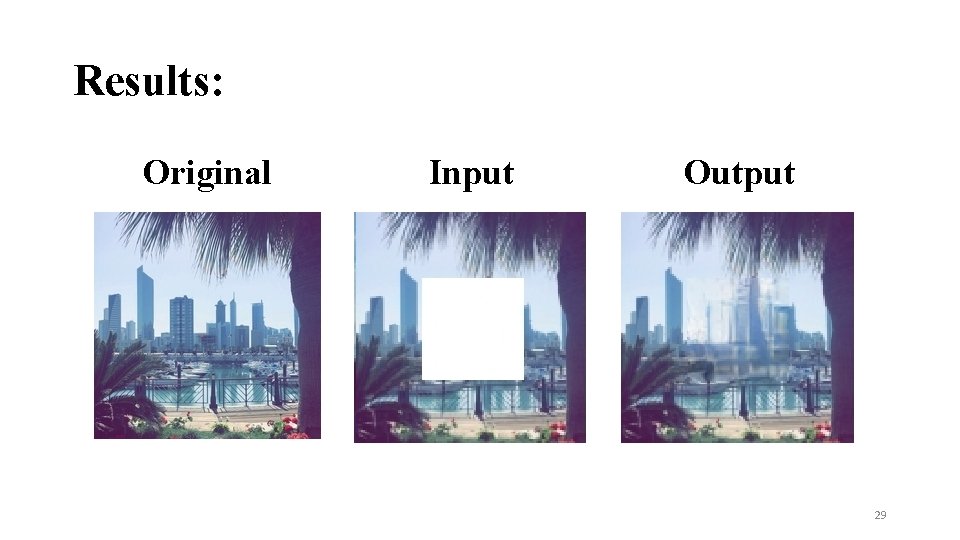

Results: original, input, and output.

Results: original, input, and output.

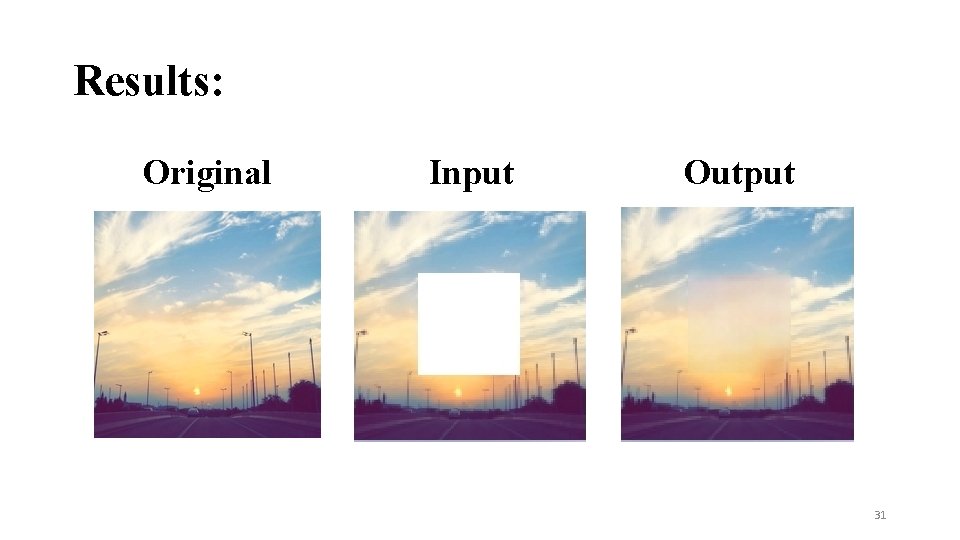

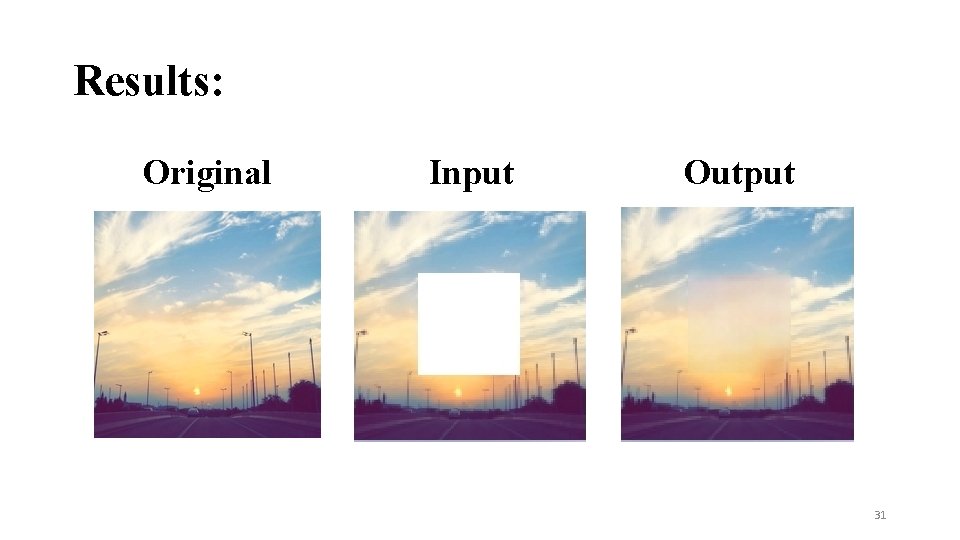

Results: original, input, and output.

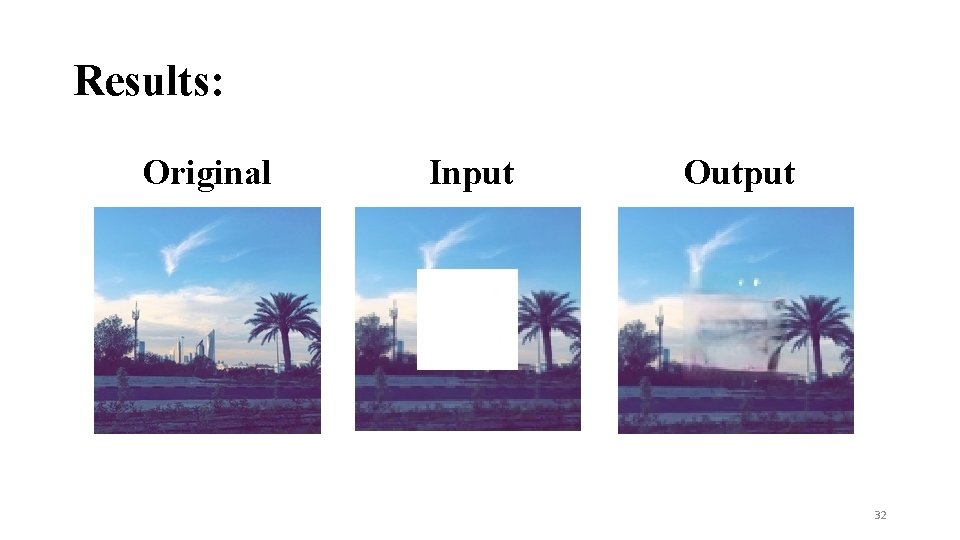

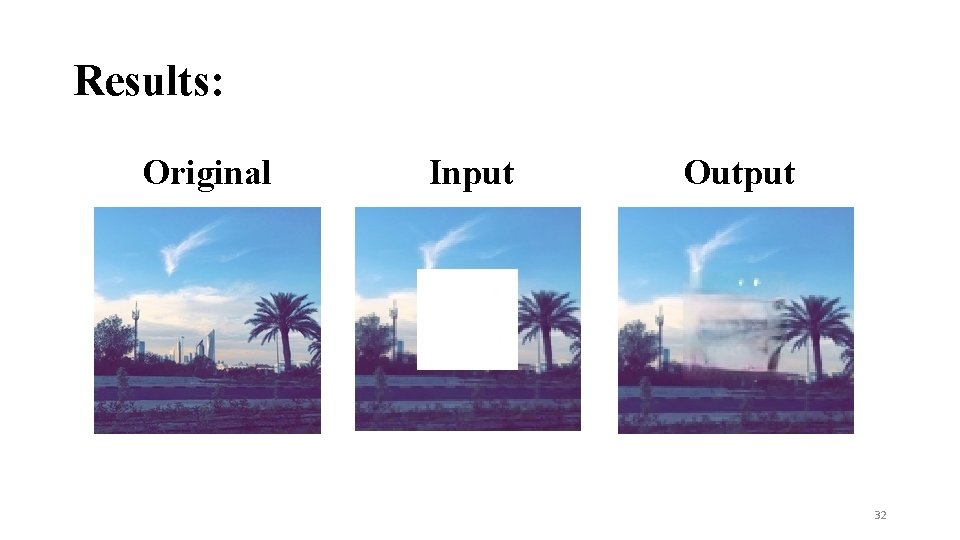

Results: original, input, and output.

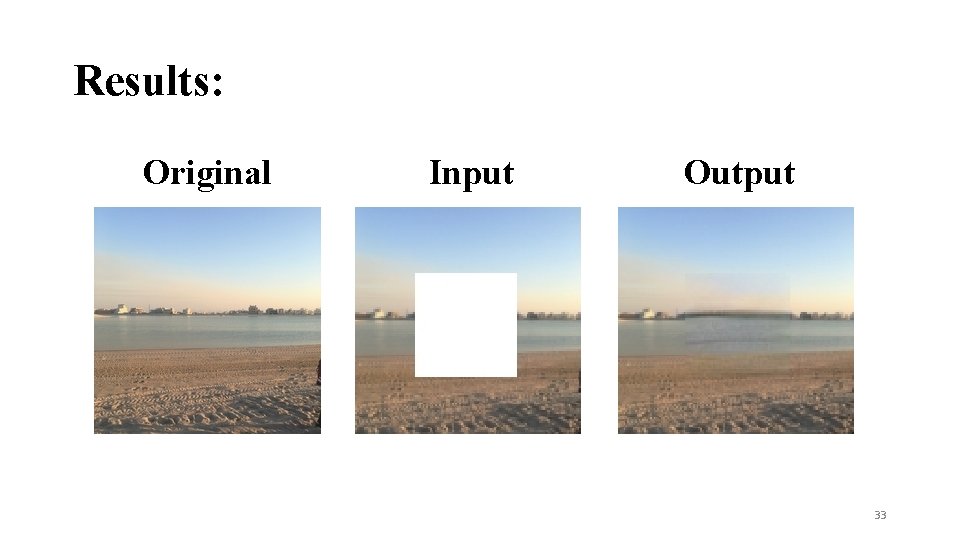

Results: original, input, and output.

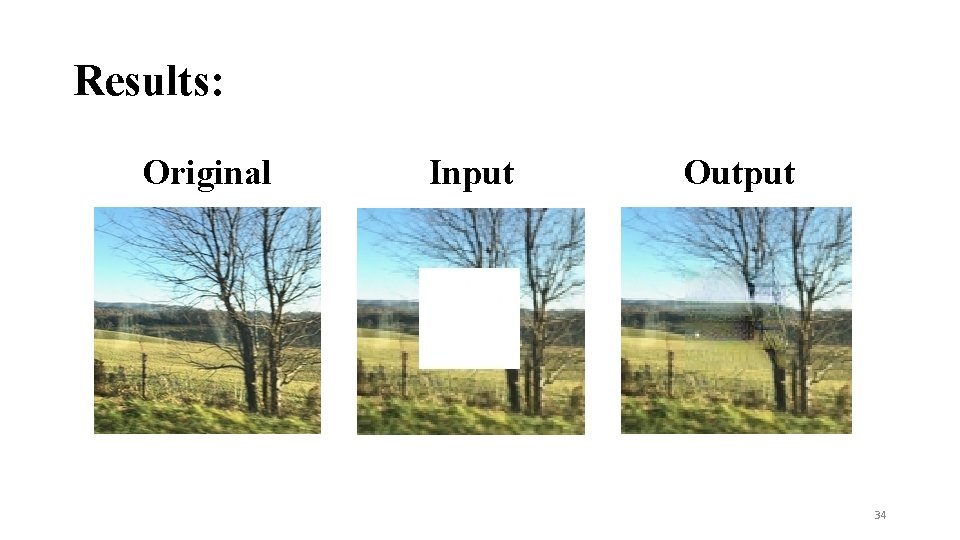

Results: original, input, and output.

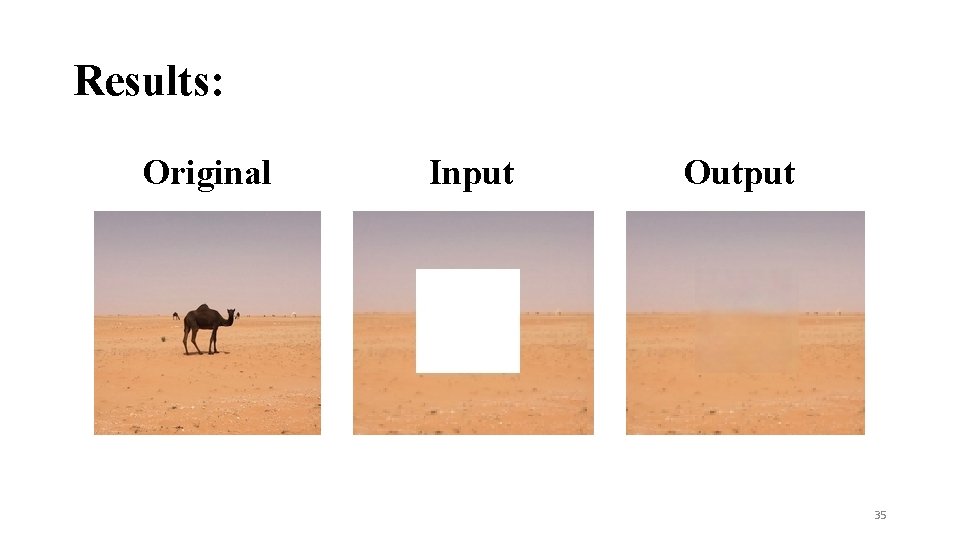

Results: original, input, and output.

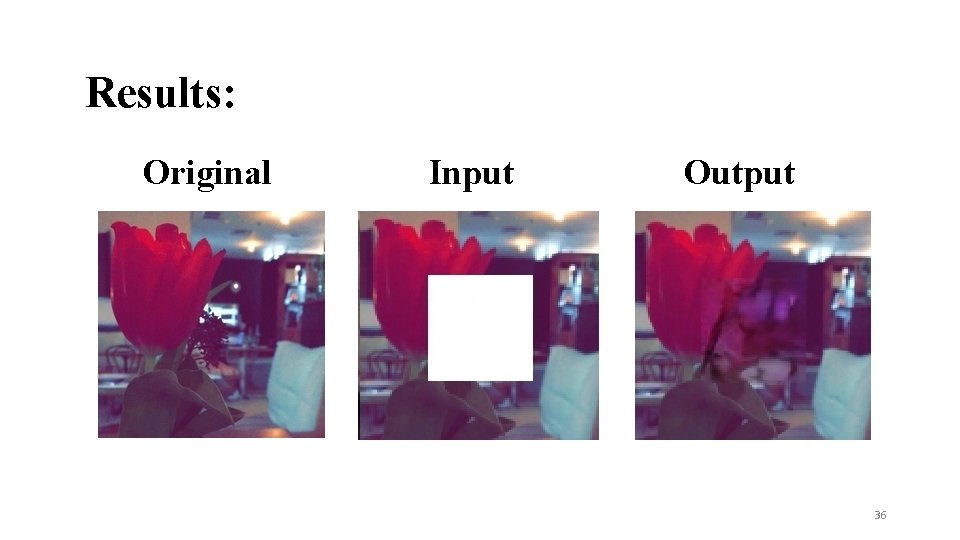

Results: original, input, and output.

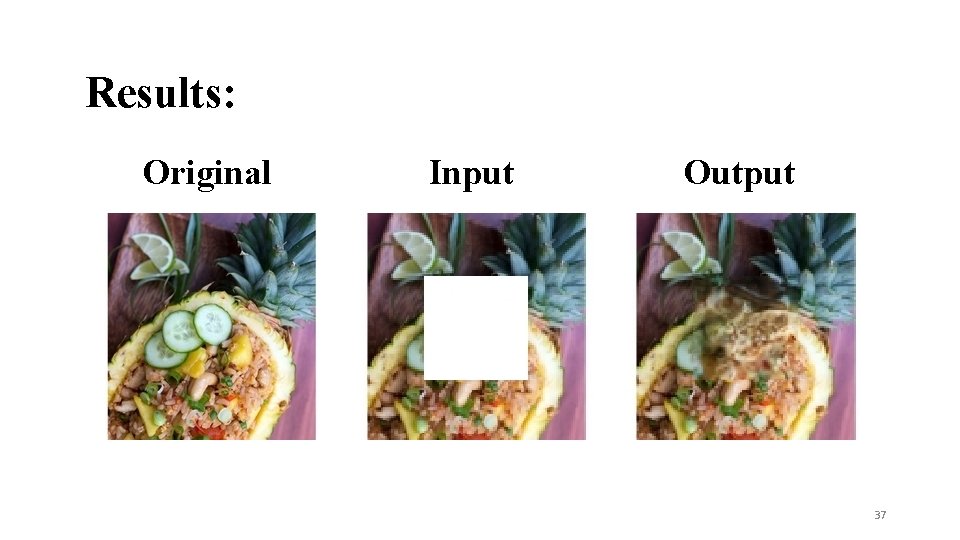

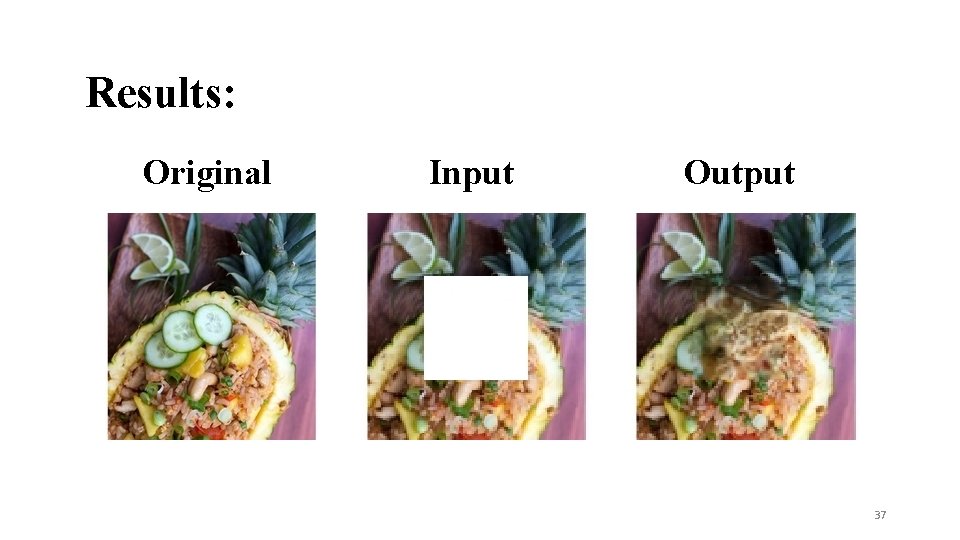

Results: original, input, and output.

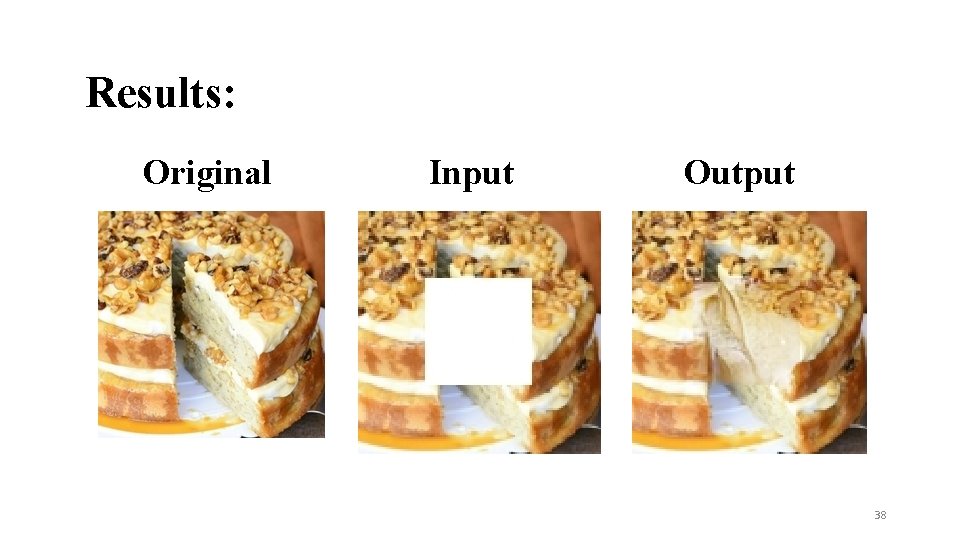

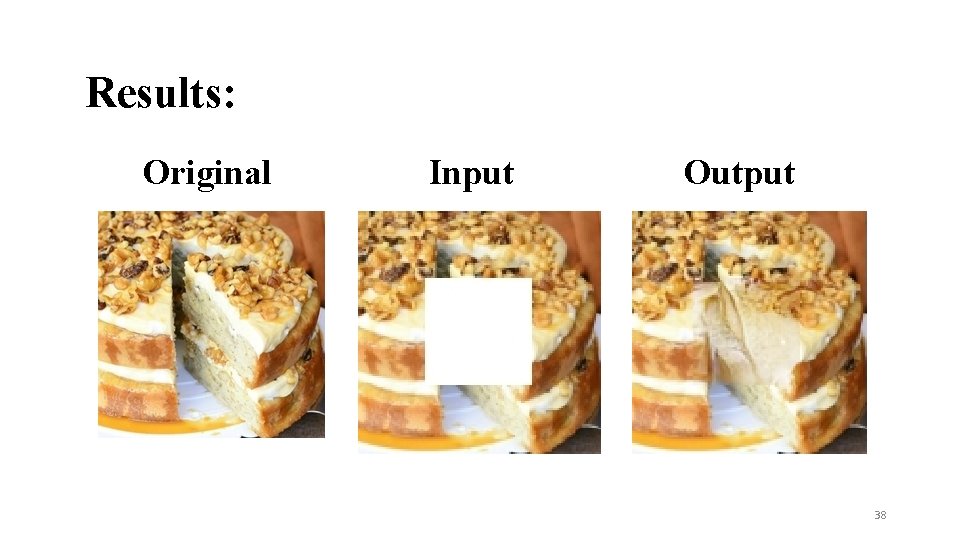

Results: original, input, and output.

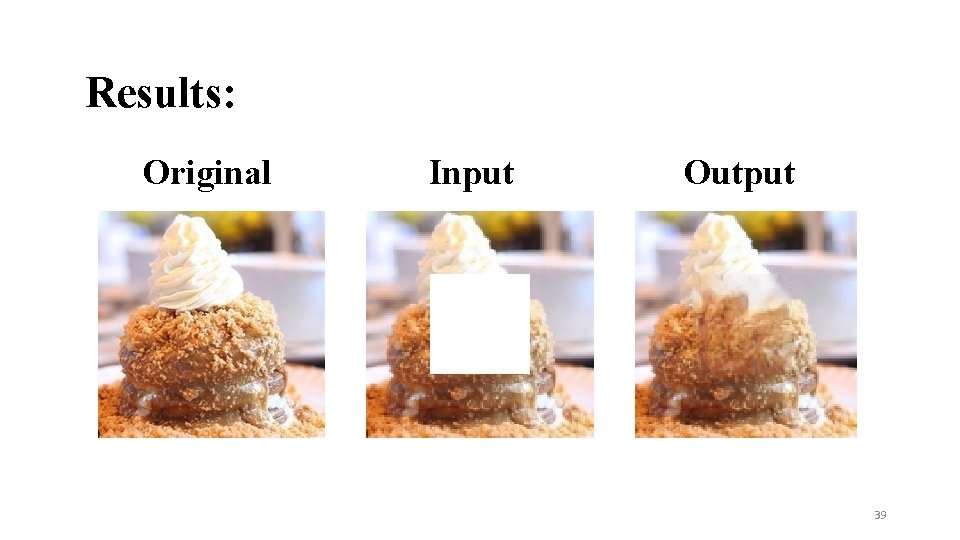

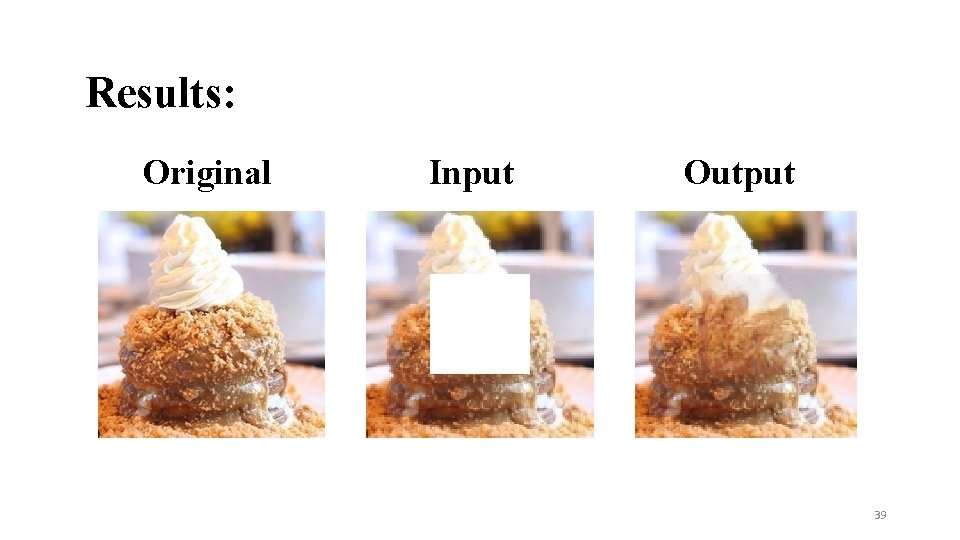

Results: original, input, and output.

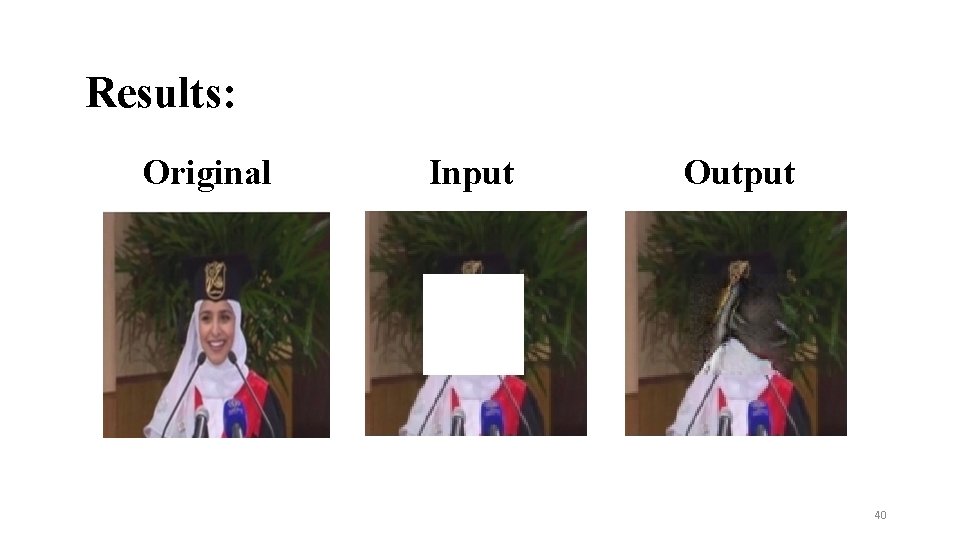

Results: original, input, and output.

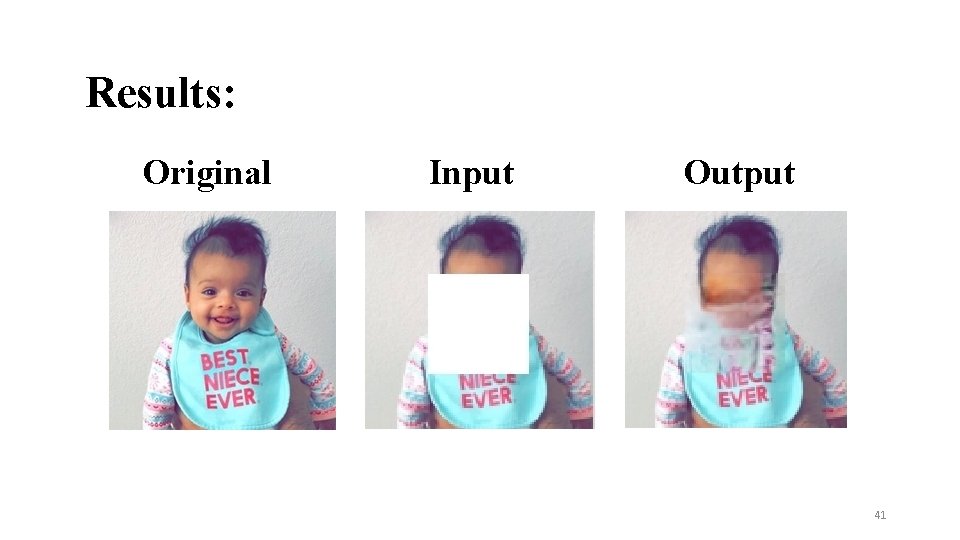

Results: original, input, and output.

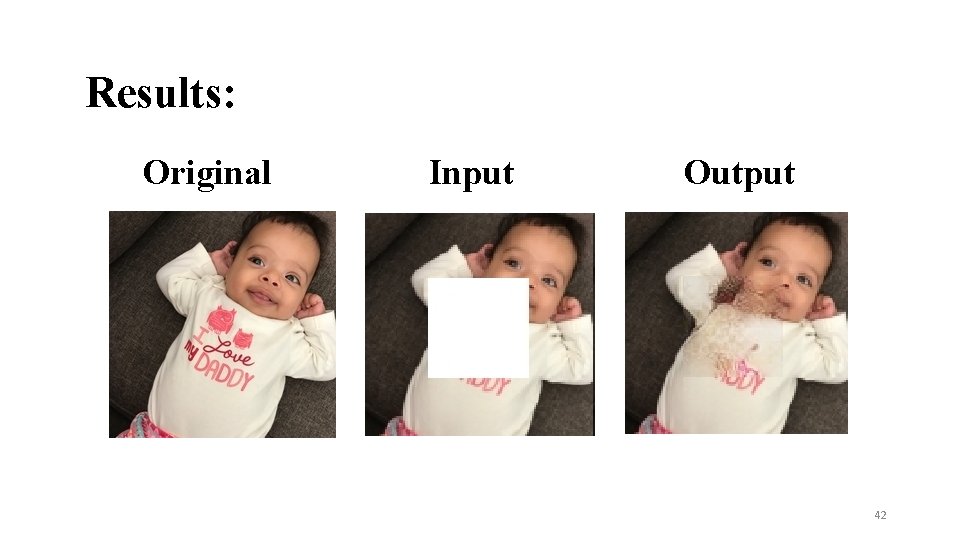

Results: original, input, and output.

Thank you!