ECE 599/692 Deep Learning, Lecture 9: Autoencoder (AE)

- Slides: 13

ECE 599/692 Deep Learning
Lecture 9: Autoencoder (AE)

Hairong Qi, Gonzalez Family Professor
Electrical Engineering and Computer Science
University of Tennessee, Knoxville
http://www.eecs.utk.edu/faculty/qi
Email: hqi@utk.edu

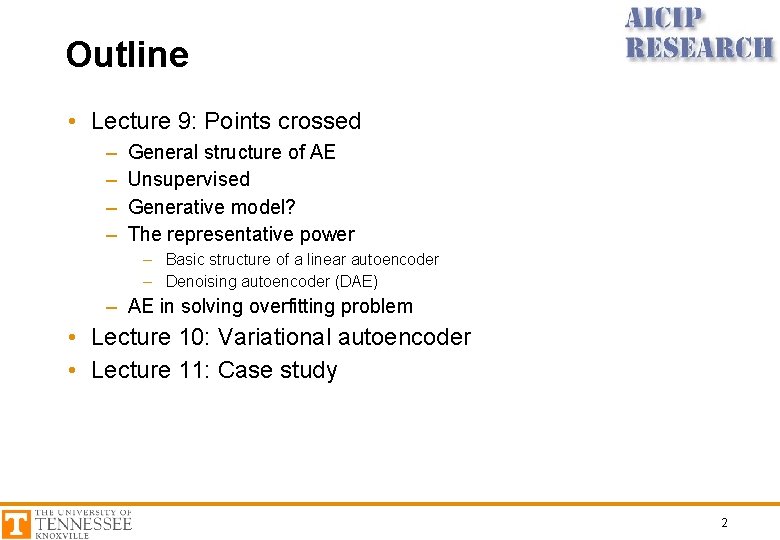

Outline

- Lecture 9: Points crossed
  - General structure of AE
  - Unsupervised
  - Generative model?
  - The representative power
    - Basic structure of a linear autoencoder
    - Denoising autoencoder (DAE)
  - AE in solving overfitting problem
- Lecture 10: Variational autoencoder
- Lecture 11: Case study

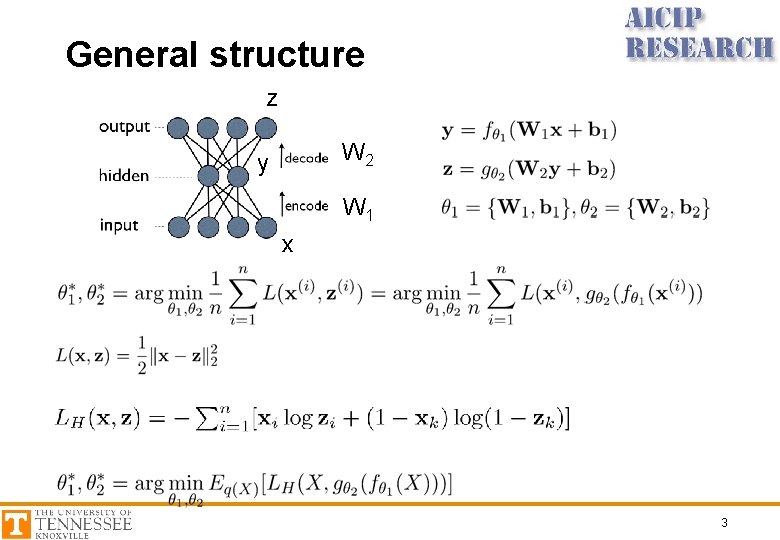

General structure

[Figure: network diagram with input x, weights W1, hidden representation y, weights W2, and output z]
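The structure in the figure can be sketched as a forward pass. This is a minimal sketch, not the lecture's implementation: the sigmoid activations, the bias terms `b1` and `b2`, the squared-error reconstruction measure, the layer sizes, and the helper names `sigmoid` and `autoencoder_forward` are all assumptions made for illustration.

```python
import numpy as np

def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))

def autoencoder_forward(x, W1, b1, W2, b2):
    """Encode x into the code y, then decode y into the reconstruction z."""
    y = sigmoid(W1 @ x + b1)   # encoder: hidden representation
    z = sigmoid(W2 @ y + b2)   # decoder: reconstruction of x
    return y, z

rng = np.random.default_rng(0)
d, m = 8, 3                                  # assumed input and code sizes
W1 = rng.normal(scale=0.1, size=(m, d))      # encoder weights
W2 = rng.normal(scale=0.1, size=(d, m))      # decoder weights
b1, b2 = np.zeros(m), np.zeros(d)

x = rng.random(d)
y, z = autoencoder_forward(x, W1, b1, W2, b2)
reconstruction_error = np.sum((x - z) ** 2)  # assumed squared-error measure
```

Training would adjust W1 and W2 to reduce `reconstruction_error` over a dataset; no labels are involved, which is why the outline calls the model unsupervised.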

Generative Model

- The goal is to learn a model P that we can sample from, such that P is as similar as possible to Pgt, where Pgt is some unknown distribution that generated the examples X
- The ingredients
  - Explicit estimate of the density
  - Ability to sample directly
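The two ingredients can be illustrated with a very simple model. The sketch below assumes, purely for illustration, that P is a one-dimensional Gaussian fitted by maximum likelihood; the array `X` stands in for examples produced by the unknown Pgt, and the function name `density` is hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)

# Stand-in for the examples X generated by the unknown distribution Pgt.
X = rng.normal(loc=2.0, scale=0.5, size=1000)

# Ingredient 1: an explicit estimate of the density (Gaussian fit).
mu, sigma = X.mean(), X.std()

def density(x):
    """Density of the fitted model P evaluated at x."""
    return np.exp(-0.5 * ((x - mu) / sigma) ** 2) / (sigma * np.sqrt(2 * np.pi))

# Ingredient 2: the ability to sample directly from the learned model P.
samples = rng.normal(loc=mu, scale=sigma, size=5)
```

A plain autoencoder offers neither ingredient out of the box, which is presumably why the outline marks "Generative model?" with a question mark.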

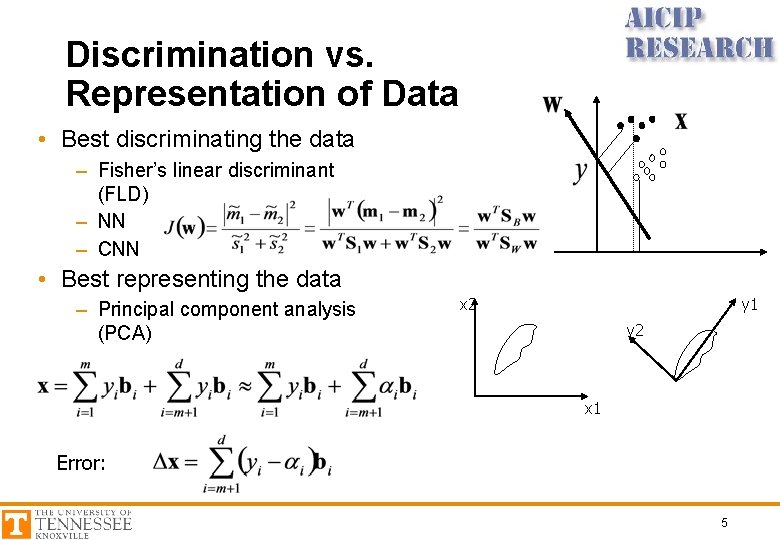

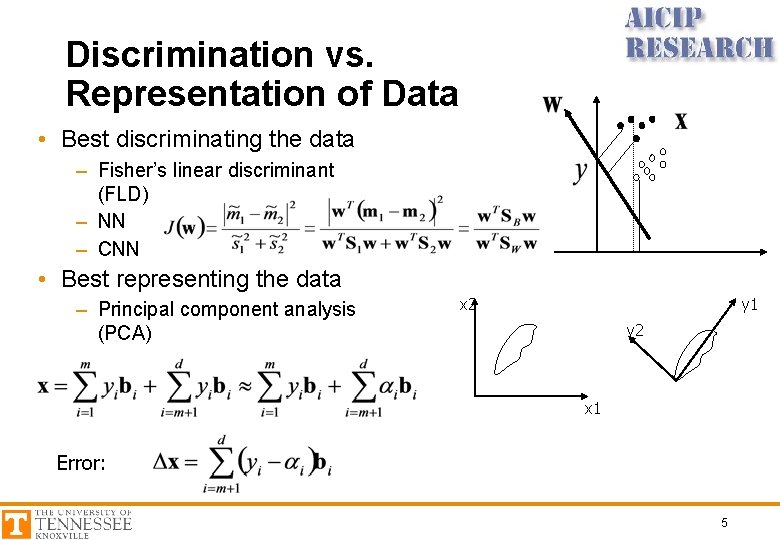

Discrimination vs. Representation of Data

- Best discriminating the data
  - Fisher's linear discriminant (FLD)
  - NN
  - CNN
- Best representing the data
  - Principal component analysis (PCA)

[Figure: axes x1, x2 and y1, y2, with an error expression shown in the image]

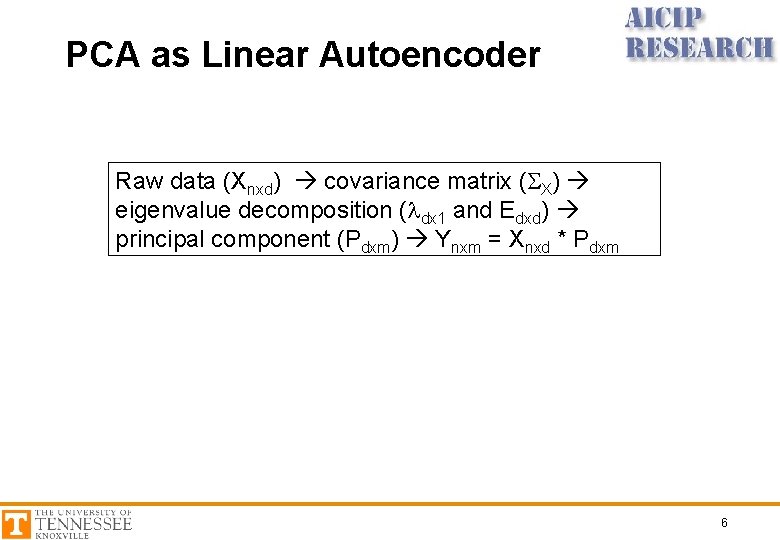

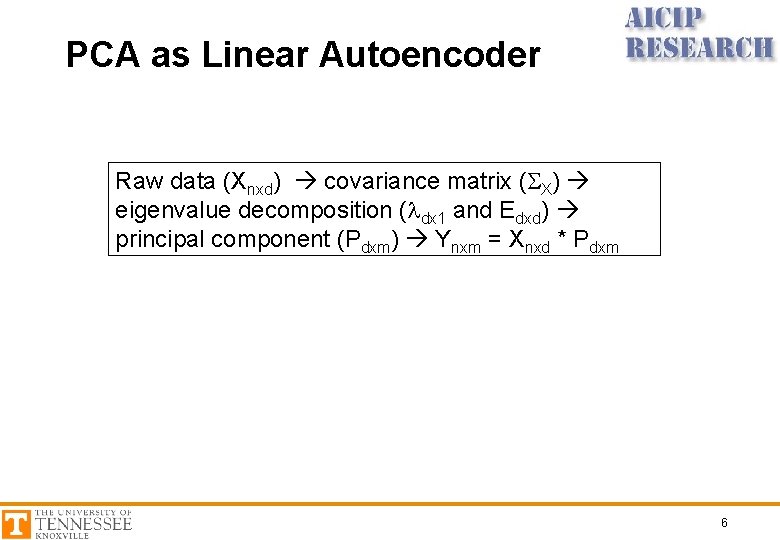

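PCA as Linear Autoencoder

- Raw data: $X_{n \times d}$
- Covariance matrix: $S_X$
- Eigenvalue decomposition: $\lambda_{d \times 1}$ and $E_{d \times d}$
- Principal components: $P_{d \times m}$
- Projection: $Y_{n \times m} = X_{n \times d} P_{d \times m}$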
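The pipeline above can be sketched in NumPy. The sketch assumes the data are mean-centered before projection (the slide does not state this), and the sizes `n`, `d`, `m` and the synthetic data are arbitrary. The last lines show the linear-autoencoder reading: `P` acts as the encoder and its transpose as the decoder.

```python
import numpy as np

rng = np.random.default_rng(0)
n, d, m = 200, 5, 2                                     # assumed sizes
X = rng.normal(size=(n, d)) @ rng.normal(size=(d, d))   # correlated raw data

Xc = X - X.mean(axis=0)                  # center the data (assumption)
S = np.cov(Xc, rowvar=False)             # covariance matrix S_X, d x d
eigvals, E = np.linalg.eigh(S)           # eigenvalues (ascending), eigenvectors in columns
order = np.argsort(eigvals)[::-1]        # indices sorted by decreasing eigenvalue
P = E[:, order[:m]]                      # principal components, d x m

Y = Xc @ P                               # encoder: n x m code
X_hat = Y @ P.T                          # decoder: n x d reconstruction
error = np.mean(np.sum((Xc - X_hat) ** 2, axis=1))   # mean squared reconstruction error
```

Up to the factor (n - 1) / n that comes from the covariance normalization, `error` equals the sum of the discarded eigenvalues, so keeping the eigenvectors with the largest eigenvalues gives the best linear reconstruction in the squared-error sense.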

![Denoising Autoencoder (DAE) [DAE: 2008]](https://slidetodoc.com/presentation_image/28cb1ae29ca962bd573765de3796d0a8/image-7.jpg)

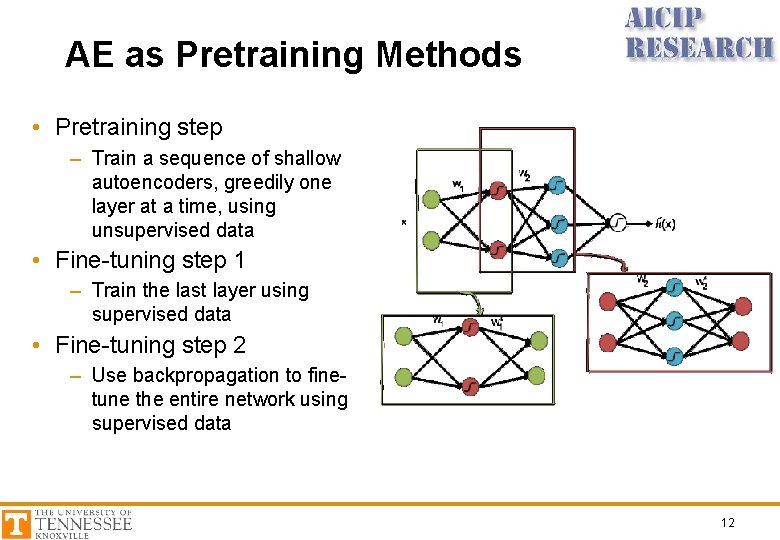

Denoising Autoencoder (DAE) [DAE: 2008]
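A denoising autoencoder is trained to reconstruct the clean input from a corrupted copy of it. The sketch below illustrates that idea under several assumptions that are not on the slide: masking noise (random entries set to zero), a sigmoid encoder with a linear decoder, a squared-error loss, full-batch gradient descent, and toy synthetic data. All names and hyperparameters are illustrative.

```python
import numpy as np

def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))

rng = np.random.default_rng(0)
n, d, m = 256, 6, 4                       # assumed sizes
# Toy clean data with low-dimensional structure (illustrative only).
X = rng.random((n, 2)) @ rng.random((2, d)) / 2.0

W1 = rng.normal(scale=0.1, size=(d, m))   # encoder weights
b1 = np.zeros(m)
W2 = rng.normal(scale=0.1, size=(m, d))   # decoder weights
b2 = np.zeros(d)
lr, p_corrupt = 0.1, 0.3                  # assumed hyperparameters

losses = []
for step in range(300):
    mask = rng.random(X.shape) >= p_corrupt
    X_tilde = X * mask                    # corrupted input: random entries zeroed
    h = sigmoid(X_tilde @ W1 + b1)        # encode the corrupted input
    Z = h @ W2 + b2                       # decode
    # The loss compares the reconstruction with the CLEAN input X.
    losses.append(0.5 * np.mean(np.sum((Z - X) ** 2, axis=1)))

    dZ = (Z - X) / n                      # gradient of the loss w.r.t. Z
    da = (dZ @ W2.T) * h * (1.0 - h)      # backpropagate through the sigmoid
    W2 -= lr * h.T @ dZ
    b2 -= lr * dZ.sum(axis=0)
    W1 -= lr * X_tilde.T @ da
    b1 -= lr * da.sum(axis=0)
```

The only difference from a plain autoencoder is that the encoder sees `X_tilde` while the loss still targets `X`, so the network cannot succeed by simply copying its input.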

![The Two Papers in 2006](https://slidetodoc.com/presentation_image/28cb1ae29ca962bd573765de3796d0a8/image-8.jpg)

The Two Papers in 2006

- [Hinton: 2006a] G. E. Hinton, S. Osindero, Y. W. Teh, "A fast learning algorithm for deep belief nets," Neural Computation, 18(7):1527-1554, 2006.
- [Hinton: 2006b] G. E. Hinton, R. R. Salakhutdinov, "Reducing the dimensionality of data with neural networks," Science, 313:504-507, July 2006.

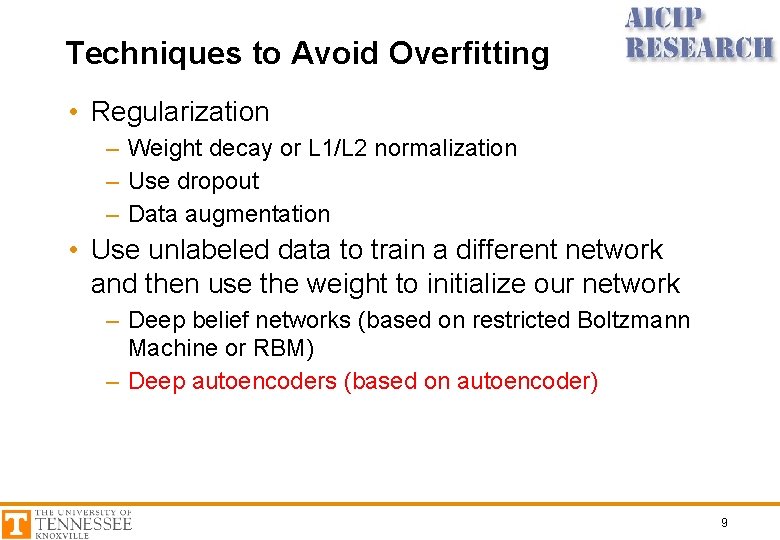

Techniques to Avoid Overfitting

- Regularization
  - Weight decay or L1/L2 regularization
  - Use dropout
  - Data augmentation
- Use unlabeled data to train a different network, then use its weights to initialize our network
  - Deep belief networks (based on the restricted Boltzmann machine, or RBM)
  - Deep autoencoders (based on the autoencoder)
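Two of the regularization techniques listed above are small enough to sketch directly. The function names, default hyperparameters, and the "inverted" dropout scaling are assumptions chosen for illustration, not details taken from the lecture.

```python
import numpy as np

rng = np.random.default_rng(0)

def sgd_step_with_weight_decay(W, grad, lr=0.1, weight_decay=1e-2):
    """One gradient step on loss + (weight_decay / 2) * ||W||^2 (an L2 penalty)."""
    return W - lr * (grad + weight_decay * W)

def dropout(h, p_drop=0.5, training=True):
    """Inverted dropout: zero each unit with probability p_drop, rescale the rest."""
    if not training:
        return h                          # no units are dropped at test time
    mask = rng.random(h.shape) >= p_drop
    return h * mask / (1.0 - p_drop)

W = np.ones(3)
W_new = sgd_step_with_weight_decay(W, grad=np.zeros(3))   # weights shrink toward 0
h_train = dropout(np.ones(1000))                          # entries are 0 or 2
```

With a zero data gradient, the weight-decay step still shrinks every weight by the factor (1 - lr * weight_decay), which is the sense in which the penalty discourages large weights.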

![Hinton: 2006b](https://slidetodoc.com/presentation_image/28cb1ae29ca962bd573765de3796d0a8/image-10.jpg)

[Hinton: 2006b]

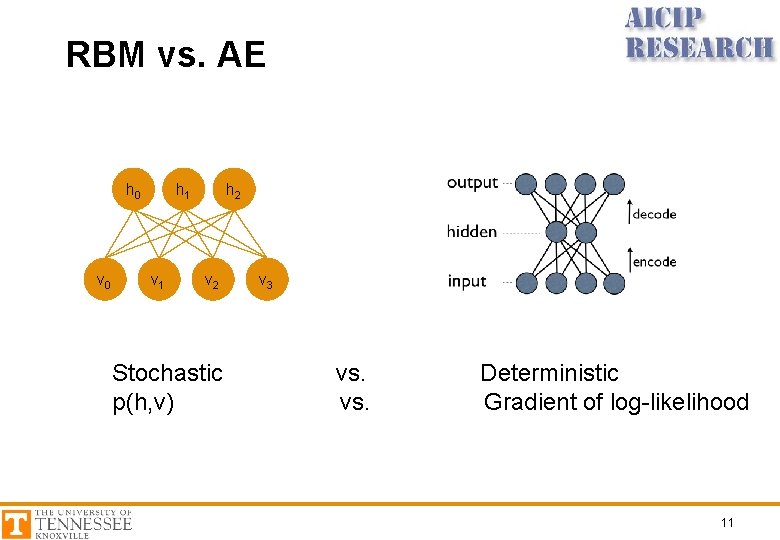

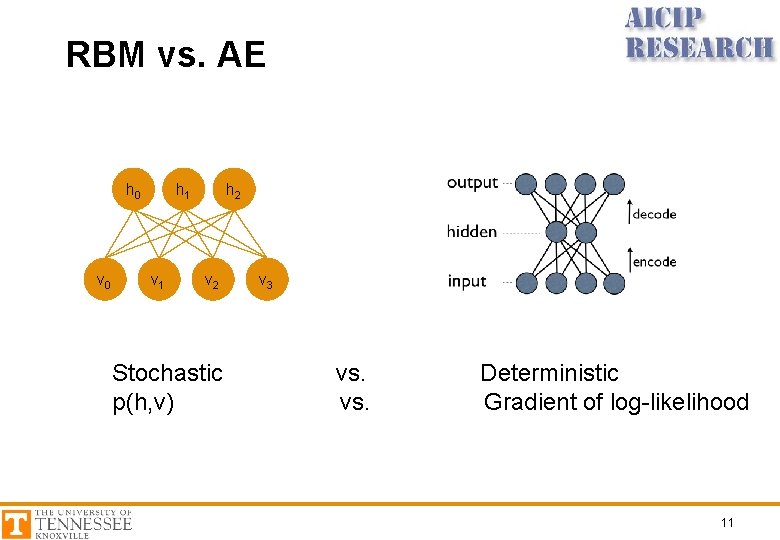

RBM vs. AE

[Figure: network diagram with hidden units h0, h1, h2 and visible units v1, v2, v3]

- RBM: stochastic, p(h, v)
- AE: deterministic
- Gradient of log-likelihood
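The stochastic versus deterministic contrast can be made concrete for a single hidden layer. The sketch assumes binary hidden units with sigmoid conditional probabilities (the common binary RBM setting) and uses made-up weights; it shows only how the hidden values are obtained, not how either model is trained.

```python
import numpy as np

def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))

rng = np.random.default_rng(0)
W = rng.normal(scale=0.5, size=(3, 3))   # hidden x visible weights (illustrative)
b = np.zeros(3)                          # hidden biases
v = np.array([1.0, 0.0, 1.0])            # one visible vector (v1, v2, v3)

p_h = sigmoid(W @ v + b)                 # activation / conditional probability

h_ae = p_h                                       # AE: the same v always gives the same h
h_rbm = (rng.random(3) < p_h).astype(float)      # RBM: h is a binary sample given v
```

Repeating the last line with fresh random numbers can give a different `h_rbm` for the same `v`, whereas `h_ae` never changes.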

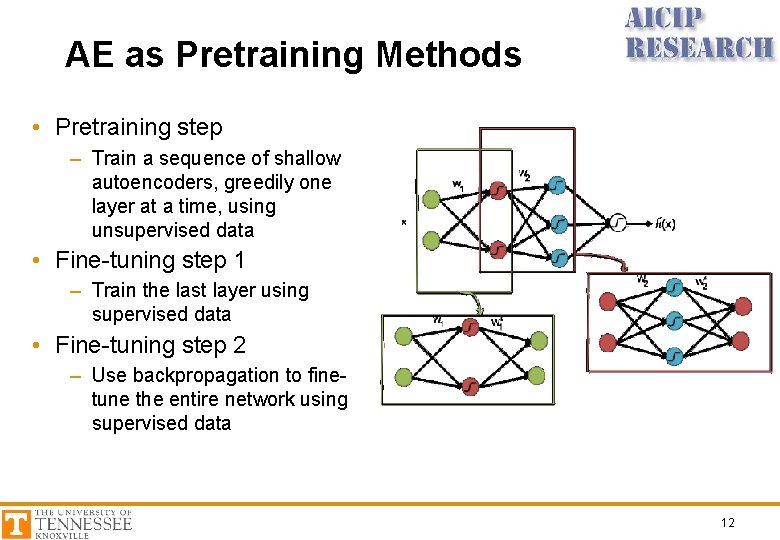

AE as Pretraining Methods

- Pretraining step
  - Train a sequence of shallow autoencoders, greedily one layer at a time, using unlabeled data
- Fine-tuning step 1
  - Train the last layer using labeled data
- Fine-tuning step 2
  - Use backpropagation to fine-tune the entire network using labeled data
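The three steps above can be sketched end to end. This is a rough sketch under stated assumptions, not the procedure of [Hinton: 2006b]: each shallow autoencoder uses a sigmoid encoder and linear decoder with squared error, the supervised task is a toy binary classification with a logistic output unit, and all function names, sizes, and hyperparameters are hypothetical.

```python
import numpy as np

def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))

def train_shallow_autoencoder(X, n_hidden, rng, lr=0.1, epochs=200):
    """Train one autoencoder on X and return its encoder parameters (W, b)."""
    n, d = X.shape
    W1 = rng.normal(scale=0.1, size=(d, n_hidden)); b1 = np.zeros(n_hidden)
    W2 = rng.normal(scale=0.1, size=(n_hidden, d)); b2 = np.zeros(d)
    for _ in range(epochs):
        h = sigmoid(X @ W1 + b1)
        dz = (h @ W2 + b2 - X) / n              # gradient of the squared error
        da = (dz @ W2.T) * h * (1 - h)
        W2 -= lr * h.T @ dz; b2 -= lr * dz.sum(axis=0)
        W1 -= lr * X.T @ da; b1 -= lr * da.sum(axis=0)
    return W1, b1

def pretrain(X_unlabeled, layer_sizes, rng):
    """Pretraining step: greedy, one layer at a time, on unlabeled data."""
    layers, H = [], X_unlabeled
    for n_hidden in layer_sizes:
        W, b = train_shallow_autoencoder(H, n_hidden, rng)
        layers.append((W, b))
        H = sigmoid(H @ W + b)                  # codes feed the next autoencoder
    return layers

def forward(X, layers):
    acts = [X]
    for W, b in layers:
        acts.append(sigmoid(acts[-1] @ W + b))
    return acts

def finetune(X, t, layers, w_out, b_out, lr=0.1, epochs=200, update_hidden=True):
    """update_hidden=False trains only the last layer (step 1);
    update_hidden=True backpropagates through the whole network (step 2)."""
    n = X.shape[0]
    for _ in range(epochs):
        acts = forward(X, layers)
        p = sigmoid(acts[-1] @ w_out + b_out)
        delta = (p - t) / n                     # cross-entropy gradient at the output
        grad_top = np.outer(delta, w_out)       # gradient w.r.t. the top hidden layer
        w_out = w_out - lr * acts[-1].T @ delta
        b_out = b_out - lr * delta.sum()
        if update_hidden:
            for i in range(len(layers) - 1, -1, -1):
                W, b = layers[i]
                da = grad_top * acts[i + 1] * (1 - acts[i + 1])
                grad_top = da @ W.T
                layers[i] = (W - lr * acts[i].T @ da, b - lr * da.sum(axis=0))
    return layers, w_out, b_out

rng = np.random.default_rng(0)
X_unlabeled = rng.random((300, 6))
X_labeled = rng.random((60, 6))
t = (X_labeled[:, 0] > 0.5).astype(float)       # toy labels (illustrative)

layers = pretrain(X_unlabeled, layer_sizes=[4, 3], rng=rng)
w_out, b_out = np.zeros(3), 0.0
layers, w_out, b_out = finetune(X_labeled, t, layers, w_out, b_out, update_hidden=False)
layers, w_out, b_out = finetune(X_labeled, t, layers, w_out, b_out, update_hidden=True)
```

The decoders of the shallow autoencoders are discarded after pretraining; only the encoder weights are kept to initialize the supervised network.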

Recap

- General structure of AE
- Unsupervised
- Generative model?
- The representative power
  - Basic structure of a linear autoencoder
  - Denoising autoencoder (DAE)
- AE in solving overfitting problem