ECE 5984 Introduction to Machine Learning Topics Supervised

- Slides: 26

ECE 5984: Introduction to Machine Learning

Topics:

- Supervised Learning
- Measuring performance
- Nearest Neighbour

Readings: Barber 14 (kNN)

Dhruv Batra, Virginia Tech

TA: Qing Sun

- Ph.D. candidate at the ECE department
- Research work/interest:
  - Diverse outputs based on structured probabilistic models
  - Structured-output prediction

(C) Dhruv Batra

Recap from last time

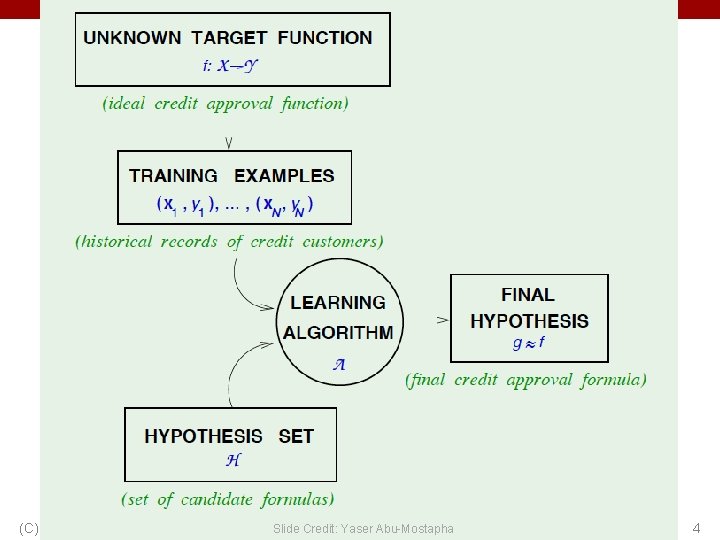

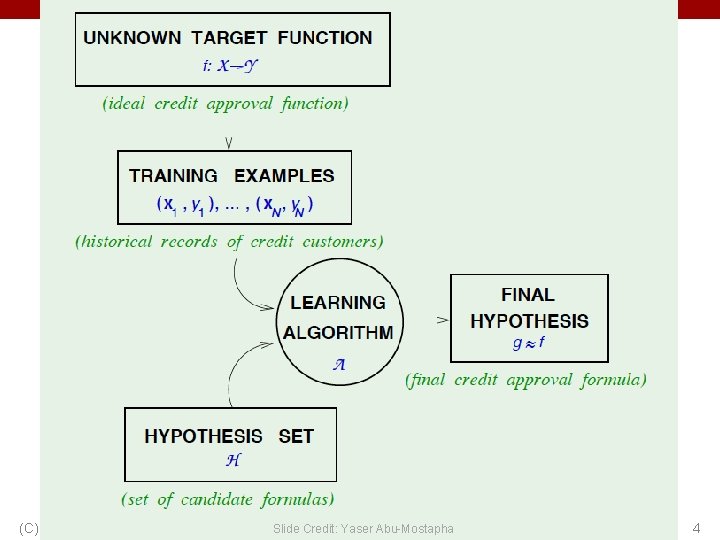

Slide Credit: Yaser Abu-Mostafa

Nearest Neighbour

- Demo 1
  - http://cgm.cs.mcgill.ca/~soss/cs644/projects/perrier/Nearest.html
- Demo 2
  - http://www.cs.technion.ac.il/~rani/LocBoost/

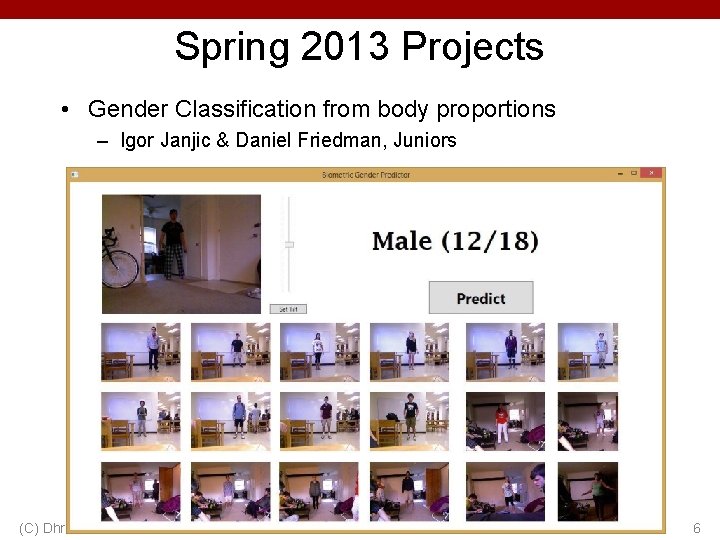

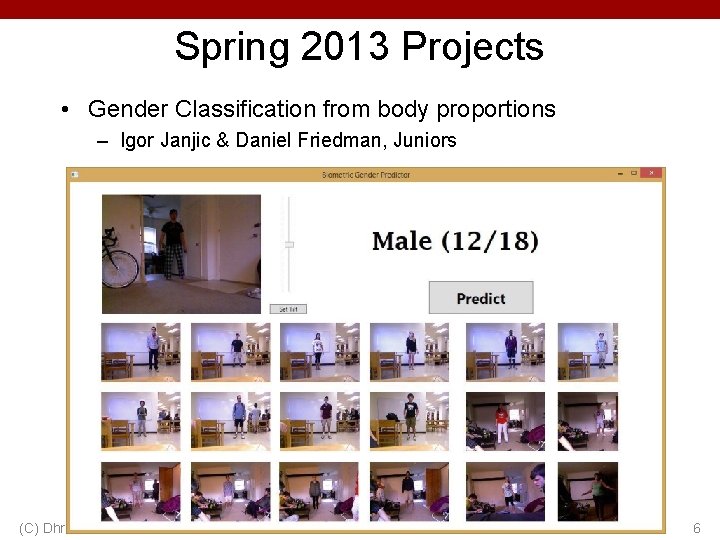

Spring 2013 Projects

- Gender Classification from body proportions
  - Igor Janjic & Daniel Friedman, Juniors

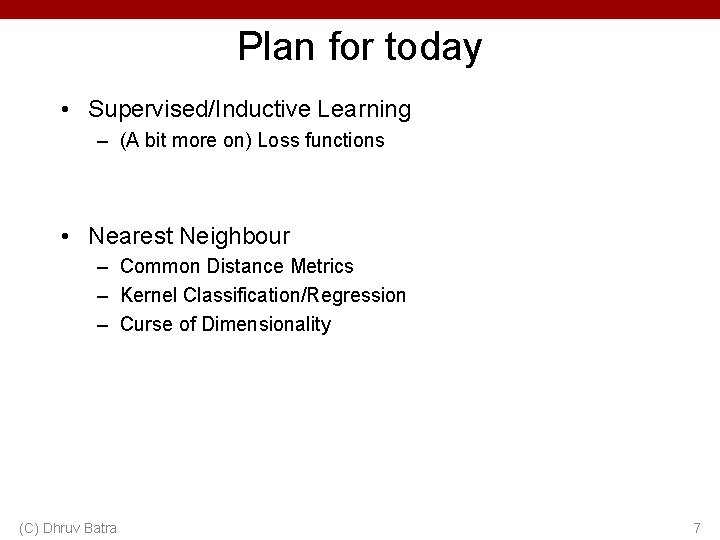

Plan for today

- Supervised/Inductive Learning
  - (A bit more on) Loss functions
- Nearest Neighbour
  - Common Distance Metrics
  - Kernel Classification/Regression
  - Curse of Dimensionality

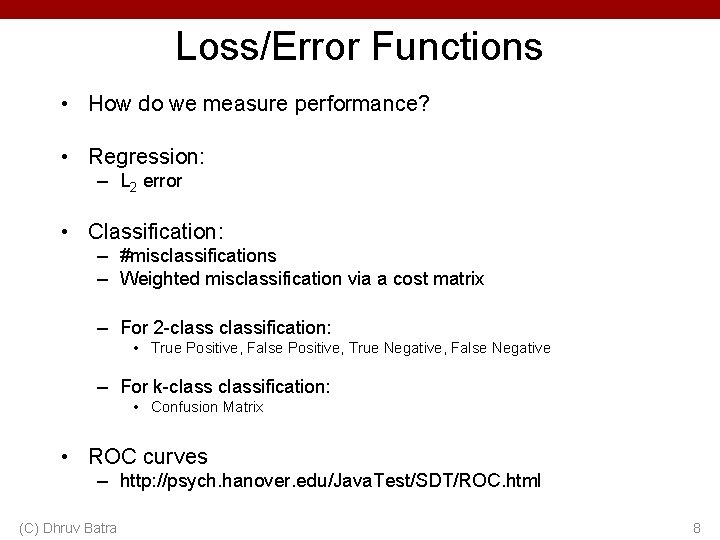

Loss/Error Functions

- How do we measure performance?
- Regression:
  - L2 error
- Classification:
  - Number of misclassifications
  - Weighted misclassification via a cost matrix
  - For 2-class classification:
    - True Positive, False Positive, True Negative, False Negative
  - For k-class classification:
    - Confusion Matrix
- ROC curves
  - http://psych.hanover.edu/JavaTest/SDT/ROC.html
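The measures listed on this slide can be sketched in a few lines of plain Python. This is only an illustration: the function names, the choice to average the L2 error over examples, and the example cost matrix are assumptions, not something the slides specify.

```python
def l2_error(y_true, y_pred):
    """Mean squared (L2) error for regression (averaging is an assumption)."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def confusion_matrix(y_true, y_pred, num_classes):
    """counts[t][p] = number of examples with true class t predicted as class p."""
    counts = [[0] * num_classes for _ in range(num_classes)]
    for t, p in zip(y_true, y_pred):
        counts[t][p] += 1
    return counts


def num_misclassifications(counts):
    """Sum of the off-diagonal entries of the confusion matrix."""
    return sum(c for t, row in enumerate(counts) for p, c in enumerate(row) if t != p)


def weighted_cost(counts, cost):
    """Total cost, where cost[t][p] is the penalty for predicting p when the truth is t."""
    n = len(counts)
    return sum(counts[t][p] * cost[t][p] for t in range(n) for p in range(n))


# Toy 2-class example (labels: 0 = negative, 1 = positive).
y_true = [1, 0, 1, 1, 0, 0]
y_pred = [1, 0, 0, 1, 1, 0]
counts = confusion_matrix(y_true, y_pred, num_classes=2)
tn, fp = counts[0]  # true negatives, false positives
fn, tp = counts[1]  # false negatives, true positives

# Hypothetical cost matrix: a false negative costs 5, a false positive costs 1.
cost = [[0, 1], [5, 0]]
print(tp, fp, tn, fn, num_misclassifications(counts), weighted_cost(counts, cost))
```

With a cost matrix, two classifiers that make the same number of mistakes can still be ranked differently, depending on which kind of mistake each one makes.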

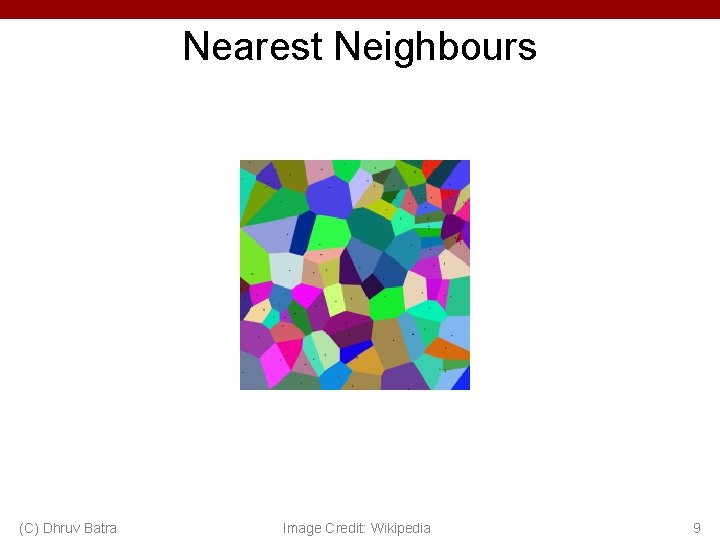

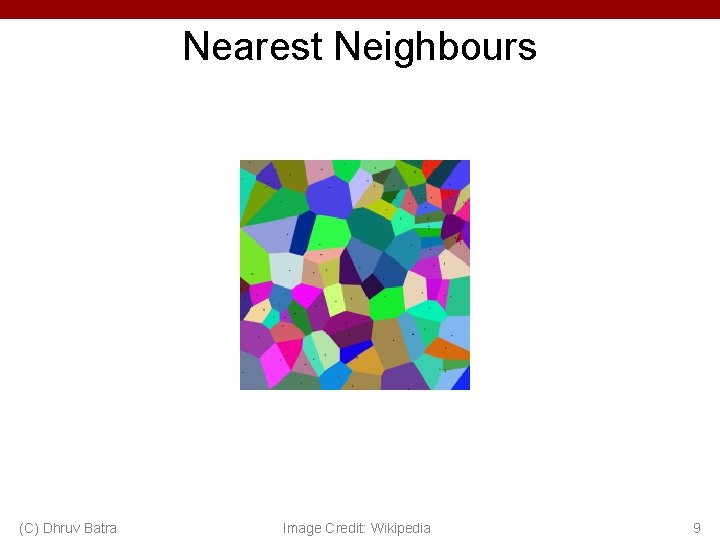

Nearest Neighbours

Image Credit: Wikipedia

Instance/Memory-based Learning

Four things make a memory-based learner:

- A distance metric
- How many nearby neighbors to look at?
- A weighting function (optional)
- How to fit with the local points?

Slide Credit: Carlos Guestrin

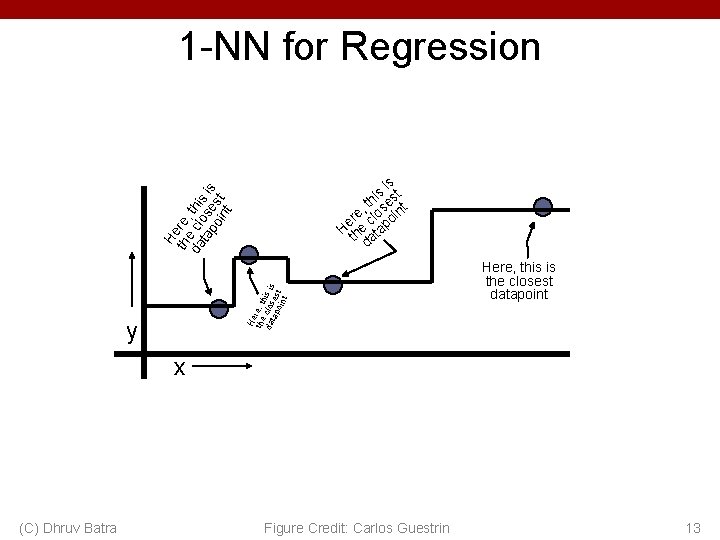

1-Nearest Neighbour

Four things make a memory-based learner:

- A distance metric
  - Euclidean (and others)
- How many nearby neighbors to look at?
  - 1
- A weighting function (optional)
  - unused
- How to fit with the local points?
  - Just predict the same output as the nearest neighbour.

Slide Credit: Carlos Guestrin

k-Nearest Neighbour

Four things make a memory-based learner:

- A distance metric
  - Euclidean (and others)
- How many nearby neighbors to look at?
  - k
- A weighting function (optional)
  - unused
- How to fit with the local points?
  - Just predict the average output among the nearest neighbours.

Slide Credit: Carlos Guestrin
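The four choices above translate almost directly into code. The following is a minimal brute-force sketch, assuming Euclidean distance and small in-memory data; the function names are illustrative, and the majority vote used for classification is an assumption (the slide only mentions averaging the outputs).

```python
import math
from collections import Counter


def euclidean(a, b):
    """Euclidean distance between two equal-length tuples."""
    return math.sqrt(sum((ai - bi) ** 2 for ai, bi in zip(a, b)))


def k_nearest(train_x, query, k, dist=euclidean):
    """Indices of the k training points closest to the query (brute force)."""
    order = sorted(range(len(train_x)), key=lambda i: dist(train_x[i], query))
    return order[:k]


def knn_regress(train_x, train_y, query, k=1):
    """Predict the unweighted average output of the k nearest neighbours."""
    idx = k_nearest(train_x, query, k)
    return sum(train_y[i] for i in idx) / len(idx)


def knn_classify(train_x, train_y, query, k=1):
    """Predict the most common label among the k nearest neighbours (assumed rule)."""
    idx = k_nearest(train_x, query, k)
    return Counter(train_y[i] for i in idx).most_common(1)[0][0]


train_x = [(0.0,), (1.0,), (2.0,), (3.0,)]
train_y = [0.0, 1.0, 4.0, 9.0]
labels = ["a", "a", "b", "b"]

print(knn_regress(train_x, train_y, (1.2,), k=1))  # output of the single closest point
print(knn_regress(train_x, train_y, (1.2,), k=3))  # average over the three closest points
print(knn_classify(train_x, labels, (1.2,), k=3))
```

Setting `k=1` recovers the 1-nearest-neighbour rule: the prediction is simply the stored output of the closest training point.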

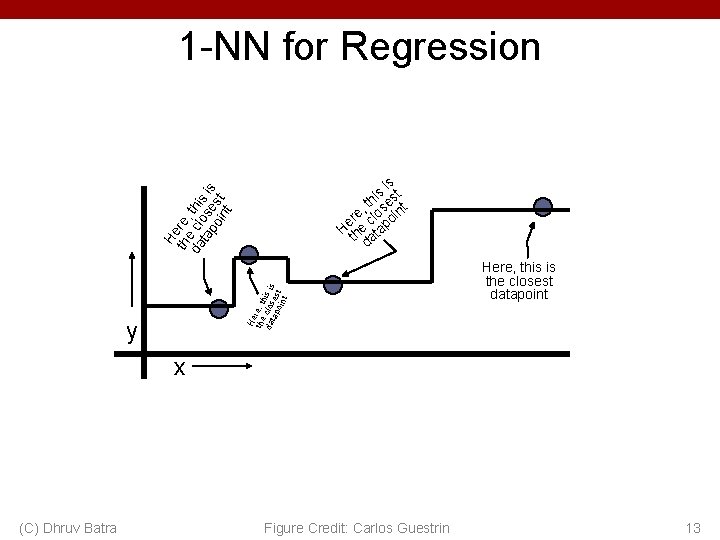

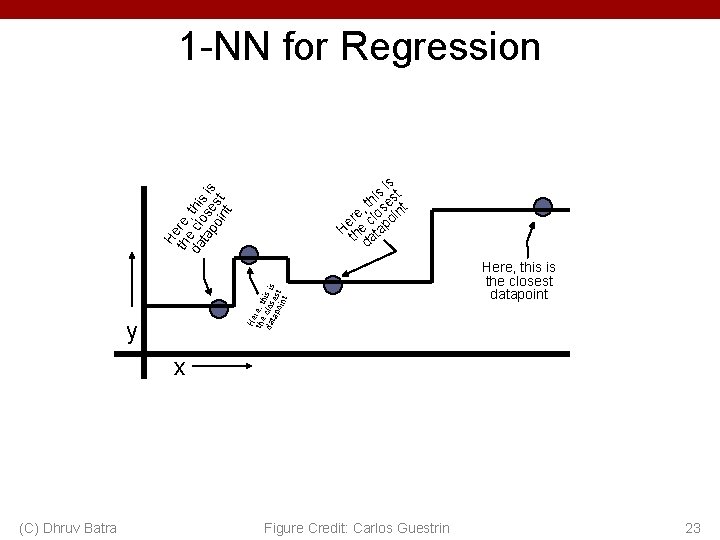

1-NN for Regression

[Figure: plot of y against x; each region of the x-axis is annotated "Here, this is the closest datapoint".]

Figure Credit: Carlos Guestrin

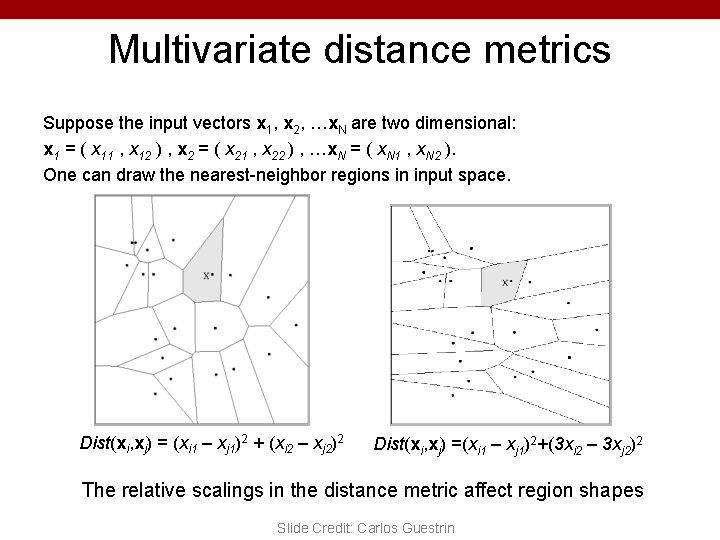

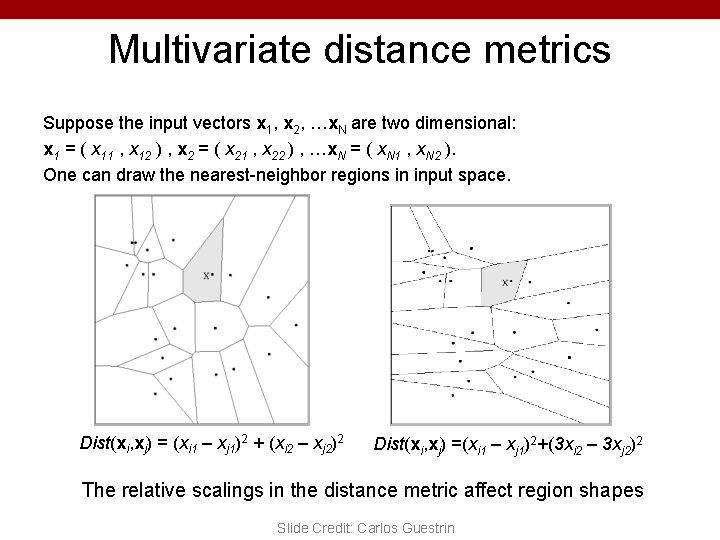

Multivariate distance metrics

Suppose the input vectors $x_1, x_2, \ldots, x_N$ are two-dimensional: $x_1 = (x_{11}, x_{12})$, $x_2 = (x_{21}, x_{22})$, ..., $x_N = (x_{N1}, x_{N2})$. One can draw the nearest-neighbor regions in input space.

- $\mathrm{Dist}(x_i, x_j) = (x_{i1} - x_{j1})^2 + (x_{i2} - x_{j2})^2$
- $\mathrm{Dist}(x_i, x_j) = (x_{i1} - x_{j1})^2 + (3x_{i2} - 3x_{j2})^2$

The relative scalings in the distance metric affect region shapes.

Slide Credit: Carlos Guestrin
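A small example makes the effect of the scaling concrete. The two functions below implement the two distances from this slide; the data points and the helper name `nearest` are made up for illustration.

```python
def dist_unscaled(a, b):
    """(a1 - b1)^2 + (a2 - b2)^2, the first distance on the slide."""
    return (a[0] - b[0]) ** 2 + (a[1] - b[1]) ** 2


def dist_scaled(a, b):
    """(a1 - b1)^2 + (3*a2 - 3*b2)^2, the second distance on the slide."""
    return (a[0] - b[0]) ** 2 + (3 * a[1] - 3 * b[1]) ** 2


def nearest(points, query, dist):
    """Return the stored point with the smallest distance to the query."""
    return min(points, key=lambda p: dist(p, query))


points = [(2.0, 0.0), (0.0, 1.0)]  # hypothetical training inputs
query = (0.0, 0.0)

print(nearest(points, query, dist_unscaled))  # (0.0, 1.0): distances are 4 vs 1
print(nearest(points, query, dist_scaled))    # (2.0, 0.0): distances are 4 vs 9
```

The same query gets a different nearest neighbour once the second coordinate is stretched by a factor of 3, which is why the nearest-neighbor regions change shape.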

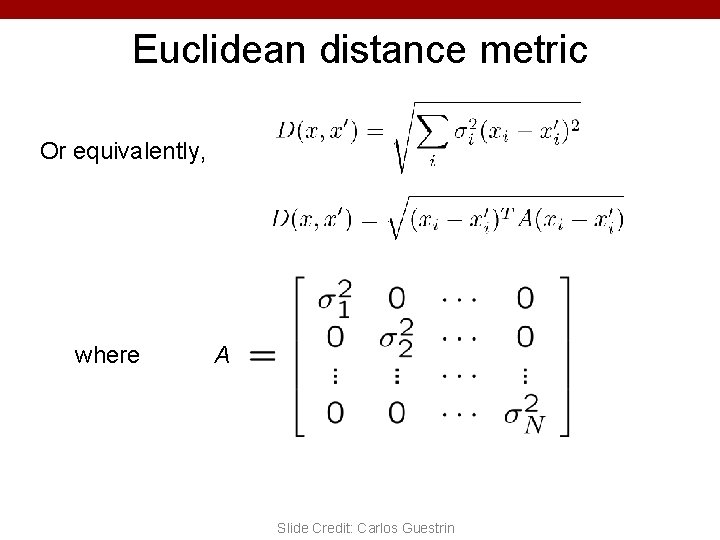

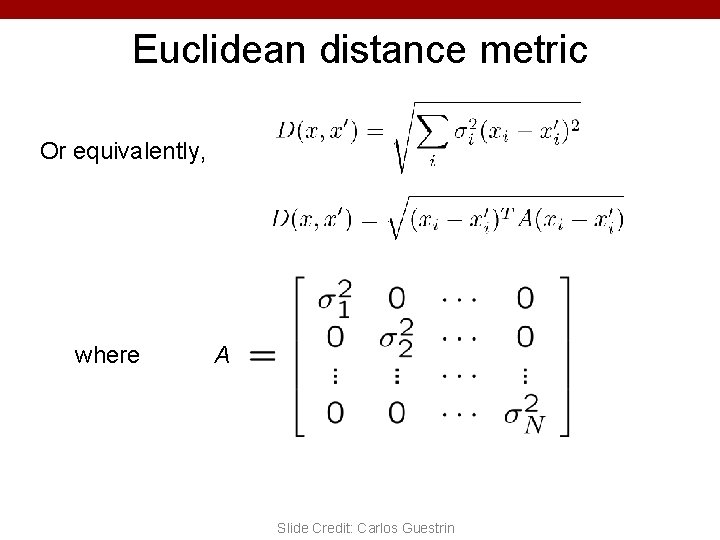

Euclidean distance metric

Or equivalently, … where A …

Slide Credit: Carlos Guestrin
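The equations on this slide did not survive as text. As a hedged reconstruction, the standard scaled Euclidean distance is usually written as below; the per-dimension scale factors $\sigma_d$ and the dimension count $D$ are notation assumed here, not recovered from the slide.

$$
D(x_i, x_j) = \sqrt{\sum_{d=1}^{D} \sigma_d^2 \,(x_{id} - x_{jd})^2}
\quad\text{or equivalently}\quad
D(x_i, x_j) = \sqrt{(x_i - x_j)^\top A \,(x_i - x_j)},
\qquad
A = \mathrm{diag}(\sigma_1^2, \ldots, \sigma_D^2).
$$

Under this form, taking $D = 2$, $\sigma_1 = 1$ and $\sigma_2 = 3$ gives $D(x_i, x_j)^2 = (x_{i1} - x_{j1})^2 + (3x_{i2} - 3x_{j2})^2$, the scaled distance from the multivariate example. A non-diagonal $A$ corresponds to the Mahalanobis case.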

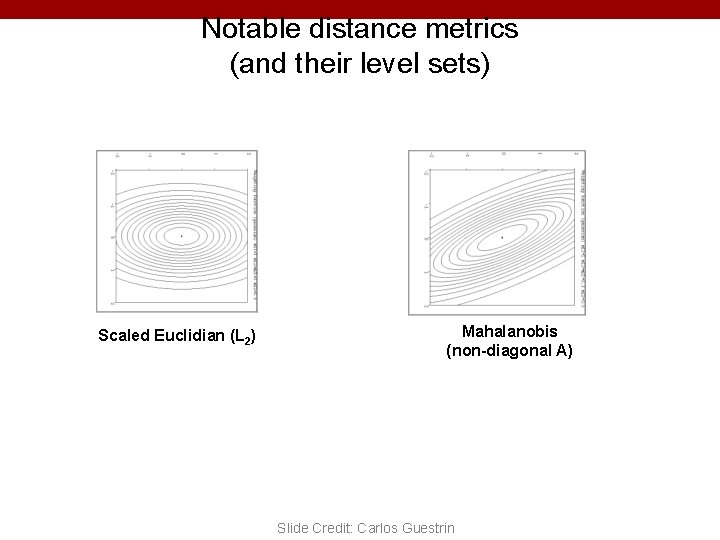

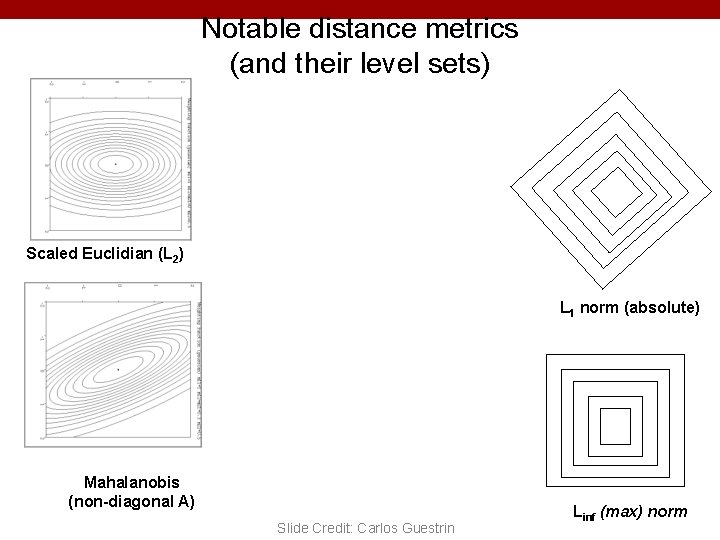

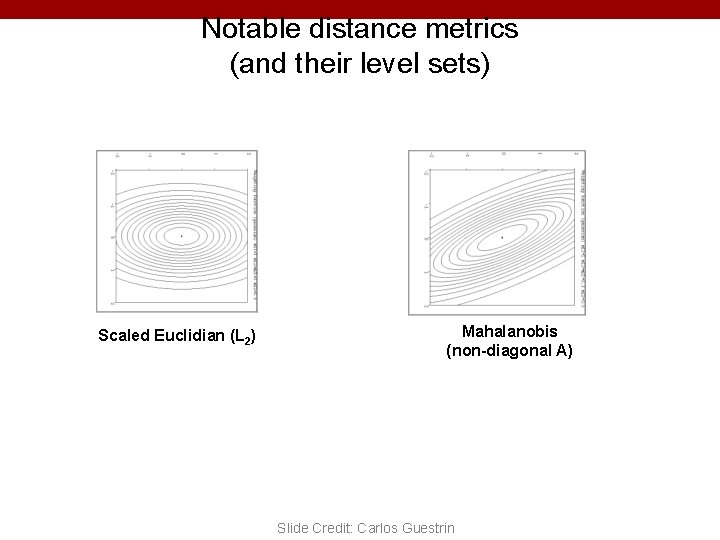

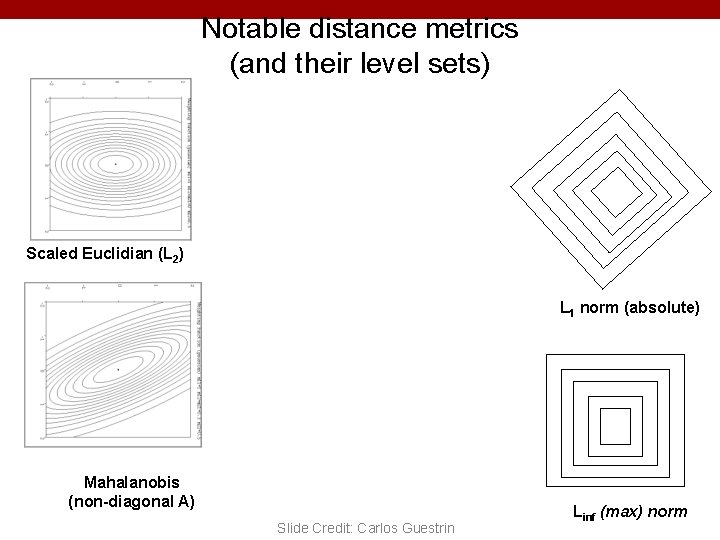

Notable distance metrics (and their level sets)

- Scaled Euclidean (L2)
- Mahalanobis (non-diagonal A)

Slide Credit: Carlos Guestrin

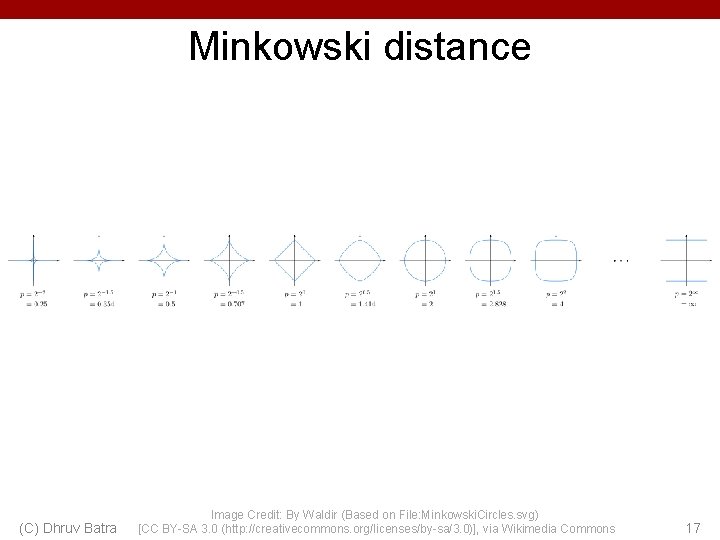

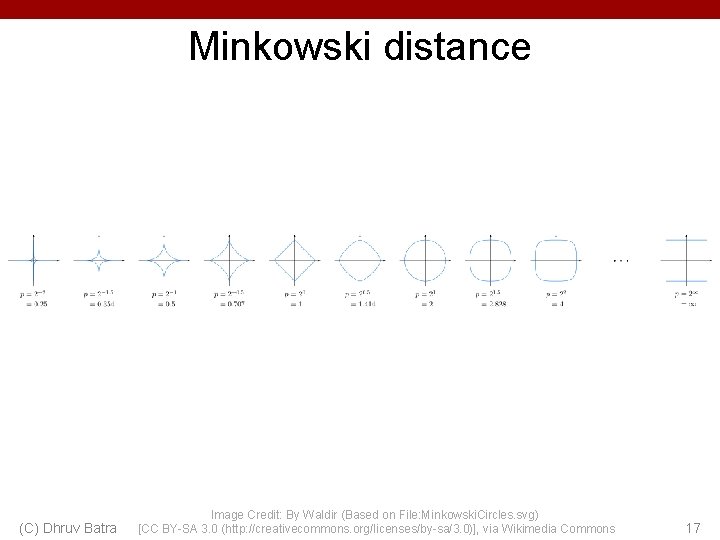

Minkowski distance

Image Credit: By Waldir (based on File:MinkowskiCircles.svg) [CC BY-SA 3.0 (http://creativecommons.org/licenses/by-sa/3.0)], via Wikimedia Commons

Notable distance metrics (and their level sets)

- Scaled Euclidean (L2)
- L1 norm (absolute)
- Mahalanobis (non-diagonal A)
- L-inf (max) norm

Slide Credit: Carlos Guestrin
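The metrics named on these slides can be compared on a single pair of points. The sketch below uses only the standard library; the function names and the example matrices `A` are assumptions chosen for illustration.

```python
import math


def minkowski(a, b, p):
    """Minkowski (L_p) distance; p=1 is L1, p=2 is Euclidean, p=math.inf is the max norm."""
    diffs = [abs(ai - bi) for ai, bi in zip(a, b)]
    if math.isinf(p):
        return max(diffs)
    return sum(d ** p for d in diffs) ** (1.0 / p)


def quadratic_form_distance(a, b, A):
    """sqrt((a - b)^T A (a - b)) for a symmetric positive-definite matrix A.

    A diagonal A gives a scaled Euclidean distance; a non-diagonal A gives a
    Mahalanobis-style distance.
    """
    d = [ai - bi for ai, bi in zip(a, b)]
    n = len(d)
    return math.sqrt(sum(d[r] * A[r][c] * d[c] for r in range(n) for c in range(n)))


a, b = (0.0, 0.0), (3.0, 4.0)
print(minkowski(a, b, 1))         # L1: |3| + |4|
print(minkowski(a, b, 2))         # L2: sqrt(9 + 16)
print(minkowski(a, b, math.inf))  # L-inf: max(|3|, |4|)

A_diag = [[1.0, 0.0], [0.0, 9.0]]  # hypothetical scaling: second axis stretched by 3
A_full = [[2.0, 1.0], [1.0, 2.0]]  # hypothetical non-diagonal, positive-definite A
print(quadratic_form_distance(a, b, A_diag))
print(quadratic_form_distance(a, b, A_full))
```

The level sets mentioned in the slide titles are the sets of points at a fixed distance from the origin: a diamond for L1, a circle or axis-aligned ellipse for (scaled) L2, a rotated ellipse for a non-diagonal A, and a square for the max norm.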

Parametric vs Non-Parametric Models

- Does the capacity (size of hypothesis class) grow with size of training data?
  - Yes = Non-Parametric Models
  - No = Parametric Models
- Example
  - http://www.theparticle.com/applets/ml/nearest_neighbor/

Weighted k-NNs

- Neighbors are not all the same

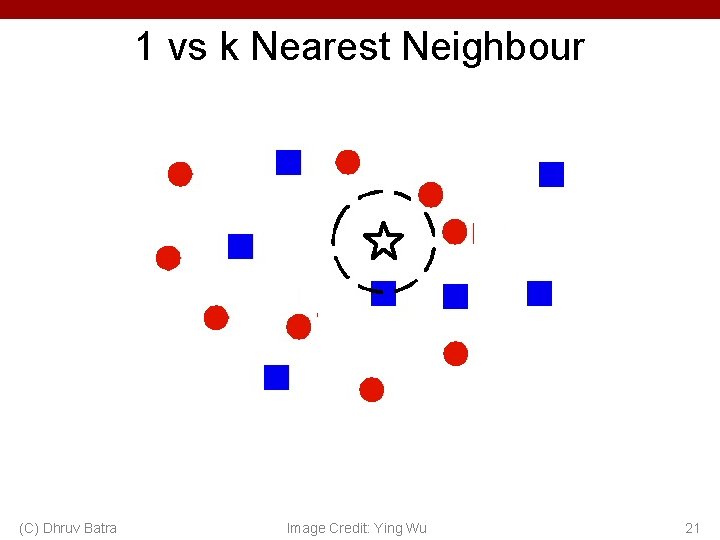

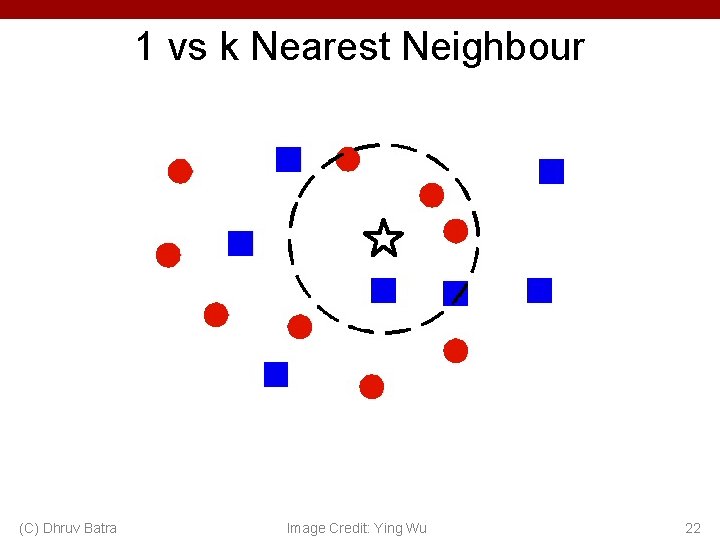

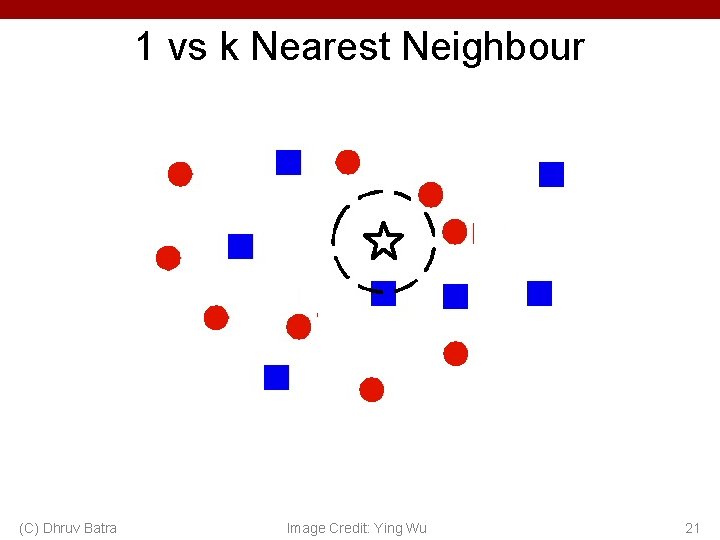

1 vs k Nearest Neighbour

Image Credit: Ying Wu

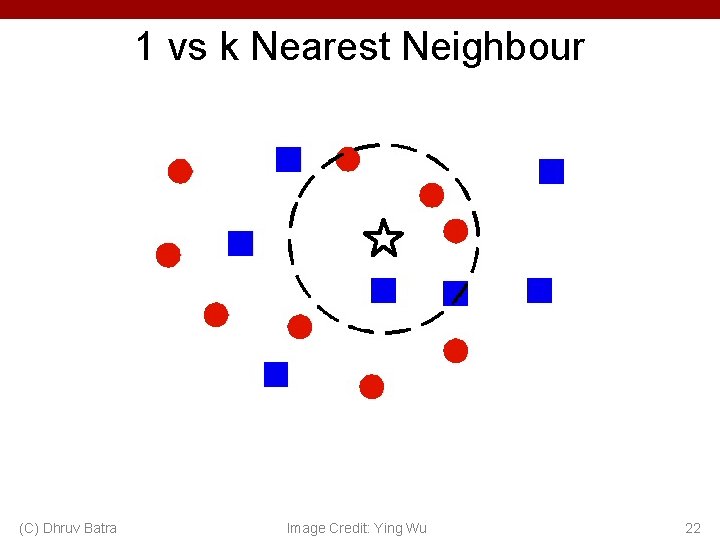

1 vs k Nearest Neighbour

Image Credit: Ying Wu

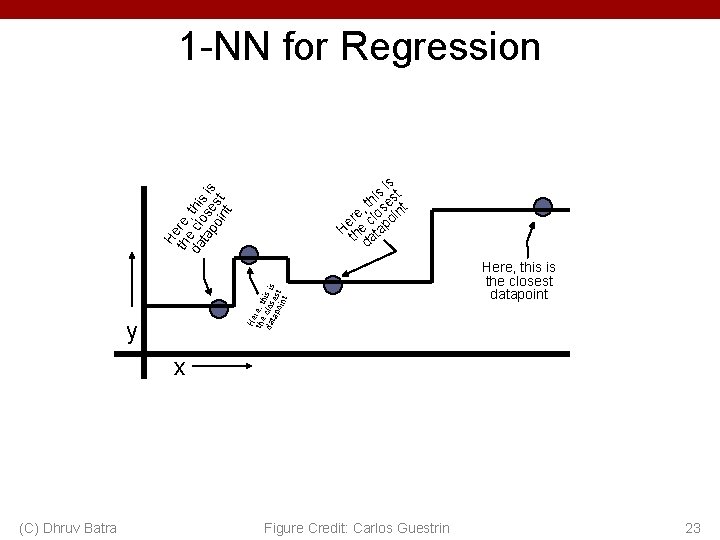

1-NN for Regression

[Figure: plot of y against x; each region of the x-axis is annotated "Here, this is the closest datapoint".]

Figure Credit: Carlos Guestrin

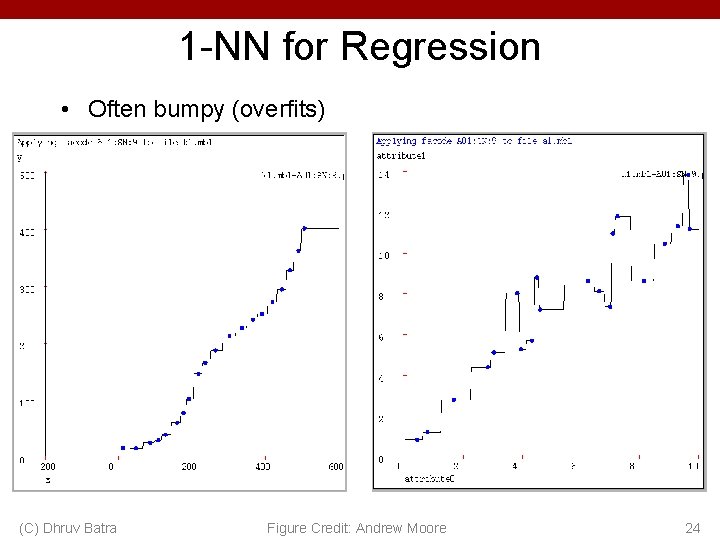

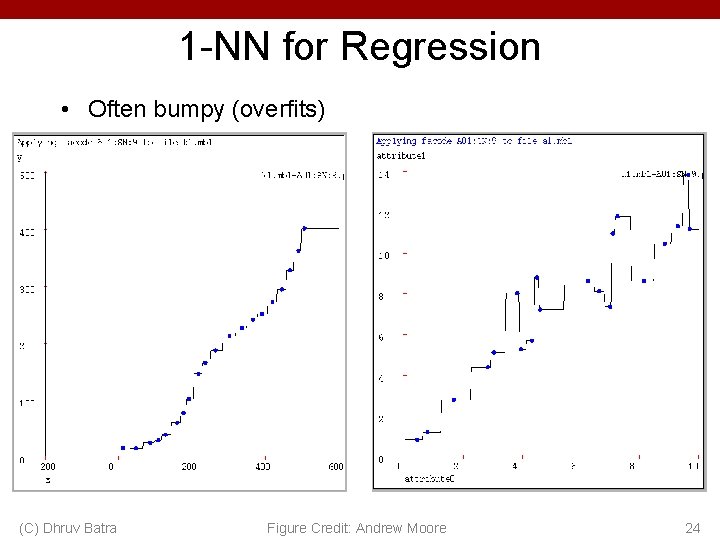

1-NN for Regression

- Often bumpy (overfits)

Figure Credit: Andrew Moore

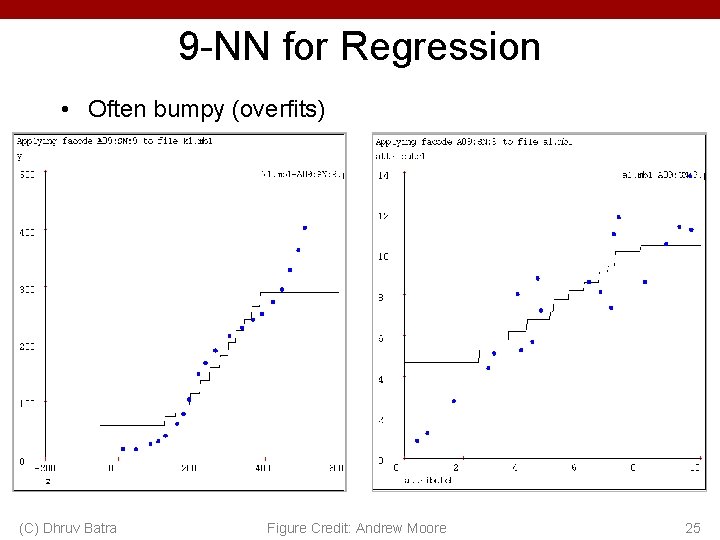

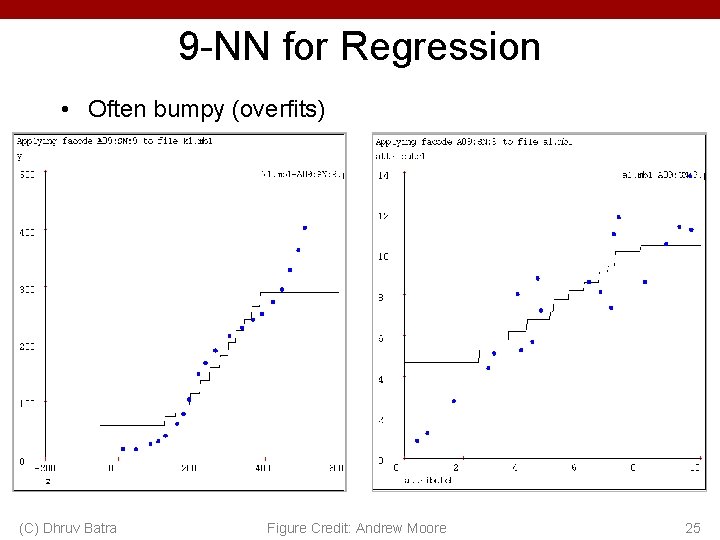

9-NN for Regression

- Often bumpy (overfits)

Figure Credit: Andrew Moore

Kernel Regression/Classification

Four things make a memory-based learner:

- A distance metric
  - Euclidean (and others)
- How many nearby neighbors to look at?
  - All of them
- A weighting function (optional)
  - $w_i = \exp(-d(x_i, \text{query})^2 / \sigma^2)$
  - Points near the query are weighted strongly, far points weakly. The $\sigma$ parameter is the kernel width. Very important.
- How to fit with the local points?
  - Predict the weighted average of the outputs: $\text{predict} = \sum_i w_i y_i / \sum_i w_i$

Slide Credit: Carlos Guestrin
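The weighting and prediction formulas on this slide fit in a few lines. This is a minimal sketch assuming one-dimensional inputs, so that the distance is just the absolute difference; the function name and the toy data are illustrative.

```python
import math


def kernel_regress(train_x, train_y, query, sigma):
    """Weighted average of all training outputs with w_i = exp(-d(x_i, query)^2 / sigma^2).

    Assumes scalar inputs, so d(x_i, query) = |x_i - query|. If the query is very far
    from every training point relative to sigma, the weights can underflow to zero.
    """
    weights = [math.exp(-((x - query) ** 2) / sigma ** 2) for x in train_x]
    return sum(w * y for w, y in zip(weights, train_y)) / sum(weights)


train_x = [0.0, 1.0, 2.0]
train_y = [0.0, 1.0, 4.0]

narrow = kernel_regress(train_x, train_y, query=1.0, sigma=0.1)
wide = kernel_regress(train_x, train_y, query=1.0, sigma=100.0)
print(narrow)  # close to the output of the nearest point
print(wide)    # close to the plain mean of all outputs
```

The two calls show why the kernel width matters: a very small $\sigma$ behaves like 1-nearest neighbour, while a very large $\sigma$ weights every point almost equally and approaches the global average.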