ECE 5424 Introduction to Machine Learning – Topics: Supervised Learning

- Slides: 20

ECE 5424: Introduction to Machine Learning Topics: – Supervised Learning – Measuring performance – Nearest Neighbor – Distance Metrics Readings: Barber 14 (k-NN) Stefan Lee, Virginia Tech

Administrative • Course add period is over – If not enrolled, please leave. • Virginia law apparently. (C) Dhruv Batra

HW 0 • HW 0 is graded – Average: 81% – Median: 85% – Max: 100% – Min: 35% • The lower your score, the harder you should expect to work.

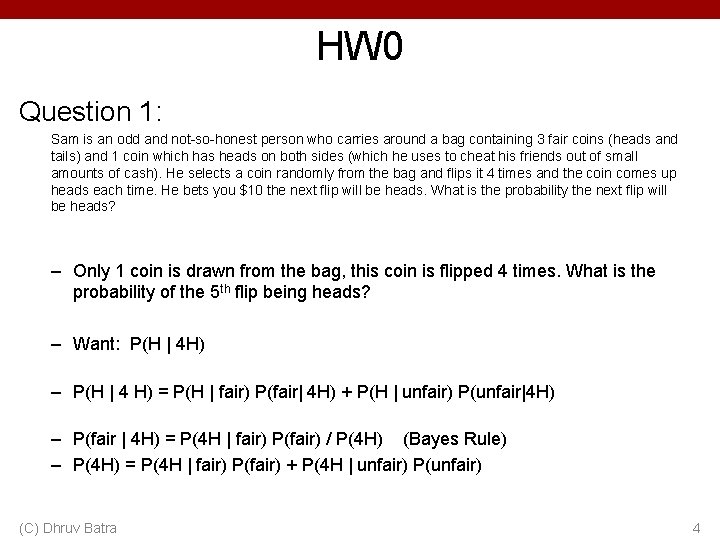

HW 0 Question 1: Sam is an odd and not-so-honest person who carries around a bag containing 3 fair coins (heads and tails) and 1 coin which has heads on both sides (which he uses to cheat his friends out of small amounts of cash). He selects a coin randomly from the bag and flips it 4 times, and the coin comes up heads each time. He bets you $10 that the next flip will be heads. What is the probability the next flip will be heads? – Only 1 coin is drawn from the bag, and this coin is flipped 4 times. What is the probability of the 5th flip being heads? – Want: P(H | 4H) – P(H | 4H) = P(H | fair) P(fair | 4H) + P(H | unfair) P(unfair | 4H) – P(fair | 4H) = P(4H | fair) P(fair) / P(4H) (Bayes rule) – P(4H) = P(4H | fair) P(fair) + P(4H | unfair) P(unfair)
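The slide sets up the computation but stops before plugging in numbers. A minimal Python sketch that finishes it with exact fractions, assuming P(fair) = 3/4 and P(unfair) = 1/4 from the bag contents, P(4H | fair) = (1/2)^4 and P(4H | unfair) = 1; the function name `prob_next_heads` and its parameters are illustrative, not from the course:

```python
from fractions import Fraction


def prob_next_heads(n_fair=3, n_unfair=1, n_heads_seen=4):
    """P(next flip is H | n_heads_seen heads in a row), one coin drawn from the bag."""
    total = n_fair + n_unfair
    p_fair = Fraction(n_fair, total)
    p_unfair = Fraction(n_unfair, total)
    # Likelihood of the observed run of heads under each coin type
    lik_fair = Fraction(1, 2) ** n_heads_seen
    lik_unfair = Fraction(1)
    # Total probability of the evidence, P(4H)
    p_evidence = lik_fair * p_fair + lik_unfair * p_unfair
    # Posteriors over which coin we hold (Bayes rule)
    post_fair = lik_fair * p_fair / p_evidence
    post_unfair = lik_unfair * p_unfair / p_evidence
    # Marginalize the next flip over the two coin types
    return Fraction(1, 2) * post_fair + Fraction(1) * post_unfair


print(prob_next_heads())  # 35/38
```

By hand: P(4H) = 3/64 + 16/64 = 19/64, so P(fair | 4H) = 3/19 and P(unfair | 4H) = 16/19, giving P(H | 4H) = (1/2)(3/19) + 16/19 = 35/38 ≈ 0.92.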

Recap from last time

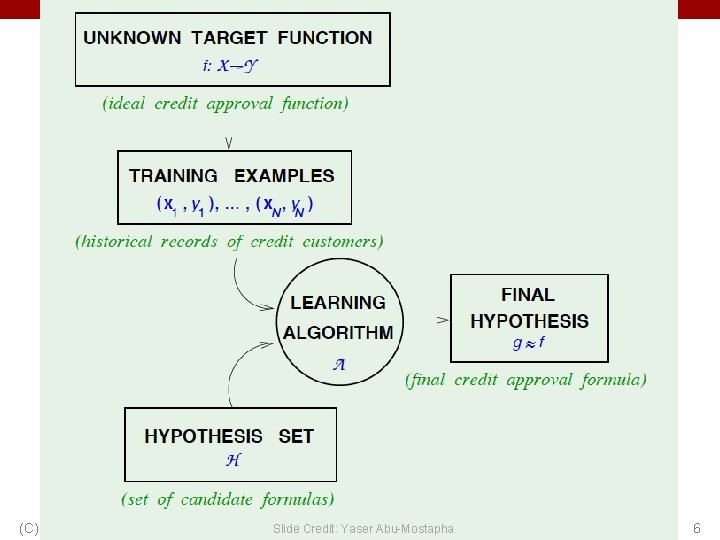

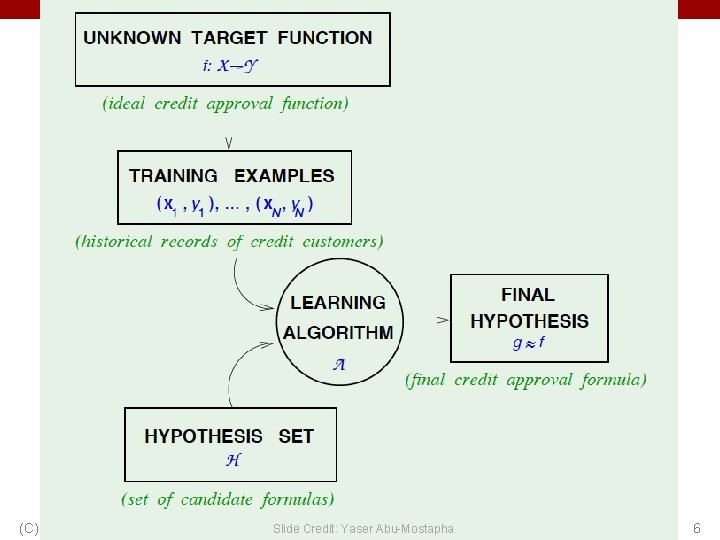

Slide Credit: Yaser Abu-Mostafa

Nearest Neighbor • Demo – http://www.cs.technion.ac.il/~rani/LocBoost/

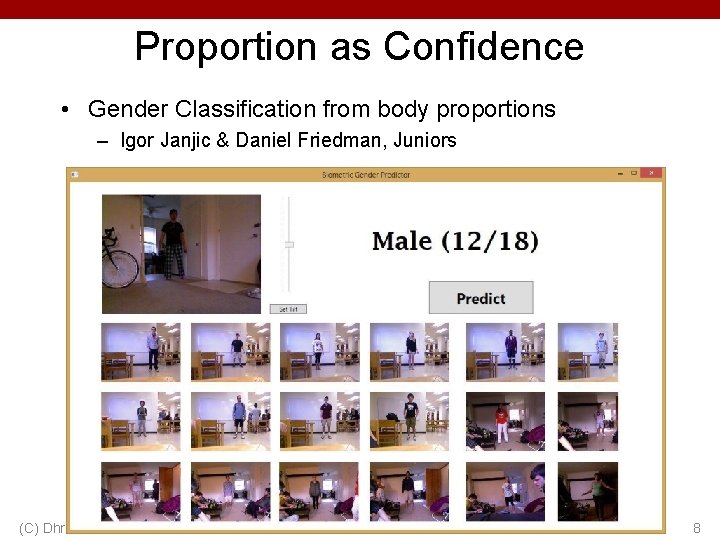

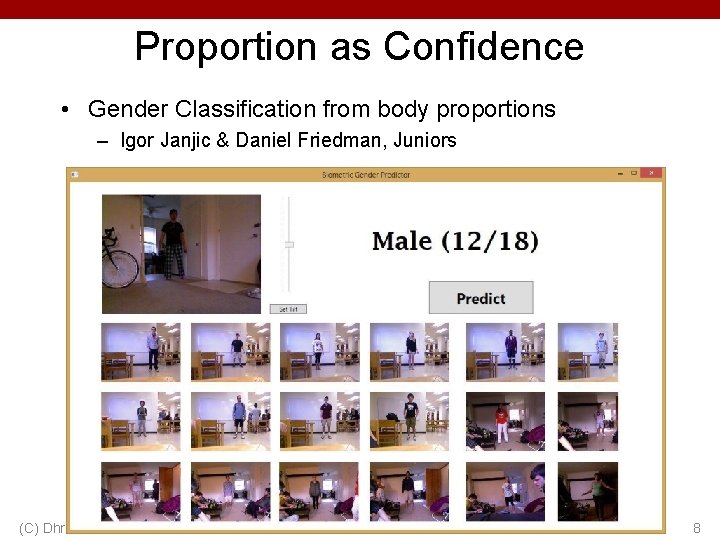

Proportion as Confidence • Gender Classification from body proportions – Igor Janjic & Daniel Friedman, Juniors

Plan for today • Supervised/Inductive Learning – (A bit more on) Loss functions • Nearest Neighbor – Common Distance Metrics – Kernel Classification/Regression – Curse of Dimensionality

Loss/Error Functions • How do we measure performance? • Regression: – L2 error • Classification: – #misclassifications – Weighted misclassification via a cost matrix – For 2-class classification: • True Positive, False Positive, True Negative, False Negative – For k-class classification: • Confusion Matrix • ROC curves – http://psych.hanover.edu/JavaTest/SDT/ROC.html
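A small, dependency-free Python sketch of the performance measures listed above. It assumes integer class labels 0..k-1 (with 1 as the positive class in the binary case), takes "L2 error" to mean the sum of squared errors, and builds ROC points by sweeping a threshold over classifier scores. All function names, the example labels, and the cost matrix are hypothetical:

```python
def l2_error(y_true, y_pred):
    """Regression loss: sum of squared differences."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred))


def num_misclassified(y_true, y_pred):
    """Classification loss: number of wrong predictions."""
    return sum(t != p for t, p in zip(y_true, y_pred))


def weighted_misclassification(y_true, y_pred, cost):
    """cost[i][j] is the penalty for predicting class j when the true class is i."""
    return sum(cost[t][p] for t, p in zip(y_true, y_pred))


def binary_counts(y_true, y_pred):
    """(TP, FP, TN, FN) for labels in {0, 1}, treating 1 as positive."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    return tp, fp, tn, fn


def confusion_matrix(y_true, y_pred, k):
    """C[i][j] counts examples whose true class is i and predicted class is j."""
    C = [[0] * k for _ in range(k)]
    for t, p in zip(y_true, y_pred):
        C[t][p] += 1
    return C


def roc_points(y_true, scores):
    """(FPR, TPR) pairs from predicting positive when score >= threshold,
    sweeping the threshold over every distinct score from high to low."""
    n_pos = sum(y_true)
    n_neg = len(y_true) - n_pos
    points = [(0.0, 0.0)]
    for thr in sorted(set(scores), reverse=True):
        pred = [1 if s >= thr else 0 for s in scores]
        tp, fp, _, _ = binary_counts(y_true, pred)
        points.append((fp / n_neg, tp / n_pos))
    return points


y_true = [0, 1, 1, 0, 1]
y_pred = [0, 1, 0, 1, 1]
print(num_misclassified(y_true, y_pred))       # 2
print(binary_counts(y_true, y_pred))           # (2, 1, 1, 1)
print(confusion_matrix(y_true, y_pred, k=2))   # [[1, 1], [1, 2]]
# Hypothetical costs: a missed positive costs 5, a false alarm costs 1
print(weighted_misclassification(y_true, y_pred, [[0, 1], [5, 0]]))  # 6
```

The cost matrix makes the asymmetry explicit: the same two mistakes cost 6 here instead of 2, because missing a positive was declared five times worse than a false alarm.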

Nearest Neighbors Image Credit: Wikipedia

Instance/Memory-based Learning Four things make a memory-based learner: • A distance metric • How many nearby neighbors to look at? • A weighting function (optional) • How to fit with the local points? Slide Credit: Carlos Guestrin
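One way to make the four ingredients concrete is to pass each one in as a parameter. This is a sketch under stated assumptions: Euclidean distance via Python's `math.dist` (3.8+), a weighted average as the default local fit, and a Gaussian weight `exp(-d**2)` chosen purely for illustration; `memory_based_predict` and `weighted_mean` are hypothetical names:

```python
import math
from typing import Callable, List, Optional, Sequence

Point = Sequence[float]


def weighted_mean(ys: List[float], ws: List[float]) -> float:
    """Local fit used here: weighted average of the neighbors' outputs."""
    return sum(w * y for y, w in zip(ys, ws)) / sum(ws)


def memory_based_predict(
    X_train: Sequence[Point],
    y_train: Sequence[float],
    x_query: Point,
    distance: Callable[[Point, Point], float],
    k: int,
    weight: Optional[Callable[[float], float]] = None,
    fit: Callable[[List[float], List[float]], float] = weighted_mean,
) -> float:
    # (1) Distance metric: score every stored example against the query.
    scored = sorted(
        ((distance(x, x_query), y) for x, y in zip(X_train, y_train)),
        key=lambda pair: pair[0],
    )
    # (2) How many neighbors: keep only the k closest.
    neighbors = scored[:k]
    # (3) Optional weighting function of distance; uniform weights if absent.
    ws = [1.0 if weight is None else weight(d) for d, _ in neighbors]
    # (4) Local fit: combine the neighbors' outputs.
    return fit([y for _, y in neighbors], ws)


X = [(0.0,), (1.0,), (2.0,), (3.0,)]
y = [0.0, 1.0, 4.0, 9.0]
print(memory_based_predict(X, y, (1.4,), math.dist, k=2))  # 2.5
# Hypothetical Gaussian weighting: the closer neighbor counts more.
print(memory_based_predict(X, y, (1.4,), math.dist, k=2,
                           weight=lambda d: math.exp(-d ** 2)))
```

Setting k=1 with uniform weights reduces this to 1-NN, and uniform weights with a larger k give plain k-NN averaging.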

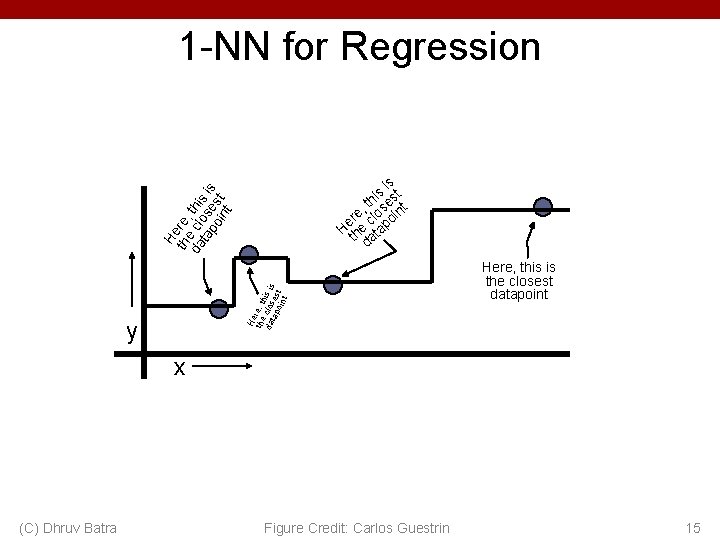

1-Nearest Neighbour Four things make a memory-based learner: • A distance metric – Euclidean (and others) • How many nearby neighbors to look at? – 1 • A weighting function (optional) – unused • How to fit with the local points? – Just predict the same output as the nearest neighbour. Slide Credit: Carlos Guestrin
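A minimal brute-force 1-NN sketch in Python, assuming Euclidean distance (`math.dist`, Python 3.8+) and small in-memory data; `one_nn_predict` and the toy points are hypothetical. Because it simply returns the stored output, the same function serves for classification labels or regression values:

```python
import math


def one_nn_predict(X_train, y_train, x_query):
    """Return the output of the single training point closest to x_query."""
    # Index of the training point with the smallest Euclidean distance;
    # min() keeps the first one if several are equally close.
    best = min(range(len(X_train)), key=lambda i: math.dist(X_train[i], x_query))
    return y_train[best]


X = [(0.0, 0.0), (1.0, 1.0), (5.0, 5.0)]
labels = ["red", "red", "blue"]
print(one_nn_predict(X, labels, (4.0, 4.5)))  # blue
print(one_nn_predict(X, labels, (0.2, 0.1)))  # red
```

Each query costs one distance evaluation per stored example, which is the price of having no training step.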

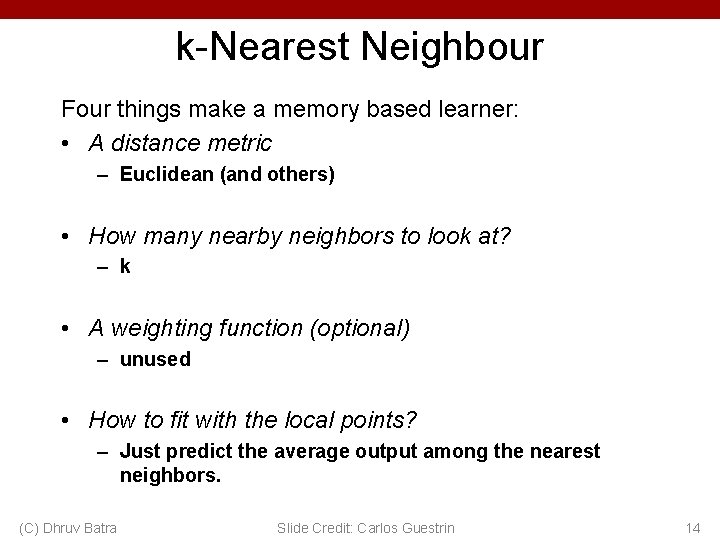

k-Nearest Neighbour Four things make a memory-based learner: • A distance metric – Euclidean (and others) • How many nearby neighbors to look at? – k • A weighting function (optional) – unused • How to fit with the local points? – Just predict the average output among the nearest neighbors. Slide Credit: Carlos Guestrin
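A sketch of k-NN in Python, assuming Euclidean distance and brute-force search; `k_nearest`, `knn_regress`, and `knn_classify` are hypothetical names. The regression version averages the neighbors' outputs as on the slide; for class labels, where an average is undefined, the sketch swaps in a majority vote:

```python
import math
from collections import Counter


def k_nearest(X_train, x_query, k):
    """Indices of the k training points closest to x_query (Euclidean distance)."""
    if not 1 <= k <= len(X_train):
        raise ValueError("k must be between 1 and len(X_train)")
    order = sorted(range(len(X_train)), key=lambda i: math.dist(X_train[i], x_query))
    return order[:k]


def knn_regress(X_train, y_train, x_query, k):
    """Predict the average output of the k nearest neighbors."""
    idx = k_nearest(X_train, x_query, k)
    return sum(y_train[i] for i in idx) / k


def knn_classify(X_train, y_train, x_query, k):
    """Majority vote among the k nearest neighbors.

    Counter.most_common orders equal counts by first appearance, and the
    neighbors are visited closest-first, so ties go to the nearer label.
    """
    idx = k_nearest(X_train, x_query, k)
    return Counter(y_train[i] for i in idx).most_common(1)[0][0]


X = [(0.0,), (1.0,), (2.0,), (3.0,)]
y = [0.0, 1.0, 4.0, 9.0]
print(knn_regress(X, y, (1.4,), k=2))  # 2.5
print(knn_regress(X, y, (1.4,), k=4))  # 3.5
print(knn_classify(X, ["a", "a", "b", "b"], (2.2,), k=3))  # b
```

With k equal to the training-set size, every query gets the same prediction (here 3.5, the global mean), which shows how a large k trades variance for bias.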

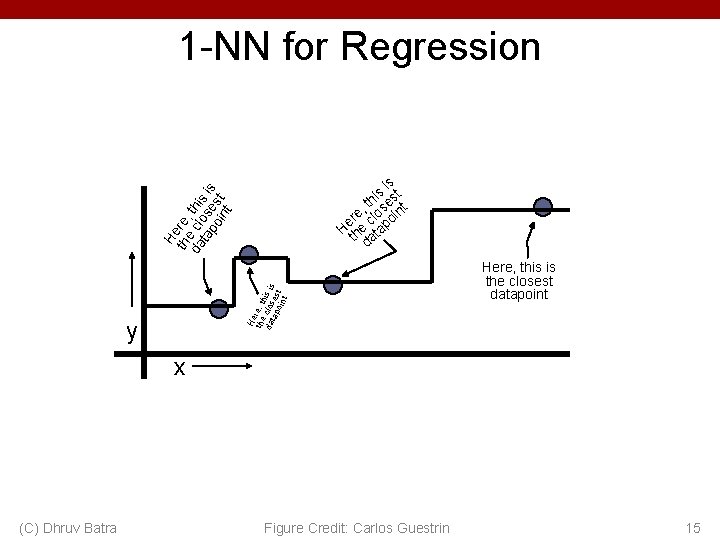

1-NN for Regression [Figure: for a query x, 1-NN predicts the output of the closest datapoint, annotated "Here, this is the closest datapoint".] Figure Credit: Carlos Guestrin

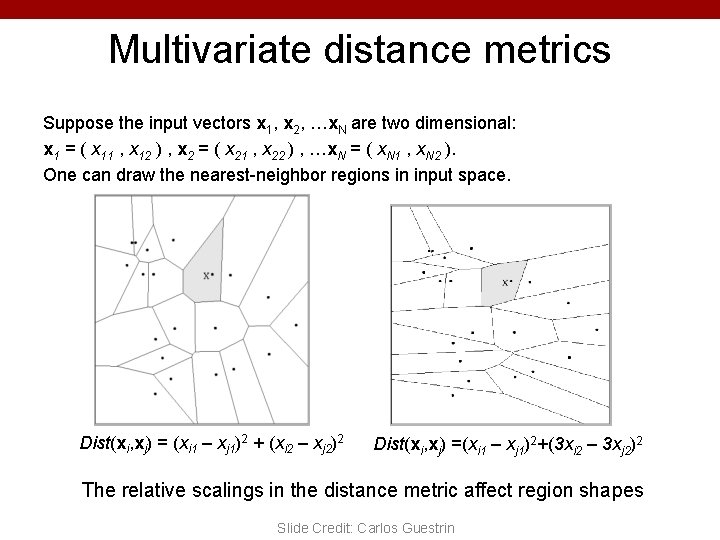

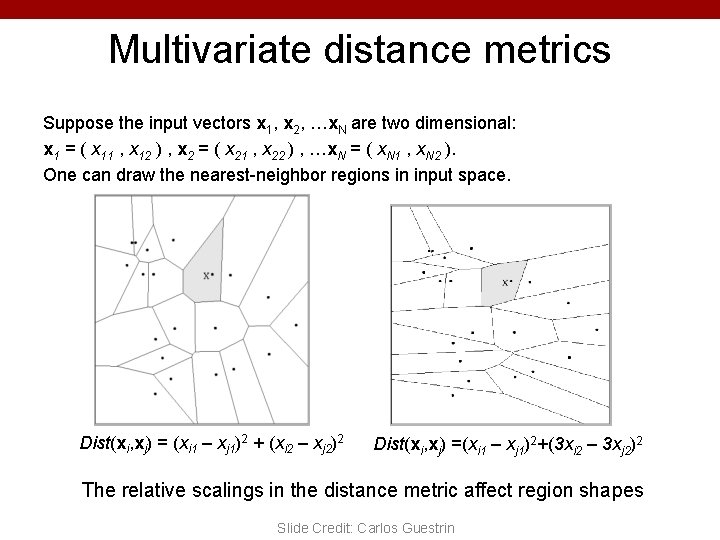

Multivariate distance metrics Suppose the input vectors $x_1, x_2, \ldots, x_N$ are two-dimensional: $x_1 = (x_{11}, x_{12})$, $x_2 = (x_{21}, x_{22})$, …, $x_N = (x_{N1}, x_{N2})$. One can draw the nearest-neighbor regions in input space. $\mathrm{Dist}(x_i, x_j) = (x_{i1} - x_{j1})^2 + (x_{i2} - x_{j2})^2$ versus $\mathrm{Dist}(x_i, x_j) = (x_{i1} - x_{j1})^2 + (3x_{i2} - 3x_{j2})^2$. The relative scalings in the distance metric affect region shapes. Slide Credit: Carlos Guestrin
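To see how a factor of 3 on the second coordinate can change which point is nearest, here is a small Python check using the squared distances from this slide; the points and the `scale` parameter are hypothetical:

```python
def sq_dist(a, b, scale=(1.0, 1.0)):
    """Squared scaled distance: sum over coordinates of (s*a_d - s*b_d)^2."""
    return sum((s * ai - s * bi) ** 2 for s, ai, bi in zip(scale, a, b))


def nearest(points, query, scale=(1.0, 1.0)):
    """Point with the smallest scaled distance to the query."""
    return min(points, key=lambda p: sq_dist(p, query, scale))


points = [(2.0, 0.0), (0.0, 1.0)]
query = (0.0, 0.0)
print(nearest(points, query))                    # (0.0, 1.0): 1 < 4
print(nearest(points, query, scale=(1.0, 3.0)))  # (2.0, 0.0): 4 < 9
```

Scaling the second axis by 3 multiplies vertical offsets by 3 (their squared contribution by 9), so the nearest-neighbor regions shrink along that axis.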

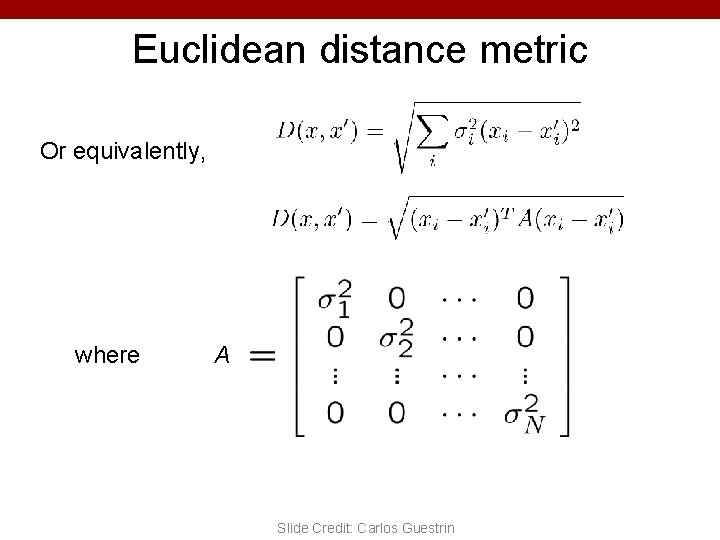

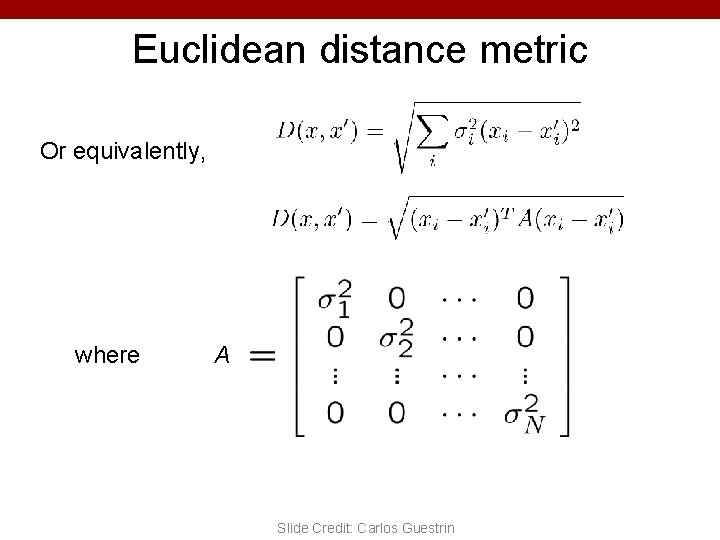

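Euclidean distance metric $D(x, x') = \sqrt{\sum_i \sigma_i^2 (x_i - x'_i)^2}$ Or equivalently, $D(x, x') = \sqrt{(x - x')^\top A (x - x')}$, where $A = \mathrm{diag}(\sigma_1^2, \ldots, \sigma_N^2)$. Slide Credit: Carlos Guestrin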
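A Python sketch checking that a diagonal A reproduces the scaled Euclidean distance, assuming the quadratic-form definition $D(x, x') = \sqrt{(x - x')^\top A (x - x')}$ with $A = \mathrm{diag}(\sigma_1^2, \ldots, \sigma_N^2)$. The values of `sigma` and the non-diagonal `A_full` (the Mahalanobis-style case) are hypothetical; A must be positive definite for D to be a valid metric:

```python
import math


def quad_form_dist(x, xp, A):
    """D(x, x') = sqrt((x - x')^T A (x - x')), with A a square matrix as nested lists."""
    d = [a - b for a, b in zip(x, xp)]
    n = len(d)
    q = sum(d[i] * A[i][j] * d[j] for i in range(n) for j in range(n))
    return math.sqrt(q)


def scaled_euclidean(x, xp, sigma):
    """D(x, x') = sqrt(sum_i sigma_i^2 (x_i - x'_i)^2)."""
    return math.sqrt(sum(s ** 2 * (a - b) ** 2 for s, a, b in zip(sigma, x, xp)))


sigma = (1.0, 3.0)
A_diag = [[sigma[0] ** 2, 0.0], [0.0, sigma[1] ** 2]]
x, xp = (1.0, 2.0), (0.0, 0.0)
# Both print sqrt(37), about 6.083
print(quad_form_dist(x, xp, A_diag), scaled_euclidean(x, xp, sigma))

# A non-diagonal, positive-definite A couples the coordinates.
A_full = [[2.0, 1.0], [1.0, 2.0]]
print(quad_form_dist((1.0, 1.0), (0.0, 0.0), A_full))   # sqrt(6)
print(quad_form_dist((1.0, -1.0), (0.0, 0.0), A_full))  # sqrt(2)
```

Under `A_full`, the points (1, 1) and (1, -1) are equally far from the origin in plain Euclidean terms but not under D, which is why the level sets become tilted ellipses rather than axis-aligned ones.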

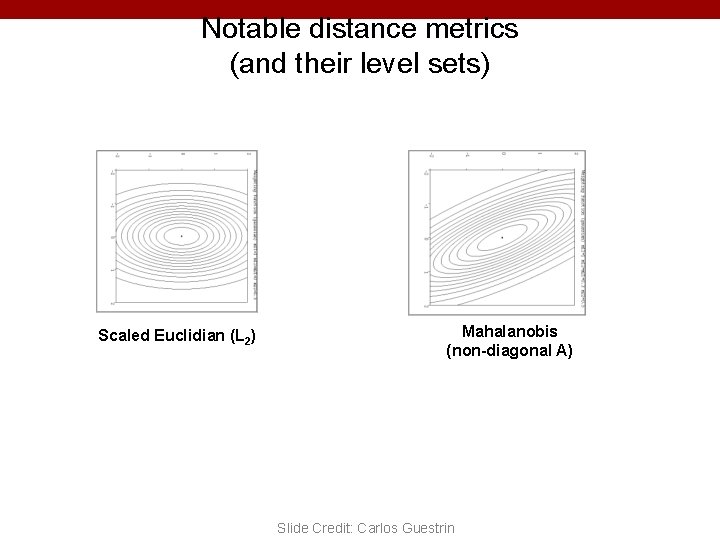

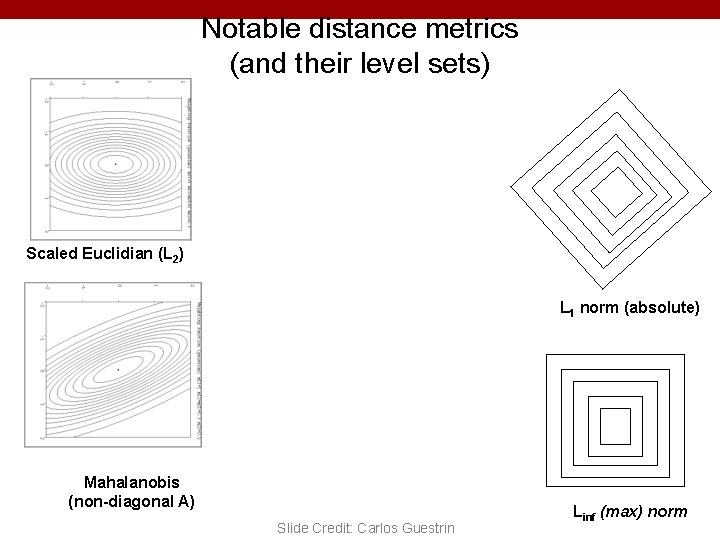

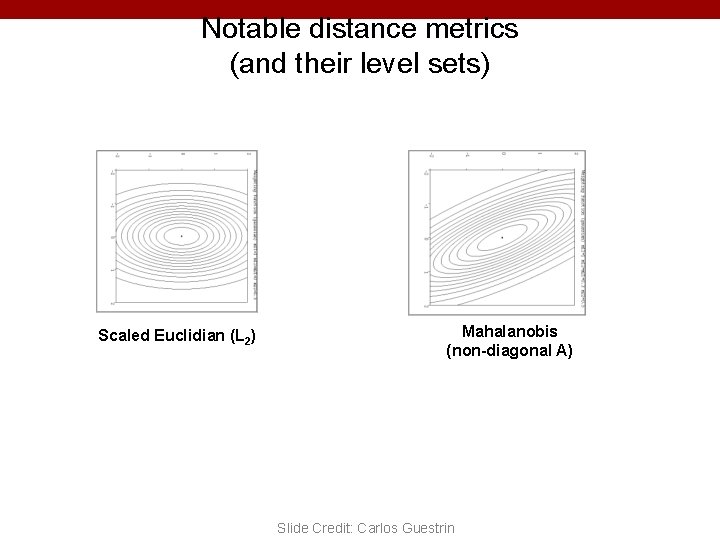

Notable distance metrics (and their level sets) • Scaled Euclidean (L2) • Mahalanobis (non-diagonal A) Slide Credit: Carlos Guestrin

Minkowski distance Image Credit: By Waldir (Based on File:MinkowskiCircles.svg) [CC BY-SA 3.0 (http://creativecommons.org/licenses/by-sa/3.0)], via Wikimedia Commons
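The Minkowski (L_p) distance is $D_p(x, x') = \left(\sum_i |x_i - x'_i|^p\right)^{1/p}$; p = 1 gives the L1 (absolute) norm, p = 2 the Euclidean norm, and the limit p → ∞ the max norm. A short Python sketch, with the function name `minkowski` and the example points hypothetical:

```python
import math


def minkowski(x, y, p):
    """L_p distance between two equal-length vectors; p = math.inf gives the max norm."""
    diffs = [abs(a - b) for a, b in zip(x, y)]
    if p == math.inf:
        return max(diffs)
    return sum(d ** p for d in diffs) ** (1.0 / p)


x, y = (0.0, 0.0), (3.0, 4.0)
print(minkowski(x, y, 1))         # 7.0  (L1: |3| + |4|)
print(minkowski(x, y, 2))         # 5.0  (Euclidean)
print(minkowski(x, y, math.inf))  # 4.0  (max coordinate gap)
```

As p grows, the largest coordinate gap dominates the sum, which is why the unit "circles" morph from a diamond (p = 1) through a circle (p = 2) toward a square (p = ∞). For p < 1 the formula no longer satisfies the triangle inequality, so it is not a metric.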

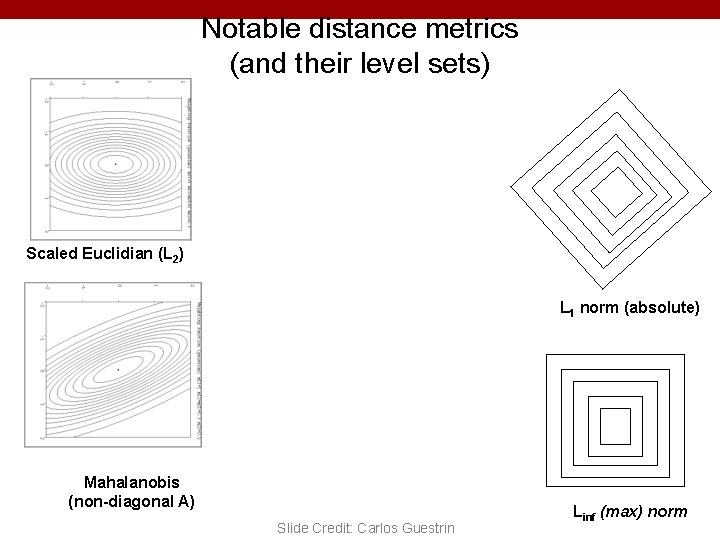

Notable distance metrics (and their level sets) • Scaled Euclidean (L2) • L1 norm (absolute) • Mahalanobis (non-diagonal A) • L∞ (max) norm Slide Credit: Carlos Guestrin